Survey of Datafusion Techniques for Laser and Vision Based Sensor Integration for Autonomous Navigation

Abstract

1. Introduction

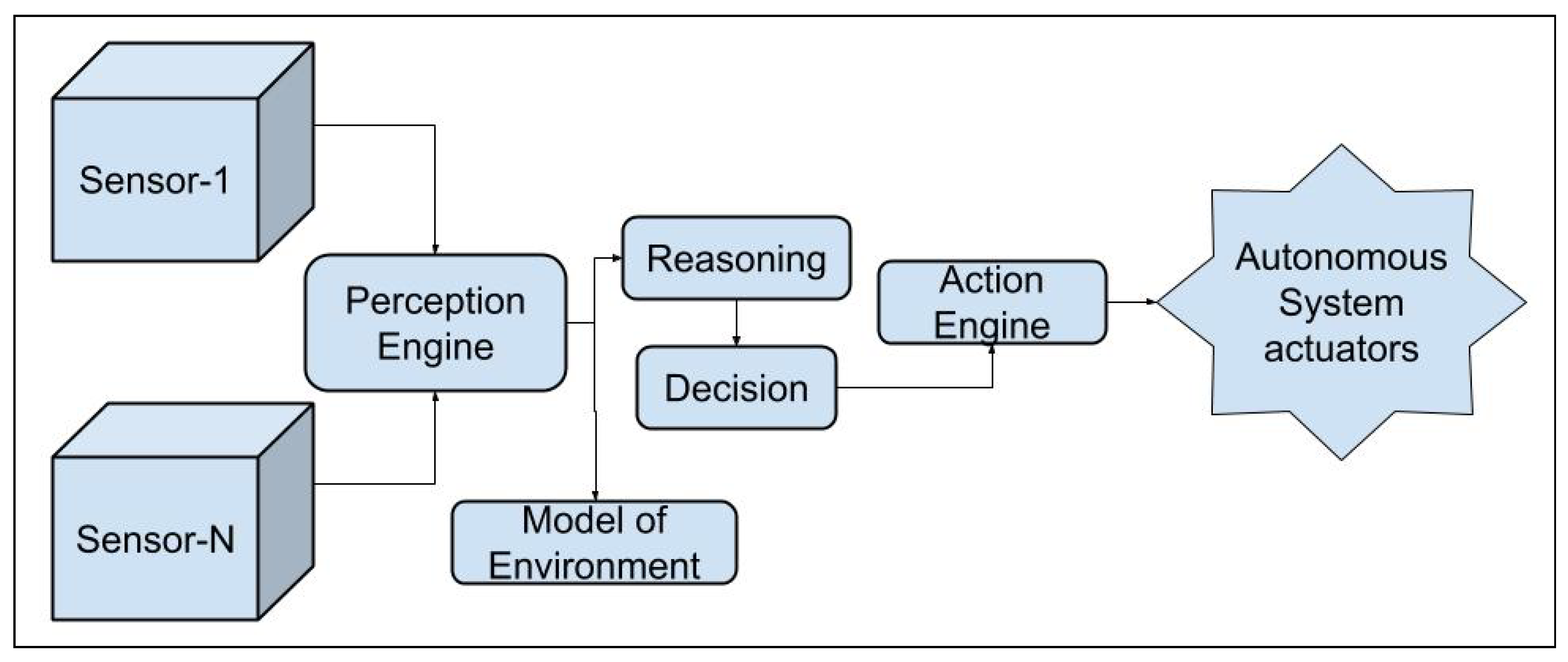

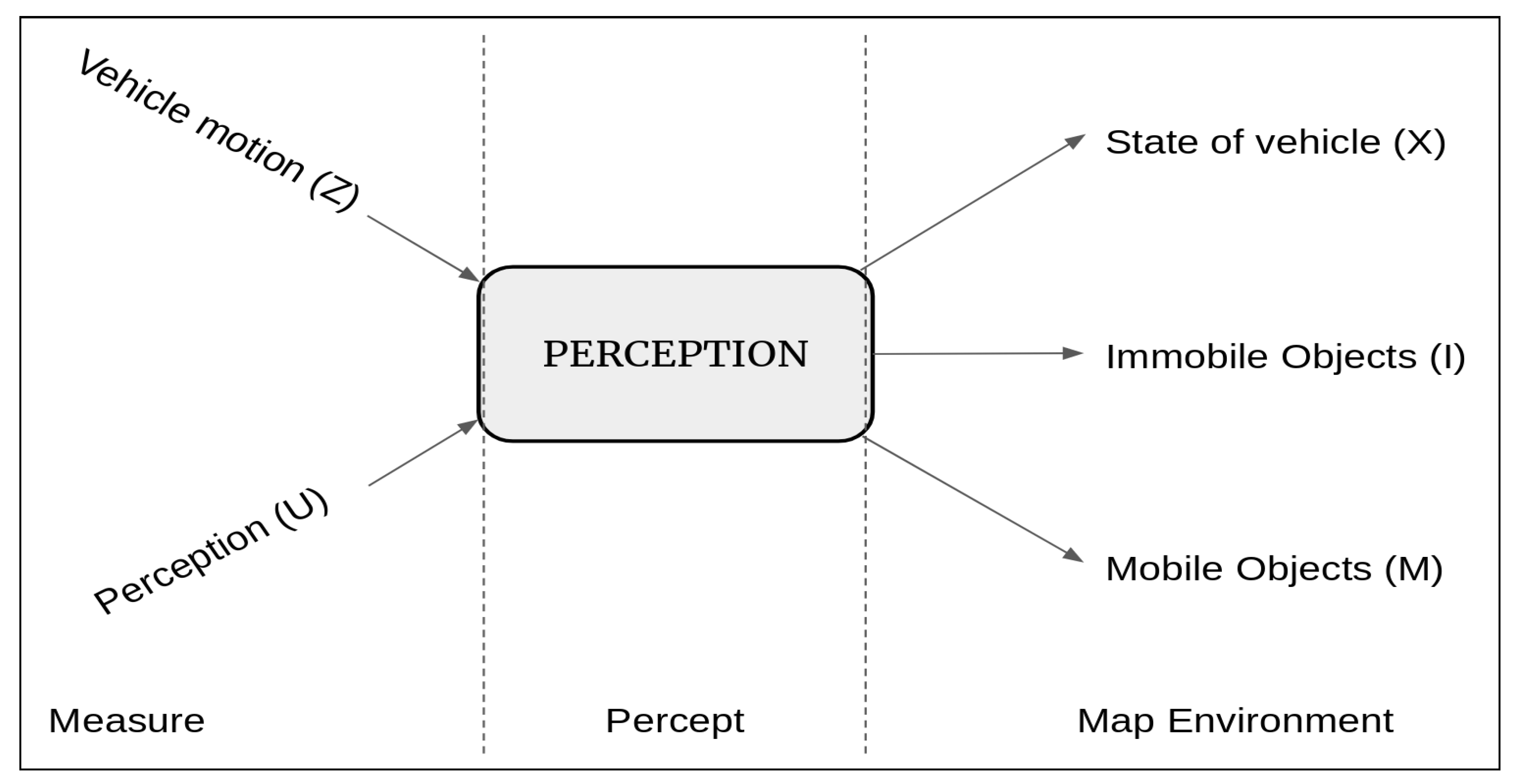

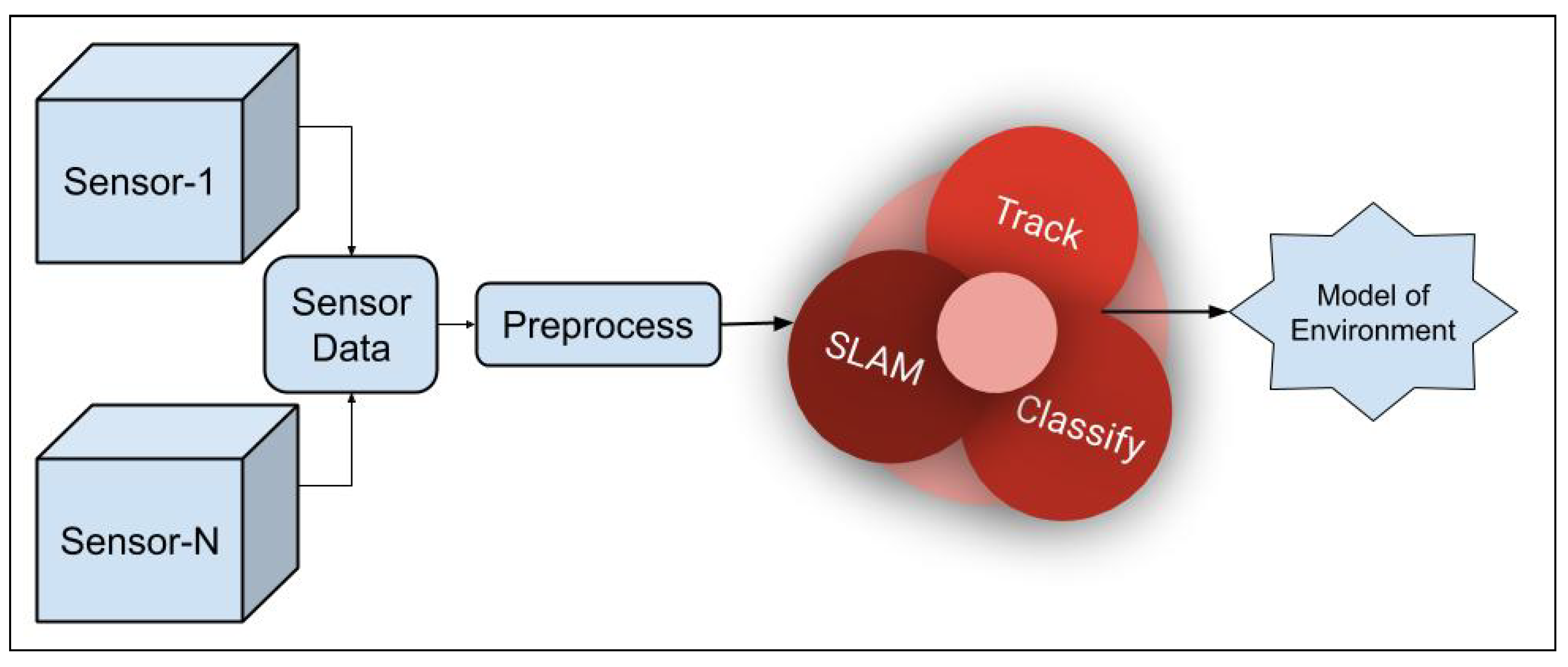

The process of maintaining an internal description of the external environment.

2. Data Fusion

2.1. Sensors and Their Input to Perception

The Merriam-Webster dictionary defines a sensor as [22]: “A device that responds to a physical stimulus (such as heat, light, sound, pressure, magnetism, or a particular motion) and transmits a resulting impulse (as for measurement or operating a control).”

The Collins dictionary defines a sensor as [23]: “A sensor is an instrument which reacts to certain physical conditions or impressions such as heat or light, and which is used to provide information.”

Many applications require multiple sensors to achieve a task. This gives rise to the technique of data fusion, in which the user must provide guidelines and rules for making the best use of the data supplied by the sensors. Several researchers have given their own definitions of data fusion. The JDL definition of data fusion is quoted by Hall et al. [24] as: “A process dealing with the association, correlation, and combination of data and information from single and multiple sources to achieve refined position and identity estimates, and complete and timely assessments of situations and threats, and their significance. The process is characterized by continuous refinements of its estimates and assessments, and the evaluation of the need for additional sources, or modification of the process itself, to achieve improved results.”

Stating that the JDL definition is too restrictive, Hall et al. [21,25,26] re-define data fusion as: “Data fusion is the process of combining data or information to estimate or predict entity states.” “Data fusion involves combining data, in the broadest sense, to estimate or predict the state of some aspect of the universe.”

In addition to LiDAR and camera, which are the focus of this survey, any sensor such as sonar, stereo vision, monocular vision, or radar can be used in data fusion. Data fusion at this high level also enables the tracking of moving objects, as shown in the research conducted by Garcia et al. [27].

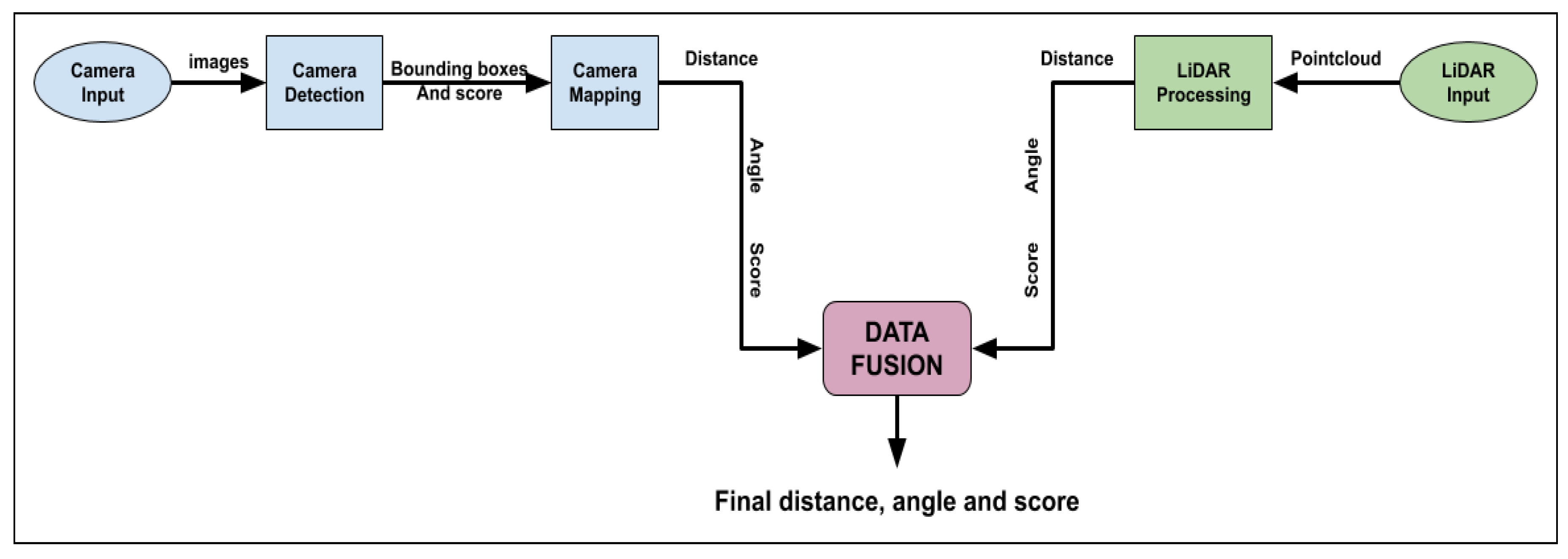

- Raw Data sensing: LiDAR is the primary sensor because of its detection accuracy and high data resolution, and it is effective at capturing the shape of objects in the environment that may be hazardous obstacles to the vehicle. A stereo vision sensor can provide depth information in addition to the LiDAR. The benefit of this combination is the accuracy, speed, and resolution of the LiDAR together with the quality and richness of the data from the stereo vision camera. Together, these two sensors provide an accurate, rich, and fast data set for the object detection layer [18,28,29]. In a 2019 study, Rövid et al. went a step further and fused the raw data to realize the benefits early in the cycle [30]. They fused camera image data with LiDAR point clouds as close as possible to the raw level of data extraction and abstraction.

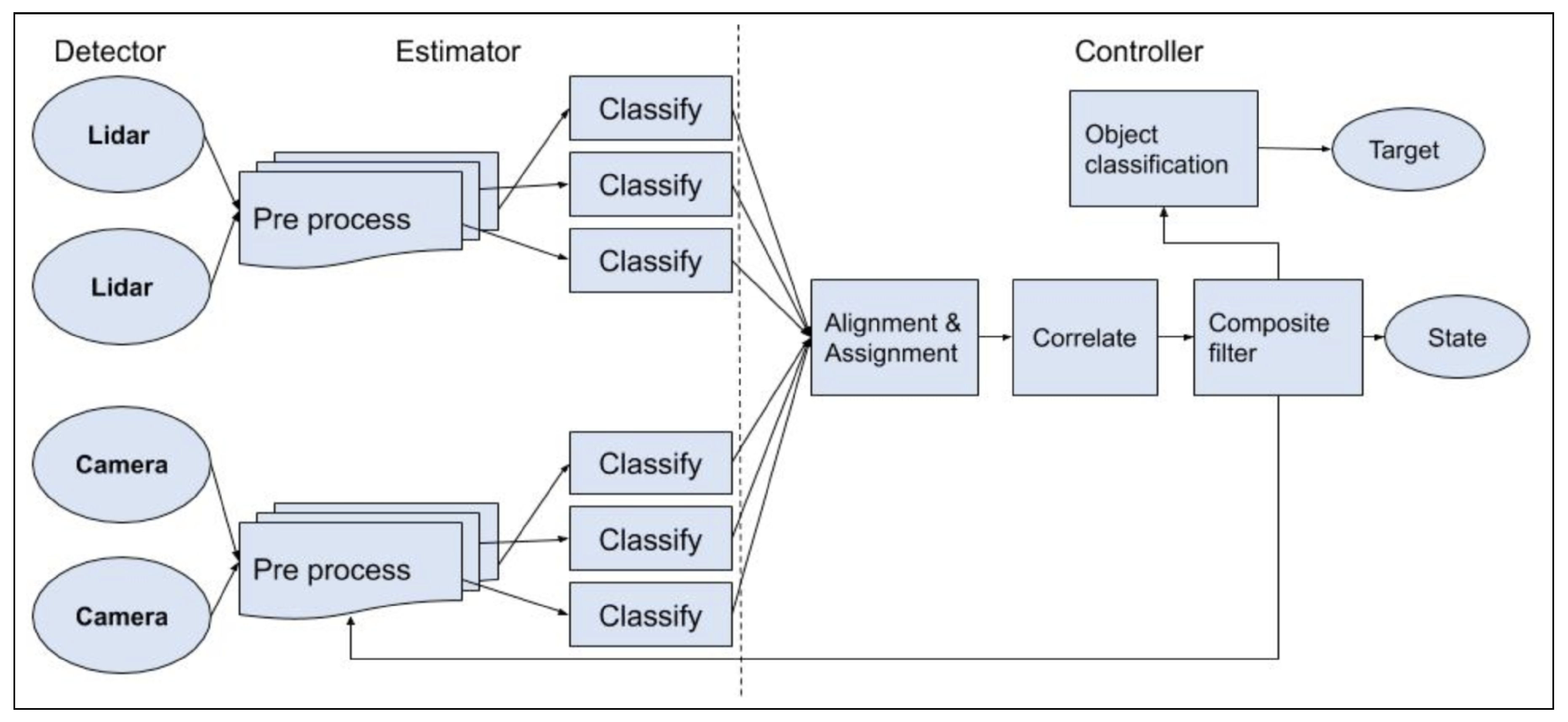

- Object Detection: Object detection is the method of locating an object of interest in the sensor output. A LiDAR scans objects in its environment differently than a camera does. Hence, the methodology for detecting objects in the data from these sensors differs as well. The research community has used this technique to detect objects in aerial, ground, and underwater environments [30,31,32,33,34].

- Object Classification: Detected objects are classified into types, for example by size (small, medium, or large) or by hazard level (nonhazardous or hazardous), so that the appropriate navigation response can be chosen for each object. Chavez-Garcia et al. [35] fuse multiple sensors, including camera and LiDAR, to classify and track moving objects.

- Data Fusion: After the classification, the data are fused to finalize information as input to the control layer. The data fusion layer output will provide location information of the objects in the map of the environment, so that the autonomous vehicle can, for instance, avoid the obstacle or stop if the object is a destination or wait for a state to be reached for further action if the object is deemed a marker or milestone. The control segment will take the necessary action, depending on the behavior as sensed by the sensor suite [18,28,29,35,36,37].

2.2. Multiple Sensors vs. Single Sensor

A man with one clock knows what time it is. A man with two clocks is never sure!

2.3. Need for Sensor Data Fusion

- Deprivation: If a sensor stops functioning, the system in which it is incorporated loses perception.

- Uncertainty: Inaccuracies arise when features are missing, when measurements are ambiguous, or when not all required aspects can be measured.

- Imprecision: Individual sensor measurements are limited in precision and accuracy.

- Limited temporal coverage: A sensor needs initialization/setup time to reach its maximum performance and transmit a measurement, which limits the maximum measurement frequency.

- Limited spatial coverage: Normally, an individual sensor covers only a limited region of the environment. For example, a reading from an ambient thermometer on a drone estimates the temperature near the thermometer and may fail to represent the average temperature of the entire environment.

- Extended Spatial Coverage: Multiple sensors can measure across a wider region of space and sense where a single sensor cannot.

- Extended Temporal Coverage: Time-based coverage increases when multiple sensors are used.

- Improved resolution: When multiple independent measurements of the same property are combined, the resolution is better than that of a single sensor measurement.

- Reduced Uncertainty: Considering the sensor suite as a whole, uncertainty decreases because the combined information reduces the set of ambiguous interpretations of the sensed value.

- Increased robustness against interference: Increasing the dimensionality of the sensor space (for example, measuring with both a LiDAR and stereo vision cameras) makes the system less vulnerable to interference.

- Increased robustness: The redundancy provided by multiple sensors makes the system more robust, even when one of the sensors is down and there is a partial failure.

- Increased reliability: Due to the increased robustness, the system becomes more reliable.

- Increased confidence: When the same domain or property is measured by multiple sensors, one sensor can confirm the accuracy of the others; this re-verification increases confidence.

- Reduced complexity: The output of multi-sensor fusion is better: it has less uncertainty, is less noisy, and is more complete.
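The "reduced uncertainty" benefit in the list above can be illustrated with a minimal sketch. It assumes two independent, unbiased range measurements of the same obstacle and combines them with inverse-variance weighting, a standard textbook rule that is used here for illustration and is not taken from the survey. The function name `fuse_measurements` and all numeric values are hypothetical.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Fuse two independent noisy measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, which is
    the minimum-variance linear combination when the errors are independent.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical range readings (metres) to the same obstacle.
lidar_range, lidar_var = 10.02, 0.01    # assumed LiDAR noise variance
stereo_range, stereo_var = 10.30, 0.09  # assumed stereo-depth noise variance

fused_range, fused_var = fuse_measurements(
    lidar_range, lidar_var, stereo_range, stereo_var
)
print(f"fused range = {fused_range:.3f} m, variance = {fused_var:.4f}")
```

Under these assumptions the fused variance is smaller than the variance of either sensor alone, and the fused value lies closer to the more precise sensor.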

2.4. Levels of Data Fusion Application

- Raw-data or low-level data fusion. At this most basic or lowest level, better or improved data are obtained by integrating raw data directly from multiple sensors; such data can be used in tasks. This new combined raw data will contain more information than the individual sensor data. We have summarized the most common data fusion techniques and the benefits of using that technique as well [55].

2.5. Data Fusion Techniques

“Information Fusion encompasses theory, techniques, and tools conceived and employed for exploiting the synergy in the information acquired from multiple sources (sensor, databases, information gathered by human, etc.) such that the resulting decision or action is in some sense better (qualitatively or quantitatively, in terms of accuracy, robustness, etc.) than would be possible if any of these sources were used individually without such synergy exploitation.”

The definition of multi-sensor data fusion by Waltz and Llinas [44] and Hall [24] is given as: “The technology concerned with the combination of how to combine data from multiple (and possibly diverse) sensors to make inferences about a physical event, activity, or situation.”

The definition, process, and one of the purposes of data fusion are given by Elmenreich et al. [80] as: “Sensor Fusion is the combining of sensory data or data derived from sensory data such that the resulting information is in some sense better than would be possible when these sources were used individually”.

With respect to the output data types of the sensors, we can broadly categorize them into homogeneous sensor data and heterogeneous sensor data. Heterogeneous sensor data come from different types of sensing equipment, such as imaging, laser, auditory, and EEG sensors. For example, a monocular camera (RGB) outputs pure image data, a stereo vision camera (RGB-D) can output image data from both cameras plus a depth cloud, an EEG outputs signal details, and a LiDAR outputs the location of the object of interest relative to the LiDAR. Multi-sensor fusion systems offer many benefits over single-sensor systems, because every sensor suffers from some form of limitation that could lead to malfunction or limited functionality in the control system in which it is incorporated.

2.5.1. K-Means

Advantages:

- Simpler to implement than other techniques.

- Good generalization to clusters of various shapes and sizes, such as elliptical and circular clusters.

- Adapts easily to new examples.

- Convergence is guaranteed.

- Scales to large data sets.

- Centroid positions can be warm-started.

Disadvantages:

- The algorithm does not always find the optimal solution for the cluster centers.

- The algorithm assumes that the covariance of the dataset is irrelevant or that it has already been normalized.

- The system must know the number of clusters a priori.

- The algorithm assumes that this number is optimal.
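The trade-offs listed above can be seen in a minimal sketch of Lloyd's algorithm, the usual K-Means iteration. This is an illustrative implementation for 2D points, not the implementation used in any cited work. The function `kmeans`, its parameters, and the sample points are hypothetical. The number of clusters is fixed a priori by the length of `init_centroids`, which also shows how centroids can be warm-started.

```python
def kmeans(points, init_centroids, iterations=100):
    """Lloyd's algorithm on (x, y) tuples; returns (centroids, labels)."""
    centroids = list(init_centroids)  # warm start; k = len(init_centroids)
    k = len(centroids)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: (p[0] - centroids[c][0]) ** 2
                                         + (p[1] - centroids[c][1]) ** 2)
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:
                new_centroids.append((sum(m[0] for m in members) / len(members),
                                      sum(m[1] for m in members) / len(members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_labels == labels and new_centroids == centroids:
            break  # converged: nothing changed in this pass
        labels, centroids = new_labels, new_centroids
    return centroids, labels


# Two well-separated groups of hypothetical obstacle points.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centroids, labels = kmeans(points, init_centroids=[(0, 0), (1, 1)])
print(centroids, labels)
```

With this data, starting from `[(10, 11), (11, 10)]` instead appears to leave the iteration in a poorer local optimum, which illustrates the point that the optimal cluster centers are not always found.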

2.5.2. Probabilistic Data Association (PDA)

Advantages:

- Tracking target excellence: Excellent for tracking targets that do not make sudden changes in their navigation.

Disadvantages:

- Track loss: PDA may perform poorly when targets are close to each other, because it ignores interference from other targets and can therefore wrongly classify the closest tracks.

- Suboptimal Bayesian approximation: PDA gives a suboptimal Bayesian approximation when the source of information is uncertain; this might be seen when a LiDAR scans a pole.

- One target: PDA gives incorrect results in the presence of multiple targets, since the false alarm model does not work well. The number of false alarms is typically modeled with a Poisson distribution, under the assumption that they are uniformly distributed.

- Track management: Separate algorithms must be provided for track initialization and track deletion, since PDA needs these a priori.

2.5.3. Joint Probabilistic Data Association

Advantages:

- Robust: JPDA is robust compared with PDA and MHT.

- Multiple object tracking: The algorithm can be used to track multiple agents (with a caveat, however).

- Representation of multimodal data: JPDA can represent multimodal state densities, which increases the robustness of the underlying state estimation process.

Disadvantages:

- Computationally expensive: JPDA is computationally expensive in multiple-target environments, since the number of hypotheses grows exponentially with the number of targets.

- Exclusive mechanism: It requires an exclusive mechanism for track initialization.
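The growth in the number of hypotheses can be made concrete with a small enumeration. The sketch below is not a JPDA implementation; it only counts the feasible joint association events. It assumes that each target is either missed or paired with one measurement inside its validation gate, and that no measurement is shared between targets. The function name and the gate sets are hypothetical.

```python
from itertools import product


def joint_association_events(validation):
    """Enumerate feasible joint association events.

    validation[t] is the set of measurement indices inside target t's gate.
    Each event maps every target to one gated measurement or to None
    (missed detection), and no measurement is claimed by two targets.
    """
    options = [[None] + sorted(gated) for gated in validation]
    events = []
    for assignment in product(*options):
        used = [m for m in assignment if m is not None]
        if len(used) == len(set(used)):  # no measurement claimed twice
            events.append(assignment)
    return events


# Worst case for n targets: every one of n measurements falls in every gate.
counts = [len(joint_association_events([set(range(n))] * n)) for n in range(1, 5)]
print(counts)
```

Even in this tiny setting the count rises quickly with the number of targets, which is why practical trackers rely on gating and hypothesis pruning.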

2.5.4. Distributed Multiple Hypothesis Test

Advantages:

- Very useful in distributed and decentralized systems.

- Outperforms JPDA at lower densities of false positives.

- Efficient at tracking multiple targets in cluttered environments.

- Also functions as an estimation and tracking technique.

Disadvantages:

- Exponential computational cost, on the order of $O(n^{X})$, where $X$ is the number of variables to be estimated and $n$ is the number of possible associations.

2.5.5. State Estimation

2.5.6. Covariance Consistency Methods

Advantages:

- Efficient in distributed systems, including multimodal multi-sensor systems.

- Fault-tolerant for covariance means and estimates.

Disadvantages:

- If the Kalman filter is used for estimation, the exact cross-covariance information must be determined, which can pose a big challenge.

- Results are suboptimal when the technique is applied iteratively to a sequence of estimates instead of as a batch application that fuses the estimates simultaneously.
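One widely used covariance consistency method is covariance intersection, which fuses two estimates without knowing their cross-covariance. The sketch below is a minimal 2D version under stated assumptions: the weight is found by a coarse grid search that minimizes the trace of the fused covariance, and the helper `inv2`, the function name, and all numeric values are hypothetical.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as ((a, b), (c, d))."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return ((d / det, -b / det), (-c / det, a / det))


def covariance_intersection(x_a, P_a, x_b, P_b, steps=100):
    """Fuse two 2D estimates whose cross-covariance is unknown."""
    Ia, Ib = inv2(P_a), inv2(P_b)
    best = None
    for i in range(steps + 1):
        w = i / steps
        # Convex combination of the two information (inverse covariance) matrices.
        info = tuple(tuple(w * Ia[r][c] + (1 - w) * Ib[r][c] for c in range(2))
                     for r in range(2))
        P = inv2(info)
        trace = P[0][0] + P[1][1]
        if best is None or trace < best[0]:
            best = (trace, w, P)
    _, w, P = best
    # Weighted information vector, then map back to state space.
    v = [w * (Ia[r][0] * x_a[0] + Ia[r][1] * x_a[1])
         + (1 - w) * (Ib[r][0] * x_b[0] + Ib[r][1] * x_b[1]) for r in range(2)]
    x = (P[0][0] * v[0] + P[0][1] * v[1], P[1][0] * v[0] + P[1][1] * v[1])
    return x, P, w


# Hypothetical position estimates: A is precise in y, B is precise in x.
x_a, P_a = (1.0, 2.0), ((4.0, 0.0), (0.0, 1.0))
x_b, P_b = (1.4, 1.6), ((1.0, 0.0), (0.0, 4.0))
x_f, P_f, w = covariance_intersection(x_a, P_a, x_b, P_b)
print(x_f, P_f, w)
```

The result is deliberately conservative: the fused covariance is larger than what a Kalman update would report if the two estimates were known to be independent.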

2.5.7. Decision Fusion Techniques

Advantages:

- Enables the user to arrive at a single decision from a set of multiple classifiers or decision-makers.

- In a multi-sensor system, compensates for a deficient sensor with the other sensors.

- Enables the user to adjust the decision rules to arrive at the optimum.

Disadvantages:

- Establishing a priori probabilities is difficult.

- When a substantial number of events depend on multiple hypotheses, the computation becomes very complex, and the hypotheses must be mutually exclusive.

- Decision uncertainty is difficult to finalize.
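A simple instance of decision fusion is Bayesian combination of per-sensor evidence. The sketch below assumes mutually exclusive hypotheses, known a priori probabilities, and conditionally independent sensor reports. These are exactly the assumptions the list above flags as hard to satisfy in practice. The function name and all probabilities are hypothetical.

```python
def fuse_decisions(prior, likelihoods):
    """Bayesian decision fusion over mutually exclusive hypotheses.

    prior: dict mapping hypothesis -> a priori probability (assumed known).
    likelihoods: one dict per sensor, hypothesis -> P(report | hypothesis),
    with sensor reports assumed conditionally independent.
    """
    posterior = dict(prior)
    for sensor in likelihoods:
        for h in posterior:
            posterior[h] *= sensor[h]
    total = sum(posterior.values())
    posterior = {h: p / total for h, p in posterior.items()}
    decision = max(posterior, key=posterior.get)
    return decision, posterior


# Hypothetical numbers: both sensors report "something is there".
prior = {"hazard": 0.2, "no_hazard": 0.8}
lidar = {"hazard": 0.9, "no_hazard": 0.3}
camera = {"hazard": 0.8, "no_hazard": 0.2}

decision, posterior = fuse_decisions(prior, [lidar, camera])
print(decision, posterior)
```

Changing the prior or the decision rule (for example, requiring a posterior above a threshold before declaring a hazard) is how a user would tune this kind of scheme.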

2.5.8. Distributed Data Fusion

Advantages:

- Enables usage across dynamic and distributed systems.

- Communication costs can be low, since systems can communicate with each other after onboard processing at the individual agents/nodes.

Disadvantages:

- Spatial and temporal information alignment.

- Out-of-sequence measurements.

- Data correlation challenges.

- Systems may need robust communication systems to share information.

2.6. Classifications of Data Fusion Techniques

2.6.1. Data Type of Sensor Input and Output Values

2.6.2. Abstraction Levels

2.6.3. JDL Levels

- Layer 0: Processes source data comprising pixels and signals. Information is extracted, processed, reduced, and output to higher layers.

- Layer 1: Data output from layer 0 is processed and refined here. Typical processes are spatial-temporal alignment, correlation, clustering, association and grouping techniques, false-positive removal and reduction, state estimation, and combination of image feature data. Typical outputs are classification and identification, state, and orientation. This layer also transforms the input data to obtain consistent and robust data structures.

- Layer 2: Based on the output of layer 1, the object refinement layer, the situation is analyzed. The situation assessment draws on the input data and on present and past decisions. Events and activities are identified, and the outcome of this layer is a set of high-level inferences.

- Layer 3: The output of layer 2, i.e., the significant activities and current events, is assessed for its impact on the system. Outcome prediction and threat analysis are performed at this layer.

- Layer 4: Overall processes from layer 0 through layer 3 are optimized and improved. Resource control and management, task scheduling, and prioritizing are performed to make improvements.

2.6.4. Data Source Relationships

2.6.5. System Architecture

- Distributed or decentralized systems: State estimation and data processing are performed locally and then communicated to the other systems. Processing in this architecture ranges from a single node to groups of systems. The fusion node processes the result only after the individual data processing at the local level is completed [94,99,100].

- Hierarchical systems: A hierarchical data fusion system is an architecture in which higher-level nodes control lower-level nodes, establishing a mechanism of hierarchical control of data fusion. In this type of architecture, a combination of distributed decentralized nodes can be employed to achieve data fusion. In the second half of the 1990s, Bowman et al. proposed a hierarchical data fusion system [101], which was reviewed by Hall et al. [21]. In 2006, Taropa et al. proposed a hierarchical data fusion model [102] that uses real-time objects in a highly flexible framework and provides these features through an API. Dieterle et al. proposed a data fusion system for object tracking [103] that combines sensor information using a hierarchical data fusion approach; they show that this approach drastically improves the robustness of object detection with respect to sensor failures and occlusions.

3. Sensor Hardware

3.1. LiDAR

LIDAR, which stands for Light Detection and Ranging, is a remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) to the Earth. These light pulses—combined with other data recorded by the airborne system—generate precise, three-dimensional information about the shape of the Earth and its surface characteristics [104].

3.1.1. Data Generation in a LiDAR

- Number of Returns: Light pulses from a LiDAR can penetrate a forest canopy. This also means that a LiDAR pulse can reach the bare earth or short vegetation.

- Digital Elevation Models: Digital Elevation Models (DEM) are bare-earth (topographic) models of the Earth’s surface. A DEM can be built by using only ground returns. This is different from Digital Terrain Models (DTM), wherein contours are incorporated.

- Digital Surface Models: A Digital Surface Model (DSM) incorporates elevations from man-made and natural surfaces. For example, a DSM adds elevation from buildings, tree canopies, vehicular traffic, powerlines, vineyards, and other features.

- Canopy Height Model: Canopy Height Models (CHM) provide the true height of topographic features on the ground. This is also called a Normalized Digital Surface Model (nDSM).

- Light Intensity: Reflectivity, or light intensity, varies with the composition of the object reflecting the LiDAR’s return. Light intensity is expressed as a reflective percentage.
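The relationship between these elevation products can be sketched in a few lines. A normalized DSM is commonly computed per grid cell as the surface elevation minus the bare-earth elevation. The function name, the clamping of negative values to zero, and the small rasters below are illustrative assumptions.

```python
def canopy_height_model(dsm, dem):
    """Per-cell height above ground: surface elevation minus bare-earth elevation.

    dsm and dem are equally sized 2D lists of elevations in metres.
    Negative differences (sensor noise) are clamped to zero.
    """
    return [[max(s - g, 0.0) for s, g in zip(surface_row, ground_row)]
            for surface_row, ground_row in zip(dsm, dem)]


# Hypothetical 2x2 rasters: a tree and a building on gently sloping ground.
dsm = [[105.0, 112.5],
       [101.0, 100.0]]
dem = [[100.0, 100.5],
       [101.0, 100.0]]

chm = canopy_height_model(dsm, dem)
print(chm)
```

Cells where the two models agree come out as zero height, which corresponds to bare ground.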

3.1.2. Classifying the LiDAR

- Data Returned by the LiDAR: LiDAR types based on how the data returned from the object are stored [106]:

  - Discrete LiDAR: While scanning, the data are returned as 1st, 2nd, and 3rd returns, because the light hits multiple surfaces, followed by a large final pulse. This can be seen when a LiDAR hits a forest canopy [107]. A discrete LiDAR stores the return data individually, taking each peak and separating each return.

  - Continuous/Full waveform LiDAR: When the entire waveform is saved as one unit, it is a continuous or full-waveform LiDAR [108]. Many LiDARs use this form of recording.

- LiDAR types based on technology: The following technology types can also be considered when classifying LiDARs [105,109]:

  - Mechanical scanners: macro-scanners, Risley prisms, micro-motion.

  - Non-mechanical scanners: MEMS, optical phased arrays, electro-optical, liquid crystal.

  - Flash LiDAR (non-scanning)

  - Structured light (non-scanning)

  - Multicamera stereo (non-scanning)

- Based on area of usage: Two types of LiDAR are broadly used: topographic and bathymetric [104]. Topographic LiDARs typically use a near-infrared laser and are used for land mapping, while bathymetric LiDARs use water-penetrating green light to measure seafloor and riverbed elevations. Topographic LiDARs come in two main types: 2D (single scan) and 3D (multiple scan). Brands of topographic LiDAR include Velodyne [110], whose HDL-64E provides a 3D laser scan with a 360° horizontal and 26.9° vertical field of view (FOV). 2D LiDARs such as the TiM571 scanning range finder from SICK provide a 2D 220° FOV, which is very similar to the RPLidar [111] from Slamtec. Other brands include Ouster laser scanners [112] and Eclipse mapping systems [113]. Bathymetric LiDARs use the green spectrum and are predominantly used for water-surface and underwater mapping tasks. A short listing and background of bathymetric LiDARs is given by Quadros [114]. However, bathymetric LiDARs are outside the scope of this survey because of their area of use.

3.1.3. Advantages and Disadvantages in Using LiDAR

3.2. Camera

3.2.1. RGB Family of Camera

3.2.2. 360° Camera

3.2.3. Time-of-Flight (TOF)

3.3. Implementation of Data Fusion with the Given Hardware

- Geometric alignment of the sensor data

- Resolution match between the sensor data
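Geometric alignment is commonly implemented by transforming each LiDAR point into the camera frame and projecting it with a pinhole model. The sketch below assumes that the LiDAR-to-camera extrinsics (`R`, `t`) and the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are already known from calibration and that lens distortion is ignored. All names and values are hypothetical.

```python
def project_lidar_point(p_lidar, R, t, fx, fy, cx, cy):
    """Project a 3D LiDAR point into pixel coordinates (pinhole model).

    R (3x3 nested list) and t (length 3) are the assumed LiDAR-to-camera
    extrinsics; fx, fy, cx, cy are the assumed camera intrinsics in pixels.
    Returns (u, v, depth), or None if the point lies behind the camera.
    """
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (sum(R[r][c] * p_lidar[c] for c in range(3)) + t[r]
               for r in range(3))
    if z <= 0:
        return None
    # Perspective division followed by the intrinsic scaling and offset.
    return fx * x / z + cx, fy * y / z + cy, z


# Hypothetical calibration: frames already aligned, 640x480 camera.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]

pixel = project_lidar_point((1.0, 0.5, 5.0), R, t, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
behind = project_lidar_point((1.0, 1.0, -2.0), R, t, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(pixel, behind)
```

Once every LiDAR point has a pixel coordinate, its depth can be attached to the image, which is also the starting point for matching the sparse LiDAR resolution to the denser camera resolution.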

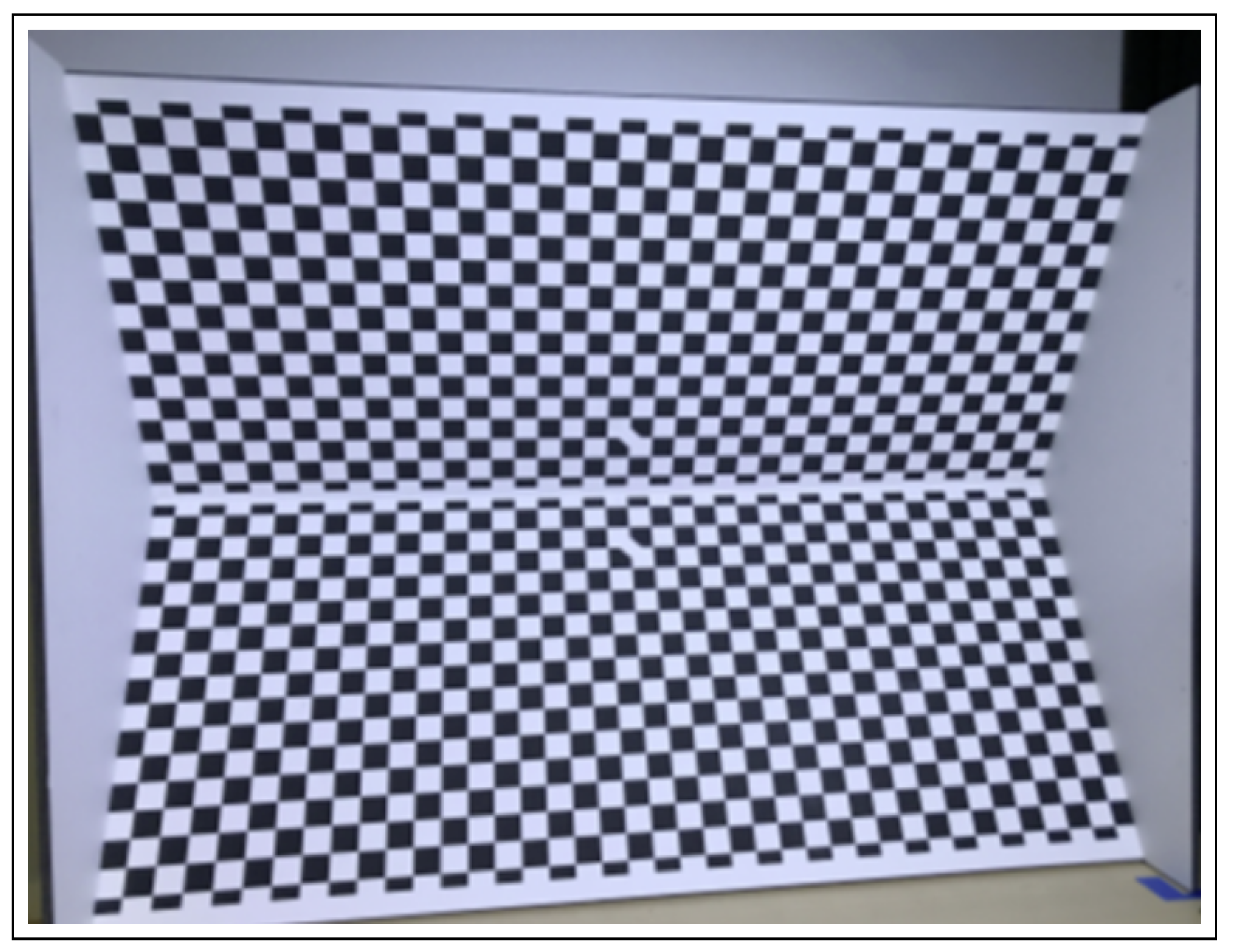

3.3.1. Geometric Alignment of the Sensor Data

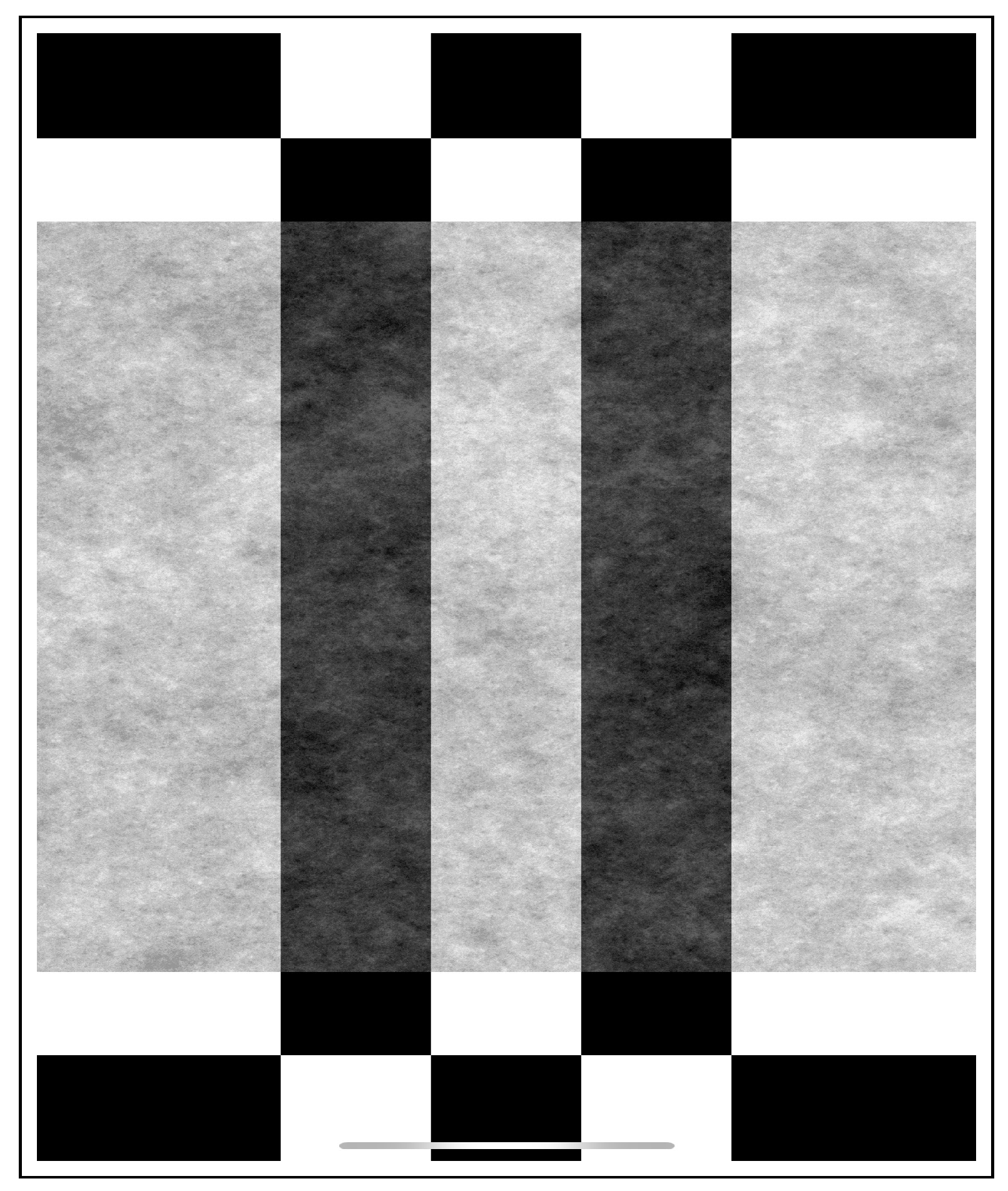

3.3.2. Resolution Match between the Sensor Data

3.4. Challenges with Sensor Data Fusion

3.5. Sensor Data Noise

3.5.1. Kalman Filters

- $x_k$: Current estimate,

- $x_{k-1}$: Estimate of the signal in the previous state,

- $u_k$: Control signal,

- $z_k$: Measured value from the sensors,

- $w_{k-1}$: Process noise in the previous iteration,

- $v_k$: Measurement noise in the present iteration.

- Predict state

- Measurement update: calculate the Kalman gain (weights) $K_k$, the main unknown value in this equation

- Update state

- Update state covariance

- Loop (now $k$ becomes $k+1$), which is the next and subsequent iterations.

where $P_k^-$ is the prior error covariance matrix; $P_k$ is the current covariance matrix, updated during each iteration; $Q$ is the process noise covariance matrix; and $R$ is the measurement noise covariance matrix.
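The predict and update cycle listed above can be sketched for a scalar state. This is the standard textbook form of the linear Kalman filter, written with conventional symbol names (`A`, `B`, `H`, `Q`, `R`) that are assumptions here and may differ from the survey's own notation. The measurement values and noise settings are hypothetical.

```python
def kalman_step(x_prev, P_prev, z, u=0.0, A=1.0, B=0.0, H=1.0, Q=1e-4, R=0.1):
    """One iteration of a scalar linear Kalman filter."""
    # Predict state and error covariance from the previous iteration.
    x_prior = A * x_prev + B * u
    P_prior = A * P_prev * A + Q
    # Measurement update: the Kalman gain weights prediction against measurement.
    K = P_prior * H / (H * P_prior * H + R)
    # Update the state with the measurement residual.
    x = x_prior + K * (z - H * x_prior)
    # Update the state covariance.
    P = (1.0 - K * H) * P_prior
    return x, P, K


# Hypothetical noisy readings of a roughly constant quantity.
measurements = [0.39, 0.50, 0.48, 0.29, 0.25, 0.32, 0.34, 0.48, 0.41, 0.45]
x, P = 0.0, 1.0  # initial guess and its (large) uncertainty
covariances = []
for z in measurements:
    x, P, K = kalman_step(x, P, z)  # loop: k becomes k + 1
    covariances.append(P)
print(f"estimate = {x:.3f}, error covariance = {P:.4f}")
```

In this run the error covariance shrinks at every iteration, so later measurements move the estimate less than early ones.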

3.5.2. Extended Kalman Filter

3.5.3. Unscented Kalman Filters

- Select sigma points

- Model forecasting

- Data assimilation

Founded on the intuition that it is easier to approximate a probability distribution than it is to approximate an arbitrary nonlinear function or transformation
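The sigma-point idea can be sketched for a one-dimensional state. The code below implements only the unscented transform (selecting sigma points and propagating them through a nonlinear function), not a full filter with data assimilation. The weighting with `kappa = 2` follows a common heuristic for Gaussian inputs in one dimension; the function name and the test function are assumptions.

```python
import math


def unscented_transform_1d(mean, var, f, kappa=2.0):
    """Propagate a 1D mean and variance through f using three sigma points."""
    n = 1  # state dimension
    spread = math.sqrt((n + kappa) * var)
    # Select sigma points: the mean plus one point on each side.
    sigma_points = [mean, mean + spread, mean - spread]
    weights = [kappa / (n + kappa)] + [1.0 / (2.0 * (n + kappa))] * 2
    # Model forecasting: push every sigma point through the nonlinear function.
    transformed = [f(s) for s in sigma_points]
    new_mean = sum(w * y for w, y in zip(weights, transformed))
    new_var = sum(w * (y - new_mean) ** 2 for w, y in zip(weights, transformed))
    return new_mean, new_var


# Hypothetical example: square a Gaussian variable with mean 1 and variance 0.25.
ut_mean, ut_var = unscented_transform_1d(1.0, 0.25, lambda x: x ** 2)
print(ut_mean, ut_var)
```

No Jacobian of the function is needed, which is the practical difference from the linearization used by the extended Kalman filter.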

3.5.4. Distributed Kalman Filter

- Consensus on Estimates

- Local Kalman filtering

- Continuous-time Distributed Kalman filtering

- Iterative Kalman-Consensus filtering

- Consensus on sensor data fusion

3.5.5. Particle Filters

- Importance sampling:

  - Sample the present trajectories and update

  - Normalize the weights

- Selection:

  - Samples that have high importance weights are multiplied

  - Samples that have low importance weights are suppressed

- Markov Chain Monte Carlo Transition:

  - Apply a Markov transition kernel with an invariant distribution given by $p(x_{0:t} \mid y_{1:t})$ and obtain $x_{0:t}^{(i)}$
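The importance sampling and selection steps can be sketched as a bootstrap particle filter for a scalar state. The sketch assumes a random-walk motion model and a Gaussian measurement likelihood, and it omits the optional Markov Chain Monte Carlo move step. The function name, noise levels, and measurements are hypothetical.

```python
import math
import random


def particle_filter_step(particles, z, rng, process_std=0.1, meas_std=0.5):
    """One importance-sampling and selection step for a scalar state."""
    # Importance sampling: propagate each particle through the motion model,
    # then weight it by the Gaussian likelihood of the measurement z.
    particles = [p + rng.gauss(0.0, process_std) for p in particles]
    weights = [math.exp(-0.5 * ((z - p) / meas_std) ** 2) for p in particles]
    total = sum(weights)  # assumed nonzero: some particle is near z
    weights = [w / total for w in weights]  # normalize the weights
    estimate = sum(w * p for w, p in zip(weights, particles))
    # Selection: resampling multiplies high-weight particles and
    # suppresses low-weight ones.
    particles = rng.choices(particles, weights=weights, k=len(particles))
    return particles, estimate


rng = random.Random(42)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]
for z in [5.1, 4.9, 5.0, 5.2, 4.8, 5.0, 5.1, 4.9]:  # hypothetical readings
    particles, estimate = particle_filter_step(particles, z, rng)
print(f"estimated state = {estimate:.2f}")
```

Multinomial resampling via `random.choices` is the simplest selection scheme; systematic or stratified resampling is often preferred in practice because it adds less sampling noise.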

3.6. Research Patents in Data Fusion

- Publication number: US9128185B2, Publication date: 08 September 2015, Inventors: Shuqing Zeng at GM Global Technology Operations LLC, Title: Methods and apparatus of fusing radar/camera object data and LiDAR scan points

- Publication number: US20100157280A1, Publication date: 24 June 2010, Inventors: Kresimir Kusevic, Paul Mrstik, Len Glennie

- Publication number: WO 2016/100814 A1, Publication date: 23 June 2016, Inventors: Michael J. GIERING, Kishore Reddy, Vivek Venugopalan, Title: Multi-Modal sensor data fusion for perception systems

- Publication number: EP 3396408 A1 20181031 (EN), Publication date: January 2013, Title: LiDAR and camera data fusion for automated vehicle

4. Autonomous Navigation

Autonomous navigation means that a vehicle can plan its path and execute its plan without human intervention. An autonomous robot is one that not only can maintain its stability as it moves, but also can plan its movements. They use navigation aids when possible, but can also rely on visual, auditory, and olfactory cues. The Global Autonomous Navigation Market was valued at USD 2.52 billion in 2019, and it is further estimated to grow at a CAGR of 16.2% from 2019 to reach USD 6.15 billion by the year 2025. The Asia Pacific Autonomous Navigation Market is expected to develop at the most elevated CAGR during the forecasted period 2019–2025.

Research group BIS performed an analysis of the Global Vision and Navigation System Market for Autonomous Vehicle, focusing on Components (Camera, LiDAR, Radar, Ultrasonic Sensor, GPS, and IMU), Level of Autonomy, and Region, and states [164]: “The automotive industry is on the verge of a revolution with the gradual development of self-driven vehicles. The global vision and navigation system industry for autonomous vehicle depicts the market that is expected to witness a CAGR of 26.78%, during the forecast period from 2019 to 2024.”

4.1. Mapping

4.1.1. Topological Mapping

4.1.2. Grid Based Approach

4.1.3. Metric Mapping

4.1.4. Hybrid Mapping

4.2. Localization

4.2.1. Dead Reckoning

4.2.2. Signal-Based Localization

4.2.3. Global Positioning

4.2.4. Network of Sensors Localization

4.2.5. Vision-Based Localization

4.2.6. Indoor VR Localization

4.3. Path Planning

- Complete or Heuristic: Wagner et al. [224] designed a complete path planner in which a multi-system path planner uses both coupled and de-coupled algorithms and hence benefits from both techniques. Urdiales et al. designed a complete path planner [225] that uses a pyramid structure to pre-process the information passed to existing classical path planners. Heuristic approaches were applied by Mac et al. [226]. Vokhmintsev [227] designed another heuristic path planner that can be used in unknown dynamic environments.

- Global or Local: Global path planners navigate using information about the environment that is known a priori, such as maps, cells, and grids. A complete path is generated from source to target before the vehicle starts moving [228]. Examples of global planners are Voronoi [229] by Bhattacharya et al., Silhouette [230] by Canny et al., Dijkstra [231] by Skiena et al., A* by Dechter et al. [232], and neural-network-based planning by Yang et al. [233]. A local path planner was proposed by Buniyamin et al. [220], who use a bug algorithm to detect obstacles in the environment with onboard sensors and plan the path. This local planner follows the obstacle border to guide the vehicle towards the target until the required target conditions are met. They propose a new algorithm, ‘PointBug’, that minimizes the use of the border (outer periphery) when generating a path from source to target. Other local path planners are based on [228] splines, as given by Piazzi et al. [234]; Bezier lines, as given by Rastelli et al. [235]; arcs and segments, by Reeds et al. [236]; and clothoid lines [237].

- Static or Dynamic: When an autonomous system encounters static objects in its path, it performs static path planning; when it encounters moving objects, it performs dynamic path planning. Kumar et al. did initial research on static and dynamic path planners for humanoid robots [238]. They developed a novel Petri-net controller that represents the static path planner as a single robot encountering random static obstacles and the dynamic planner as multiple robots encountering random static obstacles. Tuba et al. [239] developed an optimal path planner for static obstacles, adapting the harmony search algorithm to handle static obstacles and danger or no-go zones. Dutta et al. [240] developed a static path planner for snake-like robots that encounter static obstacles, using a critical snakeBug algorithm. As recently as 2020, Gabardos et al. [241] discussed methods for a variant of dynamic path planning based on multisensor fusion to detect the pose, size, and shape of objects along the planned route. The dynamic routing is accomplished by interpolating the route poses, with some being re-positioned. Connell et al. developed dynamic path planners [242] for mobile robots with replanning using RRT. Liu et al. [243] developed a dynamic path planner using an improved ant colony optimization algorithm, which they simulate on a grid map.
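A global planner of the kind described above can be sketched with A* on an occupancy grid that is known a priori. The sketch assumes a 4-connected grid with unit step cost and a Manhattan-distance heuristic. It is an illustration and not the implementation from any of the cited works; the function name and the example map are hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle)."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:  # walk the parent links back to start
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # stale queue entry; a cheaper route was found already
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols and grid[nxt[0]][nxt[1]] == 0:
                new_cost = g + 1
                if new_cost < cost.get(nxt, float("inf")):
                    cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # no route from start to goal


# Hypothetical map: a wall with a single gap between start and goal.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = astar(grid, (0, 0), (3, 0))
print(path)
```

Setting the heuristic to zero turns the same loop into Dijkstra's algorithm. A dynamic planner would rerun or repair the search whenever fused sensor data change the grid.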

4.4. Obstacle Avoidance

5. Fusion of Sensor Data for Autonomous Navigation

5.1. Mapping

5.2. Localization

5.3. Path Planning

5.4. Obstacle Avoidance

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Simpson, R.C. Smart wheelchairs: A literature review. J. Rehabil. Res. Dev. 2005, 42, 423. [Google Scholar] [CrossRef] [PubMed]

- Fehr, L.; Langbein, W.E.; Skaar, S.B. Adequacy of power wheelchair control interfaces for persons with severe disabilities: A clinical survey. J. Rehabil. Res. Dev. 2000, 37, 353–360. [Google Scholar] [PubMed]

- Martins, M.M.; Santos, C.P.; Frizera-Neto, A.; Ceres, R. Assistive mobility devices focusing on smart walkers: Classification and review. Robot. Auton. Syst. 2012, 60, 548–562. [Google Scholar] [CrossRef]

- Noonan, T.H.; Fisher, J.; Bryant, B. Autonomous Lawn Mower. U.S. Patent 5,204,814, 20 April 1993. [Google Scholar]

- Bernini, F. Autonomous Lawn Mower with Recharge Base. U.S. Patent 7,668,631, 23 February 2010. [Google Scholar]

- Ulrich, I.; Mondada, F.; Nicoud, J. Autonomous Vacuum Cleaner. Robot. Auton. Syst. 1997, 19. [Google Scholar] [CrossRef]

- Mutiara, G.; Hapsari, G.; Rijalul, R. Smart guide extension for blind cane. In Proceedings of the 4th International Conference on Information and Communication Technology, Bandung, Indonesia, 25–27 May 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Bharucha, A.J.; Anand, V.; Forlizzi, J.; Dew, M.A.; Reynolds, C.F., III; Stevens, S.; Wactlar, H. Intelligent assistive technology applications to dementia care: current capabilities, limitations, and future challenges. Am. J. Geriatr. Psychiatry 2009, 17, 88–104. [Google Scholar] [CrossRef]

- Cahill, S.; Macijauskiene, J.; Nygård, A.M.; Faulkner, J.P.; Hagen, I. Technology in dementia care. Technol. Disabil. 2007, 19, 55–60. [Google Scholar] [CrossRef]

- Furness, B.W.; Beach, M.J.; Roberts, J.M. Giardiasis surveillance–United States, 1992–1997. MMWR CDC Surveill. Summ. 2000, 49, 1–13. [Google Scholar]

- Topo, P. Technology studies to meet the needs of people with dementia and their caregivers: A literature review. J. Appl. Gerontol. 2009, 28, 5–37. [Google Scholar] [CrossRef]

- First Sensors. Impact of LiDAR by 2032, 1. Available online: https://www.first-sensor.com/cms/upload/investor_relations/publications/First_Sensors_LiDAR_and_Camera_Strategy.pdf (accessed on 1 August 2019).

- Crowley, J.L.; Demazeau, Y. Principles and techniques for sensor data fusion. Signal Process. 1993, 32, 5–27. [Google Scholar] [CrossRef]

- Steinberg, A.N.; Bowman, C.L. Revisions to the JDL data fusion model. In Handbook of Multisensor Data Fusion; CRC Press: Boca Raton, FL, USA, 2017; pp. 65–88. [Google Scholar]

- McLaughlin, D. An integrated approach to hydrologic data assimilation: interpolation, smoothing, and filtering. Adv. Water Resour. 2002, 25, 1275–1286. [Google Scholar] [CrossRef]

- Van Mechelen, I.; Smilde, A.K. A generic linked-mode decomposition model for data fusion. Chemom. Intell. Lab. Syst. 2010, 104, 83–94. [Google Scholar] [CrossRef]

- McGurk, H.; MacDonald, J. Hearing lips and seeing voices. Nature 1976, 264, 746–748. [Google Scholar] [CrossRef] [PubMed]

- Caputo, M.; Denker, K.; Dums, B.; Umlauf, G.; Konstanz, H. 3D Hand Gesture Recognition Based on Sensor Fusion of Commodity Hardware. In Mensch & Computer; Oldenbourg Verlag: München, Germany, 2012. [Google Scholar]

- Lanckriet, G.R.; De Bie, T.; Cristianini, N.; Jordan, M.I.; Noble, W.S. A statistical framework for genomic data fusion. Bioinformatics 2004, 20, 2626–2635. [Google Scholar] [CrossRef] [PubMed]

- Aerts, S.; Lambrechts, D.; Maity, S.; Van Loo, P.; Coessens, B.; De Smet, F.; Tranchevent, L.C.; De Moor, B.; Marynen, P.; Hassan, B.; et al. Gene prioritization through genomic data fusion. Nat. Biotechnol. 2006, 24, 537–544. [Google Scholar] [CrossRef] [PubMed]

- Hall, D.L.; Llinas, J. An introduction to multisensor data fusion. Proc. IEEE 1997, 85, 6–23. [Google Scholar] [CrossRef]

- Webster Sensor Definition. Merriam-Webster Definition of a Sensor. Available online: https://www.merriam-webster.com/dictionary/sensor (accessed on 9 November 2019).

- Collins Dictionary Definition. Collins Dictionary Definition of a Sensor. Available online: https://www.collinsdictionary.com/dictionary/english/sensor (accessed on 9 November 2019).

- Hall, D.L.; McMullen, S.A. Mathematical Techniques in Multisensor Data Fusion; Artech House: Norwood, MA, USA, 2004. [Google Scholar]

- Hall, D.L.; Linn, R.J. A taxonomy of algorithms for multisensor data fusion. In Proceedings of the 1990 Joint Service Data Fusion Symposium, Gold Coast, Australia, 27–31 August 1990. [Google Scholar]

- Liggins, M.E.; Hall, D.L.; Llinas, J. Handbook of Multisensor Data Fusion: Theory and Practice; The Electrical Engineering and Applied Signal Processing Series; CRC Press: Boca Raton, FL, USA, 2009. [Google Scholar]

- Chavez-Garcia, R.O. Multiple Sensor Fusion for Detection, Classification and Tracking of Moving Objects in Driving Environments. Ph.D. Thesis, Université de Grenoble, Grenoble, France, 2014. [Google Scholar]

- De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and camera sensor data for environment sensing in driverless vehicles. arXiv 2018, arXiv:1710.06230v2. [Google Scholar]

- Rao, N.S. A Fusion Method that Performs Better than Best Sensor; Technical Report; Oak Ridge National Lab.: Oak Ridge, TN, USA, 1998.

- Rövid, A.; Remeli, V. Towards Raw Sensor Fusion in 3D Object Detection. In Proceedings of the 2019 IEEE 17th World Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 24–26 January 2019; pp. 293–298. [Google Scholar] [CrossRef]

- Thrun, S. Particle Filters in Robotics. In Proceedings of the 18th Annual Conference on Uncertainty in AI (UAI), Edmonton, AB, Canada, 1–4 August 2002. [Google Scholar]

- Wu, B.; Nevatia, R. Detection of multiple, partially occluded humans in a single image by Bayesian combination of edgelet part detectors. In Proceedings of the Tenth IEEE International Conference on Computer Vision, ICCV 2005, Beijing, China, 17–20 October 2005; Volume 1, pp. 90–97. [Google Scholar]

- Borenstein, J.; Koren, Y. Obstacle avoidance with ultrasonic sensors. IEEE J. Robot. Autom. 1988, 4, 213–218. [Google Scholar] [CrossRef]

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645. [Google Scholar] [CrossRef]

- Chavez-Garcia, R.O.; Aycard, O. Multiple Sensor Fusion and Classification for Moving Object Detection and Tracking. IEEE Trans. Intell. Transp. Syst. 2016, 17, 525–534. [Google Scholar] [CrossRef]

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D object detection from RGB-D data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927. [Google Scholar]

- Baltzakis, H.; Argyros, A.; Trahanias, P. Fusion of laser and visual data for robot motion planning and collision avoidance. Mach. Vis. Appl. 2003, 15, 92–100. [Google Scholar] [CrossRef]

- Luo, R.C.; Yih, C.C.; Su, K.L. Multisensor fusion and integration: Approaches, applications, and future research directions. IEEE Sens. J. 2002, 2, 107–119. [Google Scholar] [CrossRef]

- Lahat, D.; Adali, T.; Jutten, C. Multimodal Data Fusion: An Overview of Methods, Challenges, and Prospects. Proc. IEEE 2015, 103, 1449–1477. [Google Scholar] [CrossRef]

- Shafer, S.; Stentz, A.; Thorpe, C. An architecture for sensor fusion in a mobile robot. In Proceedings of the 1986 IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 7–10 April 1986; Volume 3, pp. 2002–2011. [Google Scholar]

- Roggen, D.; Tröster, G.; Bulling, A. Signal processing technologies for activity-aware smart textiles. In Multidisciplinary Know-How for Smart-Textiles Developers; Elsevier: Amsterdam, The Netherlands, 2013; pp. 329–365. [Google Scholar]

- Foo, P.H.; Ng, G.W. High-level information fusion: An overview. J. Adv. Inf. Fusion 2013, 8, 33–72. [Google Scholar]

- Luo, R.C.; Su, K.L. A review of high-level multisensor fusion: Approaches and applications. In Proceedings of the 1999 IEEE/SICE/RSJ International Conference on Multisensor Fusion and Integration for Intelligent Systems, MFI’99 (Cat. No. 99TH8480), Taipei, Taiwan, 18 August 1999; pp. 25–31. [Google Scholar] [CrossRef]

- Waltz, E.; Llinas, J. Multisensor Data Fusion; Artech House: Boston, MA, USA, 1990; Volume 685. [Google Scholar]

- Hackett, J.K.; Shah, M. Multi-sensor fusion: A perspective. In Proceedings of the 1990 IEEE International Conference on Robotics and Automation, Cincinnati, OH, USA, 13–18 May 1990; pp. 1324–1330. [Google Scholar]

- Grossmann, P. Multisensor data fusion. GEC J. Technol. 1998, 15, 27–37. [Google Scholar]

- Brooks, R.R.; Rao, N.S.; Iyengar, S.S. Resolution of Contradictory Sensor Data. Intell. Autom. Soft Comput. 1997, 3, 287–299. [Google Scholar] [CrossRef]

- Vu, T.D. Vehicle Perception: Localization, Mapping with Detection, Classification and Tracking of Moving Objects. Ph.D. Thesis, Institut National Polytechnique de Grenoble-INPG, Grenoble, France, 2009. [Google Scholar]

- Vu, T.D.; Aycard, O. Laser-based detection and tracking moving objects using data-driven Markov chain Monte Carlo. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’09), Kobe, Japan, 12–17 May 2009; pp. 3800–3806. [Google Scholar]

- Bosse, E.; Roy, J.; Grenier, D. Data fusion concepts applied to a suite of dissimilar sensors. In Proceedings of the 1996 Canadian Conference on Electrical and Computer Engineering, Calgary, AB, Canada, 26–29 May 1996; Volume 2, pp. 692–695. [Google Scholar]

- Jeon, D.; Choi, H. Multi-sensor fusion for vehicle localization in real environment. In Proceedings of the 2015 15th International Conference on Control, Automation and Systems (ICCAS), Busan, Korea, 13–16 October 2015; pp. 411–415. [Google Scholar]

- Chibelushi, C.C.; Mason, J.S.; Deravi, F. Feature-Level Data Fusion for Bimodal Person Recognition. Feature Level Datafusion. 1997. Available online: https://www.imvc.co.il/Portals/117/Shmoolik_Mangan.pdf (accessed on 1 August 2019).

- Ross, A.A.; Govindarajan, R. Feature level fusion of hand and face biometrics. In Proceedings of the Biometric Technology for Human Identification II, International Society for Optics and Photonics, Boston; Artech house: Boston, MA, USA, 2005; Volume 5779, pp. 196–204. [Google Scholar]

- Ross, A. Fusion, Feature-Level. In Encyclopedia of Biometrics; Springer: Boston, MA, USA, 2009; pp. 597–602. [Google Scholar] [CrossRef]

- Nehmadi, Y.; Mangan, S.; Shahar, B.-E.; Cohen, A.; Cohen, R.; Goldentouch, L.; Ur, S. Redundancy Schemes with Low-Level Sensor Fusion for Autonomous Vehicles; Google Patents publisher. U.S. Patent 10,445,928, 15 October 2019. [Google Scholar]

- bear.org. North American Bear. Senses and Abilities-North American Bear Center. Available online: https://bear.org/senses-and-abilities/ (accessed on 1 August 2019).

- Crowley, J.L. A Computational Paradigm for Three Dimensional Scene Analysis; Technical Report CMU-RI-TR-84-11; Carnegie Mellon University: Pittsburgh, PA, USA, 1984. [Google Scholar]

- Crowley, J. Navigation for an intelligent mobile robot. IEEE J. Robot. Autom. 1985, 1, 31–41. [Google Scholar] [CrossRef]

- Herman, M.; Kanade, T. Incremental reconstruction of 3D scenes from multiple, complex images. Artif. Intell. 1986, 30, 289–341. [Google Scholar] [CrossRef]

- Brooks, R. Visual map making for a mobile robot. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation, St. Louis, MO, USA, 25–28 March 1985; Volume 2, pp. 824–829. [Google Scholar]

- Smith, R. On the estimation and representation of spatial uncertainty. Int. J. Robot. Res. 1987, 5, 113–119. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.F. Consistent integration and propagation of disparate sensor observations. Int. J. Robot. Res. 1987, 6, 3–24. [Google Scholar] [CrossRef]

- Maheswari, R.U.; Umamaheswari, R. Wind Turbine Drivetrain Expert Fault Detection System: Multivariate Empirical Mode Decomposition based Multi-sensor Fusion with Bayesian Learning Classification. Intell. Autom. Soft Comput. 2019, 10, 296–311. [Google Scholar] [CrossRef]

- Faugeras, O.; Ayache, N.; Faverjon, B. Building visual maps by combining noisy stereo measurements. In Proceedings of the 1986 IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 7–10 April 1986; Volume 3, pp. 1433–1438. [Google Scholar]

- Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558. [Google Scholar] [CrossRef] [PubMed]

- Li, S.Z. Invariant surface segmentation through energy minimization with discontinuities. Int. J. Comput. Vis. 1990, 5, 161–194. [Google Scholar] [CrossRef]

- Koch, C.; Marroquin, J.; Yuille, A. Analog “neuronal” networks in early vision. Proc. Natl. Acad. Sci. USA 1986, 83, 4263–4267. [Google Scholar] [CrossRef] [PubMed]

- Poggio, T.; Koch, C. Ill-posed problems in early vision: From computational theory to analogue networks. Proc. R. Soc. London. Ser. B Biol. Sci. 1985, 226, 303–323. [Google Scholar]

- Blake, A.; Zisserman, A. Visual Reconstruction; MIT Press: Cambridge, MA, USA, 1987. [Google Scholar]

- Ou, S.; Fagg, A.H.; Shenoy, P.; Chen, L. Application of reinforcement learning in multisensor fusion problems with conflicting control objectives. Intell. Autom. Soft Comput. 2009, 15, 223–235. [Google Scholar] [CrossRef]

- Brownston, L.; Farrell, R.; Kant, E.; Martin, N. Programming Expert Systems in OPS5; Addison-Wesley: Boston, MA, USA, 1985. [Google Scholar]

- Forgy, C.L. Rete: A fast algorithm for the many pattern/many object pattern match problem. In Readings in Artificial Intelligence and Databases; Elsevier: Amsterdam, The Netherlands, 1989; pp. 547–559. [Google Scholar]

- Shortliffe, E.H.; Buchanan, B.G. A model of inexact reasoning in medicine. Addison-Wesley Reading, MA. In Rule-Based Expert Systems; John Wiley & Sons: New York, NY, USA, 1984; pp. 233–262. [Google Scholar]

- Hayes-Roth, B. A blackboard architecture for control. Artif. Intell. 1985, 26, 251–321. [Google Scholar] [CrossRef]

- Zadeh, L.A.; Hayes, J.; Michie, D.; Mikulich, L. Machine intelligence. In A Theory of Approximate Reasoning; IEEE: Berkeley, CA, USA, 20 August 1979; Volume 9, pp. 1004–1010. [Google Scholar]

- Duda, R.O.; Hart, P.E.; Nilsson, N.J. Subjective Bayesian methods for rule-based inference systems. In Readings in Artificial Intelligence; Elsevier: Amsterdam, The Netherlands, 1981; pp. 192–199. [Google Scholar]

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976; Volume 42. [Google Scholar]

- Hall, D.L.; McMullen, S.A. Mathematical Techniques in Multisensor Data Fusion; Artech House Inc.: Norwood, MA, USA, 1992; Volume 57. [Google Scholar]

- inforfusion. Information Fusion Definition. Available online: http://www.inforfusion.org/mission.htm (accessed on 1 August 2019).

- Elmenreich, W. An Introduction to Sensor Fusion; Vienna University of Technology: Vienna, Austria, 2002; Volume 502. [Google Scholar]

- Garcia, F.; Martin, D.; de la Escalera, A.; Armingol, J.M. Sensor Fusion Methodology for Vehicle Detection. IEEE Intell. Transp. Syst. Mag. 2017, 9, 123–133. [Google Scholar] [CrossRef]

- Shahian Jahromi, B.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357. [Google Scholar] [CrossRef]

- Uhlmann, J. Simultaneous Map Building and Localization for Real Time Applications. Transfer Thesis, Univ. Oxford, Oxford, UK, 1994. [Google Scholar]

- Uhlmann, J.K. Covariance consistency methods for fault-tolerant distributed data fusion. Inf. Fusion 2003, 4, 201–215. [Google Scholar] [CrossRef]

- Castanedo, F.; Garcia, J.; Patricio, M.A.; Molina, J.M. Analysis of distributed fusion alternatives in coordinated vision agents. In Proceedings of the 2008 11th International Conference on Information Fusion, Cologne, Germany, 30 June–3 July 2008; pp. 1–6. [Google Scholar]

- Bar-Shalom, Y.; Willett, P.K.; Tian, X. Tracking and Data Fusion; YBS Publishing: Storrs, CT, USA, 2011; Volume 11. [Google Scholar]

- Castanedo, F. A review of data fusion techniques. Sci. World J. 2013, 2013. [Google Scholar] [CrossRef]

- Fortmann, T.; Bar-Shalom, Y.; Scheffe, M. Sonar tracking of multiple targets using joint probabilistic data association. IEEE J. Ocean. Eng. 1983, 8, 173–184. [Google Scholar] [CrossRef]

- He, S.; Shin, H.S.; Tsourdos, A. Distributed joint probabilistic data association filter with hybrid fusion strategy. IEEE Trans. Instrum. Meas. 2019, 69, 286–300. [Google Scholar] [CrossRef]

- Goeman, J.J.; Meijer, R.J.; Krebs, T.J.P.; Solari, A. Simultaneous control of all false discovery proportions in large-scale multiple hypothesis testing. Biometrika 2019, 106, 841–856. [Google Scholar] [CrossRef]

- Olfati-Saber, R. Distributed Kalman filtering for sensor networks. In Proceedings of the 2007 46th IEEE Conference on Decision and Control, New Orleans, LA, USA, 12–14 December 2007; pp. 5492–5498. [Google Scholar]

- Zhang, Y.; Huang, Q.; Zhao, K. Hybridizing association rules with adaptive weighted decision fusion for personal credit assessment. Syst. Sci. Control Eng. 2019, 7, 135–142. [Google Scholar] [CrossRef]

- Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR–camera fusion for road detection using fully convolutional neural networks. Robot. Auton. Syst. 2019, 111, 125–131. [Google Scholar] [CrossRef]

- Chen, L.; Cetin, M.; Willsky, A.S. Distributed data association for multi-target tracking in sensor networks. In Proceedings of the IEEE Conference on Decision and Control, Plaza de España Seville, Spain, 12–15 December 2005. [Google Scholar]

- Dwivedi, R.; Dey, S. A novel hybrid score level and decision level fusion scheme for cancelable multi-biometric verification. Appl. Intell. 2019, 49, 1016–1035. [Google Scholar] [CrossRef]

- Dasarathy, B.V. Sensor fusion potential exploitation-innovative architectures and illustrative applications. Proc. IEEE 1997, 85, 24–38. [Google Scholar] [CrossRef]

- Steinberg, A.N.; Bowman, C.L. Revisions to the JDL data fusion model. In Handbook of Multisensor Data Fusion; CRC Press: Boca Raton, FL, USA, 2008; pp. 65–88. [Google Scholar]

- White, F.E. Data Fusion Lexicon; Technical Report; Joint Directors of Labs: Washington, DC, USA, 1991. [Google Scholar]

- Carli, R.; Chiuso, A.; Schenato, L.; Zampieri, S. Distributed Kalman filtering based on consensus strategies. IEEE J. Sel. Areas Commun. 2008, 26, 622–633. [Google Scholar] [CrossRef]

- Mahmoud, M.S.; Khalid, H.M. Distributed Kalman filtering: A bibliographic review. IET Control Theory Appl. 2013, 7, 483–501. [Google Scholar] [CrossRef]

- Bowman, C. Data Fusion and Neural Networks, 1643 Hemlock Way Broomfield, CO. Personal communication, regarding Revisions to the JDL Data Fusion Model. 1995. [Google Scholar] [CrossRef]

- Taropa, E.; Srini, V.P.; Lee, W.J.; Han, T.D. Data fusion applied on autonomous ground vehicles. In Proceedings of the 2006 8th International Conference Advanced Communication Technology, Phoenix Park, Korea, 20–22 February 2006; Volume 1, p. 6. [Google Scholar]

- Dieterle, T.; Particke, F.; Patino-Studencki, L.; Thielecke, J. Sensor data fusion of LIDAR with stereo RGB-D camera for object tracking. In Proceedings of the 2017 IEEE SENSORS, Glasgow, UK, 29 October–1 November 2017; pp. 1–3. [Google Scholar]

- NOAA. What Is LiDAR? Available online: https://oceanservice.noaa.gov/facts/lidar.html (accessed on 19 March 2020).

- Yole Developpement, W. Impact of LiDAR by 2032, 1. The Automotive LiDAR Market. Available online: http://www.woodsidecap.com/wp-content/uploads/2018/04/Yole_WCP-LiDAR-Report_April-2018-FINAL.pdf (accessed on 23 March 2020).

- Kim, W.; Tanaka, M.; Okutomi, M.; Sasaki, Y. Automatic labeled LiDAR data generation based on precise human model. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 43–49. [Google Scholar]

- Miltiadou, M.; Michael, G.; Campbell, N.D.; Warren, M.; Clewley, D.; Hadjimitsis, D.G. Open source software DASOS: Efficient accumulation, analysis, and visualisation of full-waveform lidar. In Proceedings of the Seventh International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2019), International Society for Optics and Photonics, Paphos, Cyprus, 18–21 March 2019; Volume 11174, p. 111741. [Google Scholar]

- Hu, P.; Huang, H.; Chen, Y.; Qi, J.; Li, W.; Jiang, C.; Wu, H.; Tian, W.; Hyyppä, J. Analyzing the Angle Effect of Leaf Reflectance Measured by Indoor Hyperspectral Light Detection and Ranging (LiDAR). Remote Sens. 2020, 12, 919. [Google Scholar] [CrossRef]

- Warren, M.E. Automotive LIDAR technology. In Proceedings of the 2019 Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; pp. C254–C255. [Google Scholar]

- Velodyne. Velodyne Puck Lidar. Available online: https://velodynelidar.com/products/puck/ (accessed on 2 February 2020).

- A1, R.L. RP Lidar A1 Details. Available online: http://www.ksat.com/news/alarming-40-percent-increase-in-pedestrian-deaths-in-2016-in-san-antonio (accessed on 1 August 2019).

- Ouster. Ouster Lidar. Available online: https://ouster.com/lidar-product-details/ (accessed on 2 February 2020).

- Eclipse. Eclipse Mapping Systems. Available online: https://geo-matching.com/airborne-laser-scanning/eclipse-autonomous-mapping-system (accessed on 2 February 2020).

- Quadros, N. Unlocking the characteristics of bathymetric lidar sensors. LiDAR Mag. 2013, 3. Available online: http://lidarmag.com/wp-content/uploads/PDF/LiDARMagazine_Quadros-BathymetricLiDARSensors_Vol3No6.pdf (accessed on 2 February 2020).

- igi global. RGB Camera Details. Available online: https://www.igi-global.com/dictionary/mobile-applications-for-automatic-object-recognition/60647 (accessed on 2 February 2020).

- Sigel, K.; DeAngelis, D.; Ciholas, M. Camera with Object Recognition/Data Output. U.S. Patent 6,545,705, 8 April 2003. [Google Scholar]

- De Silva, V.; Roche, J.; Kondoz, A. Robust fusion of LiDAR and wide-angle camera data for autonomous mobile robots. Sensors 2018, 18, 2730. [Google Scholar] [CrossRef] [PubMed]

- Guy, T. Benefits and Advantages of 360° Cameras. Available online: https://www.threesixtycameras.com/pros-cons-every-360-camera/ (accessed on 10 January 2020).

- Myllylä, R.; Marszalec, J.; Kostamovaara, J.; Mäntyniemi, A.; Ulbrich, G.J. Imaging distance measurements using TOF lidar. J. Opt. 1998, 29, 188–193. [Google Scholar] [CrossRef]

- Nair, R.; Lenzen, F.; Meister, S.; Schäfer, H.; Garbe, C.; Kondermann, D. High accuracy TOF and stereo sensor fusion at interactive rates. In Proceedings of the ECCV: European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Volume 7584, pp. 1–11. [Google Scholar] [CrossRef]

- Hewitt, R.A.; Marshall, J.A. Towards intensity-augmented SLAM with LiDAR and ToF sensors. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1956–1961. [Google Scholar] [CrossRef]

- Turnkey, A. Benefits and Advantages of TOF Industrial Cameras. Available online: http://www.adept.net.au/news/newsletter/201111-nov/article_tof_Mesa.shtml (accessed on 10 January 2020).

- Hinkel, R.; Knieriemen, T. Environment perception with a laser radar in a fast moving robot. In Robot Control 1988 (Syroco’88); Elsevier: Amsterdam, The Netherlands, 1989; pp. 271–277. [Google Scholar]

- fierceelectronics.com. Sensor Types Drive Autonomous Vehicles. Available online: https://www.fierceelectronics.com/components/three-sensor-types-drive-autonomous-vehicles (accessed on 2 October 2019).

- John Campbell, D. Robust and Optimal Methods for Geometric Sensor Data Alignment. Ph.D. Thesis, The Australian National University, Canberra, Australia, 2018. [Google Scholar]

- Maddern, W.; Newman, P. Real-time probabilistic fusion of sparse 3D LIDAR and dense stereo. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2181–2188. [Google Scholar]

- Realsense, I. Intel Realsense D435 Details. Realsense D435. Available online: https://click.intel.com/intelr-realsensetm-depth-camera-d435.html (accessed on 1 August 2019).

- Realsense, I. Tuning Depth Cameras for Best Performance. Realsense D435 Tuning. Available online: https://dev.intelrealsense.com/docs/tuning-depth-cameras-for-best-performance (accessed on 23 March 2020).

- Mirzaei, F.M.; Kottas, D.G.; Roumeliotis, S.I. 3D LIDAR–camera intrinsic and extrinsic calibration: Identifiability and analytical least-squares-based initialization. Int. J. Robot. Res. 2012, 31, 452–467. [Google Scholar] [CrossRef]

- Dong, W.; Isler, V. A novel method for the extrinsic calibration of a 2D laser rangefinder and a camera. IEEE Sens. J. 2018, 18, 4200–4211. [Google Scholar] [CrossRef]

- Li, J.; He, X.; Li, J. 2D LiDAR and camera fusion in 3D modeling of indoor environment. In Proceedings of the 2015 National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 June 2015; pp. 379–383. [Google Scholar]

- Zhou, L.; Li, Z.; Kaess, M. Automatic Extrinsic Calibration of a Camera and a 3D LiDAR using Line and Plane Correspondences. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS, Madrid, Spain, 1–5 October 2018. [Google Scholar]

- Crowley, J.; Ramparany, F. Mathematical tools for manipulating uncertainty in perception. In Proceedings of the AAAI Workshop on Spatial Reasoning and Multi-Sensor Fusion, St. Charles, IL, USA, 5–7 October 1987. [Google Scholar]

- Jing, L.; Wang, T.; Zhao, M.; Wang, P. An adaptive multi-sensor data fusion method based on deep convolutional neural networks for fault diagnosis of planetary gearbox. Sensors 2017, 17, 414. [Google Scholar] [CrossRef]

- Guindel, C.; Beltrán, J.; Martín, D.; García, F. Automatic extrinsic calibration for lidar-stereo vehicle sensor setups. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–6. [Google Scholar]

- Nobrega, R.; Quintanilha, J.; O’Hara, C. A noise-removal approach for lidar intensity images using anisotropic diffusion filtering to preserve object shape characteristics. In Proceedings of the ASPRS Annual Conference 2007: Identifying Geospatial Solutions, American Society for Photogrammetry and Remote Sensing, Tampa, FL, USA, 7–11 May 2007; Volume 2, pp. 471–481. [Google Scholar]

- Cao, N.; Zhu, C.; Kai, Y.; Yan, P. A method of background noise reduction in lidar data. Appl. Phys. B 2013, 113. [Google Scholar] [CrossRef]

- Hänsler, E.; Schmidt, G. Topics in Acoustic Echo and Noise Control: Selected Methods for the Cancellation of Acoustical Echoes, the Reduction of Background Noise, and Speech Processing; Springer Science & Business Media: Boston, MA, USA, 2006. [Google Scholar]

- Gannot, S.; Burshtein, D.; Weinstein, E. Iterative and sequential Kalman filter-based speech enhancement algorithms. IEEE Trans. Speech Audio Process. 1998, 6, 373–385. [Google Scholar] [CrossRef]

- Kalman, R.E. Contributions to the theory of optimal control. Bol. Soc. Mat. Mex. 1960, 5, 102–119. [Google Scholar]

- Gelb, A. Applied Optimal Estimation; MIT Press: Cambridge, MA, USA, 1974. [Google Scholar]

- Julier, S.J.; Uhlmann, J.K. A General Method for Approximating Nonlinear Transformations of Probability Distributions; Technical Report; Robotics Research Group, Department of Engineering Science: Oxford, UK, 1996. [Google Scholar]

- Nørgaard, M.; Poulsen, N.K.; Ravn, O. Advances in Derivative-Free State Estimation for Nonlinear Systems; Informatics and Mathematical Modelling, Technical University of Denmark, DTU: Lyngby, Denmark, 2000. [Google Scholar]

- Nørgaard, M.; Poulsen, N.K.; Ravn, O. New developments in state estimation for nonlinear systems. Automatica 2000, 36, 1627–1638. [Google Scholar] [CrossRef]

- Lefebvre, T.; Bruyninckx, H.; De Schutter, J. Kalman filters for nonlinear systems: A comparison of performance. Int. J. Control 2004, 77, 639–653. [Google Scholar] [CrossRef]

- Julier, S.; Uhlmann, J.; Durrant-Whyte, H.F. A new method for the nonlinear transformation of means and covariances in filters and estimators. IEEE Trans. Autom. Control 2000, 45, 477–482. [Google Scholar] [CrossRef]

- Sorenson, H.W. Kalman Filtering: Theory and Application; IEEE: New York, NY, USA, 1985. [Google Scholar]

- Julier, S.J.; Uhlmann, J.K. New extension of the Kalman filter to nonlinear systems. In Proceedings of the International Society for Optics and Photonics, Signal Processing, Sensor Fusion, and Target Recognition VI, Orlando, FL, USA, 28 July 1997; Volume 3068, pp. 182–193. [Google Scholar]

- Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422. [Google Scholar] [CrossRef]

- Wan, E.A.; Van Der Merwe, R. The unscented Kalman filter for nonlinear estimation. In Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373), Lake Louise, AB, Canada, 4 October 2000; pp. 153–158. [Google Scholar]

- Julier, S.J. The spherical simplex unscented transformation. In Proceedings of the 2003 American Control Conference, Denver, CO, USA, 4–6 June 2003; Volume 3, pp. 2430–2434. [Google Scholar]

- Olfati-Saber, R. Distributed Kalman filter with embedded consensus filters. In Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 15 December 2005; pp. 8179–8184. [Google Scholar]

- Spanos, D.P.; Olfati-Saber, R.; Murray, R.M. Approximate distributed Kalman filtering in sensor networks with quantifiable performance. In Proceedings of the IPSN 2005, Fourth International Symposium on Information Processing in Sensor Networks, Boise, ID, USA, 15 April 2005; pp. 133–139. [Google Scholar]

- Gordon, N.J.; Salmond, D.J.; Smith, A.F. Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proc. F-Radar Signal Process. 1993, 140, 107–113. [Google Scholar] [CrossRef]

- Thrun, S. Particle filters in robotics. In Proceedings of the Eighteenth Conference on Uncertainty In Artificial Intelligence; Morgan Kaufmann Publishers Inc.: Burlington, MA, USA, 2002; pp. 511–518. [Google Scholar]

- Doucet, A. Sequential Monte Carlo Methods in Practice. Technometrics 2003, 45, 106. [Google Scholar] [CrossRef]

- Bugallo, M.F.; Xu, S.; Djurić, P.M. Performance comparison of EKF and particle filtering methods for maneuvering targets. Digit. Signal Process. 2007, 17, 774–786. [Google Scholar] [CrossRef]

- Van Der Merwe, R.; Doucet, A.; De Freitas, N.; Wan, E.A. The unscented particle filter. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–8 December 2001; pp. 584–590. [Google Scholar]

- Carpenter, J.; Clifford, P.; Fearnhead, P. Improved particle filter for nonlinear problems. IEE Proc.-Radar Sonar Navig. 1999, 146, 2–7. [Google Scholar] [CrossRef]

- Hsiao, K.; Miller, J.; de Plinval-Salgues, H. Particle filters and their applications. Cogn. Robot. 2005, 4. [Google Scholar]

- Waxman, A.; Moigne, J.; Srinivasan, B. Visual navigation of roadways. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation, St. Louis, MO, USA, 25–28 March 1985; Volume 2, pp. 862–867. [Google Scholar]

- Delahoche, L.; Pégard, C.; Marhic, B.; Vasseur, P. A navigation system based on an omnidirectional vision sensor. In Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robot and Systems, Innovative Robotics for Real-World Applications, IROS’97, Grenoble, France, 11 September 1997; Volume 2, pp. 718–724. [Google Scholar]

- Zingaretti, P.; Carbonaro, A. Route following based on adaptive visual landmark matching. Robot. Auton. Syst. 1998, 25, 177–184. [Google Scholar] [CrossRef]

- Research, B. Global Vision and Navigation for Autonomous Vehicle.

- Thrun, S. Robotic Mapping: A Survey; CMU-CS-02–111; Morgan Kaufmann Publishers: Burlington, MA, USA, 2002. [Google Scholar]

- Thorpe, C.; Hebert, M.H.; Kanade, T.; Shafer, S.A. Vision and navigation for the Carnegie-Mellon Navlab. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 362–373. [Google Scholar] [CrossRef]

- Zimmer, U.R. Robust world-modelling and navigation in a real world. Neurocomputing 1996, 13, 247–260. [Google Scholar] [CrossRef]

- Research, K. Autonomous Navigation Market: Investigation and Growth Forecasted until the End of 2025. Marketwatch.com Press Release. Available online: https://www.marketwatch.com/press-release/autonomous-navigation-market-investigation-and-growth-forecasted-until-the-end-of-2025-2019-11-13 (accessed on 12 November 2019).

- Brooks, R. A robust layered control system for a mobile robot. IEEE J. Robot. Autom. 1986, 2, 14–23. [Google Scholar] [CrossRef]

- Danescu, R.G. Obstacle detection using dynamic Particle-Based occupancy grids. In Proceedings of the 2011 International Conference on Digital Image Computing: Techniques and Applications, Noosa, QLD, Australia, 6–8 December 2011; pp. 585–590. [Google Scholar]

- Leibe, B.; Seemann, E.; Schiele, B. Pedestrian detection in crowded scenes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 878–885. [Google Scholar]

- Lwowski, J.; Kolar, P.; Benavidez, P.; Rad, P.; Prevost, J.J.; Jamshidi, M. Pedestrian detection system for smart communities using deep Convolutional Neural Networks. In Proceedings of the 2017 12th System of Systems Engineering Conference (SoSE), Waikoloa, HI, USA, 18–21 June 2017; pp. 1–6. [Google Scholar]

- Kortenkamp, D.; Weymouth, T. Topological mapping for mobile robots using a combination of sonar and vision sensing. Proc. AAAI 1994, 94, 979–984. [Google Scholar]

- Engelson, S.P.; McDermott, D.V. Error correction in mobile robot map learning. In Proceedings of the IEEE International Conference on Robotics and Automation, Nice, France, 12–14 May 1992; pp. 2555–2560. [Google Scholar]

- Kuipers, B.; Byun, Y.T. A robot exploration and mapping strategy based on a semantic hierarchy of spatial representations. Robot. Auton. Syst. 1991, 8, 47–63. [Google Scholar] [CrossRef]

- Thrun, S.; Bücken, A. Integrating grid-based and topological maps for mobile robot navigation. In Proceedings of the National Conference on Artificial Intelligence, Portland, OR, USA, 4–8 August 1996; pp. 944–951. [Google Scholar]

- Thrun, S.; Buecken, A.; Burgard, W.; Fox, D.; Wolfram, A.B.; Fox, B.D.; Fröhlinghaus, T.; Hennig, D.; Hofmann, T.; Krell, M.; et al. Map Learning and High-Speed Navigation in RHINO; MIT/AAAI Press: Cambridge, MA, USA, 1996. [Google Scholar]

- Moravec, H.P. Sensor fusion in certainty grids for mobile robots. AI Mag. 1988, 9, 61. [Google Scholar]

- Elfes, A. Occupancy Grids: A Probabilistic Framework for Robot Perception and Navigation. Ph.D. Thesis, Carnegie-Mellon University, Pittsburgh, PA, USA, 1989. [Google Scholar]

- Borenstein, J.; Koren, Y. The vector field histogram-fast obstacle avoidance for mobile robots. IEEE Trans. Robot. Autom. 1991, 7, 278–288. [Google Scholar] [CrossRef]

- Ramsdale, J.D.; Balme, M.R.; Conway, S.J.; Gallagher, C.; van Gasselt, S.A.; Hauber, E.; Orgel, C.; Séjourné, A.; Skinner, J.A.; Costard, F.; et al. Grid-based mapping: A method for rapidly determining the spatial distributions of small features over very large areas. Planet. Space Sci. 2017, 140, 49–61. [Google Scholar] [CrossRef]

- Jiang, Z.; Zhu, J.; Jin, C.; Xu, S.; Zhou, Y.; Pang, S. Simultaneously merging multi-robot grid maps at different resolutions. In Multimedia Tools and Applications; Springer Science & Business Media: Berlin, Germany, 2019; pp. 1–20. [Google Scholar]

- Burgard, W.; Fox, D.; Hennig, D.; Schmidt, T. Estimating the absolute position of a mobile robot using position probability grids. In Proceedings of the National Conference on Artificial Intelligence, Portland, OR, USA, 4–8 August 1996; pp. 896–901. [Google Scholar]

- Gutmann, J.S.; Schlegel, C. AMOS: Comparison of scan matching approaches for self-localization in indoor environments. In Proceedings of the First Euromicro Workshop on Advanced Mobile Robots (EUROBOT’96), Kaiserslautern, Germany, 9–11 October 1996; pp. 61–67. [Google Scholar]

- Zhang, Z.; Deriche, R.; Faugeras, O.; Luong, Q.T. A robust technique for matching two uncalibrated images through the recovery of the unknown epipolar geometry. Artif. Intell. 1995, 78, 87–119. [Google Scholar] [CrossRef]

- Lu, F.; Milios, E. Robot pose estimation in unknown environments by matching 2D range scans. J. Intell. Robot. Syst. 1997, 18, 249–275. [Google Scholar] [CrossRef]

- Buschka, P. An Investigation of Hybrid Maps for Mobile Robots. Ph.D. Thesis, Örebro universitetsbibliotek, Örebro, Sweden, 2005. [Google Scholar]

- Fernández-Madrigal, J.A. Simultaneous Localization and Mapping for Mobile Robots: Introduction and Methods; IGI Global: Philadelphia, PA, USA, 2012. [Google Scholar]

- Thrun, S. Robotic mapping: A survey. Explor. Artif. Intell. New Millenn. 2002, 1, 1–35. [Google Scholar]

- Leonard, J.J.; Durrant-Whyte, H.F.; Cox, I.J. Dynamic map building for an autonomous mobile robot. Int. J. Robot. Res. 1992, 11, 286–298. [Google Scholar] [CrossRef]

- Dissanayake, M.W.M.G.; Newman, P.; Clark, S.; Durrant-Whyte, H.F.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 2001, 17, 229–241. [Google Scholar] [CrossRef]

- Mirowski, P.; Grimes, M.; Malinowski, M.; Hermann, K.M.; Anderson, K.; Teplyashin, D.; Simonyan, K.; Zisserman, A.; Hadsell, R.; et al. Learning to navigate in cities without a map. In Proceedings of the Advances in Neural Information Processing Systems, Montreal Convention Centre, Montreal, QC, Canada, 3–8 December 2018; pp. 2419–2430. [Google Scholar]

- Pritsker, A. Introduction to Simulation and SLAM II, 3rd ed.; U.S. Department of Energy Office of Scientific and Technical Information: Oak Ridge, TN, USA, 1986. [Google Scholar]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef] [PubMed]

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 573–580. [Google Scholar]

- Wikipedia.com. List of SLAM Methods. Available online: https://en.wikipedia.org/wiki/List_of_SLAM_Methods (accessed on 1 August 2019).

- Aguilar, W.G.; Morales, S.; Ruiz, H.; Abad, V. RRT* GL based optimal path planning for real-time navigation of UAVs. In International Work-Conference on Artificial Neural Networks; Springer: Boston, MA, USA, 2017; pp. 585–595. [Google Scholar]

- Huang, S.; Dissanayake, G. Robot Localization: An Introduction. In Wiley Encyclopedia of Electrical and Electronics Engineering; John Wiley & Sons: New York, NY, USA, 1999; pp. 1–10. [Google Scholar]

- Huang, S.; Dissanayake, G. Convergence and consistency analysis for extended Kalman filter based SLAM. IEEE Trans. Robot. 2007, 23, 1036–1049. [Google Scholar] [CrossRef]

- Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of wireless indoor positioning techniques and systems. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 1067–1080. [Google Scholar] [CrossRef]

- Leonard, J.J.; Durrant-Whyte, H.F. Mobile robot localization by tracking geometric beacons. IEEE Trans. Robot. Autom. 1991, 7, 376–382. [Google Scholar] [CrossRef]

- Betke, M.; Gurvits, L. Mobile robot localization using landmarks. IEEE Trans. Robot. Autom. 1997, 13, 251–263. [Google Scholar] [CrossRef]

- Thrun, S.; Fox, D.; Burgard, W.; Dellaert, F. Robust Monte Carlo localization for mobile robots. Artif. Intell. 2001, 128, 99–141. [Google Scholar] [CrossRef]

- Kwon, S.; Yang, K.; Park, S. An effective Kalman filter localization method for mobile robots. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 1524–1529. [Google Scholar]

- Ojeda, L.; Borenstein, J. Personal dead-reckoning system for GPS-denied environments. In Proceedings of the IEEE International Workshop on Safety, Security and Rescue Robotics, SSRR 2007, Rome, Italy, 27–29 September 2007; pp. 1–6. [Google Scholar]

- Levi, R.W.; Judd, T. Dead Reckoning Navigational System Using Accelerometer to Measure Foot Impacts. U.S. Patent 5,583,776, 1996. [Google Scholar]

- Elnahrawy, E.; Li, X.; Martin, R.P. The limits of localization using signal strength: A comparative study. In Proceedings of the 2004 First Annual IEEE Communications Society Conference on Sensor and Ad Hoc Communications and Networks, Santa Clara, CA, USA, 4–7 October 2004; pp. 406–414. [Google Scholar]

- Neves, A.; Fonseca, H.C.; Ralha, C.G. Location agent: A study using different wireless protocols for indoor localization. Int. J. Wirel. Commun. Mob. Comput. 2013, 1, 1–6. [Google Scholar] [CrossRef]

- Whitehouse, K.; Karlof, C.; Culler, D. A practical evaluation of radio signal strength for ranging-based localization. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2007, 11, 41–52. [Google Scholar] [CrossRef]

- He, S.; Chan, S.H.G. Wi-Fi fingerprint-based indoor positioning: Recent advances and comparisons. IEEE Commun. Surv. Tutor. 2016, 18, 466–490. [Google Scholar] [CrossRef]

- Wang, Y.; Ye, Q.; Cheng, J.; Wang, L. RSSI-based Bluetooth indoor localization. In Proceedings of the 2015 11th International Conference on Mobile Ad-hoc and Sensor Networks (MSN), Shenzhen, China, 16–18 December 2015; pp. 165–171. [Google Scholar]

- Howell, E.; NAV Star. Navstar: GPS Satellite Network. Available online: https://www.space.com/19794-navstar.html (accessed on 1 August 2019).

- Robotics, A. Experience the New Mobius. Available online: https://www.asirobots.com/platforms/mobius/ (accessed on 1 August 2019).

- Choi, B.S.; Lee, J.J. Sensor network based localization algorithm using fusion sensor-agent for indoor service robot. IEEE Trans. Consum. Electron. 2010, 56, 1457–1465. [Google Scholar] [CrossRef]

- Ramer, C.; Sessner, J.; Scholz, M.; Zhang, X.; Franke, J. Fusing low-cost sensor data for localization and mapping of automated guided vehicle fleets in indoor applications. In Proceedings of the 2015 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), San Diego, CA, USA, 14–16 September 2015; pp. 65–70. [Google Scholar]

- Fontanelli, D.; Ricciato, L.; Soatto, S. A fast RANSAC-based registration algorithm for accurate localization in unknown environments using LiDAR measurements. In Proceedings of the IEEE International Conference on Automation Science and Engineering, CASE 2007, Scottsdale, AZ, USA, 22–25 September 2007; pp. 597–602. [Google Scholar]

- Wan, K.; Ma, L.; Tan, X. An improvement algorithm on RANSAC for image-based indoor localization. In Proceedings of the 2016 International Wireless Communications and Mobile Computing Conference (IWCMC), Paphos, Cyprus, 5–9 September 2016; pp. 842–845. [Google Scholar]

- Biswas, J.; Veloso, M. Depth camera based indoor mobile robot localization and navigation. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 1697–1702. [Google Scholar]

- Vive, W.H. HTC Vive Details. Available online: https://en.wikipedia.org/wiki/HTC_Vive (accessed on 1 August 2019).

- Buniyamin, N.; Ngah, W.W.; Sariff, N.; Mohamad, Z. A simple local path planning algorithm for autonomous mobile robots. Int. J. Syst. Appl. Eng. Dev. 2011, 5, 151–159. [Google Scholar]

- Popović, M.; Vidal-Calleja, T.; Hitz, G.; Sa, I.; Siegwart, R.; Nieto, J. Multiresolution mapping and informative path planning for UAV-based terrain monitoring. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1382–1388. [Google Scholar]

- Laghmara, H.; Boudali, M.; Laurain, T.; Ledy, J.; Orjuela, R.; Lauffenburger, J.; Basset, M. Obstacle Avoidance, Path Planning and Control for Autonomous Vehicles. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 529–534. [Google Scholar]

- Rashid, A.T.; Ali, A.A.; Frasca, M.; Fortuna, L. Path planning with obstacle avoidance based on visibility binary tree algorithm. Robot. Auton. Syst. 2013, 61, 1440–1449. [Google Scholar] [CrossRef]

- Wagner, G.; Choset, H. M*: A complete multirobot path planning algorithm with performance bounds. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 3260–3267. [Google Scholar]

- Urdiales, C.; Bandera, A.; Arrebola, F.; Sandoval, F. Multi-level path planning algorithm for autonomous robots. Electron. Lett. 1998, 34, 223–224. [Google Scholar] [CrossRef]

- Mac, T.T.; Copot, C.; Tran, D.T.; De Keyser, R. Heuristic approaches in robot path planning: A survey. Robot. Auton. Syst. 2016, 86, 13–28. [Google Scholar] [CrossRef]

- Vokhmintsev, A.; Timchenko, M.; Melnikov, A.; Kozko, A.; Makovetskii, A. Robot path planning algorithm based on symbolic tags in dynamic environment. In Proceedings of the Applications of Digital Image Processing XL, International Society for Optics and Photonics, San Diego, CA, USA, 7–10 August 2017; Volume 10396, p. 103962E. [Google Scholar]

- Marin-Plaza, P.; Hussein, A.; Martin, D.; de la Escalera, A. Global and local path planning study in a ROS-based research platform for autonomous vehicles. J. Adv. Transp. 2018. [Google Scholar] [CrossRef]

- Bhattacharya, P.; Gavrilova, M.L. Voronoi diagram in optimal path planning. In Proceedings of the 4th International Symposium on Voronoi Diagrams in Science and Engineering (ISVD 2007), Glamorgan, UK, 9–11 July 2007; pp. 38–47. [Google Scholar]

- Canny, J. A new algebraic method for robot motion planning and real geometry. In Proceedings of the 28th Annual Symposium on Foundations of Computer Science (SFCS 1987), Los Angeles, CA, USA, 12–14 October 1987; pp. 39–48. [Google Scholar]

- Skiena, S. Dijkstra’s algorithm. In Implementing Discrete Mathematics: Combinatorics and Graph Theory with Mathematica; Addison-Wesley: Reading, MA, USA, 1990; pp. 225–227. [Google Scholar]

- Dechter, R.; Pearl, J. Generalized best-first search strategies and the optimality of A*. J. ACM 1985, 32, 505–536. [Google Scholar] [CrossRef]

- Yang, S.X.; Luo, C. A neural network approach to complete coverage path planning. IEEE Trans. Syst. Man Cybern. Part B Cybernet. 2004, 34, 718–724. [Google Scholar] [CrossRef]

- Piazzi, A.; Bianco, C.L.; Bertozzi, M.; Fascioli, A.; Broggi, A. Quintic G²-splines for the iterative steering of vision-based autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2002, 3, 27–36. [Google Scholar] [CrossRef]

- Rastelli, J.P.; Lattarulo, R.; Nashashibi, F. Dynamic trajectory generation using continuous-curvature algorithms for door to door assistance vehicles. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium, Dearborn, MI, USA, 8–11 June 2014; pp. 510–515. [Google Scholar]

- Reeds, J.; Shepp, L. Optimal paths for a car that goes both forwards and backwards. Pac. J. Math. 1990, 145, 367–393. [Google Scholar] [CrossRef]

- Gim, S.; Adouane, L.; Lee, S.; Derutin, J.P. Clothoids composition method for smooth path generation of car-like vehicle navigation. J. Intell. Robot. Syst. 2017, 88, 129–146. [Google Scholar] [CrossRef]

- Kumar, P.B.; Sahu, C.; Parhi, D.R.; Pandey, K.K.; Chhotray, A. Static and dynamic path planning of humanoids using an advanced regression controller. Sci. Iran. 2019, 26, 375–393. [Google Scholar] [CrossRef]

- Tuba, E.; Strumberger, I.; Bacanin, N.; Tuba, M. Optimal Path Planning in Environments with Static Obstacles by Harmony Search Algorithm. In International Conference on Harmony Search Algorithm; Springer: Boston, MA, USA, 2019; pp. 186–193. [Google Scholar]

- Dutta, A.K.; Debnath, S.K.; Das, S.K. Path-Planning of Snake-Like Robot in Presence of Static Obstacles Using Critical-SnakeBug Algorithm. In Advances in Computer, Communication and Control; Springer: Boston, MA, USA, 2019; pp. 449–458. [Google Scholar]

- Gabardos, B.I.; Passot, J.B. Systems and Methods for Dynamic Route Planning in Autonomous Navigation. U.S. Patent App. 16/454,217, 2 January 2020. [Google Scholar]

- Connell, D.; La, H.M. Dynamic path planning and replanning for mobile robots using RRT. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5 October 2017; pp. 1429–1434. [Google Scholar]

- Liu, Y.; Ma, J.; Zang, S.; Min, Y. Dynamic Path Planning of Mobile Robot Based on Improved Ant Colony Optimization Algorithm. In Proceedings of the 2019 8th International Conference on Networks, Communication and Computing, Luoyang, China, 13–15 December 2019; pp. 248–252. [Google Scholar]

- Wang, C.C.; Thorpe, C.; Thrun, S.; Hebert, M.; Durrant-Whyte, H. Simultaneous localization, mapping and moving object tracking. Int. J. Robot. Res. 2007, 26, 889–916. [Google Scholar] [CrossRef]

- Saunders, J.; Call, B.; Curtis, A.; Beard, R.; McLain, T. Static and dynamic obstacle avoidance in miniature air vehicles. In Infotech@Aerospace; BYU ScholarsArchive; BYU: Provo, UT, USA, 2005; p. 6950. [Google Scholar]

- Chu, K.; Lee, M.; Sunwoo, M. Local path planning for off-road autonomous driving with avoidance of static obstacles. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1599–1616. [Google Scholar] [CrossRef]