A Novel Hybrid Algorithm Based on Grey Wolf Optimizer and Fireworks Algorithm

Abstract

1. Introduction

- (1)

- A novel hybrid algorithm based on GWO and FWA is proposed.

- (2)

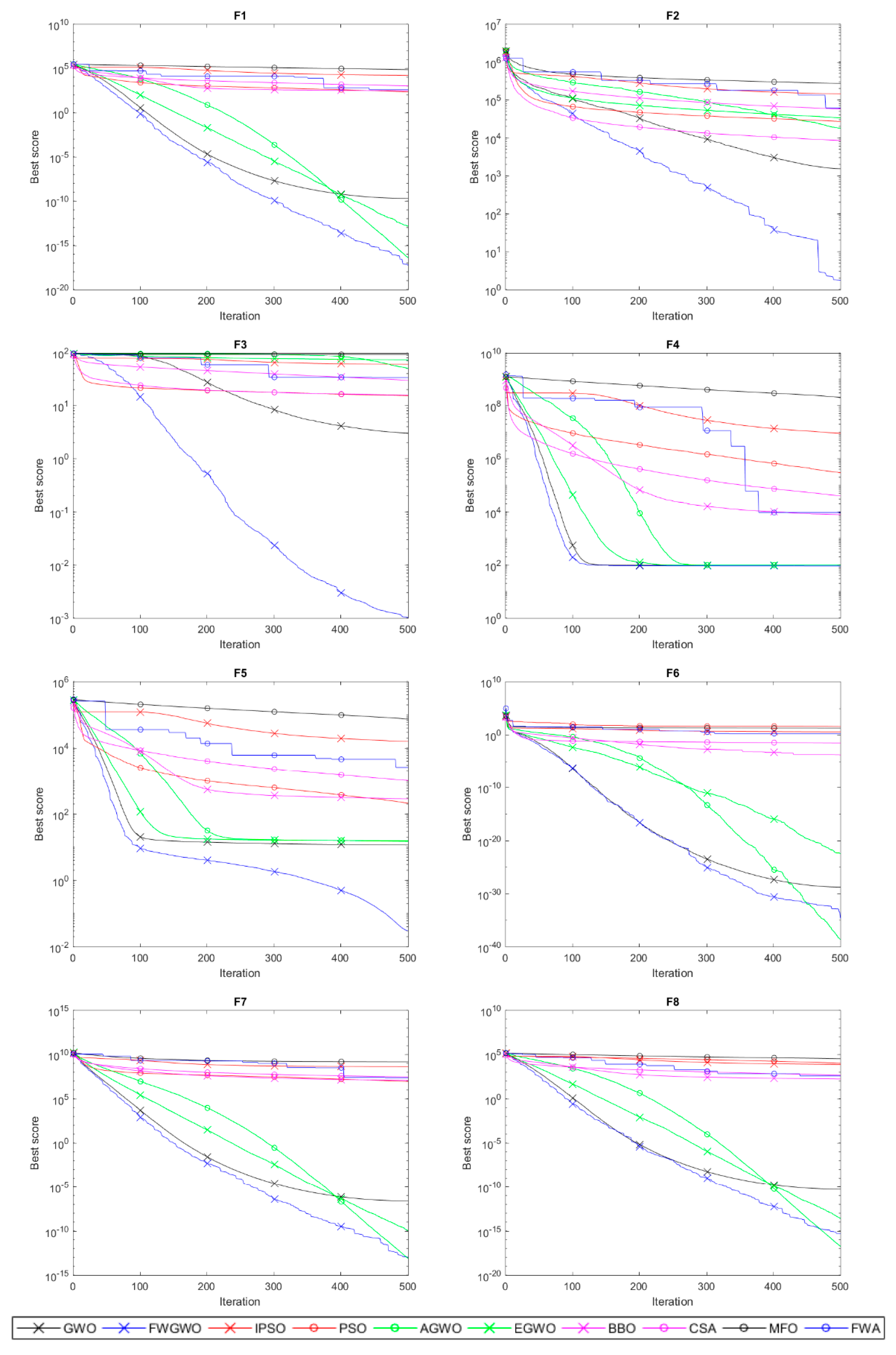

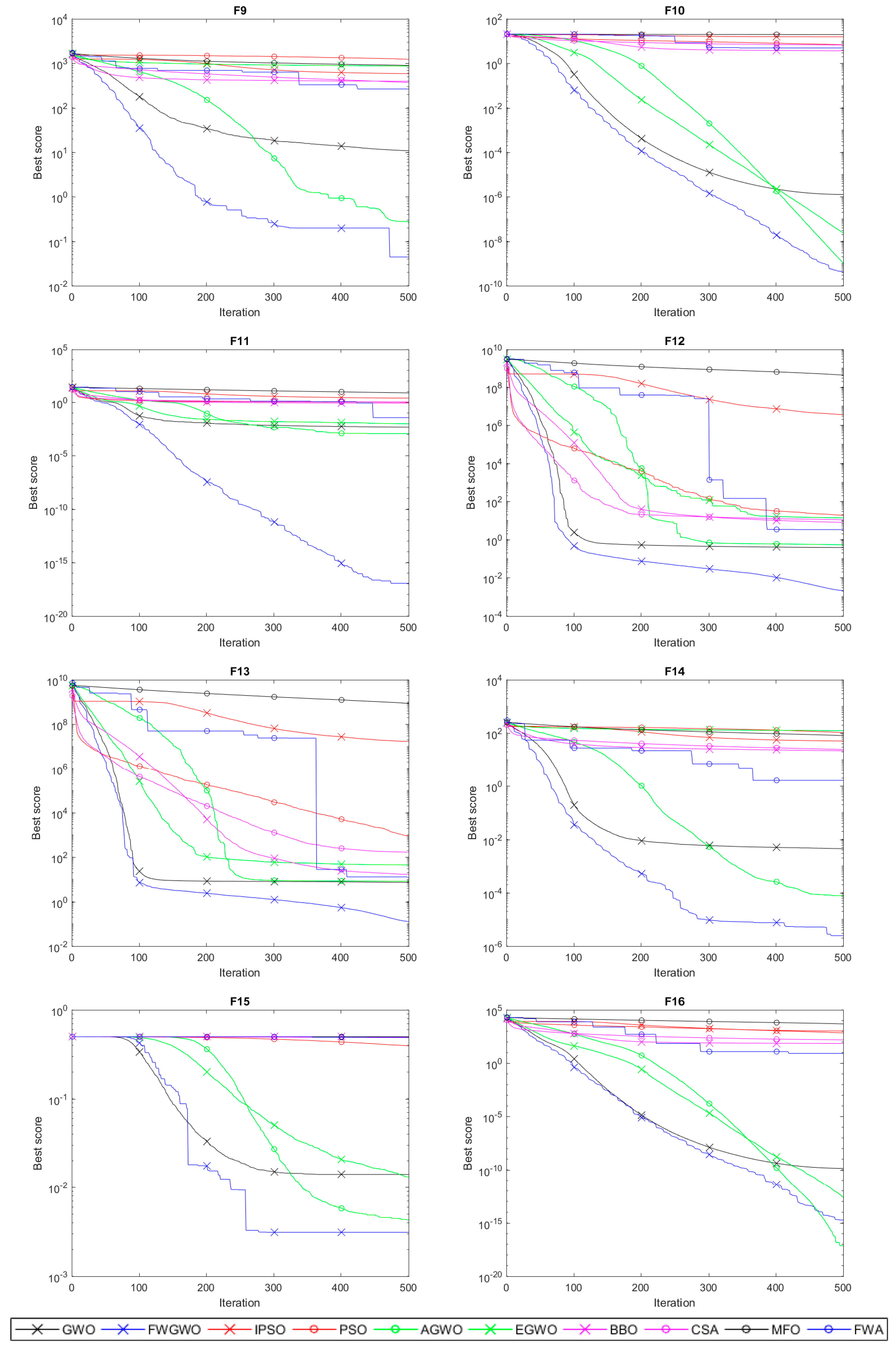

- The proposed algorithm is tested on 16 benchmark functions with a wide range of dimensions and varied complexities.

- (3)

- The performance of the proposed approach is compared with standard GWO, FWA, Moth Flame Optimization (MFO), Crow Search Algorithm (CSA) [30], improved Particle Swarm Optimization(IPSO) [31], Biogeography-based optimization (BBO), Particle Swarm Optimization (PSO), Enhanced GWO (EGWO) [32], and Augmented GWO (AGWO) [33].

2. Algorithms

2.1. Grey Wolf Optimizer

2.1.1. Hierarchical Structure

2.1.2. Encircling Prey

2.1.3. Hunting

2.1.4. Search for Prey (Exploration) and Attacking Prey (Exploitation)

2.2. Fireworks Algorithm

- (1) $N$ fireworks are randomly generated in the search space, and each firework represents a feasible solution.

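- (2) The quality of these fireworks is evaluated. Because the optimal location is likely to lie close to fireworks with better fitness, these fireworks receive a smaller explosion amplitude and more explosion sparks so that they search their surrounding area. Conversely, fireworks with poor fitness receive fewer explosion sparks and a larger explosion amplitude. The number of explosion sparks and the explosion amplitude of each firework are calculated as shown in Equations (9) and (10):

$$S_i = m \cdot \frac{y_{\max} - f(x_i) + \xi}{\sum_{j=1}^{N} \left( y_{\max} - f(x_j) \right) + \xi} \tag{9}$$

$$A_i = \hat{A} \cdot \frac{f(x_i) - y_{\min} + \xi}{\sum_{j=1}^{N} \left( f(x_j) - y_{\min} \right) + \xi} \tag{10}$$

where $S_i$ is the number of explosion sparks of firework $x_i$; $A_i$ represents its explosion amplitude; $y_{\min} = \min_j f(x_j)$ is the minimum fitness among the fireworks; $y_{\max} = \max_j f(x_j)$ is the maximum fitness value among the fireworks; $m$ and $\hat{A}$ are parameters controlling the total number of sparks and the maximum explosion amplitude, respectively; and $\xi$ is the smallest constant representable in the computer, used to avoid a division-by-zero error. To prevent fireworks with good fitness from producing far more explosion sparks than fireworks with poor fitness, Equation (11) bounds the number of sparks generated by each firework:

$$\hat{S}_i = \begin{cases} \operatorname{round}(a m), & S_i < a m \\ \operatorname{round}(b m), & S_i > b m,\ a < b < 1 \\ \operatorname{round}(S_i), & \text{otherwise} \end{cases} \tag{11}$$

where $a$ and $b$ are constant parameters, and $\operatorname{round}(\cdot)$ represents the rounding function.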

- (3) To maintain the diversity of the fireworks, FWA uses a second way of generating sparks, the Gaussian explosion. For each randomly selected firework $x_i$, a number of dimensions are randomly selected and updated as shown in Equation (12) to obtain the position of a Gaussian spark at dimension $k$:

$$\hat{x}_i^k = x_i^k \cdot g \tag{12}$$

where $g \sim N(1, 1)$ is a Gaussian random value with mean 1 and standard deviation 1. The generated explosion sparks and Gaussian sparks may exceed the boundaries of the search space. Equation (13) maps a spark that lies beyond the boundary at dimension $k$ to a new position:

$$\hat{x}_i^k = x_{\min}^k + \left| \hat{x}_i^k \right| \bmod \left( x_{\max}^k - x_{\min}^k \right) \tag{13}$$

where $x_{\max}^k$ represents the upper bound of the search space at dimension $k$, and $x_{\min}^k$ is the lower bound of the search space at dimension $k$.

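- (4) $N$ locations are selected as the next generation of fireworks from the explosion sparks, the Gaussian sparks, and the current fireworks. In FWA, the location with the best fitness is always kept for the next iteration. The remaining $N - 1$ locations are then chosen according to their distance to the other locations. The distance $R(x_i)$ and the selection probability $p(x_i)$ of location $x_i$ are defined as follows:

$$R(x_i) = \sum_{x_j \in K} d(x_i, x_j) = \sum_{x_j \in K} \left\| x_i - x_j \right\|$$

$$p(x_i) = \frac{R(x_i)}{\sum_{x_j \in K} R(x_j)}$$

where $K$ is the set of all current locations (fireworks, explosion sparks, and Gaussian sparks). Locations that lie farther from the others therefore have a higher probability of being selected, which helps preserve the diversity of the population.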
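A minimal Python sketch of Equations (9)–(11) follows, assuming the standard FWA formulation of Tan and Zhu [9] for a minimization problem. The function name `spark_counts_and_amplitudes` is hypothetical, the defaults m = 50, Â = 40, a = 0.04, and b = 0.8 mirror the FWA parameter settings listed with the experiments, and Python's `sys.float_info.epsilon` stands in for ξ. Note that Python's built-in `round` rounds halves to even, which may differ from the rounding function used by the authors.

```python
import sys

# Stand-in for xi, the smallest constant used to avoid division by zero (assumption).
XI = sys.float_info.epsilon


def spark_counts_and_amplitudes(fitness, m=50, a_hat=40.0, a=0.04, b=0.8):
    """Eqs. (9)-(11) for minimization: return (bounded spark counts, amplitudes)."""
    y_max, y_min = max(fitness), min(fitness)

    # Eq. (9): smaller (better) fitness -> larger y_max - f -> more sparks.
    gap_to_worst = [y_max - f for f in fitness]
    counts = [m * (g + XI) / (sum(gap_to_worst) + XI) for g in gap_to_worst]

    # Eq. (10): smaller (better) fitness -> smaller f - y_min -> smaller amplitude.
    gap_to_best = [f - y_min for f in fitness]
    amplitudes = [a_hat * (g + XI) / (sum(gap_to_best) + XI) for g in gap_to_best]

    # Eq. (11): clamp each spark count to [round(a*m), round(b*m)].
    bounded = []
    for s in counts:
        if s < a * m:
            bounded.append(round(a * m))
        elif s > b * m:
            bounded.append(round(b * m))
        else:
            bounded.append(round(s))
    return bounded, amplitudes


if __name__ == "__main__":
    counts, amps = spark_counts_and_amplitudes([1.0, 2.0, 3.0])
    print(counts)
    print([f"{amp:.3g}" for amp in amps])
```

For the fitness values [1.0, 2.0, 3.0], the best firework receives 33 sparks, the middle one 17, and the worst one is raised to the lower bound of round(a·m) = 2, while the amplitudes grow from nearly 0 for the best firework to about 26.7 for the worst.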

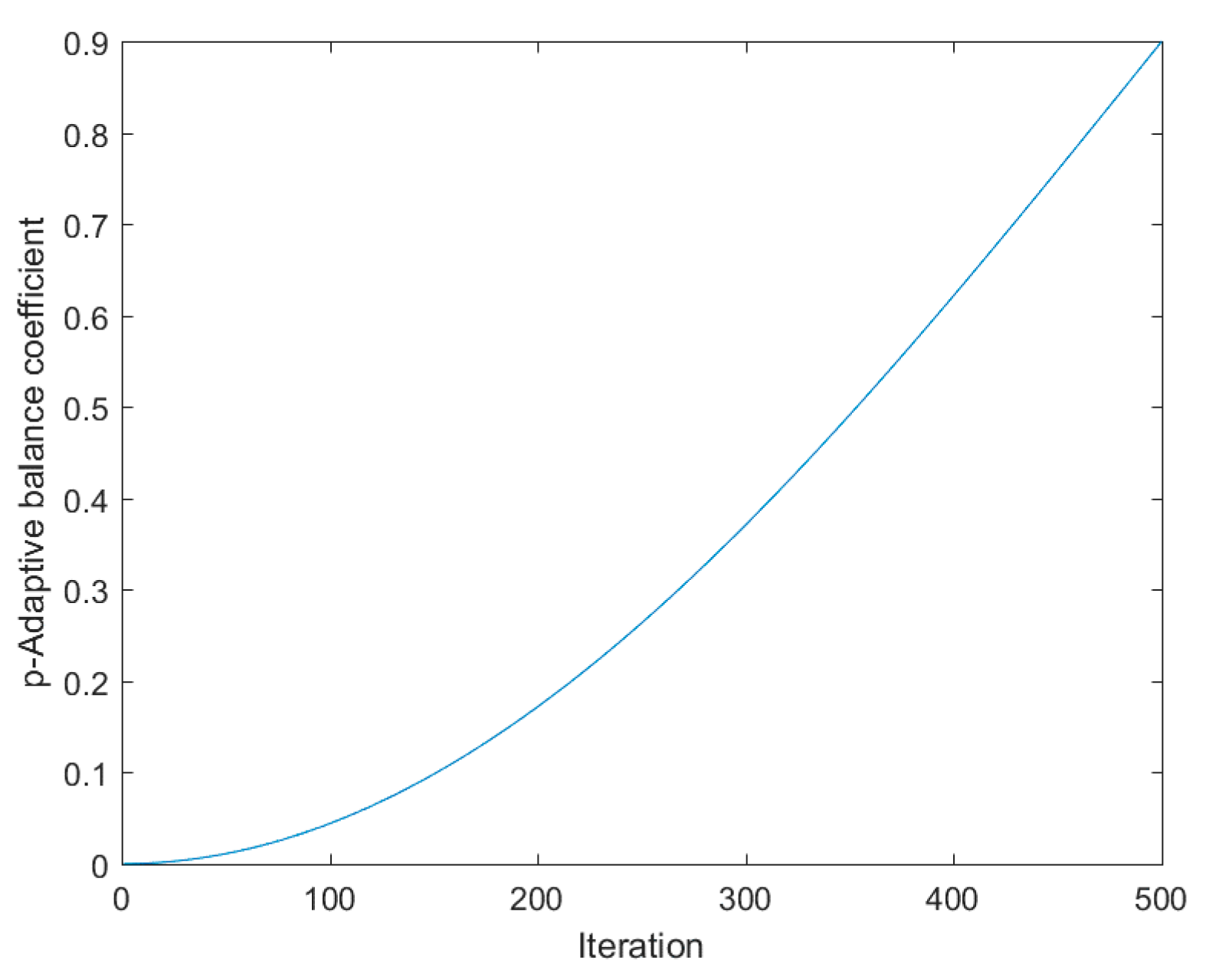
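The Gaussian explosion of Equation (12), the boundary mapping of Equation (13), and the distance-based selection of step (4), in which a location's selection probability is proportional to the sum of its distances to all other locations, can be sketched in the same way. This is again a minimal sketch assuming standard FWA, not the authors' code: the function names are hypothetical, the number of mutated dimensions is drawn uniformly from 1 to D (the text only says "a number of dimensions"), a single g is shared by all mutated dimensions of one spark, and the N − 1 non-elite locations are drawn with replacement by roulette-wheel sampling, a detail the text does not specify.

```python
import math
import random


def gaussian_spark(x, rng=random):
    """Eq. (12): multiply a random subset of dimensions by one g ~ N(1, 1)."""
    spark = list(x)
    g = rng.gauss(1.0, 1.0)                  # mean 1, standard deviation 1
    z = rng.randint(1, len(x))               # assumed: between 1 and D dimensions mutate
    for k in rng.sample(range(len(x)), z):
        spark[k] = x[k] * g
    return spark


def map_into_bounds(x, lower, upper):
    """Eq. (13): map out-of-range coordinates back into [lower_k, upper_k)."""
    mapped = []
    for xk, lo, hi in zip(x, lower, upper):
        if xk < lo or xk > hi:
            xk = lo + abs(xk) % (hi - lo)
        mapped.append(xk)
    return mapped


def select_next_generation(locations, fitness, n, rng=random):
    """Step (4): keep the best location, then draw n - 1 others with p(x_i) ~ R(x_i)."""
    best = min(range(len(locations)), key=lambda i: fitness[i])
    # R(x_i): sum of Euclidean distances from x_i to every location in K.
    r = [sum(math.dist(xi, xj) for xj in locations) for xi in locations]
    others = [i for i in range(len(locations)) if i != best]
    weights = [r[i] for i in others]
    if sum(weights) == 0:                    # all locations coincide: sample uniformly
        weights = None
    # Roulette-wheel sampling with replacement (assumption).
    chosen = rng.choices(others, weights=weights, k=n - 1)
    return [locations[best]] + [locations[i] for i in chosen]


if __name__ == "__main__":
    rng = random.Random(42)
    spark = map_into_bounds(gaussian_spark([3.0, -2.0, 4.5], rng), [-5.0] * 3, [5.0] * 3)
    print(spark)
    locs = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [4.0, 4.0]]
    print(select_next_generation(locs, [3.0, 0.5, 2.0, 9.0], n=3, rng=rng))
```

Because the selection weights are the summed distances, an isolated location such as [4.0, 4.0] in the usage example is drawn more often than the clustered ones, which is how this step favors diversity.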

3. Hybrid FWGWO Algorithm

3.1. Establishment of FWGWO

3.2. Time Complexity Analysis of FWGWO

4. Experimental Section and Results

4.1. Compared Algorithms

4.2. Benchmark Functions

4.3. Performance Metrics

4.4. Comparison and Analysis of Simulation Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Function | Range | $f_{\min}$ |
|---|---|---|
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | −418.982 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |
| | | 0 |

References

1. Şenel, F.A.; Gökçe, F.; Yüksel, A.S.; Yiğit, T. A novel hybrid PSO–GWO algorithm for optimization problems. Eng. Comput. 2018, 35, 1359–1373.

2. Brownlee, J. Clever Algorithms: Nature-Inspired Programming Recipes; Lulu Press: Morrisville, NC, USA, 2011.

3. Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization. Swarm Intell. 2007, 1, 33–57.

4. Colorni, A.; Dorigo, M.; Maniezzo, V. Distributed optimization by ant colonies. In Proceedings of the European Conference on Artificial Life, Paris, France, 11–13 December 1991.

5. Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-TR06; Erciyes University: Kayseri, Turkey, 2005.

6. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

7. Meng, X.; Gao, X.Z.; Lu, L.; Liu, Y.; Zhang, H. A new bio-inspired optimization algorithm: Bird swarm algorithm. J. Exp. Theor. Artif. Intell. 2016, 28, 673–687.

8. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

9. Tan, Y.; Zhu, Y. Fireworks algorithm for optimization. In Advances in Swarm Intelligence; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6145, pp. 355–364.

10. Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

11. Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

12. Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

13. Storn, R.; Price, K. Differential evolution–A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

14. Agarwal, J.; Parmar, G.; Gupta, R.; Sikander, A. Analysis of grey wolf optimizer based fractional order PID controller in speed control of DC motor. Microsyst. Technol. 2018, 24, 4997–5006.

15. Song, X.; Tang, L.; Zhao, S.; Zhang, X.; Li, L.; Huang, J.; Cai, W. Grey wolf optimizer for parameter estimation in surface waves. Soil Dyn. Earthq. Eng. 2015, 75, 147–157.

16. Yammani, C.; Maheswarapu, S. Load frequency control of multi-microgrid system considering renewable energy sources using grey wolf optimization. Smart Sci. 2019, 7, 198–217.

17. Bratton, D.; Kennedy, J. Defining a standard for particle swarm optimization. In Proceedings of the IEEE Swarm Intelligence Symposium, Honolulu, HI, USA, 1–5 April 2007; pp. 120–127.

18. Tan, Y.; Xiao, Z.M. Clonal particle swarm optimization and its applications. In Proceedings of the IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 2303–2309.

19. Mirjalili, S.; Wang, G.-G.; Coelho, L.D.S. Binary optimization using hybrid particle swarm optimization and gravitational search algorithm. Neural Comput. Appl. 2014, 25, 1423–1435.

20. Goldberg, D.E. Genetic Algorithms in Search Optimization and Machine Learning; Addison-Wesley: Boston, MA, USA, 1989.

21. Choi, K.; Jang, D.-H.; Kang, S.-I.; Lee, J.-H.; Chung, T.-K.; Kim, H.-S.; Jung, T.-K. Hybrid algorithm combing genetic algorithm with evolution strategy for antenna design. IEEE Trans. Magn. 2015, 52, 1–4.

22. Mafarja, M.; Mirjalili, S. Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing 2017, 260, 302–312.

23. Alomoush, A.A.; Alsewari, A.A.; Alamri, H.S.; Alamri, H.S.; Zamli, K.Z. Hybrid Harmony search algorithm with grey wolf optimizer and modified opposition-based learning. IEEE Access 2019, 7, 68764–68785.

24. Arora, S.; Singh, H.; Sharma, M.; Sharma, S.; Anand, P. A new hybrid algorithm based on grey wolf optimization and crow search algorithm for unconstrained function optimization and feature selection. IEEE Access 2019, 7, 26343–26361.

25. Kumar, S.; Pant, M.; Dixit, A.; Bansal, R. BBO-DE: Hybrid algorithm based on BBO and DE. In Proceedings of the International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 5–6 May 2017; pp. 379–383.

26. Gohil, B.N.; Patel, D.R. A hybrid GWO-PSO algorithm for load balancing in cloud computing environment. In Proceedings of the Second International Conference on Green Computing and Internet of Things (ICGCIoT), Bangalore, India, 16–18 August 2018; pp. 185–191.

27. Zhu, A.; Xu, C.; Li, Z.; Wu, J.; Liu, Z. Hybridizing grey wolf optimization with differential evolution for global optimization and test scheduling for 3D stacked SoC. J. Syst. Eng. Electron. 2015, 26, 317–328.

28. Zhang, B.; Zhang, M.-X.; Zheng, Y. A hybrid biogeography-based optimization and fireworks algorithm. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 3200–3206.

29. Selim, A.; Kamel, S.; Jurado, F. Voltage profile improvement in active distribution networks using hybrid WOA-SCA optimization algorithm. In Proceedings of the Twentieth International Middle East Power Systems Conference (MEPCON), Cairo, Egypt, 18–20 December 2018; pp. 1064–1068.

30. Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12.

31. Jiang, Y.; Hu, T.; Huang, C.; Wu, X. An improved particle swarm optimization algorithm. Appl. Math. Comput. 2007, 193, 231–239.

32. Sharma, S.; Salgotra, R.; Singh, U. An enhanced grey wolf optimizer for numerical optimization. In Proceedings of the International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; pp. 1–6.

33. Hamour, H.; Kamel, S.; Nasrat, L.; Yu, J. Distribution network reconfiguration using augmented grey wolf optimization algorithm for power loss minimization. In Proceedings of the International Conference on Innovative Trends in Computer Engineering (ITCE), Aswan, Egypt, 2–4 February 2019; pp. 450–454.

34. Derrac, J.; García, S.; Molina, D.; Herrera, F.; Cabrera, D.M. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

| Algorithm | Parameter | Value(s) |
|---|---|---|
| GWO | $a$ | Linearly decreased from 2 to 0 |
| FWA | Total number of sparks | 50 |
| | Maximum explosion amplitude | 40 |
| | Number of Gaussian sparks | 5 |
| | Lower spark bound $a$ (Equation (11)) | 0.04 |
| | Upper spark bound $b$ (Equation (11)) | 0.8 |
| IPSO | Inertia weight $w$ ($w_{\min}$, $w_{\max}$) | [0.4, 0.9] |
| | Acceleration constants ($c_1$, $c_2$) | [2, 2] |
| PSO | Inertia weight $w$ ($w_{\min}$, $w_{\max}$) | [0.6, 0.9] |
| | Acceleration constants ($c_1$, $c_2$) | [2, 2] |
| AGWO | | |
| EGWO | | |
| BBO | Immigration probability | [0, 1] |
| | Mutation probability | 0.005 |
| | Habitat modification probability | 1.0 |
| | Step size | 1.0 |
| | Migration rate | 1.0 |
| | Maximum immigration | 1.0 |
| CSA | Flight length | 2 |
| | Awareness probability | 0.1 |
| MFO | | |

| Function | Metric | GWO | FWA | IPSO | PSO | AGWO | EGWO | BBO | CSA | MFO | FWGWO |
|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | Mean | 1.99 × 10⁻¹⁰ | 3.73 × 10² | 1.57 × 10⁴ | 2.21 × 10² | 3.93 × 10⁻¹⁷ | 1.45 × 10⁻¹³ | 2.87 × 10² | 1.06 × 10³ | 7.04 × 10⁴ | 5.16 × 10⁻¹⁸ |
| | Std | 1.19 × 10⁻¹⁰ | 5.49 × 10³ | 5.18 × 10³ | 3.01 × 10¹ | 7.20 × 10⁻¹⁷ | 6.23 × 10⁻¹⁴ | 2.47 × 10¹ | 1.83 × 10² | 1.35 × 10⁴ | 1.93 × 10⁻¹⁶ |
| | Best | 2.70 × 10⁻¹¹ | 4.84 | 7.40 × 10³ | 1.50 × 10² | 4.61 × 10⁻¹⁸ | 6.15 × 10⁻¹⁶ | 2.54 × 10² | 7.09 × 10² | 4.69 × 10⁴ | 6.68 × 10⁻²⁴ |
| F2 | Mean | 1.53 × 10³ | 6.14 × 10⁴ | 1.44 × 10⁵ | 2.71 × 10⁴ | 1.79 × 10⁴ | 3.34 × 10⁴ | 5.74 × 10⁴ | 8.49 × 10³ | 2.70 × 10⁵ | 1.74 |
| | Std | 1.35 × 10³ | 7.11 × 10⁴ | 3.11 × 10⁴ | 6.72 × 10³ | 1.81 × 10⁴ | 1.41 × 10⁴ | 8.78 × 10³ | 1.52 × 10³ | 5.48 × 10⁴ | 6.01 × 10¹ |
| | Best | 1.20 × 10² | 1.32 × 10¹ | 8.33 × 10⁴ | 1.55 × 10⁴ | 2.54 × 10² | 6.75 × 10³ | 4.38 × 10⁴ | 5.54 × 10³ | 1.64 × 10⁵ | 4.97 × 10⁻⁷ |
| F3 | Mean | 3.03 | 3.36 × 10¹ | 6.00 × 10¹ | 1.54 × 10¹ | 4.99 × 10¹ | 7.28 × 10¹ | 2.99 × 10¹ | 1.57 × 10¹ | 9.41 × 10¹ | 1.02 × 10⁻³ |
| | Std | 2.17 | 1.44 × 10¹ | 4.32 | 1.66 | 3.13 × 10¹ | 7.82 | 3.01 | 1.40 | 1.72 | 3.06 × 10⁻³ |
| | Best | 2.24 × 10⁻¹ | 8.24 × 10⁻¹ | 4.84 × 10¹ | 1.26 × 10¹ | 6.56 × 10⁻¹ | 5.61 × 10¹ | 2.35 × 10¹ | 1.32 × 10¹ | 9.13 × 10¹ | 5.61 × 10⁻⁷ |
| F4 | Mean | 9.81 × 10¹ | 9.38 × 10³ | 9.12 × 10⁶ | 2.95 × 10⁵ | 9.82 × 10¹ | 9.83 × 10¹ | 7.86 × 10³ | 4.01 × 10⁴ | 2.04 × 10⁸ | 9.00 × 10¹ |
| | Std | 5.22 × 10⁻¹ | 2.50 × 10⁶ | 3.99 × 10⁶ | 6.54 × 10⁴ | 5.50 × 10⁻¹ | 6.68 × 10⁻¹ | 1.39 × 10³ | 1.80 × 10⁴ | 8.37 × 10⁷ | 1.74 × 10¹ |
| | Best | 9.65 × 10¹ | 1.00 × 10² | 4.79 × 10⁶ | 1.87 × 10⁵ | 9.71 × 10¹ | 9.63 × 10¹ | 5.16 × 10³ | 2.26 × 10⁴ | 6.98 × 10⁷ | 1.66 × 10¹ |
| F5 | Mean | 1.16 × 10¹ | 2.61 × 10³ | 1.59 × 10⁴ | 2.13 × 10² | 1.50 × 10¹ | 1.56 × 10¹ | 2.86 × 10² | 1.03 × 10³ | 7.46 × 10⁴ | 3.02 × 10⁻² |
| | Std | 9.88 × 10⁻¹ | 2.14 × 10³ | 4.71 × 10³ | 3.34 × 10¹ | 6.26 × 10⁻¹ | 9.66 × 10⁻¹ | 2.88 × 10¹ | 1.07 × 10² | 1.39 × 10⁴ | 2.42 × 10⁻² |
| | Best | 9.54 | 2.44 × 10¹ | 8.20 × 10³ | 1.56 × 10² | 1.40 × 10¹ | 1.40 × 10¹ | 2.26 × 10² | 7.95 × 10² | 5.23 × 10⁴ | 1.27 × 10⁻² |
| F6 | Mean | 1.69 × 10⁻²⁹ | 1.73 | 3.58 | 3.72 × 10¹ | 2.42 × 10⁻³⁹ | 3.44 × 10⁻²³ | 1.58 × 10⁻⁴ | 2.79 × 10⁻² | 1.80 × 10¹ | 2.09 × 10⁻³⁵ |
| | Std | 2.50 × 10⁻²⁹ | 4.11 × 10⁻¹ | 2.52 | 1.11 × 10¹ | 1.03 × 10⁻³⁵ | 6.04 × 10⁻²² | 2.03 × 10⁻³ | 1.65 × 10⁻² | 5.18 | 1.21 × 10⁻³⁴ |
| | Best | 4.86 × 10⁻³⁴ | 8.30 × 10⁻⁸ | 1.05 × 10⁻¹ | 1.18 × 10¹ | 5.32 × 10⁻⁴⁶ | 1.38 × 10⁻³⁵ | 6.48 × 10⁻⁷ | 8.35 × 10⁻³ | 2.00 | 1.80 × 10⁻⁴⁶ |
| F7 | Mean | 2.47 × 10⁻⁷ | 2.39 × 10⁷ | 4.13 × 10⁸ | 8.83 × 10⁶ | 7.99 × 10⁻¹⁴ | 1.13 × 10⁻¹⁰ | 1.14 × 10⁷ | 2.89 × 10⁷ | 1.46 × 10⁹ | 1.06 × 10⁻¹³ |
| | Std | 1.62 × 10⁻⁷ | 8.81 × 10⁷ | 2.66 × 10⁸ | 2.57 × 10⁶ | 8.94 × 10⁻¹⁴ | 1.44 × 10⁻¹⁰ | 2.50 × 10⁶ | 9.00 × 10⁶ | 8.26 × 10⁸ | 2.12 × 10⁻¹² |
| | Best | 4.80 × 10⁻⁸ | 1.31 × 10¹ | 6.05 × 10⁷ | 4.00 × 10⁶ | 3.74 × 10⁻¹⁵ | 1.15 × 10⁻¹² | 7.24 × 10⁶ | 1.75 × 10⁷ | 1.16 × 10⁸ | 1.00 × 10⁻¹⁸ |
| F8 | Mean | 5.38 × 10⁻¹¹ | 3.72 × 10² | 7.33 × 10³ | 1.02 × 10⁴ | 1.80 × 10⁻¹⁷ | 2.54 × 10⁻¹⁴ | 1.68 × 10² | 4.68 × 10² | 3.30 × 10⁴ | 4.94 × 10⁻¹⁶ |
| | Std | 4.61 × 10⁻¹¹ | 7.11 × 10² | 2.62 × 10³ | 1.30 × 10³ | 2.04 × 10⁻¹⁷ | 1.31 × 10⁻¹⁴ | 2.58 × 10¹ | 7.19 × 10¹ | 7.03 × 10³ | 5.01 × 10⁻¹⁶ |
| | Best | 1.19 × 10⁻¹¹ | 4.81 × 10⁻² | 3.38 × 10³ | 7.58 × 10³ | 5.21 × 10⁻¹⁹ | 2.86 × 10⁻¹⁶ | 1.35 × 10² | 3.14 × 10² | 1.40 × 10⁴ | 1.42 × 10⁻²¹ |
| F9 | Mean | 1.09 × 10¹ | 2.66 × 10² | 5.88 × 10² | 1.24 × 10³ | 2.54 × 10⁻¹ | 8.72 × 10² | 3.97 × 10² | 3.72 × 10² | 8.99 × 10² | 4.43 × 10⁻² |
| | Std | 8.83 | 1.04 × 10² | 6.85 × 10¹ | 9.61 × 10¹ | 6.46 × 10⁻⁵ | 1.80 × 10² | 5.40 × 10¹ | 4.50 × 10¹ | 7.18 × 10¹ | 8.31 × 10⁻² |
| | Best | 5.96 × 10⁻⁷ | 3.06 × 10⁻² | 4.79 × 10² | 1.05 × 10³ | 0.00 | 4.45 × 10² | 3.17 × 10² | 3.04 × 10² | 6.92 × 10² | 0.00 |
| F10 | Mean | 1.27 × 10⁻⁶ | 4.98 | 1.58 × 10¹ | 6.96 | 1.06 × 10⁻⁹ | 2.37 × 10⁻⁸ | 3.71 | 6.80 | 1.99 × 10¹ | 4.21 × 10⁻¹⁰ |
| | Std | 3.85 × 10⁻⁷ | 2.93 | 9.91 × 10⁻¹ | 3.72 × 10⁻¹ | 7.18 × 10⁻¹⁰ | 2.69 × 10⁻⁸ | 1.18 × 10⁻¹ | 5.39 × 10⁻¹ | 1.52 × 10⁻¹ | 5.61 × 10⁻¹⁰ |
| | Best | 6.16 × 10⁻⁷ | 1.05 × 10⁻² | 1.43 × 10¹ | 6.06 | 1.97 × 10⁻¹⁰ | 1.55 × 10⁻⁹ | 3.49 | 6.07 | 1.95 × 10¹ | 2.93 × 10⁻¹⁴ |
| F11 | Mean | 4.78 × 10⁻³ | 3.71 × 10⁻² | 2.52 | 1.03 | 1.20 × 10⁻³ | 1.00 × 10⁻² | 8.33 × 10⁻¹ | 1.09 | 7.73 | 1.11 × 10⁻¹⁷ |
| | Std | 1.09 × 10⁻² | 4.83 × 10⁻¹ | 3.68 × 10⁻¹ | 2.44 × 10⁻² | 9.13 × 10⁻³ | 1.22 × 10⁻² | 3.47 × 10⁻² | 1.60 × 10⁻² | 1.52 | 1.20 × 10⁻¹⁶ |
| | Best | 1.52 × 10⁻¹³ | 3.47 × 10⁻² | 1.70 | 9.72 × 10⁻¹ | 0.00 | 0.00 | 7.60 × 10⁻¹ | 1.07 | 4.44 | 0.00 |
| F12 | Mean | 3.83 × 10⁻¹ | 3.34 | 3.57 × 10⁶ | 1.87 × 10¹ | 5.37 × 10⁻¹ | 1.38 × 10¹ | 7.95 | 1.10 × 10¹ | 4.31 × 10⁸ | 2.13 × 10⁻³ |
| | Std | 6.78 × 10⁻² | 5.95 × 10⁵ | 1.56 × 10⁶ | 4.44 | 4.11 × 10⁻² | 8.57 | 2.37 | 3.03 | 1.65 × 10⁸ | 6.89 × 10⁻³ |
| | Best | 2.41 × 10⁻¹ | 1.20 | 1.56 × 10⁵ | 7.79 | 4.06 × 10⁻¹ | 6.15 × 10⁻¹ | 2.33 | 6.06 | 1.34 × 10⁸ | 6.35 × 10⁻⁴ |
| F13 | Mean | 7.37 | 1.21 × 10¹ | 1.69 × 10⁷ | 8.76 × 10² | 8.32 | 4.50 × 10¹ | 1.68 × 10¹ | 1.67 × 10² | 8.61 × 10⁸ | 1.29 × 10⁻¹ |
| | Std | 4.10 × 10⁻¹ | 7.62 × 10⁶ | 1.19 × 10⁷ | 8.25 × 10² | 3.16 × 10⁻¹ | 3.71 × 10¹ | 2.36 | 3.12 × 10¹ | 3.28 × 10⁸ | 7.60 × 10⁻² |
| | Best | 6.36 | 1.01 × 10¹ | 1.87 × 10⁶ | 1.97 × 10² | 7.59 | 9.89 | 1.26 × 10¹ | 8.56 × 10¹ | 2.15 × 10⁸ | 3.67 × 10⁻² |
| F14 | Mean | 4.50 × 10⁻³ | 1.64 | 5.10 × 10¹ | 1.05 × 10² | 7.70 × 10⁻⁵ | 1.25 × 10² | 2.19 × 10¹ | 2.46 × 10¹ | 8.11 × 10¹ | 2.46 × 10⁻⁶ |
| | Std | 2.87 × 10⁻³ | 9.69 | 1.17 × 10¹ | 1.26 × 10¹ | 8.55 × 10⁻⁴ | 2.23 × 10¹ | 3.88 | 8.16 | 1.41 × 10¹ | 6.63 × 10⁻⁶ |
| | Best | 7.96 × 10⁻⁷ | 6.83 × 10⁻³ | 3.26 × 10¹ | 7.72 × 10¹ | 5.32 × 10⁻¹² | 6.85 × 10¹ | 1.28 × 10¹ | 1.50 × 10¹ | 5.54 × 10¹ | 1.06 × 10⁻¹⁴ |
| F15 | Mean | 1.41 × 10⁻² | 4.92 × 10⁻¹ | 5.00 × 10⁻¹ | 3.95 × 10⁻¹ | 4.32 × 10⁻³ | 1.30 × 10⁻² | 4.99 × 10⁻¹ | 4.88 × 10⁻¹ | 5.00 × 10⁻¹ | 3.13 × 10⁻³ |
| | Std | 2.33 × 10⁻³ | 1.46 × 10⁻¹ | 4.43 × 10⁻⁵ | 1.71 × 10⁻² | 1.45 × 10⁻³ | 2.74 × 10⁻³ | 6.33 × 10⁻⁴ | 2.78 × 10⁻³ | 2.05 × 10⁻⁶ | 1.18 × 10⁻³ |
| | Best | 9.29 × 10⁻³ | 1.91 × 10⁻² | 5.00 × 10⁻¹ | 3.56 × 10⁻¹ | 3.13 × 10⁻³ | 6.22 × 10⁻³ | 4.97 × 10⁻¹ | 4.82 × 10⁻¹ | 5.00 × 10⁻¹ | 2.58 × 10⁻⁶ |
| F16 | Mean | 1.38 × 10⁻¹⁰ | 8.50 | 1.07 × 10³ | 7.13 × 10² | 7.40 × 10⁻¹⁸ | 2.48 × 10⁻¹³ | 7.18 × 10¹ | 1.58 × 10² | 4.92 × 10³ | 1.87 × 10⁻¹⁵ |
| | Std | 7.46 × 10⁻¹¹ | 7.48 × 10¹ | 3.89 × 10² | 9.40 × 10¹ | 7.97 × 10⁻¹⁷ | 1.04 × 10¹ | 3.26 | 9.91 | 1.30 × 10³ | 2.09 × 10⁻¹⁵ |
| | Best | 2.63 × 10⁻¹¹ | 4.28 × 10⁻¹ | 5.92 × 10² | 4.96 × 10² | 0.00 | 0.00 | 6.25 × 10¹ | 1.23 × 10² | 2.89 × 10³ | 0.00 |

| Function | GWO | FWA | IPSO | PSO | AGWO | EGWO | BBO | CSA | MFO | FWGWO |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 0.65625 | 6.1875 | 0.828125 | 0.5625 | 0.59375 | 0.53125 | 10.65625 | 1.296875 | 0.953125 | 1.390625 |
| F2 | 2.0625 | 11.5 | 1.8125 | 1.828125 | 2.09375 | 1.734375 | 13.89063 | 4.890625 | 2.34375 | 2.46875 |
| F3 | 0.375 | 6.75 | 0.234375 | 0.15625 | 0.25 | 0.171875 | 9.46875 | 0.890625 | 0.453125 | 0.8125 |
| F4 | 0.453125 | 5.5625 | 0.25 | 0.1875 | 0.328125 | 0.203125 | 8.671875 | 0.890625 | 0.453125 | 0.671875 |
| F5 | 0.40625 | 6.21875 | 0.21875 | 0.15625 | 0.25 | 0.140625 | 10.57813 | 0.75 | 0.421875 | 0.671875 |
| F6 | 1.03125 | 8.53125 | 0.8125 | 0.796875 | 0.84375 | 0.71875 | 9.09375 | 2.640625 | 1.0625 | 2.5 |
| F7 | 0.609375 | 6.640625 | 0.4375 | 0.375 | 0.515625 | 0.375 | 9.671875 | 1.296875 | 0.625 | 0.90625 |
| F8 | 0.359375 | 5.375 | 0.21875 | 0.15625 | 0.234375 | 0.140625 | 9.4375 | 0.71875 | 0.453125 | 0.671875 |
| F9 | 0.421875 | 6.328125 | 0.3125 | 0.25 | 0.265625 | 0.1875 | 8.28125 | 1.046875 | 0.453125 | 0.734375 |
| F10 | 0.421875 | 7.171875 | 0.3125 | 0.25 | 0.28125 | 0.1875 | 8.3125 | 0.96875 | 0.421875 | 0.703125 |
| F11 | 0.453125 | 6.265625 | 0.328125 | 0.25 | 0.34375 | 0.1875 | 8.609375 | 0.90625 | 0.484375 | 1.484375 |
| F12 | 0.953125 | 7.5625 | 0.828125 | 0.71875 | 1 | 0.65625 | 9.09375 | 2.3125 | 1.046875 | 1.28125 |
| F13 | 1.015625 | 8.046875 | 0.796875 | 0.734375 | 0.890625 | 0.703125 | 9.078125 | 2.359375 | 1.046875 | 1.34375 |
| F14 | 0.515625 | 5.875 | 0.234375 | 0.21875 | 0.21875 | 0.15625 | 8.5625 | 0.953125 | 0.4375 | 0.625 |
| F15 | 0.53125 | 7.25 | 0.265625 | 0.1875 | 0.328125 | 0.15625 | 8.125 | 0.921875 | 0.421875 | 0.65625 |
| F16 | 0.453125 | 6.109375 | 0.296875 | 0.234375 | 0.28125 | 0.1875 | 8.3125 | 1.015625 | 0.484375 | 0.78125 |

| Function | Metric | GWO | FWA | IPSO | PSO | AGWO | EGWO | BBO | CSA | MFO |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 1.82 × 10⁻⁷ | 7.86 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F2 | p-value | 7.86 × 10⁻¹² | 1.65 × 10⁻¹¹ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 7.15 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F3 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F4 | p-value | 2.05 × 10⁻¹⁰ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 1.72 × 10⁻¹⁰ | 6.28 × 10⁻¹⁰ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F5 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F6 | p-value | 7.15 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.29 × 10⁻³ | 1.14 × 10⁻¹¹ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F7 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 2.05 × 10⁻² | 7.08 × 10⁻¹¹ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F8 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 8.45 × 10⁻² | 4.13 × 10⁻¹¹ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |
| F9 | p-value | 3.14 × 10⁻¹² | 1.73 × 10⁻¹² | 1.16 × 10⁻¹² | 1.16 × 10⁻¹² | 1.19 × 10⁻³ | 1.16 × 10⁻¹² | 1.16 × 10⁻¹² | 1.16 × 10⁻¹² | 1.16 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F10 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 1.27 × 10⁻⁷ | 7.86 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F11 | p-value | 5.54 × 10⁻¹³ | 5.54 × 10⁻¹³ | 5.54 × 10⁻¹³ | 5.54 × 10⁻¹³ | 3.62 × 10⁻¹ | 6.72 × 10⁻⁷ | 5.54 × 10⁻¹³ | 5.54 × 10⁻¹³ | 5.54 × 10⁻¹³ |
| | h-value | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |
| F12 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F13 | p-value | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F14 | p-value | 9.47 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 2.36 × 10⁻³ | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² | 6.51 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| F15 | p-value | 2.05 × 10⁻¹⁰ | 6.51 × 10⁻¹² | 1.11 × 10⁻¹⁰ | 6.51 × 10⁻¹² | 1.12 × 10⁻¹ | 4.81 × 10⁻⁸ | 1.01 × 10⁻¹⁰ | 8.47 × 10⁻¹¹ | 1.21 × 10⁻¹⁰ |
| | h-value | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |
| F16 | p-value | 1.71 × 10⁻¹² | 1.71 × 10⁻¹² | 1.71 × 10⁻¹² | 1.71 × 10⁻¹² | 6.75 × 10⁻² | 1.99 × 10⁻¹⁰ | 1.71 × 10⁻¹² | 1.71 × 10⁻¹² | 1.71 × 10⁻¹² |
| | h-value | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yue, Z.; Zhang, S.; Xiao, W. A Novel Hybrid Algorithm Based on Grey Wolf Optimizer and Fireworks Algorithm. Sensors 2020, 20, 2147. https://doi.org/10.3390/s20072147
