Investigating the Use of Pretrained Convolutional Neural Network on Cross-Subject and Cross-Dataset EEG Emotion Recognition

Abstract

1. Introduction

2. Literature Review

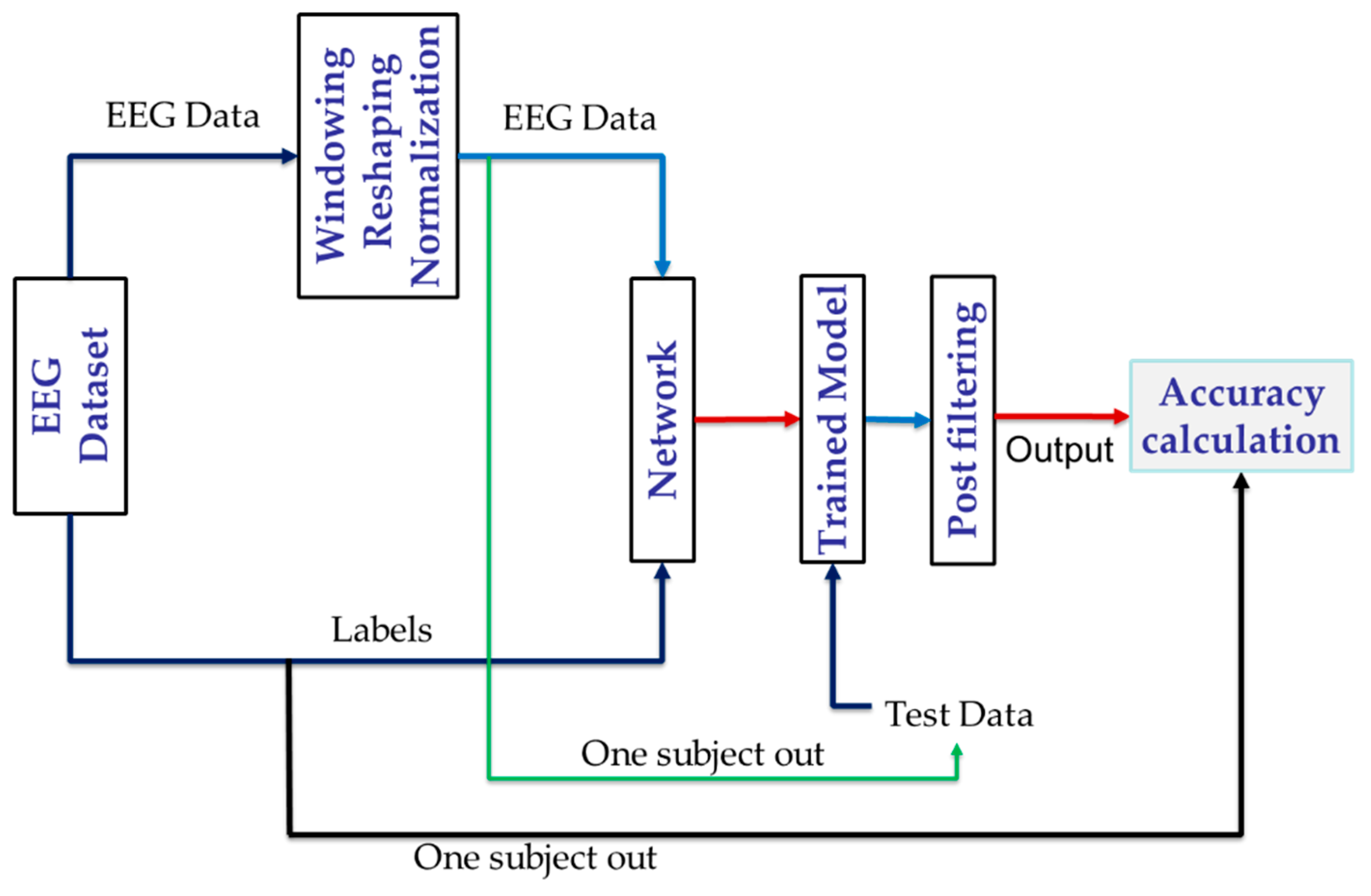

Contributions of the Work

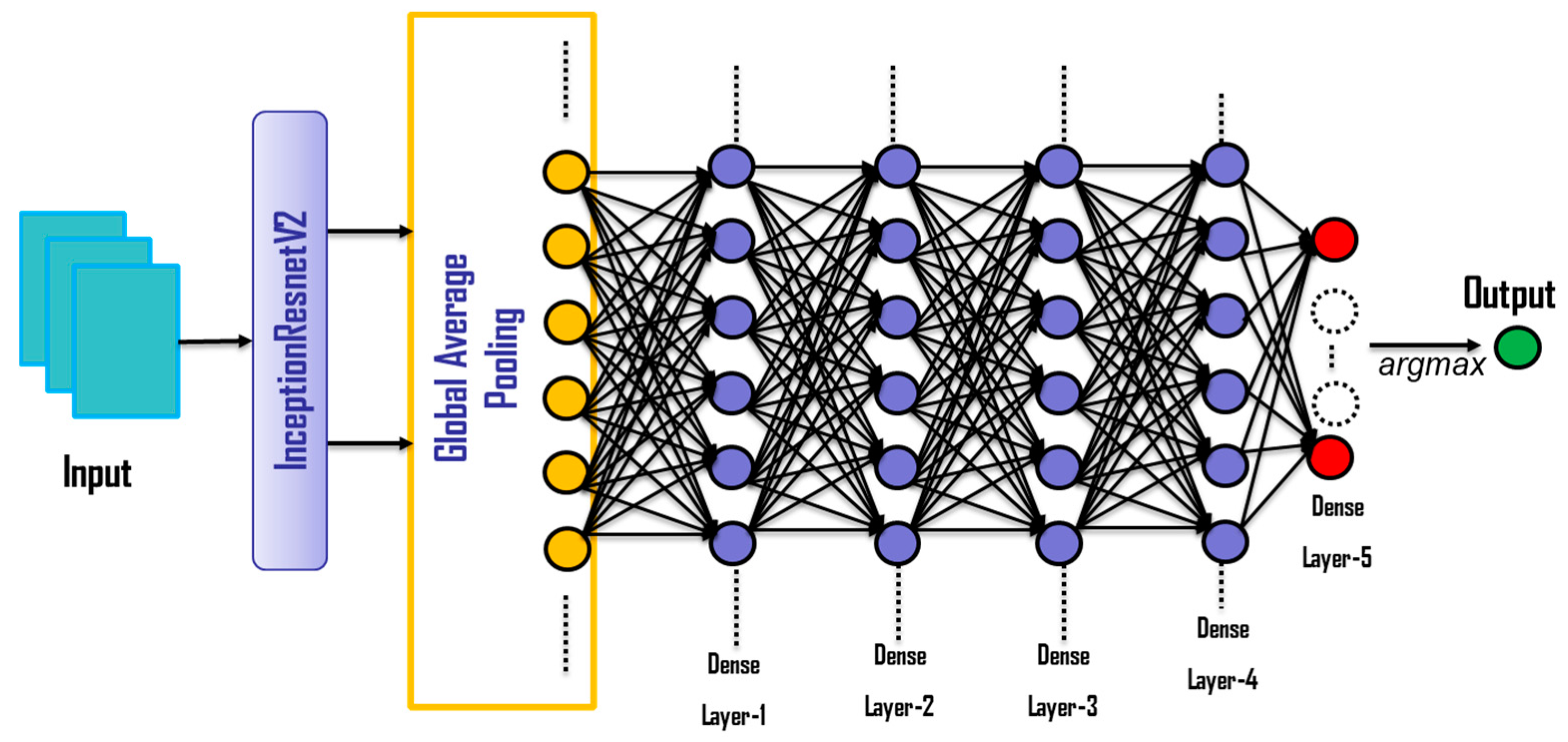

- Feature extraction is left entirely to InceptionResnetV2, a pretrained state-of-the-art CNN model whose feature-extraction capability has been shown to be highly competent in various classification tasks. This enables the model to explore useful and hidden features for classification.

- Data normalization is applied to remove the effects of fluctuations in voltage amplitude and to protect the proposed network against probable ill-conditioned situations.

- Extra pooling and dense layers are added to the pretrained CNN model to increase its depth and thereby enhance its classification capability.

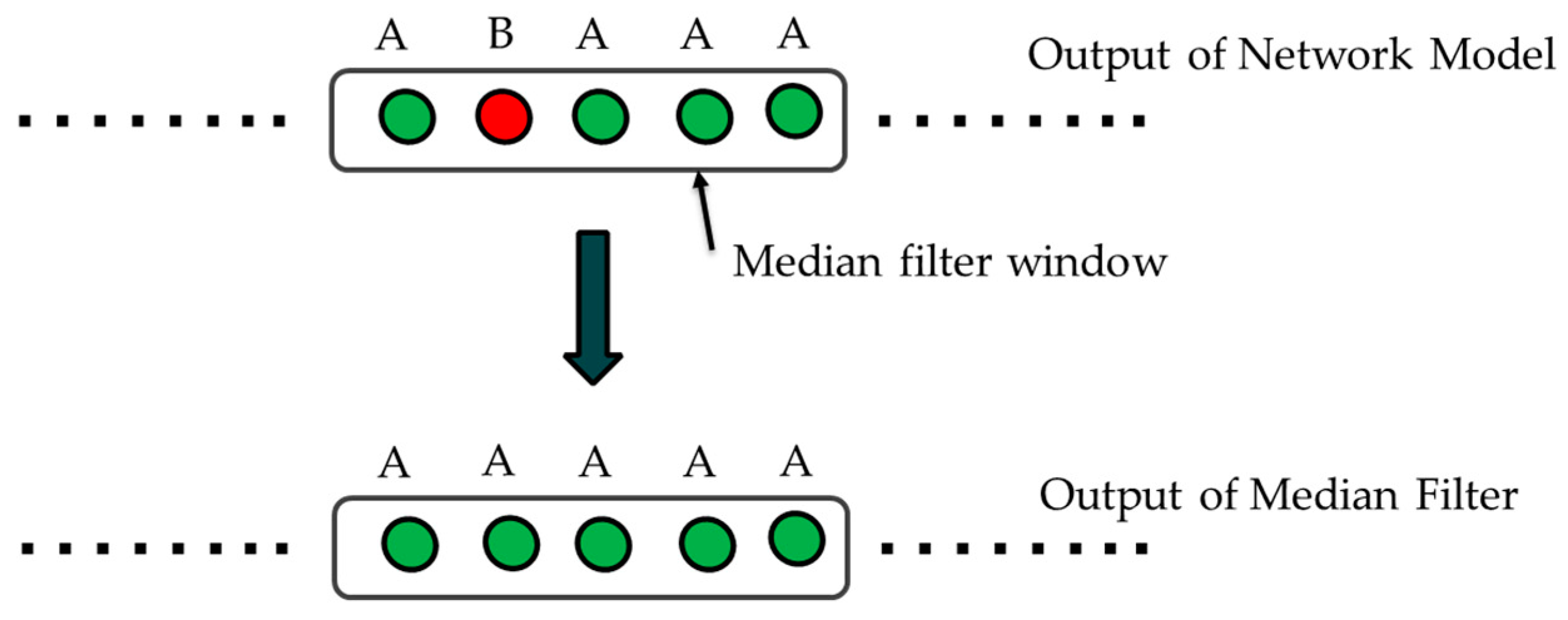

- The output of the network is post-filtered to remove false alarms, which may emerge in short time intervals during which emotions are assumed to remain mostly unchanged.
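
The post-filtering idea in the last contribution can be illustrated with a sliding median over the sequence of predicted class labels. This is a minimal sketch and not the authors' implementation: the function name `median_filter_labels`, the window length, the integer label coding (0 = negative, 1 = neutral, 2 = positive), and the edge handling are all assumptions made for illustration.

```python
from statistics import median_low


def median_filter_labels(labels, kernel_size=5):
    """Smooth a sequence of integer class labels with a sliding median.

    kernel_size is a hypothetical odd window length (not from the source).
    Near the edges the window is simply truncated; median_low keeps the
    result an actual label even when a truncated window has even length.
    """
    if kernel_size < 1 or kernel_size % 2 == 0:
        raise ValueError("kernel_size must be a positive odd integer")
    half = kernel_size // 2
    smoothed = []
    for i in range(len(labels)):
        window = labels[max(0, i - half): i + half + 1]
        smoothed.append(median_low(window))
    return smoothed


# Toy prediction sequence with two isolated "false alarms".
raw = [1, 1, 1, 0, 1, 1, 1, 2, 2, 2, 1, 2, 2]
print(median_filter_labels(raw, kernel_size=3))
```

In the toy sequence the isolated `0` and the isolated `1` are replaced by their neighbours' label, which is the behaviour the bullet describes. A median assumes the labels are ordered (negative < neutral < positive); for unordered classes a sliding majority vote would be a natural substitute.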

3. Materials

3.1. Overview of the SEED Dataset

3.2. Overview of the DEAP Dataset

3.3. Overview of the LUMED Dataset

4. Proposed Method

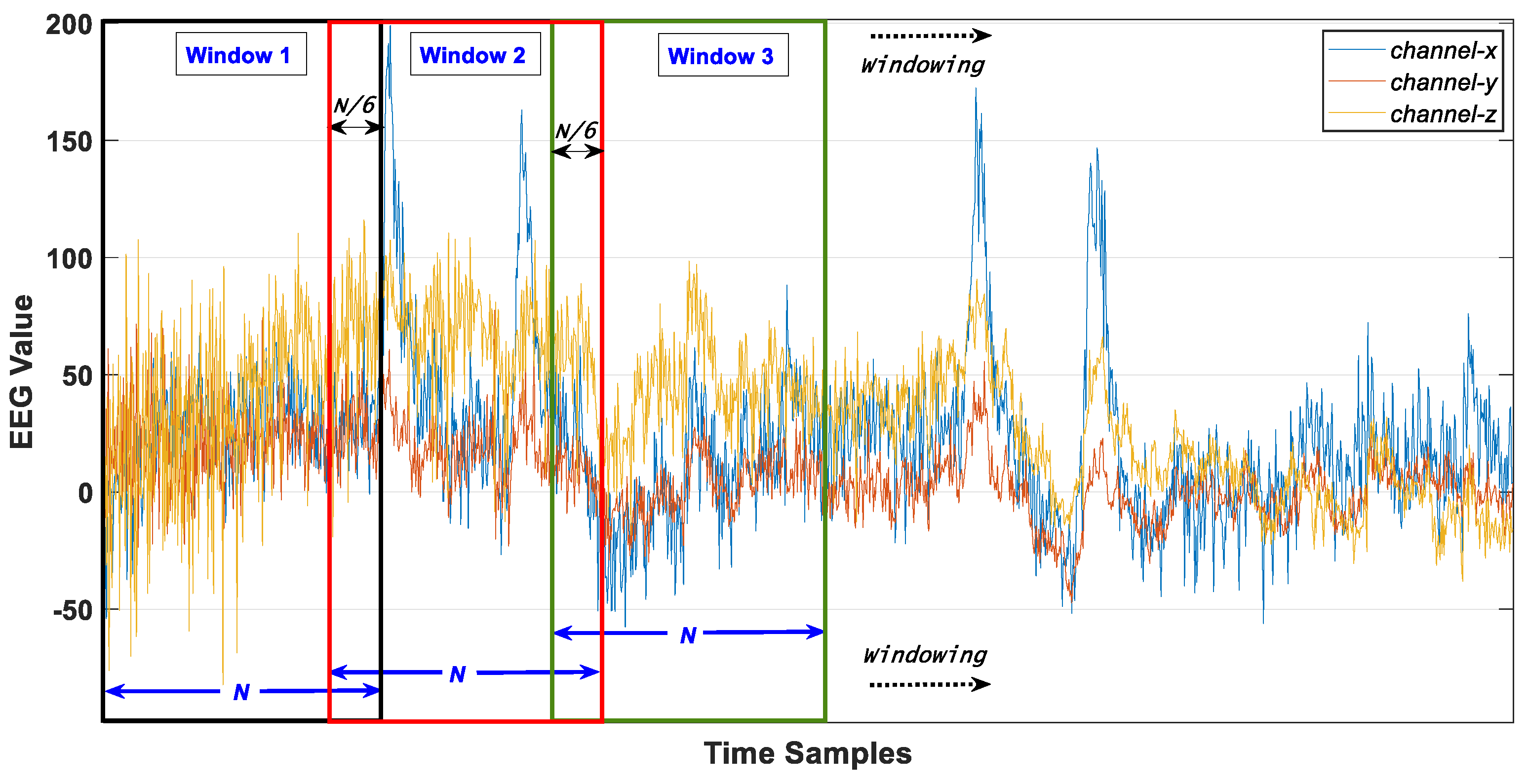

4.1. Windowing of Data

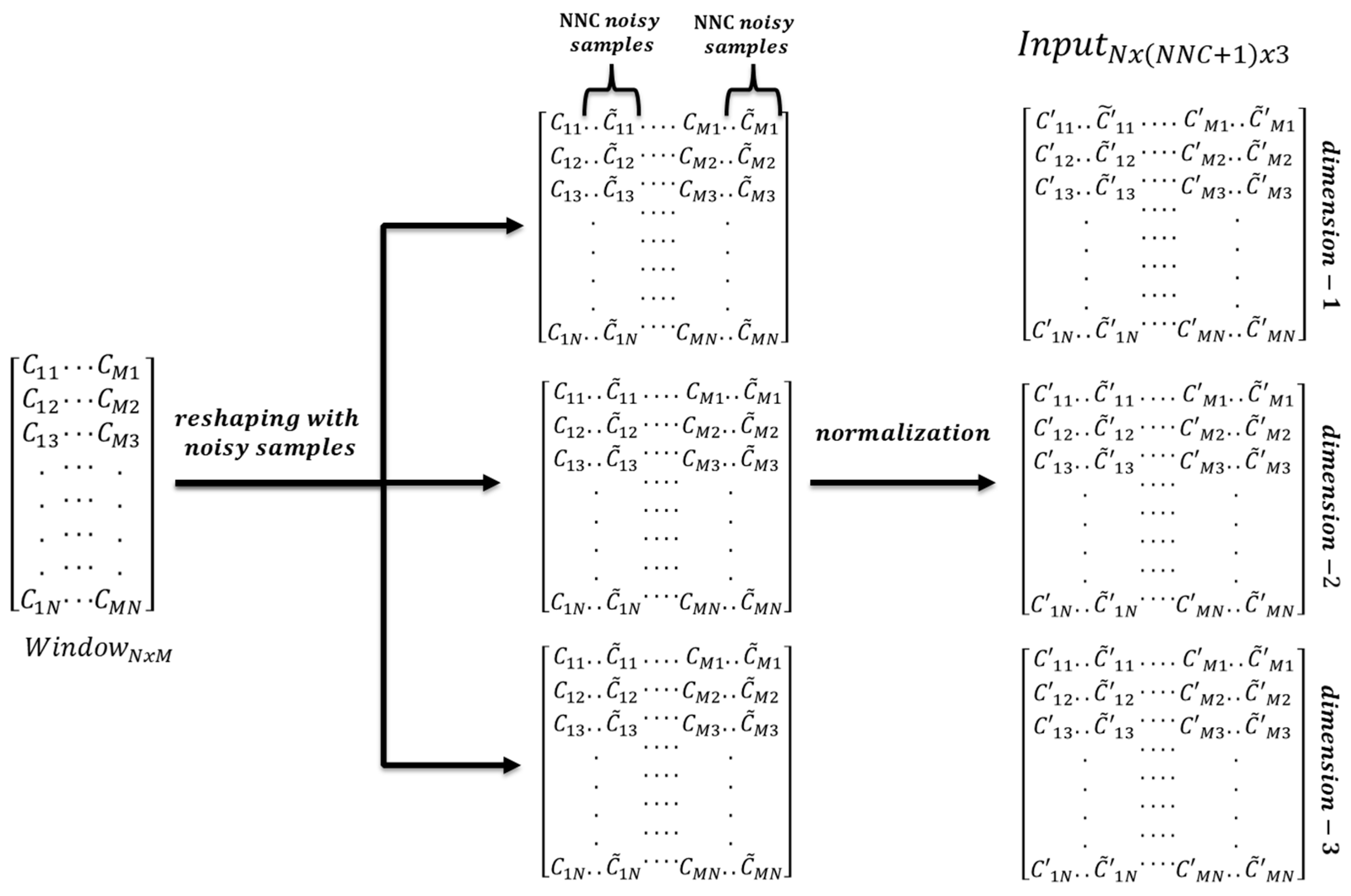

4.2. Data Reshaping

4.3. Normalization

4.4. Channel Selection

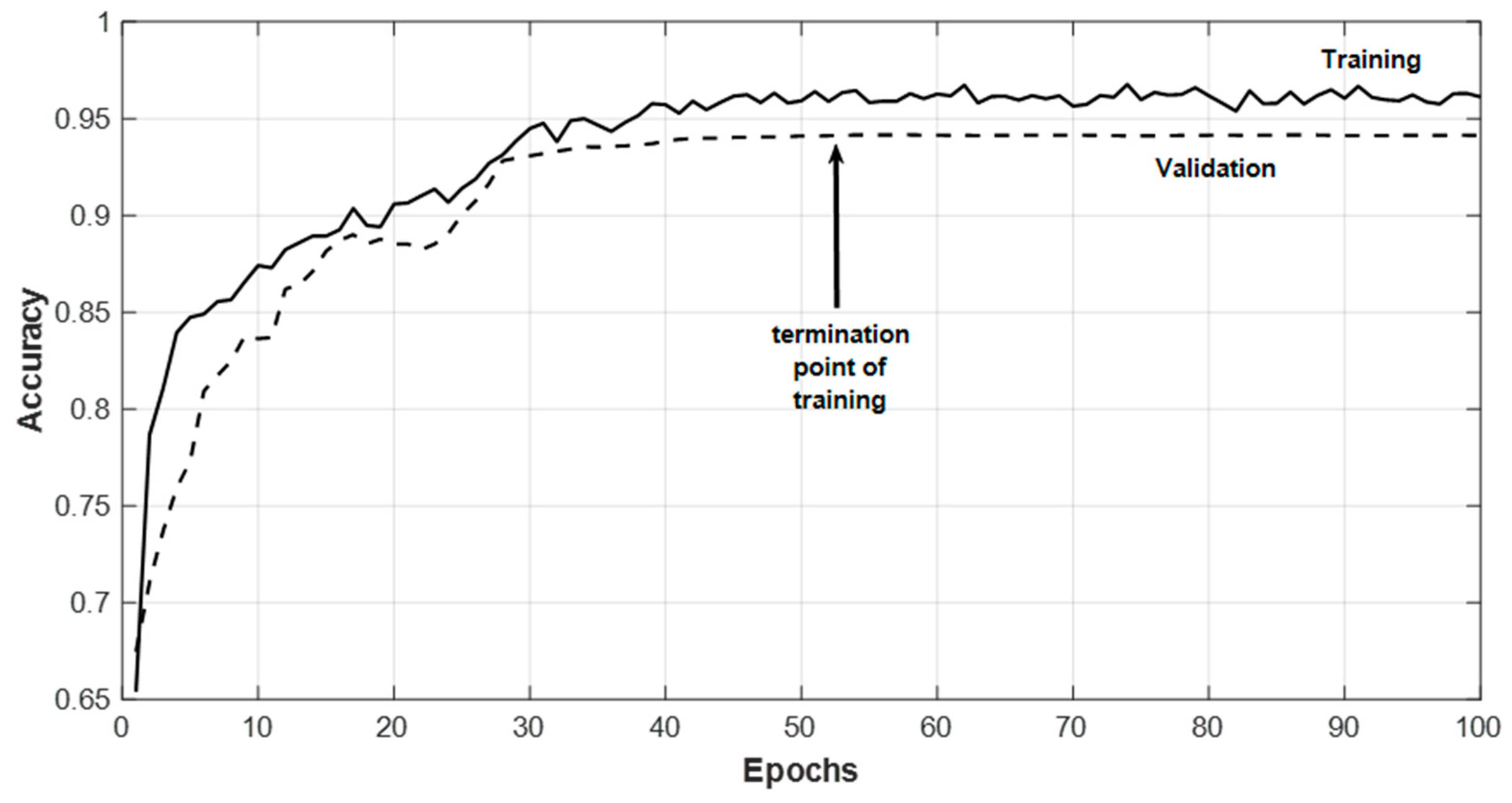

4.5. Network Structure

4.6. Filtering on Output Classes

5. Results and Discussions

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Top 14 EEG Hardware Companies. Available online: https://imotions.com/blog/top-14-eeg-hardware-companies-ranked/ (accessed on 9 February 2020).

- Jurcak, V.; Tsuzuki, D.; Dan, I. 10/20, 10/10, and 10/5 systems revisited: Their validity as relative head-surface-based positioning systems. NeuroImage 2007, 34, 1600–1611.

- Aboalayon, K.A.I.; Faezipour, M.; Almuhammadi, W.S.; Moslehpour, S. Sleep Stage Classification Using EEG Signal Analysis: A Comprehensive Survey and New Investigation. Entropy 2016, 18, 272.

- Acharya, U.; Sree, S.V.; Swapna, G.; Martis, R.J.; Suri, J.S. Automated EEG analysis of epilepsy: A review. Knowl. Based Syst. 2013, 45, 147–165.

- Engemann, D.; Raimondo, F.; King, J.-R.; Rohaut, B.; Louppe, G.; Faugeras, F.; Annen, J.; Cassol, H.; Gosseries, O.; Slezak, D.F.; et al. Robust EEG-based cross-site and cross-protocol classification of states of consciousness. Brain 2018, 141, 3179–3192.

- Arns, M.; Conners, C.K.; Kraemer, H.C. A decade of EEG theta/beta ratio research in ADHD: A meta-analysis. J. Atten. Disord. 2013, 17, 374–383.

- Liu, N.-H.; Chiang, C.-Y.; Chu, H.-C. Recognizing the Degree of Human Attention Using EEG Signals from Mobile Sensors. Sensors 2013, 13, 10273–10286.

- Shestyuk, A.; Kasinathan, K.; Karapoondinott, V.; Knight, R.T.; Gurumoorthy, R. Individual EEG measures of attention, memory, and motivation predict population level TV viewership and Twitter engagement. PLoS ONE 2019, 14, e0214507.

- Mohammadpour, M.; Mozaffari, S. Classification of EEG-based attention for brain computer interface. In Proceedings of the 3rd Iranian Conference on Intelligent Systems and Signal Processing (ICSPIS), Shahrood, Iran, 20–21 December 2017; pp. 34–37.

- So, W.K.Y.; Wong, S.; Mak, J.N.; Chan, R.H.M. An evaluation of mental workload with frontal EEG. PLoS ONE 2017, 12.

- Thejaswini, S.; Ravikumar, K.M.; Jhenkar, L.; Aditya, N.; Abhay, K.K. Analysis of EEG Based Emotion Detection of DEAP and SEED-IV Databases Using SVM. Int. J. Recent Technol. Eng. 2019, 8, 207–211.

- Liu, J.; Meng, H.; Nandi, A.; Li, M. Emotion detection from EEG recordings. In Proceedings of the 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 1722–1727.

- Gómez, A.; Quintero, L.; López, N.; Castro, J. An approach to emotion recognition in single-channel EEG signals: A mother child interaction. J. Phys. Conf. Ser. 2016, 705, 12051.

- Xing, X.; Li, Z.; Xu, T.; Shu, L.; Hu, B.; Xu, X. SAE+LSTM: A New Framework for Emotion Recognition From Multi-Channel EEG. Front. Neurorobot. 2019, 13, 37.

- Müller-Putz, G.; Peicha, L.; Ofner, P. Movement Decoding from EEG: Target or Direction. In Proceedings of the 7th Graz Brain-Computer Interface Conference, Graz, Austria, 18–22 September 2017.

- Padfield, N.; Zabalza, J.; Zhao, H.; Masero, V.; Ren, J. EEG-Based Brain-Computer Interfaces Using Motor-Imagery: Techniques and Challenges. Sensors 2019, 19, 1423.

- Mondini, V.; Mangia, A.L.; Cappello, A. EEG-Based BCI System Using Adaptive Features Extraction and Classification Procedures. Comput. Intell. Neurosci. 2016, 2016, 1–14.

- Picard, R.W. Affective Computing; MIT Media Laboratory, Perceptual Computing: Cambridge, MA, USA, 1995.

- Alarcao, S.M.; Fonseca, M.J. Emotions Recognition Using EEG Signals: A Survey. IEEE Trans. Affect. Comput. 2019, 10, 374–393.

- Ekman, P.; Friesen, W.V.; O’Sullivan, M.; Chan, A.; Diacoyanni-Tarlatzis, I.; Heider, K.; Krause, R.; Lecompte, W.A.; Pitcairn, T.; Ricci-Bitti, P.E.; et al. Universals and cultural differences in the judgments of facial expressions of emotion. J. Pers. Soc. Psychol. 1987, 53, 712–717.

- Scherer, K.R. What are emotions? And how can they be measured? Soc. Sci. Inf. 2005, 44, 695–729.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

- Aydin, S.G.; Kaya, T.; Guler, H. Wavelet-based study of valence-arousal model of emotions on EEG signals with LabVIEW. Brain Inform. 2016, 3, 109–117.

- Paltoglou, G.; Thelwall, M. Seeing Stars of Valence and Arousal in Blog Posts. IEEE Trans. Affect. Comput. 2012, 4, 116–123.

- The McGill Physiology Virtual Lab. Available online: https://www.medicine.mcgill.ca/physio/vlab/biomed_signals/eeg_n.htm (accessed on 9 February 2020).

- Al-Fahoum, A.S.; Al-Fraihat, A.A. Methods of EEG Signal Features Extraction Using Linear Analysis in Frequency and Time-Frequency Domains. ISRN Neurosci. 2014, 2014, 1–7.

- Duan, R.-N.; Zhu, J.-Y.; Lu, B.-L. Differential entropy feature for EEG-based emotion classification. In Proceedings of the 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 5–8 November 2013; pp. 81–84.

- Zheng, W.-L.; Lu, B.-L. Investigating Critical Frequency Bands and Channels for EEG-Based Emotion Recognition with Deep Neural Networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- George, F.P.; Shaikat, I.M.; Hossain, P.S.F.; Parvez, M.Z.; Uddin, J. Recognition of emotional states using EEG signals based on time-frequency analysis and SVM classifier. Int. J. Electr. Comput. Eng. 2019, 9, 1012–1020.

- Soundarya, S. An EEG based emotion recognition and classification using machine learning techniques. Int. J. Emerg. Technol. Innov. Eng. 2019, 5, 744–750.

- Swati, V.; Preeti, S.; Chamandeep, K. Classification of Human Emotions using Multiwavelet Transform based Features and Random Forest Technique. Indian J. Sci. Technol. 2015, 8, 1–7.

- Bono, V.; Biswas, D.; Das, S.; Maharatna, K. Classifying human emotional states using wireless EEG based ERP and functional connectivity measures. In Proceedings of the IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Las Vegas, NV, USA, 24–27 February 2016; pp. 200–203.

- Nattapong, T.; Ken-ichi, F.; Masayuki, N. Application of Deep Belief Networks in EEG-based Dynamic Music-emotion Recognition. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 25–29 July 2016.

- Prieto, L.A.B.; Oplatková, Z.K. Emotion Recognition using AutoEncoders and Convolutional Neural Networks. MENDEL 2018, 24, 113–120.

- Chen, J.X.; Zhang, P.W.; Mao, Z.J.; Huang, Y.F.; Jiang, D.M.; Zhang, Y.N. Accurate EEG-Based Emotion Recognition on Combined Features Using Deep Convolutional Neural Networks. IEEE Access 2019, 7, 44317–44328.

- Tripathi, S.; Acharya, S.; Sharma, R.D.; Mittal, S.; Bhattacharya, S. Using deep and convolutional neural networks for accurate emotion classification on DEAP Dataset. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4746–4752.

- Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Shalaby, M.A. EEG-based emotion recognition using 3D convolutional neural networks. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 329–337.

- Alhagry, S.; Fahmy, A.A.; El-Khoribi, R.A. Emotion Recognition based on EEG using LSTM Recurrent Neural Network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 355–358.

- Jeevan, R.K.; Kumar, P.S.; Srivikas, M.; Rao, S.V.M. EEG-based emotion recognition using LSTM-RNN machine learning algorithm. In Proceedings of the 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019; pp. 1–4.

- Li, M.; Xu, H.; Liu, X.; Lu, S. Emotion recognition from multichannel EEG signals using K-nearest neighbor classification. Technol. Health Care 2018, 26, 509–519.

- DEAP Dataset. Available online: https://www.eecs.qmul.ac.uk/mmv/datasets/deap/ (accessed on 9 February 2020).

- Alazrai, R.; Homoud, R.; Alwanni, H.; Daoud, M.I. EEG-Based Emotion Recognition Using Quadratic Time-Frequency Distribution. Sensors 2018, 18, 2739.

- Nivedha, R.; Brinda, M.; Vasanth, D.; Anvitha, M.; Suma, K.V. EEG based emotion recognition using SVM and PSO. In Proceedings of the International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, India, 6–7 July 2017; pp. 1597–1600.

- Particle Swarm Optimization. Available online: https://www.sciencedirect.com/topics/engineering/particle-swarm-optimization (accessed on 9 February 2020).

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain Adaptation via Transfer Component Analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210.

- Chai, X.; Wang, Q.; Zhao, Y.; Liu, X.; Bai, O.; Li, Y. Unsupervised domain adaptation techniques based on auto-encoder for non-stationary EEG-based emotion recognition. Comput. Biol. Med. 2016, 79, 205–214.

- Yang, F.; Zhao, X.; Jiang, W.; Gao, P.; Liu, G. Multi-method Fusion of Cross-Subject Emotion Recognition Based on High-Dimensional EEG Features. Front. Comput. Neurosci. 2019, 13, 53.

- SEED Dataset. Available online: http://bcmi.sjtu.edu.cn/~seed/ (accessed on 9 February 2020).

- Zhang, W.; Wang, F.; Jiang, Y.; Xu, Z.; Wu, S.; Zhang, Y. Cross-Subject EEG-Based Emotion Recognition with Deep Domain Confusion. ICIRA 2019 Intell. Robot. Appl. 2019, 11740, 558–570.

- Pandey, P.; Seeja, K. Subject independent emotion recognition from EEG using VMD and deep learning. J. King Saud Univ. Comput. Inf. Sci. 2019, 53–58.

- Keelawat, P.; Thammasan, N.; Kijsirikul, B.; Numao, M. Subject-Independent Emotion Recognition During Music Listening Based on EEG Using Deep Convolutional Neural Networks. In Proceedings of the IEEE 15th International Colloquium on Signal Processing & Its Applications (CSPA), Penang, Malaysia, 8–9 March 2019; pp. 21–26.

- Gupta, V.; Chopda, M.D.; Pachori, R.B. Cross-Subject Emotion Recognition Using Flexible Analytic Wavelet Transform From EEG Signals. IEEE Sens. J. 2018, 19, 2266–2274.

- Yin, Z.; Wang, Y.; Liu, L.; Zhang, W.; Zhang, J. Cross-Subject EEG Feature Selection for Emotion Recognition Using Transfer Recursive Feature Elimination. Front. Neurorobot. 2017, 11, 200.

- Zhong, P.; Wang, D.; Miao, C. EEG-Based Emotion Recognition Using Regularized Graph Neural Networks. arXiv 2019. in Press.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Krauledat, M.; Tangermann, M.; Blankertz, B.; Müller, K.-R. Towards Zero Training for Brain-Computer Interfacing. PLoS ONE 2008, 3, e2967.

- Fazli, S.; Popescu, F.; Danóczy, M.; Blankertz, B.; Müller, K.-R.; Grozea, C. Subject-independent mental state classification in single trials. Neural Netw. 2009, 22, 1305–1312.

- Kang, H.; Nam, Y.; Choi, S. Composite Common Spatial Pattern for Subject-to-Subject Transfer. IEEE Signal Process. Lett. 2009, 16, 683–686.

- Zheng, W.-L.; Zhang, Y.-Q.; Zhu, J.-Y.; Lu, B.-L. Transfer components between subjects for EEG-based emotion recognition. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 917–922.

- Zheng, W.-L.; Lu, B.-L. Personalizing EEG-based affective models with transfer learning. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 2732–2738.

- Chai, X.; Wang, Q.; Zhao, Y.; Li, Y.; Wang, Q.; Liu, X.; Bai, O. A Fast, Efficient Domain Adaptation Technique for Cross-Domain Electroencephalography (EEG)-Based Emotion Recognition. Sensors 2017, 17, 1014.

- Lan, Z.; Sourina, O.; Wang, L.; Scherer, R.; Müller-Putz, G.R. Domain Adaptation Techniques for EEG-Based Emotion Recognition: A Comparative Study on Two Public Datasets. IEEE Trans. Cogn. Dev. Syst. 2019, 11, 85–94.

- Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep domain confusion: Maximizing for domain invariance. arXiv 2014, arXiv:1412.3474. preprint.

- Loughborough University EEG based Emotion Recognition Dataset. Available online: https://www.dropbox.com/s/xlh2orv6mgweehq/LUMED_EEG.zip?dl=0 (accessed on 9 February 2020).

- Plöchl, M.; Ossandón, J.P.; König, P. Combining EEG and eye tracking: Identification, characterization, and correction of eye movement artifacts in electroencephalographic data. Front. Hum. Neurosci. 2012, 6.

- Enobio 8. Available online: https://www.neuroelectrics.com/solutions/enobio/8/ (accessed on 9 February 2020).

- Pretrained Deep Neural Networks. Available online: https://uk.mathworks.com/help/deeplearning/ug/pretrained-convolutional-neural-networks.html (accessed on 9 February 2020).

- Keras Applications. Available online: https://keras.io/applications/#inceptionresnetv2 (accessed on 9 February 2020).

- Wang, F.; Zhong, S.-H.; Peng, J.; Jiang, J.; Liu, Y. Data Augmentation for EEG-Based Emotion Recognition with Deep Convolutional Neural Networks. In MultiMedia Modeling, Proceedings of the 24th International Conference, MMM 2018, Bangkok, Thailand, 5–7 February 2018; Schoeffmann, K., Chalidabhongse, T.H., Ngo, C.W., Aramvith, S., O’Connor, N.E., Ho, Y.-S., Gabbouj, M., Elgammal, A., Eds.; Springer: Cham, Switzerland, 2018; Volume 10705, p. 10705.

- Salzman, C.D.; Fusi, S. Emotion, cognition, and mental state representation in amygdala and prefrontal cortex. Annu. Rev. Neurosci. 2010, 33, 173–202.

- Zhao, G.; Zhang, Y.; Ge, Y. Frontal EEG Asymmetry and Middle Line Power Difference in Discrete Emotions. Front. Behav. Neurosci. 2018, 12, 225.

- Bos, D.O. EEG-based Emotion Recognition: The influence of Visual and Auditory Stimuli. 2006. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.226.8188&rep=rep1&type=pdf (accessed on 3 April 2020).

- Alnafjan, A.; Hosny, M.; Al-Wabil, A.; Alohali, Y.A. Classification of Human Emotions from Electroencephalogram (EEG) Signal using Deep Neural Network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 419–425.

- Kawala-Sterniuk, A.; Podpora, M.; Pelc, M.; Blaszczyszyn, M.; Gorzelanczyk, E.J.; Martinek, R.; Ozana, S. Comparison of Smoothing Filters in Analysis of EEG Data for the Medical Diagnostics Purposes. Sensors 2020, 20, 807.

- Tang, C.; Wang, D.; Tan, A.-H.; Miao, C. EEG-Based Emotion Recognition via Fast and Robust Feature Smoothing. In Proceedings of the 2017 International Conference on Brain Informatics, Beijing, China, 16–18 November 2017; pp. 83–92.

- Beedie, C.; Terry, P.; Lane, A. Distinctions between emotion and mood. Cogn. Emot. 2005, 19, 847–878.

- Gong, B.; Shi, Y.; Sha, F.; Grauman, K. Geodesic flow kernel for unsupervised domain adaptation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2066–2073.

- Schölkopf, B.; Smola, A.; Müller, K.-R. Nonlinear Component Analysis as a Kernel Eigenvalue Problem. Neural Comput. 1998, 10, 1299–1319.

- Yan, K.; Kou, L.; Zhang, D. Learning Domain-Invariant Subspace Using Domain Features and Independence Maximization. IEEE Trans. Cybern. 2018, 48, 288–299.

- Fernando, B.; Habrard, A.; Sebban, M.; Tuytelaars, T. Unsupervised Visual Domain Adaptation Using Subspace Alignment. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2960–2967.

- Shi, Y.; Sha, F. Information-Theoretical Learning of Discriminative Clusters for Unsupervised Domain Adaptation. In Proceedings of the 2012 International Conference on Machine Learning (ICML), Edinburgh, Scotland, 26 June–1 July 2012; pp. 1275–1282.

- Matuszewski, J.; Pietrow, D. Recognition of electromagnetic sources with the use of deep neural networks. In Proceedings of the XII Conference on Reconnaissance and Electronic Warfare Systems, Oltarzew, Poland, 19–21 November 2018.

| Layer (Type) | Output Shape | Connected to | Activation Function |

|---|---|---|---|

| Global_Average_Pooling | (None, 1536) | convolution | - |

| Dense 1 | (None, 1024) | Global_Average_Pooling | Relu |

| Dense 2 | (None, 1024) | Dense 1 | Relu |

| Dense 3 | (None, 1024) | Dense 2 | Relu |

| Dense 4 | (None, 512) | Dense 3 | Relu |

| Dense 5 | (None, z) | Dense 4 | Softmax |
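
To get a feel for the size of the layers listed in the table above, the number of trainable parameters in the added dense stack can be worked out from the output shapes alone. The sketch below is illustrative arithmetic, not a figure reported in the source. It assumes that every dense layer carries a bias vector, that the global average pooling layer has no trainable parameters, and that the width of the last layer equals the number of output classes; the helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer (bias assumed)."""
    return n_in * n_out + n_out


def head_param_count(num_classes, pooled_features=1536,
                     hidden=(1024, 1024, 1024, 512)):
    """Total parameters of the dense stack placed after global pooling.

    pooled_features and hidden follow the output shapes in the table;
    num_classes stands in for the width of the final softmax layer.
    """
    widths = [pooled_features, *hidden, num_classes]
    return sum(dense_params(a, b) for a, b in zip(widths, widths[1:]))


for classes in (2, 3):
    print(classes, head_param_count(classes))
```

Under these assumptions the added layers contribute roughly 4.2 million parameters, almost all of them in the first three dense layers; the choice between two and three output classes changes the total by only 513.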

| Property | Value |

|---|---|

| Base model | InceptionResnetV2 |

| Additional layers | Global Average Pooling, 5 Dense Layers |

| Regularization | L2 |

| Optimizer | Adam |

| Loss | Categorical cross entropy |

| Max. # Epochs | 100 |

| Shuffle | True |

| Batch size | 64 |

| Environment | Win 10, 2 Parallel GPU(s), TensorFlow |

| # Output classes | 2 (Pos-Neg) or 3 (Pos-Neu-Neg) |
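
The loss and regularization rows of the table above can be made concrete with a small dependency-free sketch of categorical cross entropy plus an L2 weight penalty. This only illustrates the two formulas. The function names, the penalty coefficient `lam`, and the toy predictions are assumptions; the source does not state the regularization strength or which layers it applies to.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean over samples of -sum_k y_true[k] * log(y_pred[k]).

    y_true holds one-hot rows, y_pred holds softmax probabilities.
    eps guards against log(0).
    """
    total = 0.0
    for true_row, pred_row in zip(y_true, y_pred):
        total -= sum(t * math.log(max(p, eps))
                     for t, p in zip(true_row, pred_row))
    return total / len(y_true)


def l2_penalty(weights, lam):
    """L2 regularization term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


# Toy three-class example (Pos-Neu-Neg); all numbers are made up.
y_true = [[1, 0, 0], [0, 1, 0]]
y_pred = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
toy_weights = [0.5, -0.25, 1.0]

loss = categorical_cross_entropy(y_true, y_pred)
total = loss + l2_penalty(toy_weights, lam=0.01)  # lam is hypothetical
print(round(loss, 4), round(total, 4))
```

The optimizer would minimize the sum of the two terms, so larger weights are accepted only when they reduce the cross-entropy term by more than the penalty they add.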

| Users | Accuracy (Pos-Neg) | Accuracy (without Normalization) (Pos-Neg) | Accuracy (with Filtering) (Pos-Neg) | Accuracy (Pos-Neu-Neg) | Accuracy (without Normalization) (Pos-Neu-Neg) | Accuracy (with Filtering) (Pos-Neu-Neg) |

|---|---|---|---|---|---|---|

| User 1 | 85.7 | 74.2 | 88.5 | 73.3 | 55.2 | 78.2 |

| User 2 | 83.6 | 76.3 | 86.7 | 72.8 | 57.4 | 78.5 |

| User 3 | 69.2 | 56.7 | 74.3 | 61.6 | 53.9 | 67.7 |

| User 4 | 95.9 | 69.4 | 96.1 | 83.4 | 72.4 | 88.3 |

| User 5 | 78.4 | 70.1 | 83.2 | 74.1 | 54.3 | 76.5 |

| User 6 | 95.8 | 81.8 | 96.4 | 85.3 | 67.7 | 89.1 |

| User 7 | 72.9 | 56.2 | 77.7 | 64.4 | 53.5 | 70.3 |

| User 8 | 69.2 | 49.3 | 75.2 | 62.9 | 51.3 | 69.2 |

| User 9 | 88.6 | 61.5 | 90.5 | 79.2 | 64.8 | 82.7 |

| User 10 | 77.8 | 70.1 | 82.7 | 69.3 | 56.0 | 74.5 |

| User 11 | 78.6 | 65.7 | 83.1 | 73.0 | 59.9 | 78.1 |

| User 12 | 81.6 | 72.0 | 85.7 | 75.6 | 63.2 | 78.4 |

| User 13 | 91.2 | 80.2 | 94.2 | 81.3 | 73.1 | 84.9 |

| User 14 | 86.4 | 72.3 | 91.8 | 73.9 | 61.1 | 78.5 |

| User 15 | 89.2 | 73.9 | 92.3 | 75.4 | 60.3 | 80.3 |

| Average | 82.94 | 68.64 | 86.56 | 73.7 | 60.27 | 78.34 |

| Std.Dev. | 8.32 | 8.88 | 6.94 | 6.80 | 6.59 | 6.11 |

| Work | Accuracy (Pos-Neg) | Accuracy (Pos-Neu-Neg) |

|---|---|---|

| ST-SBSSVM [48] | 89.0 | - |

| RGNN [55] | - | 85.3 |

| Proposed | 86.5 | 78.3 |

| CNN-DDC [50] | - | 82.1 |

| ASFM [62] | - | 80.4 |

| SAAE [47] | - | 77.8 |

| TCA [46] | - | 71.6 |

| GFK [78] | 67.5 | |

| KPCA [79] | 62.4 | |

| MIDA [80] | 72.4 | |

| Users | Accuracy | Accuracy (with Median Filtering) |

|---|---|---|

| User 1 | 65.1 | 69.2 |

| User 2 | 71.2 | 73.4 |

| User 3 | 67.8 | 69.1 |

| User 4 | 61.7 | 65.3 |

| User 5 | 73.1 | 75.9 |

| User 6 | 82.5 | 85.4 |

| User 7 | 75.5 | 77.2 |

| User 8 | 67.6 | 71.3 |

| User 9 | 62.8 | 67.9 |

| User 10 | 61.9 | 66.6 |

| User 11 | 68.8 | 72.5 |

| User 12 | 64.3 | 69.8 |

| User 13 | 69.1 | 74.9 |

| User 14 | 64.3 | 68.8 |

| User 15 | 65.6 | 70.2 |

| User 16 | 68.7 | 72.1 |

| User 17 | 65.6 | 70.7 |

| User 18 | 75.8 | 78.3 |

| User 19 | 66.9 | 72.1 |

| User 20 | 70.4 | 73.2 |

| User 21 | 64.5 | 68.8 |

| User 22 | 61.6 | 68.3 |

| User 23 | 80.7 | 83.6 |

| User 24 | 62.5 | 69.4 |

| User 25 | 64.9 | 70.1 |

| User 26 | 69.7 | 72.9 |

| User 27 | 82.7 | 85.3 |

| User 28 | 68.9 | 73.8 |

| User 29 | 61.7 | 69.9 |

| User 30 | 72.9 | 77.7 |

| User 31 | 73.1 | 78.4 |

| User 32 | 63.6 | 68.1 |

| Average | 68.60 | 72.81 |

| Std. Dev. | 5.85 | 5.07 |

| Work | Accuracy |

|---|---|

| FAWT [53] | 79.9 |

| T-RFE [54] | 78.7 |

| Proposed | 72.8 |

| ST-SBSSVM [48] | 72 |

| VMD-DNN [51] | 62.5 |

| MIDA [80] | 48.9 |

| TCA [46] | 47.2 |

| SA [81] | 38.7 |

| ITL [82] | 40.5 |

| GFK [78] | 46.5 |

| KPCA [79] | 39.8 |

| Users | Accuracy | Accuracy (with Filtering) |

|---|---|---|

| User 1 | 85.8 | 87.1 |

| User 2 | 56.3 | 62.7 |

| User 3 | 82.2 | 86.4 |

| User 4 | 73.8 | 78.5 |

| User 5 | 92.1 | 95.3 |

| User 6 | 67.8 | 74.1 |

| User 7 | 66.3 | 71.4 |

| User 8 | 89.7 | 93.5 |

| User 9 | 86.3 | 89.9 |

| User 10 | 89.1 | 93.4 |

| User 11 | 58.9 | 67.6 |

| Average | 77.11 | 81.80 |

| Std. Dev. | 12.40 | 10.92 |
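
The summary rows of the 11-user table above can be checked directly from the per-user values. The sketch below recomputes the mean and the standard deviation for both columns. It is a sanity check and not part of the original analysis, and the helper name `summarize` is hypothetical. The reported Std. Dev. values are consistent with the population formula (division by N), so `statistics.pstdev` is used; the sample formula would give larger values.

```python
from statistics import mean, pstdev

# Per-user accuracies copied from the table above.
accuracy = [85.8, 56.3, 82.2, 73.8, 92.1, 67.8,
            66.3, 89.7, 86.3, 89.1, 58.9]
accuracy_filtered = [87.1, 62.7, 86.4, 78.5, 95.3, 74.1,
                     71.4, 93.5, 89.9, 93.4, 67.6]


def summarize(values):
    """Return (mean, population standard deviation) of the values."""
    return mean(values), pstdev(values)


for name, column in (("raw", accuracy), ("filtered", accuracy_filtered)):
    avg, std = summarize(column)
    print(f"{name}: mean={avg:.3f}, std={std:.3f}")
```

The recomputed values agree with the table to within 0.01. The small differences in the last digit suggest the table truncates rather than rounds, though this is an inference from the arithmetic and not something the source states.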

| Users | Accuracy (Pos-Neg) | Accuracy (with Median Filtering) (Pos-Neg) |

|---|---|---|

| User 1 | 50.5 | 54.9 |

| User 2 | 61.7 | 63.7 |

| User 3 | 43.3 | 47.3 |

| User 4 | 46.0 | 51.5 |

| User 5 | 68.9 | 71.9 |

| User 6 | 45.3 | 49.4 |

| User 7 | 73.4 | 77.2 |

| User 8 | 51.9 | 56.3 |

| User 9 | 62.3 | 67.9 |

| User 10 | 63.8 | 68.6 |

| User 11 | 48.6 | 53.6 |

| User 12 | 46.4 | 51.3 |

| User 13 | 50.1 | 57.1 |

| User 14 | 70.4 | 76.9 |

| User 15 | 58.8 | 62.8 |

| User 16 | 59.7 | 66.3 |

| User 17 | 46.6 | 53.1 |

| User 18 | 64.7 | 68.5 |

| User 19 | 47.9 | 53.3 |

| User 20 | 39.1 | 44.6 |

| User 21 | 62.1 | 68.8 |

| User 22 | 45.6 | 51.3 |

| User 23 | 61.4 | 69.9 |

| User 24 | 54.0 | 59.2 |

| User 25 | 50.8 | 56.3 |

| User 26 | 40.8 | 44.7 |

| User 27 | 39.2 | 45.3 |

| User 28 | 42.4 | 48.4 |

| User 29 | 46.2 | 50.3 |

| User 30 | 41.7 | 46.2 |

| User 31 | 61.4 | 65.7 |

| User 32 | 53.8 | 57.1 |

| Average | 53.08 | 58.10 |

| Std. Dev. | 9.54 | 9.51 |

| Work | Accuracy (Pos-Neg) | Standard Deviation |

|---|---|---|

| Proposed | 58.10 | 9.51 |

| MIDA [80] | 47.1 | 10.60 |

| TCA [46] | 42.6 | 14.69 |

| SA [81] | 37.3 | 7.90 |

| ITL [82] | 34.5 | 13.17 |

| GFK [78] | 41.9 | 11.33 |

| KPCA [79] | 35.6 | 6.97 |

| Users (LUMED) | Trained on SEED: Accuracy (Pos-Neg) | Trained on SEED: Accuracy (with Median Filtering) (Pos-Neg) | Trained on DEAP: Accuracy (Pos-Neg) | Trained on DEAP: Accuracy (with Median Filtering) (Pos-Neg) |

|---|---|---|---|---|

| User 1 | 68.2 | 72.3 | 42.7 | 48.3 |

| User 2 | 54.5 | 61.7 | 50.4 | 56.8 |

| User 3 | 54.3 | 59.6 | 49.7 | 54.1 |

| User 4 | 59.6 | 64.1 | 51.3 | 57.9 |

| User 5 | 44.8 | 53.7 | 87.1 | 89.7 |

| User 6 | 67.1 | 73.5 | 52.6 | 58.4 |

| User 7 | 53.2 | 60.8 | 53.8 | 59.2 |

| User 8 | 64.5 | 71.2 | 46.0 | 49.3 |

| User 9 | 48.6 | 50.9 | 84.7 | 85.6 |

| User 10 | 64.9 | 76.3 | 51.5 | 58.8 |

| User 11 | 57.1 | 64.8 | 63.8 | 67.1 |

| Average | 57.89 | 64.44 | 57.6 | 62.29 |

| Std. Dev. | 7.33 | 7.82 | 14.23 | 12.91 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cimtay, Y.; Ekmekcioglu, E. Investigating the Use of Pretrained Convolutional Neural Network on Cross-Subject and Cross-Dataset EEG Emotion Recognition. Sensors 2020, 20, 2034. https://doi.org/10.3390/s20072034