The Effect of a Diverse Dataset for Transfer Learning in Thermal Person Detection

Abstract

1. Introduction

2. Related Work

2.1. Multimodal Approaches

2.2. Thermal Approaches

2.3. Datasets

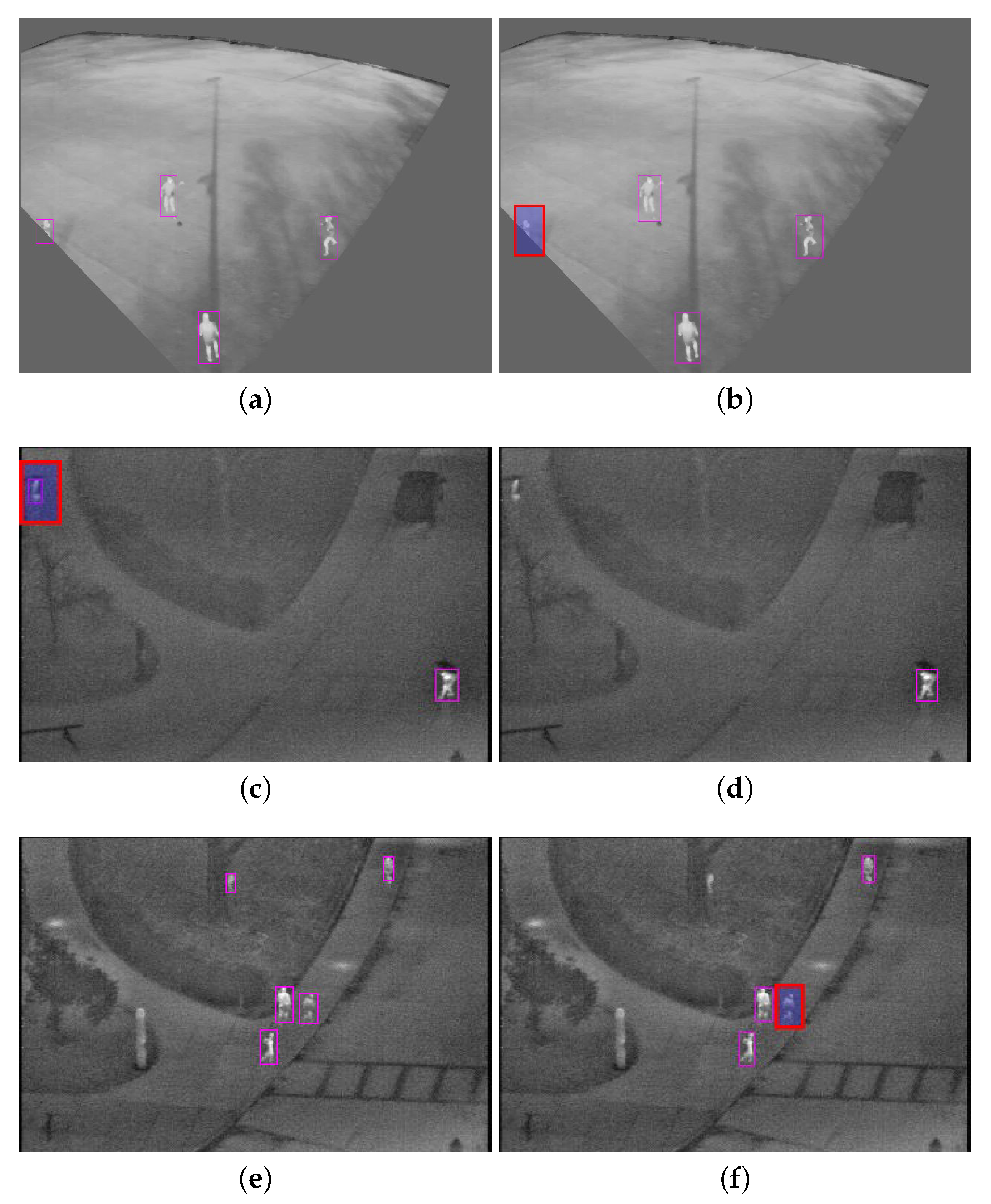

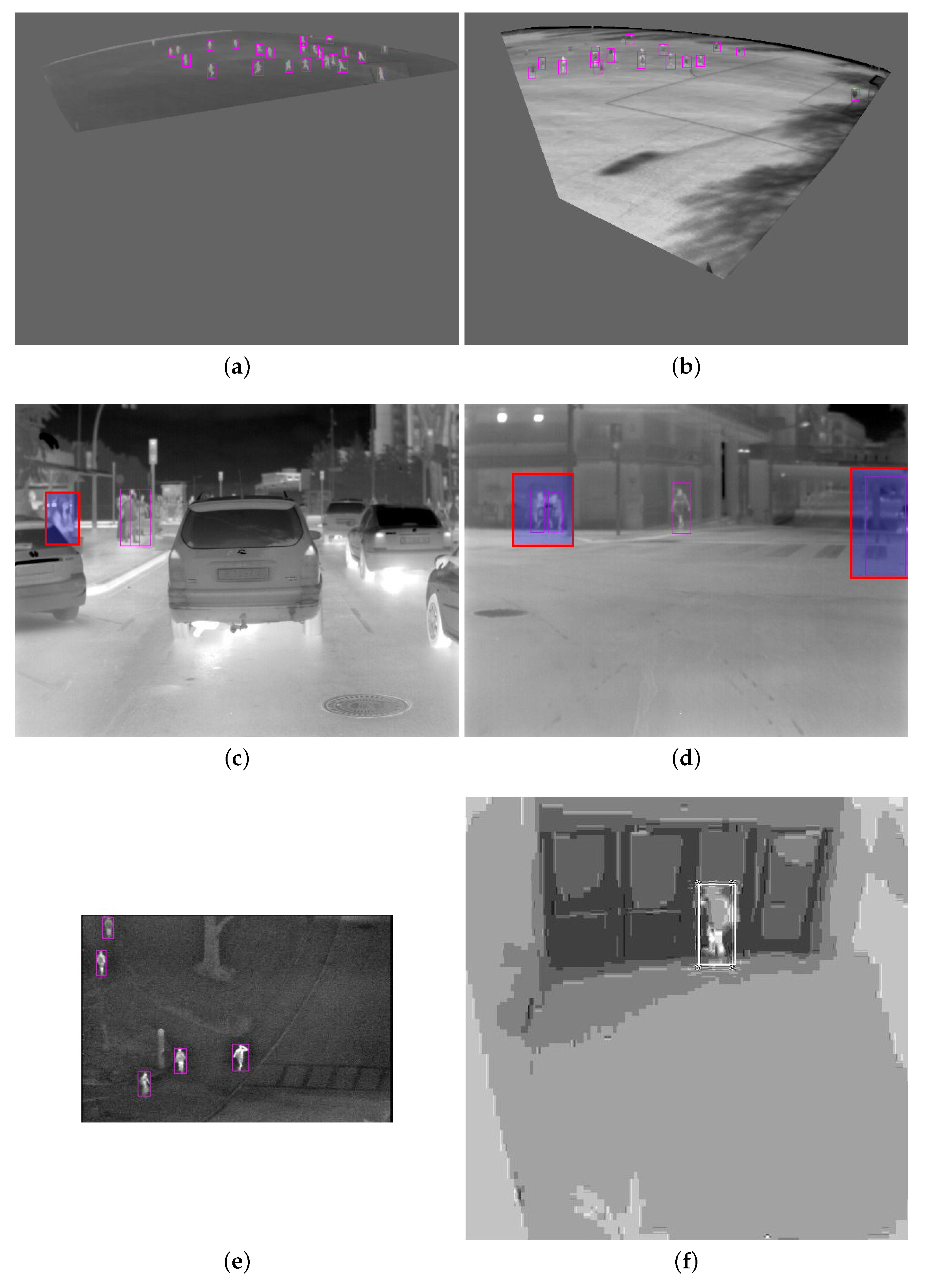

3. Novel Dataset

3.1. Data Recording

3.2. Data Description

4. Investigating the Role of Training Data

4.1. Assessment Protocol

4.2. Evaluation

4.3. Results on Publicly Available Datasets

5. Conclusions

Author Contributions

Conflicts of Interest

References

1. Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1037–1045.

2. Li, W.; Zheng, D.; Zhao, T.; Yang, M. An effective approach to pedestrian detection in thermal imagery. In Proceedings of the International Conference on Natural Computation, Chongqing, China, 29–31 May 2012; pp. 325–329.

3. Teutsch, M.; Mueller, T.; Huber, M.; Beyerer, J. Low resolution person detection with a moving thermal infrared camera by hot spot classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Columbus, OH, USA, 24–27 June 2014; pp. 209–216.

4. Zhang, H.; Zhao, B.; Tang, L.; Li, J. Variational based contour tracking in infrared imagery. In Proceedings of the International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–5.

5. Herrmann, C.; Müller, T.; Willersinn, D.; Beyerer, J. Real-Time Person Detection in Low-Resolution Thermal Infrared Imagery with MSER and CNNs; SPIE: Bellingham, WA, USA, 2016; Volume 9987.

6. Tumas, P.; Jonkus, A.; Serackis, A. Acceleration of HOG based pedestrian detection in FIR camera video stream. In Proceedings of the IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), Vilnius, Lithuania, 26 April 2018; pp. 1–4.

7. Heo, D.; Lee, E.; Ko, B.C. Pedestrian detection at night using deep neural networks and saliency maps. Electron. Imaging 2018, 2018, 060403.

8. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

9. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

10. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin, Germany, 2016; pp. 21–37.

11. Cioppa, A.; Deliège, A.; Van Droogenbroeck, M. A bottom-up approach based on semantics for the interpretation of the main camera stream in soccer games. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1765–1774.

12. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

13. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

14. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Lake Tahoe, NV, USA, 2012; pp. 1097–1105.

15. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

16. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin, Germany, 2014; pp. 740–755.

17. Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016.

18. Davis, J.W.; Keck, M.A. A two-stage template approach to person detection in thermal imagery. In Proceedings of the IEEE Workshops on Applications of Computer Vision, Breckenridge, CO, USA, 5–7 January 2005; Volume 1, pp. 364–369.

19. Lahmyed, R.; El Ansari, M.; Ellahyani, A. A new thermal infrared and visible spectrum images based pedestrian detection system. Multimed. Tools Appl. 2019, 78, 15861–15885.

20. Davis, J.W.; Sharma, V. Background-subtraction using contour based fusion of thermal and visible imagery. Comput. Vis. Image Underst. 2007, 106, 162–182.

21. Video Analytics Dataset. Available online: https://www.ino.ca/en/technologies/video-analytics-dataset/ (accessed on 26 June 2019).

22. Torabi, A.; Massé, G.; Bilodeau, G.A. An iterative integrated framework for thermal–visible image registration, sensor fusion, and people tracking for video surveillance applications. Comput. Vis. Image Underst. 2012, 116, 210–221.

23. Fritz, K.; König, D.; Klauck, U.; Teutsch, M. Generalization Ability of Region Proposal Networks for Multispectral Person Detection; SPIE Defense + Commercial Sensing: Baltimore, MD, USA, 2019; Volume 10988.

24. Dollár, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743–761.

25. Zhang, S.; Benenson, R.; Schiele, B. CityPersons: A diverse dataset for pedestrian detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4457–4465.

26. Socarras, Y.; Ramos, S.; Vázquez, D.; López, A.; Gevers, T. Adapting pedestrian detection from synthetic to far infrared images. In Proceedings of the International Conference on Computer Vision (ICCV) Workshop, Sydney, Australia, 1–8 December 2013.

27. Ha, Q.; Watanabe, K.; Karasawa, T.; Ushiku, Y.; Harada, T. MFNet: Towards real-time semantic segmentation for autonomous vehicles with multi-spectral scenes. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 5108–5115.

28. Li, C.; Song, D.; Tong, R.; Tang, M. Multispectral pedestrian detection via simultaneous detection and segmentation. In Proceedings of the British Machine Vision Conference (BMVC), Newcastle, UK, 3–6 September 2018.

29. Cuerda, J.S.; Caballero, A.F.; López, M.T. Selection of a visible-light vs. thermal infrared sensor in dynamic environments based on confidence measures. Appl. Sci. 2014, 4, 331–350.

30. Gade, R.; Moeslund, T.B. Thermal cameras and applications: A survey. Mach. Vis. Appl. 2013, 25, 245–262.

31. Dai, C.; Zheng, Y.; Li, X. Layered representation for pedestrian detection and tracking in infrared imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–26 June 2005.

32. Miezianko, R.; Pokrajac, D. People detection in low resolution infrared videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Anchorage, AK, USA, 23–28 June 2008; pp. 1–6.

33. Jüngling, K.; Arens, M. Feature based person detection beyond the visible spectrum. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Miami, FL, USA, 20–25 June 2009; pp. 30–37.

34. Teutsch, M.; Müller, T. Hot spot detection and classification in LWIR videos for person recognition. In Automatic Target Recognition XXIII; SPIE: Bellingham, WA, USA, 2013; Volume 8744.

35. Wang, J.; Bebis, G.; Miller, R. Robust video based surveillance by integrating target detection with tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshop, New York, NY, USA, 17–22 June 2006; p. 137.

36. Zhang, L.; Wu, B.; Nevatia, R. Pedestrian detection in infrared images based on local shape features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 18–23 June 2007; pp. 1–8.

37. Gade, R.; Jørgensen, A.; Moeslund, T.B. Long-term occupancy analysis using Graph-Based Optimisation in thermal imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; IEEE Computer Society Press: Los Alamitos, CA, USA, 2013; pp. 3698–3705.

38. Gade, R.; Jørgensen, A.; Moeslund, T.B. Occupancy analysis of sports arenas using thermal imaging. In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP), Rome, Italy, 24–26 February 2012; SCITEPRESS Digital Library: Setubal, Portugal, 2012; pp. 277–283.

39. Huda, N.; Jensen, K.; Gade, R.; Moeslund, T. Estimating the Number of Soccer Players using Simulation based Occlusion Handling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1937–1946.

40. Gade, R.; Moeslund, T.B. Constrained multi-target tracking for team sports activities. IPSJ Trans. Comput. Vis. Appl. 2018.

41. Palmero, C.; Clapés, A.; Bahnsen, C.; Møgelmose, A.; Moeslund, T.B.; Escalera, S. Multi-modal RGB–depth–thermal human body segmentation. Int. J. Comput. Vis. 2016, 118, 217–239.

42. González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian detection at day/night time with visible and FIR cameras: A comparison. Sensors 2016, 16, 820.

43. St-Charles, P.L.; Bilodeau, G.A.; Bergevin, R. Online mutual foreground segmentation for multispectral stereo videos. Int. J. Comput. Vis. 2019, 127, 1044–1062.

44. Portmann, J.; Lynen, S.; Chli, M.; Siegwart, R. People detection and tracking from aerial thermal views. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1794–1800.

45. Olmeda, D.; Premebida, C.; Nunes, U.; Armingol, J.M.; Escalera, A.d.l. LSI far Infrared Pedestrian Dataset; Universidad Carlos III de Madrid: Madrid, Spain, 2019.

46. Wu, Z.; Fuller, N.W.; Theriault, D.H.; Betke, M. A thermal infrared video benchmark for visual analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 201–208.

47. Felsberg, M.; Berg, A.; Hager, G.; Ahlberg, J.; Kristan, M.; Matas, J.; Leonardis, A.; Cehovin, L.; Fernandez, G.; Vojir, T.; et al. The thermal infrared visual object tracking VOT-TIR2015 challenge results. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 76–88.

48. Image Labeler MATLAB 2019. Available online: https://www.mathworks.com/help/vision/ug/get-started-with-the-image-labeler.html (accessed on 3 November 2019).

49. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? arXiv 2014, arXiv:1411.1792.

| Name | # of Frames | # of Sequences | Viewpoint | Application Area/Main Scene Characteristics | Camera/Image Specifications |
|---|---|---|---|---|---|
| KAIST [1] | 95 k | | Near front | Outdoor traffic, day and night, multispectral | 640 × 480, 20 Hz |
| OSU Color Thermal (CT) [20] | 17 k | | Far top | Outdoor walkway | Raytheon PalmIR 250D, 320 × 240, 30 Hz |
| AAU-VAP TPD [41] | 5.7 k | 3 | Near front | Indoor office | Axis Q1922, 640 × 480, 30 Hz |
| LITIV-VAP [22] | 4.3 k | | Near front | Indoor hall | |
| CVC-09 [26] | 11 k | | Near | Traffic pedestrian, day and night | 640 × 480 |
| CVC-14 [42] | 7.7 k | | Near | Traffic pedestrian, day and night | |
| LITIV-2018 [43] | | 3 | Near front | Indoor hall | |
| OSU Thermal (T) [18] | 0.2 k | | Far top | Outdoor pedestrian; haze, fair, light rain, partially cloudy | Raytheon 300D, 320 × 240, 30 Hz |
| ASL-TID [44] | 4.3 k | 8 | Varied | Outdoor; varied background, person, cat, horse | FLIR Tau, 324 × 256 |
| Terravic Motion IR [32] | 23.7 k | 18 | Varied | Outdoor tracking, surveillance, indoor hallway, plane tracking, underwater and near-surface motion, background motion | Raytheon L-3 Thermal-eye 2000AS, 320 × 240 |
| LSI Dataset [45] | 15.2 k | 13 | | Outdoor pedestrian | Indigo Omega imager, 164 × 129 |
| BU-TIV [46]: Atrium | 7.9 k | 2 | Near | Indoor atrium | 512 × 512 |
| BU-TIV [46]: Lab | 26.7 k | 3 | Near | Indoor and | 512 × 512 |
| BU-TIV [46]: Marathon | 1 k | | Very far | Outdoor marathon | 1024 × 640 |
| VOT-TIR 2015 [47]: Birds | 270 | 1 | Near front | Fair outdoor | 640 × 480, 30 Hz |
| VOT-TIR 2015 [47]: Crossing | 301 | 1 | Near top | Fair outdoor | 640 × 480, 30 Hz |
| VOT-TIR 2015 [47]: Crouching | 618 | 1 | Near front | Outdoor roadside | 640 × 480, 30 Hz |
| VOT-TIR 2015 [47]: Crowd | 71 | 1 | Near front | Outdoor roadside, occluded | 640 × 512, 30 Hz |
| VOT-TIR 2015 [47]: Street | 172 | 1 | Far front | Outdoor street | 640 × 480, 30 Hz |
| VOT-TIR 2015 [47]: Saturated | 218 | 1 | Near front | Outdoor street, occluded | 640 × 480, 30 Hz |
| VOT-TIR 2015 [47]: Mixed distractor | 270 | 1 | Near front | Indoor | 527 × 422, 30 Hz |
| VOT-TIR 2015 [47]: Hiding | 358 | 1 | Near front | Indoor | 263 × 210, 30 Hz |
| VOT-TIR 2015 [47]: Garden | 676 | 1 | Near top | Outdoor garden | 324 × 256, 30 Hz |
| VOT-TIR 2015 [47]: Depth-wise crossing | 851 | 1 | Medium top | Outdoor fair roadside | 640 × 480, 30 Hz |
| VOT-TIR 2015 [47]: Trees | 665 | 1 | Far top | Outdoor dark | 640 × 480, 30 Hz |
| Thermal soccer dataset [40] | 3000 | 4 | Near top | Indoor soccer arena | 640 × 480, 30 Hz |

| Category | Phenomena | # of Frames | # of Persons |
|---|---|---|---|
| Viewpoint | Good condition | 122 | 632 |
| | Far viewpoint | 64 | 652 |
| Heat effects | Opposite temperature | 72 | 792 |
| | Similar temperature | 107 | 644 |
| Image artifacts | Low resolution | 158 | 734 |
| | Occlusion | 20 | 305 |
| Weather effects | Shadow | 171 | 742 |
| | Snow | 1060 | 168 |
| | Wind | 167 | 921 |
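The frame and person counts above can be combined into the average number of annotated persons per frame for each phenomenon, which gives a rough sense of how crowded each subset is. The following Python sketch does this; the dictionary `PHENOMENA` and the helper `persons_per_frame` are illustrative names, and the rows are deliberately not summed because the table does not state whether a frame can belong to more than one phenomenon.

```python
# Frame and person counts per phenomenon, copied from the table above.
PHENOMENA = {
    "Good condition": (122, 632),
    "Far viewpoint": (64, 652),
    "Opposite temperature": (72, 792),
    "Similar temperature": (107, 644),
    "Low resolution": (158, 734),
    "Occlusion": (20, 305),
    "Shadow": (171, 742),
    "Snow": (1060, 168),
    "Wind": (167, 921),
}


def persons_per_frame(counts):
    """Return the average number of annotated persons per frame for each phenomenon."""
    return {name: persons / frames for name, (frames, persons) in counts.items()}


if __name__ == "__main__":
    densities = persons_per_frame(PHENOMENA)
    # Print the phenomena from most to least crowded.
    for name, density in sorted(densities.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:22s} {density:6.2f}")
```

With these counts, occlusion frames are the most crowded (15.25 persons per frame on average) and snow frames the sparsest (about 0.16 persons per frame).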

| # | Combinations | # | Combinations |
|---|---|---|---|
| 1. | Indoor | 9. | Indoor+heat effects+image artifacts |
| 2. | Indoor+viewpoint | 10. | Indoor+heat effects+weather effects |
| 3. | Indoor+heat effects | 11. | Indoor+image artifacts+weather effects |
| 4. | Indoor+image artifacts | 12. | Indoor+viewpoint+heat effects+image artifacts |
| 5. | Indoor+weather effects | 13. | Indoor+viewpoint+heat effects+weather effects |
| 6. | Indoor+viewpoint+heat effects | 14. | Indoor+heat effects+image artifacts+weather effects |
| 7. | Indoor+viewpoint+image artifacts | 15. | Indoor+viewpoint+image artifacts+weather effects |
| 8. | Indoor+viewpoint+weather effects | 16. | Indoor+viewpoint+heat effects+image artifacts+weather effects |
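The 16 training-set combinations above consist of the indoor baseline plus every subset of the four remaining categories (viewpoint, heat effects, image artifacts, weather effects), that is, 2^4 = 16 configurations. The short Python check below, in which `CATEGORIES`, `TABLE` and `all_subsets` are illustrative names, confirms that the listed rows cover each subset exactly once. It compares sets rather than sequences because `itertools.combinations` emits the three-category subsets in a slightly different order than rows 14 and 15.

```python
from itertools import combinations

# The four phenomenon categories that are added on top of the indoor baseline.
CATEGORIES = ("viewpoint", "heat effects", "image artifacts", "weather effects")

# Combinations 1-16 as listed in the table above (indoor data is always included).
TABLE = [
    (),
    ("viewpoint",),
    ("heat effects",),
    ("image artifacts",),
    ("weather effects",),
    ("viewpoint", "heat effects"),
    ("viewpoint", "image artifacts"),
    ("viewpoint", "weather effects"),
    ("heat effects", "image artifacts"),
    ("heat effects", "weather effects"),
    ("image artifacts", "weather effects"),
    ("viewpoint", "heat effects", "image artifacts"),
    ("viewpoint", "heat effects", "weather effects"),
    ("heat effects", "image artifacts", "weather effects"),
    ("viewpoint", "image artifacts", "weather effects"),
    ("viewpoint", "heat effects", "image artifacts", "weather effects"),
]


def all_subsets(items):
    """Return every subset of `items` (the power set), smallest subsets first."""
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


if __name__ == "__main__":
    listed = {frozenset(row) for row in TABLE}
    expected = set(all_subsets(CATEGORIES))
    print(len(TABLE), listed == expected)  # 16 True
```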

| Combination | Indoor | Viewpoint | Heat Effects | Image Artifacts | Weather Effects | F1 Score |
|---|---|---|---|---|---|---|
| 1 | X | | | | | 63.35 |
| 2 | X | X | | | | 81.52 |
| 3 | X | | X | | | 75.35 |
| 4 | X | | | X | | 78.39 |
| 5 | X | | | | X | 79.68 |
| 6 | X | X | X | | | 83.63 |
| 7 | X | X | | X | | 81.74 |
| 8 | X | X | | | X | 87.37 |
| 9 | X | | X | X | | 88.24 |
| 10 | X | | X | | X | 81.71 |
| 11 | X | | | X | X | 83.04 |
| 12 | X | X | X | X | | 89.74 |
| 13 | X | X | X | | X | 87.57 |
| 14 | X | | X | X | X | 87.34 |
| 15 | X | X | | X | X | 86.99 |
| 16 | X | X | X | X | X | 90.23 |
| Mean | 82.87 | 86.10 | 85.48 | 85.78 | 85.49 | |
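Each entry in the Mean row appears to be the average F1 score over the combinations whose training data include that category (all 16 for indoor, 8 for each of the other four). The Python sketch below recomputes these averages from the per-combination scores; `F1`, `ADDED` and `mean_f1_with` are illustrative names, and the category letters follow the column order of the table.

```python
# F1 scores (%) for training combinations 1-16, as reported in the table above.
F1 = [63.35, 81.52, 75.35, 78.39, 79.68, 83.63, 81.74, 87.37,
      88.24, 81.71, 83.04, 89.74, 87.57, 87.34, 86.99, 90.23]

# Categories each combination adds to the indoor data
# (V = viewpoint, H = heat effects, A = image artifacts, W = weather effects).
ADDED = ["", "V", "H", "A", "W", "VH", "VA", "VW",
         "HA", "HW", "AW", "VHA", "VHW", "HAW", "VAW", "VHAW"]


def mean_f1_with(category=None):
    """Mean F1 over combinations containing `category`.

    `None` averages over all 16 combinations, since indoor data is always present.
    """
    scores = [f1 for f1, added in zip(F1, ADDED) if category is None or category in added]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    for label, key in [("Indoor", None), ("Viewpoint", "V"), ("Heat effects", "H"),
                       ("Image artifacts", "A"), ("Weather effects", "W")]:
        print(f"{label:16s} {mean_f1_with(key):.2f}")
```

Recomputed this way, the indoor, viewpoint, heat-effects and weather-effects means match the Mean row (82.87, 86.10, 85.48 and 85.49), while image artifacts comes out at 85.71 rather than the reported 85.78.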

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huda, N.U.; Hansen, B.D.; Gade, R.; Moeslund, T.B. The Effect of a Diverse Dataset for Transfer Learning in Thermal Person Detection. Sensors 2020, 20, 1982. https://doi.org/10.3390/s20071982
