Abstract

We develop a deep learning refined kinematic model for accurately assessing upper limb joint angles using a single Kinect v2 sensor. We train a long short-term memory recurrent neural network using a supervised machine learning architecture to compensate for the systematic error of the Kinect kinematic model, taking a marker-based three-dimensional motion capture system (3DMC) as the gold standard. A series of upper limb functional task experiments were conducted, namely hand to the contralateral shoulder, hand to mouth or drinking, combing hair, and hand to back pocket. Our deep learning-based model significantly improves the performance of a single Kinect v2 sensor for all investigated upper limb joint angles across all functional tasks. Using a single Kinect v2 sensor, our deep learning-based model could measure shoulder and elbow flexion/extension waveforms with mean CMCs >0.93 for all tasks, and shoulder adduction/abduction and internal/external rotation waveforms with mean CMCs >0.8 for most of the tasks. The mean deviations of angles at the point of target achieved and range of motion are under 5° for all investigated joint angles during all functional tasks. Compared with the 3DMC, our presented system is easier to operate and needs less laboratory space.

1. Introduction

Three-dimensional (3D) kinematic analysis of upper limb functional movement has been widely conducted in many areas. Upper limb kinematic analysis has been employed both in theoretical studies, such as the underlying theory of the neuromusculoskeletal system [1,2,3], and in practical applications such as the clinical assessment of motion functions, rehabilitation training [4], and ergonomics studies [5,6]. Marker-based 3D motion capture systems (3DMC) [7] have been widely employed in quantitative measurements of upper limb functional tasks. In such a system, 3D motion data is obtained based on passive or active markers attached to the anatomical landmarks of participants. These marker-based systems have been confirmed to be valid and reliable in assessing upper limb kinematics [3,8]. However, these systems are not practical for small clinics or home-based assessment, given the expensive hardware, the time-consuming experimental procedures, and the strict requirements for lab space and trained technicians.

Markerless motion capture systems could be a possible alternative for upper limb functional assessment [9], especially after the introduction of a commercially available, low-cost, and portable device named Kinect (Microsoft, Redmond, WA, USA). The second iteration of the Kinect (denoted as Kinect v2) is capable of tracking real-time 3D motions with its depth image sensor [10] and its human pose estimation algorithm [11]. The Kinect SDK v2.0 features skeletal tracking with 3D locations of 25 joints for each skeleton [12]. Kinect v2 has been employed in gait analysis [13,14,15], balance and postural assessment [16,17], foot position tracking [18], gait rehabilitation training [19,20], and upper limb functional assessment or rehabilitation training [4,21,22,23,24,25].

Several studies have assessed the agreement between the Kinect sensor and 3DMC. The Kinect sensor demonstrated good reliability in assessing temporal-spatial parameters such as timing, velocity, or movement distance of functional tasks for both healthy subjects and people with physical disorders [4,13,21,22,26,27]. Kinect also has considerably good reliability in kinematic assessment, such as upper limb joint angle trajectories [22,28] and the respective ranges of motion [28], trunk flexion angles during standing and dynamic balance tests [16], trunk, hip, and knee kinematics during squatting and jumping tasks [29], and foot postural index assessment [30].

The Kinect sensor has been employed in various rehabilitation scenarios for people with motor disabilities [23,25]. A Kinect-based rehabilitation system improved exercise performance for adults with motor impairment during the intervention phase [23]. An RGBD-based interactive system using the Kinect sensor provided a gamified interface designed to replace physiotherapists in the supervision of upper limb exercises. The interactive system is able to provide real-time feedback and to create interactive, simple-to-use, and fun environments for patients. The system improves the engagement of the participants and the effectiveness of the exercises [25].

Nevertheless, Kinect is limited by its inherent inaccuracy. Although the system has good accuracy in measuring temporal-spatial parameters such as the timing of movements [4], velocity or distance during movement [26], and gross spatial characteristics of clinically relevant movements such as vertical oscillation during treadmill running [31], it lacks sufficient accuracy for small movements, such as hand clasping [4].

The Kinect system captures RGBD data with a time-of-flight sensor [10]. Such RGBD data is a 2D image with depth information for each pixel, which is not a complete 3D model. Intuitively speaking, the RGBD data can be seen as a “relief”, or a “2.5D model”. Skeletons calculated from such RGBD data suffer from certain systematic errors due to the inaccurate depth measurement. The kinematic measurement accuracy of the Kinect system is highly task-dependent and plane-dependent [28]. Due to the “relief” feature, the Kinect system measures joint angles more accurately on the sagittal and coronal planes than on the transverse plane [28,32,33].

The performance of the Kinect system is highly influenced by the occlusion of body parts. The Kinect camera cannot directly assess the necessary anatomical joint centers if another body part lies between the segment and the camera. The Kinect system is mostly placed in front of the human subject. In this scenario, assessing upper limb functional tasks is more challenging for the Kinect system than conducting gait analysis, due to the high probability of upper limb occlusion when performing upper body activities such as drinking or combing hair [34].

In comparison with the joint angles measured with 3DMC systems, the angles calculated via the Kinect system generally have unacceptable accuracy for clinical assessment. The performance of the Kinect system is normally reported as the root-mean-squared error (RMSE) between a parameter measured via the Kinect system and the same parameter measured via the 3DMC system. The RMSE of the Kinect-based system is around 10° in measuring shoulder flexion/extension, 10° to 15° in measuring adduction/abduction, and approximately 15° to 30° in measuring shoulder axial rotation during a computer-use task [33]. During multiple reaching tasks and tasks from the Unified Parkinson’s Disease Rating Scale, the mean biases between the Kinect and 3DMC systems for shoulder flexion/extension, shoulder adduction/abduction and elbow flexion/extension angles are 10.44°, 8.68° and 16.93° for healthy subjects and 17.07°, 10.26°, and 21.66° for patients with Parkinson’s disease [4]. A study of five gross upper body exercises from the GRASP manual for stroke rehabilitation [35] revealed that the Kinect v2 is mostly inadequate for correctly assessing shoulder joint kinematics during stroke rehabilitation exercises. The movements of the shoulder joints are used as indicators for incorrect limb movements. Jitter and tracking were unacceptable when the depth data surrounding the joint were partially or completely occluded.

Various studies have been conducted to improve the accuracy of Kinect v2 in kinematic measurement. One type of solution is model fitting. Xu et al. [33] employed a linear regression algorithm between each Kinect-based shoulder joint angle and its 3DMC counterpart. Given the nonlinear relationship between the upper limb joint angle trajectories calculated via Kinect and the 3DMC system, linear regression has limited ability to improve the kinematic measurement accuracy. Only shoulder adduction/abduction angles are significantly improved after applying linear regression, and the RMSEs between the Kinect sensor and the 3DMC system are around 8.1° and 10.1° for the right and left shoulders, respectively. Kim et al. [36] proposed a post-processing method, which is a combination of two deep recurrent neural networks (RNN) and a classic Kalman filter, to correct unnatural tracking movements. This post-processing method only improves the naturalness of the captured joint trajectories; its accuracy remains insufficient for clinical assessment.

Another type of solution is applying marker-tracking technology with the Kinect system. Timmi et al. [37] developed a novel tracking method using Kinect v2 by employing custom-made colored markers and computer vision techniques. The markers, with diameters of 38 mm, were painted using matte acrylic paints. Magenta, green, and blue paints were chosen for the hip, knee, and ankle joint markers, respectively. The centers of the three markers should be placed on a straight line. They evaluated the method by comparing lower limb kinematics over a range of gait speeds and found generally good results. However, the practical use of this kind of system appears limited due to two factors: (1) the marker-tracking Kinect system cannot solve the occlusion issue when performing upper limb functional tasks; (2) the introduction of markers into the Kinect system brings reliability issues from incorrect marker placement and complicated experimental calibration procedures. Thus, the method is unlikely to provide significant benefits over the skeleton tracker algorithm [34].

Using multi-Kinect and fusion systems might be another solution to improve the assessment accuracy of the Kinect system, as it can reduce body occlusion and extend the field of view. However, these systems show apparent limitations: (1) it is difficult to set up and calibrate multiple depth cameras; (2) one Kinect sensor is likely to be affected by another Kinect sensor. Consequently, the evidence for improved accuracy is not strong [38].

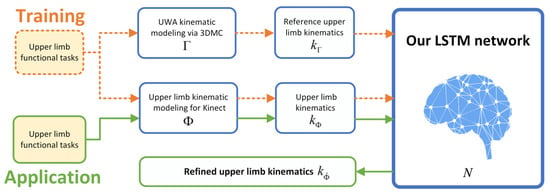

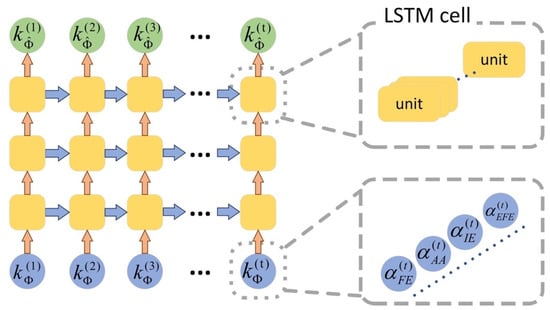

Given the pros and cons of the existing algorithms discussed above, we develop a novel deep learning refined kinematic model using a single Kinect v2 sensor for accurately assessing 3D upper limb joint angles. We form a kinematic model to calculate upper limb joint angles from Kinect. For a specific task, we construct a deep neural network to compensate for the systematic error in those joint angles. The neural network is trained using joint angles from the Kinect sensor as the input and their 3DMC counterparts as the target. For the 3DMC, a UWA kinematic model [39] is used to calculate 3D upper limb kinematics based on the 3D positions of reflective markers attached to the subjects. A deep neural network is a favorable tool for non-linear fitting, especially when the shape of the underlying model is unknown [40]. The recurrent neural network (RNN) architecture is designed specifically for time series data, which suits our joint angle data very well. Long short-term memory (LSTM) is a cutting-edge RNN architecture [41]. We employ a three-layer LSTM network in our method. See Figure 1 for a brief pipeline of our method.

Figure 1.

The architecture of our deep learning refined kinematic model for Kinect v2.

A series of upper limb functional task experiments were conducted to evaluate the effectiveness of our developed deep learning-based model. The tasks represent a variety of active daily functional activities [42]. The hand to contralateral shoulder task represents activities such as washing the axilla or zipping up a jacket. The hand to mouth task represents eating or reaching the face. The combing hair task represents washing/combing hair or reaching the back of the head. The hand to back pocket task represents reaching the back and perineal care.

The 3D positions of the reflective markers of the UWA marker set are recorded using a 3DMC system. The joint centers extracted from the Kinect skeleton are recorded with a single Kinect v2 sensor. We use a leave-one-subject-out cross-validation protocol to evaluate the performance of our deep learning refined kinematic model. The coefficient of multiple correlation (CMC) and root-mean-squared error (RMSE) are used to evaluate the performance of the deep learning refined kinematic model in assessing upper limb angular waveforms in comparison with the kinematic model for the Kinect sensor. Range of motion (ROM) and angles at the point of target achieved (PTA) are extracted to represent key kinematic parameters. ROM and PTA from both our deep learning refined kinematic model and the kinematic model for the Kinect sensor are statistically compared with those from the 3DMC system. The absolute error and Bland-Altman plots are analyzed for the ROM and PTA from the deep learning refined kinematic model as well as the kinematic model for the Kinect sensor, in comparison with those from the 3DMC system.

Our deep learning refined kinematic model significantly improves the performance of upper limb kinematic assessment using a single Kinect v2 sensor for all investigated upper limb joint angles across all functional tasks. At the same time, such an assessment system is also easy to calibrate and operate. The requirements for laboratory space and expertise are easy to fulfil for a single Kinect-based system. The system has great potential to be an alternative to the 3DMC system and to be widely used in clinics or other organizations that lack funding, expertise, or lab space.

2. Methods

We denote the kinematic model for Kinect by and the UWA kinematic model for a 3DMC system by . The deep learning refined kinematic model for Kinect v2 is denoted by , which is a combination of the model and the trained neural network N. The upper limb kinematics calculated by model and are defined as and , respectively. We train a long short-term memory (LSTM) recurrent neural network (RNN) N using a supervised machine learning architecture to compensate for the systematic error of . During the training session, and are taken as the input data and the target data, respectively. In the application stage, is given as the input of N, and output is our refined upper limb kinematics (defined as ). See Figure 1 for a simple demonstration.

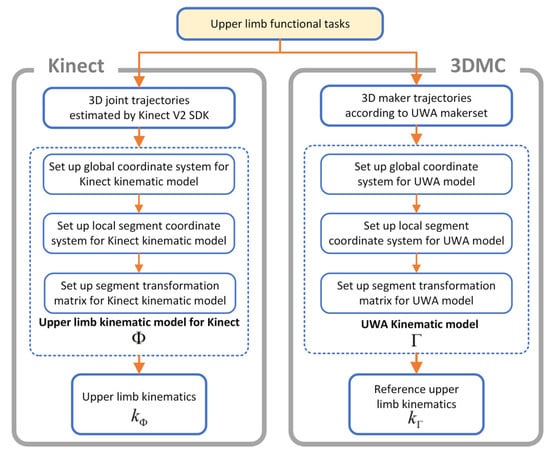

The UWA kinematic modeling for the 3DMC system and the upper limb kinematic modeling for the Kinect v2 system follow the procedures demonstrated in Figure 2. A standard 3D kinematic modeling procedure [43] includes four steps, namely setting up a global coordinate system, setting up local segment coordinate systems, calculating the transformation matrix for each investigated segment, and calculating the upper limb kinematics. The 3DMC system captures 3D marker trajectories and the Kinect v2 sensor records 3D joint trajectories of a participant concurrently during upper limb functional tasks.

Figure 2.

The kinematic models of the Kinect v2 system and the 3D motion capture system.

2.1. Upper Limb Kinematic Modeling for Kinect v2

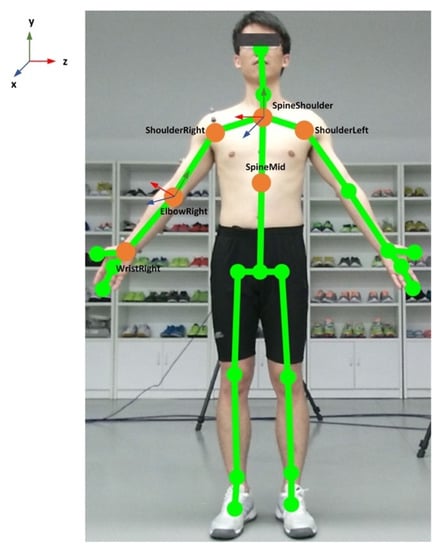

The 3D coordinates of the anatomical landmarks identified from the skeletal model of the Kinect v2 system (see Figure 3) during functional tasks are recorded concurrently with the 3DMC system. Local segment coordinates, including Thorax and Upper Arm , are established. Each segment is defined in the global coordinate system.

Figure 3.

Illustration of the Kinect skeleton joints and the anatomical coordinate system.

The origin of the thorax segment is defined by SpineShoulder (SS). The y-axis of the thorax segment is defined by the unit vector going from SpineMid (SM) to SS (Equation (1)). The z-axis of the thorax segment is defined by the unit vector perpendicular to the y-axis and the vector from ShoulderLeft (SL) to ShoulderRight (SR) (Equation (2)). The x-axis of the thorax segment is defined by the z and y-axes to create a right-handed coordinate system (Equation (3)). The coordinate system of the thorax segment is then constructed from the x, y and z-axes (Equation (4)).

The origin of the right upper arm segment is the right elbow joint center ElbowRight (ER). The y-axis of the right upper arm segment is defined by the unit vector going from the elbow joint center to the shoulder joint center, ShoulderRight (SR) (Equation (5)). The z-axis of the right upper arm segment is defined by the unit vector perpendicular to the plane formed by the y-axis of the upper arm and the long axis vector of the forearm, pointing laterally (Equation (6)). The x-axis of the right upper arm segment is defined by the unit vector perpendicular to the z and y-axes, pointing anteriorly (Equation (7)). The coordinate system of the upper arm segment is then constructed from the x, y and z-axes of the segment (Equation (8)).

Then our customized upper limb kinematics model for the Kinect v2 system calculates the three Euler angles () for shoulder rotations, which follow the flexion (+)/extension (−), adduction (+)/abduction (−) and internal (+)/external (−) rotation order. The rotation matrix is obtained via the parent coordinate system (Equation (4)) and the child coordinate system (Equation (8)). Shoulder flexion/extension , adduction/abduction , internal/external rotation angles are calculated by solving the multivariable equations in Equation (9).
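To illustrate how three shoulder angles can be recovered from two segment frames, the following sketch forms the relative rotation matrix and decomposes it with a z-x-y sequence. It assumes that each frame is stored as a 3×3 matrix whose columns are the x, y and z unit axes in global coordinates, that flexion is the rotation about z, adduction about x and axial rotation about y, and that signs follow the right-hand rule. The function names are hypothetical, and the sign conventions of Equation (9) may differ.

```python
import numpy as np

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def relative_rotation(parent, child):
    """Orientation of the child frame expressed in the parent frame."""
    return parent.T @ child

def zxy_angles(R):
    """Decompose R = Rz(flex) @ Rx(add) @ Ry(rot); valid while |add| < 90 deg."""
    add = np.arcsin(np.clip(R[2, 1], -1.0, 1.0))
    flex = np.arctan2(-R[0, 1], R[1, 1])
    rot = np.arctan2(-R[2, 0], R[2, 2])
    return np.degrees([flex, add, rot])

# Round trip with made-up angles (assumed values, for illustration only).
thorax = rot_y(np.radians(10.0))            # arbitrary parent frame
true_deg = np.array([40.0, -15.0, 25.0])    # flexion, adduction, rotation
f, a, r = np.radians(true_deg)
upper_arm = thorax @ rot_z(f) @ rot_x(a) @ rot_y(r)
recovered = zxy_angles(relative_rotation(thorax, upper_arm))
```

The decomposition becomes ill-conditioned as the second angle approaches ±90°, which is the usual gimbal-lock limitation of any three-angle sequence.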

The elbow flexion/extension angle is calculated from the position data of ShoulderRight (SR), ElbowRight (ER), and WristRight (WR) using the trigonometric function (Equations (10) and (11)). In Equation (10), is the unit vector going from the elbow joint center to the wrist joint center. The upper limb kinematics via the Kinect-based system is formed by the shoulder and elbow joint angles (Equation (11)). The kinematics model for Kinect v2 was developed using MATLAB 2019a.
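A minimal sketch of an elbow angle computed from three joint centers is given below. It assumes that flexion is reported as 180° minus the included angle between the elbow-to-shoulder and elbow-to-wrist unit vectors, so that a straight arm reads 0°. This zero convention and the function names are assumptions, not taken from Equation (10).

```python
import numpy as np

def unit(v):
    """Return v scaled to unit length."""
    return v / np.linalg.norm(v)

def elbow_flexion_deg(shoulder, elbow, wrist):
    """Elbow flexion from three joint centers; 0 deg is assumed to be a straight arm."""
    upper_arm = unit(shoulder - elbow)   # elbow -> shoulder
    forearm = unit(wrist - elbow)        # elbow -> wrist
    cos_included = np.clip(upper_arm @ forearm, -1.0, 1.0)
    included = np.degrees(np.arccos(cos_included))
    return 180.0 - included

# Made-up joint positions in meters (illustrative only).
shoulder = np.array([0.0, 0.30, 0.0])
elbow = np.array([0.0, 0.0, 0.0])
wrist_straight = np.array([0.0, -0.25, 0.0])
wrist_bent = np.array([0.25, 0.0, 0.0])

straight = elbow_flexion_deg(shoulder, elbow, wrist_straight)
bent = elbow_flexion_deg(shoulder, elbow, wrist_bent)
```

Clipping the dot product guards against values marginally outside [−1, 1] caused by floating-point rounding.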

The angular waveforms from the Kinect v2 sensor and the Vicon system are synchronized during post-processing. The joint angles from both systems are first resampled to 300 Hz using the MATLAB function “interp” and then synchronized using a cross-correlation-based shift synchronization technique.
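The two-step procedure can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: linear interpolation with `np.interp` is swapped in for MATLAB's `interp`, and the shift is chosen as the lag that maximizes the Pearson correlation over the overlapping samples within a hypothetical search window `max_lag`.

```python
import numpy as np

def resample_linear(x, fs_in, fs_out):
    """Resample a uniformly sampled signal by linear interpolation."""
    t_in = np.arange(len(x)) / fs_in
    n_out = int(round(t_in[-1] * fs_out)) + 1
    t_out = np.arange(n_out) / fs_out
    return np.interp(t_out, t_in, x)

def best_lag(a, b, max_lag):
    """Samples by which a lags b (negative if a leads), by normalized cross-correlation."""
    best, best_r = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        x, y = (a[lag:], b) if lag >= 0 else (a, b[-lag:])
        n = min(len(x), len(y))
        r = np.corrcoef(x[:n], y[:n])[0, 1]
        if r > best_r:
            best, best_r = lag, r
    return best

def align(a, b, lag):
    """Drop the leading samples of the lagging signal and trim to equal length."""
    x, y = (a[lag:], b) if lag >= 0 else (a, b[-lag:])
    n = min(len(x), len(y))
    return x[:n], y[:n]

# Synthetic example: the same bell-shaped movement seen 0.1 s later by the 30 Hz device.
FS = 300.0
t_k = np.arange(0.0, 4.0, 1.0 / 30.0)
t_v = np.arange(0.0, 4.0, 1.0 / 100.0)
kinect = resample_linear(60.0 * np.exp(-((t_k - 2.1) / 0.4) ** 2), 30.0, FS)
vicon = resample_linear(60.0 * np.exp(-((t_v - 2.0) / 0.4) ** 2), 100.0, FS)

lag = best_lag(kinect, vicon, max_lag=150)
kinect_sync, vicon_sync = align(kinect, vicon, lag)
```

Normalizing the correlation over the overlap avoids the bias toward zero lag that a zero-padded, mean-subtracted cross-correlation can show for short recordings.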

2.2. UWA Kinematic Modeling via 3D Motion Capture System

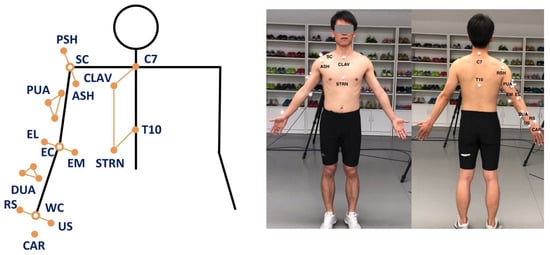

The UWA kinematic model for the reference 3DMC system (in this paper we use Vicon, Oxford Metrics Group, Oxford, UK) is based on the 3D trajectories of the reflective markers attached to the anatomical landmarks of each subject according to the UWA upper limb marker set [44]. The UWA marker set includes the seventh cervical vertebra (C7), 10th thoracic vertebra (T10), sternoclavicular notch (CLAV), xiphoid process of the sternum (STRN), posterior shoulder (PSH), anterior shoulder (ASH), elbow medial epicondyle (EM), elbow lateral epicondyle (EL), most caudal-lateral point on the radial styloid (RS), caudal-medial point on the ulnar styloid (US), a triad of markers affixed to the upper arm (PUA), a triad of markers affixed to the forearm (DUA) and the metacarpal (CAR) (see Figure 4 for the detailed marker setting). The PUA and DUA are positioned in areas that are not largely influenced by soft tissue artifact, according to Campbell et al. [45,46]. The medial and lateral elbow epicondyle markers are removed for the dynamic functional tasks.

Figure 4.

Marker set for the 3DMC system. Left: Arrangement of the UWA upper limb marker set. Right: A participant with the attached markers.

A biomechanical model is employed based on the UWA upper limb marker set [39,44]. The coordinates of each marker at each sample point in the global coordinate system are recorded and represented by a three-dimensional vector (x, y, z). Four rigid body segments, namely Thorax, Torso, Upper Arm, and Forearm, are defined based on the anatomical landmark positions following the recommendations of the International Society of Biomechanics (ISB) [47]. In the following equations, body segment Thorax, Torso, Upper Arm and Forearm are defined as , , , and , respectively. The origin of a segment is denoted by o. The axes of each coordinate system are denoted by x, y and z.

The origin of the thorax segment is defined as the midpoint between C7 and CLAV. The origin of the torso segment is defined as the midpoint of T10 and STRN. The y-axis of the thorax coordinate system is defined by the unit vector going from the midpoint of T10 and STRN to the midpoint of C7 and CLAV, pointing upwards. The z-axis of the thorax coordinate system is defined by the unit vector perpendicular to the plane defined by T10, C7 and CLAV, pointing laterally. The x-axis of the thorax coordinate system is defined by the unit vector perpendicular to the plane defined by the y-axis and z-axis to create a right-handed coordinate system. The coordinate system of the thorax segment is then constructed from its x, y, and z-axes.

The origin of the right upper arm segment is defined by the elbow joint center E, which is the midpoint between EL and EM. The y-axis of the right upper arm segment is defined by the unit vector going from the elbow joint center E to shoulder joint center S, which is the center of PSH, ASH and ACR. The z-axis of the right upper arm segment is defined as the unit vector perpendicular to the plane formed by the y-axis of the upper arm and the long axis vector of the forearm. The x-axis is defined by the y-axis and the z-axis of the right upper arm segment to create a right-hand coordinate system. The coordinate system of the upper arm segment is then constructed by x, y and z-axis of the segment.

The origin of the right forearm segment coordinate system is defined by the wrist joint center W, which is the midpoint between RS and US. The y-axis of the right forearm segment coordinate system is defined by the unit vector from the wrist joint center W to the elbow joint center E, pointing upwards. The x-axis of the right forearm segment coordinate system is defined by the unit vector perpendicular to the plane formed by the y-axis and the vector from US to RS, pointing anteriorly. The z-axis is defined by the unit vector perpendicular to the x and y-axes of the right forearm segment, pointing laterally. The coordinate system of the forearm segment is then constructed from the x, y and z-axes of the segment.

The calibrated anatomical systems technique [48] is used to establish the motion of anatomical landmarks relative to the coordinate systems of the upper arm cluster (PUA) or the forearm cluster (DUA). The motion of the upper-limb landmarks could be reconstructed from their constant relative positions to the upper-arm technical coordinate system. For each sampling time frame, the coordinates of each segment with respect to its proximal segment are transformed by a sequence of three rotations following z-x-y order.

The rotation matrix and are obtained via their respective parent coordinate system and child coordinate system. Shoulder flexion (+)/extension (−) , adduction (+)/abduction (−) , internal (+)/external rotation (−) , elbow flexion (+)/extension (−) , varus (+)/valgus (−) , internal/external rotation angles are calculated by solving the multivariable equations.

The UWA upper limb kinematic model is developed using the Vicon Bodybuilder software (Oxford Metrics Group). The reference shoulder angles and elbow flexion/extension angle are used as a gold standard to train our deep learning refined model for the Kinect v2 system. We use a fourth-order zero-lag Butterworth low-pass filter with a cut-off frequency of 6 Hz for the UWA model as well as the kinematic model for Kinect . The cut-off frequency follows the recommendation from the literature and was determined by residual analysis for the upper limb tasks [49].
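A zero-lag low-pass filter of this kind can be sketched with SciPy as follows. The sketch assumes that "fourth-order zero-lag" means a second-order Butterworth filter applied forward and backward, which doubles the effective order and cancels the phase shift. The sampling rate passed in and the function name are illustrative assumptions.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def lowpass_zero_lag(x, fs, cutoff_hz=6.0, order_per_pass=2):
    """Forward-backward Butterworth low-pass; effective order is 2 * order_per_pass."""
    b, a = butter(order_per_pass, cutoff_hz / (fs / 2.0), btype="low")
    return filtfilt(b, a, x)

# Synthetic joint angle: a slow 1 Hz movement plus 40 Hz jitter, sampled at 100 Hz.
fs = 100.0
t = np.arange(0.0, 5.0, 1.0 / fs)
clean = 30.0 * np.sin(2.0 * np.pi * 1.0 * t)
noisy = clean + 2.0 * np.sin(2.0 * np.pi * 40.0 * t)
smoothed = lowpass_zero_lag(noisy, fs)

# Largest deviation from the clean signal away from the edges, in degrees.
interior_error = np.max(np.abs(smoothed - clean)[100:-100])
```

Because `filtfilt` runs the filter in both directions, the smoothed waveform stays time-aligned with the input, which matters when waveforms from two systems are compared sample by sample.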

2.3. Long Short-Term Memory Neural Network

We construct a recurrent neural network [41] N to refine the upper limb kinematics calculated by the kinematic model for Kinect v2 (see Section 2.1). In order to reduce the systematic error of , the kinematics calculated by the UWA model for the 3DMC system (see Section 2.2) is taken as a target. To adapt the neural network, we assume that and are normalized into the range [0,1].
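The text does not say how the normalization into [0,1] is performed. One plausible choice, shown here purely as an assumption, is per-angle min-max scaling with limits taken from the training data only, so that the refined output can be mapped back to degrees afterwards. The array layout (trials × time steps × angles) and the function names are hypothetical.

```python
import numpy as np

def fit_minmax(train):
    """Per-angle minimum and maximum over all training trials and time steps."""
    flat = train.reshape(-1, train.shape[-1])
    return flat.min(axis=0), flat.max(axis=0)

def normalize(x, lo, hi):
    """Map angles in degrees to [0, 1] using the fitted limits."""
    return (x - lo) / (hi - lo)

def denormalize(x01, lo, hi):
    """Map network outputs in [0, 1] back to degrees."""
    return x01 * (hi - lo) + lo

# Made-up training set: 12 trials, 101 time steps, 4 joint angles in degrees.
rng = np.random.default_rng(0)
train_deg = rng.uniform(-90.0, 150.0, size=(12, 101, 4))
lo, hi = fit_minmax(train_deg)
train_01 = normalize(train_deg, lo, hi)
restored = denormalize(train_01, lo, hi)
```

Angles of a left-out subject that fall outside the training limits would map slightly outside [0, 1] under this scheme.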

As shown in Figure 5, our neural network is formed by three long short-term memory (LSTM) layers. The input of our model is a 101-time-step sequence (). The unit of each time-step is a 4-dimensional vector. We use, empirically, 100 neural units in each LSTM cell. The output of the model is also a 101-time-step sequence with 4-dimensional vectors.

Figure 5.

Architecture of our LSTM neural network for upper limb kinematics refinement.

To train this model, we let be the input of the model. The output is denoted by . We calculate the mean square error between and as the loss of the model, and then employ the Adam method for optimization [50]. The network is trained with a batch size of 20 and a learning rate of 0.006 for 200 epochs. In application, the upper limb kinematics calculated by Kinect v2 is taken as the input of the neural network. The output of the neural network, namely , is our refined upper limb kinematics.
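The data flow through such a network can be sketched in plain NumPy. The sketch below runs a forward pass of three stacked LSTM layers with 100 units each over a 101-step, 4-dimensional sequence and evaluates the mean square error against a target. The weights are random and untrained, and the gate ordering, the initialization and the final linear read-out that maps 100 hidden units to 4 angles are all assumptions. Training with Adam (batch size 20, learning rate 0.006, 200 epochs) would normally be delegated to a deep learning framework and is not shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_lstm(rng, n_in, n_hidden):
    """Random weights for one LSTM layer; gates are stacked as [input, forget, cell, output]."""
    scale = 1.0 / np.sqrt(n_hidden)
    return {
        "W": rng.uniform(-scale, scale, (4 * n_hidden, n_in)),
        "U": rng.uniform(-scale, scale, (4 * n_hidden, n_hidden)),
        "b": np.zeros(4 * n_hidden),
    }

def lstm_layer(params, seq):
    """Run one LSTM layer over seq of shape (T, n_in); return hidden states (T, n_hidden)."""
    n_hidden = params["U"].shape[1]
    h = np.zeros(n_hidden)
    c = np.zeros(n_hidden)
    out = np.empty((seq.shape[0], n_hidden))
    for t, x in enumerate(seq):
        z = params["W"] @ x + params["U"] @ h + params["b"]
        i, f, g, o = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        out[t] = h
    return out

def forward(layers, readout, seq):
    """Stacked LSTM layers followed by a per-time-step linear read-out (assumed)."""
    for params in layers:
        seq = lstm_layer(params, seq)
    w_out, b_out = readout
    return seq @ w_out.T + b_out

N_STEPS, N_ANGLES, N_UNITS = 101, 4, 100
rng = np.random.default_rng(0)
layers = [init_lstm(rng, N_ANGLES, N_UNITS),
          init_lstm(rng, N_UNITS, N_UNITS),
          init_lstm(rng, N_UNITS, N_UNITS)]
readout = (rng.uniform(-0.1, 0.1, (N_ANGLES, N_UNITS)), np.zeros(N_ANGLES))

kinect_angles = rng.uniform(0.0, 1.0, (N_STEPS, N_ANGLES))     # normalized input (made up)
reference_angles = rng.uniform(0.0, 1.0, (N_STEPS, N_ANGLES))  # normalized target (made up)

refined = forward(layers, readout, kinect_angles)
mse_loss = float(np.mean((refined - reference_angles) ** 2))
```

The point of the sketch is the shape bookkeeping: every layer preserves the 101 time steps, so the refined output has the same layout as the input and can be compared with the reference step by step.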

3. Evaluation

3.1. Subjects

We recruited thirteen healthy male university students (age: 25.3 ± 2.5 years; height: 173.2 ± 4.1 cm; mass: 69.1 ± 6.5 kg). The participants had no upper limb neuromusculoskeletal problems and used no medication that would affect their upper limb functions. The participants were informed about the basic procedure of the experiment before the test. The experimental protocol was approved by the Research Academy of Grand Health’s Ethics Committee at Ningbo University and all participants provided written informed consent.

3.2. Experiment Protocol

We used a concurrent validity design to evaluate our deep learning based upper limb functional assessment system using the Kinect v2 sensor. The 3D anatomical position of the upper limb (take the right side as an example) and trunk were recorded concurrently by a Kinect v2 sensor and a 3DMC system with eight high-speed infrared cameras (Vicon, Oxford Metrics Ltd., Oxford, UK). The Kinect v2 sensor and the 3DMC recorded the position of anatomical landmarks with sampling frequencies of around 30 Hz and 100 Hz, respectively. The Kinect sensor was placed on a tripod, 0.8 meters above the ground, and 2 meters in front of the subject [51].

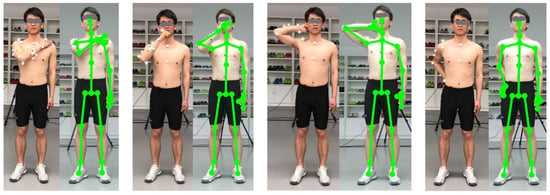

Optical reflective markers were attached to the anatomical landmarks of each individual following the instructions of the UWA upper limb marker set [39]. A static trial was recorded first, during which the participant stood in the anatomical position. The elbow and wrist markers were removed during dynamic trials. Four functional tasks were performed, as shown in Figure 6. They represent a variety of active daily functional activities [42] and are important for independent living [52]. The tasks were selected based on previous studies [42,52,53,54,55] after extensive consultation with clinicians. These tasks are also used in assessment scales such as the Mallet score, which is commonly used for the evaluation of shoulder function [56].

Figure 6.

Four upper limb functional tasks evaluated in our study. Left: Hand to the contralateral shoulder. Middle-left: Hand to mouth or drinking. Middle-right: combing hair. Right: Hand to back pocket.

Task 1: Hand to the contralateral shoulder, which represents all activities near the contralateral shoulder such as washing the axilla or zipping up a jacket. Subjects started with the arm in the anatomical position, with their hand hanging beside their body in a relaxed neutral position, and ended with the hand touching the contralateral shoulder (see Figure 6, left);

Task 2: Hand to mouth or drinking, which represents activities such as eating and reaching the face. It begins from the same starting point and ends when the hand reaches the subject’s mouth (see Figure 6, middle-left);

Task 3: Combing hair, which represents activities such as reaching the (back of the) head and washing hair. Subjects were instructed to move their hand to the back of their head (see Figure 6, middle-right);

Task 4: Hand to back pocket, which represents reaching the back and perineal care. It begins from the same starting point and ends when the hand is placed on the back pocket (see Figure 6, right).

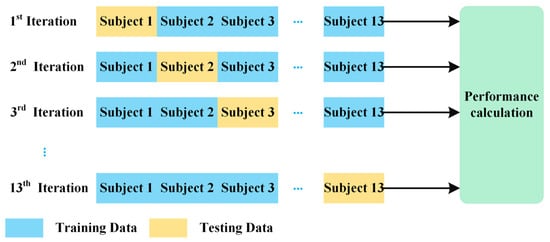

3.3. Leave One Subject Out Cross-Validation

We first calculate upper limb kinematics and using the upper limb kinematic model for the Kinect v2 system and the UWA kinematic model for the reference 3DMC system, respectively. For all four functional tasks, the joint angles are resampled to 101 time steps. Joint angles are represented as 0–100% across the time domain, with 0% being the start and 100% being the end of the movement. Next, we use a leave one subject out cross-validation (LOOCV) (see Figure 7) to evaluate the performance of our proposed deep learning refined upper limb functional assessment model using the Kinect v2 sensor.
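Time normalization to 101 points can be sketched as below. The interpolation method is not stated in the text, so linear interpolation is assumed here, and the array layout (frames × angles) and the function name are hypothetical.

```python
import numpy as np

def time_normalize(angles, n_points=101):
    """Map a (n_frames, n_angles) trial onto a 0-100 % axis with n_points samples."""
    src = np.linspace(0.0, 100.0, angles.shape[0])
    dst = np.linspace(0.0, 100.0, n_points)
    columns = [np.interp(dst, src, angles[:, j]) for j in range(angles.shape[1])]
    return np.column_stack(columns)

# Made-up trial: 257 frames of 4 joint angles in degrees.
rng = np.random.default_rng(1)
trial = np.cumsum(rng.normal(0.0, 0.5, size=(257, 4)), axis=0)
normalized = time_normalize(trial)
```

After this step every trial has the same length regardless of how long the movement took, which is what allows trials to be stacked into fixed-size arrays for the network.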

Figure 7.

The protocol of the leave one subject out cross validation (LOOCV).

Using the LOOCV protocol, the kinematic data and are partitioned into training data and test data. Assuming that we have n subjects, the validation process iterates n times. For each iteration, the kinematic data of the left-out subject is set as the testing data and the kinematics of the remaining subjects is set as the training data. The testing data include one 3D matrix, which contains the shoulder and elbow joint angles of the left-out subject calculated via the kinematic model for Kinect v2 system . The training data from the remaining subjects consist of two 3D matrices: the upper limb joint angles calculated via model , regarded as the input data of the deep learning refined kinematic model for Kinect v2, and the reference UWA kinematic model for the 3DMC system, regarded as the target data of the model . Our deep learning refined kinematic model explores the nonlinear relationship between the upper limb kinematics via the kinematics model for Kinect and those angles via the UWA model using the 3DMC system . Such a model can reduce the systematic error of the Kinect system.
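The partitioning can be sketched as a small generator that yields, for each subject, the indices of the held-out trials and of the remaining training trials. The number of trials per subject (5 here) and the array names are hypothetical; only the 13 subjects and the 101 × 4 trial shape come from the text.

```python
import numpy as np

def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        test_idx = np.flatnonzero(subject_ids == held_out)
        train_idx = np.flatnonzero(subject_ids != held_out)
        yield held_out, train_idx, test_idx

# Hypothetical layout: 13 subjects x 5 trials, each trial 101 time steps x 4 angles.
n_subjects, trials_per_subject = 13, 5
subject_ids = np.repeat(np.arange(n_subjects), trials_per_subject)
kinect = np.zeros((len(subject_ids), 101, 4))   # network inputs (placeholder values)
reference = np.zeros_like(kinect)               # 3DMC targets (placeholder values)

folds = list(leave_one_subject_out(subject_ids))
held_out, train_idx, test_idx = folds[0]
x_train, y_train = kinect[train_idx], reference[train_idx]
x_test = kinect[test_idx]
```

Splitting by subject rather than by trial keeps every trial of the test subject out of training, so the reported error reflects performance on an unseen person.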

3.4. Performance Evaluation and Statistical Analysis of the Deep Learning Refined Kinematic Model

The performance of our developed model is evaluated based on the test data, using the upper limb kinematics calculated via the model for the 3DMC system as the ground truth. The coefficient of multiple correlation (CMC) values and root mean squared errors (RMSE) are calculated between upper limb kinematic waveforms and as well as between and in each application session.

The CMC values are used to evaluate the similarity and repeatability of the upper limb joint angle trajectories between and the as well as the similarities between and . The CMCs are calculated following Kadaba’s approach [57]. The CMC values are interpreted as excellent similarity (0.95–1), very good similarity (0.85–0.94), good similarity (0.75–0.84), moderate similarity (0.6–0.74) and poor similarity (0–0.59) [58]. The RMSE values are employed to evaluate mean errors between the upper limb angle waveforms and the as well as errors between and across all functional tasks.
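Both waveform metrics are short to compute. The sketch below uses a commonly cited form of Kadaba's CMC, in which the variance about the frame-wise mean waveform is compared with the variance about the grand mean. Whether the authors used exactly this variant is an assumption, as are the function names and the choice to return NaN when the ratio under the square root is negative.

```python
import numpy as np

def rmse(a, b):
    """Root-mean-squared error between two waveforms of equal length."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    return float(np.sqrt(np.mean((a - b) ** 2)))

def cmc(waveforms):
    """Coefficient of multiple correlation for a (W, T) array of W waveforms."""
    y = np.asarray(waveforms, float)
    w, t = y.shape
    frame_mean = y.mean(axis=0)     # mean waveform across the W curves
    grand_mean = y.mean()           # single mean over all samples
    within = np.sum((y - frame_mean) ** 2) / (t * (w - 1))
    total = np.sum((y - grand_mean) ** 2) / (w * t - 1)
    ratio = 1.0 - within / total
    return float(np.sqrt(ratio)) if ratio >= 0.0 else float("nan")

# Made-up example: a reference waveform and a copy with a constant 2 degree offset.
phase = np.linspace(0.0, np.pi, 101)
reference_wave = 50.0 * np.sin(phase)
offset_wave = reference_wave + 2.0

cmc_identical = cmc([reference_wave, reference_wave])
cmc_offset = cmc([reference_wave, offset_wave])
rmse_offset = rmse(reference_wave, offset_wave)
```

The offset example shows how the two metrics complement each other: the CMC stays close to 1 because the shapes match, while the RMSE reports the 2° systematic difference.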

Range of motion (ROM) values and the joint angle at the point of target achieved (PTA) via the kinematic model , our deep learning refined kinematic model for Kinect v2 system and the UWA kinematic model for the 3DMC system are calculated and extracted. Both ROM and PTA data are extracted from the test data in the application process. The normality of all ROM and PTA values is tested by the Shapiro-Wilk test (p > 0.05). A paired sample t-test is used for the parameters that are normally distributed; the Wilcoxon signed-rank test is used for those that are not. Bland-Altman analysis with 95% limits of agreement (LoA) is performed to assess the agreements between the ROMs and the PTAs via model and model as well as the agreements via model and model . The CMC and RMSE are analyzed using MATLAB 2019a, and the remaining statistical analyses are carried out using SPSS 25.0.
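The Bland-Altman quantities reduce to the mean of the paired differences (the bias) and the bias ± 1.96 sample standard deviations (the 95% limits of agreement). A minimal sketch with made-up ROM values and a hypothetical function name:

```python
import numpy as np

def bland_altman(method, reference):
    """Return (bias, lower LoA, upper LoA) for paired measurements."""
    diff = np.asarray(method, float) - np.asarray(reference, float)
    bias = diff.mean()
    sd = diff.std(ddof=1)   # sample standard deviation of the differences
    return float(bias), float(bias - 1.96 * sd), float(bias + 1.96 * sd)

# Made-up ROM values in degrees for three subjects.
rom_kinect = [10.0, 12.0, 14.0]
rom_3dmc = [9.0, 12.0, 15.0]
bias, lower, upper = bland_altman(rom_kinect, rom_3dmc)
```

In this toy case the differences are 1, 0 and −1, so the bias is 0 and the limits of agreement are ±1.96°.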

4. Results

4.1. Joint Kinematic Waveforms Validity

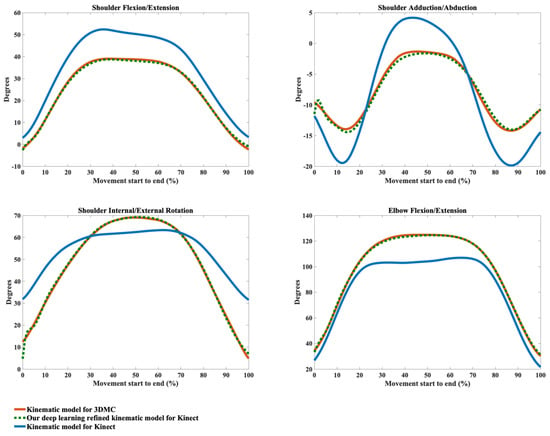

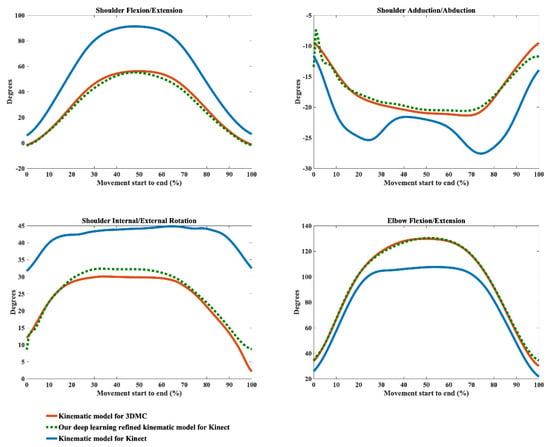

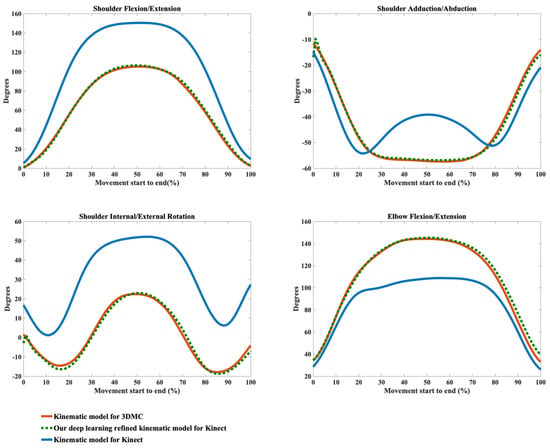

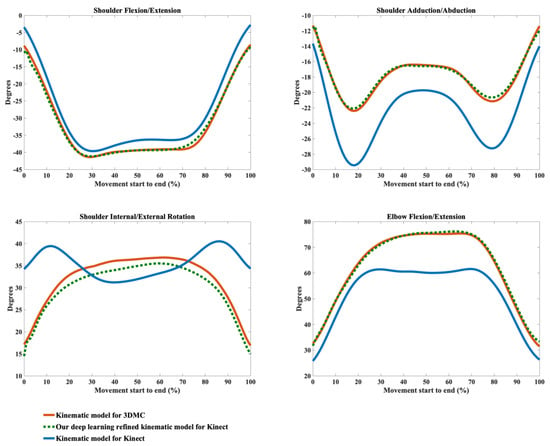

The kinematic waveforms of the chosen representative upper limb functional tasks via the kinematic model and our deep learning refined kinematic model for the Kinect v2 system are presented in Figure 8, Figure 9, Figure 10 and Figure 11 as average angles over the testing data. Joint angles via the UWA kinematic model for the 3DMC system are presented in the same figures as the gold standard. The CMC values between the Kinect kinematic model and the UWA reference, as well as between our deep learning refined model and the UWA reference, are presented in Table 1. The RMSE values are presented in Table 2.

Figure 8.

Joint angles during the hand to contralateral shoulder task calculated via the kinematic model for 3DMC (orange solid line), our deep learning refined kinematic model for Kinect (green dashed line) and the kinematic model for Kinect (blue solid line). The joint angles include shoulder flexion (+)/extension (−), shoulder adduction (+)/abduction (−), shoulder internal rotation (+)/external rotation (−), and elbow flexion (+)/extension (−).

Figure 9.

Joint angles during the hand to mouth task calculated via the kinematic model for 3DMC (orange solid line), our deep learning refined kinematic model for Kinect (green dashed line) and the kinematic model for Kinect (blue solid line). The joint angles include shoulder flexion (+)/extension (−), shoulder adduction (+)/abduction (−), shoulder internal rotation (+)/external rotation (−), and elbow flexion (+)/extension (−).

Figure 10.

Joint angles during the combing hair task calculated via the kinematic model for 3DMC (orange solid line), our deep learning refined kinematic model for Kinect (green dashed line) and the kinematic model for Kinect (blue solid line). The joint angles include shoulder flexion (+)/extension (−), shoulder adduction (+)/abduction (−), shoulder internal rotation (+)/external rotation (−), and elbow flexion (+)/extension (−).

Figure 11.

Joint angles during the hand to back pocket task calculated via the kinematic model for 3DMC (orange solid line), our deep learning refined kinematic model for Kinect (green dashed line) and the kinematic model for Kinect (blue solid line). The joint angles include shoulder flexion (+)/extension (−), shoulder adduction (+)/abduction (−), shoulder internal rotation (+)/external rotation (−), and elbow flexion (+)/extension (−).

Table 1.

The coefficient of multiple correlation (CMC) (SD) between the joint angles via the kinematic model for Kinect and the angles via 3DMC, as well as between the angles via our deep learning refined kinematic model for Kinect and the angles via 3DMC.

Table 2.

The root mean squared error (RMSE) (SD) between the joint angles via the kinematic model for Kinect and the angles via 3DMC, as well as between the angles via our deep learning refined kinematic model for Kinect and the angles via 3DMC.

Our model significantly improves the waveform similarity (see Table 1) and decreases the RMSE (see Table 2) in comparison with the kinematic model for Kinect v2 for almost all upper limb joint angles during all investigated functional tasks (p < 0.05). For the angles calculated with the Kinect kinematic model, very good similarities (CMC = 0.85–0.94) are observed only in shoulder flexion/extension angles during Task 1 and Task 4, with mean CMCs of 0.87 and 0.92, and in elbow flexion/extension angles during Task 1 and Task 2, with mean CMCs of 0.93 and 0.92. Good similarities (CMC = 0.75–0.84) are observed only in the shoulder flexion/extension angle during Task 3 (CMC = 0.77), in the shoulder adduction/abduction angle (CMC = 0.84) and shoulder internal/external rotation angle (CMC = 0.81) during Task 1, and in the elbow flexion/extension angle during Task 2 and Task 3 (CMC = 0.83 for both tasks). For the remaining upper limb joint angles during all chosen functional tasks, angles calculated using the Kinect kinematic model show poor to moderate waveform similarities in comparison with the reference joint angles (CMC = 0.55–0.74).

The RMSEs between the Kinect kinematic model and the reference, as well as those between our deep learning refined model and the reference, also demonstrate the promising ability of our deep learning refined kinematic model to increase upper limb joint kinematic accuracy using Kinect v2. The RMSEs are both plane-dependent and task-dependent. Our model yields much lower RMSE mean values and standard deviations than the Kinect kinematic model for all degrees of freedom under all functional tasks. The RMSEs via our model are significantly smaller than those via the Kinect kinematic model (p < 0.05), except for shoulder flexion/extension angles during the hand to back pocket task. For that angle and task, although the difference between the two models does not reach significance, the RMSEs via both models are relatively small and our model still yields the lower value. Taking the combing hair task as an example, the RMSEs drop from 41.73° ± 8.19° to 11.50° ± 7.25° for shoulder flexion/extension angles, from 11.91° ± 4.61° to 5.14° ± 1.83° for shoulder adduction/abduction angles, from 31.45° ± 6.89° to 8.59° ± 2.91° for shoulder internal/external rotation angles and from 25.83° ± 3.45° to 6.96° ± 2.92° for elbow flexion/extension angles after using our deep learning refined model instead of the Kinect kinematic model.

Using our deep learning refined kinematic model, shoulder and elbow flexion/extension angles during all four functional tasks show excellent similarity with the reference, with mean CMCs of 0.95–0.99, except for slightly lower similarities during Task 4 (mean CMC = 0.94 and 0.93 for the shoulder and elbow joints, respectively). The shoulder internal/external rotation angles show excellent similarity (mean CMC = 0.98) during Task 1, very good similarity (mean CMC = 0.89) during Task 3 and good similarity during Task 2 and Task 4 (mean CMC = 0.75 for both tasks). For shoulder adduction/abduction angles, excellent similarity (mean CMC = 0.97), very good similarity (mean CMC = 0.88) and good similarity (mean CMC = 0.79) are observed in Task 3, Task 1 and Task 4, respectively. The lowest similarity is found for the shoulder adduction/abduction angles during the drinking water task, with a mean CMC of 0.72.

4.2. Joint Kinematic Variables Validity

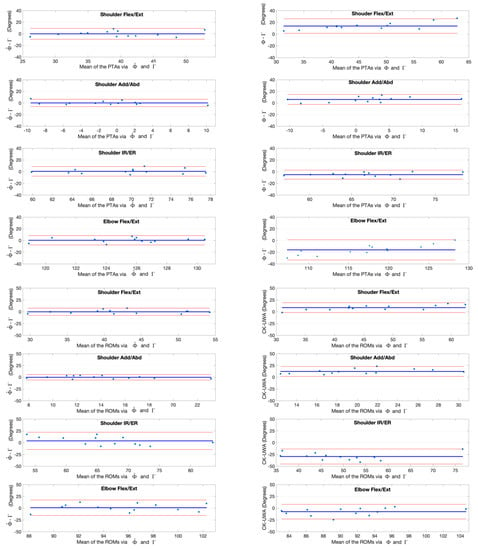

The joint angles at the point of target achieved (PTA) and the range of motion (ROM) during the upper limb functional tasks via the kinematic model and our deep learning refined kinematic model for the Kinect v2 system, as well as via the UWA kinematic model for the 3DMC system, are presented in Table 3 and Table 4 as mean ± standard deviation (SD) values. Differences and statistical significance of the PTAs via the Kinect kinematic model and our deep learning refined model in comparison with the PTAs via the UWA reference model are given in Table 3, whereas the absolute errors and statistical significance of the ROMs are given in Table 4. The Bland-Altman plots for all PTAs and ROMs are presented in Figure 12, Figure 13, Figure 14 and Figure 15.

Table 3.

The joint angle at the point of target achieved (PTA) with mean and standard deviation (SD) values calculated via the kinematic model for Kinect v2, our deep learning refined kinematic model for Kinect v2 and the UWA model for the 3DMC system. The difference columns report the discrepancies between the PTAs via each Kinect-based model (the kinematic model and our deep learning refined model) and the PTAs via the reference UWA model.

Table 4.

The range of motion (ROM) with mean and standard deviation (SD) values calculated via the kinematic model for Kinect v2, our deep learning refined kinematic model for Kinect v2 and the UWA model for the 3DMC system. The difference columns report the discrepancies between the ROMs via each Kinect-based model (the kinematic model and our deep learning refined model) and the ROMs via the reference UWA model.

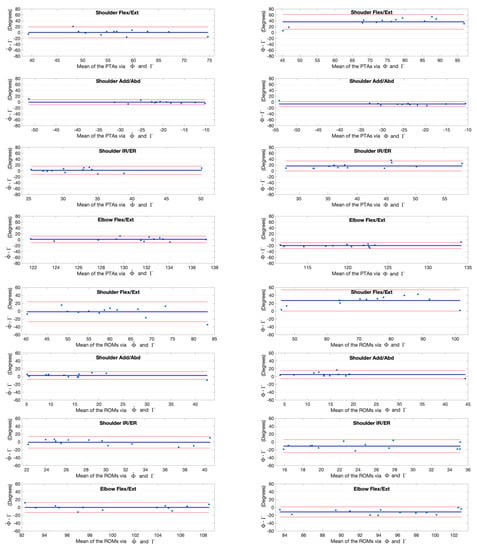

Figure 12.

Bland-Altman plots with 95% limits of agreement for joint kinematic parameters during the hand to contralateral shoulder task. The X axes represent the mean of the angles from the two systems and the Y axes represent the differences between them. The red line (middle one) marks the mean difference, and the two dashed lines mark the upper and lower limits of agreement. The upper four rows are the angles at the point of target achieved (PTA) and the lower four rows are the range of motion (ROM) values. Plots in the left column show measurement differences between our deep learning refined kinematic model for Kinect and the UWA kinematic model for the 3DMC. Plots in the right column show measurement differences between the kinematic model for Kinect and the UWA kinematic model for the 3DMC.

Figure 13.

Bland-Altman plots with 95% limits of agreement for joint kinematic parameters during the hand to mouth task. The X axes represent the mean of the angles from the two systems and the Y axes represent the differences between them. The red line (middle one) marks the mean difference, and the two dashed lines mark the upper and lower limits of agreement. The upper four rows are the angles at the point of target achieved (PTA) and the lower four rows are the range of motion (ROM) values. Plots in the left column show measurement differences between our deep learning refined kinematic model for Kinect and the UWA kinematic model for the 3DMC. Plots in the right column show measurement differences between the kinematic model for Kinect and the UWA kinematic model for the 3DMC.

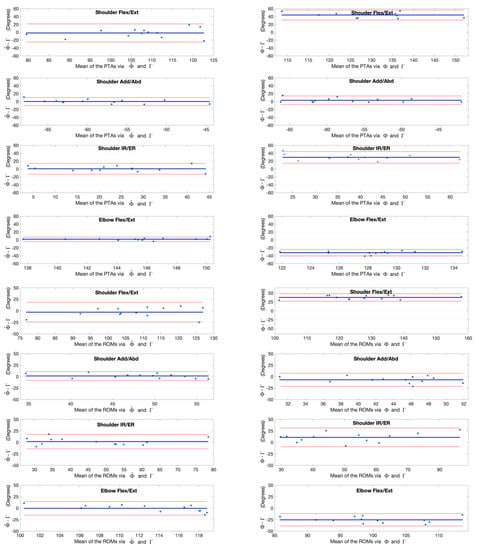

Figure 14.

Bland-Altman plots with 95% limits of agreement for joint kinematic parameters during the combing hair task. The X axes represent the mean of the angles from the two systems and the Y axes represent the differences between them. The red line (middle one) marks the mean difference, and the two dashed lines mark the upper and lower limits of agreement. The upper four rows are the angles at the point of target achieved (PTA) and the lower four rows are the range of motion (ROM) values. Plots in the left column show measurement differences between our deep learning refined kinematic model for Kinect and the UWA kinematic model for the 3DMC. Plots in the right column show measurement differences between the kinematic model for Kinect and the UWA kinematic model for the 3DMC.

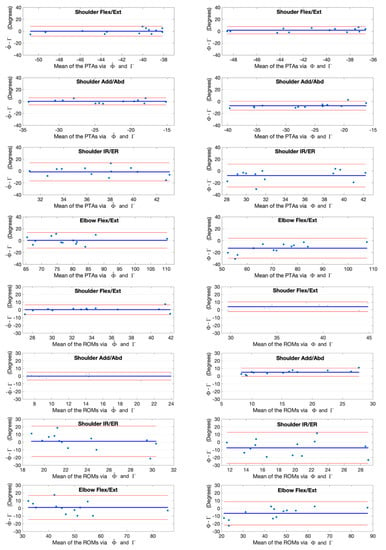

Figure 15.

Bland-Altman plots with 95% limits of agreement for joint kinematic parameters during the hand to back pocket task. The X axes represent the mean of the angles from the two systems and the Y axes represent the differences between them. The red line (middle one) marks the mean difference, and the two dashed lines mark the upper and lower limits of agreement. The upper four rows are the angles at the point of target achieved (PTA) and the lower four rows are the range of motion (ROM) values. Plots in the left column show measurement differences between our deep learning refined kinematic model for Kinect and the UWA kinematic model for the 3DMC. Plots in the right column show measurement differences between the kinematic model for Kinect and the UWA kinematic model for the 3DMC.

The PTAs via the Kinect kinematic model all differ significantly from those via the reference model, except for the shoulder flexion/extension angle during the hand to back pocket task and the shoulder adduction/abduction angle during the combing hair task. In contrast, there is no significant difference between the PTAs via our model and the references, except for the elbow flexion/extension angles during the hand to back pocket task. Although statistical significance exists in the PTAs of the elbow flexion/extension angle during the combing hair task (our model: 146.29° ± 4.21°; reference: 144.56° ± 3.49°), our model does reduce the error of the PTA from −32.39° ± 4.16° via the Kinect kinematic model to 1.73° ± 2.79°. By employing our model, the PTA discrepancies of the shoulder and elbow flexion/extension angles between the Kinect v2 and the 3DMC system drop from 45.61° ± 10.30° and −32.39° ± 4.16° to 1.79° ± 11.78° and 1.73° ± 2.79°, respectively, during the combing hair task.

All ROMs via the Kinect kinematic model differ significantly from the references, whereas there is no significant difference between the ROMs via our model and the references for any of the investigated upper limb joint angles. The greatest improvement occurs in the ROMs of the shoulder flexion/extension angles during the combing hair task, in which the absolute error between the Kinect v2 and the 3DMC system drops from 40.44° ± 9.88° with the Kinect kinematic model to 2.95° ± 10.88° with our model.

5. Discussion

Our study developed a novel deep learning refined kinematic model for 3D upper limb kinematic assessment using a single Kinect v2 sensor. Our refined model is in good agreement with the 3DMC system and is far more accurate than the traditional kinematic model using the same Kinect v2 sensor for upper limb waveforms, joint angles at the point of target achieved (PTA), and the range of motion (ROM) across all functional tasks. Using our deep learning-based model, the Kinect v2 could measure shoulder and elbow flexion/extension waveforms with mean CMCs >0.93 for all investigated tasks, and shoulder adduction/abduction and internal/external rotation waveforms with mean CMCs >0.8 for most of the tasks. The mean deviations of angles at the PTA and ROM are under 5° for all investigated joint angles during all investigated functional tasks. In clinical applications, an error of less than 2° is generally considered acceptable, and an error of 2°–5° may also be acceptable with appropriate interpretation [59,60]. Thus, the performance of our deep learning refined kinematic model using a single Kinect v2 sensor is promising as an upper limb functional assessment system.

The results agree with other studies on similar upper limb functional tasks [42]. During the combing hair task, at the maximum elevation, the mean elbow flexion via our model is 146°. This is in agreement with the results of van Andel et al. [42], Magermans et al. [52] and Morrey et al. [61], who find average elbow flexion angles of 122°, 136° and 100°, respectively. Van Andel et al. [42] find that the shoulder flexion angles reach nearly 100° in the combing hair task and stay under 70° during the other tasks. The same pattern appears in our study via our model using a Kinect v2 sensor: shoulder flexion angles are around 108° during the hair combing task and remain under 60° during the hand to contralateral shoulder and the hand to mouth tasks. The hand to mouth task does not require the full ROM of all joints, and the most important joint angle is elbow flexion [52]. The mean elbow flexion via our model is 112°, which is consistent with the elbow flexion of 117° reported by Magermans et al. [52].

The systematic errors of the proposed Kinect-based upper limb assessment system include errors due to inaccurate depth measurement and the motion artifact of moving objects [10]. Kinect v2 measures depth information using the Time of Flight (ToF) technique, which measures the time that “light emitted by an illumination unit requires to travel to an object and back to the sensor array”. Kinect v2 utilizes the Continuous Wave (CW) Intensity Modulation approach, which requires several correlated images to calculate each depth image. The distance calculated from the mixing of correlated images relies on approximations in the CW algorithm, which causes systematic error in the depth measurement. Recording and processing of the correlated images are also affected by moving objects, which leads to inaccurate depth measurement at object boundaries [10].
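
To make the role of the correlated images concrete, the sketch below uses the textbook four-sample formulation of continuous wave ToF: four correlation samples taken at phase offsets of 0°, 90°, 180° and 270° give the phase shift of the returned light, and the distance follows from d = c·φ/(4π·f). This is a generic illustration and not the Kinect v2 processing pipeline, whose internals are not described here; the sample model, the function names and the 16 MHz modulation frequency are assumptions made for the example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def simulate_correlation_samples(depth_m, f_mod_hz, amplitude=1.0, offset=2.0):
    """Idealized samples C_k = offset + amplitude * cos(phase + k * pi / 2), k = 0..3."""
    phase = 4 * math.pi * f_mod_hz * depth_m / C  # phase shift of the round trip
    return [offset + amplitude * math.cos(phase + k * math.pi / 2) for k in range(4)]


def depth_from_samples(samples, f_mod_hz):
    """Recover depth from four correlation samples of one pixel."""
    c0, c1, c2, c3 = samples
    # c3 - c1 = 2A sin(phase) and c0 - c2 = 2A cos(phase) for the model above.
    phase = math.atan2(c3 - c1, c0 - c2) % (2 * math.pi)
    return C * phase / (4 * math.pi * f_mod_hz)


f_mod = 16e6  # assumed modulation frequency; unambiguous range is C / (2 * f_mod)
for true_depth in (2.0, 7.0):
    samples = simulate_correlation_samples(true_depth, f_mod)
    print(true_depth, round(depth_from_samples(samples, f_mod), 6))
```

Because the four samples are recorded one after another, an object that moves between exposures breaks the assumption that all samples share one phase, which is one way to picture the boundary artifacts mentioned above.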

The systematic errors also include errors due to the kinematic modeling. In both kinematic models, the shoulder joint angles are defined as rotations of the humerus coordinate system relative to the thorax coordinate system. The kinematic model developed for the Kinect v2 sensor and the model used for the 3DMC system follow the same recommendations on the definition of the joint coordinate systems of the trunk, shoulder, and elbow proposed by the International Society of Biomechanics [47,62]. The second option for the humerus coordinate system is used for both systems [47], in which the z-axis of the humerus coordinate system is perpendicular to the plane formed by the vector from the elbow joint center to the shoulder joint center and the vector from the wrist joint center to the elbow joint center. For the UWA model, the thorax segment is defined by the 7th cervical vertebra, the 10th thoracic vertebra, the sternoclavicular notch and the xiphoid process of the sternum. Because of the limited skeletal joint tracking ability of the Kinect-based system, its thorax coordinate system is defined by the Kinect Skeleton landmarks of both the trunk segment and the shoulder joints (i.e., SpineShoulder, SpineMid, ShoulderLeft and ShoulderRight). Thus, tasks with large clavicle movements, such as combing hair, show large deviations in shoulder kinematic assessment. In our study, the shoulder joint angles during the combing hair task yield the largest root-mean-squared errors using our deep learning refined model in comparison with the gold standard system.
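
The humerus coordinate system described above can be built from three joint centers, as in the following sketch. It is an illustration of the geometric construction only, not the authors' kinematic model: the function names are hypothetical, the sign of the z-axis (chosen here so that it points to the right for a right arm with a flexed elbow) is an assumption, and the example coordinates are made up in a global frame with X forward, Y up and Z to the right.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def humerus_frame(shoulder, elbow, wrist):
    """Return the (x, y, z) unit axes of the humerus frame in global coordinates."""
    y_h = unit(sub(shoulder, elbow))  # long axis: elbow center to shoulder center
    y_f = unit(sub(elbow, wrist))     # forearm axis: wrist center to elbow center
    z_h = unit(cross(y_h, y_f))       # perpendicular to the plane of the two vectors
    x_h = cross(y_h, z_h)             # completes a right-handed orthonormal frame
    return x_h, y_h, z_h


# Made-up right arm posture: upper arm hanging down, elbow flexed 90 degrees forward.
x_axis, y_axis, z_axis = humerus_frame(shoulder=[0.0, 0.0, 0.0],
                                       elbow=[0.0, -0.3, 0.0],
                                       wrist=[0.25, -0.3, 0.0])
print(x_axis, y_axis, z_axis)
```

The construction becomes ill-conditioned when the elbow is fully extended, because the two vectors are then nearly parallel and their cross product approaches zero; any implementation would need to guard against that case.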

From Figure 8, Figure 9, Figure 10 and Figure 11, it can be seen that the systematic error of the Kinect-based system is highly nonlinear. The LSTM network we employ is a state-of-the-art recurrent neural network that is well suited to modeling nonlinear relationships in time series data. Our deep learning-based algorithm yields better results than the linear regression algorithm [63] in refining joint angles using a single Kinect sensor. In assessing shoulder joint angles during a computer-use task, only shoulder adduction/abduction is improved after the linear regression refinement [63]. As the measurement error is positively correlated with the magnitude of the joint angle [63], the measurement error is reported together with the ROM. After applying the linear regression calibration, the mean RMSE of the shoulder adduction/abduction angle decreases from 14.8° and 9.1° for the right and left shoulder, respectively, to 7.5°, during which the ROM of the angle is under 20°. In contrast, with our deep learning refined kinematic model, all upper limb joint angles, including shoulder flexion/extension, shoulder adduction/abduction, shoulder internal/external rotation, and elbow flexion/extension, are significantly improved during all functional tasks. Notably, the mean RMSEs of shoulder adduction/abduction angles decrease to around 3° for Task 1, Task 2 and Task 4 and to around 5° for Task 3, with mean ROMs of 12.97° to 47.36°.

Previous studies reveal that Kinect v2 with the automated body tracking algorithm is also not suitable to assess lower-body kinematics. The deviation of hip flexion during the swing phase is more than 30° during walking [15]. The limits of agreement (LoA) between the Kinect v2 sensor and the 3DMC system are 28°, 46° for peak knee flexion angle at a self-selected walking speed [15], 7°, 25° for trunk anterior-posterior flexion [16]. Average errors of 24°, 26° are observed for the right and left peak knee flexion angles during squatting [19].

Timmi et al. [37] employed custom-made colored markers placed on bony prominences near the hip, knee, and ankle. The marker tracking approach improves the knee angle measurement, with LoA of −1.8° and 1.7° for flexion and −2.9° and 1.7° for adduction during fast walking. Compared with gait analysis and static posture assessment, motion analysis of the upper limb using Kinect sensors is far more challenging. Upper limb functional activities show larger variation in the healthy population, and the upper limb has a higher number of degrees of freedom. The upper limb, especially the shoulder joint, has a very large working range compared with the lower extremity. Furthermore, the upper limb joints are easily occluded by one another. The marker-tracking methodology may therefore not be suitable for a Kinect-based system in assessing upper limb kinematics.

6. Conclusions

We have developed a novel deep learning refined kinematic model for upper limb functional assessment using a single Kinect v2 sensor. The system demonstrates good kinematic accuracy in comparison with a standard marker-based 3D motion capture system during upper limb functional tasks, suggesting that such a single-Kinect-based kinematic assessment system has great potential as an alternative to the traditional marker-based 3D motion capture system. Such a low-cost, easy-to-use system with good accuracy could help small rehabilitation clinics and meet the need for rehabilitation at home.

Author Contributions

Conceptualization, Y.M. and D.L.; methodology, Y.M. and D.L.; code, Y.M. and D.L.; formal analysis, Y.M.; experiment conduction, L.C.; writing—original draft preparation, Y.M.; writing—review and editing, Y.M.; visualization, Y.M.; supervision, D.L.; project administration, Y.M.; funding acquisition, Y.M. and D.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant Number: 61802338), the Zhejiang Provincial Natural Science Foundation of China (Grant Number: LQ19A020001), and the Ningbo Natural Science Foundation (Grant Number: 2018A610193). The study was also supported by the K.C. Wong Magna Fund in Ningbo University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dounskaia, N.; Ketcham, C.J.; Leis, B.C.; Stelmach, G.E. Disruptions in joint control during drawing arm movements in Parkinson’s disease. Exp. Brain Res. 2005, 164, 311–322. [Google Scholar] [CrossRef] [PubMed]

- Dounskaia, N.; Swinnen, S.; Walter, C.; Spaepen, A.; Verschueren, S. Hierarchical control of different elbow-wrist coordination patterns. Exp. Brain Res. 1998, 121, 239–254. [Google Scholar] [CrossRef] [PubMed]

- Reid, S.; Elliott, C.; Alderson, J.; Lloyd, D.; Elliott, B. Repeatability of upper limb kinematics for children with and without cerebral palsy. Gait Posture 2010, 32, 10–17. [Google Scholar] [CrossRef]

- Galna, B.; Barry, G.; Jackson, D.; Mhiripiri, D.; Olivier, P.; Rochester, L. Accuracy of the Microsoft Kinect sensor for measuring movement in people with Parkinson’s disease. Gait Posture 2014, 39, 1062–1068. [Google Scholar] [CrossRef]

- Hoy, M.G.; Zernicke, R.F. The role of intersegmental dynamics during rapid limb oscillations. J. Biomech. 1986, 19, 867–877. [Google Scholar] [CrossRef]

- Putnam, C.A. Sequential motions of body segments in striking and throwing skills: Descriptions and explanations. J. Biomech. 1993, 26, 125–135. [Google Scholar] [CrossRef]

- Zhou, H.; Hu, H. Human motion tracking for rehabilitation—A survey. Biomed. Signal Process. Control 2008, 3, 1–18. [Google Scholar] [CrossRef]

- Jaspers, E.; Feys, H.; Bruyninckx, H.; Cutti, A.; Harlaar, J.; Molenaers, G.; Desloovere, K. The reliability of upper limb kinematics in children with hemiplegic cerebral palsy. Gait Posture 2011, 33, 568–575. [Google Scholar] [CrossRef]

- Mündermann, L.; Corazza, S.; Andriacchi, T.P. The evolution of methods for the capture of human movement leading to markerless motion capture for biomechanical applications. J. Neuroeng. Rehabil. 2006, 3, 6. [Google Scholar] [CrossRef]

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20. [Google Scholar] [CrossRef]

- Shotton, J.; Sharp, T.; Kipman, A.; Fitzgibbon, A.; Finocchio, M.; Blake, A.; Cook, M.; Moore, R. Real-time human pose recognition in parts from single depth images. Commun. ACM 2013, 56, 116–124. [Google Scholar] [CrossRef]

- Wang, Q.; Kurillo, G.; Ofli, F.; Bajcsy, R. Evaluation of Pose Tracking Accuracy in the First and Second Generations of Microsoft Kinect. In Proceedings of the 2015 International Conference on Healthcare Informatics, Dallas, TX, USA, 21–23 October 2015; pp. 380–389. [Google Scholar]

- Latorre, J.; Llorens, R.; Colomer, C.; Alcañiz, M. Reliability and comparison of Kinect-based methods for estimating spatiotemporal gait parameters of healthy and post-stroke individuals. J. Biomech. 2018, 72, 268–273. [Google Scholar] [CrossRef] [PubMed]

- Pfister, A.; West, A.M.; Bronner, S.; Noah, J.A. Comparative abilities of Microsoft Kinect and Vicon 3D motion capture for gait analysis. J. Med. Eng. Technol. 2014, 38, 274–280. [Google Scholar] [CrossRef] [PubMed]

- Mentiplay, B.F.; Perraton, L.G.; Bower, K.J.; Pua, Y.H.; McGaw, R.; Heywood, S.; Clark, R.A. Gait assessment using the Microsoft Xbox One Kinect: Concurrent validity and inter-day reliability of spatiotemporal and kinematic variables. J. Biomech. 2015, 48, 2166–2170. [Google Scholar] [CrossRef] [PubMed]

- Clark, R.A.; Pua, Y.H.; Oliveira, C.C.; Bower, K.J.; Thilarajah, S.; McGaw, R.; Hasanki, K.; Mentiplay, B.F. Reliability and concurrent validity of the Microsoft Xbox One Kinect for assessment of standing balance and postural control. Gait Posture 2015, 42, 210–213. [Google Scholar] [CrossRef] [PubMed]

- Clark, R.A.; Pua, Y.H.; Fortin, K.; Ritchie, C.; Webster, K.E.; Denehy, L.; Bryant, A.L. Validity of the Microsoft Kinect for assessment of postural control. Gait Posture 2012, 36, 372–377. [Google Scholar] [CrossRef] [PubMed]

- Paolini, G.; Peruzzi, A.; Mirelman, A.; Cereatti, A.; Gaukrodger, S.; Hausdorff, J.M.; Croce, U.D. Validation of a Method for Real Time Foot Position and Orientation Tracking With Microsoft Kinect Technology for Use in Virtual Reality and Treadmill Based Gait Training Programs. IEEE Trans. Neural. Syst. Rehabil. Eng. 2014, 22, 997–1002. [Google Scholar] [CrossRef]

- Capecci, M.; Ceravolo, M.G.; Ferracuti, F.; Iarlori, S.; Longhi, S.; Romeo, L.; Russi, S.N.; Verdini, F. Accuracy evaluation of the Kinect v2 sensor during dynamic movements in a rehabilitation scenario. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 5409–5412. [Google Scholar]

- Mateo, F.; Soria-Olivas, E.; Carrasco, J.J.; Bonanad, S.; Querol, F.; Pérez-Alenda, S. HemoKinect: A Microsoft Kinect V2 Based Exergaming Software to Supervise Physical Exercise of Patients with Hemophilia. Sensors 2018, 18, 2439. [Google Scholar] [CrossRef]

- Chen, X.; Siebourgpolster, J.; Wolf, D.; Czech, C.; Bonati, U.; Fischer, D.; Khwaja, O.; Strahm, M. Feasibility of Using Microsoft Kinect to Assess Upper Limb Movement in Type III Spinal Muscular Atrophy Patients. PLoS ONE 2017, 12, e0170472. [Google Scholar] [CrossRef]

- Mobini, A.; Behzadipour, S.; Saadat, M. Test–retest reliability of Kinect’s measurements for the evaluation of upper body recovery of stroke patients. Biomed. Eng. Online 2015, 14, 75. [Google Scholar] [CrossRef]

- Chang, Y.J.; Chen, S.F.; Huang, J.D. A Kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. Res. Dev. Disabil. 2011, 32, 2566–2570. [Google Scholar] [CrossRef] [PubMed]

- Huang, J.D. Kinerehab: A kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. In Proceedings of the 13th International ACM SIGACCESS Conference on Computers and Accessibility, Dundee Scotland, UK, 24–26 October 2011; pp. 319–320. [Google Scholar]

- Fuertes Muñoz, G.; Mollineda, R.A.; Gallardo Casero, J.; Pla, F. A RGBD-Based Interactive System for Gaming-Driven Rehabilitation of Upper Limbs. Sensors 2019, 19, 3478. [Google Scholar] [CrossRef] [PubMed]

- Vilas-Boas, M.d.C.; Choupina, H.M.P.; Rocha, A.P.; Fernandes, J.M.; Cunha, J.P.S. Full-body motion assessment: Concurrent validation of two body tracking depth sensors versus a gold standard system during gait. J. Biomech. 2019, 87, 189–196. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.; McGorry, R.W.; Chou, L.S.; Lin, J.; Chang, C. Accuracy of the Microsoft Kinect™ for measuring gait parameters during treadmill walking. Gait Posture 2015, 42, 145–151. [Google Scholar] [CrossRef]

- Cai, L.; Ma, Y.; Xiong, S.; Zhang, Y. Validity and Reliability of Upper Limb Functional Assessment Using the Microsoft Kinect V2 Sensor. Appl. Bionics Biomech. 2019, 2019, 7175240. [Google Scholar] [CrossRef]

- Mentiplay, B.F.; Hasanki, K.; Perraton, L.G.; Pua, Y.H.; Charlton, P.C.; Clark, R.A. Three-dimensional assessment of squats and drop jumps using the Microsoft Xbox One Kinect: Reliability and validity. J. Sports Sci. 2018, 36, 2202–2209. [Google Scholar] [CrossRef]

- Mentiplay, B.F.; Clark, R.A.; Mullins, A.; Bryant, A.L.; Bartold, S.; Paterson, K. Reliability and validity of the Microsoft Kinect for evaluating static foot posture. J. Foot Ankle Res. 2013, 6, 14. [Google Scholar] [CrossRef]

- Kobsar, D.; Osis, S.T.; Jacob, C.; Ferber, R. Validity of a novel method to measure vertical oscillation during running using a depth camera. J. Biomech. 2019, 85, 182–186. [Google Scholar] [CrossRef]

- Schmitz, A.; Ye, M.; Shapiro, R.; Yang, R.; Noehren, B. Accuracy and repeatability of joint angles measured using a single camera markerless motion capture system. J. Biomech. 2014, 47, 587–591. [Google Scholar] [CrossRef]

- Xu, X.; Robertson, M.; Chen, K.B.; Lin, J.; McGorry, R.W. Using the Microsoft Kinect™ to assess 3-D shoulder kinematics during computer use. Appl. Ergon. 2017, 65, 418–423. [Google Scholar] [CrossRef]

- Clark, R.A.; Mentiplay, B.F.; Hough, E.; Pua, Y.H. Three-dimensional cameras and skeleton pose tracking for physical function assessment: A review of uses, validity, current developments and Kinect alternatives. Gait Posture 2019, 68, 193–200. [Google Scholar] [CrossRef] [PubMed]

- Sarsfield, J.; Brown, D.; Sherkat, N.; Langensiepen, C.; Lewis, J.; Taheri, M.; McCollin, C.; Barnett, C.; Selwood, L.; Standen, P.; et al. Clinical assessment of depth sensor based pose estimation algorithms for technology supervised rehabilitation applications. Int. J. Med. Inform. 2019, 121, 30–38. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.B.; Park, Y.; Suh, I.H. Tracking human-like natural motion by combining two deep recurrent neural networks with Kalman filter. Intell. Serv. Robot. 2018, 11, 313–322. [Google Scholar] [CrossRef]

- Timmi, A.; Coates, G.; Fortin, K.; Ackland, D.; Bryant, A.L.; Gordon, I.; Pivonka, P. Accuracy of a novel marker tracking approach based on the low-cost Microsoft Kinect v2 sensor. Med. Eng. Phys. 2018, 59, 63–69. [Google Scholar] [CrossRef]

- Moon, S.; Park, Y.; Ko, D.W.; Suh, I.H. Multiple Kinect Sensor Fusion for Human Skeleton Tracking Using Kalman Filtering. Int. J. Adv. Robot. Syst. 2016, 13, 65. [Google Scholar] [CrossRef]

- Zhang, Y.; Unka, J.; Liu, G. Contributions of joint rotations to ball release speed during cricket bowling: A three-dimensional kinematic analysis. J. Sports Sci. 2011, 29, 1293–1300. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G.J. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Van Andel, C.J.; Wolterbeek, N.; Doorenbosch, C.A.; Veeger, D.H.; Harlaar, J. Complete 3D kinematics of upper extremity functional tasks. Gait Posture 2008, 27, 120–127. [Google Scholar] [CrossRef]

- Winter, D.A. Biomechanics and Motor Control of Human Movement; John Wiley & Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Zhang, Y.; Lloyd, D.G.; Campbell, A.C.; Alderson, J.A. Can the effect of soft tissue artifact be eliminated in upper-arm internal-external rotation? J. Appl. Biomech. 2011, 27, 258–265. [Google Scholar] [CrossRef]

- Campbell, A.C.; Alderson, J.A.; Lloyd, D.G.; Elliott, B.C. Effects of different technical coordinate system definitions on the three dimensional representation of the glenohumeral joint centre. Med. Biol. Eng. Comput. 2009, 47, 543–550. [Google Scholar] [CrossRef] [PubMed]

- Campbell, A.; Lloyd, D.; Alderson, J.; Elliott, B. MRI development and validation of two new predictive methods of glenohumeral joint centre location identification and comparison with established techniques. J. Biomech. 2009, 42, 1527–1532.

- Wu, G.; Van der Helm, F.C.; Veeger, H.D.; Makhsous, M.; Van Roy, P.; Anglin, C.; Nagels, J.; Karduna, A.R.; McQuade, K.; Wang, X. ISB recommendation on definitions of joint coordinate systems of various joints for the reporting of human joint motion—Part II: Shoulder, elbow, wrist and hand. J. Biomech. 2005, 38, 981–992.

- Cappozzo, A.; Catani, F.; Della Croce, U.; Leardini, A. Position and orientation in space of bones during movement: Anatomical frame definition and determination. Clin. Biomech. 1995, 10, 171–178.

- Bartlett, R. Introduction to Sports Biomechanics: Analysing Human Movement Patterns; Routledge: Abingdon, UK, 2014.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Dutta, T. Evaluation of the Kinect™ sensor for 3-D kinematic measurement in the workplace. Appl. Ergon. 2012, 43, 645–649.

- Magermans, D.; Chadwick, E.; Veeger, H.; Van Der Helm, F. Requirements for upper extremity motions during activities of daily living. Clin. Biomech. 2005, 20, 591–599.

- Stanger, C.A.; Anglin, C.; Harwin, W.S.; Romilly, D.P. Devices for assisting manipulation: A summary of user task priorities. IEEE Trans. Rehabil. Eng. 1994, 2, 256–265.

- Anglin, C.; Wyss, U. Review of arm motion analyses. J. Eng. Med. 2000, 214, 541–555.

- Mosqueda, T.; James, M.A.; Petuskey, K.; Bagley, A.; Abdala, E.; Rab, G. Kinematic assessment of the upper extremity in brachial plexus birth palsy. J. Pediatr. Orthop. 2004, 24, 695–699.

- Van Ouwerkerk, W.; Van Der Sluijs, J.; Nollet, F.; Barkhof, F.; Slooff, A. Management of obstetric brachial plexus lesions: State of the art and future developments. Child’s Nerv. Syst. 2000, 16, 638–644.

- Kadaba, M.; Ramakrishnan, H.; Wootten, M.; Gainey, J.; Gorton, G.; Cochran, G. Repeatability of kinematic, kinetic, and electromyographic data in normal adult gait. J. Orthop. Res. 1989, 7, 849–860.

- Garofalo, P.; Cutti, A.G.; Filippi, M.V.; Cavazza, S.; Ferrari, A.; Cappello, A.; Davalli, A. Inter-operator reliability and prediction bands of a novel protocol to measure the coordinated movements of shoulder-girdle and humerus in clinical settings. Med. Biol. Eng. Comput. 2009, 47, 475–486.

- Hassan, E.A.; Jenkyn, T.R.; Dunning, C.E. Direct comparison of kinematic data collected using an electromagnetic tracking system versus a digital optical system. J. Biomech. 2007, 40, 930–935.

- McGinley, J.L.; Baker, R.; Wolfe, R.; Morris, M.E. The reliability of three-dimensional kinematic gait measurements: A systematic review. Gait Posture 2009, 29, 360–369.

- Morrey, B.; Askew, L.; Chao, E. A biomechanical study of normal functional elbow motion. J. Bone Jt. Surg. 1981, 63, 872–877.

- Wu, G.; Siegler, S.; Allard, P.; Kirtley, C.; Leardini, A.; Rosenbaum, D.; Whittle, M.; D’Lima, D.D.; Cristofolini, L.; Witte, H. ISB recommendation on definitions of joint coordinate system of various joints for the reporting of human joint motion—Part I: Ankle, hip, and spine. J. Biomech. 2002, 35, 543–548.

- Fong, D.T.P.; Hong, Y.; Chan, L.K.; Yung, P.S.H.; Chan, K.M. A Systematic Review on Ankle Injury and Ankle Sprain in Sports. Sports Med. 2007, 37, 73–94.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).