Abstract

Given the growing interest in electroencephalography (EEG) as a means to enhance human–computer interaction (HCI) and to develop brain–computer interfaces (BCIs) for control and monitoring applications, efficient information retrieval from EEG sensors is of great importance. This retrieval is difficult because of noise from internal and external artifacts and physiological interference. EEG-based emotion recognition can be improved by selecting the features that are taken into account in further analysis. Therefore, the automatic feature selection of EEG signals is an important research area. We propose a multistep hybrid approach incorporating the Reversed Correlation Algorithm for the automated selection of frequency band–electrode combinations. Our method is simple to use and significantly reduces the number of sensors to only three channels. The proposed method has been verified by experiments performed on the DEAP dataset. The results have been evaluated in terms of classification accuracy for two emotion dimensions: valence and arousal. In comparison to other research studies, our method achieved classification results that were 4.20–8.44% higher. Moreover, it can be regarded as a universal EEG signal classification technique, as it is an unsupervised method.

1. Introduction

Bioinformatics techniques for EEG signal analysis help to increase the diagnostic potential for sleep disorders and to detect diseases of the nervous system, including epilepsy and depression [1]. Moreover, there has been a growing interest in electroencephalography to enhance human–computer interaction (HCI) and develop brain–computer interfaces (BCIs) [2]. BCIs have various applications, and their design depends on the intended use. Two main branches of BCI applications can be distinguished: control and monitoring. Control applications use brain signals to manipulate an external computer, while monitoring applications recognize the user’s mental and emotional state in order to control the environment they are in or the interface they use [3].

Emotions play an essential role in everyday life and interpersonal communication. Positive emotions enhance health, while negative emotions may contribute to the aggravation of health problems. Research in neurobiology and psychology confirms that emotions constitute a vital factor influencing rational behavior. Furthermore, patients with emotional disorders have difficulty carrying out daily activities [4].

Emotions are identified by verbal (e.g., emotional vocabulary) or non-verbal signals such as gestures, expressions, and intonation [5,6,7]. EEG is one of the methods of emotional state identification [8,9]. It is non-invasive and relatively inexpensive in comparison to other signal acquisition methods. However, in semantic EEG signal analysis for mental task classification, accuracy remains one of the significant problems. Therefore, recognizing emotions is a critical topic in current research on EEG signal analysis [10,11].

The EEG test records electrical patterns in the brain. During EEG examinations, sensors (electrodes and wires) are placed on the scalp of a patient. The sensors record potentials from the synchronous work of groups of cerebral cortical neurons. Thus, the recordings’ analysis depends on the appropriate placement of the measuring electrodes. Although the measured signal is amplified, retrieving information from the EEG signal is a difficult task. The complexity arises due to both noise from the internal (physiological) and external (hardware related) artifacts, and physiological interferences.

The improvement in signal quality can be achieved by eliminating artifacts, such as [12,13,14,15,16]:

- electrooculography (EOG), which occurs as a result of motion generated by eyes,

- electrocardiogram (ECG), which occurs as a result of heartbeats, and

- electromyogram (EMG), which occurs as a result of muscular motion.

On the other hand, the appropriate selection of features that should be taken into account can enhance the process of EEG signal analysis. Hence, the automatic feature selection of EEG signals is an important research area.

Two approaches can be distinguished within the undertaken research works:

- optimal selection of electrodes [17],

- optimal extraction and selection of features [18].

The optimal selection of measuring electrodes is the first and crucial stage of works related to the automatic selection of EEG signals’ features [19]. However, due to the complexity of the electrode selection and the need to adapt the research to the classification problem, the attempts made so far have not clearly indicated the number and the position of electrodes best suited to the tasks related to emotions’ classification. For example, the study [20] suggests using nine electrodes, while the authors of the research [17] indicate three to eight electrodes depending on the experiment. An additional difficulty is that the smaller sets of electrodes do not constitute subsets of the covering sets of electrodes in the cited works. The selected electrodes proposed in studies [20,21] are located over the frontal and parietal lobes, whereas [17] indicates mainly central and occipital lobes.

The extraction and selection of features also play an essential role in EEG applications. The most commonly used features can be divided into four categories—time domain, parametric model-based, transformed domain, and frequency-based components [19]. Moreover, the features extracted from the EEG may be redundant or contain outliers that degrade the classification performance [22]. To cope with these problems, using feature selection is recommended.

Our research aims to identify a set of key electrodes for emotional state classification based on the EEG signal, as a continuation of research on the optimal selection of electrodes. Most of the earlier strategies focus on correlations between a particular type of emotion and a brain region with a subset of electrodes [10,23]. However, there is still a lack of more flexible solutions that are independent of emotion type and perform well for different emotional categories. We propose using the Reversed Correlation Algorithm (RCA) for the automatic selection of electrodes. The RCA was described in [24] and used for medical diagnosis support. Unlike most feature selection methods, the RCA technique is an unsupervised learning method. This makes it possible to select a set of channels independently of the classified emotion. Moreover, the RCA significantly reduces the data necessary for further analysis while maintaining high classification accuracy.

The remainder of the paper is organized as follows. In the next section, related works, considering different techniques of emotion recognition, are presented. Then, the emotions’ classification problem in terms of the EEG test is introduced, and the whole methodology is described. Next, the experiments carried out are depicted, and the obtained results are discussed. The final section presents the study’s conclusions and delineates future research.

2. Related Work

Emotions play a vital role in human communication. However, most new human–computer interaction (HCI) systems, which are assumed to signify human interaction, suffer from a lack of emotional intelligence, and are deficient in interpreting emotions. Therefore, many types of research are undertaken to fill this gap and to automate emotional responses.

Various scales of emotions’ categorization were proposed [10]:

- the six basic emotions proposed by Ekman and Friesen [25],

- the tree structure of emotions proposed by Parrott [26],

- Plutchik’s emotion wheel [27], and

- the valence–arousal scale by Russell [28].

Russell’s valence–arousal scale is one of the most widely used scales [28]. In this scale, each psychological state is placed on a two-dimensional plane with arousal and valence as the vertical and horizontal axes. As depicted in Figure 1:

- arousal ranges from inactive (bored and uninterested) to active and excited,

- valence spreads from unpleasant (sad and stressed) to happy and pleasant.

Figure 1.

Russell’s circumplex model of affect.

In the past decades, many researchers have investigated subjects’ reactions and potentials of different emotion recognition techniques, including speech, non-verbal audition, facial expression, visual and thermal images, peripheral neural signals, and central neural system signals [29,30,31,32,33,34,35,36,37].

Infrared thermography (IRT) uses sensors to capture radiated energy. Investigations revealed a connection between emotions and blood flow, and this connection can be recognized using infrared thermography [32,33,34].

The authors of [32] aimed at classifying the main emotions (joy, disgust, anger, fear, and sadness) using facial temperature reactions. Their system estimates changes in temperature from the face’s central area (forehead, cheeks, nose, and maxillary) and assigns the appropriate emotion. The accuracy of the classification was 89.9%. Three of the emotions (joy, disgust, and sadness) showed high accuracy, at 96.96%, 91.73%, and 89.33%, respectively.

Another method, using landmark detection in thermal images, was proposed in [33]. The main goal of that study was to obtain the best accuracy of emotion recognition in child–robot interaction. Such a robotic system can be used as a therapy aid to help children who suffer from autism and have problems with showing emotions. The researchers used various methods for feature extraction and reduction (the Viola–Jones algorithm, PCA, and LDA). They attained mean accuracies of 85.75% and 81.84% for the five main emotions.

The authors of [34] proposed a new algorithm for emotion recognition by analyzing facial thermal images. The algorithm divides an image into grids and predicts the probabilities of emotions for each part of the grid. Their study indicated that the background has an impact on the results, and that it is important to remove everything surrounding the face. Seven emotions (sadness, happiness, surprise, fear, anger, neutral, and disgust) were used for training and testing. The average accuracy over all emotions was 65%. However, the accuracy depended on the emotion. The best result of 80.8% was attained for happiness. Emotions such as sadness, surprise, and disgust were above 50% accuracy. However, for anger, fear, and neutral emotions, the accuracies were less than 30%.

Some of the research on thermal images has produced satisfactory results. However, there is still an insufficient number of exhaustive studies on some of the emotions, which could improve quality. The main problem is the lack of datasets with thermal images, which hinders the development of emotion detection using infrared thermography. Another drawback is the requirement for expensive devices, such as a special infrared camera [32].

Another source for emotion detection is speech. The authors of [35] created a new database for research on emotion recognition from speech, which covers the basic emotions: neutral, anger, happiness, sadness, disgust, surprise, and fear. The accuracy reached 78.89%, compared to 74.72% for the renowned EMO database.

Zhu et al. [36] took into account a combination of the Support Vector Machine (SVM) and a Deep Belief Network (DBN) in emotion detection from Chinese speech. Their method consisted of two steps. First, features were extracted, and the algorithm selected only those with the highest importance for classification. The second step was to find the best way for emotion recognition. The authors introduced a novel approach, which joined two algorithms: DBN and SVM. Their experiments resulted in a high accuracy of 95.6%. By comparison, the accuracies obtained with SVM and DBN separately were 84.6% and 94.6%, respectively, so the authors’ hybrid method improved on both.

The authors of [37] introduced a Deep Convolutional Neural Network (DCNN) for feature selection. They used several classification methods: support vector machine, random forest, the k-nearest neighbors algorithm, and neural network classifiers. The average accuracy over all experiments reached 85.58%. The authors also reported that DCNN models are efficient for feature extraction from speech and can bring a noticeable improvement in emotion recognition from speech.

According to neurophysiological and clinical research, electroencephalogram (EEG) signals reflect the brain’s electrical activity and the mind’s functional condition. Therefore, numerous supervised and unsupervised approaches based on EEG signals have been proposed in many applications, including motor imagery or epileptic seizure detection. EEG signals also express the human emotional state, and in the last several years, EEG signals have been slowly introduced to emotion recognition due to their strong objectivity, and moderately good classification accuracy [38].

The main goal of many investigations has been to identify brain areas and patterns associated with emotional states. Most of the studies stress high reactivity of negative emotions [39,40] and their relations to right-brain activity [41].

EEG data are accumulated from many locations across the brain and frequently entail data from more than 100 electrodes. That is why efficient channel selection is very significant. The main objective of employing channel selection is to reduce computational complexity while assessing EEG signals without losing classification accuracy. The literature describes that similar or even the same performance could be accomplished by using a more compact group of channels [23,42]. However, researchers still argue about the number and locations of the EEG electrodes [17,20,43]. Therefore, more comprehensive studies on channel selection are of great importance.

Band frequency selection and feature selection are other vast areas of research related to EEG signal analysis. Many works focus on motor imagery (MI) in automated selection techniques, as MI is one of the most common mental tasks used in BCI applications. The subjects are directed to imagine themselves performing a specific motor action, such as moving a foot or hand, without activating any muscles. The authors of [44] introduced a novel system for motor imagery detection. Joining empirical wavelet transform and several algorithms for dimensionality reduction yields a significant increase in performance. The attained accuracy equaled 92.9%. Those studies also undertook and resolved the problem with obtaining good results for small samples.

BCI interactions with subjects are one of the major problems described in studies on motor imagery. Electrode selection may be crucial for overcoming interaction problems. In the work [45], the authors explored the reduction of the response time in motor imagery using four electrodes. An accuracy of 83.8% for detecting hand motion imagery was achieved in a time of 2 s. This research can help a disabled or immobile person communicate with a computer or a wheelchair.

The robustness and computational load are the key challenges in motor imagery based on EEG signals. In [46] Sadiq et al. proposed a multivariate empirical wavelet transform (MEWT)-based method to obtain robustness against noise. The average sensitivity, specificity, and classification accuracy of 98% were achieved by employing multilayer perceptron neural networks, a logistic model tree, and least-squares support vector machine (LS-SVM) classifiers, resulting in an improvement of up to 23.50% in classification accuracy compared with other existing methods [47,48]. The proposed method also shows a great potential in EEG signal processing for emotion recognition.

Electroencephalography is also used for epileptic seizure detection. Epilepsy is one of the most common brain disorders and makes life difficult for many people [49]. The work [50] introduced a novel method for recording epileptic EEG signals. The authors found that EOG artifacts were not visible during the attack in channels located behind the ears. The investigations revealed 38 seizures by the behind-the-ear method among all 47 trials. Detecting epilepsy with only a few channels behind the ears, where they are not visible to other people, may make many lives easier.

High accuracy (99.25%) of epileptic seizure detection was achieved by the authors of [51]. They developed a new framework to detect epilepsy rapidly. Wang et al. used various feature extraction, nonlinear analysis of EEG signals, and Principal Component Analysis (PCA).

The research of [52] shows that one of the main points in epileptic seizure detection is finding the most relevant features. The authors proposed a graph eigen decomposition (GED)-based approach, which removes unnecessary attributes. Their method, in conjunction with a feedforward neural network (FfNN), reached an accuracy of 99.55%. It is among the highest results reported in investigations of epileptic seizure detection from EEG signals.

The authors of the study [53] propose emotion recognition with an improved particle swarm optimization for feature selection. They use an initial set of features from time, frequency, and time-frequency domains and a modified particle swarm optimization (PSO) method with multi-stage linearly-decreasing inertia weight (MLDW) for feature selection. The average accuracy of four-class emotion recognition reached over 76%. Their study also opens other issues that can be considered in the future, such as the optimization of parameter settings.

The research analysis shows that there are many obstacles to overcome in EEG data analysis. In our study, we focus on the EEG electrode selection problem applied to emotion recognition.

3. Materials and Methods

3.1. The Method Overview

The considered method for indicating the EEG electrodes’ number and locations consists of two main steps—selecting bands and electrodes. They are preceded by one initial step, which aims at adjusting the original dataset to analysis needs, and one final step for evaluation. Therefore, the proposed steps can be presented as follows:

- EEG data preprocessing, based on the initial signal’s downsampling and applying the bandpass filtering,

- selecting bands of frequencies based on calculating average frequencies for each second of a trial,

- selecting electrodes based on a statistical analysis of correlation coefficients,

- evaluation of emotions’ classification results in terms of valence and arousal.

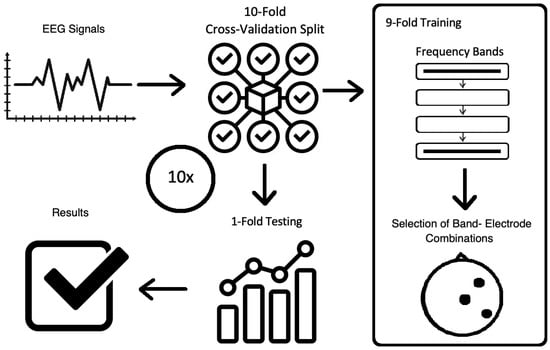

The general overview of the method is shown in Figure 2. The description of the EEG data, as well as the main steps of the methodology, are presented in Section 3.2, Section 3.3, Section 3.4 and Section 3.5.

Figure 2.

The overview of the methodology.

3.2. EEG Dataset

The experimental study was carried out on the reference DEAP dataset [10], created by researchers at Queen Mary University of London. It is among the most frequently used open-source datasets in research studies. It contains multiple physiological signals with the psychological evaluation. In the experiment, 32 healthy subjects took part, aged between 19 and 37 (50% female, 50% male). Each subject watched 40 one-minute music videos. The process of conducting the experiment for one subject consisted of the following steps:

- displaying number of the next trial (2 s),

- baseline recording (3 s),

- displaying one video (60 s),

- rating four emotions (valence, arousal, dominance, liking) by each user.

The data come from 32 electrodes placed on the scalp and 13 physiological electrodes on the examined person’s fingers and face. The electrodes recorded bioelectrical signals while the subjects were watching 40 music video trials with different psychological tendencies.

The electrodes pick up values from the four main parts of the brain:

- the frontal lobe, which is responsible for thinking, memory, evaluation of emotions and situations,

- the parietal lobe, located just behind the frontal part and responsible for movement, recognition, a sensation of temperature, touch, and pain,

- the occipital lobe, responsible for seeing and analyzing colors and shapes, and

- the temporal lobe, located in the lateral parts, and responsible for speech and recognition of objects.

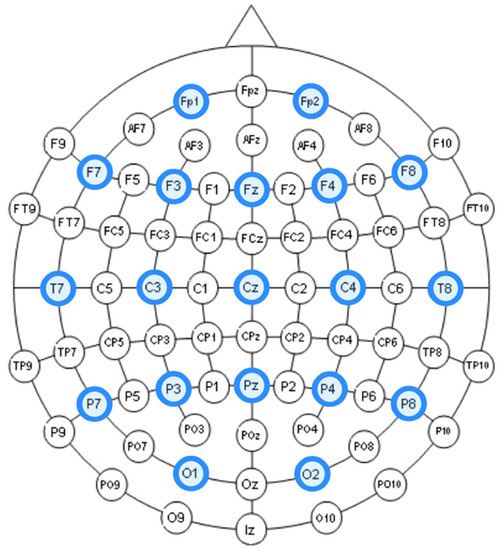

The entire distribution of channels is shown in Figure 3.

Figure 3.

Names of channels.

We applied the initial 32-channel EEG signals in the DEAP dataset in our experiments, preprocessed by downsampling to 128 Hz and segmented into a 60 s trial and a 3 s pre-trial baseline. The artifacts of eye movement (EOG) were removed using a blind source separation technique [10].
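The segmentation described above can be illustrated with a minimal sketch. It assumes that each recording is held as a (channels, samples) array and that the 3 s baseline precedes the 60 s trial; both the ordering and the helper name `split_baseline_trial` are assumptions made for illustration, and the data below are synthetic.

```python
import numpy as np

FS = 128          # sampling rate after downsampling (Hz)
BASELINE_S = 3    # pre-trial baseline length (s)
TRIAL_S = 60      # video length (s)


def split_baseline_trial(recording: np.ndarray):
    """Split a (channels, samples) recording into baseline and trial parts."""
    expected = (BASELINE_S + TRIAL_S) * FS
    if recording.shape[-1] != expected:
        raise ValueError(f"expected {expected} samples, got {recording.shape[-1]}")
    # Assumption: the baseline comes first within each recording.
    baseline = recording[..., : BASELINE_S * FS]
    trial = recording[..., BASELINE_S * FS:]
    return baseline, trial


# Synthetic stand-in for one 32-channel recording (not real DEAP data).
rng = np.random.default_rng(0)
recording = rng.standard_normal((32, (BASELINE_S + TRIAL_S) * FS))
baseline, trial = split_baseline_trial(recording)
```

With these settings, the baseline holds 3 × 128 = 384 samples per channel and the trial holds 60 × 128 = 7680 samples per channel.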

Four psychophysical states were assessed (valence, arousal, dominance, liking) while viewing 1 min movies in the DEAP dataset. Each video lasted 60 s, and 3 s were reserved for preparing the person for the next trial. Each movie was rated on a scale of 1–9, where the lowest values indicate a negative emotion and higher values indicate a strong, positive emotion.

Our research focused on the two most objective feelings—valence and arousal. The valence reflects what the person feels while viewing the film (happy or sad), while arousal demonstrates the degree of the impression the film makes (calm, enthusiasm). The evaluation between 1 and 9 was transformed into a binary scale:

- 0 suggests low valence/arousal, less than 4.5 points, and

- 1 for high valence/arousal, more than 4.5 points.

A score of 4.5 points was designated as a threshold.
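The binarization rule above can be sketched as follows. The function name `binarize_rating` is hypothetical, and the handling of a rating exactly equal to 4.5 is an assumption, since the rule only specifies values below and above the threshold.

```python
def binarize_rating(rating: float, threshold: float = 4.5) -> int:
    """Map a 1-9 self-assessment rating to 0 (low) or 1 (high)."""
    if not 1.0 <= rating <= 9.0:
        raise ValueError(f"rating must lie in [1, 9], got {rating}")
    # Assumption: a rating exactly at the threshold is counted as low (0).
    return 1 if rating > threshold else 0


ratings = [1.0, 4.4, 4.5, 4.6, 9.0]
labels = [binarize_rating(r) for r in ratings]
```

The same function applies to both valence and arousal ratings, as each is thresholded independently.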

3.3. Band Selection

There are five widely recognized brain bands: delta ($\delta$), theta ($\theta$), alpha ($\alpha$), beta ($\beta$), and gamma ($\gamma$). The main frequencies of human EEG waves, along with their characteristics, are listed in Table 1 [54,55].

Table 1.

Five Bands for Brain Waves.

For valence and arousal classification, the delta band can be excluded from analysis.

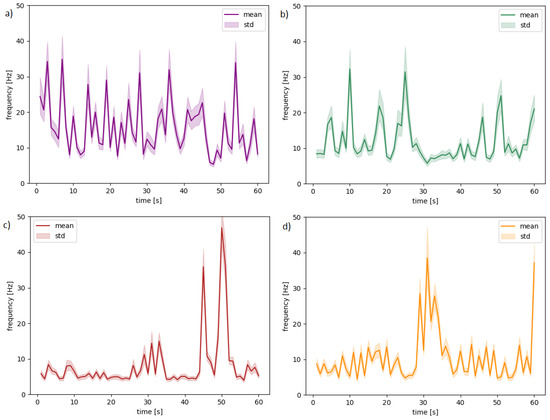

We propose choosing the commonly used mean statistics for every second of the recordings. The sample signal waveforms for four randomly selected subjects, videos, and channels are presented in Figure 4 to show the variability of the frequency values while watching a video. The solid lines in charts visualize the means, and light areas show standard deviation (variability between frequencies). Table 2 presents descriptions for each chart.

Figure 4.

The waveforms for random subjects and different channels: (a) 11-T7 (b) 11-CP1, (c) 30-F7 (d) 30-CP5.

Table 2.

Channel allocation distribution to Figure 4.

As a result, after preprocessing our data, we obtained 128 band-channel combinations using a frequency branch—32 electrodes × 4 bands.
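To make the 32 × 4 = 128 band-channel layout concrete, the sketch below computes one value per second for every band-channel combination. It is an illustration under stated assumptions, not the exact procedure used in the study: the band limits in `BANDS` are commonly used values chosen here for illustration (they are not taken from Table 1), and the per-second statistic is the mean FFT power within each band, which stands in for the mean statistics described above.

```python
import numpy as np

FS = 128
# Assumed band limits in Hz (illustrative, not taken from Table 1).
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def band_channel_features(trial: np.ndarray, fs: int = FS) -> np.ndarray:
    """Return an array of shape (channels * bands, seconds).

    Each value is the mean spectral power of one channel in one band
    within a one-second window.
    """
    n_channels, n_samples = trial.shape
    n_seconds = n_samples // fs
    windows = trial[:, : n_seconds * fs].reshape(n_channels, n_seconds, fs)
    power = np.abs(np.fft.rfft(windows, axis=-1)) ** 2   # (channels, seconds, freqs)
    freqs = np.fft.rfftfreq(fs, d=1.0 / fs)              # 0, 1, ..., fs/2 Hz
    per_band = []
    for low, high in BANDS.values():
        mask = (freqs >= low) & (freqs < high)
        per_band.append(power[..., mask].mean(axis=-1))  # (channels, seconds)
    # Stack to (channels, bands, seconds), then flatten channel-band pairs,
    # so that row index = channel * number_of_bands + band.
    stacked = np.stack(per_band, axis=1)
    return stacked.reshape(n_channels * len(BANDS), n_seconds)


# Synthetic 10 Hz sine on all 32 channels: power should fall in the alpha band.
t = np.arange(60 * FS) / FS
trial = np.tile(np.sin(2 * np.pi * 10 * t), (32, 1))
features = band_channel_features(trial)
```

For a 60 s trial, the result has 128 rows (band-channel combinations) and 60 columns (seconds).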

3.4. Automated Feature Selection for Band-Electrode Combinations

Feature selection algorithms reduce computation time, improve classification accuracy, and identify the most appropriate channels for a specific application or task. As noted in Section 2, channel selection may be crucial. Hence, researchers have developed new techniques for selecting the optimal number and location of electrodes.

To address redundancy, one of the main issues in EEG classification, we suggest using the Reversed Correlation Algorithm (RCA). The algorithm uses correlation coefficients, but in reverse order: the RCA suggests features that are the least connected with all their predecessors. For that reason, the RCA may be beneficial in reducing the redundancy of EEG signals.

The algorithm was proposed by Wosiak and Zakrzewska in [24]. The entire process is explained in Algorithm 1.

| Algorithm 1. Reversed Correlation Algorithm | |
|---|---|
| 1: | function RCA($N$) ▹ $N$ is the desired number of channels in the $R$ subset |
| 2: | $Ch$ ▹ set of all channels (features) |
| 3: | $R_1 \leftarrow$ take the first channel with the min correlation |
| 4: | while length of $R$ < $N$ do |
| 5: | while $j$ < length of $Ch$ do |
| 6: | while $k$ < length of $R$ do |
| 7: | compute correlation between channels $Ch_j$ and $R_k$ |
| 8: | $sum \leftarrow sum$ + correlation |
| 9: | $k \leftarrow k + 1$ |
| 10: | end while |
| 11: | $avg_j \leftarrow sum$ / length of $R$ |
| 12: | $sum \leftarrow 0$ |
| 13: | $j \leftarrow j + 1$ |
| 14: | end while |
| 15: | choose channel with the lowest sum of value |
| 16: | add the chosen channel to $R$ |
| 17: | end while |
| 18: | return $R$ ▹ selected subset of channels |
| 19: | end function |

The main goal is to find the channels that are the least correlated with each other. Every channel is divided into four main frequency bands: theta, alpha, beta, and gamma. Therefore, the input consists of 128 features (32 channels × 4 frequencies), which form the $Ch$ subset. The first step of the RCA is to enter the number of desired channels, $N$. Then, the band-channel with the lowest average correlation to the rest of the channels is determined, and its number is saved to the $R$ subset (as $R_1$). Next, the loop starts, and we look for further band-channels until $N$ of them have been obtained. The second band-channel is the one with the lowest correlation to the first attribute from the $R$ subset and is saved as $R_2$. Another loop designates each next channel, wherein:

- correlation with each band-channel from the $R$ subset ($R_1$, $R_2$, etc.) is calculated for each band-channel from the $Ch$ subset, and

- the values are summed.

The inner loop ends when all channels in the $R$ subset have been visited. In the next step, the sum is divided by the length of the $R$ list. The sum is then reset to 0, and we proceed to the next band-channel. The operation is repeated for every band-channel from the $Ch$ subset. The lowest value is taken, and the number of that channel is saved as the next channel in the $R$ subset. The algorithm ends when the length of the $R$ subset is equal to the input number $N$.
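The selection procedure described above can be sketched in a few lines of Python. This is an illustrative reading of the description, not the reference implementation from [24]. It assumes that the data are arranged as an array `X` with one row per observation and one column per band-channel, that correlation means the Pearson coefficient, and that its absolute value is used as the measure of connection (the description does not state whether signed or absolute values are compared, so this is exposed as the `use_abs` flag). The name `rca_select` is hypothetical.

```python
import numpy as np


def rca_select(X: np.ndarray, n_select: int, use_abs: bool = True) -> list[int]:
    """Greedily pick n_select columns of X that are least correlated with each other."""
    corr = np.corrcoef(X, rowvar=False)          # (features, features) Pearson matrix
    if use_abs:
        corr = np.abs(corr)                      # assumption: strength, not sign, matters
    n_features = corr.shape[0]
    if n_features < 2 or not 1 <= n_select <= n_features:
        raise ValueError("need at least two features and 1 <= n_select <= n_features")

    # First feature: lowest average correlation with all other features.
    mean_to_rest = (corr.sum(axis=1) - np.diag(corr)) / (n_features - 1)
    selected = [int(np.argmin(mean_to_rest))]

    while len(selected) < n_select:
        remaining = [j for j in range(n_features) if j not in selected]
        # Average correlation of each candidate with the already selected features.
        scores = corr[np.ix_(remaining, selected)].mean(axis=1)
        selected.append(remaining[int(np.argmin(scores))])
    return selected


# Synthetic example: columns 0 and 1 are near-duplicates, columns 2 and 3 are independent.
rng = np.random.default_rng(42)
a, b, c = (rng.standard_normal(500) for _ in range(3))
X = np.column_stack([a, a + 0.01 * rng.standard_normal(500), b, c])
picked = rca_select(X, 3)
```

In this toy example the two near-duplicate columns should not both be selected, which is the redundancy-reducing behavior the algorithm is meant to provide. Constant columns would produce undefined correlations and would need to be removed beforehand.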

We set the number of channels selected to three, according to other studies and data limitations. In order to compare the results of our channel selection by the RCA algorithm, we applied three other methods of electrode selection:

- based on mean correlations of the valence and arousal in all participants, as described by Koelstra et al. in [10],

- based on local subset feature selection and modified Dempster–Shafer theory as proposed by Soroush, Maghooli et al. in [23],

- based on three central electrodes, as described in [42].

3.5. Evaluation Criteria

Selecting an optimum subset of EEG channels requires evaluation criteria. For emotion classification, classifier-based measures may be used. A classifier is responsible for finding the separability measure for different classes described by labels, and a channel is selected when the classifier performs well. The Support Vector Machine (SVM) is among the most fundamental and popular classification methods. It was proposed by Cortes and Vapnik [56] and is based upon the principle of structural risk minimization. The SVM uses kernel functions to map datasets that are difficult to separate linearly into a high-dimensional space, where it constructs a hyperplane that maximizes the margin between classes. Scientific studies reveal that the SVM yields better classification accuracy than other algorithms for feature vectors obtained from EEG signals [57,58]. A Gaussian radial basis function (RBF) kernel was used to enhance the data separability [55,59,60].
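For illustration, the Gaussian RBF kernel mentioned above assigns to each pair of feature vectors the value exp(−γ‖x − y‖²). The sketch below computes this kernel matrix with NumPy; the function name `rbf_kernel_matrix` and the value of `gamma` are assumptions made for the example, as the kernel parameters are not specified here.

```python
import numpy as np


def rbf_kernel_matrix(A, B, gamma: float) -> np.ndarray:
    """Return K with K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    sq_dists = (
        np.sum(A ** 2, axis=1)[:, None]
        + np.sum(B ** 2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    np.maximum(sq_dists, 0.0, out=sq_dists)   # guard against tiny negative round-off
    return np.exp(-gamma * sq_dists)


A = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
K = rbf_kernel_matrix(A, A, gamma=0.5)
```

Identical vectors receive a kernel value of 1, and the value decays toward 0 as the vectors move apart, which is what allows the SVM to separate classes that are not linearly separable in the original feature space.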

Classification values obtained for the selected subset of sensors were compared in terms of statistical significance. By significant differences, we assume p value .

4. Results and Discussion

The purpose of the experiments was to indicate a subset of EEG channels, which reduces the complexity of the analysis and signal redundancy and positively influences emotion classification.

The experiments were conducted according to the methods introduced in Section 3 on the dataset described in Section 3.2. Only valence and arousal were analyzed. Three steps of experiments were performed:

- The experimental procedure of emotions’ classification that incorporated all 32 EEG electrodes to determine the possibilities of automatic feature selection methods in EEG signal extraction in terms of emotion recognition—the baseline for subsequent procedures.

- The experimental procedure of emotion classification that uses the proposed RCA algorithm for EEG channel selection.

- The experimental procedure of emotion classification that uses channels recommended in the literature: Koelstra et al. [10], Soroush et al. [23], and Frantzidis et al. [42].

The procedures were conducted with 10-fold cross-validation. First, all data are divided into ten subsets, which consist of independent trials. Each trial consisted of mean statistics for every second of a one-minute recording, in line with other relevant studies [10,23,42,61,62]. One of these subsets is selected as test data, and the others are combined and used for training. The process is repeated ten times and returns the average accuracy and standard deviation. Both training and testing sets were formed by an inter-user approach, i.e., pooling all subjects’ EEG data and shuffling it. The RCA algorithm is applied to the training set, and the selected features are then used for the testing set. The process for training and testing data is shown in Figure 2. The experimental environment was based on the Python programming language and its libraries.
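The cross-validation protocol described above, in which feature selection is performed on the training part of each fold only, can be sketched as follows. The helper names `kfold_indices` and `cross_validate`, the array shapes, and the toy selector and classifier are all hypothetical stand-ins used for illustration; in the study itself these roles are played by the RCA and the SVM.

```python
import numpy as np


def kfold_indices(n_trials: int, n_folds: int = 10, seed: int = 0):
    """Yield (train_idx, test_idx) pairs over shuffled trial indices."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_trials), n_folds)
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train_idx, test_idx


def cross_validate(X, y, select, fit_predict, n_folds: int = 10, seed: int = 0):
    """Return the mean and standard deviation of per-fold accuracy.

    select(X_train) -> column indices chosen on the training fold only.
    fit_predict(X_train, y_train, X_test) -> predicted labels for X_test.
    """
    accuracies = []
    for train_idx, test_idx in kfold_indices(len(y), n_folds, seed):
        cols = select(X[train_idx])               # the test fold is never used here
        y_pred = fit_predict(X[train_idx][:, cols], y[train_idx], X[test_idx][:, cols])
        accuracies.append(np.mean(y_pred == y[test_idx]))
    return float(np.mean(accuracies)), float(np.std(accuracies))


# Toy usage on synthetic data: 32 subjects x 40 trials, 128 band-channel features.
rng = np.random.default_rng(1)
X = rng.standard_normal((1280, 128))
y = (X[:, 0] > 0).astype(int)                     # synthetic labels

def first_three(X_train):                         # stand-in for a channel selector
    return [0, 1, 2]

def sign_rule(X_tr, y_tr, X_te):                  # stand-in for a trained classifier
    return (X_te[:, 0] > 0).astype(int)

mean_acc, std_acc = cross_validate(X, y, first_three, sign_rule)
```

Keeping the selection step inside the loop ensures that the test fold does not influence which band-channel combinations are chosen.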

The results of the experiments were gathered in Table 3 and Table 4. Table 3 presents the results of channel selection, where each row represents a particular feature selection approach. The fourth column contains the names of selected channels along with bands of frequencies, and the last column illustrates the electrodes’ locations.

Table 3.

Channel selection.

Table 4.

Results of classification accuracy [in %].

It can be seen in Table 3 that several methods, including the proposed RCA, selected the Cz electrode, which is consistent with the perspective of psychology. The study described in [63] emphasized the strong activity of the Cz electrode associated with emotional states. We can also notice that apart from the central region, most of the methods (i.e., CHS_RCA, CHS1, CHS2, CHS3, CHS4, CHS5) favor the right part of the brain. It can be justified by two psychological factors, namely:

- the studies show that higher left-brain activity is related to a positive emotional state, while higher right-brain activity reflects a negative emotional state [41],

- negative emotions are generally believed to elicit more reactivity [39,40,64].

The analysis of frequency bands selected by the methods presented in Table 3 revealed that CHS3, CHS4 and CHS6 focused on the Beta band, whereas CHS7 included only the Theta band. Our proposed method CHS_RCA distinguished different bands. These findings can be useful in emotion differentiation, as according to the studies [65,66,67]:

- the lateral temporal areas are mostly stimulated by positive emotions in beta and gamma bands,

- the neural patterns of neutral emotions have higher alpha responses at parietal and occipital sites,

- the neural patterns have significantly higher delta responses at parietal and occipital sites and higher gamma responses at prefrontal sites for negative emotions.

Table 4 describes classification results for RCA channel selection compared to classification based on all electrodes and three other solutions, as introduced in Section 3.4. It is worth mentioning that some of the channel selection methods were related to a particular emotion, and therefore, some of the corresponding cells in Table 4 may be left blank (—). The third and the sixth column of Table 4 highlight the difference between classification accuracy attained by the subset of channels pointed by the proposed RCA algorithm and the corresponding channel selection method.

One can notice that the RCA outperformed other channel selection methods in terms of valence and arousal classification. Moreover, most of the differences in favor of the RCA were statistically significant (p value ). The RCA algorithm returned worse results in only three out of twelve comparisons, and none of those differences was statistically significant. What is more, the CH2 method (three channels proposed by Koelstra et al. in [10]) was adjusted to only one type of classified emotion, whereas our method can deal with different types of emotions.

It is also worth emphasizing that our RCA algorithm is an unsupervised machine learning technique, which is an advantage in terms of uncertain or unlabeled data. The variability in emotion expression and the subjectivity of emotion perception may lead to datasets with missing or uncertain labels. Many attempts have been undertaken to solve this problem, with the application of unsupervised methods of machine learning being among the most popular [68,69].

It can also be noted that the attained emotion classification results may be perceived as inferior in comparison to works related to EEG signal analysis in other domains [46]. However, it must be stressed that efficient extraction of features for emotion classification is more complex, as emotions are less predictable and less explainable by EEG signals [70].

To conclude, the presented results of the study are very encouraging. However, in the field of emotion classification and EEG analysis, there is still abundant room for further analysis. Firstly, we performed our experiments on only one dataset. Although the DEAP dataset is a primary source for studies related to emotion recognition [10,23,55,60,71], multiple datasets [72,73] will be used in further research to confirm the significance of the proposed method. Secondly, deeper insight into signal decomposition methods should be considered, as they play an important role in BCI applications [74,75,76,77]. Our approach involved traditional signal denoising [10]. However, other denoising methods are worth considering, as studies on multiscale principal component analysis (MSPCA) reported very good classification accuracy for motor imagery applications [78]. Existing research has also confirmed that combining signal decomposition with dimensionality reduction techniques and neural networks provides good accuracy for subject-dependent and subject-independent motor imagery-based systems [44] and for emotion recognition solutions [79]. Therefore, future research may also explore neural networks and deep learning approaches. Moreover, data augmentation by sliding windows will be considered, as it is increasingly used with deep learning on EEG [80].
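The sliding-window augmentation mentioned above can be sketched as follows. This is a minimal illustration that assumes a single-channel signal stored as a plain sequence of samples; the function name `sliding_windows` and the window and step lengths are hypothetical choices, not parameters from this study.

```python
def sliding_windows(signal, window_size, step):
    """Split a 1-D sequence of samples into overlapping fixed-length windows."""
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    last_start = len(signal) - window_size
    # A signal shorter than one window yields no segments.
    return [
        signal[start:start + window_size]
        for start in range(0, last_start + 1, step)
    ]


# Toy signal of 10 samples, windows of 4 samples shifted by 2 samples.
samples = list(range(10))
segments = sliding_windows(samples, window_size=4, step=2)
print(len(segments), segments[-1])
```

Each window would inherit the label of the trial it was cut from, so a step smaller than the window length multiplies the number of training examples at the cost of strongly overlapping, and therefore correlated, samples.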

5. Conclusions

The proposed research addresses the problem of finding correlations between human emotions and the activated brain regions based on EEG signals. Optimal EEG channel selection and location is a challenging task due to the interconnections between electrodes. The proposed hybrid method incorporating the RCA algorithm finds combinations of electrodes and their frequency bands that are least correlated with one another, and therefore enables redundancy reduction and improved classification. The obtained classification accuracy results for two emotions, valence and arousal, were, on average, 4.20–8.44% greater than those of channel selections suggested in other works.
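The idea of keeping the least mutually correlated band and electrode combinations can be illustrated with a simple greedy procedure. The sketch below is not the authors' RCA implementation: the greedy rule, the function names `pearson` and `select_least_correlated`, and the feature names and values are assumptions chosen only to show the principle.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("constant features have no defined correlation")
    return cov / sqrt(var_x * var_y)


def select_least_correlated(features, k):
    """Greedily pick k feature names with low mutual absolute correlation."""
    names = list(features)
    if not 0 < k <= len(names):
        raise ValueError("k must be between 1 and the number of features")
    corr = {
        (a, b): abs(pearson(features[a], features[b]))
        for a in names for b in names if a != b
    }
    # Seed with the feature least correlated with all the others in total.
    first = min(names, key=lambda a: sum(corr[(a, b)] for b in names if b != a))
    selected = [first]
    while len(selected) < k:
        remaining = [n for n in names if n not in selected]
        # Add the candidate least correlated with the features chosen so far.
        best = min(remaining, key=lambda c: sum(corr[(c, s)] for s in selected))
        selected.append(best)
    return selected


# Hypothetical band-electrode features (one value per trial).
toy_features = {
    "F3_alpha": [1.0, 2.0, 3.0, 4.0, 5.0],
    "F4_alpha": [2.0, 4.0, 6.0, 8.0, 10.0],
    "T7_beta": [1.0, -1.0, 1.0, -1.0, 1.0],
    "P3_theta": [5.0, 3.0, 4.0, 1.0, 2.0],
}
chosen = select_least_correlated(toy_features, k=2)
print(chosen)
```

Because the selection relies only on correlations between features and never on class labels, a procedure of this kind remains applicable to unlabeled data.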

Our method based on the Reversed Correlation Algorithm is simple to use. At the same time, it significantly reduces the amount of data needed for the analysis. As an unsupervised machine learning technique, it can also be used for unlabeled data or datasets where the obtained labels are uncertain.

Nonetheless, there is still a need for additional study. Therefore, further research is planned to investigate multiple datasets. Moreover, deeper insight into denoising methods and into decomposition in the frequency domain and the time-frequency domain is planned. Future research may also explore neural networks and deep learning approaches to find unknown attribute correlations.

Author Contributions

Conceptualization, A.W. and A.D.; methodology, A.W.; software, A.D.; validation, A.W. and A.D.; formal analysis, A.W.; investigation, A.W. and A.D.; data curation, A.D.; writing—original draft preparation, A.W.; writing—review and editing, A.W. and A.D.; visualization, A.W. and A.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Cai, H.; Chen, Y.; Han, J.; Zhang, X.; Hu, B. Study on feature selection methods for depression detection using three-electrode EEG data. Interdiscip. Sci. Comput. Life Sci. 2018, 10, 558–565.

2. Hwang, H.J.; Kim, S.; Choi, S.; Im, C.H. EEG-based brain-computer interfaces: A thorough literature survey. Int. J. Hum. Comput. Interact. 2013, 29, 814–826.

3. Padfield, N.; Zabalza, J.; Zhao, H.; Masero, V.; Ren, J. EEG-based brain-computer interfaces using motor-imagery: Techniques and challenges. Sensors 2019, 19, 1423.

4. Nesse, R.M.; Ellsworth, P.C. Evolution, emotions, and emotional disorders. Am. Psychol. 2009, 64, 129–139.

5. Berry, D.S.; Pennebaker, J.W. Nonverbal and Verbal Emotional Expression and Health. Psychother. Psychosom. 1993, 59, 11–19.

6. Jacob, H.; Kreifelts, B.; Brück, C.; Erb, M.; Hösl, F.; Wildgruber, D. Cerebral integration of verbal and nonverbal emotional cues: Impact of individual nonverbal dominance. NeuroImage 2012, 61, 738–747.

7. Stough, C.; Saklofske, D.H.; Parker, J.D.A. Assessing Emotional Intelligence. Theory, Research, and Applications. In The Springer Series on Human Exceptionality; Springer: Berlin/Heidelberg, Germany, 2009.

8. Petrantonakis, P.; Hadjileontiadis, L. Emotion Recognition from EEG Using Higher Order Crossings. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 186–197.

9. Masruroh, A.H.; Imah, E.M.; Rahmawati, E. Classification of Emotional State Based on EEG Signal using AMGLVQ. Procedia Comput. Sci. 2019, 157, 552–559.

10. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

11. Shu, L.; Xie, J.; Yang, M.; Li, Z.; Liao, D.; Xu, X.; Yang, X. A Review of Emotion Recognition Using Physiological Signals. Sensors 2018, 18, 2074.

12. Maswanganyi, C.; Owolawi, C.; Tu, P.; Du, S. Overview of Artifacts Detection and Elimination Methods for BCI Using EEG. In Proceedings of the 3rd IEEE International Conference on Image, Vision and Computing, Chongqing, China, 27–29 June 2018.

13. Issa, M.F.; Juhasz, Z. Improved EOG Artifact Removal Using Wavelet Enhanced Independent Component Analysis. Brain Sci. 2019, 9, 355.

14. Kong, W.; Zhou, Z.; Hu, S.; Zhang, J.; Babiloni, F.; Dai, G. Automatic and Direct Identification of Blink Components from Scalp EEG. Sensors 2013, 13, 10783–10801.

15. Dora, C.; Biswal, P.K. Correlation-based ECG Artifact Correction from Single Channel EEG using Modified Variational Mode Decomposition. Comput. Methods Programs Biomed. 2020, 183, 105092.

16. Dora, C.; Patro, R.N.; Rout, S.K.; Biswal, P.K.; Biswal, B. Adaptive SSA Based Muscle Artifact Removal from Single Channel EEG using Neural Network Regressor. IRBM 2020.

17. Lahiri, R.; Rakshit, P.; Konar, A. Evolutionary perspective for optimal selection of EEG electrodes and features. Biomed. Signal Process. Control 2017, 36, 113–137.

18. Datta, S.; Rakshit, P.; Konar, A.; Nagar, A.K. Selecting the optimal EEG electrode positions for a cognitive task using an artificial bee colony with adaptive scale factor optimization algorithm. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 2748–2755.

19. Baig, M.Z.; Aslam, N.; Shum, H.P. Filtering techniques for channel selection in motor imagery EEG applications: A survey. Artif. Intell. Rev. 2020, 53, 1207–1232.

20. Nakisa, B.; Rastgoo, M.N.; Tjondronegoro, D.; Chandran, V. Evolutionary computation algorithms for feature selection of EEG-based emotion recognition using mobile sensors. Expert Syst. Appl. 2018, 93, 143–155.

21. Wang, X.W.; Nie, D.; Lu, B.L. EEG-based emotion recognition using frequency domain features and support vector machines. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2011; pp. 734–743.

22. Liu, A.; Chen, K.; Liu, Q.; Ai, Q.; Xie, Y.; Chen, A. Feature Selection for Motor Imagery EEG Classification Based on Firefly Algorithm and Learning Automata. Sensors 2017, 17, 2576.

23. Soroush, M.Z.; Maghooli, K.; Setarehdan, S.K.; Nasrabadi, A.M. A novel approach to emotion recognition using local subset feature selection and modified Dempster-Shafer theory. Behav. Brain Funct. 2018, 14, 17.

24. Wosiak, A.; Zakrzewska, D. Integrating Correlation-Based Feature Selection and Clustering for Improved Cardiovascular Disease Diagnosis. Complexity 2018, 2018, 250706.

25. Ekman, P.; Friesen, W.V.; O’Sullivan, M.; Chan, A.; Diacoyanni-Tarlatzis, I.; Heider, K.; Krause, R.; LeCompte, W.A.; Pitcairn, T.; Ricci-Bitti, P.E. Universals and cultural differences in the judgments of facial expressions of emotion. J. Personal. Soc. Psychol. 1987, 53, 712–717.

26. Parrott, W.G. Emotions in Social Psychology: Essential Readings; Psychology Press: Hove, UK, 2001.

27. Plutchik, R. The Nature of Emotions: Human emotions have deep evolutionary roots, a fact that may explain their complexity and provide tools for clinical practice. Am. Sci. 2001, 89, 344–350.

28. Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

29. Kołakowska, A.; Szwoch, W.; Szwoch, M. A review of emotion recognition methods based on data acquired via smartphone sensors. Sensors 2020, 20, 6367.

30. Marin-Morales, J.; Llinares, C.; Guixeres, J.; Alcañiz, M. Emotion recognition in immersive virtual reality: From statistics to affective computing. Sensors 2020, 20, 5163.

31. Raims, S.; Buades, J.M.; Perales, F.J. Using a social robot to Evaluate facial expressions in the wild. Sensors 2020, 20, 6716.

32. Cruz-Albarran, I.A.; Benitez-Rangel, J.P.; Osornio-Rios, R.A.; Morales-Hernandez, L.A. Human emotions detection based on a smart-thermal system of thermographic images. Infrared Phys. Technol. 2017, 81, 250–261.

33. Goulart, C.; Valadão, C.; Delisle-Rodriguez, D.; Funayama, D.; Favarato, A.; Baldo, G.; Binotte, V.; Caldeira, E.; Bastos-Filho, T. Visual and thermal image processing for facial specific landmark detection to infer emotions in a child-robot interaction. Sensors 2019, 19, 2844.

34. Chaitanya; Sarath, S.; Malavika; Prasanna; Karthik. Human Emotions Recognition from Thermal Images Using Yolo Algorithm. In Proceedings of the IEEE International Conference on Communication and Signal Processing, Chennai, India, 28–30 July 2020.

35. Ko, Y.; Hong, I.; Shin, H.; Kim, Y. Construction of a database of emotional speech using emotion sounds from movies and dramas. In Proceedings of the KICS-IEEE International Conference on Information and Communications with Samsung LTE & 5G Special Workshop, Hanoi, Vietnam, 26–28 June 2017; pp. 266–267.

36. Zhu, L.; Chen, L.C.; Zhao, D.; Zhou, J.; Zhang, W. Emotion Recognition from Chinese Speech for Smart Affective Services Using a Combination of SVM and DBN. Sensors 2017, 17, 1694.

37. Farooq, M.; Hussain, F.; Baloch, N.K.; Raja, F.R.; Yu, H.; Zikria, Y.B. Impact of Feature Selection Algorithm on Speech Emotion Recognition Using Deep Convolutional Neural Network. Sensors 2020, 20, 6008.

38. Chen, J.X.; Zhang, P.W.; Mao, Z.J.; Huang, Y.F.; Jiang, D.M.; Zhang, Y.N. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks. IEEE Access 2019, 7, 44317–44328.

39. Cacioppo, J.T.; Berntson, G.G.; Larsen, J.T.; Pohlmann, K.M.; Ito, T.A. The psychophysiology of emotion. In Handbook of Emotions, 2nd ed.; Guilford Press: New York, NY, USA, 2000; pp. 173–191.

40. Ekman, P.; Levenson, R.W.; Friesen, W.V. Autonomic nervous system activity distinguishes among emotions. Science 1983, 221, 1208–1210.

41. Coan, J.A.; Allen, J.J.B. Frontal EEG asymmetry as a moderator and mediator of emotion. Biol. Psychol. 2004, 67, 7–50.

42. Frantzidis, C.A.; Bratsas, C.; Papadelis, C.L.; Konstantinidis, E.; Pappas, C.; Bamidis, P.D. Toward emotion aware computing: An integrated approach using multichannel neurophysiological recordings and affective visual stimuli. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 589–597.

43. Tong, L.; Zhao, J.; Wenli, F. Emotion recognition and channel selection based on EEG Signal. In Proceedings of the 11th International Conference on Intelligent Computation Technology and Automation, Changsha, China, 22–23 September 2018; pp. 101–105.

44. Sadiq, M.T.; Yu, X.; Yuan, Z. Exploiting dimensionality reduction and neural network techniques for the development of expert brain–computer interfaces. Expert Syst. Appl. 2020, 164, 114031.

45. Garcia-Moreno, F.M.; Bermudez-Edo, M.; Garrido, J.L.; Rodriguez-Fortiz, M.J. Reducing Response Time in Motor Imagery Using A Headband and Deep Learning. Sensors 2020, 20, 6730.

46. Sadiq, M.T.; Yu, X.; Yuan, Z.; Zeming, F.; Rehman, A.U.; Ullah, I.; Li, G.; Xiao, G. Motor Imagery EEG Signals Decoding by Multivariate Empirical Wavelet Transform-Based Framework for Robust Brain–Computer Interfaces. IEEE Access 2019, 7, 171431–171451.

47. Kee, C.Y.; Ponnambalam, S.G.; Loo, C.K. Binary and multi-class motor imagery using Renyi entropy for feature extraction. Neural Comput. Appl. 2017, 28, 2051–2062.

48. Shin, Y.; Lee, S.; Ahn, M.; Cho, H.; Jun, S.C.; Lee, H.N. Simple adaptive sparse representation based classification schemes for EEG based brain–computer interface applications. Comput. Biol. Med. 2015, 66, 29–38.

49. Wang, G.; Deng, Z.; Choi, K.S. Detection of epilepsy with Electroencephalogram using rule-based classifiers. Neurocomputing 2017, 228, 283–290.

50. Gu, Y.; Cleeren, E.; Dan, J.; Claes, K.; Paesschen, W.V.; Huffel, S.V.; Hunyadi, B. Comparison between Scalp EEG and Behind-the-Ear EEG for Development of a Wearable Seizure Detection System for Patients with Focal Epilepsy. Sensors 2017, 18, 29.

51. Wang, L.; Xue, W.; Li, Y.; Luo, M.; Huang, J.; Cui, W.; Huang, C. Automatic Epileptic Seizure Detection in EEG Signals Using Multi-Domain Feature Extraction and Nonlinear Analysis. Entropy 2017, 19, 222.

52. Molla, M.K.I.; Hassan, K.M.; Islam, M.R.; Tanaka, T. Graph Eigen Decomposition-Based Feature-Selection Method for Epileptic Seizure Detection Using Electroencephalography. Sensors 2020, 20, 4639.

53. Li, Z.; Qiu, L.; Li, R.; He, Z.; Xiao, J.; Liang, Y.; Wang, F.; Pan, J. Enhancing BCI-Based emotion recognition using an improved particle swarm optimization for feature selection. Sensors 2020, 20, 3028.

54. Ko, L.-W.; Chikara, R.K.; Lee, Y.-C.; Lin, W.-C. Exploration of User’s Mental State Changes during Performing Brain–Computer Interface. Sensors 2020, 20, 3169.

55. Pan, C.; Shi, C.; Mu, H.; Li, J.; Gao, X. EEG-Based Emotion Recognition Using Logistic Regression with Gaussian Kernel and Laplacian Prior and Investigation of Critical Frequency Bands. Appl. Sci. 2020, 10, 1619.

56. Cortes, C.; Vapnik, V. Support vector machine. Mach. Learn. 1995, 20, 273–297.

57. Arslan, M.T.; Eraldemir, S.G.; Yildirim, E. Channel selection from EEG signals and application of support vector machine on EEG data. In Proceedings of the IEEE International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 1–17 September 2017; pp. 1–4.

58. Liu, S.; Tong, J.; Meng, J.; Yang, J.; Zhao, X.; He, F.; Qi, H.; Ming, D. Study on an effective cross-stimulus emotion recognition model using EEGs based on feature selection and support vector machine. Int. J. Mach. Learn. Cybern. 2018, 9, 721–726.

59. Li, J.; Bioucas-Dias, J.M.; Plaza, A. Hyperspectral Image Segmentation Using a New Bayesian Approach With Active Learning. IEEE Trans. Geosci. Remote. Sens. 2011, 49, 3947–3960.

60. Atkinson, J.; Campos, D. Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Syst. Appl. 2016, 47, 35–41.

61. Yanagimoto, M.; Sugimoto, C. Recognition of persisting emotional valence from EEG using convolutional neural networks. In Proceedings of the IEEE 9th International Workshop on Computational Intelligence and Applications (IWCIA), Hiroshima, Japan, 5 November 2016; pp. 27–32.

62. Yanagimoto, M.; Sugimoto, C.; Nagao, T. Frequency filter networks for EEG-based recognition. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 270–275.

63. Yang, Y.; Zhou, J. Recognition and analyses of EEG & ERP signals related to emotion: From the perspective of psychology. In Proceedings of the IEEE First International Conference on Neural Interface and Control, Wuhan, China, 26–28 May 2005; pp. 96–99.

64. Hu, X.; Yu, J.; Song, M.; Yu, C.; Wang, F.; Sun, P.; Wang, D.; Zhang, D. EEG correlates of ten positive emotions. Front. Hum. Neurosci. 2017, 11, 26.

65. Davidson, R.J. Anterior cerebral asymmetry and the nature of emotion. Brain Cogn. 1992, 20, 125–151.

66. Nie, D.; Wang, X.W.; Shi, L.C.; Lu, B.L. EEG-based emotion recognition during watching movies. In Proceedings of the 5th International IEEE/EMBS Conference on Neural Engineering, Cancun, Mexico, 2 April–1 May 2011; pp. 667–670.

67. Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2019, 10, 417–429.

68. Lopez-Martin, M.; Carro, B.; Sanchez-Esguevillas, A.; Lloret, J. Conditional Variational Autoencoder for Prediction and Feature Recovery Applied to Intrusion Detection in IoT. Sensors 2017, 17, 1967.

69. De Amorim, R.C.; Makarenkov, V.; Mirkin, B. Core clustering as a tool for tackling noise in cluster labels. J. Classif. 2019, 37, 143–157.

70. Kwon, Y.H.; Shin, S.B.; Kim, S.D. Electroencephalography based fusion two-dimensional (2D)-convolution neural networks (CNN) model for emotion recognition system. Sensors 2018, 18, 1383.

71. Chen, M.; Han, J.; Guo, L.; Wang, J.; Patras, I. Identifying valence and arousal levels via connectivity between EEG channels. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, Xi’an, China, 21–24 September 2015; pp. 63–69.

72. Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

73. Zheng, W.L.; Lu, B.L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017.

74. Sadiq, M.T.; Yu, X.; Yuan, Z.; Fan, Z.; Rehman, A.U.; Li, G.; Xiao, G. Motor imagery EEG signals classification based on mode amplitude and frequency components using empirical wavelet transform. IEEE Access 2019, 7, 127678–127692.

75. Jenke, R.; Peer, A.; Buss, M. Feature extraction and selection for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2014, 5, 327–339.

76. Cao, Z.; Lin, C.T.; Lai, K.L.; Ko, L.W.; King, J.T.; Liao, K.K.; Fuh, J.-L.; Wang, S.-J. Extraction of SSVEPs-based inherent fuzzy entropy using a wearable headband EEG in migraine patients. IEEE Trans. Fuzzy Syst. 2019, 28, 14–27.

77. Zhuang, N.; Zeng, Y.; Yang, K.; Zhang, C.; Tong, L.; Yan, B. Investigating patterns for self-induced emotion recognition from EEG signals. Sensors 2018, 18, 841.

78. Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z. Motor imagery BCI classification based on novel two-dimensional modelling in empirical wavelet transform. Electron. Lett. 2020, 56, 1367–1369.

79. Opalka, S.; Stasiak, B.; Szajerman, D.; Wojciechowski, A. Multi-channel convolutional neural networks architecture feeding for effective EEG mental tasks classification. Sensors 2018, 18, 3451.

80. Lashgari, E.; Liang, D.; Maoz, U. Data augmentation for deep-learning-based electroencephalography. J. Neurosci. Methods 2020, 346, 108885.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).