A Modular Experimentation Methodology for 5G Deployments: The 5GENESIS Approach

Abstract

1. Introduction

- we detail the methodology components and corresponding templates, i.e., test cases, scenarios, slices, and Experiment Descriptors (EDs). The analysis reveals that the methodology can be used by any 5G testbed, even outside the 5GENESIS facility, due to its modular and open-source nature [8];

- we analyze the use of the methodology under the 5GENESIS reference architecture, describing all of the steps leading to a fully automated experiment execution, from the instantiation of needed resources to the collection and analysis of results;

- we showcase the use of the methodology for testing components and configurations in the 5G infrastructure at the University of Málaga (UMA), i.e., one of the 5GENESIS testbeds; and,

- by means of our tests in a real 5G deployment, we provide an initial 5G performance assessment and an empirical KPI analysis. The results are provided together with details on the adopted scenarios and network configurations, making it possible to reproduce the tests in other testbeds (under the same or different settings) for comparison and benchmarking purposes.

2. Background and Related Work

2.1. Standardization on 5G KPI Testing and Validation

2.2. Research on 5G KPI Testing and Validation

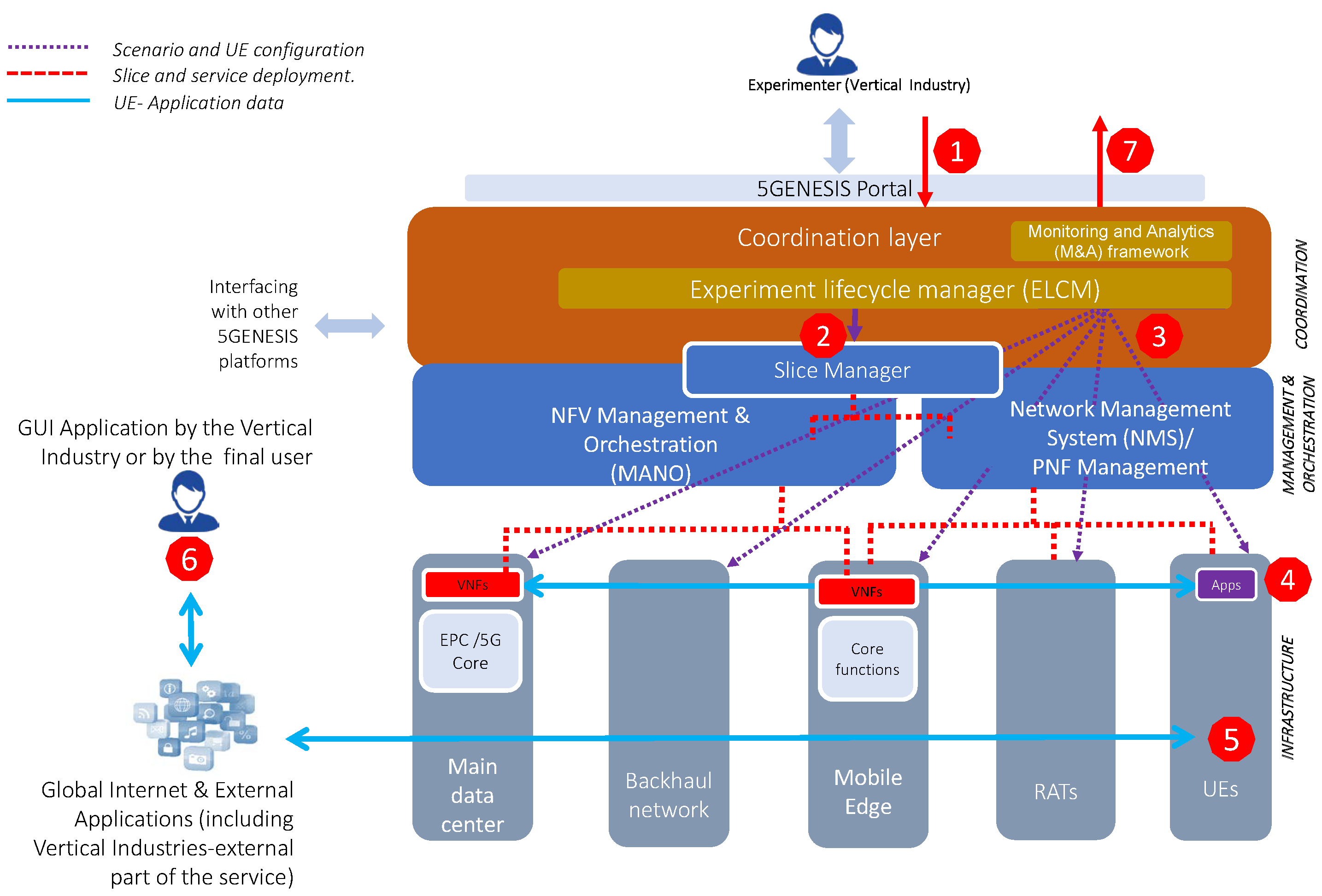

3. Overview of 5GENESIS Approach to KPI Testing and Validation

3.1. 5GENESIS Reference Architecture

3.2. 5GENESIS M&A Framework

- Infrastructure Monitoring (IM), which collects data on the status of architectural components, e.g., UE, RAN, core, and transport systems, as well as computing and storage distributed units;

- Performance Monitoring (PM), which executes measurements via dedicated probes for collecting E2E QoS/QoE indicators, e.g., throughput, latency, and vertical-specific KPIs;

- Storage and Analytics, which enable efficient data storage and perform KPI statistical validation and ML analyses.

4. Formalization of the Experimentation Methodology

4.1. Test Case

4.2. Scenario

4.3. Slice

4.4. Experiment Descriptor

4.5. Experiment Execution

5. Experimental Setup

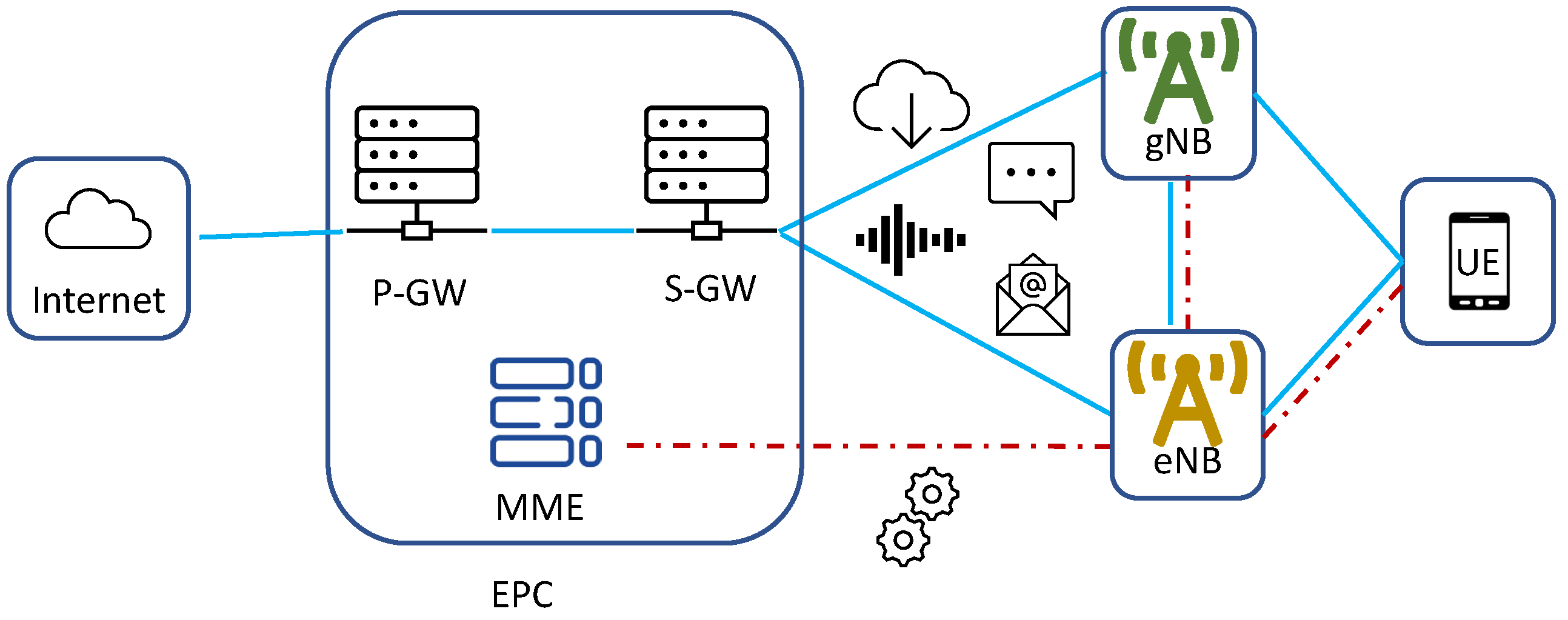

5.1. 5G Deployment at UMA Campus

5.2. Test Case Definition

5.3. Scenario Definition

5.4. Slice Definition

5.5. ED Definition

6. Experimental Results

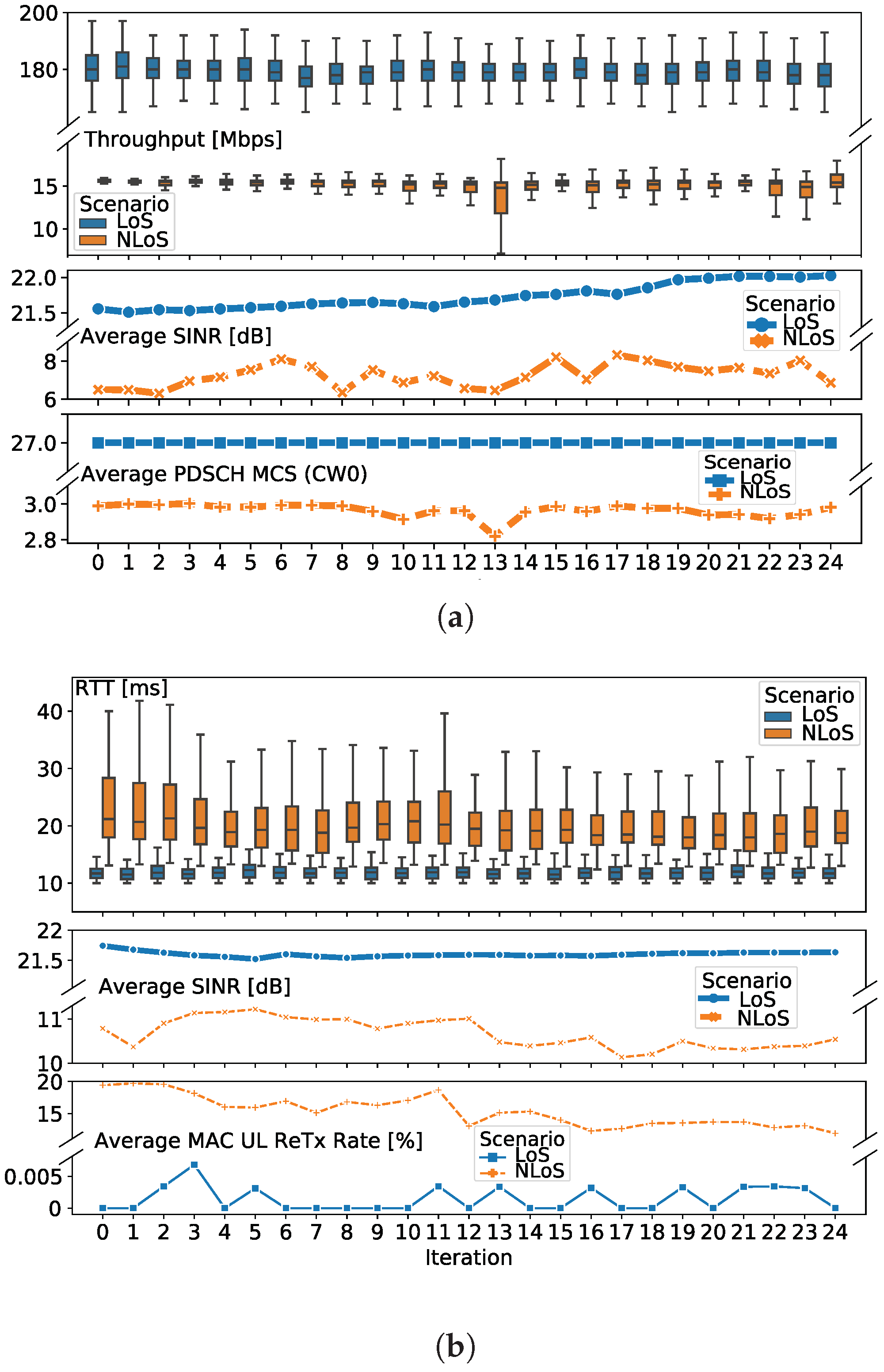

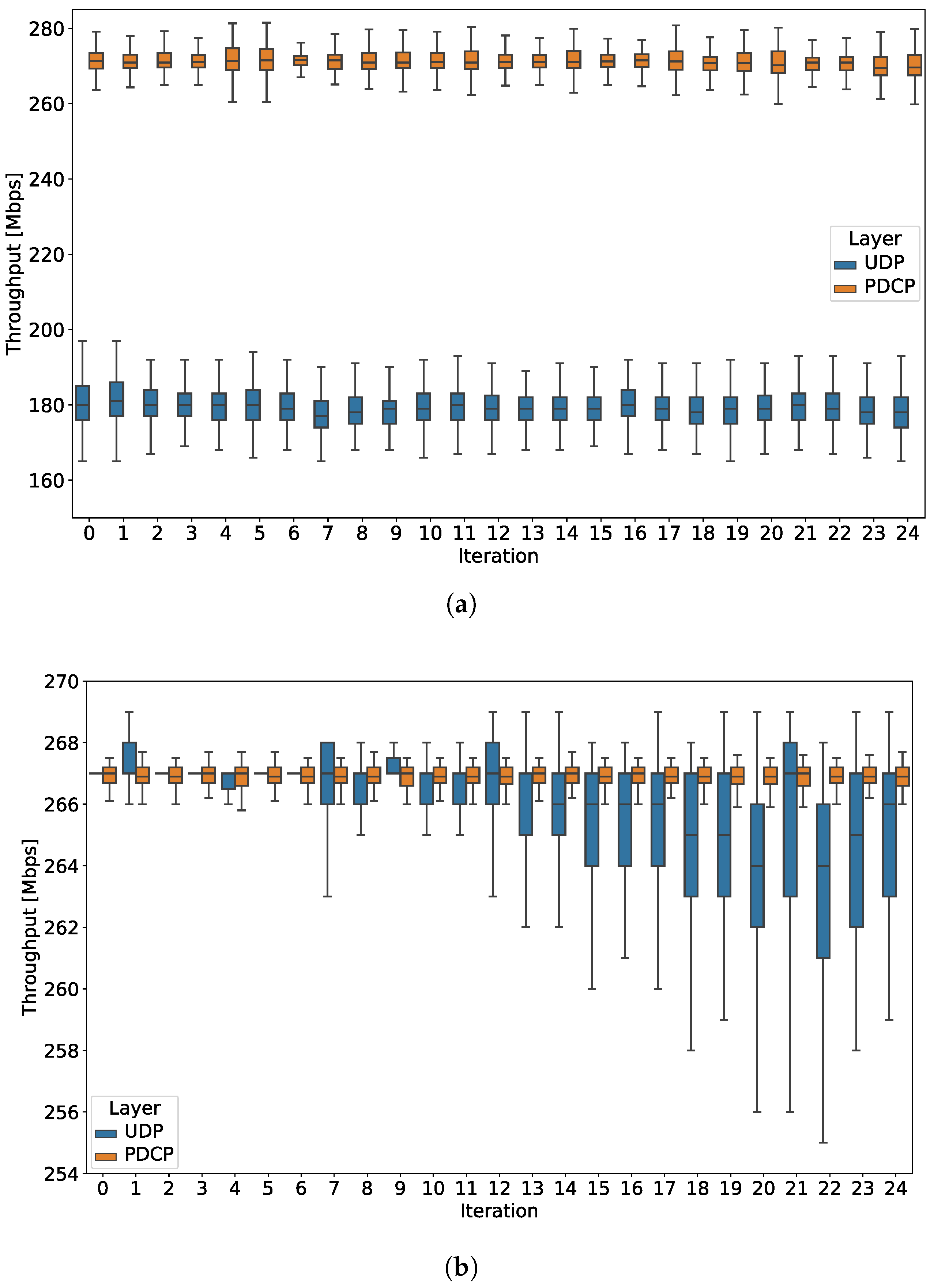

6.1. KPI Validation across Different Scenarios

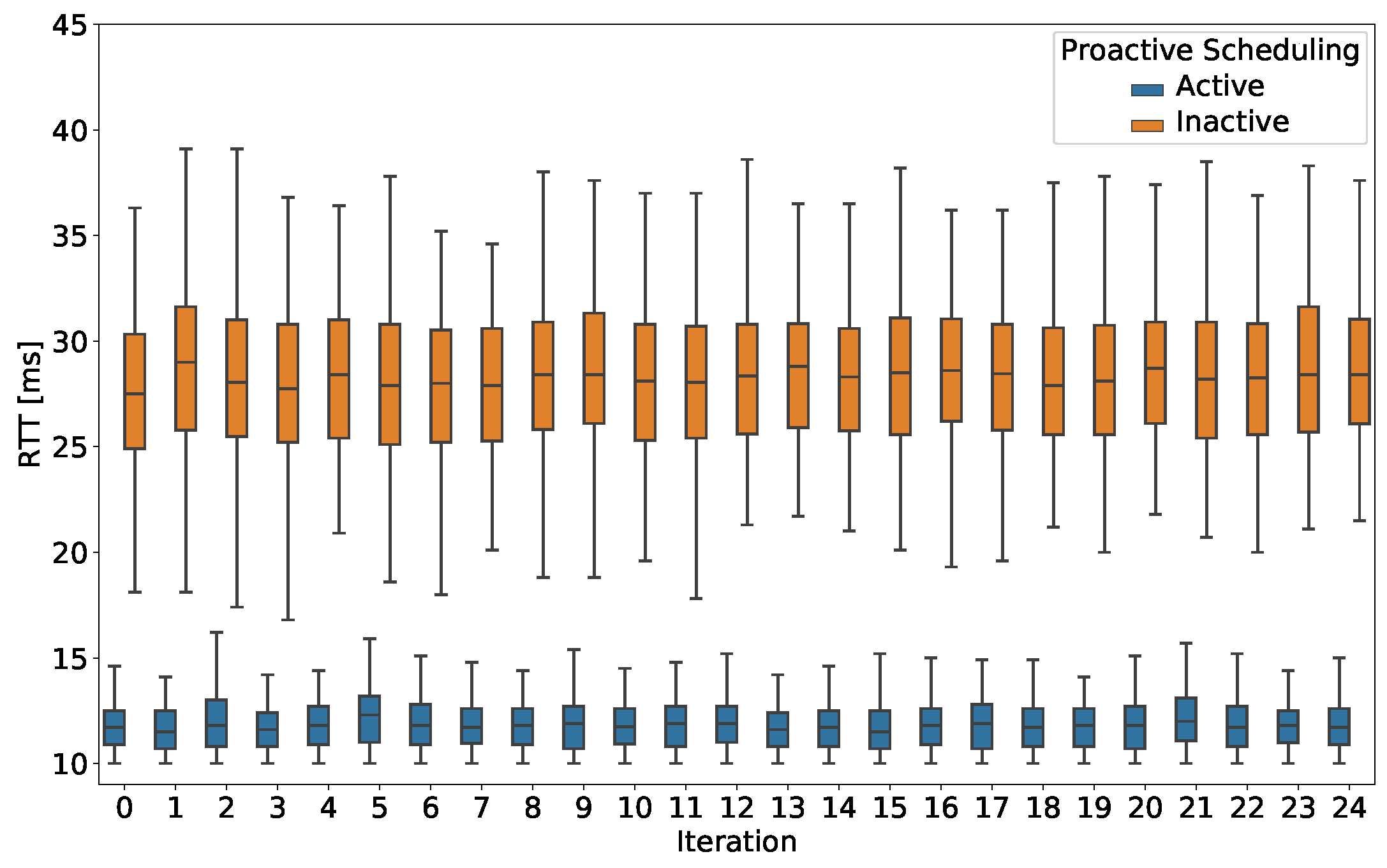

6.2. KPI Validation across Different Configurations

6.3. KPI Validation across Different Technologies

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- ITU-R M.2083-0. IMT Vision-Framework and Overall Objectives of the Future Development of IMT for 2020 and Beyond; ITU: Geneva, Switzerland, 2015.

- Shafi, M.; Molisch, A.F.; Smith, P.J.; Haustein, T.; Zhu, P.; Silva, P.D.; Tufvesson, F.; Benjebbour, A.; Wunder, G. 5G: A Tutorial Overview of Standards, Trials, Challenges, Deployment, and Practice. IEEE J. Sel. Areas Commun. 2017, 35, 1201–1221.

- 5G-PPP Test, Measurement and KPIs Validation Working Group, Validating 5G Technology Performance—Assessing 5G Architecture and Application Scenarios, 5G-PPP White Paper. Available online: https://5g-ppp.eu/wp-content/uploads/2019/06/TMV-White-Paper-V1.1-25062019.pdf (accessed on 30 September 2020).

- Foegelle, M.D. Testing the 5G New Radio. In Proceedings of the 2019 13th European Conference on Antennas and Propagation (EuCAP’19), Krakow, Poland, 31 March–5 April 2019.

- Koumaras, H.; Tsolkas, D.; Gardikis, G.; Gomez, P.M.; Frascolla, V.; Triantafyllopoulou, D.; Emmelmann, M.; Koumaras, V.; Osma, M.L.G.; Munaretto, D.; et al. 5GENESIS: The Genesis of a flexible 5G Facility. In Proceedings of the 2018 23rd International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD’18), Barcelona, Spain, 17–19 September 2018.

- 5GENESIS Project. Deliverable D2.4 Final Report on Facility Design and Experimentation Planning. 2020. Available online: https://5genesis.eu/wp-content/uploads/2020/07/5GENESIS_D2.4_v1.0.pdf (accessed on 30 September 2020).

- 5GENESIS Project. Deliverable D3.5 Monitoring and Analytics (Release A). 2019. Available online: https://5genesis.eu/wp-content/uploads/2019/10/5GENESIS_D3.5_v1.0.pdf (accessed on 30 September 2020).

- Open 5GENESIS Suite. 2020. Available online: https://github.com/5genesis (accessed on 30 September 2020).

- 3GPP TS 38.521-3; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; User Equipment (UE) Conformance Specification; Radio Transmission and Reception; Part 3: Range 1 and Range 2 Interworking Operation with Other Radios (Release 16), v16.4; 3GPP: Sophia Antipolis, France, May 2020.

- 3GPP TS 38.521-1; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; User Equipment (UE) Conformance Specification; Radio Transmission and Reception; Part 1: Range 1 Standalone (Release 16), v16.4; 3GPP: Sophia Antipolis, France, July 2020.

- 3GPP TS 38.521-2; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; User Equipment (UE) Conformance Specification; Radio Transmission and Reception; Part 2: Range 2 Standalone (Release 16), v16.4; 3GPP: Sophia Antipolis, France, July 2020.

- 3GPP TS 38.521-4; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; User Equipment (UE) Conformance Specification; Radio Transmission and Reception; Part 4: Performance (Release 16), v16.4; 3GPP: Sophia Antipolis, France, July 2020.

- 3GPP TS 38.523-1; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; 5GS; User Equipment (UE) Conformance Specification; Part 1: Protocol (Release 16), v16.4; 3GPP: Sophia Antipolis, France, May 2020.

- 3GPP TS 38.523-2; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; 5GS; User Equipment (UE) Conformance Specification; Part 2: Applicability of Protocol Test Cases (Release 16), v16.4; 3GPP: Sophia Antipolis, France, June 2020.

- 3GPP TS 38.523-3; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; 5GS; User Equipment (UE) Conformance Specification; Part 3: Protocol Test Suites (Release 15), v15.8; 3GPP: Sophia Antipolis, France, June 2020.

- 3GPP TS 38.533; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; User Equipment (UE) Conformance Specification; Radio Resource Management (RRM) (Release 16); 3GPP: Sophia Antipolis, France, July 2020.

- CTIA Battery Life Program Management Document; CTIA: Washington, DC, USA, 2018.

- 3GPP TS 28.552; 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; Management and Orchestration; 5G Performance Measurements (Release 16), v16.6; 3GPP: Sophia Antipolis, France, July 2020.

- 3GPP TS 32.425; 3rd Generation Partnership Project; Telecommunication Management; Performance Management (PM); Performance Measurements Evolved Universal Terrestrial Radio Access Network (E-UTRAN) (Release 16), v16.5; 3GPP: Sophia Antipolis, France, January 2020.

- 3GPP TS 32.451; 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; Telecommunication Management; Key Performance Indicators (KPI) for Evolved Universal Terrestrial Radio Access Network (E-UTRAN); Requirements (Release 16), v16.0; 3GPP: Sophia Antipolis, France, September 2019.

- 3GPP TS 32.404; 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; Telecommunication Management; Performance Management (PM); Performance Measurements; Definitions and Template (Release 16), v16.0; 3GPP: Sophia Antipolis, France, July 2020.

- ETSI GS NFV-TST 010; Network Functions Virtualisation (NFV) Release 2; Testing; API Conformance Testing Specification, v2.6.1; ETSI: Sophia Antipolis, France, September 2020.

- 3GPP TR 37.901; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; User Equipment (UE) Application Layer Data throughput Performance (Release 15); 3GPP: Sophia Antipolis, France, May 2018.

- 3GPP TR 37.901-5; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; Study on 5G NR User Equipment (UE) Application Layer Data throughput Performance (Release 16), v16.0; 3GPP: Sophia Antipolis, France, May 2020.

- 5GENESIS Project. Deliverable D6.1 Trials and Experimentation (Cycle 1). 2019. Available online: https://5genesis.eu/wp-content/uploads/2019/12/5GENESIS_D6.1_v2.00.pdf (accessed on 30 September 2020).

- 3GPP TS 28.554; 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; Management and Orchestration; 5G End to End Key Performance Indicators (KPI) (Release 16), v16.5; 3GPP: Sophia Antipolis, France, July 2020.

- NGMN Alliance. Definition of the Testing Framework for the NGMN 5G Pre-Commercial Networks Trials; NGMN: Frankfurt, Germany, 2019.

- 3GPP TR 38.913; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; Study on Scenarios and Requirements for Next Generation Access Technologies (Release 16), v16.5; 3GPP: Sophia Antipolis, France, July 2020.

- Díaz-Zayas, A.; García, B.; Merino, P. An End-to-End Automation Framework for Mobile Network Testbeds. Mob. Inf. Syst. 2019, 2019, 2563917.

- Espada, A.R.; Gallardo, M.D.; Salmerón, A.; Panizo, L.; Merino, P. A formal approach to automatically analyse extra-functional properties in mobile applications. Softw. Test. Verif. Reliab. 2019, 29, e1699.

- Tateishi, T.K.; Kunta, D.; Harada, A.; Kishryama, Y.; Parkvall, S.; Dahlman, E.; Furuskog, J. Field experiments on 5G radio access using 15-GHz band in outdoor small cell environment. In Proceedings of the 2015 IEEE 26th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC’15), Hong Kong, China, 30 August–2 September 2015; pp. 851–855.

- Moto, K.; Mikami, M.; Serizawa, K.; Yoshino, H. Field Experimental Evaluation on 5G V2N Low Latency Communication for Application to Truck Platooning. In Proceedings of the 2019 IEEE 90th Vehicular Technology Conference (VTC2019-Fall), Honolulu, HI, USA, 22–25 September 2019; pp. 1–5.

- Meng, X.; Li, J.; Zhou, D.; Yang, D. 5G technology requirements and related test environments for evaluation. China Commun. 2016, 13, 42–51.

- Li, X.; Deng, W.; Liu, L.; Tian, Y.; Tong, H.; Liu, J.; Ma, Y.; Wang, J.; Horsmanh, S.; Gavras, A. Novel Test Methods for 5G Network Performance Field Trial. In Proceedings of the 2020 IEEE International Conference on Communications Workshops (ICC’20 Workshops), Dublin, Ireland, 7–11 June 2020; pp. 1–6.

- Xu, D.; Zhou, A.; Zhang, X.; Wang, G.; Liu, X.; An, C.; Shi, Y.; Liu, L.; Ma, H. Understanding Operational 5G: A First Measurement Study on Its Coverage, Performance and Energy Consumption. In Proceedings of the Annual conference of the ACM Special Interest Group on Data Communication on the Applications, Technologies, Architectures, and Protocols for Computer Communication (SIGCOMM’20), Virtual Event, NY, USA, 10–14 August 2020; pp. 479–494.

- Narayanan, A.; Ramadan, E. A First Look at Commercial 5G Performance on Smartphones. In Proceedings of the Web Conference 2020 (WWW’20), Taipei, Taiwan, 20–24 April 2020; pp. 894–905.

- Piri, E.; Ruuska, P.; Kanstrén, T.; Mäkelä, J.; Korva, J.; Hekkala, A.; Pouttu, A.; Liinamaa, O.; Latva-aho, M.; Vierimaa, K.; et al. 5GTN: A test network for 5G application development and testing. In Proceedings of the 2016 European Conference on Networks and Communications (EuCNC), Athens, Greece, 27–30 June 2016.

- Vidal, I.; Nogales, B.; Valera, F.; Gonzalez, L.F.; Sanchez-Aguero, V. A Multi-Site NFV Testbed for Experimentation With SUAV-Based 5G Vertical Services. IEEE Access 2020, 8, 111522–111535.

- Mavromatis, A.; Colman-Meixner, C.; Silva, A.P.; Vasilakos, X.; Nejabati, R. A Software-Defined IoT Device Management Framework for Edge and Cloud Computing. IEEE Internet Things J. 2020, 7, 1718–1735.

- Vucinic, M.; Chang, T.; Škrbić, B.; Kočan, E.; Pejanović-Djurišić, M. Key Performance Indicators of the Reference 6TiSCH Implementation in Internet-of-Things Scenarios. IEEE Access 2020, 8, 79147–79157.

- Muñoz, J.; Rincon, F.; Chang, T.; Vilajosana, X.; Vermeulen, B.; Walcariu, T. OpenTestBed: Poor Man’s IoT Testbed. In Proceedings of the 2019 IEEE Conference on Computer Communications Workshops (INFOCOM’19 WKSHPS), Paris, France, 29 April–2 May 2019.

- Garcia-Aviles, G.; Gramaglia, M.; Serrano, P.; Banchs, A. POSENS: A Practical Open Source Solution for End-to-End Network Slicing. IEEE Wirel. Commun. 2018, 25, 30–37.

- 5G PPP Architecture Working Group. View on 5G Architecture, Version 3.0. 2020. Available online: https://5g-ppp.eu/wp-content/uploads/2020/02/5G-PPP-5G-Architecture-White-Paper_final.pdf (accessed on 25 October 2020).

- 5GENESIS Project. Deliverable D5.3 Documentation and Supporting Material for 5G Stakeholders (Release A). 2020. Available online: https://5genesis.eu/wp-content/uploads/2020/07/5GENESIS-D5.3_v1.0.pdf (accessed on 30 September 2020).

- EURO-5G Project. Deliverable D2.6 Final Report on Programme Progress and KPIs. 2017. Available online: https://5g-ppp.eu/wp-content/uploads/2017/10/Euro-5G-D2.6_Final-report-on-programme-progress-and-KPIs.pdf (accessed on 30 September 2020).

- 3GPP TS 23.501; 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; System Architecture for the 5G System (5GS); Stage 2; (Release 16), v16.5.1; 3GPP: Sophia Antipolis, France, August 2020.

- 3GPP TR 28.801; 3rd Generation Partnership Project; Technical Specification Group Services and System Aspects; Telecommunication Management; Study on Management and Orchestration of Network Slicing for Next Generation Network (Release 15), v15.1.0; 3GPP: Sophia Antipolis, France, January 2018.

- 5GENESIS Project. Deliverable D3.3 Slice Management (Release A). 2019. Available online: https://5genesis.eu/wp-content/uploads/2019/10/5GENESIS_D3.3_v1.0.pdf (accessed on 30 September 2020).

- Katana Slice Manager. Available online: https://github.com/medianetlab/katana-slice_manager (accessed on 30 September 2020).

- GSM Association. Official Document NG.116: Generic Network Slice Template; GSM Association: London, UK, 2020.

- Monroe Experiments. Available online: https://github.com/MONROE-PROJECT/Experiments (accessed on 30 September 2020).

- Mancuso, V.; Quirós, M.P.; Midoglu, C.; Moulay, M.; Comite, V.; Lutu, A.; Alay, Ö.; Alfredsson, S.; Rajiullah, M.; Brunström, A.; et al. Results from running an experiment as a service platform for mobile broadband networks in Europe. Comput. Commun. 2019, 133, 89–101.

- 3GPP TS 37.340; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; Evolved Universal Terrestrial Radio Access (E-UTRA) and NR; Multi-Connectivity; Stage 2 (Release 16), v16.2.0; 3GPP: Sophia Antipolis, France, June 2020.

- 5GENESIS Project. Deliverable D4.5 The Malaga Platform (Release B). 2020. Available online: https://5genesis.eu/wp-content/uploads/2020/02/5GENESIS_D4.5_v1.0.pdf (accessed on 25 October 2020).

- 3GPP TS 38.101-3; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; User Equipment (UE) Radio Transmission and Reception; Part 3: Range 1 and Range 2 Interworking Operation with Other Radios (Release 16), v16.4.0; 3GPP: Sophia Antipolis, France, May 2020.

- 3GPP TS 38.214; 3rd Generation Partnership Project; Technical Specification Group Radio Access Network; NR; Physical Layer Procedures for Data (Release 15), v15.10.0; 3GPP: Sophia Antipolis, France, May 2020.

| Main Component | Reference | Focus |
|---|---|---|
| UE | 3GPP TS 38.521-3 [9] | 5GNR UE RF conformance. NSA |
| UE | 3GPP TS 38.521-1/2 [10,11] | 5GNR UE RF conformance. SA FR1/FR2 |
| UE | 3GPP TS 38.521-4 [12] | 5GNR UE RF conformance. Performance |
| UE | 3GPP TS 38.523-1/2/3 [13,14,15] | 5GNR UE protocol conformance |
| UE | 3GPP TS 38.533 [16] | 5GNR UE RRM conformance |
| UE | GCF/CTIA [17] | Battery consumption |
| RAN/Core | 3GPP TS 28.552 [18] | RAN, 5GC, and network slicing performance |
| RAN | 3GPP TS 32.425 [19], TS 32.451 [20] | RRC aspects |
| NFV | ETSI GS NFV-TST 010 [22] | Testing of ETSI NFV blocks |
| E2E | 3GPP TR 37.901 [23], TR 37.901-5 [24] | 4G/5G throughput testing at application layer |
| E2E | 3GPP TS 28.554 [26] | Slicing performance at network side |
| E2E | NGMN [27] | eMBB and uRLLC (partial) KPI testing |
| Network elements | 3GPP TS 32.404 [21] | Measurement templates |

| Test Case | Metric |
|---|---|
| TC_THR_UDP | Throughput |

Target KPI: UDP Throughput. The UDP Throughput test case aims at assessing the maximum throughput achievable between a source and a destination.

Methodology: For measuring UDP Throughput, a packet stream is emitted from a source and received by a data sink (destination). The amount of data (bits) successfully transmitted per unit of time (seconds), as measured by the traffic generator, shall be recorded. A UDP-based traffic stream is created between the source and destination using the iPerf2 tool.

The test case shall include the consecutive execution of several iterations, according to the following properties:

iPerf2 configuration:

| Parameter | iPerf option | Suggested value |
|---|---|---|
| Throughput measurement interval | --interval | 1 |
| Number of simultaneously transmitting probes/processes/threads | --parallel | 4 (in order to generate high data rate in the source) |
| Bandwidth limitation set to above the maximum bandwidth available | -b | Depends on the maximum theoretical throughput available in the network |
| Format to report iPerf results | --format | m [Mbps] |

Calculation process and output: Once the KPI samples are collected, time-stamped (divided by iteration), and stored, evaluate relevant statistical indicators, e.g., average, median, standard deviation, percentiles, minimum, and maximum values, as follows:

Complementary measurements (if available): Throughput at Packet Data Convergence Protocol (PDCP) and Medium Access Control (MAC) layers, Reference Signal Received Power (RSRP), Reference Signal Received Quality (RSRQ), Channel Quality Indicator (CQI), Adopted Modulation, Rank Indicator (RI), Number of MIMO layers, and MAC and Radio Link Control (RLC) Downlink Block Error Rate (BLER). For each measurement (or selected ones), provide the average per iteration and for the entire test case, following the procedure in “Calculation process and output”. Note: packet loss rate is not recorded because constant traffic in excess of the available capacity will be injected and the excess will be marked as lost packets, as could be expected.

Preconditions: The scenario has been configured. In case of network slicing, the slice must be activated. The traffic generator should support the generation of the traffic pattern defined in “Methodology”. Connect a reachable UE (end point) in the standard 3GPP interface SGi or N6 (depending on whether the UE is connected to EPC or 5GC, respectively). Deploy the monitoring probes to collect throughput and complementary measurements. Ensure that, unless specifically requested in the scenario, no unintended traffic is present in the network.

Applicability: The measurement probes should be capable of injecting traffic into the system as well as determining the throughput of the transmission.

Test case sequence:
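The iPerf2 configuration in the TC_THR_UDP test case above maps directly onto a client command line. The following is a minimal sketch, assuming Python as the wrapper language. The helper name `build_iperf2_udp_command`, the server address, the offered bandwidth, and the test duration are illustrative assumptions and are not part of the test case definition; only the options and suggested values come from the configuration table.

```python
from typing import List


def build_iperf2_udp_command(
    server: str,
    bandwidth: str,
    duration_s: int,
    parallel: int = 4,
    interval_s: int = 1,
) -> List[str]:
    """Return the argv for an iPerf2 UDP client run (hypothetical helper).

    Defaults follow the suggested values of the test case: a 1 s
    reporting interval, 4 parallel streams, and results in Mbit/s.
    """
    return [
        "iperf",
        "--client", server,
        "--udp",
        "--interval", str(interval_s),
        "--parallel", str(parallel),
        "--bandwidth", bandwidth,
        "--format", "m",
        "--time", str(duration_s),
    ]


# Example values are assumptions chosen for illustration only.
command = build_iperf2_udp_command("192.0.2.10", "1000M", duration_s=60)
print(" ".join(command))
```

In practice the bandwidth argument would be chosen above the maximum theoretical throughput of the network under test, as the test case requires.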

| Scenario ID | SC_LoS_PS |
|---|---|
| Radio Access Technology | 5G NR |
| Standalone/Non-Standalone | Non-Standalone |
| LTE to NR frame shift | 3 ms |
| Cell Power | 40 dBm |
| Band | n78 |
| Maximum bandwidth per component carrier | 40 MHz |
| Subcarrier spacing | 30 kHz |
| Number of component carriers | 1 |
| Cyclic Prefix | Normal |
| Number of antennas on NodeB | 2 |
| MIMO schemes (codeword and number of layers) | 1 CW, 2 layers |
| DL MIMO mode | 2 × 2 Closed Loop Spatial Multiplexing |
| Modulation schemes | 256-QAM |
| Duplex Mode | TDD |
| Power per subcarrier | 8.94 dBm/30 kHz |
| TDD uplink/downlink pattern | 2/8 |
| Random access mode | Contention-based |
| Scheduler configuration | Proactive scheduling |
| User location and speed | Close to the base station, direct line of sight, static |
| Background traffic | No |
| Computational resources available in the virtualized infrastructure | N/A |
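As a consistency check on the scenario parameters above, the per-subcarrier power can be approximated from the cell power. This sketch assumes that the 40 dBm are spread evenly over all subcarriers and that the carrier has 106 resource blocks of 12 subcarriers each (the maximum transmission bandwidth configuration in 3GPP TS 38.101-1 for 40 MHz at 30 kHz subcarrier spacing). Neither assumption is stated in the scenario definition, and the function name is illustrative.

```python
import math

CELL_POWER_DBM = 40.0      # "Cell Power" from the scenario
RESOURCE_BLOCKS = 106      # assumption: 40 MHz at 30 kHz SCS (TS 38.101-1)
SUBCARRIERS_PER_RB = 12


def power_per_subcarrier_dbm(cell_power_dbm: float, n_subcarriers: int) -> float:
    """Split the total power evenly across subcarriers, in the dB domain."""
    return cell_power_dbm - 10.0 * math.log10(n_subcarriers)


n_subcarriers = RESOURCE_BLOCKS * SUBCARRIERS_PER_RB
per_sc = power_per_subcarrier_dbm(CELL_POWER_DBM, n_subcarriers)
print(f"{per_sc:.2f} dBm per subcarrier over {n_subcarriers} subcarriers")
```

Under these assumptions the result is roughly 8.96 dBm per subcarrier, within a few hundredths of a dB of the 8.94 dBm/30 kHz listed in the scenario.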

| Parameter | Indicator | LoS | NLoS |
|---|---|---|---|
| UDP Throughput [Mbps] | Average | ± | ± |
| UDP Throughput [Mbps] | Median | ± | ± |
| UDP Throughput [Mbps] | Min | ± | ± |
| UDP Throughput [Mbps] | Max | ± | ± |
| UDP Throughput [Mbps] | 5% Percentile | ± | ± |
| UDP Throughput [Mbps] | 95% Percentile | ± | ± |
| UDP Throughput [Mbps] | Standard deviation | ± | ± |
| SINR [dB] | Average | ± | ± |
| PDSCH MCS CW0 | Average | ± | ± |
| RSRP [dBm] | Average | ± | ± |
| RSRQ [dB] | Average | ± | ± |
| PDSCH Rank | Average | ± | ± |
| MAC DL BLER [%] | Average | ± | ± |

| Parameter | Indicator | LoS | NLoS |
|---|---|---|---|
| RTT [ms] | Average | ± | ± |
| RTT [ms] | Median | ± | ± |
| RTT [ms] | Min | ± | ± |
| RTT [ms] | Max | ± | ± |
| RTT [ms] | 5% Percentile | ± | ± |
| RTT [ms] | 95% Percentile | ± | ± |
| RTT [ms] | Standard deviation | ± | ± |
| SINR [dB] | Average | ± | ± |
| MAC UL ReTx Rate [%] | Average | ± | ± |
| RSRP [dBm] | Average | ± | ± |
| RSRQ [dB] | Average | ± | ± |
| MAC DL BLER [%] | Average | ± | ± |
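Both result tables report the same set of indicators for each KPI. The following is a minimal sketch of how they could be computed from stored samples with the Python standard library. The structure (indicators per iteration, then aggregation over iterations) follows the "Calculation process and output" step of the test case. The function names, the sample values, and the normal-approximation 95% interval used for the ± term are assumptions, since the exact formulas are not reproduced in this text.

```python
import math
import statistics
from typing import Dict, List, Tuple


def iteration_indicators(samples: List[float]) -> Dict[str, float]:
    """Statistical indicators for the KPI samples of one iteration."""
    # quantiles(n=20) returns 19 cut points at 5%, 10%, ..., 95%.
    cuts = statistics.quantiles(samples, n=20, method="inclusive")
    return {
        "average": statistics.mean(samples),
        "median": statistics.median(samples),
        "min": min(samples),
        "max": max(samples),
        "p5": cuts[0],
        "p95": cuts[-1],
        "std": statistics.stdev(samples),
    }


def aggregate(per_iteration: List[Dict[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Mean of each indicator across iterations, with a +/- half-width.

    Assumption: 95% interval from a normal approximation (z = 1.96).
    """
    result = {}
    for name in per_iteration[0]:
        values = [it[name] for it in per_iteration]
        half_width = 1.96 * statistics.stdev(values) / math.sqrt(len(values))
        result[name] = (statistics.mean(values), half_width)
    return result


# Made-up samples for two iterations, for illustration only.
iterations = [[10.0, 12.0, 11.0, 13.0], [11.0, 11.5, 12.5, 12.0]]
summary = aggregate([iteration_indicators(s) for s in iterations])
for name, (mean, half_width) in summary.items():
    print(f"{name}: {mean:.2f} +/- {half_width:.2f}")
```

With few iterations, a Student-t quantile would be a more conservative choice than the fixed z value used here.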

```json
{
  "base_slice_descriptor": {
    "base_slice_des_id": "AthonetEPC",
    "coverage": ["Campus"],
    "delay_tolerance": true,
    "network_DL_throughput": {
      "guaranteed": 100.000
    },
    "ue_DL_throughput": {
      "guaranteed": 100.000
    },
    "network_UL_throughput": {
      "guaranteed": 10.000
    },
    "ue_UL_throughput": {
      "guaranteed": 10.000
    },
    "mtu": 1500
  }
}
```
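A slice descriptor like the one above can be loaded and checked before it is handed to a slice manager. This is a minimal sketch, assuming the descriptor is available as a JSON string. The helper name `guaranteed_throughputs` and the presence check are illustrative and do not reflect any particular slice manager API. The listing does not state the throughput units, so none are assumed here.

```python
import json
from typing import Dict

DESCRIPTOR = """
{
  "base_slice_descriptor": {
    "base_slice_des_id": "AthonetEPC",
    "coverage": ["Campus"],
    "delay_tolerance": true,
    "network_DL_throughput": {"guaranteed": 100.000},
    "ue_DL_throughput": {"guaranteed": 100.000},
    "network_UL_throughput": {"guaranteed": 10.000},
    "ue_UL_throughput": {"guaranteed": 10.000},
    "mtu": 1500
  }
}
"""

THROUGHPUT_KEYS = (
    "network_DL_throughput",
    "ue_DL_throughput",
    "network_UL_throughput",
    "ue_UL_throughput",
)


def guaranteed_throughputs(descriptor_text: str) -> Dict[str, float]:
    """Return the guaranteed value of each throughput field (hypothetical helper)."""
    base = json.loads(descriptor_text)["base_slice_descriptor"]
    missing = [key for key in THROUGHPUT_KEYS if key not in base]
    if missing:
        raise ValueError(f"missing throughput fields: {missing}")
    return {key: float(base[key]["guaranteed"]) for key in THROUGHPUT_KEYS}


print(guaranteed_throughputs(DESCRIPTOR))
```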

```
{
  ExperimentType: Standard/Custom/MONROE
  Automated: <bool>
  TestCases: <List[str]>
  UEs: <List[str]> UEs IDs

  Slices: <List[str]>
  NSs: <List[Tuple[str, str]]> (NSD Id, Location)
  Scenarios: <List[str]>

  ExclusiveExecution: <bool>
  ReservationTime: <int> (Minutes)

  Application: <str>
  Parameters: <Dict[str, obj]>

  Remote: <str> Remote platform Id
  RemoteDescriptor: <Experiment Descriptor>

  Version: <str>
  Extra: <Dict[str, obj]>
}
```
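The ED template above can be mirrored by a typed structure so that descriptors are checked before execution. This is a minimal sketch using a Python dataclass. Field names and types follow the template, while the class name, the default values, and the validation rule are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple

EXPERIMENT_TYPES = ("Standard", "Custom", "MONROE")


@dataclass
class ExperimentDescriptor:
    """Typed mirror of the ED template (defaults are illustrative)."""

    ExperimentType: str
    Automated: bool
    TestCases: List[str]
    UEs: List[str]
    Slices: List[str] = field(default_factory=list)
    NSs: List[Tuple[str, str]] = field(default_factory=list)  # (NSD Id, Location)
    Scenarios: List[str] = field(default_factory=list)
    ExclusiveExecution: bool = False
    ReservationTime: Optional[int] = None  # minutes
    Application: Optional[str] = None
    Parameters: Dict[str, Any] = field(default_factory=dict)
    Remote: Optional[str] = None  # remote platform Id
    RemoteDescriptor: Optional["ExperimentDescriptor"] = None
    Version: str = ""
    Extra: Dict[str, Any] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject experiment types that the template does not list.
        if self.ExperimentType not in EXPERIMENT_TYPES:
            raise ValueError(f"unknown ExperimentType: {self.ExperimentType!r}")


ed = ExperimentDescriptor(
    ExperimentType="Standard",
    Automated=True,
    TestCases=["TC_THR_UDP", "TC_RTT"],
    UEs=["UE_1"],
    Slices=["Default"],
    Scenarios=["SC_LoS_PS", "SC_NLoS_PS"],
    ExclusiveExecution=True,
    Version="2.0",
)
print(ed.TestCases, ed.Scenarios)
```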

```
{
  ExperimentType: Standard
  Automated: Yes
  TestCases: TC_THR_UDP, TC_RTT
  UEs: UE_1
  Slice: Default
  Scenario: SC_LoS_PS, SC_NLoS_PS
  ExclusiveExecution: yes
  Version: 2.0
}
```

```
{
  ExperimentType: Standard
  Automated: Yes
  TestCases: TC_RTT
  UEs: UE_1
  Slice: Default
  Scenario: SC_LoS, SC_LoS_PS
  ExclusiveExecution: yes
  Version: 2.0
}
```

```
{
  ExperimentType: Standard
  Automated: Yes
  TestCases: TC_THR_UDP
  UEs: UE_1, UE_2
  Slice: Default
  Scenario: SC_LoS_PS
  ExclusiveExecution: yes
  Version: 2.0
}
```
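The three descriptors above share a simple `Key: value` layout. The following is a minimal sketch of how such a listing could be read and expanded into individual runs, using the first of the three descriptors as input. The parser, the function names, and the assumption that every test case is executed once in every listed scenario are illustrative; the text does not specify how a descriptor is scheduled.

```python
from itertools import product
from typing import Dict, List, Tuple

ED_TEXT = """
{
  ExperimentType: Standard
  Automated: Yes
  TestCases: TC_THR_UDP, TC_RTT
  UEs: UE_1
  Slice: Default
  Scenario: SC_LoS_PS, SC_NLoS_PS
  ExclusiveExecution: yes
  Version: 2.0
}
"""


def parse_descriptor(text: str) -> Dict[str, List[str]]:
    """Parse 'Key: v1, v2' lines into a dict of value lists."""
    fields: Dict[str, List[str]] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skips braces and blank lines
        key, _, raw = line.partition(":")
        fields[key.strip()] = [v.strip() for v in raw.split(",") if v.strip()]
    return fields


def expand_runs(fields: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Assumption: each test case runs once per listed scenario."""
    return list(product(fields["TestCases"], fields["Scenario"]))


runs = expand_runs(parse_descriptor(ED_TEXT))
for test_case, scenario in runs:
    print(test_case, scenario)
```

For this descriptor the expansion yields four runs, one per combination of the two test cases and the two scenarios.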

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Díaz Zayas, A.; Caso, G.; Alay, Ö.; Merino, P.; Brunstrom, A.; Tsolkas, D.; Koumaras, H. A Modular Experimentation Methodology for 5G Deployments: The 5GENESIS Approach. Sensors 2020, 20, 6652. https://doi.org/10.3390/s20226652
