1. Introduction

Enterprise software systems are increasingly structured as a number of distributed components, called services, which communicate with one another. Individual services of an enterprise system often communicate with external requisite services from many different vendors to accomplish their tasks [1]. However, a number of factors may prevent software development teams from developing components for enterprise systems on time, within budget, and with the expected quality and performance. This happens because the services that a newly developed (or significantly modified) component depends upon may be accessible for a limited time only or at a significant (monetary) cost. As a result, new components may only be thoroughly tested after development is complete, which puts an entire deployment environment at risk if any undetected defects cause ripple effects across multiple services in that environment.

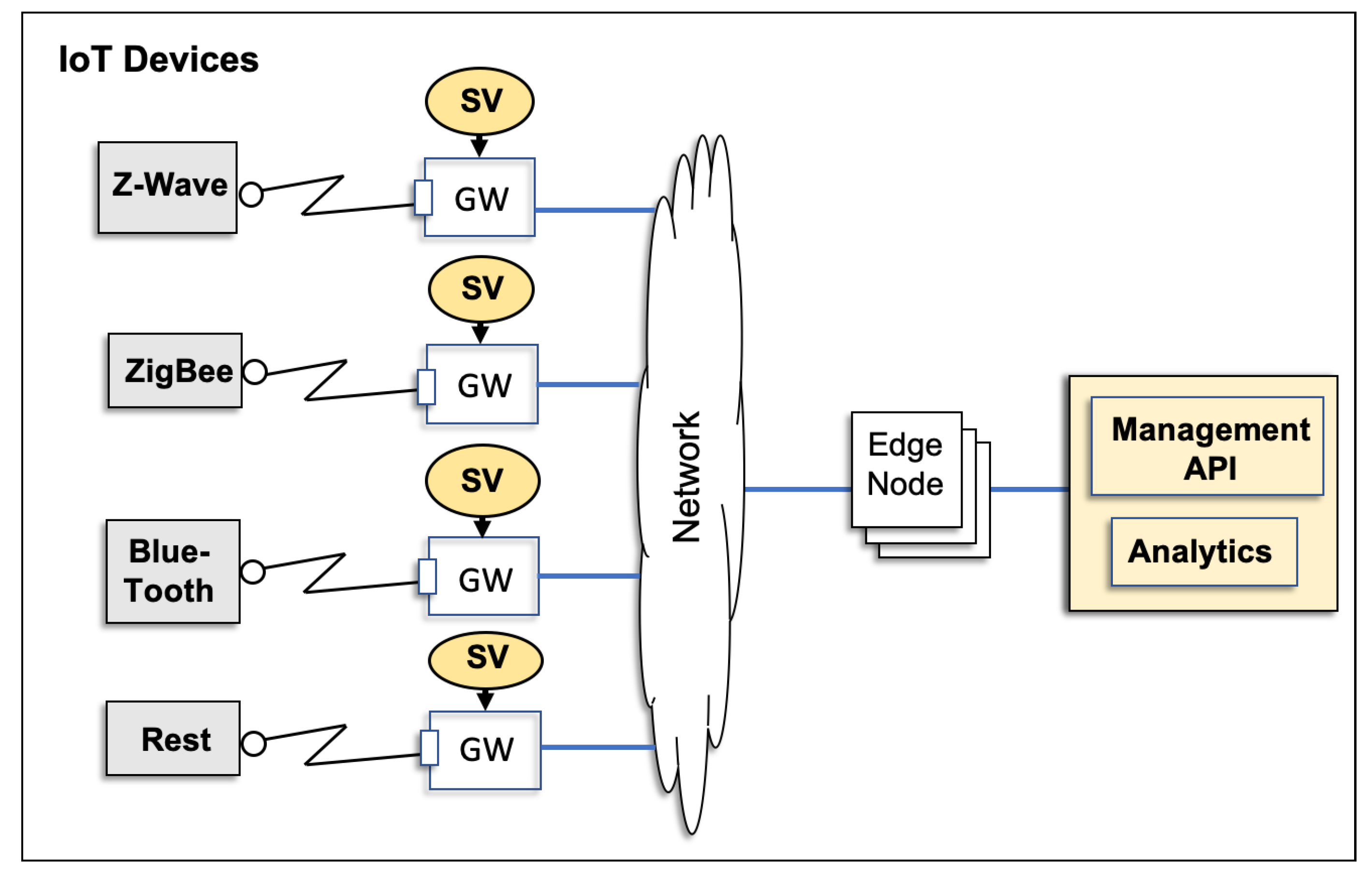

This phenomenon will be significantly highlighted in IoT applications, such as sensor recorded data where the data model places a vital role in the performance of the relevant systems [

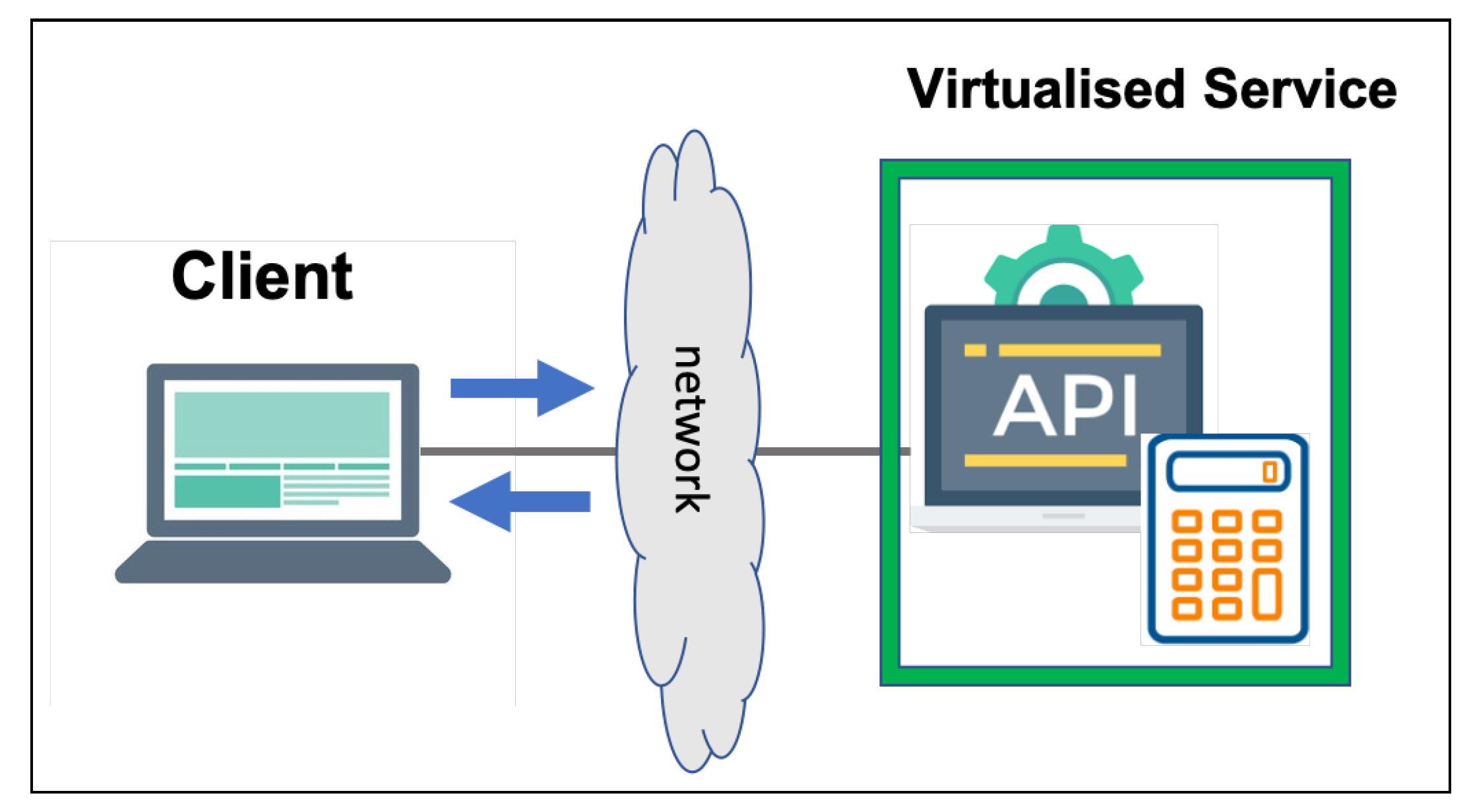

2]. IoT services need to communicate with many IoT devices that may be sensors, actuators, or gateways (see

Figure 1).

Continuous delivery and testing of IoT services are particularly challenging because of heterogeneous hardware, multiple communication layers, a lack of industry standards, and the need for skill sets that span both operational technology (OT) and IT. These challenges can delay the release of an application and affect software quality. Releasing an IoT application may require the physical devices to be present every time the application is fully tested. IoT virtualisation can remove this constraint: a virtual test-bed of IoT devices can accelerate IoT application development by enabling automated testing without requiring a continuous connection to the physical devices.

The IoT concept has given rise to smart cities, as presented by Bhagya et al. [3]. The aim of a smart city is to improve citizens’ quality of life by intelligently supporting city functions with minimal human interaction. However, testing the integration of multiple smart components, each of which consists of innumerable heterogeneous devices, sensors, communication models, and protocols, could be one of the most challenging tasks when deploying a real-world smart city [3].

Continuous delivery is an industry best practice for accelerating software delivery and increasing software quality [4]. This practice was introduced to mitigate the significant integration costs that arise when simultaneous changes to components and their requisite services are not regularly synchronised (and “mismatches” are not quickly fixed). To effectively adopt continuous delivery, requisite services must be readily available to the software engineers of a development team; otherwise, continuous integration and testing cannot be efficiently performed.

A number of techniques have been proposed for providing ‘replicated’ services in development and testing environments in order to ensure that they can be readily accessed by software engineers. Mock objects and stubs [5,6] are widely used to mimic the interactive behaviour of actual server-side systems. However, this approach is language-specific, and any modification in the systems leads to a change in the code. Another solution uses virtual machines or hardware virtualisation tools, such as VMWare and VirtualBox [7], to host multiple server-side systems that communicate with a component under development. This method assumes the availability of the real server systems for installation. These systems are often large and they do not scale well to large numbers of deployments. Container technologies, such as Docker [8], which virtualise operating system environments rather than hardware and make available protected portions of the operating systems, are also used for setting up testing environments. Container technologies are lightweight and provide good scalability; however, they mostly suffer from the same limitations as hardware virtualisation approaches.

Recently, service emulation [9,10] and service virtualisation (SV) [11] have been introduced. Both approaches aim to replace any requisite services for a component under development with lightweight, executable “approximations” thereof that can be easily configured and deployed without replicating the requisite services and/or their deployment environments. The aforementioned approaches also aim to only replicate real services’ behaviour for specific quality assurance tasks, but they mostly ignore any other features or properties of the real services.

In service emulation, the executable model of a requisite service has to be manually specified [9,10]. This task requires significant knowledge regarding the functionality of a requisite service, which may not always be readily available (e.g., in the case of legacy software) and may not be practical to capture when a requisite service’s behaviour is quite complex. Some shortcomings of service emulation are addressed in SV by (i) recording the interactions between a component under development and its requisite services from a number of test scenarios (e.g., using tools such as Wireshark [12]) and (ii) generating executable models of the requisite services by applying data mining techniques [6,11,13,14]. Consequently, some of these techniques are referred to as “record-and-replay” techniques. For example, the technique that was presented by Du et al. [14] “matches” an incoming request with those found in the aforementioned recordings and substitutes fields to generate a response for the incoming request. This technique has been refined and has become more sophisticated over the years (Versteeg et al. [15]).
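The record-and-replay idea described above can be illustrated with a deliberately simplified Python sketch. It is not the algorithm of Du et al. [14], which works on recorded network messages; here, messages are assumed to be already decoded into key/value dictionaries, and the helper names `field_overlap` and `replay` as well as all field names are hypothetical.

```python
def field_overlap(a, b):
    """Count the fields of message a that appear with the same value in message b."""
    return sum(1 for key, value in a.items() if b.get(key) == value)


def replay(request, recordings):
    """Answer a request from recorded (request, response) pairs.

    Step 1 ("match"): pick the recorded request sharing the most fields.
    Step 2 ("substitute"): wherever the recorded response echoed a value of
    the recorded request, echo the matching value of the live request.
    """
    best_req, best_resp = max(
        recordings, key=lambda pair: field_overlap(request, pair[0])
    )
    response = dict(best_resp)
    for resp_key, resp_value in best_resp.items():
        for req_key, req_value in best_req.items():
            if req_value == resp_value and req_key in request:
                response[resp_key] = request[req_key]
                break
    return response


# Hypothetical recordings, used only for illustration.
recordings = [
    ({"op": "getAccount", "id": "1"}, {"status": "ok", "id": "1"}),
    ({"op": "deleteToken", "token": "a"}, {"status": "deleted"}),
]
answer = replay({"op": "getAccount", "id": "7"}, recordings)
```

Because the response is assembled only from the current request and one recorded pair, a scheme of this kind is inherently stateless; it cannot produce a value, such as an account balance, that depends on earlier interactions.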

One of the key drawbacks of these SV approaches is that they only focus on stateless protocols (in which the response of a service depends only on the current request and not on any prior interactions) and hence fail to work adequately for protocols that have inherent side-effects on the internal state of a service (as found in stateful protocols). Enişer and Sen [16] employed classification-based and sequence-to-sequence-based machine learning algorithms to cater for stateful protocols. However, their work can only project values of a categorical type, thereby failing to address the accurate projection of numeric values, amongst others.

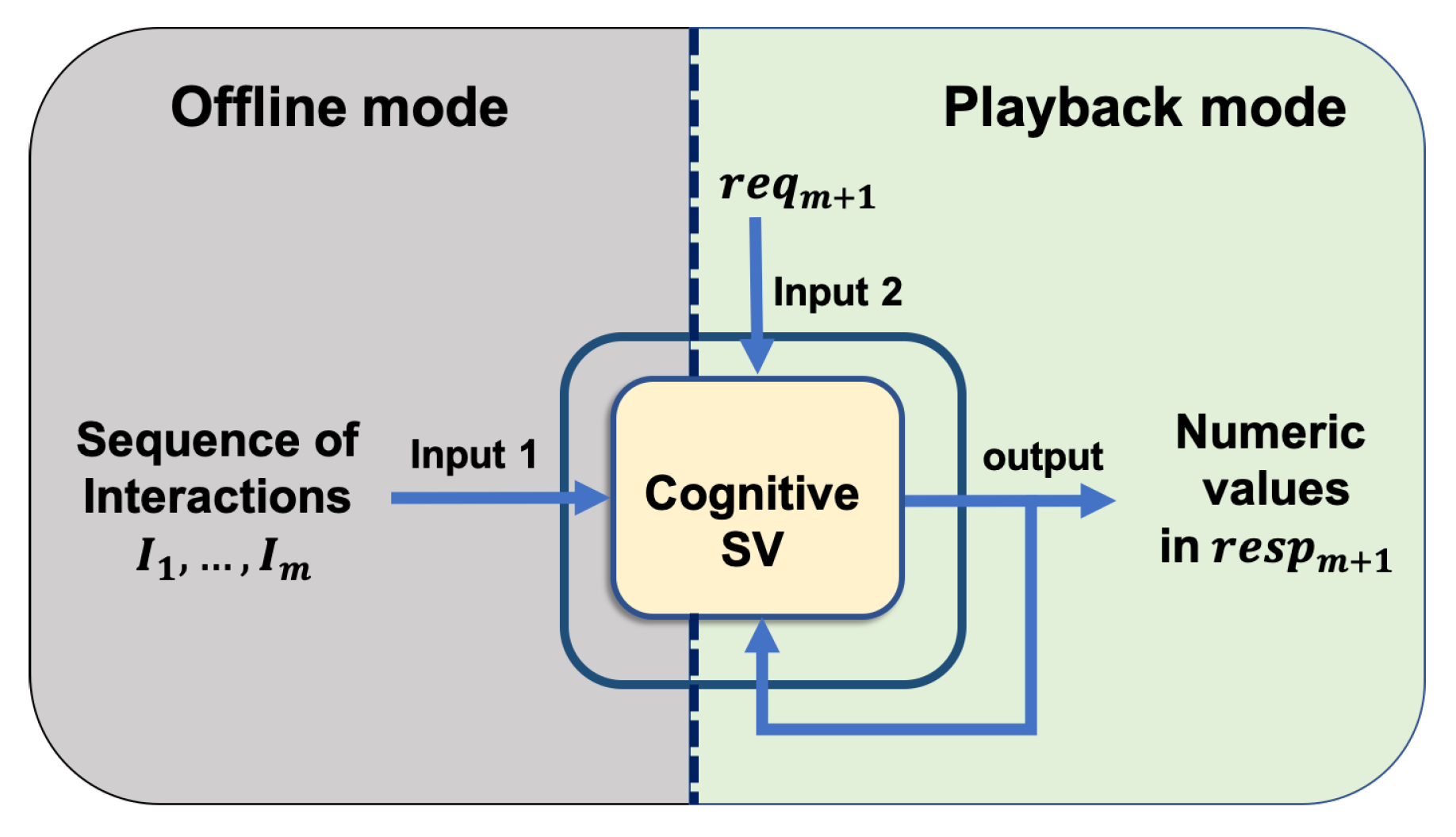

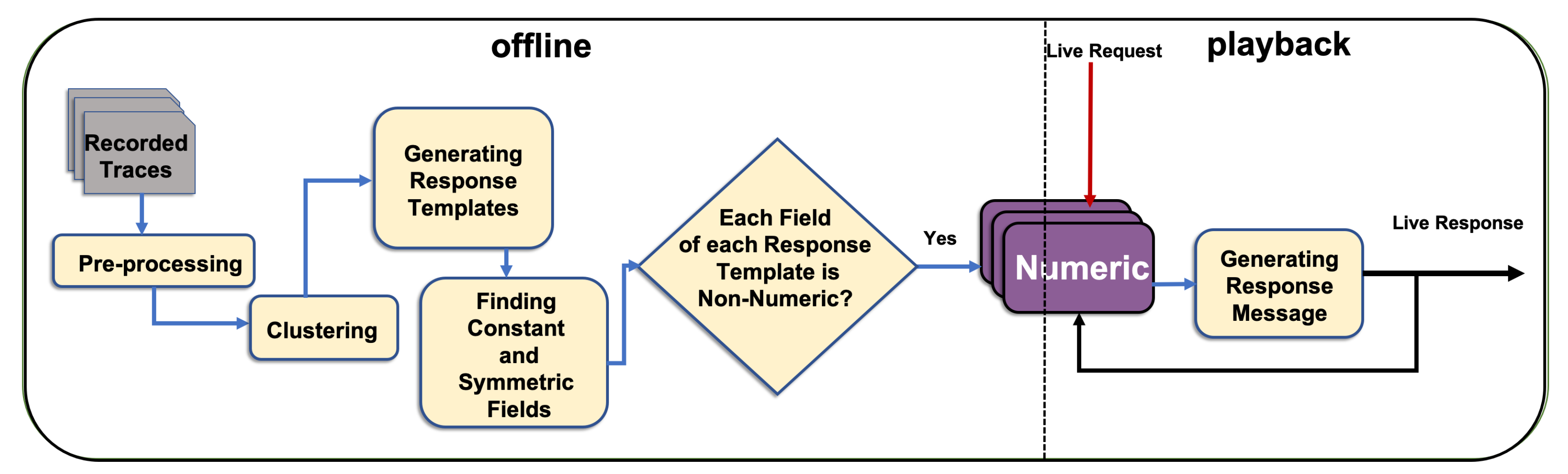

This paper presents a novel software virtualisation solution, called Cognitive Service Virtualisation (CSV), which is capable of projecting numeric values in response to service requests. The methodical approach that it employs is new to this field. The proposed approach uses data mining methods and time series analysis concepts to find the relationship between each numeric field in the service response and the previously sent/received request/response messages, in order to obtain an accurate model for each numeric field in the service response.

The rest of this paper is organised as follows: in Section 2, a number of key related studies are discussed. The problem to address in this work is formalised in Section 3, followed by a motivating scenario in Section 4, which illustrates the challenge of predicting numeric fields in response messages. Section 5 presents the key steps of the proposed CSV approach. The data sets used for our evaluation and the results of the evaluation are introduced in Section 6 and Section 7, respectively. Section 8 discusses threats to the validity of our evaluation. The paper concludes in Section 9 with a summary of the main observations and future work.

2. Related Work

Mining service behaviour has become a topic of interest in many fields of software engineering; accordingly, much research in recent years has focused on the development of methods for addressing it. SV is one use case of service behaviour mining that focuses on the discovery of control and data aspects of software services from their network interactions. Researchers have examined and improved many aspects of SV.

Kaluta [17] is a specification-based service emulation tool that replaces different types of requisite systems with the execution of a runtime behaviour model, on the basis of the protocol specifications of their expected interactions with the system under test (SUT). Kaluta provides a framework that can model the behaviour of services in a compact and convenient way. This framework uses the description of the services’ protocols, behaviour and data models, and provides a runtime emulation of the service in a testing environment for responding to requests from the SUT, thereby reportedly achieving high scalability.

In recent years, commercial enterprises have developed service virtualisation tools. Computer Associates [18], IBM [19], and HP [20] strive to speed up software development by using virtualisation tools. They use service and protocol specifications along with inferred information from the traces to create a virtual service model.

Opaque SV [15] has been introduced in order to virtualise stateless services. This method receives a raw message as input and generates its response by using clustering and sequence alignment techniques without any prior knowledge of the protocol message format. The aforementioned method addresses encoding and decoding of the protocol and generating the response message with the correct content. This method works well when a simple equivalence dependency exists between each request and its corresponding response. However, this method cannot accommodate many complex dependencies between the request and corresponding response in stateless services or protocols.

Enişer and Sen [16,21] proposed three different ways of generating responses to a request in order to address stateful services. In the first method, they used a classification that maps each request and its previous messages to a class of possible values for each field in the response message. They considered the fields’ values as categorical data and employed a classifier to map the request and historic messages to one of the categories in each field in the response message. The second method that they proposed is sequence-to-sequence based virtualisation using a special RNN structure, which consists of two LSTM networks. LSTMs have been found to be efficient with sequence-based data sets that contain categorical or textual data in language translation use cases [22]. The third method uses a Model Inference Technique (MINT) [23] to generate each categorical response field. MINT is a tool for inferring deterministic and flexible Guarded Extended Finite State Machines (EFSM) that uses arbitrary data classifiers to infer data guards in order to generate the guard variables and merge the states. The training time for the RNN structure is long, which led the authors to implement that method on a GPU. All models with the proposed structures were reported to work well with protocols that have textual and categorical data fields. However, these models cannot accommodate non-categorical numeric fields.

Existing service emulation and virtualisation approaches have major limitations. First, developing a service specification in Kaluta requires significant developer effort, and its configuration and maintenance are complex. Second, Opaque SV is stateless and generates a response to an incoming request only on the basis of a simple dependency on its corresponding request, without considering the interaction history, which results in inaccurate responses for complicated protocols. Third, although the latest published work [16] can accommodate stateful services, it cannot generate a response message that contains accurate non-categorical numeric data. In addition, there are limits regarding how far back the LSTM-based method can take the recorded messages into account. LSTM has a sequential structure and uses back-propagation to train the model; therefore, it takes a long time to train on a normal system [24].

3. Problem Definition

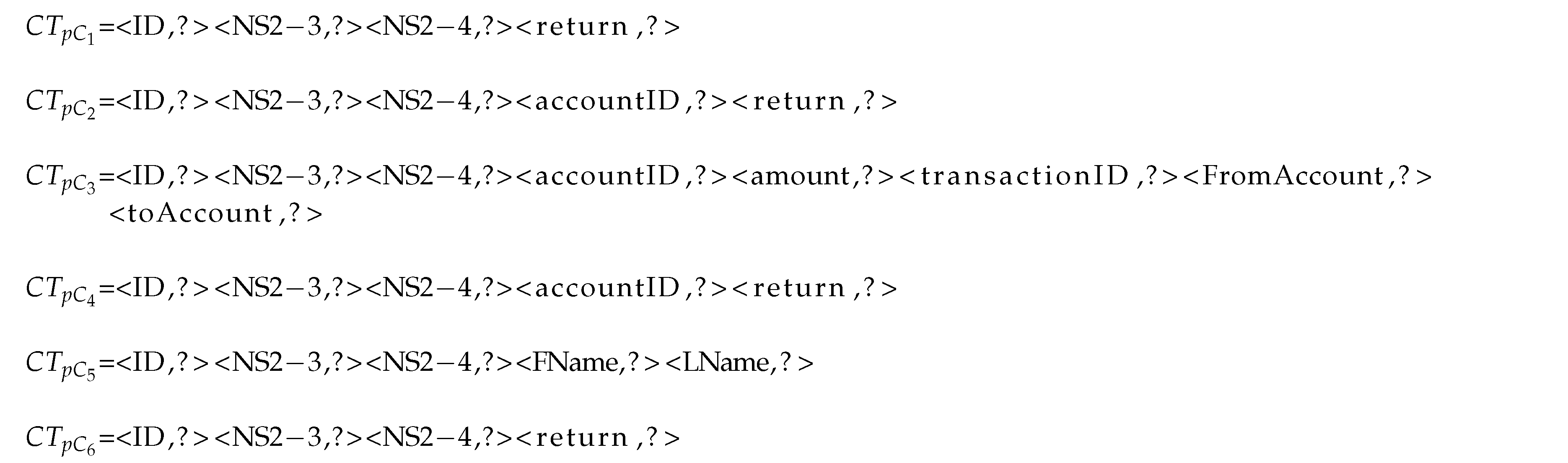

Software services communicate with each other by exchanging messages, often with the premise that one service sends a request to another service expecting to receive a response (or possibly several responses) to the request within a certain amount of time. The types of messages that are exchanged often follow the rules defined by an underlying protocol. Specifically, these types must adhere to the (i) specific structures on how information is encoded and transmitted over a network and (ii) temporal dependencies that determine in which order certain messages can be exchanged.

In this work, we assume that each request message emitted by a service will always result in a single response. In situations where a protocol does not strictly follow the notion of request/response pairs (e.g., LDAP [25] search requests may result in multiple responses), we apply the technique that was proposed by Du et al. [14] to convert exchanged messages into request/response pairs.

Different protocols apply varying techniques in order to structure the information to be transmitted and how the resulting structure is encoded into a format suitable for effective communication over a computer network: some protocols use a textual encoding for transmission (e.g., SOAP), whilst others use a binary encoding (e.g., LDAP).

Herein, we assume that we have a suitable encoder/decoder that can read messages sent in a protocol’s “native” format and convert them to a non-empty sequence of key/value pairs with unique keys, and vice versa. If a protocol allows for the repeated use of a key within a message (for example, in LDAP [26]), then a suitable strategy is applied to make keys unique within a message (e.g., adding a number prefix or postfix to each repeated key).
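One possible form of such a strategy is sketched below in Python: a number is appended to every key that occurs more than once in a decoded message. The function name `make_keys_unique`, the `_` separator, and the sample LDAP-like pairs are assumptions made for illustration; the sketch also assumes that no original key already ends in such a numbered postfix.

```python
from collections import Counter


def make_keys_unique(pairs):
    """Turn a decoded list of (key, value) pairs into a message with unique keys.

    Keys that occur once are kept as-is; repeated keys receive a numeric
    postfix in order of appearance (attr_1, attr_2, ...).
    """
    totals = Counter(key for key, _ in pairs)
    seen = Counter()
    message = {}
    for key, value in pairs:
        if totals[key] > 1:
            seen[key] += 1
            key = f"{key}_{seen[key]}"
        message[key] = value  # dicts preserve insertion order (Python 3.7+)
    return message


# Hypothetical decoded pairs with a repeated key.
decoded = [("dn", "cn=alice"), ("attr", "mail"), ("attr", "phone")]
unique = make_keys_unique(decoded)
```

Numbering in order of appearance keeps the mapping deterministic, so the same raw message always yields the same keys.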

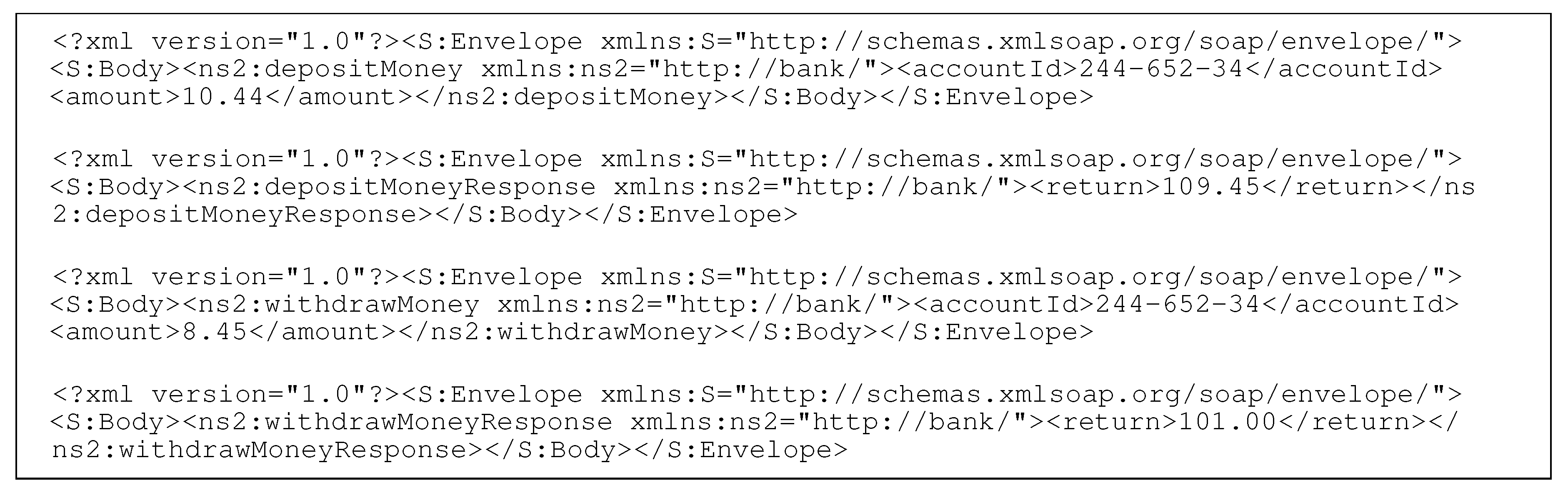

Finally, we assume that we can identify a specific key across all request messages of a protocol that defines the request type (or operation type) of a given request (e.g., depositMoney or withdrawMoney; Figure 2). If the key for the request type cannot be readily identified, then we can use the technique proposed by Hossain et al. [27] to generate such a key. A domain expert may also manually apply annotations. Response messages may or may not have an equivalent response type indicator. However, the proposed approach does not require the presence of explicit response types.

Given these assumptions, we define a number of constructs needed to formally express our approach. First, we have the set of keys, denoted by $\mathcal{K}$, and require that equality and inequality be defined for the elements of $\mathcal{K}$. We use $k$ and $k_i$ to denote elements of $\mathcal{K}$.

Furthermore, we have the set of values, denoted by $\mathcal{V}$, and require that equality and inequality be defined for values. We use $v$ and $v_i$ to denote the elements of $\mathcal{V}$. A message $m$ is a non-empty sequence of key/value pair bindings in which each key $k_i$ appears at most once. We use $m$ and $m_i$ to denote messages, denote the set of all messages as $\mathcal{M}$, and use the notation $\langle k_1 \mapsto v_1, \ldots, k_n \mapsto v_n \rangle$ to denote a message with keys $k_1, \ldots, k_n$ and the corresponding values $v_1, \ldots, v_n$. We write $keys(m)$ to denote the set of keys that are used in a message $m$. In the example above, $keys(m) = \{k_1, \ldots, k_n\}$.

A specific key/value pair $\langle k, v \rangle$ is often referred to as a field, and we use the terminology that a message is defined by a non-empty sequence of fields. We also use the notation $m_{req}$ and $m_{res}$ to explicitly denote request and response messages, respectively, if this is not immediately obvious from the context.

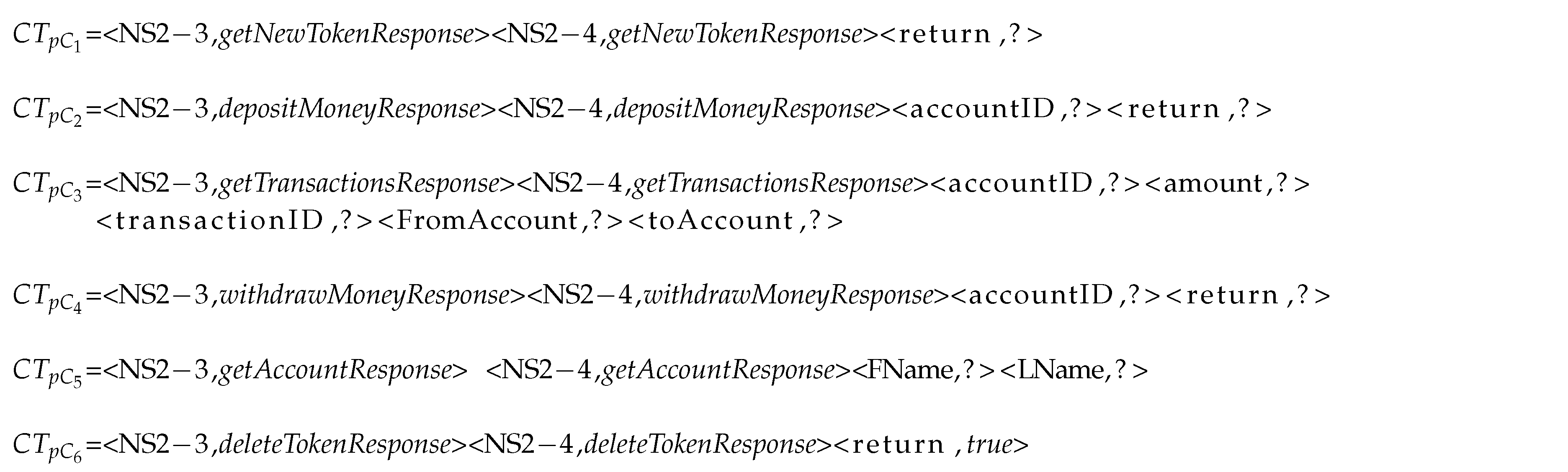

Consider the four XML-encoded messages of a banking service that are given in Figure 2: the first message defines a depositMoney request; the second message is the corresponding response; the third message represents a withdrawMoney request; and the fourth and final message is the corresponding response. depositMoney and withdrawMoney denote two of the six possible request types of the corresponding protocol.

We encode the following four messages into a non-empty sequence of fields (i.e., key/value pairs):

The value of the key ‘NS2’ encodes the request type of both request messages, whereas the values of the other two keys denote information of the corresponding requests.

In this work, we refer to a request message and its corresponding response as an interaction. In the banking service example, the first two messages form one interaction and the last two messages form another. We use $\mathcal{I}$ to denote the set of interactions and $I$, $I_1$, etc. to denote individual interactions. Finally, we denote a non-empty sequence of interactions $I_1, I_2, \ldots$ as a trace $T$.
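The constructs defined so far (messages as sequences of fields with unique keys, interactions, and traces) map naturally onto simple data types. The following Python sketch is one possible encoding, not the representation used by CSV. The key ‘NS2’ is taken from the banking example; the other key names (`accountId`, `amount`, `balance`) and all values are hypothetical, since the concrete fields of Figure 2 are not reproduced here.

```python
from typing import Dict, List, NamedTuple, Set

# A message: fields (key/value pairs) with unique keys, in insertion order.
Message = Dict[str, str]


class Interaction(NamedTuple):
    """A request message together with its corresponding response."""
    request: Message
    response: Message


# A trace: a non-empty sequence of interactions.
Trace = List[Interaction]


def keys(m: Message) -> Set[str]:
    """The set of keys used in message m."""
    return set(m)


def request_type(m: Message, type_key: str = "NS2") -> str:
    """Value of the key assumed to hold the request (operation) type."""
    return m[type_key]


# Hypothetical banking interactions, for illustration only.
deposit = Interaction(
    request={"NS2": "depositMoney", "accountId": "42", "amount": "50"},
    response={"accountId": "42", "balance": "150"},
)
withdraw = Interaction(
    request={"NS2": "withdrawMoney", "accountId": "42", "amount": "30"},
    response={"accountId": "42", "balance": "120"},
)
trace: Trace = [deposit, withdraw]
```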

We define a stateless protocol as one in which the values of a response message can be generated from its request message alone. In a stateful protocol, a service may need the current request as well as previous relevant interactions to generate some response values. The number of relevant interactions needed to generate a response field can vary from service to service, from message type to message type, and from field to field. If we denote the number of relevant previous interactions for a response field by $n$, then we have $n = 0$ for stateless protocols and $n \geq 1$ for stateful protocols.

A categorical variable is a variable that can be assigned one of a limited number of possible values. For convenience in processing, these values may be assigned numeric indices; for example, the K possible values of a categorical variable may be labelled 1 to K. The numbers assigned in this way are arbitrary and only provide a label for each specific value. Because these labels do not carry numeric meaning, they cannot be manipulated as numbers. Instead, the valid operations are equivalence, set membership, and distribution-related operations, such as joint probabilities. As a result, we consider that categorical fields do not need mathematical calculations to be generated; therefore, we identify the numeric fields that can be considered as categorical and remove them from the list of numeric fields.
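The text does not state how numeric fields that behave categorically are identified. Purely as an illustration, the Python sketch below applies one simple heuristic that is an assumption of this example and not the criterion used by CSV: a numeric response field is treated as categorical when it takes at most `max_distinct` different values across the recorded responses. The function name, threshold, and sample data are hypothetical.

```python
def split_numeric_fields(responses, max_distinct=5):
    """Partition numeric response keys into (numeric, categorical) sets.

    Assumed heuristic: a numeric field with few distinct observed values is
    a label rather than a quantity, and is therefore treated as categorical.
    """
    observed = {}
    for response in responses:
        for key, value in response.items():
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, (int, float)) and not isinstance(value, bool):
                observed.setdefault(key, set()).add(value)
    categorical = {k for k, vals in observed.items() if len(vals) <= max_distinct}
    numeric = set(observed) - categorical
    return numeric, categorical


# Hypothetical recorded responses: a status code and a running balance.
responses = [{"status": i % 2, "balance": 100 + 10 * i, "note": "ok"} for i in range(8)]
numeric, categorical = split_numeric_fields(responses)
```

A threshold of this kind needs enough recorded data to be meaningful: with very short traces, every numeric field would look categorical.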

Given these preliminaries, we can now formally define the problem of service virtualisation as follows: given a sequence of interactions $I_1, I_2, \ldots$ and a request message $m_{req}$, a virtual service is to generate a response $m_{res}$ that resembles as closely as possible the response a real service would create; specifically, it contains exactly the set of keys the real service would create, with the values expected from the real service.

We can split the service virtualisation problem into four sub-problems:

(i) What keys does a virtual service need to generate in the response message?

(ii) What values does it need to generate for each of these keys?

(iii) Which messages are relevant to generate the specific fields’ values?

(iv) How many relevant messages are required to generate these values?

We will address these four sub-problems in the following sections with special focus on generating the values for numeric fields.

Given that the proposed solution uses machine learning models, any machine learning classifier can be added to the collection of functions used to analyse the data; consequently, CSV’s capability is bounded by the ability of these machine learning models to identify relationships in the data. In this study, we consider numeric modules that discover linear and nonlinear relationships between numeric fields, such as multiplication and exponentiation, and that can predict these fields when a large amount of data is available. However, the values of some fields, such as random fields, encrypted fields, hash fields, and tokens, are produced by complex algorithms designed to prevent their regeneration. Using machine learning algorithms to accurately generate such values seems impossible and is beyond the scope of this work. Therefore, we exclude the aforementioned fields from our evaluations.

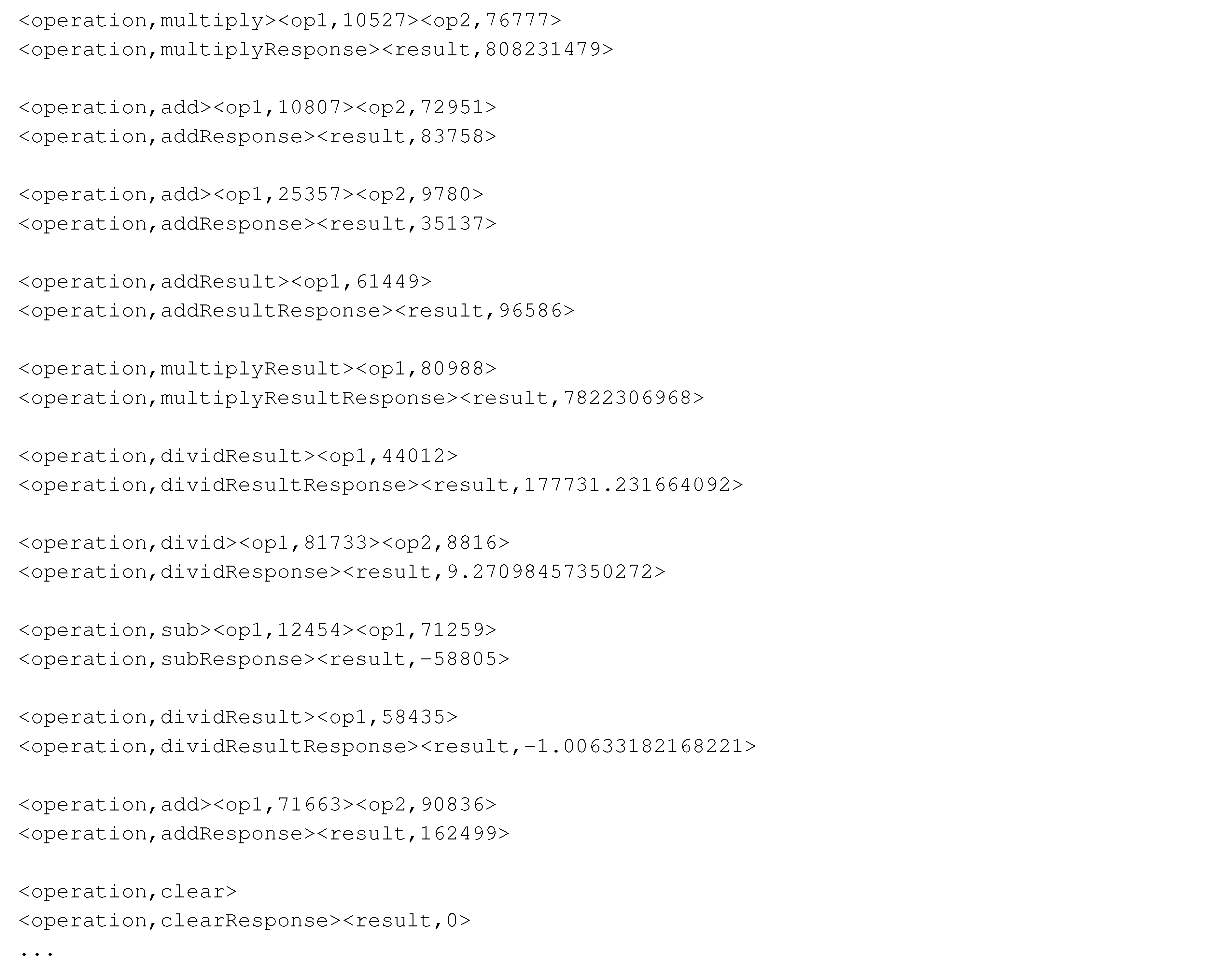

For example, the banking data set, which contains getNewToken, deleteToken, getTransactions, getAccount, deposit, and withdraw transactions, has linear relationships. The calculator data set, which includes the plus, minus, multiply, and division operations, is an example of both linear and nonlinear (i.e., exponential) relationships.
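To make the notion of a linear relationship in a stateful service concrete, the Python sketch below fits, per request type, a linear model that predicts the balance in a response from the balance in the previous response and the amount in the current request (i.e., one relevant previous interaction). This is an illustrative least-squares fit and not the CSV implementation, which was written in Matlab. The key names `type`, `amount`, and `balance`, the single-account trace, and the helper names are assumptions.

```python
from fractions import Fraction


def fit_linear(rows, targets):
    """Exact least-squares weights w for targets ~ rows . w (no intercept)."""
    n = len(rows[0])
    # Augmented normal equations [X^T X | X^T y] in exact rational arithmetic.
    a = [
        [sum(Fraction(r[i]) * Fraction(r[j]) for r in rows) for j in range(n)]
        + [sum(Fraction(r[i]) * Fraction(t) for r, t in zip(rows, targets))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination; assumes X^T X is invertible.
    for col in range(n):
        pivot_row = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot_row] = a[pivot_row], a[col]
        pivot = a[col][col]
        a[col] = [x / pivot for x in a[col]]
        for r in range(n):
            if r != col:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [a[i][n] for i in range(n)]


def training_rows(trace, request_type):
    """Features: previous response balance and current request amount."""
    rows, targets = [], []
    for (_, prev_resp), (req, resp) in zip(trace, trace[1:]):
        if req["type"] == request_type:
            rows.append([prev_resp["balance"], req["amount"]])
            targets.append(resp["balance"])
    return rows, targets


def predict(weights, features):
    return sum(w * x for w, x in zip(weights, features))


# Hypothetical single-account trace of (request, response) pairs.
trace = [
    ({"type": "deposit", "amount": 100}, {"balance": 100}),
    ({"type": "deposit", "amount": 50}, {"balance": 150}),
    ({"type": "withdraw", "amount": 30}, {"balance": 120}),
    ({"type": "deposit", "amount": 20}, {"balance": 140}),
    ({"type": "withdraw", "amount": 15}, {"balance": 125}),
    ({"type": "deposit", "amount": 5}, {"balance": 130}),
    ({"type": "withdraw", "amount": 60}, {"balance": 70}),
]
deposit_w = fit_linear(*training_rows(trace, "deposit"))
withdraw_w = fit_linear(*training_rows(trace, "withdraw"))
```

On this trace the fitted weights correspond to "previous balance plus amount" for deposits and "previous balance minus amount" for withdrawals, which a stateless technique cannot express because the previous balance does not appear in the current request.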

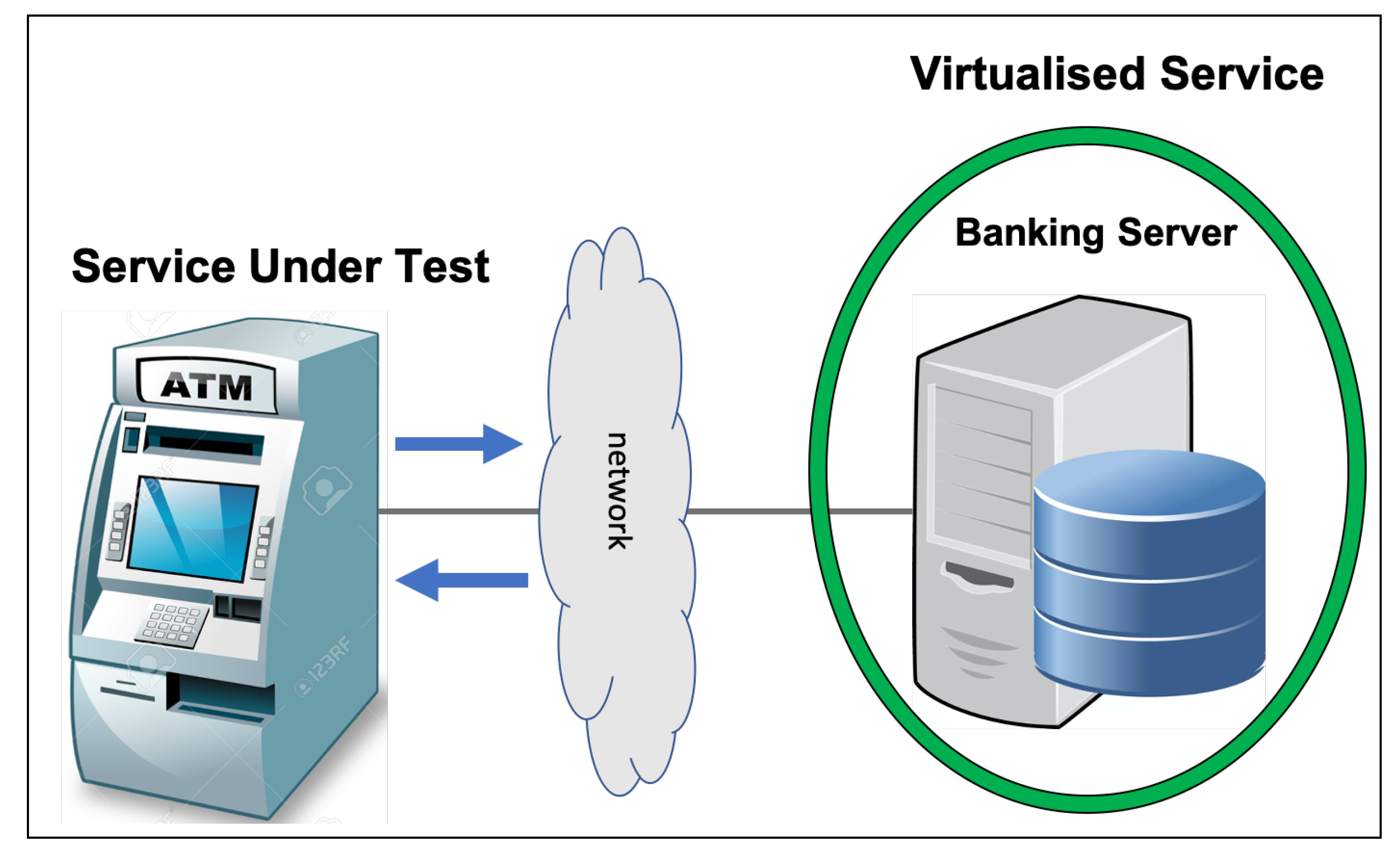

7. Evaluation

This section contains the result of testing CSV on three data sets. CSV was implemented in Matlab. In the banking scenario, the ATM as the SUT sends either a

or

request message with the specific amount that is related to one of the existing account IDs as part of its interaction. The virtualised service responds to the request from the ATM. A model with

consecutive messages was correctly selected as the optimal model. Filter 2

was correctly identified as the appropriate filter. Given that deposits and withdrawals are the only interactions that contain numeric fields in the responses, the experiments were based on these types of interactions.

Table 2 shows that CSV can accurately derive the target field for all deposits and withdrawals of the test set.

Table 3 shows the numbers of test cases used and correctly virtualised responses.

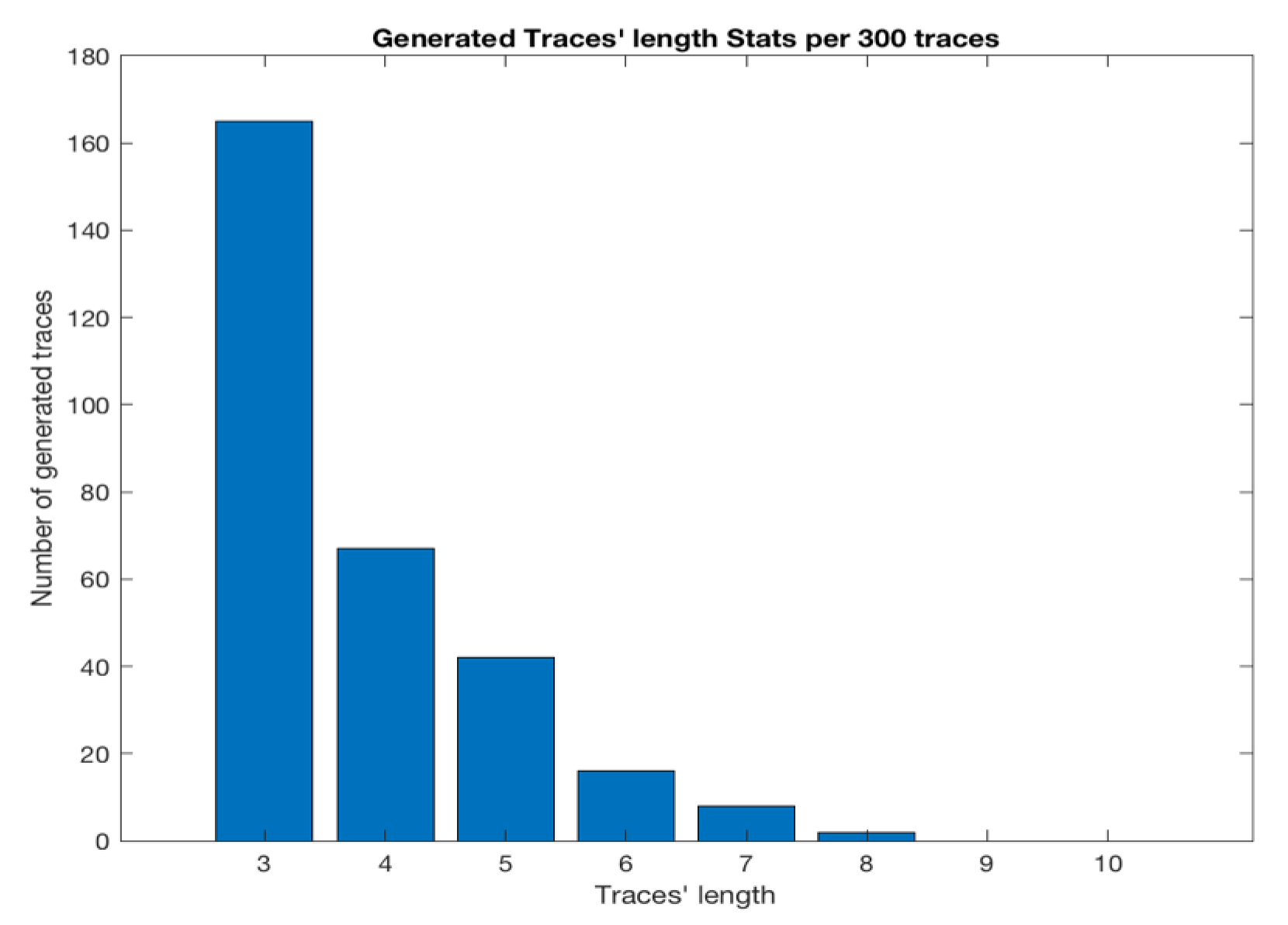

In the case of the calculator data set, CSV generates accurate values for the result fields of all seven different message types. The result value in the response of the request was identified as a constant by the component that creates the RT. The model with linear complexity achieved the optimal accuracy and it was selected for the , , and request types. The SMOReg function with a polykernel model optimally performed in terms of accuracy for and . In all cases, the interactions with in the responses were the evidence collections, which achieved the best accuracy. Therefore, the corresponding filter was chosen as a basis for the choice of evidence. In operations with two operands, such as multiply, the number of relevant messages in the trace is correctly identified as , because all of the information that the model needs is in the request. In single-operand cases, the system correctly chooses .

In the LDAP data set, the ‘creating RT’ component attempts to find an RT by using simple intra-message and inter-message (request—response) dependencies; therefore, it detects the equivalence between request and response . Given the values always equal to one that in the , it identifies the in the as the constant in the RT. The numeric field of in the other RTs are identified as equal to the same key in their corresponding request types as the result of the ‘creating RT’ component.

Table 2 compares the results of CSV with those achieved by Opaque SV when generating non-categorical numeric data in the banking, calculator and LDAP data sets. In this work, accuracy is defined as the ratio of correctly generated non-categorical numeric values in the response messages to the number of all non-categorical numeric values in all of the responses that were generated. CSV accurately generates all numeric fields for all three data sets, with an error rate (residuals) of zero. Opaque SV generates 100% accurate results in the case of LDAP, which only has equality relationships; it also successfully generates responses to the clear request type of the calculator data set, which requires a constant value of zero. However, Opaque SV was not designed to create values for numeric fields; accordingly, it failed in all cases of the banking data set.
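The accuracy measure defined above can be written down directly. The Python sketch below is one reading of that definition, assuming that expected and generated responses are aligned lists of key/value dictionaries and that the set of non-categorical numeric keys is known; the function name and sample values are hypothetical and are not taken from Table 2.

```python
def numeric_accuracy(expected, generated, numeric_keys):
    """Ratio of correctly generated numeric values to all expected numeric values."""
    total = 0
    correct = 0
    for exp, gen in zip(expected, generated):
        for key in numeric_keys:
            if key in exp:
                total += 1
                if gen.get(key) == exp[key]:
                    correct += 1
    # With no numeric values to generate, report 0.0 rather than divide by zero.
    return correct / total if total else 0.0


# Hypothetical responses: the second generated balance is wrong.
expected = [{"balance": 150, "status": "ok"}, {"balance": 120, "status": "ok"}]
generated = [{"balance": 150, "status": "ok"}, {"balance": 100, "status": "ok"}]
score = numeric_accuracy(expected, generated, {"balance"})
```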

Table 3 shows the number of correctly generated response values. The creation of the responses included generating the template and the response values. Although CSV can cover the majority of the fields, it cannot predict certain other fields, such as encrypted fields, hash fields, and tokens, which were excluded from our evaluations.

Finally, Table 4 compares the related SV solutions and the proposed CSV solution on four different benchmarks. As the table shows, CSV presents an automatic solution that achieves high accuracy in generating numeric fields. In addition, CSV learns from the recorded interactions in a short time, whereas the other solutions fall short in one or more benchmarks. Even though both Kaluta and the commercial SV tools achieve high accuracy, they require service specifications, a long time, and massive human effort to build the virtualised services manually.