Abstract

COVID-19, caused by SARS-CoV-2, has recently resulted in a global pandemic. With no approved vaccine or treatment, governments around the world have advised their citizens to remain at home in an effort to control the spread of the disease. The goal of controlling the spread of the virus is to prevent strain on hospitals. In this paper, we focus on how non-invasive methods are being used to detect COVID-19 and to assist healthcare workers in caring for COVID-19 patients. Early detection of COVID-19 allows early isolation, which prevents further spread. This study outlines the advantages and disadvantages of each method and breaks down the methods applied in current state-of-the-art approaches. In addition, the paper highlights future research directions that need further exploration to produce innovative technologies for controlling this pandemic.

1. Introduction

Since late 2019, countries around the world have been experiencing a global pandemic through the emergence and spread of the potentially fatal COVID-19 (COronaVIrus Disease 2019), caused by the SARS-CoV-2 (Severe Acute Respiratory Syndrome CoronaVirus 2) virus [1]. COVID-19 causes patients to develop a fever and respiratory difficulties such as coughing or shortness of breath [2,3,4]. Data collected from patients show that most deaths occurred in people with underlying health conditions, with elderly people at a higher risk of death [5]. The first confirmed case is considered to have occurred in Wuhan, China, in December 2019, and some of the early cases are thought to be traced to seafood markets trading live animal species such as bats and snakes [6,7,8,9]. The virus is likely related to bats, and it is suspected that it may have been transmitted to humans through bats sold as food [10,11]. The exact origin of the virus is still unknown; it has also been suggested that the virus could have originated in pangolins, which are natural hosts of coronaviruses [12]. The pangolin is unlikely to be the direct source of the outbreak, as the coronaviruses found in the animal differ from SARS-CoV-2 [13]; however, it could have served as an intermediate host. Following these suspicions, the markets were shut down in China [14]. The virus spread rapidly throughout China and eventually throughout the world. The World Health Organisation (WHO) declared the outbreak a Public Health Emergency of International Concern on 30 January 2020 [15,16] and characterized it as a pandemic on 11 March 2020. Although new discoveries are still being made at the time of writing, the virus has been found to be highly contagious, which has led to its rapid spread throughout the world [17]. The virus spreads primarily through respiratory droplets from an infected person [18], which can be expelled when the person coughs or sneezes.
The droplets can then infect others directly via the eyes, mouth or nose when they are within one or two meters of an infected person [19]. However, 2 m is not always enough distance; for example, tobacco smoke has been observed to travel over 9 m from a lung source [20]. This uncertainty has led to the recommendation of face masks as a protective measure. The effectiveness of masks is debated, but the WHO recommends wearing a mask when in contact with COVID-19 patients [21,22]. The droplets can also be passed to others indirectly, because they can persist on surfaces for long periods [23]. Another factor in the rapid spread is that people infected with COVID-19 can be contagious during the early stages of infection while showing no symptoms [24]. As a result, people may believe they are not sick while unknowingly spreading the virus. One of the main challenges of the COVID-19 pandemic is how the spread of the virus can be controlled. The rapid spread of COVID-19 has highlighted how the world's population interacts when faced with a pandemic [25]. Governments around the world have issued guidelines requiring their citizens to adhere to lockdown rules. Currently, the best strategy to control the spread of COVID-19 is to ensure social distancing until a vaccine or an effective treatment can be produced [26,27]. The National Health Service (NHS) of the United Kingdom expects increased demand for its services as more COVID-19 patients are admitted and as staff sick leave rises when staff members contract the disease [28]. Technology is being rapidly introduced into healthcare to develop systems that can ease the demand on the health service [29,30,31]. Any assistance from healthcare technology frees up valuable clinical resources to focus on other areas of care.
In this paper, we review state-of-the-art non-contact sensing techniques, how these technologies can assist in the detection and care of people suffering from COVID-19, and how they can help to reduce the spread of the disease, primarily from patients to healthcare workers such as doctors, nurses, and care staff.

Search Strategy

The following search terms, and variations of them, were used in the Google Scholar, MDPI, ScienceDirect and IEEE databases: radar breathing detection tachypnea, RGB-thermal breathing detection, Terahertz COVID-19, ultrasound non-contact lungs, ultrasound imaging, CT Scanning COVID-19, X-ray COVID-19, Camera COVID-19 Detection, Radar COVID-19 diagnosis, Thermography COVID-19, Terahertz COVID-19 detection, thermography non-contact, COVID-19 symptoms, Ultrasound Non-contact.

2. Non-Contact Sensing to Detect COVID-19 Symptoms

Non-contact sensing is the ability to detect information without direct contact with a subject. In healthcare, non-contact sensing can be used to monitor the human body without devices physically touching it. Non-contact techniques are considered highly valuable in dealing with a highly infectious disease such as COVID-19, as contact may contribute to the spread of the disease; with these techniques, healthcare workers do not need to make physical contact with patients in order to monitor them. Wearable devices, by contrast, pose risks to healthcare workers, who must make physical contact with patients to attach them. Even when precautions such as gloves and face masks are taken, the risk is lower still if contact with patients can be removed completely. Healthcare sensing technologies aim to collect information from a person that can either be processed by Artificial Intelligence (AI) to provide decision support or be analyzed directly by a clinician to diagnose a disease or monitor existing conditions. The use of AI can help to relieve pressure on hospital staff as they work to manage resources during the global pandemic. Non-contact remote sensing technology can sense such healthcare markers without introducing anything to the body (e.g., wearable devices). Wearable devices can be uncomfortable for some users, who may then remove them, leading to misplacement or damage [32]. Non-contact techniques can assist both in the detection of COVID-19 and in the care of patients suffering from it. This allows quick diagnosis, helps healthcare professionals make clearer judgements on the treatment of the patient, and allows quarantine action to be taken. Vital-sign monitoring can provide great assistance in the fight against COVID-19 for several reasons.
It can detect irregular breathing patterns, which are a major symptom of COVID-19, and it can also monitor the health of patients already suffering from COVID-19. Although COVID-19 affects the respiratory system [33,34], it has also been shown to affect the cardiovascular system [16]. Non-contact methods can also monitor heartbeats and therefore provide monitoring of the patient's cardiovascular system. Non-contact sensing that monitors these vital signs can therefore aid in the detection and treatment of COVID-19. The non-contact techniques described in this paper include computed tomography (CT) scans, X-rays, camera technology, ultrasound technology, radar technology, radio frequency (RF) signal sensing, thermography and terahertz sensing. Table 1 details the advantages and disadvantages of each technique. These methods can be combined with AI to support diagnosis. Currently, testing for COVID-19 is performed using a swab test, and the results are returned the next day but may be delayed by up to 72 h [35]. This paper reviews the state-of-the-art literature that uses these non-contact methods to assist patients suffering from COVID-19. Table 2 summarizes the literature covered in this review.

Table 1.

Summary of Non-Invasive Techniques.

Table 2.

Summary of Current Literature.

2.1. CT Scanning

One non-invasive technique for detecting COVID-19 is computed tomography (CT) scanning [47]. This process involves taking several X-ray images of a person's chest to create a 3D image of the lungs. Trained professionals review the images for abnormalities, distinguishing normal lung tissue from regions that appear to be infected. Infection can lead to inflammation of the tissue, which is visible in the CT images. CT has previously been used to look for pneumonia, an infection of the lungs that affects them in a similar way to COVID-19. Although COVID-19 activity in the lungs is more prominent in the later stages of infection, research has shown that CT scans achieve a sensitivity of 86–98% [48]. The technique is non-contact, as nothing is directly introduced into the patient's body. However, if a patient is infected with COVID-19, the surface of the CT scanning machine is likely to contain droplets dispensed by the patient, so it must be cleaned effectively to prevent the spread of the virus to the next patient scanned. Cleaning surfaces can nevertheless be considered safer for healthcare workers than physical contact with a patient, because droplets present on surfaces are likely to be static, whereas infected patients actively expel droplets while breathing and possibly through coughing, which is a symptom of COVID-19. CT scans can achieve high precision with high image resolution; however, the technology used to perform them is expensive. CT scanners are paid for out of hospital budgets and are part of the dedicated equipment used to assist hospital staff in patient diagnosis.
Their cost is proportionate to the level of accuracy they provide within the healthcare industry. The equipment is not portable, and image analysis requires skilled professionals. The CT scanner is a massive machine, large enough to scan the entire length of an adult lying down, and its considerable weight further limits its portability. Another disadvantage of CT scanning is that the patient is exposed to radiation [49]; the radiation levels in CT scans have been estimated to result in a cancer mortality risk of 0.08% for a 45-year-old adult [50]. Recently, AI has been applied to CT images for the diagnosis of COVID-19 [51]. AI can support the skilled professionals who analyze the images produced by CT scanners: if AI can assist with the detection and prediction of lung disease, it can ease the workload of CT scan professionals. This in turn allows greater care of patients and more opportunity to ensure that appropriate safety precautions are taken to prevent the spread of COVID-19 to hospital staff or to other patients who may be at high risk from COVID-19.

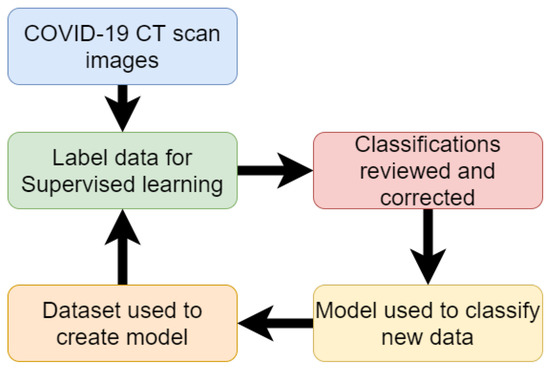

Fei Shan et al. [45] developed a deep-learning model that was able to detect COVID-19 and the level of infection within the lungs. Their model adopted a human-in-the-loop (HITL) strategy. In HITL, specialists label a small amount of training data and an initial model is trained on it. This initial model is then used to classify new data, the specialists correct any incorrect labels, and the enlarged data set is used to train further models. The process can be iterated numerous times to reduce the tedious task of labeling large amounts of data. The experiment used 249 confirmed cases of COVID-19 for training, ran three iterations, and achieved an accuracy of 91.6%. The first iteration used 36 labeled images for training and achieved an accuracy of 85.1% on the validation data. The labels were then corrected and added to the second iteration, which used 114 training images and achieved an accuracy of 91.0%. After a further round of corrections, the third iteration used all 249 training images and achieved an accuracy of 91.6%. This iterative approach greatly reduces the human involvement and time devoted to labeling the full data set. Figure 1 displays a flow chart of the human-in-the-loop process.

Figure 1.

Flow chart of work for detection of COVID-19 from CT scan (Reproduced from [45]).
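The human-in-the-loop procedure described above can be illustrated with a small sketch. It is not the implementation of Shan et al. [45]: a hypothetical nearest-centroid classifier on a single scalar feature stands in for the deep network, and `specialist` is a hypothetical callable standing in for the human expert who reviews each proposed label.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def fit_centroids(xs: Sequence[float], ys: Sequence[int]) -> Dict[int, float]:
    """Hypothetical stand-in for a deep model: the mean feature value per class."""
    sums: Dict[int, float] = {}
    counts: Dict[int, int] = {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(model: Dict[int, float], x: float) -> int:
    """Assign x to the class whose centroid is closest."""
    return min(model, key=lambda label: abs(model[label] - x))


def human_in_the_loop(
    seed_x: Sequence[float],
    seed_y: Sequence[int],
    batches: Sequence[Sequence[float]],
    specialist: Callable[[float], int],
) -> Tuple[Dict[int, float], int]:
    """Train, pre-label each new batch, let the specialist correct it, then retrain.

    Returns the final model and the number of labels the specialist had to change.
    """
    train_x: List[float] = list(seed_x)
    train_y: List[int] = list(seed_y)
    corrections = 0
    for batch in batches:
        model = fit_centroids(train_x, train_y)        # train on the labels so far
        proposed = [predict(model, x) for x in batch]  # model pre-labels new data
        corrected = [specialist(x) for x in batch]     # specialist reviews the labels
        corrections += sum(p != c for p, c in zip(proposed, corrected))
        train_x.extend(batch)                          # grow the labeled set
        train_y.extend(corrected)
    return fit_centroids(train_x, train_y), corrections
```

The specialist's workload is measured by `corrections`: only labels the model got wrong need human attention, which is where the labeling time saving of HITL comes from.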

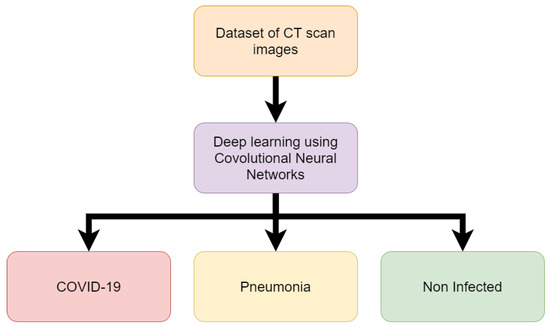

Li, Lin, et al. [37] used COVNet, a custom deep-learning neural network, to predict COVID-19 in CT images. The complete data set included 400 COVID-19 CT images, 1396 pneumonia CT images and 1173 non-infected CT images. The model takes CT images as input and extracts features of COVID-19 and pneumonia evidence found in them. The features are then combined, and the neural network predicts whether the CT images contain COVID-19 or pneumonia features or are those of a non-infected person. The model predicted COVID-19 with 90% sensitivity. It was able not only to distinguish infected from non-infected lungs but also to differentiate between COVID-19 and pneumonia, achieving a sensitivity of 87% for pneumonia. Once trained, the model was able to classify new samples within 4.51 s [37]. Figure 2 shows the process followed in this research.

Figure 2.

Flow chart of work for detection of COVID-19 from CT scan (Reproduced from [37]).

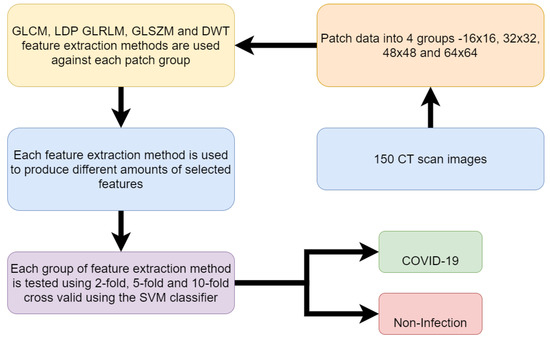

The research of Barstugan, Mucahid, et al. [42] applied machine learning to a data set of 150 CT images, 53 of which were infected. Patches were taken from the images; in image processing, patching is the process of dividing an image into smaller blocks of a fixed pixel size. Four patch sizes were used to create four different samples of patches: 16 × 16, 32 × 32, 48 × 48 and 64 × 64. The images were labeled as infected or non-infected with regard to COVID-19. The research applied several feature extraction methods to the images: Grey-Level Co-occurrence Matrix (GLCM), Local Directional Patterns (LDP), Grey-Level Run Length Matrix (GLRLM), Grey-Level Size Zone Matrix (GLSZM) and Discrete Wavelet Transform (DWT). A Support Vector Machine (SVM) algorithm was then used to classify the features extracted by each method, using 2-fold, 5-fold and 10-fold cross-validation. In k-fold cross-validation, the data set is divided into k folds, and each fold takes a turn as the testing data while the remaining folds are used for training. This is repeated k times, so that each fold serves as the testing data exactly once. The results are then compiled, so that every sample receives a prediction from a model that did not see it during training. The best accuracy achieved across the various experiments was 99.64%, obtained using the Discrete Wavelet Transform feature extraction method with 10-fold cross-validation on the 48 × 48 patches. A flow chart of the methodology followed in this research is shown in Figure 3.

Figure 3.

Flow chart of work for detection of COVID-19 from CT scan (Reproduced from [42]).
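The two data-preparation steps in the Barstugan et al. [42] study, patch extraction and k-fold cross-validation, can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code: the patches are assumed to be non-overlapping with incomplete edge patches discarded, and samples are dealt to folds in round-robin order (real pipelines usually shuffle first). The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]


def extract_patches(image: Image, size: int) -> List[List[List[float]]]:
    """Split a 2-D image (a list of rows) into non-overlapping size x size patches.

    Assumption: pixels at the right or bottom edge that cannot fill a whole
    patch are dropped.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - size + 1, size):
        for left in range(0, width - size + 1, size):
            patch = [list(row[left:left + size]) for row in image[top:top + size]]
            patches.append(patch)
    return patches


def k_fold_splits(n_samples: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, test_indices) pairs; each sample is tested exactly once.

    Samples are assigned to folds round-robin; shuffle the indices beforehand
    if the data are ordered by class.
    """
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    splits = []
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits
```

For example, a 100 × 100 image yields four 48 × 48 patches under these assumptions, and `k_fold_splits(10, 5)` holds out two different samples in each of its five splits.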

The above papers have shown through experimentation that CT scanning can reveal the signs of COVID-19 within a person's lungs. The research has also shown how AI can make predictions on CT images and help determine whether COVID-19 is present in the lungs. The studies have further shown that AI can determine the level of infection present in COVID-19 patients and can differentiate between pneumonia and COVID-19 infections, which is valuable because both diseases attack the lungs in a similar way. Table 3 provides a breakdown of the above research papers on using CT scans for non-contact COVID-19 diagnosis and care of patients.

Table 3.

Summary of CT Scanning works.

2.2. X-Ray Imaging

X-ray images can provide an analysis of the health of the lungs and are frequently used to diagnose pneumonia [52]. The same strategy is applied to X-ray images of the lungs to reveal the visual indicators of COVID-19 [53,54], owing to the similarities between COVID-19 and pneumonia as diseases that affect the respiratory system. As with CT scans, X-ray equipment is expensive and requires professionals to analyze the images.

The paper entitled “Automatic detection of coronavirus disease (COVID-19) using x-ray images and deep convolutional neural networks” used X-ray images of COVID-19-infected lungs and of lungs not infected with COVID-19 to create a data set, which was then used to predict COVID-19 automatically. The X-ray images were passed into a ResNet-50 Convolutional Neural Network (CNN), which achieved 98% accuracy in differentiating between COVID-19-infected and non-infected X-ray images [38].

The paper of Zhang, Jianpeng, et al. [44] applied deep-learning techniques to a data set of X-ray images from 70 patients confirmed to have COVID-19, supplemented with images of patients with pneumonia from a public chest X-ray image data set. The model is used to identify differences between the X-ray images of patients infected with COVID-19 and those of patients suffering from pneumonia. The proposed deep-learning model achieved a sensitivity of 90% in detecting COVID-19 and a specificity of 87.84% in detecting non-COVID-19 cases.
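Sensitivity and specificity, the two metrics reported above and in the CT studies, answer different questions: sensitivity is the fraction of true COVID-19 cases the model detects, and specificity is the fraction of non-COVID-19 cases it correctly rejects. The following sketch computes both from lists of labels; the labels in the usage note are hypothetical and are not data from any reviewed study.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for a binary classifier.

    sensitivity = TP / (TP + FN)   (share of positive cases detected)
    specificity = TN / (TN + FP)   (share of negative cases correctly rejected)
    """
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1
            else:
                fn += 1
        elif pred == positive:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)
```

For instance, with 10 hypothetical COVID-19 cases of which 9 are detected, and 8 non-COVID-19 cases of which 7 are correctly rejected, the function returns a sensitivity of 0.9 and a specificity of 0.875.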

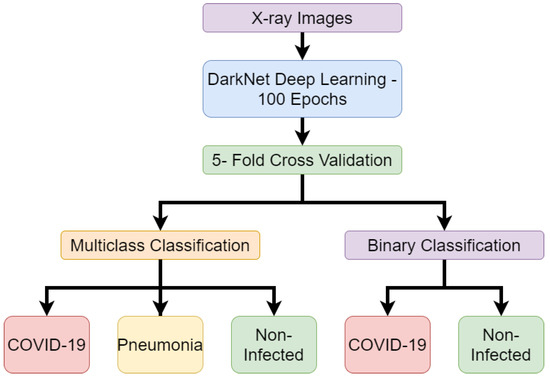

Ozturk, Tulin, et al. [39] also used deep learning to classify X-ray images of patients, with real-time classification of COVID-19. The experiments made use of a custom deep-learning model named DarkNet to perform binary and multi-class classifications. In binary classification, the model chooses between two classes; in this experiment, it distinguishes between COVID-19 and no findings of disease. In multi-class classification, the model must choose among more than two classes, so it must decide which of several classes each sample belongs to rather than simply separating two groups. Here, the multi-class classification distinguishes between no findings, pneumonia and COVID-19. The experiments used a publicly available data set of COVID-19 X-ray images and another publicly available data set of non-infected and pneumonia X-ray images. The complete data set included 127 COVID-19, 500 pneumonia and 500 non-infected X-ray images. The data set was divided into 80% training data and 20% testing data, and the DarkNet model was trained for 100 epochs using 5-fold cross-validation. An epoch is one complete pass of the training data through the neural network. In each epoch, the network adjusts its weights and biases to reduce its errors, so repeating epochs allows the model to fine-tune what it has learned and improve its accuracy until it reaches its best performance. The results produced an accuracy of 98% for binary classification and 87.02% for multi-class classification.
A lower result is expected as the number of classes increases, because the model must recognize more features to distinguish between several classes rather than between two data patterns. The complete process followed in this work is detailed in Figure 4.

Figure 4.

Flow chart of work for detection of COVID-19 from X-ray images (Reproduced from [39]).
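The notion of an epoch described above can be made concrete with a deliberately tiny model. The sketch below is not DarkNet: it trains a one-feature logistic regression with full-batch gradient descent on hypothetical toy data, so each epoch is exactly one pass over the training set followed by one update of the weight and bias, and the recorded loss shows the model improving epoch by epoch.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(
    xs: Sequence[float], ys: Sequence[int], epochs: int, lr: float = 0.5
) -> Tuple[float, float, List[float]]:
    """Full-batch gradient descent; returns (weight, bias, loss per epoch).

    The loss stored for each epoch is the mean cross-entropy of the weights
    at the start of that epoch, i.e. before that epoch's update.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    history: List[float] = []
    for _ in range(epochs):
        grad_w = grad_b = loss = 0.0
        for x, y in zip(xs, ys):  # one pass over all training samples
            p = sigmoid(w * x + b)
            loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / n       # adjust weight and bias once per epoch
        b -= lr * grad_b / n
        history.append(loss / n)
    return w, b, history
```

Deep networks such as those reviewed here usually split each epoch into mini-batches with many updates, but the idea of repeated passes over the same data is the same.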

2.3. Camera Technology

Camera technology can provide non-contact sensing by observing the chest movements of an individual [55]. This can be achieved by capturing video footage of chest movements or, in the case of depth cameras, by calculating depth using two sensors a known distance apart [56]. The information captured using camera technology can assist in the detection of COVID-19, as one of the symptoms of the disease is an increase in the patient's breathing rate.
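Once a camera has produced a chest-displacement signal over time, a breathing rate can be estimated from it. The sketch below is one simple approach, chosen for illustration rather than taken from any reviewed paper: it removes the mean and counts upward zero crossings (one per breath). The tachypnea threshold of 20 breaths per minute is an assumption based on a commonly used adult cut-off, not a value from this review.

```python
import math
from typing import Sequence

# Assumption: a commonly used adult cut-off; clinical thresholds vary.
TACHYPNEA_THRESHOLD_BPM = 20.0


def breaths_per_minute(signal: Sequence[float], fs_hz: float) -> float:
    """Estimate breathing rate from a chest-displacement signal sampled at fs_hz.

    Each breath is counted as one upward crossing of the signal's mean level.
    """
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if a < 0 <= b)
    duration_s = len(signal) / fs_hz
    return crossings * 60.0 / duration_s


def is_tachypnea(bpm: float, threshold: float = TACHYPNEA_THRESHOLD_BPM) -> bool:
    return bpm > threshold
```

Counting crossings in a finite window can miss a breath at the window edges, so a 60 s window gives a resolution of about one breath per minute; the reviewed camera studies instead feed the depth images to deep-learning models.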

The paper “Combining Visible Light and Infrared Imaging for Efficient Detection of Respiratory Infections such as COVID-19 on Portable Device” used RGB-thermal camera footage for the detection of COVID-19. The footage was used with machine-learning binary classification to distinguish normal from abnormal breathing in people wearing protective masks. This is relevant because masks are now commonly worn around the world as a preventive measure against COVID-19. The research collected real-world data and applied deep learning to achieve an accuracy of 83.7%, reported as the highest result in the literature for breathing detection using RGB-thermal imaging with deep-learning models. This research can provide a screening method that works with protective masks and can therefore help to control the spread of COVID-19 [41].

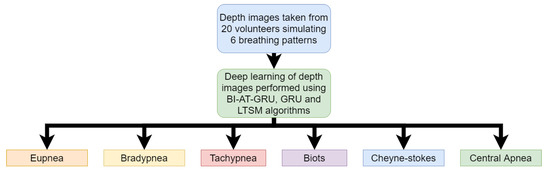

Wang, Yunlu, et al. [36] used Microsoft Kinect cameras to take depth images of volunteers breathing. A total of 20 volunteers were asked to sit on a chair and simulate six different breathing patterns: eupnea, bradypnea, tachypnea, Biot's, Cheyne–Stokes and central apnea. Each of these patterns has a different breathing rate. COVID-19 patients display the rapid breathing pattern of tachypnea. During data collection, a spirometer was used to ensure that the volunteers simulated each breathing pattern correctly. The depth images were used in a deep-learning neural network model to classify abnormal breathing patterns, including the tachypnea associated with COVID-19. The model used was the BI-AT-GRU algorithm. The Gated Recurrent Unit (GRU) is a simplified version of the Long Short-Term Memory (LSTM) algorithm. The BI-AT-GRU algorithm achieved an accuracy of 94.5%. This research shows how depth images can be used to identify the tachypnea breathing pattern observed in COVID-19 patients in real time. The process map for this research is shown in Figure 5.

Figure 5.

Flow chart of work for detection of COVID-19 from Depth Camera Image (Reproduced from [36]).
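The statement that a GRU is a simplified LSTM can be seen from its update equations. In the standard GRU formulation below, an update gate $z_t$ and a reset gate $r_t$ control how much of the previous hidden state $h_{t-1}$ is kept when processing input $x_t$, whereas an LSTM uses three gates and a separate cell state. These are the generic GRU equations, not the exact architecture of [36]; reading "BI-AT" as a bidirectional GRU with an attention layer on top is an assumption based on the name. Some libraries swap the roles of $z_t$ and $1 - z_t$ in the last line.

$$
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

Here $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, and $W$, $U$, $b$ are learned weights and biases. A bidirectional GRU runs one such recurrence forward and one backward over the breathing sequence and concatenates the two hidden states at each time step.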

The primary disadvantage of this method is the cost of thermal and depth cameras and of their operators. Although the price of these cameras is falling gradually, it remains substantially high [57]. The equipment is less expensive than that used for CT and X-ray scanning, but still more expensive than other methods discussed later in this paper. The research done with cameras has shown that the devices can be used with AI to detect COVID-19 without contact with the body. This allows more techniques to be implemented in which COVID-19 can be diagnosed safely without increasing the risk of spreading the disease.

2.4. Ultrasound Technology

Ultrasound technology can be applied to detect respiratory failure. An ultrasound machine uses high-frequency sound waves to image structures and movements inside the body [58]. The sound waves bounce off different parts of the body, creating echoes that are detected by the probe and used to construct a moving image. Lung ultrasound has developed considerably in recent years [59]. Ultrasound technology can be used to detect COVID-19 with a non-contact method that decreases the risk of healthcare professionals becoming infected by patients [60,61]. Ultrasound becomes contactless when a separate ultrasound transmitter and receiver are used: respiratory movement between the transmitter and receiver creates a Doppler effect, which can be used to build a contactless breathing monitor [62,63,64]. Smartphones can also be used to generate the ultrasound signal and to process ultrasound images in a portable setting [65]. The disadvantage of ultrasound technology is that patients must prepare before an ultrasound can effectively image the body [66]; this preparation can include not eating for a few hours beforehand.
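The Doppler effect used by contactless ultrasound breathing monitors can be quantified. For a wave reflected from a surface moving at speed $v$ much smaller than the speed of sound $c$, the received frequency shifts by approximately $\Delta f = 2 v f_0 \cos\theta / c$, where the factor of 2 accounts for the round trip. The sketch below evaluates this; the 20 kHz carrier and 1 cm/s chest speed are illustrative assumptions, not parameters from [62,63,64].

```python
import math

SPEED_OF_SOUND_AIR = 343.0  # m/s, approximate value in air at about 20 degrees C


def doppler_shift_hz(
    f0_hz: float, velocity_m_s: float, angle_rad: float = 0.0, c: float = SPEED_OF_SOUND_AIR
) -> float:
    """Approximate Doppler shift of a wave reflected from a moving surface.

    Positive velocity means the surface (e.g. the chest) moves towards the
    transducer; angle_rad is between the motion and the line of sight.
    Valid only when |velocity| is much smaller than c.
    """
    return 2.0 * velocity_m_s * math.cos(angle_rad) * f0_hz / c
```

With a 20 kHz carrier and a chest moving at 1 cm/s, `doppler_shift_hz(20_000.0, 0.01)` gives a shift of roughly 1.2 Hz, which changes sign as the chest switches between inhaling and exhaling; tracking that alternating shift over time reveals the breathing cycle.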

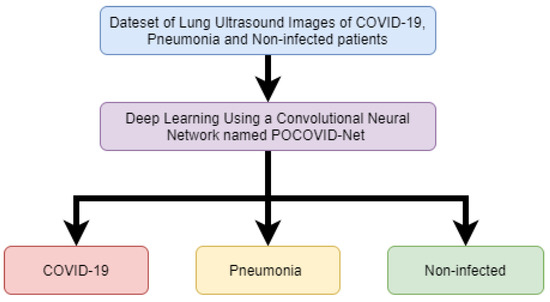

The work of Born, Jannis, et al. [46] shows that ultrasound can be used with deep-learning models to distinguish between COVID-19, pneumonia and no infection within the lungs. The research compiled a data set of lung ultrasound video recordings: a total of 64 videos, of which 39 are of COVID-19 patients, 14 of pneumonia patients and 11 of non-infected patients. The authors developed a deep-learning convolutional neural network named POCOVID-Net, which achieved an accuracy of 89%. These ultrasound devices can diagnose 4 to 5 patients per hour. Figure 6 shows a simplified flow graph of the experiment undertaken in this paper.

Figure 6.

Flow chart of work for detection of COVID-19 from Ultrasound Technology (Reproduced from [46]).

2.5. Radar Technology

Radar technology can be used to monitor the respiratory system within a home environment and provide a quick response if abnormalities suggesting COVID-19 are found. Radar systems can use frequency-modulated continuous waves (FMCW) to observe the Doppler effect when a person moves [67,68,69,70], which allows the fine movements associated with breathing to be monitored. AI is then applied to classify the images captured by the radar systems, and trained AI models can classify new images in real time [71,72,73]. Research has shown that radar technology can achieve 94% accuracy for breathing-rate detection and 80% accuracy for heart-rate detection [34,74,75]. The Israeli military has used radar systems to monitor the vital signs of COVID-19 patients, with the goal of preventing medical staff from becoming infected while caring for patients [40,76]. Tachypnea, a symptom of COVID-19, can be detected in a patient using radar sensing technology [63,68,77]. Radar monitoring of vital signs does not interfere with the patient; however, radar systems have high power requirements and the technology comes at a high cost [78].
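Two textbook relations explain why FMCW radar can resolve the small chest movements of breathing. The chirp bandwidth $B$ sets the range resolution $\Delta R = c / (2B)$, which separates the person from other reflectors, while sub-millimetre chest motion is read from the phase of the reflected signal, since a displacement $d$ changes the round-trip phase by $\Delta\phi = 4\pi d / \lambda$. The sketch below evaluates both; the 60 GHz carrier and 4 GHz bandwidth are illustrative assumptions typical of millimetre-wave radars, not parameters from the reviewed studies.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest separation between two targets that a chirp of this bandwidth resolves."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


def displacement_from_phase_m(delta_phase_rad: float, carrier_hz: float) -> float:
    """Chest displacement implied by a change in the phase of the reflected signal.

    The round trip doubles the path change, so delta_phase = 4 * pi * d / wavelength.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return wavelength * delta_phase_rad / (4.0 * math.pi)
```

Under these assumptions, a 4 GHz chirp resolves targets about 3.7 cm apart, and at 60 GHz (wavelength about 5 mm) a phase change of π radians corresponds to a chest displacement of only about 1.25 mm, which is why phase is used to track breathing.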

2.6. Radio Frequency Signals

Radio frequency (RF) signal sensing can detect the vital signs of individuals by sensing the minute movements of the chest made during breathing and as the heart beats [73,79,80,81,82]. This technique can be used to monitor the vital signs of patients independently of their activities [83]. RF sensing detects movement by observing the Channel State Information (CSI), which shows how the amplitudes of the RF signals change as movement occurs between an RF transmitter and receiver [84,85]. The Emerald system has been developed to monitor COVID-19 patients using RF signals: it captures the breathing-related movement of COVID-19 patients and uses AI to infer their breathing rate. This allows doctors to monitor patients from a safe distance, preventing the risk of infection to staff and giving patients the comfort of not wearing monitoring devices [43]. RF signals have been used in previous research to detect breathing rates, including abnormal breathing patterns such as tachypnea [86], which is a symptom of COVID-19 [36]. Systems have been developed for real-time monitoring of breathing patterns using RF signals [87]. However, RF signals can be vulnerable to other movements within the room, which create noise in the Channel State Information and can in turn cause false readings [88,89].
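A common way to turn a CSI amplitude stream into a breathing rate is to look for the strongest periodic component within the range of plausible breathing frequencies. The sketch below does this with NumPy's FFT; it is a generic illustration, not the method of Emerald [43] or of the other cited systems. The 0.1–1.0 Hz search band (6–60 breaths per minute, wide enough to include tachypnea) is an assumption, and restricting the search to that band is also what keeps the faster heartbeat component from being mistaken for breathing.

```python
import numpy as np


def dominant_breathing_rate(csi_amplitude, fs_hz: float, band_hz=(0.1, 1.0)) -> float:
    """Estimate breaths per minute from a CSI amplitude time series.

    Assumption: breathing is the strongest periodic component within band_hz.
    """
    x = np.asarray(csi_amplitude, dtype=float)
    x = x - x.mean()                                  # remove the static (DC) level
    spectrum = np.abs(np.fft.rfft(x))                 # magnitude spectrum
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs_hz)    # bin centre frequencies in Hz
    in_band = (freqs >= band_hz[0]) & (freqs <= band_hz[1])
    peak_hz = freqs[in_band][np.argmax(spectrum[in_band])]
    return float(peak_hz * 60.0)
```

The frequency resolution is 1/T for a window of T seconds, so a 60 s window resolves the rate to one breath per minute; the movement noise described above would appear as extra spectral energy that can shift or mask this peak.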

2.7. Thermography

Thermography is a widely used non-contact technique within the medical community [90,91]. It has been used for mass screening of people in previous outbreaks such as H1N1 and Ebola, and can therefore be applied in the current COVID-19 pandemic [92]. Thermography works by measuring the infrared radiation emitted by the human body to calculate its temperature [93]. Abnormal body temperature is a well-known indication of infection [94], and symptoms of COVID-19 have been found to include temperatures above the normal body temperature of 36–37 degrees Celsius [95,96]. Thermography can also be used with AI to monitor the respiratory systems of patients and detect breathing patterns such as bradypnea or tachypnea [97]. It has been recommended as an early detection strategy for COVID-19 among large numbers of people in places such as airports [98]. Deep learning has been applied to thermal images, with classifications of new images made in under a second [99,100].
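The link between emitted infrared radiation and temperature can be sketched with the Stefan–Boltzmann law, $M = \varepsilon \sigma T^4$, inverted to give $T$. This is a simplification: real thermal cameras measure radiance in a limited infrared band and rely on calibration, so the code is illustrative only. The skin emissivity of 0.98 and the use of 37 °C (the upper end of the normal range quoted above) as a screening threshold are assumptions, and the function names are hypothetical.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4

# Assumptions for illustration: typical human-skin emissivity and the upper end
# of the 36-37 degrees Celsius normal range as a screening threshold.
SKIN_EMISSIVITY = 0.98
SCREENING_THRESHOLD_C = 37.0


def temperature_from_exitance_c(exitance_w_m2: float, emissivity: float = SKIN_EMISSIVITY) -> float:
    """Invert M = emissivity * sigma * T^4 and return the temperature in Celsius."""
    kelvin = (exitance_w_m2 / (emissivity * STEFAN_BOLTZMANN)) ** 0.25
    return kelvin - 273.15


def above_normal(temp_c: float, threshold_c: float = SCREENING_THRESHOLD_C) -> bool:
    """Flag a reading above the assumed screening threshold."""
    return temp_c > threshold_c
```

Because $T$ depends on the fourth root of the measured power, an error in the assumed emissivity of a few percent shifts the estimate by roughly a degree, which is one reason screening cameras are calibrated against a reference source.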

2.8. Terahertz

Terahertz sensing directs terahertz beams at a person's body to detect the motion of the chest caused by the heart beating or the lungs inhaling and exhaling [101,102]. Terahertz sensing is a non-contact method that can achieve superior penetration depth [103], which helps when sensing through a patient's clothes. Terahertz systems can work in a similar fashion to radar imaging, except that they use terahertz waves and observe the Doppler effect of the terahertz wave while the patient breathes [104]. Terahertz waves are electromagnetic waves with frequencies of around 0.1–10 terahertz (THz) [103,105]. Terahertz sensing can be used to detect diseases such as COVID-19 [106]. As with radar systems, AI is used to classify the images showing the Doppler effect of the terahertz waves, and once a deep-learning model has been fully trained, it can quickly classify new images. Terahertz radiation is considered a first choice for radiation-based sensing because it is not harmful to living cells [107]. Terahertz spectroscopy, for example, is a powerful, low-cost tool in medical research and diagnosis that is used to analyze human breath samples [108].
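The 0.1–10 THz range quoted above corresponds to wavelengths between millimetres and tens of micrometres, which follows directly from $\lambda = c/f$ with $c \approx 3.0 \times 10^{8}$ m/s:

$$
\lambda = \frac{c}{f}, \qquad
\lambda_{0.1\,\mathrm{THz}} = \frac{3.0\times10^{8}\ \mathrm{m\,s^{-1}}}{1.0\times10^{11}\ \mathrm{Hz}} = 3\ \mathrm{mm}, \qquad
\lambda_{10\,\mathrm{THz}} = \frac{3.0\times10^{8}\ \mathrm{m\,s^{-1}}}{1.0\times10^{13}\ \mathrm{Hz}} = 30\ \mu\mathrm{m}.
$$

These wavelengths are shorter than those of the millimetre-wave radars discussed in the previous subsections, which places terahertz sensing between radar and infrared thermography in the electromagnetic spectrum.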

2.9. Comparison to Contact Methods

This paper has focused on non-contact techniques for diagnosing COVID-19. Given the nature of the disease, it is widely acknowledged that reducing contact between people is the best way to reduce its spread, so non-contact diagnostic technologies are preferred. Wearable devices can also be used to monitor vital signs [109,110], and this monitoring can help detect the onset of COVID-19 symptoms. Popular devices such as the Apple Watch, Fitbit and Oura Ring are widely available and monitor the heart rate [111]. The Oura Ring has been found to show changes in body temperature associated with COVID-19. This finding has led to several studies on the use of Oura Rings for the early detection of COVID-19 [112,113]. These technologies are known as personal health trackers. For COVID-19 detection, they are better suited to self-diagnosis: if a device informs its user that they are displaying COVID-19 symptoms, the user can take action. Non-contact methods serve healthcare workers better because they allow staff to assist patients while reducing contact with them, and thus the risk of infection.
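Wearable trackers typically express temperature changes relative to the wearer's own baseline, because absolute skin temperature varies between individuals and with sensor placement. The following minimal sketch of such baseline-relative flagging assumes one nightly mean skin temperature per night. The 14-night rolling baseline and the 0.5 °C alert threshold are hypothetical parameters chosen for illustration, not values taken from the cited studies.

```python
from statistics import mean


def flag_temperature_deviation(nightly_temps_c, baseline_nights=14, threshold_c=0.5):
    """Return the indices of nights whose skin temperature rises above a personal baseline.

    The baseline for night i is the mean of the `baseline_nights` nights before it,
    so the first `baseline_nights` nights are only used to build the baseline.
    All parameter defaults are illustrative assumptions.
    """
    flagged = []
    for i in range(baseline_nights, len(nightly_temps_c)):
        baseline = mean(nightly_temps_c[i - baseline_nights:i])
        if nightly_temps_c[i] - baseline > threshold_c:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical data: two stable weeks, then a rising temperature.
    temps = [36.0] * 14 + [36.1, 36.9, 37.0]
    print(flag_temperature_deviation(temps))  # prints [15, 16]
```

The rolling baseline includes recent nights, so a sustained rise gradually lifts the baseline itself. A fuller implementation might exclude already-flagged nights from the baseline.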

2.10. Future Directions

This section outlines future directions that may be suitable for expanding on the research presented in this paper. The reviewed research has shown that COVID-19 can be detected using various techniques. This section discusses how that research can be taken further to work in real-life scenarios.

- One of the biggest challenges with using CT scanning to diagnose COVID-19 is its lack of portability. Although the method is non-contact, individuals still have to travel to a location where a scanner is available. Because CT images have high resolution, AI can be used to detect COVID-19 from them. Future work on this method should therefore aim to create highly accurate models that can eventually automate COVID-19 detection. Automation would allow faster diagnosis, so that more patients can be tested. It would also free up the staff who operate CT scanners and analyze the scans.

- X-ray machines, like CT scanners, are not portable, and professionals are required to operate them and analyze the resulting images. The research presented in this paper has shown that AI can be used to predict whether COVID-19 is present in the lungs. As with CT scans, AI can make these predictions and speed up the process. The more data are collected, the more accurate the models are likely to become. Initially, the predictions may need to be confirmed by humans, but these checks could become less frequent over time. AI has been shown to distinguish with high accuracy not only between COVID-19 and non-infected cases but also between COVID-19 and pneumonia. It has therefore proved capable of accurate classification.

- Thermal and depth cameras can detect the irregular breathing patterns associated with COVID-19 symptoms. However, although the camera can detect an irregular breathing pattern, it cannot categorically identify COVID-19 as the cause. In a real-life situation, the camera method may be better suited to monitoring vulnerable people who are considered at high risk from COVID-19. Once the monitoring system has identified irregular breathing patterns, an alarm can be raised with a carer or family member. Appropriate action can then be taken, such as a more accurate diagnosis by CT or X-ray scanning.

- Ultrasound technology can capture moving images of the lungs and detect COVID-19, and it can be made portable by using mobile devices. AI can be applied to recognize whether COVID-19 or pneumonia is present in the lungs. This research could be extended to develop mobile applications that capture an ultrasound of the lungs and then use an AI model to predict whether COVID-19 is present. Not all phones have the necessary hardware to achieve this. Even so, the non-contact method would allow others to use the devices for diagnosis at a safe distance.

- Radar technology can identify the breathing patterns of individuals. As with camera technology, an irregular breathing pattern can raise cause for concern, but radar cannot isolate COVID-19 as the sole cause. Radar technology can also be used to monitor individuals. Because of its high cost, however, it is more likely to serve as a monitoring system in a hospital than in a home environment.

- Future work should consider using RF signals to detect the breathing patterns that indicate COVID-19 symptoms. RF systems can be implemented inexpensively using the WiFi technology already present in many homes. This allows individuals to be monitored without the costs of the radar or camera technologies highlighted in this paper.

- In previous pandemics, thermography has been shown to measure the body temperatures of large numbers of people, so it can be used for mass screening in the current COVID-19 pandemic. Because thermography can also detect respiratory issues, these systems could also be used for COVID-19 detection.

- Terahertz sensing can provide deeper penetration and detect small movements, such as the motion of the chest during breathing, so it can be used for the early detection of COVID-19. The earlier the disease is detected, the sooner isolation can begin, which reduces further spread.
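Several of the directions above share the same monitoring pattern: thermal and depth cameras, radar, and WiFi-based RF sensing. In each case, a sensor produces periodic breathing-rate estimates, and an alarm is raised with a carer or family member when breathing stays irregular. The following sketch shows one simple, sensor-agnostic way to implement that alarm rule. The class name and the 12–20 breaths per minute normal range are hypothetical choices. So is the requirement of three consecutive abnormal readings, which is meant to suppress one-off false alarms. As the bullets note, an alert indicates irregular breathing, not a COVID-19 diagnosis.

```python
from dataclasses import dataclass, field


@dataclass
class BreathingAlarm:
    """Raise an alert after a run of abnormal breathing-rate estimates (hypothetical rule)."""
    low_bpm: float = 12.0          # assumed lower bound of normal adult resting rate
    high_bpm: float = 20.0         # assumed upper bound
    consecutive_needed: int = 3    # abnormal readings required before alerting
    _run: int = field(default=0, init=False, repr=False)

    def update(self, rate_bpm: float) -> bool:
        """Feed one estimate; return True only when an abnormal run first reaches the limit."""
        if rate_bpm < self.low_bpm or rate_bpm > self.high_bpm:
            self._run += 1
        else:
            self._run = 0          # a normal reading resets the run
        return self._run == self.consecutive_needed


if __name__ == "__main__":
    alarm = BreathingAlarm()
    # Hypothetical per-minute estimates from a camera, radar or WiFi sensor.
    readings = [16, 25, 26, 27, 28, 15, 30]
    print([alarm.update(r) for r in readings])
    # [False, False, False, True, False, False, False]
```

Returning True only when the run first reaches the limit means that a long abnormal episode produces one alert rather than one alert per reading.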

3. Conclusions

The works reviewed in this paper have shown that COVID-19 can be detected using contactless techniques. CT scans and X-ray imaging provide high accuracy and high image resolution, but the equipment is expensive and not portable. Thermal and depth cameras have been used to detect breathing patterns associated with COVID-19 symptoms; however, these cameras are expensive and need to be operated by a professional. Radar technology can also detect breathing patterns, but it has the disadvantages of high capital and operating expenditure. RF signals offer low cost and high accuracy compared with other non-invasive technologies. These technologies can be combined with AI, which frees skilled professionals to assist in other areas of healthcare during the pandemic. The non-contact methods also protect healthcare workers from contracting the disease. Future work on non-contact detection should focus on RF systems, because they are inexpensive and easier to implement in a home environment than the other methods. This gives users the advantage of being able to remain in isolation.

Author Contributions

Conceptualization, W.T., Q.H.A., S.A., S.A.S., A.K., M.A.I.; formal analysis, W.T., S.A.S., K.D., A.K.; investigation, W.T., S.A.S., K.D.; resources, writing, review and editing, W.T., Q.H.A., S.A., S.A.S., A.K., M.A.I.; funding acquisition, Q.H.A., M.A.I.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

William Taylor’s studentship is funded by CENSIS UK through the Scottish Funding Council in collaboration with British Telecom. This work is supported in part by EPSRC DTG EP/N509668/1 Eng, EP/T021020/1 and EP/T021063/1.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Singhal, T. A review of coronavirus disease-2019 (COVID-19). Indian J. Pediatr. 2020, 87, 281–286.

- Pan, L.; Mu, M.; Yang, P.; Sun, Y.; Wang, R.; Yan, J.; Li, P.; Hu, B.; Wang, J.; Hu, C.; et al. Clinical characteristics of COVID-19 patients with digestive symptoms in Hubei, China: A descriptive, cross-sectional, multicenter study. Am. J. Gastroenterol. 2020, 115.

- Poyiadji, N.; Shahin, G.; Noujaim, D.; Stone, M.; Patel, S.; Griffith, B. COVID-19—Associated acute hemorrhagic necrotizing encephalopathy: CT and MRI features. Radiology 2020, 201187.

- Xu, Z.; Shi, L.; Wang, Y.; Zhang, J.; Huang, L.; Zhang, C.; Liu, S.; Zhao, P.; Liu, H.; Zhu, L.; et al. Pathological findings of COVID-19 associated with acute respiratory distress syndrome. Lancet Respir. Med. 2020, 8, 420–422.

- Wu, X.; Nethery, R.C.; Sabath, B.M.; Braun, D.; Dominici, F. Exposure to air pollution and COVID-19 mortality in the United States. medRxiv 2020.

- Hellewell, J.; Abbott, S.; Gimma, A.; Bosse, N.I.; Jarvis, C.I.; Russell, T.W.; Munday, J.D.; Kucharski, A.J.; Edmunds, W.J.; Sun, F.; et al. Feasibility of controlling COVID-19 outbreaks by isolation of cases and contacts. Lancet Glob. Health 2020, 8, 488–496.

- Jiang, S.; Xia, S.; Ying, T.; Lu, L. A novel coronavirus (2019-nCoV) causing pneumonia-associated respiratory syndrome. Cell. Mol. Immunol. 2020, 17, 554.

- Khan, M.A.; Atangana, A. Modeling the dynamics of novel coronavirus (2019-nCov) with fractional derivative. Alex. Eng. J. 2020, 59, 2379–2389.

- Nishiura, H.; Linton, N.M.; Akhmetzhanov, A.R. Initial cluster of novel coronavirus (2019-nCoV) infections in Wuhan, China is consistent with substantial human-to-human transmission. J. Clin. Med. 2020, 9, 488.

- Shereen, M.A.; Khan, S.; Kazmi, A.; Bashir, N.; Siddique, R. COVID-19 infection: Origin, transmission, and characteristics of human coronaviruses. J. Adv. Res. 2020, 24, 91–98.

- Lai, C.C.; Shih, T.P.; Ko, W.C.; Tang, H.J.; Hsueh, P.R. Severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) and corona virus disease-2019 (COVID-19): The epidemic and the challenges. Int. J. Antimicrob. Agents 2020, 105924.

- Liu, P.; Jiang, J.Z.; Wan, X.F.; Hua, Y.; Li, L.; Zhou, J.; Wang, X.; Hou, F.; Chen, J.; Zou, J.; et al. Are pangolins the intermediate host of the 2019 novel coronavirus (SARS-CoV-2)? PLoS Pathog. 2020, 16, e1008421.

- Xiao, K.; Zhai, J.; Feng, Y.; Zhou, N.; Zhang, X.; Zou, J.J.; Li, N.; Guo, Y.; Li, X.; Shen, X.; et al. Isolation and characterization of 2019-nCoV-like coronavirus from Malayan pangolins. BioRxiv 2020.

- Novel Coronavirus Pneumonia Emergency Response Epidemiology Team. The epidemiological characteristics of an outbreak of 2019 novel coronavirus diseases (COVID-19) in China. Zhonghua Liu Xing Bing Xue Za Zhi 2020, 41, 145.

- Spinelli, A.; Pellino, G. COVID-19 pandemic: Perspectives on an unfolding crisis. Br. J. Surg. 2020.

- Zheng, Y.Y.; Ma, Y.T.; Zhang, J.Y.; Xie, X. COVID-19 and the cardiovascular system. Nat. Rev. Cardiol. 2020, 17, 259–260.

- Dong, D.; Tang, Z.; Wang, S.; Hui, H.; Gong, L.; Lu, Y.; Xue, Z.; Liao, H.; Chen, F.; Yang, F.; et al. The role of imaging in the detection and management of COVID-19: A review. IEEE Rev. Biomed. Eng. 2020.

- Cai, J.; Sun, W.; Huang, J.; Gamber, M.; Wu, J.; He, G. Indirect virus transmission in cluster of COVID-19 cases, Wenzhou, China, 2020. Emerg. Infect. Dis. 2020, 26.

- Jones, N.R.; Qureshi, Z.U.; Temple, R.J.; Larwood, J.P.; Greenhalgh, T.; Bourouiba, L. Two metres or one: What is the evidence for physical distancing in covid-19? BMJ 2020, 370.

- Schroter, R.C. Social distancing for covid-19: Is 2 metres far enough? BMJ 2020, 369.

- Feng, S.; Shen, C.; Xia, N.; Song, W.; Fan, M.; Cowling, B.J. Rational use of face masks in the COVID-19 pandemic. Lancet Respir. Med. 2020, 8, 434–436.

- Howard, J.; Huang, A.; Li, Z.; Tufekci, Z.; Zdimal, V.; van der Westhuizen, H.M.; von Delft, A.; Price, A.; Fridman, L.; Tang, L.H.; et al. Face masks against COVID-19: An evidence review. Gen. Med Res. 2020.

- World Health Organization. Modes of Transmission of Virus Causing COVID-19: Implications for IPC Precaution Recommendations: Scientific Brief, 27 March 2020; Technical Report; World Health Organization: Geneva, Switzerland, 2020.

- Kooraki, S.; Hosseiny, M.; Myers, L.; Gholamrezanezhad, A. Coronavirus (COVID-19) outbreak: What the department of radiology should know. J. Am. Coll. Radiol. 2020, 17.

- Dowd, J.B.; Andriano, L.; Brazel, D.M.; Rotondi, V.; Block, P.; Ding, X.; Liu, Y.; Mills, M.C. Demographic science aids in understanding the spread and fatality rates of COVID-19. Proc. Natl. Acad. Sci. USA 2020, 117, 9696–9698.

- Salathé, M.; Althaus, C.L.; Neher, R.; Stringhini, S.; Hodcroft, E.; Fellay, J.; Zwahlen, M.; Senti, G.; Battegay, M.; Wilder-Smith, A.; et al. COVID-19 epidemic in Switzerland: On the importance of testing, contact tracing and isolation. Swiss Med. Wkly. 2020, 150, w20225.

- Lewnard, J.A.; Lo, N.C. Scientific and ethical basis for social-distancing interventions against COVID-19. Lancet Infect. Dis. 2020, 20, 631.

- Willan, J.; King, A.J.; Jeffery, K.; Bienz, N. Challenges for NHS hospitals during COVID-19 epidemic. BMJ 2020, 368, m1117.

- Yang, X.; Ren, X.; Chen, M.; Wang, L.; Ding, Y. Human Posture Recognition in Intelligent Healthcare. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; Volume 1437, p. 012014.

- Abbasi, Q.H.; Rehman, M.U.; Qaraqe, K.; Alomainy, A. Advances in Body-Centric Wireless Communication: Applications and State-of-the-Art; Institution of Engineering and Technology: Stevenage, UK, 2016.

- Taylor, W.; Shah, S.A.; Dashtipour, K.; Zahid, A.; Abbasi, Q.H.; Imran, M.A. An intelligent non-invasive real-time human activity recognition system for next-generation healthcare. Sensors 2020, 20, 2653.

- Tan, B.; Chen, Q.; Chetty, K.; Woodbridge, K.; Li, W.; Piechocki, R. Exploiting WiFi channel state information for residential healthcare informatics. IEEE Commun. Mag. 2018, 56, 130–137.

- Marini, J.J.; Gattinoni, L. Management of COVID-19 respiratory distress. JAMA 2020, 323, 2329–2330.

- Fan, D.; Ren, A.; Zhao, N.; Yang, X.; Zhang, Z.; Shah, S.A.; Hu, F.; Abbasi, Q.H. Breathing rhythm analysis in body centric networks. IEEE Access 2018, 6, 32507–32513.

- Your Coronavirus Test Result. Available online: https://www.nhs.uk/conditions/coronavirus-covid-19/testing-and-tracing/what-your-test-result-means/ (accessed on 23 September 2020).

- Wang, Y.; Hu, M.; Li, Q.; Zhang, X.P.; Zhai, G.; Yao, N. Abnormal respiratory patterns classifier may contribute to large-scale screening of people infected with COVID-19 in an accurate and unobtrusive manner. arXiv 2020, arXiv:2002.05534.

- Li, L.; Qin, L.; Xu, Z.; Yin, Y.; Wang, X.; Kong, B.; Bai, J.; Lu, Y.; Fang, Z.; Song, Q.; et al. Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology 2020, 200905.

- Narin, A.; Kaya, C.; Pamuk, Z. Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. arXiv 2020, arXiv:2003.10849.

- Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Acharya, U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020, 103792.

- Islam, S.M. Can Radar Remote Life Sensing Technology Help to Combat COVID-19? TechRxiv. Preprint 2020.

- Jiang, Z.; Hu, M.; Fan, L.; Pan, Y.; Tang, W.; Zhai, G.; Lu, Y. Combining visible light and infrared imaging for efficient detection of respiratory infections such as COVID-19 on portable device. arXiv 2020, arXiv:2004.06912.

- Barstugan, M.; Ozkaya, U.; Ozturk, S. Coronavirus (covid-19) classification using ct images by machine learning methods. arXiv 2020, arXiv:2003.09424.

- CSAIL Device Lets Doctors Monitor COVID-19 Patients from a Distance. Available online: https://www.csail.mit.edu/news/csail-device-lets-doctors-monitor-covid-19-patients-distance (accessed on 23 September 2020).

- Zhang, J.; Xie, Y.; Li, Y.; Shen, C.; Xia, Y. COVID-19 screening on chest X-ray images using deep learning based anomaly detection. arXiv 2020, arXiv:2003.12338.

- Shan, F.; Gao, Y.; Wang, J.; Shi, W.; Shi, N.; Han, M.; Xue, Z.; Shi, Y. Lung infection quantification of covid-19 in ct images with deep learning. arXiv 2020, arXiv:2003.04655.

- Born, J.; Brändle, G.; Cossio, M.; Disdier, M.; Goulet, J.; Roulin, J.; Wiedemann, N. POCOVID-Net: Automatic detection of COVID-19 from a new lung ultrasound imaging dataset (POCUS). arXiv 2020, arXiv:2004.12084.

- Yang, W.; Sirajuddin, A.; Zhang, X.; Liu, G.; Teng, Z.; Zhao, S.; Lu, M. The role of imaging in 2019 novel coronavirus pneumonia (COVID-19). Eur. Radiol. 2020, 1–9.

- Udugama, B.; Kadhiresan, P.; Kozlowski, H.N.; Malekjahani, A.; Osborne, M.; Li, V.Y.; Chen, H.; Mubareka, S.; Gubbay, J.B.; Chan, W.C. Diagnosing COVID-19: The disease and tools for detection. ACS Nano 2020, 14, 3822–3835.

- Ceniccola, G.D.; Castro, M.G.; Piovacari, S.M.F.; Horie, L.M.; Corrêa, F.G.; Barrere, A.P.N.; Toledo, D.O. Current technologies in body composition assessment: Advantages and disadvantages. Nutrition 2019, 62, 25–31.

- Brenner, D.J. Radiation risks potentially associated with low-dose CT screening of adult smokers for lung cancer. Radiology 2004, 231, 440–445.

- Shi, F.; Wang, J.; Shi, J.; Wu, Z.; Wang, Q.; Tang, Z.; He, K.; Shi, Y.; Shen, D. Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2020.

- Sethy, P.K.; Behera, S.K. Detection of coronavirus disease (COVID-19) based on deep features. Preprints 2020, 2020030300, 2020.

- Wang, L.; Wong, A. COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-Ray Images. arXiv 2020, arXiv:2003.09871.

- Ghoshal, B.; Tucker, A. Estimating uncertainty and interpretability in deep learning for coronavirus (COVID-19) detection. arXiv 2020, arXiv:2003.10769.

- Nam, Y.; Kong, Y.; Reyes, B.; Reljin, N.; Chon, K.H. Monitoring of heart and breathing rates using dual cameras on a smartphone. PLoS ONE 2016, 11, e0151013.

- Bhattacharya, A.; Vaughan, R. Deep learning radar design for breathing and fall detection. IEEE Sensors J. 2020, 20, 5072–5085.

- Elphick, H.E.; Alkali, A.H.; Kingshott, R.K.; Burke, D.; Saatchi, R. Exploratory study to evaluate respiratory rate using a thermal imaging camera. Respiration 2019, 97, 205–212.

- Powles, A.E.; Martin, D.J.; Wells, I.T.; Goodwin, C.R. Physics of ultrasound. Anaesth. Intensive Care Med. 2018, 19, 202–205.

- Mojoli, F.; Bouhemad, B.; Mongodi, S.; Lichtenstein, D. Lung ultrasound for critically ill patients. Am. J. Respir. Crit. Care Med. 2019, 199, 701–714.

- Soldati, G.; Smargiassi, A.; Inchingolo, R.; Buonsenso, D.; Perrone, T.; Briganti, D.F.; Perlini, S.; Torri, E.; Mariani, A.; Mossolani, E.E.; et al. Is there a role for lung ultrasound during the COVID-19 pandemic? J. Ultrasound Med. 2020.

- Buonsenso, D.; Pata, D.; Chiaretti, A. COVID-19 outbreak: Less stethoscope, more ultrasound. Lancet Respir. Med. 2020, 8, e27.

- Arlotto, P.; Grimaldi, M.; Naeck, R.; Ginoux, J.M. An ultrasonic contactless sensor for breathing monitoring. Sensors 2014, 14, 15371–15386.

- Al-Naji, A.; Al-Askery, A.J.; Gharghan, S.K.; Chahl, J. A system for monitoring breathing activity using an ultrasonic radar detection with low power consumption. J. Sens. Actuator Netw. 2019, 8, 32.

- Wang, T.; Zhang, D.; Wang, L.; Zheng, Y.; Gu, T.; Dorizzi, B.; Zhou, X. Contactless respiration monitoring using ultrasound signal with off-the-shelf audio devices. IEEE Internet Things J. 2018, 6, 2959–2973.

- Kim, K.C.; Kim, M.J.; Joo, H.S.; Lee, W.; Yoon, C.; Song, T.K.; Yoo, Y. Smartphone-based portable ultrasound imaging system: A primary result. In Proceedings of the 2013 IEEE International Ultrasonics Symposium (IUS), Prague, Czech Republic, 21–25 July 2013; pp. 2061–2063.

- Genc, A.; Ryk, M.; Suwała, M.; Żurakowska, T.; Kosiak, W. Ultrasound imaging in the general practitioner’s office–A literature review. J. Ultrason. 2016, 16, 78.

- Ding, C.; Zou, Y.; Sun, L.; Hong, H.; Zhu, X.; Li, C. Fall detection with multi-domain features by a portable FMCW radar. In Proceedings of the 2019 IEEE MTT-S International Wireless Symposium (IWS), Guangzhou, China, 19–22 May 2019; pp. 1–3.

- Shah, S.A.; Yang, X.; Abbasi, Q.H. Cognitive health care system and its application in pill-rolling assessment. Int. J. Numer. Model. Electron. Netw. Devices Fields 2019, 32, e2632.

- Yang, X.; Fan, D.; Ren, A.; Zhao, N.; Shah, S.A.; Alomainy, A.; Ur-Rehman, M.; Abbasi, Q.H. Diagnosis of the Hypopnea syndrome in the early stage. Neural Comput. Appl. 2020, 32, 855–866.

- Shah, S.A.; Tahir, A.; Ahmad, J.; Zahid, A.; Parvez, H.; Shah, S.Y.; Ashleibta, A.M.A.; Hasanali, A.; Khattak, S.; Abbasi, Q.H. Sensor fusion for identification of freezing of gait episodes using Wi-Fi and radar imaging. IEEE Sensors J. 2020.

- Fioranelli, F.; Shah, S.A.; Li, H.; Shrestha, A.; Yang, S.; Le Kernec, J. Radar sensing for healthcare. Electron. Lett. 2019, 55, 1022–1024.

- Gennarelli, G.; Ludeno, G.; Soldovieri, F. Real-time through-wall situation awareness using a microwave Doppler radar sensor. Remote Sens. 2016, 8, 621.

- Yang, X.; Shah, S.A.; Ren, A.; Fan, D.; Zhao, N.; Cao, D.; Hu, F.; Rehman, M.U.; Wang, W.; Von Deneen, K.M.; et al. Detection of essential tremor at the s-band. IEEE J. Transl. Eng. Health Med. 2018, 6, 1–7.

- Alizadeh, M.; Shaker, G.; De Almeida, J.C.M.; Morita, P.P.; Safavi-Naeini, S. Remote monitoring of human vital signs using mm-Wave FMCW radar. IEEE Access 2019, 7, 54958–54968.

- Fioranelli, F.; Le Kernec, J.; Shah, S.A. Radar for health care: Recognizing human activities and monitoring vital signs. IEEE Potentials 2019, 38, 16–23.

- Shah, S.A.; Fioranelli, F. Human activity recognition: Preliminary results for dataset portability using FMCW radar. In Proceedings of the 2019 International Radar Conference (RADAR), Toulon, France, 23–27 September 2019.

- Kim, S.H.; Han, G.T. 1D CNN based human respiration pattern recognition using ultra wideband radar. In Proceedings of the 2019 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Korea, 11–13 February 2019; pp. 411–414.

- Christenson, P.D.; Yang, C.X.; Kaabouch, N. A low cost through-wall radar for vital signs monitoring. In Proceedings of the 2019 IEEE International Conference on Electro Information Technology (EIT), Brookings, SD, USA, 31 July–1 August 2019; pp. 567–571.

- Liu, J.; Chen, Y.; Wang, Y.; Chen, X.; Cheng, J.; Yang, J. Monitoring vital signs and postures during sleep using WiFi signals. IEEE Internet Things J. 2018, 5, 2071–2084.

- Dong, B.; Ren, A.; Shah, S.A.; Hu, F.; Zhao, N.; Yang, X.; Haider, D.; Zhang, Z.; Zhao, W.; Abbasi, Q.H. Monitoring of atopic dermatitis using leaky coaxial cable. Healthc. Technol. Lett. 2017, 4, 244–248.

- Haider, D.; Ren, A.; Fan, D.; Zhao, N.; Yang, X.; Tanoli, S.A.K.; Zhang, Z.; Hu, F.; Shah, S.A.; Abbasi, Q.H. Utilizing a 5G spectrum for health care to detect the tremors and breathing activity for multiple sclerosis. Trans. Emerg. Telecommun. Technol. 2018, 29, e3454.

- Yang, X.; Shah, S.A.; Ren, A.; Zhao, N.; Zhao, J.; Hu, F.; Zhang, Z.; Zhao, W.; Rehman, M.U.; Alomainy, A. Monitoring of patients suffering from REM sleep behavior disorder. IEEE J. Electromagn. Microwaves Med. Biol. 2018, 2, 138–143.

- Liu, J.; Wang, Y.; Chen, Y.; Yang, J.; Chen, X.; Cheng, J. Tracking vital signs during sleep leveraging off-the-shelf WiFi. In Proceedings of the 16th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Hangzhou, China, 7–12 June 2015; pp. 267–276.

- Zhao, J.; Liu, L.; Wei, Z.; Zhang, C.; Wang, W.; Fan, Y. R-DEHM: CSI-based robust duration estimation of human motion with WiFi. Sensors 2019, 19, 1421.

- Chopra, N.; Yang, K.; Abbasi, Q.H.; Qaraqe, K.A.; Philpott, M.; Alomainy, A. THz time-domain spectroscopy of human skin tissue for in-body nanonetworks. IEEE Trans. Terahertz Sci. Technol. 2016, 6, 803–809.

- Shah, S.A.; Fioranelli, F. RF sensing technologies for assisted daily living in healthcare: A comprehensive review. IEEE Aerosp. Electron. Syst. Mag. 2019, 34, 26–44.

- Zeng, Y.; Wu, D.; Xiong, J.; Yi, E.; Gao, R.; Zhang, D. FarSense: Pushing the range limit of WiFi-based respiration sensing with CSI ratio of two antennas. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–26.

- Rocamora, J.; Ho, I.W.H.; Mak, M.W.; Lau, A. Survey of CSI fingerprinting-based indoor positioning and mobility tracking systems. IET Signal Process. 2020.

- Al-qaness, M.A.; Abd Elaziz, M.; Kim, S.; Ewees, A.A.; Abbasi, A.A.; Alhaj, Y.A.; Hawbani, A. Channel state information from pure communication to sense and track human motion: A survey. Sensors 2019, 19, 3329.

- Ekici, S.; Jawzal, H. Breast cancer diagnosis using thermography and convolutional neural networks. Med. Hypotheses 2020, 137, 109542.

- Liu, X.; Tian, G.; Chen, Y.; Luo, H.; Zhang, J.; Li, W. Non-contact degradation evaluation for IGBT modules using eddy current pulsed thermography approach. Energies 2020, 13, 2613.

- Silvino, V.O.; Gomes, R.B.B.; Ribeiro, S.L.G.; de Lima Moreira, D.; dos Santos, M.A.P. Identifying febrile humans using infrared thermography screening: Possible applications during COVID-19 outbreak. Rev. Contexto Saúde 2020, 20, 5–9.

- Silva, T.A.E.D.; Silva, L.F.D.; Muchaluat-Saade, D.C.; Conci, A. A computational method to assist the diagnosis of breast disease using dynamic thermography. Sensors 2020, 20, 3866.

- Lahiri, B.; Bagavathiappan, S.; Jayakumar, T.; Philip, J. Medical applications of infrared thermography: A review. Infrared Phys. Technol. 2012, 55, 221–235.

- Qiu, H.; Wu, J.; Hong, L.; Luo, Y.; Song, Q.; Chen, D. Clinical and epidemiological features of 36 children with coronavirus disease 2019 (COVID-19) in Zhejiang, China: An observational cohort study. Lancet Infect. Dis. 2020, 20, 689–696.

- Chen, J.; Qi, T.; Liu, L.; Ling, Y.; Qian, Z.; Li, T.; Li, F.; Xu, Q.; Zhang, Y.; Xu, S.; et al. Clinical progression of patients with COVID-19 in Shanghai, China. J. Infect. 2020, 80.

- Jagadev, P.; Giri, L.I. Non-contact monitoring of human respiration using infrared thermography and machine learning. Infrared Phys. Technol. 2020, 104, 103117.

- Ulhaq, A.; Khan, A.; Gomes, D.; Pau, M. Computer vision for COVID-19 Control: A survey. arXiv 2020, arXiv:2004.09420.

- Farooq, M.A.; Corcoran, P. Infrared imaging for human thermography and breast tumor classification using thermal images. In Proceedings of the 2020 31st Irish Signals and Systems Conference (ISSC), Maynooth, Ireland, 17–18 June 2020; pp. 1–6.

- Rodriguez-Lozano, F.J.; León-García, F.; Ruiz de Adana, M.; Palomares, J.M.; Olivares, J. Non-invasive forehead segmentation in thermographic imaging. Sensors 2019, 19, 4096.

- Rong, Y.; Theofanopoulos, P.C.; Trichopoulos, G.C.; Bliss, D.W. Cardiac sensing exploiting an ultra-wideband terahertz sensing system. In Proceedings of the 2020 IEEE International Radar Conference (RADAR), Basel, Switzerland, 27 April–1 May 2020; pp. 1002–1006.

- Matsumoto, H.; Watanabe, I.; Kasamatsu, A.; Monnai, Y. Integrated terahertz radar based on leaky-wave coherence tomography. Nat. Electron. 2020, 3, 122–129.

- Tao, Y.H.; Fitzgerald, A.J.; Wallace, V.P. Non-contact, non-destructive testing in various industrial sectors with terahertz technology. Sensors 2020, 20, 712.

- Petkie, D.T.; Bryan, E.; Benton, C.; Phelps, C.; Yoakum, J.; Rogers, M.; Reed, A. Remote respiration and heart rate monitoring with millimeter-wave/terahertz radars. In Proceedings of the Millimetre Wave and Terahertz Sensors and Technology, Cardiff, UK, 17–18 September 2008; p. 71170I.

- Yan, W.; Chen, D.; Kong, F.; Bai, X. FDTD simulation of terahertz wave propagation in time-varying plasma. In Proceedings of the 2019 Photonics & Electromagnetics Research Symposium-Fall (PIERS-Fall), Xiamen, China, 17–20 December 2019; pp. 699–701.

- Saeed, N.; Loukil, M.H.; Sarieddeen, H.; Al-Naffouri, T.Y.; Alouini, M.S. Body-Centric Terahertz Networks: Prospects and Challenges. Available online: https://www.techrxiv.org/articles/preprint/Body-Centric_Terahertz_Networks_Prospects_and_Challenges/12923498 (accessed on 23 September 2020).

- Punia, S.; Malik, H.K. THz radiation generation in axially magnetized collisional pair plasma. Phys. Lett. A 2019, 383, 1772–1777.

- Rothbart, N.; Holz, O.; Koczulla, R.; Schmalz, K.; Hübers, H.W. Analysis of human breath by millimeter-wave/terahertz spectroscopy. Sensors 2019, 19, 2719.

- Dinh-Le, C.; Chuang, R.; Chokshi, S.; Mann, D. Wearable health technology and electronic health record integration: Scoping review and future directions. JMIR Mhealth Uhealth 2019, 7, e12861.

- Qiu, H.J.; Song, W.Z.; Wang, X.X.; Zhang, J.; Fan, Z.; Yu, M.; Ramakrishna, S.; Long, Y.Z. A calibration-free self-powered sensor for vital sign monitoring and finger tap communication based on wearable triboelectric nanogenerator. Nano Energy 2019, 58, 536–542.

- Jeong, H.; Rogers, J.A.; Xu, S. Continuous on-body sensing for the COVID-19 pandemic: Gaps and opportunities. Sci. Adv. 2020, 6, eabd4794.

- Kapoor, A.; Guha, S.; Das, M.K.; Goswami, K.C.; Yadav, R. Digital healthcare: The only solution for better healthcare during COVID-19 pandemic? Indian Heart J. 2020, 72, 61–64.

- Seshadri, D.R.; Davies, E.V.; Harlow, E.R.; Hsu, J.J.; Knighton, S.C.; Walker, T.A.; Voos, J.E.; Drummond, C.K. Wearable sensors for COVID-19: A call to action to harness our digital infrastructure for remote patient monitoring and virtual assessments. Front. Digit. Health 2020, 2, 8.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).