A Bipolar-Channel Hybrid Brain-Computer Interface System for Home Automation Control Utilizing Steady-State Visually Evoked Potential and Eye-Blink Signals

Abstract

1. Introduction

2. Materials and Methods

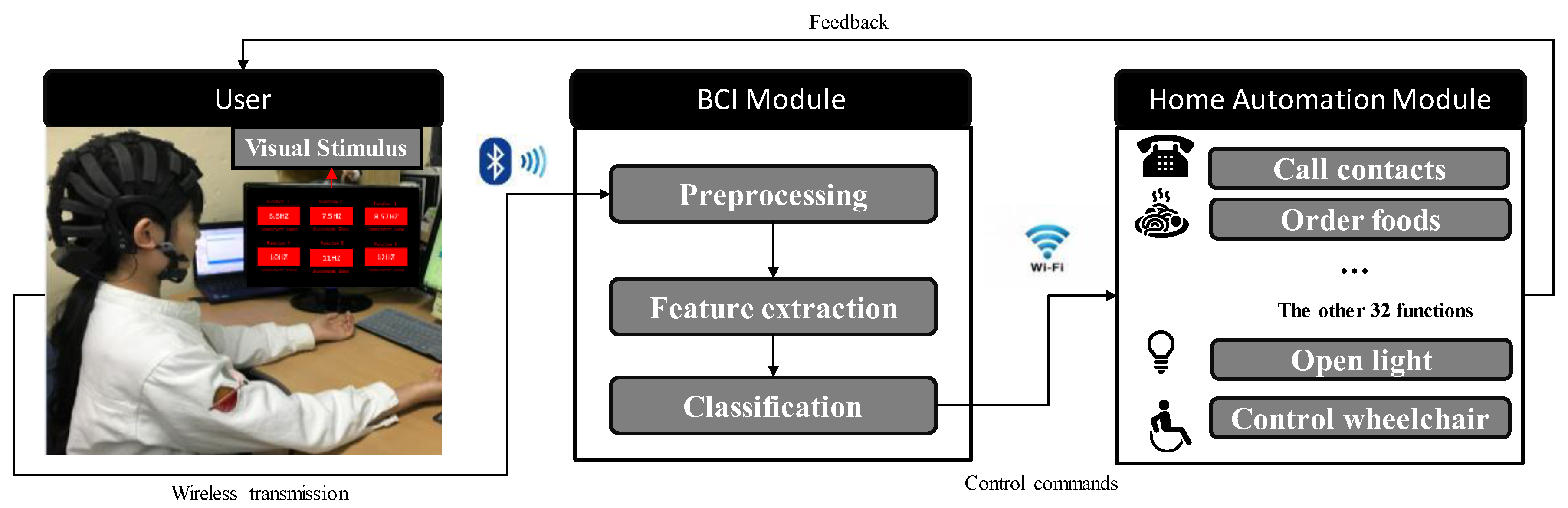

2.1. System Architecture and Parameters

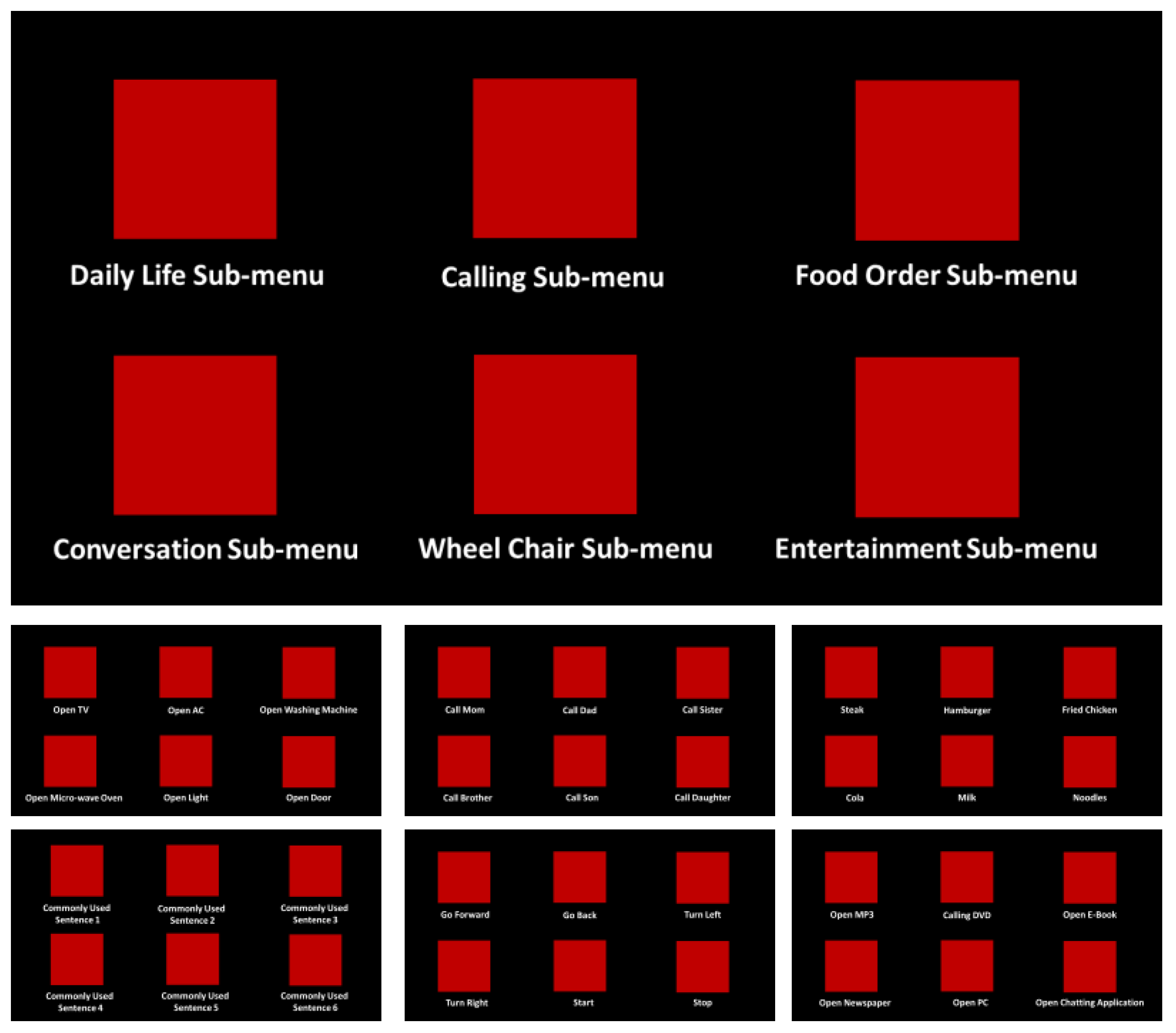

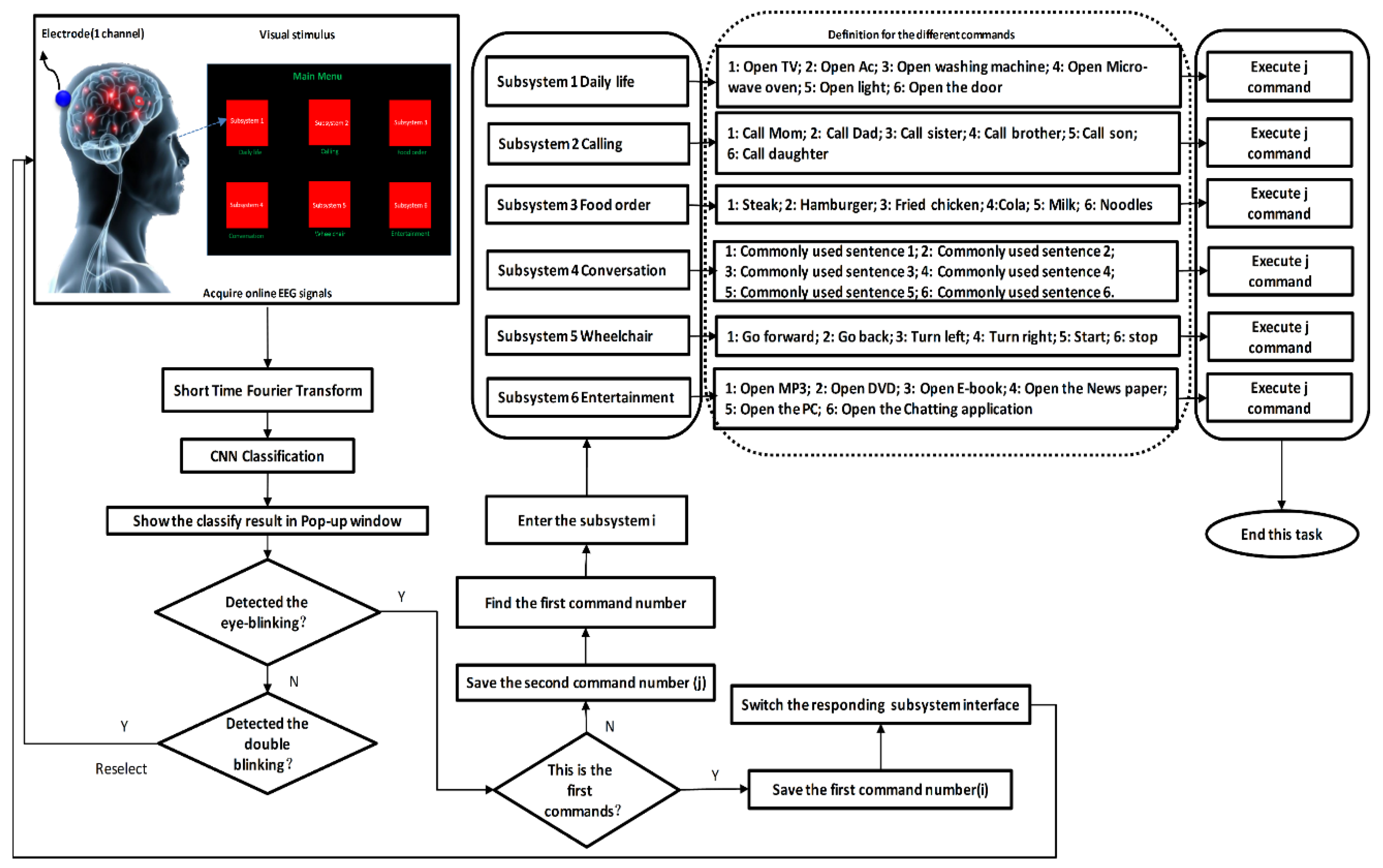

2.2. Setting Up the Interface

2.3. Experimental Protocol

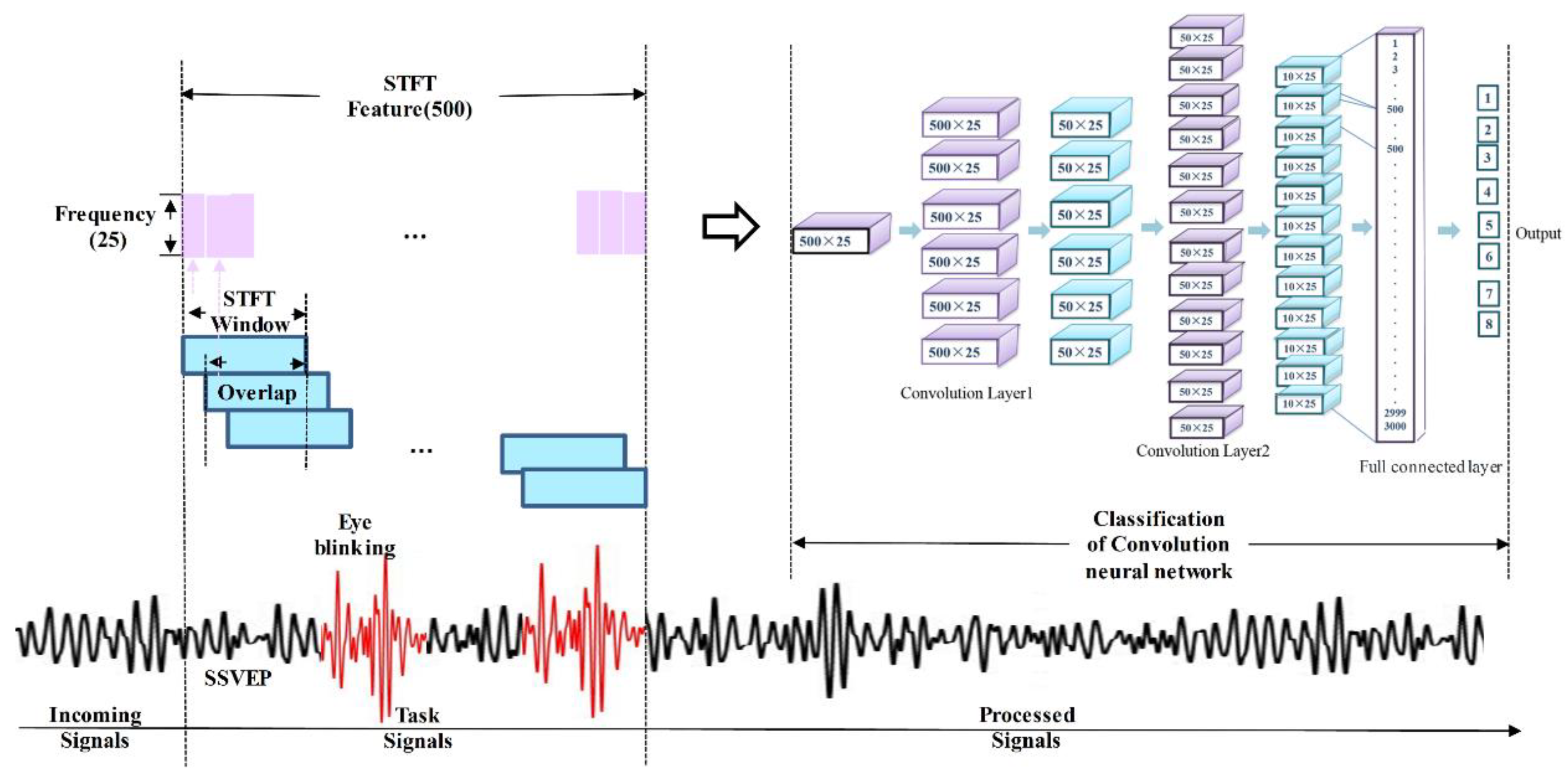

2.4. Feature Extraction Protocol

2.5. Classification

3. Results

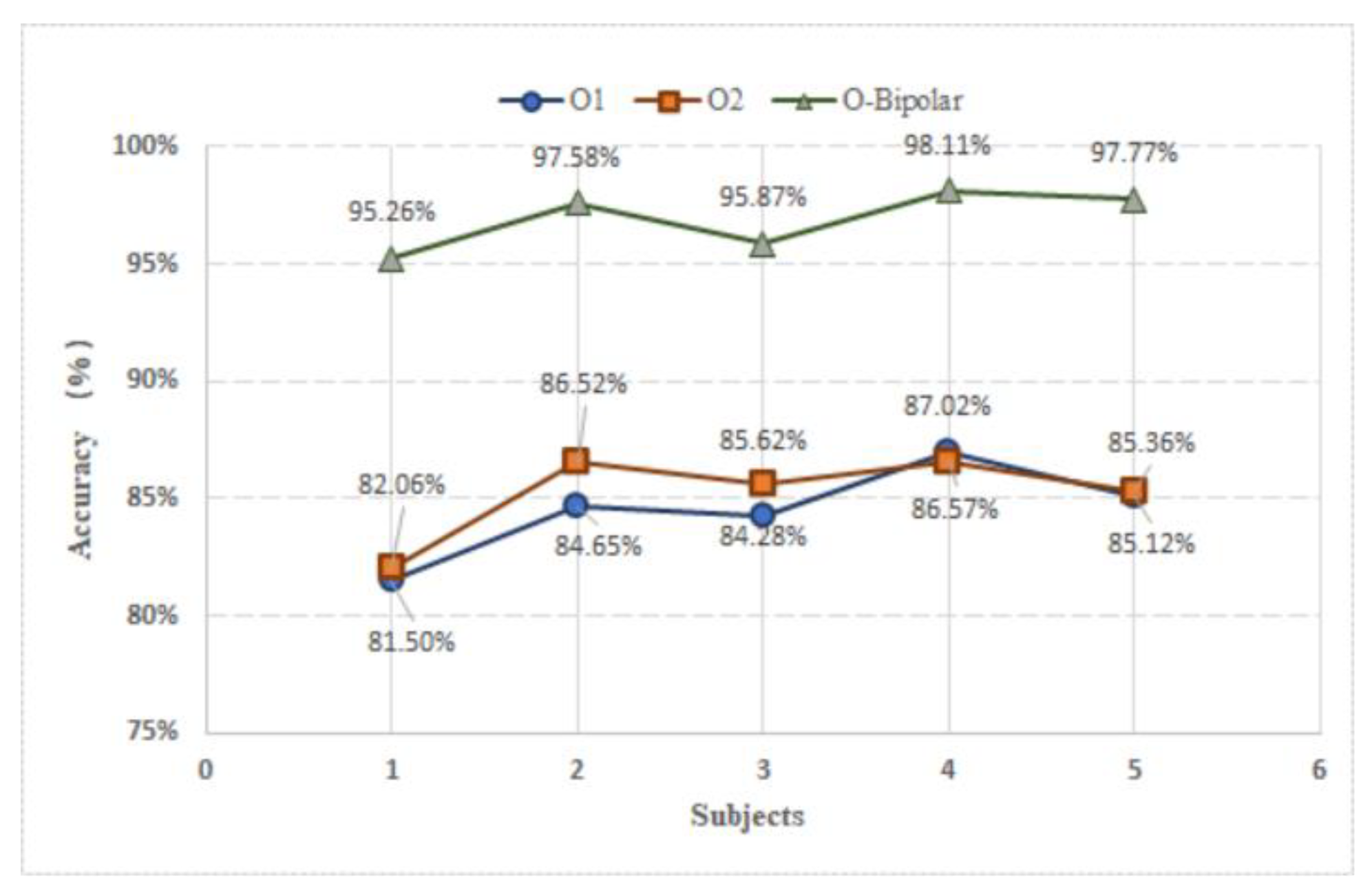

3.1. Channel Selection

3.2. Time Window Selection

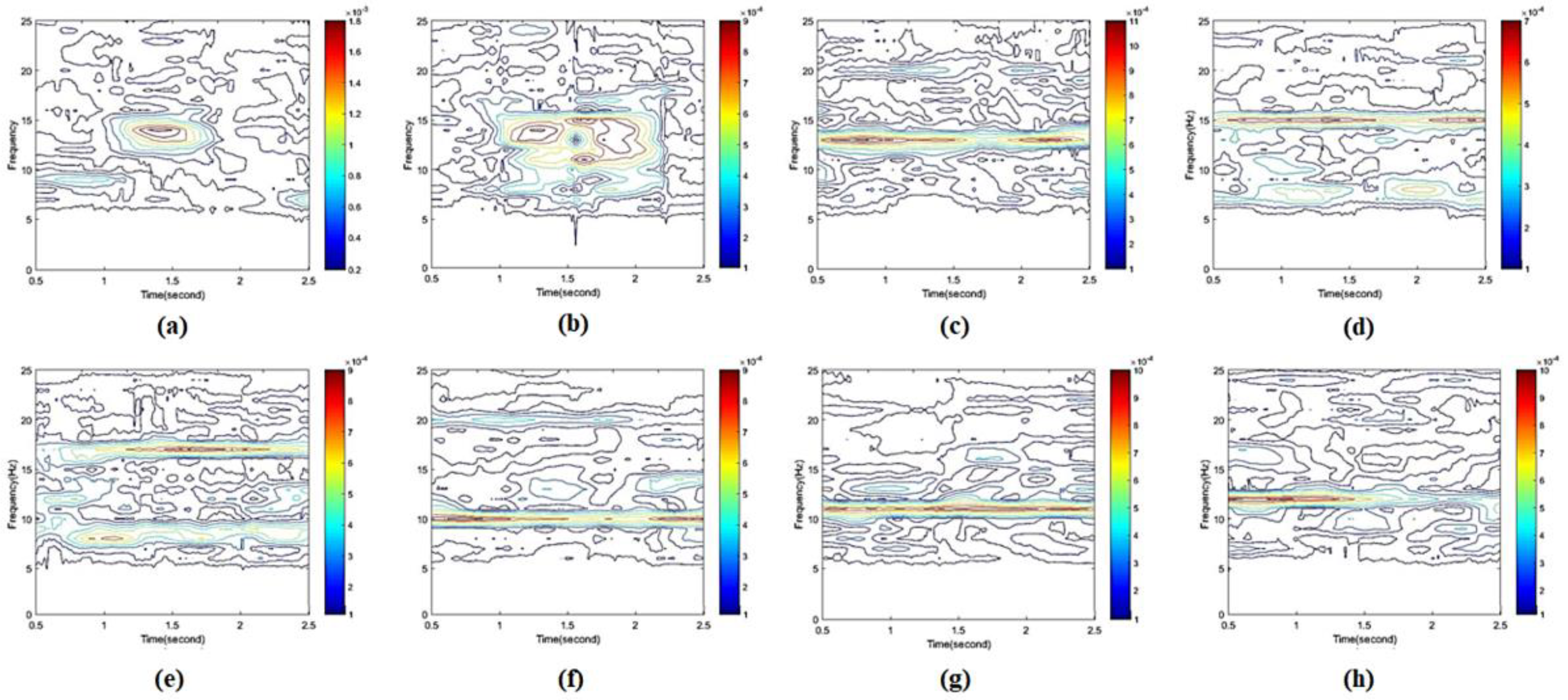

3.3. Feature Extraction

3.4. Real-Time Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Lance, B.J.; Kerick, S.E.; Ries, A.J.; Oie, K.S.; McDowell, K. Brain–Computer Interface Technologies in the Coming Decades. Proc. IEEE 2012, 100, 1585–1599.

- Leuthardt, E.C.; Schalk, G.; Wolpaw, J.R.; Ojemann, J.G.; Moran, D.W. A brain–computer interface using electrocorticographic signals in humans. J. Neural Eng. 2004, 1, 63–71.

- Nguyen, T.-H.; Yang, D.; Chung, W.-Y. A High-Rate BCI Speller Based on Eye-Closed EEG Signal. IEEE Access 2018, 6, 33995–34003.

- Yang, D.; Huang, R.; Yoo, S.-H.; Shin, M.-J.; Yoon, J.A.; Shin, Y.-I.; Hong, K.-S. Detection of Mild Cognitive Impairment Using Convolutional Neural Network: Temporal-Feature Maps of Functional Near-Infrared Spectroscopy. Front. Aging Neurosci. 2020, 12.

- Kosmyna, N.; Tarpin-Bernard, F.; Bonnefond, N.; Rivet, B. Feasibility of BCI Control in a Realistic Smart Home Environment. Front. Hum. Neurosci. 2016, 10, 1–10.

- Birn, R.M. The role of physiological noise in resting-state functional connectivity. NeuroImage 2012, 62, 864–870.

- Tanaka, M. Effects of Mental Fatigue on Brain Activity and Cognitive Performance: A Magnetoencephalography Study. Anat. Physiol. 2015, 4, 1–5.

- Lotte, F.; Congedo, M.; Lecuyer, A.; Lamarche, F.; Arnaldi, B. A review of classification algorithms for EEG-based brain–computer interfaces. J. Neural Eng. 2007, 4, R1–R13.

- Naseer, N.; Hong, K.-S. fNIRS-based brain-computer interfaces: A review. Front. Hum. Neurosci. 2015, 9.

- Yang, D.; Hong, K.-S.; Yoo, S.-H.; Kim, C.-S. Evaluation of Neural Degeneration Biomarkers in the Prefrontal Cortex for Early Identification of Patients With Mild Cognitive Impairment: An fNIRS Study. Front. Hum. Neurosci. 2019, 13, 317.

- Abdulkader, S.N.; Atia, A.; Mostafa, M.-S.M. Brain computer interfacing: Applications and challenges. Egypt. Inform. J. 2015, 16, 213–230.

- Zhang, W.; Tan, C.; Sun, F.; Wu, H.; Zhang, B. A review of EEG-based brain-computer interface systems design. Brain Sci. Adv. 2018, 4, 156–167.

- Zakaria, M.H.F.; Mansor, W.; Lee, K.Y. Time-frequency analysis of executed and imagined motor movement EEG signals for neuro-based home appliance system. In Proceedings of the TENCON 2017—2017 IEEE Region 10 Conference, Penang, Malaysia, 5–8 November 2017; pp. 1657–1660.

- Vidaurre, C.; Blankertz, B. Towards a Cure for BCI Illiteracy. Brain Topogr. 2009, 23, 194–198.

- Rani, M.S.B.A.; Mansor, W.B. Detection of eye blinks from EEG signals for home lighting system activation. In Proceedings of the 2009 6th International Symposium on Mechatronics and its Applications, Sharjah, UAE, 23–26 March 2009; pp. 1–4.

- Sajda, P.; Pohlmeyer, E.; Wang, J.; Parra, L.; Christoforou, C.; Dmochowski, J.; Hanna, B.; Bahlmann, C.; Singh, M.; Chang, S.-F. In a Blink of an Eye and a Switch of a Transistor: Cortically Coupled Computer Vision. Proc. IEEE 2010, 98, 462–478.

- Aloise, F.; Schettini, F.; Aricò, P.; Leotta, F.; Salinari, S.; Mattia, D.; Babiloni, F.; Cincotti, F. P300-based brain–computer interface for environmental control: An asynchronous approach. J. Neural Eng. 2011, 8, 25025.

- Sellers, E.W.; Vaughan, T.M.; Wolpaw, J.R. A brain-computer interface for long-term independent home use. Amyotroph. Lateral Scler. 2010, 11, 449–455.

- Carabalona, R.; Grossi, F.; Tessadri, A.; Castiglioni, P.; Caracciolo, A.; De Munari, I. Light on! Real world evaluation of a P300-based brain–computer interface (BCI) for environment control in a smart home. Ergonomics 2012, 55, 552–563.

- Corralejo, R.; Nicolas-Alonso, L.F.; Alvarez, D.; Hornero, R. A P300-based brain–computer interface aimed at operating electronic devices at home for severely disabled people. Med. Biol. Eng. 2014, 52, 861–872.

- Holzner, C.; Guger, C.; Edlinger, G.; Gronegress, C.; Slater, M. Virtual Smart Home Controlled by Thoughts. In Proceedings of the 2009 18th IEEE International Workshops on Enabling Technologies: Infrastructures for Collaborative Enterprises, Groningen, The Netherlands, 29 June–1 July 2009; pp. 236–239.

- Lin, C.-T.; Lin, B.-S.; Lin, F.-C.; Chang, C.-J. Brain Computer Interface-Based Smart Living Environmental Auto-Adjustment Control System in UPnP Home Networking. IEEE Syst. J. 2012, 8, 363–370.

- Karmali, F.; Polak, M.; Kostov, A. Environmental control by a brain-computer interface. In Proceedings of the 22nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Cat No 00CH37143) IEMBS-00, Chicago, IL, USA, 23–28 July 2002; Volume 4, pp. 2990–2992.

- Piccini, L.; Parini, S.; Maggi, L.; Andreoni, G. A Wearable Home BCI system: Preliminary results with SSVEP protocol. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2006; Volume 5, pp. 5384–5387.

- Prateek, V.; Poonam, S.; Preeti, K. Home Automation Control System Implementation using SSVEP based Brain-Computer Interface. In Proceedings of the IEEE ICICI—2017, Coimbatore, India, 23–24 November 2017; pp. 1068–1073.

- Perego, P.; Maggi, L.; Andreoni, G.; Parini, S. A Home Automation Interface for BCI application validated with SSVEP protocol. In Proceedings of the 4th International Brain-Computer Interface Workshop and Training Course, Graz, Austria, 18–21 September 2008.

- Zhao, J.; Li, W.; Li, M. Comparative Study of SSVEP- and P300-Based Models for the Telepresence Control of Humanoid Robots. PLoS ONE 2015, 10, e0142168.

- Amiri, S.; Fazel-Rezai, R.; Asadpour, V. A Review of Hybrid Brain-Computer Interface Systems. Adv. Hum. Comput. Interact. 2013, 2013, 187024.

- He, S.; Zhou, Y.; Yu, T.; Zhang, R.; Huang, Q.; Chuai, L.; Mustafa, M.-U.; Gu, Z.; Yu, Z.L.; Tan, H.; et al. EEG- and EOG-Based Asynchronous Hybrid BCI: A System Integrating a Speller, a Web Browser, an E-Mail Client, and a File Explorer. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 519–530.

- Mannan, M.M.N.; Kamran, M.A.; Kang, S.; Choi, H.S.; Jeong, M.Y. A Hybrid Speller Design Using Eye Tracking and SSVEP Brain–Computer Interface. Sensors 2020, 20, 891.

- Lau, T.M.; Gwin, J.T.; Ferris, D.P. How Many Electrodes Are Really Needed for EEG-Based Mobile Brain Imaging? J. Behav. Brain Sci. 2012, 2, 387–393.

- Wang, Y.-T.; Wang, Y.; Cheng, C.-K.; Jung, T.-P. Measuring Steady-State Visual Evoked Potentials from non-hair-bearing areas. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; Volume 2012, pp. 1806–1809.

- Zhu, D.; Bieger, J.; Molina, G.G.; Aarts, R.M. A Survey of Stimulation Methods Used in SSVEP-Based BCIs. Comput. Intell. Neurosci. 2010, 2010, 702357.

- Isa, I.S.; Zainuddin, B.S.; Hussain, Z.; Sulaiman, S.N. Preliminary Study on Analyzing EEG Alpha Brainwave Signal Activities Based on Visual Stimulation. Procedia Comput. Sci. 2014, 42, 85–92.

- Goel, K.; Vohra, R.; Kamath, A.; Baths, V. Home automation using SSVEP & eye-blink detection based brain-computer interface. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), San Diego, CA, USA, 5–8 October 2014; pp. 4035–4036.

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

- Kirkup, L.; Searle, A.; Craig, A.; McIsaac, P.; Larsen, G. Three methods compared for detecting the onset of alpha wave synchronization following eye closure. Physiol. Meas. 1998, 19, 213–224.

- Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best Practices for Convolutional Neural Networks Applied to Visual Document Analysis; ICDAR: Edinburgh, UK, 2003.

- Cecotti, H.; Graser, A. Convolutional Neural Networks for P300 Detection with Application to Brain-Computer Interfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 433–445.

- Ieracitano, C.; Mammone, N.; Bramanti, A.; Hussain, A.; Morabito, F.C. A Convolutional Neural Network approach for classification of dementia stages based on 2D-spectral representation of EEG recordings. Neurocomputing 2019, 323, 96–107.

- Trachtman, J.P. The Limits of PTAs. Prefer. Trade Agreem. 2011, 115–149.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013.

- Wolpaw, J.R.; Birbaumer, N.; Heetderks, W.; McFarland, D.; Peckham, P.; Schalk, G.; Donchin, E.; Quatrano, L.; Robinson, C.; Vaughan, T. Brain-computer interface technology: A review of the first international meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173.

- Wang, H.; Li, Y.; Long, J.; Yu, T.; Gu, Z. An asynchronous wheelchair control by hybrid EEG–EOG brain–computer interface. Cogn. Neurodyn. 2014, 8, 399–409.

- Wang, M.; Qu, W.; Chen, W.-Y. Hybrid sensing and encoding using pad phone for home robot control. Multimed. Tools Appl. 2017, 77, 10773–10786.

- Park, S.; Cha, H.-S.; Im, C.-H. Development of an Online Home Appliance Control System Using Augmented Reality and an SSVEP-Based Brain–Computer Interface. IEEE Access 2019, 7, 163604–163614.

- Duan, X.; Xie, S.; Xie, X.; Meng, Y.; Xu, Z. Quadcopter Flight Control Using a Non-invasive Multi-Modal Brain Computer Interface. Front. Neurorobot. 2019, 13.

- Suefusa, K.; Tanaka, T. A comparison study of visually stimulated brain–computer and eye-tracking interfaces. J. Neural Eng. 2017, 14, 036009.

- Hong, K.-S.; Naseer, N. Reduction of Delay in Detecting Initial Dips from Functional Near-Infrared Spectroscopy Signals Using Vector-Based Phase Analysis. Int. J. Neural Syst. 2016, 26, 1650012.

- Khan, M.J.; Hong, K.-S. Hybrid EEG–fNIRS-Based Eight-Command Decoding for BCI: Application to Quadcopter Control. Front. Neurorobot. 2017, 11.

- Tanveer, M.A.; Khan, M.J.; Qureshi, M.J.; Naseer, N.; Hong, K.-S. Enhanced Drowsiness Detection Using Deep Learning: An fNIRS Study. IEEE Access 2019, 7, 137920–137929.

- Asgher, U.; Khalil, K.; Khan, M.J.; Ahmad, R.; Butt, S.I.; Ayaz, Y.; Naseer, N.; Nazir, S. Enhanced Accuracy for Multiclass Mental Workload Detection Using Long Short-Term Memory for Brain–Computer Interface. Front. Mol. Neurosci. 2020, 14.

| Publication | Type | Category | Commands | Channels | Accuracy (%) | Time (s) | ITR (bits/min) |
|---|---|---|---|---|---|---|---|
| Aloise et al. [17] | Real | P-300 | 16 | 8 | 90.00 | 4–5.6 | 11.19 |
| Holzner et al. [21] | Virtual | P-300 | 13 | N/A | 79.35 | N/A | N/A |
| Karmali et al. [23] | Virtual | Alpha Rhythm | 44 | 4 | N/A | 27.7 | N/A |
| Kosmyna et al. [5] | Virtual | Conceptual imagery | 8 | 16 | 77–81 | N/A | N/A |
| Goel et al. [35] | Virtual | SSVEP + Eye blink | 2 | 4 | 94.17 | 5.2 | 11.6 |
| Lin et al. [22] | Virtual | Alpha Rhythm | 2 | 1 | 81.40 | 36–37 | N/A |
| Prateek et al. [25] | Virtual | SSVEP | 5 | 8 | 84.80 | 15 | N/A |
| Perego et al. [26] | Virtual | SSVEP | 4 | N/A | N/A | 350 | N/A |
| Corralejo et al. [20] | Virtual | P-300 | 113 | 8 | 75–95 | 10.2 | 20.1 |
| Carabalona et al. [19] | Real | P-300 | 36 | 12 | 50–80 | N/A | N/A |
| Sellers et al. [18] | Real | P-300 | 72 | 8 | 83.00 | N/A | N/A |
| Our study | Virtual | SSVEP + Eye blink | 38 | 1 | 96.92 | 2 | 146.67 |
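
The ITR column is not defined in the rows above. As a rough check, the sketch below assumes the commonly used Wolpaw definition, B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) bits per selection, scaled by 60/T selections per minute, where N is the number of commands, P the accuracy, and T the time per selection in seconds. The function name `wolpaw_itr` and its parameter names are illustrative and are not taken from the paper. Under that assumption, the "Our study" row (N = 38, P = 0.9692, T = 2 s) evaluates to roughly the tabulated 146.67 bits/min. The other rows are quoted from their respective publications and may follow different conventions.

```python
import math


def wolpaw_itr(n_commands: int, accuracy: float, seconds_per_selection: float) -> float:
    """Information transfer rate in bits/min, assuming the Wolpaw definition.

    n_commands            -- number of selectable commands (N), at least 2
    accuracy              -- classification accuracy as a fraction (P), 0 < P <= 1
    seconds_per_selection -- time needed to issue one command (T), in seconds
    """
    if n_commands < 2:
        raise ValueError("need at least two commands")
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    if seconds_per_selection <= 0:
        raise ValueError("selection time must be positive")

    n, p = n_commands, accuracy
    bits_per_selection = math.log2(n)
    if p < 1.0:
        # The error terms vanish when P = 1, so they are only added otherwise.
        bits_per_selection += p * math.log2(p)
        bits_per_selection += (1.0 - p) * math.log2((1.0 - p) / (n - 1))

    selections_per_minute = 60.0 / seconds_per_selection
    return bits_per_selection * selections_per_minute


# Values from the "Our study" row: 38 commands, 96.92 % accuracy, 2 s per command.
itr_our_study = wolpaw_itr(38, 0.9692, 2.0)
print(f"{itr_our_study:.2f} bits/min")
```

Because the rate scales with 60/T, halving the selection time doubles the ITR at a fixed accuracy, which is why a short response time weighs so heavily in this column.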

| Subject | Correct commands, N (eye-blink) | Correct commands, N (double-blink) | Correct commands, N (SSVEP) | Response time, T (eye-blink) (s) | Response time, T (double-blink) (s) | Response time, T (SSVEP) (s) |
|---|---|---|---|---|---|---|
| S1 | 16/16 | 1/2 | 14/16 | 1.25 | 1.652 | 1.754 |
| S2 | 15/16 | 2/2 | 15/16 | 1.425 | 1.576 | 1.953 |
| S3 | 16/16 | 2/2 | 14/16 | 1.274 | 1.597 | 1.731 |
| Total | 47/48 | 5/6 | 43/48 | 1.316 | 1.608 | 1.813 |
| Std. | 0.036 | 0.289 | 0.036 | 0.095 | 0.039 | 0.122 |
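
The Total and Std. rows can be reproduced from the per-subject rows. The sketch below assumes that the Std. row is the sample standard deviation (n − 1 denominator) across the three subjects, taken over per-subject success rates for the count columns and over response times for the time columns, and that the Total row reports the mean for the time columns. These are assumptions inferred from the numbers, not statements from the paper, and all variable and function names are illustrative.

```python
from statistics import mean, stdev

# Per-subject (correct, attempted) counts for S1, S2, S3, copied from the table.
counts = {
    "eye-blink": [(16, 16), (15, 16), (16, 16)],
    "double-blink": [(1, 2), (2, 2), (2, 2)],
    "SSVEP": [(14, 16), (15, 16), (14, 16)],
}

# Per-subject response times in seconds for S1, S2, S3, copied from the table.
times = {
    "eye-blink": [1.25, 1.425, 1.274],
    "double-blink": [1.652, 1.576, 1.597],
    "SSVEP": [1.754, 1.953, 1.731],
}


def summarize_counts(trials):
    """Return (total correct, total attempted, sample std of per-subject rates)."""
    rates = [correct / attempted for correct, attempted in trials]
    total_correct = sum(correct for correct, _ in trials)
    total_attempted = sum(attempted for _, attempted in trials)
    return total_correct, total_attempted, stdev(rates)


def summarize_times(values):
    """Return (mean, sample std) of the per-subject response times."""
    return mean(values), stdev(values)


count_summary = {name: summarize_counts(trials) for name, trials in counts.items()}
time_summary = {name: summarize_times(values) for name, values in times.items()}

for name in counts:
    correct, attempted, rate_std = count_summary[name]
    t_mean, t_std = time_summary[name]
    print(
        f"{name}: {correct}/{attempted} correct (std {rate_std:.3f}), "
        f"{t_mean:.3f} s mean response (std {t_std:.3f})"
    )
```

With only three subjects, and only two double-blink trials per subject, the standard deviations are coarse estimates, which is worth keeping in mind when comparing the three command types.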

| Study | Type | Category | Commands | Channels | Accuracy (%) | Time (s) | ITR (bits/min) |
|---|---|---|---|---|---|---|---|
| Wang et al. [44] | Real | P-300 + Eye blink + MI | 8 | 17 | 91.25 | 4 | N/A |
| Wang et al. [45] | Virtual | SSVEP + Eye blink | 12 | 4 | 40–100 | 0–20 | N/A |
| Park et al. [46] | Virtual | SSVEP + AR + Eye blink | 16 | 32 | 92.8 | 20 | 37.4 |
| Duan et al. [47] | Real | MI + SSVEP + Eye blink | 6 | 12 | 86.5 | 48 | 1.69 |
| Mannan et al. [30] | Virtual | SSVEP + Eye tracking | 48 | 8 | 90.35 | 1 | 184.06 |
| He et al. [29] | Virtual | MI + Eye blink | N/A | 32 | 96.02 | 6.16 | 45.97 |
| Our study | Virtual | SSVEP + Eye blink | 38 | 1 | 96.92 | 2 | 146.67 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, D.; Nguyen, T.-H.; Chung, W.-Y. A Bipolar-Channel Hybrid Brain-Computer Interface System for Home Automation Control Utilizing Steady-State Visually Evoked Potential and Eye-Blink Signals. Sensors 2020, 20, 5474. https://doi.org/10.3390/s20195474
