Recognition of Crop Diseases Based on Depthwise Separable Convolution in Edge Computing

Abstract

1. Introduction

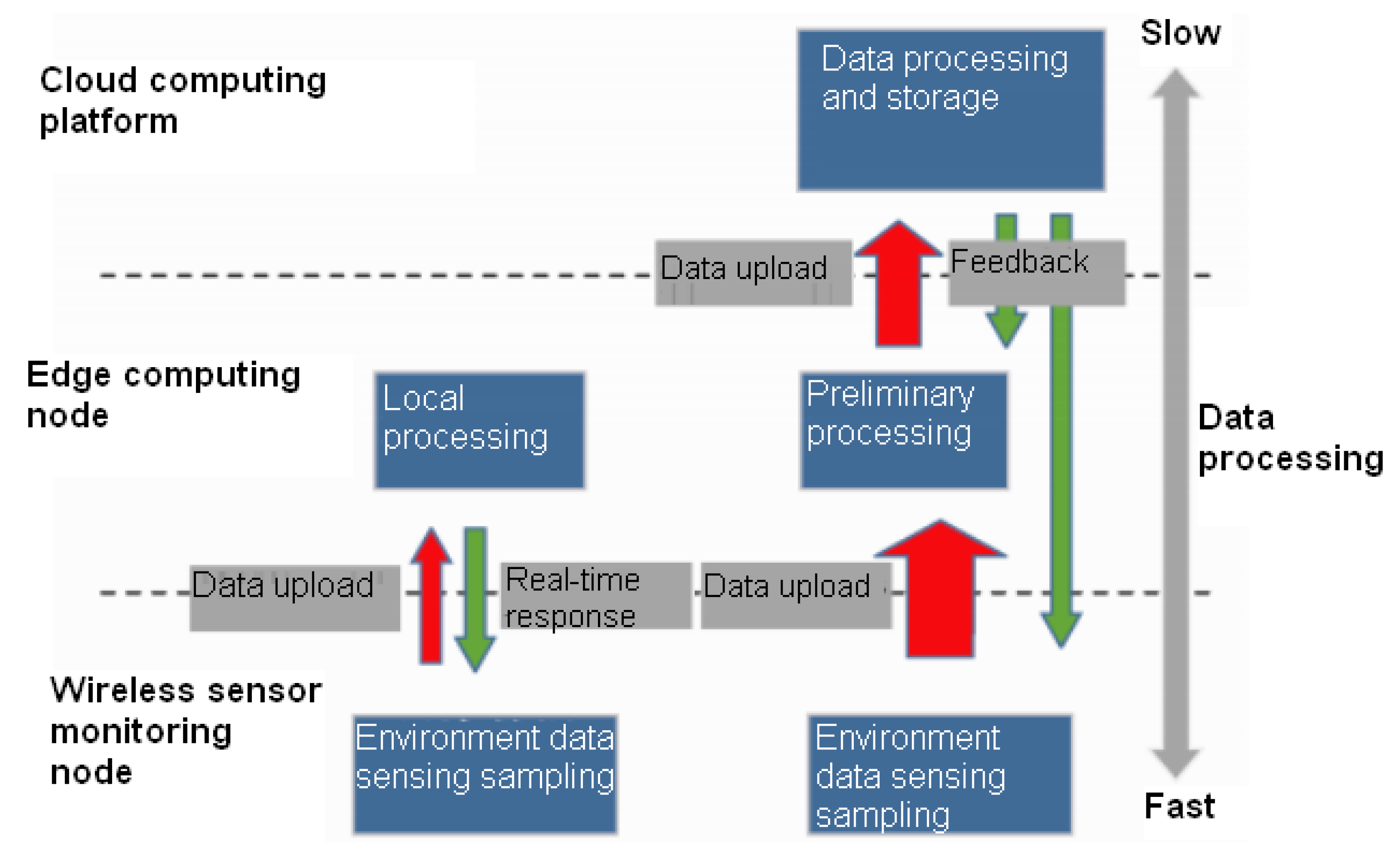

2. Monitoring System Based on Edge Computing

2.1. Sensor Node

2.2. Edge Computing Node

2.3. Cloud Computing Platform

3. Proposed Algorithm

3.1. Recognition of Crop Diseases

3.2. CNN

- i. Error back propagation of the output layer

- ii. Error back propagation of the pooling layer

- iii. Error back propagation of the convolutional layer

- iv. Calculation of the weight and bias gradients using the error terms
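The four steps above can be illustrated with a minimal NumPy sketch. It assumes a hypothetical toy network that is not the paper's architecture: one single-channel 3×3 convolution with ReLU, non-overlapping 2×2 max pooling, and a fully connected softmax output trained with cross-entropy. The function names (`conv2d_valid`, `maxpool2x2`, `forward`, `loss`, `backward`) are illustrative only.

```python
import numpy as np

# Hypothetical toy network (not the paper's model): conv -> ReLU -> 2x2 max pool -> FC softmax.


def conv2d_valid(x, k):
    """Single-channel 'valid' cross-correlation, the operation CNN layers compute."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out


def maxpool2x2(a):
    """Non-overlapping 2x2 max pooling (assumes even height and width)."""
    h, w = a.shape[0] // 2, a.shape[1] // 2
    return a.reshape(h, 2, w, 2).max(axis=(1, 3))


def forward(x, k, b, W, c):
    z = conv2d_valid(x, k) + b           # convolutional layer (pre-activation)
    a = np.maximum(z, 0.0)               # ReLU activation
    p = maxpool2x2(a)                    # pooling layer
    f = p.ravel()                        # flatten for the output layer
    logits = W @ f + c                   # fully connected output layer
    e = np.exp(logits - logits.max())    # numerically stable softmax
    return z, a, p, f, e / e.sum()


def loss(x, y, k, b, W, c):
    """Cross-entropy loss for the true class index y."""
    return -np.log(forward(x, k, b, W, c)[-1][y])


def backward(x, y, k, b, W, c):
    z, a, p, f, y_hat = forward(x, k, b, W, c)

    # (i) Output layer: for softmax + cross-entropy the error term is y_hat - onehot(y).
    delta_out = y_hat.copy()
    delta_out[y] -= 1.0
    delta_p = (W.T @ delta_out).reshape(p.shape)   # error arriving at the pooled map

    # (ii) Pooling layer: each error goes to the position that held the window maximum.
    delta_a = np.zeros_like(a)
    for i in range(p.shape[0]):
        for j in range(p.shape[1]):
            window = a[2 * i:2 * i + 2, 2 * j:2 * j + 2]
            r, s = np.unravel_index(np.argmax(window), window.shape)
            delta_a[2 * i + r, 2 * j + s] = delta_p[i, j]

    # (iii) Convolutional layer: apply the ReLU derivative, then propagate to the
    # layer input by a 'full' convolution with the kernel rotated by 180 degrees.
    delta_z = delta_a * (z > 0)
    kh, kw = k.shape
    padded = np.pad(delta_z, ((kh - 1, kh - 1), (kw - 1, kw - 1)))
    dx = conv2d_valid(padded, np.rot90(k, 2))

    # (iv) Weight and bias gradients from the error terms.
    dW = np.outer(delta_out, f)
    dc = delta_out
    dk = conv2d_valid(x, delta_z)   # correlate the layer input with the conv error term
    db = delta_z.sum()              # shared bias: sum of the conv error term
    return dk, db, dW, dc, dx
```

A quick way to trust such a derivation is a central finite-difference check: perturb each parameter by a small `eps`, recompute `loss`, and compare with the analytic gradient returned by `backward`.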

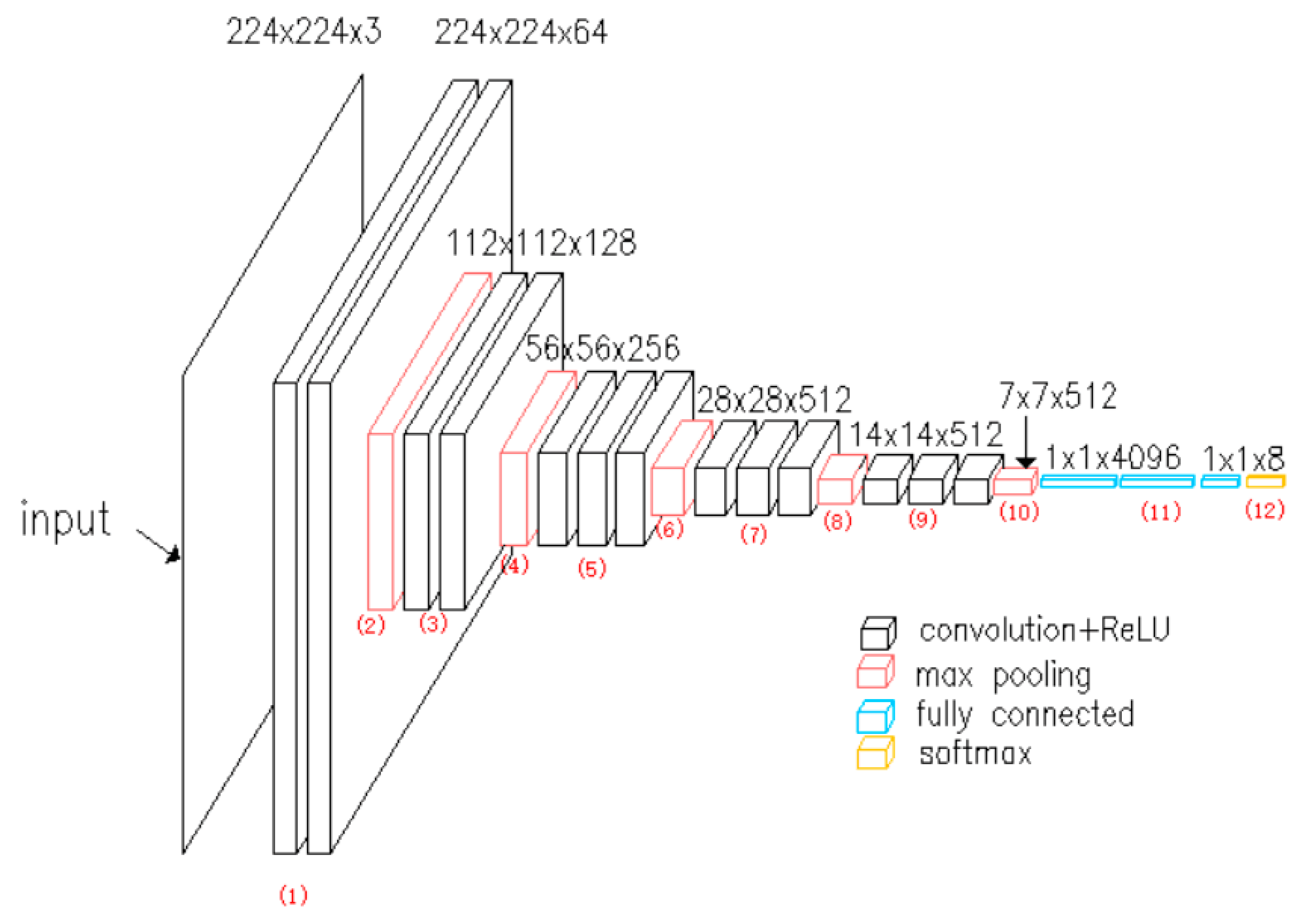

3.3. VGG Network Based on Transfer Learning

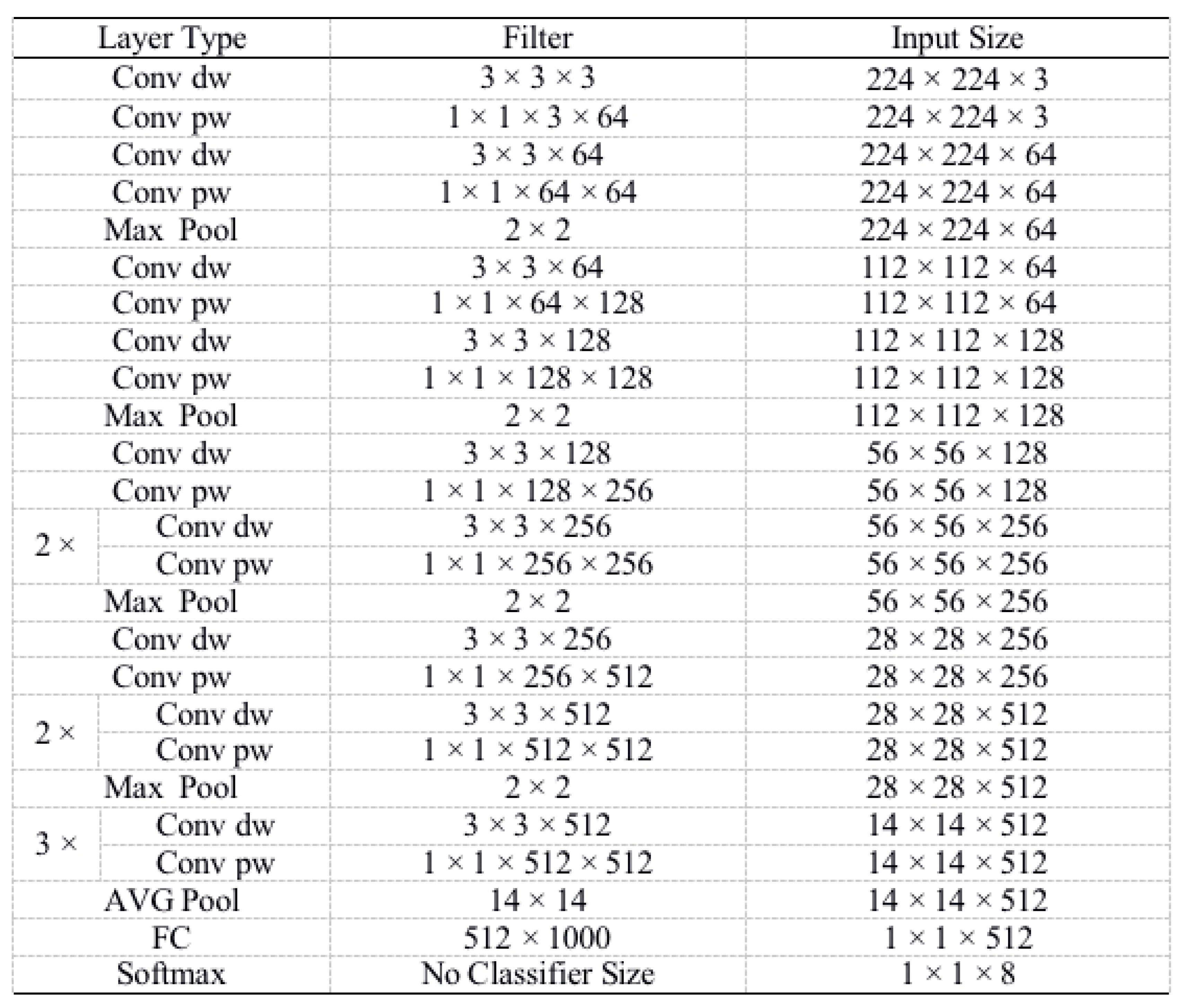

3.4. Recognition Model Based on Depthwise Separable Convolutional Network

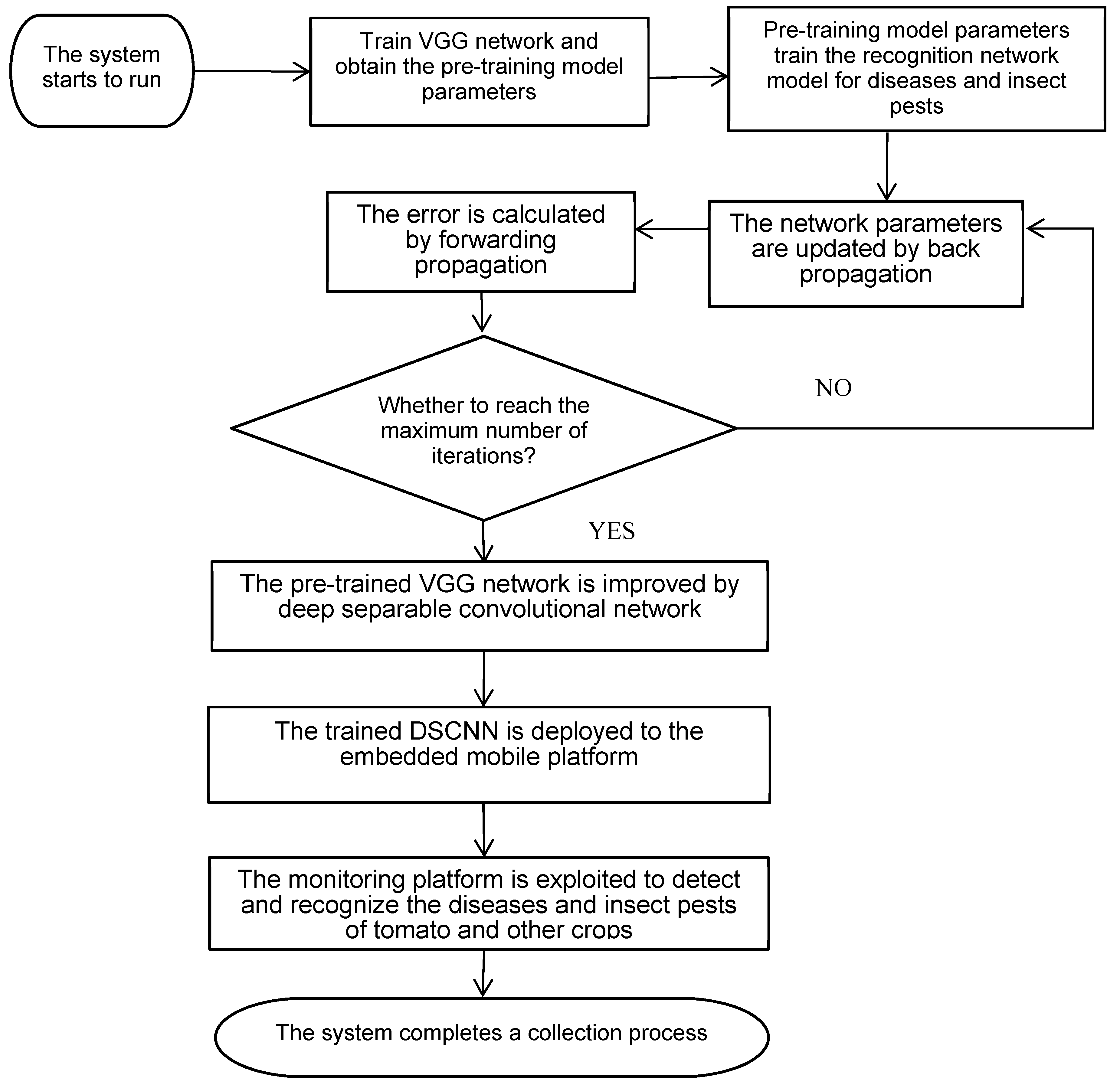
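As a generic illustration of the operation this section is built on (not the exact DSCNN configuration, whose kernel sizes and channel counts are not reproduced here), the sketch below factors a standard convolution into a per-channel depthwise step followed by a 1×1 pointwise step. It assumes stride 1, 'valid' padding and no bias; the function names are hypothetical.

```python
import numpy as np


def depthwise_conv(x, dw):
    """x: (C, H, W); dw: (C, k, k). Each channel is filtered by its own k x k kernel."""
    C, H, W = x.shape
    k = dw.shape[1]
    oh, ow = H - k + 1, W - k + 1
    out = np.zeros((C, oh, ow))
    for i in range(oh):
        for j in range(ow):
            patch = x[:, i:i + k, j:j + k]                  # (C, k, k)
            out[:, i, j] = np.sum(patch * dw, axis=(1, 2))  # no mixing across channels
    return out


def pointwise_conv(x, pw):
    """pw: (C_out, C_in). A 1x1 convolution that mixes channels at every pixel."""
    return np.einsum('oc,chw->ohw', pw, x)


def depthwise_separable_conv(x, dw, pw):
    return pointwise_conv(depthwise_conv(x, dw), pw)


def standard_conv(x, K):
    """K: (C_out, C_in, k, k). Ordinary convolution, for comparison."""
    C_out, C_in, k, _ = K.shape
    oh, ow = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.zeros((C_out, oh, ow))
    for i in range(oh):
        for j in range(ow):
            patch = x[:, i:i + k, j:j + k]
            out[:, i, j] = np.tensordot(K, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def param_counts(k, c_in, c_out):
    """Weights (biases excluded) of a standard vs. a depthwise separable layer."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out
    return standard, separable
```

For example, `param_counts(3, 32, 64)` returns `(18432, 2336)`: the separable layer needs about 12.7% of the weights, which matches the usual ratio 1/C_out + 1/k². The factorization is exact for kernels of the form `K[o, c, u, v] = pw[o, c] * dw[c, u, v]`, which is why it trades some expressiveness for a large saving in parameters and multiply-adds.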

3.5. Algorithm Flow Chart

4. Results and Discussion

4.1. Experimental Environment

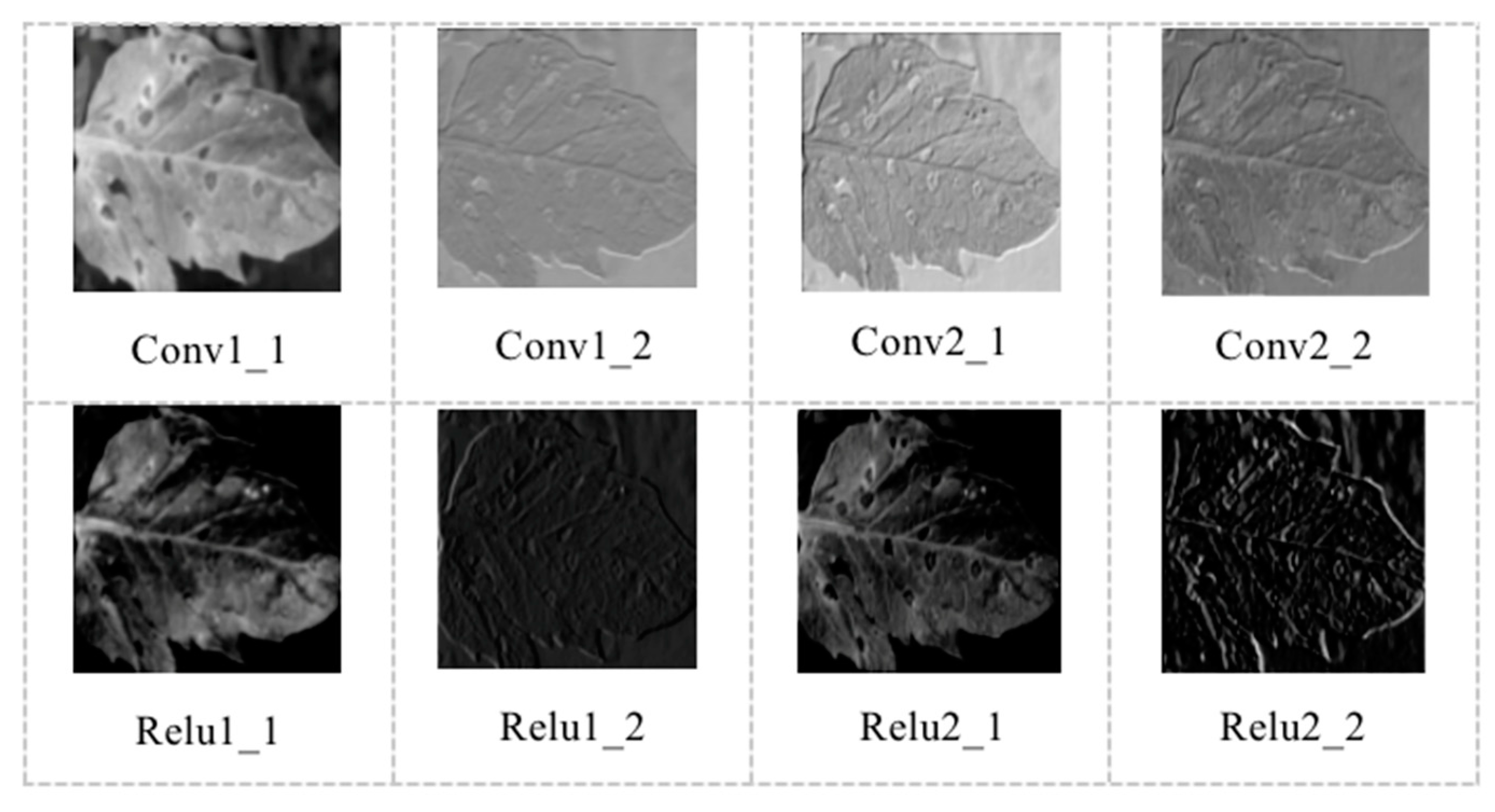

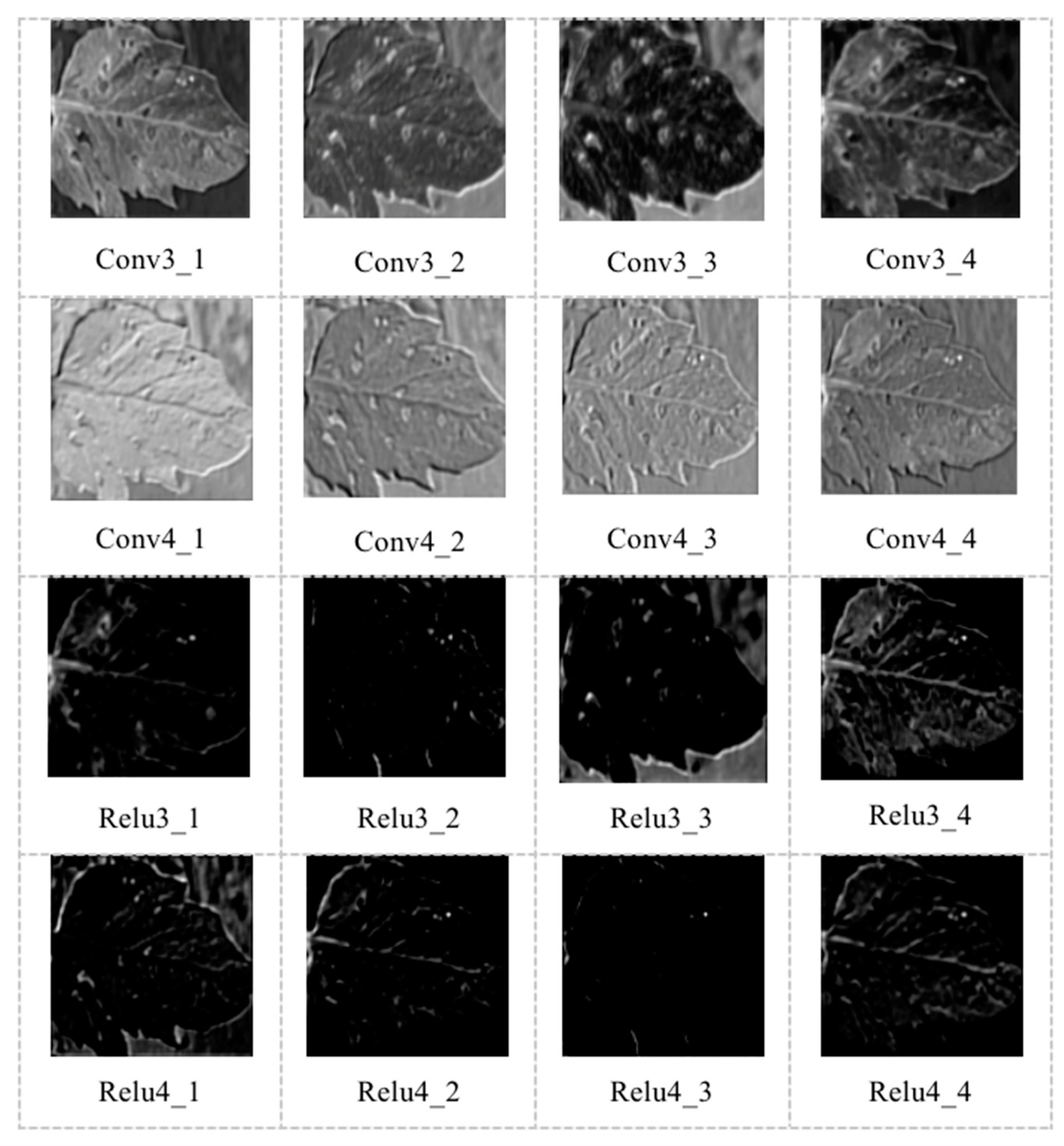

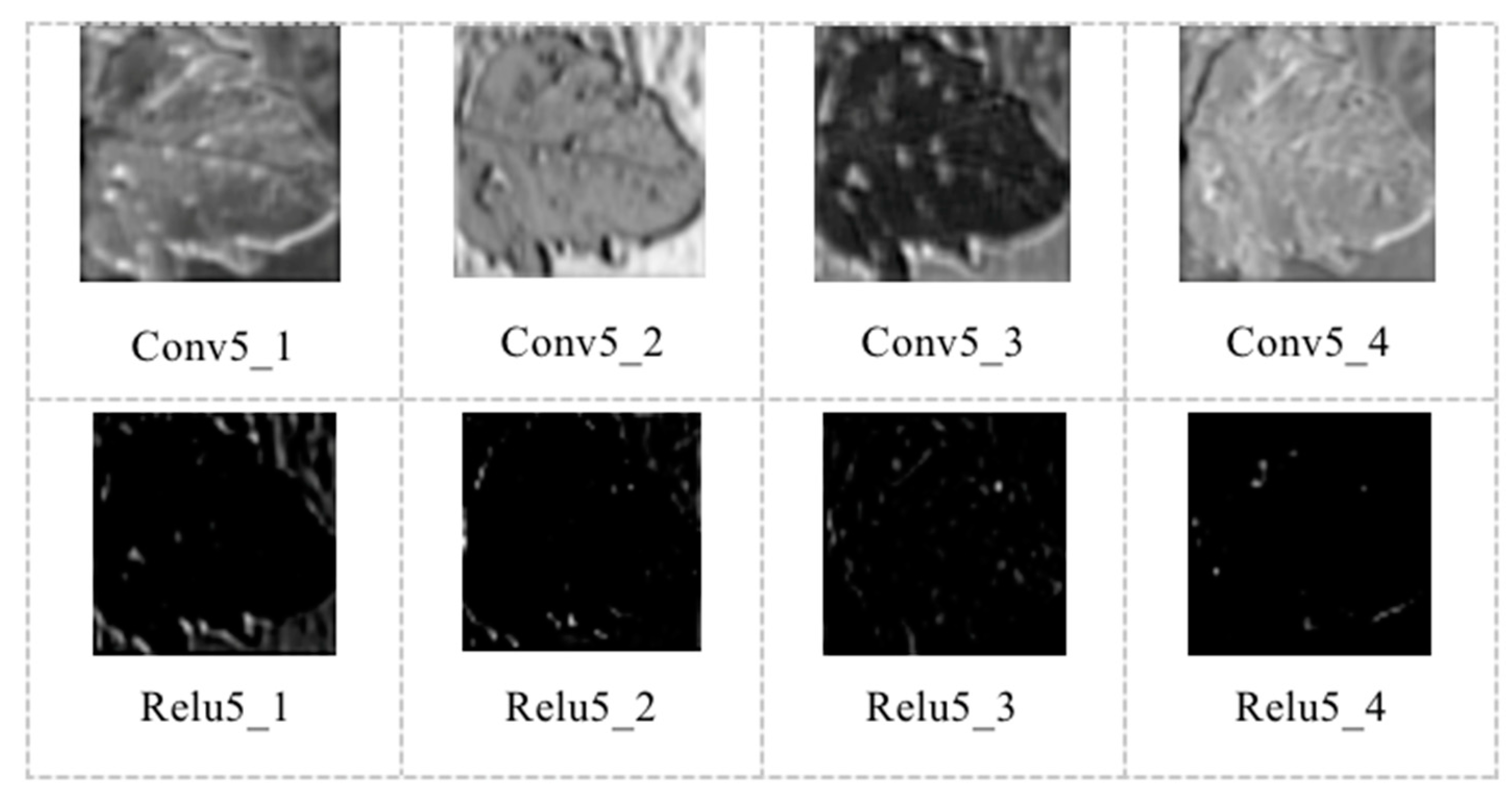

4.2. Visual Feature Map

4.3. Analysis and Comparison of Experimental Results

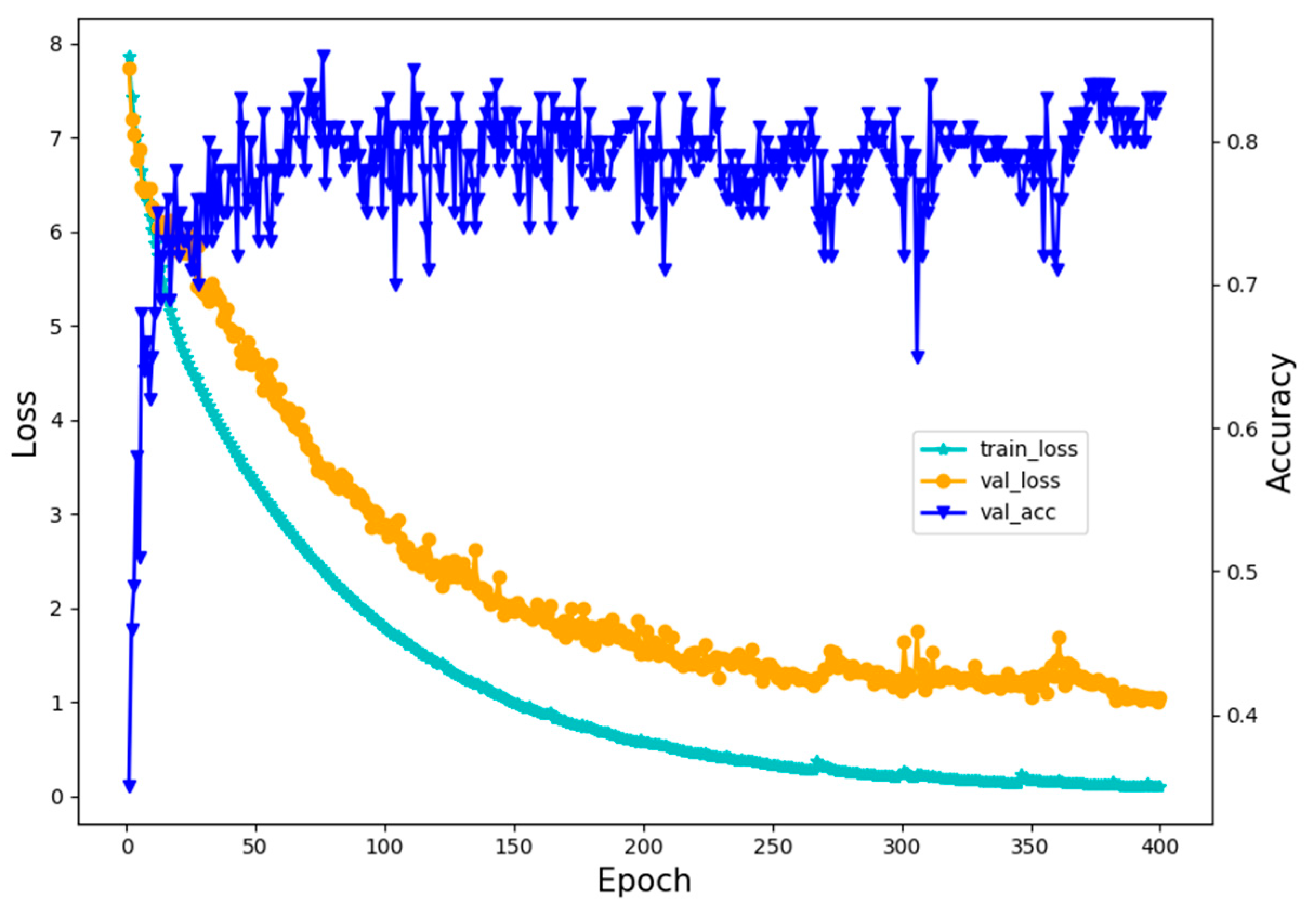

4.3.1. The Recognition Accuracy of the DSCNN Model

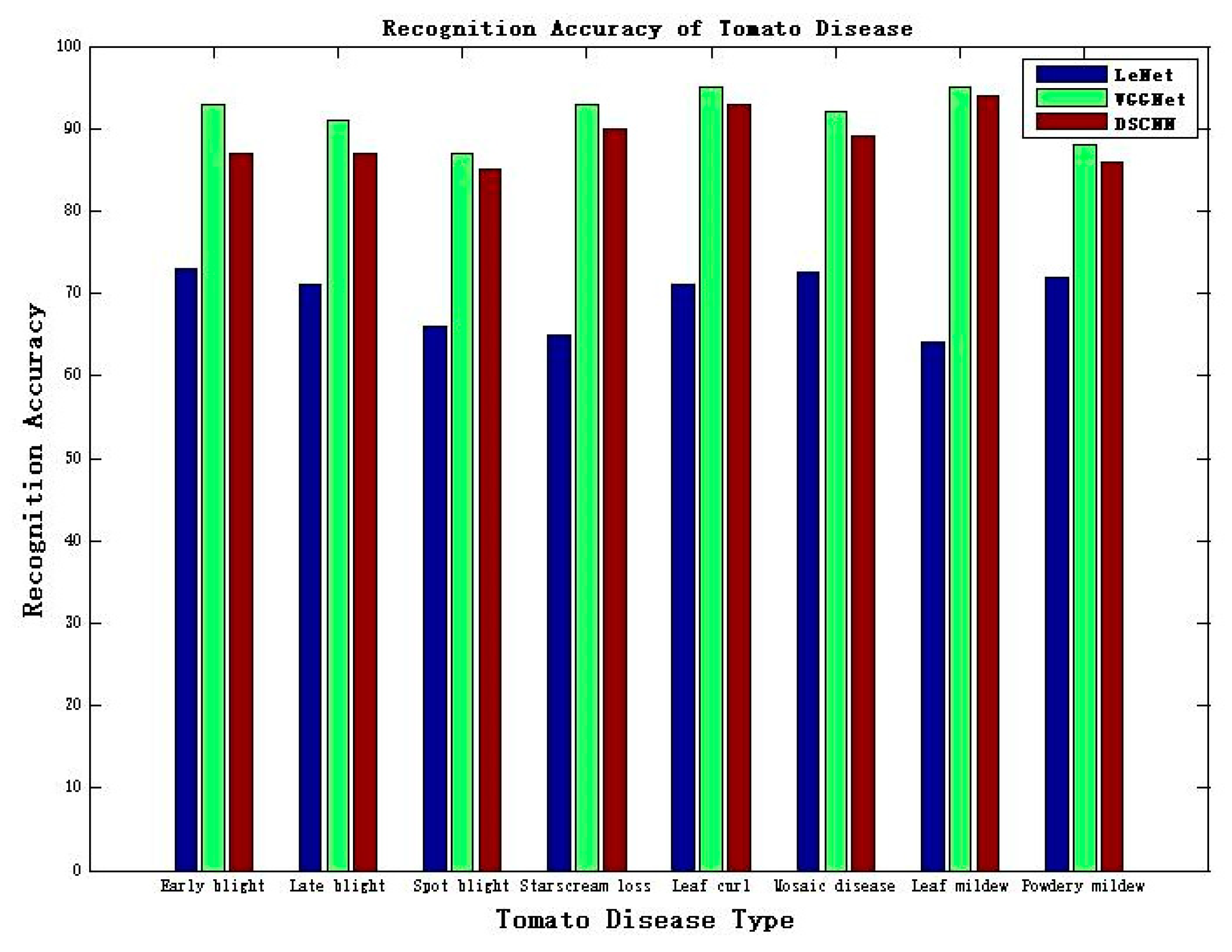

4.3.2. The Recognition Accuracy of Various Crop Diseases

4.3.3. Comparison of Recognition Speed

5. Concluding Remarks

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoT | Internet of Things |
| CNN | Convolutional neural network |
| DSCNN | Depthwise separable convolutional neural network |
| SVM | Support vector machine |


| Model | Accuracy (%) | Prediction Time (s) |
|---|---|---|
| LeNet | 69.31 | 1.256 |
| VGGNet | 91.75 | 4.242 |
| DSCNN | 89.13 | 0.239 |
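Reading the table as relative figures, and assuming all three prediction times were measured on the same hardware and the same input set, the trade-off can be worked out directly from the listed values:

$$
\begin{aligned}
\frac{t_{\text{VGGNet}}}{t_{\text{DSCNN}}} &= \frac{4.242}{0.239} \approx 17.7, &\qquad
\text{Acc}_{\text{VGGNet}} - \text{Acc}_{\text{DSCNN}} &= 91.75 - 89.13 = 2.62 \text{ points},\\
\frac{t_{\text{LeNet}}}{t_{\text{DSCNN}}} &= \frac{1.256}{0.239} \approx 5.3, &\qquad
\text{Acc}_{\text{DSCNN}} - \text{Acc}_{\text{LeNet}} &= 89.13 - 69.31 = 19.82 \text{ points}.
\end{aligned}
$$

On these figures, DSCNN gives up about 2.6 percentage points of accuracy relative to VGGNet in exchange for a roughly 18-fold shorter prediction time, while being both faster and considerably more accurate than LeNet.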

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gu, M.; Li, K.-C.; Li, Z.; Han, Q.; Fan, W. Recognition of Crop Diseases Based on Depthwise Separable Convolution in Edge Computing. Sensors 2020, 20, 4091. https://doi.org/10.3390/s20154091