Effects of the Level of Interactivity of a Social Robot and the Response of the Augmented Reality Display in Contextual Interactions of People with Dementia

Abstract

1. Introduction

- To explore the effects of contextual interactions between PWD and an animal-like social robot embedded in the augmented responsive environment in an LTC facility;

- To investigate which experimental condition is most effective in enhancing engagement in PWD, provoking positive affective responses, and reducing apathy-related behaviors.

2. Materials and Methods

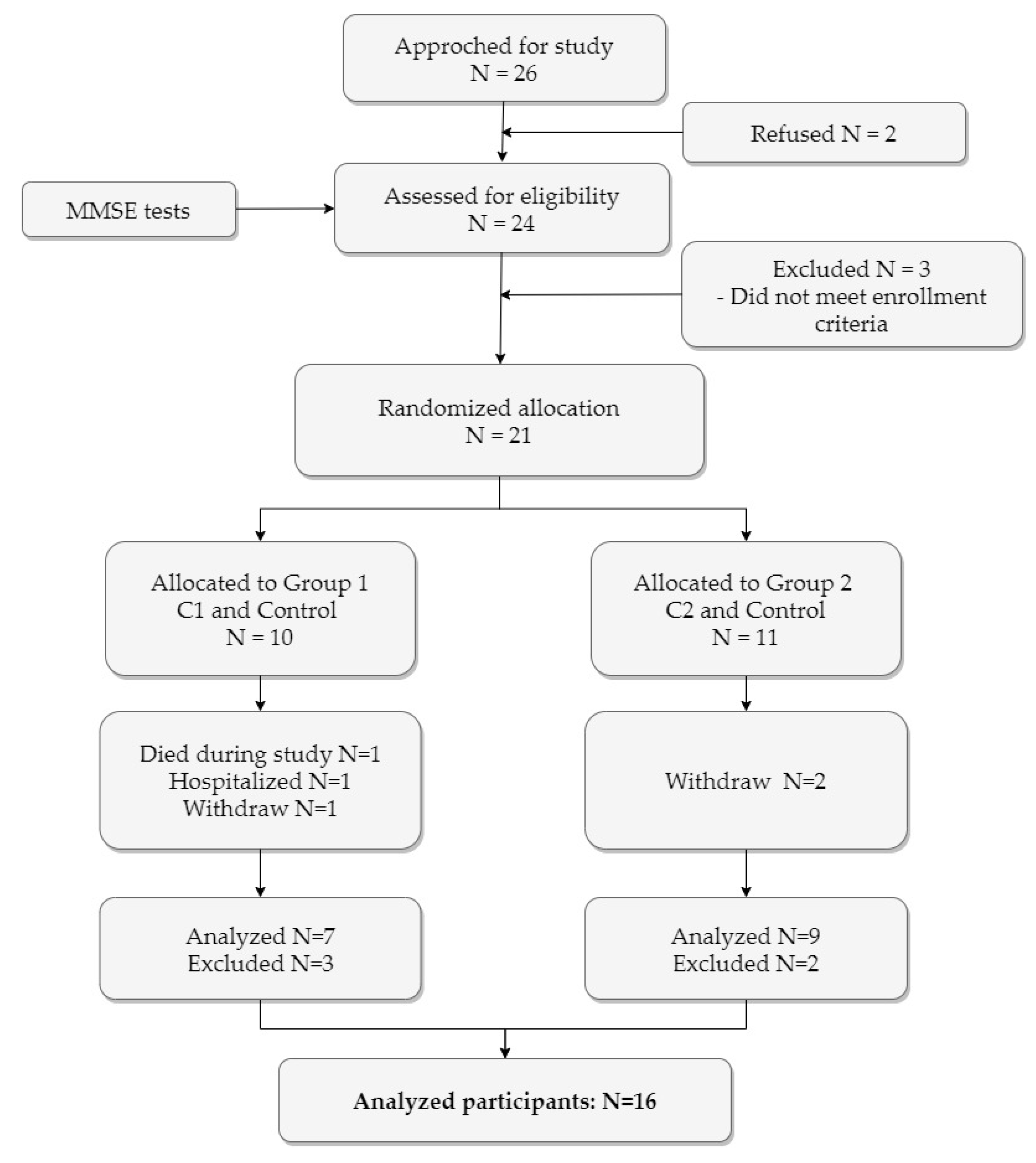

2.1. Participants

2.2. Study Design

2.3. Measures

- Observational Measurement of Engagement (OME) [32] was adopted as it is the most widely used scale for assessing the engagement of PWD. It measures engagement through the duration of time that the resident is involved with the stimulus, and through the level of attention and the attitude towards the stimulus, each rated on a separate 7-point Likert scale;

- The Engagement of a Person with Dementia Scale (EPWDS) [33], which uses a 5-point Likert scale, was also adopted for evaluating user engagement, as it complements the OME by capturing the verbal and social aspects of engagement. The EPWDS emphasizes both the social interaction and the activity participation (engagement with the stimulus) of PWD across LTC settings. This 10-item scale measures five dimensions of engagement: affective, visual, verbal, behavioral, and social engagement. Each dimension was assessed separately using a positive and a negative subscale, and the dimensions were then interpreted collectively to provide an overall impression of engagement. Each item indicates the extent to which the rater agrees or disagrees with the statement (“strongly disagree” = 1, “strongly agree” = 5);

- Observed Emotional Rating Scale (OERS) [34], a 5-point Likert scale for evaluating five affective states: pleasure; anger; anxiety/fear; sadness; general alertness. Items were scored according to the intensity observed during the experiment sessions;

- Person–Environment Apathy Rating–Apathy subscale (PEAR–Apathy subscale) [35], a 4-point Likert scale for assessing apathy-related behaviors. It evaluates symptoms of apathy in cognitive, behavioral, and affective domains through six ratings: facial expressions; eye contact; physical engagement; purposeful activity; verbal tone; verbal expression.
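To make the EPWDS structure described above concrete, the following is a minimal scoring sketch. The text states only that each of the five dimensions has one positive and one negative item rated from 1 to 5. The reverse-coding of the negative item (`6 - rating`) and the function name `score_epwds` are assumptions made for illustration, not a rule confirmed by the text.

```python
from typing import Dict, Tuple

# The five EPWDS dimensions named in the text.
DIMENSIONS = ("affective", "visual", "verbal", "behavioral", "social")


def score_epwds(ratings: Dict[str, Tuple[int, int]]) -> Dict[str, int]:
    """Score one observation session.

    `ratings` maps each dimension to (positive_item, negative_item),
    each rated 1 ("strongly disagree") to 5 ("strongly agree").
    """
    scores: Dict[str, int] = {}
    for dim in DIMENSIONS:
        positive, negative = ratings[dim]
        for value in (positive, negative):
            if not 1 <= value <= 5:
                raise ValueError(f"{dim}: rating {value} is outside 1..5")
        # ASSUMPTION: the negative item is reverse-coded so that a higher
        # dimension score always means more engagement (range 2..10).
        scores[dim] = positive + (6 - negative)
    # Composite sum across the five dimensions (range 10..50 under
    # the assumption above).
    scores["composite"] = sum(scores[dim] for dim in DIMENSIONS)
    return scores


# Hypothetical ratings: "agree" on every positive item,
# "disagree" on every negative item.
example = {dim: (4, 2) for dim in DIMENSIONS}
print(score_epwds(example))
```

Under this assumed rule each dimension ranges from 2 to 10 and the composite from 10 to 50, which matches the magnitude of the dimension means and composite sums reported in Appendix B.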

2.4. Ethical Considerations

3. Data Analysis

4. Results

4.1. Participant Demographics

4.2. Results of the Observational Rating Scales Analysis

5. Discussion and Conclusions

5.1. Effects of Contextual Interactions on PWD

5.2. Reflections for Measurement Use

5.3. Limitations and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Rating Scale Items | | k Value |
|---|---|---|
| OME | | |
| Attention | Most of the time | 0.776 |
| | Highest level | 0.655 |
| Attitude | Most of the time | 0.685 |
| | Highest level | 0.675 |
| EPWDS | | |
| Affective Engagement | | 0.612 |
| Visual Engagement | | 0.664 |
| Verbal Engagement | | 0.719 |
| Behavioral Engagement | | 0.678 |
| Social Engagement | | 0.606 |
| OERS | | |
| Pleasure | | 0.782 |
| General Alertness | | 0.642 |
| Anger | | 1.000 |
| Anxiety/Fear | | 0.755 |
| Sadness | | 1.000 |
| PEAR–Apathy | | |
| Facial Expression | | 0.708 |
| Eye Contact | | 0.649 |
| Physical Engagement | | 0.750 |
| Purposeful Activity | | 0.714 |
| Verbal Tone | | 0.745 |
| Verbal Expression | | 0.723 |
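For readers unfamiliar with agreement coefficients, the sketch below shows how a k value of the kind listed in the table above could be computed for two raters. It assumes that k denotes the unweighted Cohen's kappa, which the table does not state explicitly. The function name and the example ratings are hypothetical and are not data from the study.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same sessions."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters need the same non-zero number of ratings")
    n = len(rater_a)
    # Observed agreement: share of sessions with identical ratings.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions,
    # summed over all rating categories.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical category throughout; kappa is
        # formally undefined here. Returning 1.0 is a convention chosen
        # for this sketch only.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical ratings of five sessions on one item.
rater_1 = [1, 2, 3, 3, 2]
rater_2 = [1, 2, 3, 2, 2]
print(round(cohens_kappa(rater_1, rater_2), 4))
```

Because the scales are ordinal, a weighted kappa would also be a reasonable choice; the unweighted form is used here only because it is the simplest to follow.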

Appendix B

| Rating Scale Items | | C1, M (SD) | C2, M (SD) | CC, M (SD) | p Value (Sig.) | p Value (C1-CC) | p Value (C2-CC) | p Value (C1-C2) |
|---|---|---|---|---|---|---|---|---|
| OME | | | | | | | | |
| Duration (in seconds) | | 678.38 (244.94) | 502.46 (266.38) | 671.04 (372.91) | 0.260 | 0.947 | 0.128 | 0.169 |
| Attention | Most of the time | 5.69 (0.95) | 4.85 (0.99) | 4.85 (1.19) | 0.094 | - | - | - |
| | Highest level | 6.23 (0.83) | 5.69 (1.03) | 5.54 (0.95) | 0.111 | - | - | - |
| Attitude | Most of the time | 5.46 (1.20) | 4.31 (1.18) | 4.62 (1.09) | 0.041 | 0.125 | 1.000 | 0.049 |
| | Highest level | 5.92 (1.04) | 5.00 (1.29) | 5.19 (0.98) | 0.094 | - | - | - |
| EPWDS | | | | | | | | |
| Affective Engagement | | 8.38 (1.50) | 7.62 (1.50) | 7.77 (2.42) | 0.578 | 0.375 | 0.824 | 0.337 |
| Visual Engagement | | 8.77 (1.69) | 7.85 (1.82) | 7.04 (1.73) | 0.018 | 0.005 ** | 0.179 | 0.183 |
| Verbal Engagement | | 8.23 (1.59) | 7.85 (2.04) | 7.54 (1.73) | 0.517 | 0.257 | 0.612 | 0.583 |
| Behavioral Engagement | | 8.85 (1.21) | 8.00 (2.19) | 6.58 (0.76) | <0.001 *** | <0.001 *** | 0.003 ** | 0.118 |
| Social Engagement | | 7.69 (1.55) | 6.77 (1.69) | 6.12 (1.14) | 0.007 ** | 0.002 ** | 0.175 | 0.099 |
| Composite Sum | | 41.92 (6.98) | 38.08 (8.25) | 35.04 (6.53) | 0.022 | 0.006 ** | 0.213 | 0.173 |
| OERS | | | | | | | | |
| Pleasure | | 2.54 (0.97) | 2.15 (0.69) | 2.15 (0.93) | 0.419 | - | - | - |
| General Alertness | | 4.38 (0.77) | 4.15 (0.80) | 3.69 (1.02) | 0.104 | - | - | - |
| Negative Affect | | 3.38 (0.65) | 3.54 (0.66) | 3.42 (0.76) | 0.669 | - | - | - |
| PEAR–Apathy | | | | | | | | |
| Facial Expression | | 2.15 (0.80) | 2.69 (0.86) | 2.69 (0.84) | 0.120 | - | - | - |
| Eye Contact | | 1.23 (0.44) | 1.54 (0.78) | 1.81 (0.63) | 0.024 | 0.023 | 0.438 | 0.880 |
| Physical Engagement | | 1.69 (0.75) | 2.69 (1.18) | 3.54 (0.71) | <0.001 *** | <0.001 *** | 0.081 | 0.088 |
| Purposeful Activity | | 1.62 (0.65) | 2.46 (1.05) | 3.42 (0.90) | <0.001 *** | <0.001 *** | 0.043 * | 0.191 |
| Verbal Tone | | 2.08 (0.64) | 2.46 (0.78) | 2.42 (0.64) | 0.345 | - | - | - |
| Verbal Expression | | 1.46 (0.78) | 2.00 (1.16) | 2.35 (0.85) | 0.016 | 0.013 | 0.574 | 0.542 |
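As a quick arithmetic check on the table above, the sketch below sums the five EPWDS dimension means per condition and compares the result with the reported Composite Sum. The means are copied from the table; the interpretation that the composite is the plain sum of the five dimensions is an assumption, and the variable and function names are illustrative.

```python
# EPWDS dimension means per condition, in the table's row order:
# affective, visual, verbal, behavioral, social.
EPWDS_MEANS = {
    "C1": [8.38, 8.77, 8.23, 8.85, 7.69],
    "C2": [7.62, 7.85, 7.85, 8.00, 6.77],
    "CC": [7.77, 7.04, 7.54, 6.58, 6.12],
}

# Composite Sum means as reported in the table.
REPORTED_COMPOSITE = {"C1": 41.92, "C2": 38.08, "CC": 35.04}


def composite_gap(condition: str) -> float:
    """Absolute difference between summed dimension means and the reported composite."""
    return abs(sum(EPWDS_MEANS[condition]) - REPORTED_COMPOSITE[condition])


for condition in EPWDS_MEANS:
    total = sum(EPWDS_MEANS[condition])
    print(f"{condition}: summed means = {total:.2f}, "
          f"reported = {REPORTED_COMPOSITE[condition]:.2f}, "
          f"gap = {composite_gap(condition):.2f}")
```

The sums agree with the reported composites to within 0.01, a gap small enough to be explained by rounding each mean to two decimals, so the composite is consistent with a simple sum of the dimension scores.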

References

1. Cadieux, M.-A.; Garcia, L.J.; Patrick, J. Needs of people with dementia in long-term care: A systematic review. Am. J. Alzheimer Dis. Dement. 2013, 28, 723–733.
2. Hancock, G.A.; Woods, B.; Challis, D.; Orrell, M. The needs of older people with dementia in residential care. Int. J. Geriatr. Psychiatry J. Psychiatry Late Life Allied Sci. 2006, 21, 43–49.
3. Topo, P. Technology studies to meet the needs of people with dementia and their caregivers: A literature review. J. Appl. Gerontol. 2009, 28, 5–37.
4. Takayanagi, K.; Kirita, T.; Shibata, T. Comparison of verbal and emotional responses of elderly people with mild/moderate dementia and those with severe dementia in responses to seal robot, PARO. Front. Aging Neurosci. 2014, 6, 257.
5. Chang, W.-L.; Šabanović, S.; Huber, L. Situated analysis of interactions between cognitively impaired older adults and the therapeutic robot PARO. In Proceedings of the International Conference on Social Robotics, Bristol, UK, 27–29 October 2013; pp. 371–380.
6. Hung, L.; Liu, C.; Woldum, E.; Au-Yeung, A.; Berndt, A.; Wallsworth, C.; Horne, N.; Gregorio, M.; Mann, J.; Chaudhury, H. The benefits of and barriers to using a social robot PARO in care settings: A scoping review. BMC Geriatr. 2019, 19, 232.
7. Kramer, S.C.; Friedmann, E.; Bernstein, P.L. Comparison of the effect of human interaction, animal-assisted therapy, and AIBO-assisted therapy on long-term care residents with dementia. Anthrozoös 2009, 22, 43–57.
8. Libin, A.; Cohen-Mansfield, J. Therapeutic robocat for nursing home residents with dementia: Preliminary inquiry. Am. J. Alzheimer Dis. Dement. 2004, 19, 111–116.
9. Stiehl, W.D.; Breazeal, C.; Han, K.-H.; Lieberman, J.; Lalla, L.; Maymin, A.; Salinas, J.; Fuentes, D.; Toscano, R.; Tong, C.H. The huggable: A therapeutic robotic companion for relational, affective touch. In Proceedings of the 2006 3rd IEEE Consumer Communications and Networking Conference, Las Vegas, NV, USA, 8–10 January 2006.
10. Moyle, W.; Jones, C.; Sung, B.; Bramble, M.; O’Dwyer, S.; Blumenstein, M.; Estivill-Castro, V. What effect does an animal robot called CuDDler have on the engagement and emotional response of older people with dementia? A pilot feasibility study. Int. J. Soc. Robot. 2016, 8, 145–156.
11. Fernaeus, Y.; Håkansson, M.; Jacobsson, M.; Ljungblad, S. How do you play with a robotic toy animal? A long-term study of Pleo. In Proceedings of the 9th International Conference on Interaction Design and Children, Barcelona, Spain, 9–12 June 2010; pp. 39–48.
12. Perugia, G.; Rodríguez-Martín, D.; Boladeras, M.D.; Mallofré, A.C.; Barakova, E.; Rauterberg, M. Electrodermal activity: Explorations in the psychophysiology of engagement with social robots in dementia. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 1248–1254.
13. Moyle, W.; Jones, C.J.; Murfield, J.E.; Thalib, L.; Beattie, E.R.; Shum, D.K.; O’Dwyer, S.T.; Mervin, M.C.; Draper, B.M. Use of a robotic seal as a therapeutic tool to improve dementia symptoms: A cluster-randomized controlled trial. J. Am. Med. Dir. Assoc. 2017, 18, 766–773.
14. Chu, M.-T.; Khosla, R.; Khaksar, S.M.S.; Nguyen, K. Service innovation through social robot engagement to improve dementia care quality. Assist. Technol. 2017, 29, 8–18.
15. Mordoch, E.; Osterreicher, A.; Guse, L.; Roger, K.; Thompson, G. Use of social commitment robots in the care of elderly people with dementia: A literature review. Maturitas 2013, 74, 14–20.
16. Góngora Alonso, S.; Hamrioui, S.; de la Torre Díez, I.; Motta Cruz, E.; López-Coronado, M.; Franco, M. Social robots for people with aging and dementia: A systematic review of literature. Telemed. e-Health 2019, 25, 533–540.
17. Peluso, S.; De Rosa, A.; De Lucia, N.; Antenora, A.; Illario, M.; Esposito, M.; De Michele, G. Animal-assisted therapy in elderly patients: Evidence and controversies in dementia and psychiatric disorders and future perspectives in other neurological diseases. J. Geriatr. Psychiatry Neurol. 2018, 31, 149–157.
18. Yakimicki, M.L.; Edwards, N.E.; Richards, E.; Beck, A.M. Animal-assisted intervention and dementia: A systematic review. Clin. Nurs. Res. 2019, 28, 9–29.
19. Lai, N.M.; Chang, S.M.W.; Ng, S.S.; Tan, S.L.; Chaiyakunapruk, N.; Stanaway, F. Animal-assisted therapy for dementia. Cochrane Database Syst. Rev. 2019.
20. Tamura, T.; Yonemitsu, S.; Itoh, A.; Oikawa, D.; Kawakami, A.; Higashi, Y.; Fujimooto, T.; Nakajima, K. Is an entertainment robot useful in the care of elderly people with severe dementia? J. Gerontol. Ser. A Biol. Sci. Med. Sci. 2004, 59, M83–M85.
21. Moyle, W.; Jones, C.; Murfield, J.; Thalib, L.; Beattie, E.; Shum, D.; Draper, B. Using a therapeutic companion robot for dementia symptoms in long-term care: Reflections from a cluster-RCT. Aging Ment. Health 2019, 23, 329–336.
22. Marx, M.S.; Cohen-Mansfield, J.; Regier, N.G.; Dakheel-Ali, M.; Srihari, A.; Thein, K. The impact of different dog-related stimuli on engagement of persons with dementia. Am. J. Alzheimer Dis. Dement. 2010, 25, 37–45.
23. Frens, J.W. Designing for rich interaction: Integrating form, interaction, and function. In Proceedings of the 3rd Symposium of Design Research Conference, Basel, Switzerland, 17–18 November 2006; pp. 91–106.
24. Wada, K.; Shibata, T.; Musha, T.; Kimura, S. Robot therapy for elders affected by dementia. IEEE Eng. Med. Biol. Mag. 2008, 27, 53–60.
25. Salam, H.; Chetouani, M. A multi-level context-based modeling of engagement in human-robot interaction. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; pp. 1–6.
26. Hoffman, G.; Bauman, S.; Vanunu, K. Robotic experience companionship in music listening and video watching. Person. Ubiquit. Comput. 2016, 20, 51–63.
27. Hendrix, J.; Feng, Y.; van Otterdijk, M.; Barakova, E. Adding a context: Will it influence human-robot interaction of people living with dementia? In Proceedings of the International Conference on Social Robotics, Madrid, Spain, 26–29 November 2019; pp. 494–504.
28. Feng, Y.; Yu, S.; van de Mortel, D.; Barakova, E.; Hu, J.; Rauterberg, M. LiveNature: Ambient display and social robot-facilitated multi-sensory engagement for people with dementia. In Proceedings of the 2019 on Designing Interactive Systems Conference, San Diego, CA, USA, 23–28 June 2019; pp. 1321–1333.
29. Feng, Y.; Yu, S.; van de Mortel, D.; Barakova, E.; Rauterberg, M.; Hu, J. Closer to nature: Multi-sensory engagement in interactive nature experience for seniors with dementia. In Proceedings of the Sixth International Symposium of Chinese CHI, Montreal, QC, Canada, 21–22 April 2018; pp. 49–56.
30. Neal, I.; du Toit, S.H.; Lovarini, M. The use of technology to promote meaningful engagement for adults with dementia in residential aged care: A scoping review. Int. Psychogeriatr. 2019.
31. Lazar, A.; Thompson, H.J.; Piper, A.M.; Demiris, G. Rethinking the design of robotic pets for older adults. In Proceedings of the 2016 ACM Conference on Designing Interactive Systems, Brisbane, Australia, 4–8 June 2016; pp. 1034–1046.
32. Cohen-Mansfield, J.; Marx, M.S.; Freedman, L.S.; Murad, H.; Regier, N.G.; Thein, K.; Dakheel-Ali, M. The comprehensive process model of engagement. Am. J. Geriatr. Psychiatry 2011, 19, 859–870.
33. Jones, C.; Sung, B.; Moyle, W. Engagement of a Person with Dementia Scale: Establishing content validity and psychometric properties. J. Adv. Nurs. 2018, 74, 2227–2240.
34. Lawton, M.P.; Van Haitsma, K.; Klapper, J. Observed affect in nursing home residents with Alzheimer’s disease. J. Gerontol. Ser. B Psychol. Sci. Soc. Sci. 1996, 51, P3–P14.
35. Jao, Y.-L.; Algase, D.L.; Specht, J.K.; Williams, K. Developing the Person–Environment Apathy Rating for persons with dementia. Aging Ment. Health 2016, 20, 861–870.
36. Kolanowski, A.; Litaker, M.; Buettner, L.; Moeller, J.; Costa, J.; Paul, T. A randomized clinical trial of theory-based activities for the behavioral symptoms of dementia in nursing home residents. J. Am. Geriatr. Soc. 2011, 59, 1032–1041.
37. Fleiss, J.L.; Levin, B.; Paik, M.C. Statistical Methods for Rates and Proportions; John Wiley & Sons: Hoboken, NJ, USA, 2013.
38. Cohen, J. Citation-classic-a coefficient of agreement for nominal scales. Curr. Contents Soc. Behav. Sci. 1986, 18.
39. Cohen-Mansfield, J.; Dakheel-Ali, M.; Jensen, B.; Marx, M.S.; Thein, K. An analysis of the relationships among engagement, agitated behavior, and affect in nursing home residents with dementia. Int. Psychogeriatr. 2012, 24, 742–752.
40. Perugia, G.; Díaz-Boladeras, M.; Català-Mallofré, A.; Barakova, E.I.; Rauterberg, M. ENGAGE-DEM: A model of engagement of people with dementia. IEEE Trans. Affect. Comput. 2020.

| Variable | C1 | C2 | CC |
|---|---|---|---|
| Stimulus | The proactive robot: the robotic sheep responds to users’ strokes and touch by moving its head, neck, legs, and tail and by making baby-lamb sounds. | The static robot: the robotic sheep is turned off; however, its tactile features remain available and invite stroking and hugging. | No physical stimulus. |
| Context | Reactive context: the virtual sheep on the screen display responds to users’ strokes and touch by becoming active and approaching the user. | Dynamic context: the display plays a looped video with the same content as in C1. | Dynamic context: same as in C2. |

| Characteristics | G1 n = 7 | G2 n = 9 | Total N = 16 | p Value |
|---|---|---|---|---|
| Age, mean (SD) | 86.6 (4.2) | 84.1 (5.2) | 85.2 (4.8) | 0.325 |
| Female, n (%) | 5 (71.4) | 7 (77.8) | 12 (75.0) | 0.789 |
| Type of dementia, n (%) | | | | 0.717 |
| Alzheimer’s Dementia | 2 (28.6) | 3 (33.3) | 5 (31.3) | |
| Vascular Dementia | 1 (14.3) | 2 (22.2) | 3 (18.8) | |
| Mixed Dementia | 4 (57.1) | 4 (44.4) | 8 (50.0) | |
| Marital status, n (%) | | | | 0.598 |
| Single/Divorced | 1 (14.3) | 1 (11.1) | 2 (12.5) | |
| Married | 4 (57.1) | 4 (44.4) | 8 (50.0) | |
| Widowed | 2 (28.6) | 4 (44.4) | 6 (37.5) | |
| Stages according to staff records, n (%) | | | | 0.861 |
| Mild | 1 (14.3) | 1 (11.1) | 2 (12.5) | |
| Middle | 2 (28.6) | 3 (33.3) | 5 (31.3) | |
| Middle to severe | 3 (42.9) | 2 (22.2) | 5 (31.3) | |
| Severe | 1 (14.3) | 3 (33.3) | 4 (25.0) | |
| Cognitive functions reported by staff, n (%) | | | | 0.877 |
| Mild | 1 (14.3) | 2 (22.2) | 3 (18.8) | |
| Confused at times | 3 (42.9) | 3 (33.3) | 6 (37.5) | |
| Constantly confused | 3 (42.9) | 4 (44.4) | 7 (43.8) | |
| Wheelchair use, n (%) | 3 (42.9) | 3 (33.3) | 6 (37.5) | 0.719 |
| MMSE score, mean (SD) | 14 (5.3) | 11.3 (8.3) | 12.88 (7.1) | 0.475 |
| Range | 8–22 | 0–23 | 0–23 | |
| MMSE Stage, n (%) | | | | 0.509 |
| Stage 1 (>19) | 2 (28.6) | 2 (22.2) | 4 (25.0) | |
| Stage 2 (10–19) | 4 (57.1) | 4 (44.4) | 8 (50.0) | |
| Stage 3 (<10) | 1 (14.3) | 3 (33.3) | 4 (25.0) | |
| Length of stay, n (%) | | | | 0.967 |
| Six months or less | 1 (14.3) | 1 (11.1) | 2 (12.5) | |
| More than 6 months | 2 (28.6) | 3 (33.3) | 5 (31.3) | |
| More than 12 months | 4 (57.1) | 5 (55.6) | 9 (56.3) | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Feng, Y.; Barakova, E.I.; Yu, S.; Hu, J.; Rauterberg, G.W.M. Effects of the Level of Interactivity of a Social Robot and the Response of the Augmented Reality Display in Contextual Interactions of People with Dementia. Sensors 2020, 20, 3771. https://doi.org/10.3390/s20133771
