Abstract

Sparse dictionary learning (SDL) is a classic representation learning method and has been widely used in data analysis. Recently, the -norm () maximization has been proposed to solve SDL, which reshapes the problem into an optimization problem with orthogonality constraints. In this paper, we first propose an -norm maximization model for solving dual principal component pursuit (DPCP) based on the similarities between DPCP and SDL. Then, we propose a smooth unconstrained exact penalty model and show its equivalence with the -norm maximization model. Based on our penalty model, we develop an efficient first-order algorithm, PenNMF, for solving it and show its global convergence. Extensive experiments illustrate the high efficiency of PenNMF compared with other state-of-the-art algorithms for solving the -norm maximization with orthogonality constraints.

1. Introduction

In this paper, we focus on solving the optimization problem with orthogonality constraints:

where W is the variable, is a given data matrix, and denotes the identity matrix in . Moreover, the -norm is defined as with constant . For brevity, the orthogonality constraints in (1) can be expressed as . Here, denotes the Stiefel manifold in the real matrix space, which we simply refer to as the Stiefel manifold in the rest of this paper.

Sparse dictionary learning (SDL) exploits the low-dimensional features within a set of unlabeled data and therefore plays an important role in unsupervised representation learning. More specifically, given a data set that contains N samples in , SDL aims to compute a full-rank matrix called the dictionary, and an associated sparse representation that satisfies

or equivalently, find a such that

As a result, SDL can be solved by finding a , which leads to a sparse . Some existing works introduce the -norm or -norm penalty term to promote the underlying sparsity of and present various algorithms for solving the resulting optimization models; see [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15] for instance. Interested readers are referred to a recent paper [16] and the references therein. However, the -norm minimization-based models are known to be sensitive to noise, and the existing approaches are so far not efficient enough for real applications, which are often large-scale [17]. Consequently, a proper model with an efficient algorithm for SDL is desired, especially in the large-scale case.

Recently, an -norm maximization model was proposed in [17], which can recover the entire dictionary in a single run. This formulation is motivated by the fact that maximizing a higher-order norm promotes spikiness and sparsity at the same time. The authors of [17] demonstrate that the global optimizers of -norm maximization with orthogonality constraints are very close to the true dictionary. Moreover, the concavity of the objective function in Equation (1) enables a fast fixed-point type algorithm named matching, stretching, and projection (MSP), which achieves a significant speedup over existing methods based on -norm or -norm penalty minimization. As maximizing any higher-order norm over a lower-order norm constraint leads to sparse and spiky solutions, Shen et al. [18] extend the -norm maximization technique to a generalized -norm maximization (). In addition, they propose a gradient projection method (GPM) for solving it with guaranteed global convergence.
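To make the MSP iteration concrete, here is a minimal NumPy sketch of the ℓ4 case studied in [17], written in the notation of [17], where an orthogonal matrix A plays the role of W^T. It is not the reference implementation of [17]; the function names and the toy data model (a square orthogonal dictionary with Bernoulli-Gaussian sparse codes) are assumptions for illustration. Each step matches (forms A Y), stretches (cubes entrywise, which gives the gradient up to a factor 4), and projects (takes the orthogonal polar factor through an SVD).

```python
import numpy as np


def polar_factor(M):
    """Orthogonal polar factor U V^T of M (nearest orthogonal matrix in Frobenius norm)."""
    U, _, Vt = np.linalg.svd(M, full_matrices=False)
    return U @ Vt


def l4_objective(A, Y):
    """||A Y||_4^4, the quantity maximized by MSP."""
    return np.sum((A @ Y) ** 4)


def msp_l4(Y, A0, iters=30):
    """Sketch of MSP for max ||A Y||_4^4 subject to A^T A = I (A is n x n)."""
    A = A0.copy()
    for _ in range(iters):
        matched = A @ Y                      # matching
        stretched = matched ** 3             # stretching: entrywise cube
        A = polar_factor(stretched @ Y.T)    # projection onto the orthogonal group
    return A


# Toy data (assumed model): orthogonal dictionary, Bernoulli-Gaussian sparse codes
rng = np.random.default_rng(0)
n, N, theta = 10, 2000, 0.3
D_true = polar_factor(rng.standard_normal((n, n)))
X = rng.standard_normal((n, N)) * (rng.random((n, N)) < theta)
Y = D_true @ X
A0 = polar_factor(rng.standard_normal((n, n)))
A = msp_l4(Y, A0)
print(l4_objective(A0, Y), l4_objective(A, Y))
```

Because ||A Y||_4^4 is convex in A, each projected step cannot decrease the objective; the polar step inside the loop is exactly the orthonormalization that infeasible methods try to avoid.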

However, both MSP and GPM invoke polar decomposition to maintain feasibility in each iteration. As illustrated in [19,20,21], orthonormalization lacks concurrency, which results in low scalability in column-wise parallel computing, particularly when the number of columns is large.

Several infeasible approaches have been developed to avoid orthonormalization. Gao et al. [19] propose the proximal linearized augmented Lagrangian method (PLAM) as well as its enhanced version, PCAL. Based on the merit function used by Gao et al. [19], Xiao et al. [21] propose an exact penalty model with a convex and compact auxiliary constraint, named PenC, for optimization problems with orthogonality constraints. They propose an approximate gradient method named PenCF for solving PenC and establish its global convergence and local convergence rate under mild conditions. These infeasible approaches do not require orthonormalization in each iteration, and numerical experiments show their promising performance compared with existing state-of-the-art algorithms.

Although PCAL and PenCF avoid the orthonormalization process by taking infeasible steps, these approaches require additional constraints to keep the iterates in a compact set in , which can undermine their overall efficiency. Therefore, to develop an efficient algorithm for solving SDL, an infeasible model without constraints is desired.

Similar to the -norm penalty model for SDL, dual principal component pursuit (DPCP) aims to recover a normal vector in from samples contaminated by outliers. Specifically, DPCP solves the following nonsmooth nonconvex optimization problem with a spherical constraint:

Owing to its ability to recover an -dimensional hyperplane from , DPCP has wide applications in 3D computer vision, such as detecting planar structures in 3D point clouds in the KITTI dataset [22,23] and estimating relative poses from multiple views [24].

Existing convex approaches [25,26,27,28] to this problem are not scalable and are not competitive in high-dimensional cases [29]. On the other hand, the Random Sampling and Consensus (RANSAC) algorithm [30] has been one of the most popular methods in computer vision for the high relative dimension setting. RANSAC alternates between fitting a subspace to a randomly sampled minimal number of points ( in the case of DPCP) and measuring the quality of the selected subspace by the number of data points close to it. In particular, as described in [29], RANSAC can be extremely effective when the probability of sampling outlier-free samples within the allocated time budget is large. Recently, Tsakiris and Vidal [31] introduce Denoised-DPCP (DPCP-d) by minimizing over the constraints . In the same paper, they propose an Iteratively-Reweighted-Least-Squares algorithm (DPCP-IRLS) for solving the nonconvex DPCP problem (4) and demonstrate that DPCP-IRLS can successfully handle to of outliers while being more efficient than RANSAC. In addition, Zhu et al. [32] propose a projected subgradient-based algorithm named DPCP-PSGM, which is highly efficient at reconstructing the road plane in the KITTI dataset. Some approaches also use smoothing techniques to approximate the -norm term, such as Logcosh [8,33], the Huber loss [34], and the pseudo-Huber loss [5]; algorithms for minimizing a smooth objective function on the sphere can then be applied. Nonetheless, these smoothing techniques often introduce approximation errors, as the smooth objective functions usually lead to dense solutions. Qu et al. [35] and Sun et al. [8] propose a rounding step as postprocessing, which solves a linear program to achieve exact recovery [16] and thus incurs additional computational cost.
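For reference, the following sketch shows a projected subgradient scheme for DPCP, assuming the standard form min ||Y^T b||_1 subject to ||b||_2 = 1 with samples as the columns of Y. It follows the spirit of DPCP-PSGM [32], but the spectral initialization, the geometrically decaying stepsize, and all parameter values are illustrative assumptions, and it uses a Euclidean subgradient followed by renormalization; the function name is hypothetical.

```python
import numpy as np


def dpcp_psgm(Y, mu0=1e-3, decay=0.95, iters=200):
    """Projected subgradient sketch for min ||Y^T b||_1 s.t. ||b||_2 = 1."""
    # Spectral initialization: eigenvector of Y Y^T with the smallest eigenvalue
    _, vecs = np.linalg.eigh(Y @ Y.T)
    b = vecs[:, 0]
    init_val = np.abs(Y.T @ b).sum()
    best_b, best_val = b.copy(), init_val
    mu = mu0
    for _ in range(iters):
        g = Y @ np.sign(Y.T @ b)        # a Euclidean subgradient of ||Y^T b||_1
        b = b - mu * g
        b = b / np.linalg.norm(b)       # project back onto the unit sphere
        val = np.abs(Y.T @ b).sum()
        if val < best_val:              # subgradient steps are not monotone: keep the best
            best_b, best_val = b.copy(), val
        mu *= decay                     # geometrically diminishing stepsize (assumed rule)
    return best_b, best_val, init_val


# Toy data (assumed): inliers on a hyperplane through the origin plus Gaussian outliers
rng = np.random.default_rng(0)
dim = 3
normal = rng.standard_normal(dim)
normal /= np.linalg.norm(normal)
inliers = rng.standard_normal((dim, 500))
inliers -= np.outer(normal, normal @ inliers)   # project onto {x : normal^T x = 0}
outliers = rng.standard_normal((dim, 200))
Y = np.hstack([inliers, outliers])
Y /= np.linalg.norm(Y, axis=0)                  # unit-norm samples
b, val, val0 = dpcp_psgm(Y)
print(val0, val)
```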

The main difficulties in developing efficient algorithms are the nonsmoothness and nonconvexity of DPCP models. Observing the similarity between SDL and DPCP, we adopt the -norm maximization to reformulate DPCP as a smooth optimization problem on the sphere.

1.1. Contribution

In this paper, we first point out that the DPCP problem can be formulated as the -norm () maximization (1) with . Therefore, both SDL and DPCP can be unified as a smooth optimization problem on the Stiefel manifold.

Motivated by PenC [21], we propose the following novel penalty function:

where is the penalty parameter and is the operator that symmetrizes a square matrix, defined by . We show that is bounded from below, so the convex compact constraint in PenC can be dropped. Therefore, we propose the following smooth unconstrained penalty model for -norm maximization (PenNM):
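The penalty function and PenNM are not reproduced in this version of the text. As an assumption, the sketch below uses the form of the PenC merit function from [21], h(W) = f(W) - (1/2) <sym(W^T grad f(W)), W^T W - I_p> + (beta/4) ||W^T W - I_p||_F^2, together with f(W) = -(1/m) ||W^T Y||_m^m (the scaling of f is also assumed). It illustrates one property of such a merit function: on the Stiefel manifold both correction terms vanish, so h coincides with f there. All function names are hypothetical.

```python
import numpy as np


def sym(A):
    """Symmetric part of a square matrix."""
    return 0.5 * (A + A.T)


def f_val(W, Y, m):
    # Assumed objective: minimizing f maximizes the l_m norm of W^T Y
    return -np.sum(np.abs(W.T @ Y) ** m) / m


def f_grad(W, Y, m):
    Z = W.T @ Y                                       # p x N
    return -Y @ (np.abs(Z) ** (m - 1) * np.sign(Z)).T  # n x p


def penalty_h(W, Y, m, beta):
    """Assumed PenC-style penalty function (not necessarily the paper's exact h)."""
    p = W.shape[1]
    E = W.T @ W - np.eye(p)                           # feasibility residual
    Lam = sym(W.T @ f_grad(W, Y, m))                  # multiplier estimate
    return f_val(W, Y, m) - 0.5 * np.sum(Lam * E) + 0.25 * beta * np.sum(E * E)


rng = np.random.default_rng(0)
n, p, N, m = 6, 3, 200, 3
Y = rng.standard_normal((n, N))
W_feas = np.linalg.qr(rng.standard_normal((n, p)))[0]   # a point on the Stiefel manifold
W_infeas = 1.5 * W_feas                                 # an infeasible point
print(penalty_h(W_feas, Y, m, beta=10.0), f_val(W_feas, Y, m))
```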

We prove that Equation (6) with is an exact penalty function of Equation (1) under some mild conditions. Moreover, when , we verify that PenNM does not introduce any first-order stationary point other than those of Equation (1) and the origin. Based on the new exact penalty model, we propose an efficient orthonormalization-free first-order algorithm named PenNMF, which involves no additional constraint. In PenNMF, we use an approximate gradient in each iteration instead of the exact one, which involves the second-order derivative of the original objective. We establish the global convergence of PenNMF under mild conditions.

The numerical experiments on synthetic and real imagery data demonstrate that PenNMF outperforms PenCF and MSP/GPM in solving SDL, especially in large-scale cases. As an infeasible method, PenNMF shows superior performance compared with MSP and GPM, which invoke an orthonormalization process to maintain feasibility. Moreover, PenNMF also outperforms PenCF, implying the benefit of avoiding the constraints in PenC. In our numerical experiments on DPCP, our proposed model (1) with achieves accuracy comparable to that of the -norm-based model (4) in road-plane recovery on the KITTI dataset. In some test examples, (1) achieves even better accuracy than (4). Moreover, compared with other state-of-the-art algorithms such as DPCP-PSGM and DPCP-d, PenNMF takes less CPU time while achieving comparable accuracy in reconstructing the road plane on the KITTI dataset.

1.2. Notations and Terminologies

Norms: In this paper, denotes the element-wise m-th norm of a vector or matrix, i.e., . Moreover, denotes the Frobenius norm and denotes the spectral norm (the operator 2-norm), i.e., equals the largest singular value of A. We denote by the smallest singular value of a given matrix A. The operator stands for the Hadamard product of matrices A and B of the same size. and represent the component-wise absolute value and the l-th power of matrix A, respectively. For two symmetric matrices A and B, denotes that is positive semidefinite, and denotes that is positive definite.

Besides, W is a first-order stationary point of PenNM if and only if .

2. Model Description

In this section, we first discuss how to reformulate DPCP as an -norm maximization problem with orthogonality constraints. To construct an orthonormalization-free algorithm, we solve the penalty model (6) instead of directly solving (1). As an unconstrained penalty problem for (1), the model (6) may introduce additional infeasible first-order stationary points. Therefore, we characterize the equivalence between (1) and (6) to provide theoretical guarantees for our approach.

2.1. -Norm Maximization for DPCP Problems

Based on the fact that maximization of a higher-order norm promotes spikiness and sparsity, we maximize the -norm of over the constraint . The model can be expressed as

Although its constraint differs from that of (1), this model can be reshaped into the form of (1). Let be the rank-revealing QR decomposition of Y, where is an orthogonal matrix and is an upper-triangular matrix, and denote ; then the optimization model can be reshaped as

Clearly, problem (8) is a special case of (1) with . Moreover, if is a global minimizer of (8), the solution of the DPCP problem can be recovered by . The detailed framework for solving DPCP by -norm maximization is presented in Algorithm 1.

| Algorithm 1 Framework for Solving DPCP by -Norm Maximization. |

| Require: Data matrix |

| 1: Perform a QR factorization of Y, namely, where R is an upper-triangular matrix and is an orthogonal matrix; |

| 2: Compute the solution for (1); |

| 3: Return |
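The three steps of Algorithm 1 can be sketched in NumPy as follows. This sketch assumes that samples are the columns of Y, that the factorization is the reduced QR factorization Y^T = Q R (so that Y^T b = Q w with w = R b and ||Y^T b||_2 = ||w||_2), and that Y has full row rank so R is invertible; a plain QR without pivoting is used instead of a rank-revealing one. Step 2 uses a simple normalized-gradient fixed point (an MSP-style step for p = 1) as a stand-in solver, not PenNMF, and the final normalization of b is also an assumption since the recovery formula is not reproduced here.

```python
import numpy as np


def lm_dpcp(Y, m=3, iters=100, seed=0):
    """Hypothetical sketch of Algorithm 1 (samples are the columns of Y)."""
    # Step 1: reduced QR factorization Y^T = Q R (Q: N x n orthonormal columns, R: n x n)
    Q, R = np.linalg.qr(Y.T)
    # Step 2 (stand-in solver): maximize (1/m) ||Q w||_m^m over the unit sphere
    # by the normalized-gradient fixed point w <- grad / ||grad||
    rng = np.random.default_rng(seed)
    w = rng.standard_normal(Q.shape[1])
    w /= np.linalg.norm(w)
    for _ in range(iters):
        z = Q @ w
        grad = Q.T @ (np.abs(z) ** (m - 1) * np.sign(z))
        w = grad / np.linalg.norm(grad)
    # Step 3: undo the change of variables w = R b, then normalize (assumed)
    b = np.linalg.solve(R, w)
    return b / np.linalg.norm(b), w, Q, R


# Toy data (assumed): inliers on a hyperplane plus Gaussian outliers, unit-norm samples
rng = np.random.default_rng(0)
dim = 3
normal = rng.standard_normal(dim)
normal /= np.linalg.norm(normal)
inliers = rng.standard_normal((dim, 500))
inliers -= np.outer(normal, normal @ inliers)
Y = np.hstack([inliers, rng.standard_normal((dim, 200))])
Y /= np.linalg.norm(Y, axis=0)
b, w, Q, R = lm_dpcp(Y)
print(b)
```

Since the maximized function is convex, each normalized-gradient step does not decrease it, which is the same mechanism that makes MSP monotone.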

2.2. Equivalence

In this subsection, we first derive the expression for and .

Proposition 1.

The gradient and the Hessian of can be expressed as

respectively. Moreover, the gradient of can be formulated as

Proof.

From the work in [17] we have . Based on the expression for , the Hessian of f can be expressed as . As a result, .

Therefore, based on ([21], Equation 2.8), the gradient of can be formulated as

□
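Formulas like those in Proposition 1 are easy to get wrong by a sign or a transpose, so a finite-difference check is a cheap safeguard. The sketch below assumes f(W) = -(1/m) ||W^T Y||_m^m; with Z = W^T Y, its gradient is -Y (|Z|^(m-1) ∘ sign(Z))^T and its Hessian acts on a direction D as -(m-1) Y (|Z|^(m-2) ∘ (D^T Y))^T. These expressions are derived here for the assumed f and may differ from the paper's in scaling; the function names are hypothetical.

```python
import numpy as np


def f_val(W, Y, m):
    return -np.sum(np.abs(W.T @ Y) ** m) / m


def f_grad(W, Y, m):
    Z = W.T @ Y
    return -Y @ (np.abs(Z) ** (m - 1) * np.sign(Z)).T


def f_hess_vec(W, D, Y, m):
    # Directional derivative of f_grad along D
    Z = W.T @ Y
    return -(m - 1) * Y @ (np.abs(Z) ** (m - 2) * (D.T @ Y)).T


rng = np.random.default_rng(1)
n, p, N, m = 6, 2, 50, 3
Y = rng.standard_normal((n, N))
W = rng.standard_normal((n, p))
D = rng.standard_normal((n, p))
eps = 1e-5

# Central differences of f and of grad f along D
fd_dir = (f_val(W + eps * D, Y, m) - f_val(W - eps * D, Y, m)) / (2 * eps)
an_dir = np.sum(f_grad(W, Y, m) * D)
grad_err = abs(fd_dir - an_dir) / (np.linalg.norm(f_grad(W, Y, m)) * np.linalg.norm(D))

fd_hv = (f_grad(W + eps * D, Y, m) - f_grad(W - eps * D, Y, m)) / (2 * eps)
an_hv = f_hess_vec(W, D, Y, m)
hess_err = np.linalg.norm(fd_hv - an_hv) / np.linalg.norm(an_hv)
print(grad_err, hess_err)
```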

With the expression for , we can establish the equivalence between (1) and our proposed model (6). The equivalence is stated in Theorem 2, and the main body of its proof is presented in Appendix A.

Theorem 2.

Theorem 2 characterizes the relationship between the first-order stationary points of (1) and those of (6). Namely, any first-order stationary point of the penalty model that is not a first-order stationary point of the original model (1) lies far away from the Stiefel manifold. When , we can derive a stronger result on these additional first-order stationary points in Corollary 3.

Corollary 3.

Theorem 2 characterizes the equivalence between (1) and (6) in the sense that all infeasible first-order stationary points of (6) are relatively far away from the constraint . Moreover, Corollary 3 shows that when , the only infeasible first-order stationary point of (6) is 0. Therefore, when we obtain a first-order stationary point W of (6) near the constraint, we can conclude that W is a first-order stationary point of (1). Instead of directly solving (1), we can compute a first-order stationary point of (6) and thus avoid intensive orthonormalization in the computation.

3. Algorithm

3.1. Global Convergence

In this section, we focus on developing an infeasible approach for solving (6). Computing the gradient of involves the second-order derivative of the original objective, which is typically even more expensive than an iteration of MSP/GPM. Therefore, we solve (6) by an approximate gradient descent algorithm. Letting be the approximation of the gradient of , we present the detailed algorithm in Algorithm 2.

| Algorithm 2 First-Order Method for Solving (6). (PenNMF) |

| Require:, ; |

| 1: Randomly choose satisfying , set ; |

| 2: while not terminate do |

| 3: Compute inexact gradient ; |

| 4: Compute stepsize ; |

| 5: ; |

| 6: ; |

| 7: end while |

| 8: Return |
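The loop structure of Algorithm 2 can be illustrated with the hypothetical sketch below. Because the inexact gradient of step 3 is not reproduced here, it substitutes a simplified direction, D(W) = grad f(W) - W sym(W^T grad f(W)) + beta W (W^T W - I_p), which drops all second-order terms; it also uses a fixed stepsize instead of the rule in step 4, and the values of beta and eta as well as f(W) = -(1/m) ||W^T Y||_m^m are assumptions. What it does show is that no QR, SVD, or polar step is needed inside the loop: the penalty term alone keeps the iterates close to the Stiefel manifold.

```python
import numpy as np


def sym(A):
    return 0.5 * (A + A.T)


def f_grad(W, Y, m):
    # Gradient of the assumed objective f(W) = -(1/m) ||W^T Y||_m^m
    Z = W.T @ Y
    return -Y @ (np.abs(Z) ** (m - 1) * np.sign(Z)).T


def approx_direction(W, Y, m, beta):
    """Hypothetical first-order-only direction (not the paper's exact step 3)."""
    G = f_grad(W, Y, m)
    p = W.shape[1]
    return G - W @ sym(W.T @ G) + beta * W @ (W.T @ W - np.eye(p))


def orth_free_descent(Y, W0, m=3, beta=200.0, eta=1e-3, iters=500):
    """W <- W - eta * D(W): no orthonormalization inside the loop."""
    W = W0.copy()
    for _ in range(iters):
        W = W - eta * approx_direction(W, Y, m, beta)
    return W


rng = np.random.default_rng(0)
n, p, N = 8, 4, 1000
Y = rng.standard_normal((n, N))
Y /= np.linalg.norm(Y, axis=0)                   # unit-norm samples
W0 = np.linalg.qr(rng.standard_normal((n, p)))[0]
W = orth_free_descent(Y, W0)
print("feasibility violation:", np.linalg.norm(W.T @ W - np.eye(p)))
```

With these toy settings the feasibility violation is expected to stay small; in practice, the stepsize and beta would be chosen as described in Section 3.2.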

Next, we establish the convergence of PenNMF in Theorem 4, which shows the global convergence and worst-case convergence rate of PenNMF under mild conditions. The main body of the proof is presented in Appendix B.

Theorem 4.

(Global convergence) Suppose and . Let be the iterate sequence generated by PenNMF, starting from any initial point satisfying , with stepsize , where . Then, weakly converges to a first-order stationary point of (1). Moreover, for any , the convergence rate of PenNMF can be estimated by

3.2. Some Practical Settings

As shown in Algorithm 2, the hyperparameters in PenNMF are the penalty parameter and the stepsize . In the theoretical analysis of PenNMF, the upper bound on adopted in Theorem 4 is too restrictive in practice. Many adaptive stepsizes exist for first-order algorithms; here we consider the Barzilai–Borwein (BB) stepsize [36],

and alternating Barzilai–Borwein (ABB) stepsize [37],

where and , . We suggest choosing the ABB stepsize in PenNMF, and we use it for PenNMF in all our numerical experiments.
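The BB and ABB stepsizes can be computed as below. As an assumption, the difference of consecutive iterates and the difference of consecutive approximate gradients play the roles of the usual BB quantities, and ABB is taken to use the long BB step on odd iterations and the short one on even iterations; other alternation rules exist. The function names and the zero-division fallback are hypothetical.

```python
import numpy as np


def bb_stepsizes(W, W_prev, D, D_prev, fallback=1e-3):
    """Return the (long, short) BB stepsizes from consecutive iterates and directions."""
    s = W - W_prev                  # iterate difference
    y = D - D_prev                  # approximate-gradient difference
    sy = abs(np.sum(s * y))
    if sy == 0.0:
        return fallback, fallback   # safeguard against division by zero
    bb1 = np.sum(s * s) / sy        # long BB step
    bb2 = sy / np.sum(y * y)        # short BB step
    return bb1, bb2


def abb_stepsize(k, W, W_prev, D, D_prev):
    """Alternating BB: long step on odd k, short step on even k (one common convention)."""
    bb1, bb2 = bb_stepsizes(W, W_prev, D, D_prev)
    return bb1 if k % 2 == 1 else bb2


rng = np.random.default_rng(0)
W_prev, W = rng.standard_normal((5, 3)), rng.standard_normal((5, 3))
# On a quadratic whose gradient is 4 W, both BB steps equal the inverse curvature 1/4
bb1, bb2 = bb_stepsizes(W, W_prev, 4 * W, 4 * W_prev)
print(bb1, bb2)
```

By the Cauchy-Schwarz inequality the short step never exceeds the long one, which is why alternating between them mixes aggressive and conservative steps.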

Another parameter is , which controls the smooth penalty term in . Similarly, the lower bound on in Theorem 4 is too large to be practical. In our numerical examples, we use the constant , which is an approximation to , as an upper bound for . Following [21], we suggest choosing the penalty parameter by .

Additionally, to achieve high accuracy in feasibility, we apply polar factorization to the final solutions generated by PenCF and PenNMF as the default postprocessing step. More precisely, when we compute the final solution by PenNMF, we compute its rank-revealing singular value decomposition and return . Using the same proof techniques as in [21], this postprocessing reduces both the feasibility violation and the function value. Moreover, the numerical experiments in [19] show that this orthonormalization step only slightly changes . Therefore, we suggest performing this postprocessing for PenNMF.
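The polar postprocessing amounts to replacing all singular values of the final iterate by ones. The sketch below is a minimal NumPy version (the function name is hypothetical). It also checks the well-known fact that U V^T is the closest point on the Stiefel manifold to W in the Frobenius norm, which is why the step perturbs a nearly feasible iterate only slightly.

```python
import numpy as np


def polar_postprocess(W):
    """Return U V^T from the thin SVD W = U diag(s) V^T (assumes full column rank)."""
    U, _, Vt = np.linalg.svd(W, full_matrices=False)
    return U @ Vt


rng = np.random.default_rng(0)
n, p = 8, 3
W_feas = np.linalg.qr(rng.standard_normal((n, p)))[0]   # a point on the Stiefel manifold
W = W_feas + 1e-3 * rng.standard_normal((n, p))         # slightly infeasible final iterate
W_post = polar_postprocess(W)
print(np.linalg.norm(W.T @ W - np.eye(p)), np.linalg.norm(W_post.T @ W_post - np.eye(p)))
```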

4. Numerical Examples

In this section, we present our preliminary numerical examples. We compare our algorithm with some state-of-the-art algorithms on SDL and DPCP problems, which are formulated as (1) and (8), respectively. Then, we observe the performance of our algorithm under different selections of parameters, and then choose the default setting. All the numerical experiments in this section are tested on an Intel(R) Core(R) Silver 4110 CPU @ 2.1 GHz, with 32 cores and 394 GB of memory running under Ubuntu 18.04 and MATLAB R2018a.

4.1. Numerical Results on Sparse Dictionary Learning

In this subsection, we mainly compare the numerical performance of PenNMF with some state-of-the-art algorithms on SDL. As illustrated in Table 2 in [17], MSP is significantly faster than the Riemannian subgradient method [3] and the Riemannian trust-region method [8]. Therefore, to better illustrate the performance of PenNMF, we compare it with state-of-the-art algorithms for solving (1), which is a smooth optimization problem with orthogonality constraints. We first select two state-of-the-art algorithms for optimization problems with orthogonality constraints. One is Manopt [38,39], a projection-based feasible method; in our numerical tests, we use its nonlinear conjugate gradient solver with an inexact line-search strategy to accelerate Manopt. The other is PenCF [21], an infeasible approach for optimization problems with orthogonality constraints. We apply the alternating Barzilai–Borwein stepsize to accelerate PenNMF and use the default parameter settings described in [21]. In addition, we test the MSP algorithm [17] and the GPM algorithm [18]. It is worth mentioning that when , MSP and GPM are actually the same. According to the numerical examples in [18], has better recovery quality than the case . Therefore, in our numerical experiments, we test the mentioned algorithms on the case where .

The stopping criterion for Manopt and MSP/GPM is , while that for PenCF and PenNMF is . The maximum number of iterations for all compared algorithms is set to 200.

In all test examples, we randomly generate the sparse representation X by and the dictionary by randomly selecting a point on the Stiefel manifold. Then, the original data matrix Y is constructed by . To test the performance of all compared algorithms, we add different types of noise to . We first fix the level of noise and choose n from 20 to 100. Then, we test the performance of the compared algorithms with different types of noise while fixing . In our numerical tests, “Noise” denotes Gaussian noise, where Y is constructed by . The term “Outliers” denotes Gaussian outliers, where . Additionally, the term “Corruption” refers to Gaussian corruption of , which is achieved by . Finally, “CPU time” denotes the average run time, while “Error” denotes the , where denotes the final output of each compared algorithm.
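A toy version of this data pipeline is sketched below. The exact generation formulas are not reproduced in this text, so everything here is an assumption: Bernoulli-Gaussian sparse codes with sparsity level theta, a Haar-random orthogonal dictionary drawn through a sign-corrected QR factorization, and three hypothetical perturbation functions standing in for the “Noise”, “Outliers”, and “Corruption” settings.

```python
import numpy as np


def random_orthogonal(n, rng):
    # Haar-distributed orthogonal matrix: QR of a Gaussian matrix with a sign correction
    Q, R = np.linalg.qr(rng.standard_normal((n, n)))
    return Q * np.sign(np.diag(R))


def bernoulli_gaussian(n, N, theta, rng):
    # Each entry is nonzero with probability theta; nonzero entries are standard normal
    return rng.standard_normal((n, N)) * (rng.random((n, N)) < theta)


def add_noise(Y, sigma, rng):               # hypothetical "Noise": dense Gaussian noise
    return Y + sigma * rng.standard_normal(Y.shape)


def add_outliers(Y, ratio, rng):            # hypothetical "Outliers": extra Gaussian columns
    n_out = int(ratio * Y.shape[1])
    return np.hstack([Y, rng.standard_normal((Y.shape[0], n_out))])


def add_corruption(Y, ratio, scale, rng):   # hypothetical "Corruption": sparse large errors
    mask = rng.random(Y.shape) < ratio
    return Y + scale * mask * rng.standard_normal(Y.shape)


rng = np.random.default_rng(0)
n, N, theta = 20, 5000, 0.3
D_true = random_orthogonal(n, rng)
X = bernoulli_gaussian(n, N, theta, rng)
Y = D_true @ X
Y_noise = add_noise(Y, 0.1, rng)
Y_out = add_outliers(Y, 0.2, rng)
Y_cor = add_corruption(Y, 0.05, 5.0, rng)
print(Y_noise.shape, Y_out.shape, Y_cor.shape)
```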

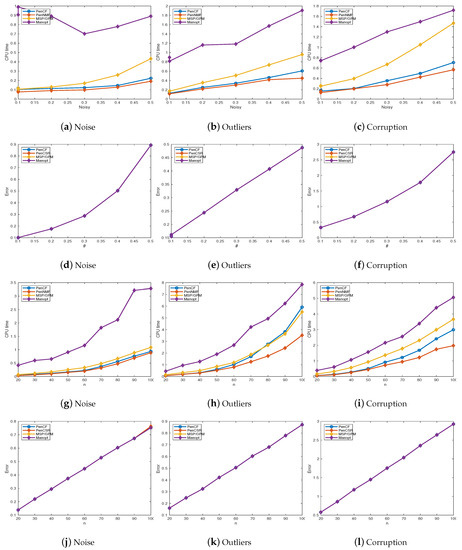

The numerical results are shown in Figure 1. From Figure 1d–f,j–l, we conclude that all the compared algorithms achieve almost the same accuracy in all cases. For Gaussian noise, the performance of PenNMF is comparable to that of MSP/GPM and better than that of Manopt. Moreover, with Gaussian outliers and Gaussian corruption, PenNMF performs better than PenCF, MSP/GPM, and Manopt. One possible explanation is that Manopt computes the Riemannian gradient and performs a line search in each iteration, resulting in a higher computational cost than MSP/GPM. In addition, the infeasible approaches avoid the orthonormalization bottleneck of Manopt and MSP/GPM, and thus achieve performance comparable to that of MSP/GPM. Finally, PenCF solves a constrained model by taking approximate gradient descent steps, whereas the model in PenNMF is unconstrained; the absence of constraints helps to improve the performance of PenNMF.

Figure 1.

A detailed comparison among MSP, Manopt, PenCF, and PenNMF. (a)–(c) CPU time under different noise levels; (d)–(f) errors under different noise levels; (g)–(i) CPU time for different n; (j)–(l) errors for different n. The errors are evaluated by , where denotes the final output of each compared algorithm.

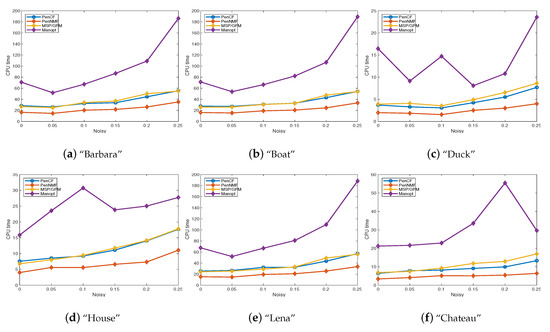

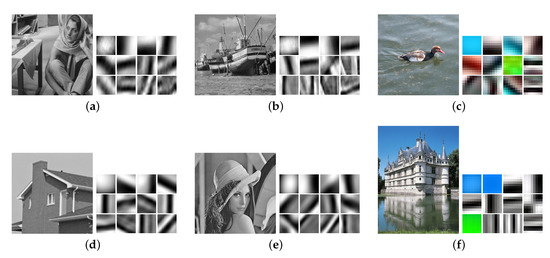

Besides testing on synthetic datasets, we also perform extensive experiments to verify the performance of PenNMF on real imagery data. A classic application of dictionary learning is learning sparse representations of image patches [40]. In this paper, we extend the experiments in [17] to learn patches from grayscale and color images. Based on the grayscale image “Barbara”, we construct the clean data matrix by vectorizing each patch of it. We use the same approach to construct the clean data matrix Y from the grayscale images “Boat”, “Lena”, and “House”. For “Barbara”, “Boat”, and “Lena”, the clean data matrix , and the data matrix from “House” satisfies . Moreover, we construct the matrix by vectorizing the patches of the RGB image “Duck”. In this setting, all the compared algorithms recover the dictionary for all three channels simultaneously rather than learning one dictionary per channel of “Duck”. The same approach is also applied to generate the data matrix in from the RGB image “Chateau”. We run MSP/GPM, PenNMF, PenCF, and Manopt with to compute the dictionary from with different noise levels, where is generated in the same manner as in our first numerical experiment and has the same size as the patch data matrix. The numerical results are presented in Figure 2 and Figure A1. In all experiments, PenNMF takes less time than PenCF, MSP/GPM, and Manopt, which further illustrates the high efficiency of PenNMF on real imagery data, especially in the large-scale case.

Figure 2.

The CPU time of PenCF, PenNMF, MSP/GPM, and Manopt on computing the dictionary. (a) Barbara, ; (b) Boat, ; (c) Duck, ; (d) House, ; (e) Lena, ; (f) Chateau, .

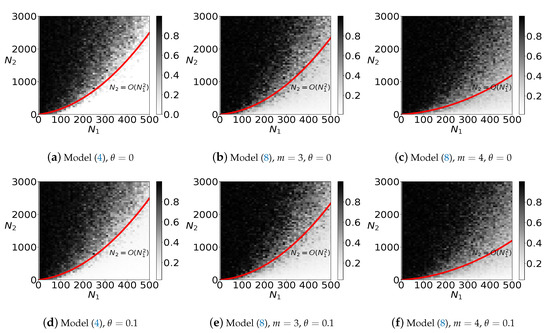

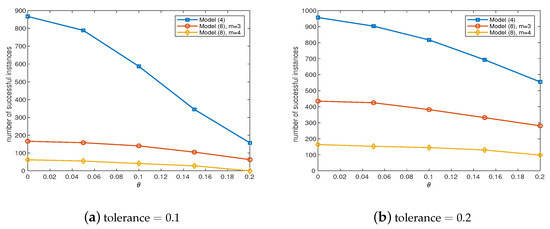

4.2. Dual Principal Component Pursuit

In this subsection, we first verify the recovery property of our proposed model (8), which is a special case of (1) obtained by fixing . We first compare the distance between the global minimizer of (8) and the ground truth for the DPCP problem. Specifically, we fix and randomly select . Then, we randomly generate inliers in the hyperplane whose normal vector is . In addition, we randomly generate outliers in following a Gaussian distribution. The data are further corrupted by Gaussian noise by adding to Y, and each sample in Y is then normalized. The range of is , whereas the range of is . We generate 5 instances for each test setting. In each instance, we run DPCP-PSGM to solve (4) and PenNMF to solve (8) with and 4, and obtain the solution of each model. We plot the principal angle between and in Figure 3. From Figure 3a,b, we conclude that (4) can tolerate outliers while achieving exact recovery, which coincides with the theoretical results presented in [32]. For model (8), the numerical experiments do not show exact recovery for and 4. However, with some tolerance on the principal angle, we observe that (8) can also tolerate outliers. Moreover, with , (8) recovers the normal vector better than with . As a result, in the rest of this subsection, we only test (8) with . In addition, we count the number of successfully recovered instances, i.e., those for which the is less than or . The results are presented in Figure 4, from which we conclude that, with some tolerance on the errors, the -norm maximization model can successfully recover the normal vector. Moreover, in model (8), has better performance than , which coincides with the numerical experiments in [18]. Therefore, when applying the -norm maximization model to DPCP problems, we suggest choosing in (8).
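The principal angle between a recovered normal vector and the ground truth can be computed as below. The absolute value makes the measure invariant to the sign of b, since b and -b describe the same hyperplane; the clipping guards against round-off pushing the cosine slightly above 1. The function name is hypothetical, and the success thresholds used in Figure 4 are not assumed here.

```python
import numpy as np


def principal_angle_deg(b, b_true):
    """Angle in degrees between the lines spanned by b and b_true (sign-invariant)."""
    c = abs(b @ b_true) / (np.linalg.norm(b) * np.linalg.norm(b_true))
    return np.degrees(np.arccos(np.clip(c, 0.0, 1.0)))


v = np.array([1.0, 2.0, 2.0])
print(principal_angle_deg(v, -v))                                        # essentially 0
print(principal_angle_deg(np.array([1.0, 1.0]), np.array([1.0, 0.0])))  # about 45
```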

Figure 4.

A comparison of the number of successfully recovered instances at different noise levels. (a) is less than ; (b) is less than .

In the rest of this subsection, we test the numerical performance of PenNMF on the DPCP problem, which plays an important role in autonomous driving applications. DPCP is applied to recover the road plane, whose points can be regarded as inliers, from the 3D point clouds in the KITTI dataset [22], which is recorded from a moving platform while driving in and around Karlsruhe, Germany. This dataset consists of image data together with the corresponding 3D points collected by a rotating 3D laser scanner [32]. DPCP uses only the 3D point clouds, with the objective of determining the 3D points that lie on the road plane (inliers) and those off that plane (outliers): given a 3D point cloud of a road scene, the DPCP problem focuses on reconstructing an affine plane as a representation of the road. Equivalently, this task can be converted into a linear subspace learning problem by embedding the affine plane into the linear hyperplane with normal vector , through the mapping [29]. We use the experimental setup in [29,32] to further compare models (4) and (8), RANSAC, and other alternative methods in the task of 3D road plane detection on the KITTI dataset. Each point cloud contains over samples with approximately outliers. Moreover, the samples are homogenized and normalized to unit -norm.
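The homogenization step can be sketched as follows, assuming the standard mapping x -> (x, 1) from R^3 to R^4 followed by normalization to unit 2-norm. Under this mapping, points on the affine plane a^T x = c become orthogonal to the vector (a, -c), which is why fitting an affine plane turns into a linear hyperplane (DPCP) problem. The function names and the toy plane z = -1.7 are hypothetical.

```python
import numpy as np


def homogenize(P):
    """Map 3D points (columns of P) to unit vectors in R^4 via x -> (x, 1) / ||(x, 1)||_2."""
    H = np.vstack([P, np.ones((1, P.shape[1]))])
    return H / np.linalg.norm(H, axis=0)


def plane_from_normal(b):
    """Recover the affine plane a^T x = c (with ||a||_2 = 1) from a normal b = (a, -c) in R^4."""
    a, c = b[:3], -b[3]
    scale = np.linalg.norm(a)
    return a / scale, c / scale


rng = np.random.default_rng(0)
a_true, c_true = np.array([0.0, 0.0, 1.0]), -1.7    # toy road plane z = -1.7
xy = rng.uniform(-10, 10, size=(2, 100))
P = np.vstack([xy, np.full((1, 100), c_true)])     # points satisfying a_true^T x = c_true
H = homogenize(P)
b_true = np.append(a_true, -c_true)                # normal vector of the embedded hyperplane
print(np.abs(b_true @ H).max())
```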

We use 11 frames annotated in [29,32] from the KITTI dataset. We compare DPCP-PSGM [29], DPCP-IRLS, and DPCP-d [31], which solve the -norm minimization model (4). In addition, we test RANSAC and -RPCA [25]. We also test PenNMF and MSP/GPM on our proposed model (8), which is a special case of (1). For DPCP-PSGM, DPCP-d, DPCP-IRLS, and -RPCA, all parameters are set following the suggestions in [32].

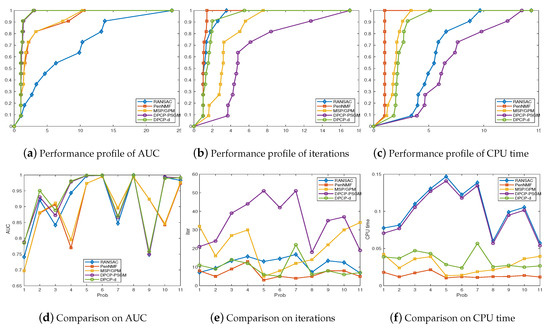

Figure 5 illustrates the numerical performance of all the compared algorithms. We present the numerical results in Figure 5d–f, and draw the performance profiles proposed by Dolan and Moré [41] in Figure 5a–c to give an illustrative comparison of all compared algorithms. Performance profiles can be regarded as distribution functions of a performance metric and are used for benchmarking and comparing optimization algorithms. We also show the recovery results of frames 328 and 441 in KITTI-CITY-71 in Figure 6. Here, the term “AUC” denotes the area under the ROC curve, and “iterations” denotes the total number of iterations taken by each compared algorithm. Moreover, “Prob” in Figure 5d–f denotes the indices of the tested frames, which are listed in Table 1.
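Performance profiles [41] can be computed from a table of costs (for instance CPU time or iteration counts) with one row per problem and one column per solver, as in the sketch below. Each solver's curve at tau is the fraction of problems on which its cost is within a factor tau of the best solver on that problem. For a larger-is-better metric such as AUC, the values must first be turned into a cost (for example, by taking reciprocals); which transformation was used for Figure 5a is not stated, so any such choice is an assumption. The function name is hypothetical.

```python
import numpy as np


def performance_profile(T, taus):
    """Dolan-More performance profiles.

    T[i, s] > 0 is the cost (smaller is better) of solver s on problem i.
    Returns rho with rho[k, s] = fraction of problems on which solver s is
    within a factor taus[k] of the best solver for that problem.
    """
    T = np.asarray(T, dtype=float)
    ratios = T / T.min(axis=1, keepdims=True)   # performance ratios r_{i,s} >= 1
    return np.array([(ratios <= tau).mean(axis=0) for tau in taus])


# Three problems, two solvers
T = [[1.0, 2.0],
     [2.0, 1.0],
     [1.0, 4.0]]
rho = performance_profile(T, taus=[1.0, 2.0, 4.0])
print(rho)
```

The value at tau = 1 is the fraction of problems on which a solver is the fastest, while the limit for large tau shows how often it solves a problem at all (failures can be encoded as np.inf costs).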

Figure 5.

A comparison between PenNMF, MSP, DPCP-PSGM, DPCP-d, and Random Sampling and Consensus (RANSAC). (a)–(c) performance profiles [41] of AUC, iterations, and CPU time; (d)–(f) the numerical results of AUC, iterations, and CPU time.

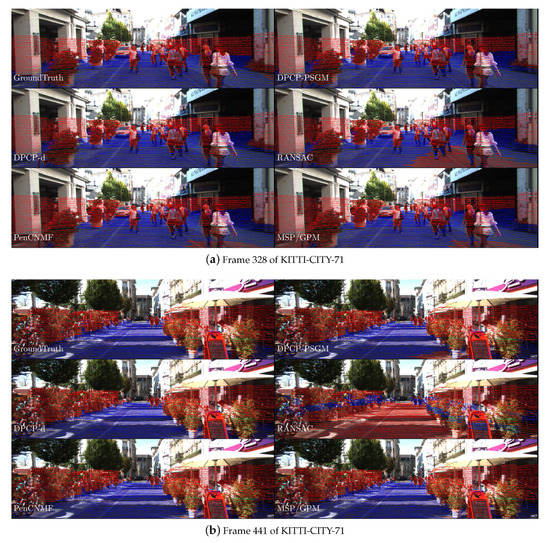

Figure 6.

Illustrations to some results in our numerical tests, with inliers in blue and outliers in red. (a) Frame 328 from KITTI-CITY-71, ; (b) Frame 441 from KITTI-CITY-71, . Inliers/outliers are detected by using a ground-truth thresholding on the distance to the hyperplane recovered by each compared method. The results are represented by projecting 3D point clouds onto the image.

Table 1.

The testing instances and their corresponding frames in the KITTI dataset.

From Figure 5a, we conclude that PenNMF and MSP/GPM successfully recover the hyperplanes with comparable accuracy. Moreover, in problems and 9, PenNMF and MSP produce better classification accuracy than the other approaches. In terms of CPU time, PenNMF and MSP cost much less time than the other compared algorithms in most cases. Moreover, from Figure 5c, we conclude that PenNMF takes less time than MSP and the other compared algorithms in almost all cases. As a result, our proposed model (1) is easy to solve, and PenNMF is more efficient than MSP in our test examples.

5. Conclusions

Sparse dictionary learning (SDL) and dual principal component pursuit (DPCP) are two powerful tools in data science. In this paper, we formulate DPCP as a special case of the -norm maximization on the Stiefel manifold proposed for SDL. Then, we propose a novel smooth unconstrained penalty model, PenNM, for the original optimization problem with orthogonality constraints. We show that PenNM is an exact penalty function of (1) under mild assumptions. We develop a novel approximate gradient approach, PenNMF, for solving PenNM, and establish its global convergence as well as its sublinear convergence rate. Numerical experiments illustrate that our proposed approach outperforms MSP/GPM [17,18] on various test problems.

Author Contributions

Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing, visualization, supervision, project administration, X.H. and X.L.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

Research is supported in part by the National Natural Science Foundation of China (No. 11971466, 11991021, and 11991020); Key Research Program of Frontier Sciences, Chinese Academy of Sciences (No. ZDBS-LY-7022); the National Center for Mathematics and Interdisciplinary Sciences, Chinese Academy of Sciences; and the Youth Innovation Promotion Association, Chinese Academy of Sciences.

Acknowledgments

We gratefully thank Yuexiang Zhai, Hermish Mehta, Zhengyuan Zhou, and Yi Ma for sharing their code for MSP. We also thank Tianyu Ding, Zhihui Zhu, Tianjiao Ding, Yunchen Yang, Rene Vidal, Manolis Tsakiris, and Daniel Robinson for sharing their code for DPCP problems.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| DPCP | dual principal component pursuit |

| DPCP-d | Denoised-DPCP |

| DPCP-IRLS | Iteratively-Reweighted-Least-Squares algorithm |

| DPCP-PSGM | Projected subgradient-based algorithm for solving DPCP |

| GPM | gradient projection method |

| MSP | matching, stretching, and projection |

| PenNM | penalty model for -norm maximization |

| PenNMF | first-order algorithm for solving our penalty model |

| RANSAC | Random Sampling and Consensus |

| SDL | Sparse dictionary learning |

Appendix A. Proof for Theorem 2 and Corollary 3

In this section, we present the proofs of Theorem 2 and Corollary 3. As (6) is an unconstrained optimization problem, the upper bound for should be estimated. Before estimating the upper bounds of and , we first present two linear-algebraic inequalities:

Lemma A1.

For any , .

Proof.

□

Lemma A2.

For any and any , we have .

Proof.

This lemma follows directly from the fact that

□

Now, we present the upper bound estimation for and .

Lemma A3.

For any ,

Proof.

Due to the fact that ,

Here, the last inequality follows from the fact that . □

Lemma A4.

For any ,

Proof.

From the expression of in Proposition 1,

Here, the second inequality directly uses Lemma A1, and the last inequality follows from Lemma A2. □

In the rest of this section, we consider the equivalence between (1) and (6). We first establish the relationships between the first-order stationary points of (6) and problem (1).

From the optimality condition of (6), we derive an important equality in Lemma A5.

Lemma A5.

For any first-order stationary point of (6) and any symmetric matrix that satisfies , we have

Proof.

Suppose is a first-order stationary point of (6). By the first-order optimality condition, . Then, for any symmetric matrix that satisfies , .

As described in Proposition 1, can be separated into three parts; we estimate the inner product of each part with in turn.

First,

Here, the second equality follows from the fact that $\langle B, C\rangle = 0$ holds for any symmetric B and skew-symmetric C. Moreover, the last inequality follows from . As a result, we obtain the following equality:

Additionally, we compute the inner product of and and obtain the following equality:

Combining the above two equations and multiplying on both sides of yields

which completes the proof. □
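The second equality in the proof of Lemma A5 relies on the orthogonality of symmetric and skew-symmetric matrices under the Frobenius inner product $\langle A, B\rangle = \mathrm{tr}(A^\top B)$. As a quick sanity check (an illustration only, not part of the proof), the pure-Python sketch below builds a random symmetric $B$ and a random skew-symmetric $C$ and evaluates $\langle B, C\rangle$; the helper names are ours.

```python
import random


def transpose(a):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]


def frob_inner(a, b):
    """Frobenius inner product <A, B> = sum_ij A_ij * B_ij = tr(A^T B)."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def sym_part(m):
    """Symmetric part (M + M^T) / 2."""
    return [[(x + y) / 2 for x, y in zip(r, rt)] for r, rt in zip(m, transpose(m))]


def skew_part(m):
    """Skew-symmetric part (M - M^T) / 2."""
    return [[(x - y) / 2 for x, y in zip(r, rt)] for r, rt in zip(m, transpose(m))]


random.seed(0)
n = 5
B = sym_part([[random.gauss(0, 1) for _ in range(n)] for _ in range(n)])   # symmetric
C = skew_part([[random.gauss(0, 1) for _ in range(n)] for _ in range(n)])  # skew-symmetric
print(frob_inner(B, C))  # zero up to floating-point rounding
```

The identity holds because the terms $B_{ij}C_{ij}$ and $B_{ji}C_{ji}$ cancel in pairs and the diagonal of $C$ vanishes, so any nonzero output is rounding error only.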

Then, based on the equality in Lemma A5, the following proposition shows that all first-order stationary points of (6) are uniformly bounded.

Proposition A6.

For any first-order stationary point of (6), suppose , then .

Proof.

Let u denote the top eigenvector of , i.e., .

Suppose is a first-order stationary point that satisfies . By Lemma A5, we first have

which leads to a contradiction and shows that . Here, the second equality follows directly from Lemma A5. The first inequality uses Lemma A4 and the fact that . Moreover, the second inequality follows from the fact that . The third inequality uses the fact that . The fourth inequality uses the fact that , and the last inequality follows from the fact that . □

Combining Lemma A5 and Proposition A6, we restate Theorem 2 as Theorem A7 and establish the equivalence between (1) and (6).

Theorem A7.

Proof.

When , any first-order stationary point of (6) satisfies that .

Suppose satisfies ; then . From Lemma A5, we have

which shows that . By the positive definiteness of , we conclude that .

As a result, either or , which completes the proof. □

Corollary A8.

Proof.

By the same argument as in Theorem 2, when , any first-order stationary point of (6) satisfies that . Then, following the proofs of Lemma A5 and Theorem 2, we have

When , we have

holds for any .

Appendix B. Proof for Theorem 4

In this section, we present the main body of the proof of Theorem 4. To show the convergence of PenNMF, we first present some preliminary lemmas. Then, we show that the update direction is a descent direction and thus , as illustrated in Lemma A12. Together with Lemma A10, this shows that the sequence remains in a neighborhood of the feasible set, from which we obtain the global convergence of PenNMF in Theorem 4. We first estimate an upper bound for the term in .

Lemma A9.

For any ,

Proof.

We first estimate an upper bound for , which is given by

Besides, from Lemma A3, we have

Combining the above two estimates, we obtain

which completes the proof. □

We then show that the penalty term builds a barrier around , i.e., points sufficiently far from have higher function values than points close to .

Lemma A10.

Suppose for any and , we have

Proof.

Let . For any satisfying and any satisfying and , we have

Here, the second inequality uses the fact that , and .

Moreover, when , we have . Then, .

Here, the second inequality follows from the fact that .

Besides, as implies

As a result, implies . Besides, implies . Therefore,

□
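To make the barrier picture of Lemma A10 concrete, the sketch below evaluates a quadratic feasibility penalty of the form $\frac{\beta}{4}\|X^\top X - I\|_F^2$ along the path $X = (1+t)Q$, where $Q$ has orthonormal columns. We assume this quadratic form for illustration only (it is the type of term used in the smooth exact penalty functions of [21]); the precise penalty term of (6) and the constants of Lemma A10 are those of the main text. The function name `quad_penalty` and the values of n, r and beta are hypothetical.

```python
def transpose(a):
    return [list(row) for row in zip(*a)]


def matmul(a, b):
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def quad_penalty(x, beta):
    """(beta / 4) * ||X^T X - I||_F^2, an assumed form of the feasibility penalty."""
    g = matmul(transpose(x), x)
    r = len(g)
    return beta / 4 * sum(
        (g[i][j] - (1.0 if i == j else 0.0)) ** 2 for i in range(r) for j in range(r)
    )


n, r, beta = 4, 2, 10.0
# Q holds the first r columns of the identity, so Q^T Q = I (Q lies on the Stiefel manifold).
Q = [[1.0 if i == j else 0.0 for j in range(r)] for i in range(n)]
# Scaling Q by (1 + t) moves it off the manifold: X^T X - I = ((1 + t)^2 - 1) I.
ts = [0.0, 0.05, 0.1, 0.2, 0.4]
values = [quad_penalty([[(1 + t) * v for v in row] for row in Q], beta) for t in ts]
for t, v in zip(ts, values):
    print(f"t = {t:.2f}: penalty = {v:.4f}")
```

Along this path the penalty equals $\frac{\beta}{4}\,r\,((1+t)^2-1)^2$: it vanishes on the manifold and grows monotonically as $t$ increases.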

Lemma A10 shows that the smooth penalty term builds a barrier around . Next, we characterize the relationship between and in the following lemma.

Lemma A11.

Suppose , set , and . Then,

where .

Proof.

First, we recall two linear-algebraic facts. The first is the inequality , which holds for any square matrix A; it is straightforward, so its proof is omitted. The second is the equality , which holds for any symmetric matrices A and B and follows from the fact .

It follows from the above facts that

where the last equality uses the fact that .

Together with the facts that and , we have

□

Let . The following lemma shows that PenNMF generates a decreasing sequence .

Lemma A12.

Suppose and . Let be the iterate sequence generated by PenNMF, starting from any initial point satisfying , with stepsize , where . Then, it holds that

for any .

Proof.

By the explicit expression of , we first have

Here, the first inequality follows from Lemmas A3 and A4.

Besides, by the definition of , we have

Suppose . Then, by Lemma A11, we conclude that

By Lemma A10, since , we have . By induction, holds for . Applying (A15) again, we conclude that

holds for , which completes the proof. □
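Lemma A12 states that PenNMF decreases the objective at every step. The sketch below illustrates the underlying mechanism with a stand-in direction: the approximate gradient $D(X) = \nabla f(X) - X\,\mathrm{sym}(X^\top \nabla f(X)) + \beta X(X^\top X - I)$, modeled on the PLAM update of [19]. This is an assumption made for illustration; the PenNMF direction is defined in the main text and may differ. We also assume that (1) is written as the minimization of $f(W) = -\frac{1}{p}\|W^\top Y\|_p^p$. At a feasible $X$, $X^\top D(X)$ is skew-symmetric and $\langle \nabla f(X), D(X)\rangle = \|(I - XX^\top)\nabla f(X)\|_F^2 + \|\mathrm{skew}(X^\top\nabla f(X))\|_F^2 \ge 0$, so a small step along $-D(X)$ cannot increase $f$ to first order. All function names and parameter values are hypothetical.

```python
import random


def T(a):
    return [list(row) for row in zip(*a)]


def mm(a, b):
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def axpy(a, b, s=1.0):
    """Return A + s * B."""
    return [[x + s * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sym(m):
    return [[(x + y) / 2 for x, y in zip(r, rt)] for r, rt in zip(m, T(m))]


def inner(a, b):
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def f(W, Y, p):
    """Assumed objective: f(W) = -(1/p) * ||W^T Y||_p^p."""
    Z = mm(T(W), Y)
    return -sum(abs(z) ** p for row in Z for z in row) / p


def grad_f(W, Y, p):
    """Euclidean gradient of f: -Y G^T with G = sign(Z) * |Z|^(p-1) and Z = W^T Y."""
    Z = mm(T(W), Y)
    G = [[(1.0 if z >= 0 else -1.0) * abs(z) ** (p - 1) for z in row] for row in Z]
    return [[-v for v in row] for row in mm(Y, T(G))]


def plam_direction(X, Y, p, beta):
    """Stand-in direction D = grad f - X sym(X^T grad f) + beta * X (X^T X - I)."""
    g = grad_f(X, Y, p)
    r = len(X[0])
    eye = [[1.0 if i == j else 0.0 for j in range(r)] for i in range(r)]
    feas = axpy(mm(T(X), X), eye, -1.0)  # X^T X - I
    return axpy(axpy(g, mm(X, sym(mm(T(X), g))), -1.0), mm(X, feas), beta)


random.seed(1)
n, N, r, p, beta, eta = 4, 10, 2, 4, 1.0, 1e-7
Y = [[random.gauss(0, 1) for _ in range(N)] for _ in range(n)]
X = [[1.0 if i == j else 0.0 for j in range(r)] for i in range(n)]  # feasible: X^T X = I
D = plam_direction(X, Y, p, beta)
X_new = axpy(X, D, -eta)  # one small step X - eta * D
print(f(X, Y, p), f(X_new, Y, p))
```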

The following lemma shows that if our algorithm stops at , then is a first-order stationary point of (1).

Lemma A13.

Suppose and . For any satisfying and , we have that is a first-order stationary point of (1).

Proof.

Suppose . Following the same argument as in the proof of Theorem 2, we consider the inner product of and :

Here, the fourth equality follows from the definition of , and the first inequality uses the fact that ; together with Lemma A3, we conclude that . Moreover, the last inequality uses the fact that .

Hence, . By the definition of , we have

which shows that . Together with Theorem 2, we conclude that is a first-order stationary point of (1). □
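As an illustrative check, independent of the paper's stopping rule, recall that a feasible $X$ (with $X^\top X = I$) is a first-order stationary point of a smooth function over the Stiefel manifold exactly when $\nabla f(X) = X\Lambda$ for a symmetric $\Lambda$, or equivalently $\nabla f(X) - X\nabla f(X)^\top X = 0$. The sketch below evaluates this residual for the assumed objective $f(W) = -\frac{1}{p}\|W^\top Y\|_p^p$ with $Y = I_2$ and $p = 4$; the function names and test points are hypothetical.

```python
import math


def T(a):
    return [list(row) for row in zip(*a)]


def mm(a, b):
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def frob(a):
    return math.sqrt(sum(x * x for row in a for x in row))


def grad_f(W, Y, p):
    """Gradient of the assumed objective f(W) = -(1/p) ||W^T Y||_p^p."""
    Z = mm(T(W), Y)
    G = [[(1.0 if z >= 0 else -1.0) * abs(z) ** (p - 1) for z in row] for row in Z]
    return [[-v for v in row] for row in mm(Y, T(G))]


def feasibility(X):
    """||X^T X - I||_F."""
    g = mm(T(X), X)
    k = len(g)
    return frob([[g[i][j] - (1.0 if i == j else 0.0) for j in range(k)] for i in range(k)])


def stationarity(X, Y, p):
    """||grad f(X) - X grad f(X)^T X||_F; at a feasible X it vanishes iff X is a KKT point."""
    g = grad_f(X, Y, p)
    xgx = mm(mm(X, T(g)), X)
    return frob([[a - b for a, b in zip(ra, rb)] for ra, rb in zip(g, xgx)])


Y = [[1.0, 0.0], [0.0, 1.0]]
c, s = math.cos(math.pi / 6), math.sin(math.pi / 6)
candidates = {"identity": [[1.0, 0.0], [0.0, 1.0]], "rotation by 30 degrees": [[c, -s], [s, c]]}
for name, X in candidates.items():
    print(name, feasibility(X), stationarity(X, Y, 4))
```

For $W = I_2$ the residual vanishes, while for the rotation by 30 degrees it equals $\sqrt{6}/4$, because $W^\top\nabla f(W)$ then has a nonzero skew-symmetric part.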

Based on Lemmas A10–A13, we restate Theorem 4 as Theorem A14, which establishes the global convergence of PenNMF.

Theorem A14.

Suppose and . Let be the iterate sequence generated by PenNMF, starting from any initial point satisfying , with stepsize , where . Then, weakly converges to a first-order stationary point of (1). Moreover, for any , the convergence rate of PenNMF can be estimated by

Proof.

By Lemma A12, it holds that

If is a cluster point of , we have . Together with , which is implied by Lemma A11, we conclude that is a first-order stationary point of problem (1).

Summing the above inequalities from to , we obtain

which shows that and further implies that . By Lemma A13, implies that is a first-order stationary point of (1).

Moreover, by (A19), we have that

which completes the proof. □
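For readers who want the summation step spelled out, its generic form is sketched below. The notation is ours and serves only as a placeholder: $h$ denotes the penalty function in (6), $D(X_k)$ the update direction, $h_*$ a lower bound of $h$ over the iterates, and $c > 0$ a constant for which Lemma A12 is assumed to give $h(X_k) - h(X_{k+1}) \ge c\,\|D(X_k)\|_F^2$. The precise constants are those in Lemma A12 and Theorem A14.

```latex
\begin{aligned}
h(X_0) - h_* \;\ge\; h(X_0) - h(X_{K+1})
  &= \sum_{k=0}^{K} \bigl( h(X_k) - h(X_{k+1}) \bigr)
   \;\ge\; c \sum_{k=0}^{K} \|D(X_k)\|_F^2
   \;\ge\; c\,(K+1) \min_{0 \le k \le K} \|D(X_k)\|_F^2, \\
\min_{0 \le k \le K} \|D(X_k)\|_F
  &\le \sqrt{\frac{h(X_0) - h_*}{c\,(K+1)}} .
\end{aligned}
```

Since the partial sums $\sum_{k=0}^{K} \|D(X_k)\|_F^2$ stay bounded as $K \to \infty$, it also follows that $\|D(X_k)\|_F \to 0$.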

Appendix C. Additional Experimental Results

In this section, we present some additional numerical results. Figure A1 shows the top 12 bases computed by PenNMF from the test instances in Section 4.1. As described in [17], the top bases are those with the largest coefficients in terms of the -norm.

Figure A1.

The top 12 bases computed from all patches of the test images without noise by PenNMF. (a) “Barbara”; (b) “Boat”; (c) “Duck”; (d) “House”; (e) “Lena”; (f) “Chateau”.
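The ranking of bases used for Figure A1 can be sketched as follows. We assume, for illustration only, that the bases are the columns of $W$, the coefficients are $X = W^\top Y$, and each basis is scored by the $\ell_q$ norm of its coefficient row; the exponent q (left unspecified above), the default q = 4, and the function name `top_bases` are our assumptions, and [17] should be consulted for the exact criterion.

```python
def top_bases(W, Y, k, q=4.0):
    """Indices of the k columns of W whose coefficient rows in W^T Y have the largest l_q norm.

    The scoring rule and the default q are illustrative assumptions.
    """
    n, r, num_samples = len(W), len(W[0]), len(Y[0])
    scores = []
    for i in range(r):
        # Row i of the coefficient matrix W^T Y: the coefficients attached to basis i.
        coeffs = [sum(W[a][i] * Y[a][j] for a in range(n)) for j in range(num_samples)]
        scores.append(sum(abs(v) ** q for v in coeffs) ** (1.0 / q))
    return sorted(range(r), key=lambda i: scores[i], reverse=True)[:k]


W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
Y = [[0.1, -0.2, 0.1], [3.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
print(top_bases(W, Y, k=2))  # prints [1, 2]
```

With $W = I_3$ the coefficients are just the rows of $Y$, so the basis whose row contains the large entry 3.0 is ranked first.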

References

- Hansen, T.L.; Badiu, M.A.; Fleury, B.H.; Rao, B.D. A sparse Bayesian learning algorithm with dictionary parameter estimation. In Proceedings of the Sensor Array and Multichannel Signal Processing Workshop (SAM), A Coruña, Spain, 22–25 June 2014; pp. 385–388.

- Shen, H.; Li, X.; Zhang, L.; Tao, D.; Zeng, C. Compressed Sensing-Based Inpainting of Aqua Moderate Resolution Imaging Spectroradiometer Band 6 Using Adaptive Spectrum-Weighted Sparse Bayesian Dictionary Learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 894–906.

- Bai, Y.; Jiang, Q.; Sun, J. Subgradient descent learns orthogonal dictionaries. arXiv 2018, arXiv:1810.10702.

- Gilboa, D.; Buchanan, S.; Wright, J. Efficient dictionary learning with gradient descent. arXiv 2018, arXiv:1809.10313.

- Kuo, H.W.; Zhang, Y.; Lau, Y.; Wright, J. Geometry and symmetry in short-and-sparse deconvolution. SIAM J. Math. Data Sci. 2020, 2, 216–245.

- Rambhatla, S.; Li, X.; Haupt, J. NOODL: Provable Online Dictionary Learning and Sparse Coding. arXiv 2019, arXiv:1902.11261.

- Song, X.; Wu, L. A Novel Hyperspectral Endmember Extraction Algorithm Based on Online Robust Dictionary Learning. Remote Sens. 2019, 11, 1792.

- Sun, J.; Qu, Q.; Wright, J. Complete dictionary recovery over the sphere I: Overview and the geometric picture. IEEE Trans. Inf. Theory 2016, 63, 853–884.

- Wang, D.; Wan, J.; Chen, J.; Zhang, Q. An Online Dictionary Learning-Based Compressive Data Gathering Algorithm in Wireless Sensor Networks. Sensors 2016, 16, 1547.

- Yang, L.; Fang, J.; Cheng, H.; Li, H. Sparse Bayesian dictionary learning with a Gaussian hierarchical model. Signal Process. 2017, 130, 93–104.

- Wang, Y.; Wu, S.; Yu, B. Unique Sharp Local Minimum in ℓ1-minimization Complete Dictionary Learning. arXiv 2019, arXiv:1902.08380.

- Zhang, Y.; Kuo, H.W.; Wright, J. Structured local optima in sparse blind deconvolution. IEEE Trans. Inf. Theory 2019, 66, 419–452.

- Zhou, Q.; Feng, Z.; Benetos, E. Adaptive Noise Reduction for Sound Event Detection Using Subband-Weighted NMF. Sensors 2019, 19, 3206.

- Ling, Y.; Gao, H.; Zhou, S.; Yang, L.; Ren, F. Robust Sparse Bayesian Learning-Based Off-Grid DOA Estimation Method for Vehicle Localization. Sensors 2020, 20, 302.

- Liu, S.; Huang, Y.; Wu, H.; Tan, C.; Jia, J. Efficient Multi-Task Structure-Aware Sparse Bayesian Learning for Frequency-Difference Electrical Impedance Tomography. IEEE Trans. Industr. Inform. 2020.

- Qu, Q.; Zhu, Z.; Li, X.; Tsakiris, M.C.; Wright, J.; Vidal, R. Finding the Sparsest Vectors in a Subspace: Theory, Algorithms, and Applications. arXiv 2020, arXiv:2001.06970.

- Zhai, Y.; Yang, Z.; Liao, Z.; Wright, J.; Ma, Y. Complete Dictionary Learning via ℓ4-Norm Maximization over the Orthogonal Group. arXiv 2019, arXiv:1906.02435.

- Shen, Y.; Xue, Y.; Zhang, J.; Letaief, K.B.; Lau, V. Complete Dictionary Learning via ℓp-norm Maximization. arXiv 2020, arXiv:2002.10043.

- Gao, B.; Liu, X.; Yuan, Y.x. Parallelizable Algorithms for Optimization Problems with Orthogonality Constraints. SIAM J. Sci. Comput. 2019, 41, A1949–A1983.

- Wen, Z.; Yang, C.; Liu, X.; Zhang, Y. Trace-penalty minimization for large-scale eigenspace computation. J. Sci. Comput. 2016, 66, 1175–1203.

- Xiao, N.; Liu, X.; Yuan, X. A Class of Smooth Exact Penalty Function Methods for Optimization Problems with Orthogonality Constraints. Available online: http://www.optimization-online.org/DB_HTML/2020/02/7607.html (accessed on 26 May 2020).

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Rob. Res. 2013, 32, 1231–1237.

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 746–760.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Xu, H.; Caramanis, C.; Sanghavi, S. Robust PCA via outlier pursuit. IEEE Trans. Inf. Theory 2012, 58, 3047–3064.

- Soltanolkotabi, M.; Candes, E.J. A geometric analysis of subspace clustering with outliers. Ann. Stat. 2012, 40, 2195–2238.

- Rahmani, M.; Atia, G.K. Coherence pursuit: Fast, simple, and robust principal component analysis. IEEE Trans. Signal Process. 2017, 65, 6260–6275.

- You, C.; Robinson, D.P.; Vidal, R. Provable self-representation based outlier detection in a union of subspaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3395–3404.

- Ding, T.; Zhu, Z.; Ding, T.; Yang, Y.; Robinson, D.; Vidal, R.; Tsakiris, M. Noisy dual principal component pursuit. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Tsakiris, M.C.; Vidal, R. Dual principal component pursuit. J. Mach. Learn. Res. 2018, 19, 684–732.

- Zhu, Z.; Wang, Y.; Robinson, D.P.; Naiman, D.Q.; Vidal, R.; Tsakiris, M.C. Dual principal component pursuit: probability analysis and efficient algorithms. arXiv 2018, arXiv:1812.09924.

- Shi, L.; Chi, Y. Manifold gradient descent solves multi-channel sparse blind deconvolution provably and efficiently. arXiv 2019, arXiv:1911.11167.

- Qu, Q.; Li, X.; Zhu, Z. A nonconvex approach for exact and efficient multichannel sparse blind deconvolution. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 4017–4028.

- Qu, Q.; Sun, J.; Wright, J. Finding a sparse vector in a subspace: Linear sparsity using alternating directions. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3401–3409.

- Barzilai, J.; Borwein, J.M. Two-point step size gradient methods. IMA J. Numer. Anal. 1988, 8, 141–148.

- Dai, Y.H.; Fletcher, R. Projected Barzilai-Borwein methods for large-scale box-constrained quadratic programming. Numer. Math. 2005, 100, 21–47.

- Absil, P.A.; Mahony, R.; Sepulchre, R. Optimization Algorithms on Matrix Manifolds; Princeton University Press: Princeton, NJ, USA, 2009.

- Boumal, N.; Mishra, B.; Absil, P.A.; Sepulchre, R. Manopt, a Matlab toolbox for optimization on manifolds. J. Mach. Learn. Res. 2014, 15, 1455–1459.

- Mairal, J.; Elad, M.; Sapiro, G. Sparse Representation for Color Image Restoration. IEEE Trans. Image Process. 2008, 17, 53–69.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).