Abstract

Noise reduction is one of the most important and still active research topics in low-level image processing due to its high impact on object detection and scene understanding for computer vision systems. Recently, we observed a substantially increased interest in the application of deep learning algorithms. Many computer vision systems use them, due to their impressive capability of feature extraction and classification. While these methods have also been successfully applied in image denoising, significantly improving its performance, most of the proposed approaches were designed for Gaussian noise suppression. In this paper, we present a switching filtering technique intended for impulsive noise removal using deep learning. In the proposed method, the distorted pixels are detected using a deep neural network architecture and restored with the fast adaptive mean filter. The performed experiments show that the proposed approach is superior to the state-of-the-art filters designed for impulsive noise removal in color digital images.

1. Introduction

Image denoising is a long-standing research topic in low-level image processing that still receives much attention from the computer vision community [1,2]. Over the last three decades, the effectiveness of denoising algorithms has increased considerably, but despite these improvements, modern miniaturized, high-resolution, low-cost image sensors still provide limited quality when operating in poor lighting conditions. Therefore, image enhancement and noise removal are very important operations of digital image processing [3,4].

In practice, we can observe various types of noise that significantly degrade the quality of captured images. One of them is the so-called impulsive noise, which may appear due to electric signal instabilities, corruptions in physical memory storage, random or systematic errors in data transmission, electromagnetic interference, malfunctioning or aging of camera sensors and low lighting conditions [3,5,6,7]. This type of noise causes a total loss of information at certain image locations because the original color channel information is replaced by random values.

In the literature, impulse noise is typically classified into two main categories [8,9,10]. The first one is the Channel Together Random Impulse (CTRI), in which a pixel channel may be replaced with any value in the image intensity range. The second noise model is salt and pepper impulse noise, in which a corrupted pixel value is set to either the minimum or the maximum of the range of possible values (so it is set to either 0 or 255 for an 8-bit image). In both models, the main parameter is the noise density, which is the fraction of corrupted pixels in the processed image. In this paper, we focus on the CTRI model; however, the proposed filter can also be applied to the salt and pepper model.

The classical method for removal of impulsive noise is the median filter. Generally, the median concept for color images is based on vector ordering, in which image pixels are treated as three-dimensional vectors. Such an approach yields better results than processing image channels independently [11,12,13,14], as the strong correlation between color channels is considered. The most widely used denoising method based on the ordering concept is the Vector Median Filter (VMF) [15], whose drawback is that every pixel of the image is processed, regardless of whether it is contaminated or not. This may result in strong signal degradation and introduction of perceivable blurring effect, especially in highly textured regions. In many applications, it may become a major flaw, and therefore, a plethora of improvements have been proposed [6,16,17,18,19,20,21,22,23,24,25].

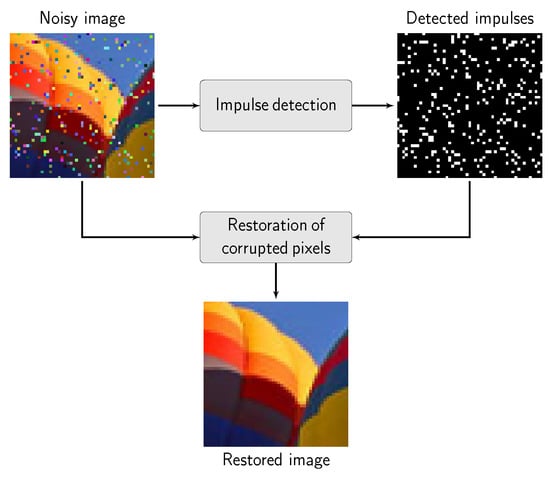

To preserve image details and still efficiently suppress impulsive noise, a family of filters based on fuzzy set theory was also introduced, in which a combination of noise detection and a replacement scheme based on weighted averaging is performed [26,27,28,29,30,31]. However, these methods still may alter clean pixels in the processed image. An effective approach to retain uncorrupted pixels is based on the switching concept [32]. A general scheme of switching filter is presented in Figure 1. In the majority of the switching techniques, it is necessary to determine the measure of the impulsiveness of the processed pixel, which allows classifying the pixels as pristine or distorted. One of the most popular measures of similarity used in switching filters is the ROAD (Rank-Ordered Absolute Differences) statistic introduced in [33], in which the trimmed cumulative distance of the pixels, in a given color space, to their neighbors is utilized as a degree of pixel corruption.

Figure 1.

A general scheme of a switching filter. Only the pixels identified as corrupted are restored, and the remaining pixels are retained.
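The ROAD statistic mentioned above can be illustrated with a minimal sketch for a single filtering window. The function name `road`, the Euclidean distance in RGB, and the default of `m=4` retained distances are assumptions made for illustration; they are not taken from [33] or from this paper.

```python
import numpy as np

def road(window, m=4):
    """Trimmed sum of the m smallest distances between the central pixel
    of a (k, k, 3) window and its neighbours (illustrative sketch)."""
    window = np.asarray(window, dtype=np.float64)
    k = window.shape[0]
    center = window[k // 2, k // 2]
    # Euclidean distance in RGB from the central pixel to every pixel.
    dist = np.linalg.norm(window - center, axis=-1).ravel()
    # Remove the central pixel itself (its distance to itself is zero).
    dist = np.delete(dist, (k // 2) * k + k // 2)
    # Keep only the m smallest distances (assumed trimming rule).
    return float(np.sort(dist)[:m].sum())
```

A pixel that resembles at least `m` of its neighbours yields a small value, whereas an isolated impulse yields a large one, which is why such a statistic can serve as a degree of pixel corruption.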

Among switching filters, an important group of methods is based on the concept of a peer group [34,35,36], in which the membership degree of a central pixel of the filtering window to its local neighborhood is determined in terms of the number of close pixels. Another efficient family utilizes the elements of quaternion theory [37,38]. In this concept, instead of the commonly used Euclidean distance in a chosen color space, the similarity between pixels is defined in the quaternion form. Some switching filters also use classical machine learning approaches for impulse detection such as Support Vector Machines [39] and fully connected neural networks [40,41,42,43]. The detected impulses are restored using a median of neighboring uncorrupted pixels [40,42], adaptive and iterative mean filters [41] or edge-preserving regularization methods [43].

Recently, thanks to easy access to large image datasets and advances in deep learning, Convolutional Neural Networks (CNNs) have led to a series of breakthroughs in various computer vision problems such as image segmentation, object recognition and detection. Concurrently, CNNs have also been successfully applied to image denoising, focusing mainly on the problem of Gaussian noise suppression. The recently proposed filters significantly outperform classical, well-established algorithms in terms of filtering efficiency and computational speed [44,45,46]. However, although image denoising using deep learning for Gaussian noise removal has been well studied, relatively little work has been done in the area of impulsive noise detection and removal [47,48,49,50,51]. In [47], the authors proposed a method that first removes salt and pepper noise by replacing noisy image pixels with a weighted average of samples from the neighborhood, and then processes the filter output with a CNN to boost the final filtering performance. An alternative technique [48] divides the input image into small patches, which are processed independently by a set of convolution and deconvolution layers. Yet another approach [50] is based on CNNs trained on noisy images and uses the ROAD statistic to estimate the contamination level and to select the network found to be optimal for the estimated image pollution. The authors of [51] proposed using two CNNs: the first one detects noisy pixels and the second one performs the final image reconstruction.

In one of the most promising approaches, called Denoising Convolutional Neural Network (DnCNN) [52], the authors proved that residual learning and batch normalization are beneficial in the case of the Gaussian noise model. Unfortunately, the network based on residual learning formulation is not effective in the case of other types of operations like JPEG artifacts removal, deblurring or image resolution enhancement [53] and also in the case of impulsive noise suppression. Applying residual learning for images contaminated by impulsive noise causes all pixels in the image to be altered, even those that were impulse-free, introducing unpleasant visual artifacts. Moreover, impulsive noise is not additive, and therefore, a residual approach is not suitable. To alleviate the inability of DnCNN to cope with impulsive distortions, we propose a modified version, which will be denoted as Impulse Detection Convolutional Neural Network (IDCNN).

In the proposed approach, we added a sigmoid layer to distinguish noise-free pixels from impulses and we reformulated the residual learning to the classification problem. In this way, the deep neural network is used as the impulse detector, and afterward, the corrupted pixels are restored using an adaptive mean filter, due to its good balance between computational complexity and restoration efficacy. The main contributions of our paper are as follows:

- We introduce a CNN architecture for impulse detection in noisy images,

- We propose a switching filter that applies deep learning for the localization of corrupted pixels and adaptive mean technique for their replacement,

- We analyze the impact of some network’s parameters on impulse detection accuracy,

- We also investigate the influence of impulse detection errors on the final restoration efficiency,

- We show that the proposed method is superior to the state-of-the-art denoising algorithms,

- We share the source code of the proposed approach at http://github.com/k-radlak/IDCNN.

The paper is organized as follows. Section 2 describes the structure of the proposed switching filter, focusing on the architecture of the proposed IDCNN, and presents its ablation study together with the applied noisy pixel replacement method. Section 3 compares the proposed technique with state-of-the-art filters designed for impulsive noise removal. Finally, discussion and conclusions are given in Section 4.

2. Proposed Switching Filter Design

Recently, the application of deep learning for image denoising has received much attention from the computer vision community due to its significant performance improvement in comparison to the classical machine learning algorithms. One of the most interesting methods, intended for Gaussian noise suppression, is the Denoising Convolutional Neural Network (DnCNN), inspired by VGG network [54] and introduced by Zhang et al. [52].

The DnCNN contains a sequence of convolutional layers followed by Rectified Linear Unit (ReLU) [55] and Batch Normalization (BN) [56]. The first layer has a convolution filter and ReLU activation. The second and each consecutive layer consists of a convolution filter, BN and ReLU activation, with the exception of the last layer that only uses convolution. The training of the network is based on the concept of deep residual learning [57], in which the network does not estimate the original values of the undistorted image, but instead learns to estimate the difference between a noisy and clean image.

The DnCNN filter outperforms most of the state-of-the-art algorithms designed for Gaussian noise reduction, but due to the fact that it uses residual learning, the original DnCNN trained on impulsive noise model also alters non-corrupted pixels as was shown in [49]. Therefore, in the proposed IDCNN, we modified the original DnCNN architecture to ensure that noise-free pixels will not be affected.

2.1. Impulsive Noise Detection Using CNN

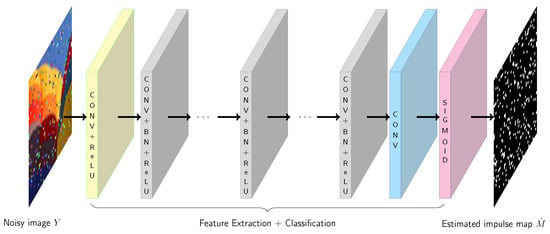

In our approach, instead of using residual learning, we employed all layers proposed in DnCNN for feature extraction and added a sigmoid layer, which estimates an impulsivity measure for each pixel. The values of this measure are close to 0 when a pixel is likely to be undistorted and close to 1 if it seems to be corrupted. In this way, the network divides the image pixels into clean and distorted, depending on the value of the measure. Pixels for which the measure equals 0 or 1 are treated by the network as clean or corrupted, respectively, with the highest confidence. The architecture of the proposed network is depicted in Figure 2.

Figure 2.

The architecture of the proposed network for impulsive noise detection.

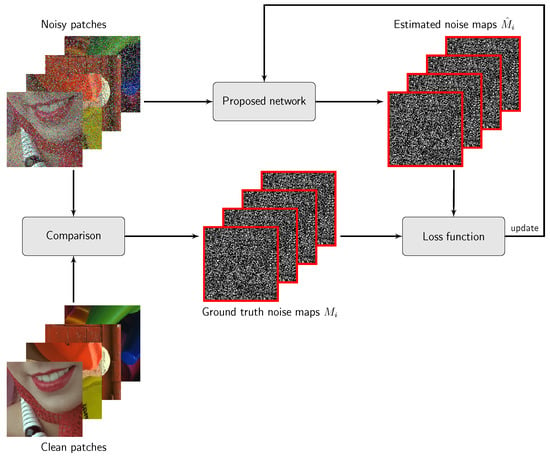

The introduced architecture also requires a change in the training procedure. In the original DnCNN, during training, the images are divided into small, square, non-overlapping patches, and the loss function is determined from the clean and denoised patches. In our approach, we generate a ground truth noise map M (consisting of values: 0 for clean pixels and 1 for impulses). Then, in the training procedure, we calculate the loss function from the patches cropped from M and from the noise map estimated by the network, whose values lie in the range [0, 1], as shown in Figure 3. The loss is minimized over the set of trainable parameters and compares, for each patch i, the original and the estimated noise map, with N denoting the number of patches used in the training.

Finally, the output of the IDCNN is a map of the impulsivity measure, which has to be binarized using a threshold to classify each pixel as either noisy or undistorted.
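The training loss described above can be written, as a sketch, in the mean squared error form used by the original DnCNN. The symbols $\Theta$ (trainable parameters), $M_i$ and $\hat{M}_i$ (ground truth and estimated noise-map patches), the $\frac{1}{2N}$ scaling and the squared Frobenius norm are assumptions chosen to match the verbal description; they are not confirmed notation.

$$
\ell(\Theta) = \frac{1}{2N} \sum_{i=1}^{N} \left\| \hat{M}_i(\Theta) - M_i \right\|_F^2
$$

Under this form, the loss is zero only when every estimated patch equals its ground truth map, and each wrongly labeled pixel contributes at most 1 to the squared norm because both maps take values in [0, 1].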

Figure 3.

Training of the proposed Impulse Detection Convolutional Neural Network (IDCNN) detector.

2.2. Detected Noisy Pixels Replacement

In order to restore the detected noisy pixels, we used a modified version of the adaptive arithmetic mean filter introduced in [41], which offers satisfactory image quality and reasonable computational speed. The restoration of a detected noisy pixel can be summarized as follows:

- Step 1: Select an initial window W centered at the detected noisy pixel and calculate the number of uncorrupted pixels. If all pixels are corrupted, then go to step 2, otherwise go to step 4.

- Step 2: Increase the size of W by 2.

- Step 3: Calculate the number of uncorrupted pixels in W. If all pixels are corrupted, then go to step 2, otherwise go to step 4.

- Step 4: Replace the processed pixel by the average of the clean pixels inside W.
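The four steps above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: the function name `restore_impulses`, the initial 3 × 3 window, and the clipping of the window at image borders are all hypothetical choices.

```python
import numpy as np

def restore_impulses(image, noise_map):
    """Replace flagged pixels by the mean of clean pixels in a growing window.

    image: (H, W, C) array; noise_map: (H, W) boolean array, True = impulse.
    """
    image = np.asarray(image, dtype=np.float64)
    noise_map = np.asarray(noise_map, dtype=bool)
    restored = image.copy()
    height, width = noise_map.shape
    if noise_map.all():
        return restored  # no clean pixel exists to average over
    for y, x in zip(*np.nonzero(noise_map)):
        radius = 1  # assumed initial 3x3 window (step 1)
        while True:
            # Window clipped to the image borders (assumption).
            y0, y1 = max(y - radius, 0), min(y + radius + 1, height)
            x0, x1 = max(x - radius, 0), min(x + radius + 1, width)
            clean = ~noise_map[y0:y1, x0:x1]
            if clean.any():
                # Step 4: average of the clean pixels inside the window.
                restored[y, x] = image[y0:y1, x0:x1][clean].mean(axis=0)
                break
            radius += 1  # step 2: the window side grows by 2
    return restored
```

Because the averages are always taken from the input image and only over pixels marked as clean, the result does not depend on the order in which the impulses are visited, and pixels outside the noise map are returned unchanged.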

The proposed algorithm restores only pixels that were classified by the network as impulses. However, it is worth mentioning here that the corrupted pixels can be replaced using other, more efficient techniques, for example, an image restoration algorithm based on deep neural network introduced in [58]. This issue will be the subject of follow-up research.

2.3. Network Training and Ablation Study

In order to evaluate the performance of the proposed network, we started with the default parameters that were proposed for DnCNN [52]. These parameters are summarized in Table 1. Additionally, for training purposes, all images were resized using bicubic interpolation in four scales and we also performed data augmentation: image rotations (90°, 180°, 270°) and flipping in the vertical direction. Here, it is worth mentioning that the small patches were used only in the training phase, but in inference, the obtained convolution masks were applied to the whole image.
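The augmentation described above (rotations by 90°, 180° and 270° plus vertical flipping) can be sketched as follows. Whether the flip is applied to every rotated copy or only to the unrotated patch is not stated, so combining them into eight variants is an assumption, as is the function name `augment`.

```python
import numpy as np

def augment(patch):
    """Return the patch, its 90/180/270 degree rotations, and the
    vertically flipped version of each (8 variants in total)."""
    # np.rot90 rotates in the plane of the first two axes, so an
    # (H, W, C) patch keeps its channel axis untouched.
    rotations = [np.rot90(patch, k) for k in range(4)]
    # np.flipud reverses the row order, i.e. a flip in the vertical direction.
    return rotations + [np.flipud(r) for r in rotations]
```

When training an impulse detector, the same transformation would have to be applied to the noisy patch and to its noise map so that the two stay aligned.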

Table 1.

Summary of the network parameters.

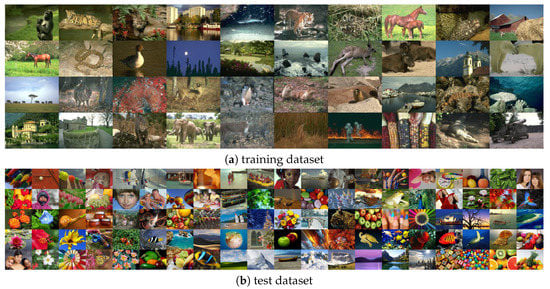

In our experiments, we used the Berkeley Segmentation Dataset (BSD500) [61], which consists of 500 natural images. Exemplary images from BSD500 are depicted in Figure 4a.

Figure 4.

Example images from (a) Berkeley segmentation dataset (BSD500) [61] used for training purposes and (b) our test dataset accessible from [36] as supplementary material.

For testing purposes, we used the dataset introduced in [36], consisting of 100 color images, which are presented in Figure 4b. In this paper, all presented results were obtained on this dataset.

For training purposes, the training images were contaminated using the CTRI model. In this model, each RGB pixel is contaminated with a probability equal to the noise density, and each channel of a noisy pixel is assigned a new value drawn from a uniform distribution over the image intensity range. The index i determines the pixel position in the image domain and Q is the total number of image pixels.
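The CTRI contamination described above can be sketched for 8-bit RGB images as follows. The function name `add_ctri_noise` and its parameters are hypothetical, and the value range 0 to 255 is an assumption based on the 8-bit case mentioned in the Introduction. The function also returns the ground truth noise map used later for training and evaluation.

```python
import numpy as np

def add_ctri_noise(image, density, rng=None):
    """Corrupt each pixel with probability `density`; all channels of a
    corrupted pixel receive independent uniform random values in [0, 255].

    Returns the noisy image and the boolean ground truth noise map.
    """
    rng = np.random.default_rng() if rng is None else rng
    image = np.asarray(image, dtype=np.uint8)
    height, width, _ = image.shape
    # One Bernoulli draw per pixel decides whether it becomes an impulse.
    noise_map = rng.random((height, width)) < density
    # Candidate random values for every channel of every pixel.
    random_values = rng.integers(0, 256, size=image.shape, dtype=np.uint8)
    # Only pixels selected by the noise map take the random values.
    noisy = np.where(noise_map[..., None], random_values, image)
    return noisy, noise_map
```

Note that a randomly drawn value may occasionally coincide with the original one; in this sketch, such a pixel is still marked as an impulse in the noise map.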

In order to evaluate the noise detection efficiency of the proposed network and the impact of the network's parameters, we propose to transform the problem into the classification domain, instead of using traditional measures for image denoising. In the proposed evaluation methodology, the result of noise detection is represented by the estimated noise map, which is compared to the ground truth map M. Then, the impulsive noise detection problem can be treated as a noisy vs. clean pixel classification, and the results can be presented using the number of True Positives (TP), True Negatives (TN), False Positives (FP) and False Negatives (FN):

- TP are pixels that were correctly recognized as impulses,

- TN are pixels that were correctly recognized as clean,

- FP are pixels that were incorrectly classified as noisy,

- FN are pixels that were incorrectly classified as uncorrupted.

Finally, the network performance can be evaluated using weighted accuracy (wACC), which considers the number of pixels that were correctly classified when the classes are unbalanced and their cardinalities depend on the selected noise intensity. However, this metric does not distinguish the type of error made by the network, that is, whether an impulse was missed or a clean pixel was incorrectly classified as an impulse. To better analyze the detection performance, we evaluated the False Positive Rate (FPR) defined as

$$\mathrm{FPR} = \frac{FP}{FP + TN},$$

which shows the ratio of clean pixels incorrectly classified as impulses to the total number of clean pixels in the processed image. Additionally, we made use of the False Negative Rate (FNR) defined as

$$\mathrm{FNR} = \frac{FN}{FN + TP},$$

which represents the ratio of undetected noisy pixels to the total number of impulses. Another measure used to evaluate detection performance is the F1-score, defined as

$$F_1 = \frac{2 \cdot \mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}},$$

where precision = TP/(TP + FP) and recall = TP/(TP + FN). This measure does not take into account the cardinality of TN results, because the number of TN tends to be dominant in most cases of impulsive noise detection. Therefore, it is more sensitive to detection imperfections than wACC.
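The counts and rates described above can be computed directly from a pair of binary noise maps. The sketch below uses the standard definitions of FPR, FNR, precision, recall and F1; the function name `detection_metrics` and the convention of returning 0 for an empty denominator are assumptions. wACC is left out because its weighting is not restated here.

```python
import numpy as np

def detection_metrics(estimated, ground_truth):
    """Confusion counts and derived rates for impulse detection.

    Both inputs are (H, W) maps in which True (or 1) marks an impulse.
    """
    estimated = np.asarray(estimated, dtype=bool)
    ground_truth = np.asarray(ground_truth, dtype=bool)
    tp = int(np.sum(estimated & ground_truth))    # impulses found
    tn = int(np.sum(~estimated & ~ground_truth))  # clean pixels kept
    fp = int(np.sum(estimated & ~ground_truth))   # clean flagged as noisy
    fn = int(np.sum(~estimated & ground_truth))   # impulses missed

    def ratio(num, den):
        # Assumed convention: an undefined ratio is reported as 0.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "TP": tp, "TN": tn, "FP": fp, "FN": fn,
        "FPR": ratio(fp, fp + tn),
        "FNR": ratio(fn, fn + tp),
        "F1": ratio(2 * precision * recall, precision + recall),
    }
```

Averaging these per-image values over a test set corresponds to the evaluation protocol used in this section.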

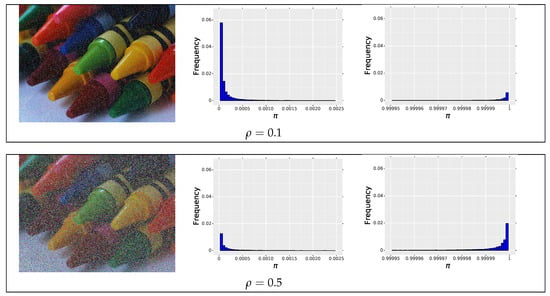

To evaluate the network performance on the whole test dataset, we determined wACC, FPR, FNR and F1-score for each image and then calculated their average values. To correctly localize impulses in the image using the output of the proposed IDCNN, it is first necessary to choose a threshold that decides which pixels are contaminated. The choice of the threshold can typically affect the final results significantly, but we noticed that the values of the impulsivity degree returned by the network are very close to 0 if a pixel is clean and close to 1 if a pixel is likely to be an impulse. Exemplary distributions of the measures returned by the IDCNN are presented in Figure 5, where we show only the left and right parts of the histogram. Therefore, in our research, we set the threshold that divides the pixels into clean and noisy to 0.5.
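The thresholding step can be sketched as follows, together with a small helper that reports how much of the network output lies near the two extremes, which is the property that makes the exact threshold uncritical. The names `binarize` and `extreme_fractions`, the strict inequality, and the tolerance `eps` are assumptions made for illustration.

```python
import numpy as np

def binarize(impulsivity, threshold=0.5):
    """Label a pixel as an impulse when its impulsivity exceeds the threshold."""
    return np.asarray(impulsivity) > threshold

def extreme_fractions(impulsivity, eps=0.01):
    """Fractions of outputs within eps of 0 and within eps of 1."""
    values = np.asarray(impulsivity, dtype=np.float64)
    return float(np.mean(values <= eps)), float(np.mean(values >= 1.0 - eps))
```

If the two fractions returned by `extreme_fractions` sum to nearly 1, then any threshold between `eps` and `1 - eps` produces almost the same binary map.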

Figure 5.

Exemplary distributions of the IDCNN outputs for the test image contaminated with impulsive noise at two intensities, the higher being 0.5. For the lower contamination, 89.59% of pixels are assigned values close to 0 and 10.14% reach values close to 1. For noise intensity 0.5, the respective frequencies are 50.20% and 49.44%.

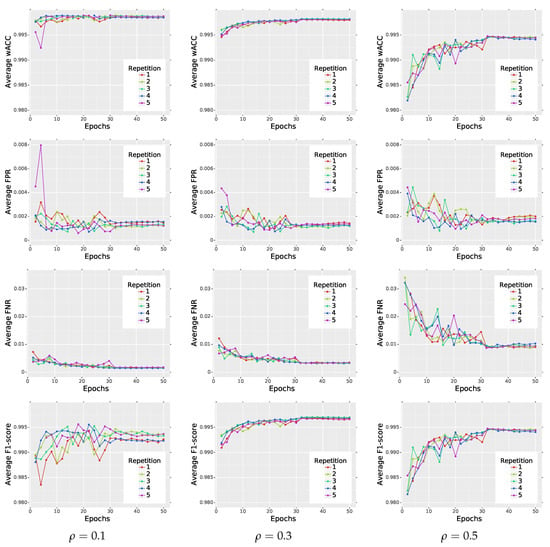

In order to better understand the influence of different parameters on the final performance of the proposed network, we conducted some additional experiments. In the first one, we checked whether the impulse detection efficiency of the network is repeatable when the training procedure is started from scratch. The changes of the average wACC, FPR, FNR and F1-score calculated on the test dataset during training are presented in Figure 6 and in Table 2. The outcomes are repeatable, and the network stabilizes and converges to its final performance when the learning rate is decreased after 30 epochs. Additionally, we can see that the average FPR is relatively low and wACC reflects the network's performance quite well. Therefore, in the rest of the paper, we present the results of the wACC metric only.

Figure 6.

Repeatability of the training procedure on BSD500 dataset.

Table 2.

Repeatability of the training on BSD500 dataset in terms of weighted accuracy (wACC), False Positive Rate (FPR), False Negative Rate (FNR) and F1.

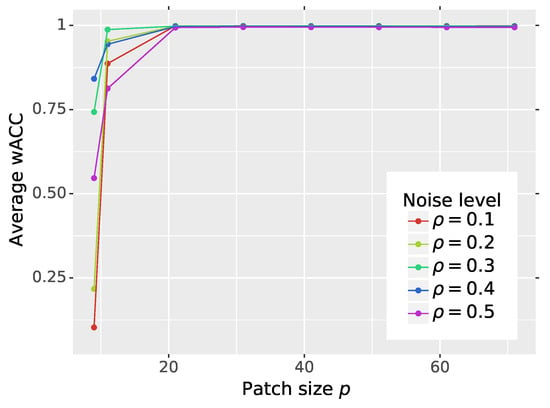

In the next experiment, we evaluated the influence of the patch size p used in the training procedure on the final average performance (see Figure 7 and Table 3). We tested our method using several patch sizes p. For patch sizes smaller than those reported, the network was not able to learn, and therefore, the results are not presented.

Figure 7.

Impact of the patch size p used in the training procedure on the network’s weighted detection accuracy.

Table 3.

Impact of the patch size used in the training procedure on the detection performance of the network in terms of wACC. The best values are presented in bold font.

As can be observed, the optimal performance of the network is achieved once the patch size used in the training reaches a certain minimum (see Table 3). Increasing the patch size further does not boost the network's performance, but it makes training more time consuming, because the loss function is calculated for bigger patches. Therefore, the selected patch size should be neither too small, which degrades detection, nor too big, which only increases the training time.

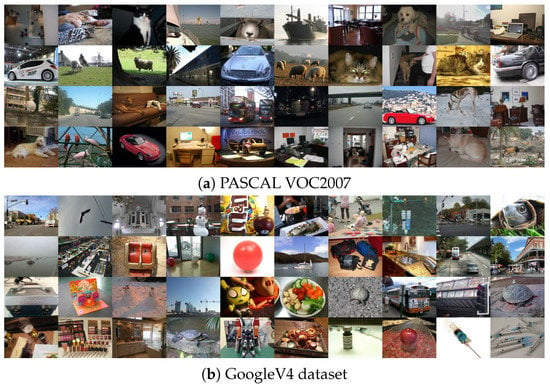

In the next experiment, we analyzed the impact of the type and size of the dataset used in the training procedure on the final network performance. We selected two additional datasets: the PASCAL VOC2007 dataset [62] and the Google Open Images Dataset V4 (GoogleV4) [63] used for object detection purposes. Both datasets contain high quality images, and we randomly selected 500 pictures. The PASCAL VOC2007 dataset consists of images presenting 20 classes of various objects. The GoogleV4 dataset contains images covering 600 classes. From the GoogleV4 dataset, we randomly selected 50 classes and additionally decreased the original resolution four times to ensure similar image sizes in all training and test datasets. Example images from both datasets are presented in Figure 8.

Figure 8.

Example images from GoogleV4 and PASCAL VOC2007 datasets [63].

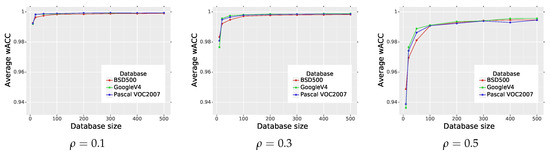

The influence of the size of the training dataset on the final average performance of the network is shown in Figure 9 and summarized in Table 4. As can be observed, the average wACC grows as the size of the dataset increases. The highest average wACC was achieved for the GoogleV4 dataset, but the differences between the datasets are rather small. The experiment also shows that the training of the network requires a sufficient amount of data to reach the expected effectiveness, and that the optimal performance can be achieved on different datasets. Additionally, we can notice that when the noise density increases, the network needs more training data.

Figure 9.

Impact of the type of dataset used in the training and its size on the average wACC of the proposed IDCNN.

Table 4.

Influence of the dataset type used in the training and its size on the average wACC. The best values are presented in bold font.

Finally, we recommend using 500 images in the training, but it should be remembered that the final number of patches can differ depending on the image resolution. In a single experiment, the total number of non-overlapping patches generated for the BSD500 dataset was 120,500.
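The dependence of the patch count on image resolution can be seen from a minimal patch extraction sketch. The function name `extract_patches` is hypothetical, and discarding incomplete patches at the right and bottom borders is an assumption; with it, an image of height H and width W yields (H // p) · (W // p) patches of size p × p.

```python
import numpy as np

def extract_patches(image, patch_size):
    """Cut an (H, W, ...) array into non-overlapping square patches,
    dropping incomplete patches at the right and bottom borders."""
    height, width = image.shape[:2]
    return [
        image[y:y + patch_size, x:x + patch_size]
        for y in range(0, height - patch_size + 1, patch_size)
        for x in range(0, width - patch_size + 1, patch_size)
    ]
```

The total number of training patches is then the sum of these counts over all images, rescaled copies and augmented variants, which is why it varies between datasets even when the number of images is fixed.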

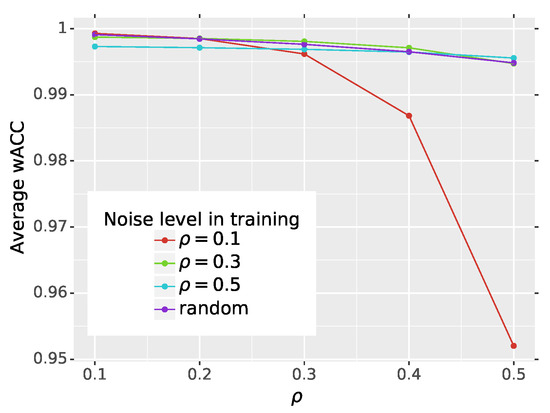

The last issue that we would like to address in the scope of network learning is the noise level that should be used in the training procedure to obtain optimal network performance. For the original DnCNN, the authors showed that the efficiency of their network was not influenced by the Gaussian noise intensity. To evaluate this behavior for impulsive noise, we trained the network with patches contaminated at several fixed noise densities. Additionally, we trained the network with patches contaminated with a noise probability selected randomly from a fixed range. This experiment is denoted in this paper as "random", and the results are depicted in Figure 10 and summarized in Table 5.

Figure 10.

Impact of the noise density used during training on the final network performance.

Table 5.

Dependence of the noise density used during training using BSD500 dataset on the network performance and its ability to detect impulses in test images degraded with varying noise density. The best values are presented in bold font.

It can be noticed that the highest values of wACC are obtained if the noise level during training and testing is the same. When using a random noise density during training, the results are very close to the optimal performance. The highest deviations from the optimal average wACC were obtained for a low noise contamination level during training and heavy noise in the testing phase, and vice versa. In most cases, the maximum of wACC was achieved using a single training noise density (see Table 5), and for other noise levels in the test phase, the performance was very close to the optimal one. Therefore, we recommend using this value during training. In the future, it will be interesting to investigate the impact of the diversity of the dataset used during training on the final network performance.

3. Comparison with the State-of-the-Art Denoising Methods

The proposed switching filter was compared with the competitive methods in two variants of IDCNN trained on different datasets: BSD500 (IDCNNB) and GoogleV4 (IDCNNG). The state-of-the-art algorithms chosen for comparison are listed below:

- Adaptive Weighted Quaternion Color Distance (AWQD) [64],

- DnCNN trained on impulsive noise model [49],

- Fast Averaging Peer Group Filter (FAPGF) [36],

- Fast Adaptive Switching Trimmed Arithmetic Mean Filter (FASTAMF) [32],

- Fast Fuzzy Noise Reduction Filter (FFNRF) [29],

- Fuzzy Rank-Ordered Differences Filter (FRF) [65],

- Fuzzy Weighted Non-Local Means (FWNLM) [66],

- Impulse Noise Reduction Filter (INRF) [67],

- TV-based restoration method with ℓ0-norm data fidelity (L0TV) [68],

- Patch-based Approach for the Restoration of Images affected by Gaussian and Impulse noise (PARIGI) [69],

- Peer Group Filter (PGF) [35],

- Quaternion-Based Switching Filter (QBSF) [70],

- Two-stage Quaternion Switching VMF (TSQSVF) [71],

- Blind Denoising CNN (BDCNN) [72],

- Pixel-shuffle Down-sampling (PD) [73].

The implementation codes were downloaded from the authors’ websites and we used the recommended parameters. In our experiments, we also used DnCNN, trained on the impulsive noise model, because as it was shown in [49], even though DnCNN was designed for Gaussian noise, when it is trained on impulsive noise model, it still might provide competitive results in comparison to the state-of-the-art filters.

3.1. Evaluation Using Objective Quality Measures

The performance of the selected filtering techniques was assessed using objective numerical measures. We used the Peak Signal-to-Noise Ratio (PSNR) and the Mean Absolute Error (MAE), both computed from the RGB channel values of the original and restored pixels.

Additionally, we employed the Structural SIMilarity index (SSIMc) designed for color images [74], because it has demonstrated better agreement with human perception than traditional metrics.
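The two pixel-wise measures can be sketched with their commonly used forms for 8-bit color images. Averaging over all pixels and all three channels and the peak value of 255 are assumptions; the function names `mae` and `psnr` are hypothetical.

```python
import numpy as np

def mae(original, restored):
    """Mean absolute error over all pixels and channels."""
    diff = np.asarray(original, dtype=np.float64) - np.asarray(restored, dtype=np.float64)
    return float(np.mean(np.abs(diff)))

def psnr(original, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB, based on the mean squared error
    over all pixels and channels; infinite for identical images."""
    diff = np.asarray(original, dtype=np.float64) - np.asarray(restored, dtype=np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))
```

Because PSNR squares the errors while MAE does not, a filter that smooths every pixel slightly can score well on PSNR yet worse on MAE than a switching filter that leaves clean pixels untouched.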

The numerical results are shown in Table 6 using three representative test images chosen from the dataset [36] presented in Figure 11. To make an analysis of the data in Table 6 and Table 7 more convenient, we annotated five of the best results for each noise level using green color, and bold font was used to indicate the best one. The following remarks can be formulated:

Table 6.

Comparison of the denoising efficiency of the proposed network for impulsive noise removal with the state-of-the-art methods on selected representative images from the test dataset [36]. The results obtained with the most efficient filter are in bold and the five best results are highlighted in green.

Figure 11.

Representative test images from benchmark dataset [36] for which numerical results were calculated.

Table 7.

Comparison of the denoising efficiency of the proposed network for impulsive noise removal with the state-of-the-art methods on the test dataset [36]. The results obtained with the most efficient filter are in bold and the five best results are highlighted in green.

- In all cases, the proposed filter outperforms the state-of-the-art techniques and the IDCNNG, trained on GoogleV4 dataset, provided the best results for every image and quality measure.

- The AWQD and FASTAMF filters can be distinguished as those that appear very often among the five best results (depicted in green color).

- Other techniques provide good results only sporadically; they may be efficient for certain images, noise ratios or quality measures.

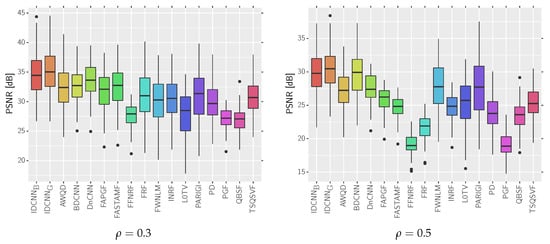

Finally, the average values of the selected metrics calculated on the test dataset [36] are presented in Table 7. We also included representative box plots for the PSNR measure to show the distribution of the obtained results (see Figure 12). As can be observed, the average results of the proposed filter are significantly better than those of the state-of-the-art filters for all used quality measures. In addition, the proposed switching filter achieves much better results than the original DnCNN trained for impulsive noise.

Figure 12.

Box plots presenting the distributions of the obtained results for the analyzed methods using the test dataset [36].

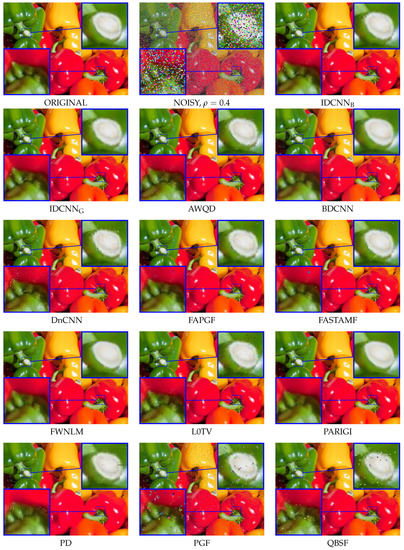

3.2. Visual Assessment

The visual comparison of the obtained results is depicted in Figure 13. One may notice that the proposed IDCNN is able to correctly localize almost all impulses and that the visible artifacts result from the insufficient quality of the restoration of the noisy pixels. Impressive results for a high noise fraction were also obtained with BDCNN. The main drawback of BDCNN is that it was designed to cope with mixed Gaussian and impulsive noise, and therefore, uncorrupted pixels in the image are also altered. Due to its smoothing property, this approach sometimes excels over our switching technique for intensive noise in terms of PSNR (Table 6), but the MAE values achieved by our technique, which indicate detail preservation, are lower than those obtained with BDCNN. The PSNR gap is caused by errors in the detection of noisy pixels made by IDCNN and by the insufficient quality of the applied noisy pixel replacement method.

Figure 13.

Visual comparison of the filtering efficiency using a part of the PEPPERS image.

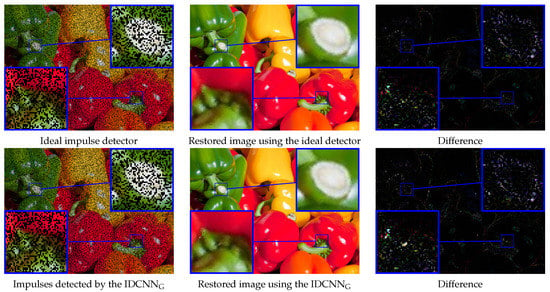

Additionally, in Figure 14, we present a comparison of the proposed IDCNN with an ideal impulse detector that correctly localizes all impulses in the analyzed image (the impulses are localized using the ground truth map). In both cases, the corrupted pixels are then restored using the fast adaptive mean filter. As can be observed, only a few impulses (which are very similar to the original texture in the analyzed PEPPERS image) were not correctly detected by the proposed IDCNN network.

Figure 14.

Visualization of the denoising efficiency of the proposed IDCNNG in comparison to the ideal impulse detector, which correctly identifies all impulses in the analyzed image. The right column shows the difference between the restored and clean image.

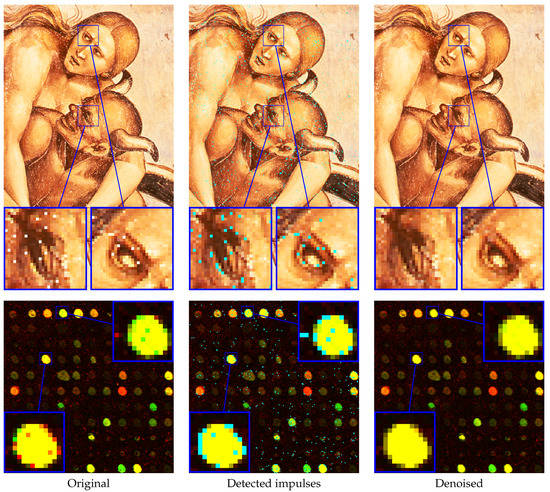

The performance of the proposed method was also evaluated on real noisy images. The first experiment was performed on a part of an image of the fresco “The Condemned in Hell” by Luca Signorelli and the second on a corrupted cDNA image; such images are used for measuring the expression level of a large number of genes. The restoration results are presented in Figure 15, and they confirm the good denoising capabilities of the proposed IDCNN filter.

Figure 15.

Denoising result of the proposed IDCNN filter on real noisy images: a part of an image of the fresco “The Condemned in Hell” by Luca Signorelli (top) and cDNA image (bottom). Detected impulses are annotated using cyan color.

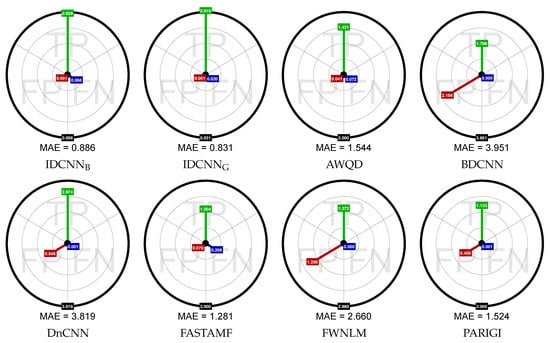

3.3. The Influence of Impulse Detection Imperfections

To confirm that the main source of error is the pixel replacement method, we present the Aim Diagram (AD), which separates the errors caused by improper classification of impulses from those caused by the restoration of correctly detected pixels. Traditional metrics alone do not reveal whether the main source of error is incorrect impulse detection or the restoration of corrupted pixels.

In the proposed diagrams, the radius in the circle denotes the proportion of the MAE metric calculated independently for pixels that are TP, FP and FN, respectively. The error for TN pixels is equal to zero and is therefore not presented in the plots. The AD calculated for the MAE metric is presented in Figure 16.
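The decomposition behind such a diagram can be sketched as follows. This is an illustrative reading of the description above, assuming that MAE is the absolute difference averaged over all pixels and channels; the function name `mae_by_detection_class` and the toy values are hypothetical and are not taken from the paper.

```python
import numpy as np

def mae_by_detection_class(clean, restored, truth_map, detected_map):
    """Split the MAE into contributions from TP, FP, FN and TN pixels.

    clean, restored:         (H, W, C) arrays
    truth_map, detected_map: (H, W) boolean arrays, True marks an impulse
    Each contribution is normalised by the total pixel count, so the four
    parts add up to the total MAE (assumed definition, see lead-in).
    """
    clean = np.asarray(clean, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    truth = np.asarray(truth_map, dtype=bool)
    detected = np.asarray(detected_map, dtype=bool)

    err = np.abs(clean - restored).mean(axis=2)  # per-pixel error
    classes = {
        "TP": truth & detected,    # impulse, replaced
        "FP": ~truth & detected,   # clean pixel, needlessly replaced
        "FN": truth & ~detected,   # impulse, left in the image
        "TN": ~truth & ~detected,  # clean pixel, untouched
    }
    parts = {name: float(err[m].sum() / err.size) for name, m in classes.items()}
    parts["total"] = float(err.mean())
    return parts

# Toy 4x4 example with one pixel of each error type.
clean = np.full((4, 4, 3), 100.0)
truth = np.zeros((4, 4), dtype=bool)
detected = np.zeros((4, 4), dtype=bool)
truth[0, 0] = detected[0, 0] = True   # TP
detected[2, 2] = True                 # FP
truth[1, 1] = True                    # FN

restored = clean.copy()
restored[0, 0] = 98.0    # imperfect restoration of a detected impulse
restored[2, 2] = 103.0   # clean pixel altered by mistake
restored[1, 1] = 200.0   # undetected impulse remains

parts = mae_by_detection_class(clean, restored, truth, detected)
```

For a switching filter the TN term is zero by construction, because pixels that are neither corrupted nor flagged are passed through unchanged.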

Figure 16.

Diagrams that show what portion of the MAE error was caused by the improper decision of the used filter from classification perspective. These diagrams were obtained for the PEPPERS image ( = 0.4).

The networks IDCNNB and IDCNNG, trained on the BSD500 and GoogleV4 datasets, respectively, detected the impulses almost perfectly, and the main contribution to the MAE comes from the insufficient quality of the restoration of the noisy pixels. For the proposed method, the total MAE for the analyzed PEPPERS image is equal to 0.886, while the error related to incorrectly detected impulses equals 0.056. Thus, the main contribution to the total error is made by the replacement (interpolation) of the correctly detected impulses. This shows that the efficiency of the proposed filter could be significantly improved in further research by using a better noisy pixel substitution method.

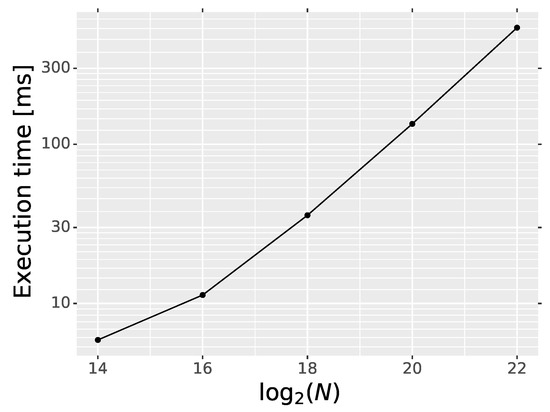

3.4. Computational Complexity

All the experiments were performed in the TensorFlow v1.8 [75] environment with the Python client, running on a PC with an Intel® Core™ i7-3930K CPU at 3.20 GHz and a GeForce GTX 1080 Ti GPU. In this setup, training the IDCNN detector with the default parameters (see Table 1) takes about six hours on the GPU. For inference, we measured only the execution time of the operations calculated on the GPU, excluding the time required for image reading and writing. The execution times are summarized in Table 8. As can be observed, the execution time of the proposed IDCNN detector on the GPU is similar to that of DnCNN, which is not surprising because the two networks differ only slightly in the last layer. The dependency of the execution time of the proposed IDCNN on the number of image pixels is depicted in Figure 17. The time required to process an image is proportional to the number of pixels N.
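A measurement of this kind can be reproduced with a small timing harness such as the sketch below. It is not the authors' benchmarking code: the helper names `measure_runtime` and `fit_time_per_pixel` are hypothetical, and the thresholding lambda is only a CPU stand-in for the detector. When timing a real GPU model, the callable passed in should return only after the device has finished its work, otherwise the timings will be too optimistic.

```python
import time
import numpy as np

def measure_runtime(process, sides, repeats=5):
    """Median wall-clock time in ms of process(image), keyed by pixel count N."""
    rng = np.random.default_rng(0)
    results = {}
    for side in sides:
        image = rng.random((side, side, 3), dtype=np.float32)
        process(image)  # warm-up call, excluded from the timing
        samples = []
        for _ in range(repeats):
            start = time.perf_counter()
            process(image)
            samples.append((time.perf_counter() - start) * 1e3)
        results[side * side] = float(np.median(samples))
    return results

def fit_time_per_pixel(results):
    """Least-squares slope c of the model t = c * N (line through the origin)."""
    n = np.array(list(results.keys()), dtype=np.float64)
    t = np.array(list(results.values()), dtype=np.float64)
    return float((n * t).sum() / (n * n).sum())

# Stand-in "detector": a per-pixel threshold, used only to exercise the harness.
timings = measure_runtime(lambda img: img.mean(axis=2) > 0.5, sides=[64, 128, 256])
ms_per_pixel = fit_time_per_pixel(timings)
```

If the processing time is proportional to N, the ratio of each measured time to its pixel count should stay close to the fitted slope across all image sizes.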

Table 8.

Comparison of the average execution time (in milliseconds) of the proposed IDCNN detector and the DnCNN method on GPU.

Figure 17.

Execution time dependency of the IDCNN on the number of image pixels N.

4. Conclusions

In this work, we have introduced a switching filter that employs a deep neural network for impulsive noise removal in color images. The performed experiments reveal that the proposed filtering architecture, which uses a modified version of DnCNN for impulsive pixel detection and the adaptive mean filter for pixel restoration, outperforms or is comparable with the state-of-the-art filters in terms of the PSNR, MAE and SSIMc quality measures.

The proposed IDCNN filter performs well on images contaminated by artificial impulsive noise and also on those affected by real noise processes, keeping the undistorted pixels unchanged and introducing no visible artifacts. Apart from its high filtering efficiency, the proposed method is quite fast: processing an image of the standard size takes about 35 milliseconds on GPU, which makes our technique suitable for real-time applications. Moreover, the execution time is proportional to the number of pixels, which allows images of higher resolution to be processed efficiently as well. A unique feature of the introduced switching filter is that it does not require any parameter adjustment, and the same filtering framework can be applied regardless of the image contamination density. Consequently, the proposed method can be applied in many denoising scenarios, as no user intervention is required. This is a crucial feature of our design, because other methods that deliver good results in terms of objective and subjective evaluation need to be tuned, which is usually difficult and requires an experienced operator.

Future work will proceed in two main directions. The first goal is to improve the efficiency of the noisy pixel replacement using other suitable CNNs. A combination of the proposed IDCNN for impulse detection and a CNN for noisy pixel restoration is expected to significantly increase the overall performance of noisy image enhancement. The performed experiments indicate that the BDCNN output [72] could be used for the restoration of the detected noisy pixels. The second direction is the development of a single network that combines impulse detection and noisy pixel restoration in one processing stage.

Author Contributions

Conceptualization, K.R. and B.S.; data curation, K.R. and L.M.; formal analysis, K.R. and B.S.; funding acquisition, B.S.; investigation, K.R. and B.S.; methodology, K.R. and B.S.; resources, K.R. and L.M. and B.S.; software, K.R. and L.M.; supervision, B.S.; validation, B.S.; visualization, K.R. and L.M.; writing—original draft, K.R.; writing—review and editing, K.R., L.M. and B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Polish National Science Centre under the project 2017/25/B/ST6/02219, and was also funded by the Statutory Research funds of Silesian University of Technology, Poland (Grant BK/Rau1/2020).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Arnal, J.; Súcar, L.B. Hybrid Filter Based on Fuzzy Techniques for Mixed Noise Reduction in Color Images. Appl. Sci. 2019, 10, 243.

- Sen, A.P.; Rout, N.K. Removal of High-Density Impulsive Noise in Giemsa Stained Blood Smear Image Using Probabilistic Decision Based Average Trimmed Filter. In Smart Healthcare Analytics in IoT Enabled Environment; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 127–141.

- Boncelet, C. Image noise models. In Handbook of Image and Video Processing; Bovik, A., Ed.; Academic Press: Cambridge, MA, USA, 2005; pp. 397–410.

- Faraji, H.; MacLean, W. CCD noise removal in digital images. IEEE Trans. Image Process. 2006, 15, 2676–2685.

- Liu, C.; Szeliski, R.; Bing Kang, S.; Zitnick, C.L.; Freeman, W.T. Automatic Estimation and Removal of Noise from a Single Image. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 299–314.

- Smolka, B.; Malik, K.; Malik, D. Adaptive rank weighted switching filter for impulsive noise removal in color images. J. Real-Time Image Process. 2012, 10, 289–311.

- Malinski, L.; Smolka, B. Self-tuning fast adaptive algorithm for impulsive noise suppression in color images. J. Real-Time Image Process. 2019.

- Plataniotis, K.N.; Venetsanopoulos, A.N. Color Image Filtering. In Color Image Processing and Applications; Digital Signal Processing; Springer: Berlin/Heidelberg, Germany, 2000; pp. 51–105.

- Smolka, B.; Plataniotis, K.; Venetsanopoulos, A. Chapter Nonlinear Techniques for Color Image Processing. In Nonlinear Signal and Image Processing: Theory, Methods, and Applications; CRC Press: London, UK, 2004; pp. 445–505.

- Phu, M.Q.; Tischer, P.; Wu, H.R. Statistical Analysis of Impulse Noise Model for Color Image Restoration. In Proceedings of the 6th IEEE/ACIS International Conference on Computer and Information Science, Melbourne, Australia, 11–13 July 2007; pp. 425–431.

- Lukac, R.; Smolka, B.; Plataniotis, K.N.; Venetsanopoulos, A.N. Entropy Vector Median Filter. In Pattern Recognition and Image Analysis; Springer: Berlin/Heidelberg, Germany, 2003; pp. 1117–1125.

- Lukac, R.; Smolka, B.; Martin, K.; Plataniotis, K.; Venetsanopoulos, A. Vector filtering for color imaging. IEEE Signal Process. Mag. 2005, 22, 74–86.

- Lukac, R.; Plataniotis, K. A Taxonomy of Color Image Filtering and Enhancement Solutions. In Advances in Imaging and Electron Physics; Elsevier: Amsterdam, The Netherlands, 2006; Volume 140, pp. 187–264.

- Morillas, S.; Gregori, V.; Sapena, A. Adaptive marginal median filter for colour images. Sensors 2011, 11, 3205–3213.

- Astola, J.; Haavisto, P.; Neuvo, Y. Vector median filters. Proc. IEEE 1990, 78, 678–689.

- Lukac, R.; Smolka, B.; Plataniotis, K.; Venetsanopoulos, A. Vector sigma filters for noise detection and removal in color images. J. Vis. Commun. Image Represent. 2006, 17, 1–26.

- Lukac, R. Adaptive vector median filtering. Pattern Recognit. Lett. 2003, 24, 1889–1899.

- Celebi, M.E.; Kingravi, H.A.; Aslandogan, Y.A. Nonlinear vector filtering for impulsive noise removal from color images. J. Electron. Imaging 2007, 16, 033008.

- Morillas, S.; Gregori, V. Robustifying vector median filter. Sensors 2011, 11, 8115–8126.

- Panetta, K.; Bao, L.; Agaian, S. A New Unified Impulse Noise Removal Algorithm Using a New Reference Sequence-to-Sequence Similarity Detector. IEEE Access 2018, 6, 37225–37236.

- Chen, J.; Zhan, Y.; Cao, H. Adaptive Sequentially Weighted Median Filter for Image Highly Corrupted by Impulse Noise. IEEE Access 2019, 7, 158545–158556.

- Elad, M.; Aharon, M. Image Denoising Via Sparse and Redundant Representations Over Learned Dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745.

- Li, X.; Shen, H.; Zhang, L.; Zhang, H.; Yuan, Q.; Yang, G. Recovering Quantitative Remote Sensing Products Contaminated by Thick Clouds and Shadows Using Multitemporal Dictionary Learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7086–7098.

- Farouk, R.M.; Khalil, H.A. Image Denoising based on Sparse Representation and Non-Negative Matrix Factorization. Life Sci. J. 2012, 9, 337–341.

- Li, X.; Wang, L.; Cheng, Q.; Wu, P.; Gan, W.; Fang, L. Cloud removal in remote sensing images using nonnegative matrix factorization and error correction. ISPRS J. Photogramm. Remote Sens. 2019, 148, 103–113.

- Chatzis, V.; Pitas, I. Fuzzy scalar and vector median filters based on fuzzy distances. IEEE Trans. Image Process. 1999, 8, 731–734.

- Plataniotis, K.; Androutsos, D.; Venetsanopoulos, A. Adaptive fuzzy systems for multichannel signal processing. Proc. IEEE 1999, 87, 1601–1622.

- Shen, Y.; Barner, K. Fuzzy vector median-based surface smoothing. IEEE Trans. Vis. Comput. Graph. 2004, 10, 252–265.

- Morillas, S.; Gregori, V.; Peris-Fajarnés, G.; Latorre, P. A New Vector Median Filter Based on Fuzzy Metrics; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3656, pp. 81–90.

- Camarena, J.G.; Gregori, V.; Morillas, S.; Sapena, A. Fast detection and removal of impulsive noise using peer groups and fuzzy metrics. J. Vis. Commun. Image Represent. 2008, 19, 20–29.

- Morillas, S.; Gregori, V.; Hervas, A. Fuzzy Peer Groups for Reducing Mixed Gaussian-Impulse Noise from Color Images. IEEE Trans. Image Process. 2009, 18, 1452–1466.

- Malinski, L.; Smolka, B. Fast adaptive switching technique of impulsive noise removal in color images. J. Real-Time Image Process. 2019, 16, 1077–1098.

- Garnett, R.; Huegerich, T.; Chui, C.; He, W. A universal noise removal algorithm with an impulse detector. IEEE Trans. Image Process. 2005, 14, 1747–1754.

- Deng, Y.; Kenney, C.; Moore, M.; Manjunath, B. Peer group filtering and perceptual color image quantization. In Proceedings of the 1999 IEEE International Symposium on Circuits and Systems, Orlando, FL, USA, 30 May–2 June 1999; Volume 4, pp. 21–24.

- Kenney, C.; Deng, Y.; Manjunath, B.; Hewer, G. Peer group image enhancement. IEEE Trans. Image Process. 2001, 10, 326–334.

- Malinski, L.; Smolka, B. Fast averaging peer group filter for the impulsive noise removal in color images. J. Real-Time Image Process. 2016, 11, 427–444.

- Jin, L.; Liu, H.; Xu, X.; Song, E. Quaternion-based color image filtering for impulsive noise suppression. J. Electron. Imaging 2010, 19, 043003.

- Geng Xin, H.X. Quaternion based switching filter for impulse noise removal in color images. J. Beijing Univ. Aeronaut. Astronaut. 2012, 92, 1181.

- Lin, T.C. Decision-based filter based on SVM and evidence theory for image noise removal. Neural Comput. Appl. 2012, 21, 695–703.

- Liang, S.; Lu, S.; Chang, J.; Lin, C. A Novel Two-Stage Impulse Noise Removal Technique Based on Neural Networks and Fuzzy Decision. IEEE Trans. Fuzzy Syst. 2008, 16, 863–873.

- Kaliraj, G.; Baskar, S. An efficient approach for the removal of impulse noise from the corrupted image using neural network based impulse detector. Image Vis. Comput. 2010, 28, 458–466.

- Nair, M.S.; Shankar, V. Predictive-based adaptive switching median filter for impulse noise removal using neural network-based noise detector. Signal Image Video Process. 2013, 7, 1041–1070.

- Turkmen, I. The ANN based detector to remove random-valued impulse noise in images. J. Vis. Commun. Image Represent. 2016, 34, 28–36.

- Lefkimmiatis, S. Non-local Color Image Denoising with Convolutional Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5882–5891.

- Lefkimmiatis, S. Universal Denoising Networks: A Novel CNN Architecture for Image Denoising. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3204–3213.

- Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a Fast and Flexible Solution for CNN-Based Image Denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

- Fu, B.; Zhao, X.; Li, Y.; Wang, X.; Ren, Y. A convolutional neural networks denoising approach for salt and pepper noise. Multimed. Tools Appl. 2018, 78, 30707–30721.

- Amaria, Y.; Miyazakia, T.; Koshimuraa, Y.; Yokoyamaa, Y.; Yamamoto, H. A Study on Impulse Noise Reduction Using CNN Learned by Divided Images. In Proceedings of the 6th IIAE International Conference on Industrial Application Engineering, Okinawa, Japan, 20–26 March 2018; pp. 93–100.

- Radlak, K.; Malinski, L.; Smolka, B. Deep learning for impulsive noise removal in color digital images. In Proceedings of the 2019 International Society for Optics and Photonics, Baltimore, MD, USA, 5–8 May 2019; pp. 18–26.

- Chen, J.; Zhang, G.; Xu, S.; Yu, H. A Blind CNN Denoising Model for Random-Valued Impulse Noise. IEEE Access 2019, 7, 124647–124661.

- Jin, L.; Zhang, W.; Ma, G.; Song, E. Learning deep CNNs for impulse noise removal in images. J. Vis. Commun. Image Represent. 2019, 62, 193–205.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Zuo, W.; Zhang, K.; Zhang, L. Convolutional Neural Networks for Image Denoising and Restoration. In Denoising of Photographic Images and Video: Fundamentals, Open Challenges and New Trends; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 93–123.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML’15), Lille, France, 6–11 July 2015; Volume 37, pp. 448–456.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning Deep CNN Denoiser Prior for Image Restoration. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2808–2817.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010.

- Kingma, D.; Ba, L. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour Detection and Hierarchical Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–916.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2007/workshop/index.html (accessed on 7 May 2020).

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Duerig, T.; et al. The Open Images Dataset V4: Unified image classification, object detection, and visual relationship detection at scale. arXiv 2018, arXiv:1811.00982.

- Jin, L.; Zhu, Z.; Song, E.; Xu, X. An effective vector filter for impulse noise reduction based on adaptive quaternion color distance mechanism. Signal Process. 2019, 155, 334–345.

- Camarena, J.G.; Gregori, V.; Morillas, S.; Sapena, A. Two-step fuzzy logic-based method for impulse noise detection in colour images. Pattern Recognit. Lett. 2010, 31, 1842–1849.

- Wu, J.; Tan, C. Random-valued impulse noise removal using fuzzy weighted non-local means. Signal Image Video Process. 2014, 8, 349–355.

- Schulte, S.; Morillas, S.; Gregori, V.; Kerre, E.E. A New Fuzzy Color Correlated Impulse Noise Reduction Method. IEEE Trans. Image Process. 2007, 16, 2565–2575.

- Yuan, G.; Ghanem, B. ℓ0TV: A Sparse Optimization Method for Impulse Noise Image Restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 352–364.

- Delon, J.; Desolneux, A. A Patch-Based Approach for Removing Impulse or Mixed Gaussian-Impulse Noise. SIAM J. Imaging Sci. 2013, 6, 1140–1174.

- Wang, G.; Liu, Y.; Zhao, T. A quaternion-based switching filter for colour image denoising. Signal Process. 2014, 102, 216–225.

- Jin, L.; Zhu, Z.; Xu, X.; Li, X. Two-stage quaternion switching vector filter for color impulse noise removal. Signal Process. 2016, 128, 171–185.

- Abiko, R.; Ikehara, M. Blind Denoising of Mixed Gaussian-impulse Noise by Single CNN. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1717–1721.

- Zhou, Y.; Jiao, J.; Huang, H.; Wang, Y.; Wang, J.; Shi, H.; Huang, T. When AWGN-based Denoiser Meets Real Noises. arXiv 2019, arXiv:1904.03485.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. Software. 2015. Available online: tensorflow.org (accessed on 7 May 2020).

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).