SemImput: Bridging Semantic Imputation with Deep Learning for Complex Human Activity Recognition

Abstract

1. Introduction

2. Problem Statement

2.1. Some Definitions

2.2. Problem Formulation: Semantic Imputation

2.3. Preliminaries of Sensing Technologies

2.3.1. Unobtrusive Sensing

2.3.2. Obtrusive Sensing

3. Methodology

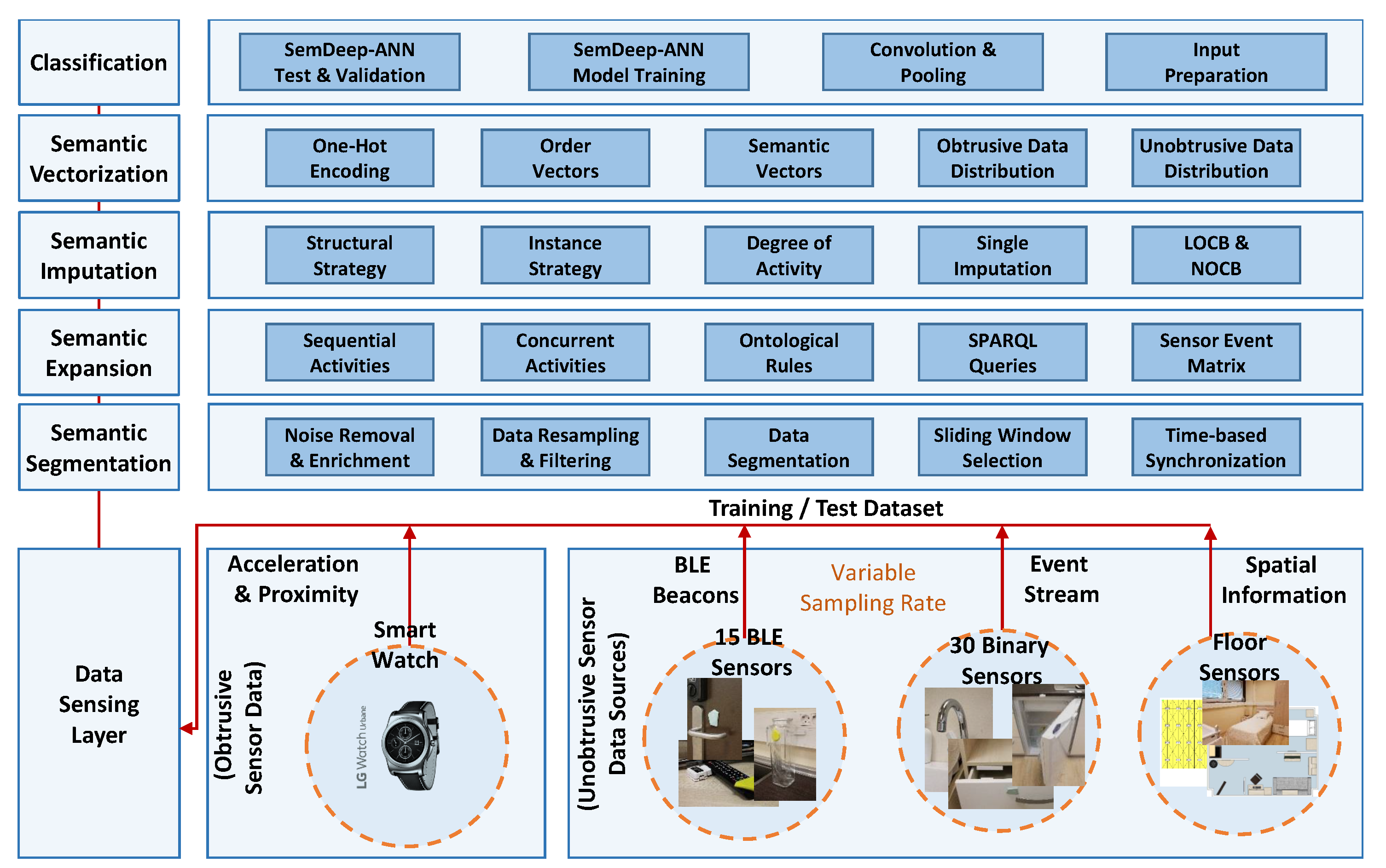

3.1. High-Level Overview of the SemImput Functional Framework

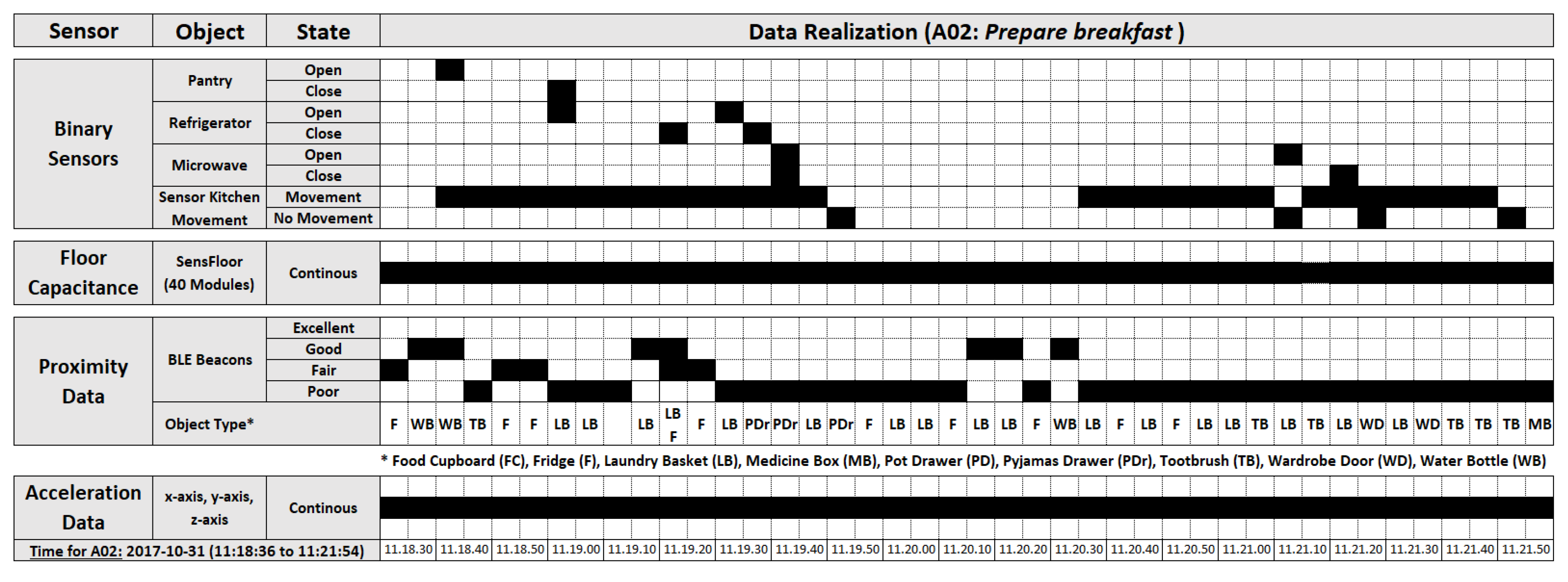

3.2. Data Sensing and Representation

3.2.1. Taxonomy Construction

3.2.2. Concurrent Sensor State Modeling

3.3. Semantic Segmentation

- : Valid Open sensor state

- : Valid Closed sensor state

- : Start-time of the next sensor state

- : Sensor having an Open state within the sliding window

- : Sensor having a Closed state within the sliding window

- : Start-time and still Open sensor states

- : Start-time but Closed sensor states

- : End-time but still Open sensor states

- : End-time but Closed sensor states
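The state list above relates each sensor's Open/Closed interval to the boundaries of a sliding window. The sketch below is one possible reading of that relation, not the paper's notation: it assumes each sensor event carries a start time and an optional end time (`None` while still Open), and that the window is the half-open interval `[w_start, w_end)`. The class `SensorEvent`, the function `window_state`, and the four labels it returns are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorEvent:
    sensor: str
    start: float          # activation time in seconds
    end: Optional[float]  # deactivation time; None while the sensor is still Open


def window_state(ev: SensorEvent, w_start: float, w_end: float) -> Optional[str]:
    """Classify one sensor event against the sliding window [w_start, w_end).

    Hypothetical labels (an assumed reading of the state list, not the paper's notation):
      opened_still_open   - activated inside the window, still Open at w_end
      opened_then_closed  - activated inside the window, Closed before w_end
      carried_still_open  - activated before the window, still Open at w_end
      carried_then_closed - activated before the window, Closed inside it
    Returns None when the event does not overlap the window at all.
    """
    if ev.start >= w_end or (ev.end is not None and ev.end <= w_start):
        return None
    closed_in_window = ev.end is not None and ev.end < w_end
    if ev.start >= w_start:
        return "opened_then_closed" if closed_in_window else "opened_still_open"
    return "carried_then_closed" if closed_in_window else "carried_still_open"


events = [
    SensorEvent("Door", 12, None),   # opened inside the window, still Open
    SensorEvent("Tap", 12, 15),      # opened and closed inside the window
    SensorEvent("Bed", 5, None),     # carried in from before, still Open
    SensorEvent("Fridge", 5, 14),    # carried in, closed inside the window
    SensorEvent("TV", 1, 8),         # finished before the window started
]
for ev in events:
    print(ev.sensor, window_state(ev, 10, 20))
```

Keeping the window half-open means an event that closes exactly at `w_end` is still treated as Open for that window. This is a design choice of the sketch rather than something the text specifies.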

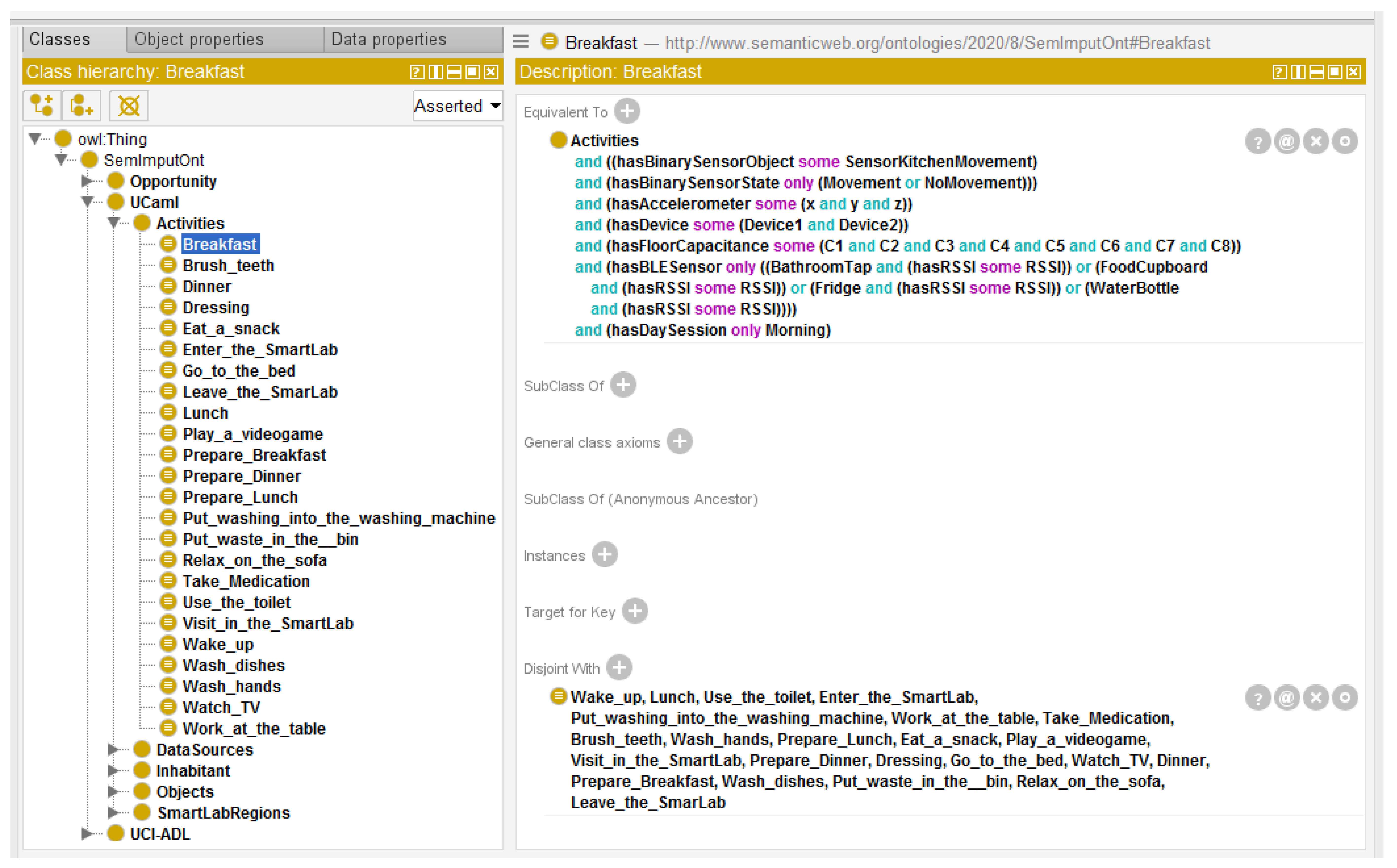

3.4. Semantic Data Expansion

3.4.1. Ontology-Based Complex Activity Structures

3.4.2. Conjunction Separation

3.4.3. Feature Transformation

3.5. Semantic Data Imputation

Algorithm 1: Semantic Imputation Using , , and through SPARQL Queries

Input: Incomplete segmented data

Output: Complete data with imputation ▹ Segmented imputed dataset
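Algorithm 1 fills gaps in segmented data by querying the ontology with SPARQL. The sketch below does not use SPARQL or an OWL store. As an assumption, a plain Python dictionary stands in for the SemImputOnt knowledge base, and its entries are copied from three rows of the activity table (Act01, Act09, Act18). The imputation rule is also a hypothetical simplification: in a labeled segment, a missing binary reading is set to 1 if the knowledge base lists that object as a dependency of the segment's activity, and to 0 otherwise. Observed readings are never overwritten. `ACTIVITY_OBJECTS` and `impute_segment` are illustrative names.

```python
from typing import Dict, List, Optional

# Hypothetical stand-in for the ontology: activity -> objects it depends on,
# taken from the Act01, Act09 and Act18 rows of the activity table.
ACTIVITY_OBJECTS: Dict[str, List[str]] = {
    "Take medication": ["Water bottle", "MedicationBox"],
    "Watch TV": ["RemoteControl", "Motion Sensor Sofa", "Pressure Sofa", "TV"],
    "Use the toilet": ["Motion Sensor Bathroom", "Top WC"],
}


def impute_segment(activity: str, readings: Dict[str, Optional[int]]) -> Dict[str, int]:
    """Fill missing (None) binary readings in one labeled segment.

    Simplifying assumption: a missing sensor is imputed as 1 (active) when the
    knowledge base links it to the segment's activity, otherwise as 0.
    """
    expected = set(ACTIVITY_OBJECTS.get(activity, []))
    return {
        sensor: value if value is not None else int(sensor in expected)
        for sensor, value in readings.items()
    }


segment = {"RemoteControl": 1, "TV": None, "Pressure Sofa": None, "Top WC": None}
print(impute_segment("Watch TV", segment))
# {'RemoteControl': 1, 'TV': 1, 'Pressure Sofa': 1, 'Top WC': 0}
```

In a SPARQL-based setup, the dictionary lookup would be replaced by a query over the ontology's activity-to-object relations.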

3.5.1. Structure-Based Imputation Measure

3.5.2. Instance-Based Imputation Measure

3.5.3. Longitudinal Imputation Measure
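The abbreviations list names two classic longitudinal fill strategies: LOCF (Last Observation Carried Forward) and NOCB (Next Observation Carried Backward). This section may rely on them, but that link is an assumption. The sketch below implements both on a single sensor's time-ordered readings, where `None` marks a gap. The combination rule in `longitudinal_impute` (LOCF first, then NOCB only for leading gaps that LOCF cannot reach) is a hypothetical choice, not the paper's measure.

```python
from typing import List, Optional

Series = List[Optional[int]]


def locf(series: Series) -> Series:
    """Last Observation Carried Forward: each gap takes the most recent observed value."""
    out: Series = []
    last: Optional[int] = None
    for v in series:
        if v is not None:
            last = v
        out.append(last)
    return out


def nocb(series: Series) -> Series:
    """Next Observation Carried Backward: each gap takes the next observed value."""
    return locf(series[::-1])[::-1]


def longitudinal_impute(series: Series) -> Series:
    """Prefer LOCF; fall back to NOCB for gaps before the first observation."""
    forward, backward = locf(series), nocb(series)
    return [f if f is not None else b for f, b in zip(forward, backward)]


readings = [None, 0, None, None, 1, None]  # one binary sensor over six time steps
print(locf(readings))                 # [None, 0, 0, 0, 1, 1]
print(nocb(readings))                 # [0, 0, 1, 1, 1, None]
print(longitudinal_impute(readings))  # [0, 0, 0, 0, 1, 1]
```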

3.6. Classification

3.6.1. One-Hot Code Vectorization

3.6.2. Artificial Neural Networks for HAR

Algorithm 2: Semantic Vectorization Using One-Hot Coding Technique

Input: ▹ Extract scalar sequence (BinSens, Proximity)

Output: M ▹ Vectorized feature matrix
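Algorithm 2 turns scalar sequences of binary-sensor and proximity tokens into a feature matrix M. The sketch below assumes each segment is a list of active sensor or beacon names (the names are illustrative). Each distinct token gets one column and is one-hot encoded. A segment's row is the element-wise OR of its tokens' one-hot vectors, so a row may contain several 1s (multi-hot). The function name `one_hot_matrix` and the sorted column order are choices made for this sketch.

```python
from typing import List, Sequence, Tuple


def one_hot_matrix(segments: Sequence[Sequence[str]]) -> Tuple[List[str], List[List[int]]]:
    """Vectorize segments of sensor tokens into a 0/1 feature matrix M.

    Columns are the sorted distinct tokens; row r has a 1 in column c
    iff token c occurs in segment r.
    """
    vocab = sorted({tok for seg in segments for tok in seg})
    index = {tok: i for i, tok in enumerate(vocab)}
    M: List[List[int]] = []
    for seg in segments:
        row = [0] * len(vocab)
        for tok in seg:
            row[index[tok]] = 1  # OR-combine the token's one-hot vector into the row
        M.append(row)
    return vocab, M


segments = [["Kettle", "Tap"], ["TV", "Pressure Sofa"], ["Tap"]]
vocab, M = one_hot_matrix(segments)
print(vocab)  # ['Kettle', 'Pressure Sofa', 'TV', 'Tap']
print(M)      # [[1, 0, 0, 1], [0, 1, 1, 0], [0, 0, 0, 1]]
```

Building the vocabulary once over all segments keeps the column layout fixed, which a downstream classifier needs.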

Algorithm 3: Semantic Deep Learning-Based Artificial Neural Network (SemDeep-ANN)

Input: Labeled dataset , unlabeled dataset , and labels ▹ Scalar sequence, Equation (8)

Output: Activity labels for the ▹ HAR
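Algorithm 3 maps vectorized feature rows to activity labels with an artificial neural network. The sketch below shows only the forward pass of a generic one-hidden-layer network (ReLU hidden layer, softmax output, argmax label) in plain Python. It does not reproduce the SemDeep-ANN architecture or its training. The weights are hand-set and untrained, the layer sizes are hypothetical, and the two labels are borrowed from the activity table purely for illustration.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def dense(x: Sequence[float], W: Matrix, b: Sequence[float]) -> List[float]:
    """Fully connected layer: y[j] = sum_i x[i] * W[i][j] + b[j]."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def softmax(v: Sequence[float]) -> List[float]:
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, W1, b1, W2, b2, labels) -> Tuple[str, List[float]]:
    """Forward pass: ReLU hidden layer, softmax output, argmax over class probabilities."""
    h = relu(dense(x, W1, b1))
    p = softmax(dense(h, W2, b2))
    best = max(range(len(p)), key=p.__getitem__)
    return labels[best], p


# Hand-set, untrained weights over the columns [Kettle, Pressure Sofa, TV, Tap].
W1 = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]  # 4 inputs -> 2 hidden units
b1 = [0.0, 0.0]
W2 = [[1.0, -1.0], [-1.0, 1.0]]                          # 2 hidden units -> 2 classes
b2 = [0.0, 0.0]
labels = ["Prepare breakfast", "Watch TV"]

print(predict([1, 0, 0, 1], W1, b1, W2, b2, labels)[0])  # Prepare breakfast
print(predict([0, 1, 1, 0], W1, b1, W2, b2, labels)[0])  # Watch TV
```

In practice the weights would be learned from the labeled dataset by gradient descent on a cross-entropy loss. Only the inference step is shown here.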

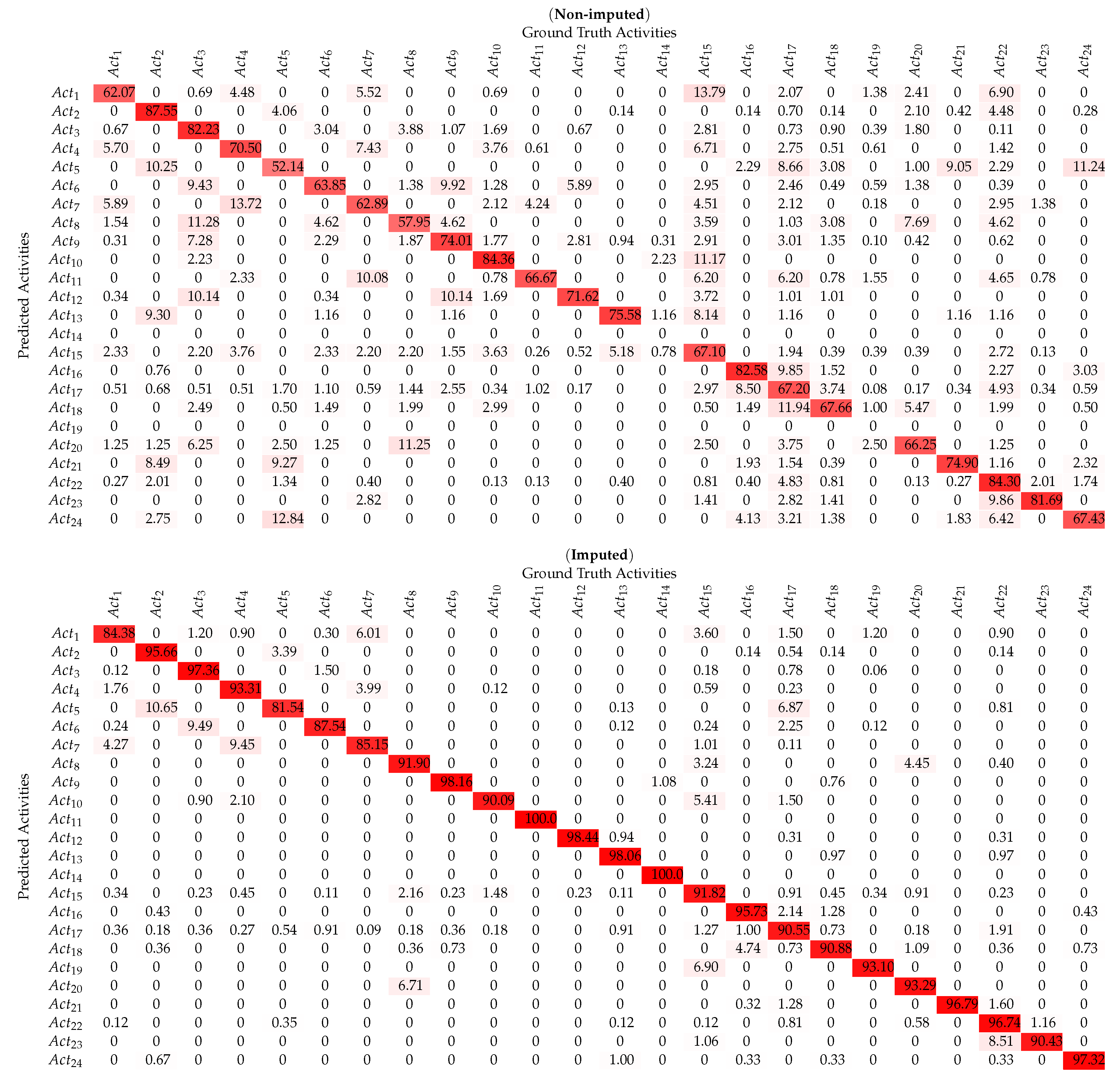

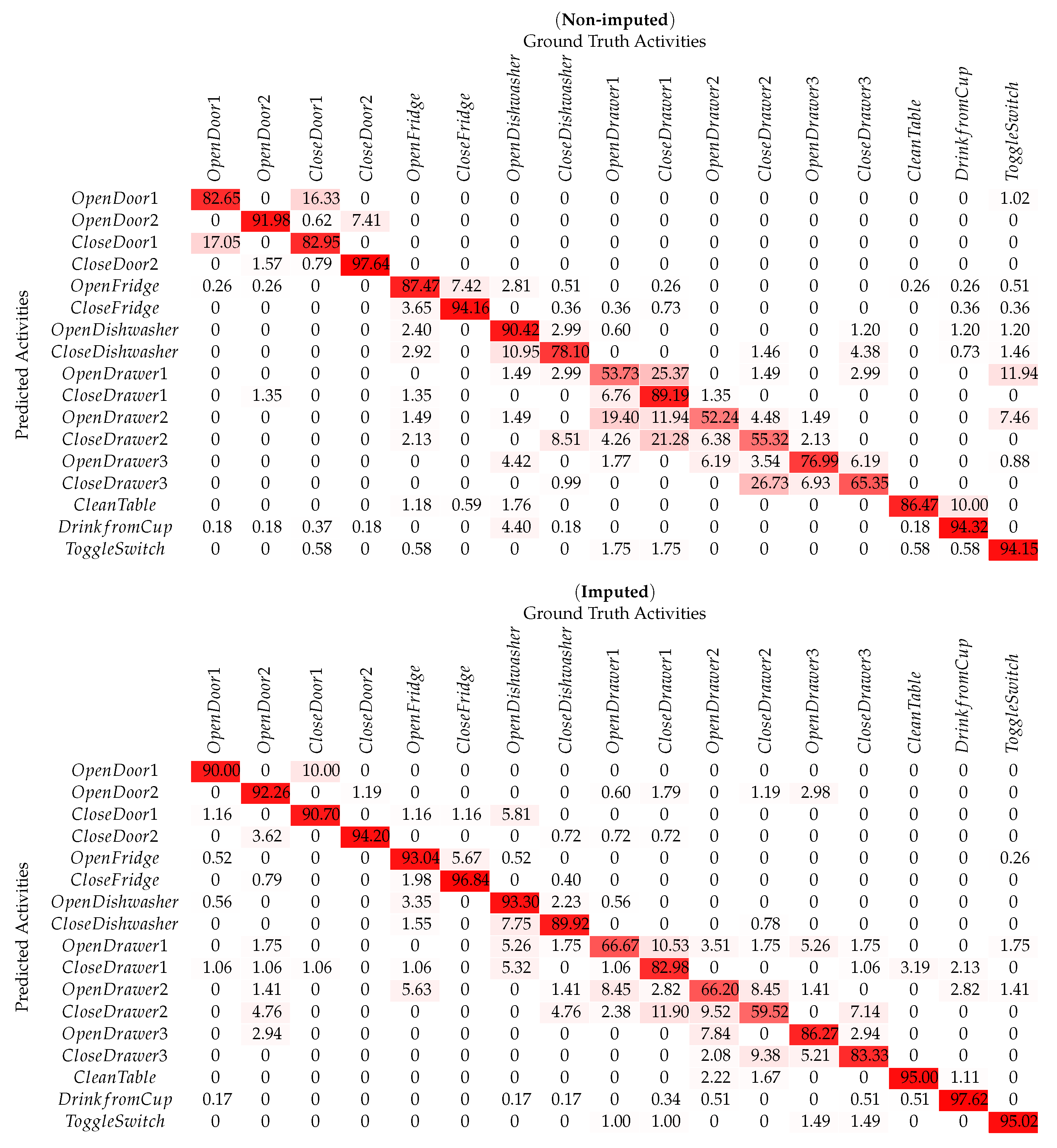

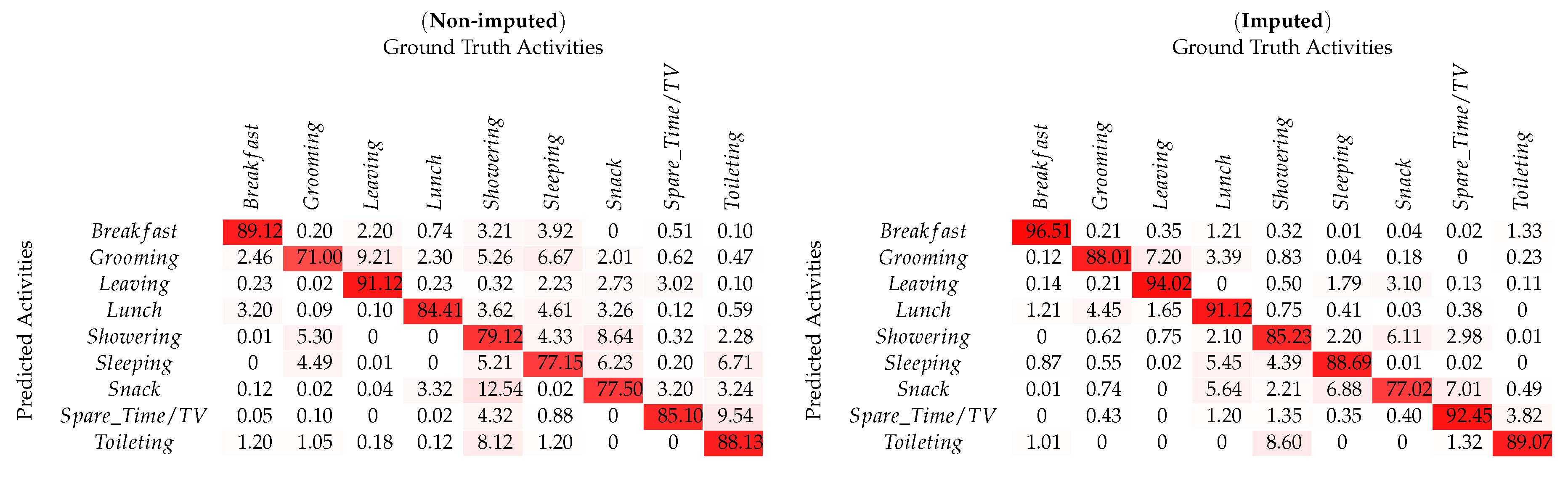

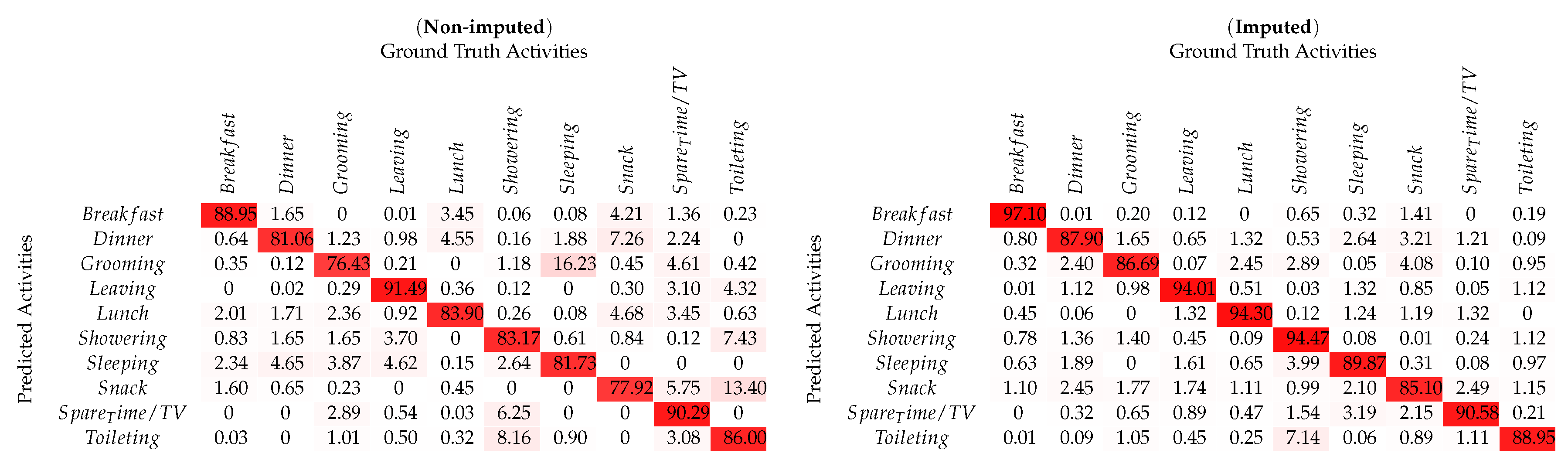

4. Results and Discussion

4.1. Data Description

4.2. Performance Metrics

4.3. Discussion

5. Conclusions and Future Work

Author Contributions

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADL | Activities of Daily Living |
| HAR | Human Activity Recognition |
| OWL | Web Ontology Language |
| SemImput | Semantic Imputation |
| SemImputOnt | Semantic Imputation Ontology |
| LOCF | Last Observation Carried Forward |
| NOCB | Next Observation Carried Backward |
| SemDeep ANN | Semantic Deep Artificial Neural Network |
| BLE | Bluetooth Low Energy |

References

1. Safyan, M.; Qayyum, Z.U.; Sarwar, S.; García-Castro, R.; Ahmed, M. Ontology-driven semantic unified modelling for concurrent activity recognition (OSCAR). Multimed. Tools Appl. 2019, 78, 2073–2104.

2. Yang, J.; Nguyen, M.N.; San, P.P.; Li, X.L.; Krishnaswamy, S. Deep convolutional neural networks on multichannel time series for human activity recognition. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

3. Kautz, T.; Groh, B.H.; Hannink, J.; Jensen, U.; Strubberg, H.; Eskofier, B.M. Activity recognition in beach volleyball using a Deep Convolutional Neural Network. Data Min. Knowl. Discov. 2017, 31, 1678–1705.

4. Krishnan, N.C.; Cook, D.J. Activity recognition on streaming sensor data. Pervasive Mob. Comput. 2014, 10, 138–154.

5. Chen, J.; Zhang, Q. Distinct Sampling on Streaming Data with Near-Duplicates. In Proceedings of the 37th ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems, Houston, TX, USA, 10–15 June 2018; ACM: Houston, TX, USA, 2018; pp. 369–382.

6. Chen, L.; Hoey, J.; Nugent, C.D.; Cook, D.J.; Yu, Z. Sensor-based activity recognition. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2012, 42, 790–808.

7. Farhangfar, A.; Kurgan, L.A.; Pedrycz, W. A novel framework for imputation of missing values in databases. IEEE Trans. Syst. Man Cybern. Syst. Hum. 2007, 37, 692–709.

8. Farhangfar, A.; Kurgan, L.; Dy, J. Impact of imputation of missing values on classification error for discrete data. Pattern Recognit. 2008, 41, 3692–3705.

9. Ni, Q.; Patterson, T.; Cleland, I.; Nugent, C. Dynamic detection of window starting positions and its implementation within an activity recognition framework. J. Biomed. Inform. 2016, 62, 171–180.

10. Chernbumroong, S.; Cang, S.; Yu, H. A practical multi-sensor activity recognition system for home-based care. Decis. Support Syst. 2014, 66, 61–70.

11. Bae, I.H. An ontology-based approach to ADL recognition in smart homes. Future Gener. Comput. Syst. 2014, 33, 32–41.

12. Salguero, A.; Espinilla, M.; Delatorre, P.; Medina, J. Using ontologies for the online recognition of activities of daily living. Sensors 2018, 18, 1202.

13. Sarker, M.K.; Xie, N.; Doran, D.; Raymer, M.; Hitzler, P. Explaining Trained Neural Networks with Semantic Web Technologies: First Steps. arXiv 2017, arXiv:1710.04324.

14. Demri, S.; Fervari, R.; Mansutti, A. Axiomatising Logics with Separating Conjunction and Modalities. In Proceedings of the European Conference on Logics in Artificial Intelligence, Rende, Italy, 7–11 May 2019; pp. 692–708.

15. Meditskos, G.; Dasiopoulou, S.; Kompatsiaris, I. MetaQ: A knowledge-driven framework for context-aware activity recognition combining SPARQL and OWL 2 activity patterns. Pervasive Mob. Comput. 2016, 25, 104–124.

16. Amador-Domínguez, E.; Hohenecker, P.; Lukasiewicz, T.; Manrique, D.; Serrano, E. An Ontology-Based Deep Learning Approach for Knowledge Graph Completion with Fresh Entities. In Proceedings of the International Symposium on Distributed Computing and Artificial Intelligence, Avila, Spain, 26–28 June 2019; pp. 125–133.

17. Socher, R.; Chen, D.; Manning, C.D.; Ng, A. Reasoning with neural tensor networks for knowledge base completion. In Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 926–934.

18. Zhu, Y.; Ferreira, J. Data integration to create large-scale spatially detailed synthetic populations. In Planning Support Systems and Smart Cities; Springer: Cham, Switzerland, 2015; pp. 121–141.

19. UCAmI Cup 2018. Available online: http://mamilab.esi.uclm.es/ucami2018/UCAmICup.html (accessed on 11 March 2020).

20. Opportunity Dataset. Available online: http://www.opportunity-project.eu/challengeDownload.html (accessed on 11 March 2020).

21. ADLs Recognition Using Binary Sensors Dataset. Available online: https://archive.ics.uci.edu/ml/datasets/Activities+of+Daily+Living+%28ADLs%29+Recognition+Using+Binary+Sensors (accessed on 11 March 2020).

22. Razzaq, M.A.; Cleland, I.; Nugent, C.; Lee, S. Multimodal Sensor Data Fusion for Activity Recognition Using Filtered Classifier. Proceedings 2018, 2, 1262.

23. Ning, H.; Shi, F.; Zhu, T.; Li, Q.; Chen, L. A novel ontology consistent with acknowledged standards in smart homes. Comput. Netw. 2019, 148, 101–107.

24. Okeyo, G.; Chen, L.; Wang, H.; Sterritt, R. Dynamic sensor data segmentation for real-time knowledge-driven activity recognition. Pervasive Mob. Comput. 2014, 10, 155–172.

25. Razzaq, M.; Villalonga, C.; Lee, S.; Akhtar, U.; Ali, M.; Kim, E.S.; Khattak, A.; Seung, H.; Hur, T.; Bang, J.; et al. mlCAF: Multi-level cross-domain semantic context fusioning for behavior identification. Sensors 2017, 17, 2433.

26. Wan, J.; O’Grady, M.J.; O’Hare, G.M. Dynamic sensor event segmentation for real-time activity recognition in a smart home context. Pers. Ubiquitous Comput. 2015, 19, 287–301.

27. Triboan, D.; Chen, L.; Chen, F.; Wang, Z. A semantics-based approach to sensor data segmentation in real-time Activity Recognition. Future Gener. Comput. Syst. 2019, 93, 224–236.

28. Chen, R.; Tong, Y. A two-stage method for solving multi-resident activity recognition in smart environments. Entropy 2014, 16, 2184–2203.

29. Zhou, J.; Huang, Z. Recover missing sensor data with iterative imputing network. In Proceedings of the Workshops at the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

30. Liu, J.; Li, Y.; Tian, X.; Sangaiah, A.K.; Wang, J. Towards Semantic Sensor Data: An Ontology Approach. Sensors 2019, 19, 1193.

31. Yang, A.C.; Hsu, H.H.; Lu, M.D. Imputing missing values in microarray data with ontology information. In Proceedings of the 2010 IEEE International Conference on Bioinformatics and Biomedicine Workshops (BIBMW), Hong Kong, China, 18 December 2010; pp. 535–540.

32. Song, S.; Zhang, A.; Chen, L.; Wang, J. Enriching data imputation with extensive similarity neighbors. Proc. VLDB Endow. 2015, 8, 1286–1297.

33. Stuckenschmidt, H. A semantic similarity measure for ontology-based information. In Proceedings of the International Conference on Flexible Query Answering Systems, Roskilde, Denmark, 26–28 October 2009; pp. 406–417.

34. Nweke, H.F.; Teh, Y.W.; Al-Garadi, M.A.; Alo, U.R. Deep Learning Algorithms for Human Activity Recognition using Mobile and Wearable Sensor Networks: State of the Art and Research Challenges. Expert Syst. Appl. 2018, 105, 233–261.

35. Cerda, P.; Varoquaux, G.; Kégl, B. Similarity encoding for learning with dirty categorical variables. Mach. Learn. 2018, 107, 1477–1494.

36. Moya Rueda, F.; Grzeszick, R.; Fink, G.; Feldhorst, S.; ten Hompel, M. Convolutional neural networks for human activity recognition using body-worn sensors. Informatics 2018, 5, 26.

37. Li, F.; Shirahama, K.; Nisar, M.; Köping, L.; Grzegorzek, M. Comparison of feature learning methods for human activity recognition using wearable sensors. Sensors 2018, 18, 679.

38. Peng, L.; Chen, L.; Ye, Z.; Zhang, Y. AROMA: A Deep Multi-Task Learning Based Simple and Complex Human Activity Recognition Method Using Wearable Sensors. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 74.

39. Salguero, A.G.; Delatorre, P.; Medina, J.; Espinilla, M.; Tomeu, A.J. Ontology-Based Framework for the Automatic Recognition of Activities of Daily Living Using Class Expression Learning Techniques. Sci. Program. 2019, 2019.

40. Ordóñez, F.; de Toledo, P.; Sanchis, A. Activity recognition using hybrid generative/discriminative models on home environments using binary sensors. Sensors 2013, 13, 5460–5477.

41. Salomón, S.; Tîrnăucă, C. Human Activity Recognition through Weighted Finite Automata. Proceedings 2018, 2, 1263.

| Type | ID | Activity Name | Location | Activity-Dependent Sensor Objects |
|---|---|---|---|---|
| Static | Act01 | Take medication | Kitchen | Water bottle, MedicationBox |
| Dynamic | Act02 | Prepare breakfast | Kitchen, Dining room | Motion Sensor Bedroom, Sensor Kitchen Movement, Refrigerator, Kettle, Microwave, Tap, Kitchen Faucet |
| Dynamic | Act03 | Prepare lunch | Kitchen, Dining room | Motion Sensor Bedroom, Sensor Kitchen Movement, Refrigerator, Pantry, Cupboard Cups, Cutlery, Pots, Microwave |
| Dynamic | Act04 | Prepare dinner | Kitchen, Dining room | Motion Sensor Bedroom, Sensor Kitchen Movement, Refrigerator, Pantry, Dish, Microwave |
| Dynamic | Act05 | Breakfast | Kitchen, Dining room | Motion Sensor Bedroom, Sensor Kitchen Movement, Pots, Dishwasher, Tap, Kitchen Faucet |
| Dynamic | Act06 | Lunch | Kitchen, Dining room | Motion Sensor Bedroom, Sensor Kitchen Movement, Pots, Dishwasher, Tap, Kitchen Faucet |
| Dynamic | Act07 | Dinner | Kitchen, Dining room | Motion Sensor Bedroom, Sensor Kitchen Movement, Pots, Dishwasher, Tap, Kitchen Faucet |
| Dynamic | Act08 | Eat a snack | Kitchen, Living room | Motion Sensor Bedroom, Sensor Kitchen Movement, Fruit Platter, Pots, Dishwasher, Tap, Kitchen Faucet |
| Static | Act09 | Watch TV | Living room | RemoteControl, Motion Sensor Sofa, Pressure Sofa, TV |
| Dynamic | Act10 | Enter the SmartLab | Entrance | Door |
| Static | Act11 | Play a video game | Living room | Motion Sensor Sofa, Motion Sensor Bedroom, Pressure Sofa, Remote XBOX |
| Static | Act12 | Relax on the sofa | Living room | Motion Sensor Sofa, Motion Sensor Bedroom, Pressure Sofa |
| Dynamic | Act13 | Leave the SmartLab | Entrance | Door |
| Dynamic | Act14 | Visit in the SmartLab | Entrance | Door |
| Dynamic | Act15 | Put waste in the bin | Kitchen, Entrance | Trash |
| Dynamic | Act16 | Wash hands | Bathroom | Motion Sensor Bathroom, Tap, Tank |
| Dynamic | Act17 | Brush teeth | Bathroom | Motion Sensor Bathroom, Tap, Tank |
| Static | Act18 | Use the toilet | Bathroom | Motion Sensor Bathroom, Top WC |
| Static | Act19 | Wash dishes | Kitchen | Dish, Dishwasher |
| Dynamic | Act20 | Put washing into the washing machine | Bedroom, Kitchen | Laundry Basket, Washing machine, Closet |
| Static | Act21 | Work at the table | Workplace | |
| Dynamic | Act22 | Dressing | Bedroom | Wardrobe Clothes, Pyjama drawer, Laundry Basket, Closet |
| Static | Act23 | Go to the bed | Bedroom | Motion Sensor Bedroom, Bed |
| Static | Act24 | Wake up | Bedroom | Motion Sensor Bedroom, Bed |

| Method | Dataset | Number of Activities | Mean Recognition Accuracy, Non-Imputed (%) | Mean Recognition Accuracy, Imputed (%) | Standard Deviation |
|---|---|---|---|---|---|
| Proposed SemImput | Opportunity [20] | 17 | 86.57 | 91.71 | ±2.57 |
| | UCI-ADL OrdóñezA [40] | 9 | 82.27 | 89.20 | ±3.47 |
| | UCI-ADL OrdóñezB [40] | 10 | 84.0 | 90.34 | ±3.17 |
| | UCamI [19] | 24 | 71.03 | 92.62 | ±10.80 |

| State-of-the-Art Method | Dataset | Number of Activities | Mean Recognition Accuracy (%) | SemImput Gain (percentage points) |
|---|---|---|---|---|
| Razzaq et al. [22] | UCamI [19] | 24 | 47.01 | +45.61 |
| Salomón et al. [41] | UCamI [19] | 24 | 90.65 | +1.97 |
| Li et al. [37] | Opportunity [20] | 17 | 92.21 | −0.50 |
| Salguero et al. [12,39] | UCI-ADL OrdóñezA [40] | 9 | 95.78 | −6.58 |
| | UCI-ADL OrdóñezB [40] | 10 | 86.51 | +3.83 |
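The SemImput gain column is not defined explicitly. Checking it against the two results tables shows that each gain equals SemImput's mean accuracy on the imputed data for the same dataset minus the baseline's reported accuracy, expressed in percentage points. The short script below reproduces that arithmetic from the tabulated values. The dictionary keys are shorthand labels chosen for this sketch.

```python
# SemImput mean recognition accuracy (%) on imputed data, per dataset.
semimput = {"Opportunity": 91.71, "OrdóñezA": 89.20, "OrdóñezB": 90.34, "UCamI": 92.62}

# (method, dataset, reported mean recognition accuracy %) from the comparison table.
baselines = [
    ("Razzaq et al.", "UCamI", 47.01),
    ("Salomón et al.", "UCamI", 90.65),
    ("Li et al.", "Opportunity", 92.21),
    ("Salguero et al.", "OrdóñezA", 95.78),
    ("Salguero et al.", "OrdóñezB", 86.51),
]


def gain(dataset: str, baseline_acc: float) -> float:
    """Gain in percentage points: SemImput (imputed) accuracy minus the baseline accuracy."""
    return round(semimput[dataset] - baseline_acc, 2)


for method, dataset, acc in baselines:
    print(f"{method:16s} {dataset:12s} {gain(dataset, acc):+.2f}")
```

A negative gain (Li et al. on Opportunity, Salguero et al. on OrdóñezA) means the baseline outperformed SemImput on that dataset.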

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Razzaq, M.A.; Cleland, I.; Nugent, C.; Lee, S. SemImput: Bridging Semantic Imputation with Deep Learning for Complex Human Activity Recognition. Sensors 2020, 20, 2771. https://doi.org/10.3390/s20102771

Razzaq MA, Cleland I, Nugent C, Lee S. SemImput: Bridging Semantic Imputation with Deep Learning for Complex Human Activity Recognition. Sensors. 2020; 20(10):2771. https://doi.org/10.3390/s20102771

Chicago/Turabian Style: Razzaq, Muhammad Asif, Ian Cleland, Chris Nugent, and Sungyoung Lee. 2020. "SemImput: Bridging Semantic Imputation with Deep Learning for Complex Human Activity Recognition" Sensors 20, no. 10: 2771. https://doi.org/10.3390/s20102771

APA Style: Razzaq, M. A., Cleland, I., Nugent, C., & Lee, S. (2020). SemImput: Bridging Semantic Imputation with Deep Learning for Complex Human Activity Recognition. Sensors, 20(10), 2771. https://doi.org/10.3390/s20102771