1. Introduction

Accurate and reliable obstacle classification is an important task for the environment perception module of an autonomous vehicle system. Many kinds of participants share the traffic environment, and the motion properties of the surrounding participants directly affect the path planning of an autonomous vehicle [1]. Pedestrians are the most vulnerable traffic participants on the road, so a great deal of attention has been paid to pedestrian detection using exteroceptive sensors. Pedestrian detection is considered a particularly difficult problem because of the large variation in the appearance and pose of human beings [2].

Most existing pedestrian detection methods rely on a few popular sensors, such as cameras, radar, or laser scanners [3,4]. Each sensor has its own strengths and weaknesses. Cameras have been applied extensively to model the appearance of pedestrians intuitively, but they cannot easily provide accurate distance information and are susceptible to illumination changes. Radar can capture precise spatial and motion features of obstacles, but it cannot always detect static obstacles and has poor recognition capability. Compared with cameras and radar, laser scanners provide accurate measurements and are invariant to illumination. Thus, in one of the most promising sensor schemes for autonomous vehicles, a laser scanner serves as the primary sensor, while a camera or radar serves as the secondary sensor [5].

In terms of the number of scanning layers, laser scanners can be classified into three categories: 2D single-layer, 2.5D multi-layer, and 3D laser scanners with dozens of layers [6,7,8]. 2D laser scanners, e.g., the SICK LMS-111 (SICK AG, Waldkirch, Germany), provide a single-layer laser beam at a fixed pitch angle, and the sparse information in a single-layer point cloud is insufficient for obstacle recognition. 3D laser scanners, e.g., the Velodyne HDL-64E (Velodyne, San Francisco, CA, USA), use dozens of layers to cover a 360° horizontal field of view and generate dense point clouds for omnidirectional environment modelling. In recent years, 3D laser scanners have gained popularity in autonomous driving and intelligent vehicles, and they are usually mounted on the roof of the vehicle. However, the high price and external installation of 3D laser scanners limit their commercialization and popularization. Considering practicality, a 2.5D multi-layer laser scanner might be a better choice than the other types as the primary sensor for autonomous vehicles. Existing 2.5D multi-layer laser scanners usually have four or eight layers and are installed on the front bumper of the vehicle. Examples of 2.5D scanners include the IBEO LUX 4L and 8L (IBEO, Hamburg, Germany).

Numerous algorithms have been proposed for pedestrian detection using laser range data. Samuel et al. [7] built a pedestrian detection system based on point cloud information from four laser planes, using a particle filter to estimate the random movement dynamics of pedestrians. Carballo et al. [9] improved pedestrian detection accuracy by introducing two novel features, namely laser intensity variation and uniformity. Gate et al. [10] used appearance to estimate the true outlines of tracked targets and exploited both geometrical and dynamical criteria of the tracked targets for pedestrian detection. Leigh et al. [11] presented a pedestrian detection and tracking system using 2D laser scanners at leg height; their system integrated a joint leg tracker with local occupancy grid maps to achieve robust detection. Kim et al. [12] fully exploited the feature information in 2.5D laser scanner data and developed an RBFAK classifier to improve pedestrian detection performance and reduce computation time. Adiaviakoye et al. [13] introduced a method for detecting and tracking a crowd of pedestrians based on the accumulated distribution of consecutive laser frames; this method explored characteristics of pedestrian crowds, including velocity and trajectory. Lüy et al. [14] proposed a pedestrian detection algorithm based on a majority voting scheme using a single-layer laser scanner, in which the recognition confidence of each hypothesis was calculated over time until a high recognition confidence was achieved. Wang et al. [15] presented a framework for pedestrian detection from the current frame of a 3D point cloud, building a fixed-dimensional feature vector for each patch to solve a binary classification task; however, the precision and recall in their pedestrian detection tests were unsatisfactory. Lehtomäki et al. [16] used several geometry-based point cloud features, such as local descriptor histograms, spin images, general shape, and point distribution features, to improve pedestrian detection accuracy. Xiao et al. [17] proposed a simultaneous detection and tracking method for pedestrians using a 3D laser scanner, in which an energy function incorporating the shape and motion of the point cloud was built and the points belonging to pedestrians were assigned to continuous trajectories in space-time. The methods above mainly assume that individual pedestrians are entirely visible, or that the state of their legs can be tracked, in the classification stage. However, because partial occlusion is common, the complete contour of an individual pedestrian is often hard to obtain.

To improve pedestrian detection performance under partial occlusion, some researchers have fused laser and vision data. García et al. [18] exploited context information to enhance pedestrian detection using sensor fusion of a single-layer laser scanner and computer vision. Oliveira et al. [19] performed cooperative fusion of laser and vision for pedestrian detection based on the spatial relationship of parts-based classifiers via a Markov logic network. Premebida et al. [20] trained a deformable part detector using different configurations of image and laser point cloud data to make a joint decision on whether a detected target is a pedestrian. Notably, however, the joint calibration of a camera and a laser scanner is not cost-effective, and some laser points are not visible to the camera.

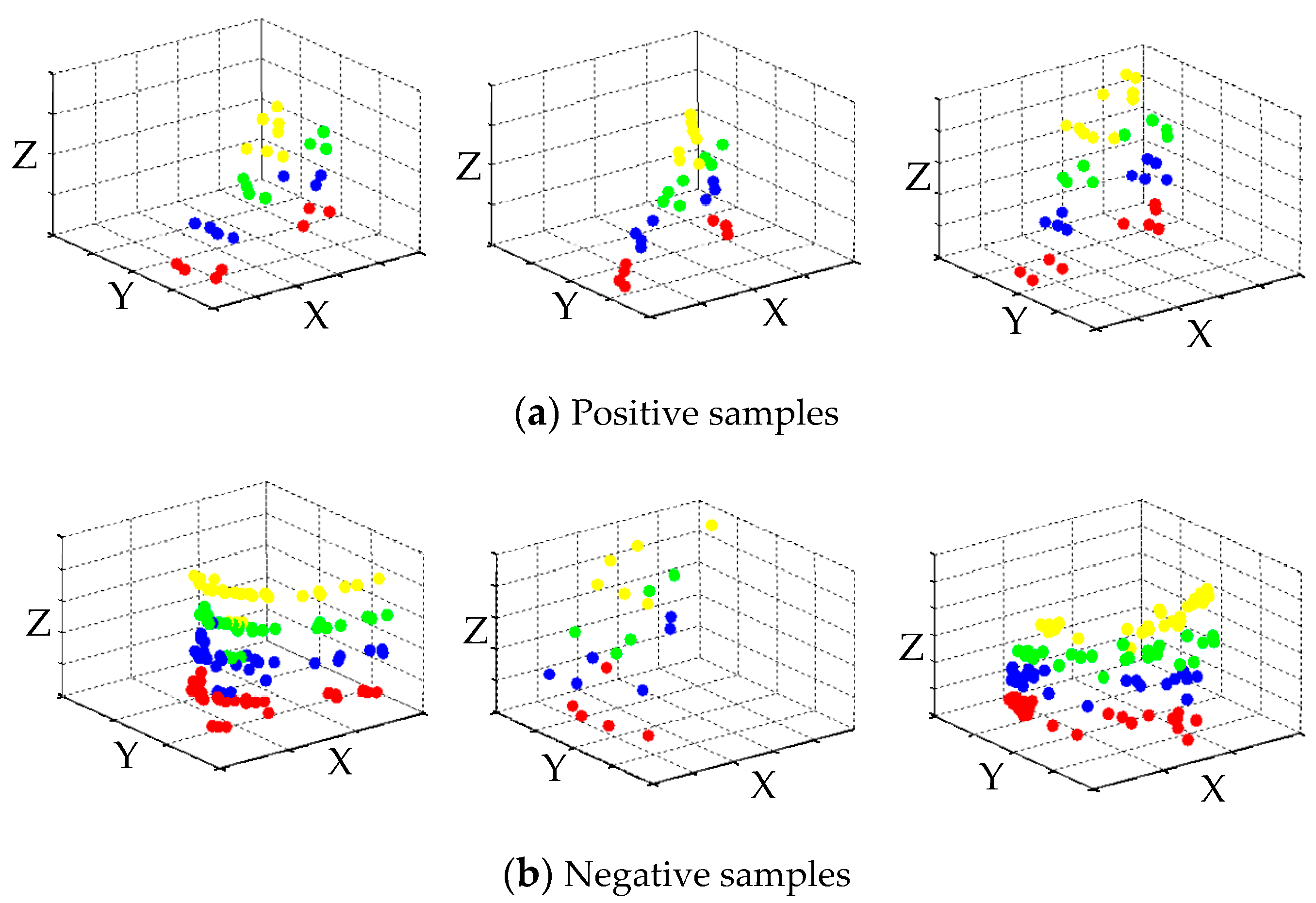

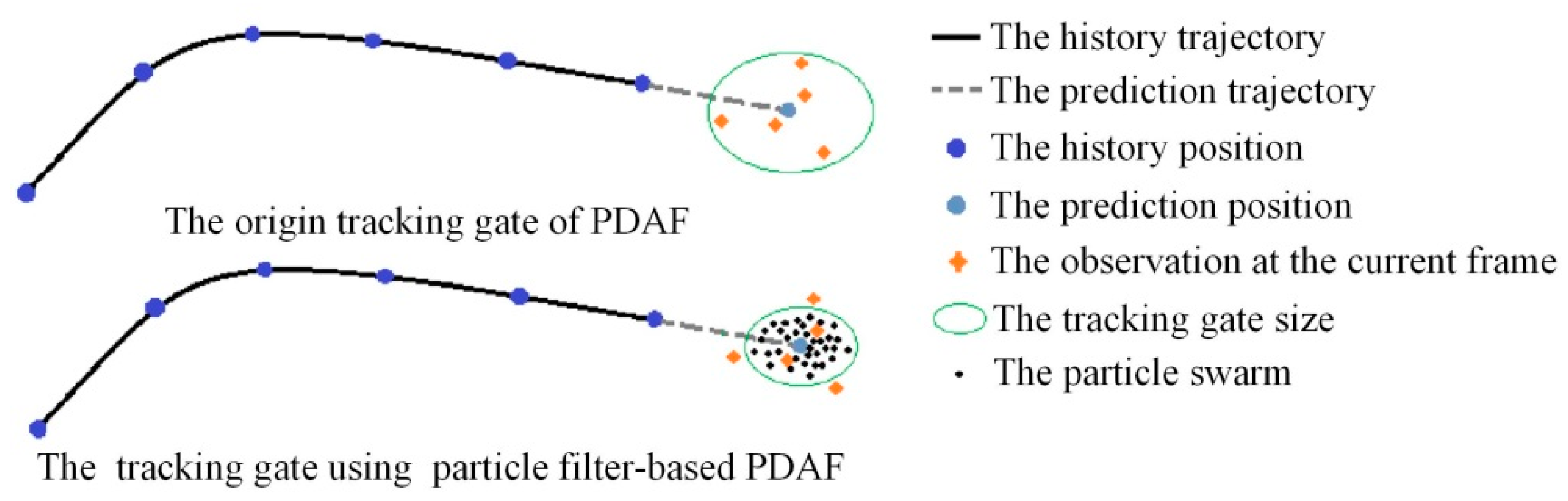

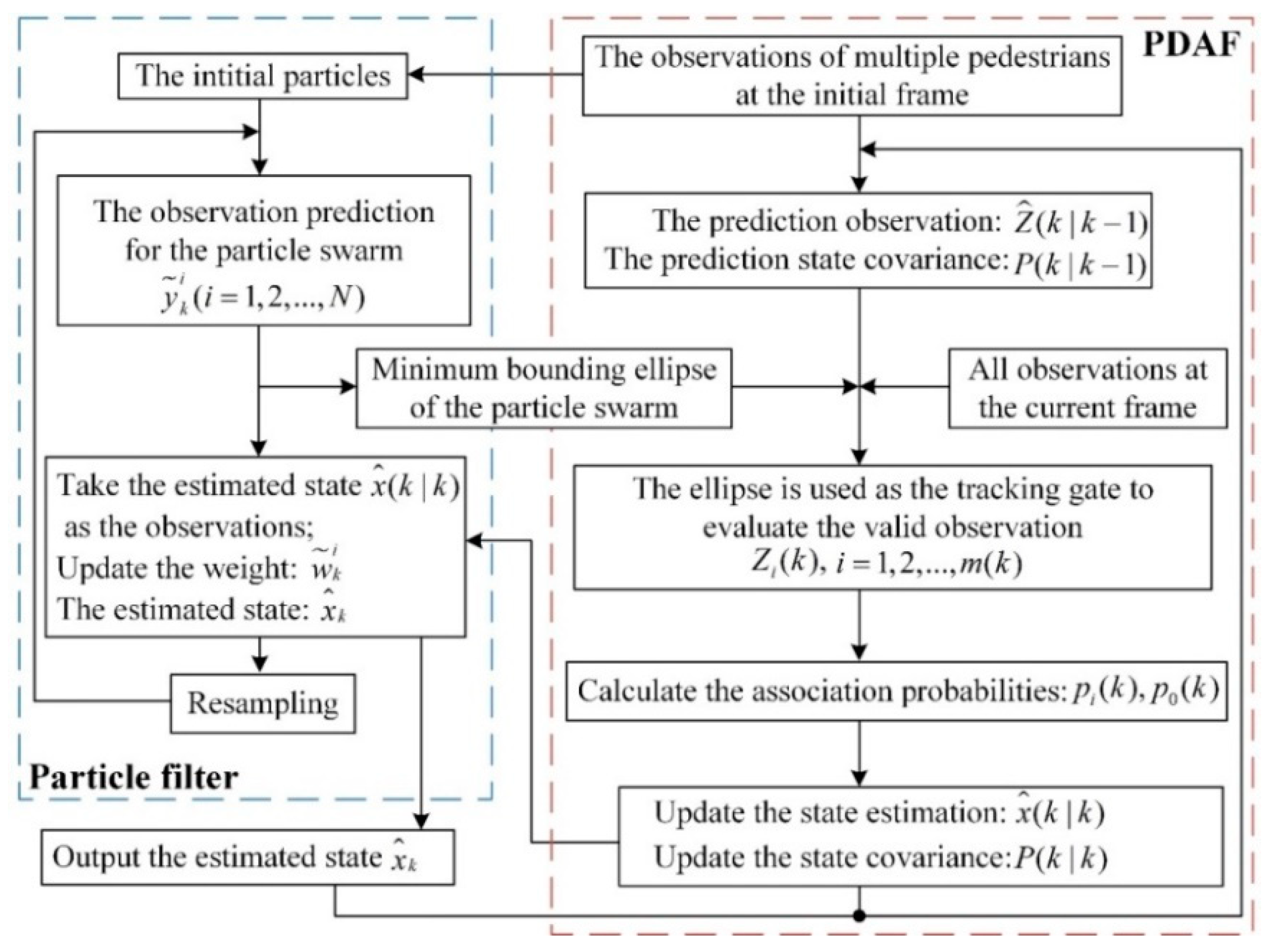

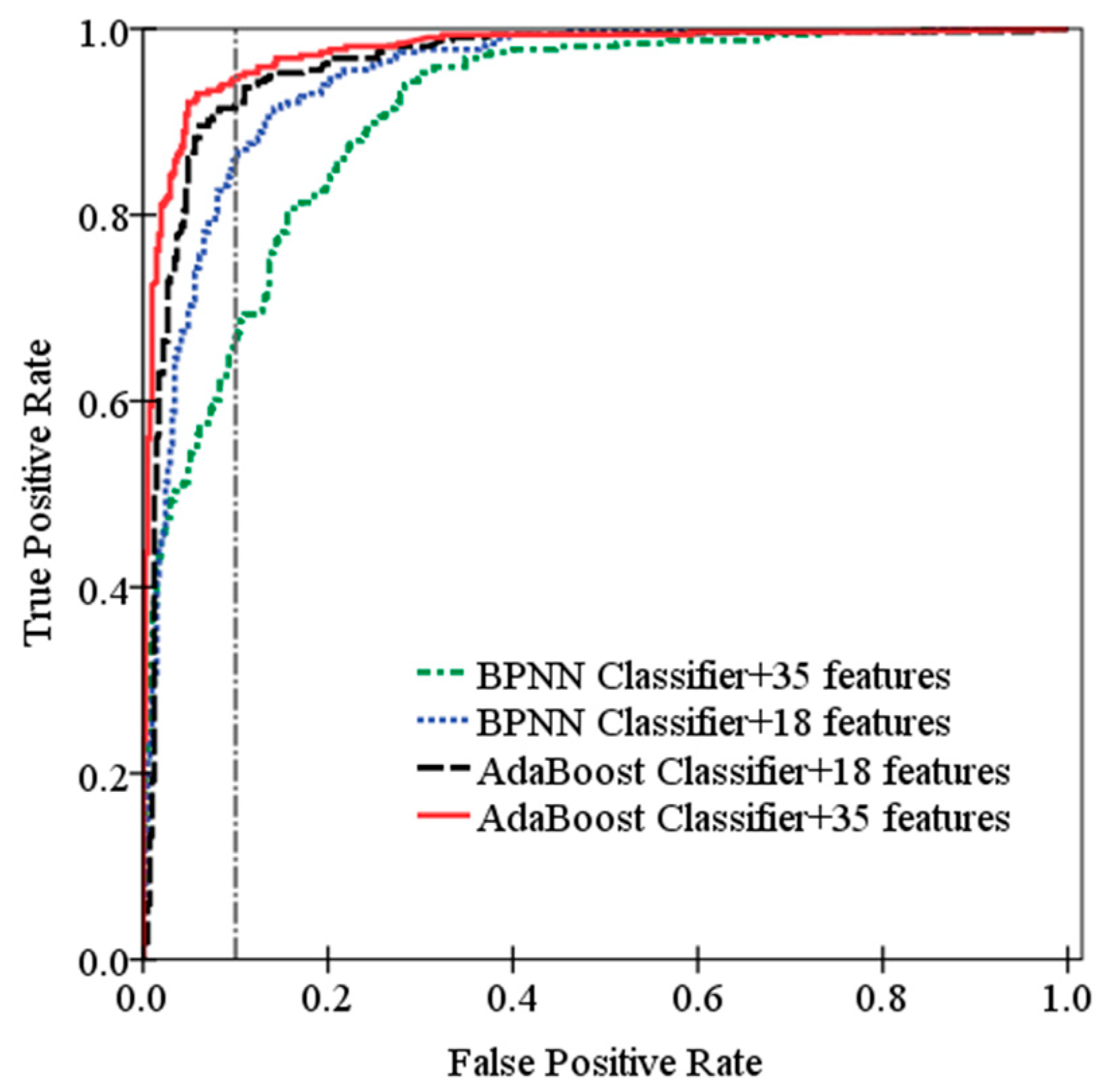

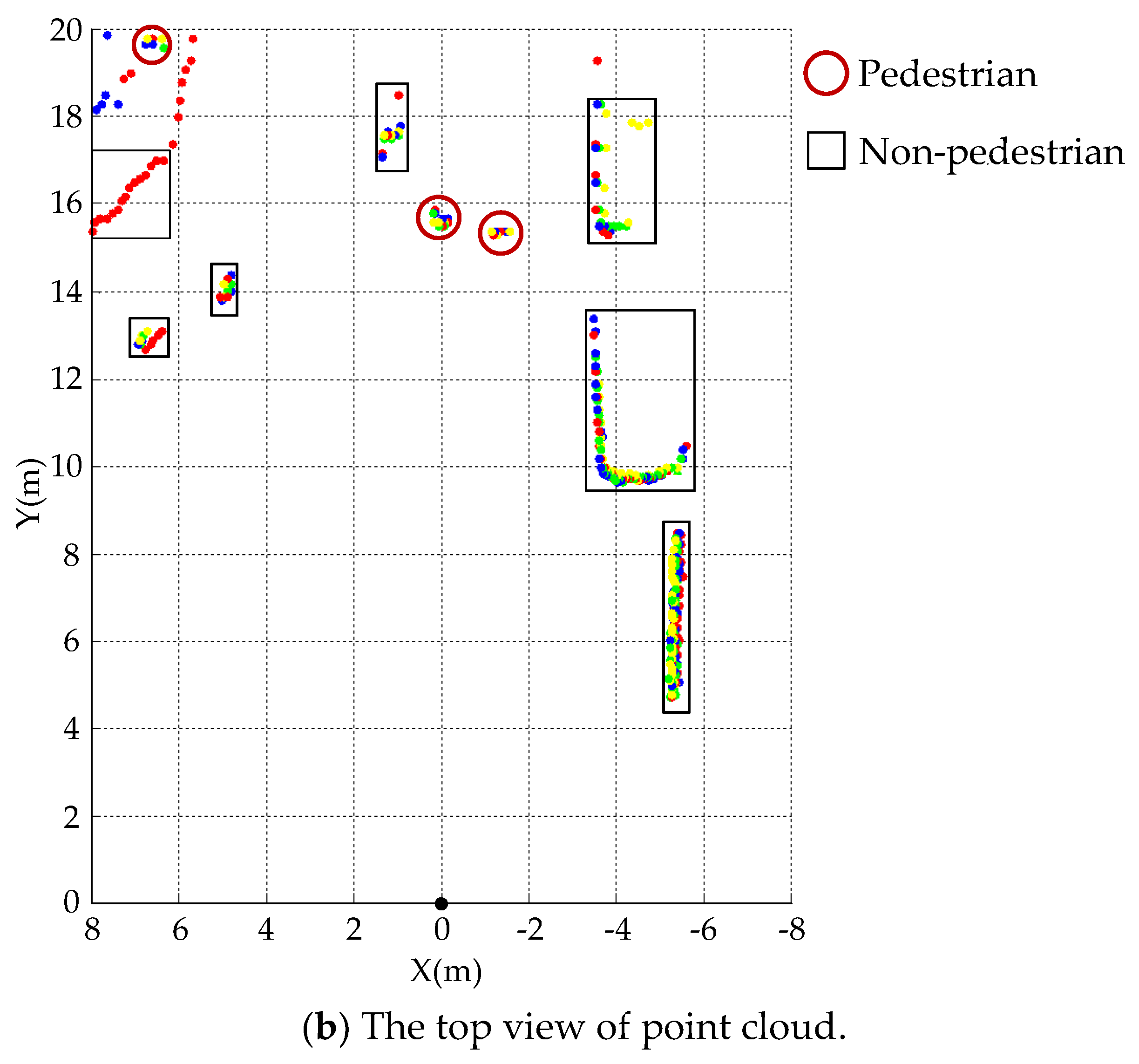

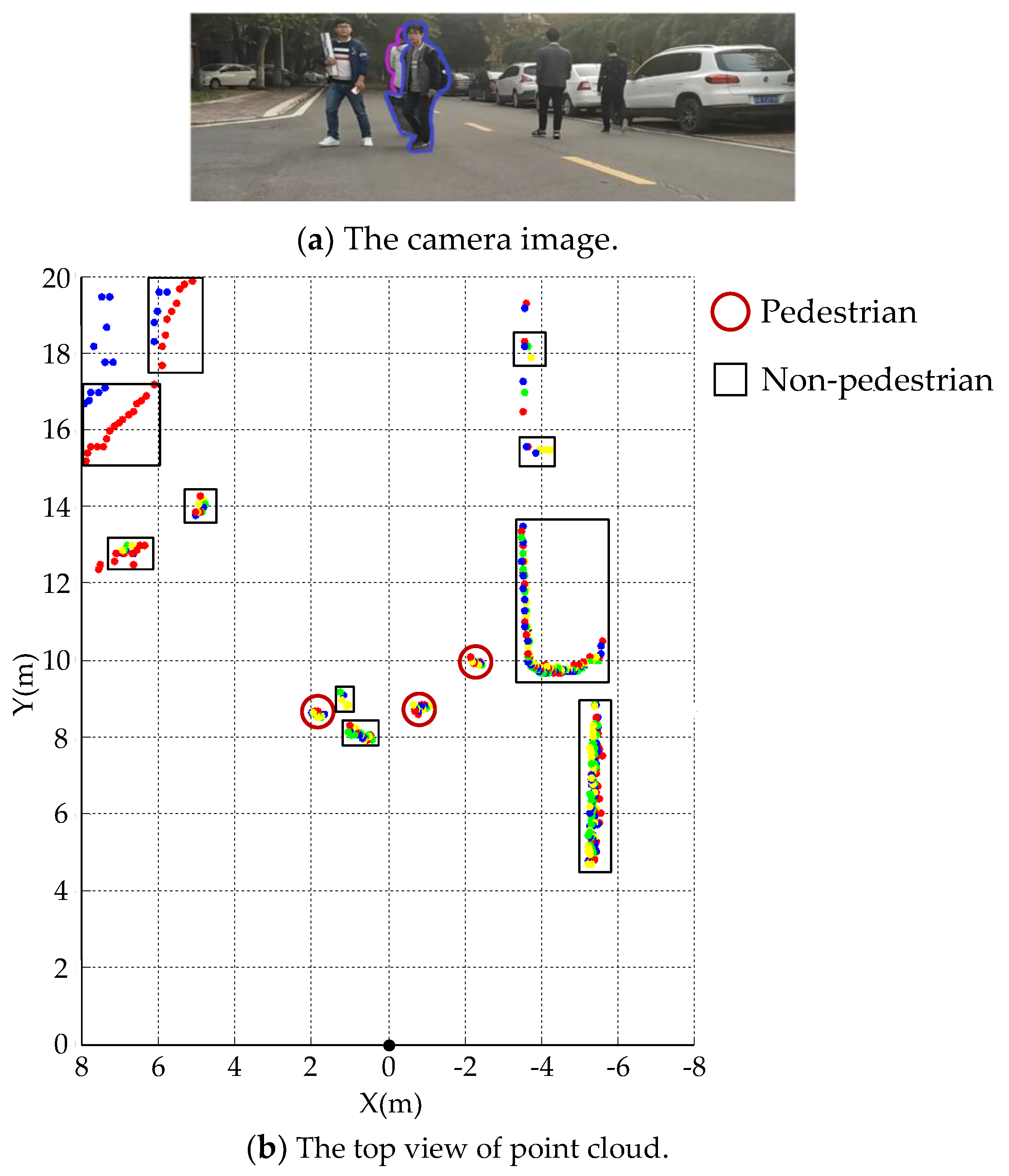

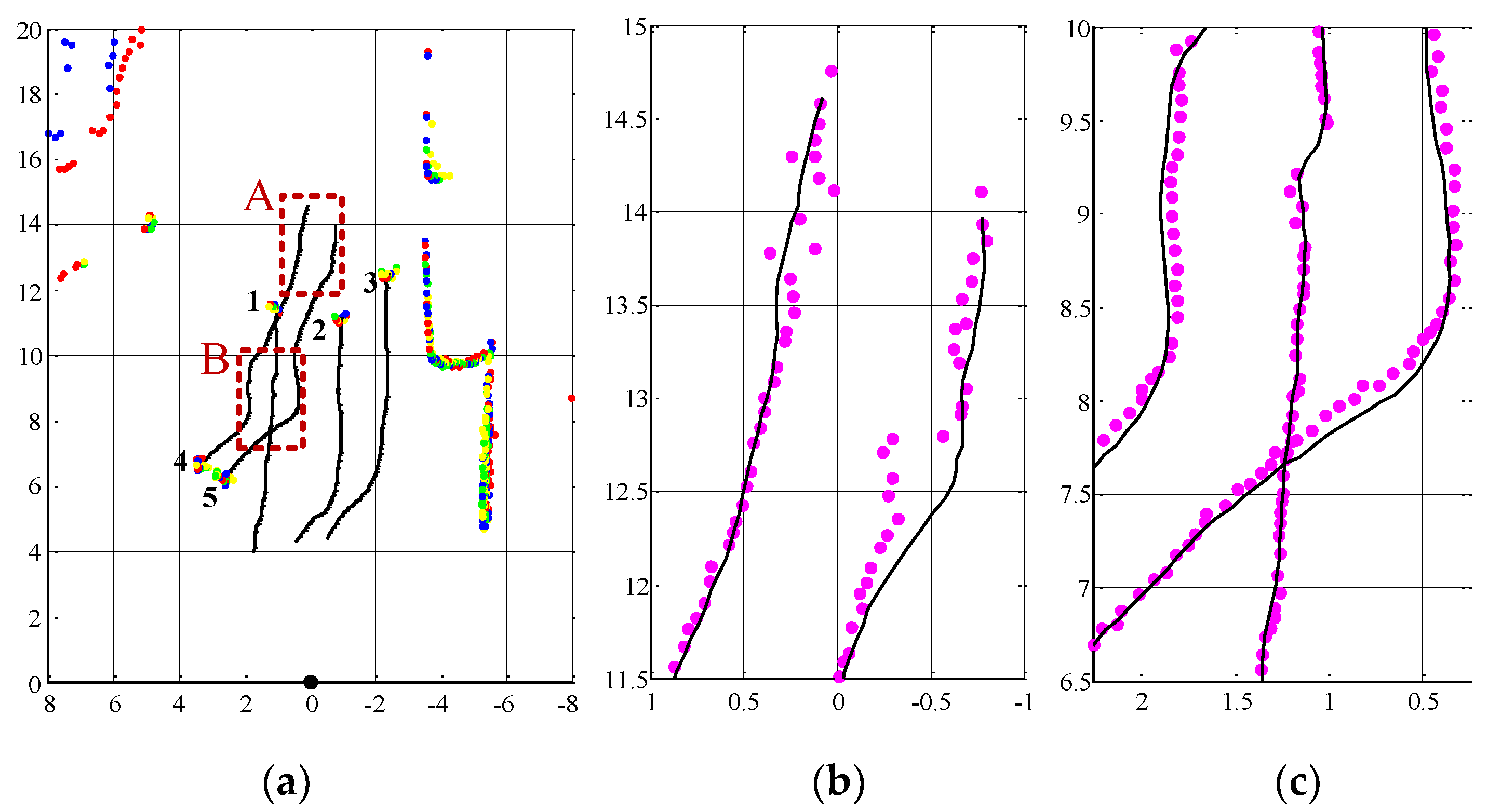

Motivated by the analysis of the existing works in related literatures, a novel method for pedestrian detection using 2.5D laser scanner is presented in this paper. The laser scanner sensor adopted in this study is a four-layer laser scanner, i.e., IBEO LUX 4L, which is extensively used in Advanced Driver Assistance Systems (ADAS) and autonomous vehicles. The architecture of the proposed pedestrian detection method encompasses four components: segmentation, feature extraction, segment classification, and track classification, as shown in

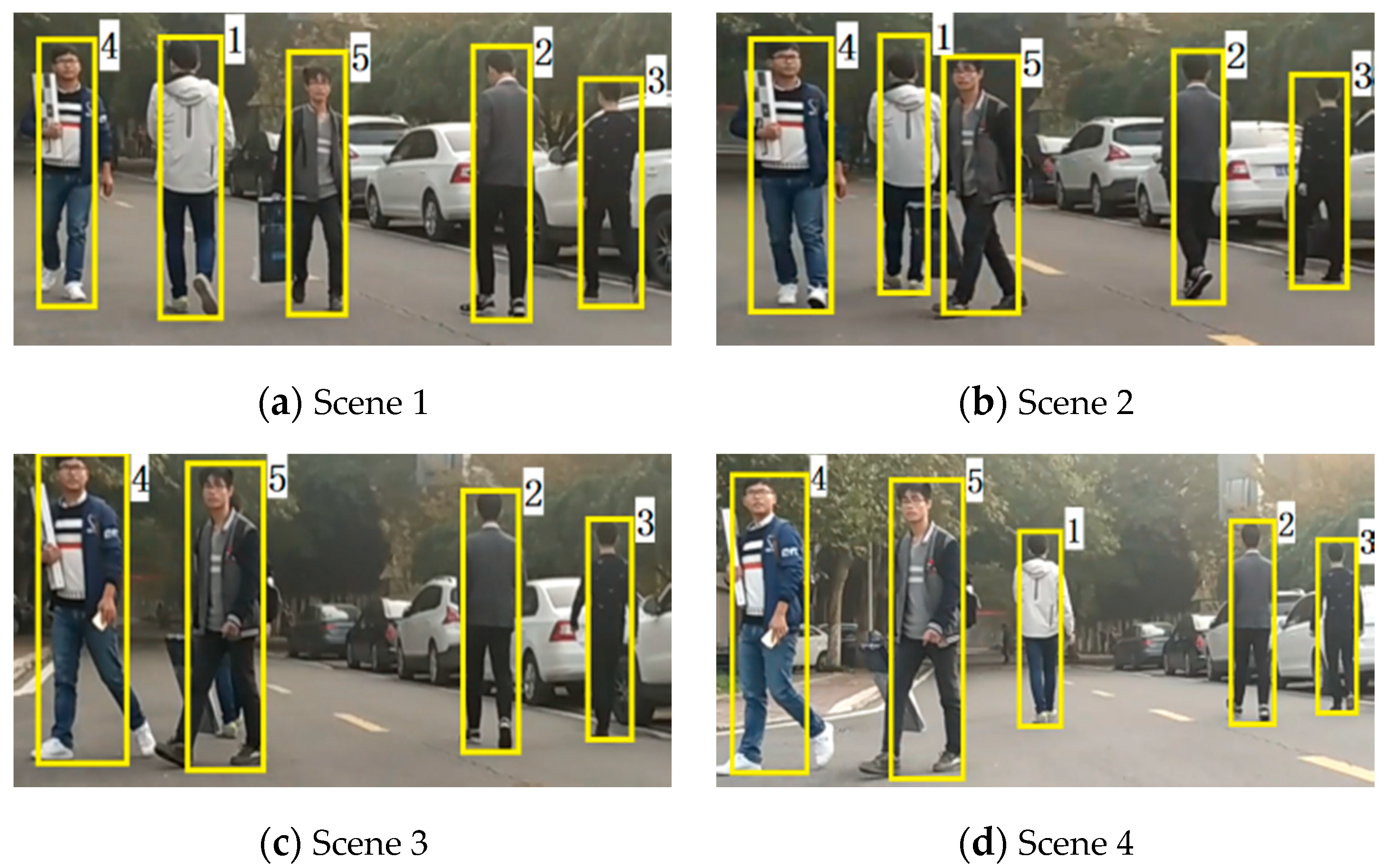

Figure 1. The proposed method differs from other pedestrian detection methods in two aspects. First, each layer of the raw data stream is employed to find the specific properties corresponding to objects of interest, and some new features are proposed. Most features are simple single-valued features, rather than high-level complex features. Second, in order to improve the detection accuracy when the pedestrians cross with each other and the partial occlusion exists, multi-pedestrian detection based on tracking is proposed to compensate the segment classification result.
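To make the data flow of Figure 1 concrete, the following Python sketch chains the four stages on one synthetic scan. It is an illustration under stated assumptions, not the method of this paper: the `Point` layout, the bearing-sorted jump-distance segmentation with a hypothetical 0.3 m gap, the three single-valued features (segment width, point count, number of layers hit), the hand-set thresholds standing in for a trained segment classifier, and a plain majority vote standing in for track classification are all hypothetical. Data association of segments across frames into tracks is omitted; `classify_track` simply assumes the per-frame labels of one track are already available.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Point:
    x: float    # forward distance in the vehicle frame (m); assumed layout
    y: float    # lateral distance in the vehicle frame (m)
    layer: int  # scan layer index, e.g. 0..3 for a four-layer scanner


def segment(points, gap=0.3):
    """Segmentation: sort points by bearing and start a new segment whenever two
    consecutive points are more than `gap` metres apart (hypothetical value)."""
    ordered = sorted(points, key=lambda p: math.atan2(p.y, p.x))
    segments = []
    for p in ordered:
        if segments:
            last = segments[-1][-1]
            if math.hypot(p.x - last.x, p.y - last.y) <= gap:
                segments[-1].append(p)
                continue
        segments.append([p])
    return segments


def extract_features(seg):
    """Feature extraction: a few simple single-valued features per segment."""
    xs = [p.x for p in seg]
    ys = [p.y for p in seg]
    return {
        "width": math.hypot(max(xs) - min(xs), max(ys) - min(ys)),  # bounding-box diagonal
        "num_points": len(seg),
        "num_layers": len({p.layer for p in seg}),  # how many scan layers hit the object
    }


def classify_segment(f, min_w=0.1, max_w=0.8, min_layers=2):
    """Segment classification: hand-set thresholds standing in for a trained
    classifier (all threshold values are hypothetical)."""
    return min_w <= f["width"] <= max_w and f["num_layers"] >= min_layers


def classify_track(segment_labels):
    """Track classification: majority vote over the per-frame labels of one track,
    so minority misclassifications (e.g. under occlusion) are outvoted.
    Ties count as non-pedestrian."""
    votes = Counter(segment_labels)
    return votes[True] > votes[False]


def detect(points):
    """Run the per-scan stages and return (segment, is_pedestrian) pairs."""
    return [(seg, classify_segment(extract_features(seg))) for seg in segment(points)]


# Usage: a pedestrian-sized cluster 5 m ahead and a 3 m wall segment further away.
ped = [Point(5.0, y, l) for y, l in zip([-0.2, -0.1, 0.0, 0.1, 0.2], [0, 1, 2, 0, 1])]
wall = [Point(10.0, 3.0 + 0.2 * i, i % 2) for i in range(16)]
print([label for _, label in detect(ped + wall)])  # [True, False]
print(classify_track([True, False, True, True]))   # True
```

Here the wall is rejected by the width feature even though two layers hit it, while the narrow cluster seen by three layers is accepted; the track-level vote then overrides a single occluded frame labelled non-pedestrian.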

The rest of this paper is organized as follows. The details of the proposed method are presented in Section 2. Experimental results are analyzed and discussed in Section 3. Section 4 concludes the paper.