Guided, Fusion-Based, Large Depth-of-field 3D Imaging Using a Focal Stack

Abstract

1. Introduction

2. Related Work

2.1. DOF Extension of the Structured Light 3D Imaging System

2.2. DOF Extension of the Camera

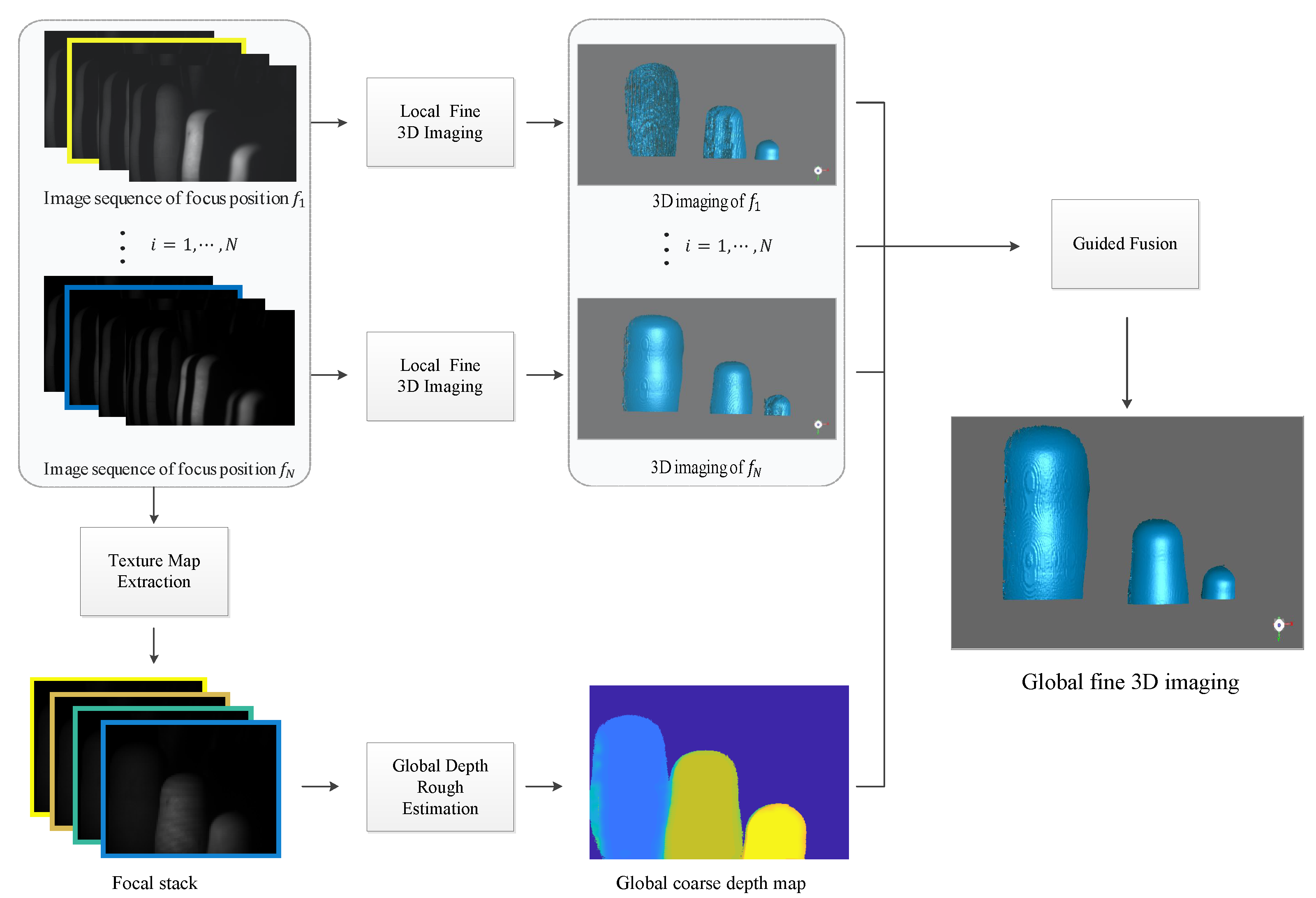

3. Computational Framework for Large DOF 3D Imaging

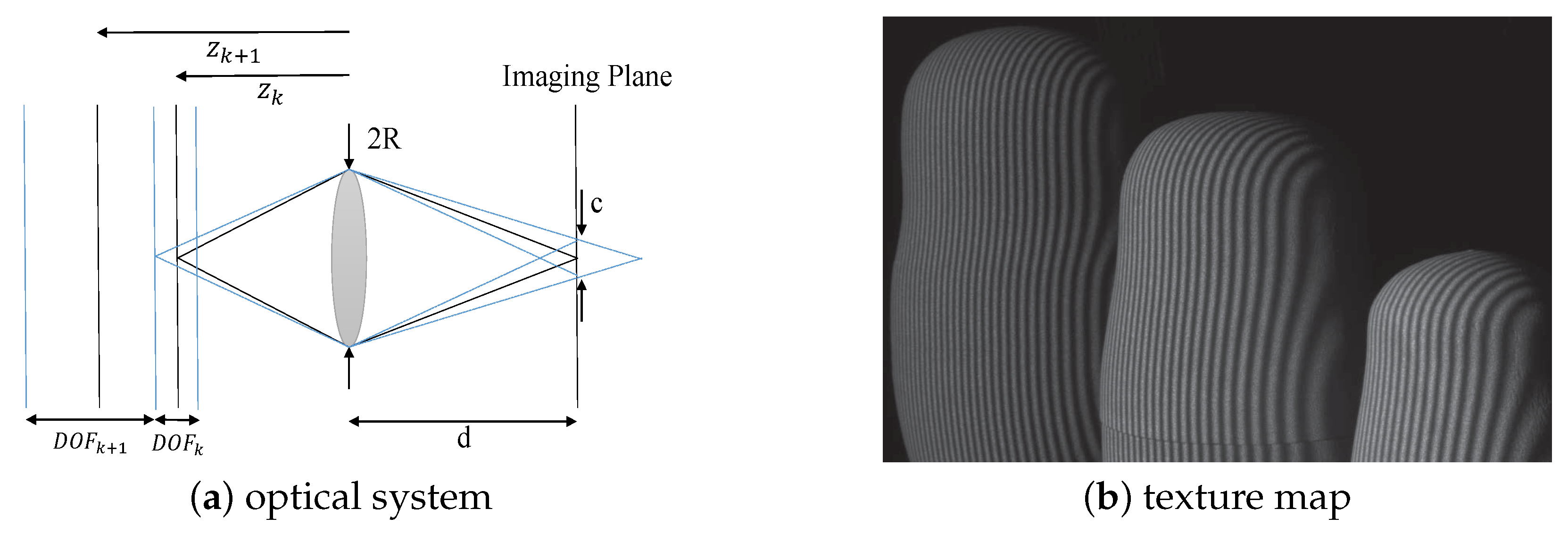

3.1. Local Depth Fine Estimation and Texture Map Extraction

3.2. Global Depth Rough Estimation

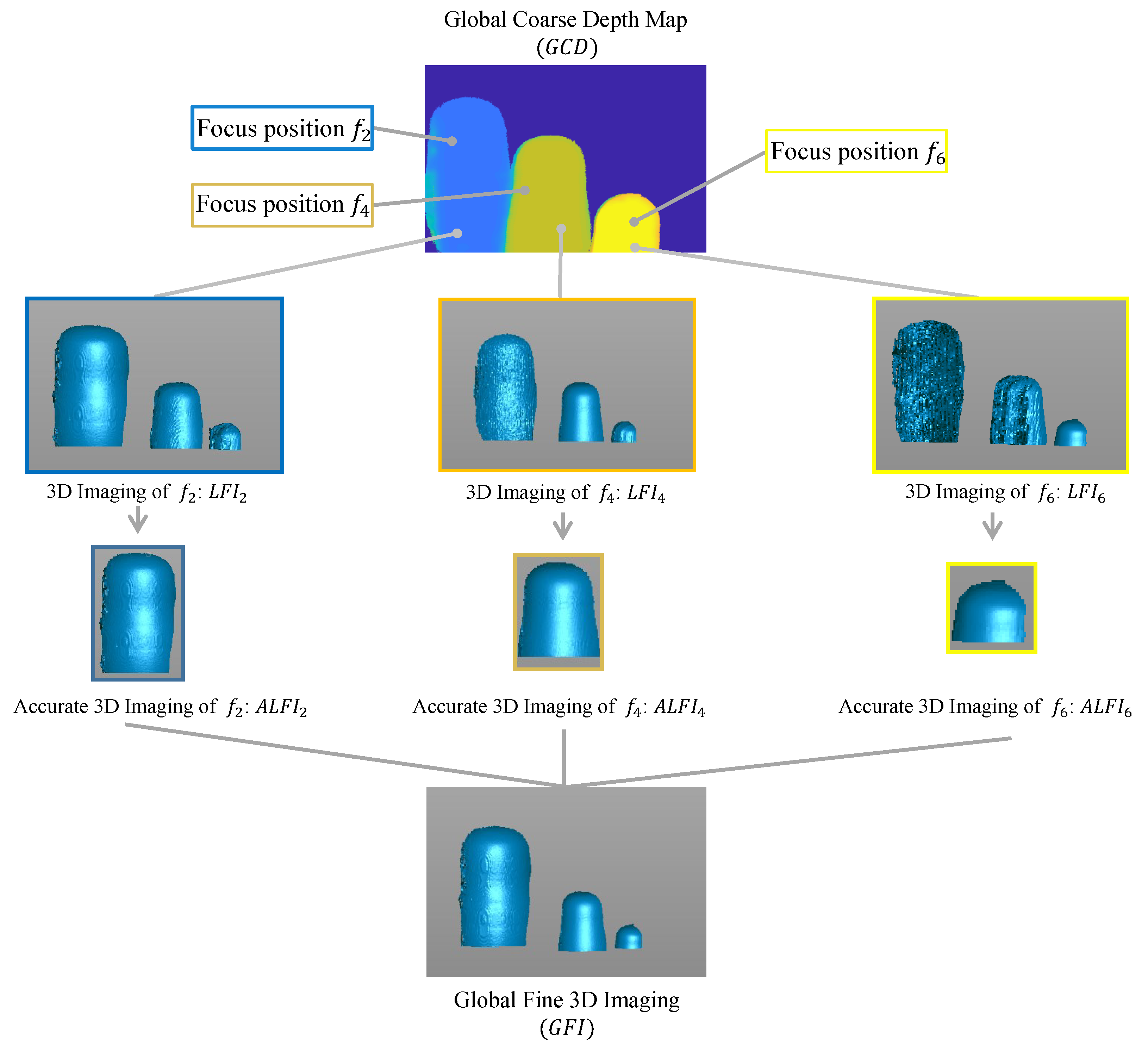

3.3. Guided Fusion

4. Experiments

4.1. Setup

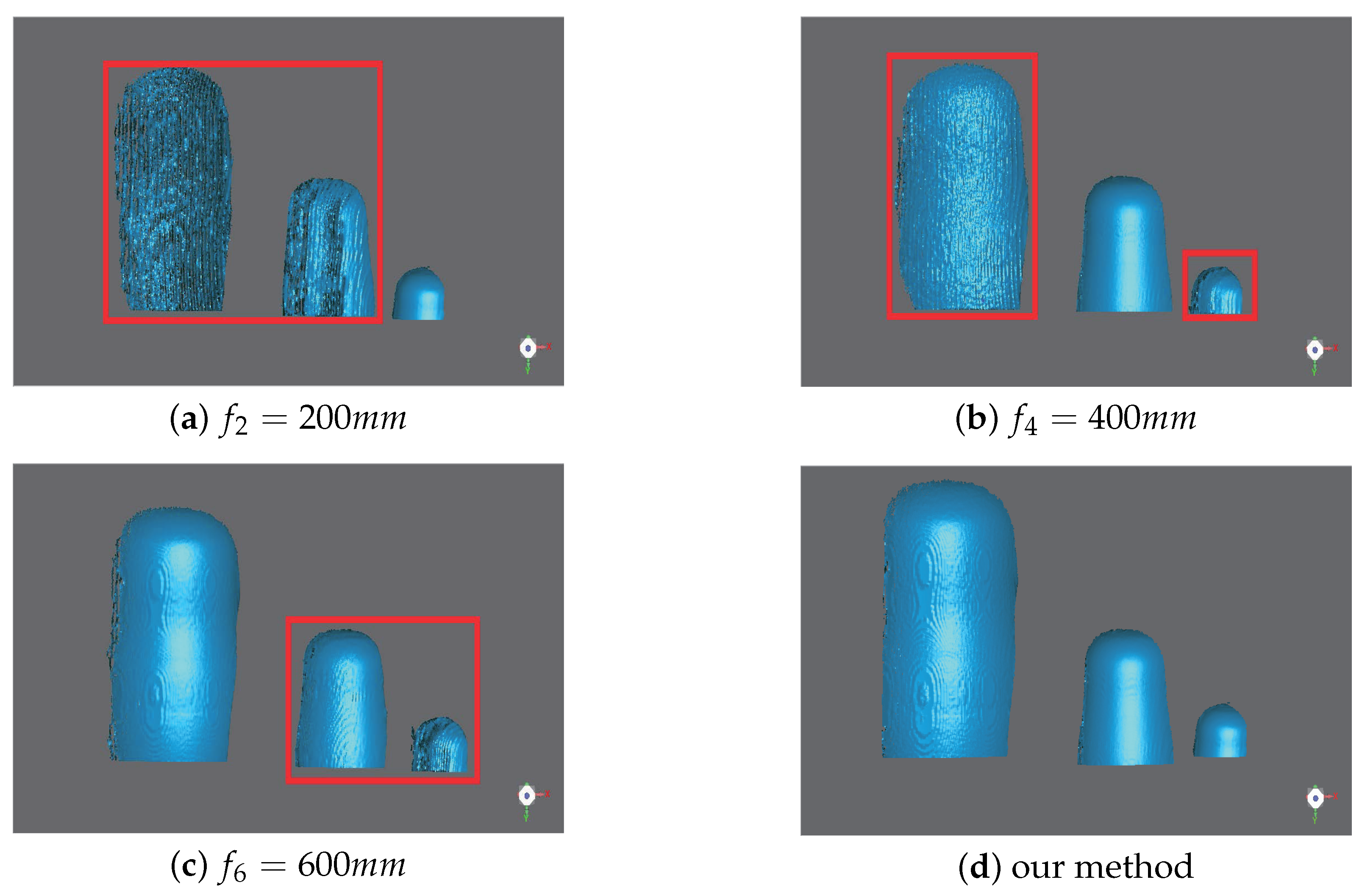

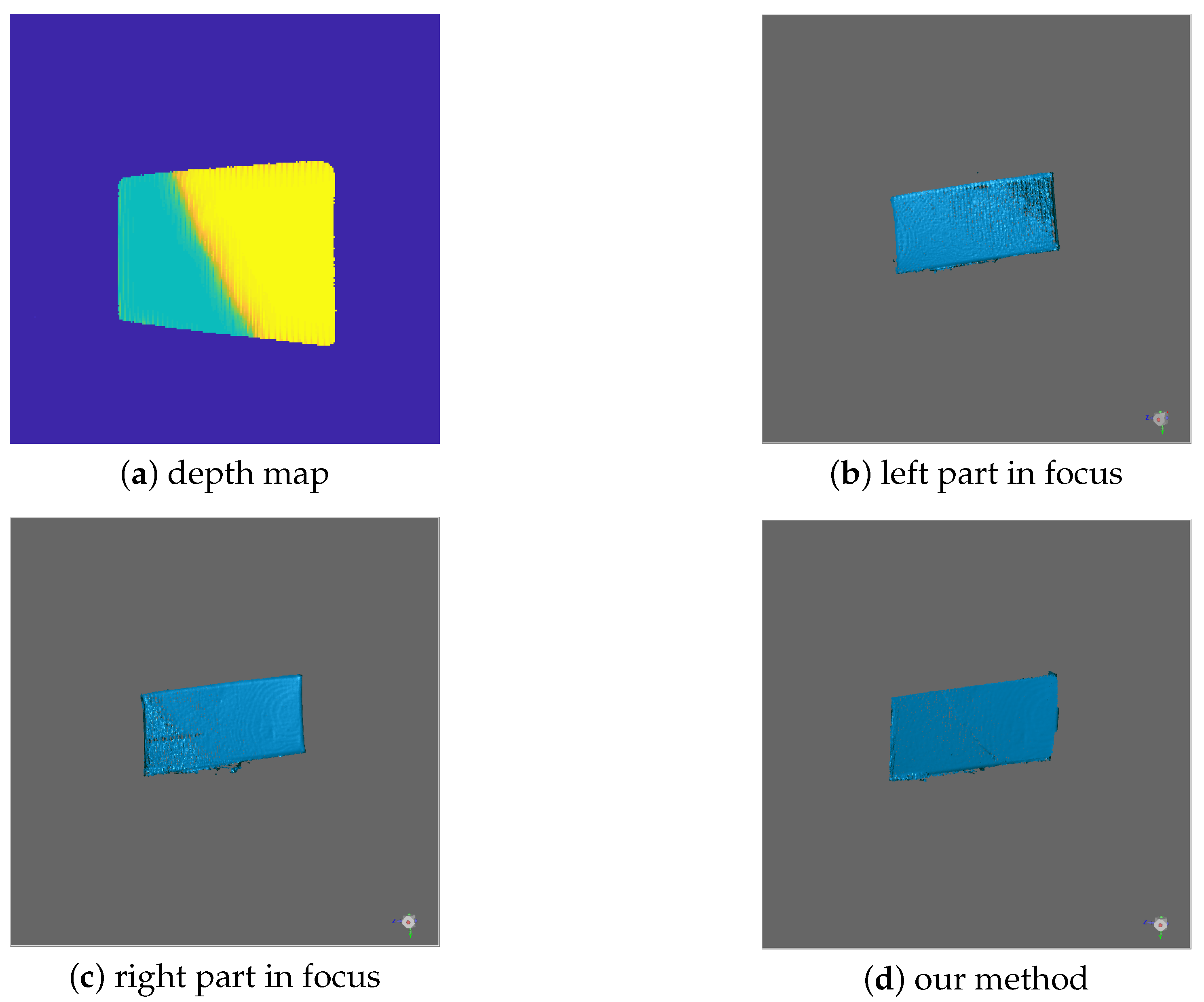

4.2. Overall Performance

4.3. Error Analysis

4.4. Robustness Analysis

5. Discussion

- More time required: Because our method captures images at several focus positions, it takes longer than traditional methods.

- High-precision calibration required: The proposed method places strict requirements on calibration. Inaccurate calibration introduces an artificial error that misaligns the measurement points obtained at the different focus positions.
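The time cost noted in the first limitation can be made concrete with a rough estimate. The sketch below is only illustrative: the function name `capture_time_s`, the pattern count, the exposure time, and the refocusing delay are all hypothetical values, not figures from our experiments. It assumes the same pattern sequence is projected and captured at every focus position, with one refocusing step between consecutive positions.

```python
def capture_time_s(num_focus_positions, patterns_per_position,
                   exposure_s, refocus_s=0.0):
    """Rough acquisition time of a focal-stack scan, in seconds.

    All parameters are hypothetical; the model assumes the same pattern
    sequence is captured at each focus position and that refocusing
    happens once between consecutive positions.
    """
    if num_focus_positions < 1:
        raise ValueError("at least one focus position is required")
    per_position = patterns_per_position * exposure_s
    refocus_total = (num_focus_positions - 1) * refocus_s
    return num_focus_positions * per_position + refocus_total


# Hypothetical numbers: 20 patterns, 50 ms exposure, 0.5 s to refocus.
single_focus = capture_time_s(1, 20, 0.05)
focal_stack = capture_time_s(3, 20, 0.05, refocus_s=0.5)
print(f"single focus: {single_focus:.2f} s, focal stack: {focal_stack:.2f} s")
```

Under these assumptions the acquisition time grows at least linearly with the number of focus positions, which is the overhead relative to a single-focus scan.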

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| | Maximum Distance (mm) | Average Distance (mm) | Standard Deviation (mm) |
|---|---|---|---|
| = 200 mm | 1.613432 | 0.121577 | 0.155088 |
| = 400 mm | 1.279587 | 0.134344 | 0.130969 |
| = 600 mm | 1.539015 | 0.325372 | 0.276541 |
| Our method | | | |

| Small Sphere | Medium Sphere | Big Sphere | Average Value | |
|---|---|---|---|---|
| = 200 mm | 0.6467 | 4.7459 | 1.8499 | |
| = 400 mm | 0.6135 | 4.4225 | 1.7396 | |
| = 600 mm | 1.2332 | 0.7366 | 0.7887 | |
| Our method | | | | |

| Small Sphere | Medium Sphere | Big Sphere | Average Value | |
|---|---|---|---|---|
| = 200 mm | 0.5123 | 3.8840 | 1.5050 | |
| = 400 mm | 0.3755 | 3.6583 | 1.3914 | |
| = 600 mm | 1.0139 | 0.5659 | 0.6081 | |
| Our method | | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xiao, Y.; Wang, G.; Hu, X.; Shi, C.; Meng, L.; Yang, H. Guided, Fusion-Based, Large Depth-of-field 3D Imaging Using a Focal Stack. Sensors 2019, 19, 4845. https://doi.org/10.3390/s19224845
