Abstract

The Internet of Things (IoT) and sensors are becoming increasingly popular, especially in monitoring large and ambient environments. Applications that embrace IoT and sensors often require mining the data feeds that are collected at frequent intervals for intelligence. Despite the fact that such sensor data are massive, most of the data contents are identical and repetitive; for example, human traffic in a park at night. Most of the traditional classification algorithms were originally formulated decades ago, and they were not designed to handle such sensor data effectively. Hence, the performance of the learned model is often poor because of the small granularity in classification and the sporadic patterns in the data. To improve the quality of data mining from the IoT data, a new pre-processing methodology based on subspace similarity detection is proposed. Our method can be well integrated with traditional data mining algorithms and anomaly detection methods. The pre-processing method is flexible for handling similar kinds of sensor data that are sporadic in nature that exist in many ambient sensing applications. The proposed methodology is evaluated by extensive experiment with a collection of classical data mining models. An improvement over the precision rate is shown by using the proposed method.

1. Introduction

The infrastructure of the Internet of Things (IoT) is establishing rapidly recently, with the hype of smart cities over the world. Many ambient-sensing applications subsequently were developed that tap into the maturity of IoT technology [1]. From these applications and the proliferation of sensor equipment and ubiquitous communication technologies, we are able to collect a huge amount of useful data, which was not possible before [2,3].

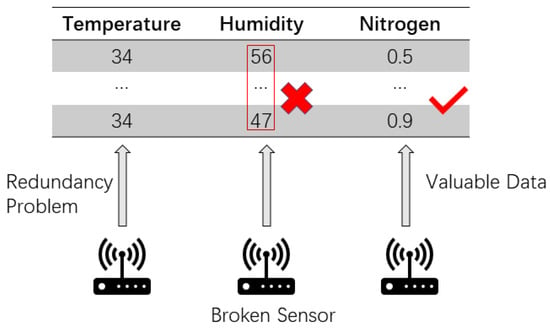

As the Figure 1 shows, there are some problem in the internet of things.For example, a typical and challenging ambient sensing application namely human activity recognition (HAR) collects data about human activities, such as walking, running and standing, in a confined space [4]. HAR tries to make sense of a massive amount data that is collected continuously for analyzing what a person is doing over a long period of time. Another typical application of ambient sensing focuses on detecting unusual environmental occurrences by using many outdoor sensors such as atmospheric sensors [5]. Although sensors have become ubiquitous and their applications are prevalent covering every aspect of our life, the data mining component in an IoT system has to keep up its effectiveness with the sheer volume of dynamic data. Sensor data are data feeds which exhibit some patterns when they are zoomed out and viewed longitudinally. The patterns are somewhat different from the structured datasets that are used traditionally for supervised learning. Earlier on, some researchers advocated that this kind of sensor data has unique characteristics, and it is unsuitable for use directly in data analysis [6]. First, the sensor data collected by the sensor are usually in numerical values. Second, the frequency of collection is relatively quick, and a lot of data can be collected within a few seconds at a time, depending on the sampling rate. Third, the adjacent data cells along a sensor data sequence may be very similar to each other without any significant changes in values over a period of time. For instance, a sensor that is tasked to monitor the activity of a sleeping baby or a sitting phone operator: their body postures change relatively little in slow or still motions; the same goes for monitoring the humidity of a forest or agriculture in fine and stable weather [7]. Fourth, sensor data may be corrupted by noise and losing useful information due to various transmission errors or malfunctioning sensors [8]. It is not uncommon for sensors that collect bogus data due to unreliable medium or external interference in a large scale IoT network deployed in harsh environment.

Figure 1.

Kinds of problem in the Internet of Things.

From the perspective of data mining, the process may not know whether a piece of data is noisy or a plain outlier, until and unless the fault is pinpointed. For example, when a sensor malfunctions, it sends incorrect readings to the server. The wrong data will not be discovered though they are more than useless for training a data mining model. As a result, the induced model is degraded by junk data. The wrong prediction outputted from the data mining model would propagate to throughout the IoT network and eventually to the final [9].

Basically, the characteristic of the IoT data collected by sensors are sequential and huge. The data values are largely repetitive and noises that have irregular values may easily go unnoticed in the data. Many users would aggregate the data for deriving descriptive statistics such as mean and distributions. Relatively they pay less attention to individual pieces of data at the micro-scale. It is known that sensor data are often generated at narrow intervals at the high sampling rate. Some problematic data that have gone unnoticed will cause the classifiers drop in performance during operation. At the data level, it is difficult for the model induction process to know whether an incoming training data will be counter-productive to the supervised learning. This may require post-processing or feedback-learning. A simpler method is pre-processing, which is usually fast and lightweight suitable for dynamic IoT scenarios.

Some popular pre-processing methods, such as Principle Component Analysis (PCA) [10] only change the original data by reducing the attribute dimension. Dimension reduction may not be so effective here because sensor data may have only a few attributes about the sensor readings. Sensor data are time-series that come as training data feed in sequential manner. Some regularization methods [11] only change the scope of the data, and they cannot effectively isolate outliers from a data feed of mediocre values. Changing the time interval of the instance by using a simple sliding window and statistical methods is possible and straightforward, but it could be further improved. Some efficient and effective pre-processing mechanism is required for upholding the performance of a trained model. For solving the problem of “repetitive and redundant data” in an IoT situation, a novel pre-processing methodology is proposed in this article. In this paper, the proposed pre-processing part of a classification model calculates the probability between the subspace of source sequential data and the target data. This model transforms the subsequent input data into probability data in a period by the length of sliding windows that controls the time interval hence the resolution. It focuses on the data features while maintaining the data original structures.

The contributions of this paper are as follows: (i) a pre-processing method suitable for sensor data that may have persistent and redundant data values is proposed. The method converts the original data values into probabilities that are computed based on the similarity of subspace; (ii) The pre-processing method could set the size of the subspace and the length of the sliding window, and it can effectively combine the needs of the time segment analysis in the real task. (iii)The advantages of proposed pre-processing mechanisms can combine perfectly with different models, reducing the sensitivity to noisy data and redundancy problems. The precision of the classification model will be improved by using this pre-processing method, rather than directly using classification algorithms alone. The source code of this new pre-processing methodology can be downloaded for testing and verification at https://github.com/Ayo616/TBSS.

In Section 2, some related work about the pre-processing methods that are applicable for sensor data are introduced. In Section 3, the proposed pre-processing methodology is explained in detail. In Section 4, validation experiment is designed for evaluating the performance of our method. Empirical datasets are used in the comparison of other methods. In Section 5, the work is concluded.

2. Literature Review

With the advancement of communication technologies and, electronic sensing devices are increasing, sensor-centric IoT technologies and applications experienced rapid growth. From recent statistics [12], by 2017 the total value of the IoT industry reached 29 billion U.S. dollars. This huge market has attracted attention from both practitioners and academic researchers.

Over the years, some companies have been developing and building IoT smart systems such as smart homes, smart transportation, and smart security [13,14,15]. These technologies are aimed at solving the specific problem for a relatively pure dataset in Internet of Things.

The massive deployment of IoT devices helps people to obtain large amounts of sensory data. How to tap valuable information from this vast amount of data and form knowledge to serve life more effectively is an important issue [16]. Some researchers have tried to use current data mining technology in the development of the Internet of Things to make the Internet of Things more intelligent [17,18,19,20]. These methods under the IoT framework could usefully solve some parts of the RFID mining task problem.

Clustering is commonly used in data mining of the Internet of Things. The most common clustering method is K-means [21]. K-means is very mature in traditional data mining. It divides a dataset into several clusters. K-means have been widely used in unsupervised classification task. The distribution of Internet of Things data in some cases is a clustering problem [22,23], but the classification results presented by clustering are only similar data and cannot be judged. If people are unfamiliar or unclear with the collected data, they cannot rely on the clustering results to precisely dig out effective knowledge.

In supervised learning, people often use decision tree algorithms for data mining of the Internet of Things [24,25]. In addition, probabilistic models are also widely used, such as the Naive Bayesian model [26,27]. In machine learning, there is also a simple and efficient classification method that is SVM [28], combining with the kernel function can linearly separate data in high-dimensional space. SVM methods are suitable for small dataset due to its long learning time. Finding a hyperplane in SVM is time-consuming because it iteratively tries and searches for the most appropriate non-linear division among the data.

However, the traditional classification model shows an unstable performance on the actual sensor data. That is because of the unique nature of the Internet of Things data. Through analysis and observation, all collected sensor data have highly repetitive characteristics and often contain noisy data. The source of these noise data may be due to sensor detection errors. That leads to too many samples of negative instances in the classification process, and the accuracy of the training model will decrease. In addition, in real life, people pay more attention to the results of a period, which is inconsistent with the phenomenon of collecting data in seconds.

Some research [10] used pre-processing of the dimension to deal with the sensor data problem. It is advantageous for tackling the dimension explosion problem. However, the useless features will increase the amount of calculation and it is not beneficial for the model’s precision. So the performance of these methods is not very good in sensor data mining because this type of methods only affects the feature of instance [11]. Sensor data have a time-series feature, so some research uses sliding windows as pre-processing methodology to adjust the time interval and do statistical computation within the windows. This way is straightforward and easy to implement but still affected by noisy data. Its contribution to the performance of the model is limited [29,30] because it depends on the data purity and special requirement of task.

Our intuition for this method is that a subspace is corresponding to a behavior state. No matter how the redundant in data, the corresponding space is limited. We could detection the similarity of space to identify the status. Therefore, we propose a new type of data pre-processing methodology called Transfer by Subspace Similarity (TBSS). TBSS constructs subspace from the initial dataset. Our proposed TBSS methodology combines an anomaly detection algorithm that derives a probability table, and finally using just a traditional classification method for classification. TBSS pre-processing method can significantly improve the precision of classification. Moreover, this pre-processing methodology can be combined with various classification methods and anomaly detection methods, as well as it is flexible for real-time activity detection.

3. Methodology

3.1. Problem Definitions

Suppose we have a training dataset , , it includes original sequence training data. These data are all from the sensors.

The training dataset includes different labels. The collection of these labels is , . These labels indicates the the status of object at a moment.

Each instance in dataset has a set of features. , . The collection of these features without label is .

Our task is to design an algorithm to deal with these sequence data in order to improve the data mining quality in the Internet of things. So we need to transfer the dataset D to the , .

3.2. Reconstruct Training Data Table

In this step, we deal with the original training data table. We could divide some group from the original dataset according to the different labels. We get collection based on different class.

Next we construct a sub-dataset from the and the sample size of the is . The represent the datum of a space.

We construct a sub-dataset from the ,and the sample size of is . The is used to compare with the .

We also construct a noisy dataset , and we add this to the .

All this sampling method does in the above operation is randomly select data. When we constructed these sub-datasets, we could compute some information between them as new features. Here we define some definitions. First we define TBSS function(transfer by subspace similarity).

Definition 1.

Function is defined to generate a new training dataset from with the setting of (the length of ) and (the length of ).

The new table is combined as follows, and the is new instance for a new table.

The features of instance in new table is computed by the function TDT.

means we construct a new training data in . The new attributes are a group of non-isolation rates and class of this instance is . A group of non-isolation rates is computed by function ITR () for whose input is a combination of , and .

Definition 2.

Construct a train data table and the element in the row and column of TDT is computed by function and the element in the row and the column is ,:

and are sub-datasets from the , so the and are subspaces of the . We use non-anomaly attribute to represent the similarity of two spaces.

Definition 3.

Function used X as standard case to train an isolation forest which is an algorithms created by Prof. Zhi Hua Zhou [31] for detecting the isolation point and then put the Y sample set(detection case) into the isolation forest model to classify whether there are isolation points or not in the Y. Finally, computing the rate P of data in Y that normally obeys the distribution in X. In other words, this function is to compute the non-isolation rate of Y.

3.3. Reconstruct Test Data Table

Suppose we have a test dataset. We only use the label of test dataset in evaluation process. We extract the features from each instance. The collection of test dataset is .

We still apply some transfer processes to the ,transforming .

A sliding window is used to construct the sub-dataset. The length of the sliding window is p. p is same as . So the original dataset could be transferred to the , where r is determined by the length of sliding window p and length of test dataset.

Definition 4.

Construct a test data table :

where , . is the sliding window, while the element in the row and column of is computed by function .

With the help of the final high level function of step two can be defined as follows.

Definition 5.

Function is defined for transforming testing dataset into new testing dataset which has same categories of attributes as . But part of computing input changes because we no longer use τ to gain sample set whose size is but using sliding windows of while the class of new testing data is replaced by the which is major class of a sliding window.

where

and for ,

3.4. Step 3: Model Learning

After using the pre-processing method to generate the new training dataset and new testing dataset , user could apply different algorithms to make the prediction with the help of and . Then a group of high level equations that represent these processes is defined as follow:

Definition 6.

is defined for a group of high level equations that use training data set (such as ), testing dataset (such as ) as well as a group of parameters for training the model and testing the model. Finally the performance evaluation index pf is obtained.

where is a collection of all possible specific parameters with respect to the demand of user and , is a performance evaluation index which is a combination of several statistical parameters of model such as accuracy, recall and . In this paper, there are five classical classification algorithms being tested, they are SVM, logistic regression, C4.5, Bayes classifier and KNN.

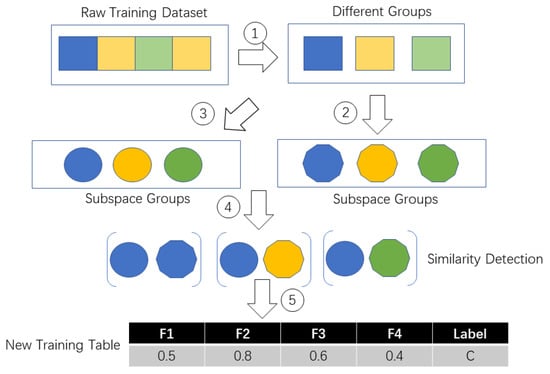

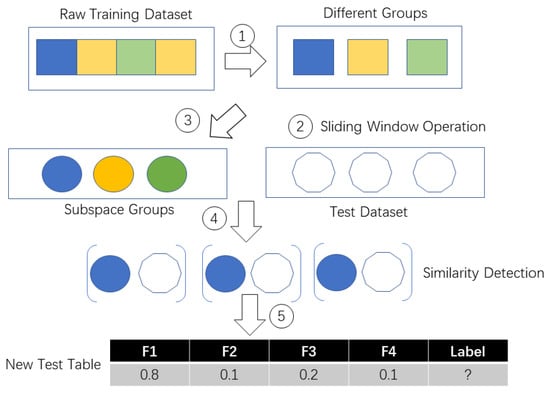

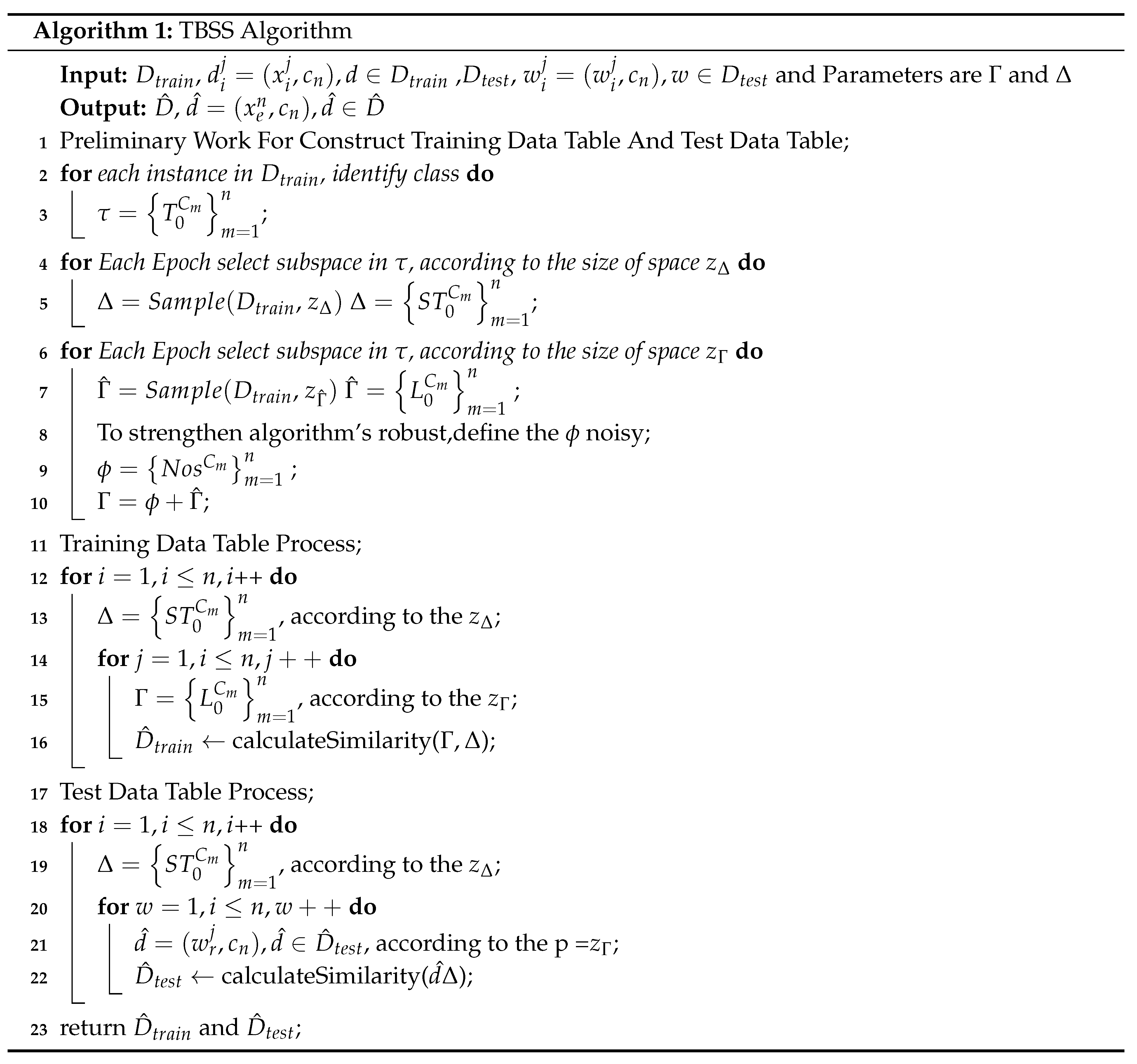

In TBSS, the probability between each subspace similarity is calculated. If the subject is walking, of course the data collected under walking status belong to the statistical distribution of walking training dataset. It is known that walking is a homogenous activity without any strange behaviour. So we should obtain a same data space from the walking test data. Time-consumption depends on the time spent on constructing the subspace and the distribution of the original space. If the original space is large (for example, we can possibly have different styles of lying down behavior), the sampling times will be large too because we need to ensure the coverage rate for the original space. If the original space is small (for example we only have a simple kind of walking behavior), the sampling times will be short and the cost time will be low too. Figure 2 and Figure 3 show the TBSS’s process details as well as we introduced the pseudo code of TBSS Algorithm 1 in detail.

Figure 2.

Process of transfering raw training dataset to new training dataset.

Figure 3.

Process of transferring raw test dataset to new test dataset.

After comparing the performance between before and after pre-processing, we found that this new method actually could improve the accuracy of the prediction. The following section will show the experiment results in detail.

4. Experiment

In this section, the performance of the proposed method is evaluated through extensive experiments. We chose three datasets which are generated from some IoT applications, and they are all related to the sensors and ambient assisted environment. The performance of five chosen classification algorithms that function without any pre-processing, serves as a baseline. The baseline is then compared with three pre-processing methods including our new method.

4.1. Datasets

Three datasets are prepared in this experiment. All these data are related to the sensor applications, which monitor human activities and environment. The description of each dataset is tabulated in Table 1. These datasets inherently consist of redundancy problem but lack of error information. To simulate the real situation in IoT where faulty sensor can exit, we inject a controlled level of noisy data when we construct the training and testing datasets. As the Figure 4 shows, we analyze some feature of datasets at first. The source datasets are all from the UCI datasets website.

Table 1.

Description of various datasets.

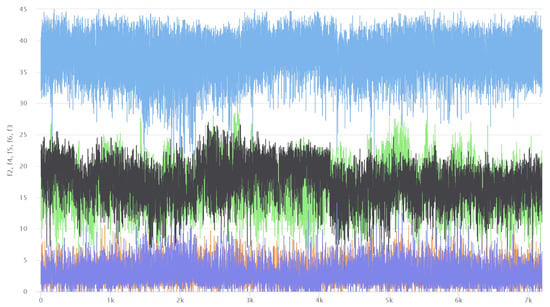

Figure 4.

Visualization of AreM dataset which is a graph of time-series of five feature values: it shows that all the time-series are stationary without any significant and distinctive shape, fluctuating within a limited range of values.

Heterogeneity Activity Recognition Data Set: The Heterogeneity Human Activity Recognition (HHAR) dataset from Smart phones and Smart watches is a dataset devised to benchmark human activity recognition algorithms (classification, automatic data segmentation, sensor fusion, feature extraction, etc.) in real-world contexts; specifically, the dataset is gathered with a variety of different device models and use-scenarios, in order to reflect sensing heterogeneities to be expected in real deployments.

Localization Data for Person Activity Data Set: Data contains recordings of five people performing different activities. Each person wore four sensors (tags) while performing the same scenario five times.

Activity Recognition system based on a Multisensor data fusion (AReM) Data Set: This dataset contains temporal data from a Wireless Sensor Network worn by an actor performing the activities: bending, cycling, lying down, sitting, standing, walking.This dataset represents a real-life benchmark in the area of Activity Recognition applications. The classification tasks consist of predicting the activity performed by the user from time-series generated by a Wireless Sensor Network (WSN), according to the EvAAL competition technical annex

4.2. Comparison of Pre-Processing Methods

This section chooses two ways to compare the performance of TBSS. First, five traditional methods were selected as baseline comparisons. There are KNN, Logistic Regression, Naive Bayes, Decision Tree and Support Vector Machine. We try to use the algorithms directly to get the results, then compare with the result that is after TBSS. Second, some pre-processing methods were selected as comparisons.

- K-Neighbors Classifier

- Logistic Regression

- Gaussian Naive Bayes

- Decision Tree

- Support vector machine

Here we select three well-known comparing pre-processing methods as comparisons. They are PCA, Incremental PCA and Normalize Method. Two methods are related to reducing dimension (PCA and Incremental PCA) and one method (Normalize Method) is related to changing the range of the data values. These methods are useful in traditional data mining because they could deal with redundancy problem and dimension explosion.

- PCA

- Incremental PCA

- Normalize Method

4.3. Evaluation Criteria

Three evaluation indicators are used for evaluating the pre-processing methods. There are precision, recall and F1-score. These evaluation criteria are very commonly used in classification tasks so we chose these evaluation criteria as a baseline evaluation. In the experiment result, we could focus on the precision criteria. Our proposed methodology performs well in the precision indicator.

- Precision

- Recall

- F1 score

4.4. Parameters Setting

For setting the parameters of the comparing methods, default values that are given by the Sklearn package are used. For a fair evaluation of the experiment, the program code is run for ten times and obtain the average precision and recall, etc. The length of the sliding window could be changed to suit different tasks. Further experimentation is planned as future work for exploring the optimal sliding window size. The amount of noise we injected into the original dataset is proportional to the amount of the original dataset.

4.5. Result and Analysis

First, we use some original classification algorithms as a baseline to test our datasets. As we could see the results from Table 2, Table 3 and Table 4, the performance of the model that is trained by raw data is not excellent, because the raw data include so much noisy data, and we found that the running time of the whole process is long because the model repeatedly calculated the redundant data.

Table 2.

The performance of TBSS and other pre-processing methods on localization recognition dataset.

Table 3.

The performance of TBSS and other pre-processing methods on AReM recognition dataset.

Table 4.

The performance of TBSS and other pre-processing methods on activity recognition dataset.

Table 2 shows that the performance of TBSS and other pre-processing methods on localization recognition dataset. The TBSS pre-processing method is useful to improve the performance of the model. The parameters are set as and . The KNN method does not perform very well in this dataset. Compared with other methods, the TBSS get high precision. Especially the combination model of TBSS and decision tree get the about 0.3264 precision in this dataset.

Table 3 shows that the performance of TBSS and other pre-processing methods on AReM dataset. In this evaluation of dataset, parameters are set as and . As we can see, the TBSS pre-processing is successful in improving the performance of the model by combining with a classification algorithm. The The precision of Bayesian algorithm with TBSS is up to about 0.3477, and it is higher than original precision. Table 4 shows that the performance of TBSS and other pre-processing methods on activity recognition dataset. In this dataset, parameters are set as , , and we adjust the interval of data in order to improve higher precision. The TBSS still perform well compared with other methods.

The TBSS gets good results in these datasets, although these datasets have noisy and redundancy problem, because the TBSS focuses on the similarity of space and a subspace, which is not easy to affect by single error instances. We use non-anomaly detection and statistics representing the similarity of each instance. These mechanisms strengthen the model’s robustness and precision.

For a realistic simulation, we design other experiments in which the length of the sliding window () is a variable. When we change , the whole model will generates different performance. As Table 5 shows, we fix the value of epoch and set , , , and we use a localization dataset to test the TBSS. It is clear that the setting of is better. We analyze this dataset and we found that its classification interval is suitable for the twenty to thirty seconds(which is approximately ). So this result indicated that TBSS methodology is robust with regards to pre-process the sensor data according to the interesting time interval.

Table 5.

Different size of subspace and its performance.

The final experiment is to test the influence of epoch. TBSS will receive more instances when the epoch is large because the times of sampling decide the coverage of the subspace to the original space. As Table 6 shows, we set the parameters , , . We try to use 10 to 3 epochs to run the model. We found that the more epochs there are in a range, the greater the improvement in the precision of thenmodel. The epoch does not affect the model’s performance out of a range because the subspace similar is overlapping.

Table 6.

Different iteration times to the performance of the classification in LG dataset.

5. Conclusions

This paper reports a subspace similarity detection model based on the subspace-attribute probability calculation, and the computational process uses the anomaly detection method. The proposed methodology is to be used as a pre-processing method that transforms the fine granular time scaled dataset (that has frequent intervals) into a probability dataset in a period, hence better classification model training. The TBSS pre-processing method can effectively solve the problem of repeatability and noise that exist in the sensor data. TBSS is able to smoothly combine with anomaly detection and traditional classification algorithm. Therefore it should be flexible for most of the IoT machine learning applications in real life. Through the different aspect of experiments, we observe that this model can effectively improve the performance of traditional machine learning classification algorithms in data mining of the Internet of Things. Although TBSS improves the precision in the sensor data mining task, it has a shortcoming. The time-consumption is relatively long, because the sampling time incurs certain overhead for covering the whole search space in each step of window sliding. In the future work, we will look into formulating a low-complexity design suitable for fast sampling for TBSS. The codes of TBSS should be optimized for leveraging GPU computation for fast running.

Author Contributions

Conceptualization, Y.Z. and S.F.; methodology, Y.Z.; software, Y.Z.; validation, S.H., R.W. and W.L.

Funding

The financial support was funded by the following fou research grants: (1) Nature-Inspired Computing and Metaheuristics Algorithms for Optimizing Data Mining Performance, Grant no. MYRG2016-00069-FST, by the University of Macau; (2) A Scalable Data Stream Mining Methodology: Stream-based Holistic Analytics and Reasoning in Parallel, Grant no. FDCT/126/2014/A3, by FDCT Macau; and 2018 Guangzhou Science and Technology Innovation and Development of Special Funds, (3) Grant no. EF003/FST-FSJ/2019/GSTIC, and (4) EF004/FST-FSJ/2019/GSTIC.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dhakar, L.; Tay, F.; Lee, C. Skin based flexible triboelectric nanogenerators with motion sensing capability. In Proceedings of the 2015 28th IEEE International Conference on Micro Electro Mechanical Systems (MEMS), Estoril, Portugal, 18–22 January 2015; pp. 106–109. [Google Scholar]

- Li, T.; Liu, Y.; Tian, Y.; Shen, S.; Mao, W. A storage solution for massive iot data based on nosql. In Proceedings of the 2012 IEEE International Conference on Green Computing and Communications, Besancon, France, 20–23 Novomber 2012; pp. 50–57. [Google Scholar]

- Madakam, S.; Ramaswamy, R.; Tripathi, S. Internet of Things (IoT): A literature review. J. Comput. Commun. 2015, 3, 164. [Google Scholar] [CrossRef]

- Nyan, M.; Tay, F.E.; Murugasu, E. A wearable system for pre-impact fall detection. J. Biomech. 2008, 41, 3475–3481. [Google Scholar] [CrossRef] [PubMed]

- Dan, L.; Xin, C.; Chongwei, H.; Liangliang, J. Intelligent agriculture greenhouse environment monitoring system based on IOT technology. In Proceedings of the 2015 International Conference on Intelligent Transportation, Big Data and Smart City, Halong Bay, Vietnam, 19–20 December 2015; pp. 487–490. [Google Scholar]

- Shi, F.; Li, Q.; Zhu, T.; Ning, H. A survey of data semantization in internet of things. Sensors 2018, 18, 313. [Google Scholar] [CrossRef] [PubMed]

- Xie, S.; Chen, Z. Anomaly detection and redundancy elimination of big sensor data in internet of things. arXiv 2017, arXiv:1703.03225. [Google Scholar]

- Pérez-Penichet, C.; Hermans, F.; Varshney, A.; Voigt, T. Augmenting IoT networks with backscatter-enabled passive sensor tags. In Proceedings of the 3rd Workshop on Hot Topics in Wireless, New York, NY, USA, 3–7 October 2016; pp. 23–27. [Google Scholar]

- Nesa, N.; Ghosh, T.; Banerjee, I. Outlier detection in sensed data using statistical learning models for IoT. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; pp. 1–6. [Google Scholar]

- Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A: Math. Phys. Eng. Sci. 2016, 374, 20150202. [Google Scholar] [CrossRef] [PubMed]

- Patro, S.; Sahu, K.K. Normalization: A preprocessing stage. arXiv 2015, arXiv:1503.06462. [Google Scholar] [CrossRef]

- M&M Research Group. Internet of Things (IoT) & M2M Communication Market: Advanced Technologies, Future Cities & Adoption Trends, Roadmaps & Worldwide Forecasts 2012–2017; Technical Report; Electronics. ca Publications: Kirkland, QC, Canada, 2012. [Google Scholar]

- Atzori, L.; Iera, A.; Morabito, G. The internet of things: A survey. Comput. Netw. 2010, 54, 2787–2805. [Google Scholar] [CrossRef]

- Miorandi, D.; Sicari, S.; De Pellegrini, F.; Chlamtac, I. Internet of things: Vision, applications and research challenges. Ad Hoc Netw. 2012, 10, 1497–1516. [Google Scholar] [CrossRef]

- Bandyopadhyay, D.; Sen, J. Internet of things: Applications and challenges in technology and standardization. Wirel. Pers. Commun. 2011, 58, 49–69. [Google Scholar] [CrossRef]

- Xu, L.; Guo, D.; Tay, F.E.H.; Xing, S. A wearable vital signs monitoring system for pervasive healthcare. In Proceedings of the 2010 IEEE Conference on Sustainable Utilization and Development in Engineering and Technology, Petaling Jaya, Malaysia, 20–21 November 2010; pp. 86–89. [Google Scholar]

- Cantoni, V.; Lombardi, L.; Lombardi, P. Challenges for data mining in distributed sensor networks. In Proceedings of the 18th International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006; Volume 1, pp. 1000–1007. [Google Scholar]

- Keller, T. Mining the Internet of Things-Detection of False-Positive RFID Tag Reads Using Low-Level Reader Data. Ph.D. Thesis, University of St. Gallen, St. Gallen, Switzerland, 2011. [Google Scholar]

- Masciari, E. A Framework for Outlier Mining in RFID data. In Proceedings of the 11th International Database Engineering and Applications Symposium, Banff, AB, Canada, 6–8 September 2007; pp. 263–267. [Google Scholar]

- Bin, S.; Yuan, L.; Xiaoyi, W. Research on data mining models for the internet of things. In Proceedings of the 2010 International Conference on Image Analysis and Signal Processing (IASP), Zhejiang, China, 9–11 April 2010; pp. 127–132. [Google Scholar]

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Oakland, CA, USA, 21 June–18 July 1965; Volume 1, pp. 281–297. [Google Scholar]

- Jain, A.K.; Murty, M.N.; Flynn, P.J. Data clustering: A review. ACM Comput. Surv. (CSUR) 1999, 31, 264–323. [Google Scholar] [CrossRef]

- Xu, R.; Wunsch, D. Survey of clustering algorithms. IEEE Trans. Neural Netw. 2005, 16, 645–678. [Google Scholar] [CrossRef] [PubMed]

- Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man, Cybern. 1991, 21, 660–674. [Google Scholar] [CrossRef]

- Friedl, M.A.; Brodley, C.E. Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 1997, 61, 399–409. [Google Scholar] [CrossRef]

- McCallum, A.; Nigam, K. A comparison of event models for naive bayes text classification. In Proceedings of the AAAI-98 Workshop on Learning for Text Categorization Citeseer, Madison, WI, USA, 26–27 July 1998; Volume 752, pp. 41–48. [Google Scholar]

- Langley, P.; Iba, W.; Thompson, K. An analysis of Bayesian classifiers. Aaai 1992, 90, 223–228. [Google Scholar]

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Appl. 1998, 13, 18–28. [Google Scholar] [CrossRef]

- Danita, M.; Mathew, B.; Shereen, N.; Sharon, N.; Paul, J.J. IoT Based Automated Greenhouse Monitoring System. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 1933–1937. [Google Scholar]

- Akbar, A.; Khan, A.; Carrez, F.; Moessner, K. Predictive analytics for complex IoT data streams. IEEE Int. Things J. 2017, 4, 1571–1582. [Google Scholar] [CrossRef]

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).