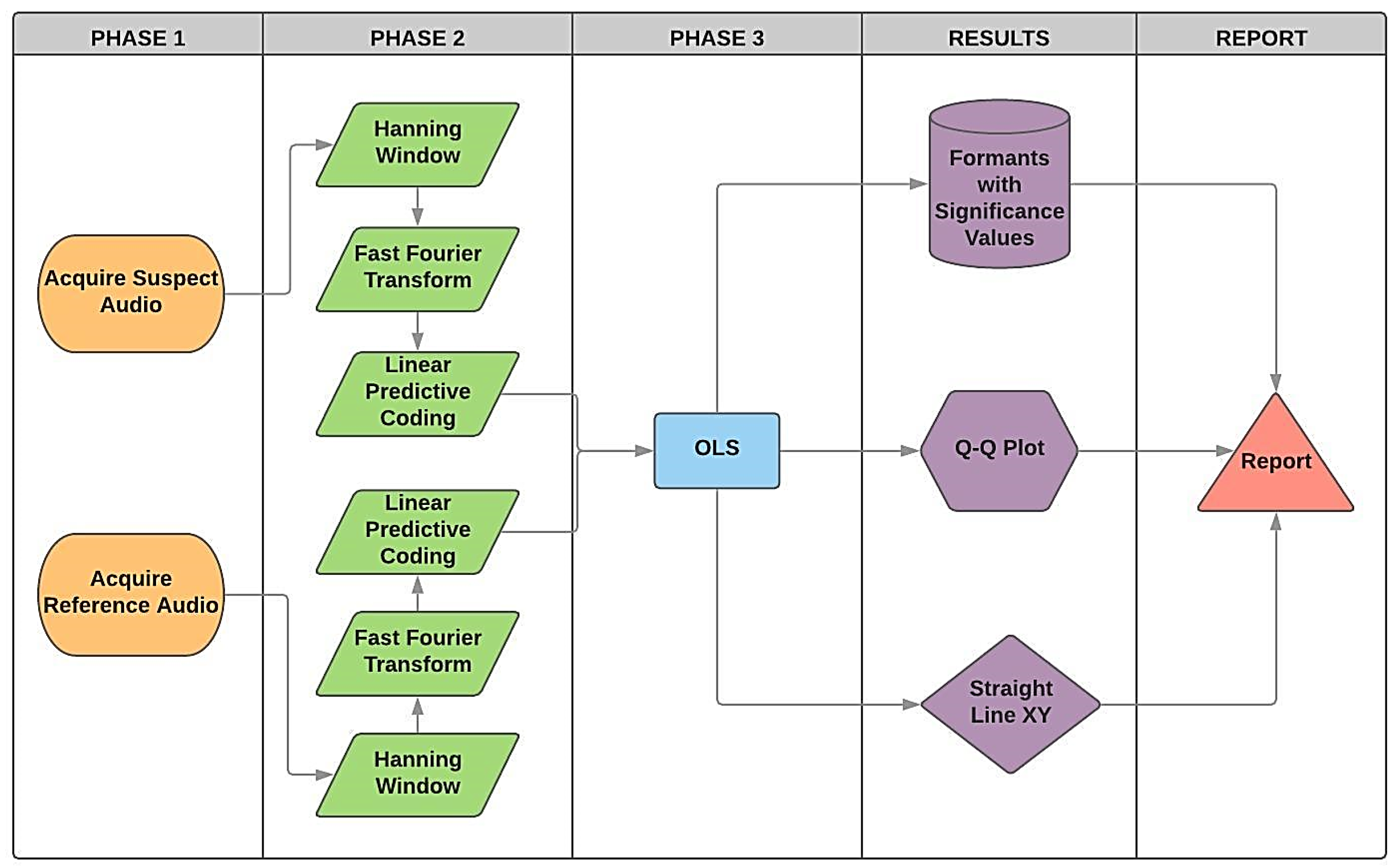

To verify whether a contested audio corresponds to a reference, spectrum pattern analysis was the first step. The audios were acquired as presented in Phase 1. Figure 4a illustrates the frequency spectrum generated for a given suspect, whilst Figure 4b shows the spectrum for the reference (both relating to Sentence #1). The results were obtained by applying Hanning windowing (90% overlap) and the FFT (Phase 2). As indicated by Figure 4, analysing audios using only the frequency spectrum is complex, too subjective, and prone to error, ultimately requiring more information for the decision-making process.
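As an illustration of the windowing and FFT step, the following minimal sketch computes an averaged magnitude spectrum from Hanning-windowed frames with 90% overlap. The function name `hann_spectrum`, the frame length of 1024 samples, and the synthetic 440 Hz test tone are assumptions made for the example; they are not taken from the paper.

```python
import numpy as np

def hann_spectrum(signal, sample_rate, frame_len=1024, overlap=0.9):
    """Average magnitude spectrum of Hanning-windowed, overlapping frames."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    # 90% overlap means each frame starts ~10% of a frame after the previous one.
    hop = max(1, int(round(frame_len * (1.0 - overlap))))
    window = np.hanning(frame_len)
    starts = range(0, len(signal) - frame_len + 1, hop)
    frames = np.array([signal[s:s + frame_len] * window for s in starts])
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)
    return freqs, magnitudes.mean(axis=0)

# Synthetic stand-in for a recording (assumption): 1 s of a 440 Hz tone at 8 kHz.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)

freqs, spectrum = hann_spectrum(tone, sample_rate)
peak_hz = freqs[np.argmax(spectrum)]  # lands on the bin closest to 440 Hz
```

With these settings the frequency resolution is `sample_rate / frame_len`, so the spectral peak can only be located to within one bin, which is one reason a visual comparison of spectra alone is hard to turn into a decision criterion.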

Note that it is still not possible to establish a parameter for a reliable result based only on graphics. To enhance the visualisation, a moving average filter (MAV) was applied. This filter smooths the plotted points, rendering the curves more continuous. Figure 6a shows the formants extracted from the contested audio, whilst Figure 6b presents those extracted from the reference audio, following the MAV application (both relating to Sentence #1). Notably, certifying the similarity between the formant spectra remains a difficult task, even after applying the MAV filter. Accordingly, it was necessary to quantify those subtle differences statistically, aiming to achieve the much more accurate analysis demanded in the forensic context.
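The smoothing step can be sketched as follows. This is a minimal unweighted moving average; the window width of 3 and the sample values are hypothetical, since the filter length used in the experiments is not stated here.

```python
import numpy as np

def moving_average(track, width=5):
    """Smooth a 1-D formant track with an unweighted moving average."""
    track = np.asarray(track, dtype=float)
    if width < 1 or width > len(track):
        raise ValueError("width must be between 1 and len(track)")
    kernel = np.ones(width) / width
    # mode="valid" keeps only positions where the window fully overlaps the data,
    # so the output has len(track) - width + 1 samples.
    return np.convolve(track, kernel, mode="valid")

# Hypothetical formant track (Hz) containing one outlier frame.
raw = [500.0, 520.0, 480.0, 510.0, 900.0, 505.0, 495.0]
smoothed = moving_average(raw, width=3)
```

The outlier at 900 Hz is spread over neighbouring frames rather than removed, which makes the curve more continuous but does not by itself provide a decision threshold.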

To obtain a robust method to verify the speaker, this paper proposes the use of the OLS model, whereby the reference formants are compared with those of the contested audio. A smaller p-value indicates a higher significance. In this approach, the highest significance is represented by ‘***’. It is important to highlight that pitch is the main formant: if it does not present ‘***’, the comparison is considered negative; the remaining formants are required to present at least one ‘*’. Accordingly, a non-significant (NS) result should not be accepted for the analysed sentence. Table 2 presents the results obtained using OLS to compare the contested audio with the reference (both for Sentence #1). After analysing the results, it is evident that the 19 formants showed high significance levels. The smaller the p-value, the stronger the correlation amongst the pairs (formant F3).
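A minimal sketch of how one formant pair could be compared by OLS is given below. It regresses the contested track on the reference track and tests the slope against zero. Three points are assumptions rather than details taken from the paper: the p-value uses a normal approximation to the t distribution (reasonable when many frames are available), the star thresholds follow the usual 0.001/0.01/0.05 convention, and the tracks are synthetic.

```python
import math

def ols_slope_pvalue(x, y):
    """Fit y = a + b*x by ordinary least squares; return (a, b, p) for H0: b = 0."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length series with at least 3 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    if sse == 0.0:
        # Perfect fit: the p-value is 0 unless the fitted line is flat.
        return intercept, slope, (0.0 if slope != 0 else 1.0)
    std_err = math.sqrt(sse / (n - 2) / sxx)
    t_stat = slope / std_err
    # Assumption: two-sided p-value from the normal approximation to Student's t.
    p_value = math.erfc(abs(t_stat) / math.sqrt(2.0))
    return intercept, slope, p_value

def stars(p_value):
    """Map a p-value to a significance label (conventional thresholds, assumed)."""
    if p_value < 0.001:
        return "***"
    if p_value < 0.01:
        return "**"
    if p_value < 0.05:
        return "*"
    return "NS"

# Synthetic tracks (assumption): the contested track follows the reference closely.
reference = [500.0 + 10.0 * math.sin(0.3 * i) for i in range(200)]
contested = [r + 2.0 * math.sin(1.7 * i) for i, r in enumerate(reference)]

_, slope, p_value = ols_slope_pvalue(reference, contested)
label = stars(p_value)
```

For short tracks, the normal approximation understates the p-value, so an exact t-based test from a statistics package would be the safer choice.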

4.1. Validating the Model

To compare normal distributions, the Q–Q plot can be utilised. The aim was to validate whether the model presents a similar distribution by verifying whether the histogram of the reference was close to that of the contested audio. An affirmative result provides a positive result for the speaker comparison, ensuring that the normal distribution is confronted with the same audio segment. The residual histograms, in turn, are inversely proportional to the quality of the formant. This means that smaller matches of histograms lead to a better p-value, increasing the reliability of the model. For brevity, only some formants are shown. Figure 7a,b shows the Q–Q plots for formants F0 (pitch) and F1, respectively. Henceforth, all figures presented in this paper relate to Sentence #1. By examining Figure 7a, it is evident that there are four intersection points of the residuals with the straight line: one of high, two of medium, and one of low intensity. Conversely, Figure 7b presents two intersection points, one of high and one of low intensity. To conclude, F0 presents a higher degree of significance than F1, as previously presented in Table 2 (p-value).
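For reference, the points of a normal Q–Q plot can be computed as in the sketch below: standardised residuals are sorted and paired with the quantiles of a standard normal distribution. The plotting positions `(i + 0.5) / n` and the residual values are assumptions for illustration; the plotting convention used for the figures is not stated in the text.

```python
from statistics import NormalDist, mean, stdev

def qq_points(residuals):
    """Return (theoretical, sample) quantile pairs for a normal Q-Q plot."""
    n = len(residuals)
    if n < 2:
        raise ValueError("need at least two residuals")
    mu = mean(residuals)
    sigma = stdev(residuals)
    if sigma == 0:
        raise ValueError("residuals must not be constant")
    # Standardise and sort the observed residuals.
    sample = sorted((r - mu) / sigma for r in residuals)
    # Theoretical quantiles at plotting positions (i + 0.5) / n (an assumed convention).
    std_normal = NormalDist()
    theoretical = [std_normal.inv_cdf((i + 0.5) / n) for i in range(n)]
    return list(zip(theoretical, sample))

# Hypothetical residuals from one formant model.
residuals = [-1.2, -0.4, 0.1, 0.3, 0.9, 1.6]
points = qq_points(residuals)

# Largest vertical distance of a point from the reference line y = x.
max_gap = max(abs(s - t) for t, s in points)
```

Points lying on the line `y = x` indicate residuals that follow a normal distribution; `max_gap` is only one possible numeric summary of the departure from that line.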

Figure 8a,b shows the Q–Q plots for formants F2 and F3, respectively. By examining Figure 8a, it is evident that there are three points where the residuals intersect the straight line: one of very high and two of high intensity. Conversely, Figure 8b presents three high-intensity intersections. This result matches those in Table 2, where F3 was shown to present the higher p-value.

Figure 9a,b presents the Q–Q plots for formants F15 and F16, respectively. By examining Figure 9a,b, it is evident that the line of the residuals coincides with the reference line, showing that the significances are weaker than those presented for F3, for example. These results match those presented in Table 2, where F15 and F16 presented smaller p-values.

Figure 10a,b shows the Q–Q plots for formants F17 and F18, respectively. Likewise, the results indicate that the line of the residuals coincides with the reference line, showing that the significances are weaker than for F2 and F3, for example. These results match those presented in Table 2, where F17 and F18 presented the smallest p-values. It is expected that the last four formants have less satisfactory results than the first formants. However, they may be important for verifying speakers with similar voice tones.

It is important to point out that although some formants on the Q–Q plot are almost coincident with the straight line, this does not disqualify the results of the model. The Q–Q plot is a comparison of how the data fit in relation to the normal distribution. Thus, divergences from the central line indicate that there is a difference in the frequency regime of the compared formants, but do not suggest problems with the quality of the model. Besides, the similarity in the profile of the residuals indicates that the formants have similar patterns. However, the comparison through a linear model may (or may not) return a significant value, regardless. Thus, the Q–Q plot shows that the compared frequency band amongst the formants is similar, which reinforces the formant extraction method using the LPC.

Here, the obtained model was also evaluated via an XY plot. It was expected that the data would lie close to the straight line, which would indicate a good fit for the model and, consequently, a stronger level of significance for the formant. Figure 11a,b shows the plots for formants F0 and F1, respectively. As observed from the figures, the data are clustered close to the straight line, showing that the models for formants F0 and F1 have strong significance.

Similarly, Figure 12a,b shows the plots for formants F2 and F3, respectively. Once again, the data are clustered close to the straight line, showing that the models for formants F2 and F3 have strong significance.

The last four formants were also evaluated. First, Figure 13a,b shows the plots for formants F15 and F16, respectively. Unlike the results already presented, the data are scattered around the straight line, showing that the models for formants F15 and F16 have lower significance than those for F0, F1, F2, and F3 (Figure 11 and Figure 12). Similar results were also shown by the p-values and Q–Q plots. Careful attention should be given to the axis scales of Figure 13 and Figure 14, because they are multiplied by 10^4, unlike those of Figure 11 and Figure 12.

Second, Figure 14a,b shows the plots for formants F17 and F18, respectively. As observed from these figures, the data are scattered around the straight line, showing that the models for formants F17 and F18 present lower significance than those for F0, F1, F2, and F3 (Figure 11 and Figure 12). Similar results have already been shown by the p-values and Q–Q plots.
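How closely the points of an XY plot follow the fitted straight line can also be summarised numerically. The sketch below uses the coefficient of determination (R²) for this purpose; this is a measure swapped in for illustration, not one reported in the paper, and both data sets are hypothetical.

```python
def r_squared(x, y):
    """Coefficient of determination of the least-squares line y = a + b*x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("neither series may be constant")
    # For a single predictor, R^2 equals the squared Pearson correlation.
    return (sxy * sxy) / (sxx * syy)

# Hypothetical data: one set clustered near a line, one scattered around it.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
clustered = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 16.2]
scattered = [5.0, 1.0, 9.0, 3.0, 12.0, 6.0, 16.0, 8.0]

r2_clustered = r_squared(x, clustered)
r2_scattered = r_squared(x, scattered)
```

A value near 1 corresponds to points clustered on the line, whereas a low value corresponds to the scattered pattern described for the last four formants.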

4.2. Practical Results

To validate the proposed method, practical tests were conducted on a database composed of 26 speakers (13 males and 13 females), all of whom were treated as suspects and numbered ordinally (see Table 3). Another audio was recorded from one of them and taken as the reference. The column “Time” refers to the length of the recorded audio. In this scenario, the suspects spoke Sentence #2 (as the reference audio), and the contested audio was recorded from Sentence #3. Table 3 shows the p-values comparing all suspects and formants.

From Table 3, it is evident that suspect #3 attained the higher significance value of ‘***’, with only one formant being NS. These results provide an acceptable level of confidence to certify positively that suspect #3 is the speaker of the contested audio. From Table 3, it might also be considered that suspect #11 could be the speaker. However, the significance value returned by the pitch analysis (F0) must be taken as the first criterion of analysis. As the p-value is NS for F0, this hypothesis should be rejected. This test also took into account a sentence with suppression and change of words, as well as frequency peaks that differ for each character narrated. This analysis allows the validation of the developed model, leading to the verification of which speaker is responsible, even under some interference in the recorded audio.
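The acceptance criteria described in the text can be written as a small decision function. This is one possible reading: F0 must reach ‘***’, and the number of NS results amongst the remaining formants must not exceed a tolerance. The `max_ns` parameter is a hypothetical addition, included because a suspect with a single NS formant is accepted above; the labels in the example are invented and are not taken from Table 3.

```python
def verify_speaker(labels, max_ns=0):
    """Decide a comparison from per-formant significance labels.

    labels maps a formant name (e.g. "F0") to '***', '**', '*' or 'NS'.
    max_ns is a hypothetical tolerance for NS results amongst formants other than F0.
    """
    # First criterion: pitch (F0) must reach the highest significance.
    if labels.get("F0") != "***":
        return False
    # Remaining formants need at least '*'; count those that fail.
    ns_count = sum(1 for name, lab in labels.items() if name != "F0" and lab == "NS")
    return ns_count <= max_ns

# Invented label sets for illustration only.
suspect_a = {"F0": "***", "F1": "***", "F2": "**", "F3": "NS"}
suspect_b = {"F0": "NS", "F1": "***", "F2": "***", "F3": "***"}

strict_a = verify_speaker(suspect_a)             # rejected: one NS, none tolerated
lenient_a = verify_speaker(suspect_a, max_ns=1)  # accepted: one NS tolerated
result_b = verify_speaker(suspect_b, max_ns=1)   # rejected: F0 is NS
```

Checking F0 first mirrors the rejection of a candidate whose other formants look strong but whose pitch comparison is not significant.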

Table 4 shows the comparison for another scenario, whereby Sentence #1 is recorded for both the contested and reference audios. The comparison involved several suspects, including some of those previously used in Table 3. Once again, suspect #3 achieved maximum significance in all formants (a positive result), validating the hypothesis that the contested audio belongs to that suspect.

Table 5 presents a new scenario. The idea was to investigate the correlation of the reference audio for Sentence #1 with itself. It was expected that all formants would be positively identified, demonstrating that the audios were identical. As shown in Table 5, the p-values are 0 for all formants. This clearly demonstrates that both compared audios are equal, showing that the proposed model is also accurate in this scenario.

Subsequently, the effect of audio quality on the proposed method was verified, with the results presented in Table 6. First, it is important to highlight that 128 kbps is the standard quality. Here, contested audios at low (64 kbps), medium (128 kbps), and high (256 kbps) qualities were compared with the reference at the standard quality (128 kbps). The tests were conducted for suspect #3. By examining Table 6, it is evident that audios sampled at the same frequencies tend to obtain better results; in that case, 19 formants were extracted. Conversely, only 16 formants were obtained when considering a higher quality for the contested audio (256 kbps). Despite this, the positive results verify the speaker. On the other hand, low-quality contested audio (64 kbps) decreased the number of formants to 17. Although some non-significant p-values were obtained, the high number of “***” ensured that the speaker was positively identified.

Another fundamental test consists of investigating whether different audio timing influences the results of the developed model. Accordingly, the timing of the reference audio was labelled as very slow, slow, and very fast. The results are shown in Table 7. The test was also carried out for suspect #3, considering the duration of the contested audio (3.817347 s) for Sentence #1.

From Table 7, it is evident that the p-values have reduced significantly. First, it should be noted that formant F18 was not recognised. Second, if the audio is played in slow motion, the level of significance diminishes for the last formants. However, the result is still positive for speaker verification. Conversely, when the audio speeds up, the level of significance of the formants oscillates, with even F1 becoming NS. However, the results still positively verify the speaker.

Finally, different levels of noise in the contested audio were examined, followed by the statistical comparison. The test was also carried out for suspect #3, considering brown, pink, and white noise, for Sentence #1. This represents 50%, 5%, and 1% of the spectrum. These results are presented in Table 8. By examining Table 8, it is remarkable that after noise insertion, the maximum number of formants was 10. Despite that, the results could positively verify speaker identity, given that all other p-values were “***”. Overall, all seven experimental tests achieved 100% accuracy, demonstrating that the method is well suited to verify the speaker in a forensic context.
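To illustrate how coloured noise could be inserted into a contested audio, the sketch below shapes white noise in the frequency domain so that its power falls as 1/f^k (k = 0 for white, 1 for pink, 2 for brown) and mixes it with a signal. The function names, the interpretation of the noise level as a fraction of the signal RMS, and the synthetic tone are all assumptions; the procedure actually used to generate and scale the noise is not described here.

```python
import numpy as np

def coloured_noise(n_samples, exponent, rng):
    """Unit-RMS noise whose power spectrum falls as 1/f**exponent.

    exponent = 0 gives white, 1 pink, and 2 brown noise.
    """
    white = rng.standard_normal(n_samples)
    spectrum = np.fft.rfft(white)
    freqs = np.fft.rfftfreq(n_samples)
    scale = np.zeros_like(freqs)  # the DC bin stays at zero, giving zero-mean noise
    # Amplitude scales as f^(-exponent/2), so power scales as f^(-exponent).
    scale[1:] = freqs[1:] ** (-exponent / 2.0)
    shaped = np.fft.irfft(spectrum * scale, n=n_samples)
    return shaped / np.sqrt(np.mean(shaped ** 2))

def add_noise(signal, noise, level):
    """Add noise scaled so that its RMS is `level` times the signal RMS (assumed meaning)."""
    signal = np.asarray(signal, dtype=float)
    signal_rms = np.sqrt(np.mean(signal ** 2))
    return signal + level * signal_rms * noise

# Synthetic stand-in for a recording (assumption): 1 s of a 200 Hz tone at 8 kHz.
rng = np.random.default_rng(0)
sample_rate = 8000
clean = np.sin(2 * np.pi * 200.0 * np.arange(sample_rate) / sample_rate)

brown = coloured_noise(len(clean), 2.0, rng)
noisy = add_noise(clean, brown, 0.05)  # noise RMS at 5% of the signal RMS
```

Because brown noise concentrates its energy at low frequencies, it overlaps the lower formants far more than white noise of the same RMS, which may help explain why the number of recoverable formants changes with the noise colour.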

To evaluate real scenarios and to better validate the method, other audios recorded under various conditions (reference audios) were tested. These recordings were obtained more than 30 days after the contested audio, all for Sentence #1. Table 9 depicts the results obtained. First, a telephone interception was carried out, with the phone call made on the street in a noisy scenario (cars, wind, and people’s voices). Owing to the phone channel limitation, only 5 formants could be obtained. The proposed method achieved good significance in all formants (a positive result), validating the hypothesis that the contested audio belongs to that suspect. On another day, a WhatsApp message was recorded at home, with children playing and TV background noise present. Once again, the result was a positive match. In addition, a new audio recording was made in a restaurant during a birthday celebration (Sony recorder ICD-PX470). From the analysis, 18 formants were obtained. As shown in Table 9, the proposed method may also detect the speaker with high accuracy in this scenario. Afterwards, another recording was made in an office using the computer microphone, with background noise caused by the air conditioning, mouse, and keyboard. As before, the results ensure that the speaker is positively identified. It is important to point out that the number of formants is intrinsically limited by the type of audio recorder used. Apart from this, the results demonstrated that the method is well suited to verify the speaker in several scenarios containing noise and for different recording devices.

Finally, the proposed method was preliminarily evaluated using the LibriSpeech dataset [45]. This dataset is publicly available and contains 1983 speakers, all of whom spoke in English. The recordings were made over several months, with different accents and a mix of female and male speakers. First, the file named 9023-296467 was taken as the questioned audio. Accordingly, 20 different audios were considered as the references (Table 10). Based on the proposed methodology, the audios named 9023-296468 and 7177-258977 were observed to be the best candidates for the speaker. The 9023-296468 speaker attained the higher significance value of ‘***’, with only one formant being NS, and is therefore the corresponding speaker for the test (positive verification). It is important to highlight that the audios 9023-296468 and 9023-296467 were recorded by the same speaker at different times. For further details about the dataset formation, readers can consult reference [45].

However, readers should pay attention to the best p-value (F0) obtained for speaker #9 (10^−7), which may be considered a poor value given a noise-free condition. Comparing it with Table 2, where 10^−22 was obtained for the best p-value, English may be considered a non-adaptive language for the proposed method, since, if noise is inserted, all p-values decrease to a non-significant value in several formants. The reason might be traced back to the history of the Portuguese language, which emerged in the thirteenth century, undergoing a Latin evolution. The Brazilian Portuguese language has a complex vocabulary and a symmetrical and balanced phonetic system, with the final notes sounding more clearly than in European Portuguese [46]. On the other hand, the history of the English language begins in the 5th century AD. Accordingly, it is much older than the Portuguese language and, since then, has had influences from the Anglo-Saxons and later from the French. In this context, the vowel sound system has changed substantially in the correlation between spelling and pronunciation, distancing itself from other Western European languages [47]. Therefore, the results reached here are legitimate for Brazilian Portuguese, and they strongly reinforce the potential of this work, since several voice datasets are in English, which may be distant from Brazilian Portuguese. In the same way, several methods for speaker identification found in the literature might not work accurately for Brazilian Portuguese.