Multi-Scale Vehicle Detection for Foreground-Background Class Imbalance with Improved YOLOv2

Abstract

1. Introduction

2. Brief Introduction on YOLOv2

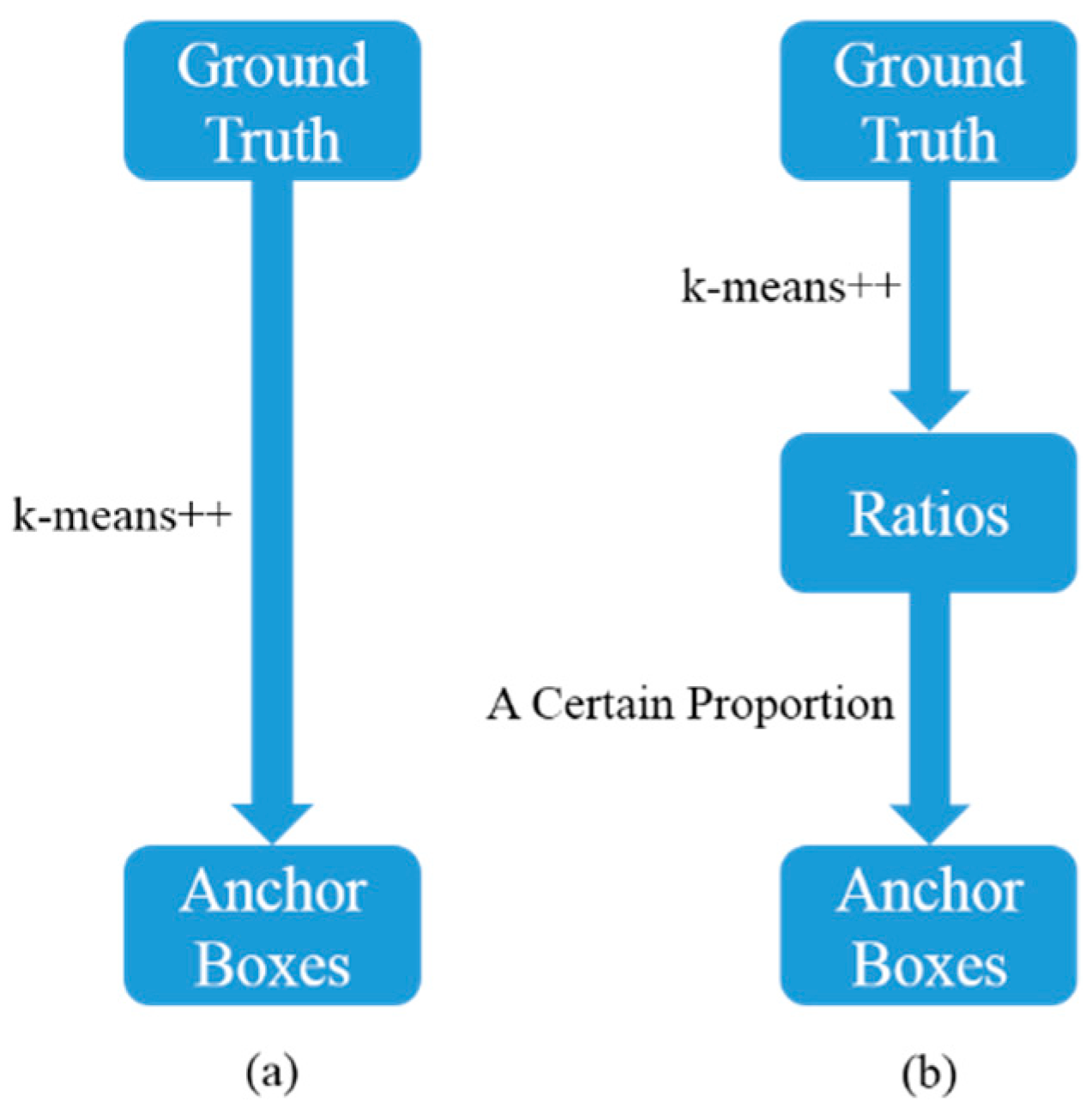

2.1. The Generation of Anchor Boxes

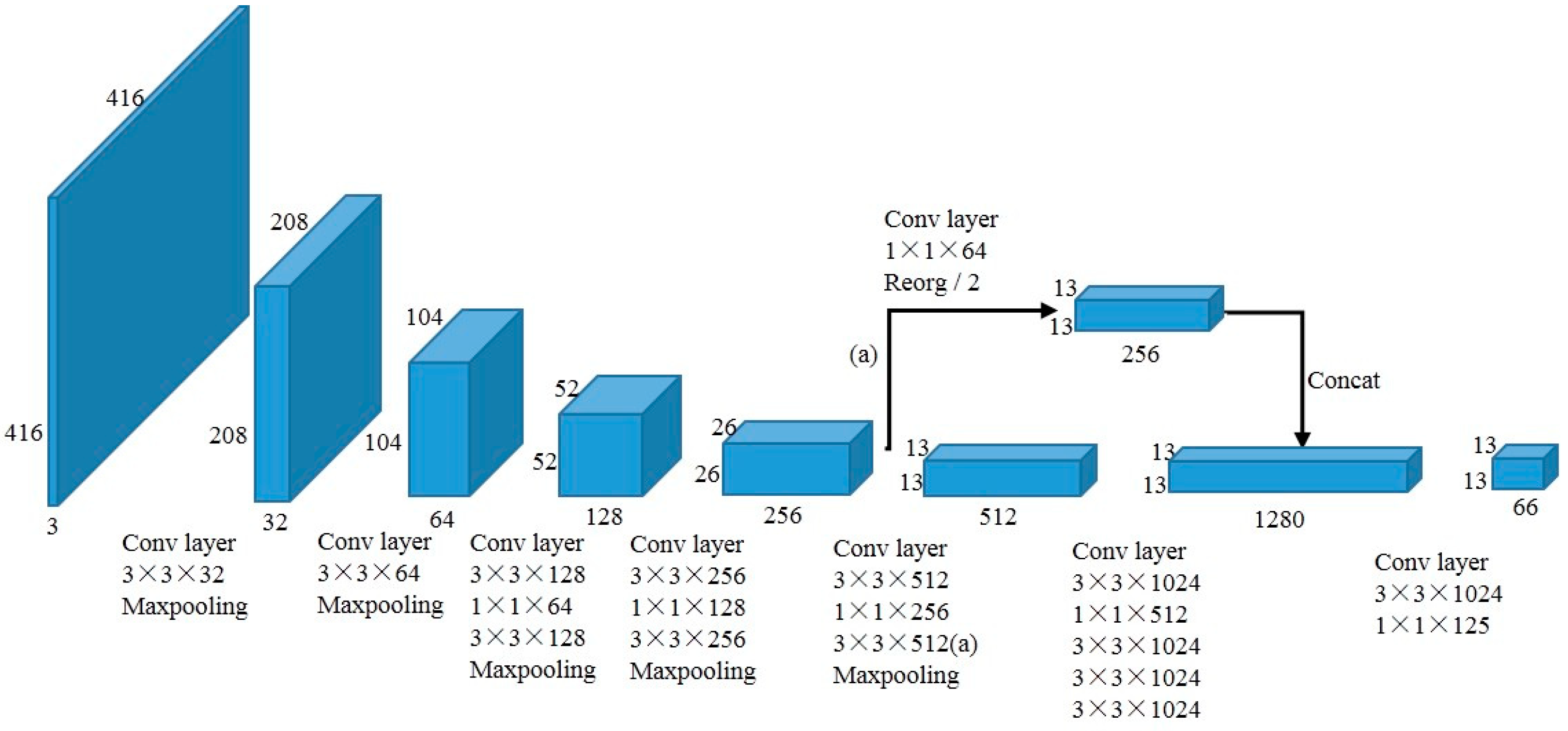

2.2. The Network Structure

2.3. The Loss Function

3. The Proposed Method

3.1. The Generation of Anchor Boxes

3.2. Focal Loss

4. Experiments

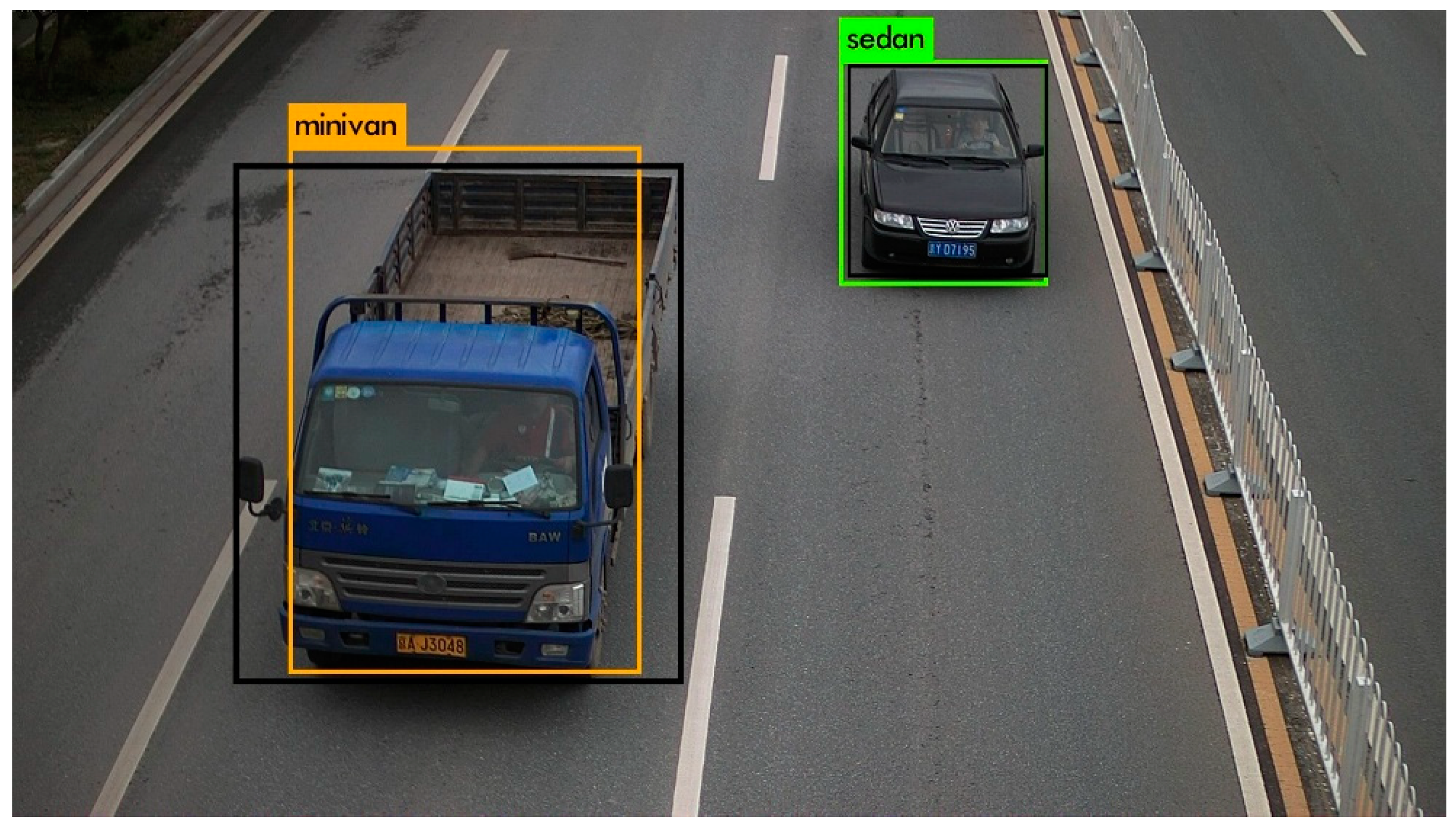

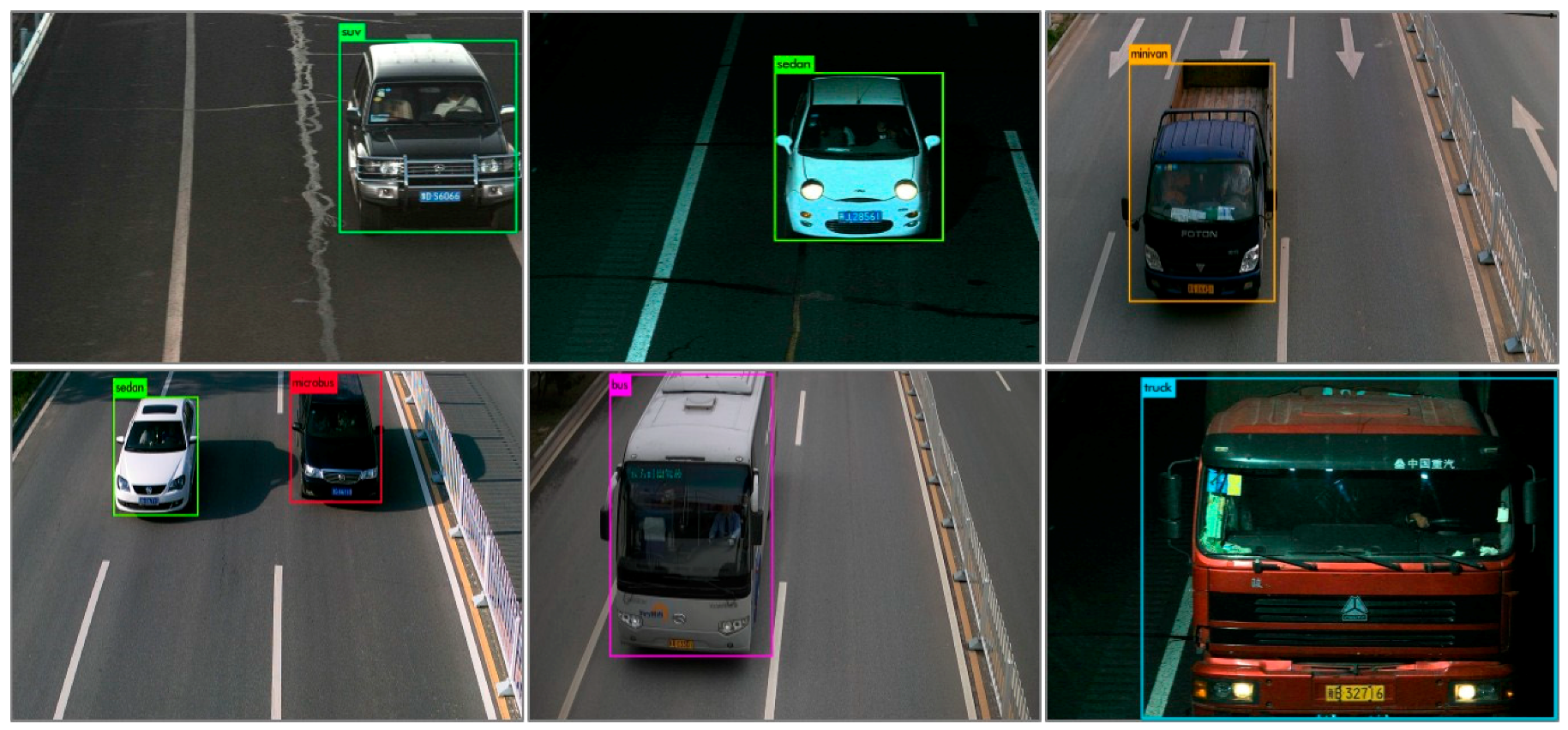

4.1. Dataset

4.2. Implementation

4.3. Experimental Results and Analysis

4.4. The Validation of Rk-means++

4.5. The Validation of Focal Loss

5. Conclusions

- (1)

- By analyzing the detection boxes of YOLOv2, it was found that the common sizes of the vehicles can be predicted well, but some unusual sizes of vehicles were predicted terribly. Therefore, to adapt most sizes of vehicles with different scales, instead of clustering the anchor boxes directly, a new anchor box generation method, Rk-means++, was proposed.

- (2)

- By analyzing the loss of the training stage, it was found that most of the loss was from the irrelevant background, which is due to the imbalance between the vehicles and background in each image. Therefore, the Focal Loss was introduced in YOLOv2 for vehicle detection to reduce the impact of easy negatives (background) on loss and make the loss focus on the hard examples.

- (3)

- The experiments conducted on the BIT-Vehicle public dataset demonstrated that our improved YOLOv2 obtained superior performance than others on vehicle detection.

- (4)

- By conducting numerous comparison experiments, the effectiveness of Rk-means++ and Focal Loss was validated.

Author Contributions

Funding

Conflicts of Interest

References

- Tsai, L.W.; Hsieh, J.W.; Fan, K.C. Vehicle Detection Using Normalized Color and Edge Map. IEEE Trans. Image Process. 2007, 16, 850–864. [Google Scholar] [CrossRef] [PubMed]

- Jazayeri, A.; Cai, H.; Zheng, J.Y.; Tuceryan, M. Vehicle Detection and Tracking in Car Video Based on Motion Model. IEEE Trans. Intell. Transp. Syst. 2011, 12, 583–595. [Google Scholar] [CrossRef]

- Cao, X.; Wu, C.; Yan, P.; Li, X. Linear SVM classification using boosting HOG features for vehicle detection in low-altitude airborne videos. In Proceedings of the 2011 IEEE International Conference Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 2421–2424. [Google Scholar]

- Guo, E.; Bai, L.; Zhang, Y.; Han, J. Vehicle Detection Based on Superpixel and Improved HOG in Aerial Images. In Proceedings of the International Conference on Image and Graphics, Shanghai, China, 13–15 September 2017; pp. 362–373. [Google Scholar]

- Laopracha, N.; Sunat, K. Comparative Study of Computational Time that HOG-Based Features Used for Vehicle Detection. In Proceedings of the International Conference on Computing and Information Technology, Helsinki, Finland, 21–23 August 2017; pp. 275–284. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 2261–2269. [Google Scholar]

- Pyo, J.; Bang, J.; Jeong, Y. Front collision warning based on vehicle detection using CNN. In Proceedings of the International SoC Design Conference (ISOCC), Jeju, Korea, 23–26 October 2016; pp. 163–164. [Google Scholar]

- Tang, Y.; Zhang, C.; Gu, R.; Li, P.; Yang, B. Vehicle Detection and Recognition for Intelligent Traffic Surveillance System. Multimed. Tools Appl. 2017, 76, 5817–5832. [Google Scholar] [CrossRef]

- Gao, Y.; Guo, S.; Huang, K.; Chen, J.; Gong, Q.; Zou, Y.; Bai, T.; Overett, G. Scale optimization for full-image-CNN vehicle detection. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 785–791. [Google Scholar]

- Huttunen, H.; Yancheshmeh, F.S.; Chen, K. Car type recognition with deep neural networks. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 1115–1120. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587. [Google Scholar]

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective Search for Object Recognition. Int. J. Comput. Vis. 2013, 104, 154–171. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. In Proceedings of the 2014 IEEE International Conference of European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 346–361. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497v3. [Google Scholar] [CrossRef] [PubMed]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-Based Fully Convolutional Networks. In Proceedings of the 2016 IEEE International Conference of Advances in Neural Information Processing Systems, Barcelona, Spain, 5–8 December 2016; pp. 379–387. [Google Scholar]

- Azam, S.; Rafique, A.; Jeon, M. Vehicle Pose Detection Using Region Based Convolutional Neural Network. In Proceedings of the International Conference on Control, Automation and Information Sciences (ICCAIS), Ansan, Korea, 27–29 October 2016; pp. 194–198. [Google Scholar]

- Tang, T.; Zhou, S.; Deng, Z.; Zou, H.; Lei, L. Vehicle Detection in Aerial Images Based on Region Convolutional Neural Networks and Hard Negative Example Mining. Sensors 2017, 17, 336. [Google Scholar] [CrossRef] [PubMed]

- Deng, Z.; Sun, H.; Zhou, S. Toward Fast and Accurate Vehicle Detection in Aerial Images Using Coupled Region-Based Convolutional Neural Networks. IEEE J.-Stars. 2017, 10, 3652–3664. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Sang, J.; Wu, Z.; Guo, P.; Hu, H.; Xiang, H.; Zhang, Q.; Cai, B. An Improved YOLOv2 for Vehicle Detection. Sensors 2018, 18, 4272. [Google Scholar] [CrossRef] [PubMed]

- Arthur, D.; Vassilvitskii, S. k-means plus plus: The Advantages of Careful Seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035. [Google Scholar]

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A k-means Clustering Algorithm. J. R. Stat. Soc. 1979, 28, 100–108. [Google Scholar] [CrossRef]

- Carlet, J.; Abayowa, B. Fast vehicle detection in aerial imagery. arXiv 2018, arXiv:1709.08666. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Dong, Z.; Wu, Y.; Pei, M.; Jia, Y. Vehicle type classification using a semisupervised convolutional neural network. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2247–2256. [Google Scholar] [CrossRef]

| Method | The Class AP (%) | mAP (%) | IoU (%) | Speed (s) | |||||

|---|---|---|---|---|---|---|---|---|---|

| Bus | Microbus | Minivan | Sedan | SUV | Truck | ||||

| YOLOv2 [25] | 98.34 | 95.03 | 91.11 | 97.42 | 93.62 | 98.41 | 95.65 | 90.44 | 0.0496 |

| YOLOv2_Vehicle [27] | 98.42 | 97.04 | 95.02 | 97.37 | 93.73 | 97.80 | 96.56 | 91.06 | 0.0486 |

| YOLOv3 [26] | 98.65 | 96.98 | 94.04 | 97.65 | 94.36 | 98.17 | 96.64 | 88.50 | 0.1100 |

| SSD300 VGG16 [24] | 97.97 | 97.98 | 90.28 | 97.15 | 91.25 | 97.75 | 93.75 | 91.60 | 0.0440 |

| Faster R-CNN VGG16 [18] | 99.05 | 93.75 | 91.38 | 98.14 | 94.75 | 98.17 | 95.87 | 92.19 | 0.4257 |

| Our Method | 98.86 | 96.63 | 95.90 | 98.23 | 94.86 | 99.30 | 97.30 | 92.97 | 0.0522 |

| Method | The Class AP (%) | mAP (%) | IoU (%) | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Bus | Microbus | Minivan | Sedan | SUV | Truck | ||||

| YOLOv2 | k-means | 98.34 | 95.03 | 91.11 | 97.42 | 93.62 | 98.41 | 95.65 | 90.44 |

| k-means++ | 98.60 | 96.29 | 93.16 | 97.47 | 93.72 | 98.15 | 96.23 | 91.05 | |

| Rk-means++ | 98.68 | 96.66 | 91.50 | 97.48 | 93.59 | 97.33 | 95.88 | 92.18 | |

| YOLOv3 | k-means | 98.65 | 96.98 | 94.04 | 97.65 | 94.36 | 98.17 | 96.64 | 88.50 |

| k-means++ | 98.70 | 96.44 | 93.80 | 97.66 | 94.76 | 97.78 | 96.52 | 88.86 | |

| Rk-means++ | 98.32 | 97.08 | 92.65 | 97.70 | 94.27 | 97.96 | 96.33 | 90.49 | |

| YOLOv2 | The Class AP (%) | mAP (%) | IoU (%) | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Bus | Microbus | Minivan | Sedan | SUV | Truck | ||||

| k-means | Wo FL | 98.34 | 95.03 | 91.11 | 97.42 | 93.62 | 98.41 | 95.65 | 90.44 |

| With FL | 98.34 | 96.80 | 94.81 | 98.20 | 95.34 | 99.32 | 97.13 | 92.06 | |

| k-means++ | Wo FL | 98.60 | 96.29 | 93.16 | 97.47 | 93.72 | 98.15 | 96.23 | 91.05 |

| With FL | 98.48 | 97.44 | 96.03 | 98.24 | 95.68 | 99.17 | 97.51 | 92.30 | |

| Rk-means++ | Wo FL | 98.68 | 96.66 | 91.50 | 97.48 | 93.59 | 97.33 | 95.88 | 92.18 |

| With FL | 98.86 | 96.63 | 95.90 | 98.23 | 94.86 | 99.30 | 97.30 | 92.97 | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Z.; Sang, J.; Zhang, Q.; Xiang, H.; Cai, B.; Xia, X. Multi-Scale Vehicle Detection for Foreground-Background Class Imbalance with Improved YOLOv2. Sensors 2019, 19, 3336. https://doi.org/10.3390/s19153336

Wu Z, Sang J, Zhang Q, Xiang H, Cai B, Xia X. Multi-Scale Vehicle Detection for Foreground-Background Class Imbalance with Improved YOLOv2. Sensors. 2019; 19(15):3336. https://doi.org/10.3390/s19153336

Chicago/Turabian StyleWu, Zhongyuan, Jun Sang, Qian Zhang, Hong Xiang, Bin Cai, and Xiaofeng Xia. 2019. "Multi-Scale Vehicle Detection for Foreground-Background Class Imbalance with Improved YOLOv2" Sensors 19, no. 15: 3336. https://doi.org/10.3390/s19153336

APA StyleWu, Z., Sang, J., Zhang, Q., Xiang, H., Cai, B., & Xia, X. (2019). Multi-Scale Vehicle Detection for Foreground-Background Class Imbalance with Improved YOLOv2. Sensors, 19(15), 3336. https://doi.org/10.3390/s19153336