A Universal Vacant Parking Slot Recognition System Using Sensors Mounted on Off-the-Shelf Vehicles

Abstract

1. Introduction

- (1)

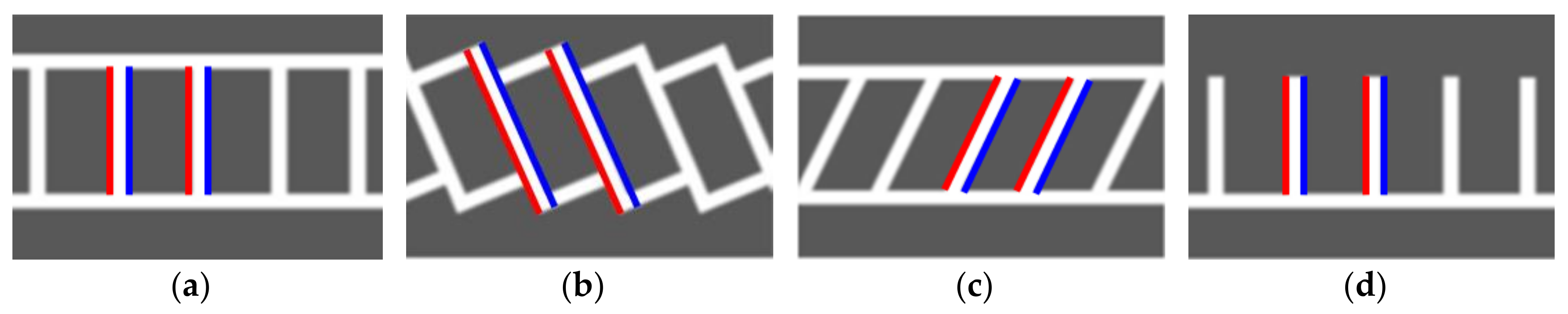

- To recognize various types of parking slot markings with open and close entrances, this paper proposes an approach that generates parking slot candidates based on separating lines and confirms their entrances using both line and corner features. Previous parallel line-based methods cannot handle parking slot markings with open entrances because they only detect line features.

- (2)

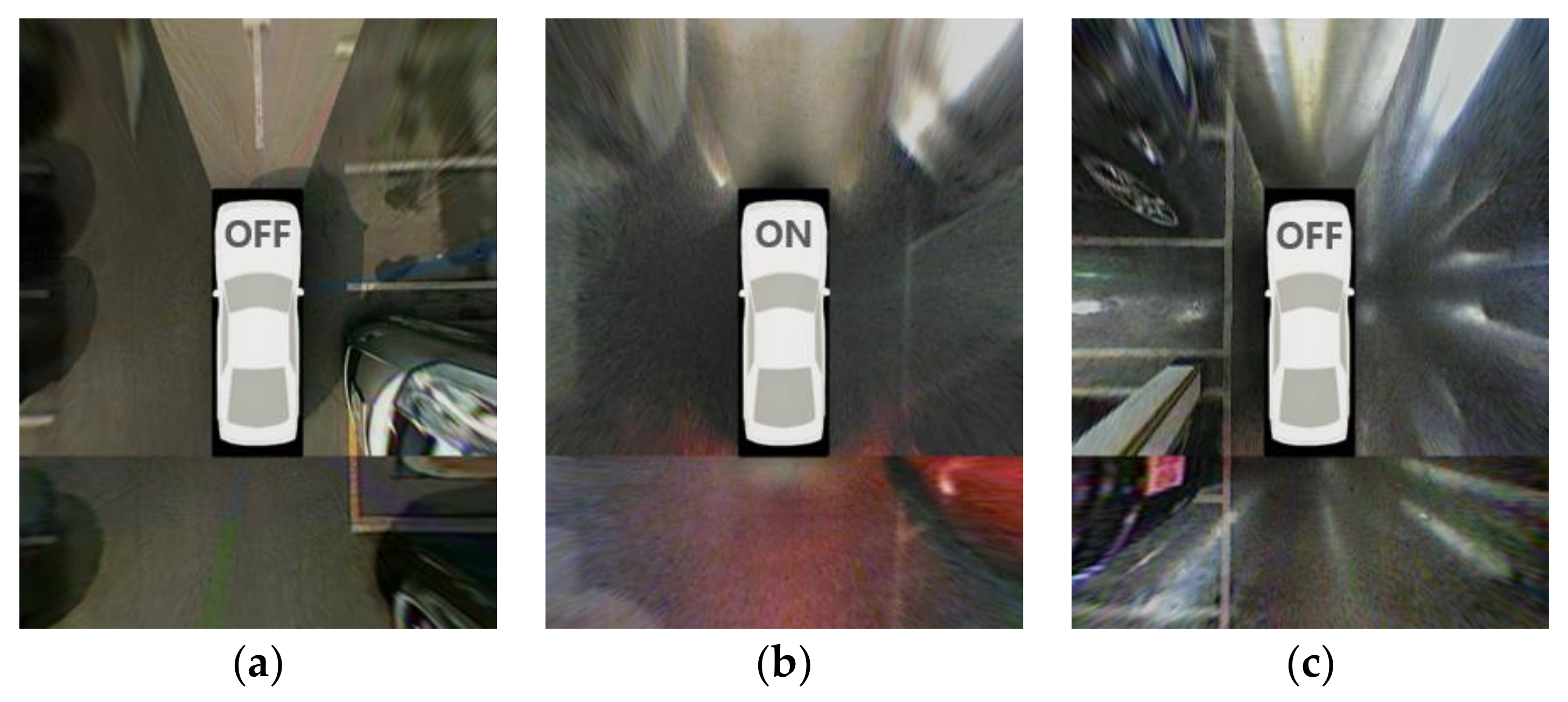

- To achieve robustness in severe lighting conditions at night and underground, this paper proposes an approach that tracks separating lines and parking slots using in-vehicle motion sensor-based odometry, whose accuracy is independent of lighting conditions. Previous slot-marking-based methods have limitations in handling severe lighting conditions because they only use features extracted from the current image.

- (3)

- This paper efficiently combines the two aforementioned approaches to develop a universal parking slot recognition method that can handle various types of parking slot markings during the day, at night, and underground. The proposed method was quantitatively evaluated using the dataset obtained under various severe lighting conditions and showed outstanding performance. According to our literature review, there has been no previous method that can handle various types of parking slot markings and a variety of severe lighting conditions, and no previous method has been quantitatively evaluated in these different settings.

2. Related Research

2.1. User-Interface-Based Approach

2.2. Free-Space-Based Approach

2.3. Slot-Marking-Based Approach

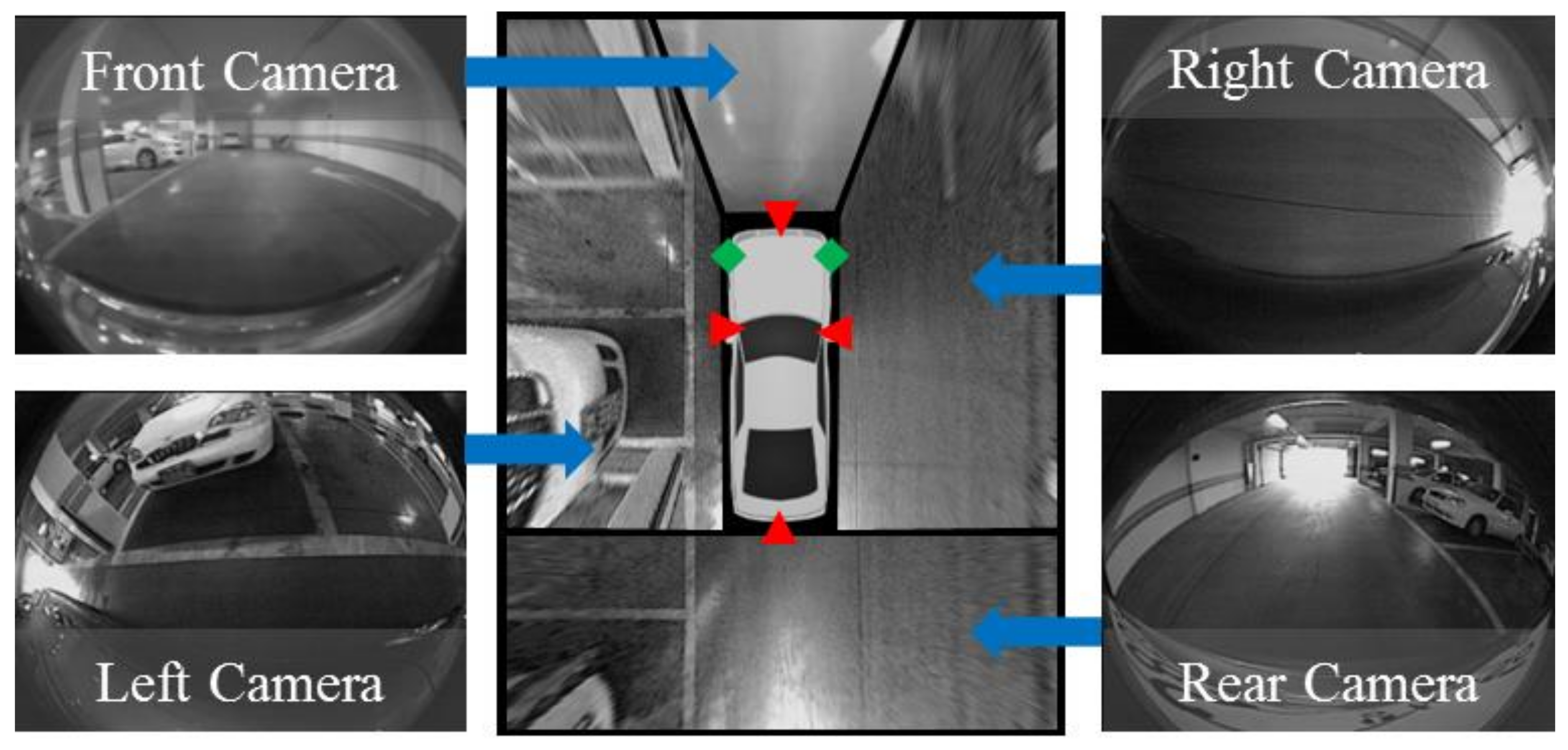

3. Sensor Configuration

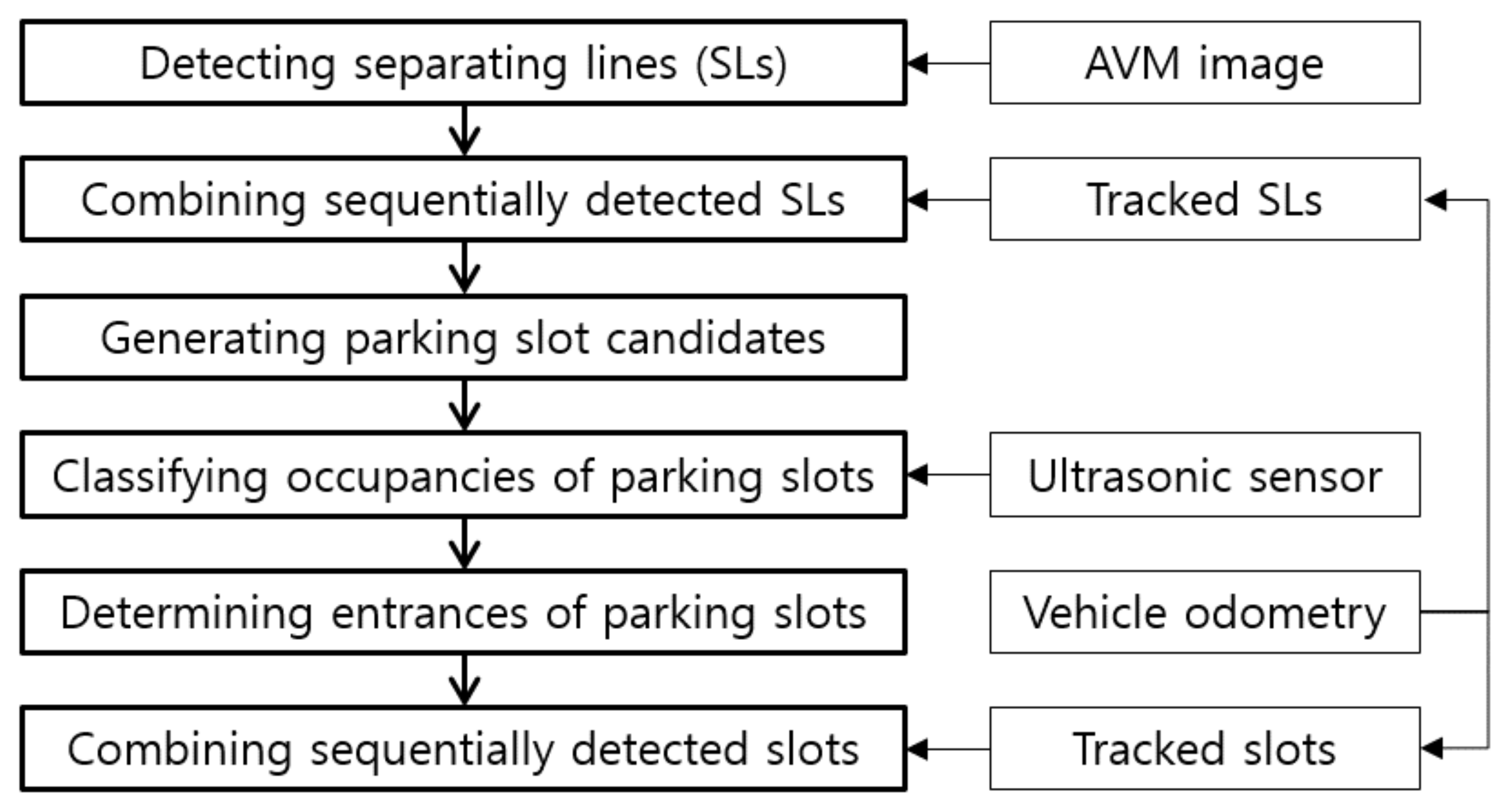

4. Overview of the Proposed Method

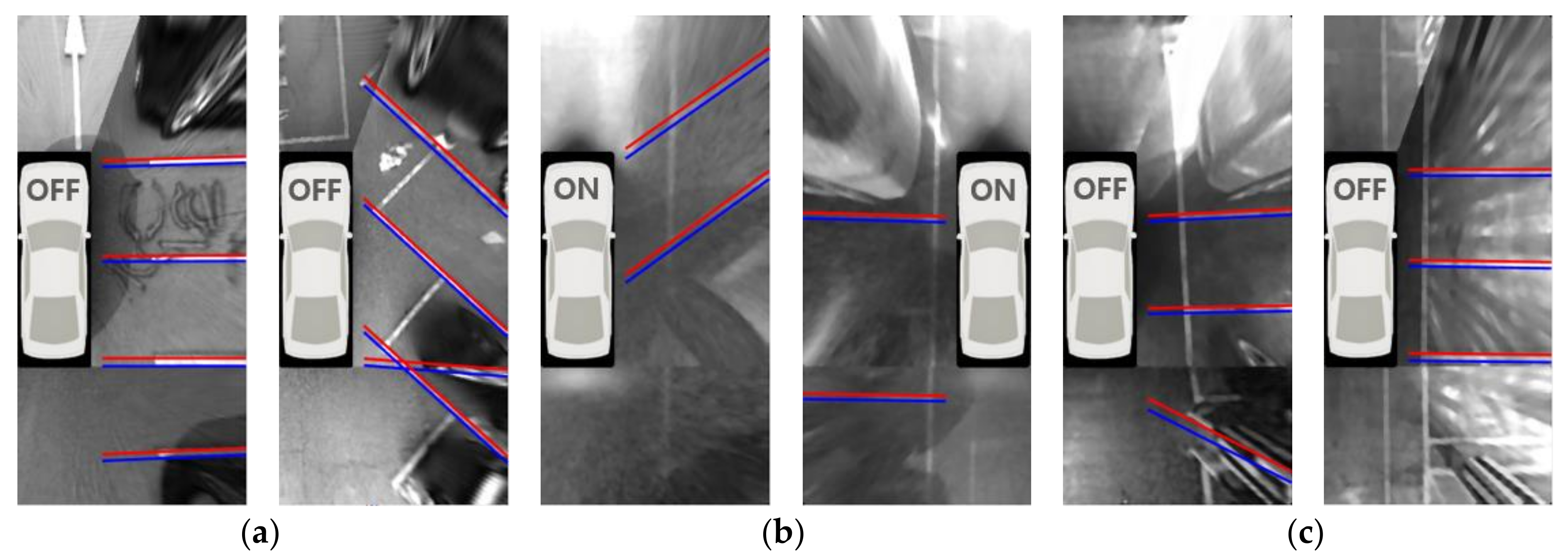

5. Separating Line Detection and Tracking

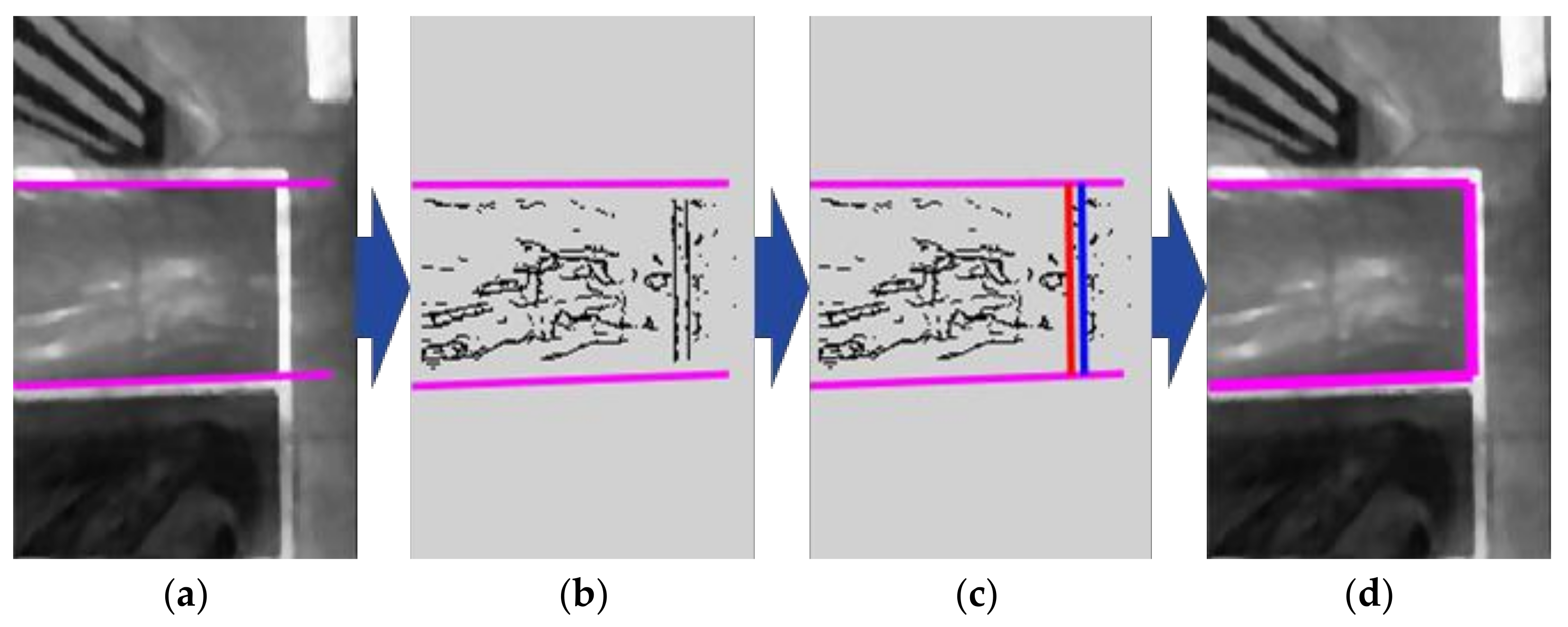

5.1. Separating Line Detection

- Randomly select one edge pixel.

- Randomly select two edge pixels whose orientations are opposite to that of the previously selected one.

- Estimate parallel line pair parameters using the selected three edge pixels via the least squares estimator.

- Count the consensus set (edge pixels) based on perpendicular distance to the estimated lines. The orientations of the pixels in the consensus set should coincide with those of the randomly selected edge pixels.

- Repeat the above steps and select the parameters that yield the largest consensus set.

- Re-estimate parallel line pair parameters using all edge pixels in the selected consensus set based on the least squares estimator.
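The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the parallel line pair is assumed to be parameterized as y = a·x + b1 and y = a·x + b2 (which is ill-conditioned for near-vertical lines), edge pixels are assumed to arrive as an (N, 2) coordinate array with one gradient orientation per pixel in radians, and the names `fit_pair`, `angle_diff`, `ransac_parallel_pair`, as well as the thresholds `dist_tol` and `ang_tol`, are hypothetical.

```python
import numpy as np

def fit_pair(pts1, pts2):
    """Least-squares fit of y = a*x + b1 (pts1) and y = a*x + b2 (pts2), shared slope a."""
    n1, n2 = len(pts1), len(pts2)
    A = np.zeros((n1 + n2, 3))
    A[:n1, 0], A[:n1, 1] = pts1[:, 0], 1.0   # rows for line 1: [x, 1, 0]
    A[n1:, 0], A[n1:, 2] = pts2[:, 0], 1.0   # rows for line 2: [x, 0, 1]
    y = np.concatenate([pts1[:, 1], pts2[:, 1]])
    params, *_ = np.linalg.lstsq(A, y, rcond=None)
    return params  # (a, b1, b2)

def angle_diff(a, b):
    """Absolute angular difference wrapped to [0, pi]."""
    d = np.abs(a - b) % (2 * np.pi)
    return np.minimum(d, 2 * np.pi - d)

def ransac_parallel_pair(xy, theta, n_iter=200, dist_tol=1.5,
                         ang_tol=np.deg2rad(20), seed=None):
    """xy: (N, 2) edge pixel coordinates; theta: (N,) gradient orientations (assumed inputs)."""
    rng = np.random.default_rng(seed)
    best, best_count = None, -1
    for _ in range(n_iter):
        # Step 1: randomly select one edge pixel.
        i = rng.integers(len(xy))
        # Step 2: randomly select two pixels with the opposite orientation.
        opposite = np.flatnonzero(angle_diff(theta, theta[i] + np.pi) < ang_tol)
        if len(opposite) < 2:
            continue
        j, k = rng.choice(opposite, size=2, replace=False)
        if xy[j, 0] == xy[k, 0]:
            continue  # degenerate sample for the slope-intercept form
        # Step 3: estimate the pair parameters from the three pixels.
        a, b1, b2 = fit_pair(xy[[i]], xy[[j, k]])
        # Step 4: consensus by perpendicular distance and matching orientation.
        norm = np.hypot(a, 1.0)
        d1 = np.abs(a * xy[:, 0] - xy[:, 1] + b1) / norm
        d2 = np.abs(a * xy[:, 0] - xy[:, 1] + b2) / norm
        in1 = (d1 < dist_tol) & (angle_diff(theta, theta[i]) < ang_tol)
        in2 = (d2 < dist_tol) & (angle_diff(theta, theta[j]) < ang_tol)
        count = int(in1.sum() + in2.sum())
        # Step 5: keep the parameters with the largest consensus set.
        if count > best_count:
            best_count, best = count, (in1, in2)
    if best is None:
        return None
    # Step 6: re-estimate using all pixels in the selected consensus set.
    in1, in2 = best
    return fit_pair(xy[in1], xy[in2]), in1, in2
```

The function returns the refined parameters `(a, b1, b2)` together with boolean masks for the two consensus sets, or `None` if no valid sample was drawn. Which of the two lines ends up as `b1` depends on which side the first random pixel falls on.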

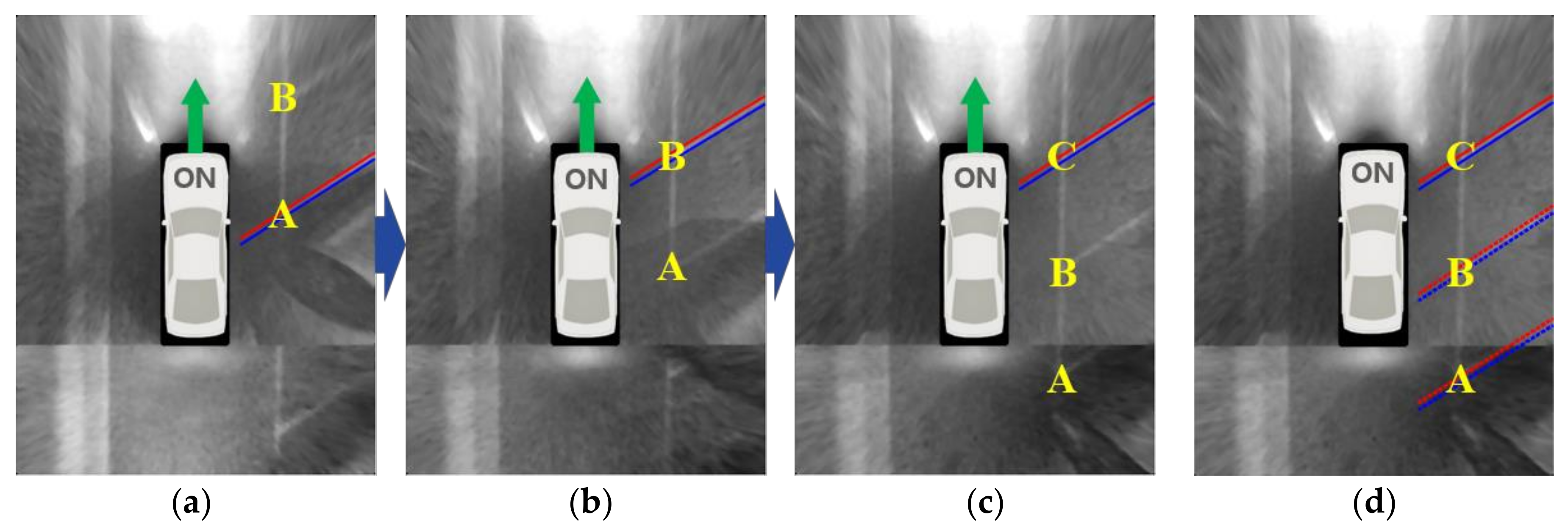

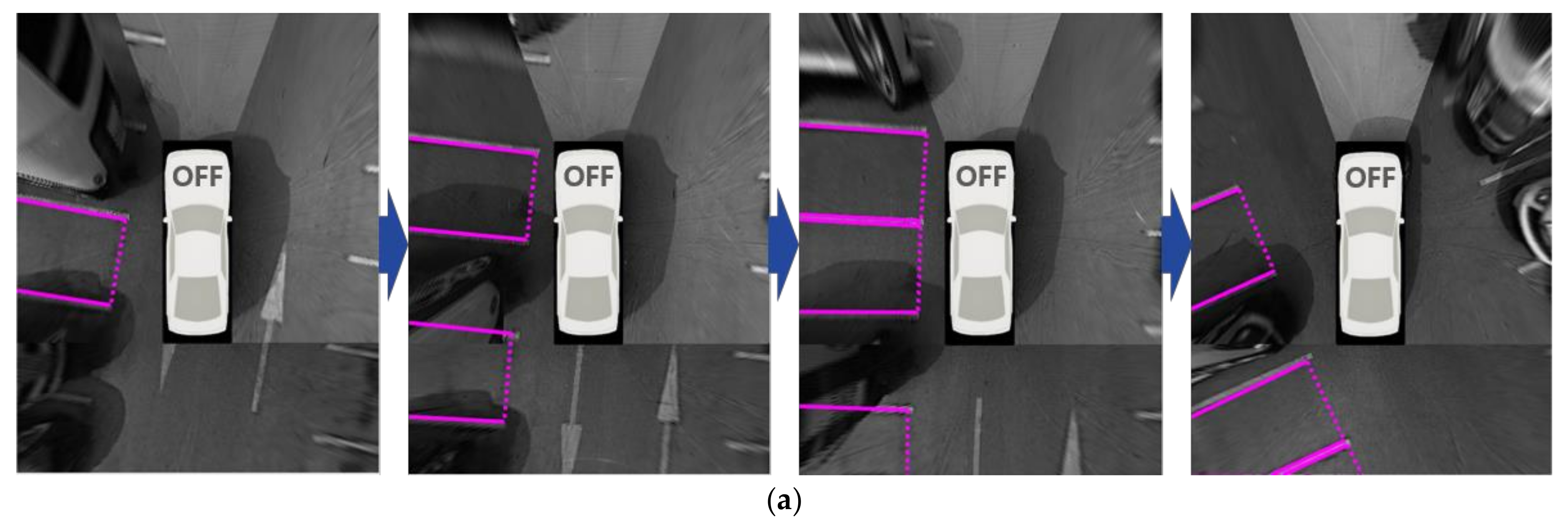

5.2. Separating Line Tracking
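The contributions listed in the Introduction state that separating lines are tracked using in-vehicle motion sensor-based odometry. As a rough sketch of that idea, and not the paper's actual formulation, the snippet below predicts where a previously detected line should appear after the vehicle moves. It assumes a planar motion model, a line stored in normal form x·cos(φ) + y·sin(φ) = ρ in the vehicle frame, and a motion increment derived from speed and yaw rate; the function names `motion_increment` and `predict_line` and all parameters are hypothetical.

```python
import math

def motion_increment(speed, yaw_rate, dt):
    """Approximate planar motion over dt from speed [m/s] and yaw rate [rad/s].

    Assumption: the displacement is taken along the mean heading over the interval.
    Returns (dx, dy, dpsi), the new vehicle pose expressed in the previous vehicle frame.
    """
    dpsi = yaw_rate * dt
    dist = speed * dt
    return dist * math.cos(dpsi / 2.0), dist * math.sin(dpsi / 2.0), dpsi

def predict_line(phi, rho, dx, dy, dpsi):
    """Re-express the line x*cos(phi) + y*sin(phi) = rho in the new vehicle frame.

    A point p_old in the previous frame maps to p_new = R(-dpsi) @ (p_old - t),
    with t = (dx, dy). Substituting into the line equation rotates the normal
    by -dpsi and shifts the offset by the projection of t onto the normal.
    The returned rho may be negative; it is not renormalized here.
    """
    phi_new = phi - dpsi
    rho_new = rho - (dx * math.cos(phi) + dy * math.sin(phi))
    return phi_new, rho_new
```

For example, a line 5 m ahead and perpendicular to the driving direction (φ = 0, ρ = 5) is predicted at ρ = 3 after the vehicle drives 2 m straight forward. Because the prediction depends only on the motion sensors, it remains available when the current image yields no usable line features.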

6. Parking Slot Generation

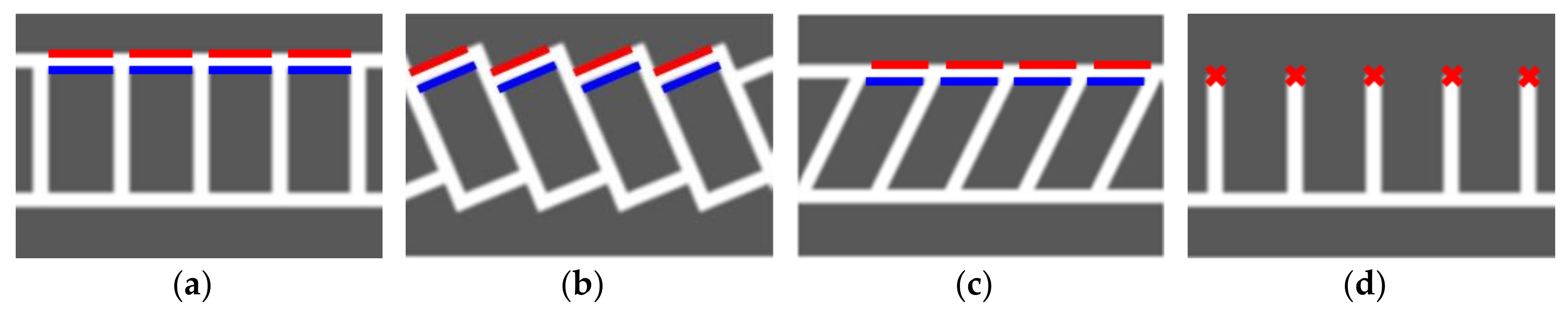

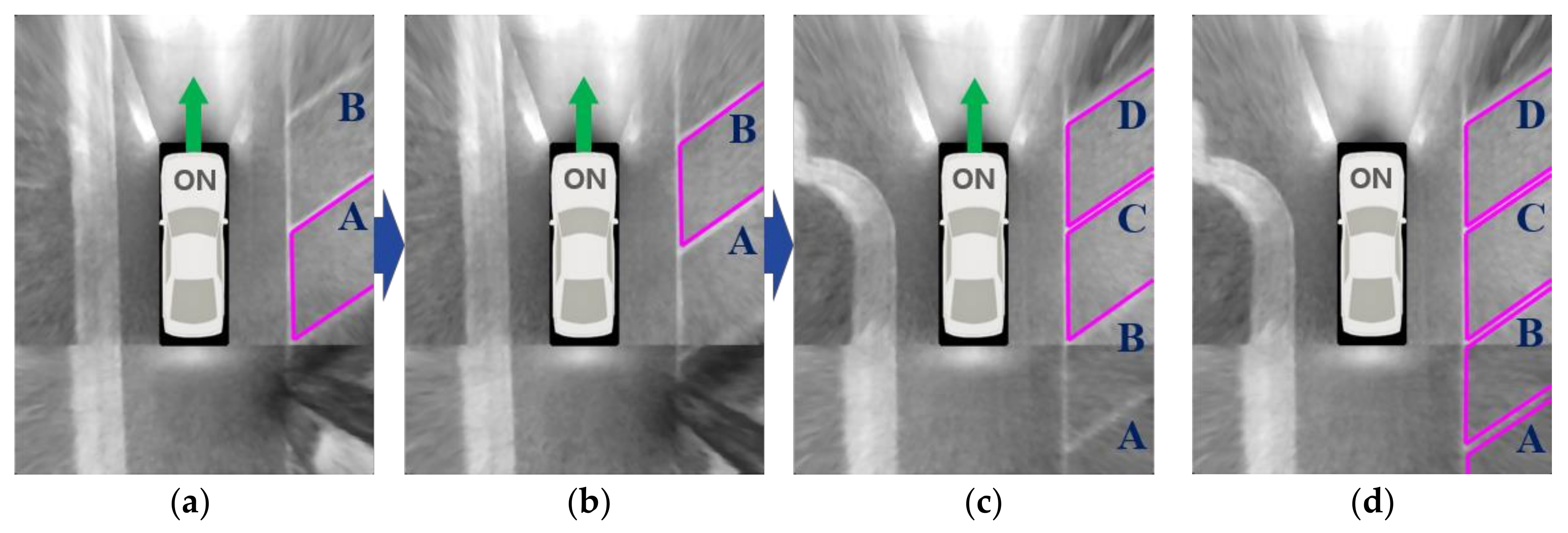

6.1. Parking Slot Candidate Generation

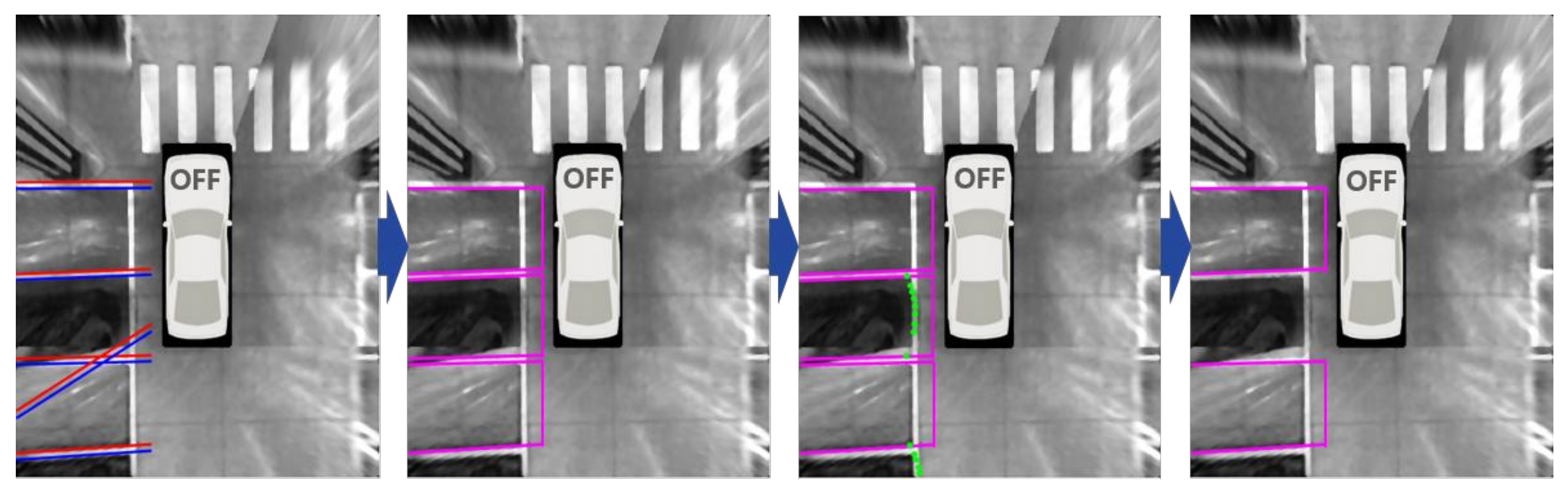

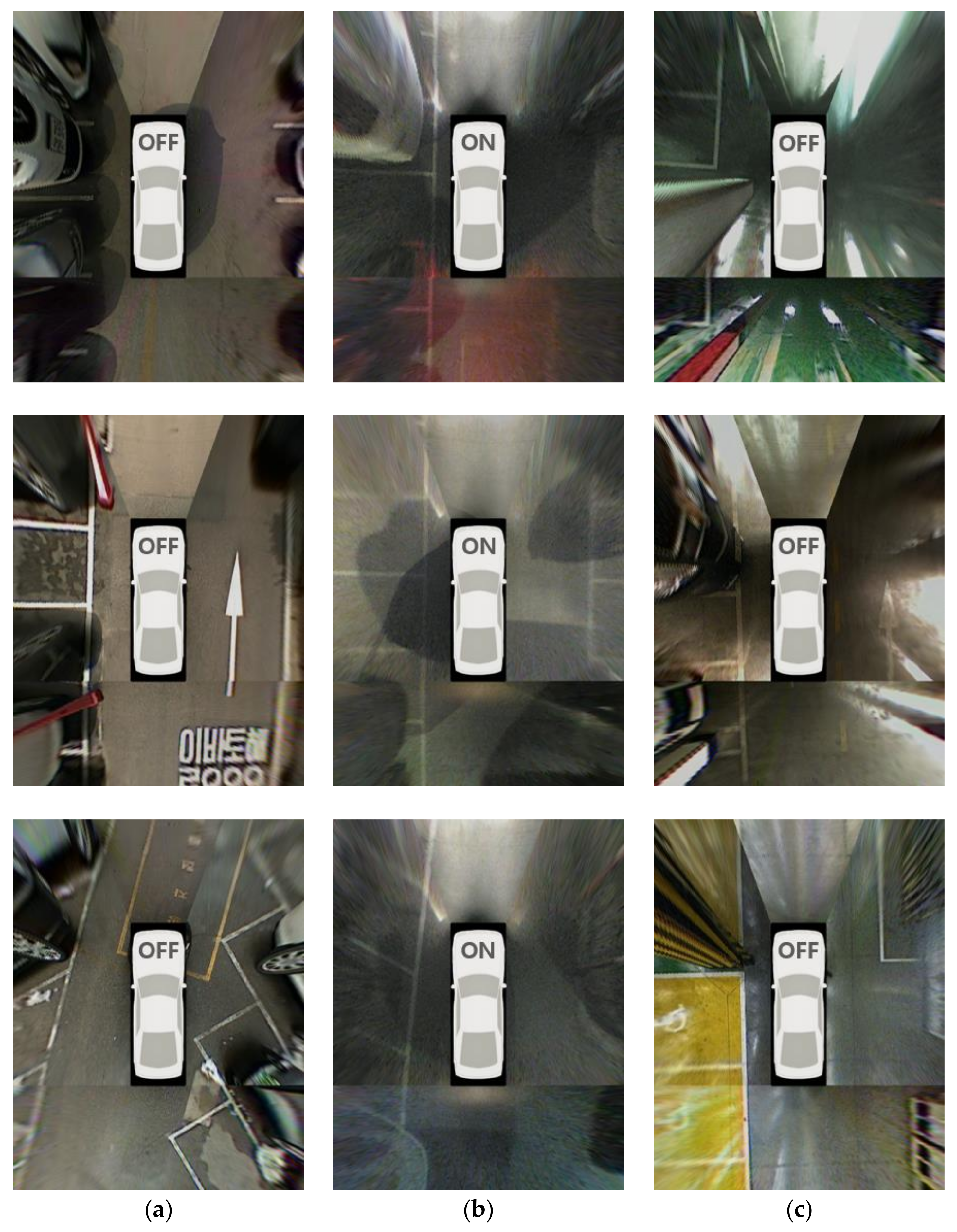

6.2. Parking Slot Occupancy Classification

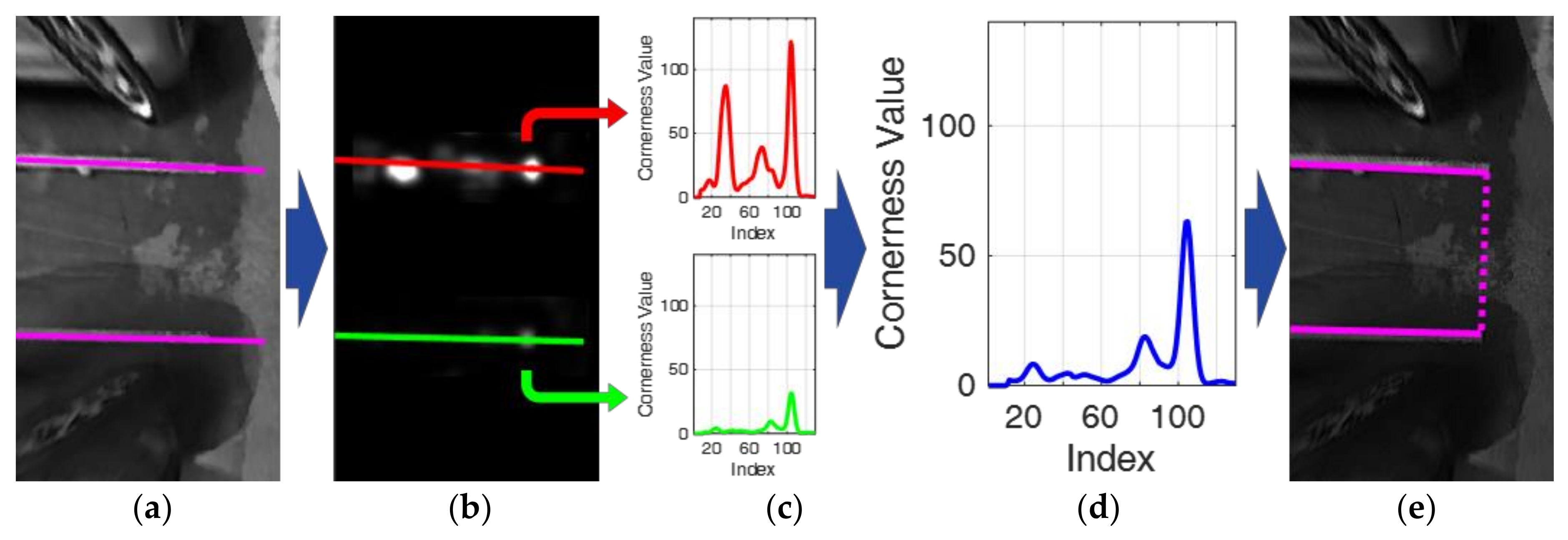

6.3. Parking Slot Entrance Detection

7. Parking Slot Tracking and Combining

8. Experiments

9. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Category | Type | Number of Vacant Parking Slots |
|---|---|---|
| Lighting condition | Daytime | 235 |
| Lighting condition | Nighttime | 214 |
| Lighting condition | Underground | 160 |
| Parking slot type | Rectangular | 287 |
| Parking slot type | Slanted rectangular | 98 |
| Parking slot type | Diamond | 120 |
| Parking slot type | Open rectangular | 104 |
| Total | | 609 |

| Environment | #slot | #TP | #FP | Recall | Precision |
|---|---|---|---|---|---|
| Daytime | 235 | 230 | 4 | 97.87% | 98.29% |
| Nighttime | 214 | 202 | 1 | 94.39% | 99.51% |
| Underground | 160 | 148 | 9 | 92.50% | 94.27% |
| Total | 609 | 580 | 14 | 95.24% | 97.64% |
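The recall and precision columns in the table above are consistent with recall = #TP / #slot and precision = #TP / (#TP + #FP). The short check below recomputes them from the tabulated counts; the function name `recall_precision` and the `results` dictionary are introduced here only for illustration.

```python
def recall_precision(n_slots, tp, fp):
    """Recall = TP / number of vacant slots; precision = TP / (TP + FP)."""
    return tp / n_slots, tp / (tp + fp)

# Counts copied from the table above: (#slot, #TP, #FP).
results = {
    "Daytime": (235, 230, 4),
    "Nighttime": (214, 202, 1),
    "Underground": (160, 148, 9),
}

# The "Total" row is the column-wise sum of the three environments.
results["Total"] = tuple(sum(col) for col in zip(*results.values()))

for name, (n_slots, tp, fp) in results.items():
    recall, precision = recall_precision(n_slots, tp, fp)
    print(f"{name}: recall {recall:.2%}, precision {precision:.2%}")
```

The same two formulas reproduce the values in the other result tables, so the tables can be compared on equal terms.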

| Environment | #slot | #TP | #FP | Recall | Precision |
|---|---|---|---|---|---|
| Daytime | 235 | 219 | 25 | 93.19% | 89.75% |
| Nighttime | 214 | 65 | 161 | 30.37% | 28.76% |
| Underground | 160 | 101 | 53 | 63.13% | 65.58% |
| Total | 609 | 385 | 239 | 63.22% | 61.70% |

| Environment | #slot | #TP | #FP | Recall | Precision |
|---|---|---|---|---|---|
| Daytime | 235 | 65 | 1 | 27.66% | 98.48% |
| Nighttime | 214 | 36 | 0 | 16.82% | 100.00% |
| Underground | 160 | 150 | 8 | 93.75% | 94.94% |
| Total | 609 | 251 | 9 | 41.22% | 96.54% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Suhr, J.K.; Jung, H.G. A Universal Vacant Parking Slot Recognition System Using Sensors Mounted on Off-the-Shelf Vehicles. Sensors 2018, 18, 1213. https://doi.org/10.3390/s18041213
