Optimized Multi-Spectral Filter Array Based Imaging of Natural Scenes

Abstract

1. Introduction

- First, we design and develop a novel power-law prior-based channel optimization method that models the various errors associated with spectral reconstruction: the error due to estimation (reconstruction error), the error due to noise (imaging error), and the error due to demosaicing (demosaicing error). These errors depend only on the camera parameters (e.g., the spectral sensitivities of the channels, the MSFA pattern, the demosaicing order, and the variance of the sensor noise) and not on the scene content. To the best of our knowledge, this is the first model that defines all of these errors for a content-independent multi-spectral imaging pipeline.

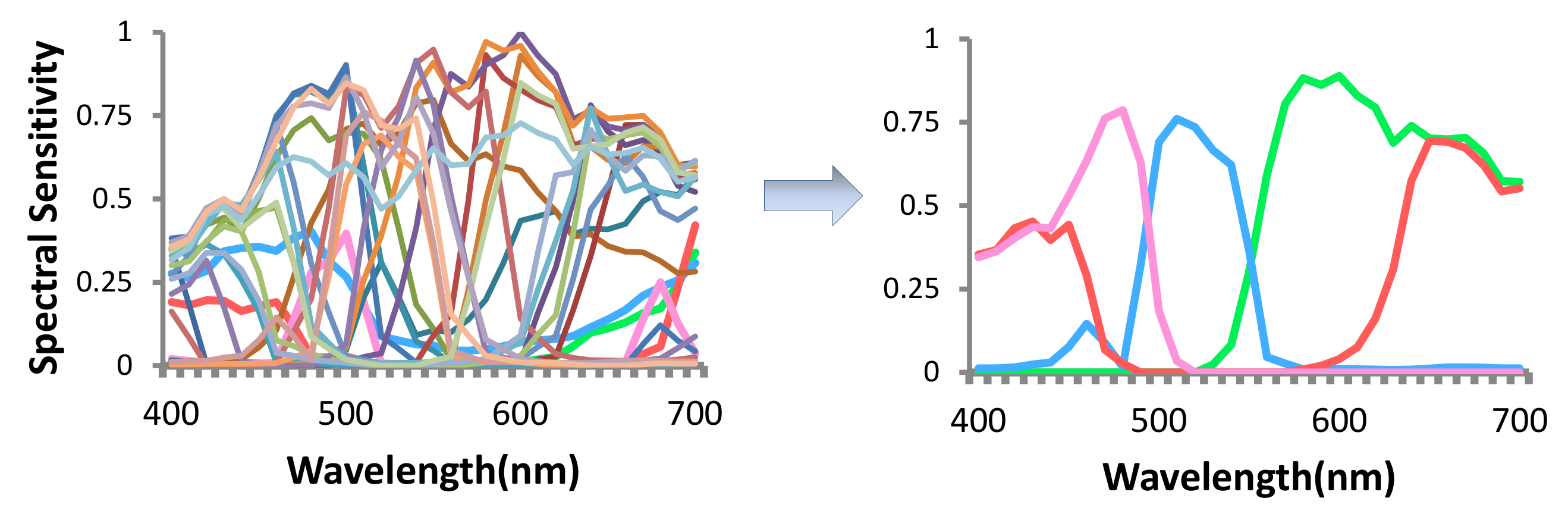

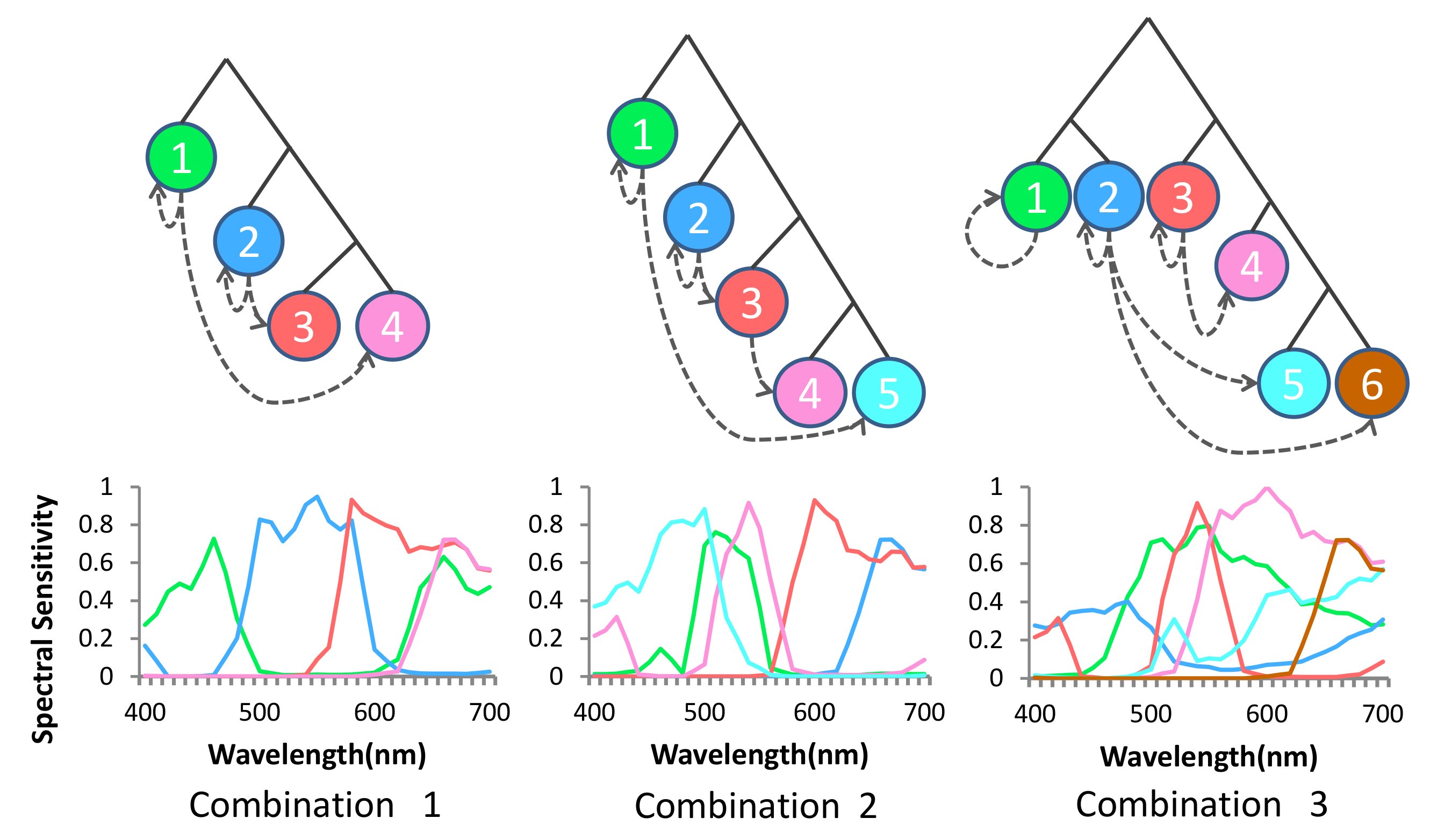

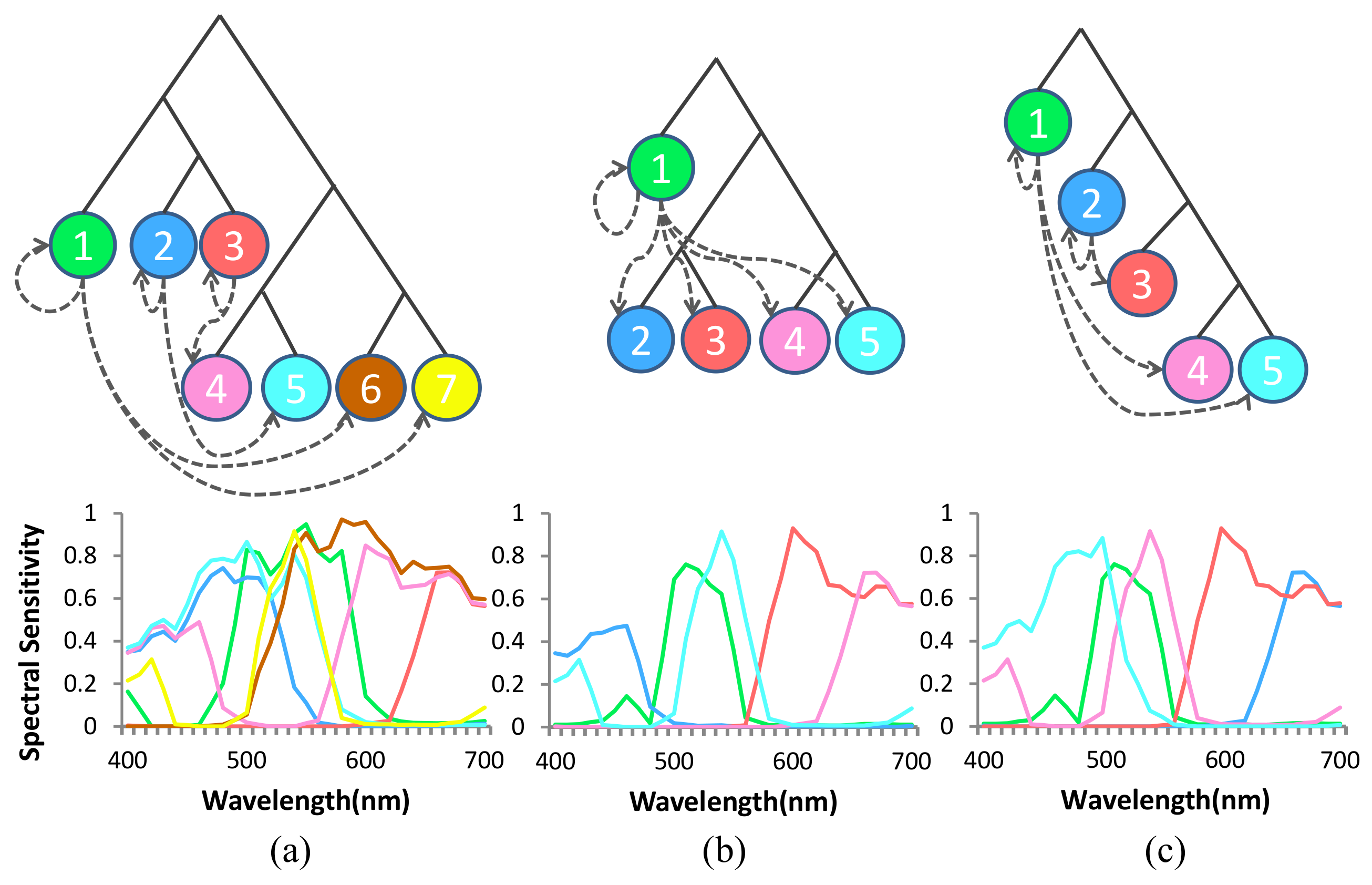

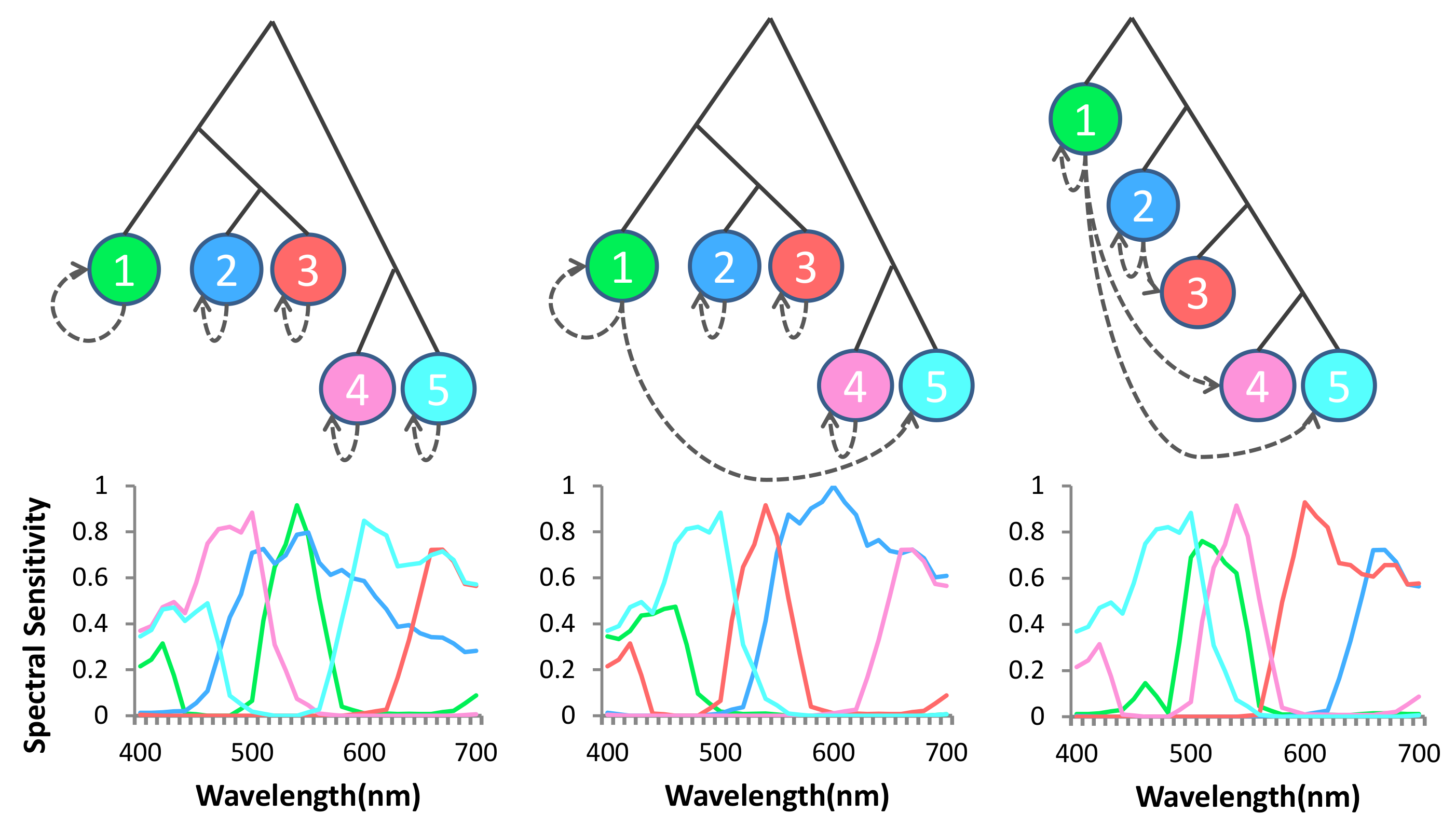

- Second, we construct an objective function that quantifies the total error as a combination of the three above-mentioned errors. We then use a discrete particle swarm optimization (DPSO) method to optimize the imaging pipeline by (1) selecting a few channels from a large set of candidate channels; (2) arranging the chosen channels into a suitable mosaic pattern on the MSFA; and (3) selecting a channel ordering for demosaicing, such that the objective function, and hence the total error in spectral reconstruction, is minimized.
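The key property claimed above is that the error model takes only camera parameters as input. As an illustration of that idea, and not the paper's exact formulation (which is not reproduced in this section), the sketch below assumes a linear MMSE (Wiener) estimator and a zero-mean Gaussian prior on spectra whose Fourier power spectrum falls off as a power law `1/|f|^alpha`. The helpers `power_law_prior`, `error_terms` and `gaussian_channel`, the circulant (wrap-around) covariance, and the Gaussian channel shapes are all hypothetical. For such an estimator `W`, the expected squared error splits exactly into a reconstruction term `tr((I - W S) Σ (I - W S)^T)` and a noise-induced imaging term `σ² tr(W W^T)`; demosaicing error is not modeled here.

```python
import numpy as np


def power_law_prior(n_bands, alpha=2.0):
    """Hypothetical prior covariance over n_bands wavelength samples whose
    Fourier power spectrum falls off as 1/|f|**alpha. The matrix is circulant
    (it treats the spectrum as periodic), which keeps it positive definite."""
    freqs = np.fft.fftfreq(n_bands)           # freqs[0] is the DC term
    power = np.empty(n_bands)
    power[1:] = np.abs(freqs[1:]) ** (-alpha)
    power[0] = power[1:].max()                # finite DC term (assumption)
    autocov = np.real(np.fft.ifft(power))     # autocovariance of the prior
    idx = np.arange(n_bands)
    cov = autocov[(idx[:, None] - idx[None, :]) % n_bands]
    return cov / cov[0, 0]                    # unit variance per band


def error_terms(S, cov, noise_var):
    """Expected squared error of the Wiener estimate x_hat = W y, y = S x + n,
    split into a reconstruction term and an imaging (noise) term.
    S: (m, n) channel sensitivities, cov: (n, n) prior covariance,
    noise_var: variance of i.i.d. sensor noise."""
    m, n = S.shape
    G = S @ cov @ S.T + noise_var * np.eye(m)        # covariance of measurements
    W = np.linalg.solve(G, S @ cov).T                # W = cov S^T G^-1
    R = np.eye(n) - W @ S                            # residual operator
    recon = float(np.trace(R @ cov @ R.T))           # error of the m-channel projection
    imaging = float(noise_var * np.trace(W @ W.T))   # error injected by sensor noise
    return recon, imaging


wavelengths = np.linspace(400, 700, 31)  # nm, 10 nm steps (assumption)


def gaussian_channel(center, width=30.0):
    """Hypothetical Gaussian spectral sensitivity centred at `center` nm."""
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)


cov = power_law_prior(len(wavelengths))
S = np.stack([gaussian_channel(c) for c in (450, 520, 590, 660)])
recon, imaging = error_terms(S, cov, noise_var=1e-3)
print(f"reconstruction={recon:.4f} imaging={imaging:.4f} total={recon + imaging:.4f}")
```

Because both terms are functions of `S`, `cov` and `noise_var` alone, a candidate channel set can be scored without looking at any image content, which is what makes a search over channel sets feasible.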

2. Related Works

3. Modeling Error in Spectral Recovery

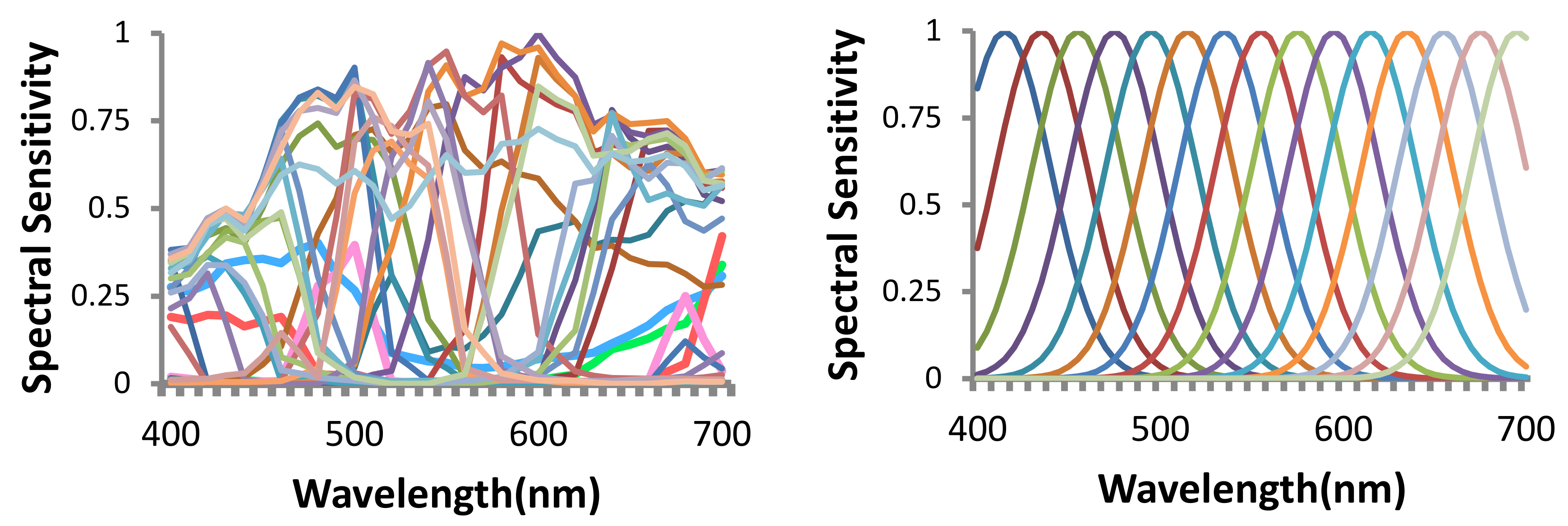

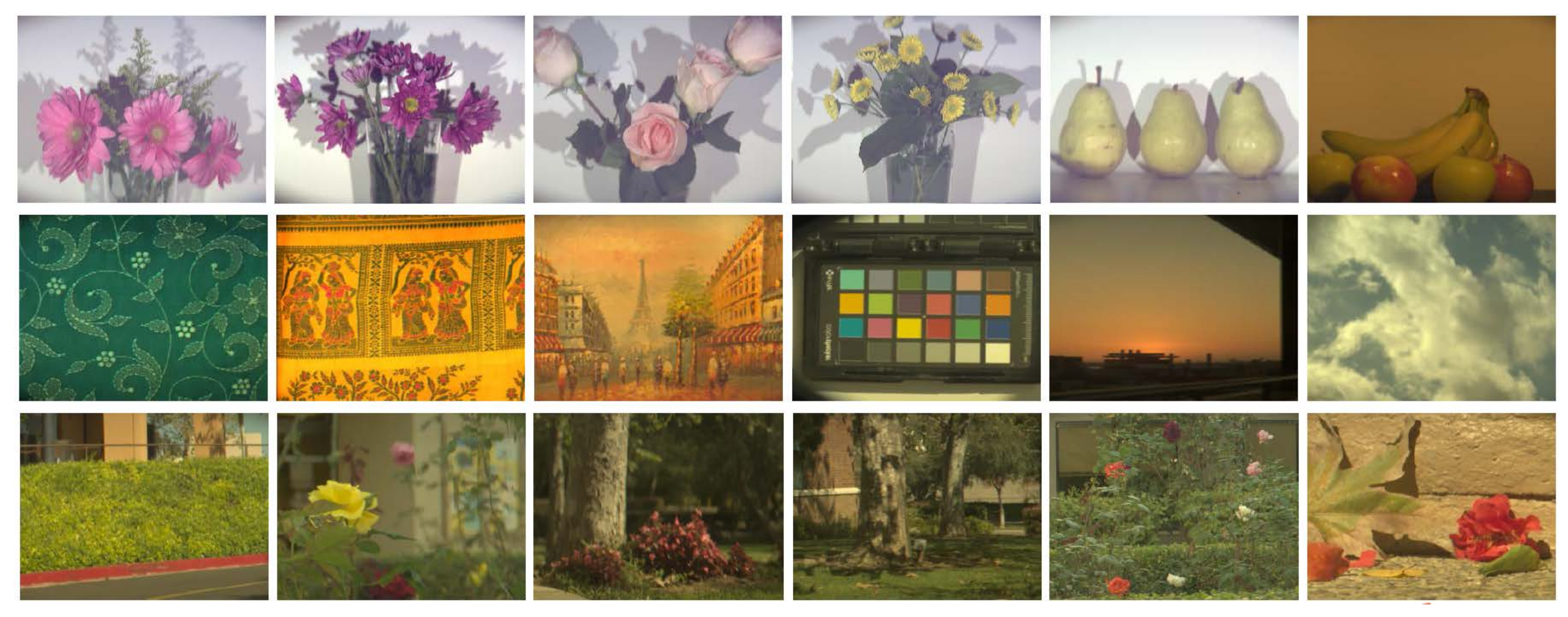

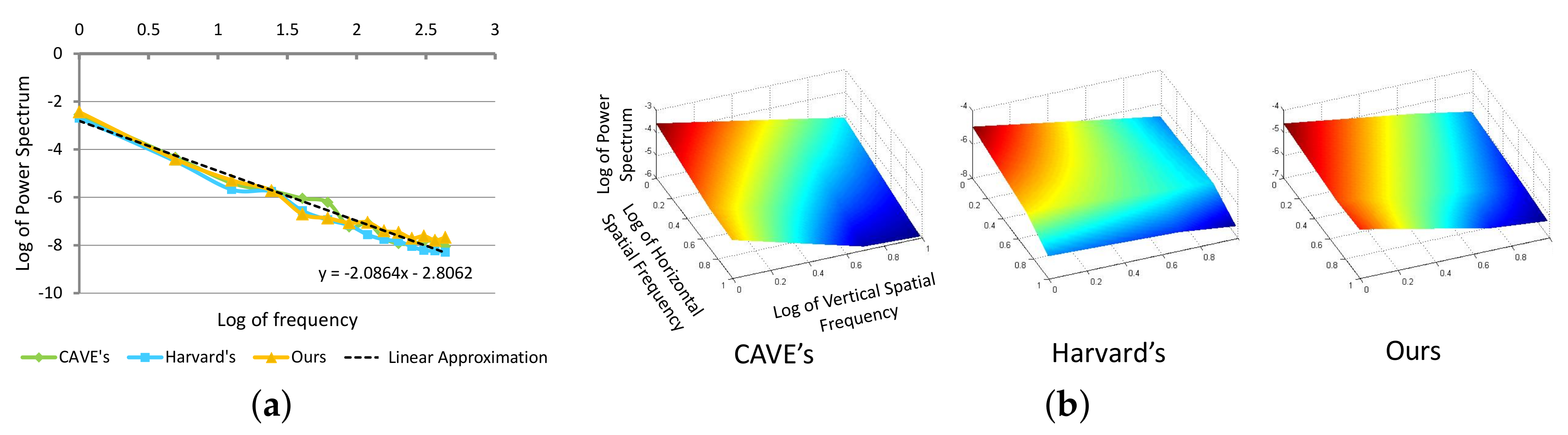

3.1. Spectral Characteristics of Natural Images

3.2. Modeling Recovery Error

3.3. Modeling Demosaicing Error and Imaging Noise

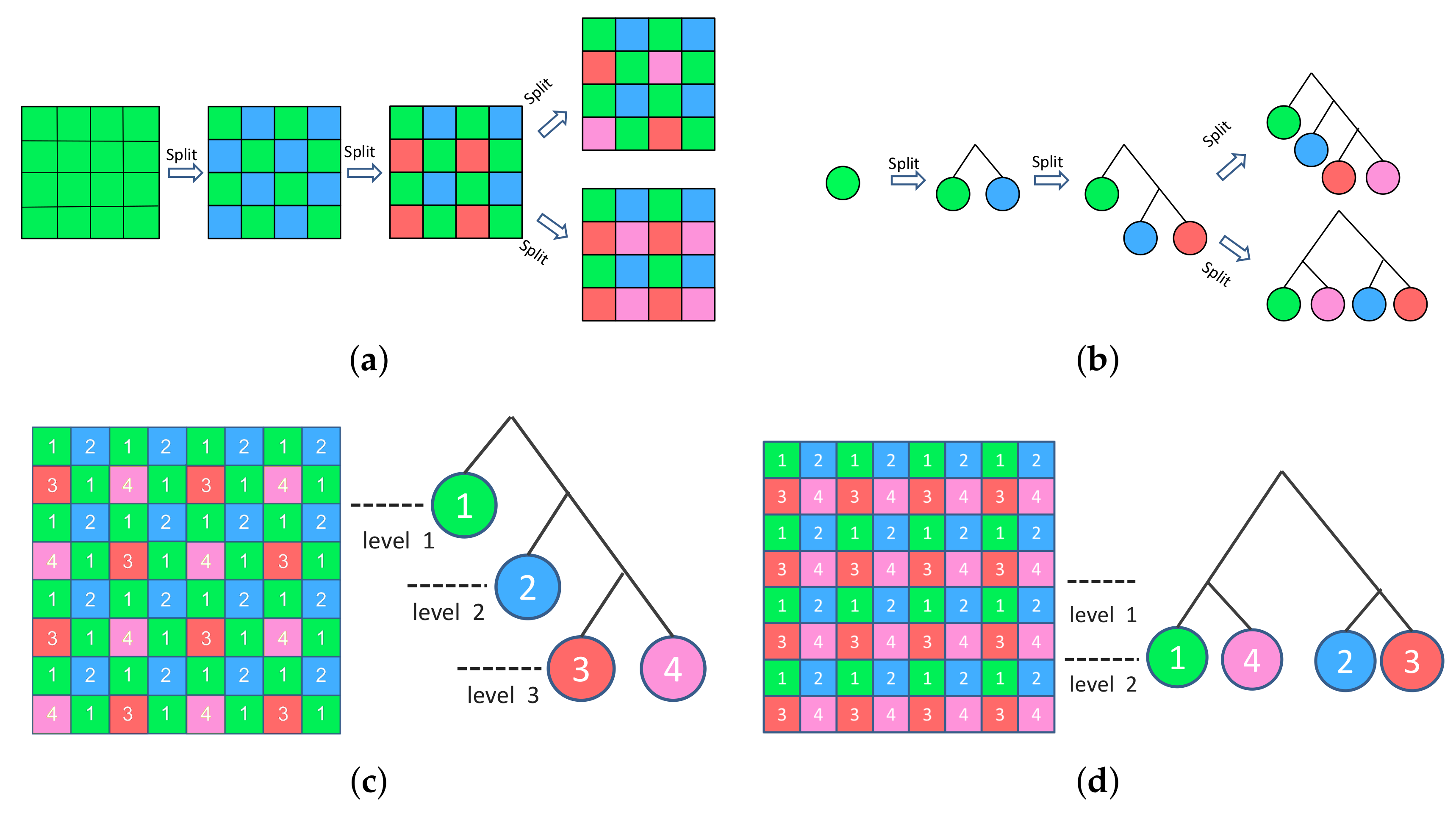

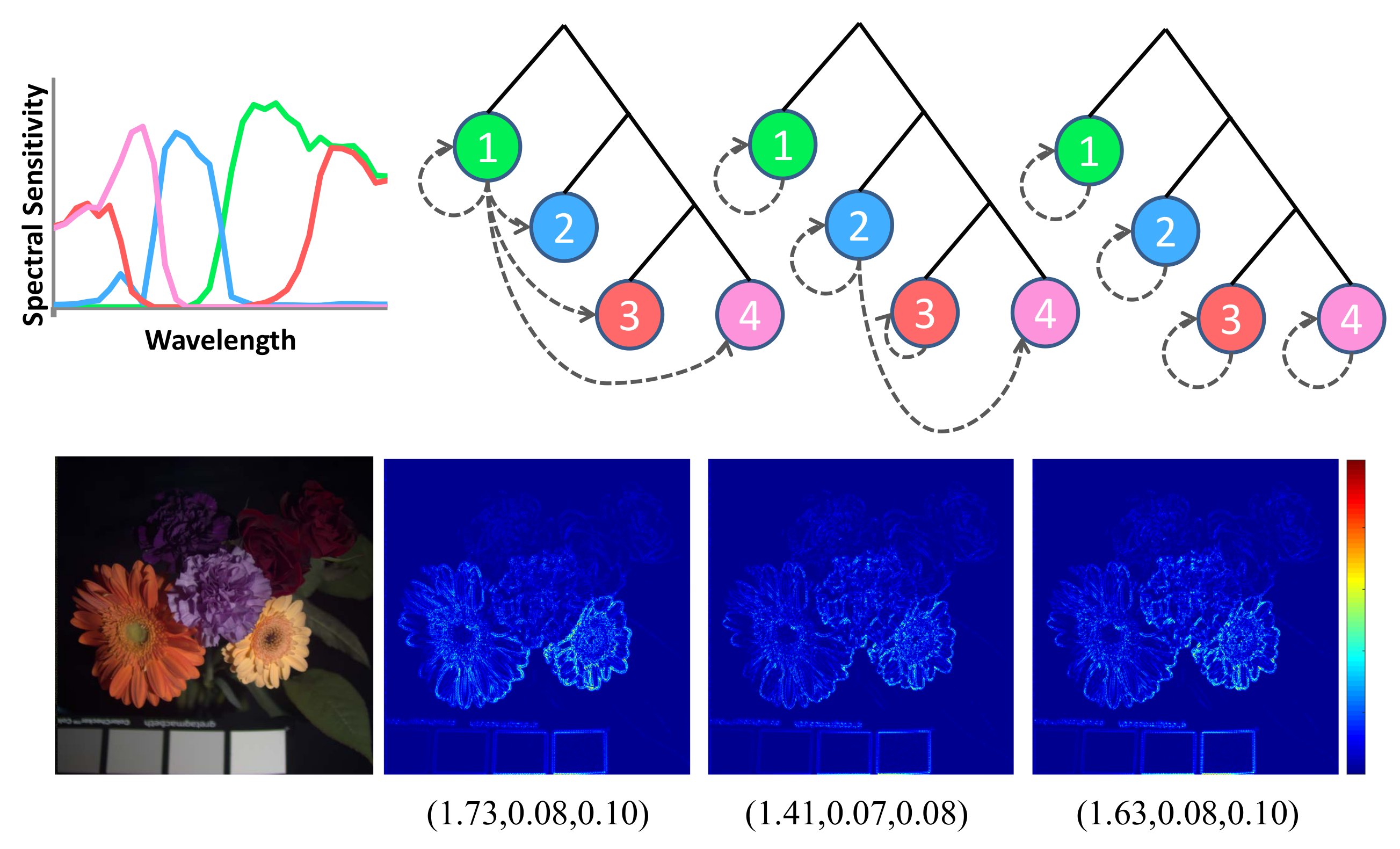

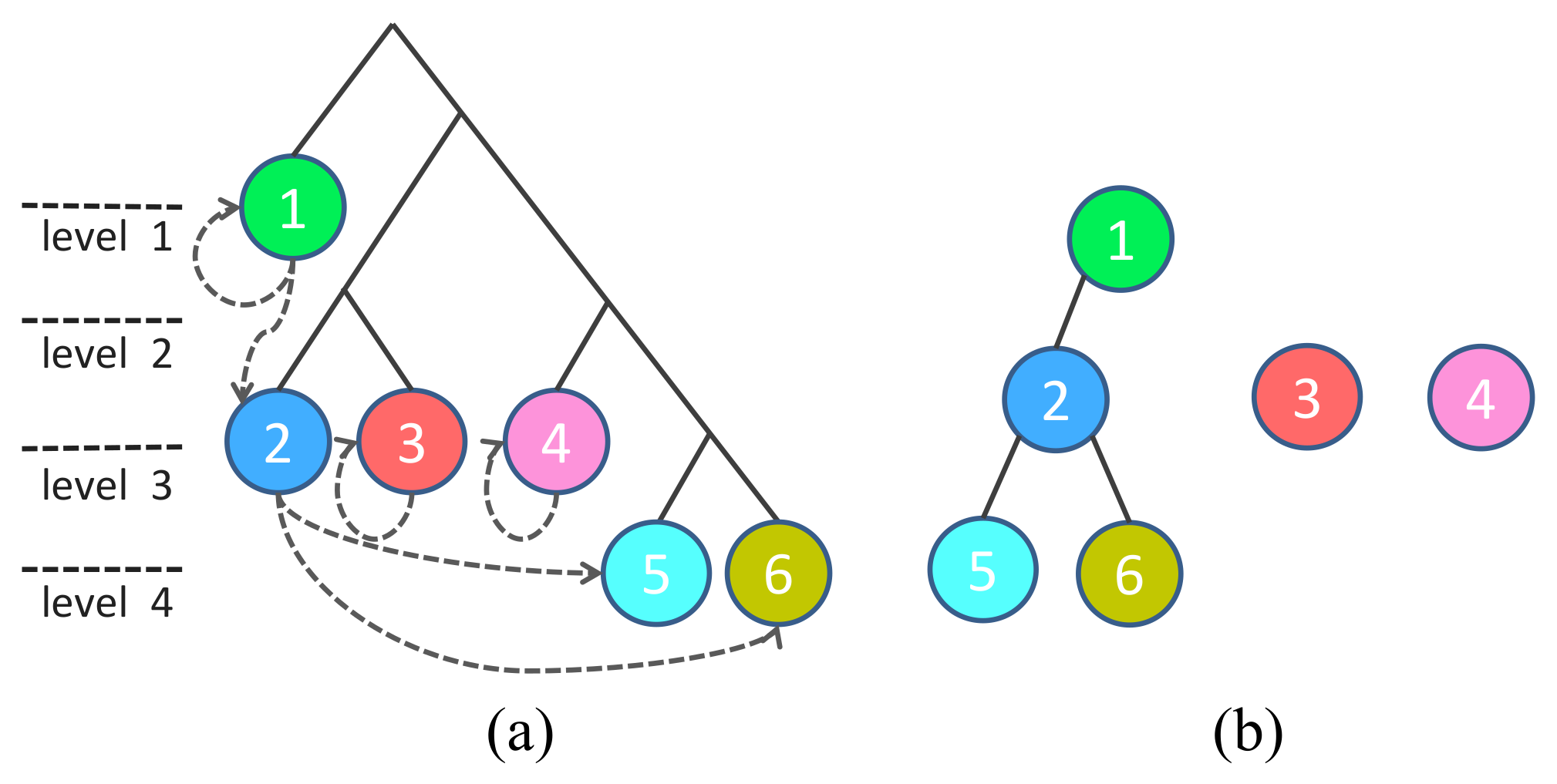

3.4. Channel-Independent Demosaicing Error

3.5. Channel-Dependent Demosaicing Error

4. Imaging Optimization Method

Algorithm 1: The Proposed DPSO Method
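The body of Algorithm 1 is not recoverable here. As a rough, hypothetical illustration of the discrete particle swarm idea it is named after, the sketch below uses the sigmoid-velocity binary PSO of Kennedy and Eberhart (1997, listed in the references) in its common variant with an inertia weight, plus an assumed repair step that forces exactly `k` selected channels. It covers only the channel-selection step; the mosaic pattern and demosaicing order are not searched. `binary_pso_select`, its parameter defaults, and the toy cost are invented for this example.

```python
import numpy as np


def binary_pso_select(cost, n_candidates, k, n_particles=20, n_iters=100,
                      w=0.7, c1=1.5, c2=1.5, v_max=4.0, seed=0):
    """Pick k of n_candidates items minimizing cost(indices) with binary PSO.
    Each particle is a 0/1 mask; sigmoid(velocity) is the probability of a 1."""
    rng = np.random.default_rng(seed)

    def repair(bits):
        # Assumed repair rule: randomly drop or add items until exactly k are set.
        ones = np.flatnonzero(bits)
        zeros = np.flatnonzero(bits == 0)
        if len(ones) > k:
            bits[rng.choice(ones, len(ones) - k, replace=False)] = 0
        elif len(ones) < k:
            bits[rng.choice(zeros, k - len(ones), replace=False)] = 1
        return bits

    x = np.zeros((n_particles, n_candidates), dtype=int)
    for p in range(n_particles):
        x[p] = repair(x[p])                      # all-zero mask -> random k-subset
    v = rng.uniform(-v_max, v_max, size=x.shape)
    pbest = x.copy()
    pbest_cost = np.array([cost(np.flatnonzero(b)) for b in x], dtype=float)
    g = int(np.argmin(pbest_cost))
    gbest, gbest_cost = pbest[g].copy(), float(pbest_cost[g])

    for _ in range(n_iters):
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        # Velocity pulled toward each particle's best and the swarm's best.
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        v = np.clip(v, -v_max, v_max)
        x = (rng.random(x.shape) < 1.0 / (1.0 + np.exp(-v))).astype(int)
        for p in range(n_particles):
            x[p] = repair(x[p])
            c = float(cost(np.flatnonzero(x[p])))
            if c < pbest_cost[p]:
                pbest[p], pbest_cost[p] = x[p].copy(), c
                if c < gbest_cost:
                    gbest, gbest_cost = x[p].copy(), c
    return np.flatnonzero(gbest), gbest_cost


# Toy usage: choose 3 of 8 channels with a hypothetical additive cost.
channel_costs = np.array([5.0, 1.0, 7.0, 3.0, 2.0, 9.0, 4.0, 8.0])
selected, best = binary_pso_select(lambda idx: float(channel_costs[idx].sum()),
                                   n_candidates=8, k=3)
print(selected, best)
```

In a real pipeline, `cost` would be the total-error objective evaluated on the chosen channels' sensitivities; the additive toy cost only makes the optimum easy to verify (channels 1, 3 and 4).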

5. Evaluation and Comparison

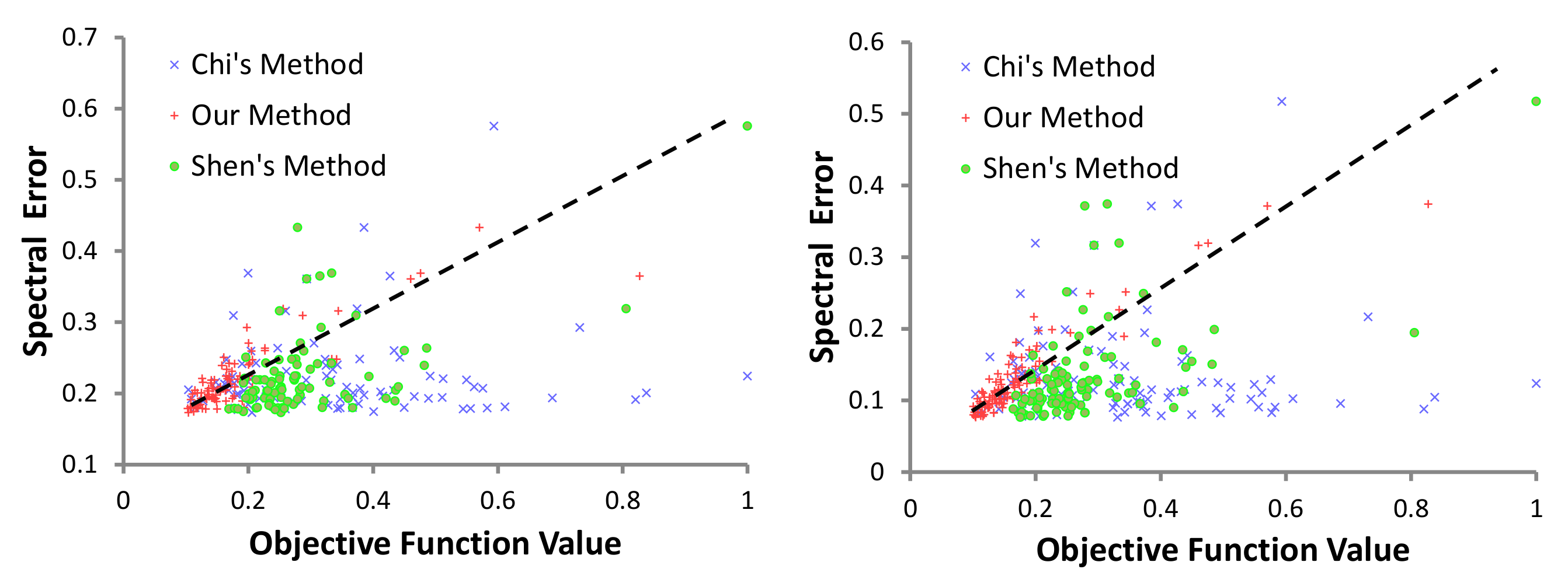

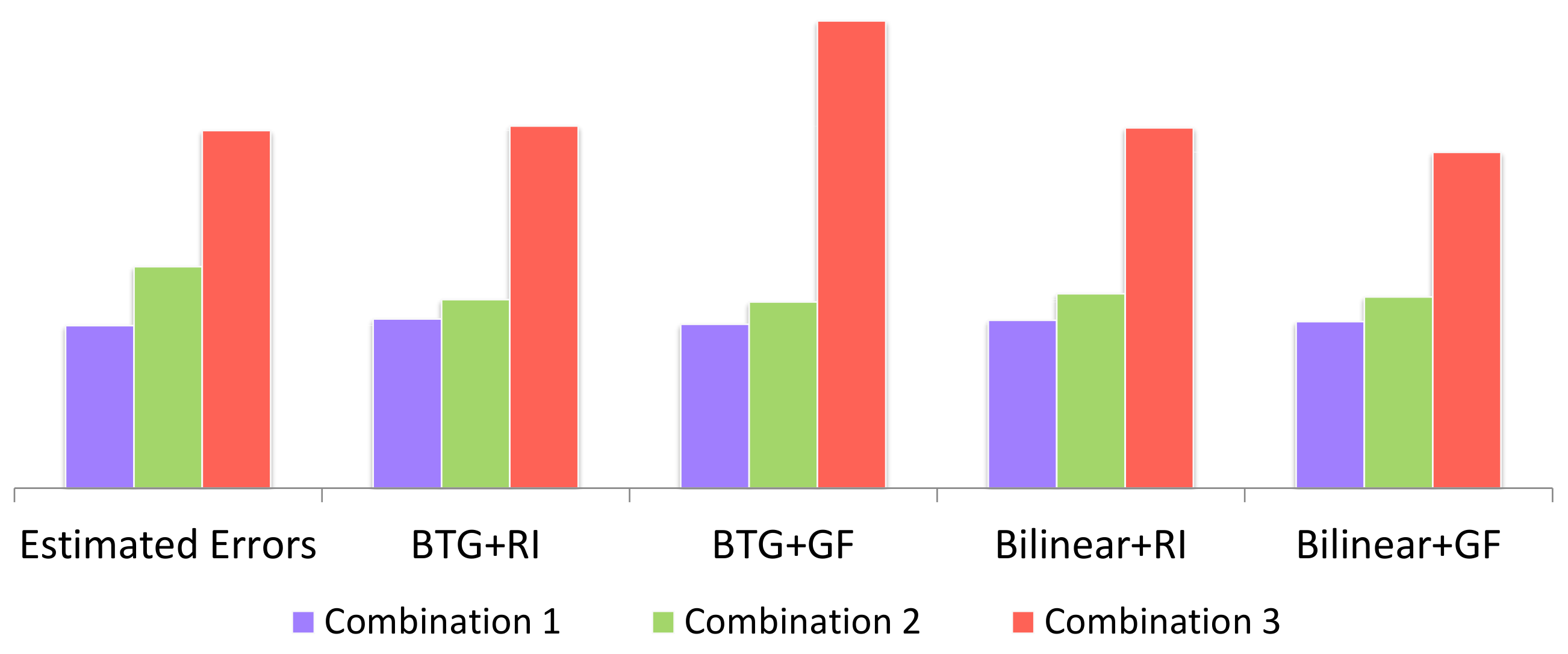

5.1. Error Models

5.2. Comparison with Previous Methods

6. Discussion

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Chi, C.; Yoo, H.; Ben-Ezra, M. Multi-Spectral Imaging by Optimized Wide Band Illumination. Int. J. Comput. Vis. 2010, 86, 140–151.

- Arad, B.; Ben-Shahar, O. Filter Selection for Hyperspectral Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3153–3161.

- Dannemiller, J.L. Spectral Reflectance of Natural Objects: How Many Basis Functions Are Necessary? J. Opt. Soc. Am. A 1992, 9, 507–515.

- Chiao, C.-C.; Cronin, T.W.; Osorio, D. Color Signals in Natural Scenes: Characteristics of Reflectance Spectra and Effects of Natural Illuminants. J. Opt. Soc. Am. A 2000, 17, 218–224.

- Hagen, N.; Kudenov, M.W. Review of Snapshot Spectral Imaging Technologies. Opt. Eng. 2013, 52, 090901.

- Gao, L.; Bedard, N.; Hagen, N.; Kester, R.T.; Tkaczyk, T.S. Depth-resolved image mapping spectrometer (IMS) with structured illumination. Opt. Express 2011, 19, 17439–17452.

- Mohan, A.; Raskar, R.; Tumblin, J. Agile Spectrum Imaging: Programmable Wavelength Modulation for Cameras and Projectors. Comput. Graph. Forum 2008, 27, 709–717.

- Wagadarikar, A.A.; Pitsianis, N.P.; Sun, X.; Brady, D.J. Spectral Image Estimation for Coded Aperture Snapshot Spectral Imagers. In Image Reconstruction from Incomplete Data V; SPIE: Bellingham, WA, USA, 2008; p. 707602.

- Lin, X.; Liu, Y.; Wu, J.; Dai, Q. Spatial-spectral Encoded Compressive Hyperspectral Imaging. ACM Trans. Graph. 2014, 33, 233.

- Jeon, D.S.; Choi, I.; Kim, M.H. Multisampling Compressive Video Spectroscopy. Comput. Graph. Forum 2016, 35, 467–477.

- Mitra, K.; Cossairt, O.; Veeraraghavan, A. Can We Beat Hadamard Multiplexing? Data Driven Design and Analysis for Computational Imaging Systems. In Proceedings of the 2014 IEEE International Conference on Computational Photography (ICCP), Santa Clara, CA, USA, 2–4 May 2014.

- Yasuma, F.; Mitsunaga, T.; Iso, D.; Nayar, S.K. Generalized assorted pixel camera: Postcapture control of resolution, dynamic range, and spectrum. IEEE Trans. Image Process. 2010, 19, 2241–2253.

- Sajadi, B.; Majumder, A.; Hiwada, K.; Maki, A.; Raskar, R. Switchable primaries using shiftable layers of color filter arrays. ACM Trans. Graph. 2011, 30, 65.

- Monno, Y.; Kikuchi, S.; Tanaka, M.; Okutomi, M. A Practical One-Shot Multispectral Imaging System Using a Single Image Sensor. IEEE Trans. Image Process. 2015, 24, 3048–3059.

- Parmar, M.; Reeves, S.J. Selection of Optimal Spectral Sensitivity Functions for Color Filter Arrays. IEEE Trans. Image Process. 2010, 19, 3190–3203.

- Sadeghipoor, Z.; Lu, Y.M.; Süsstrunk, S. Optimum spectral sensitivity functions for single sensor color imaging. In Proceedings of the IS&T/SPIE Electronic Imaging, Burlingame, CA, USA, 22–26 January 2012; p. 829904.

- Park, J.I.; Lee, M.H.; Grossberg, M.D.; Nayar, S.K. Multispectral Imaging using Multiplexed Illumination. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Shen, H.L.; Yao, J.F.; Li, C.; Du, X.; Shao, S.J.; Xin, J.H. Channel selection for multispectral color imaging using binary differential evolution. Appl. Opt. 2014, 53, 634–642.

- López-Álvarez, M.A.; Hernández-Andrés, J.; Valero, E.M.; Romero, J. Selecting algorithms, sensors, and linear bases for optimum spectral recovery of skylight. J. Opt. Soc. Am. A 2007, 24, 942–956.

- Shimano, N. Optimization of Spectral Sensitivities with Gaussian Distribution Functions for a Color Image Acquisition Device in the Presence of Noise. Opt. Eng. 2006, 45, 013201.

- Monno, Y.; Kitao, T.; Tanaka, M.; Okutomi, M. Optimal Spectral Sensitivity Functions for a Single-Camera One-Shot Multispectral Imaging System. In Proceedings of the 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 2137–2140.

- Jia, J.; Barnard, K.J.; Hirakawa, K. Fourier spectral filter array for optimal multispectral imaging. IEEE Trans. Image Process. 2016, 25, 1530–1543.

- Lapray, P.J.; Wang, X.; Thomas, J.B.; Gouton, P. Multispectral Filter Arrays: Recent Advances and Practical Implementation. Sensors 2014, 14, 21626–21659.

- Li, Y.; Wang, C.; Zhao, J.; Yuan, Q. Efficient spectral reconstruction using a trichromatic camera via sample optimization. Vis. Comput. 2018, 1–11.

- Shinoda, K.; Hamasaki, T.; Hasegawa, M.; Kato, S.; Ortega, A. Quality metric for filter arrangement in a multispectral filter array. In Proceedings of the Picture Coding Symposium (PCS), San Jose, CA, USA, 8–11 December 2013; pp. 149–152.

- Miao, L.; Qi, H.; Ramanath, R.; Snyder, W.E. Binary tree-based generic demosaicking algorithm for multispectral filter arrays. IEEE Trans. Image Process. 2006, 15, 3550–3558.

- Jaiswal, S.P.; Fang, L.; Jakhetiya, V.; Pang, J.; Mueller, K.; Au, O.C. Adaptive multispectral demosaicking based on frequency-domain analysis of spectral correlation. IEEE Trans. Image Process. 2017, 26, 953–968.

- Mihoubi, S.; Losson, O.; Mathon, B.; Macaire, L. Multispectral demosaicing using pseudo-panchromatic image. IEEE Trans. Comput. Imaging 2017, 3, 982–995.

- Alvarez-Cortes, S.; Kunkel, T.; Masia, B. Practical Low-Cost Recovery of Spectral Power Distributions. Comput. Graph. Forum 2016, 35, 166–178.

- Shimano, N.; Terai, K.; Hironaga, M. Recovery of spectral reflectances of objects being imaged by multispectral cameras. J. Opt. Soc. Am. A 2007, 24, 3211–3219.

- Chakrabarti, A.; Zickler, T. Statistics of Real-World Hyperspectral Images. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 193–200.

- Parkkinen, J.P.; Hallikainen, J.; Jaaskelainen, T. Characteristic spectra of Munsell colors. J. Opt. Soc. Am. A 1989, 6, 318–322.

- Stiles, W.S.; Wyszecki, G.; Ohta, N. Counting metameric object-color stimuli using frequency-limited spectral reflectance functions. J. Opt. Soc. Am. 1977, 67, 779–786.

- Goldstein, E.B.; Brockmole, J. Sensation and Perception; Cengage Learning: Boston, MA, USA, 2016.

- Torralba, A.; Oliva, A. Statistics of Natural Image Categories. Netw. Comput. Neural Syst. 2003, 14, 391–412.

- Hyvarinen, A.; Hurri, J.; Hoyer, P.O. Natural Image Statistics; Springer: Berlin, Germany, 2009.

- Pouli, F.; Cunningham, D.W.; Reinhard, E. Image Statistics and their Applications in Computer Graphics. 2010. Available online: https://s3.amazonaws.com/academia.edu.documents/44894836/Image_Statistics_and_their_Applications_20160419-16347-1hlzcca.pdf?AWSAccessKeyId=AKIAIWOWYYGZ2Y53UL3A&Expires=1523335119&Signature=52fMxbpkPhRptNNF0p6pfPLE58A%3D&response-content-disposition=inline%3B%20filename%3DImage_statistics_and_their_applications.pdf (accessed on 10 April 2018).

- Spectral Database of CAVE Laboratory, Columbia University. Available online: http://www.cs.columbia.edu/CAVE/databases/multispectral/ (accessed on 1 February 2016).

- Lukac, R.; Plataniotis, K.N. Color Filter Arrays: Design and Performance Analysis. IEEE Trans. Consum. Electron. 2005, 51, 1260–1267.

- Miao, L.; Qi, H. The Design and Evaluation of a Generic Method for Generating Mosaicked Multispectral Filter Arrays. IEEE Trans. Image Process. 2006, 15, 2780–2791.

- Takamatsu, J.; Matsushita, Y.; Ogasawara, T.; Ikeuchi, K. Estimating Demosaicing Algorithms using Image Noise Variance. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 279–286.

- Shimano, N. Recovery of Spectral Reflectances of Objects Being Imaged Without Prior Knowledge. IEEE Trans. Image Process. 2006, 15, 1848–1856.

- Losson, O.; Macaire, L.; Yang, Y. Comparison of Color Demosaicing Methods. Adv. Imag. Electron. Phys. 2010, 162, 173–265.

- Li, X.; Gunturk, B.; Zhang, L. Image demosaicing: A systematic survey. In Visual Communications and Image Processing 2008; SPIE: Bellingham, WA, USA, 2008; Volume 6822, p. 68221J.

- Kennedy, J. Particle Swarm Optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Kennedy, J.; Eberhart, R.C. A discrete binary version of the particle swarm algorithm. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Orlando, FL, USA, 12–15 October 1997; Volume 5, pp. 4104–4108.

- Spectral Database of Spectral Color Research Group, University of Eastern Finland. Available online: https://www.uef.fi/spectral/spectral-database (accessed on 1 February 2016).

- Monno, Y.; Tanaka, M.; Okutomi, M. Multispectral demosaicking using adaptive kernel upsampling. In Proceedings of the 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 3157–3160.

- Monno, Y.; Kiku, D.; Kikuchi, S.; Tanaka, M.; Okutomi, M. Multispectral demosaicking with novel guide image generation and residual interpolation. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 645–649.

- Joshi, S.; Boyd, S. Sensor Selection via Convex Optimization. IEEE Trans. Signal Process. 2009, 57, 451–462.

| Methods | CAVE’s Dataset Max | CAVE’s Dataset Mean | CAVE’s Dataset Std | Harvard’s Dataset Max | Harvard’s Dataset Mean | Harvard’s Dataset Std | Our Dataset Max | Our Dataset Mean | Our Dataset Std |
|---|---|---|---|---|---|---|---|---|---|
| GAP | 0.4518 | 0.3231 | 0.0880 | 0.2849 | 0.0794 | 0.0854 | 0.2602 | 0.1964 | 0.0421 |
| Chi and Monno’s | 0.4381 | 0.2852 | 0.0867 | 0.2498 | 0.0744 | 0.0753 | 0.2231 | 0.1880 | 0.0428 |
| Ours | 0.4115 | 0.2775 | 0.0814 | 0.2196 | 0.0629 | 0.0679 | 0.1999 | 0.1586 | 0.0342 |
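To read the Mean columns of the comparison table as relative margins, one can compute `(baseline - ours) / baseline` for each dataset. This is a small worked check, not an analysis from the paper: it assumes lower values mean less reconstruction error (the metric is not named in this section), the dictionary simply copies the table's Mean values, and `relative_reduction` is a hypothetical helper.

```python
# Mean errors copied from the comparison table (Mean columns only).
mean_error = {
    "CAVE": {"GAP": 0.3231, "Chi and Monno": 0.2852, "Ours": 0.2775},
    "Harvard": {"GAP": 0.0794, "Chi and Monno": 0.0744, "Ours": 0.0629},
    "Our dataset": {"GAP": 0.1964, "Chi and Monno": 0.1880, "Ours": 0.1586},
}


def relative_reduction(baseline, proposed):
    """Fraction by which `proposed` is lower than `baseline` (lower = better)."""
    return (baseline - proposed) / baseline


for dataset, errs in mean_error.items():
    for baseline in ("GAP", "Chi and Monno"):
        r = relative_reduction(errs[baseline], errs["Ours"])
        print(f"{dataset}: mean error {100 * r:.1f}% lower than {baseline}")
```

Relative rather than absolute differences are used because the error scale differs considerably between datasets (e.g. Harvard's means are roughly a quarter of CAVE's).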

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, Y.; Majumder, A.; Zhang, H.; Gopi, M. Optimized Multi-Spectral Filter Array Based Imaging of Natural Scenes. Sensors 2018, 18, 1172. https://doi.org/10.3390/s18041172
