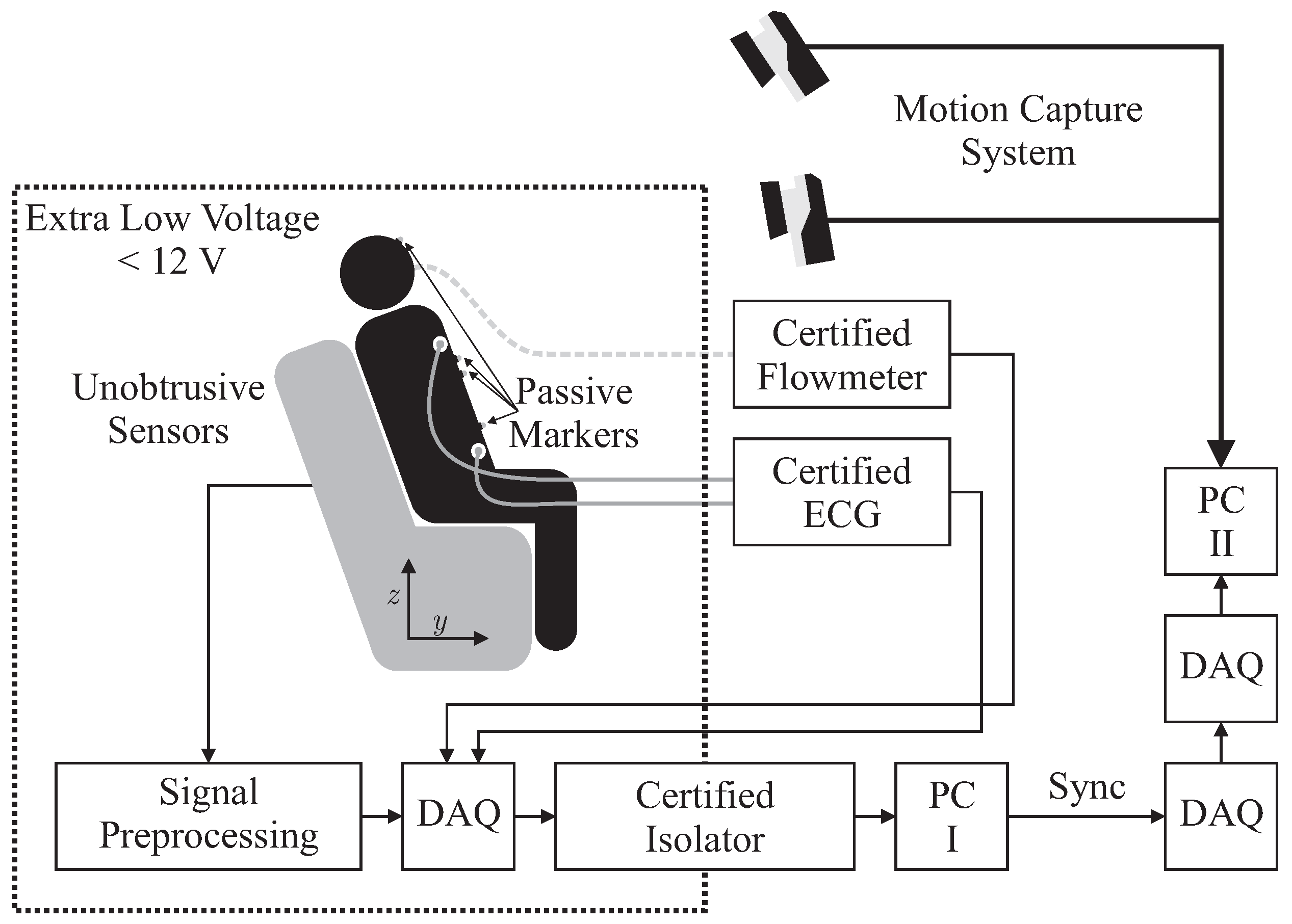

2.1. Hardware Setup

Several sensors were integrated into the backrest and the seat of an armchair for unobtrusive biosignal acquisition. To ensure safety, all sensors were operated with extra low voltage (below 12 V), which was provided by an EN 60601-1 certified power supply. After analog to digital conversion with a “NI USB-6212” (National Instruments, Austin, TX, USA) data acquisition system (DAQ) at a sampling frequency of Hz, data was transferred to PC I via USB using an EN 60601-1 certified USB isolation device. For reference biosignal acquisition, an “MP30” patient monitor, manufactured by Philips, Amsterdam, The Netherlands and a “Pneumotach Amplifier 1 Series 1110” by Hans Rudolph, Inc., Shawnee, KS, USA were used as analog front ends. To record reference motion data, the “Oqus” system from Qualisys AB, Göteborg, Sweden was used. It was configured with seven “Opus 500+” infrared (IR) tracking cameras with a resolution of four megapixels and a maximum frame rate of Hz. The aperture of the C-mount lenses with a focal length of 13 mm was set to f/4.0 and manual focusing was performed. Illumination was provided by rings of IR LEDs integrated into each camera unit. Seven passive reflective markers were used and tracked at Hz on PC II. To record analog data synchronously with marker positions, a synchronized USB-DAQ could be accessed by the Oqus system. However, since no isolation in accordance with EN 60601-1 could be guaranteed, the aforementioned method for sensor data acquisition by PC I was used. In addition, a synchronization signal was generated using a second DAQ, which was in turn recorded by the synchronized DAQ connected to PC II.

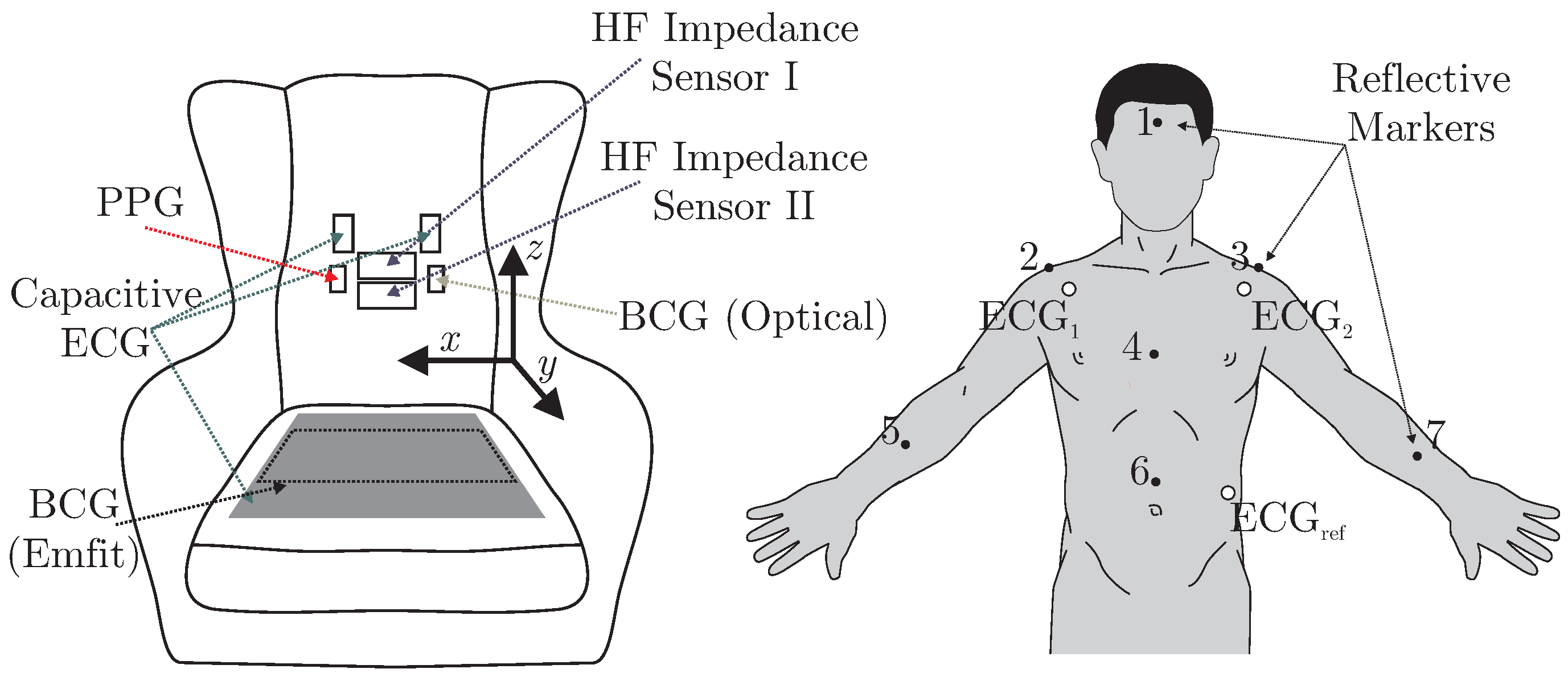

A total of six different sensors for unobtrusive biosignal acquisition were integrated into the backrest and the seat of the armchair (see

Figure 2 (left)). As the focus of this work is the overall system, no in-depth descriptions and schematics of the individual sensing modalities are given. The interested reader is referred to the respective references for more details (see below). For the acquisition of cardiac-related signals, four modalities were included. For one, a capacitive ECG system as described in [

23] with two electrodes of ultra-high input impedance was integrated into the backrest. A driven-right-leg (DRL) electrode was placed on the seat using conductive fabric. To acquire a PPG signal, a sensor consisting of three NIR LEDs and a centered photodiode was implemented using the design proposed in [

7]. The signal of the photodiode was bandpass-filtered (0.15 to 5 Hz) and amplified by 40 dB. An optical BCG (BCG Opt) was recorded with a second PPG module that was not facing the subject, but the padding of the backrest as described in [

5]. Another BCG signal was recorded from the seat of the chair using an “EMFi transducer” mat (L-Series by

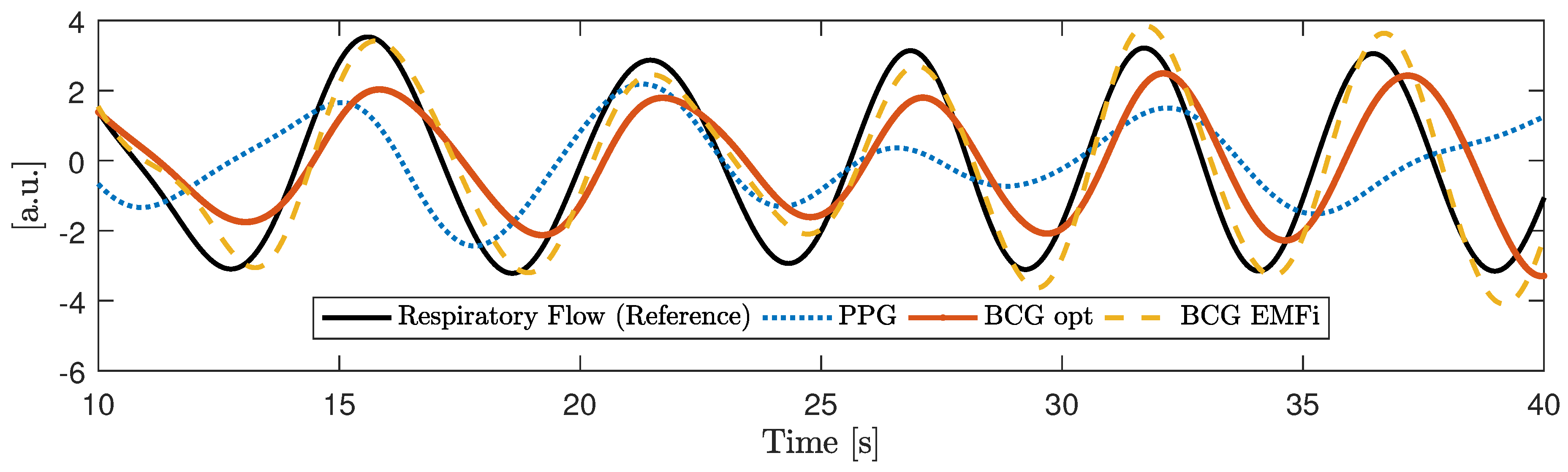

Emfit Ltd., Vaajakoski, Finland). A custom built analog amplifier/bandpass with a passband of 0.01 to 200 Hz and gain of 24.61 dB was used. To record respiratory signals, two capacitive HF impedance sensors were used with a straightforward approach: the thorax is modeled as a resistor-capacitor (RC)-element whose parameters change with cardiorespiratory activity. This RC-element is part of an oscillator circuit, and thus cardiorespiratory activity can be estimated by monitoring the resonant frequency [

24,

25,

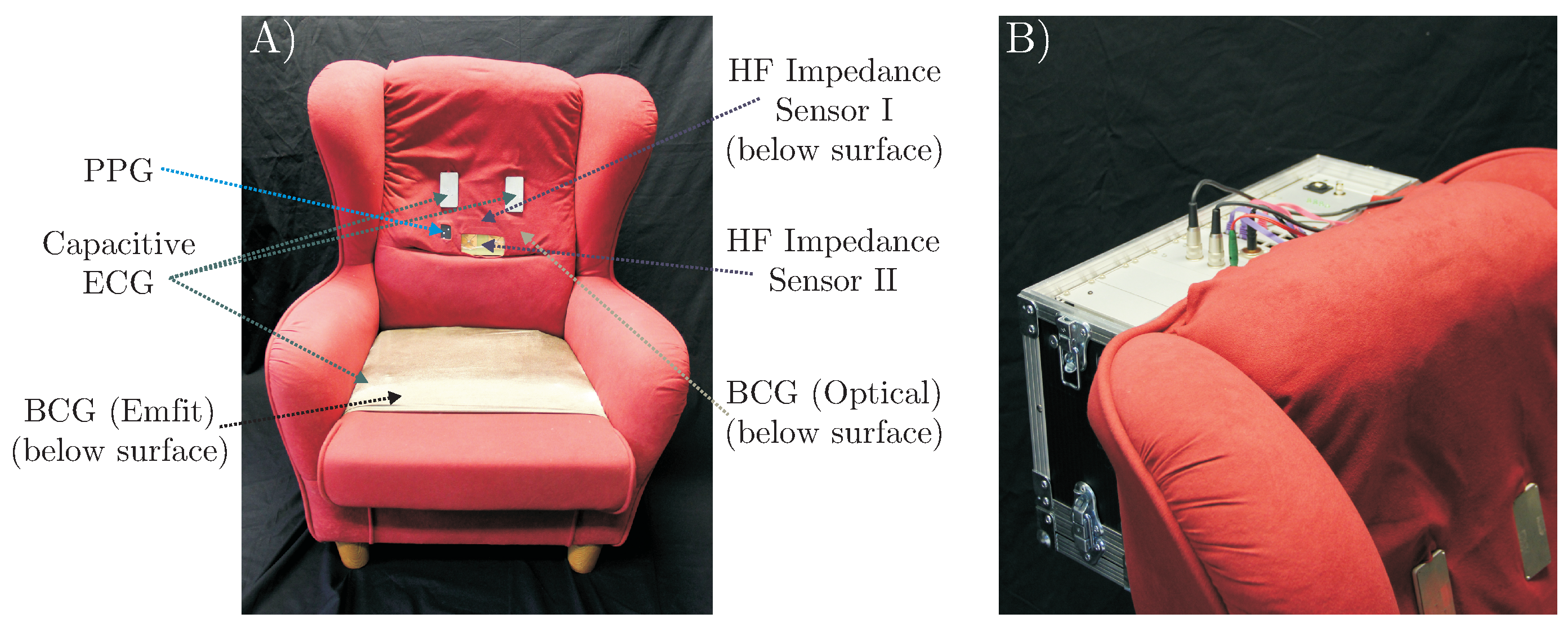

26]. A photograph of the complete setup is shown in

Figure 3.

2.2. Measurement Protocol

To evaluate the multimodal sensor system, two different measurement scenarios were investigated: “Motion Sequence” and “Video Sequence”. All recordings were performed on healthy volunteers in our lab as self-experimentation, and informed consent was obtained prior to our measurements. Details are given in Table 1, including age, height, body mass index (BMI) and gender. Mean values and standard deviations (SD) are reported. Note that subjects were free to participate in either scenario or in both.

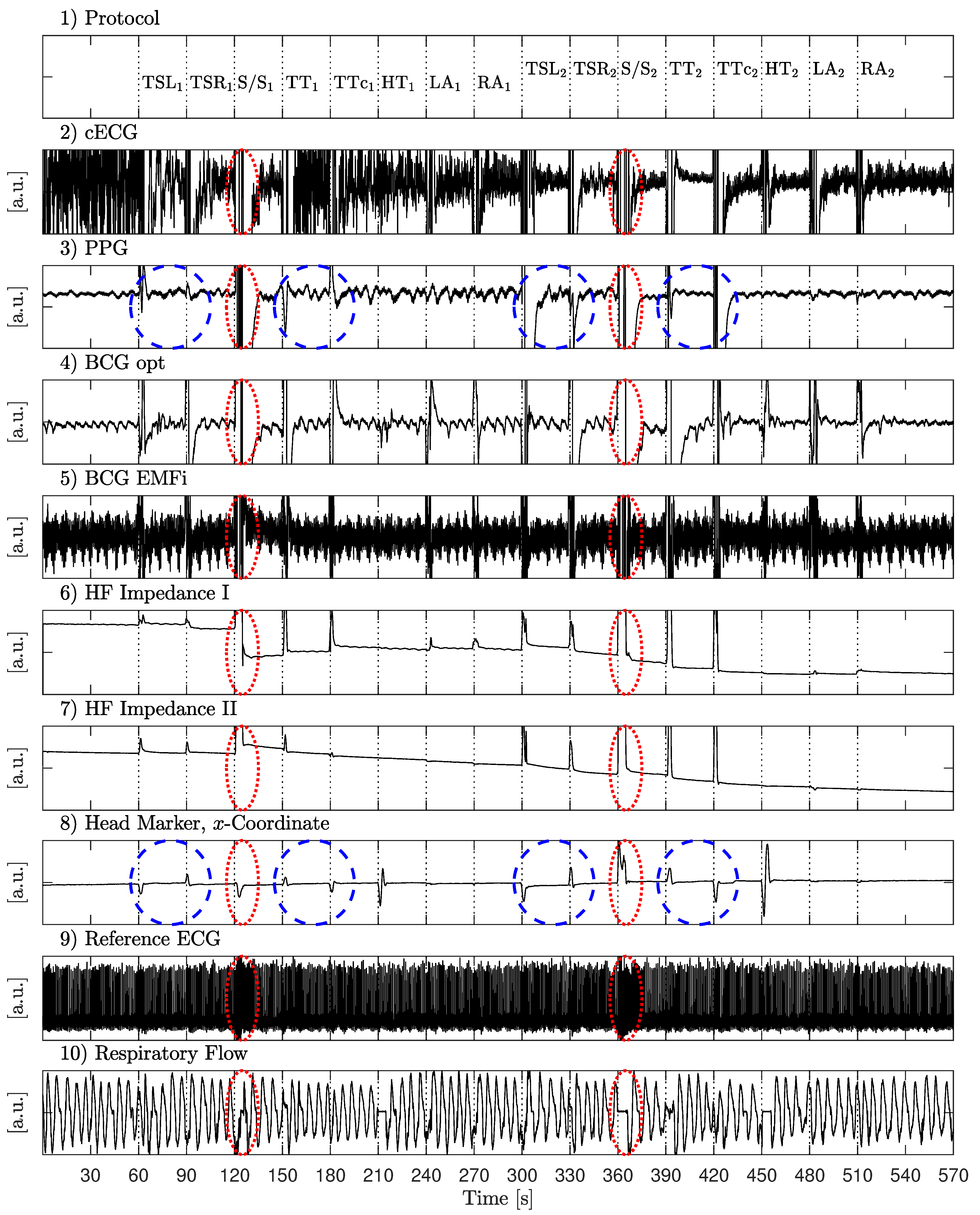

The first scenario aimed at identifying the effect of various movements on unobtrusive biosignal acquisition. Every 30 s, the subjects were asked to perform one of 16 specific movements as listed in Table 2. In the first half, movements (column 2) were performed with a low amplitude (column 3); in the second half, a higher amplitude was requested (column 4). Between movements, subjects were asked to sit as still as possible. The recording started and ended with 60 s of motionless sitting. A total of 81 min of data from nine different subjects, including 5994 heartbeats, was recorded.

The second set of measurements was performed to analyze the system in a realistic application scenario. For this, subjects were asked to sit in the armchair and watch a movie of their choice. A total of 425 min of data from seven subjects, including 28,555 heartbeats, was recorded. The data is publicly available as part of the UnoViS database [27], accessible for free from https://www.medit.hia.rwth-aachen.de/unovis/.

2.3. Performance Analyses and Information Fusion

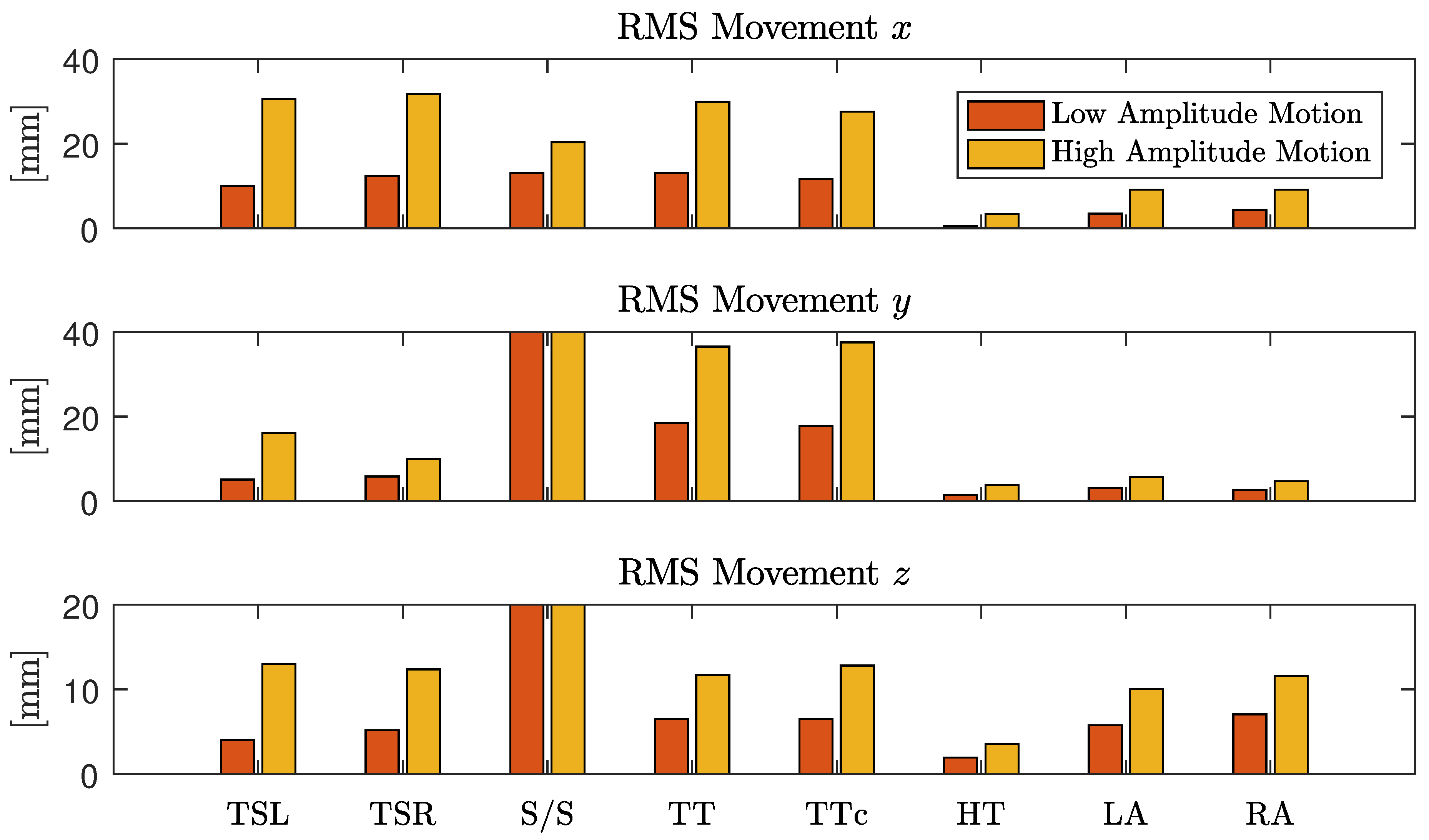

Using the “Opus 500+” tracking software, the position of each marker was exported as a three-dimensional coordinate. In the calibration process, the origin and orientation of the coordinate system were set as displayed in Figure 2. To analyze the motion, the root mean square (RMS) value of displacement was computed for each coordinate separately. Since each marker position is given with respect to the origin of the coordinate system, we define movement as the AC component of each coordinate. Thus, for a signal with $N$ samples, the RMS movement values were calculated via

$$x_{\mathrm{RMS}} = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(x[n]-\bar{x}\right)^{2}}, \quad y_{\mathrm{RMS}} = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(y[n]-\bar{y}\right)^{2}}, \quad z_{\mathrm{RMS}} = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(z[n]-\bar{z}\right)^{2}},$$

with $\bar{x}$, $\bar{y}$ and $\bar{z}$ being the respective mean values, i.e., the DC component of that coordinate. For each instruction in the protocol, the motion was quantified and averaged over all subjects. To focus the movement estimation on the thorax, markers one (head), five (right arm) and seven (left arm) were excluded and motion estimation was averaged over the remaining markers.
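The RMS movement measure described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names, the `(N, 3)` array layout and the dictionary keyed by marker number are assumptions made here for the example.

```python
import numpy as np


def rms_movement(positions):
    """RMS of the AC component for each coordinate of one marker.

    positions: array-like of shape (N, 3) holding x, y, z over time
    (layout is an assumption for this sketch).
    """
    positions = np.asarray(positions, dtype=float)
    ac = positions - positions.mean(axis=0)  # subtract the DC component
    return np.sqrt(np.mean(ac ** 2, axis=0))


def thorax_movement(markers, excluded=(1, 5, 7)):
    """Average the per-axis RMS movement over all non-excluded markers.

    markers: dict mapping marker number -> (N, 3) trajectory (hypothetical).
    excluded: head (1), right arm (5) and left arm (7), as in the text.
    """
    kept = [rms_movement(p) for k, p in markers.items() if k not in excluded]
    return np.mean(kept, axis=0)
```

Subtracting the mean first makes the result independent of where the marker sits relative to the origin, which is the point of defining movement as the AC component.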

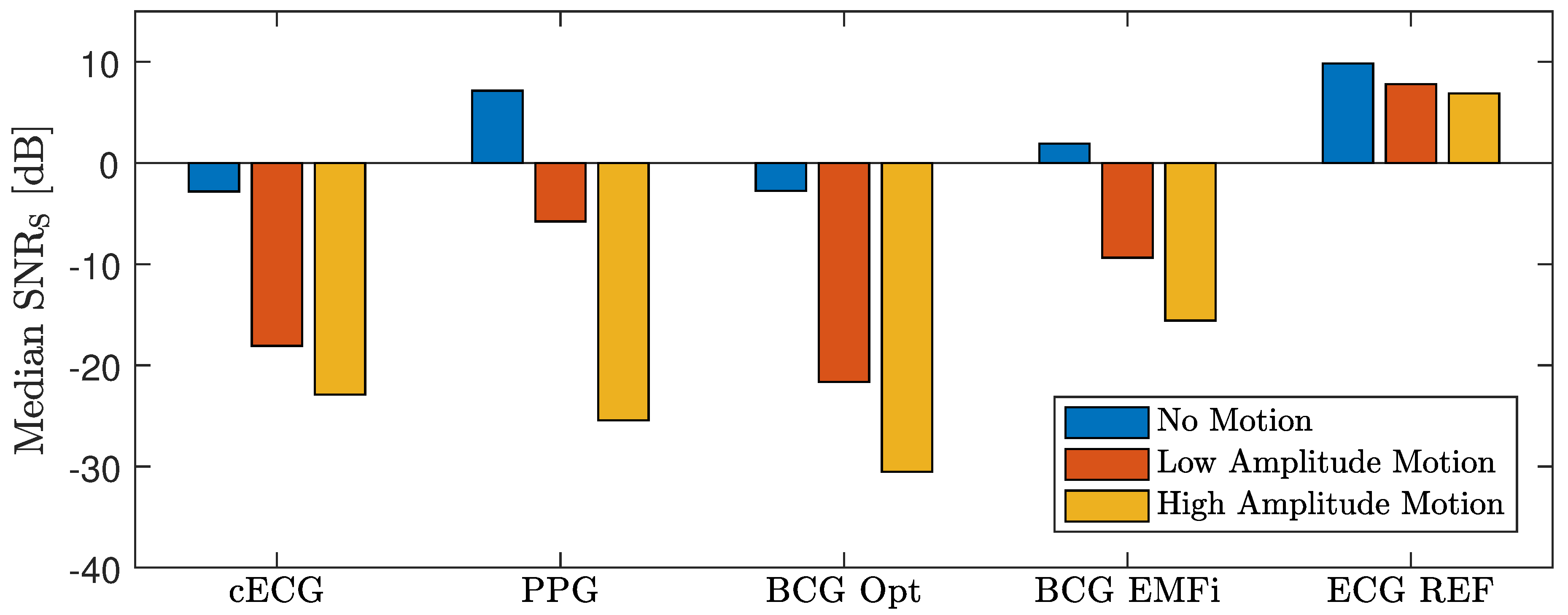

To analyze the effect of motion on the different modalities, three evaluations were performed. First, the effect of motion on the cardiac signal quality was analyzed. In many technical applications, the signal-to-noise ratio (SNR) is used for this type of analysis. However, a definition of SNR for biomedical signals is hard to obtain. One straightforward approach analyzes the frequency-domain content of a cardiac signal and defines energy below a certain threshold (for example 5 Hz) as related to the desired signal and energy above it as related to noise. While this can be implemented easily, it is based on the assumption that the influence of noise and artifacts is dominant in the high-frequency range. If this were the case, a perfect SNR could be achieved with low-pass filtering. Moreover, the sensors used in this setup all contained analog bandpass filters, and thus a frequency-domain SNR analysis would report a high SNR even if the sensors were measuring no cardiac signal at all.
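To make the limitation concrete, the frequency-domain SNR can be sketched as follows. This is an illustrative implementation under assumptions made here (FFT-based energy split, decibel scaling, hypothetical function and parameter names); the text does not prescribe a particular formula.

```python
import numpy as np


def frequency_domain_snr_db(signal, fs, cutoff_hz=5.0):
    """Energy at or below cutoff_hz counts as signal, energy above as noise.

    fs is the sampling frequency in Hz; cutoff_hz defaults to the 5 Hz
    example threshold mentioned in the text.
    """
    signal = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(signal - signal.mean())) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    signal_energy = spectrum[freqs <= cutoff_hz].sum()
    noise_energy = spectrum[freqs > cutoff_hz].sum()
    return 10.0 * np.log10(signal_energy / noise_energy)
```

Any band-limited input, for example a slow motion artifact with no cardiac content, scores well on this measure, which is why a shape-based definition is preferable here.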

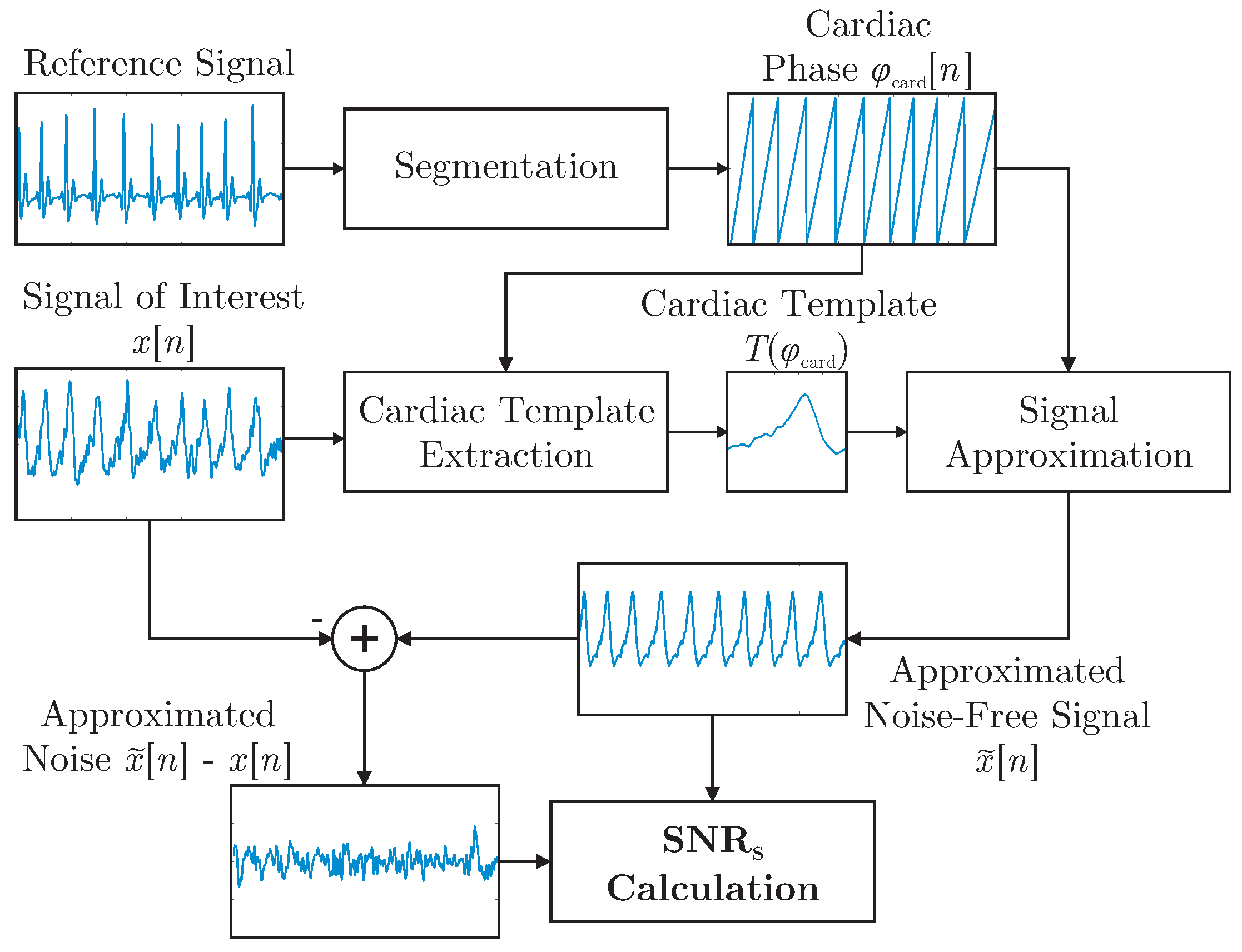

It was demonstrated in [28] that a template function represented in terms of the cardiac phase can be used to approximate arbitrary cardiac-related signals. In this work, the concept was expanded and used to define a shape-based SNR, which is visualized in Figure 4. First, R-peaks detected in the reference ECG were used to segment the signal of interest into individual cardiac cycles represented in terms of a cardiac phase. In essence, all cycles were then interpolated to be of unit length and averaged to obtain the template. For each modality and for each episode of the motion protocol, a separate template was extracted. Each template was based on the artifact-free period right after the respective motion. This was done instead of creating a single template based on all artifact-free episodes to account for changes in signal morphology that can result from a change in posture after motion. To estimate the shape-based SNR, the reference ECG and the extracted templates were used to create an estimation of the noise-free signal via interpolation of the template during both the artifact-free and the artifact period. The process is visualized for the artifact-free scenario in Figure 4.

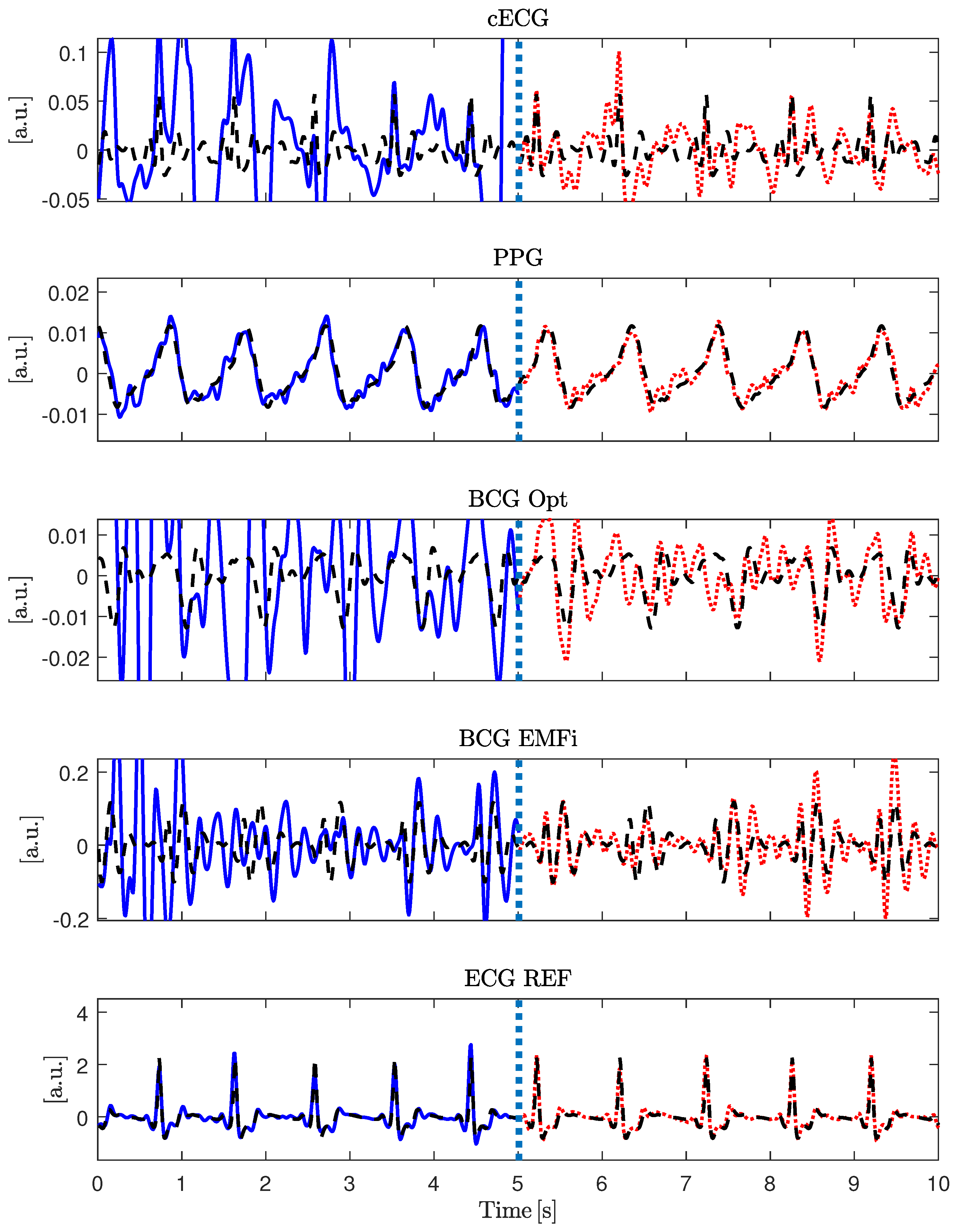

Figure 5 gives an example of the signal approximation for all modalities with and without artifacts.
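The template extraction and the reconstruction of a noise-free estimate can be sketched as below. This is a simplified illustration under stated assumptions: R-peak positions are given as integer sample indices, linear interpolation is used for resampling, and the function names and the `n_phase` resolution are hypothetical choices rather than details from the original work.

```python
import numpy as np


def extract_template(signal, r_peaks, n_phase=100):
    """Resample every R-R cycle to unit length and average the cycles."""
    signal = np.asarray(signal, dtype=float)
    phase = np.arange(n_phase) / n_phase  # cardiac phase grid in [0, 1)
    cycles = []
    for start, stop in zip(r_peaks[:-1], r_peaks[1:]):
        cycle_phase = np.arange(stop - start) / (stop - start)
        cycles.append(np.interp(phase, cycle_phase, signal[start:stop]))
    return np.mean(cycles, axis=0)


def estimate_noise_free(template, r_peaks, n_samples):
    """Stretch the template onto each R-R interval of the reference ECG.

    Samples outside the first and last R-peak stay NaN because their
    cardiac phase is undefined.
    """
    template = np.asarray(template, dtype=float)
    phase = np.arange(len(template)) / len(template)
    estimate = np.full(n_samples, np.nan)
    for start, stop in zip(r_peaks[:-1], r_peaks[1:]):
        cycle_phase = np.arange(stop - start) / (stop - start)
        # period=1.0 treats the template as periodic in the cardiac phase
        estimate[start:stop] = np.interp(cycle_phase, phase, template, period=1.0)
    return estimate
```

In the protocol described above, `extract_template` would be applied to the artifact-free period following a movement, and `estimate_noise_free` to both that period and the preceding artifact period.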

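To calculate the shape-based SNR, $\mathrm{SNR}_{S}$, a calculation in analogy to the regular SNR was used:

$$\mathrm{SNR}_{S} = 10\,\log_{10}\left(\frac{\sum_{n=1}^{N}\hat{s}[n]^{2}}{\sum_{n=1}^{N}\left(s[n]-\hat{s}[n]\right)^{2}}\right)$$

Here, $N$ is the length of the signal $s$, and $\hat{s}$ is the signal’s noise-free estimation using the segmentation obtained from the reference ECG and the extracted template. To quantify the dependency of $\mathrm{SNR}_{S}$ on the motion amplitudes, the correlation coefficient was calculated for each sensor and coordinate axis.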
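A minimal numerical sketch of this step follows. It assumes that the power of the noise-free estimate plays the role of signal power, that the residual between the measurement and the estimate is the noise, and that the result is expressed in decibels; the function names are hypothetical, and the Pearson coefficient is used for the correlation as an assumption.

```python
import numpy as np


def shape_snr_db(signal, estimate):
    """Shape-based SNR: power of the estimate over power of the residual."""
    signal = np.asarray(signal, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    valid = ~np.isnan(estimate)  # skip samples without a defined estimate
    residual = signal[valid] - estimate[valid]
    return 10.0 * np.log10(np.sum(estimate[valid] ** 2) / np.sum(residual ** 2))


def snr_motion_correlation(snr_values, motion_rms):
    """Pearson correlation between per-episode SNR and RMS motion amplitude."""
    return np.corrcoef(snr_values, motion_rms)[0, 1]
```

A strongly negative coefficient for a given sensor and axis would indicate that larger movements along that axis go along with lower signal quality.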

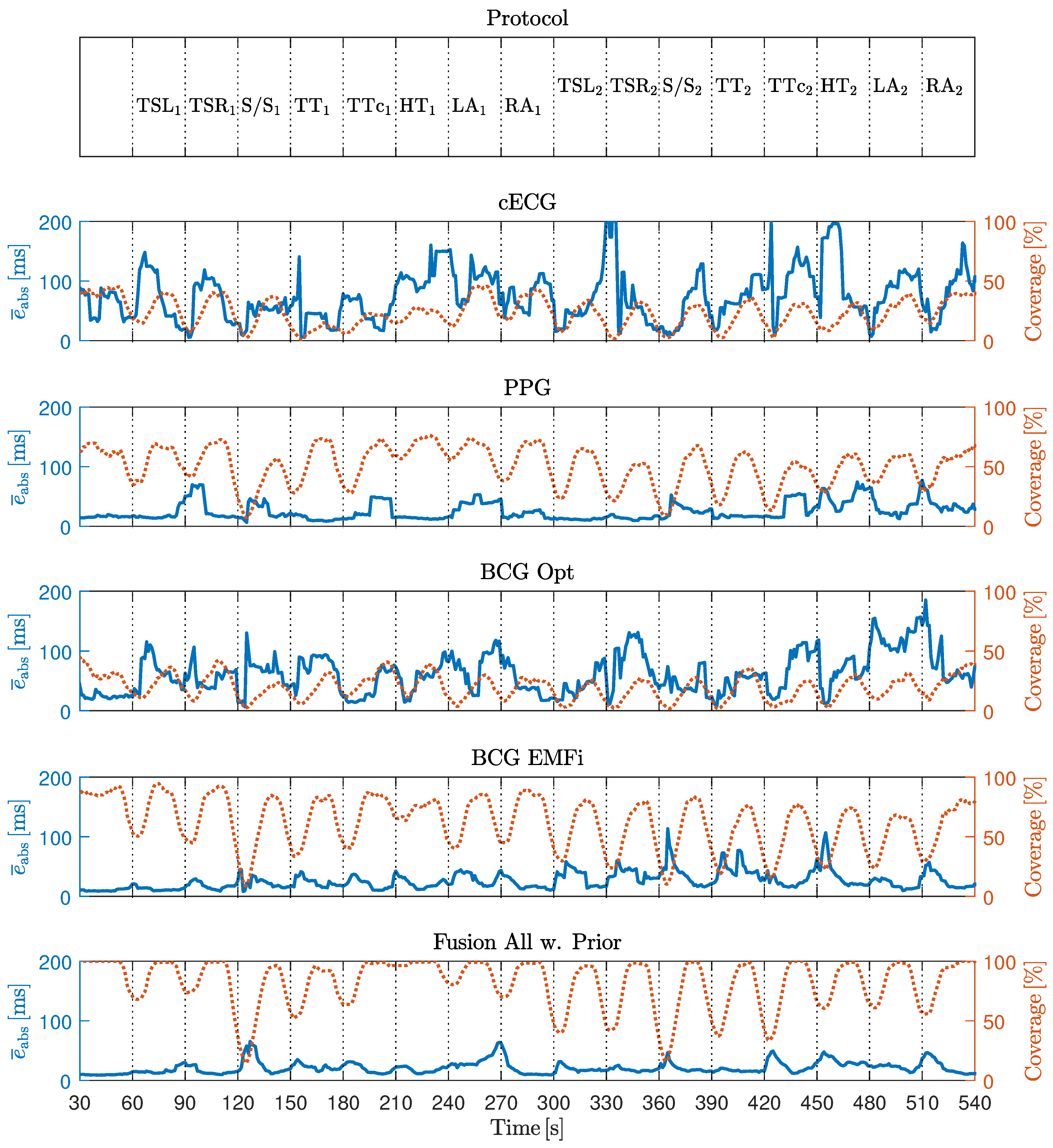

In the second experiment, a more application-oriented method of evaluation was used: in [6], an augmentation to an algorithm termed xCLIE (multi-channel extended continuous local interval estimator) for beat-to-beat interval estimation [4] was presented, which incorporates information from previous intervals to improve the current estimation. It was demonstrated that the algorithm can perform sensor fusion of multimodal cardiorespiratory signals to increase both coverage and accuracy. The interested reader is referred to [4,6] for details on the algorithm. In short, self-similarity of consecutive heartbeats is exploited for interval estimation. Thus, no a priori information about the signal’s morphology has to be available, which makes the algorithm modality-independent. To check the signal’s reliability, the algorithm calculates the ratio of the peak to the area under the curve of an autocorrelation-type function in a moving window. If this ratio is below a user-defined, fixed threshold, the algorithm deems the segment too noisy and reports no beat/interval. Thus, in addition to accuracy in terms of estimation error, the algorithm needs to be evaluated in terms of coverage, i.e., the ratio of the number of detected beats, $N_{\mathrm{det}}$, to the number of reference annotations, $N_{\mathrm{ref}}$:

$$\mathrm{coverage} = \frac{N_{\mathrm{det}}}{N_{\mathrm{ref}}}$$

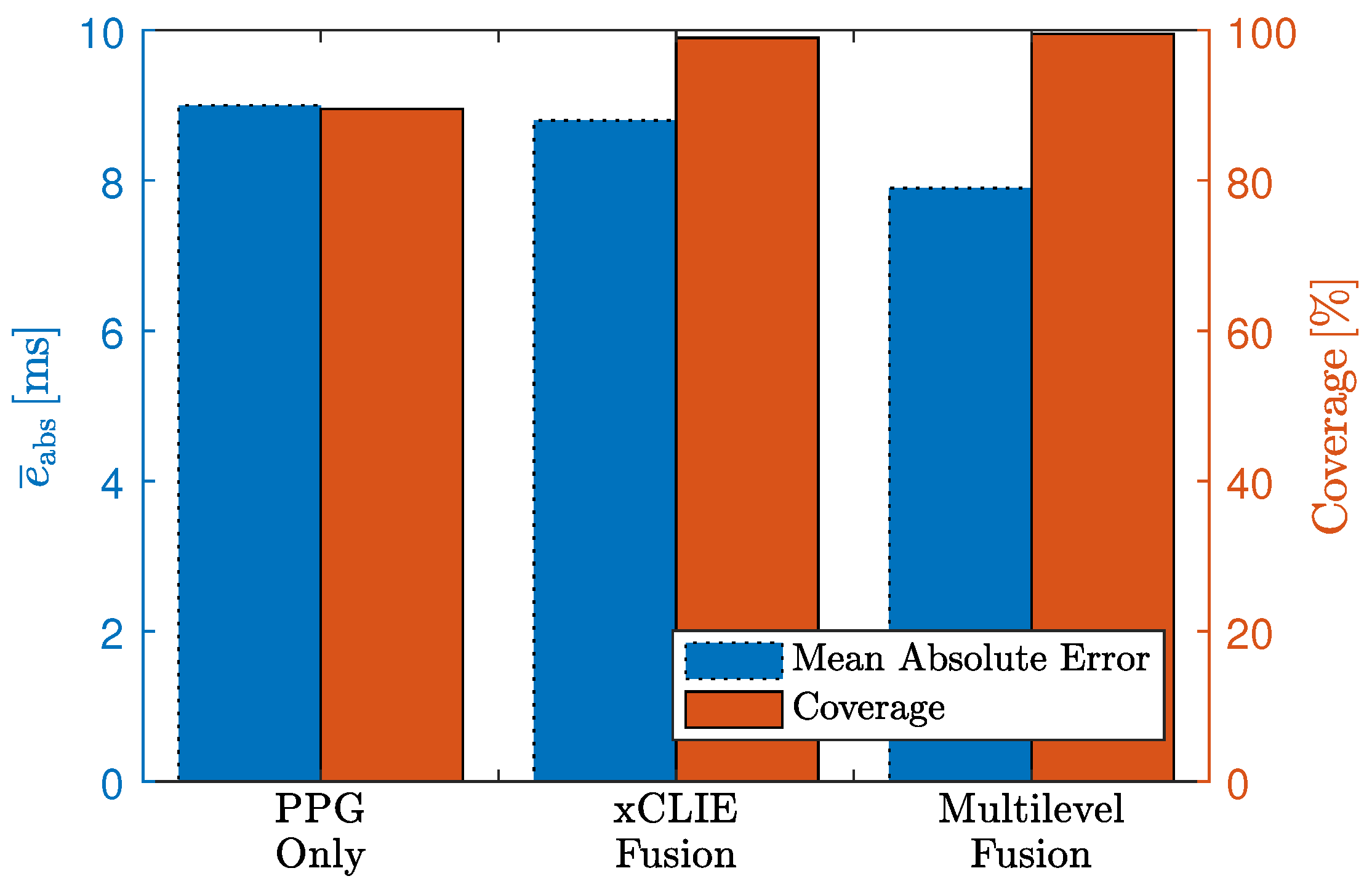

To analyze the effect of motion quantitatively, the estimation error and the coverage were analyzed for each modality individually, when fusing all modalities using the original algorithm, and when using the adaptive prior introduced in [6]. Evaluation was performed in terms of absolute interval estimation error in milliseconds and coverage in percent.
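The two evaluation metrics can be sketched as follows. The example assumes that estimated and reference intervals are already aligned one-to-one and given in seconds, and that a rejected segment is marked with NaN; this alignment convention and the function name are assumptions for illustration, not details taken from [4,6].

```python
import numpy as np


def evaluate_intervals(estimated_s, reference_s):
    """Return (mean absolute error in ms, coverage in percent).

    estimated_s: estimated beat-to-beat intervals in seconds, NaN where
    the estimator reported no interval (hypothetical convention).
    reference_s: reference intervals in seconds, same length.
    """
    est = np.asarray(estimated_s, dtype=float)
    ref = np.asarray(reference_s, dtype=float)
    reported = ~np.isnan(est)
    abs_error_ms = 1000.0 * np.abs(est[reported] - ref[reported])
    coverage_percent = 100.0 * reported.sum() / len(ref)
    return abs_error_ms.mean(), coverage_percent
```

Reporting both numbers together matters because an estimator can trade one for the other: rejecting more segments typically lowers the error while also lowering the coverage.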

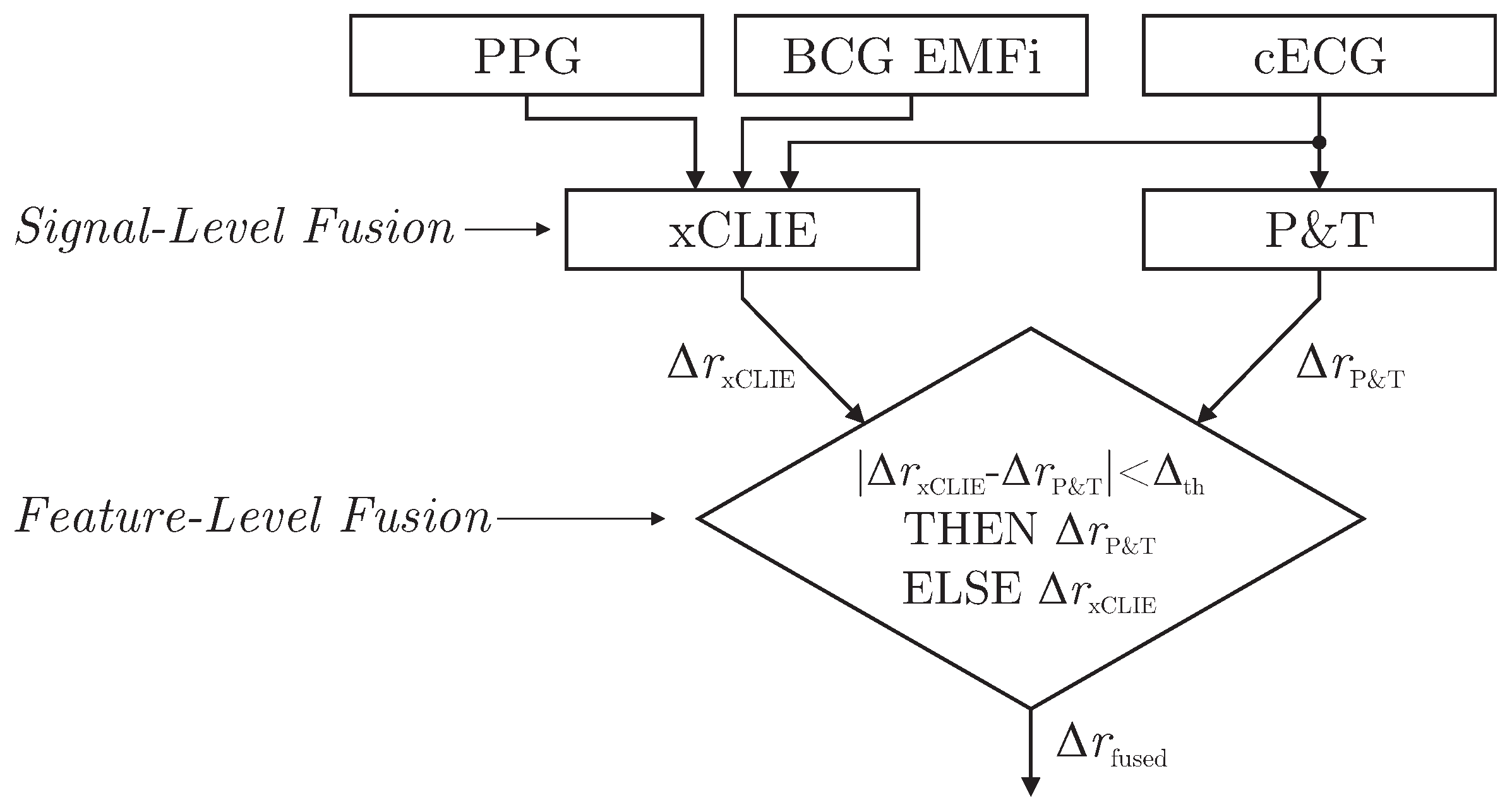

As demonstrated in [6], the xCLIE algorithm is a powerful tool to estimate beat-to-beat intervals from multimodal sources. Moreover, the fusion of multiple channels was shown to increase both accuracy and robustness. In particular, the capacitively coupled ECG is known to be disturbed easily by motion artifacts due to triboelectric [10] and other effects [11]. On the other hand, with the exception of cECG, all cardiac-related signals recorded by the sensors of the chair are based on mechanical effects. Thus, even under perfect measurement conditions, estimated intervals would differ from those derived from the reference ECG if the mechanical pulse changed its position or shape relative to the QRS complex due to physiological effects. Therefore, a mixed-level fusion algorithm was proposed, as illustrated in Figure 6.

The xCLIE algorithm was used to estimate intervals

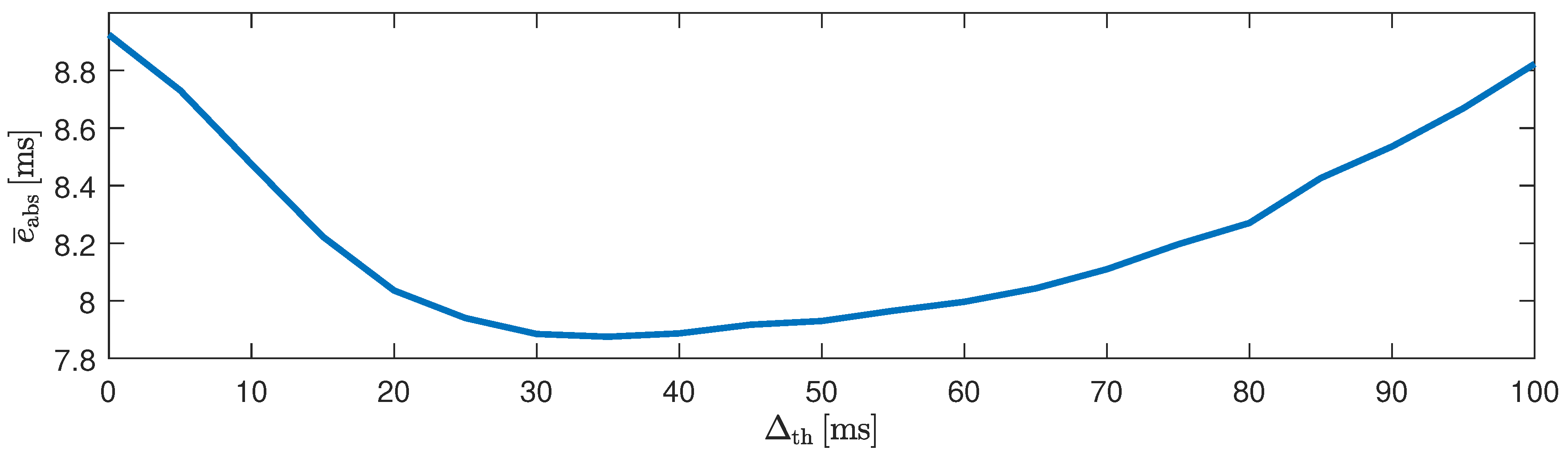

, fusing cardiac-related signal on the signal level. In addition, an implementation of the Pan and Tompkins (P&T) algorithm [

29] was used to localize R-peaks in the capacitive ECG and derive the respective intervals

. Next, a straightforward decision rule performed fusion on the feature level: if an interval derived from cECG via QRS-detection was available and the absolute difference of both estimators was smaller than a heuristically determined threshold

, the ECG-derived interval

was chosen for

. Otherwise,

was used.
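The feature-level decision rule can be written compactly. The sketch below is an illustration under assumptions: intervals are given in seconds, a missing estimate is represented by `None`, and the function name and the threshold value in the usage example are hypothetical (the text only states that the threshold was determined heuristically).

```python
def fuse_interval(t_xclie, t_ecg, threshold):
    """Pick the cECG-derived interval only if it agrees with xCLIE.

    t_xclie: interval from signal-level fusion with xCLIE, or None.
    t_ecg: interval from QRS detection on the capacitive ECG, or None.
    threshold: maximum allowed absolute difference between both estimates.
    """
    if (
        t_ecg is not None
        and t_xclie is not None
        and abs(t_ecg - t_xclie) < threshold
    ):
        return t_ecg
    # Otherwise fall back to the xCLIE estimate (which may itself be None).
    return t_xclie


# Usage with a made-up threshold of 50 ms:
fused = fuse_interval(t_xclie=0.80, t_ecg=0.82, threshold=0.05)
```

The rule uses the multimodal estimate as a plausibility check: an R-peak interval corrupted by a motion artifact will usually disagree with the xCLIE value and is then discarded.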