A Study on Immersion and Presence of a Portable Hand Haptic System for Immersive Virtual Reality

Abstract

1. Introduction

- A portable system that effectively provides diverse haptic reactions at low cost was implemented so that the hand haptic system can be used easily and conveniently by anyone.

- Technical and statistical experiments were conducted to determine whether the proposed system gives users higher presence and greater immersion in immersive virtual reality.

2. Related Works

3. Portable Hand Haptic System

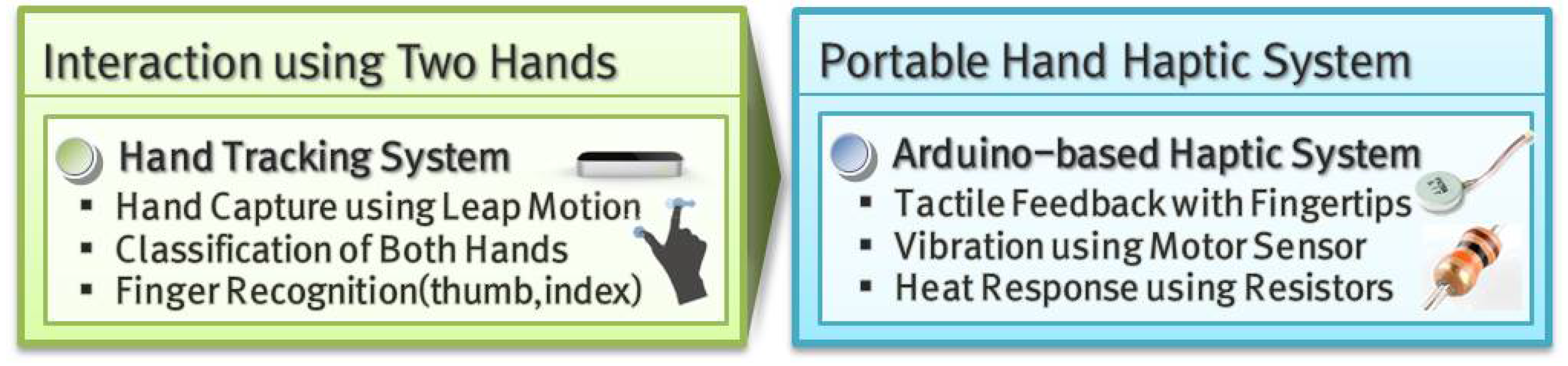

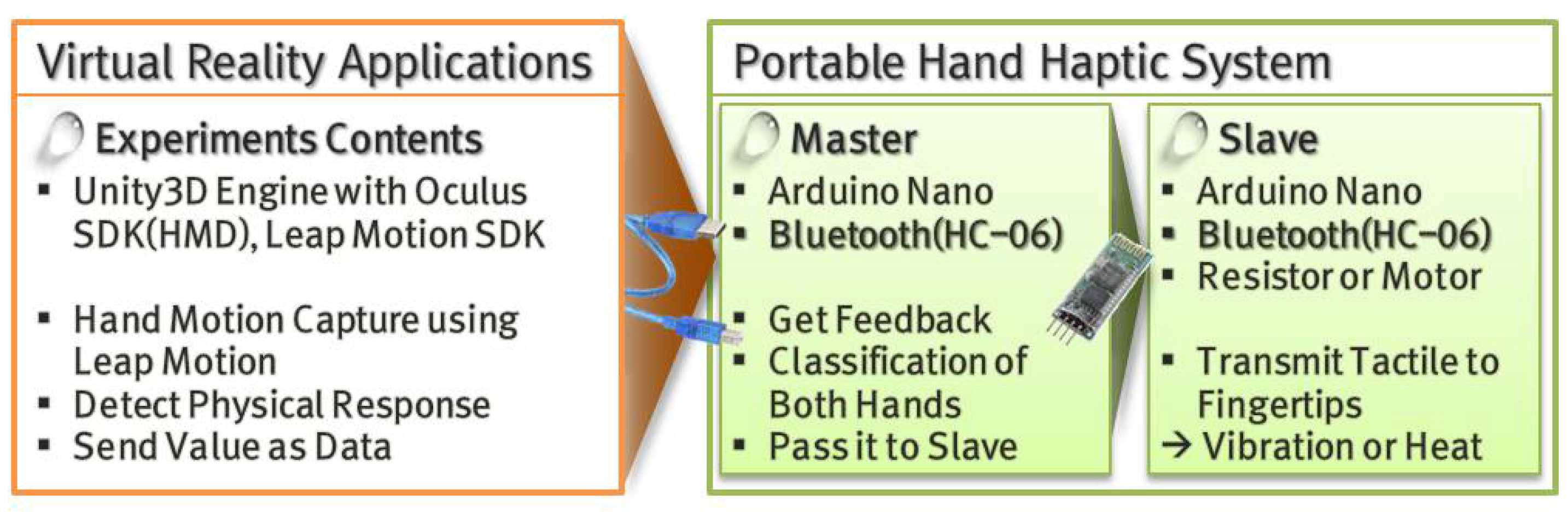

3.1. System Overview

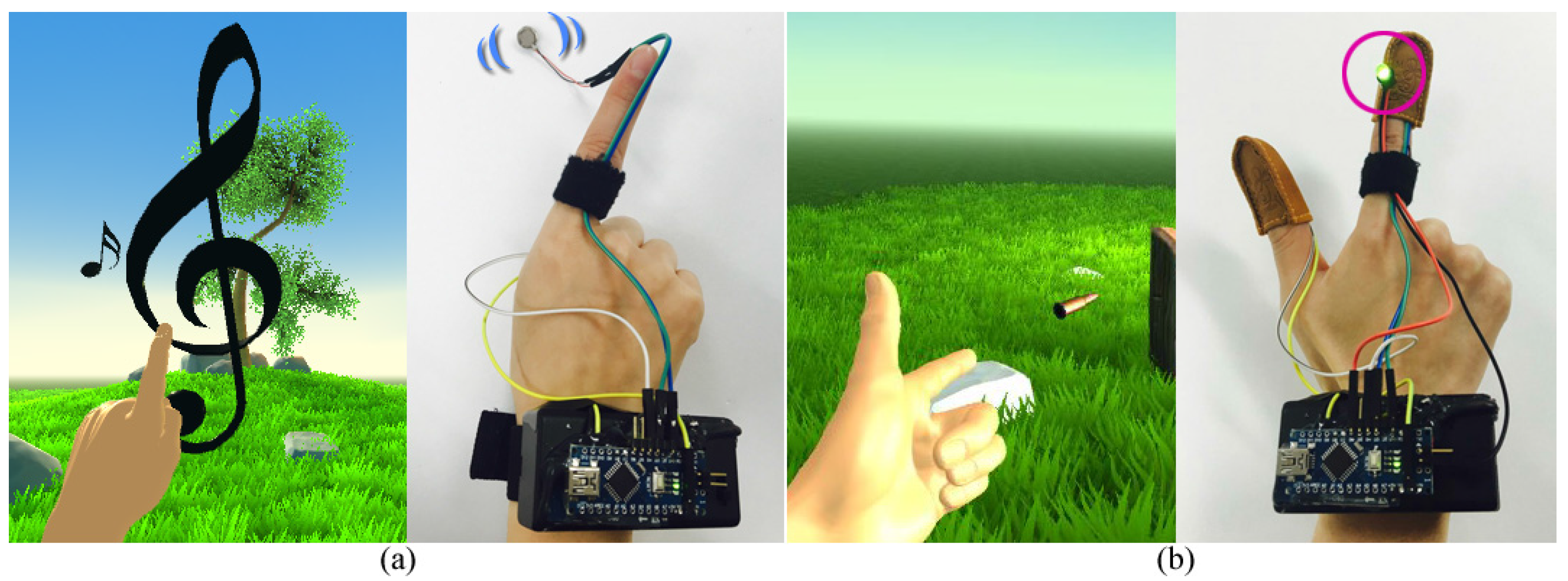

3.2. Arduino-Based Haptic System

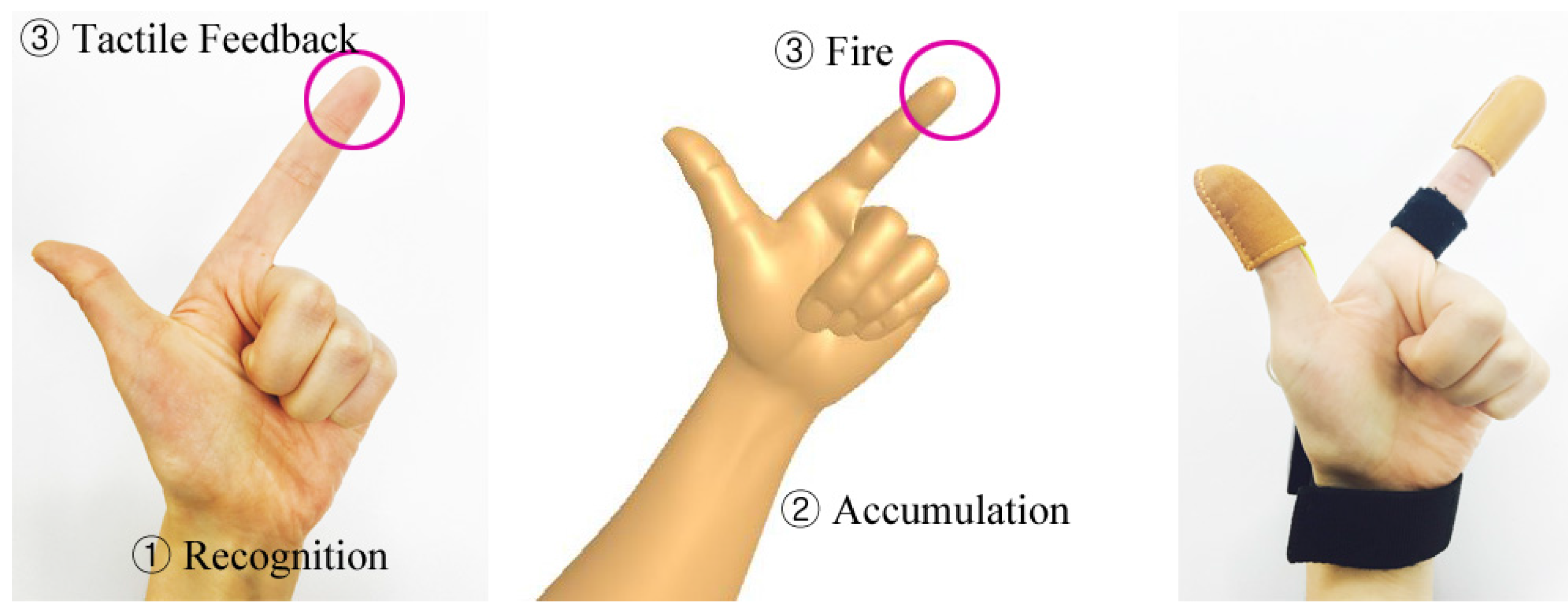

3.3. Interaction

Algorithm 1. Finger recognition based on Leap Motion.

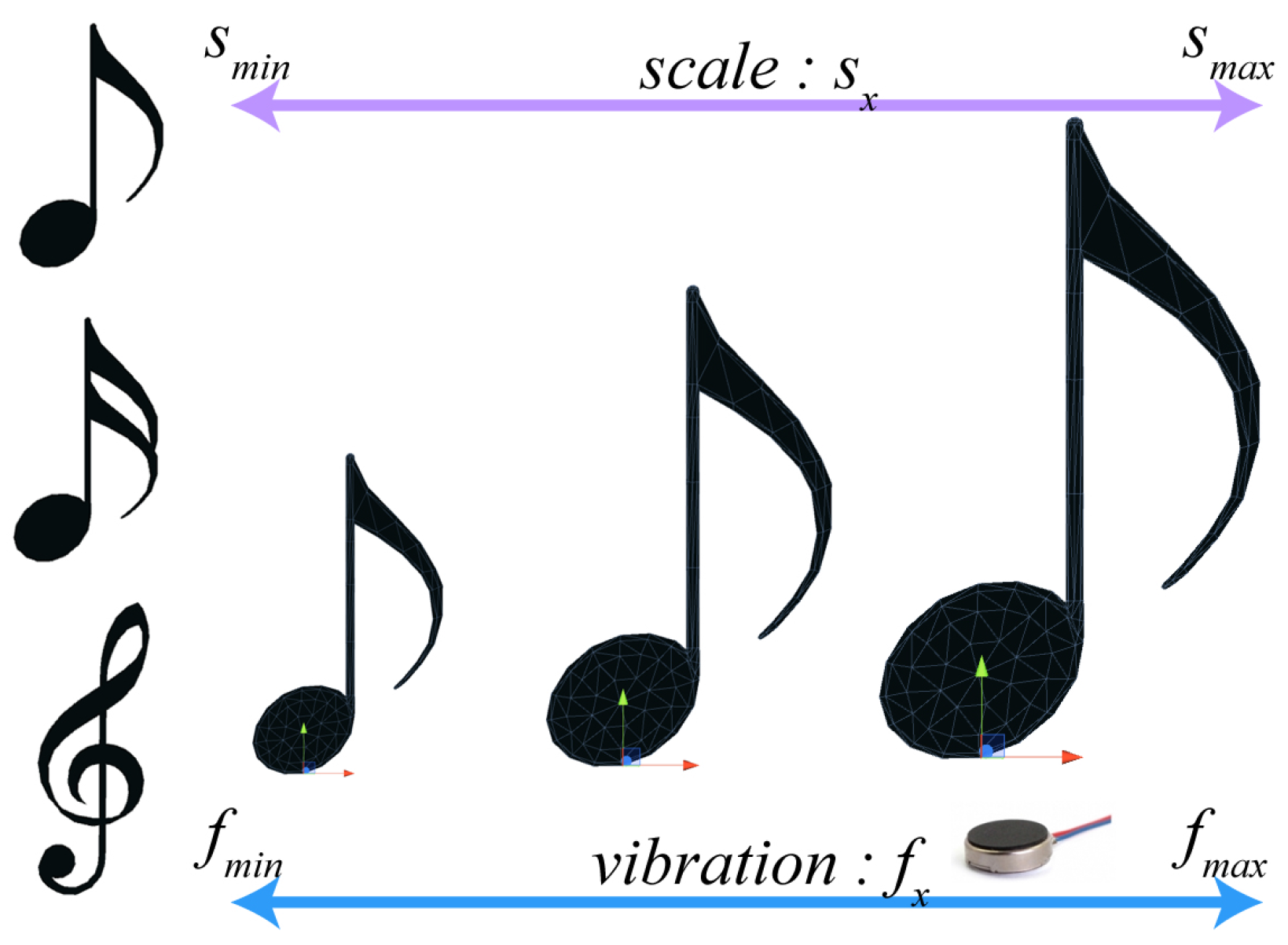

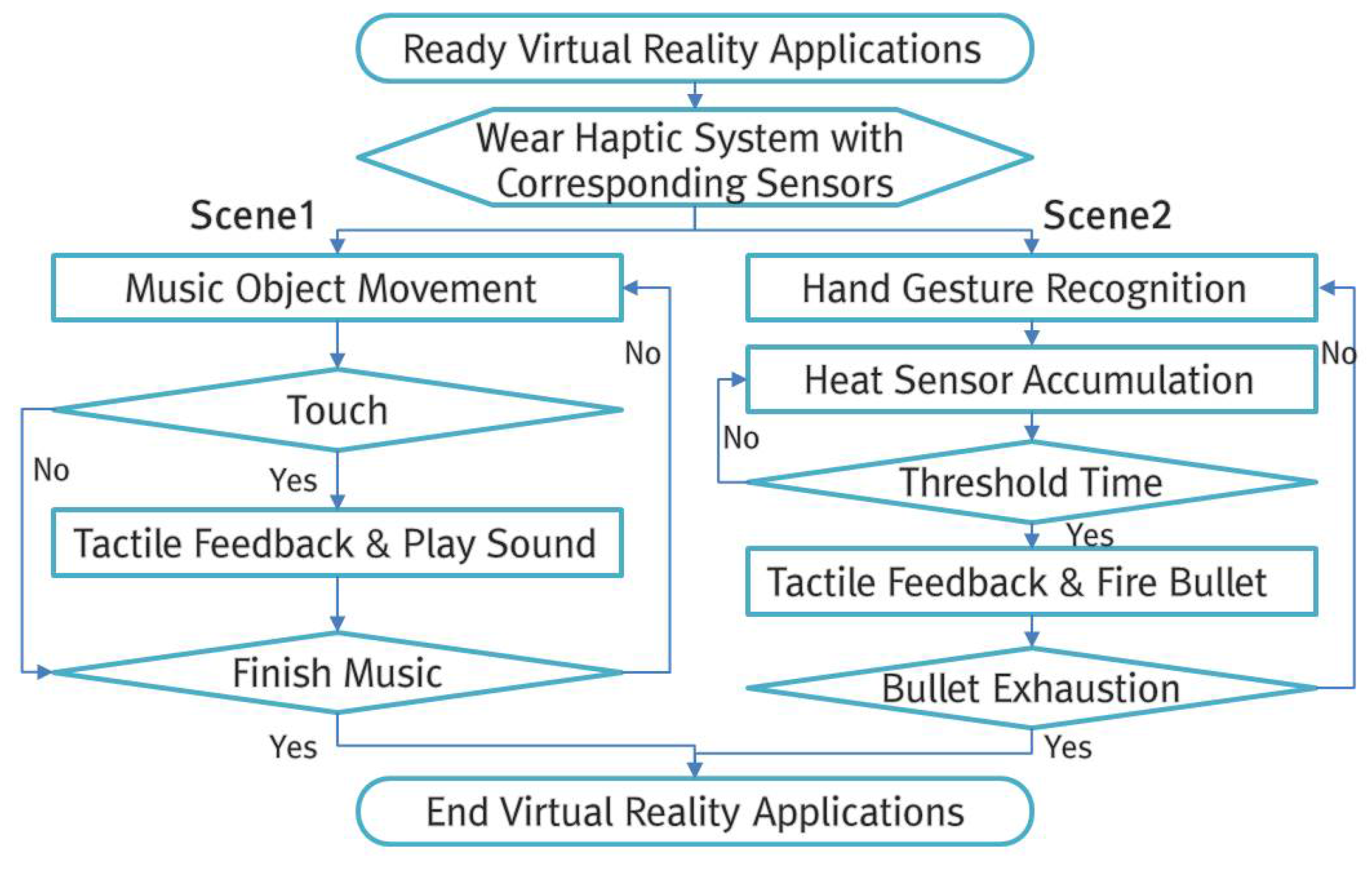

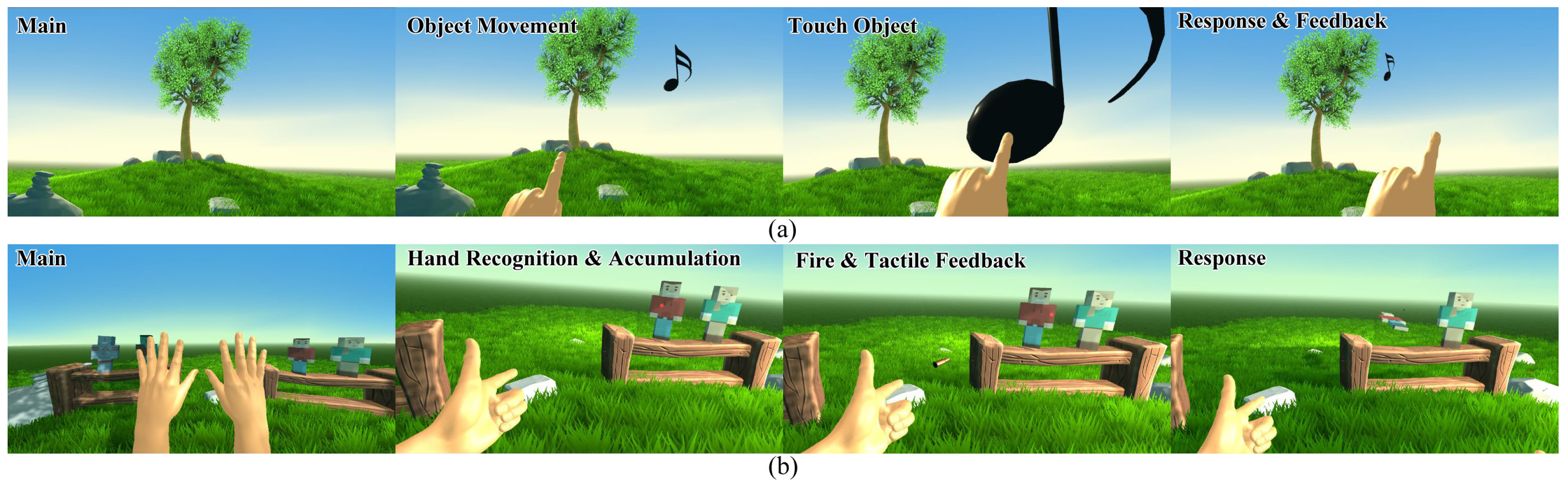

4. Virtual Reality Applications

Algorithm 2. Hand recognition and heat response method.

5. Experimental Results and Analysis

6. Limitations

7. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Question | | | | |
|---|---|---|---|---|
| Phase | Standard | Minimum | Maximum | Mean (Standard Deviation, SD) |
| (a) Appropriateness of VR application and haptic system | | | | |
| Scene1 | 3 (Appropriate) | 2.7 | 5 | 4.1 (0.61) |
| Scene2 | 3 (Appropriate) | 2.5 | 5 | 3.8 (0.59) |
| (b) Accuracy of coincidence of object touch and vibration | | | | |
| Accuracy | 3 (Accurate) | 2 | 3 | 2.94 (0.22) |
| (c) Immersion provided by vibration | | | | |
| Only H | 5 (Real) | 1 | 4 | 2.73 (0.94) |
| With S | 5 (Real) | 3.5 | 5 | 4.43 (0.52) |
| Vibration Wilcoxon Result (p-value) H : S | | | | |
| (d) Accuracy of heat reaction delivered from fingertip | | | | |
| Accuracy | 3 (Accurate) | 1 | 3 | 2.23 (0.44) |
| (e) Immersion provided by heat reaction | | | | |
| Only H | 5 (Real) | 2 | 4 | 3.09 (0.69) |
| With S | 5 (Real) | 2 | 5 | 3.32 (0.76) |
| Heat Wilcoxon Result (p-value) H : S | | | | |
| Total Wilcoxon Result (p-value) H : S | | | | |
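The table above compares the H and S conditions with a Wilcoxon test. As a rough illustration of how such a p-value can be obtained, the sketch below implements an exact two-sided Wilcoxon signed-rank test in plain Python. It assumes the paired (signed-rank) form of the test, which the table does not state explicitly, and the ratings in the usage example are invented for illustration; they are not the study's data. The function name `signed_rank_exact` is hypothetical.

```python
from itertools import product


def signed_rank_exact(first, second):
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Returns (w_plus, p_value). Zero differences are dropped and tied
    absolute differences receive average ranks. All 2**n sign patterns
    are enumerated, so this is only practical for small samples.
    """
    diffs = [b - a for a, b in zip(first, second) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0

    # Rank the absolute differences, averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    centre = sum(ranks) / 2  # expected W+ under the null hypothesis
    observed = abs(w_plus - centre)

    # Count sign patterns at least as extreme as the observed one.
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - centre) >= observed - 1e-12:
            extreme += 1
    return w_plus, extreme / 2 ** n


if __name__ == "__main__":
    # Hypothetical 5-point immersion ratings from five participants.
    only_h = [1, 2, 3, 4, 5]
    with_s = [2, 4, 6, 8, 10]
    print(signed_rank_exact(only_h, with_s))
```

For larger samples, a library routine with a normal approximation would normally be preferred over full enumeration.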

| | | Mean (Raw Data) | Standard Deviation (SD) |
|---|---|---|---|
| Total | H | 141.0 (5.88) | 5.31 |
| Total | S(heat) | 148.2 (6.18) | 6.49 |
| Total | S(vibration) | 150.9 (6.29) | 7.92 |
| Realism | H | 41.7 (5.96) | 2.10 |
| Realism | S(heat) | 42.9 (6.13) | 2.62 |
| Realism | S(vibration) | 43.2 (6.17) | 3.03 |
| Possibility of act | H | 24.5 (6.13) | 1.80 |
| Possibility of act | S(heat) | 24.9 (6.23) | 1.58 |
| Possibility of act | S(vibration) | 25.2 (6.30) | 1.94 |
| Quality of interface | H | 18.0 (6.00) | 1.18 |
| Quality of interface | S(heat) | 18.6 (6.20) | 1.28 |
| Quality of interface | S(vibration) | 18.6 (6.20) | 1.28 |
| Possibility of examine | H | 18.5 (6.17) | 1.20 |
| Possibility of examine | S(heat) | 19.2 (6.40) | 1.17 |
| Possibility of examine | S(vibration) | 19.2 (6.40) | 1.33 |
| Self-evaluation of performance | H | 12.2 (6.10) | 1.17 |
| Self-evaluation of performance | S(heat) | 12.0 (6.00) | 1.26 |
| Self-evaluation of performance | S(vibration) | 12.0 (6.00) | 1.41 |
| Sounds | H | 18.1 (6.03) | 0.29 |
| Sounds | S(heat) | 18.3 (6.10) | 1.21 |
| Sounds | S(vibration) | 19.5 (6.50) | 1.25 |
| Haptic | H | 8.0 (4.0) | 1.2 |
| Haptic | S(heat) | 12.3 (6.15) | 0.81 |
| Haptic | S(vibration) | 13.2 (6.60) | 0.96 |
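The relationship between the raw scores and the parenthesised values in the table above can be checked with a short script. The sketch below assumes that each parenthesised value is the raw score divided by the number of questionnaire items in that factor. The item counts (7, 4, 3, 3, 2, 3, 2, for 24 in total) are not given in the text; they are inferred here from the ratios in the table. The script also checks that the seven factor scores add up to the Total row for each condition.

```python
# Item counts per factor: an inference from the table's ratios, not a stated fact.
ITEM_COUNTS = {
    "Realism": 7,
    "Possibility of act": 4,
    "Quality of interface": 3,
    "Possibility of examine": 3,
    "Self-evaluation of performance": 2,
    "Sounds": 3,
    "Haptic": 2,
}

CONDITIONS = ("H", "S(heat)", "S(vibration)")

# (raw score, parenthesised value) per condition, copied from the table.
SCORES = {
    "Realism": [(41.7, 5.96), (42.9, 6.13), (43.2, 6.17)],
    "Possibility of act": [(24.5, 6.13), (24.9, 6.23), (25.2, 6.30)],
    "Quality of interface": [(18.0, 6.00), (18.6, 6.20), (18.6, 6.20)],
    "Possibility of examine": [(18.5, 6.17), (19.2, 6.40), (19.2, 6.40)],
    "Self-evaluation of performance": [(12.2, 6.10), (12.0, 6.00), (12.0, 6.00)],
    "Sounds": [(18.1, 6.03), (18.3, 6.10), (19.5, 6.50)],
    "Haptic": [(8.0, 4.0), (12.3, 6.15), (13.2, 6.60)],
}
TOTALS = [(141.0, 5.88), (148.2, 6.18), (150.9, 6.29)]


def per_item_ok(raw, reported, items, tol=0.005 + 1e-9):
    """True if raw / items matches the reported value to two decimals."""
    return abs(raw / items - reported) <= tol


def check_table():
    """Return a list of (factor, condition) pairs that fail either check."""
    problems = []
    for factor, rows in SCORES.items():
        for cond, (raw, reported) in zip(CONDITIONS, rows):
            if not per_item_ok(raw, reported, ITEM_COUNTS[factor]):
                problems.append((factor, cond))
    total_items = sum(ITEM_COUNTS.values())
    for i, (cond, (raw, reported)) in enumerate(zip(CONDITIONS, TOTALS)):
        factor_sum = round(sum(rows[i][0] for rows in SCORES.values()), 1)
        if factor_sum != raw or not per_item_ok(raw, reported, total_items):
            problems.append(("Total", cond))
    return problems


if __name__ == "__main__":
    print(check_table())
```

An empty list means every row is consistent with the assumed item counts. Under this reading, the Haptic factor shows the largest per-item gap between H (4.0) and the two S conditions (6.15 and 6.60).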

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, M.; Jeon, C.; Kim, J. A Study on Immersion and Presence of a Portable Hand Haptic System for Immersive Virtual Reality. Sensors 2017, 17, 1141. https://doi.org/10.3390/s17051141
