Survey on Ranging Sensors and Cooperative Techniques for Relative Positioning of Vehicles

Abstract

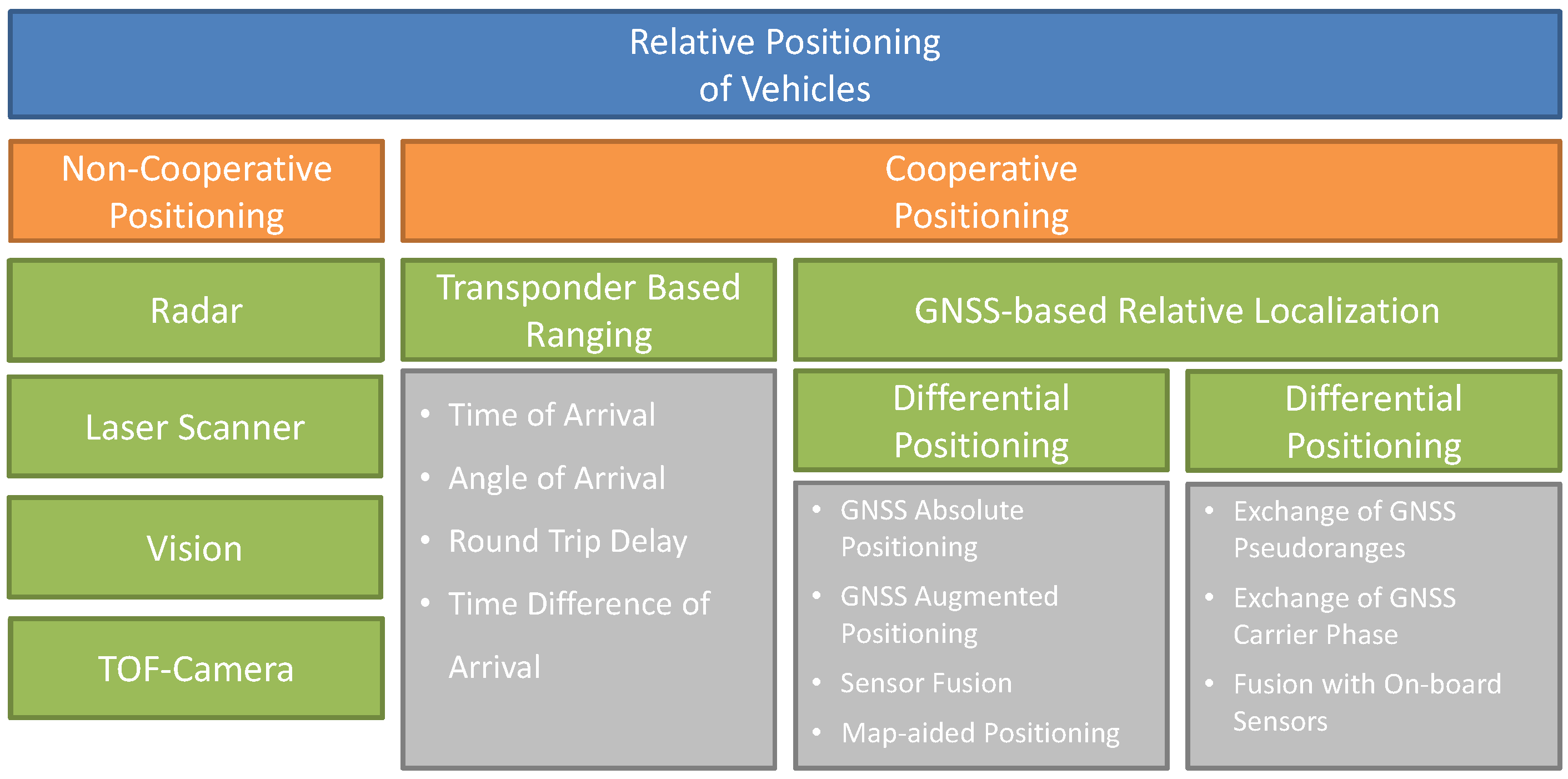

1. Introduction

2. Requirement Analysis

2.1. Accuracy

2.2. Reliability

2.3. Availability

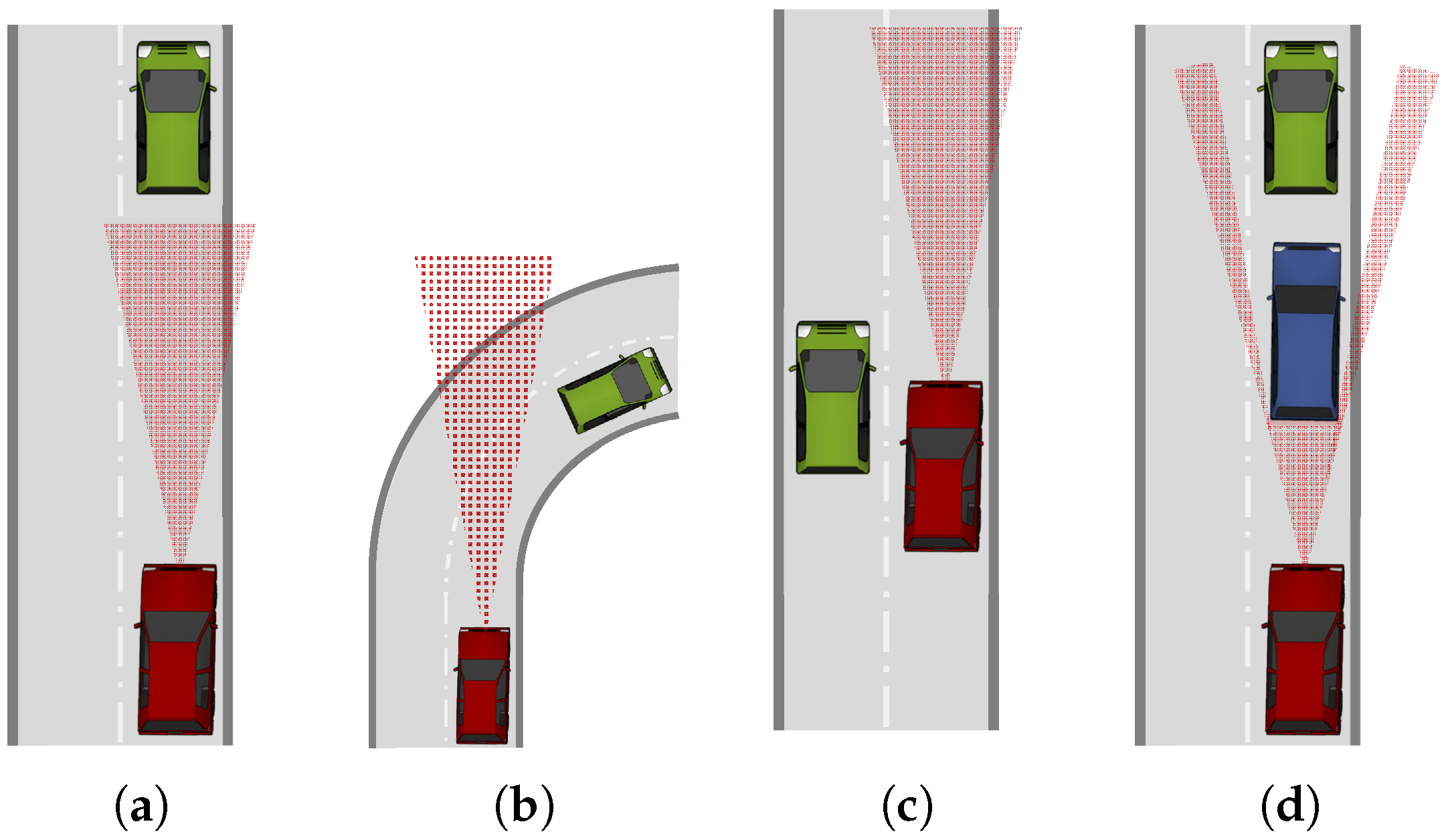

2.4. Detecting Range and Field of View

2.5. Dimension

2.6. Target Resolution and Identification

2.7. System Delay

2.8. Non-Technical Requirements

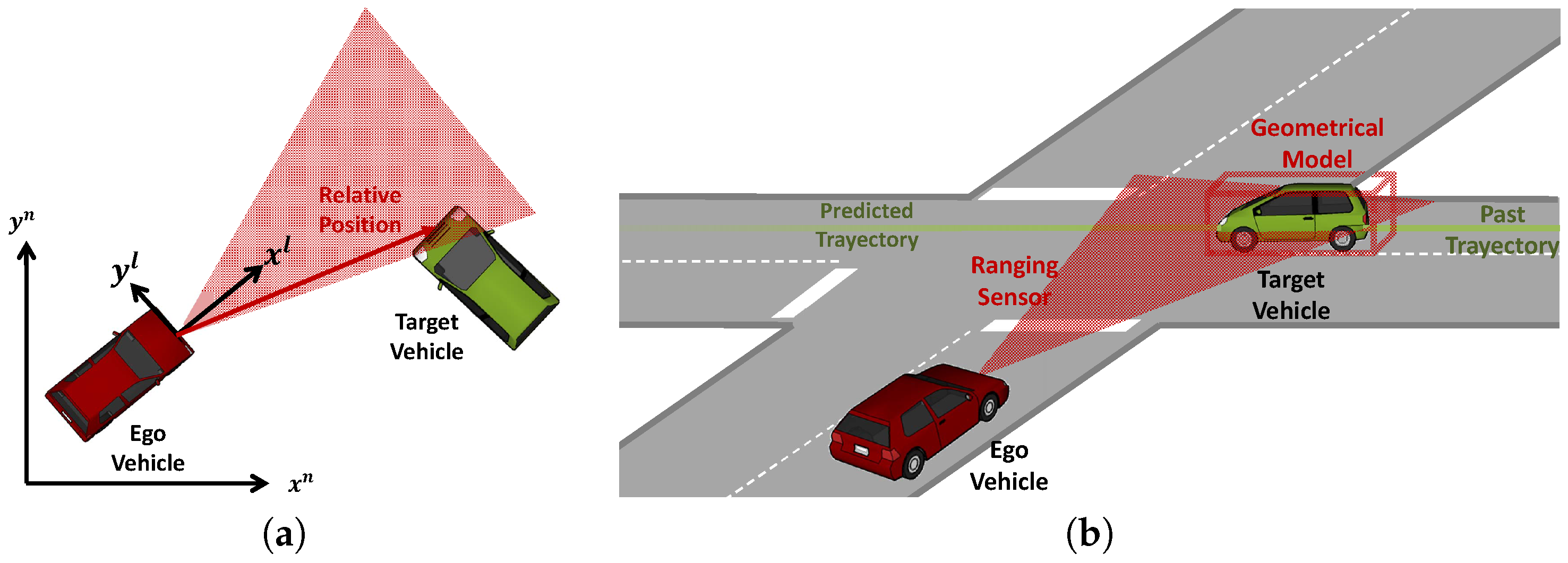

3. Non-Cooperative Relative Positioning

3.1. Radio Ranging

3.2. Laser Scanners

3.3. Time-Of-Flight Cameras

3.4. Vision-Based Solutions

4. Cooperative Relative Positioning

4.1. Transponder-Based Ranging

4.2. GNSS-Based Relative Localization

4.2.1. Absolute Positioning

4.2.2. Relative Positioning

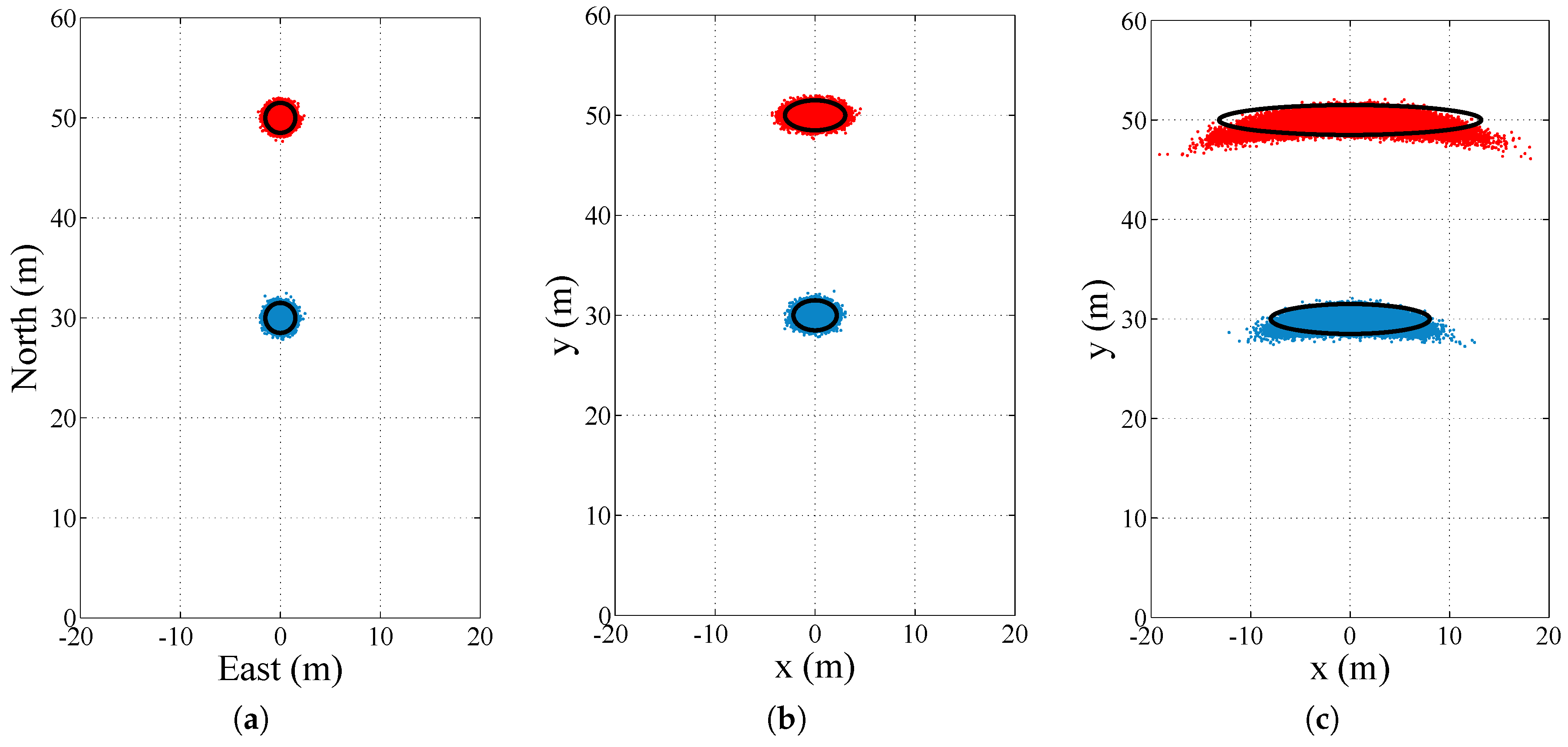

4.2.3. Relative Positioning with Raw GNSS Measurements

4.2.4. Differential Carrier Phase Ambiguity Resolution

4.3. Coordinate Frame Transformation

4.4. V2V Communication Performance

5. Sensor Fusion for Relative Vehicle Positioning

6. Conclusions

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| ACC | Adaptive Cruise Control |
| CAM | Cooperative Awareness Message |
| ETSI | European Telecommunications Standards Institute |
| DGPS | Differential GPS |
| FCA | Forward Collision Avoidance |
| FMCW | Frequency-Modulated Continuous Wave |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| INS | Inertial Navigation System |
| ITS | Intelligent Transportation Systems |
| ISM | Industrial, Scientific and Medical |
| LCA | Lane Change Assistant |
| LIDAR | Light Detection and Ranging |
| TOF | Time-Of-Flight |
| RSS | Received Signal Strength |
| RTD | Round-Trip Delay |
| SAE | Society of Automotive Engineers |
| UWB | Ultra-Wideband |
| V2V | Vehicle-to-Vehicle |

References

- Leonard, J.; How, J.; Teller, S.; Berger, M.; Campbell, S.; Fiore, G.; Fletcher, L.; Frazzoli, E.; Huang, A.; Karaman, S. A perception-driven Autonomous Urban Vehicle. J. Field Robot. 2008, 25, 727–774. [Google Scholar] [CrossRef]

- ETSI TS 102 637-2. Intelligent Transport Systems (ITS)—Vehicular Communications—Basic Set of Applications—Part 2: Specification of Cooperative Awareness Basic Service; Technical Report; European Telecommunications Standards Institute: Sophia-Antipolis, France, 2010. [Google Scholar]

- J2735. Dedicated Short Range Communications (DSRC) Message Set Dictionary; Technical Report; SAE International: Warrendale, PA, USA, 2015. [Google Scholar]

- Ess, A.; Schindler, K.; Leibe, B.; Van Gool, L. Object Detection and Tracking for Autonomous Navigation in Dynamic Environments. Int. J. Robot. Res. 2010, 29, 1707–1725. [Google Scholar] [CrossRef]

- Petrovskaya, A.; Thrun, S. Model based Vehicle Detection and Tracking for Autonomous Urban Driving. Auton. Robot. 2009, 26, 123–139. [Google Scholar] [CrossRef]

- Shackleton, J.; VanVoorst, B.; Hesch, J. Tracking People with a 360-Degree Lidar. In Proceedings of the Seventh IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Boston, MA, USA, 29 August–1 September 2010; pp. 420–426.

- Shladover, S.E.; Tan, S.K. Analysis of Vehicle Positioning Accuracy Requirements for Communication-based Cooperative Collision Warning. J. Intell. Transp. Syst. 2006, 10, 131–140. [Google Scholar] [CrossRef]

- Ober, P.B. Integrity Prediction & Monitoring of Navigation Systems; Integricom Publishers: Leiden, The Netherlands, 2003. [Google Scholar]

- ISO 26262. Road Vehicles Functional Safety; Technical Report; International Organization for Standard (ISO): Geneva, Switzerland, 2011. [Google Scholar]

- Milanes, V.; Shladover, S.; Spring, J.; Nowakowski, C.; Kawazoe, H.; Nakamura, M. Cooperative Adaptive Cruise Control in Real Traffic Situations. IEEE Trans. Intell. Transp. Syst. 2014, 15, 296–305. [Google Scholar] [CrossRef]

- Widmann, G.R.; Daniels, M.K.; Hamilton, L.; Humm, L.; Riley, B.; Schiffmann, J.K.; Schnelker, D.E.; Wishon, W.H. SAE TECHNICAL Comparison of Lidar-Based and Radar-Based Adaptive Cruise Control Systems. In Proceedings of the SAE 2000 World Congress, Detroit, MI, USA, 6–9 March 2000.

- Caveney, D. Cooperative Vehicular Safety Applications. IEEE Control Syst. Mag. 2010, 30, 38–53. [Google Scholar] [CrossRef]

- Obst, M.; Hobert, L.; Reisdorf, P. Multi-Sensor Data Fusion for Checking Plausibility of V2V Communications by Vision-Based Multiple-Object Tracking. In Proceedings of the IEEE Vehicular Networking Conference (VNC), Paderborn, Germany, 3–5 December 2014; pp. 143–150.

- Sand, S.; Dammann, A.; Mensing, C. Positioning in Wireless Communications Systems; Wiley: Hoboken, NJ, USA, 2014. [Google Scholar]

- Alam, N.; Balaie, A.; Dempster, A. Dynamic Path Loss Exponent and Distance Estimation in a Vehicular Network Using Doppler Effect and Received Signal Strength. In Proceedings of the 72nd Vehicular Technology Conference Fall (VTC 2010-Fall), Ottawa, ON, Canada, 6–9 September 2010; pp. 1–5.

- Parker, R.; Valaee, S. Cooperative Vehicle Position Estimation. In Proceedings of the 7th IEEE International Conference on Communications ICC, Glasgow, Scotland, 24–28 June 2007; pp. 5837–5842.

- Alam, N.; Balaei, A.T.; Dempster, A.G. Range and Range-Rate Measurements Using DSRC: Facts and Challenges. In Proceedings of the IGNSS Symposium, Queensland, Australia, 1–3 December 2009.

- Kukshya, V.; Krishnan, H.; Kellum, C. Design of a System Solution for Relative Positioning of Vehicles using Vehicle-to-Vehicle Radio Communications during GPS outages. In Proceedings of the 62nd IEEE Vehicular Technology Conference (VTC-2005-Fall), Dallas, TX, USA, 25–28 September 2005; Volume 2, pp. 1313–1317.

- Schneider, M. Automotive Radar-Status and Trends. In Proceedings of the German Microwave Conference (GeMiC), Ulm, Germany, 5–7 April 2005.

- Bloecher, H.L.; Sailer, A.; Rollmann, G.; Dickmann, J. 79 GHz UWB automotive short range radar–Spectrum allocation and technology trends. Adv. Radio Sci. 2009, 7, 61–65. [Google Scholar] [CrossRef]

- 2011/485/EU. Commission Implementing Decision of 29 July 2011 Amending Decision 2005/50/EC on the Harmonisation of the 24 GHz Range Radio Spectrum Band for the Time-Limited Use by Automotive Short-Range Radar Equipment in the Community; Technical Report; European Commission: Brussels, Belgium, 2011. [Google Scholar]

- Goppelt, M.; Blöcher, H.L.; Menzel, W. Analytical Investigation of Mutual Interference between Automotive FMCW Radar Sensors. In Proceedings of the German Microwave Conference (GeMIC), Darmstadt, Germany, 14–16 March 2011; pp. 1–4.

- Goppelt, M.; Blöcher, H.L.; Menzel, W. Automotive Radar—Investigation of Mutual Interference Mechanisms. Adv. Radio Sci. 2010, 8, 55–60. [Google Scholar] [CrossRef] [Green Version]

- Issakov, V. Microwave Circuits for 24 GHz Automotive Radar in Silicon-based Technologies; Springer: Berlin/Heidelberg, Germany, 2010; p. 208. [Google Scholar]

- Schoor, M.; Yang, B. High-Resolution Angle Estimation for an Automotive FMCW Radar Sensor. In Proceedings of the International Radar Symposium (IRS), Cologne, Germany, 5–7 September 2007.

- Röckl, M.; Gacnik, J.; Schomerus, J.; Strang, T.; Kranz, M. Sensing the Environment for Future Driver Assistance combining Autonomous and Cooperative Appliances. In Proceedings of the 4th International Workshop on Vehicle-to-Vehicle Communications (V2VCOM), Eindhoven, The Netherlands, 3 June 2008; pp. 45–56.

- Gohring, D.; Wang, M.; Schnurmacher, M.; Ganjineh, T. Radar/Lidar Sensor Fusion for Car-following on Highways. In Proceedings of the 5th International Conference on Automation, Robotics and Applications (ICARA), Barcelona, Spain, 6–8 December 2011; pp. 407–412.

- Acharya, S.; Tracey, C.; Rafii, A. System Design of Time-of-Flight Range Camera for Car Park Assist and Backup Application. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Anchorage, AK, USA, 23–28 June 2008; pp. 1–6.

- Lange, R.; Seitz, P. Solid-state Time-of-flight Range Camera. IEEE J. Quantum Electron. 2001, 37, 390–397. [Google Scholar] [CrossRef]

- Foix, S.; Alenya, G.; Torras, C. Lock-in Time-of-Flight (ToF) Cameras: A Survey. IEEE Sens. J. 2011, 11, 1917–1926. [Google Scholar] [CrossRef] [Green Version]

- Elkhalili, O.; Schrey, O.; Ulfig, W.; Brockherde, W.; Hosticka, B.; Mengel, P.; Listl, L. A 64x8 Pixel 3-D CMOS Time of Flight Image Sensor for Car Safety Applications. In Proceedings of the 32nd European Solid-State Circuits Conference (ESSCIRC), Montreux, Switzerland, 19–21 September 2006; pp. 568–571.

- Hsu, S.; Acharya, S.; Rafii, A.; New, R. Performance of a Time-of-Flight Range Camera for Intelligent Vehicle Safety Applications. In Advanced Microsystems for Automotive Applications; Valldorf, J., Gessner, W., Eds.; VDI-Buch, Springer: Berlin/Heidelberg, Germany, 2006; pp. 205–219. [Google Scholar]

- Bernini, N.; Bertozzi, M.; Castangia, L.; Patander, M.; Sabbatelli, M. Real-time Obstacle Detection using Stereo Vision for Autonomous Ground Vehicles: A Survey. In Proceedings of the IEEE 17th International Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 873–878.

- Sun, Z.; Bebis, G.; Miller, R. On-road Vehicle Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 694–711. [Google Scholar] [PubMed]

- Sivaraman, S.; Trivedi, M. Looking at Vehicles on the Road: A Survey of Vision-Based Vehicle Detection, Tracking, and Behavior Analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795. [Google Scholar] [CrossRef]

- Singh, S.; Nelakuditi, S.; Roy Choudhury, R.; Tong, Y. Your Smartphone Can Watch the Road and You: Mobile Assistant for Inattentive Drivers. In Proceedings of the 13th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Hilton Head, SC, USA, 11–14 June 2012; pp. 261–262.

- Ren, Z.; Wang, C.; He, J. Vehicle Detection Using Android Smartphones. In Proceedings of the 7th International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Bolton Landing, NY, USA, 26–29 June 2013.

- Chang, K.; Oh, B.H.; Hong, K.S. An Implementation of Smartphone-Based Driver Assistance System Using Front and Rear Camera. In Proceedings of the IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–13 January 2014; pp. 280–281.

- Haberjahn, M. Multilevel Datenfusion Konkurrierender Sensoren in der Fahrzeugumfelderfassung. Ph.D. Thesis, Humboldt-Universität zu Berlin, Berlin, Germany, 2013. [Google Scholar]

- Perrollaz, M.; Labayrade, R.; Royere, C.; Hautiere, N.; Aubert, D. Long Range Obstacle Detection Using Laser Scanner and Stereovision. In Proceedings of the IEEE Intelligent Vehicles Symposium, Tokyo, Japan, 13–15 June 2006; pp. 182–187.

- Gandhi, T.; Trivedi, M. Vehicle Surround Capture: Survey of Techniques and a Novel Omni-Video-Based Approach for Dynamic Panoramic Surround Maps. IEEE Trans. Intell. Transp. Syst. 2006, 7, 293–308. [Google Scholar] [CrossRef]

- Stein, G.; Gdalyahu, Y.; Shashua, A. Stereo-Assist: Top-down Stereo for Driver Assistance Systems. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), San Diego, CA, USA, 21–24 June 2010; pp. 723–730.

- Labayrade, R.; Royere, C.; Gruyer, D.; Aubert, D. Cooperative Fusion for Multi-Obstacles Detection with use of Stereovision and Laser Scanner. Auton. Robot. 2005, 19, 117–140. [Google Scholar] [CrossRef]

- Nedevschi, S.; Danescu, R.; Frentiu, D.; Marita, T.; Oniga, F.; Pocol, C.; Graf, T.; Schmidt, R. High Accuracy Stereovision Approach for Obstacle Detection on Non-Planar Roads. In Proceedings of the IEEE Intelligent Engineering Systems (INES), Cluj-Napoca, Romania, 19–21 September 2004; pp. 211–216.

- Huval, B.; Wang, T.; Tandon, S.; Kiske, J.; Song, W.; Pazhayampallil, J.; Andriluka, M.; Rajpurkar, P.; Migimatsu, T.; Cheng-Yue, R.; et al. An Empirical Evaluation of Deep Learning on Highway Driving. arXiv 2015. [Google Scholar]

- Stein, G.; Mano, O.; Shashua, A. Vision-Based ACC with a Single Camera: Bounds on Range and Range Rate Accuracy. In Proceedings of the IEEE Intelligent Vehicles Symposium, Columbus, OH, USA, 9–11 June 2003; pp. 120–125.

- Giesbrecht, J.L.; Goi, H.K.; Barfoot, T.D.; Francis, B.A. A Vision-Based Robotic Follower Vehicle. In Proceedings of the SPIE Defense, Security, and Sensing, Orlando, FL, USA, 2009; Volume 7332, pp. 1–12.

- Lessmann, S.; Meuter, M.; Müller, D.; Pauli, J. Probabilistic Distance Estimation for Vehicle Tracking Application in Monocular Vision. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 17–21 April 2016; pp. 1199–1204.

- Petovello, M.G.; O’Keefe, K.; Chanv, B.; Spiller, S.; Pedrosa, C.; Xie, P.; Basnayake, C. Demonstration of Inter-Vehicle UWB Ranging to Augment DGPS for Improved Relative Positioning. J. Glob. Position. Syst. 2012, 11, 11–21. [Google Scholar] [CrossRef]

- MacGougan, G.; O’Keefe, K.; Klukas, R. Ultra-wideband Ranging Precision and Accuracy. Measur. Sci. Technol. 2009, 20, 1–13. [Google Scholar] [CrossRef]

- Morgan, Y. Accurate Positioning Using Short-Range Communications. In Proceedings of the International Conference on Ultra Modern Telecommunications Workshops (ICUMT), St. Petersburg, Russia, 12–14 October 2009; pp. 1–7.

- Staudinger, E.; Dammann, A. Round-Trip Delay Ranging with OFDM Signals—Performance Evaluation with Outdoor Experiments. In Proceedings of the 11th Workshop on Positioning, Navigation and Communication (WPNC), Dresden, Germany, 11–13 March 2014; pp. 1–6.

- Peker, A.; Acarman, T.; Yaman, C.; Yuksel, E. Vehicle Localization Enhancement with VANETs. In Proceedings of the Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 661–666.

- Kloeden, H.; Schwarz, D.; Biebl, E.M.; Rasshofer, R.H. Vehicle Localization Using Cooperative RF-Based Landmarks. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 387–392.

- Blumenstein, J.; Prokes, A.; Mikulasek, T.; Marsalek, R.; Zemen, T.; Mecklenbrauker, C. Measurements of Ultra-wide Band in-vehicle Channel—Statistical Description and TOA Positioning Feasibility Study. J. Wirel. Commun. Netw. 2015, 2015, 1–10. [Google Scholar] [CrossRef]

- Vychodil, J.; Blumenstein, J.; Mikulasek, T.; Prokes, A.; Derbek, V. Measurement of in-Vehicle Channel—Feasibility of Ranging in UWB and MMW Band. In Proceedings of the International Conference on Connected Vehicles and Expo (ICCVE), Vienna, Austria, 3–7 November 2014; pp. 695–698.

- Bonet, B.; Alcantarilla, I.; Flament, D.; Rodriguez, C.; Zarraoa, N. The Benefits of Multi-Constellation GNSS: Reaching up Even to Single Constellation GNSS Users. In Proceedings of the 22nd International Technical Meeting of The Satellite Division of the Institute of Navigation (ION GNSS), Savannah, GA, USA, 22–25 September 2009; pp. 1268–1280.

- Kaplan, E. Understanding GPS—Principles and Applications, 2nd ed.; Artech House: Norwood, MA, USA, 2005. [Google Scholar]

- Skog, I.; Handel, P. In-Car Positioning and Navigation Technologies—A Survey. IEEE Trans. Intell. Transp. Syst. 2009, 10, 4–21. [Google Scholar] [CrossRef]

- Groves, P. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems, 2nd ed.; GNSS/GPS, Artech House: Norwood, MA, USA, 2013. [Google Scholar]

- Shin, E.H.; El-Sheimy, N. Accuracy Improvement of Low Cost INS/GPS for Land Applications. Ph.D. Thesis, University of Calgary, Department of Geomatics Engineering, Calgary, AB, Canada, 2001. [Google Scholar]

- Xu, Q.; Li, X.; Li, B.; Song, X.; Cai, Z. A Reliable Hybrid Positioning Methodology for Land Vehicles Using Low-Cost Sensors. IEEE Trans. Intell. Transp. Syst. 2016, 17, 834–847. [Google Scholar] [CrossRef]

- Li, X.; Xu, Q. A Reliable Fusion Positioning Strategy for Land Vehicles in GPS-denied Environments Based on Low-cost Sensors. IEEE Trans. Ind. Electron. 2016, in press. [Google Scholar] [CrossRef]

- Le Marchand, O.; Bonnifait, P.; Ibañez-Guzmán, J.; Bétaille, D.; Peyret, F. Characterization of GPS Multipath for Passenger Vehicles across Urban Environments. ATTI dell’Istituto Ital. Navig. 2009, 189, 77–88. [Google Scholar]

- Obst, M.; Bauer, S.; Wanielik, G. Urban Multipath Detection and Mitigation with Dynamic 3D Maps for Reliable Land Vehicle Localization. In Proceedings of the IEEE/ION Position Location and Navigation Symposium (PLANS), Myrtle Beach, SC, USA, 23–26 April 2012; pp. 685–691.

- Li, Z.; Leung, H. GPS/INS Integration Based Navigation with Multipath Mitigation for Intelligent Vehicles. In Proceedings of the 4th IEEE International Conference on Mechatronics (ICM), Kumamoto, Japan, 8–10 May 2007; pp. 1–5.

- Giremus, A.; Tourneret, J.Y.; Calmettes, V. A Particle Filtering Approach for Joint Detection/Estimation of Multipath Effects on GPS Measurements. IEEE Trans. Signal Process. 2007, 55, 1275–1285. [Google Scholar] [CrossRef] [Green Version]

- Lentmaier, M.; Krach, B.; Robertson, P. Bayesian Time Delay Estimation of GNSS Signals in Dynamic Multipath Environments. Int. J. Navig. Obs. 2008. [Google Scholar] [CrossRef]

- Grejner-Brzezinska, D.A.; Yi, Y.; Toth, C.K. Bridging GPS gaps in urban canyons: The benefits of ZUPTs. Navigation 2001, 48, 217–225. [Google Scholar] [CrossRef]

- El-Sheimy, N.; Shin, E.H.; Niu, X. Kalman filter face-off: Extended vs. Unscented Kalman filters for integrated GPS and MEMS inertial. Inside GNSS 2006, 1, 48–54. [Google Scholar]

- Honkavirta, V.; Perala, T.; Ali-Loytty, S.; Piche, R. A Comparative Survey of WLAN Location Fingerprinting Methods. In Proceedings of the 6th Workshop on Positioning, Navigation and Communication (WPNC), Hannover, Germany, 19 March 2009; pp. 243–251.

- Hejc, G.; Seitz, J.; Vaupel, T. Bayesian Sensor Fusion of Wi-Fi Signal Strengths and GNSS Code and Carrier Phases for Positioning in Urban Environments. In Proceedings of the IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 5–8 May 2014; pp. 1026–1032.

- Lu, H.; Zhang, S.; Dong, Y.; Lin, X. A Wi-Fi/GPS Integrated System for Urban Vehicle Positioning. In Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems (ITSC), Funchal, Portugal, 19–22 September 2010; pp. 1663–1668.

- Soloviev, A. Tight Coupling of GPS, Laser Scanner, and Inertial Measurements for Navigation in Urban Environments. In Proceedings of the IEEE/ION Position, Location and Navigation Symposium, Monterey, CA, USA, 5–8 May 2008; pp. 511–525.

- Im, J.H.; Im, S.H.; Jee, G.I. Vertical Corner Feature Based Precise Vehicle Localization Using 3D LIDAR in Urban Area. Sensors 2016, 16, 1268. [Google Scholar] [CrossRef] [PubMed]

- Vivet, D.; Checchin, P.; Chapuis, R. Localization and Mapping Using Only a Rotating FMCW Radar Sensor. Sensors 2013, 13, 4527. [Google Scholar] [CrossRef] [PubMed]

- De Ponte Müller, F.; Diaz, E.M.; Rashdan, I. Cooperative Infrastructure-Based Vehicle Positioning. In Proceedings of the IEEE 84th Vehicular Technology Conference (VTC Fall), Montreal, QC, Canada, 18–21 September 2016; pp. 1060–1065.

- Laneurit, J.; Blanc, C.; Chapuis, R.; Trassoudaine, L. Multisensorial Data Fusion for Global Vehicle and Obstacles Absolute Positioning. In Proceedings of the IEEE Intelligent Vehicles Symposium, Columbus, OH, USA, 9–11 June 2003; pp. 138–143.

- Schreiber, M.; Königshof, H.; Hellmund, A.M.; Stiller, C. Vehicle Localization with Tightly Coupled GNSS and Visual Odometry. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gotenburg, Sweden, 19–22 June 2016; pp. 858–863.

- Olivares-Mendez, M.A.; Sanchez-Lopez, J.L.; Jimenez, F.; Campoy, P.; Sajadi-Alamdari, S.A.; Voos, H. Vision-Based Steering Control, Speed Assistance and Localization for Inner-City Vehicles. Sensors 2016, 16, 362. [Google Scholar] [CrossRef] [PubMed]

- Toledo-Moreo, R.; Betaille, D.; Peyret, F.; Laneurit, J. Fusing GNSS, Dead-Reckoning, and Enhanced Maps for Road Vehicle Lane-Level Navigation. IEEE J. Sel. Top. Signal Process. 2009, 3, 798–809. [Google Scholar] [CrossRef]

- Jiang, Y.; Qiu, H.; McCartney, M.; Sukhatme, G.; Gruteser, M.; Bai, F.; Grimm, D.; Govindan, R. CARLOC: Precise Positioning of Automobiles. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems, Seoul, Korea, 1–4 November 2015; ACM: New York, NY, USA, 2015; pp. 253–265. [Google Scholar]

- Jimenez, F.; Monzon, S.; Naranjo, J.E. Definition of an Enhanced Map-Matching Algorithm for Urban Environments with Poor GNSS Signal Quality. Sensors 2016, 16, 193. [Google Scholar] [CrossRef] [PubMed]

- Roth, J.; Schaich, T.; Trommer, G. Cooperative GNSS-Based Method for Vehicle Positioning. Gyroscopy Navig. 2012, 3, 245–254. [Google Scholar] [CrossRef]

- Alam, N.; Tabatabaei Balaei, A.; Dempster, A.G. A DSRC Doppler-Based Cooperative Positioning Enhancement for Vehicular Networks With GPS Availability. IEEE Trans. Veh. Technol. 2011, 60, 4462–4470. [Google Scholar] [CrossRef]

- Dao, T.S.; Leung, K.Y.K.; Clark, C.M.; Huissoon, J.P. Markov-Based Lane Positioning using Intervehicle Communication. IEEE Trans. Intell. Transp. Syst. 2007, 8, 641–650. [Google Scholar] [CrossRef]

- Berefelt, F.; Boberg, B.; Nygårds, J.; Strömbäck, P. Collaborative GPS/INS Navigation in Urban Environment. In Proceedings of the ION National Technical Meeting, San Diego, CA, USA, 26–28 January 2004.

- Garello, R.; Lo Presti, L.; Corazza, G.; Samson, J. Peer-to-Peer Cooperative Positioning Part I: GNSS Aided Acquisition. INSIDE GNSS 2012, 7, 55–63. [Google Scholar]

- Röckl, M.; Strang, T.; Kranz, M. V2V Communications in Automotive Multi-Sensor Multi-Target Tracking. In Proceedings of the IEEE 68th Vehicular Technology Conference (VTC-Fall), Calgary, AB, Canada, 21–24 September 2008; pp. 1–5.

- Kellum, C.C. Basic Feasibility of GPS Positioning without Carrier-Phase Measurements as a Relative Position Sensor between Two Vehicles. In Proceedings of the National Technical Meeting of the Institute of Navigation, San Diego, CA, USA, 24–26 January 2005; pp. 903–910.

- Richter, E.; Obst, M.; Schubert, R.; Wanielik, G. Cooperative Relative Localization Using Vehicle-To-Vehicle Communications. In Proceedings of the 12th International Conference on Information Fusion (FUSION), Seattle, WA, USA, 6–9 July 2009; pp. 126–131.

- Alam, N.; Balaei, A.; Dempster, A. Positioning Enhancement with Double Differencing and DSRC. In Proceedings of the 23rd International Technical Meeting of The Satellite Division of the Institute of Navigation (ION GNSS), Portland, OR, USA, 21–24 September 2010; pp. 1210–1218.

- Alam, N.; Tabatabaei Balaei, A.; Dempster, A.G. Relative Positioning Enhancement in VANETs: A Tight Integration Approach. IEEE Trans. Intell. Transp. Syst. 2012, 14, 1–9. [Google Scholar] [CrossRef]

- Yang, D.; Zhao, F.; Liu, K.; Lim, H.B.; Frazzoli, E.; Rus, D. A GPS Pseudorange Based Cooperative Vehicular Distance Measurement Technique. In Proceedings of the 2012 IEEE 75th Vehicular Technology Conference (VTC Spring), Yokohama, Japan, 6–9 May 2012; pp. 1–5.

- De Ponte Müller, F.; Diaz, E.M.; Kloiber, B.; Strang, T. Bayesian Cooperative Relative Vehicle Positioning Using Pseudorange Differences. In Proceedings of the IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 5–8 May 2014; pp. 434–444.

- Lassoued, K.; Bonnifait, P.; Fantoni, I. Cooperative Localization of Vehicles Sharing GNSS Pseudoranges Corrections with No Base Station Using Set Inversion. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gotenburg, Sweden, 19–22 June 2016; pp. 496–501.

- Basnayake, C.; Kellum, C.; Sinko, J.; Strus, J. GPS-Based Relative Positioning Test Platform for Automotive Active Safety Systems. In Proceedings of the 19th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS), Fort Worth, TX, USA, 26–29 September 2006; pp. 1457–1467.

- Hwang, S.S.; Speyer, J. Particle Filters With Adaptive Resampling Technique Applied to Relative Positioning Using GPS Carrier-Phase Measurements. IEEE Trans. Control Syst. Technol. 2011, 19, 1384–1396. [Google Scholar] [CrossRef]

- Travis, W.; Bevly, D. Trajectory Duplication Using Relative Position Information for Automated Ground Vehicle Convoys. In Proceedings IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 5–8 May 2008; pp. 1022–1032.

- Travis, W.E. Path Duplication Using GPS Carrier-Based Relative Position for Automated Ground Vehicle Convoys. Ph.D. Thesis, Auburn University, Auburn, AL, USA, 2010. [Google Scholar]

- Ansari, K.; Wang, C.; Wang, L.; Feng, Y. Vehicle-to-Vehicle Real-Time Relative Positioning Using 5.9 GHz DSRC Media. In Proceedings of the IEEE 78th Vehicular Technology Conference (VTC Fall), Las Vegas, NV, USA, 2–5 September 2013; pp. 1–7.

- Ansari, K.; Feng, Y.; Tang, M. A Runtime Integrity Monitoring Framework for Real-Time Relative Positioning Systems Based on GPS and DSRC. IEEE Trans. Intell. Transp. Syst. 2014, 16, 1–13. [Google Scholar] [CrossRef]

- Luo, N.; Lachapelle, G. Relative Positioning of Multiple Moving Platforms using GPS. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 936–948. [Google Scholar]

- De Ponte Müller, F.; Navarro Tapia, D.; Kranz, M. Precise Relative Positioning of Vehicles with On-the-Fly Carrier Phase Resolution and Tracking. Int. J. Distrib. Sens. Netw. 2015. [Google Scholar] [CrossRef]

- Zeng, S. Performance Evaluation of Automotive Radars Using Carrier-Phase Differential GPS. IEEE Trans. Instrum. Measur. 2010, 59, 2732–2741. [Google Scholar] [CrossRef]

- Serrano, L.; Kim, D.; Langley, R.B.; Itani, K.; Ueno, M. A GPS Velocity Sensor: How Accurate can it be?—A First Look. In Proceedings of the ION National Technical Meeting, San Diego, CA, USA, 26–28 January 2004; pp. 875–885.

- Henkel, P.; Günther, C. Attitude Determination with Low-Cost GPS/INS. In Proceedings of the 26th International Technical Meeting of The Satellite Division of the Institute of Navigation (ION GNSS+), Nashville, TN, USA, 16–20 September 2013; pp. 2015–2023.

- Henkel, P.; Iafrancesco, M. Tightly Coupled Position and Attitude Determination with Two Low-Cost GNSS Receivers. In Proceedings of the 11th International Symposium on Wireless Communications Systems (ISWCS), Manchester, UK, 20–23 September 2014; pp. 895–900.

- IEEE. Standard for Information Technology-Telecommunications and Information Exchange between Systems-Local and Metropolitan Area Networks-Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications; Technical Report; IEEE: Piscataway, NJ, USA, 2007. [Google Scholar]

- ETSI ES 202 663. Intelligent Transport Systems (ITS); European Profile Standard for the Physical and Medium Access Control Layer of Intelligent Transport Systems Operating in the 5 GHz Frequency Band; Technical Report 1.1.0; European Telecommunications Standards Institute: Sophia-Antipolis, France, 2009. [Google Scholar]

- Willke, T.L.; Tientrakool, P.; Maxemchuk, N.F. A survey of inter-vehicle communication protocols and their applications. IEEE Commun. Surv. Tutor. 2009, 11, 3–20. [Google Scholar] [CrossRef]

- Bhoi, S.K.; Khilar, P.M. Vehicular Communication: A Survey. IET Netw. 2014, 3, 204–217. [Google Scholar] [CrossRef]

- Karagiannis, G.; Altintas, O.; Ekici, E.; Heijenk, G.; Jarupan, B.; Lin, K.; Weil, T. Vehicular Networking: A Survey and Tutorial on Requirements, Architectures, Challenges, Standards and Solutions. IEEE Commun. Surv. Tutor. 2011, 13, 584–616. [Google Scholar] [CrossRef]

- De Ponte Müller, F.; Diaz, E.M.; Rashdan, I. Cooperative Positioning and Radar Sensor Fusion for Relative Localization of Vehicles. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Gotenburg, Sweden, 19–22 June 2016; pp. 1060–1065.

- Schmidt, R.K.; Kloiber, B.; Schüttler, F.; Strang, T. Degradation of Communication Range in VANETs Caused by Interference 2.0—Real-World Experiment; Communication Technologies for Vehicles; Springer: Berlin/Heidelberg, Germany, 2011; pp. 176–188. [Google Scholar]

- Viriyasitavat, W.; Boban, M.; Tsai, H.M.; Vasilakos, A. Vehicular Communications: Survey and Challenges of Channel and Propagation Models. IEEE Veh. Technol. Mag. 2015, 10, 55–66. [Google Scholar] [CrossRef]

- Joo, J.; Eyobu, O.S.; Han, D.S.; Jeong, H.J. Measurement Based V2V Path Loss Analysis in urban NLOS Scenarios. In Proceedings of the 8th International Conference on Ubiquitous and Future Networks (ICUFN), Vienna, Austria, 5–8 July 2016; pp. 73–75.

- Mecklenbrauker, C.F.; Molisch, A.F.; Karedal, J.; Tufvesson, F.; Paier, A.; Bernado, L.; Zemen, T.; Klemp, O.; Czink, N. Vehicular Channel Characterization and its Implications for Wireless System Design and Performance. Proc. IEEE 2011, 99, 1189–1212. [Google Scholar] [CrossRef]

- Molisch, A.F.; Tufvesson, F.; Karedal, J.; Mecklenbrauker, C.F. A Survey on Vehicle-to-Vehicle Propagation Channels. IEEE Wirel. Commun. 2009, 16, 12–22. [Google Scholar] [CrossRef]

- Rodriguez, I.; Almeida, E.P.L.; Lauridsen, M.; Wassie, D.A.; Gimenez, L.C.; Nguyen, H.C.; Soerensen, T.B.; Mogensen, P. Measurement-Based Evaluation of the Impact of Large Vehicle Shadowing on V2X Communications. In Proceedings of the 22th European Wireless Conference, Oulu, Finland, 18–20 May 2016; pp. 1–8.

- He, R.; Molisch, A.F.; Tufvesson, F.; Zhong, Z.; Ai, B.; Zhang, T. Vehicle-to-Vehicle Propagation Models With Large Vehicle Obstructions. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2237–2248. [Google Scholar] [CrossRef]

- Teixeira, F.A.; e Silva, V.F.; Leoni, J.L.; Macedo, D.F.; Nogueira, J.M. Vehicular Networks using the IEEE 802.11p Standard: An experimental Analysis. Veh. Commun. 2014, 1, 91–96. [Google Scholar] [CrossRef]

- Boban, M.; d’Orey, P.M. Exploring the Practical Limits of Cooperative Awareness in Vehicular Communications. IEEE Trans. Veh. Technol. 2015, 65, 3904–3916. [Google Scholar] [CrossRef]

- Kloiber, B.; Strang, T.; Rockl, M.; de Ponte-Muller, F. Performance of CAM Based Safety Applications Using ITS-G5A MAC in High Dense Scenarios. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 654–660.

- Eckhoff, D.; Sofra, N.; German, R. A Performance Study of Cooperative Awareness in ETSI ITS G5 and IEEE WAVE. In Proceedings of the 10th Annual Conference on Wireless on-Demand Network Systems and Services (WONS), Banff, AB, Canada, 18–20 March 2013; pp. 196–200.

- Kloiber, B.; Rico-Garcia, C.; Härri, J.; Strang, T. Update Delay: A New Information-Centric Metric for a Combined Communication and Application Level Reliability Evaluation of CAM Based Safety Applications. In Proceedings of the 19th ITS World Congress, Vienna, Austria, 22–26 October 2012.

- Martelli, F.; Renda, M.E.; Resta, G.; Santi, P. A Measurement-Based Study of Beaconing Performance in IEEE 802.11p Vehicular Networks. In Proceedings of the 31st Annual IEEE International Conference on Computer Communications (INFOCOM), Orlando, FL, USA, 25–30 March 2012; pp. 1503–1511.

- Kato, T.; Ninomiya, Y.; Masaki, I. An Obstacle Detection Method by Fusion of Radar and Motion Stereo. IEEE Trans. Intell. Transp. Syst. 2002, 3, 182–188. [Google Scholar] [CrossRef]

- Broggi, A.; Cerri, P. A radar driven fusion with vision for vehicle detection. PReVENT Fusion Forum e-J. 2006, 1, 17–18. [Google Scholar]

- Bombini, L.; Cerri, P.; Medici, P.; Alessandretti, G. Radar-Vision Fusion for Vehicle Detection. In Proceedings of the International Workshop on Intelligent Transportation, Hamburg, Germany, 14–15 March 2006; pp. 65–70.

- Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-Road Vehicle Detection and Tracking Using mmW Radar and Monovision Fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084. [Google Scholar] [CrossRef]

- Cesic, J.; Markovic, I.; Cvisic, I.; Petrovic, I. Radar and Stereo Vision Fusion for Multitarget tracking on the Special Euclidean Group. Robot. Auton. Syst. 2016, 83, 338–348. [Google Scholar] [CrossRef]

- Blanc, C.; Trassoudaine, L.; Gallice, J. EKF and Particle Filter Track-to-Track Fusion: A Quantitative Comparison from Radar-Lidar Obstacle Tracks. In Proceedings of the 7th International Conference on Information Fusion, Stockholm, Sweden, 25–28 July 2005; Volume 2, pp. 1303–1310.

- Rasshofer, R.H.; Gresser, K. Automotive Radar and Lidar Systems for Next Generation Driver Assistance Functions. Adv. Radio Sci. 2005, 3, 205–209. [Google Scholar] [CrossRef]

- Mahlisch, M.; Schweiger, R.; Ritter, W.; Dietmayer, K. Sensorfusion Using Spatio-Temporal Aligned Video and Lidar for Improved Vehicle Detection. In Proceedings of the IEEE Intelligent Vehicles Symposium, Tokyo, Japan, 13–15 June 2006; pp. 424–429.

- Walchshäusl, L.; Lindl, R.; Vogel, K.; Tatschke, T. Detection of Road Users in Fused Sensor Data Streams for Collision Mitigation. In Advanced Microsystems for Automotive Applications; Springer: Berlin/Heidelberg, Germany, 2006; pp. 53–65. [Google Scholar]

- Cho, H.; Seo, Y.W.; Vijaya Kumar, B.; Rajkumar, R. A Multi-Sensor Fusion System for Moving Object Detection and Tracking in Urban Driving Environments. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1836–1843.

- Vu, T.D.; Aycard, O.; Tango, F. Object Perception for Intelligent Vehicle Applications: A Multi-Sensor Fusion Approach. In Proceedings of the IEEE Intelligent Vehicles Symposium, Tokyo, Japan, 8–11 June 2014; pp. 774–780.

- Röckl, M.; Frank, K.; Strang, T.; Kranz, M.; Gačnik, J.; Schomerus, J. Hybrid Fusion Approach Combining Autonomous and Cooperative Detection and Ranging Methods for Situation-Aware Driver Assistance Systems. In Proceedings of the IEEE International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Cannes, France, 15–18 September 2008; pp. 1–5.

- Fujii, S.; Fujita, A.; Umedu, T.; Kaneda, S.; Yamaguchi, H.; Higashino, T.; Takai, M. Cooperative Vehicle Positioning via V2V Communications and Onboard Sensors. In Proceedings of the IEEE Vehicular Technology Conference (VTC Fall), San Francisco, CA, USA, 5–8 September 2011; pp. 1–5.

- Wang, D.; O’Keefe, K.; Petovello, M. Decentralized Cooperative Positioning for Vehicle-to-Vehicle (V2V) Application Using GPS Integrated with UWB Range. In Proceedings of the ION Pacific PNT Meeting, Honolulu, HI, USA, 22–25 April 2013; pp. 793–803.

| Sensor | Frequency (GHz) | Bandwidth | Range (m) | Azimuth Angle (°) | Accuracy (range, speed, angle) | Cycle (ms) |
|---|---|---|---|---|---|---|
| Bosch LRR3 | 77 | 1 GHz | 250 | ±15 | 0.1 m, 0.12 m/s, - | 80 |
| Delphi ESR | 77 | - | 174 | ±10 | 1.8 m, 0.12 m/s, - | 50 |
| Continental ARS30x | 77 | 1 GHz | 250 | ±8.5 | 1.5%, 0.14 m/s, 0.1° | 66 |
| SMS UMRR Type 40 | 24 | 250 MHz | 250 | ±18 | 2.5%, 0.28 m/s, - | 79 |
| TRW AC100 | 24 | 100 MHz | 150 | ±8 | -, -, 0.5° | - |

| Sensor | Dimensional Resolution | Range (m) | Azimuth Angle (°) | Accuracy (range, speed, angle) | Cycle (ms) |
|---|---|---|---|---|---|
| Quanergy M8-1 | 3D | 150 | 360 | 0.05 m, -, 0.03° | 33 |
| Ibeo LUX | 2D | 200 | 110 | 0.1 m, -, 0.125° | 20 |
| Continental SRL1 | 2D | 10 | 27 | 0.1 m, 0.5 m/s, - | 10 |
| Velodyne HDL-64E S2 | 3D | 120 | 360 | 0.02 m, -, 0.09° | 50 |

| Sensor | Resolution | Range (m) | Azimuth Angle (°) | Accuracy (range, speed, angle) | Cycle |
|---|---|---|---|---|---|
| PMD CamBoard | 200 × 200 | 7 | 40 | -, -, - | 60 |
| PMD CamCube | 200 × 200 | - | - | -, -, - | - |
| SwissRanger SR4000 | 176 × 144 | 10 | 40 | 1, -, - | 50 |

| Relative Positioning Technique | RSS Ranging | Radar | Laser Scanner | ToF Camera | Vision Based | RTD | GNSS Only | Differential GNSS | Diff. GNSS + INS | GNSS Carrier Phase |
|---|---|---|---|---|---|---|---|---|---|---|
| Cooperative | No | No | No | No | No | Yes | Yes | Yes | Yes | Yes |
| Accuracy | 5–20 | 0.1 0.2 0.1 | 0.02 0.5 0.1 | 0.1 0.2 0.1 | − 1–5 | + 0.5–1 | − 2–10 0.01 0.25–10 | ∘ 0.5–5 0.01 0.25–10 | + 0.5–2 0.01 0.25–1 | 0.01–0.05 0.01 0.25–10 |
| Availability | ∘ | ∘ | ∘ | − | ∘ | + | − | − | + | |
| Reliability | ∘ | + | ∘ | ∘ | ∘ | + | + | | | |
| Range and Field of View | 400 360 | ∘ 250 ±15 | ∘ 200 360 | 20 40 | − 40 ±20 | 300 360 | 400 360 | 400 360 | 400 360 | 400 360 |
| Dimensional Resolution | 1D | ∘ 2D | + 2D/3D | + 2D/3D | + 2D/3D | 1D | + 3D | + 3D | + 3D | + 3D |
| Target Resolution | ∘ | ∘ | + | + | | | | | | |
| Non-Technical | ∘ | − | + | + | − | − | | | | |

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

De Ponte Müller, F. Survey on Ranging Sensors and Cooperative Techniques for Relative Positioning of Vehicles. Sensors 2017, 17, 271. https://doi.org/10.3390/s17020271
