1. Introduction

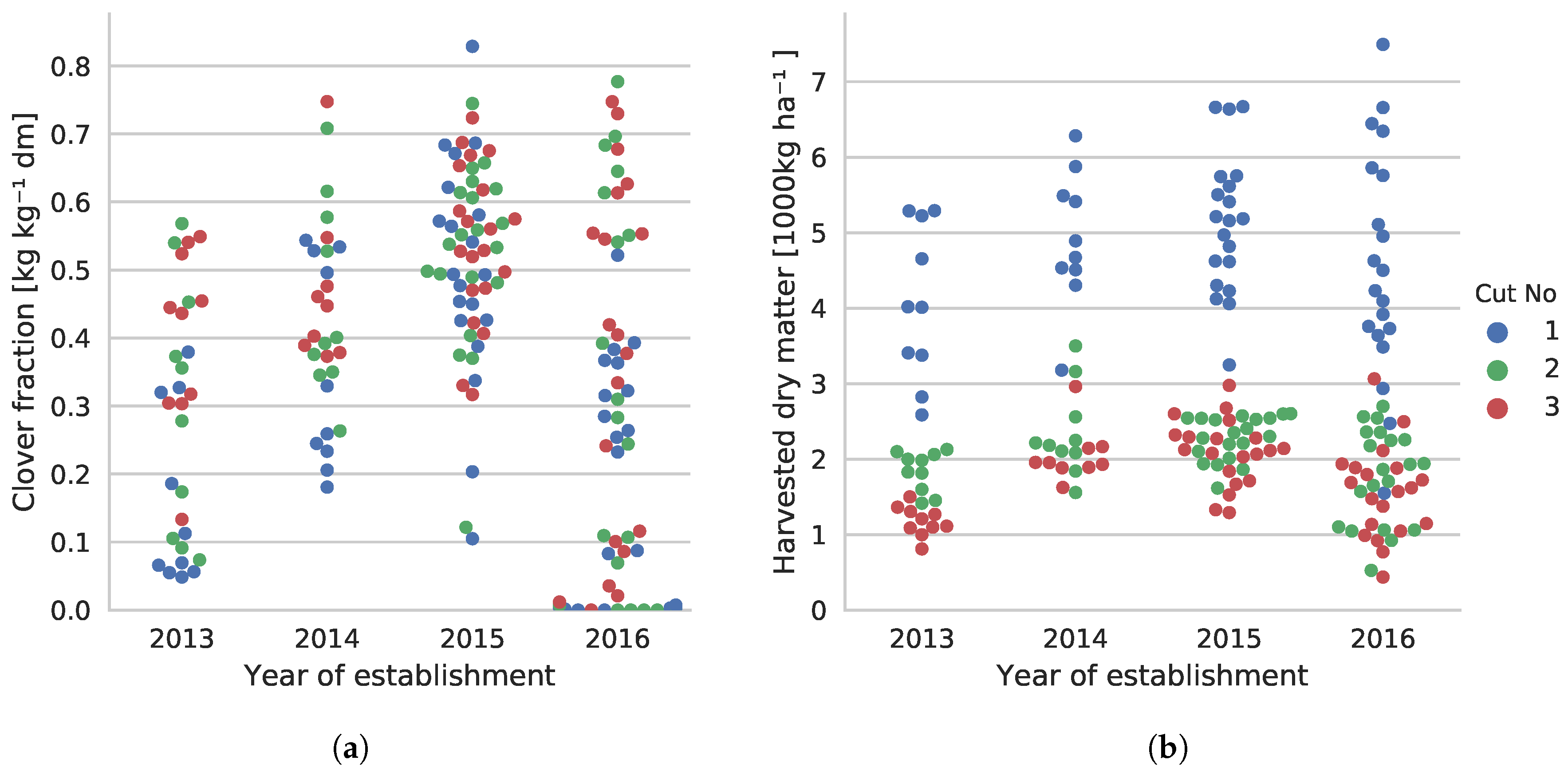

Estimation of the clover/grass ratio is essential for optimal fertilization, as it has significant economic potential for the dairy industry. Clover-grass combines high productivity and low environmental impact if the nitrogen (N) supply is adjusted according to the actual clover content. Nonetheless, manure and fertilizers are used largely irrespective of clover content due to poor ability to assess clover content and lack of knowledge on the correlation between fertilizer (N) and clover content during the season.

A high clover fraction gives a higher feed uptake and thus higher performance for cows when the harvested material is used for fodder. The goal is, therefore, to get a larger clover percentage into the clover pastures. Clover is an N-fixing crop able to capture and utilize free N from the air, while grass relies on soil-available N for its growth and development. Clover is, however, also able to take up and utilize N from the soil, which creates competition between grass and clover if there is a sufficient amount of soil N available. In cases of low soil-available N, the clover will outcompete the grass crop. However, due to its faster growth, the grass will outcompete the clover crop if high amounts of soil N are available. Therefore, it is possible to control the competition, and thereby the clover-grass ratio, by applying more or less N. Automatically determining the clover-grass ratio thus has great potential for optimizing N applications.

Clover and grass are often grown in mixtures, as clover-grass leys with different species increase the yield stability [1,2] and herbage quality [3] compared to fertilized grass-only leys. This is due to niche complementarity [4] and the greater protein content of the clover [5,6]. Thus, properly managed clover-grass mixtures produce a greater yield compared to pure stands of grass and clover [7,8]. To implement targeted fertilization in practice, it is important first to estimate the actual clover content in a sward and second to optimize fertilization based on that clover content.

In agriculture today, the amount of N added is based on coarse visual inspections, which are subject to errors and do not cover the variability within a single field or between fields. Automated and precise methods for estimating clover/grass ratio could potentially improve the fertilization strategy, thus improving yield and quality of biomass, resulting in a great financial impact. Positive environmental impacts include reduced N leaching (as more N will be absorbed and utilized by the clover-grass), and potentially reduced CO₂ emissions, as some fertilization operations can be skipped if the correct amount of clover is present early in the life-span of the clover-grass field [9,10].

Researchers have previously sought to estimate the content of clover-grass in images. Bonesmo et al. [11] developed an image processing software program for pixel-wise classification of clover-grass images. The program used color indexes, edge detection, and morphological operations on the color images to distinguish and quantify the amount of soil, grass, clover, and large weeds. The software estimations and the manually-labeled clover coverage showed a high correlation (r² = 0.81).

Himstedt et al. [12] used digital image analysis on images of grass-legume mixtures from a pot experiment to determine the relative legume dry matter contribution. The image analysis was used to determine the legume coverage (red clover, white clover, or alfalfa) by applying a sequence of morphological erosions and dilations. In each image, the legumes were manually encircled to determine the actual legume coverage. The estimated legume coverage showed a high correlation to the actual legume coverage, and also to the relative legume dry matter contribution (r² = 0.82). Himstedt et al. [13] proposed an extension to estimate the absolute legume contribution using legume coverage, total biomass, and logit transformation. Himstedt et al. [14] improved on the image analysis method by filtering the images in hue, saturation, and lightness (HSL) space and applying color segmentation to separate plants and soil after a sequence of morphological operations.

Rayburn [15] correlated randomly sampled points in clover-grass images to relative legume and grass fractions. A regularly spaced grid was placed on top of each image at a random location, and the pixel at each grid location was manually classified. The distribution of classes was used to estimate the relative fractions. The estimated grass and legume fractions were not significantly different from the measured fractions, and they showed a high correlation.
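The grid-based point sampling described above can be sketched as follows. This is only an illustration under stated assumptions: a ready-made label array stands in for the manual classification of each grid point, and the function name, grid spacing, and toy image are hypothetical rather than taken from the cited work.

```python
import numpy as np

def grid_point_fractions(labels, spacing, rng):
    """Estimate class fractions from a regular grid placed at a random offset.

    labels  : (H, W) integer array, one class id per pixel (stand-in for
              the manual classification of the sampled points).
    spacing : distance in pixels between neighbouring grid points.
    """
    # Random grid origin inside one grid cell, so the grid lands at a
    # random location on the image.
    row0 = rng.integers(0, spacing)
    col0 = rng.integers(0, spacing)
    sampled = labels[row0::spacing, col0::spacing].ravel()
    classes, counts = np.unique(sampled, return_counts=True)
    return {int(c): float(n) / sampled.size for c, n in zip(classes, counts)}

# Toy label map: the left 40% of the columns are grass (0), the rest legume (1).
labels = np.ones((200, 300), dtype=int)
labels[:, :120] = 0
fractions = grid_point_fractions(labels, spacing=10, rng=np.random.default_rng(0))
```

Only the pixels under the grid points are inspected, which is what keeps the manual effort low; the estimate becomes noisier as the grid gets coarser relative to the size of the plant patches.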

McRoberts et al. [16] used local binary patterns to estimate the grass fraction from color images converted to grayscale. The images were tiled into pixel blocks, which were manually labeled as either legumes, grass, or unknown. The local binary patterns were made rotation invariant by grouping rotationally similar patterns together. A histogram of the local binary pattern groups, together with the height, was used to estimate the grass fraction with a strong correlation.
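To make the grouping of rotationally similar patterns concrete, the sketch below computes an 8-bit local binary pattern on a 3×3 neighbourhood and maps it to the smallest value among its circular bit rotations. The 3×3 neighbourhood, the function names, and the minimum-rotation rule are assumptions chosen for illustration; the cited work may have used a different neighbourhood or grouping.

```python
import numpy as np

def lbp_code(patch):
    """8-bit local binary pattern of the centre pixel of a 3x3 grayscale patch."""
    center = patch[1, 1]
    # Neighbours visited clockwise, starting at the top-left corner.
    ring = [patch[0, 0], patch[0, 1], patch[0, 2], patch[1, 2],
            patch[2, 2], patch[2, 1], patch[2, 0], patch[1, 0]]
    # Bit i is set when neighbour i is at least as bright as the centre.
    return sum(int(v >= center) << i for i, v in enumerate(ring))

def rotation_invariant(code):
    """Map a code to the smallest value among its 8 circular bit rotations."""
    return min(((code >> k) | (code << (8 - k))) & 0xFF for k in range(8))

# All 256 raw codes collapse into a much smaller set of rotation groups.
groups = {rotation_invariant(c) for c in range(256)}

# A patch and its 90-degree rotation fall into the same group.
patch = np.array([[9, 1, 1],
                  [1, 5, 1],
                  [1, 1, 1]])
same_group = (rotation_invariant(lbp_code(patch))
              == rotation_invariant(lbp_code(np.rot90(patch))))
```

A histogram over these group ids, computed per image block, would then serve as the texture descriptor.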

Automatic segmentation of images from clover-grass fields is a research area with great potential in precision agriculture. Nevertheless, there has been limited success in automated estimation of clover and grass content from red, green, and blue (RGB) images so far. The primary limitation of current methods involves the uncertainty in the image recognition process. Although the use of morphological operations has been shown to correlate with the clover content in the images, it lacks robustness with regard to scale invariance, field conditions, and estimation uncertainty. With varying clover sizes, camera or vegetation heights, or camera resolutions, the parameters of the existing methods need adjustments. This is illustrated by the drop in performance in the work of Himstedt et al. [13] when moving from greenhouse pots to field conditions.

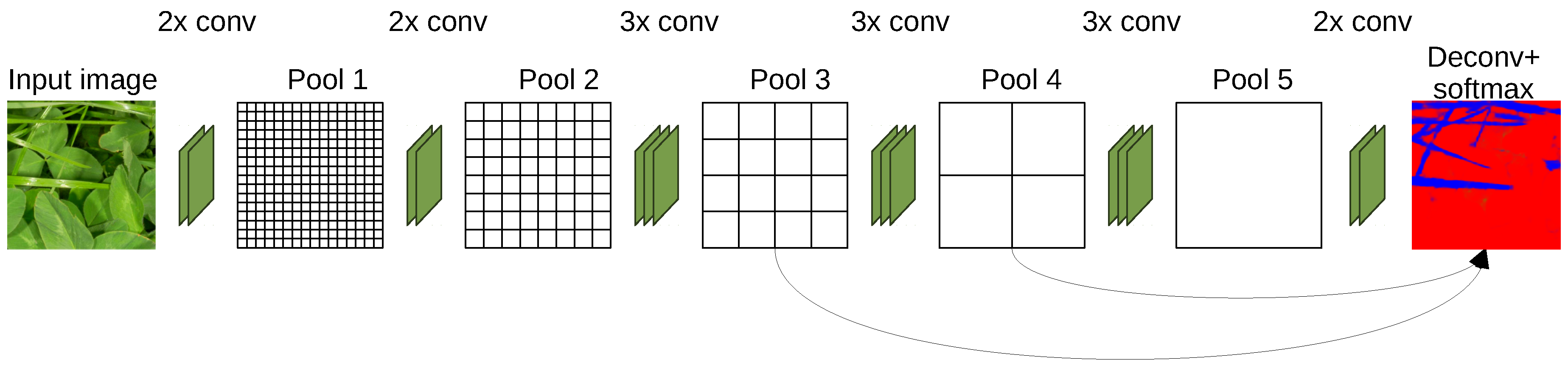

The aim of this project is to automatically determine the clover fraction in clover-grass fields through the use of machine learning to analyze RGB images for grass, clover, and weeds. We follow the procedure of splitting the challenge of estimating the clover content in dry matter from images into a two-step problem, similarly to Himstedt et al. [

12]. However, instead of relying on simple patch detections using morphological operations, we propose training a deep convolutional neural network, based on the fully convolutional network (FCN) architecture [

17], to directly classify relevant plant species visible in the images [

18,

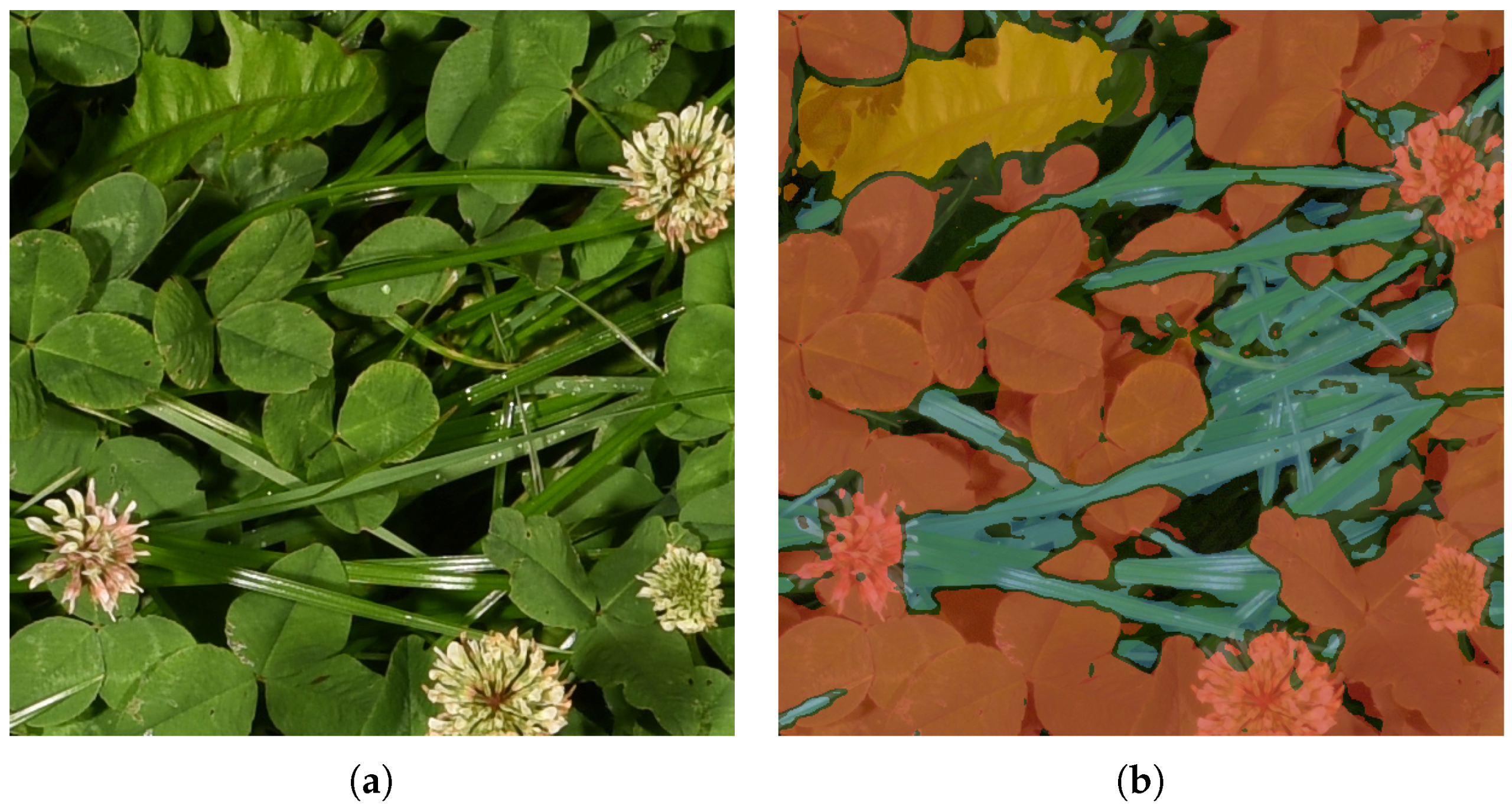

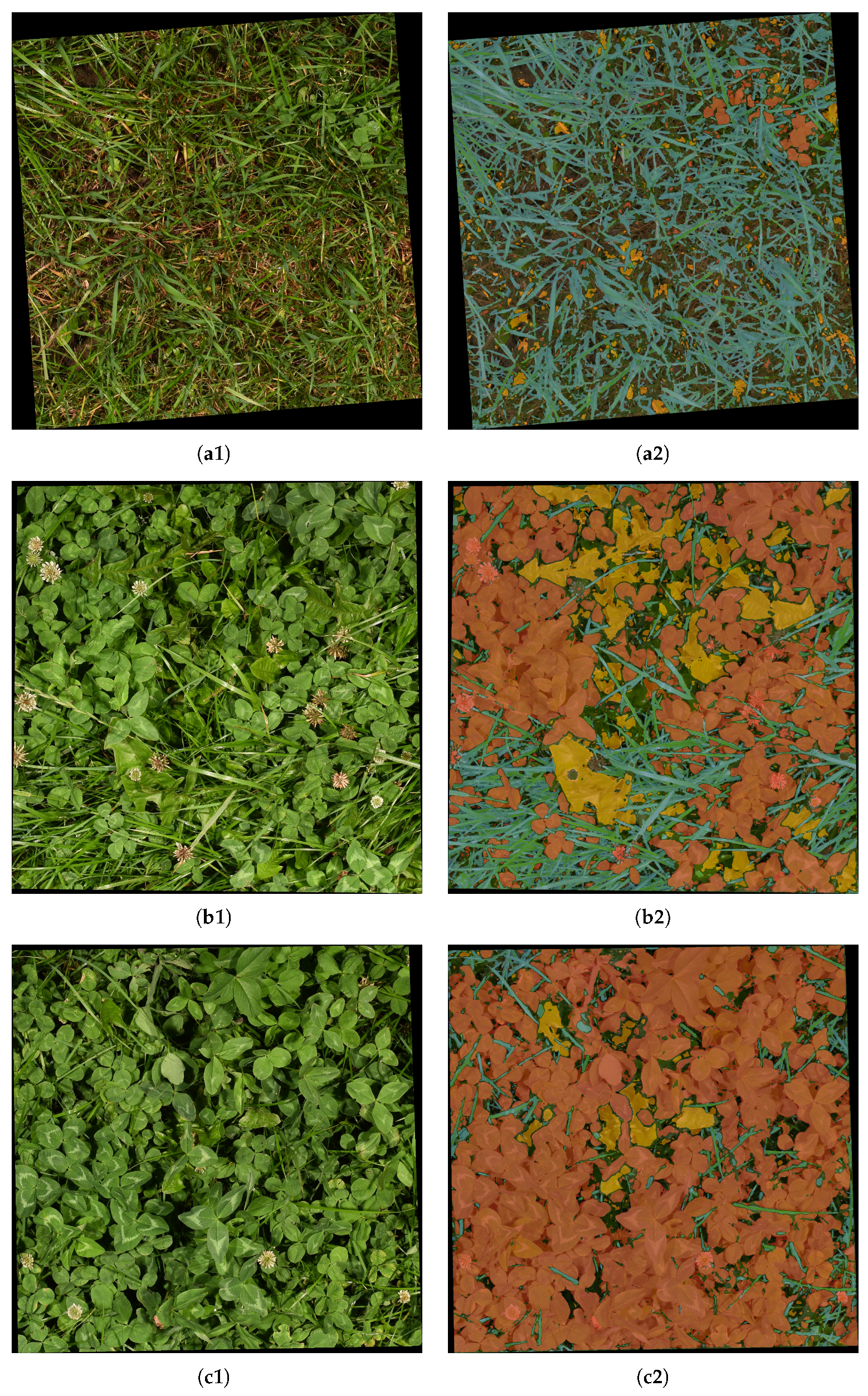

19]. This provides the latter stage of clover content estimation with information on the field patch composition and the detected coverage of grasses, clovers, and weeds in the image. Specifically, the selected convolutional neural network is designed to output a semantically segmented image, specifying the plant species of every pixel in the image. An example of such automatic semantic segmentation is shown in

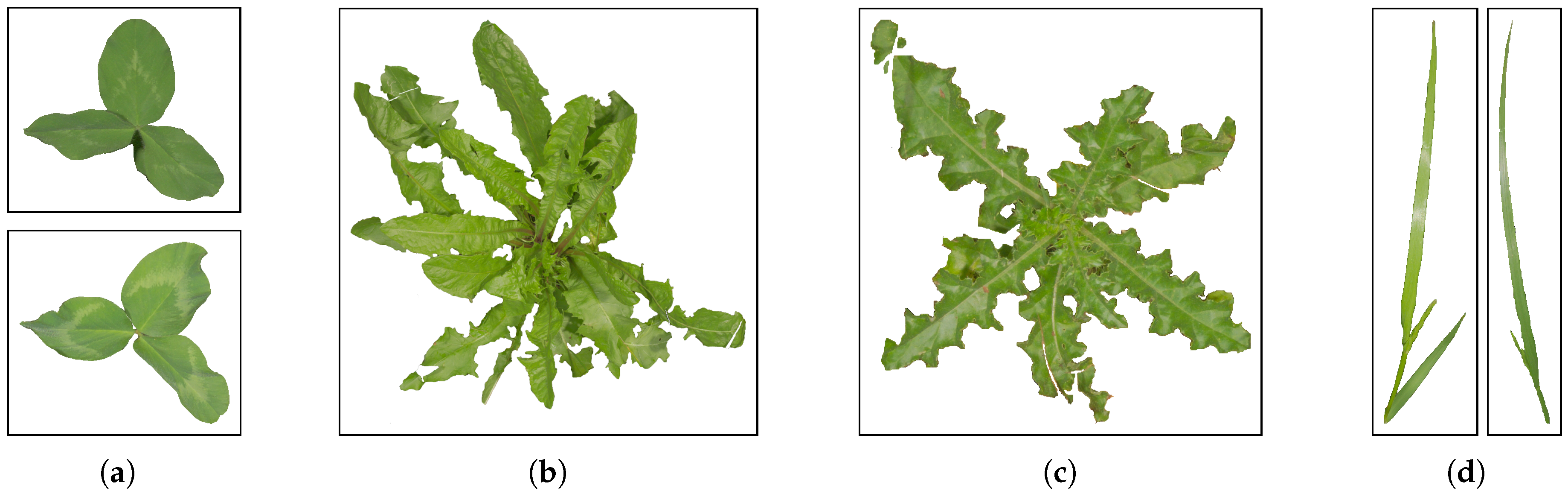

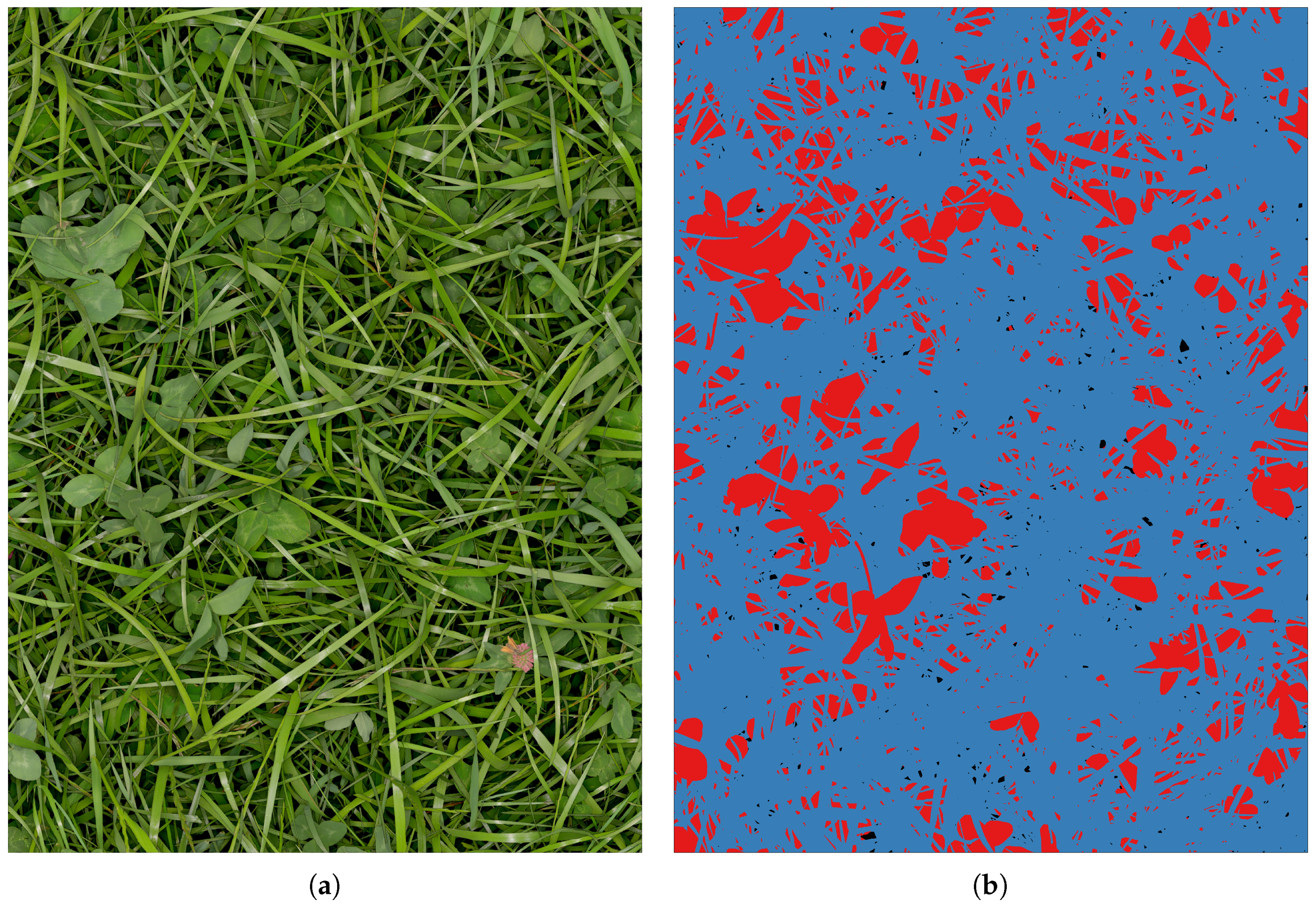

Figure 1, where pixels classified as grasses, clovers, and weeds are visualized by blue, red, and yellow overlay, respectively.

The main challenge of moving from manually designed features of the previous methods to learned features using neural networks is the significant increase in demand for ground truth data. To obviate this demand, a simulation environment was designed to generate labeled images of specific field compositions, from which the discriminating features are learned. The trained neural networks were then evaluated against human-annotated images for pixel-wise classification. Finally, a trained network was combined with the latter processing stage to estimate the clover content of the dry matter directly from images.

3. Results

As a result of splitting the task of determining the clover fraction into two problems, the presented results were separated similarly. First, the ability to semantically segment 10 test images was evaluated, followed by evaluation of the dry matter contents on all the gathered sample pairs.

3.1. Image Segmentation

To investigate the requirements of using real plant samples for clover-grass simulations, as shown in Section 2.2, the same network architecture was trained twice with different numbers of training samples. Therefore, the two trained models only differed in terms of the plant variation in the training images.

One model was trained on simulated images from all 195 plant samples. The other model was trained on simulated images from a subset of the 195 plant samples. The subset of plant samples was selected randomly and made up 25% of the 195 plant samples.

The FCN-8s models, trained on simulated data, were evaluated with respect to the quality of the semantic segmentation of real images into clover, grass, and weed pixels. This was tested on the 10 hand-annotated image crops. Following the accuracy metrics of Long et al. [17], the pixel-wise accuracy, mean intersection over union, and frequency-weighted intersection over union were calculated for each model and are shown in Table 1.
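For reference, the three metrics can be computed from a class confusion matrix as in the sketch below. It follows the standard definitions of pixel accuracy, mean IoU, and frequency-weighted IoU; the function name and the example counts are hypothetical and are not the values behind Table 1.

```python
import numpy as np

def segmentation_metrics(confusion):
    """Pixel accuracy, mean IoU and frequency-weighted IoU.

    confusion[i, j] is the number of pixels of true class i that were
    predicted as class j. Every class is assumed to occur at least once,
    otherwise its IoU is undefined (division by zero).
    """
    confusion = np.asarray(confusion, dtype=float)
    true_pos = np.diag(confusion)
    true_total = confusion.sum(axis=1)        # pixels belonging to each class
    predicted_total = confusion.sum(axis=0)   # pixels assigned to each class
    iou = true_pos / (true_total + predicted_total - true_pos)

    pixel_accuracy = true_pos.sum() / confusion.sum()
    mean_iou = iou.mean()
    # Each class IoU is weighted by how often the class occurs.
    fw_iou = (true_total * iou).sum() / confusion.sum()
    return pixel_accuracy, mean_iou, fw_iou

# Made-up counts for three classes (clover, grass, weeds), for illustration only.
example = [[50, 10, 0],
           [5, 30, 0],
           [0, 2, 3]]
pixel_accuracy, mean_iou, fw_iou = segmentation_metrics(example)
```

Because mean IoU weights all classes equally while the frequency-weighted variant favours common classes, an improvement on a rare class such as weeds shows up more strongly in the mean IoU.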

For a fair comparison to the state of the art, the morphological approach of Bonesmo et al. [11] was implemented and evaluated. The test images for this approach were downscaled to match the original GSD stated in the work by Bonesmo et al. [11]. Additionally, the parameters were fine-tuned to the images, since the provided parameters led to poor classification results.

The FCN-8s architecture has a fixed receptive field, which defines the area of the input image used for each pixel classification. Along the borders of the image this context information is reduced, leading to a reduced accuracy in the border region. This effect is particularly pronounced when the image size is reduced, as the border region takes up a larger portion of the total image area. To avoid this effect, the test images were semantically segmented at their original size and cropped afterwards, so that the evaluated region retained useful context.

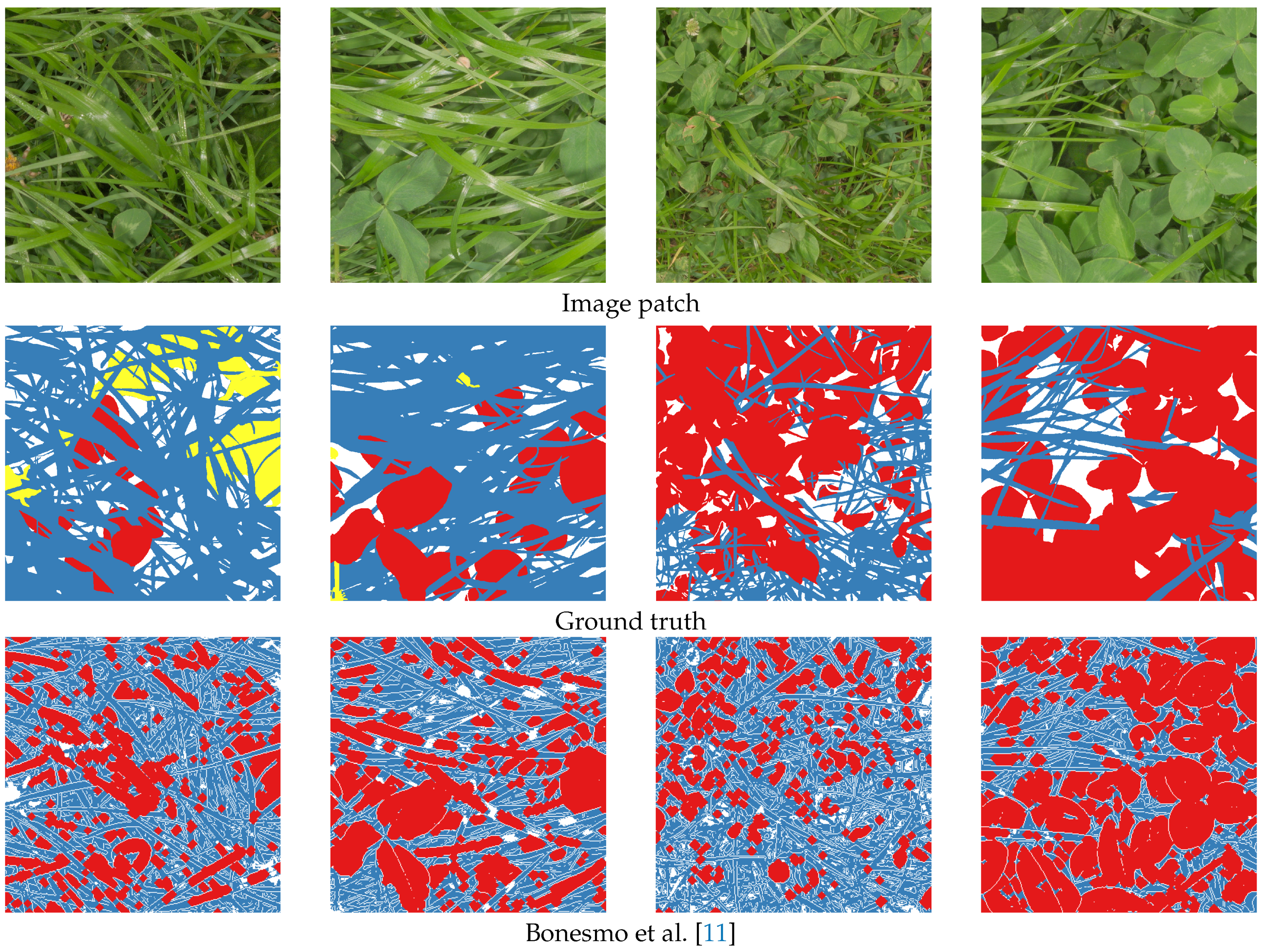

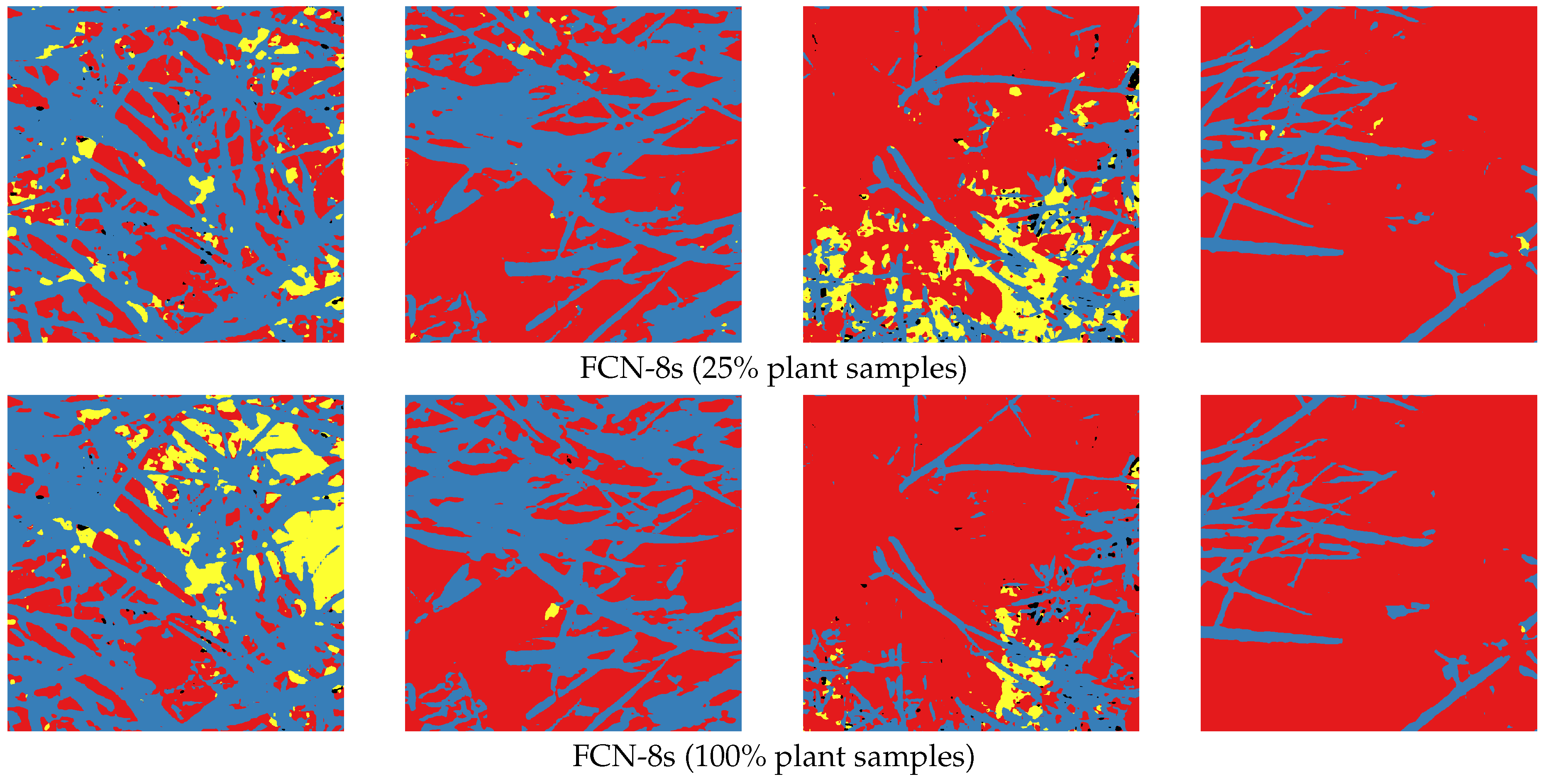

From the segmentation results shown in Table 1, the improvement from the previous method is clear. The trained convolutional neural network increased the pixelwise accuracy by 17.9 percentage points due to the much higher level of abstraction.

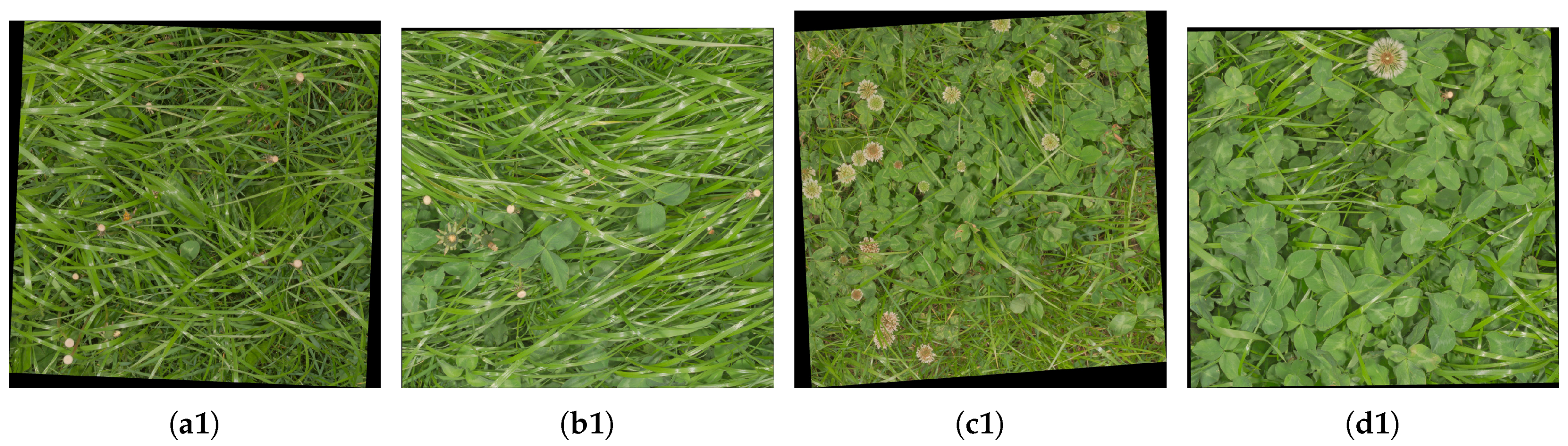

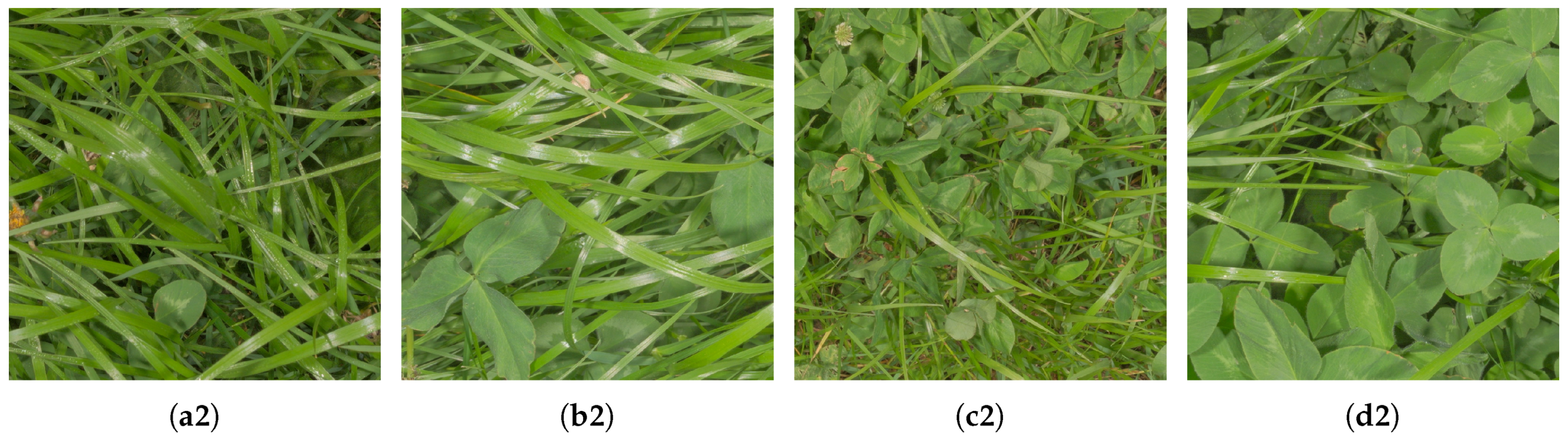

Qualitative results of the semantic segmentation with the varying clover, grass, and weed densities of Figure 4 are shown in Figure 8. Several observations regarding the FCN-8s models are clearly visible in the four samples:

The networks learned to identify both grass and clover despite heavy occlusion.

Use of 100% of the samples resulted in a great improvement in weed detection. The majority of the weeds were correctly identified in the first column, while the number of false positives in the third column was reduced.

Both FCN-8s models were slightly biased towards detecting clover. While the classified grass and weed pixels appeared to follow the contours of the plants in the image, the clover classification appeared to be the default choice of the network. This was beneficial in the fourth row, but led to general misclassifications in the second and third rows.

These observations are supported by the quantitative results in Table 1. The networks reached a high pixel-wise accuracy for both the 25% and 100% sample sets. Moving from 25% to 100% of the samples increased both the mean intersection over union (IoU) and the frequency-weighted IoU; the size of the mean IoU increase relative to the frequency-weighted increase supports the argument that the less-present class of weeds is classified much better.

The approach of Bonesmo et al. [11] can in some cases detect clovers and grasses with high accuracy. This was clearly demonstrated in the fourth column. The morphological operations were, however, challenged by the varying leaf sizes across the test images. This was exemplified in the second and third columns, where wide grass was classified as clover, and small clovers were classified as grass, respectively.

The possible use of leaf texture and the surrounding context by the FCN-8s network is believed to be of high importance when segmenting clover grass mixtures. Due to the high level of occlusions in the images, only a fraction of every plant is typically visible, while the leaf texture of the visible part remains.

3.2. Clover Fraction Estimation

To verify the usefulness of the trained CNN and validate the coupling between visual clover content in the canopy and clover dry matter fraction, the best-performing FCN-8s CNN was utilized to semantically segment the images from the 179 sample pairs.

In contrast to the isolated semantic segmentation case of Section 3.1, it was beneficial to increase the stability of the clover-grass analysis at the cost of sparsity in the semantic segmentation. It was better for shadow regions and foreign objects to be ignored than falsely classified, as they influenced the dry matter composition estimation. To apply this, the traditional non-max suppression used for semantic segmentation was substituted by a custom threshold on the individual softmax score maps of the CNN. By qualitative visual inspection of five images, the softmax thresholds were defined as 0.95, 0.8, and 0.3 for clover, weeds, and grass, respectively. This corresponds well to the overestimation of clover and underestimation of grass in Figure 8 in Section 3.1. Three samples of the thresholded segmentation of clover-grass images are shown in Figure 9.
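The per-class thresholding can be sketched as below, using the three threshold values stated in the text. The array layout, the class order, the `IGNORE` label, and the rule for resolving a pixel that passes more than one threshold are assumptions made for this illustration; with these threshold values and scores that sum to one, at most one class can pass, so the tie-break is only a safeguard.

```python
import numpy as np

IGNORE = -1  # hypothetical label for pixels left unclassified
# Class order assumed here: 0 = clover, 1 = weeds, 2 = grass.
THRESHOLDS = np.array([0.95, 0.8, 0.3])

def threshold_segmentation(scores, thresholds=THRESHOLDS):
    """Label pixels whose softmax score passes the threshold of its class.

    scores : (num_classes, H, W) array of softmax score maps.
    Returns an (H, W) integer label map with IGNORE where no class passes.
    """
    passed = scores >= thresholds[:, None, None]
    # Among the classes that pass their own threshold, keep the best score.
    masked = np.where(passed, scores, -np.inf)
    labels = masked.argmax(axis=0)
    labels[~passed.any(axis=0)] = IGNORE
    return labels

# Three pixels: uncertain clover, weak grass, confident clover.
scores = np.array([[[0.90, 0.60, 0.97]],   # clover
                   [[0.05, 0.05, 0.02]],   # weeds
                   [[0.05, 0.35, 0.01]]])  # grass
labels = threshold_segmentation(scores)
```

In this toy input a plain arg-max would label the first two pixels as clover; the thresholds instead leave the first pixel unclassified and assign the second to grass, which counteracts a bias towards clover.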

Qualitatively, the three samples of semantic segmentation demonstrated an excellent understanding of the images by the convolutional neural network across vegetation densities and clover fractions. Visible weeds, clover, and grasses were detected with high accuracy while withered vegetation and ground were correctly ignored.

The coupling between the clover-grass images and dry matter composition was evaluated using the clover fraction metrics defined in Equations (1) and (2) for dry matter samples and image samples, respectively:

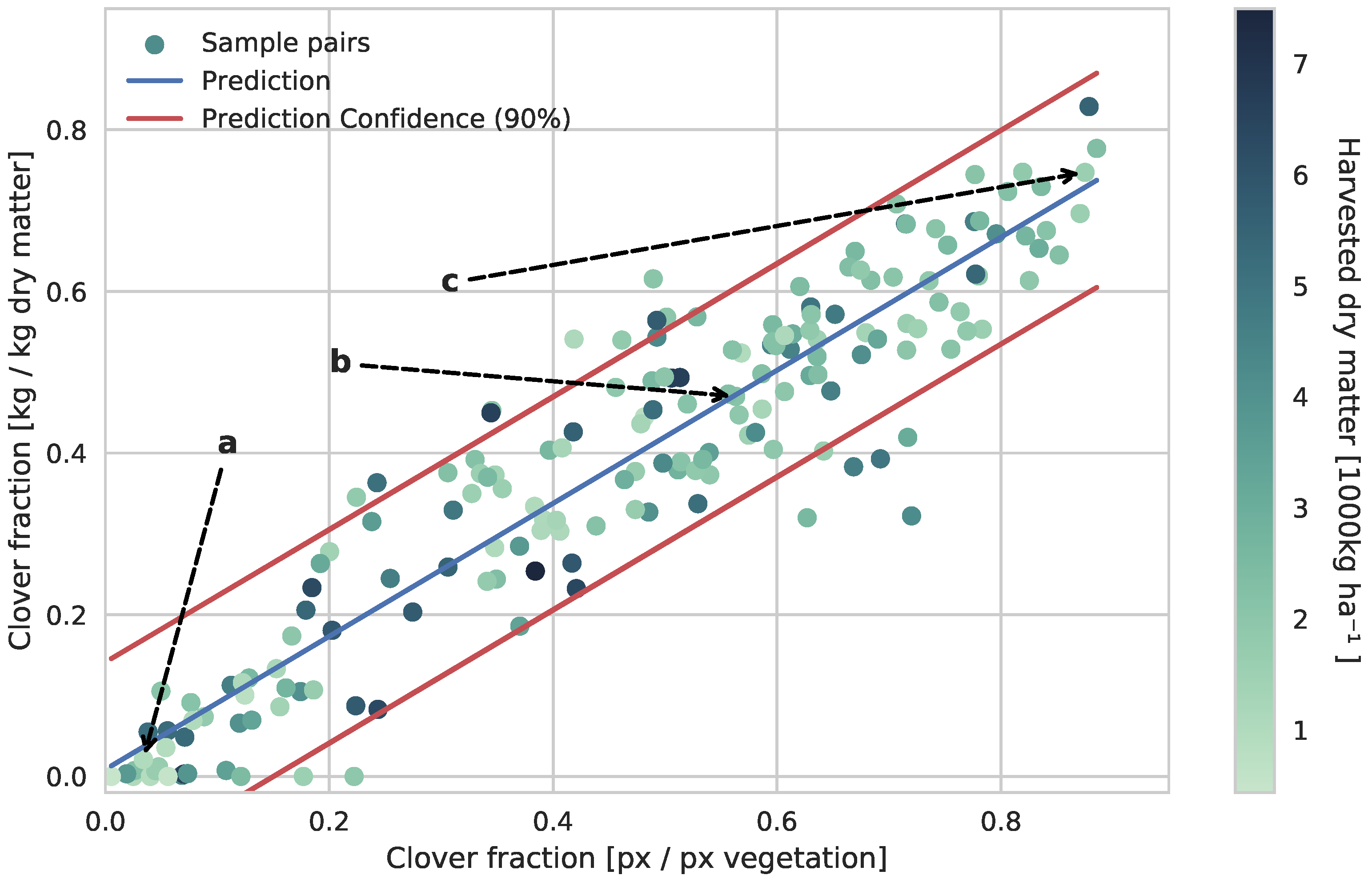

The result of the automated CNN-driven image analysis of visual clover content is shown in Figure 10. The relationship between the CNN-driven analysis and the dry matter clover ratio in the photographed clover-grass patches was evident and similar to the manually obtained relationship shown by Himstedt et al. [14]. The linear regression based on the 179 primary sample pairs led to a clover dry matter fraction prediction with a standard deviation of 7.8%. This was achieved while covering the spread of total yield, clover fraction, weed levels, fraction of red and white clover, and time in season of the sample pairs.
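As a rough illustration of how such a regression and its prediction spread can be obtained, the sketch below fits a straight line with NumPy and reports the residual standard deviation. Regressing the dry matter fraction on the image-based fraction, the function name, and the sample values are all assumptions for this example; the numbers are made up and are not the study data.

```python
import numpy as np

def fit_clover_regression(image_fraction, dry_matter_fraction):
    """Least-squares line predicting dry matter clover fraction from images.

    Returns (slope, intercept, residual_std). The residual standard
    deviation uses ddof=2 because two parameters are fitted.
    """
    x = np.asarray(image_fraction, dtype=float)
    y = np.asarray(dry_matter_fraction, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    return slope, intercept, residuals.std(ddof=2)

# Hypothetical sample pairs (fractions between 0 and 1), for illustration only.
image_fraction = [0.10, 0.25, 0.40, 0.55, 0.70, 0.90]
dry_matter_fraction = [0.12, 0.20, 0.38, 0.47, 0.60, 0.71]
slope, intercept, residual_std = fit_clover_regression(
    image_fraction, dry_matter_fraction)
```

The residual standard deviation is the quantity comparable to the prediction spread quoted above, expressed here as a fraction rather than a percentage.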

Despite the generally good fit of the linear regression, multiple outliers appeared in Figure 10. Examination of the underlying image samples and image analysis revealed two distinct causes.

The image was out of focus in sparse or low-yield vegetation.

The low-yield outliers along the x-axis all suffered from overestimated clover content. Due to the loss of focus in these samples, blurred vegetation on the ground was often misclassified as clover.

The clover-covered area of the canopy did not always represent the dry matter composition. In the range of 30–60% dry matter clover fraction, the canopy could be less representative of the harvested samples. This was the result of the heavy occlusions and was directly linked to the challenges of using the top-down view. The furthest outlier was for example a dense clover grass mixture, covered by a shallow layer of clover leaves, giving the impression of a much higher clover fraction.

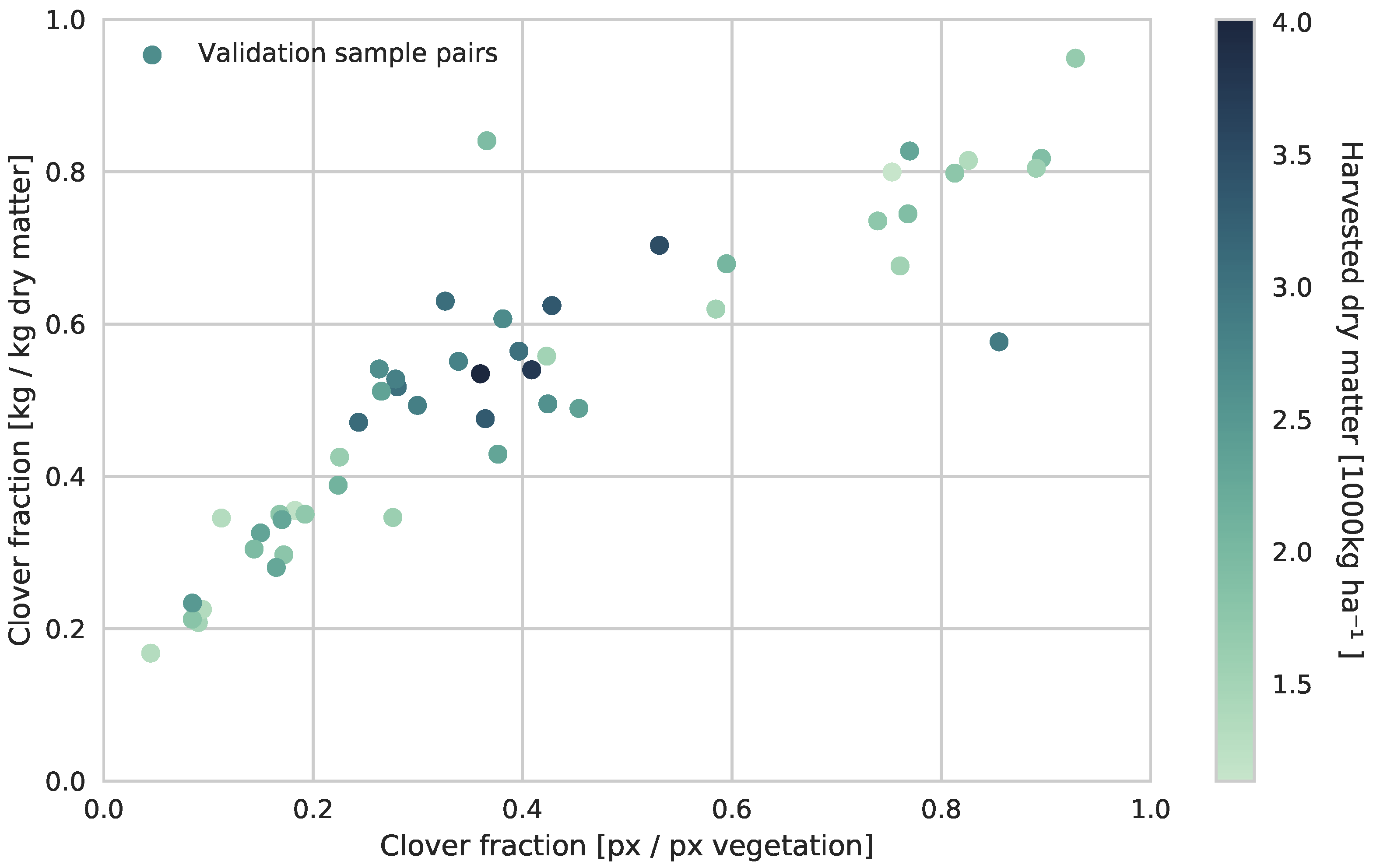

The trained CNN was also evaluated on the validation field located 210 km from the primary field site, while preserving the threshold parameters. The sample pair relationship of the validation field is shown in Figure 11. When comparing the results of the two field sites, the validation site led to a similar relationship, with an introduction of an offset. This offset shifts the validation sample pairs towards the upper region of the prediction confidence of the estimator in Figure 10, leading to a general underestimation of the clover fraction in the validation field.

4. Discussion

The ability to train a CNN for image segmentation using solely simulated images has been shown to have good prospects, as it allows one to train a network for tasks for which it would otherwise be infeasible to obtain the needed amount of data. The 195 acquired plant samples for training were additionally reduced to a 25% subset, leading to a drop in pixelwise accuracy (Table 1). This drop is mainly a result of worsened distinction between clover and weeds, caused by the use of only six weeds. This low number of samples does not cover the variation of weeds in the test data in terms of either the number of species or their appearances. This demonstrates that high classification accuracies can be achieved on real images with only a few plant samples used for training, and this translates to reaching a working prototype for semantic segmentation within hours of manual labor, as opposed to weeks or months if following a traditional workflow of manual image labeling.

Care should be taken when training the convolutional neural network to span the variations in the test data by training using the corresponding variations in the training data. This was the case for vegetation density and varying ratios of clover, grass, and weeds in the simulated images. More care should be taken when simulating training data to imitate natural and common errors, introduced when collecting images. These include lighting conditions, color temperature, image noise, and blurring. Several of the images with a high ratio of misclassified pixels were blurred. As the network was trained on sharp images, it is believed that the number of misclassifications can be reduced by introducing blurred simulated images in the training data.
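One way to introduce blurred simulated images, sketched here under stated assumptions, is to apply a Gaussian blur of random strength to a share of the generated images while leaving their label maps unchanged. The function names, the blur probability, and the sigma range are hypothetical choices for illustration and were not evaluated in this work.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian kernel truncated at about three sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    return kernel / kernel.sum()

def blur_image(image, sigma):
    """Separable Gaussian blur of an (H, W, C) float image with edge padding."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image, ((radius, radius), (radius, radius), (0, 0)),
                    mode="edge")
    # Filter along the width first, then along the height.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def augment_with_blur(image, rng, probability=0.5, max_sigma=3.0):
    """Blur a simulated image with the given probability (assumed values)."""
    if rng.random() < probability:
        return blur_image(image, rng.uniform(0.5, max_sigma))
    return image

rng = np.random.default_rng(0)
simulated = rng.random((32, 32, 3))        # stand-in for a simulated RGB image
augmented = augment_with_blur(simulated, rng)
```

The same pattern extends to the other listed error sources, for example by scaling color channels to mimic color temperature shifts or by adding sensor noise.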

From the estimate of the clover content of the dry matter, we see that the uncertainty is larger in cases where the clover and grass are mixed than in cases dominated by one species. This is because the camera can only see the canopy of the plant cover, so plants of one species that are hidden by the others make the estimated ratio less accurate. In cases where the sward is dominated by one species, the estimated dry matter ratio is less affected by this phenomenon. Nonetheless, the estimated ratio is close to the real ratio even in cases where the real ratio is close to 50%.

When evaluating the system on the validation field at a separate location, the automated image-based estimation of the dry matter clover fraction does not translate accurately between the two field sites. The design of the two field trials differs largely, mainly in terms of absence of red clover in the validation field seed mixture, fertilization strategies, organic or conventional farming, and variation of cultivars in the plots. Through experiments outside of this paper, it has been shown that the system accurately translates to the validation field when lowering the threshold value for detecting clover pixels from 0.95 to 0.85. This suggests a visual difference between the clovers in the two field sites, leading to partly unclassified clovers. By introducing larger variations of clovers from multiple locations in the image simulation, the CNN should be generalized to better handle natural visual variances between the fields.

It is essential to be aware of the accuracy of alternative methods for estimating the botanical dry matter composition. While separating a forage sample into species by hand does provide the ground truth composition for this paper, this is not feasible for real applications. Fair comparisons include vision-based evaluation by expert consultants in the field and near-infrared reflectance spectroscopy (NIRS)-based estimation, often integrated in grass sward harvesters used for research.

While the visual estimation accuracy of consultants remains undocumented, this method is time consuming and requires the consultant to inspect the clover-grass throughout the field and map it accordingly. NIRS-based methods, such as in [23], show comparable estimation accuracy of grass swards of either white clover or red clover, by use of distinct models for each case. The accuracy on grassland swards with mixed red and white clover species, as in this experiment, has not been investigated.

To utilize the botanical composition of clover-grass leys for targeted fertilization, the information must be available at the time of fertilization. The largest amount of fertilizer is typically applied in the spring prior to the start of the growth period. At this stage NIRS for identifying the botanical composition is ruled out, since the crop is too small for harvesting. This leaves a great potential for the non-destructive approach of monitoring clover-grass mixtures in this paper. Future work includes extending the presented method for clover content estimation to preseason image samples. This extension allows the camera-based system to provide the necessary botanical information for optimizing the fertilizing strategy, for every fertilization. Following the approach presented in this paper, the image analysis of the extension can be operational with a couple of days of labor for gathering relevant image samples and cropping out representative plant samples for data simulation. Other future work relates to extending the parametric information level delivered by the convolutional neural network to further improve the dry matter composition estimates, or directly predicting the dry matter botanical composition in the neural network.