Efficient Background Segmentation and Seed Point Generation for a Single-Shot Stereo System

Abstract

1. Introduction

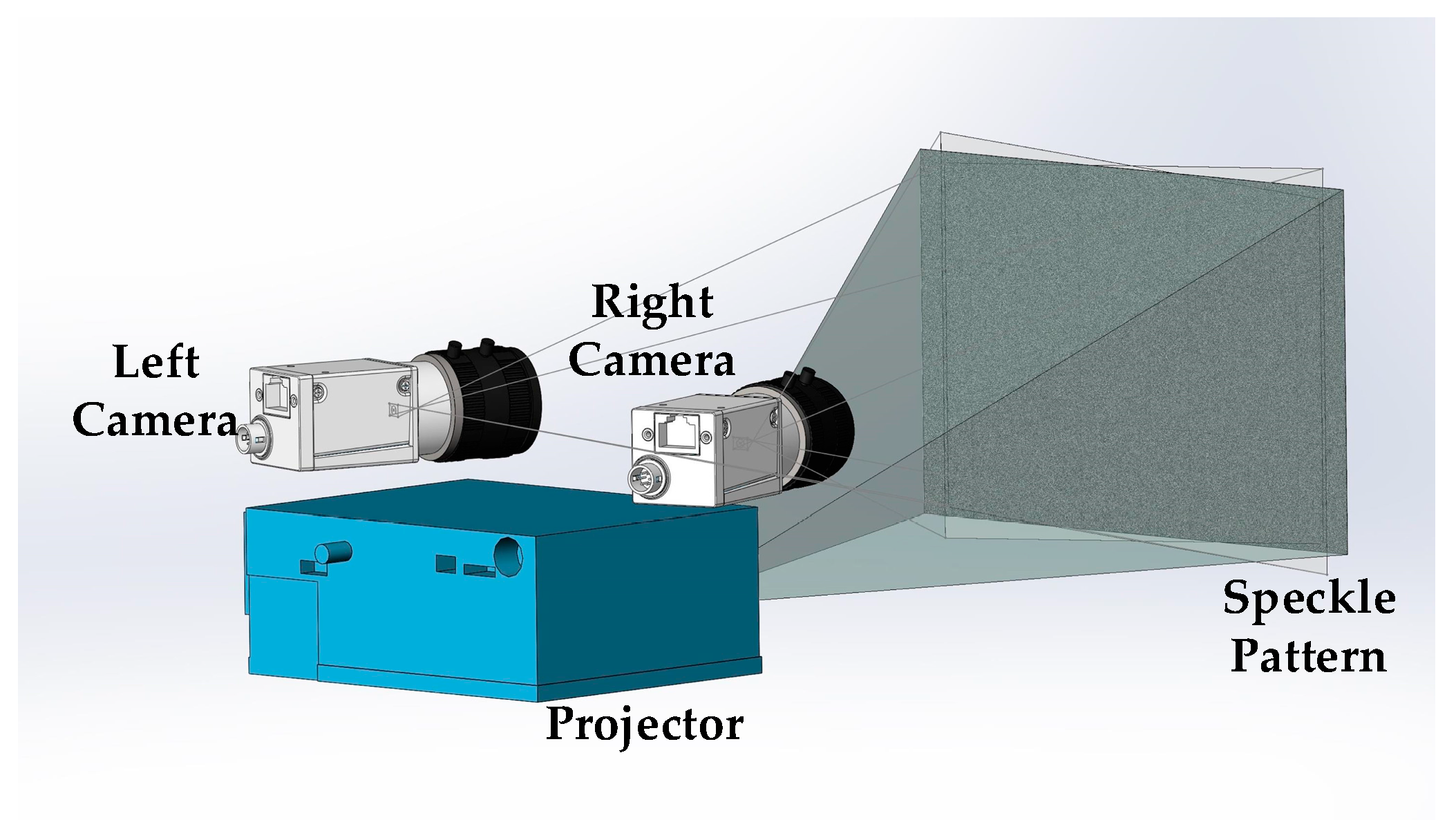

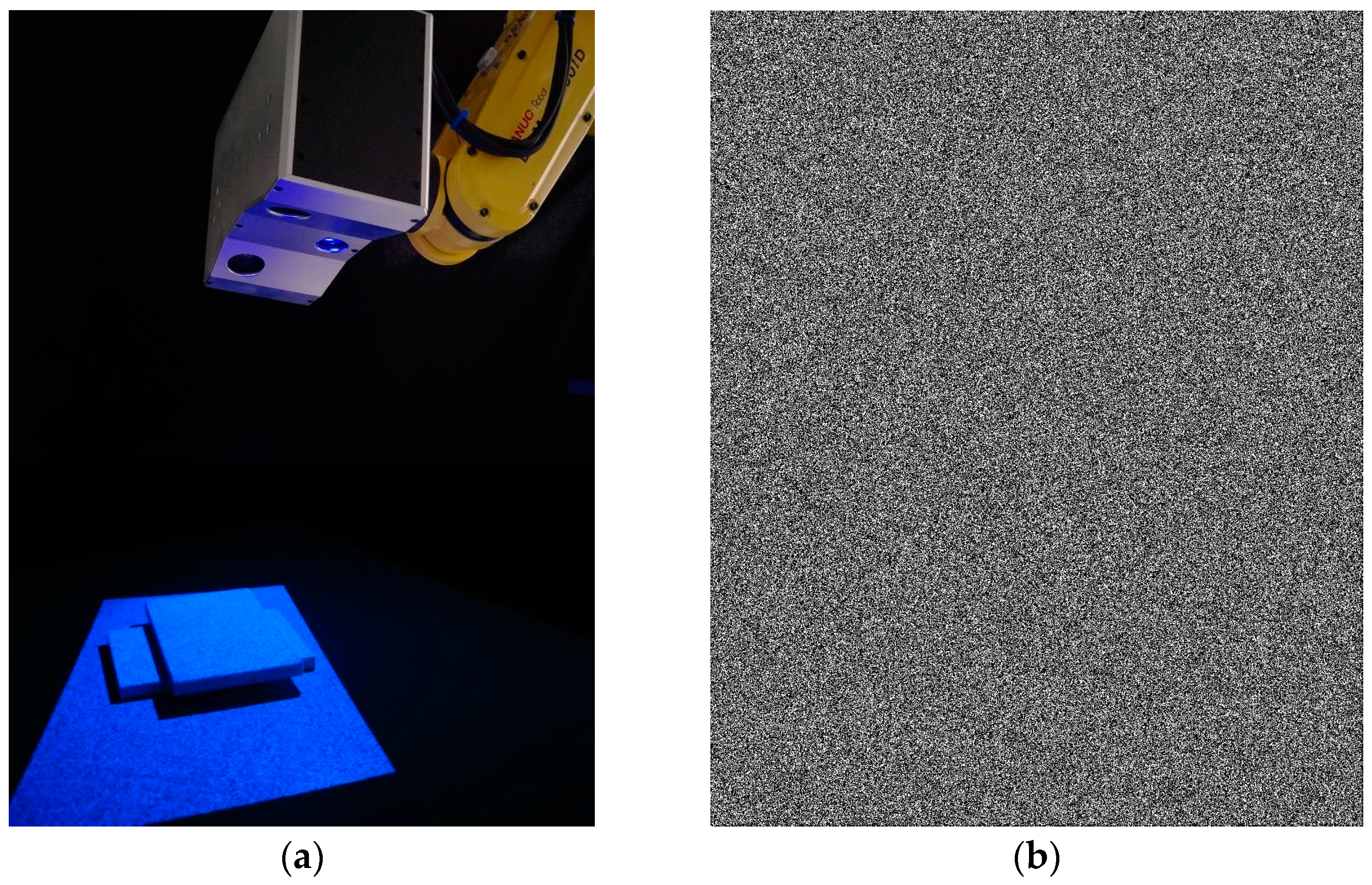

2. Working Principle of Single-Shot Stereo System

3. Stereo Matching of the Single-Shot Stereo System

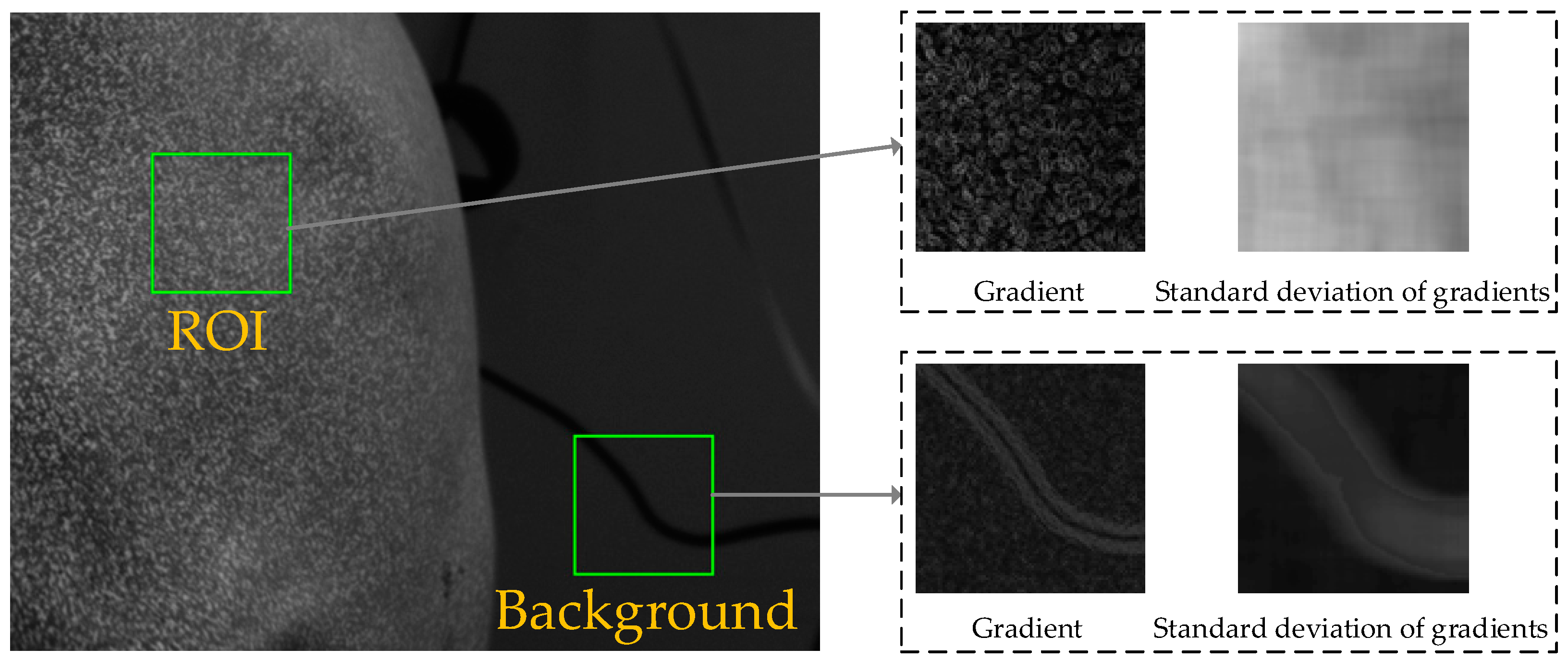

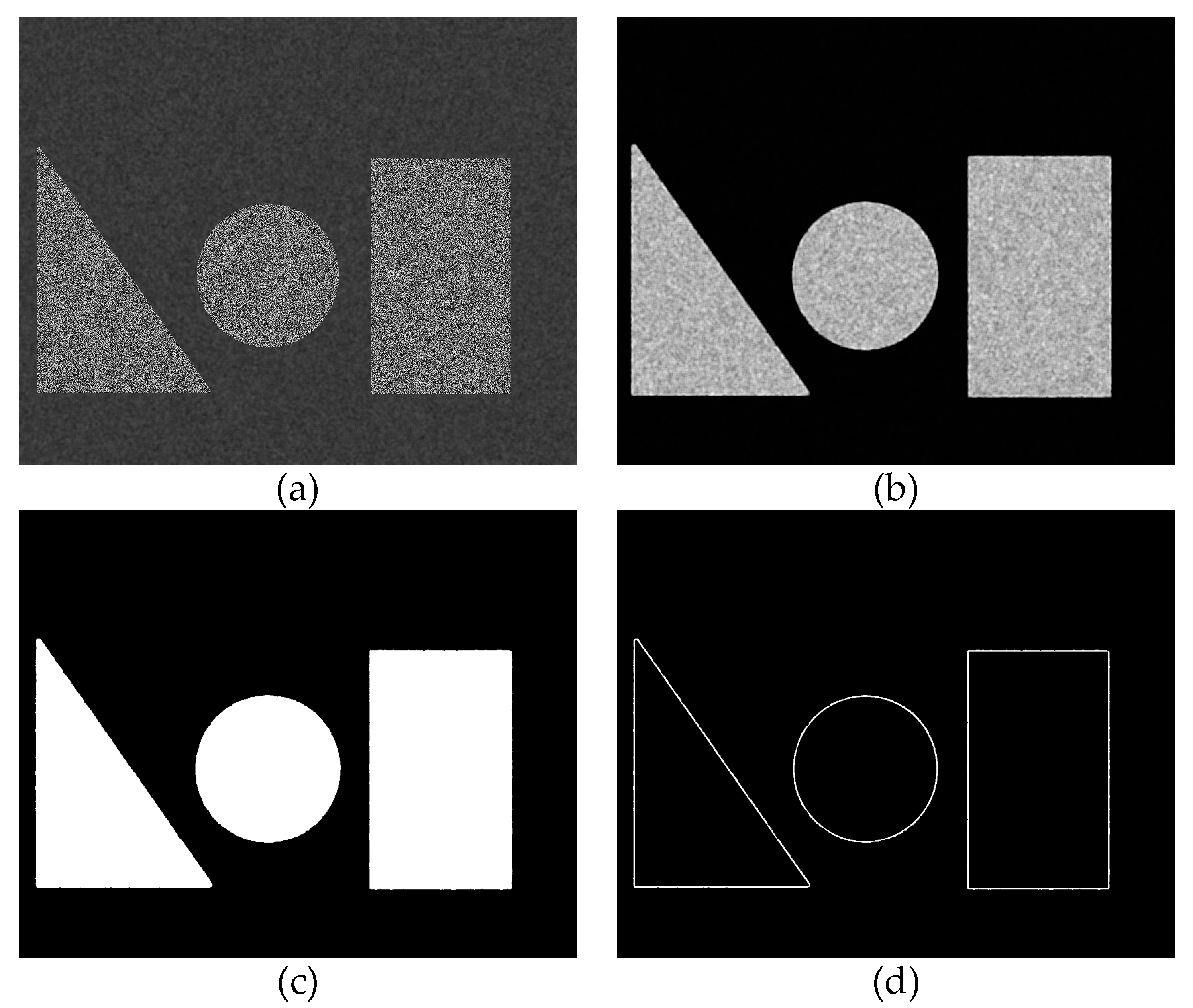

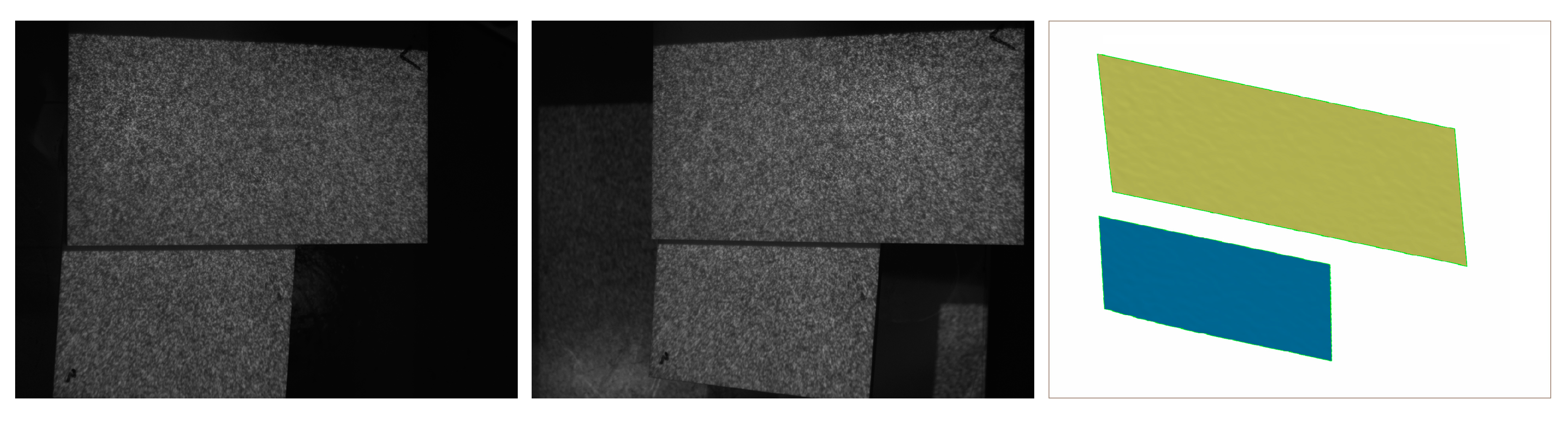

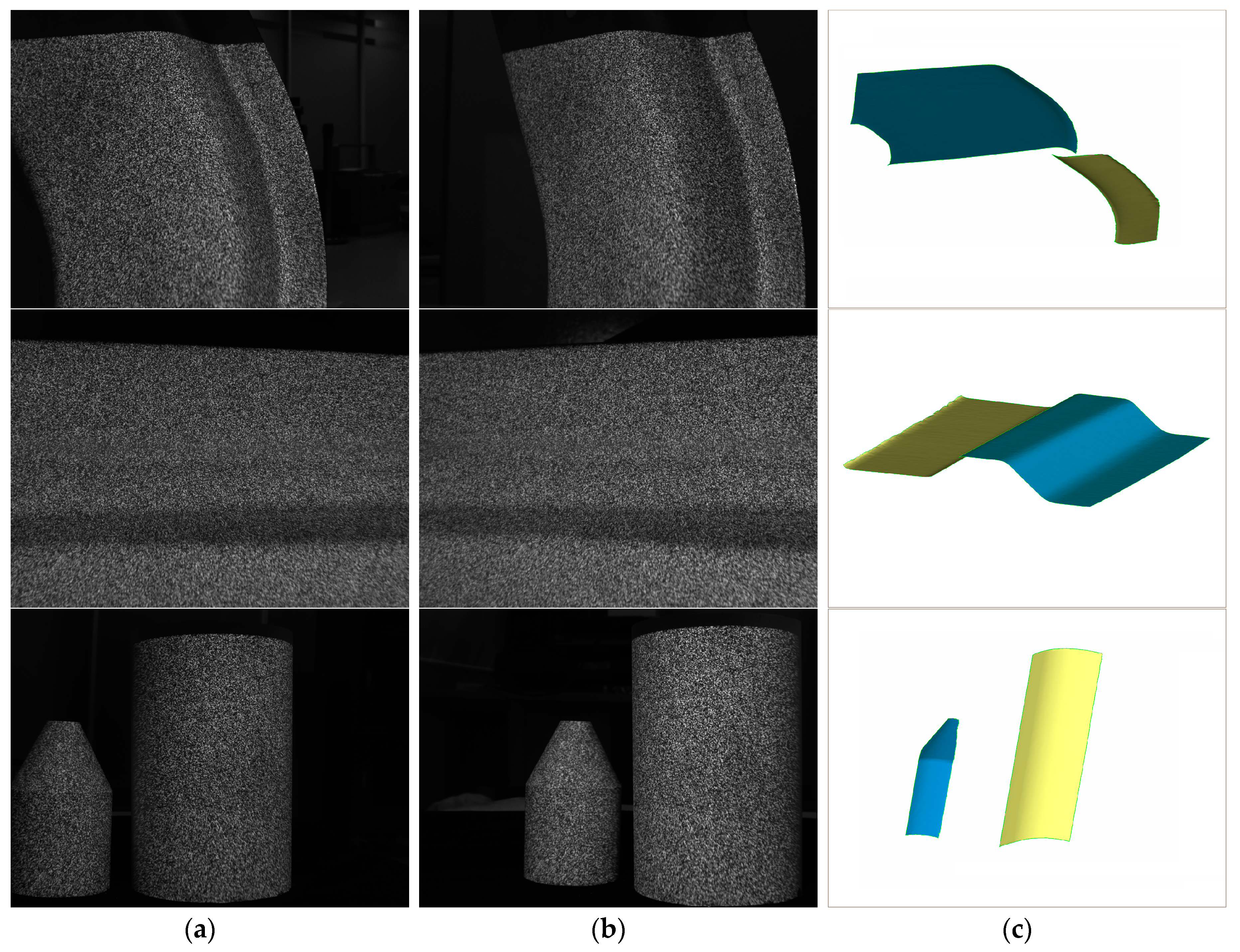

3.1. Background Segmentation

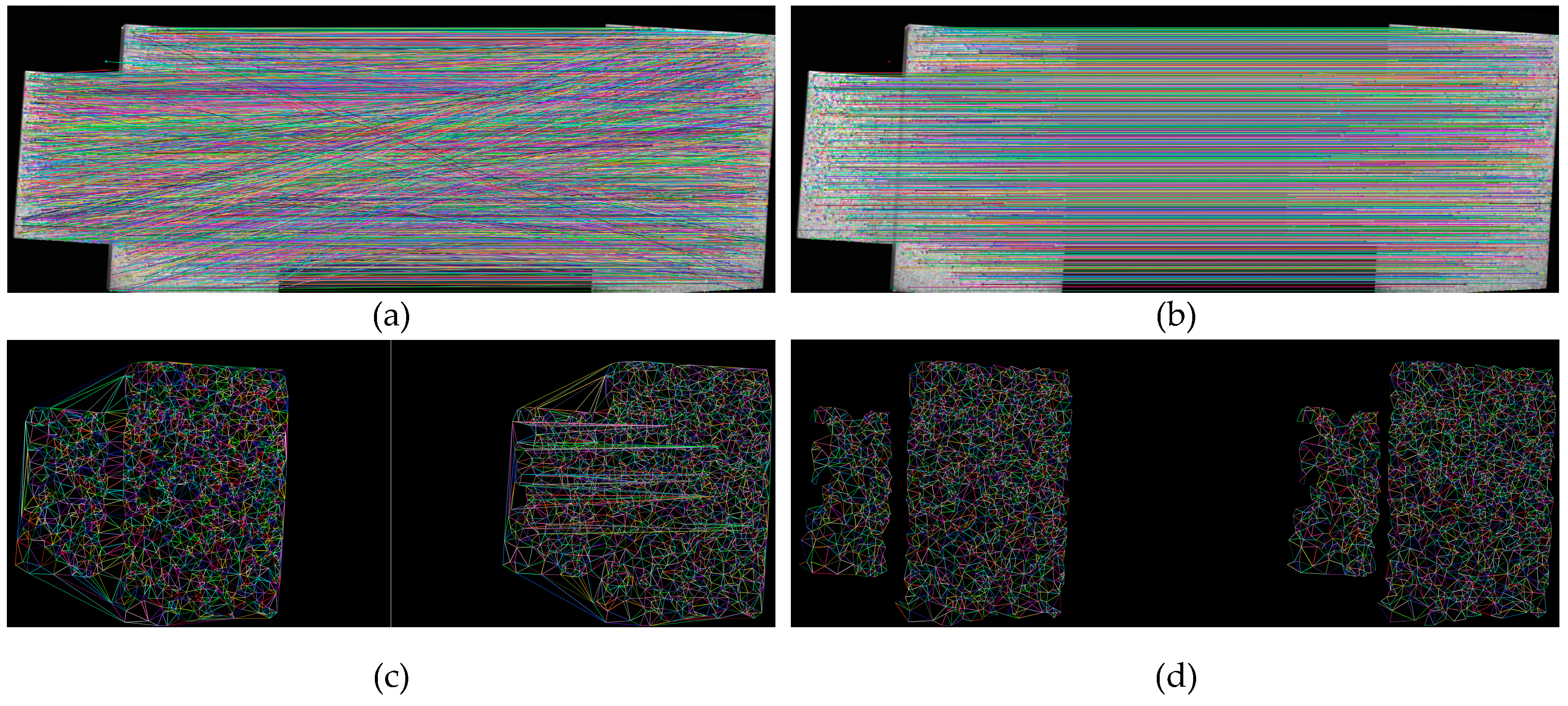

3.2. Feature-Based Matching for the Coarse-Matched Triangle Set

3.2.1. Coarse Match by Scale-Invariant Feature Transform

3.2.2. Removal of Wrong Matches

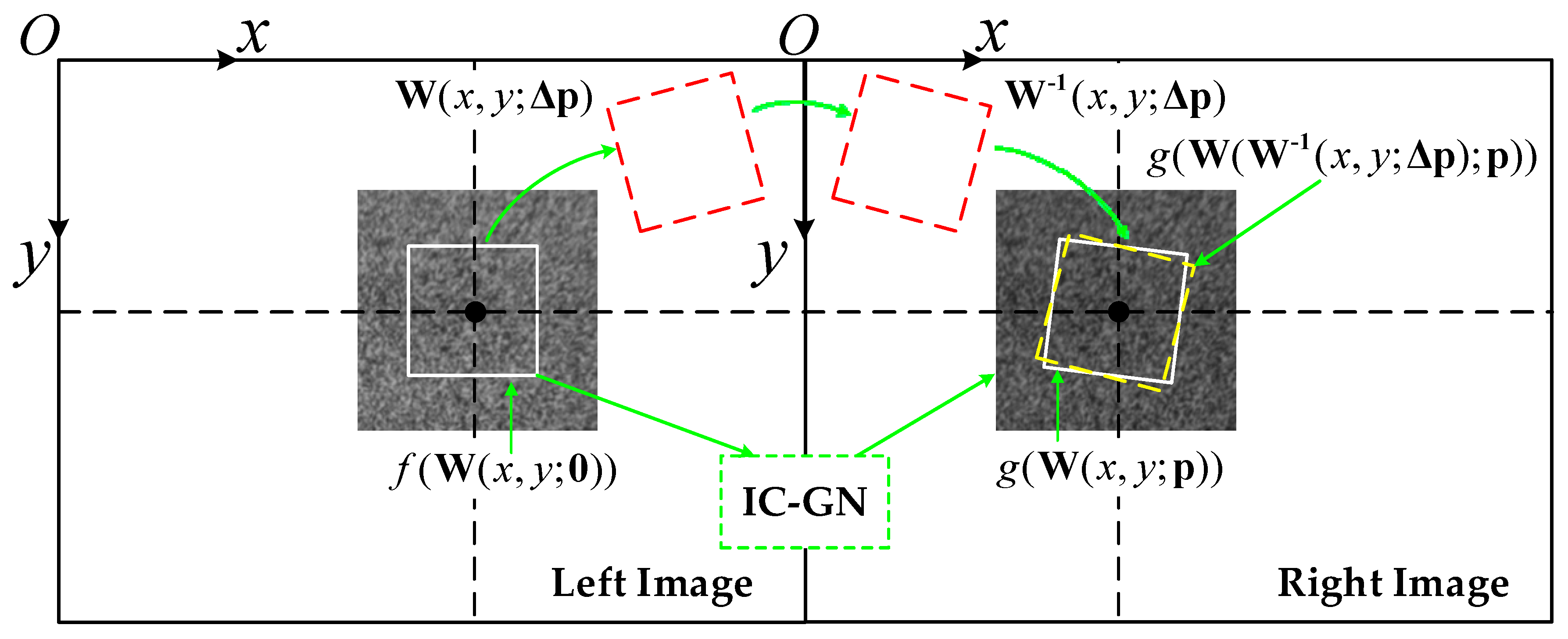

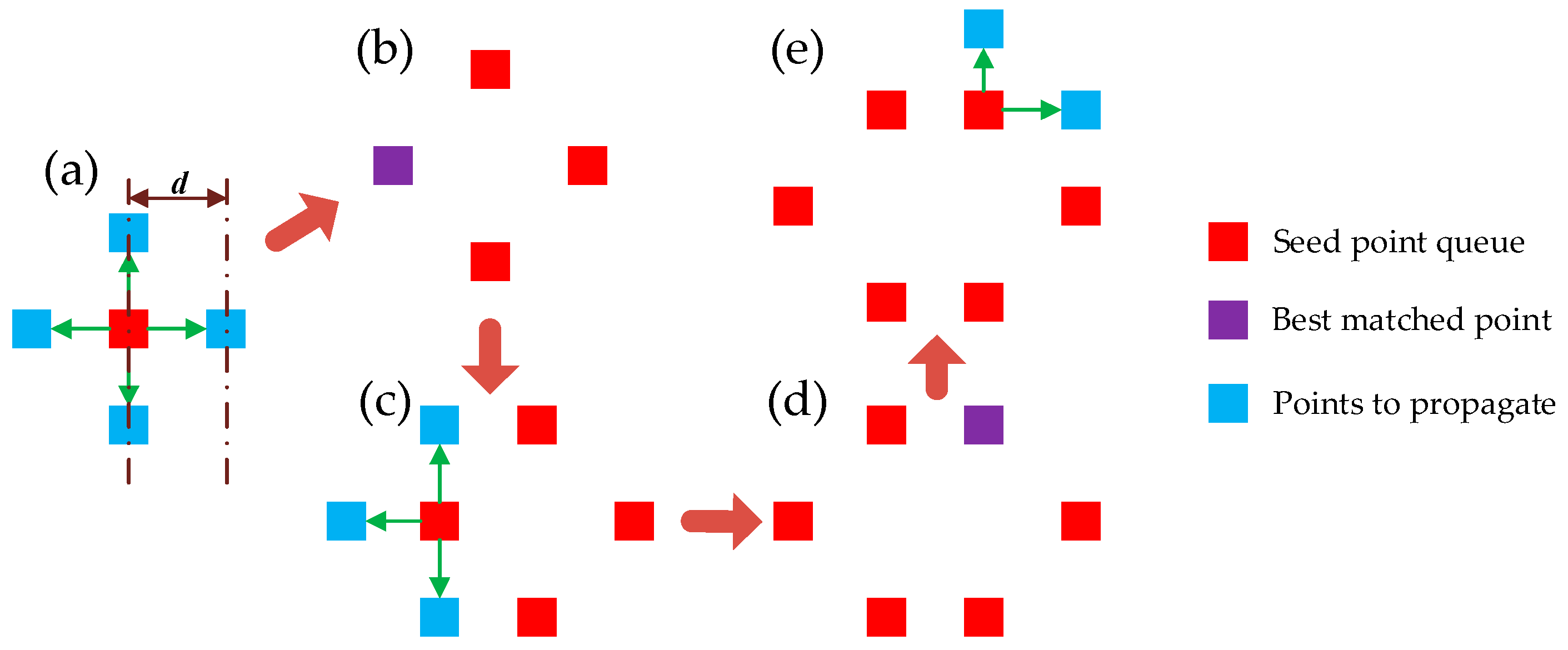

3.3. Seed Point Generation and Propagation by IC-GN

3.3.1. First-Order DIC Using IC-GN

3.3.2. Seed Point Generation and Efficient Propagation

4. Experiments and Discussions

4.1. Efficiency Test of Background Segmentation

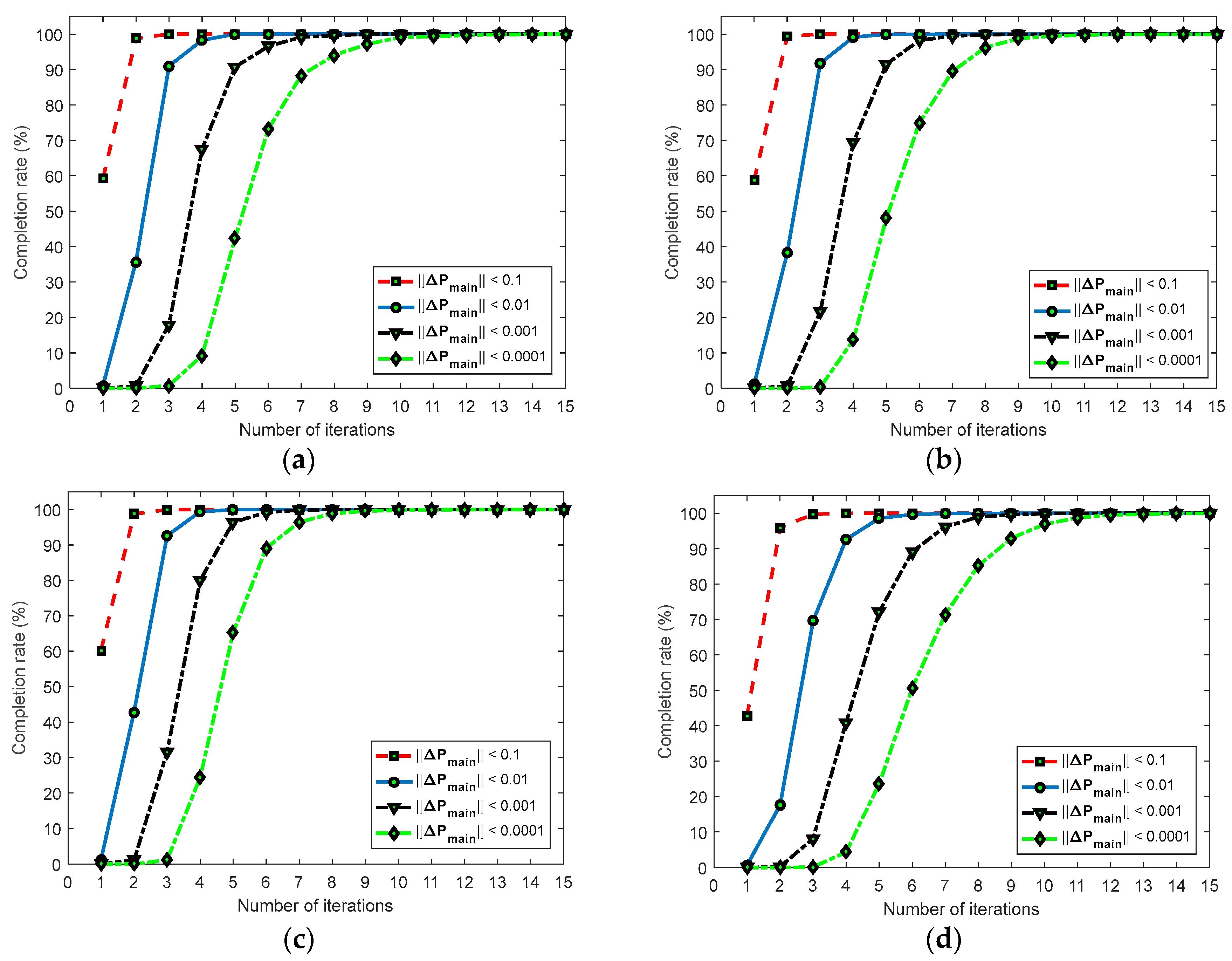

4.2. Efficiency Test of Seed Point Generation

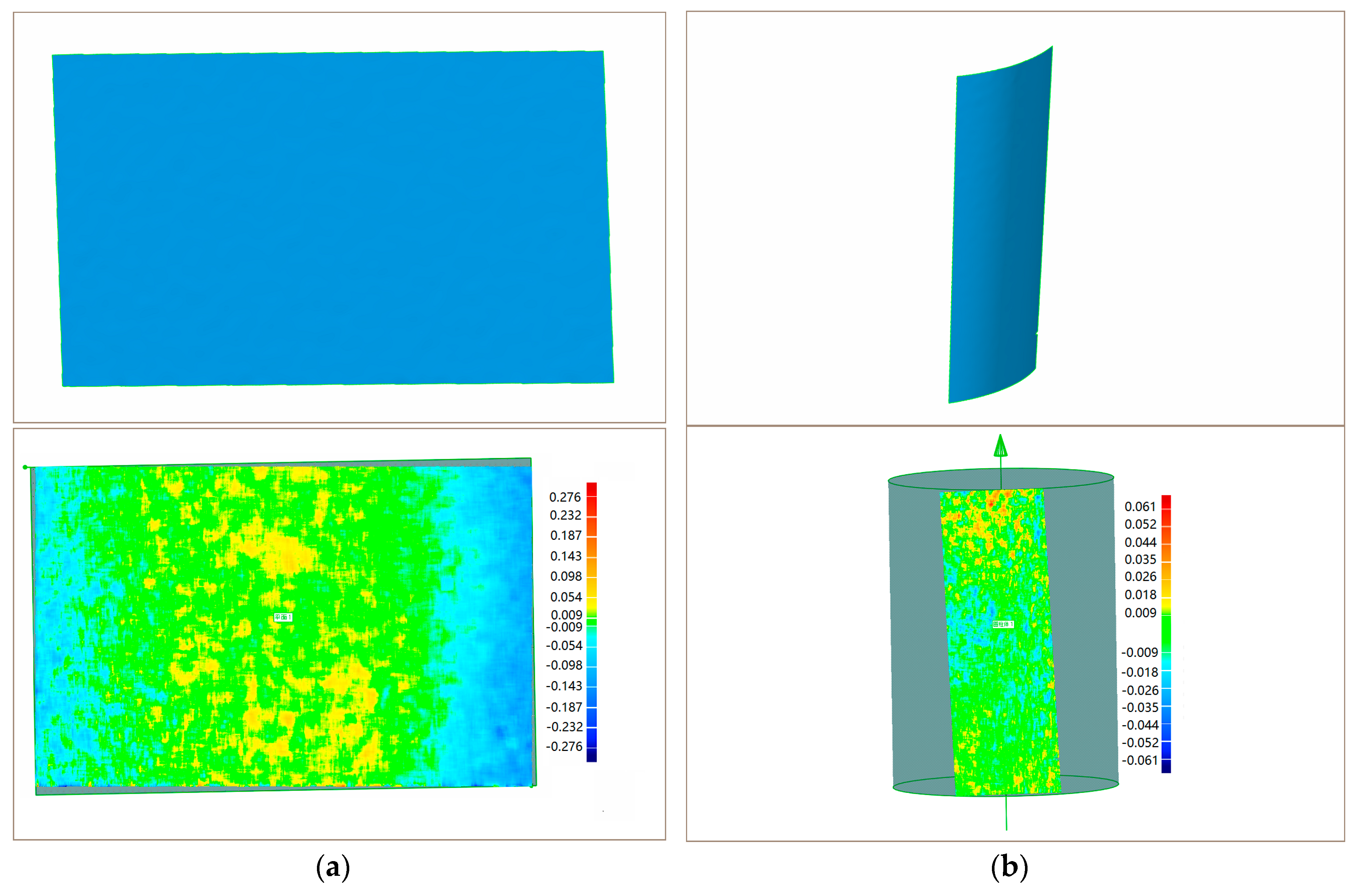

4.3. Precision Evaluation

5. Conclusions

Acknowledgements

Author Contributions

Conflicts of Interest

| | Without Removal | | | | | | With Removal | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P | 0.1070 | 0.0995 | 2.6 | 1448 | 322 | 22.2% | 0.1163 | 0.0988 | 2.7 | 758 | 756 | 99.7% |
| S | 0.0911 | 0.0945 | 2.6 | 3964 | 1061 | 26.8% | 0.1022 | 0.0988 | 2.7 | 2310 | 2272 | 98.4% |
| C | 0.0952 | 0.0913 | 2.6 | 8709 | 2715 | 31.2% | 0.0979 | 0.0849 | 2.6 | 6359 | 4697 | 73.9% |
| F | 0.1239 | 0.1043 | 3.1 | 38,832 | 3146 | 8.1% | 0.1355 | 0.1017 | 3.2 | 14,096 | 12,205 | 86.6% |

| | PN (CMM) | PN (PMS) | NM (CMM) | NM (PMS) | PM (CMM) | PM (PMS) | SD (CMM) | SD (PMS) | D (CMM) | D (PMS) |
|---|---|---|---|---|---|---|---|---|---|---|
| Plane | 15 | 491,347 | −0.004 | −0.276 | 0.003 | 0.251 | 0.001 | 0.038 | | |
| Cylinder | 44 | 253,580 | −0.008 | −0.040 | 0.011 | 0.061 | 0.004 | 0.009 | 79.952 | 79.911 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, X.; Chen, X.; Xi, J. Efficient Background Segmentation and Seed Point Generation for a Single-Shot Stereo System. Sensors 2017, 17, 2782. https://doi.org/10.3390/s17122782