Aspect-Aided Dynamic Non-Negative Sparse Representation-Based Microwave Image Classification

Abstract

1. Introduction

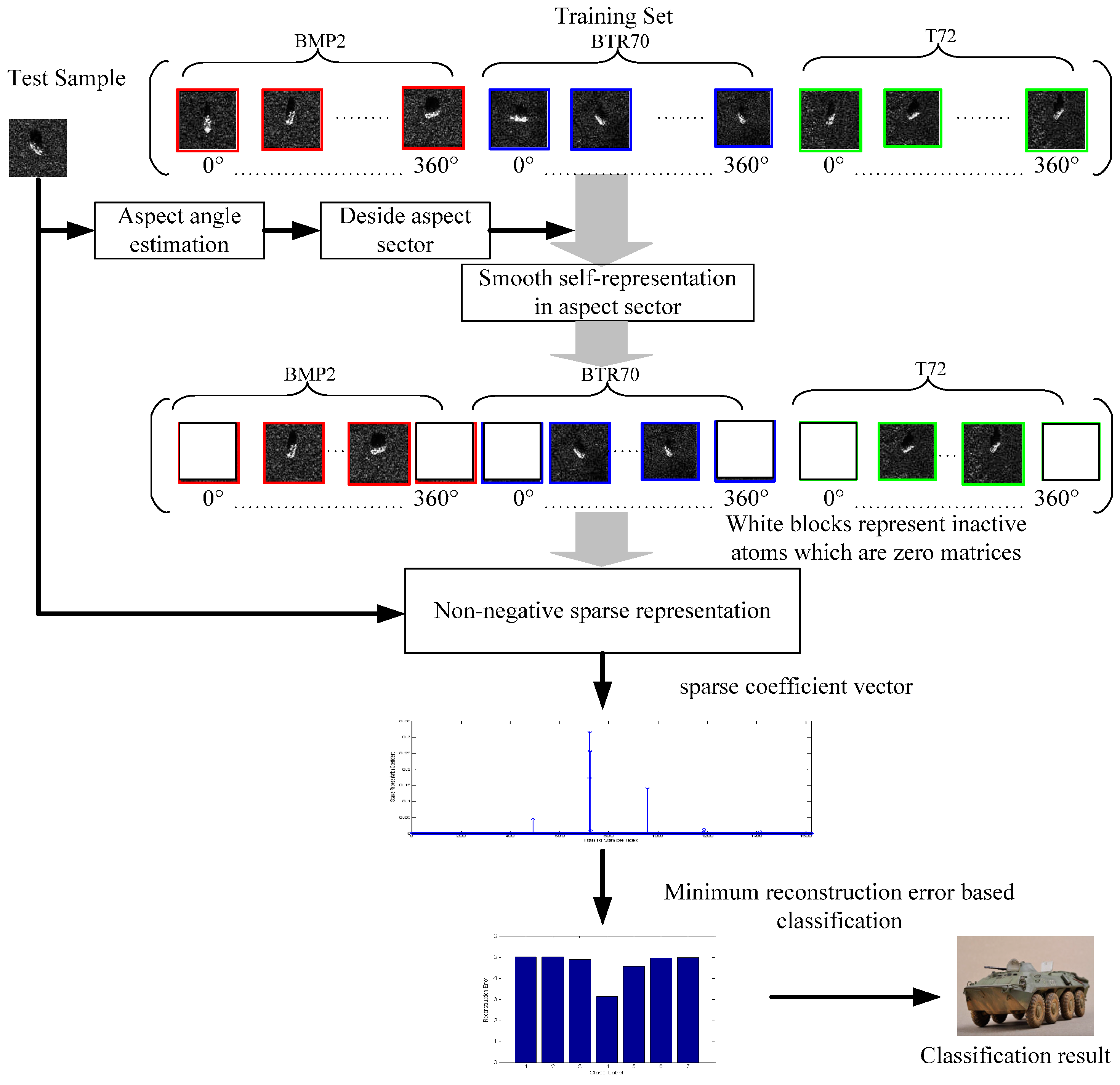

2. Aspect-Aided Dynamic Active Atoms Selection

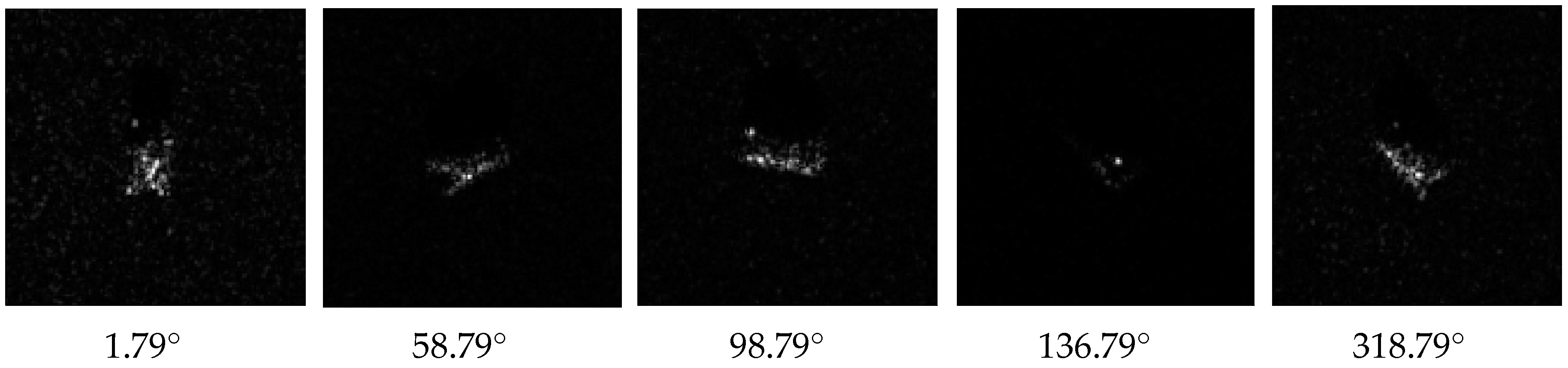

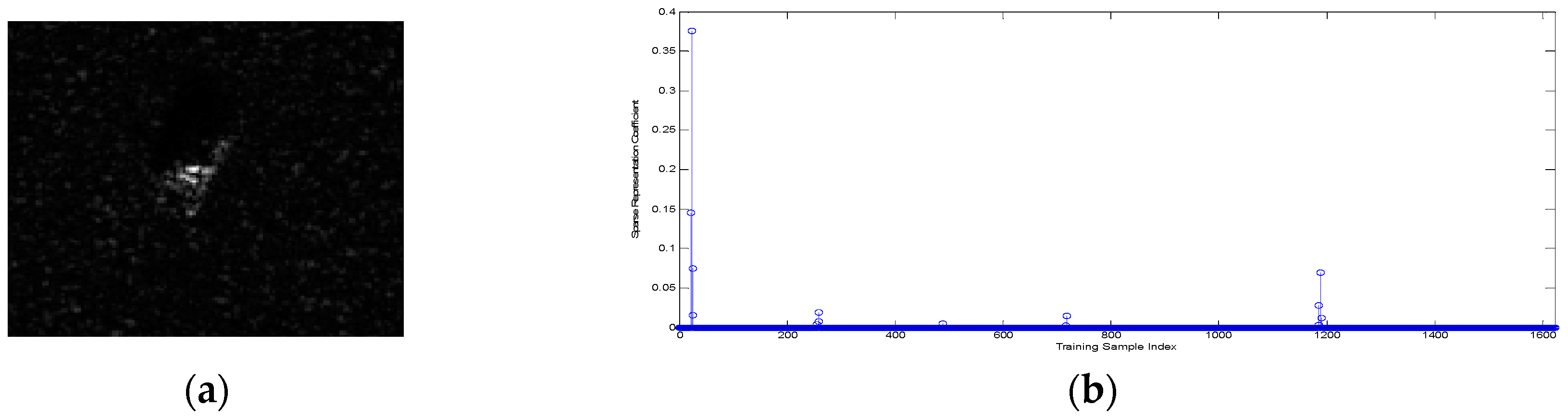

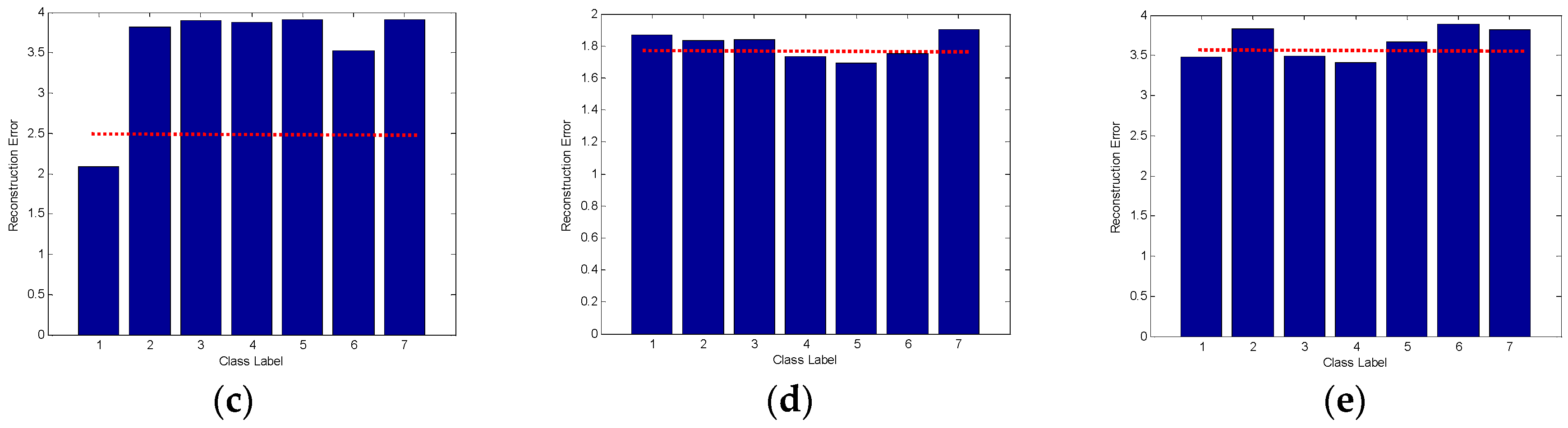

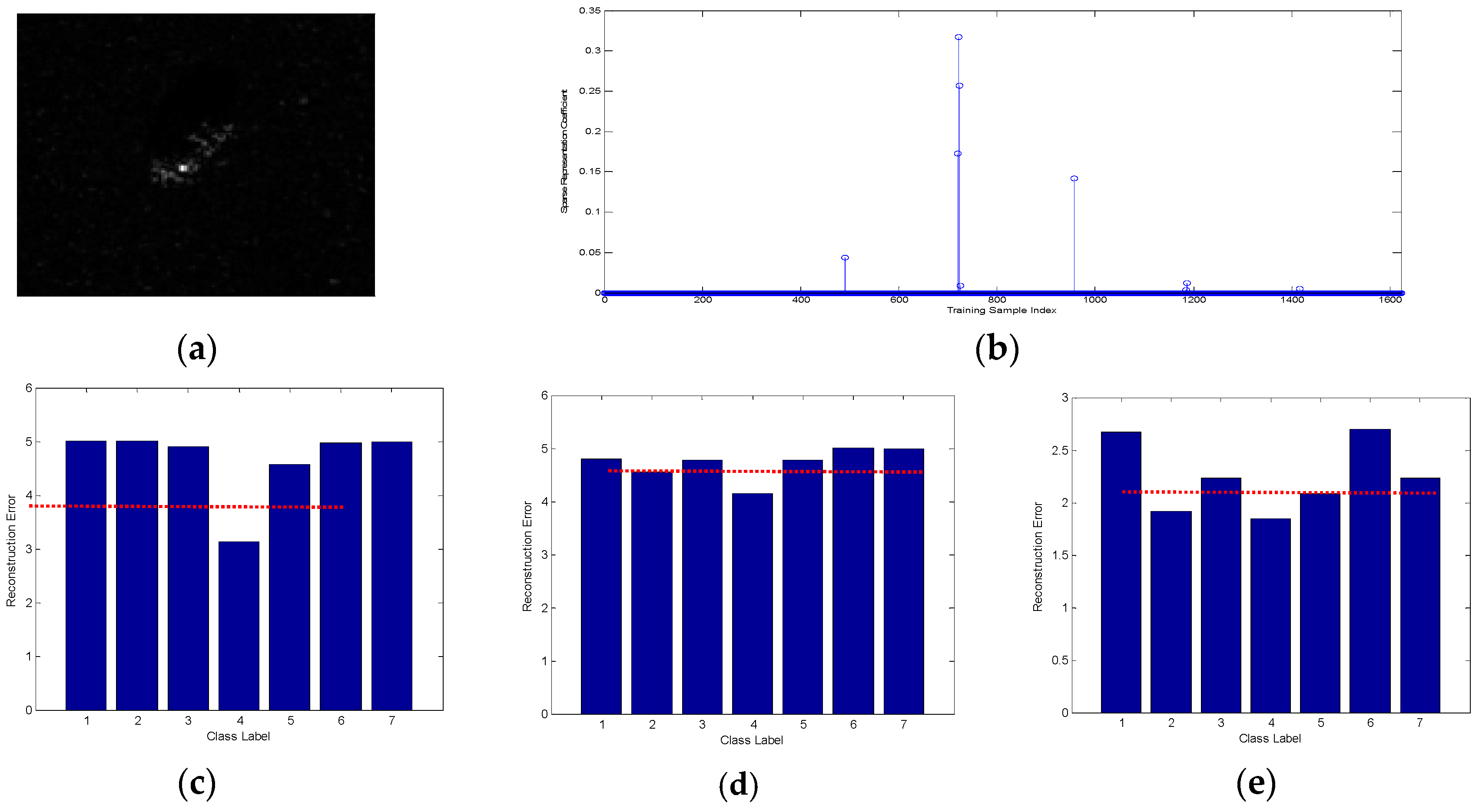

2.1. Estimation of the Testing Sample Aspect Angle

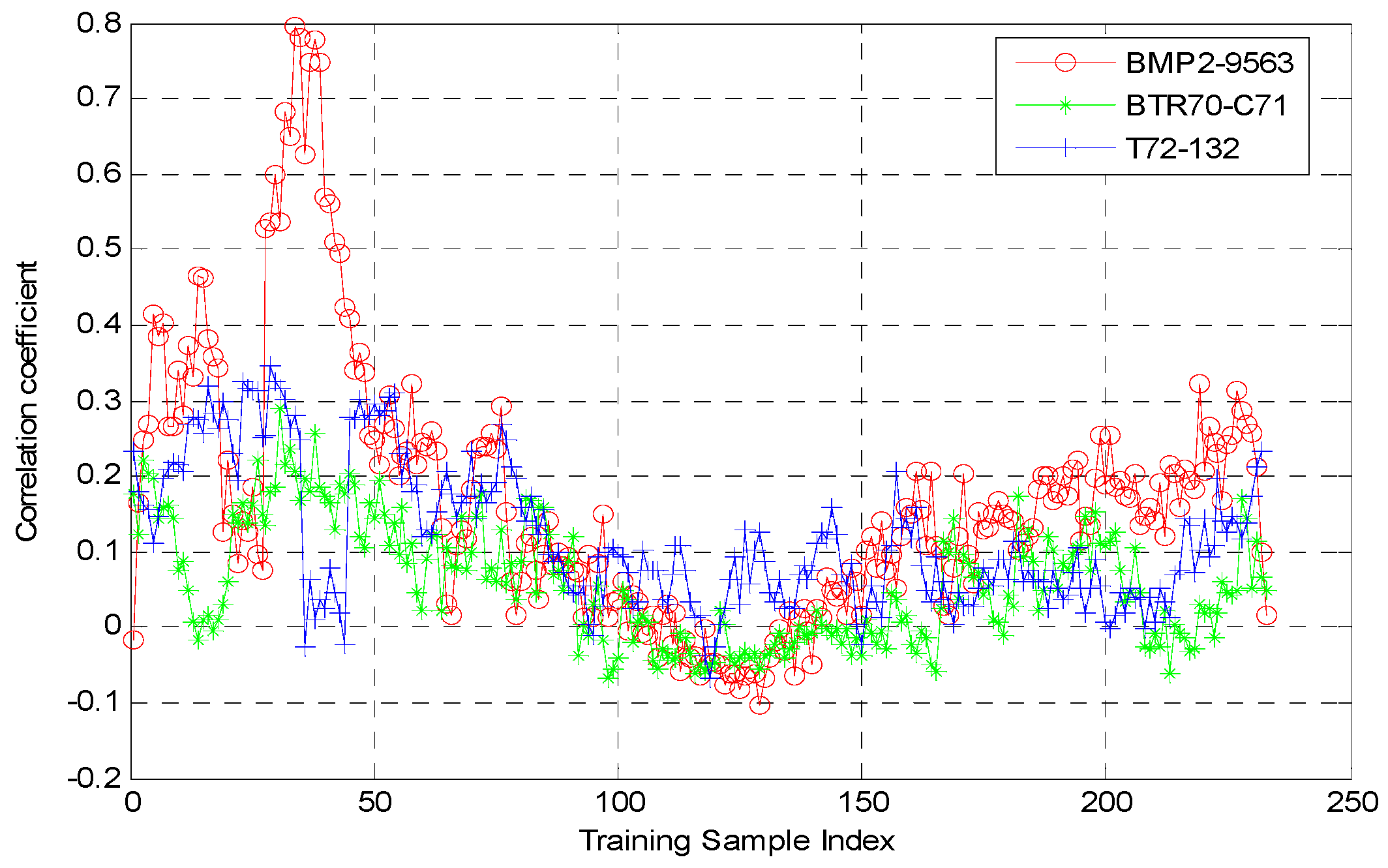

2.2. Enhanced Atoms Selection in Aspect Sector Based on Smooth Self-Representation

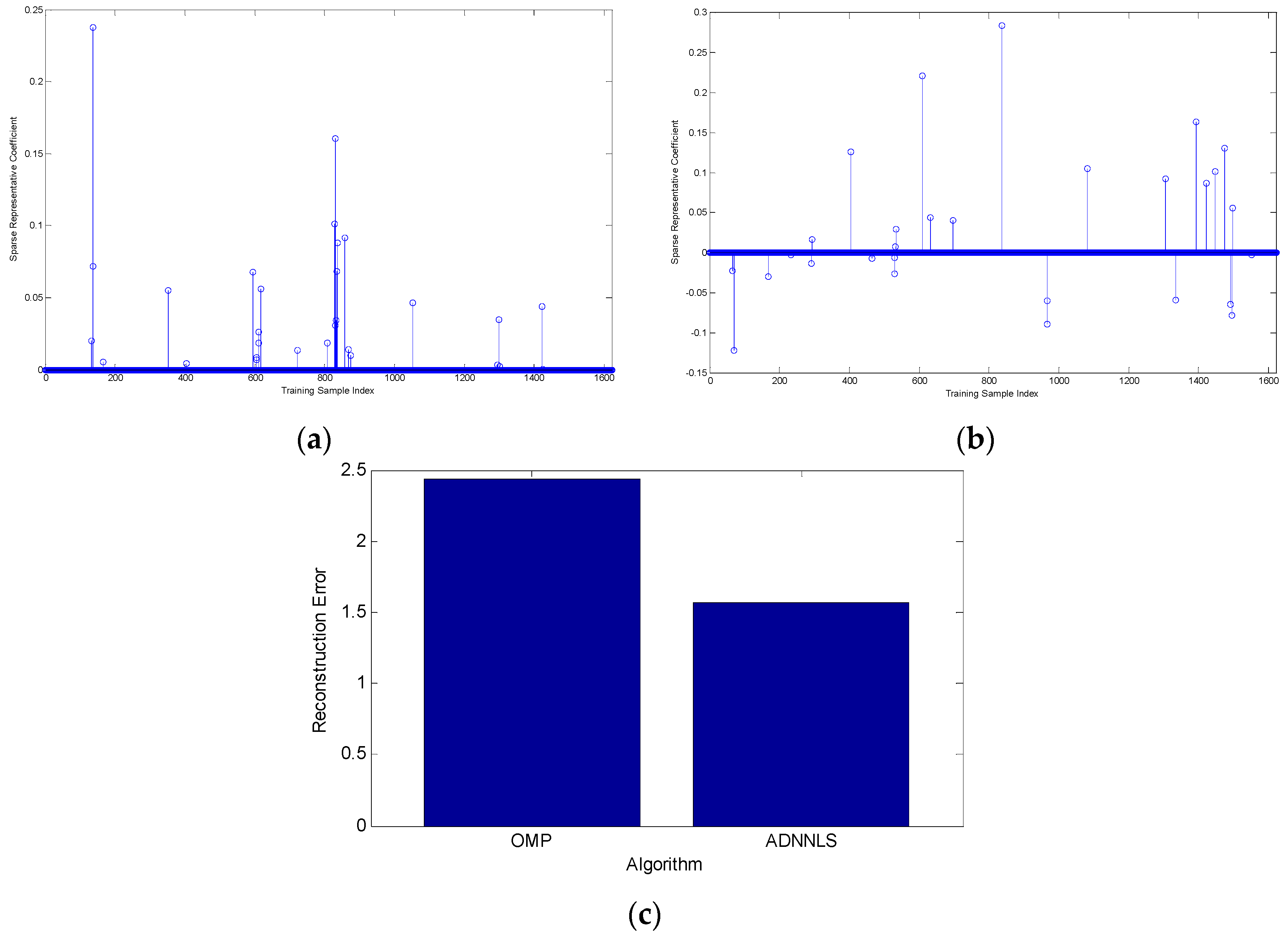

3. Non-Negative Least Square Sparse Representation Classifier

3.1. Basic Sparse Representation Classifier

3.2. Non-Negative Least Square Sparse Representation Classifier

3.3. Microwave Image Classification with ADNNLS Sparse Representation

| Algorithm 1: ADNNLS sparse representation for microwave image recognition |
|---|
| Input: |
| All types of training samples |
| All test samples |
| Output: the identity of |
| Steps: |
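Section 3.2 names a non-negative least squares (NNLS) sparse representation classifier, and Algorithm 1 takes training and test samples as input and outputs an identity. A minimal sketch of that general idea, under stated assumptions, may help: the test sample is coded over labelled training atoms with non-negative coefficients, and the class with the smallest reconstruction residual is returned. This is not the authors' ADNNLS procedure. The aspect-aided atom selection is omitted, the solver is a plain projected-gradient loop chosen for self-containment, and the names `nnls_projected_gradient`, `classify`, `atoms`, and `labels`, as well as the toy data, are hypothetical.

```python
from math import sqrt


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def nnls_projected_gradient(atoms, y, iters=2000):
    """Approximately solve min_x 0.5 * ||y - D x||^2 subject to x >= 0.

    `atoms` is a list of dictionary columns (each a list of floats).
    Assumption: plain projected gradient descent, not an active-set solver.
    """
    n = len(atoms)
    gram = [[dot(a, c) for c in atoms] for a in atoms]  # D^T D
    corr = [dot(a, y) for a in atoms]                   # D^T y
    trace = sum(gram[i][i] for i in range(n))
    if trace == 0.0:
        return [0.0] * n
    step = 1.0 / trace  # trace(D^T D) bounds the largest eigenvalue
    x = [0.0] * n
    for _ in range(iters):
        grad = [dot(gram[i], x) - corr[i] for i in range(n)]
        # Gradient step followed by projection onto the non-negative orthant.
        x = [max(0.0, x[i] - step * grad[i]) for i in range(n)]
    return x


def classify(atoms, labels, y):
    """Return (predicted label, coefficients, per-class residuals)."""
    x = nnls_projected_gradient(atoms, y)
    residuals = {}
    for cls in sorted(set(labels)):
        # Reconstruct y using only the atoms that belong to this class.
        recon = [0.0] * len(y)
        for atom, lab, coef in zip(atoms, labels, x):
            if lab == cls:
                recon = [r + coef * v for r, v in zip(recon, atom)]
        residuals[cls] = sqrt(sum((a - b) ** 2 for a, b in zip(y, recon)))
    return min(residuals, key=residuals.get), x, residuals


# Toy data (hypothetical): two atoms per class in a 3-dimensional feature space.
atoms = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.0, 0.9, 0.1]]
labels = ["A", "A", "B", "B"]
y = [0.8, 0.1, 0.0]

label, coeffs, residuals = classify(atoms, labels, y)
print(label, residuals)
```

In practice a dedicated solver such as an active-set NNLS routine would normally replace the projected-gradient loop. The class-wise residual rule stays the same.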

4. Experiments and Discussion

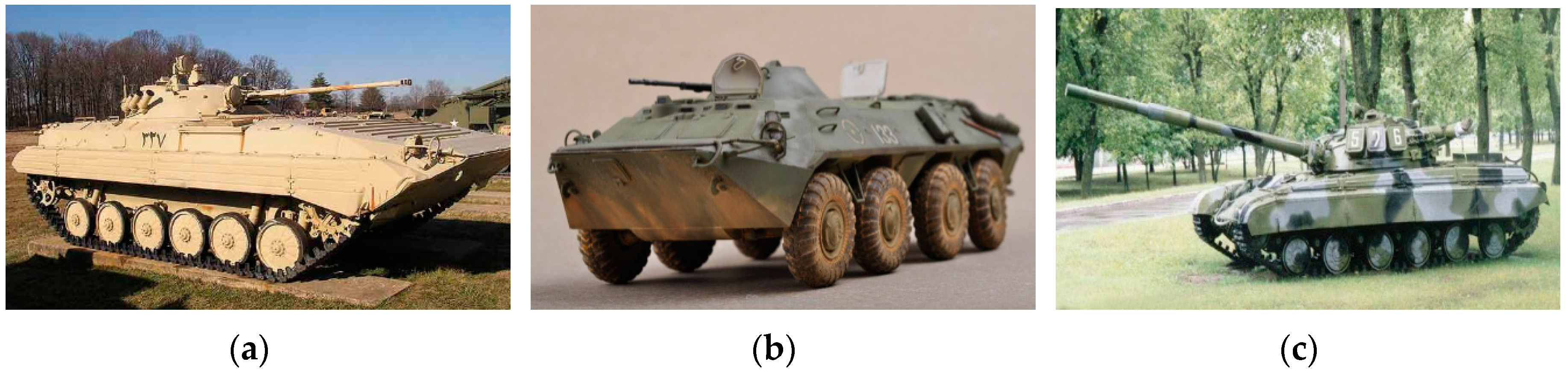

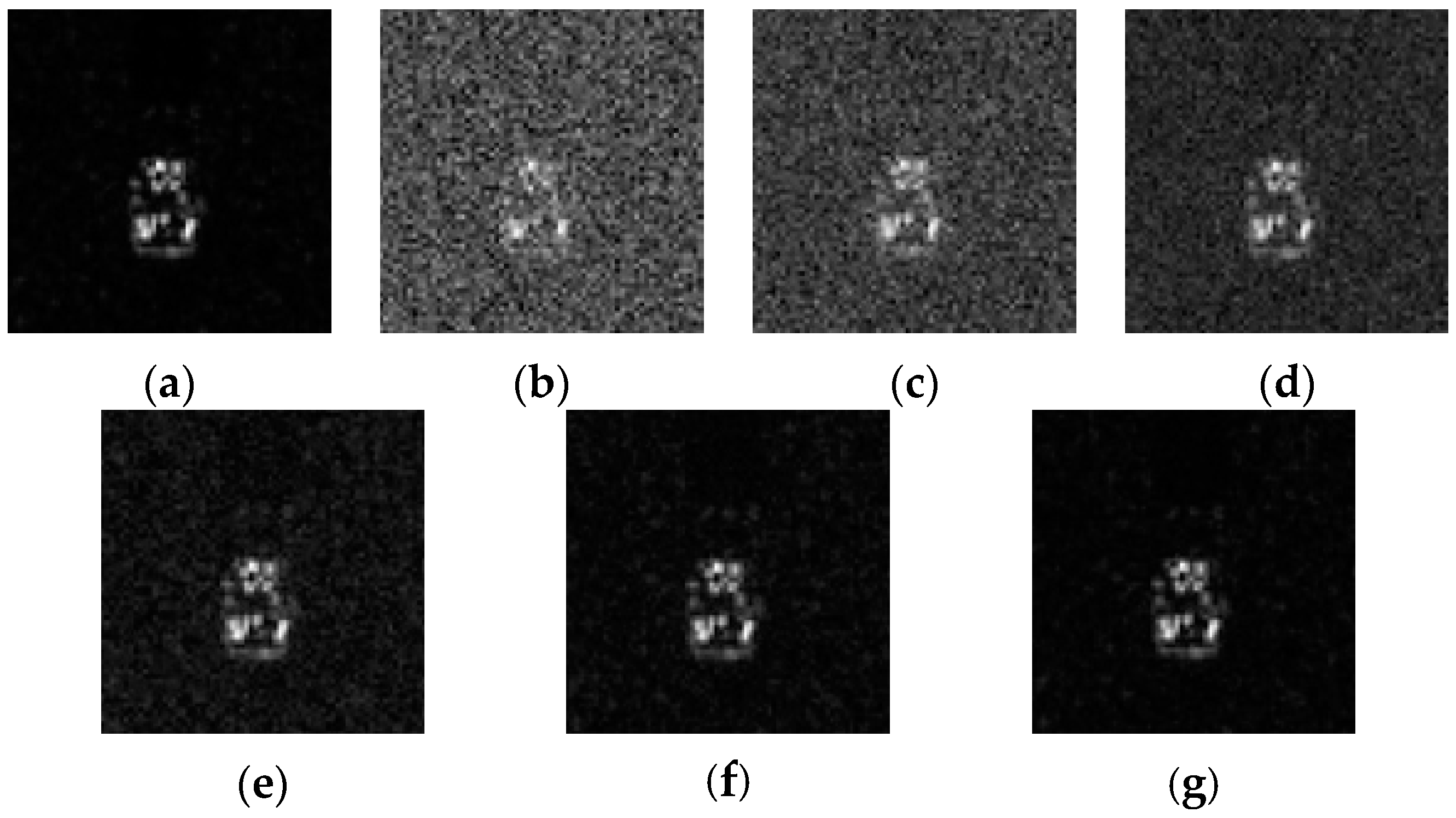

4.1. Description of the Dataset

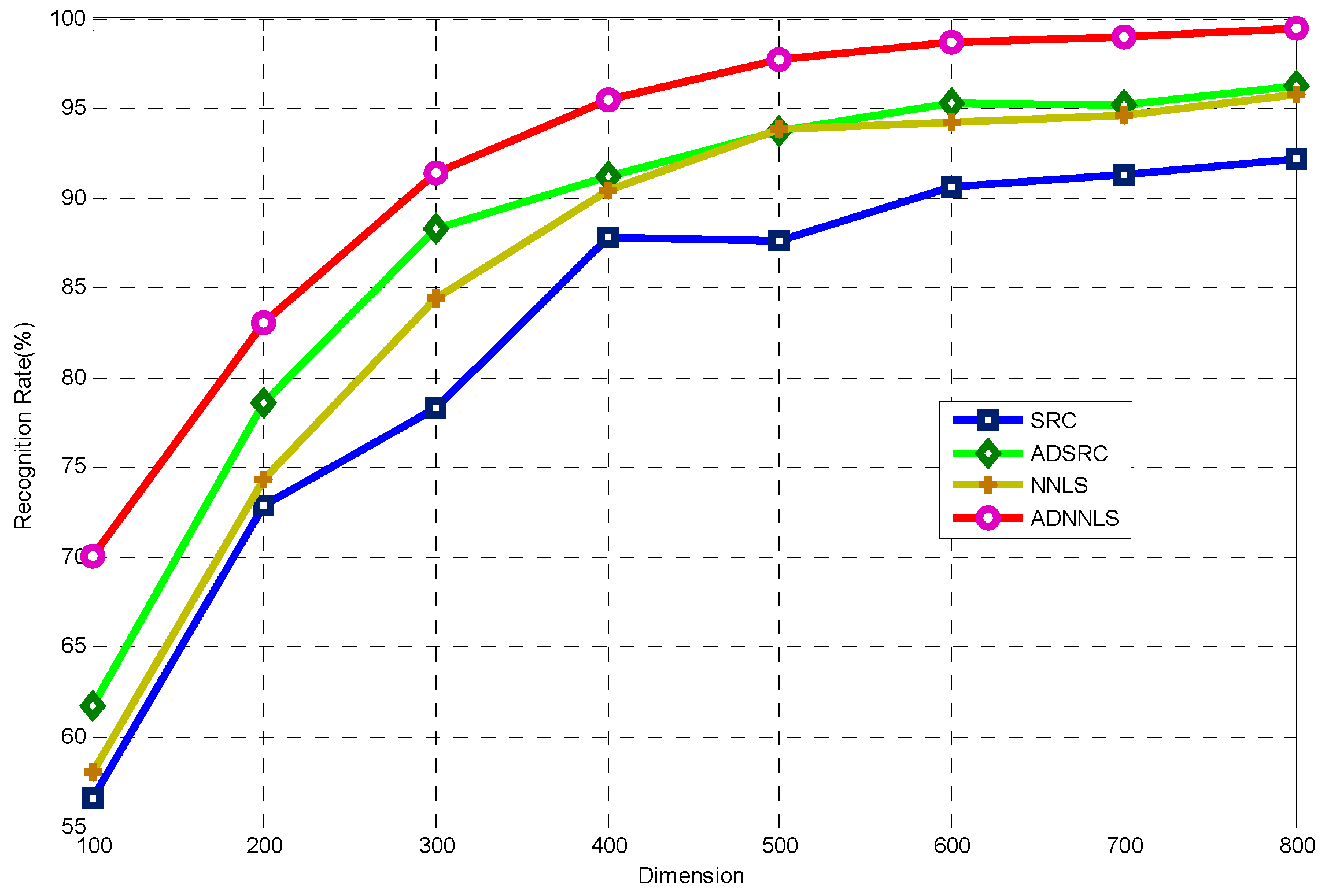

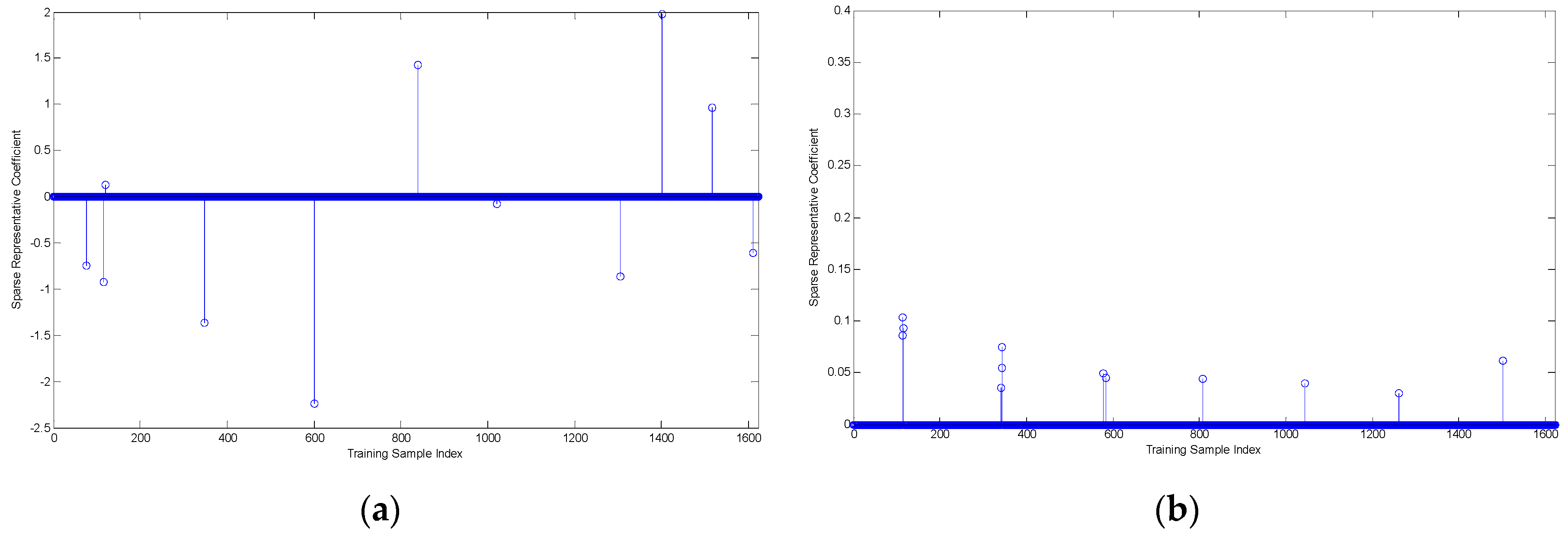

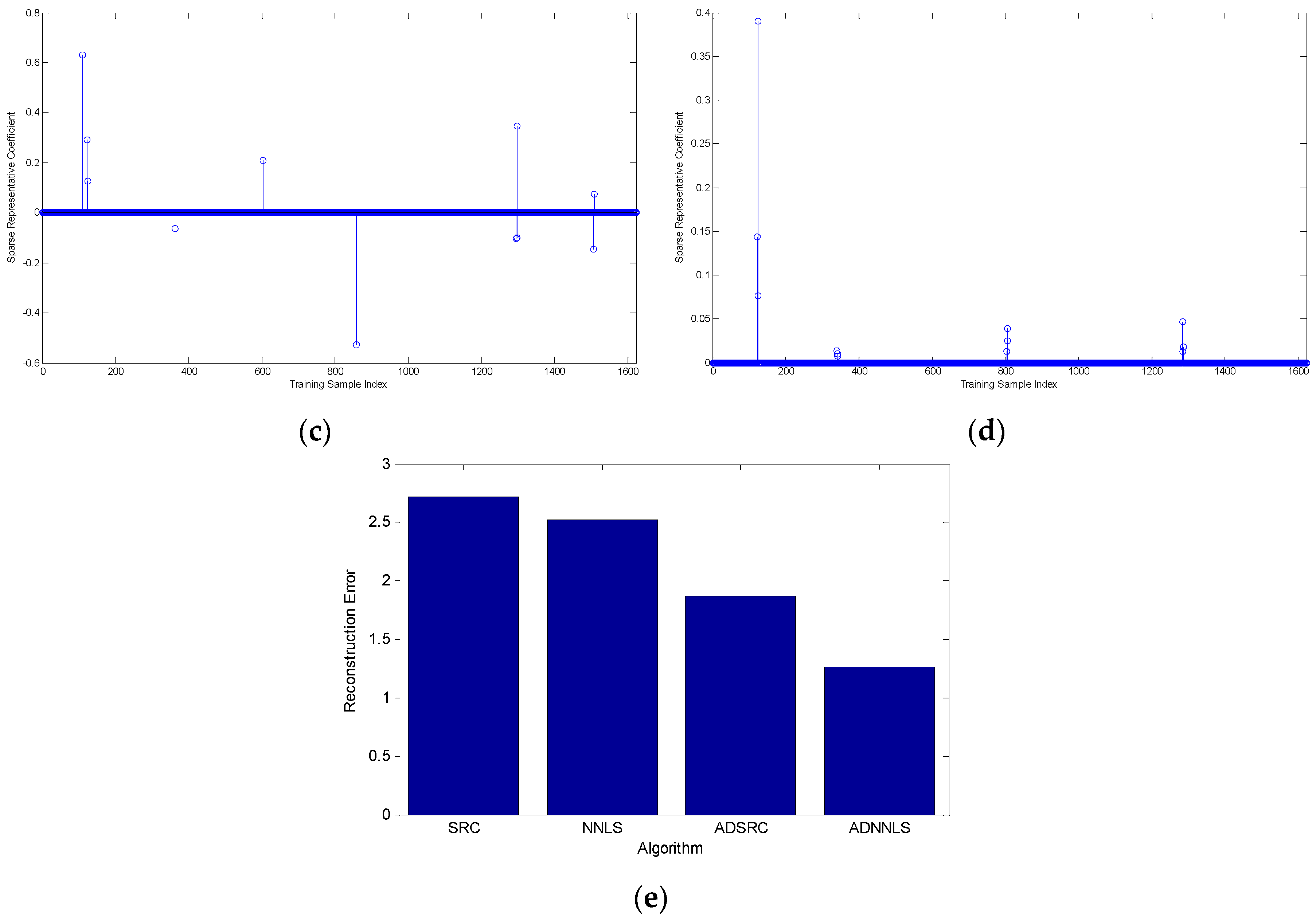

4.2. Classification Results

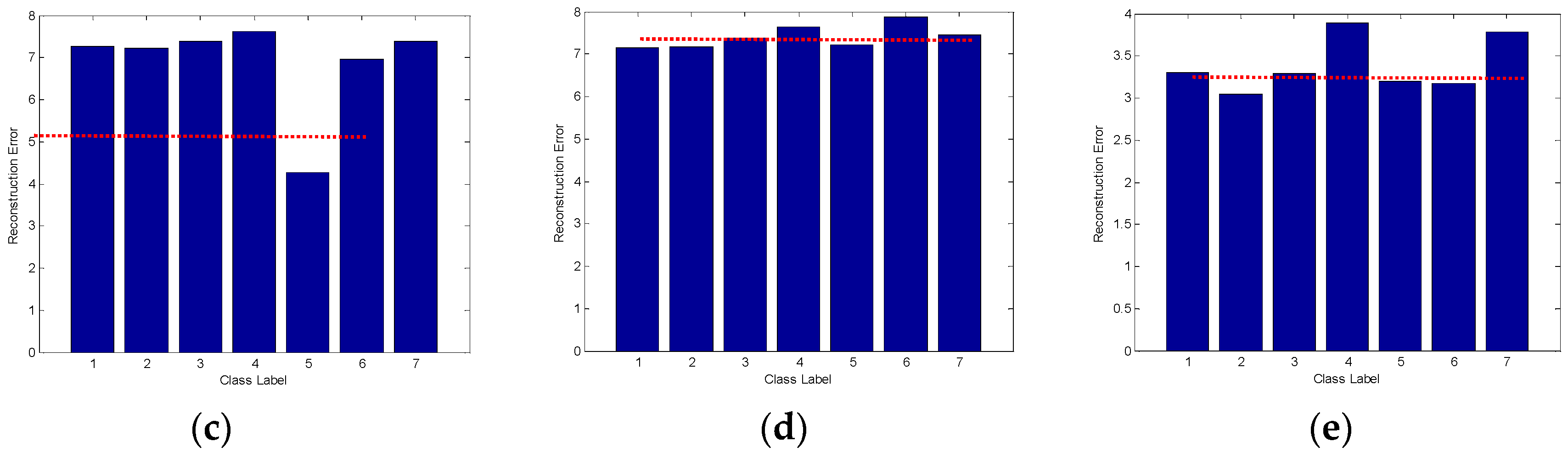

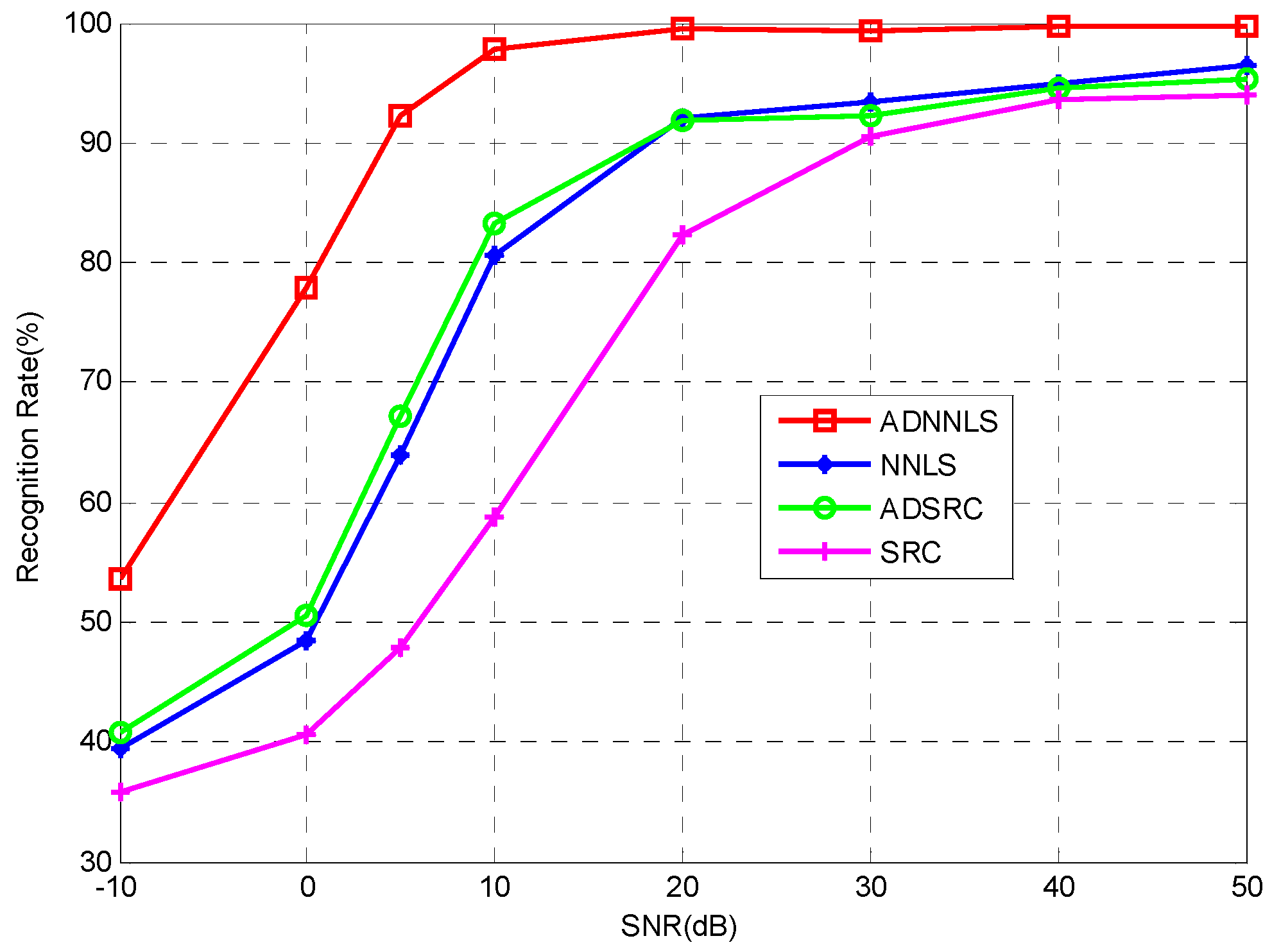

4.3. Robustness to Noise

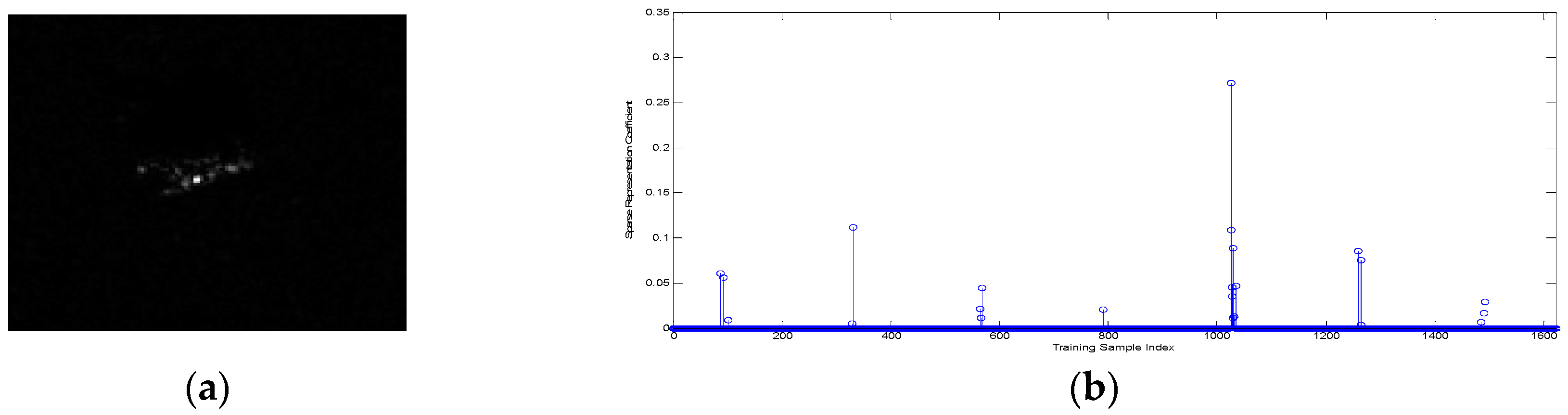

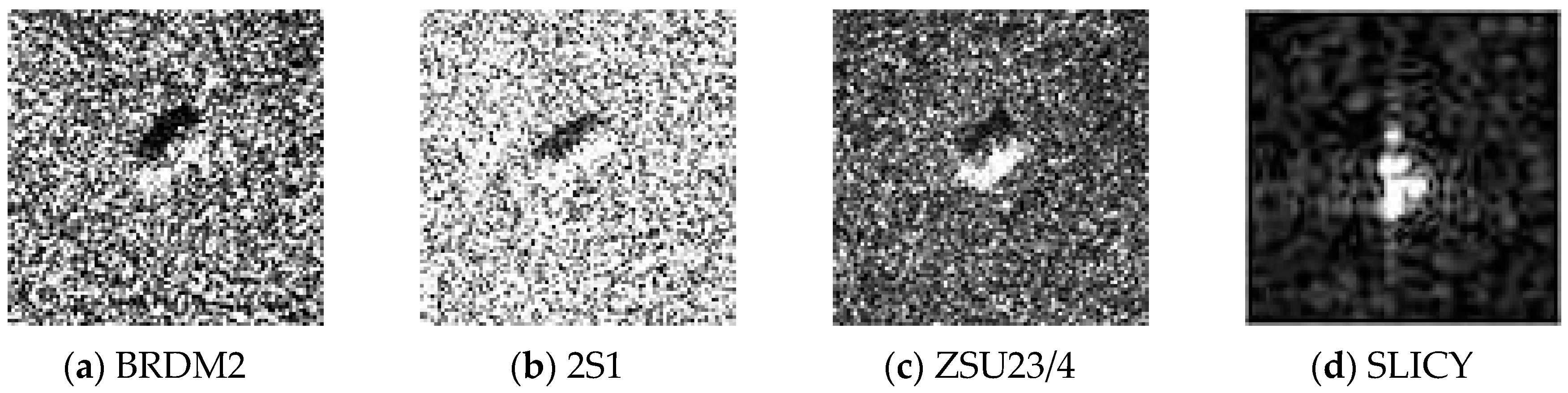

4.4. Experiments Conducted with Depression Angle Variations

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Target No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Training sample type (17°) | BMP2 | BMP2 | BMP2 | BTR70 | T72 | T72 | T72 |
| Training serial number | sn-9563 | sn-9566 | sn-c21 | sn-c71 | sn-132 | sn-812 | sn-s7 |
| Number of training samples | 233 | 232 | 233 | 233 | 232 | 231 | 228 |
| Testing sample type (15°) | BMP2 | BMP2 | BMP2 | BTR70 | T72 | T72 | T72 |
| Testing serial number | sn-9563 | sn-9566 | sn-c21 | sn-c71 | sn-132 | sn-812 | sn-s7 |
| Number of testing samples | 195 | 196 | 196 | 196 | 196 | 195 | 191 |

| Data set (depression angle) | BRDM2 | 2S1 | ZSU234 | SLICY |
|---|---|---|---|---|
| Training Set (17°) | 298 | 299 | 299 | 298 |
| Testing Set (30°) | 298 | 288 | 288 | 288 |
| Testing Set (45°) | 298 | 303 | 303 | 303 |

| Depression angle | SRC | NNLS | ADSRC | ADNNLS |
|---|---|---|---|---|
| 30° | 84.41% | 84.71% | 93.40% | 96.44% |
| 45° | 59.82% | 65.10% | 77.81% | 79.21% |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Yang, Q.; Liu, M.; Jia, Y.; Liu, S.; Li, G. Aspect-Aided Dynamic Non-Negative Sparse Representation-Based Microwave Image Classification. Sensors 2016, 16, 1413. https://doi.org/10.3390/s16091413
