Abstract

In recent years, surface electromyography (sEMG) signals have been effectively applied in various fields such as control interfaces, prosthetics, and rehabilitation. We propose a method for estimating neck rotation from EMG and apply the estimate as a game control interface that can be used by people with disabilities or patients with functional impairment of the upper limb. This paper uses an equation-based estimation and a machine learning model to translate the signals into corresponding neck rotations. For testing, we designed two custom game scenes in Unity 3D, a dynamic 1D object interception and a 2D maze, to be controlled by the sEMG signal in real time. Twenty-two (22) test subjects (mean age 27.95, std 13.24) participated in the experiment to verify the usability of the interface. In object interception, subjects showed stable control, intercepting more than 73% of the objects. In the 2D maze, male and female subjects recorded completion times of 98.84 ± 50.2 s and 112.75 ± 44.2 s, respectively, with no significant difference in means under a one-way ANOVA (p = 0.519). The results confirmed the usefulness of neck sEMG of the sternocleidomastoid (SCM) as a control interface with little or no calibration required. The equation-based control model offers intuitive direction and speed control, while the machine learning scheme offers more stable directional control. Such control interfaces can be applied in several areas that involve neck activities, e.g., robot control and rehabilitation, as well as game interfaces, to enable entertainment for people with disabilities.

1. Introduction

Video games have evolved into a more sophisticated ecosystem due to the advancement of technology in the 21st century. Their integration into society is also on an upward trend. Games come in all forms and shapes, ranging from smartphones to dedicated gaming hardware and consoles. Edutainment, development games, and children’s games are some of the forms of integration that exist to date [1,2,3]. In general, games have proven to be a central point of engagement, socialization, and connectivity in students’ lives [3,4]. Despite these developments, people with disabilities have not been adequately served by the industry. Accessibility of video games is a particular challenge for people with disabilities because the control interfaces consist primarily of the gamepad, keyboard, and touch panel.

The control interface for people with disabilities is a significant issue that is multifaceted and varies with the user. The main challenge stems from the fact that different individuals have different needs and abilities even within the same spectrum of disability [5]. In an attempt for inclusivity, the game industry is customizing controls to make them disabled-accessible, i.e., commercially available game interfaces are customized/adapted to the current user needs [6]. A game enthusiast has taken advantage of the modification in the past with tremendous results targeting the face and tongue [7,8]. Despite its success with pro-gamers, an interface that is usable by casual users without requiring overly complex customization is highly desirable.

The development of an access control interface for people with disabilities with intuitive control mechanisms is needed to improve the quality of life of the target group. Several approaches have been employed in the research area, ranging from modifications in the input system to new body controls. The most frequently reported approaches use biopotential signals such as Electromyogram (EMG) [9], Electrooculogram (EOG) [10], Electroencephalogram (EEG) [11], and body sways [12,13].

The non-intrusive nature of sEMG and its ease of modulation contribute considerably to its wide use in research. For the same reason, sEMG has been integrated into daily activities ranging from prosthetics [14], robotic control [15,16,17,18], and wheelchair control [19,20], among others. In addition, EMG has been used for serious game control, referred to as exergaming [21,22,23]; the general premise of EMG-controlled games is that motor skills acquired through them will translate into useful skills, such as rehabilitation or prosthetic control.

A method in [24] investigated how exergaming improves motor skills and whether there is a transfer of skills over time. The authors reported in-game adaptability and accuracy with minimal or no translation from games to daily activities. A similar method was reported in [23], where the authors developed a system to facilitate rehabilitation exercises to recover patients. The target of the game was to increase motivation and performance and to quantify measurable progress. Researchers have found that exergaming can improve exercise adherence, engage participants, sustain motivation, and result in other benefits [25,26,27,28].

In this paper, we propose to utilize the neck EMG of the left and right sternocleidomastoid (SCM) muscles and the facial EMG of the Masseter muscle to develop a Human-Machine Interface (HMI) for game control. Scientists in different fields have been using SCM EMG to estimate its various responses and applications [29,30,31]. The masseter has been touted as one of the strongest muscles in the human body, given its anatomical purpose in jaw movement [32], and, coupled with the fact that upper body muscles are not affected even in spinal cord injury (SCI), the combination forms a good source of HMI for disabled patients [33,34].

EMG of the neck and face have been used in previous studies as control inputs. In [34], Williams et al. applied EMG of the neck muscle and head turns in an attempt to restore cursor control to patients with tetraplegia. The target of the study was computer-controlled recovery for patients with SCI. They used head orientation and EMG of the facial and neck muscles—users turned their heads or altered muscle contractions to change the position of the computer cursor. The authors further expanded the research into robotic control. In this case, the EMG signal from the neck was used as the input source for the 3D robot command [18]. Other research has also attempted to confirm the usability of neck EMG in feedback and control recovery for disabled individuals.

Game controller interfaces for people with disabilities in the literature focusing on motor impairments can be categorized into remapping/modification of control inputs and development of alternative controls. In remapping, the target is to remodel or upgrade the standard joystick using software or additional hardware to suit user requirements [35]. Alternative controllers focus on new input signals for analysis. These include, but are not limited to, voice commands, mouth, vision-based gestures [36], and bio-signals [37,38,39,40,41].

EMG as a control interface for games can be categorized by degrees of freedom (DoF) or control dimensions [42]: single click (e.g., a firing command) [21,23,43], 1 DoF (e.g., left and right movement) [26,27], 2 DoF (e.g., left, right, up, and down movement) [14], or 3 or more DoF (input combination).

This paper is a continuation of a previously published article [44], in which we explored the implementation of control interfaces in 1D. This work reports on further developments, notably the generalization of trained machine learning (ML) models and multi-dimensional (2D) control achieved by incorporating additional EMG sensors. Target users are individuals with amelia or amputees, who can use the system as a control scheme or an entertainment interface. With a focus on biofeedback, users can control custom-made games to the desired effect. The custom game is designed in the Unity 3D game engine to ensure control over the scene design. We modeled the relationship between neck rotation and EMG using an equation and an ML approach and applied the results to the game biofeedback interface.

The remainder of this paper is subdivided as follows: Section 2 describes the materials used, the design of the game scene, and evaluation methods; Section 3 shows the verification of control performance and the results obtained; Section 4 discusses the results; and Section 5 is the conclusion drawn from the paper.

2. Materials and Methods

2.1. Setup and Data Acquisition

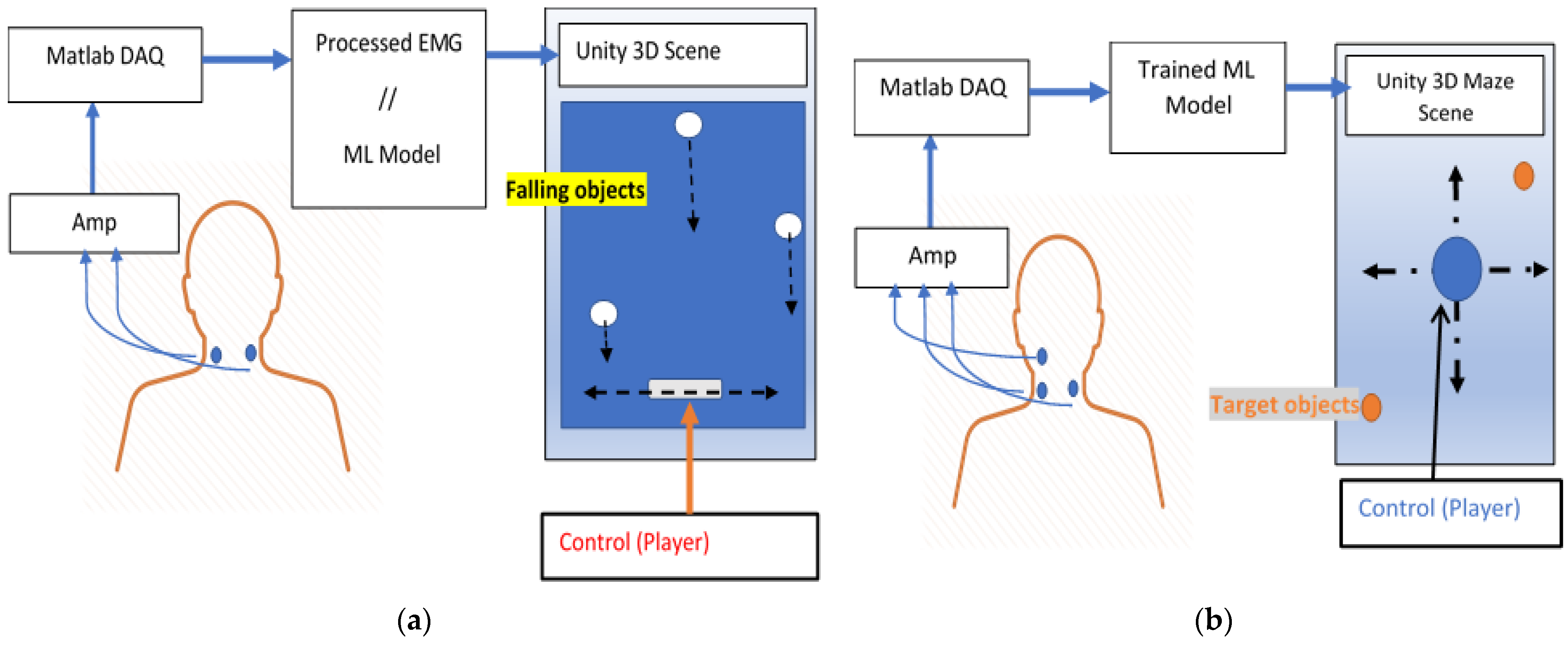

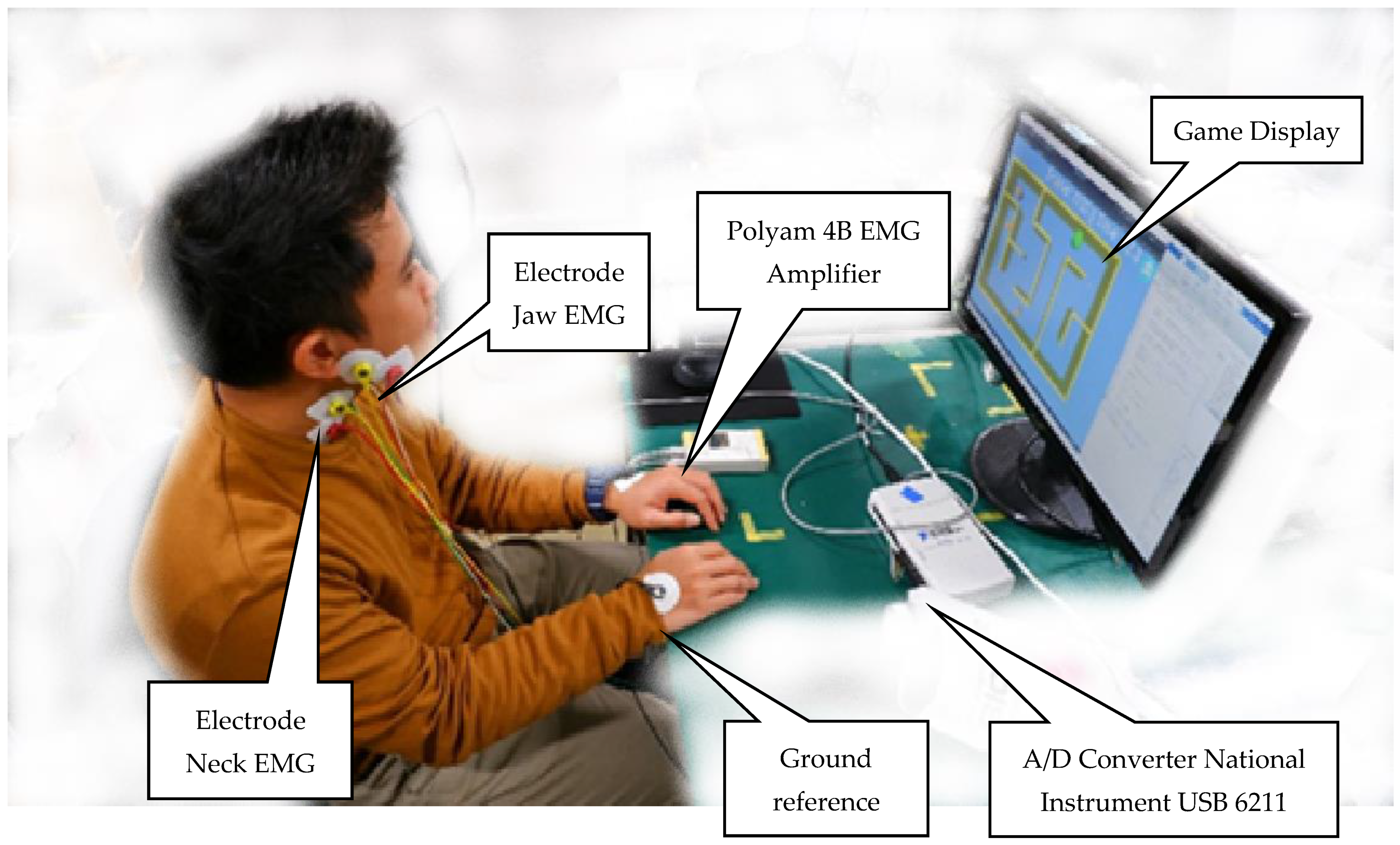

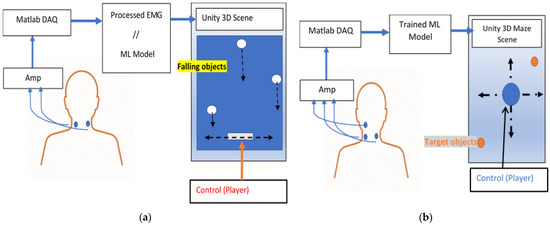

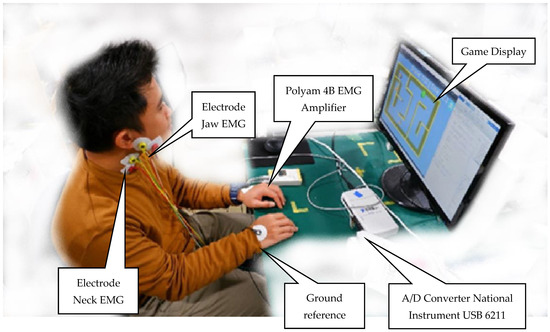

The setup for experiment one is shown in Figure 1a. The target neck muscles were the left and right sternocleidomastoid (SCM), recorded with gel-based adhesive electrodes (Biorode SDC-H®, Sekisui Plastics Co. Ltd., Tokyo, Japan). The recorded EMG was amplified using polyam4B and digitized at a sampling rate of 2000 Hz using a National Instruments USB-6211, a 16-bit, 250 kS/s multifunction I/O device. Data were recorded using the Data Acquisition Toolbox 4.0 in MATLAB. The setup for experiment two is shown in Figure 1b. In this case, the target muscles were the left and right SCM and the Masseter. The same data acquisition protocol was followed, but with three channels instead of two. The system ran on a laptop with Windows 10, 16 GB RAM, and an NVIDIA GTX 1060 graphics card, using MATLAB 2019a and Unity 3D version 2019.4.12.

Figure 1.

Data acquisition and game concept: (a) experiment one; (b) experiment two.

The raw EMG is integrated and smoothed with a moving-average FIR filter to generate the processed signal. In the case of the ML model, the trained model is used for inference to classify the selected direction. The user sits in front of the PC monitor at a comfortable distance (approximately 0.5 m). The game requires the user to maintain visual contact with the game scene, so head rotation stays within a normal range with little or no strain.
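The processing step above can be sketched as follows. This is a minimal illustration, assuming that "integrated" means full-wave rectification followed by averaging; the function name `process_emg` and the default filter length are hypothetical and are not taken from the original MATLAB implementation.

```python
from collections import deque


def process_emg(raw, window=60):
    """Rectify raw EMG and smooth it with a moving-average FIR filter.

    `window` is the filter length in samples (a hypothetical default).
    Returns one smoothed value per input sample; the first few outputs
    average over fewer samples while the buffer fills.
    """
    buf = deque(maxlen=window)  # most recent rectified samples
    total = 0.0                 # running sum of the buffer contents
    out = []
    for x in raw:
        rectified = abs(x)
        if len(buf) == window:
            total -= buf[0]     # oldest sample is dropped by the append below
        buf.append(rectified)
        total += rectified
        out.append(total / len(buf))
    return out
```

A running sum keeps the cost per sample constant, which matters when the filter runs inside a real-time loop.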

2.2. Unity3D Engine Game Development

In this paper, we use the Unity 3D game engine to develop the scenes for experiments one and two. Unity 3D has a built-in physics system that is used to detect the interaction/collision of game objects. The player game object is controlled in real time by command input from the processed EMG or from the ML model running in MATLAB. Connectivity between MATLAB and Unity 3D is handled by either serial communication or TCP/IP over localhost. The update rate (communication speed) is limited by the game update function, which runs at 70 to 100 frames per second (fps).

2.2.1. Experiment One: Intercepting Game

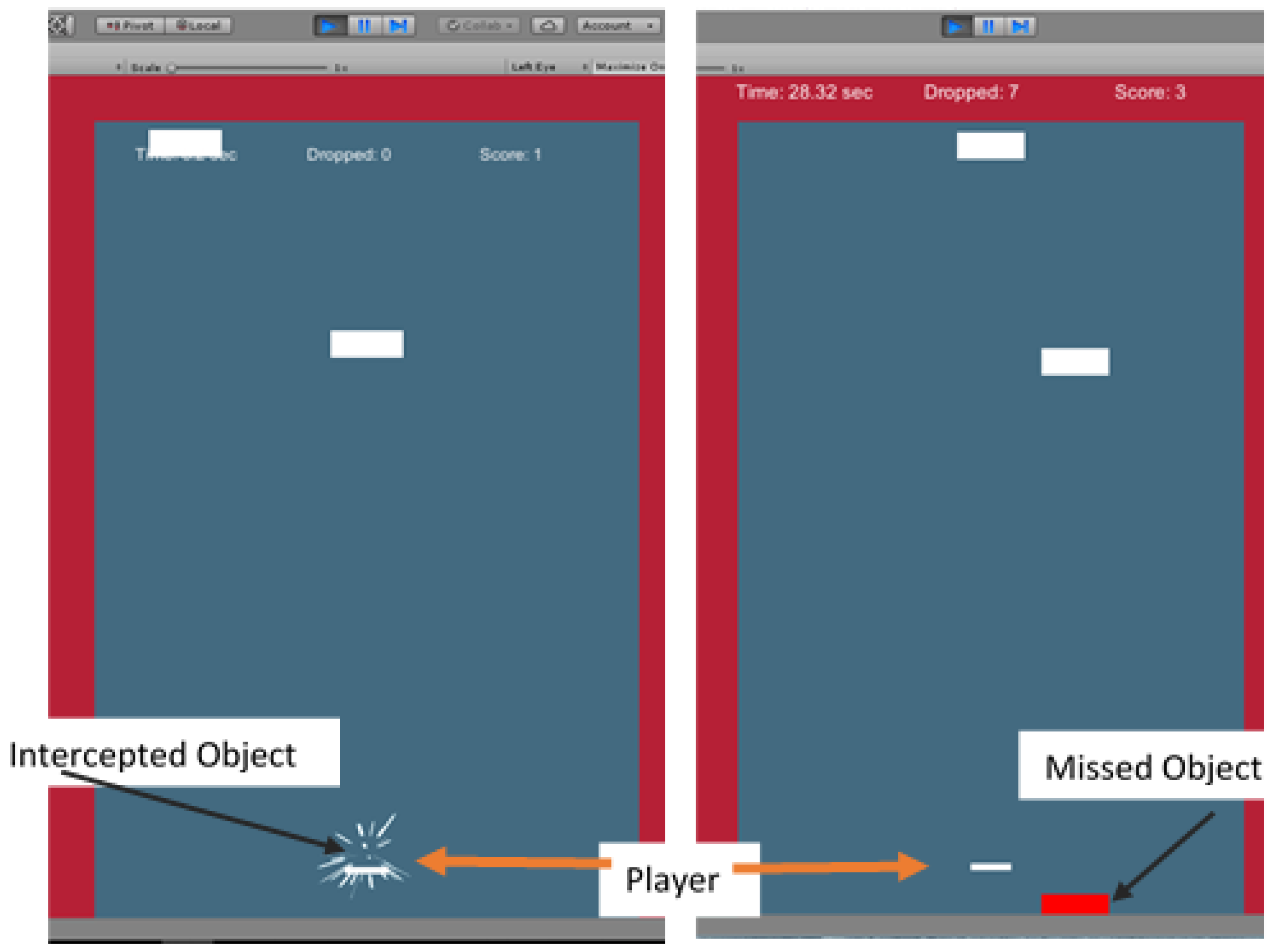

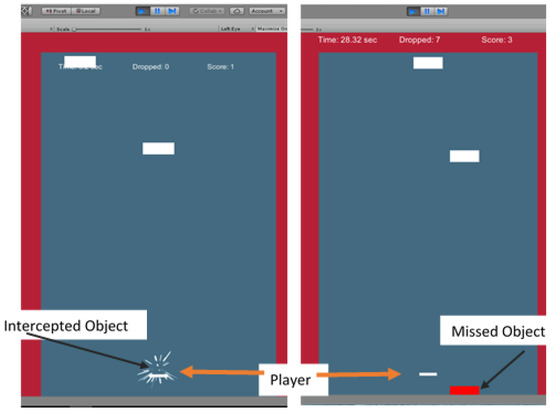

The game is set up as illustrated in Figure 1a, and an example scene is shown in Figure 2. The player is placed at the bottom of the scene and can move left and right (x-axis), referred to as 1D movement. The objects to be intercepted appear at the top of the scene at predefined positions, in random order, at intervals of 2 s, which ensures comparability between subjects.

Figure 2.

Example scenes of experiment one (intercepting game) showing intercepted and missed objects.

The object that appears moves downwards at a constant speed due to gravity. If the player intercepts an object, a score is recorded, a visual particle effect is displayed, and the object is destroyed. If the object is not intercepted, the distance from the object to the player is recorded for further analysis, and a color change (particle effect) is displayed to mark the missed object. In this setup, difficulty is controlled through the rate at which objects appear. The total number of objects intercepted (score) and the time are displayed in the game, as shown in Figure 2. All users play the game until the set of dropped objects is exhausted (in this case, 5 drops).

2.2.2. Experiment Two: Maze Game

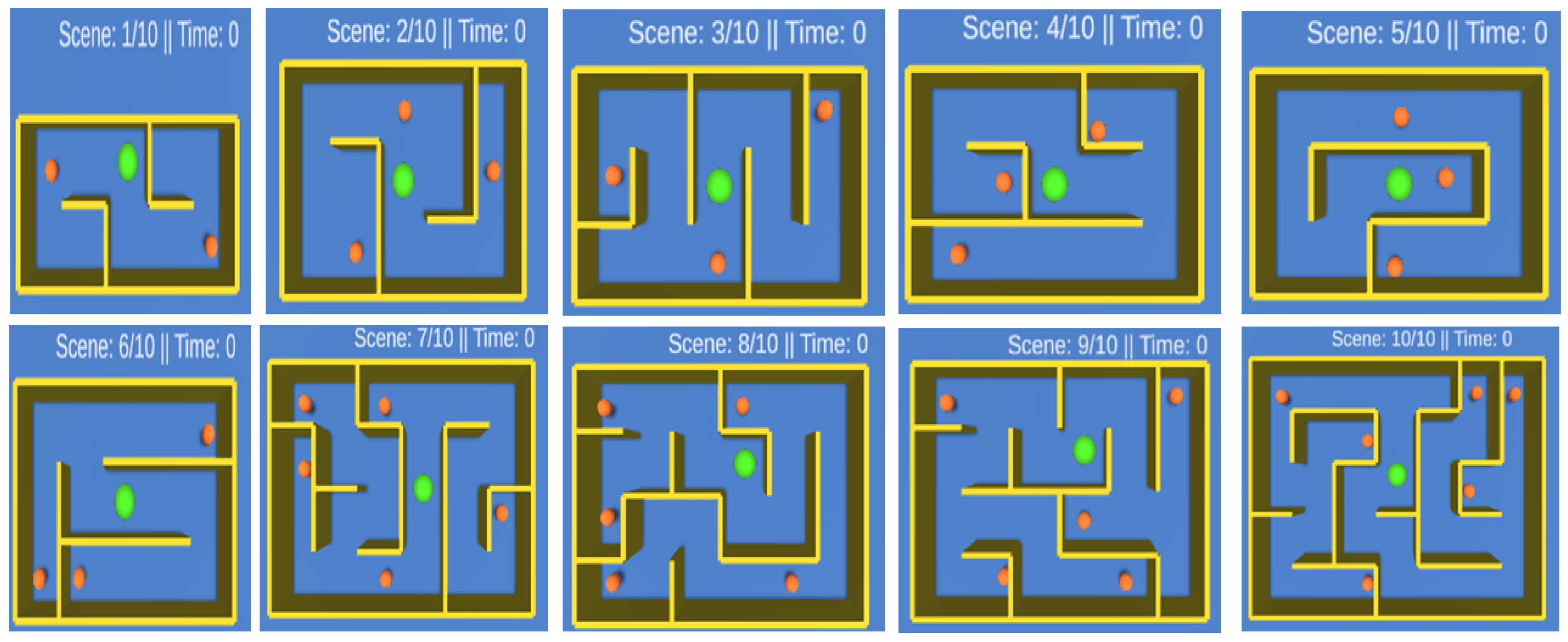

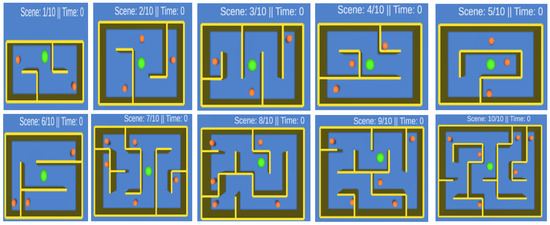

The game mechanism and setup for experiment two are shown in Figure 1b. Figure 3 shows user interaction with the game scene in experiment two. In this case, the user moves the player to collect all the targets in the maze scene. The maze scenes were developed using the procedural scene generation program described in [45]. For consistency between test subjects, we selected 10 scenes, as shown in Figure 4, with various target objects and navigational obstacles. In each scene, the player starts in the center of the game and can move in all four directions, i.e., right, left, up, and down (x- and y-axis). The preparatory scene (scene 0) was used to confirm accurate input commands from the bite and neck EMG.

Figure 3.

Test subject settings showing electrode positions for neck, jaw EMG, and ground reference (hand). The user sits comfortably in front of a PC monitor displaying the game. They rotate the head left and right to achieve left-right player control and bite action for top-down controls. The objective is to collect all the target objects.

Figure 4.

The maze scene used in the setup. When all the target objects are intercepted, a csv file is created, logging the time taken and the player trajectory. Then the next scene is loaded.

A scene starts with the time at zero. The user steers the player towards the targets (illustrated by a video clip in the attachment). When the player collides with a target, a hit (intercept) is recorded, and the intercepted object is destroyed. When all targets are destroyed, the next scene is loaded. The time taken and the position log of the player are recorded for all moves for further analysis.

Each scene is designed with a random number of turns along both the horizontal (x-axis) and vertical (y-axis) axes. A turn is considered a continuous trajectory until a change of direction is required. For example, to complete level 1 (scene 1), the user can move the player (cursor) to the left to collect the first target before moving the player right to the original position. The user then moves the cursor down, followed by a left movement to collect a second target. In total, a minimum of 3 turns on the horizontal axis (h = 3) and 1 turn on the vertical axis (v = 1) are needed to complete this scene.

The number of turns in each scene is recorded and used as an analytical metric in the results section. The trajectory length, taken as the sum of distance traveled in the x and y-axis, is also used in the analysis. Similarly, command input and completion time are logged for further analysis as evaluation parameters.
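The two trajectory metrics described above can be sketched as follows. This is an illustrative reading, assuming the position log is a list of `(x, y)` tuples and that each logged step moves along one axis only; the function names are hypothetical, and a diagonal step would be counted as horizontal here.

```python
def trajectory_length(positions):
    """Sum of the distances travelled along the x- and y-axes."""
    return sum(
        abs(x1 - x0) + abs(y1 - y0)
        for (x0, y0), (x1, y1) in zip(positions, positions[1:])
    )


def count_turns(positions):
    """Count horizontal (h) and vertical (v) turns in a logged trajectory.

    A turn is a maximal run of consecutive moves in the same direction.
    """
    h = v = 0
    previous = None
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        dx, dy = x1 - x0, y1 - y0
        if dx == 0 and dy == 0:
            continue  # no movement: the current turn continues
        if dx != 0:
            direction = ("x", 1 if dx > 0 else -1)
        else:
            direction = ("y", 1 if dy > 0 else -1)
        if direction != previous:
            if direction[0] == "x":
                h += 1
            else:
                v += 1
            previous = direction
    return h, v


# Scene 1 as described in the text: left, back right, down, then left.
scene1 = [(0, 0), (-1, 0), (-2, 0), (-1, 0), (0, 0), (0, -1), (-1, -1)]
h_turns, v_turns = count_turns(scene1)
```

For the scene 1 path described in the text, this yields h = 3 and v = 1, matching the stated minimum.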

2.3. Head Rotation Estimation

The success of the experiment depends largely on accurate estimation of head rotation. EMG has been used in previous work to estimate rotation. In this paper, we focus on two methods: an equation model and an ML approach. The literature on real-time EMG control recommends a maximum latency of 300 ms [46]. In our method, raw EMG was recorded by the DAQ with window sizes of 30 and 50 ms for the equation and ML models, respectively, corresponding to 60–100 samples of the EMG sequence at a sampling rate of 2000 Hz. These values were found to satisfy the real-time system requirements.

2.3.1. Equation Estimation

Due to the symmetrical and/or near symmetry of the neck motion, rotation produces a counterclockwise (quasi-tension) effect on the EMG data, and the EMG signal is minimal when not rotating. According to a derivation in [43], the estimation of the ith neck angle can be calculated by taking the difference between the processed EMG of left and right SCM muscles as described in (1). In this case, X1 and X2 are the processed EMGs of the left and right SCM, respectively.
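As a rough illustration of the difference form described above, the sketch below computes one estimate per sample from the processed left and right SCM signals. The scaling factor `gain` and the sign convention (left minus right) are assumptions made for the example only; they are not taken from [43] or from the original implementation.

```python
def estimate_rotation(left_emg, right_emg, gain=1.0):
    """Difference-based estimate: theta_est[i] = gain * (X1[i] - X2[i]).

    `left_emg` (X1) and `right_emg` (X2) are processed EMG sequences of
    equal length. `gain` is a hypothetical scaling factor.
    """
    return [gain * (x1 - x2) for x1, x2 in zip(left_emg, right_emg)]
```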

where θest is the head rotation. We proposed an alternative model, as expressed in (2). The advantage of the proposed model is to point out the inverse relationship that exists between left and right SCM with head rotation. Further details can be found in [44]. Changing the position of the player is carried out by

2.3.2. Machine Learning (ML) Estimation

We explored an ML approach for classifying left-right neck rotation as an alternative to the equation model. In the previous experiment, the angle estimated by the equation model was found to be erratic, requiring computationally expensive filters to obtain smooth signals in real time. Given the required performance, we targeted an ML model that was pre-trained with data from one or two subjects and generalized to all test subjects. In this way, setup is reduced to connecting the EMG electrodes in a plug-and-play manner.

For training, data from 2 subjects were recorded while they performed left and right neck movements for 2.5 min per subject. The data were subdivided into processing windows (50 ms), and the corresponding classes were defined from visual observation. Three classes were used: left turn, right turn, and center. The total data generated for training by the two subjects in 5 min at a sampling rate of 2000 Hz is (2000 × 5 × 60) = 600,000 samples per channel. After window subdivision, we have 6000 labeled training sequences per channel.
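The sample and window counts above can be checked with a short calculation. The sketch assumes non-overlapping windows, which is what the stated count of 6000 implies; the helper names are hypothetical.

```python
def samples_per_window(window_ms, fs_hz):
    """Number of samples in a window of `window_ms` milliseconds."""
    return window_ms * fs_hz // 1000


def split_windows(signal, window_len):
    """Split a sequence into non-overlapping windows, dropping any remainder."""
    count = len(signal) // window_len
    return [signal[i * window_len:(i + 1) * window_len] for i in range(count)]


fs_hz = 2000
total_samples = fs_hz * 5 * 60                 # 5 min of data per channel
window_len = samples_per_window(50, fs_hz)     # 50 ms window
num_windows = total_samples // window_len
```

With these values, `total_samples` is 600,000, `window_len` is 100, and `num_windows` is 6000.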

In this step, we derive the features of the EMG sequence. The five features were mean absolute value, zero crossings, integrated absolute value, waveform length, and band power of the sampled data, in accordance with other classification studies [46]. In total, 10 predictor features (5 features for each SCM signal) were used for classification. We used the Classification Learner app in MATLAB to train on the prepared data set. Three types of ML algorithms, k-Nearest Neighbor (KNN), Support Vector Machine (SVM), and Ensemble, were used [47]. The hyper-parameters for each classifier were initialized with default settings, with fivefold cross-validation. In KNN, the cosine distance metric and the weighted distance method were evaluated. In SVM, linear and Gaussian kernel functions were evaluated. In Ensemble, bagged trees and boosted trees were evaluated.
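A minimal sketch of the five time-domain features for one window is given below. The definitions are the common textbook ones and are assumptions here: zero crossings are counted without a noise threshold, and band power is taken as the mean squared amplitude over the whole band. The original MATLAB definitions may differ.

```python
def emg_features(window):
    """Return [MAV, zero crossings, IAV, waveform length, band power]."""
    n = len(window)
    pairs = list(zip(window, window[1:]))
    iav = sum(abs(x) for x in window)              # integrated absolute value
    mav = iav / n                                  # mean absolute value
    zc = sum(1 for a, b in pairs if a * b < 0)     # sign changes
    wl = sum(abs(b - a) for a, b in pairs)         # waveform length
    bp = sum(x * x for x in window) / n            # average power (assumed)
    return [mav, zc, iav, wl, bp]


def feature_vector(left_window, right_window):
    """Concatenate the features of both SCM channels (10 predictors)."""
    return emg_features(left_window) + emg_features(right_window)
```

Each labeled window then contributes one 10-element vector to the training set.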

The three classifier types differ in their demand for computing resources. The model was selected based on classification accuracy and computation time. The performance of the ML models is validated with the classification accuracy indicators shown in Table 1. Based on this performance, the Ensemble model, which has the best repeat accuracy and response time, was selected.

Table 1.

Machine learning training and performance.

2.4. Participants

Twenty-two (22) healthy subjects took part in this experiment. Subjects were recruited through online invitations as game scene test volunteers. The entire test population had a mean age of 27.95 years (std = 13.24). Two of the participants were minors (10 and 13 years old); their recruitment and data capture were carried out under the guidance of their parents. Of the participants, 5 subjects were randomly selected as test subjects for experiment one, consisting of dynamic scenes, while the rest were assigned to experiment two. This group was further subdivided by gender. Group 1 consisted of 10 male subjects, and group 2 consisted of 7 female subjects. Of these, data from 3 subjects (2 male and 1 female) were discarded due to incorrect data recording. All participants (or guardians in the case of minors) provided written consent following the approval procedures issued by the ethics committee of Gifu University.

In the experiment protocol, experiment one is performed as a proof of concept to validate the control scheme. Subjects in 2D experiments performed EMG-controlled scenes first and keyboard controls (certain individuals) much later to avoid pre-exposure to game mechanics. No skills or game requirements were needed in the selection criteria.

3. Performance Verification and Results

3.1. Performance Verification

3.1.1. Head Rotation Validation

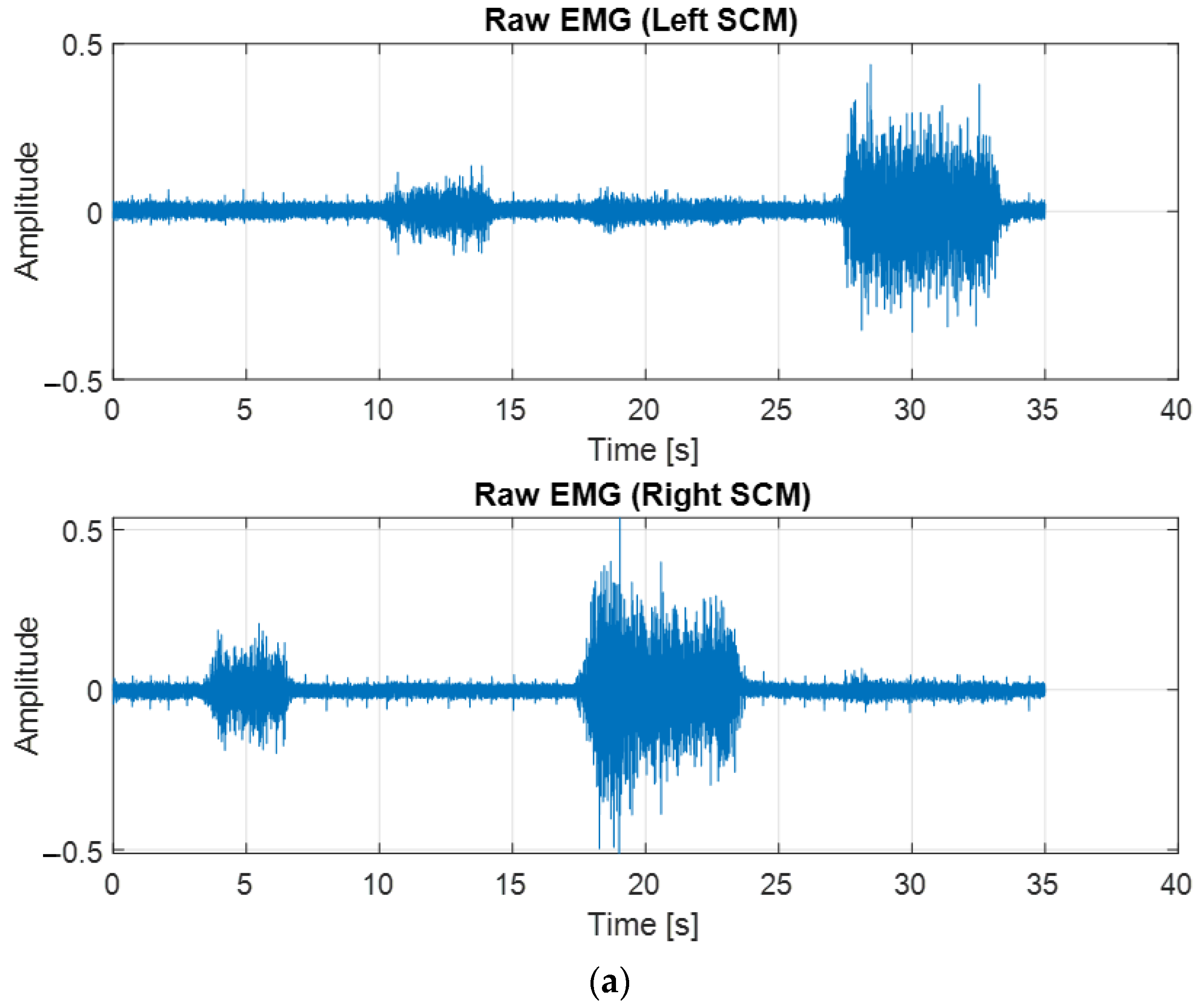

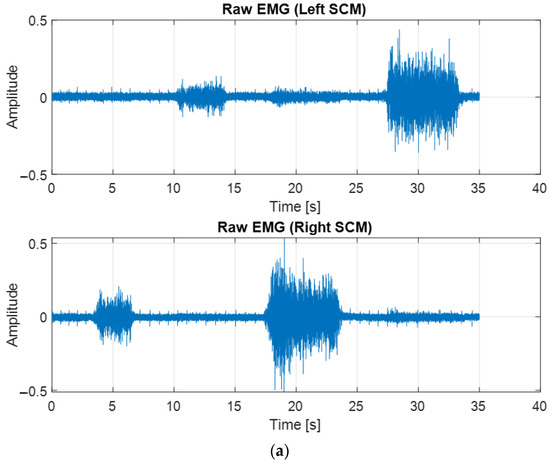

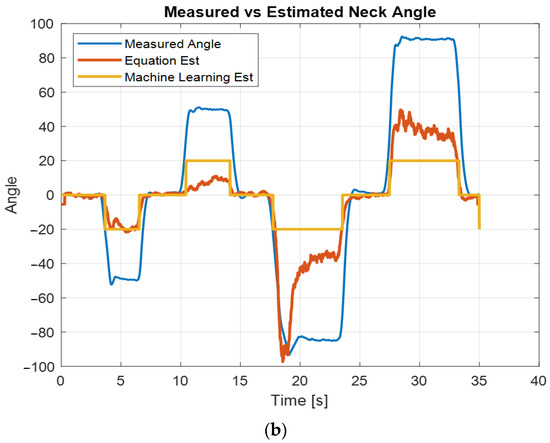

To validate the proposed equation model, we used a motion sensor mounted in a 3D Virtual Reality (3D-VR) Head Mounted Display (HMD) manufactured by FOVE®. The aim was to use the built-in inertial measurement unit (IMU) as a reference. Similar results would be achieved with an IMU attached to the head. We designed a simple scene with equidistant objects placed on the left and right sides of the virtual scene and a reference point in the center. The user turned their head to view each object sequentially from the center (reference point), holding for at least 1 s at each point in the VR environment. Figure 5a shows the raw neck EMG signal generated when turning the head in the VR environment. Two left turns and two right turns were performed (weak and strong neck turns).

Figure 5.

Validation results of the estimated head (neck) rotation using machine learning and equation models: (a) raw EMG for left and right neck turns; and (b) the corresponding estimation of neck rotation using equation and ML.

Figure 5b shows the estimation results using the equation and the ML model. The ML model output (yellow line), which takes one of three class values (1, 0, and −1), is scaled by a factor of twenty-five for visibility. When the signal is weak, as in the first two turns (Figure 5b between 3–15 s), the equation model reports inconsistent estimates due to the stochasticity of the resulting EMG. This is also the case on a strong neck turn, where the initial estimate reached a value of less than −100 before settling (around −40). The ML model, on the other hand, delivers consistent output regardless of differences in signal strength.

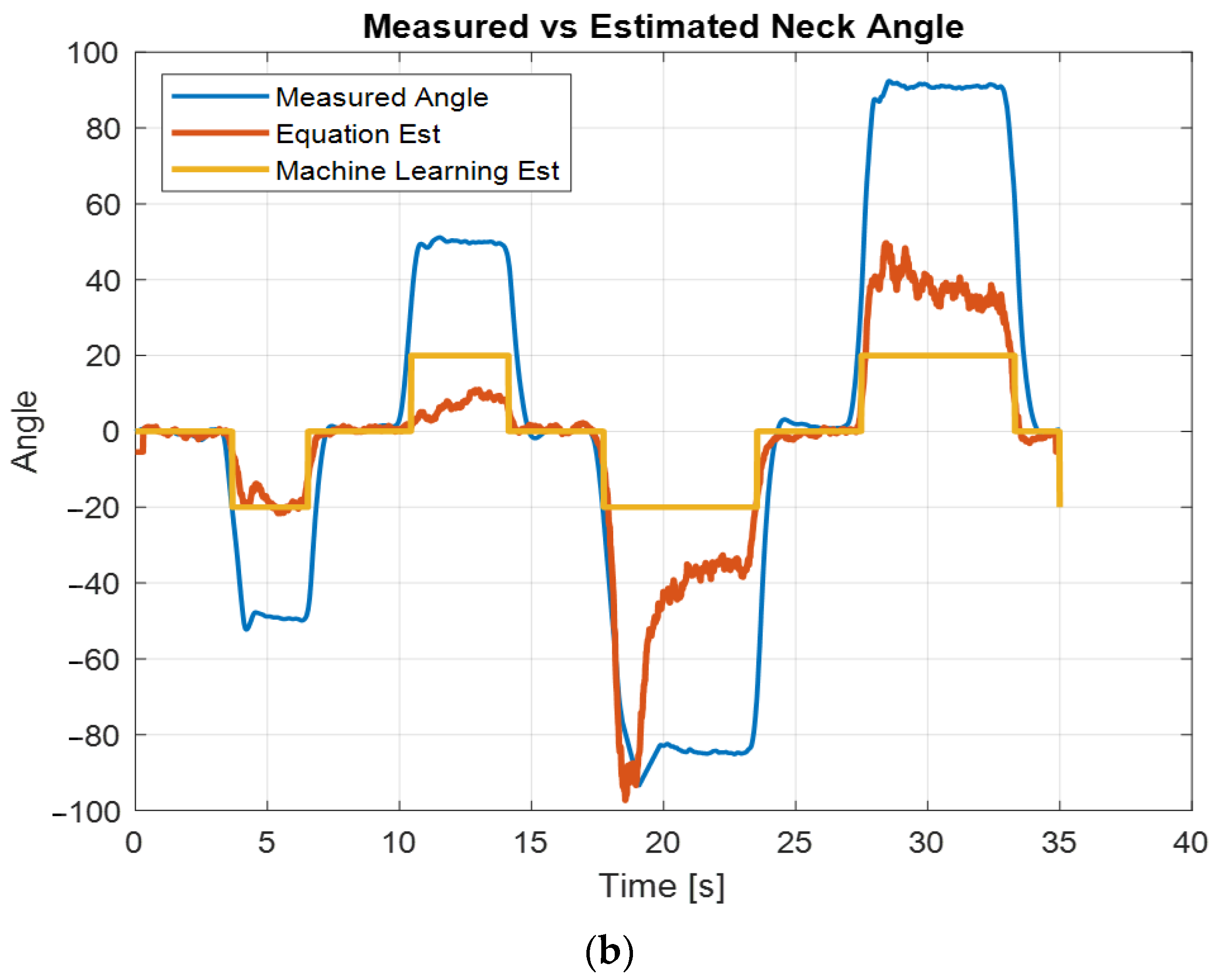

3.1.2. Bite EMG

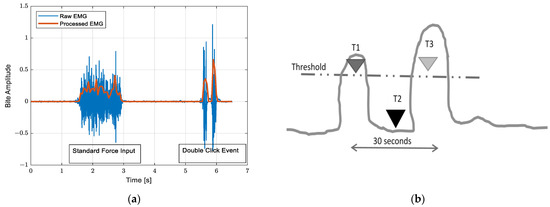

The setup proposed in this research uses three input channels to implement four-directional control. The bite EMG was therefore further processed to signal a change in direction during movement. The mechanism is similar to a double-click in computer terminology. The bite EMG was scanned for a double-click event, shown in Figure 6. If no event occurs, the bite EMG is used as a standard input signal to move up or down, depending on the selected direction.

Figure 6.

Bite EMG for standard input and double-click event showing sequence of trigger T1, T2, and T3: (a) raw and processed bite EMG; and (b) double-click event.

A double-click event registers if the signal crosses a first threshold (T1), rests (T2), and then crosses a second threshold (T3) within the specified time. In this case, we use a duration of 30 s to complete one cycle. These are shown in Figure 6b as T1, T2, and T3.
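The T1, T2, T3 sequence can be sketched as a small state machine over the processed bite EMG. The function name, the threshold parameters, and the choice to express the time limit in samples are hypothetical; a rest is assumed here to mean the signal falling back to or below the first threshold.

```python
def detect_double_click(signal, thresh1, thresh2, max_samples):
    """Return True if the signal shows T1 (rise above thresh1), T2 (rest),
    and T3 (rise above thresh2) within max_samples of T1."""
    start = None      # sample index at which T1 fired
    rested = False    # True once the rest (T2) has been seen
    prev = None       # previous sample, used for rising-edge detection
    for i, x in enumerate(signal):
        if start is not None and i - start > max_samples:
            start, rested = None, False       # cycle timed out, start over
        if start is None:
            if x > thresh1 and (prev is None or prev <= thresh1):
                start = i                     # T1: rising edge
        elif not rested:
            if x <= thresh1:
                rested = True                 # T2: rest
        elif x > thresh2:
            return True                       # T3: second crossing
        prev = x
    return False
```

Requiring a rising edge for T1 prevents one long, sustained bite from being restarted as a new cycle after a timeout.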

3.2. Results

3.2.1. Experiment One

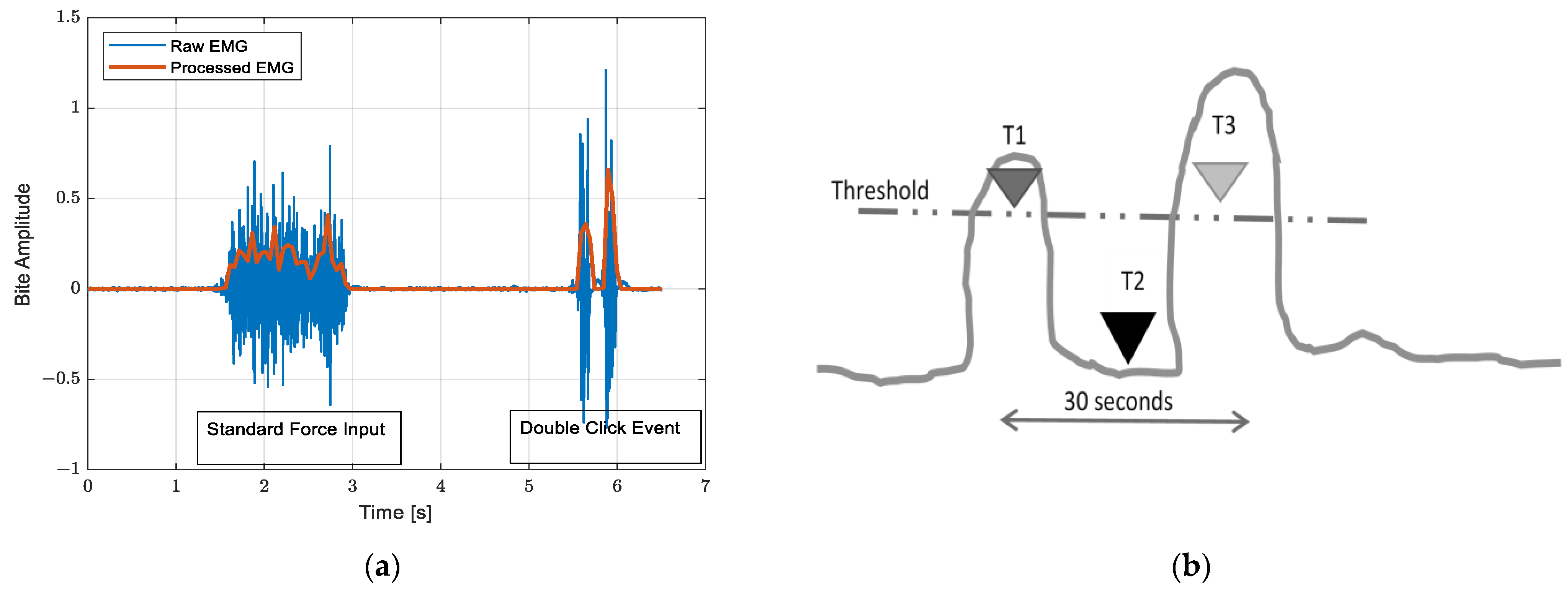

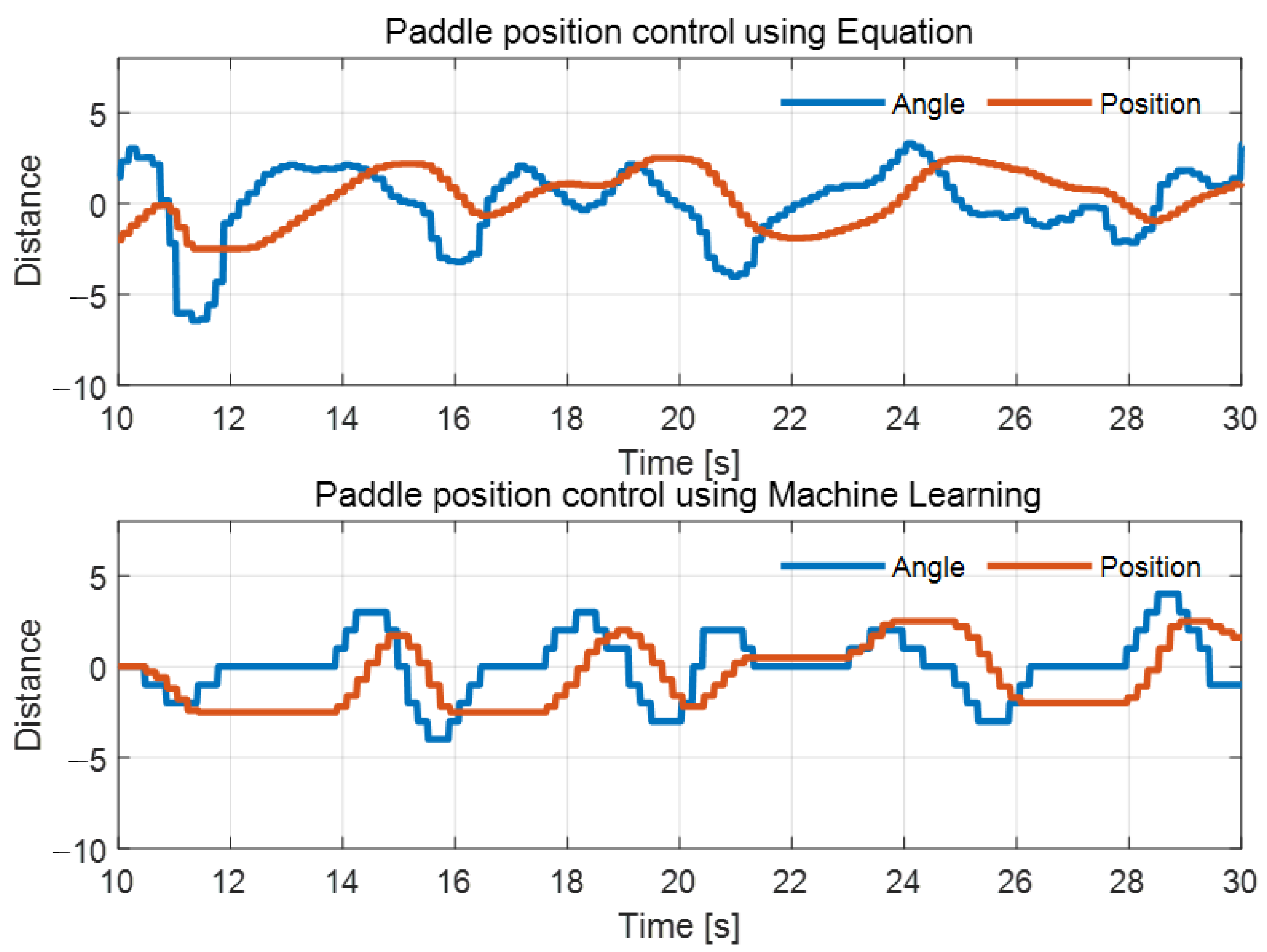

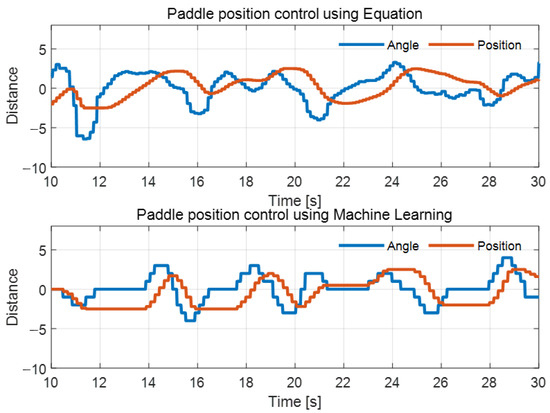

We tested the usability of the system using five subjects. Figure 7 shows the angle input commands (θest) and the corresponding player position along the x-axis. The distance, in this case, is the displacement of the paddle from the center position. The approximate angle of the ML model has been scaled by a factor of five for visibility. We used uniform game difficulty settings and compared the percentages of objects dropped and intercepted.

Figure 7.

Input angle and corresponding paddle position for equation and ML model.

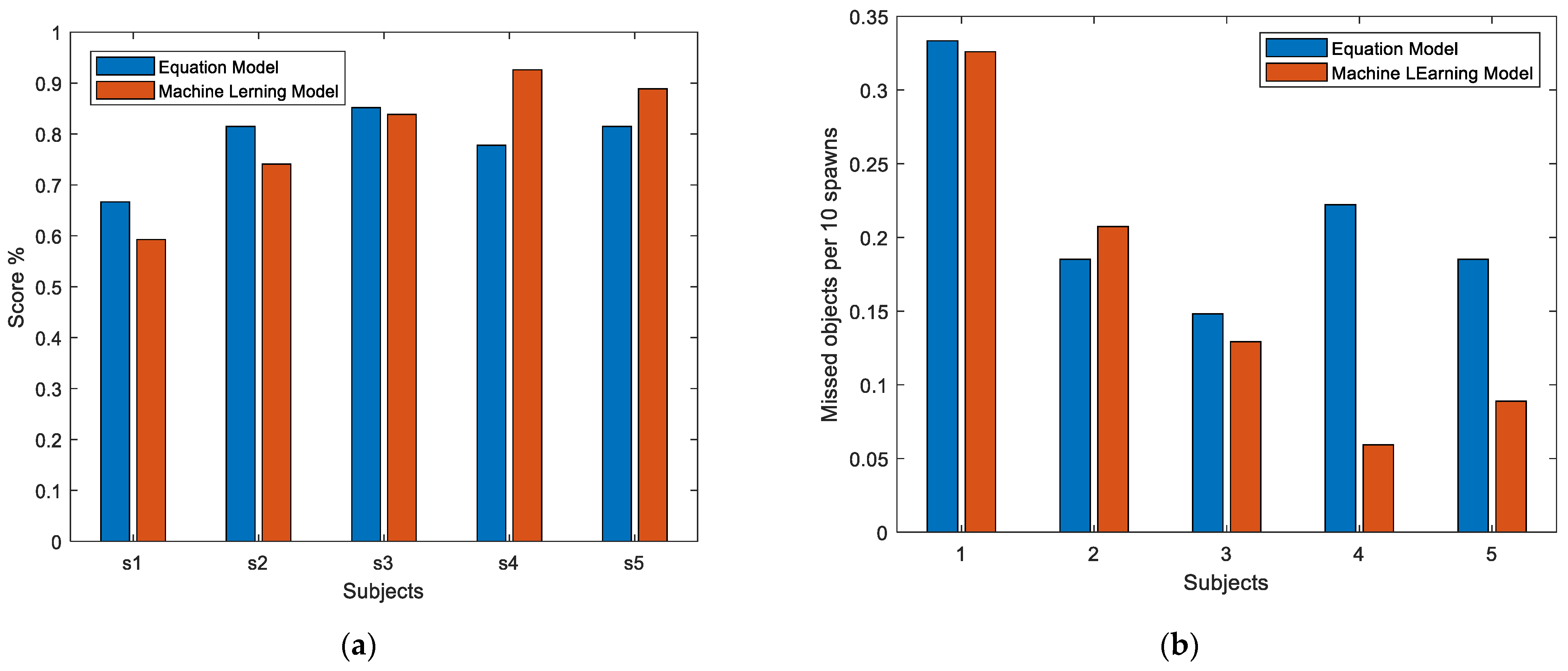

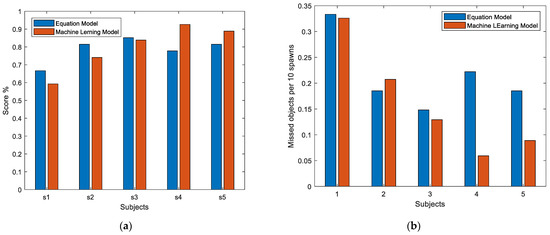

All the subjects reviewed demonstrated accurate game control with little or no training time. Figure 8a shows the scores for the subjects. Users controlled the player with average scores of 75.52% and 79.73% for the equation and the ML model, respectively. This confirmed the ease of use as no subject was previously exposed to the game.

Figure 8.

Game performance metrics showing scores and miss rate of control model for experiment 1 setup: (a) scores (total intercepted objects) for experiment one; and (b) average miss rate.

Figure 8b shows the average miss rate of the two models. As expected, the equation model had a higher number of missed objects compared to the ML model. Previous studies show the equation model to have higher speeds but lower precision. This is because the non-stationarity of θest makes fine-tuned moves difficult to achieve. In other words, the ML model was more stable than the equation model. Based on these results, we opted to focus on the ML model in a 2D environment as described below.

3.2.2. Experiment Two

Completion Time

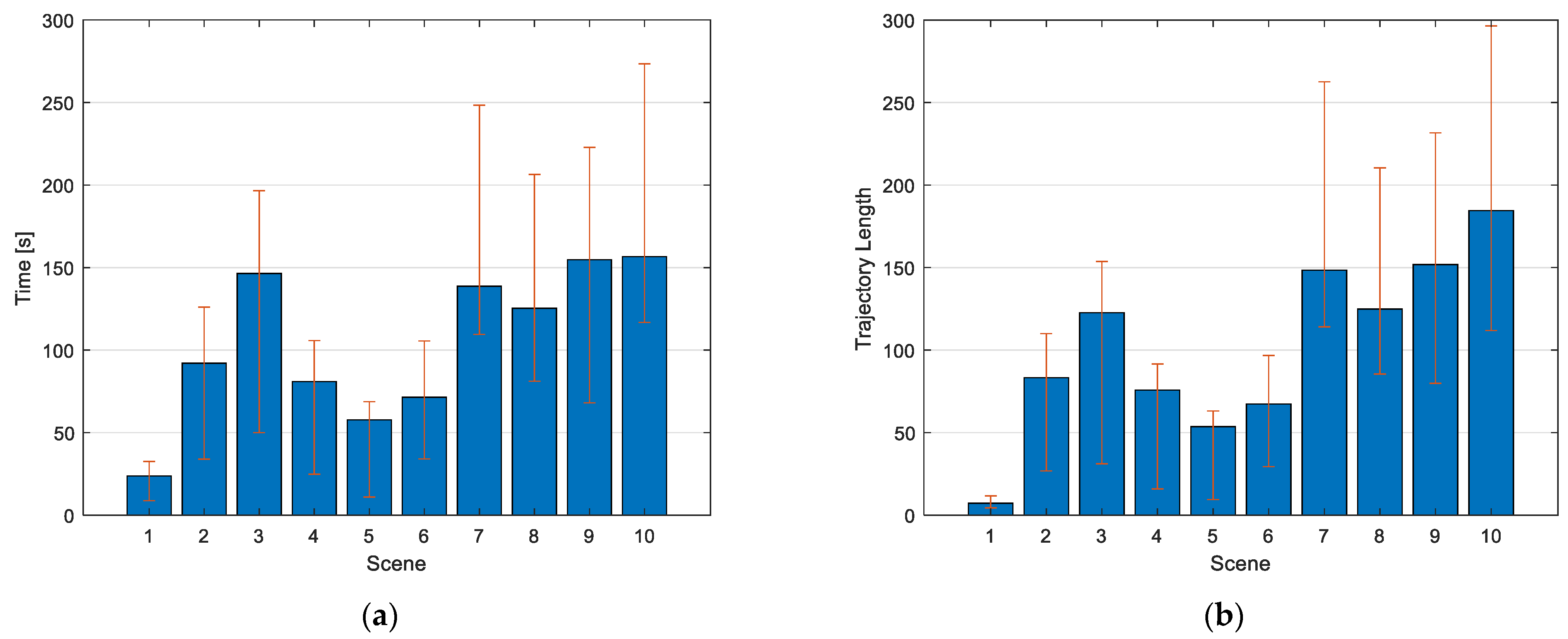

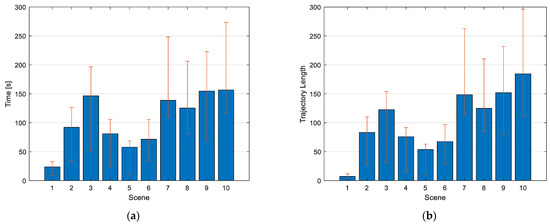

We tested the performance of the system using fourteen subjects (eight males and six females) for all different scenes (difficulty adjustment). The objective for the user is to direct the player to collect all the target objects in a short time using any chosen trajectory. Player positions and completion time are logged and reported here for comparison. Figure 9 shows the average time taken and the total trajectory length (total distance traveled in the x- and y-axis) for all scenes (levels).

Figure 9.

Average performance of all subjects indicated by the completion time and trajectory length of the experiment scenes: (a) average completion time; and (b) average path length.

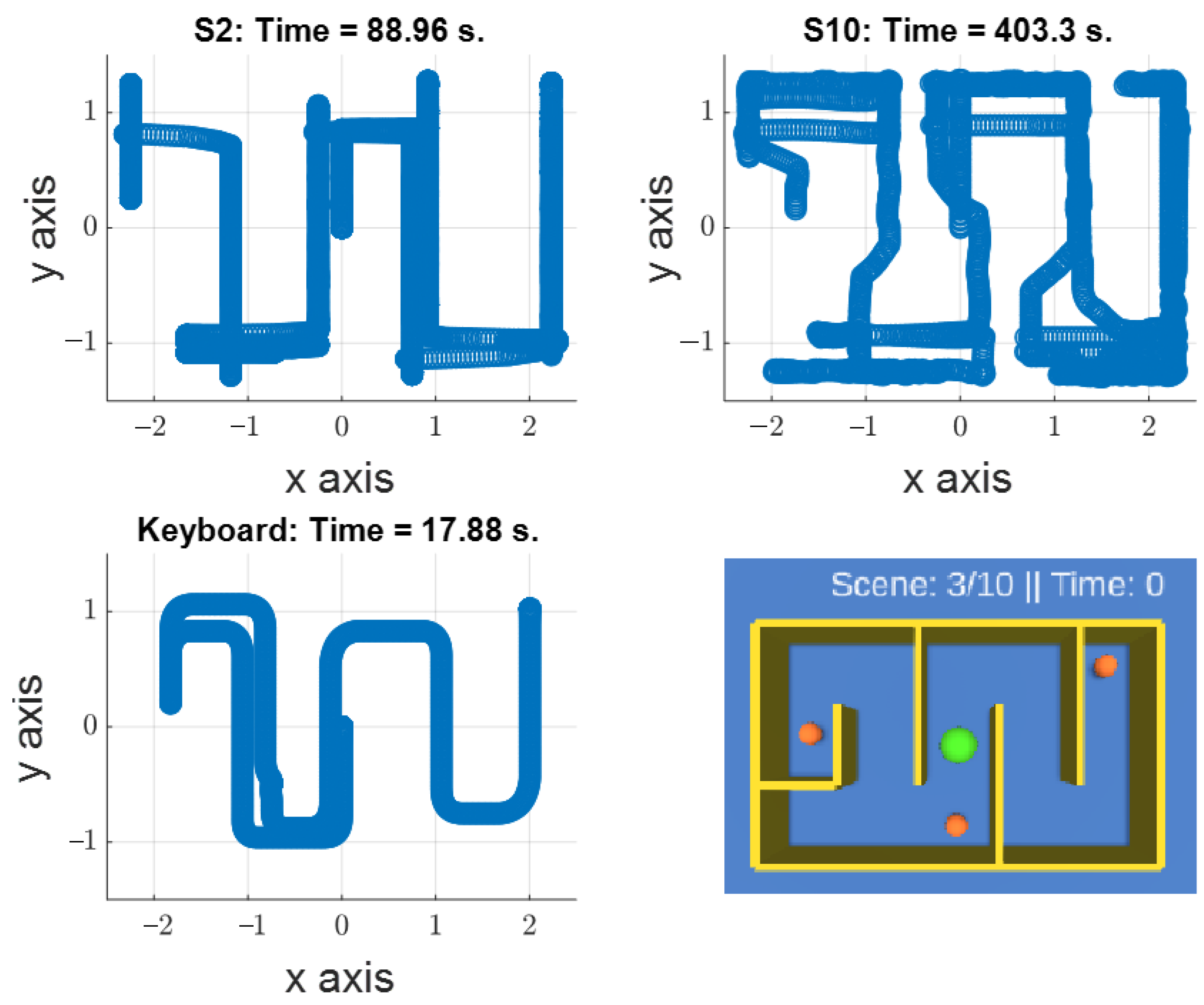

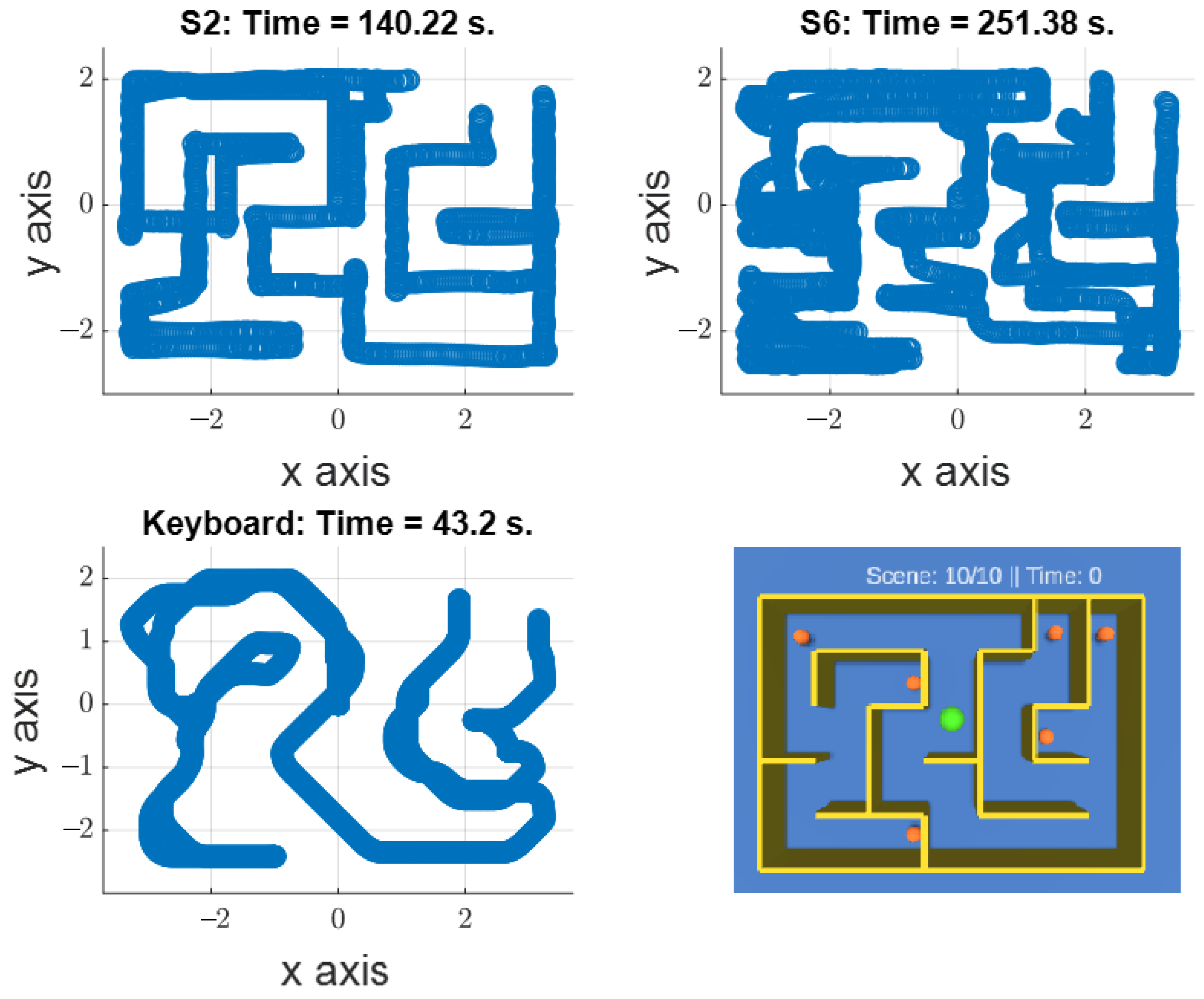

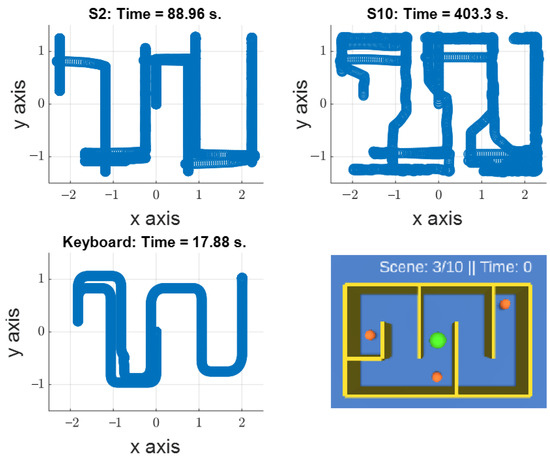

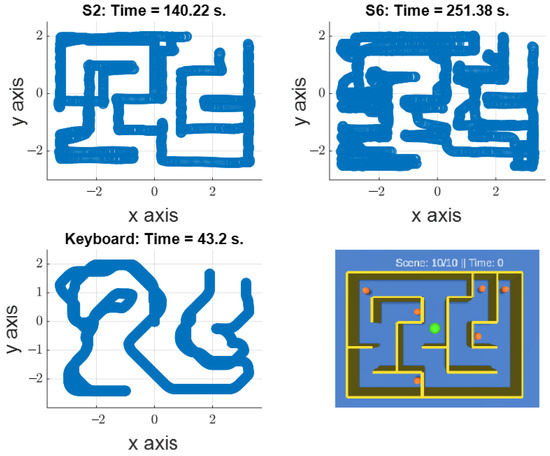

Scenes 3 and 10 are the most time-consuming in the experiment, although the trajectory length is longer in scene 10. This is attributed to the position of scene 3 in the sequence, i.e., before the user becomes accustomed to controlling the interface. The trajectories of the two scenes (scenes 3 and 10) are shown in Figure 10 and Figure 11. From these trajectories, it is clear that the user handles the EMG control one dimension at a time, as indicated by straight vertical and horizontal lines; this is reinforced by a setup that uses impenetrable horizontal and vertical collider walls.

Figure 10.

The trajectory of the fastest subject (S2) and the slowest subject (S10) command input for scene 3 with corresponding completion time. The keyboard and scene architecture are provided for contextual reference.

Figure 11.

The trajectory of the fastest subject (S2) and the slowest subject (S6) command input for scene 10 with corresponding completion time.

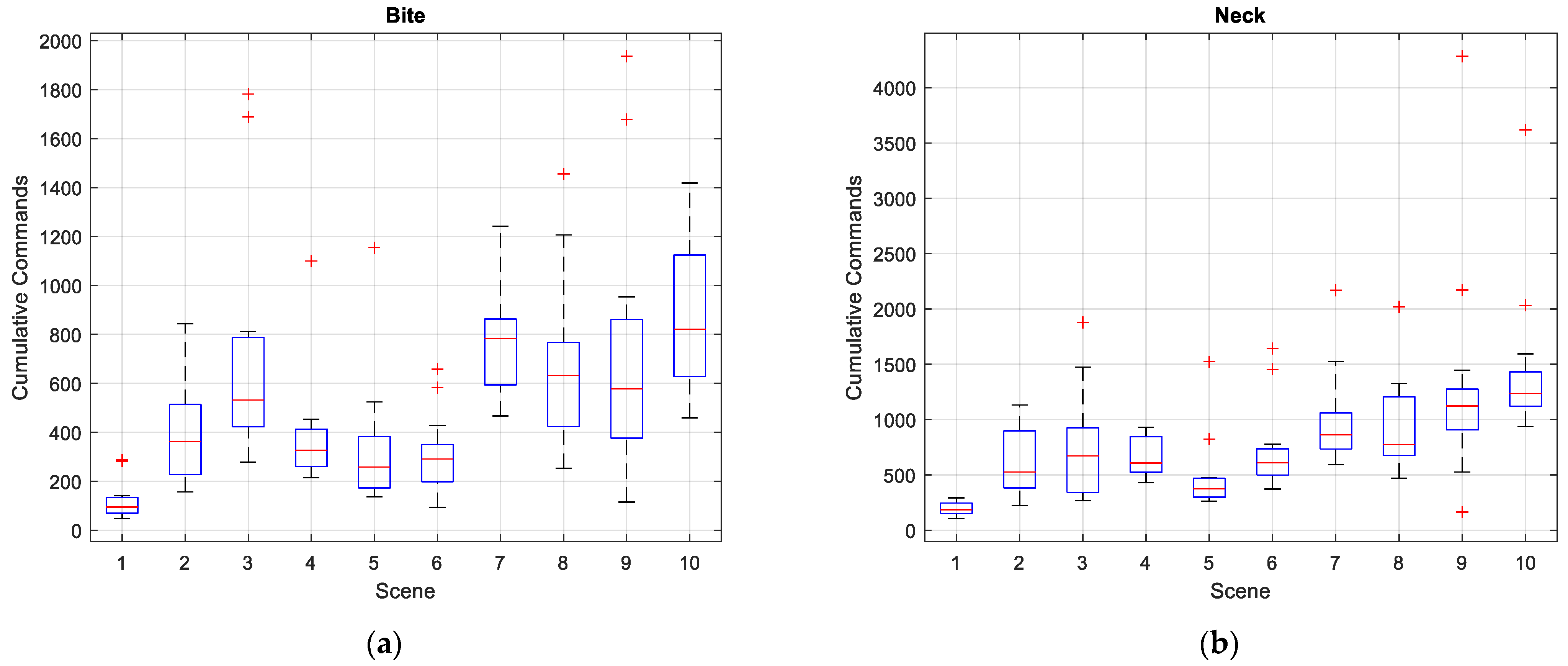

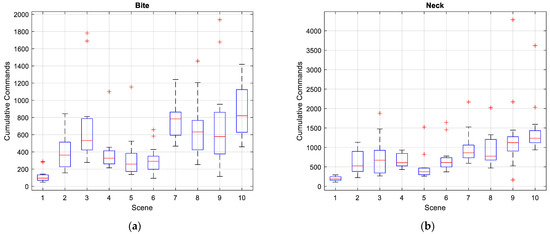

Input Command

In Figure 12, the central red line indicates the median, and the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively. The whiskers extend to the most extreme data points not considered outliers, and the outliers are plotted individually using the red ‘+’ symbol. The performance across successive scenes is compared based on the commands given by all subjects. These commands are summed to provide a single index for each subject. From these results, the number of SCM EMG commands is approximately twice that of the Masseter EMG, partly because the game design has larger horizontal (left and right) dimensions than vertical (up and down).

Figure 12.

Boxplot of all user cumulative command input from SCM and Masseter EMG: (a) SCM commands; and (b) Masseter rotation commands.

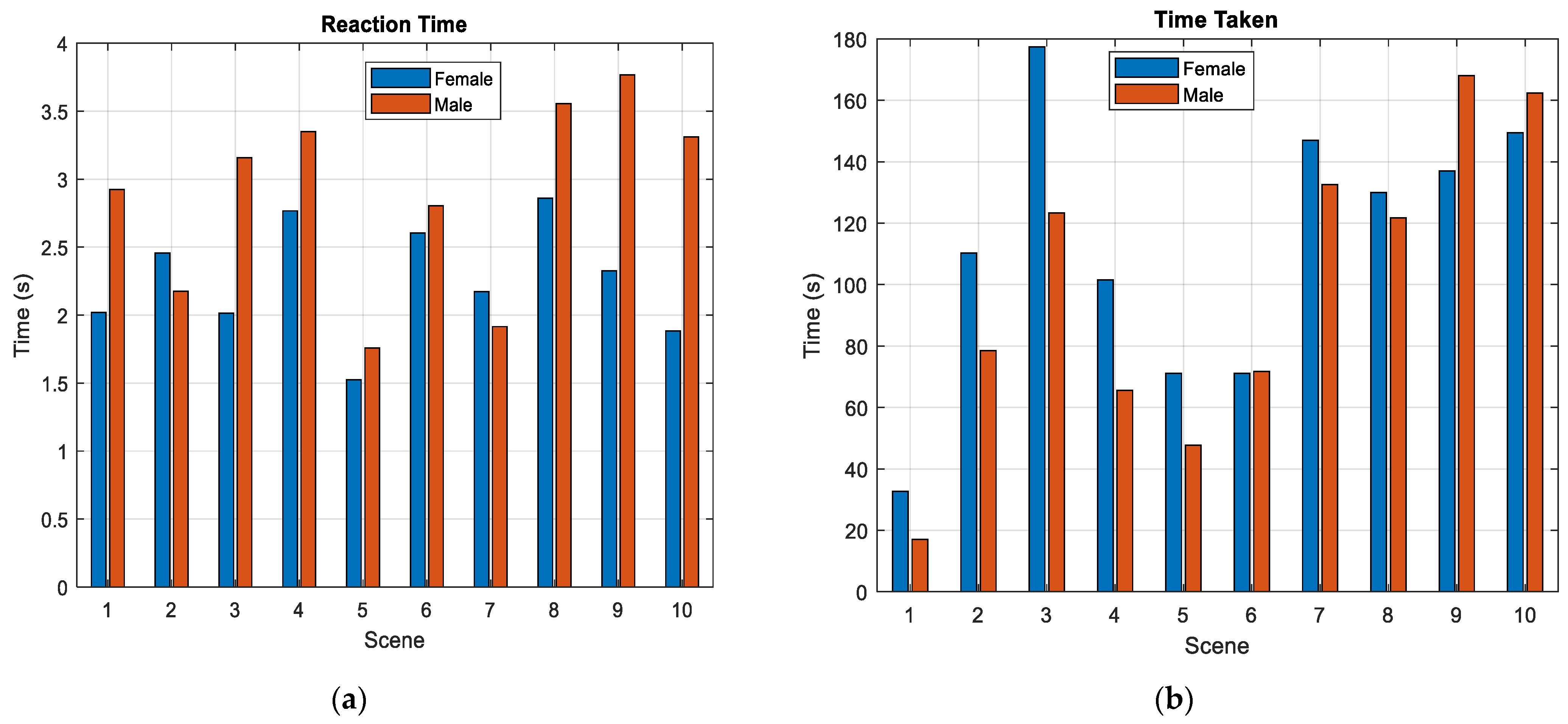

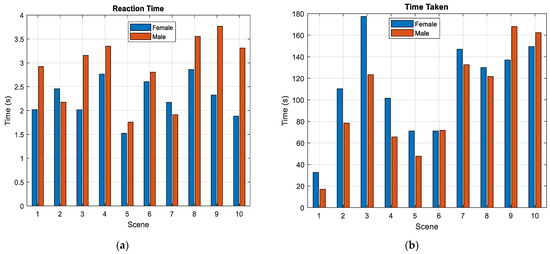

Group Comparison

The test subjects formed distinguishable gender groups, which we separated to determine whether there were significant differences in control. Data from six female and eight male subjects were tested for statistical significance, as shown in Table 2. We performed a one-way ANOVA test on reaction time (the time taken before a viewable control input is displayed when the scene is loaded), completion time, and summed neck and bite EMG. As shown in Table 2, there was no significant difference between the two groups in completion time (P(F > 0.43) = 0.519). Similarly, there was no statistical difference between the means of the neck and bite EMG (p = 0.48 and p = 0.7595, respectively). The reaction time showed a significant difference, with female subjects responding faster than male subjects (p = 0.0299). Figure 13 shows the bar graphs of the two groups.
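For reference, the F statistic of a one-way ANOVA can be computed as in the sketch below. It illustrates the standard definition and is not the MATLAB routine used in the study; the function name is hypothetical, and the p-value (which requires the F distribution) is left out.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means)
    )
    # Variation of the observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, means) for x in g
    )
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With the fourteen subjects of experiment two split into two groups, the degrees of freedom are (1, 12).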

Table 2.

Statistical tests of subject’s performance.

Figure 13.

Comparison of performance for male and female subjects: (a) reaction time; and (b) total time taken.

Overall Performance

The overall performance is captured for all evaluated scenes. In Table 3, the scenes can be reorganized into 1, 5, 6, 4, 2, 8, 7, 3, 9, and 10, based on the completion time. This rating coincides with the vertical index (v-index) order adopted in the scene. This is further elaborated by the correlation test shown in Table 4.

Table 3.

Overall performance of all subjects.

Table 4.

The correlation amongst variables.

In Table 4, scene difficulty, measured by completion time, increases with increasing v-index. The v-index and completion time were strongly correlated (r(8) = 0.892), more so than the h-index and completion time (r(8) = 0.790), which nevertheless remained significant. The turning indices (h and v) correlated positively with the input command, with the v-index strongly correlated with the two indices. The input command was associated with the expected completion time (r = 0.98 and 0.891 for SCM and Masseter EMG, respectively).
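The correlation coefficients reported here are of the Pearson type, which can be computed as in the following sketch. The function names are hypothetical. The value in parentheses in r(8) is the degrees of freedom, n − 2, which for the ten scenes gives 8.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def correlation_df(n):
    """Degrees of freedom reported alongside r for n paired observations."""
    return n - 2
```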

4. Discussion

This paper introduces a methodology for controlling a game interface using SCM and Masseter EMG. Two models for translating raw EMG signals into input commands have been explored: the equation and the ML model. The proposed equation accentuates the differences that may exist between the SCM muscle tensions and hence has a stable performance, instead of relying on the quasi-tension between the muscles, as illustrated in Figure 4. Thus, it performs well for input signals close to zero compared to the conventional method. The proposed equation model provides a dynamic interface in line with other methods proposed in [18,21].

SCM muscles were used due to their near-uniform symmetry in left and right turns. Figure 5 shows that the data obtained can be used without a lot of calibration and standardization processes. The ML algorithm used was Ensemble due to its high accuracy (93.9%) and prediction time, as shown in Table 1. The generalization of the trained model was satisfied with all subjects reviewed without the need for recalibration or retraining, which improved usability and ease of setup (in plug-and-play settings) as recommended in playing the game using bio-signals [41,48,49,50].

The results in Figure 7 confirm the ease of use of the system. The angle input in Figure 7 for the equation model is continuous. Therefore, the model achieves both direction and speed control in the game interface. In the ML model, the direction of the paddle control was accurate, but speed control was achieved by accumulating movement in the same direction. Overall, the ML model had more stable directional control and poorer speed control than the equation model.
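The difference between the two control schemes can be illustrated with a pair of per-frame update rules. This is a sketch under stated assumptions: the parameters `gain`, `dt`, and `step`, and the mapping of classes −1, 0, and 1 to movement along the x-axis, are hypothetical and are not taken from the original Unity scripts.

```python
def update_position_equation(pos, theta_est, gain, dt):
    """Continuous control: paddle speed is proportional to the estimated angle."""
    return pos + gain * theta_est * dt


def update_position_ml(pos, predicted_class, step):
    """Discrete control: a fixed step per frame in the classified direction.

    `predicted_class` is -1, 0, or 1; holding the same class over several
    frames accumulates movement.
    """
    return pos + step * predicted_class


# Holding the same class for three frames accumulates three steps.
pos = 0.0
for _ in range(3):
    pos = update_position_ml(pos, 1, 0.2)
```

In the first rule a larger angle moves the paddle faster within one frame, while in the second the user can only travel further by holding the same class for longer.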

In a 2D environment, the completion time of the subject indicates the difficulty of the task. This was found to be related to the number of vertical turns the user had to make, as confirmed by the correlation coefficient (r(8) = 0.892). From these results, the most challenging scenes were 10, 9, and 3. In the setup, Bite EMG controlled vertical movement (up and down). The subject had to double-click to change the orientation of the player, which may have contributed to the increase in difficulty along the vertical axis.

As shown in Table 4, the completion times of the test groups were similar, with only a slight difference. Using one-way ANOVA, the difference in mean reaction time between female and male subjects was significant (p = 0.0299). On average, female subjects reacted 0.41 s faster than males. However, reaction time showed a low correlation with completion time (r = 0.316). On the other hand, male subjects completed the scene 12.91 s faster than female subjects.

5. Conclusions

We successfully developed a control interface using SCM and masseter EMG and tested its performance as a game input method. We modeled the control interface using an equation-based approach and a machine learning approach. The equation model featured more intuitive tracking and speed control, while the machine learning model provided more stable directional control with reduced speed control. The two schemes performed comparably in the 1D environment, although their computational requirements differ.

In the 2D environment, the machine learning model was robust and generalized to all subjects across different demographics, as indicated by the age variation (mean age 27.95 years, std 13.24). The performance of all the subjects confirmed the applicability of the interface with little or no calibration needed.

The setup and results confirm the usability of the interface system with varying difficulty settings. All participants were able to navigate the scenes with minimal instructions. None of the participants reported tension or discomfort after the experiment.

Moreover, the system can be applied in control, rehabilitation, and game interfaces. Future work will extend the developed system to robot control.

Author Contributions

Conceptualization, M.S. (Minoru Sasaki), P.W.L. and J.K.M.; methodology, J.K.M., P.W.L., K.M. and W.N.; software, Y.S. and K.M.; validation, M.S.A.b.S., W.R., W.C. and M.S. (Maciej Sulowicz); formal analysis, P.W.L., J.K.M. and M.S.A.b.S.; investigation, J.K.M. and P.W.L.; resources, K.M., J.K.M. and P.W.L.; data curation, P.W.L., J.K.M., Y.S. and M.S.A.b.S.; writing—original draft preparation, J.K.M. and P.W.L.; writing—review and editing, W.R., W.N., M.S. (Minoru Sasaki) and W.C.; visualization, W.R., W.C., M.S. (Maciej Sulowicz) and K.M.; supervision, M.S. (Minoru Sasaki). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Gifu University ethics committee (approval procedure number 27–226, year 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Campo, P.; Dangles, O. An overview of games for entomological literacy in support of sustainable development. Curr. Opin. Insect Sci. 2020, 40, 104–110.

- Forouzandeh, N.; Drees, F.; Forouzandeh, M.; Darakhshandeh, S. The effect of interactive games compared to painting on preoperative anxiety in Iranian children: A randomized clinical trial. Complement. Ther. Clin. Pract. 2020, 40, 101211.

- Gupta, A.; Lawendy, B.; Goldenberg, M.G.; Grober, E.; Lee, J.Y.; Perlis, N. Can video games enhance surgical skills acquisition for medical students? A systematic review. Surgery 2021, 169, 821–829.

- Barr, M. Student attitudes to games-based skills development: Learning from video games in higher education. Comput. Hum. Behav. 2018, 80, 283–294.

- Vickers, S.; Istance, H.; Heron, M.J. Accessible Gaming for People with Physical and Cognitive Disabilities: A Framework for Dynamic Adaptation. In Proceedings of the Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 19–24.

- Bailey, J.M. Adaptive Video Game Controllers Open Worlds for Gamers With Disabilities. The New York Times. 20 February 2019. Available online: https://www.nytimes.com/2019/02/20/business/video-game-controllers-disabilities.html (accessed on 13 January 2021).

- Winkie, L. For Disabled Gamers Like BrolyLegs, Esports Is an Equalizer. Available online: https://www.vice.com/en/article/ywgqxv/for-disabled-gamers-like-brolylegs-esports-is-an-equalizer (accessed on 13 January 2021).

- Donovan, T. Disabled and Hardcore The Story of the Man Behind Call of Duty’s N0M4D Controls. Available online: https://www.eurogamer.net/articles/2011-07-13-disabled-and-hardcore-article (accessed on 13 January 2021).

- Caesarendra, W.; Lekson, S.U.; Mustaqim, K.A.; Winoto, A.R.; Widyotriatmo, A. A classification method of hand EMG signals based on principal component analysis and artificial neural network. In Proceedings of the 2016 International Conference on Instrumentation, Control, and Automation, ICA 2016, Bandung, Indonesia, 29–31 August 2016; pp. 22–27.

- López, A.; Fernández, M.; Rodríguez, H.; Ferrero, F.; Postolache, O. Development of an EOG-based system to control a serious game. Measurement 2018, 127, 481–488.

- Yeh, S.C.; Hou, C.L.; Peng, W.H.; Wei, Z.Z.; Huang, S.; Kung, E.Y.C.; Lin, L.; Liu, Y.H. A multiplayer online car racing virtual-reality game based on internet of brains. J. Syst. Archit. 2018, 89, 30–40.

- Malone, L.A.; Padalabalanarayanan, S.; McCroskey, J.; Thirumalai, M. Assessment of Active Video Gaming Using Adapted Controllers by Individuals With Physical Disabilities: A Protocol. JMIR Res. Protoc. 2017, 6, e116.

- Thirumalai, M.; Kirkland, W.B.; Misko, S.R.; Padalabalanarayanan, S.; Malone, L.A. Adapting the wii fit balance board to enable active video game play by wheelchair users: User-centered design and usability evaluation. J. Med. Internet Res. 2018, 20, 36–48.

- Prahm, C.; Vujaklija, I.; Kayali, F.; Purgathofer, P.; Aszmann, O.C. Game-Based Rehabilitation for Myoelectric Prosthesis Control. JMIR Serious Games 2017, 5, e3.

- Laksono, P.W.; Kitamura, T.; Muguro, J.; Matsushita, K.; Sasaki, M.; Suhaimi, M.S.A.b. Minimum mapping from EMG signals at human elbow and shoulder movements into two DoF upper-limb robot with machine learning. Machines 2021, 9, 56.

- Laksono, P.W.; Matsushita, K.; Suhaimi, M.S.A.b.; Kitamura, T.; Njeri, W.; Muguro, J.; Sasaki, M. Mapping three electromyography signals generated by human elbow and shoulder movements to two degree of freedom upper-limb robot control. Robotics 2020, 9, 83.

- Sasaki, M.; Matsushita, K.; Rusyidi, M.I.; Laksono, P.W.; Muguro, J.; Suhaimi, M.S.A.b.; Njeri, W. Robot control systems using bio-potential signals. AIP Conf. Proc. 2020, 2217, 20008.

- Williams, M.R.; Kirsch, R.F. Evaluation of head orientation and neck muscle EMG signals as three-dimensional command sources. J. Neuroeng. Rehabil. 2015, 12, 25.

- Han, J.S.; Zenn Bien, Z.; Kim, D.J.; Lee, H.E.; Kim, J.S. Human-Machine Interface for wheelchair control with EMG and Its Evaluation. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology-Proceedings, Cancun, Mexico, 17–21 September 2003; Volume 2, pp. 1602–1605.

- Tello, R.J.M.G.; Bissoli, A.L.C.; Ferrara, F.; Müller, S.; Ferreira, A.; Bastos-Filho, T.F. Development of a Human Machine Interface for Control of Robotic Wheelchair and Smart Environment. IFAC-PapersOnLine 2015, 48, 136–141.

- Lyu, M.; Chen, W.-H.; Ding, X.; Wang, J.; Pei, Z.; Zhang, B. Development of an EMG-Controlled Knee Exoskeleton to Assist Home Rehabilitation in a Game Context. Front. Neurorobotics 2019, 13, 67.

- Gutiérrez, Á.; Sepúlveda-Muñoz, D.; Gil-Agudo, Á.; de los Reyes Guzmán, A. Serious Game Platform with Haptic Feedback and EMG Monitoring for Upper Limb Rehabilitation and Smoothness Quantification on Spinal Cord Injury Patients. Appl. Sci. 2020, 10, 963.

- Garcia-Hernandez, N.; Garza-Martinez, K.; Parra-Vega, V.; Alvarez-Sanchez, A.; Conchas-Arteaga, L. Development of an EMG-based exergaming system for isometric muscle training and its effectiveness to enhance motivation, performance and muscle strength. Int. J. Hum. Comput. Stud. 2019, 124, 44–55.

- van Dijk, L.; van der Sluis, C.K.; van Dijk, H.W.; Bongers, R.M. Learning an EMG Controlled Game: Task-Specific Adaptations and Transfer. PLoS ONE 2016, 11, e0160817.

- Dębska, M.; Polechoński, J.; Mynarski, A.; Polechoński, P. Enjoyment and Intensity of Physical Activity in Immersive Virtual Reality Performed on Innovative Training Devices in Compliance with Recommendations for Health. Int. J. Environ. Res. Public Health 2019, 16, 3673.

- Prahm, C.; Kayali, F.; Sturma, A.; Aszmann, O. PlayBionic: Game-Based Interventions to Encourage Patient Engagement and Performance in Prosthetic Motor Rehabilitation. PM R 2018, 10, 1252–1260.

- Prahm, C.; Kayali, F.; Vujaklija, I.; Sturma, A.; Aszmann, O. Increasing motivation, effort and performance through game-based rehabilitation for upper limb myoelectric prosthesis control. In Proceedings of the International Conference on Virtual Rehabilitation, ICVR, Montreal, QC, Canada, 19–22 June 2017; Volume 2017.

- Rowland, J.L.; Malone, L.A.; Fidopiastis, C.M.; Padalabalanarayanan, S.; Thirumalai, M.; Rimmer, J.H. Perspectives on Active Video Gaming as a New Frontier in Accessible Physical Activity for Youth With Physical Disabilities. Phys. Ther. 2016, 96, 521–532.

- Muguro, J.K.; Sasaki, M.; Matsushita, K. Evaluating Hazard Response Behavior of a Driver Using Physiological Signals and Car-Handling Indicators in a Simulated Driving Environment. J. Transp. Technol. 2019, 9, 439–449.

- Sommerich, C.M.; Joines, S.M.B.; Hermans, V.; Moon, S.D. Use of surface electromyography to estimate neck muscle activity. J. Electromyogr. Kinesiol. 2000, 10, 377–398.

- Steenland, H.W.; Zhuo, M. Neck electromyography is an effective measure of fear behavior. J. Neurosci. Methods 2009, 177, 355–360.

- Benno, M.; Nigg, W.H. Biomechanics of the Musculo-Skeletal System, 3rd ed.; Wiley: Toronto, ON, Canada, 2007; ISBN 978-0-470-01767-8.

- Seth, N.; Freitas, R.C.D.; Chaulk, M.; O’Connell, C.; Englehart, K.; Scheme, E. EMG pattern recognition for persons with cervical spinal cord injury. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, Toronto, ON, Canada, 24–28 June 2019; Volume 2019, pp. 1055–1060.

- Williams, M.R.; Kirsch, R.F. Evaluation of head orientation and neck muscle EMG signals as command inputs to a human-computer interface for individuals with high tetraplegia. IEEE Trans. Neural Syst. Rehabil. Eng. 2008, 16, 485–496.

- Iacopetti, F.; Fanucci, L.; Roncella, R.; Giusti, D.; Scebba, A. Game console controller interface for people with disability. In Proceedings of the CISIS 2008: 2nd International Conference on Complex, Intelligent and Software Intensive Systems, Barcelona, Spain, 4–7 March 2008; pp. 757–762.

- Arozi, M.; Caesarendra, W.; Ariyanto, M.; Munadi, M.; Setiawan, J.D.; Glowacz, A. Pattern recognition of single-channel sEMG signal using PCA and ANN method to classify nine hand movements. Symmetry 2020, 12, 541.

- Raisamo, R.; Patomäki, S.; Hasu, M.; Pasto, V. Design and evaluation of a tactile memory game for visually impaired children. Interact. Comput. 2007, 19, 196–205.

- Zhang, B.; Tang, Y.; Dai, R.; Wang, H.; Sun, X.; Qin, C.; Pan, Z.; Liang, E.; Mao, Y. Breath-based human–machine interaction system using triboelectric nanogenerator. Nano Energy 2019, 64, 103953.

- Choudhari, A.M.; Porwal, P.; Jonnalagedda, V.; Mériaudeau, F. An Electrooculography based Human Machine Interface for wheelchair control. Biocybern. Biomed. Eng. 2019, 39, 673–685.

- Sirvent Blasco, J.L.; Iáñez, E.; Úbeda, A.; Azorín, J.M. Visual evoked potential-based brain-machine interface applications to assist disabled people. Expert Syst. Appl. 2012, 39, 7908–7918.

- Müller, P.N.; Achenbach, P.; Kleebe, A.M.; Schmitt, J.U.; Lehmann, U.; Tregel, T.; Göbel, S. Flex Your Muscles: EMG-Based Serious Game Controls. In Joint International Conference on Serious Games; Springer: Cham, Switzerland, 2020; Volume 12434 LNCS, pp. 230–242.

- Dalgleish, M. There are no universal interfaces: How asymmetrical roles and asymmetrical controllers can increase access diversity. G|A|M|E Games Art Media Entertain. 2018, 1, 11–25.

- Shin, D.; Kambara, H.; Yoshimura, N.; Kang, Y.; Koike, Y. Control of a brick-breaking game using electromyogram. Int. J. Eng. Technol. (IJET) 2013, 6, 128–131.

- Muguro, J.K.; Sasaki, M.; Matsushita, K.; Njeri, W.; Laksono, P.W.; Suhaimi, M.S.A.b. Development of neck surface electromyography gaming control interface for application in tetraplegic patients’ entertainment. AIP Conf. Proc. 2020, 2217, 030039.

- Nambiar, S. Gamedolphin Unity Maze. Available online: https://github.com/gamedolphin (accessed on 2 February 2021).

- Côté-Allard, U.; Fall, C.L.; Drouin, A.; Campeau-Lecours, A.; Gosselin, C.; Glette, K.; Laviolette, F.; Gosselin, B. Deep Learning for Electromyographic Hand Gesture Signal Classification Using Transfer Learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 760–771.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; ISBN 1493938436.

- DelPreto, J.; Rus, D. Plug-and-play gesture control using muscle and motion sensors. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 439–448.

- Grammenos, D.; Savidis, A.; Stephanidis, C. Designing universally accessible games. Comput. Entertain. 2009, 7, 1–29.

- Shalal, N.S.; Aboud, W.S. Smart robotic exoskeleton: A 3-DOF wrist-forearm rehabilitation. J. Robot. Cont. 2021, 2, 476–483.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).