Abstract

Multivariate space–time datasets are often collected at discrete, regularly monitored time intervals and are typically treated as components of time series in environmental science and other applied fields. To effectively characterize such data in geostatistical frameworks, valid and practical covariance models are essential. In this work, we propose several classes of multivariate spatio-temporal covariance matrix functions to model underlying stochastic processes whose discrete temporal margins correspond to well-known autoregressive and moving average (ARMA) models. We derive sufficient and/or necessary conditions under which these functions yield valid covariance matrices. By leveraging established methodologies from time series analysis and spatial statistics, the proposed models are straightforward to identify and fit in practice. Finally, we demonstrate the utility of these multivariate covariance functions through an application to Kansas weather data, using co-kriging for prediction and comparing the results to those obtained from traditional spatio-temporal models.

1. Introduction

Multivariate space–time datasets frequently arise in environmental science, meteorology, geophysics, and many other fields. Examples include studying the impact of soil greenhouse gas fluxes on global warming potential, or analyzing temperature–precipitation relationships under climate change (see [1,2,3], among others). Typically, temporal data are collected at regularly spaced intervals, in contrast to spatial data that are often recorded at irregular locations, such as weather stations. With the increasing availability and complexity of such datasets, it is essential to develop efficient models that capture their intricate dependence structures.

This paper focuses on constructing valid covariance matrix functions that jointly incorporate spatial and temporal information for multivariate random fields. While the spatial statistics literature includes various spatial models, few account for discrete-time dependencies, despite time series playing a crucial role in most environmental and geophysical processes. Traditional approaches often rely on separable space–time covariance structures, which assume the overall covariance is the product of purely spatial and purely temporal components. While computationally convenient, these models ignore space–time interactions that are often fundamental to the underlying physical processes. An increasing body of work has highlighted the importance of nonseparable models. For example, ref. [4] introduced nonseparable stationary spatio-temporal covariance functions, and subsequent generalizations for both stationary and nonstationary processes were developed in [5,6,7], among others. Applications to environmental data such as air pollution are explored by [8,9], while ref. [10] incorporates an inflated gamma distribution to model precipitation trends with zero inflation. However, most of these models are constructed under the assumption of continuous time. In practice, time is typically observed on a discrete, regular grid, whereas spatial locations are distributed more irregularly. Although some models incorporate discrete time through stochastic differential equations or spectral methods (e.g., [11,12]), these approaches often lack closed-form expressions for the covariance structure. While ref. [13] deals with the univariate case, in this work, we derive explicit covariance matrix functions for multivariate space–time processes with discrete temporal components, where the temporal margins follow well-established autoregressive and moving average (ARMA) models. 
Leveraging the rich theoretical foundation of ARMA processes along with classical spatial modeling, we aim to build flexible, interpretable, and computationally feasible models.

In many modern scientific applications, such as geosciences, environmental monitoring, and economics, large numbers of variables are observed simultaneously. These variables are often correlated, and borrowing information from related (secondary) variables can improve the prediction of a primary variable, especially when the latter is sparsely observed. For simplicity, spatial variables are often modeled separately, ignoring cross-variable dependencies. A key contribution of this work is the development of multivariate spatial covariance structures that capture both within-variable spatial dependence and cross-variable covariances, while also incorporating discrete time information. This enables more accurate predictions through co-kriging across a wide range of applications. While previous efforts have been made in this direction, many are limited to purely spatial or continuous-time settings, or they rely on Bayesian frameworks. Notable contributions include [14,15,16,17], among others. For example, multivariate Poisson-lognormal spatial models have improved predictions in traffic safety studies [18], and recent works have established kriging formulas [19] and copula-based models [20] for multivariate spatial data. We aim to integrate parameter interpretability from analytic model expressions into a unified space–time framework to facilitate multivariate fitting and co-kriging.

On a global scale, many spatial datasets are collected using spherical coordinates. Euclidean-based distances and covariance structures can become distorted on the sphere, especially over large distances, making spherical modeling critical in geophysical and atmospheric sciences. Recent advances include constructions of isotropic positive definite functions on spheres [21], covariance functions for stationary and isotropic Gaussian vector fields [22], and isotropic variogram matrix functions expressed through ultraspherical polynomials [23]. Drawing from these approaches, we also extend some of our discrete-time multivariate spatio-temporal models to spherical domains to ensure validity across both Euclidean and spherical spaces.

We aim to develop a flexible multivariate spatio-temporal modeling framework that incorporates discrete-time structure, spatial correlation (in both Euclidean and spherical spaces), and cross-variable dependencies. Specifically, we consider a p-variate space–time random field

with covariance matrix function

where each entry

for , where and denote the d-dimensional unit sphere and Euclidean space, respectively. The process is stationary in both space and time if is constant for all and depends only on the spatial lag and temporal lag t. We then denote the spatial and temporal margins as and , respectively, following [24]. In practice, analyzing multivariate space–time data often begins with marginal exploration, applying time series models to study temporal behavior and multivariate spatial analysis to capture cross-variable structure. Given the substantial research advances in both areas, combining their strengths provides a robust foundation for model development, selection, and estimation.

The remainder of this paper is organized as follows. In Section 2, we propose several classes of multivariate spatio-temporal covariance matrix functions, whose discrete-time margins follow ARMA models. We derive necessary and sufficient conditions for these functions to define valid covariance matrices. Section 3 extends the models to incorporate general ARMA margins. In Section 4, we apply our models to Kansas weather data to demonstrate their performance in spatio-temporal prediction compared to traditional methods.

2. Moving-Average-Type Temporal Margin

We begin constructing the foundation of our overall framework by examining the covariance structure corresponding to a first-order moving average (MA(1)) model in the discrete temporal margin. It is straightforward to verify that Equation (1) satisfies the defining properties of an MA(1) process at a fixed spatial location. Notably, this structure does not rely on the assumption of temporal stationarity. The main challenge in proving the validity of Equation (1) lies in its nature as a discrete space–time matrix function that varies across different time scales, making it more complex than simply verifying a static covariance matrix. Theorem 8 in [25] offers useful insights that support the proof of the following Theorem 1 (see Appendix A).
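To illustrate why validity has to hold across all time scales rather than for one fixed matrix, consider a scalar analogue (not the matrix function of Equation (1) itself): a sequence with correlation 1 at lag 0, ρ at lags ±1, and 0 elsewhere. Its n × n correlation matrix is tridiagonal Toeplitz, with eigenvalues 1 + 2ρ cos(kπ/(n+1)) for k = 1, ..., n, so it is nonnegative definite for every n only when |ρ| ≤ 1/2. The following minimal sketch evaluates the smallest eigenvalue; the helper name `min_eigenvalue` is hypothetical.

```python
import math


def min_eigenvalue(rho, n):
    """Smallest eigenvalue of the n x n tridiagonal Toeplitz matrix with
    1 on the diagonal and rho on the first off-diagonals."""
    # Closed form: the eigenvalues are 1 + 2*rho*cos(k*pi/(n+1)), k = 1..n.
    return min(1.0 + 2.0 * rho * math.cos(k * math.pi / (n + 1))
               for k in range(1, n + 1))


if __name__ == "__main__":
    # rho = 0.6 looks harmless for a short window but fails for longer ones.
    for rho in (0.5, 0.6):
        for n in (2, 5, 50):
            print(rho, n, min_eigenvalue(rho, n) >= 0.0)
```

With ρ = 0.6 the matrix is positive definite for n = 2 but not for n = 5, which is the scalar version of the difficulty described above: a check at a single time scale is not enough.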

Theorem 1.

Let and , or be matrix functions, and let be symmetric, i.e., . Then, the function

is a covariance matrix function on if and only if the following two conditions are satisfied:

- (i)

- is a covariance matrix function on ,

- (ii)

- is a covariance matrix function on .

This theorem reduces the verification of a complex space–time problem to that of a purely spatial covariance model. Building upon the foundational structure developed earlier, we are now prepared to incorporate a broader range of spatial covariance margins to enrich the class of admissible models. Specifically, we integrate the widely used Matérn-type spatial covariance function into our framework and derive the full set of parameter conditions required to ensure validity. In Theorem 2, we begin with a parsimonious Matérn structure in which all smoothness parameters in are assumed to be equal, as specified in Equation (4) below. Theorem 3 of [14] provides necessary and sufficient conditions under various settings for Equation (4) to define a valid covariance matrix. These results offer important insights that inform the conditions of the theorem and corollary that follow.

Theorem 2.

, , .

Let , , and be constant vectors. , and let , . The sufficient condition for the matrix function

to be a correlation matrix function on is that the constant c satisfies

If , (3) is also necessary,

where

The following theorem generalizes the parsimonious Matérn covariance structure by relaxing the constraint that all smoothness parameters in must be equal, as in Equation (4). Following [14], we assume that is a general multivariate Matérn covariance function in Theorem 3. In addition, the choice of c is assumed to satisfy the conditions of Theorem 2 in [13], ensuring that the main diagonal elements of the resulting matrix structure are valid univariate correlation functions.

Theorem 3.

Let , , , be constant vectors. , . A sufficient and necessary condition for the matrix function,

to be a correlation matrix function on is that the constant c satisfies

given , where

, , .

is defined like with replaced with , and

In fact, from [14], is a valid covariance matrix if and only if

where . Therefore, we can show that , , and . Under certain conditions, the minimum of the left-hand side of inequality (8) can be equal to zero, which leads to the following corollary.

Corollary 1.

The sufficient and necessary condition for Equation (5) to be a correlation matrix function can be reduced to in the following cases:

(a) When , , ,

(b) When , , ,

The proofs of the theorems and corollary are deferred to Appendix A. It is well known that setting the smoothness parameter to 1/2 in the Matérn covariance function yields the exponential form. This leads to the following example:

Example 1.

Let , , , and be assumed as in Theorem 3, and take ; then, a sufficient and necessary condition for the matrix function of exponential type

to be a stationary correlation matrix function on is that the constant c satisfies inequality (6), where

, , .
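As a small illustration of the exponential special case, the sketch below evaluates the Matérn correlation in closed form for half-integer smoothness. It assumes the common parametrization M(h | ν, α) = 2^(1−ν) / Γ(ν) · (αh)^ν · K_ν(αh), which may differ from the paper's by a rescaling of α; the function name `matern` is hypothetical.

```python
import math


def matern(h, alpha, nu):
    """Matérn correlation at distance h for smoothness nu in {0.5, 1.5, 2.5},
    assuming M(h) = 2^(1-nu)/Gamma(nu) * (alpha*h)^nu * K_nu(alpha*h)."""
    x = alpha * abs(h)
    if nu == 0.5:
        return math.exp(-x)                      # exponential model
    if nu == 1.5:
        return (1.0 + x) * math.exp(-x)
    if nu == 2.5:
        return (1.0 + x + x * x / 3.0) * math.exp(-x)
    raise ValueError("only nu in {0.5, 1.5, 2.5} has a closed form here")


if __name__ == "__main__":
    for nu in (0.5, 1.5, 2.5):
        print(nu, [round(matern(h, 0.5, nu), 4) for h in (0.0, 1.0, 4.0)])
```

For a fixed scale α, a larger smoothness value gives a slower initial decay of the correlation near the origin.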

3. ARMA Type Temporal Margin

In the previous section, we considered the spatio-temporal covariance structure with a moving average of order one (MA(1)) as the temporal margin. In this section, we extend the covariance matrix to more general cases involving some other autoregressive and moving average (ARMA) temporal margins.

The following model establishes the necessary and sufficient conditions for a valid spatio-temporal covariance matrix with ARMA-type temporal dependence. As before, this theorem assumes uniform in .

Theorem 4.

, , , .

Let , , be constant vectors. , and let , or . A sufficient condition for the matrix function

to be a correlation matrix function on is that the constant c satisfies the following:

If , (13) is also necessary,

where

We now extend this theorem to various values of in . As in the preceding section, we follow [14] and assume that both and below are general multivariate Matérn covariance functions. Furthermore, we assume that the choice of c satisfies the conditions in Theorem 4 in [13], ensuring that the main diagonal elements in the resulting matrix structure are valid univariate correlation functions.

Theorem 5.

Let , , , be constant vectors. , or . A sufficient and necessary condition for matrix function,

to be a correlation matrix function on is that the constant c satisfies

where

,

is defined like , with replaced with , .

Incorporating different values into the model allows for more detailed spatial parameterization, enabling a more precise capture of spatial trends. Once again, the condition in this theorem can be simplified under several special cases:

Corollary 2.

The sufficient and necessary condition for Equation (15) to be a correlation matrix function can be reduced to in the following cases:

(a) When , , ,

(b) When , , ,

The proof of this corollary is similar to that of Corollary 1. The temporal margin in both theorems is given by

which is a linear combination of valid correlation matrices. This structure encompasses a family of valid spatio-temporal correlation functions with stationary AR(1) (first-order autoregressive model), AR(2), and ARMA(2,1) temporal margins. The parameters and for can be interpreted as the spatial scaling and smoothness parameters, respectively. The parameters and govern the temporal dynamics, while c serves as a mixing parameter balancing the two components.
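The link between a mixture of two geometric decays and AR(1), AR(2), and ARMA(2,1) autocorrelations can be checked numerically. The sketch below assumes, purely for illustration, a scalar temporal correlation of the form c·φ1^|t| + (1 − c)·φ2^|t|; this is an assumed stand-in, and neither the form nor the admissible range of c is taken from the equation above. All function names are hypothetical.

```python
def mixed_acf(t, c, phi1, phi2):
    """Assumed illustrative temporal correlation at integer lag t."""
    t = abs(t)
    return c * phi1 ** t + (1.0 - c) * phi2 ** t


def ar2_coefficients(phi1, phi2):
    """Coefficients (a1, a2) of rho(t) = a1*rho(t-1) + a2*rho(t-2)
    whose characteristic roots are phi1 and phi2."""
    return phi1 + phi2, -phi1 * phi2


def ar2_mixing_weight(phi1, phi2):
    """Weight c for which the mixture also satisfies the lag-1
    Yule-Walker equation rho(1) = a1 / (1 - a2), i.e. is an AR(2) ACF."""
    a1, a2 = ar2_coefficients(phi1, phi2)
    rho1 = a1 / (1.0 - a2)
    return (rho1 - phi2) / (phi1 - phi2)


if __name__ == "__main__":
    phi1, phi2 = 0.8, -0.3
    print("AR(2) weight:", ar2_mixing_weight(phi1, phi2))
    print("AR(1) case  :", [mixed_acf(t, 1.0, phi1, phi2) for t in range(4)])
```

Under this assumption, c = 1 gives the AR(1) correlation φ1^|t|. For any c the second-order recursion holds from lag 2 onward, which is the lag pattern of an ARMA(2,1) autocorrelation, and it also holds at lag 1 only for the single weight returned by `ar2_mixing_weight`, the AR(2) case.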

To apply the proposed parametric models, one may first use time series techniques to fit ARMA models at each spatial location. This process can help determine the appropriate ARMA order and provide starting values for , , and c. Final parameter estimation can then be performed using either maximum likelihood estimation or the weighted least squares method of [26] (see also Equation (22) in [27]). For the spatial component, standard procedures in spatial statistics can be employed to estimate initial values for and the cross-correlation parameters , . For instance, one can use the fitted parameters from the marginal spatial and cross-correlation functions at different time lags as starting points. Additional insights into the temporal structure can be obtained using tools such as the autocorrelation function (ACF), partial autocorrelation function (PACF), and information criteria like AIC and BIC. Since the temporal margin can initially be analyzed independently, this step provides useful guidance for model selection. Ultimately, the choice of the final model should be guided by space–time fitting criteria, which are generally robust to small variations in the marginal temporal model. Simplicity is also an important consideration in final model selection. Therefore, the proposed models, along with the stepwise estimation strategy, offer a practical and flexible approach by decomposing the complex spatio-temporal modeling problem into two more manageable steps. The proposed framework also provides an intuitive path toward modeling multivariate spatio-temporal processes, where each spatial location may follow an ARMA-type temporal process. One benefit of this approach is that it allows the multivariate MA(1) process to be approximated by analyzing marginal trends. Since the spatial correlation structure can differ across variables and time lags, it is often beneficial to estimate the trend separately at each time lag to obtain more accurate initial values. 
These components can then be integrated into a unified model, which is subsequently refined using joint estimation.
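The first step of this strategy, obtaining temporal starting values at one location, can be sketched as follows. This is a minimal method-of-moments illustration rather than the estimation code used in the paper: it computes the sample ACF and converts the lag-1 value into an invertible MA(1) coefficient by solving ρ(1) = θ / (1 + θ²). The function names are hypothetical.

```python
import math


def sample_acf(x, max_lag):
    """Sample autocorrelations of the series x at lags 0..max_lag."""
    n = len(x)
    mean = sum(x) / n
    c0 = sum((v - mean) ** 2 for v in x) / n
    return [sum((x[i] - mean) * (x[i + k] - mean) for i in range(n - k)) / n / c0
            for k in range(max_lag + 1)]


def ma1_theta_from_rho1(rho1):
    """Invertible MA(1) coefficient theta solving rho1 = theta / (1 + theta^2)."""
    if abs(rho1) >= 0.5:
        raise ValueError("an invertible MA(1) process requires |rho(1)| < 0.5")
    if rho1 == 0.0:
        return 0.0
    # Smaller root of rho1*theta^2 - theta + rho1 = 0, so that |theta| < 1.
    return (1.0 - math.sqrt(1.0 - 4.0 * rho1 ** 2)) / (2.0 * rho1)


if __name__ == "__main__":
    series = [0.3, -0.1, 0.4, 0.2, -0.5, 0.1, 0.0, 0.6, -0.2, -0.3]
    acf = sample_acf(series, 3)
    print([round(r, 3) for r in acf])
```

A sample lag-1 autocorrelation close to or beyond ±0.5, or clearly nonzero values at lags 2 and higher, would suggest that an MA(1) margin is not adequate and that another ARMA order should be considered.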

For the data application presented in the next section, parameter estimation was conducted using the least squares method from [7] and the techniques developed in [27]. Extending these techniques to accommodate general ARMA(p, q) temporal margins would require further theoretical development of the results presented here. However, such extensions remain computationally feasible, particularly when using Cressie’s weighted least squares approach. We leave the exploration of more complex temporal margins for future research.

4. Data Example: Kansas Daily Temperature Data

This dataset is sourced from the National Oceanic and Atmospheric Administration (NOAA) and includes observations from 105 weather stations across Kansas. For our real-data application, we focus on two highly correlated variables: daily maximum and minimum temperatures recorded over 8030 days, from 1 January 1990 to 31 December 2011, across all 105 counties. To reduce short-term variability and obtain a more stable pattern, we compute weekly averages from the daily temperature data, resulting in 1144 weeks of average maximum and minimum temperatures, which we use as our raw dataset. We divide the dataset into training and testing sets: the first 800 weeks (approximately the first fifteen years) are used for training, and the remaining 344 weeks (approximately the last seven years) are used for testing. To detrend and deseasonalize the data, we follow the procedure outlined in [27] by subtracting the overall mean weekly temperature for each calendar week. Specifically:

Let be the weekly average temperature in year y, week w, and location i; let be the average temperature for week w at location i across n years; and let denote the weekly value at location i with the seasonal mean removed, defined as:

where

This deseasonalization step removes the dominant annual signal and yields weekly anomalies, which reveal the underlying MA(1) correlation pattern.
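The deseasonalization described above amounts to subtracting, for each calendar week, the mean over years at the same location. A minimal sketch for a single location follows; the nested-list layout `temps[y][w]` and the function name are assumptions made for illustration.

```python
def deseasonalize(temps):
    """Remove the calendar-week mean at one location.

    temps[y][w] is the weekly average temperature in year y, week w.
    Returns anomalies with the same shape.
    """
    n_years = len(temps)
    n_weeks = len(temps[0])
    # Mean over years for each calendar week w.
    week_mean = [sum(temps[y][w] for y in range(n_years)) / n_years
                 for w in range(n_weeks)]
    return [[temps[y][w] - week_mean[w] for w in range(n_weeks)]
            for y in range(n_years)]


if __name__ == "__main__":
    toy = [[10.0, 20.0], [14.0, 22.0]]   # 2 years x 2 weeks, made-up values
    print(deseasonalize(toy))
```

By construction, the anomalies for each calendar week sum to zero across years.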

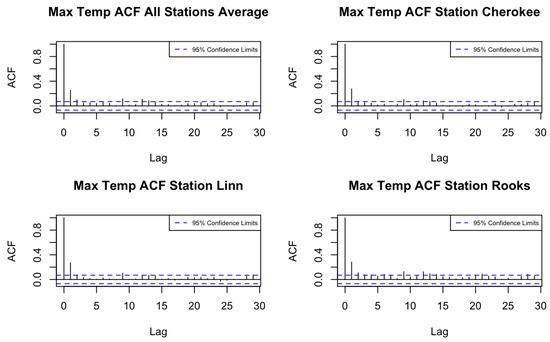

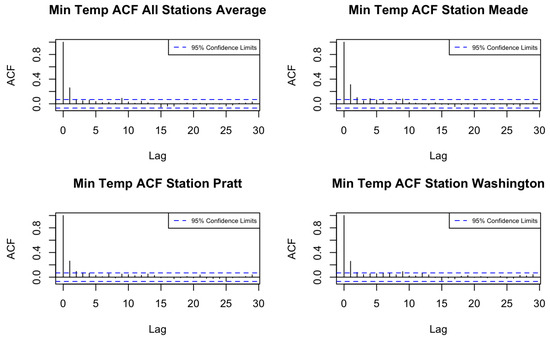

We then compute the autocorrelation function (ACF) and cross-correlation function (CCF) of the detrended minimum and maximum temperature series across the 105 counties using the training period. Figure 1 and Figure 2 display the ACFs of average maximum and minimum temperatures for all locations, as well as for three randomly chosen stations. Based on the ACF and CCF plots, both variables exhibit a pattern consistent with a moving average process of order one (MA(1)), supporting the use of a spatio-temporal model with an MA(1) temporal margin.

Figure 1.

ACFs of maximum temperature in Kansas counties.

Figure 2.

ACFs of minimum temperature in Kansas counties.

The next step is to calculate the space–time correlations from the detrended data for model fitting. Because the data include many location pairs at each distance, it is hard to extract stable spatial trends across time lags. To reduce noise, we apply spatial binning using and , which means that we average the spatial correlations within each 4-km bin and discard any empty bins. The binned correlations are the input data for further model fitting. We use the least squares optimization method to fit empirical spatial correlations for minimum temperature, maximum temperature, and their cross-correlation at lag zero. These fits provide suitable initial values for the PMM, SMM, and Cauchy models introduced below.
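The binning step can be sketched as follows. This is an illustration under assumptions: each station pair is represented by a (distance in km, correlation) tuple, bins are half-open intervals of width 4 km starting at 0, and each bin is summarized by its midpoint. The function name is hypothetical.

```python
def bin_correlations(pairs, bin_width=4.0):
    """Average pairwise correlations within distance bins.

    pairs: iterable of (distance_km, correlation).
    Returns a list of (bin_midpoint_km, mean_correlation, n_pairs),
    sorted by distance. Bins with no pairs never appear in the output.
    """
    sums = {}
    for dist, corr in pairs:
        idx = int(dist // bin_width)          # bin index for [idx*w, (idx+1)*w)
        total, count = sums.get(idx, (0.0, 0))
        sums[idx] = (total + corr, count + 1)
    return [((idx + 0.5) * bin_width, total / count, count)
            for idx, (total, count) in sorted(sums.items())]


if __name__ == "__main__":
    toy_pairs = [(1.0, 0.9), (3.0, 0.7), (9.0, 0.5)]   # made-up values
    for row in bin_correlations(toy_pairs):
        print(row)
```

Keeping the pair count per bin is convenient because it can later serve as a weight in a weighted least squares fit.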

Guided by this exploratory analysis, an MA(1)-type temporal margin is a suitable choice, which makes Theorem 3 applicable. While the correlation approaches 1 at the distance in Theorem 3, real-world data often exhibit a nugget effect, which must be accounted for. By incorporating the nugget effect into the structure of Theorem 3, we formulate the proposed model, referred to as the PMM (Partially Mixed Model) based on in Equation (5), as follows:

We use Cressie’s weighted least squares optimization method [26] for parameter estimation (Algorithm 1):

| Algorithm 1 Estimation Procedure |

| Initialize parameters: |

| ; |

| Set iteration counter ; |

| Repeat |

| Compute predicted covariances in Equation (19) at across all distances; |

| Calculate weighted sum of squares: |

| ; |

| Update parameters by minimizing using the L-BFGS-B algorithm; |

| ; |

| until convergence: , for a small threshold . |
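The quantity minimized in Algorithm 1 can be illustrated with a small objective function. The sketch below is not the paper's implementation: `model_corr` is a hypothetical stand-in (an exponential correlation with a nugget) for the fitted model in Equation (19), and weighting each bin by its number of station pairs is an assumption. In practice, such an objective would be passed to a bound-constrained optimizer such as L-BFGS-B.

```python
import math


def model_corr(h, alpha, nugget):
    """Stand-in correlation model: exponential decay with a nugget.
    The correlation is 1 at h = 0 and drops to (1 - nugget) just above 0."""
    if h == 0.0:
        return 1.0
    return (1.0 - nugget) * math.exp(-alpha * h)


def weighted_sse(params, bins):
    """Weighted sum of squared differences between empirical and model
    correlations. bins: list of (distance, empirical_corr, n_pairs)."""
    alpha, nugget = params
    return sum(n * (emp - model_corr(h, alpha, nugget)) ** 2
               for h, emp, n in bins)


if __name__ == "__main__":
    # Synthetic bins generated from known parameters (made-up values).
    true_params = (0.01, 0.1)
    bins = [(h, model_corr(h, *true_params), 10) for h in (2.0, 6.0, 10.0)]
    print(weighted_sse(true_params, bins), weighted_sse((0.02, 0.1), bins))
```

The objective is zero at the generating parameters and positive elsewhere, which is the property the iterative update in Algorithm 1 relies on.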

Finally, the estimated parameter values for the PMM are as follows: , , , , , , , , , , , , , and all are set to 2.5. All of the estimated parameters satisfy the conditions in Theorem 3, which ensures that Equation (19) is a valid covariance matrix function. Without this validity, the covariance matrix involved may fail to be invertible, and co-kriging cannot be performed. Next, for comparison, we apply the purely spatial multivariate Matérn model (SMM) proposed in [14], with the nugget effect incorporated.

In addition, we compared the performance of the Cauchy separable model in continuous time, as proposed in [14], with the nugget effect incorporated.

where .

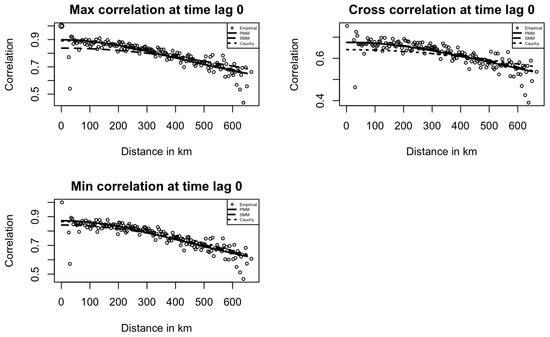

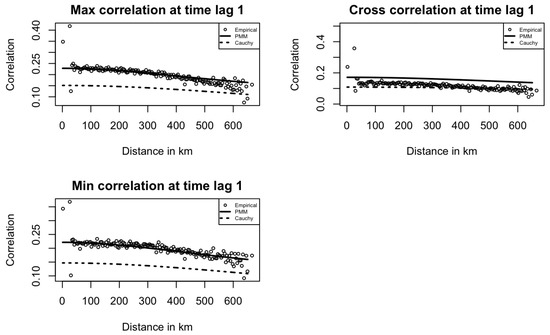

Figure 3 and Figure 4 show the fitted PMM, SMM, and Cauchy models at time lags of 0 and 1 for maximum temperature, minimum temperature, and their cross space–time correlations. In Figure 3, the PMM fits the empirical correlations better than the SMM and Cauchy models, capturing the underlying structure more accurately. In Figure 4, for maximum and minimum temperature correlations at lag 1, the PMM better captures the correlation patterns, while the Cauchy model performs slightly better for the cross-correlation.

Figure 3.

Empirical temperature space–time correlations and fitted models at time lag 0 in Kansas.

Figure 4.

Empirical temperature space–time correlations and fitted models at time lag 1 in Kansas.

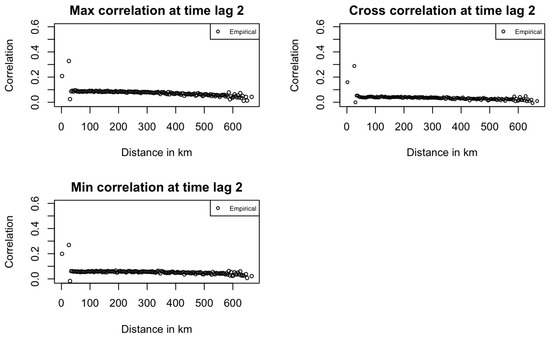

Across both figures, correlation dispersion increases at long distances, as seen in the first plot of Figure 3. This pattern aligns with real-world expectations, where correlation typically decreases with distance, and it also contributes to reduced model fitting performance. Figure 5 shows that at time lag 2, all correlations are near zero, highlighting the MA(1) temporal structure in the data. The PMM correlation estimates at this lag are also zero by construction.

Figure 5.

Empirical temperature space–time correlations at time lag 2 in Kansas.

After fitting the PMM, SMM, and Cauchy models on the training data, the next step is to perform co-kriging for prediction on the testing data, as described below.

The response variable at location , time is estimated as follows:

where the weights and are obtained by solving the following:

where:

is the covariance matrix across distances at time lag for variable Y and

In the PMM and Cauchy models, co-kriging is performed using the minimum and maximum values across all locations at and as input data, whereas the SMM uses only.
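The mechanics of solving for kriging weights can be illustrated with a small zero-mean (simple kriging) example. This is a sketch under assumptions and not the paper's co-kriging system: `cov_obs` stands for the covariance matrix among all stacked inputs (both variables, all locations, the relevant time points), `cov_target` for the covariances between those inputs and the target, and both would be filled from the fitted covariance matrix function. The helper names are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination
    with partial pivoting (a is a list of rows)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def simple_kriging(cov_obs, cov_target, values):
    """Return (weights, prediction) for zero-mean data."""
    weights = solve(cov_obs, cov_target)
    return weights, sum(w * v for w, v in zip(weights, values))


if __name__ == "__main__":
    cov_obs = [[1.0, 0.5], [0.5, 1.0]]     # made-up covariances
    weights, pred = simple_kriging(cov_obs, [0.5, 0.25], [2.0, 4.0])
    print(weights, pred)
```

The linear solve is the step that requires the covariance matrix to be invertible, which is why the parameter conditions of the validity theorems matter for prediction.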

In addition, we consider a traditional time series modeling approach. Since standard time series prediction functions in the R package do not support forecasting with fixed parameters, we developed a custom implementation using the Innovations algorithm described in [4]. We fit the time series model on the training data for maximum temperatures at all 105 stations. Specifically, for each station, we estimated the parameters and , which are the key components of a moving average process of order one (MA(1)), and we used them to generate predictions on the testing data.
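For an MA(1) process with fixed parameters, the Innovations recursions take a particularly simple form, because only the lag-1 coefficient is nonzero. The sketch below uses the standard recursions for a zero-mean MA(1) series X_t = Z_t + θ Z_{t−1} with noise variance σ²; it is written in Python for illustration, whereas the authors' custom implementation is in R and is not reproduced here. The function name is hypothetical.

```python
def ma1_one_step_predictions(x, theta, sigma2=1.0):
    """One-step predictors for a zero-mean MA(1) series with fixed parameters.

    Returns (preds, mse): preds[n] is the predictor of x[n] from x[0..n-1]
    (the final entry predicts the next, unobserved value), and mse[n] is
    its mean squared error.
    """
    gamma0 = (1.0 + theta ** 2) * sigma2   # variance of the series
    gamma1 = theta * sigma2                # lag-1 autocovariance
    preds = [0.0]                          # with no data, predict the mean 0
    v = gamma0
    mse = [v]
    for n in range(1, len(x) + 1):
        coef = gamma1 / v                  # innovation coefficient theta_{n,1}
        preds.append(coef * (x[n - 1] - preds[n - 1]))
        v = gamma0 - coef ** 2 * v         # updated prediction error variance
        mse.append(v)
    return preds, mse


if __name__ == "__main__":
    preds, mse = ma1_one_step_predictions([1.0, 0.0, -0.5], theta=0.5)
    print([round(p, 4) for p in preds])
    print([round(m, 4) for m in mse])
```

For |θ| < 1, the prediction error variance decreases from (1 + θ²)σ² toward σ² as more observations become available.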

Finally, predictions were obtained for the testing period. The root mean squared error (RMSE) and 95% data interval were computed for each method to assess predictive performance. Table 1 reports the model performance across all counties for maximum temperature.

Table 1.

Kansas maximum temperature RMSE statistics.

The percentage of stations with the lowest RMSE shows that the PMM outperforms the other models at most locations, achieving the lowest RMSE at 93.3% of the 105 stations. This demonstrates the model’s broad applicability and consistency across different locations. Consequently, the PMM also produces the lowest average RMSE across all locations. While this difference may seem small, it is important for the deseasonalized weekly average temperature data, where fluctuations are limited, making even small improvements both statistically and practically meaningful; see [28]. Moreover, the PMM proves to be more reliable at individual stations, consistently providing better local predictions. This suggests that the model captures more complex spatial structures than a simple MA(1) temporal margin or a simple spatial correlation margin such as the SMM. The models used for comparison also perform strongly, using the marginal averages as starting points. All models share these initial values, so slight improvements are still considered beneficial. Based on this analysis, the proposed PMM demonstrates consistently better predictive performance, particularly when the temporal margin of the space–time process is properly modeled using an MA(1) structure. The PMM can also perform well when there is a large number of missing values in the primary variable by leveraging information from the secondary variable, which time series models are unable to utilize. In addition, in real-world applications involving complex spatio-temporal data, model selection can be challenging. The PMM stands out as an easy choice, because the marginal spatial and temporal correlations alone can be used to select its structure. These results suggest that incorporating both strongly correlated spatial components and discrete-time dependence improves the overall predictive accuracy.
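The two summaries discussed above, the per-station RMSE and the share of stations at which each model attains the lowest RMSE, can be computed as in the following sketch. The model labels and numbers in the usage example are made up for illustration, and the function names are hypothetical.

```python
import math


def rmse(pred, obs):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


def share_lowest(rmse_by_model):
    """rmse_by_model: dict mapping model name -> list of per-station RMSEs,
    with stations in the same order for every model.
    Returns dict model -> fraction of stations where it has the lowest RMSE
    (ties go to the model listed first)."""
    models = list(rmse_by_model)
    n_stations = len(rmse_by_model[models[0]])
    wins = {m: 0 for m in models}
    for s in range(n_stations):
        best = min(models, key=lambda m: rmse_by_model[m][s])
        wins[best] += 1
    return {m: wins[m] / n_stations for m in models}


if __name__ == "__main__":
    toy = {"model_a": [1.0, 2.0, 3.0], "model_b": [2.0, 1.0, 4.0]}
    print(share_lowest(toy))
```

With 105 stations, a share of 93.3% corresponds to 98 stations.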

5. Discussion

This work presents a foundational framework for direct modeling of space–time random fields with spatially correlated structures and time series components. The methodology developed here enables the integration of spatial covariance models with some autoregressive and moving average temporal structures, offering a tractable yet flexible approach for analyzing spatio-temporal data. Looking ahead, several avenues for further development are promising. One direction is to incorporate more complex forms of temporal dependence, such as general ARMA or nonstationary time dynamics, to better reflect the intricate temporal behaviors observed in environmental and geophysical data. From an inferential standpoint, parameter estimation techniques can be enhanced by moving beyond least squares approaches. Specifically, adopting maximum likelihood estimation to fit the full correlation structure could lead to more efficient and statistically robust inference, particularly when the data exhibits strong space–time interactions. Additionally, while some of the current framework relies on the Matérn class of spatial covariance functions due to its theoretical and practical appeal, other families of spatial structures, including compactly supported or nonstationary models, may offer advantages in specific applications. Exploring these alternatives can further improve the adaptability of the modeling strategy to diverse scientific domains.

Author Contributions

Conceptualization, J.D. and R.T.; methodology, J.D. and R.T.; software, R.T.; validation, R.T. and J.D.; formal analysis, R.T. and J.D.; investigation, R.T.; resources, J.D. and R.T.; data curation, R.T.; writing—original draft preparation, R.T.; writing—review and editing, J.D.; visualization, R.T.; supervision, J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this paper will be made available by the authors upon request.

Acknowledgments

The authors sincerely thank the Associate Editor and the two anonymous reviewers for their careful reading of the previous version of the manuscript and for their constructive comments and suggestions, which have greatly improved the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ARMA | Autoregressive and Moving Average |

| ACF | Autocorrelation Function |

| PMM | Partially Mixed Model |

| RMSE | Root Mean Squared Error |

Appendix A. Proofs

Proof of Theorem 1.

Under the assumptions (i) and (ii), we are going to apply Theorem 8 of [29] to verify that (1) is a covariance matrix function on . Clearly, . Thus, it suffices that the inequality

or, equivalently,

holds for every positive integer n, any , and any .

Since is a covariance matrix function on , its transpose equals , so that

Notice that the matrix function can be written as follows:

This is a sum of two separable covariance matrix functions and is thus a covariance matrix function on . Based on Theorem 8 of [29], we obtain

where the last equality follows from . Thus, inequality (A1) is derived. Conversely, suppose that Equation (1) is a covariance matrix function on . Then, for arbitrary n locations and l integer time points at each location, we formulate pairs and , choose the corresponding vectors as the products , , and obtain

or

or

Letting gives

This implies that is a covariance matrix function on , based on Theorem 8 of [29]. Thus, condition (i) is confirmed.

Similarly, in order to confirm condition (ii), in Equation (A2), we take , divide both sides by l, and obtain

Letting gives

This implies that is a covariance matrix function on , based on Theorem 8 of [29]. □

Proof of Theorem 2.

Following from Theorem 1, it is equivalent to show that the inequality (3) is a necessary and sufficient condition for to be a valid covariance matrix function on with . Under the scenario of this theorem,

For sufficiency in general, by Theorem 2 in [22], a positive linear combination of two covariance matrix functions is also a valid covariance matrix function on . Since condition (3) holds, i.e., , for , and is a spatial correlation matrix function, Equation (A3) is also a valid covariance matrix function. Furthermore, by Theorem 1, Equation (2) defines a valid spatio-temporal covariance matrix function.

When , we will show that condition (3) is both sufficient and necessary. To this end, consider the spectral density of the Matérn class of functions (see Equation (32) of [30]). The Fourier transforms of and are given as follows:

where ,

and

respectively. Hence, it is reduced to show that inequality (3) is necessary and sufficient for and to be nonnegative definite, which means , , , and

based on Cramér’s Theorem [31]. From Theorem 2 in [13], we already know that and if and only if

Also, and if and only if

Since , and , entail

To evaluate inequalities (A4) and (A5), noting that , we expand the LHS of Equations (A4) and (A5) with this positive factor removed.

For the sufficiency part, instead of using Theorem 2 in [22], we can work on directly: by using the inequality along with , we can prove both inequalities (A4) and (A5). Finally, noting that condition automatically satisfies inequalities (A7) and (A8), we conclude that Equation (2) is a valid correlation matrix function if and only if . □

Proof of Theorem 3.

If we let

in Theorem 1, then we only need to show that is a valid covariance matrix function. By Cramér’s Theorem in the spectral domain, it suffices to show that the Fourier transform of is nonnegative definite. Consider the following Fourier transform matrix function:

where

It then remains to show that condition (6) is equivalent to for any , based on Cramér’s Theorem, since , have been ensured by a condition like (A8), with and replaced with and , following [13,14], for i = 1, 2.

Let . Note that

Moreover, inequalities (8) and (9) imply . The equivalence follows if we can show . Because

by using inequality , we have

where the last inequality holds since , ; then if are all greater than zero. When , it follows from (A9) that holds automatically. So the remaining case is when , in which the RHS of (A9) is greater than or equal to zero for all if and only if

□

Proof of Corollary 1.

Sufficiency follows as in the proof of Theorem 2. For necessity, first note that these conditions ensure (8) and (9) by Theorem 3 in [14]; inequality (6) is then evaluated in the following cases.

Proof of Theorem 4.

Sufficiency can be established by an argument similar to the proof of Theorem 2. Necessity is proved using Cramér’s Theorem for the case as follows. The Fourier transform of (12) is equal to

where ,

Hence, based on Cramér’s Theorem, it suffices to show that is necessary for to be nonnegative definite for any , which means , , and

From [13], we already know that if and only if

Also, if and only if

To evaluate (A10), noting that , we expand the left-hand side of (A10), omitting the positive factor, as follows. Letting ,

With , , letting in yields , which is greater than zero, so . Moreover, satisfies the constraint in inequality (13). Finally, the nonnegative definiteness of for any implies . □

Proof of Theorem 5.

The proof idea and procedure are similar to those of Theorem 3. Following a similar setup, we consider the Fourier transform of Equation (15) as follows:

where

Based on Cramér’s Theorem, it remains to show that condition (16) is equivalent to for all , since and are guaranteed by a condition similar to (A8), with and replaced by and respectively, following [13,14] for . To this end, note that

Similar to the proof of Theorem 3, inequalities (8) and (9) imply that , and .

References

1. Gaspari, G.; Cohn, S.E. Construction of correlation functions in two and three dimensions. Q. J. R. Meteorol. Soc. 1999, 125, 723–757.

2. Sain, S.R.; Furrer, R.; Cressie, N. A spatial analysis of multivariate output from regional climate models. Ann. Appl. Stat. 2011, 5, 150–175.

3. Tebaldi, C.; Lobell, D.B. Towards probabilistic projections of climate change impacts on global crop yields. Geophys. Res. Lett. 2008, 35, L08705.

4. Cressie, N.; Huang, H.-C. Classes of nonseparable, spatio-temporal stationary covariance functions. J. Am. Stat. Assoc. 1999, 94, 1330–1340.

5. Ma, C. Families of spatio-temporal stationary covariance models. J. Stat. Plann. Inference 2003, 116, 489–501.

6. Castruccio, S.; Stein, M.L. Global space-time models for climate ensembles. Ann. Appl. Stat. 2013, 7, 1593–1611.

7. Cressie, N.; Wikle, C.K. Statistics for Spatio-Temporal Data; John Wiley & Sons: Hoboken, NJ, USA, 2015.

8. Wan, Y.; Xu, M.; Huang, H.; Chen, S.X. A spatio-temporal model for the analysis and prediction of fine particulate matter concentration in Beijing. Environmetrics 2021, 32, e2648.

9. Xu, J.; Yang, W.; Han, B.; Wang, M.; Wang, Z.; Zhao, Z.; Bai, Z.; Vedal, S. An advanced spatio-temporal model for particulate matter and gaseous pollutants in Beijing, China. Atmos. Environ. 2019, 211, 120–127.

10. Medeiros, E.S.; de Lima, R.R.; Olinda, R.A.; Dantas, L.G.; Santos, C.A.C. Space–time kriging of precipitation: Modeling the large-scale variation with model GAMLSS. Water 2019, 11, 2368.

11. Storvik, G.; Frigessi, A.; Hirst, D. Stationary time autoregressive representation. Stat. Probab. Lett. 2002, 60, 263–269.

12. Stein, M.L. Statistical methods for regular monitoring data. J. R. Stat. Soc. Ser. B 2005, 67, 667–687.

13. Demel, S.S.; Du, J. Spatio-temporal models for some data sets in continuous space and discrete time. Stat. Sin. 2015, 25, 81–98.

14. Gneiting, T.; Kleiber, W.; Schlather, M. Matérn cross-covariance functions for multivariate random fields. J. Am. Stat. Assoc. 2010, 105, 1167–1177.

15. Sain, S.R.; Cressie, N. A spatial model for multivariate lattice data. J. Econom. 2007, 140, 226–259.

16. Zhu, X.; Huang, D.; Pan, R.; Wang, H. Multivariate spatial autoregressive model for large scale social networks. J. Econom. 2020, 215, 591–606.

17. Dörr, C.; Schlather, M. Covariance models for multivariate random fields resulting from pseudo cross-variograms. J. Multivar. Anal. 2023, 205, 105199.

18. Hosseinpour, M.; Sahebi, S.; Zamzuri, Z.; Yahaya, A.; Ismail, N. Predicting crash frequency for multi-vehicle collision types using multivariate Poisson-lognormal spatial model: A comparative analysis. Accid. Anal. Prev. 2018, 118, 277–288.

19. Somayasa, W.; Makulau; Pasolon, Y.B.; Sutiari, D.K. Universal kriging of multivariate spatial data under multivariate isotropic power type variogram model. In Proceedings of the 7th International Conference on Mathematics: Pure, Applied and Computation (ICoMPAC 2020), Surabaya, Indonesia, 24 October 2020.

20. Krupskii, P.; Genton, M.G. A copula model for non-Gaussian multivariate spatial data. J. Multivar. Anal. 2019, 169, 264–277.

21. Gneiting, T. Strictly and non-strictly positive definite functions on spheres. Bernoulli 2013, 19, 1327–1349.

22. Ma, C. Stationary and isotropic vector random fields on spheres. Math. Geosci. 2012, 44, 765–778.

23. Du, J.; Ma, C.; Li, Y. Isotropic variogram matrix functions on spheres. Math. Geosci. 2013, 45, 341–357.

24. Ma, C. Spatio-temporal variograms and covariance models. Adv. Appl. Probab. 2005, 37, 706–725.

25. Du, J.; Ma, C. Spherically invariant vector random fields in space and time. IEEE Trans. Signal Process. 2011, 59, 5921–5929.

26. Cressie, N. Statistics for Spatial Data, rev. ed.; Wiley: New York, NY, USA, 1993.

27. Gneiting, T. Nonseparable, stationary covariance functions for space-time data. J. Am. Stat. Assoc. 2002, 97, 590–600.

28. Gneiting, T.; Genton, M.G.; Guttorp, P. Geostatistical space-time models, stationarity, separability, and full symmetry. Monogr. Stat. Appl. Probab. 2006, 107, 151–174.

29. Ma, C. Vector random fields with second-order moments or second-order increments. Stoch. Anal. Appl. 2011, 29, 197–215.

30. Stein, M.L. Interpolation of Spatial Data: Some Theory for Kriging; Springer: Berlin/Heidelberg, Germany, 1999.

31. Wackernagel, H. Multivariate Geostatistics, 3rd ed.; Springer: Berlin/Heidelberg, Germany, 2003.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).