Abstract

Information Geometry is a useful tool to study and compare the solutions of Stochastic Differential Equations (SDEs) for non-equilibrium systems. As an alternative to solving the Fokker–Planck equation (FPE), we propose a new method to calculate time-dependent probability density functions (PDFs) and to study Information Geometry using Monte Carlo (MC) simulation of SDEs. Specifically, we develop a new MC SDE method to overcome the challenges in calculating a time-dependent PDF and information geometric diagnostics and to speed up simulations by utilizing GPU computing. Using MC SDE simulations, we reproduce Information Geometric scaling relations found from the Fokker–Planck method for the case of a stochastic process with linear and cubic damping terms. We showcase the advantage of MC SDE simulation over FPE solvers by calculating unequal time joint PDFs. For the linear process with a linear damping force, the joint PDF is found to be a Gaussian. In contrast, for the cubic process with a cubic damping force, the joint PDF exhibits a bimodal structure, even in a stationary state. This suggests a finite memory time induced by a nonlinear force. Furthermore, several power-law scalings in the characteristics of bimodal PDFs are identified and investigated.

1. Introduction

Stochastic Differential Equations (SDEs) (Equation (5)) are used to model various phenomena in nature, including Brownian motion, asset pricing, population dynamics, COVID-19 spread and interaction [1,2,3,4,5,6], and various other non-equilibrium processes. Due to their stochasticity, SDEs do not have a unique solution, but a distribution of solutions. A Fokker–Planck Equation (FPE) [7] is a Partial Differential Equation (PDE) that describes how the probability density of solutions of an SDE evolves with time.

Comparing solutions of different SDEs can be achieved by looking at different statistics of the solutions like mean and variance. However, when we are interested in large fluctuations and extreme events in the solutions, simple statistics might not suffice. In such cases, quantifying and comparing the time evolution of probability density functions (PDFs) of solutions will provide us with more information [8]. The time evolution of PDFs can be studied and compared through the framework of information geometry [9], wherein PDFs are considered as points on a Riemannian manifold and their time evolution can be considered as a motion on this manifold. In general, in order to have a manifold structure on the probability space in information geometry, we need to define a metric. Several different metrics can be defined on a probability space [10,11,12,13].

Different metrics have different physical and mathematical significance. For example, the Wasserstein metric (also known as the Earth mover’s distance) naturally comes up in optimal transport problems [14]; the Ruppeiner metric is based on the geometry of equilibrium thermodynamics [13]. In this work, we use a metric related to the Fisher Information [15], known as the Fisher Information metric [16,17].

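$$g_{ij}(\boldsymbol{\theta}) = \int dx\, p(x;\boldsymbol{\theta})\, \frac{\partial \ln p(x;\boldsymbol{\theta})}{\partial \theta_i}\, \frac{\partial \ln p(x;\boldsymbol{\theta})}{\partial \theta_j} \tag{1}$$

Here, $p(x;\boldsymbol{\theta})$ denotes a continuous family of PDFs parametrized by parameters $\boldsymbol{\theta}$. This metric was used to physically represent the number of statistically distinguishable states [11,18,19]. Note that two Gaussians with the same standard deviation but different means are statistically indistinguishable if the difference in their means is much smaller than their standard deviation.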

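If a time-dependent PDF $p(x,t)$ is considered as a continuous family of PDFs parameterized by a single parameter, time t, the scalar metric can be given by:

$$g(t) = \int dx\, \frac{1}{p(x,t)} \left[\frac{\partial p(x,t)}{\partial t}\right]^2 \tag{2}$$

However, time in classical mechanics is a passive quantity that cannot be changed by an external control. The infinitesimal distance on the manifold is then given by $d\mathcal{L}^2 = g(t)\,dt^2$. Here, $\mathcal{L}$ is the Information Length defined by:

$$\mathcal{L}(t) = \int_0^t dt_1\, \sqrt{g(t_1)} \tag{3}$$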

The Information Length represents the dimensionless distance, which measures the total distance traveled on the manifold, or the total number of distinguishable states a system passes through during the course of its evolution. It has previously been used in optimization problems [20]. It was also used to study dynamical systems, thermodynamics, phase transitions, memory effects, and self-organization [21,22,23,24,25,26,27,28].

The gradient of $\mathcal{L}$ with respect to time, $\Gamma = d\mathcal{L}/dt$, then represents a velocity on this manifold:

$$\Gamma^2(t) = \int dx\, \frac{1}{p(x,t)} \left[\frac{\partial p(x,t)}{\partial t}\right]^2 \tag{4}$$

Note that we use the notation $\Gamma^2(t)$ instead of $g(t)$ to make it clear that it is a quantity defined for a time-dependent PDF. $\Gamma$ represents the rate of change of statistically distinguishable states in a time-evolving PDF, and is sometimes referred to in the literature [29,30,31] as the Information Rate. Note that in information theory [32], the term “Information Rate” is used for the rate at which information is passed over the channel [33].

As for the physical significance of $\Gamma$, in a non-equilibrium thermodynamic system, $\Gamma$ is related to the entropy production [30]. $\Gamma$ has also been used to study causality [29] and abrupt changes in the dynamics of a system [8]. Up to a factor of $dt^2$, $\Gamma^2$ is equivalent to the (symmetric) KL divergence of infinitesimally close PDFs, as shown in Appendix E. It should also be noted that $\Gamma$ defined by Equation (4) has the dimensions of inverse time, and the time-integral of $\Gamma$ gives a dimensionless distance in Equation (3).

Due to the lack of general mathematical techniques to solve an SDE or its associated FPE, analytical study of SDEs using Information Geometry ($\Gamma$ and $\mathcal{L}$) has been limited to a few special cases [24,25,34,35]. To date, numerical studies have relied on solving the associated FPE [24,34], which has the advantage of generating smooth time-dependent PDFs and information diagnostics, but has the limitations outlined in Table 1. To overcome the limitations of an FPE solver, in this work, we develop a Monte Carlo (MC) method to study time-dependent PDFs and the Information Geometry of SDEs.

Table 1.

Comparison between grid-based FPE solver and MC SDE simulation.

The main aims of this paper are twofold. The first aim is to develop a new MC SDE simulation method and validate it by recovering the previous results obtained using the FPE method. The second is to calculate unequal time joint PDFs and investigate the effect of nonlinear forces on PDF form and various power-scaling relations. The remainder of the paper is organized as follows: Section 2.1 gives a brief introduction of the theory of MC SDE simulation. Section 2.2 develops the methods to measure Information Geometry from the simulation. Using this method, we compare a linear and a nonlinear SDE in Section 3. In Section 4, we showcase the measurement of joint PDFs of the same variable but at unequal times, which is not possible using FPE solvers. We then study a type of phase transition in the joint PDF of the nonlinear SDE and numerically verify its theoretically calculated scaling relations. Discussions are found in Section 5.

2. Methods

2.1. SDE Simulation

A typical SDE for the variable x in d dimensions has the following form:

$$dx_t = \mu(x_t, t)\,dt + \sigma(x_t, t)\,dW_t \tag{5}$$

Here, $\mu(x_t,t)$ is known as the drift vector (drift coefficient in 1D), $\sigma(x_t,t)$ is the diffusion tensor (diffusion coefficient in 1D), and $W_t$ is the Wiener process [37]. $dW_t$ represents an infinitesimal random noise term, making the equation stochastic. Generalizations of SDEs can be achieved with more general noise terms and higher-order derivative terms, but are not pursued here. The associated FPE describes the time evolution of the PDF of solutions of the SDE.

Instead of numerically solving the FPE, in this work, we use a Monte Carlo (MC) method to estimate $p(x,t)$ by simulating a large number of instances of an SDE. Explicit numerical solution of an SDE involves choosing an initial position $x_0$ and iteratively updating it to get the value at time t, $x_t$. For the MC simulation, we start with a set of initial positions sampled from the desired initial distribution and numerically solve each of them independently. We can then use the set of samples at time t, $\{x_t\}$, to compute the desired statistics. We can formally write this iteration step as follows:

Here, denotes an arbitrary update scheme. There are several methods [38] by which we can create this update. Throughout this work, we will be working with autonomous SDEs ($\mu$ and $\sigma$ do not explicitly depend on time) with diagonal noise ($\sigma$ is a diagonal matrix). Therefore, we will use a simple update scheme called the Milstein scheme [39], which for each component reads:

$$x_{t+\Delta t} = x_t + \mu(x_t)\,\Delta t + \sigma(x_t)\,\Delta W + \frac{1}{2}\,\sigma(x_t)\,\sigma'(x_t)\left(\Delta W^2 - \Delta t\right) \tag{7}$$

Here, $\Delta W$ is a random sample from $\mathcal{N}(0, \Delta t)$. Note that the Milstein scheme is only accurate up to $\mathcal{O}(\Delta t)$, and this error can accumulate through the course of the simulation. Therefore, to control the error in the numerical update scheme, we will adaptively choose the time step for updates by setting a local error tolerance. The local error at time t is defined as the deviation between a single-step update made with time step $\Delta t$ and a two-step update made with time step $\Delta t/2$ each.
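To make the update rule concrete, the following is a minimal NumPy sketch of one Milstein step applied to a whole ensemble at once. The function name `milstein_step`, the callables `mu`, `sigma`, `dsigma`, and the linear-damping parameters in the usage lines are illustrative assumptions, not the implementation of [42].

```python
import numpy as np

def milstein_step(x, dt, mu, sigma, dsigma, rng):
    """Advance every sample in x by one Milstein step of size dt.

    mu, sigma : drift and diffusion coefficients (autonomous, one value per sample)
    dsigma    : derivative of sigma with respect to x
    """
    dW = rng.normal(0.0, np.sqrt(dt), size=x.shape)  # dW ~ N(0, dt)
    s = sigma(x)
    return x + mu(x) * dt + s * dW + 0.5 * s * dsigma(x) * (dW**2 - dt)

# Illustrative usage: linear damping with constant noise (assumed parameters).
theta, noise = 1.0, 1.0
rng = np.random.default_rng(0)
x = rng.normal(3.0, 0.1, size=20_000)        # samples from the initial PDF
for _ in range(1_000):                       # 1000 steps of dt = 0.01
    x = milstein_step(
        x, 0.01,
        mu=lambda y: -theta * y,
        sigma=lambda y: np.full_like(y, noise),
        dsigma=lambda y: np.zeros_like(y),
        rng=rng,
    )
print(x.mean(), x.var())  # variance should approach noise**2 / (2 * theta)
```

For constant noise the derivative term vanishes and the step reduces to the Euler–Maruyama update; the correction only matters when the diffusion coefficient depends on x.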

Here, we omitted the dependence of on and for brevity. $\Delta W_1$ and $\Delta W_2$ are chosen in such a way that $\Delta W = \Delta W_1 + \Delta W_2$. To satisfy the local error tolerance, we need to choose $\Delta t$ such that the local error does not exceed the tolerance. The exact prescription on how this choice is made can be found in [40].
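The step-doubling error estimate described above can be sketched as follows. This is a simplified illustration under stated assumptions: the error norm is taken as the maximum over the ensemble, and a rejected step is simply halved with freshly drawn noise, whereas the actual step-size controller is the one prescribed in [40]. The names `milstein` and `adaptive_step` are hypothetical.

```python
import numpy as np

def milstein(x, dt, dW, mu, sigma, dsigma):
    """One Milstein step with a prescribed noise increment dW."""
    s = sigma(x)
    return x + mu(x) * dt + s * dW + 0.5 * s * dsigma(x) * (dW**2 - dt)

def adaptive_step(x, dt, tol, mu, sigma, dsigma, rng, max_halvings=30):
    """Return (x_new, dt_used, err) such that the local error err <= tol."""
    for _ in range(max_halvings):
        # Two half-step increments whose sum is the full-step increment.
        dW1 = rng.normal(0.0, np.sqrt(dt / 2), size=x.shape)
        dW2 = rng.normal(0.0, np.sqrt(dt / 2), size=x.shape)
        coarse = milstein(x, dt, dW1 + dW2, mu, sigma, dsigma)
        half = milstein(x, dt / 2, dW1, mu, sigma, dsigma)
        fine = milstein(half, dt / 2, dW2, mu, sigma, dsigma)
        err = np.max(np.abs(coarse - fine))   # assumed norm: worst sample
        if err <= tol:
            return fine, dt, err
        dt /= 2                               # reject and retry with a smaller step
    raise RuntimeError("local error tolerance not reached")

# Illustrative usage with cubic damping and unit noise (assumed parameters).
rng = np.random.default_rng(1)
x = rng.normal(5.0, 0.1, size=1_000)
x_new, dt_used, err = adaptive_step(
    x, 0.1, 1e-3,
    mu=lambda y: -y**3,
    sigma=lambda y: np.ones_like(y),
    dsigma=lambda y: np.zeros_like(y),
    rng=rng,
)
print(dt_used, err)
```

Redrawing the noise after a rejection is the simplest choice but is not statistically exact; a production controller would typically reuse the rejected increments, which is one reason to defer to the prescription in [40].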

Computing $\Gamma$ requires computing the time derivative of the PDF of the numerical solutions. Since derivatives are sensitive to numerical noise, we need accurate estimates of the probability distribution. This is achieved by numerically solving a large number of SDEs (we use at least $2 \times 10^7$ samples in this work). This is an impractically large number for most computers. Storing $2 \times 10^7$ numerical solutions, each requiring around 10,000 time steps (depending on the required accuracy), will require at least 1600 GB of memory, assuming 64-bit floating point values. Additionally, assuming a fast 2 ms per solution, the entire simulation will take around 11 h if carried out serially. To solve these problems, we use GPU computing. With GPU computing [41], we can update a whole set of values $\{x_t\}$ simultaneously using Equation (7) to get $\{x_{t+\Delta t}\}$. $\{x_t\}$ can then be removed from memory after computing the desired statistics, which keeps the memory footprint small. As for the runtime, a GPU-based parallel implementation [42] in Python takes around 4 min to simulate samples for 10,000 time steps on a consumer laptop equipped with an Nvidia RTX 2080 GPU. See Appendix A for detailed scaling relations of simulation runtime.
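The memory and runtime figures quoted above follow from simple arithmetic. The sketch below assumes a sample count of 2 × 10^7, which is the value consistent with both the 1600 GB and the 11 h figures, and also shows the footprint when only the current and next states are kept (the streaming strategy described above).

```python
n_samples = 20_000_000      # assumed: consistent with the quoted 1600 GB and 11 h
n_steps = 10_000
bytes_per_value = 8         # 64-bit floating point

# Storing every time step of every trajectory.
full_history_gb = n_samples * n_steps * bytes_per_value / 1e9

# Solving the trajectories one after another at 2 ms each.
serial_hours = n_samples * 2e-3 / 3600

# Keeping only the current and the next state of the ensemble.
streaming_gb = 2 * n_samples * bytes_per_value / 1e9

print(full_history_gb, round(serial_hours, 1), streaming_gb)
```

Under these assumptions the full history needs about 1600 GB, the serial run about 11 h, and the streaming approach about 0.32 GB, which fits comfortably in the memory of a consumer GPU.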

2.2. Estimating $\Gamma$

The form of $\Gamma$ in Equation (4) makes it unsuitable for numerical computation due to the presence of the $p(x,t)$ term in the denominator, which can become zero. We therefore rewrite the equation using the redefinition $q(x,t) = \sqrt{p(x,t)}$:

$$\Gamma^2(t) = 4 \int dx \left[\frac{\partial q(x,t)}{\partial t}\right]^2 \tag{10}$$

To calculate $\Gamma$, we first estimate the PDF using histograms. (It would be more accurate to use kernel density estimators [43], but that is more computationally expensive.) The derivative in Equation (10) is approximated as a finite difference and the integral as a Riemann sum. The specific methods and the error estimates are provided in Appendix B. After computing $\Gamma$, the information length $\mathcal{L}$ can be computed by numerical integration of Equation (3).
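The histogram-based estimate can be sketched in a few lines. The sketch assumes the square-root form $\Gamma^2 = 4\int dx\,(\partial_t \sqrt{p})^2$, a forward finite difference in time, and a shared set of equal-width bins for both time points; the function name `gamma_from_samples` and the bin count are illustrative choices, not those of Appendix B.

```python
import numpy as np

def gamma_from_samples(x_t, x_next, dt, bins=100):
    """Estimate Gamma from two ensembles separated by a time step dt."""
    # Shared bin edges so that both histograms live on the same grid.
    edges = np.histogram_bin_edges(np.concatenate([x_t, x_next]), bins=bins)
    dx = edges[1] - edges[0]
    p_t, _ = np.histogram(x_t, bins=edges, density=True)
    p_next, _ = np.histogram(x_next, bins=edges, density=True)
    # Finite difference of q = sqrt(p); no division by p is needed.
    dq_dt = (np.sqrt(p_next) - np.sqrt(p_t)) / dt
    # Riemann sum over the bins.
    return np.sqrt(4.0 * np.sum(dq_dt**2) * dx)

# Illustrative check: two unit-variance Gaussians whose means differ by 0.5.
rng = np.random.default_rng(0)
a = rng.normal(0.0, 1.0, 200_000)
b = rng.normal(0.5, 1.0, 200_000)
gamma_est = gamma_from_samples(a, b, dt=1.0)
print(gamma_est)  # expected to land close to (mean shift) / (std) = 0.5
```

Because the estimate is built from squared differences of noisy histograms, sampling noise adds a small positive bias that does not vanish when the two PDFs are identical, which is why the relative error grows as the true value approaches zero.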

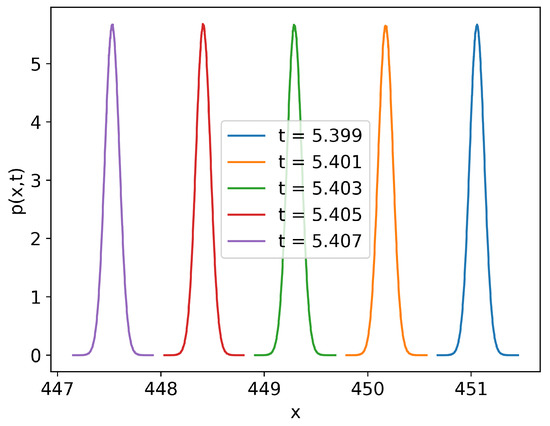

A major source of error in the estimation of $\Gamma$ is the compact support and limited overlap of numerically estimated probability densities. (This will also be a source of error with numerical FPE solvers.) During the simulation, time steps are chosen adaptively by setting a tolerance for the local numerical error. However, in some regimes, this results in the system evolving too fast, so that there is little or no overlap between the probability densities at adjacent time steps (Figure 1). Theoretically, these densities (Figure 1) have the support of the entire real line, and the only error will be caused by approximating the derivative and the integral. However, in practice, we are running the MC simulation with a finite sample size, and computers have finite numerical precision; as such, the estimated densities have compact support. Figure 2 shows how the amount of overlap between the densities affects the error in the $\Gamma$ estimate. There are two main sources of sub-optimal overlap: changes in mean and changes in standard deviation.

Figure 1.

Probability density at adjacent time steps for the SDE with initial normal distribution . Time steps were chosen adaptively to limit local error to . The specific time interval was chosen to showcase the lack of overlap between PDFs.

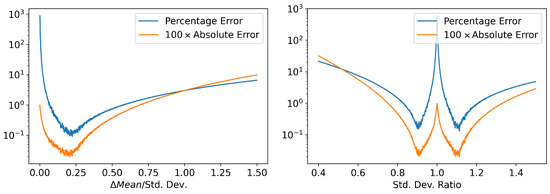

Figure 2.

(left) Error in calculation for two Gaussians with same standard deviation (Std. Dev. = 1) but with different means. The error estimate of is lowest when . (right) Error in calculation for two Gaussians with same Mean (Mean = 0) but with different standard deviation. The error estimate is lowest when ratio of standard deviation is approximately or (≈0.9). Discretized version of Equation (10) was used for the estimate and PDF was approximated by a histogram with 703 bins. We considered samples for each distribution. is chosen to be 1. Estimates were repeated 40 times and mean of the error was taken.

Consider chosen adaptively to satisfy the local error tolerance. Now assume for some , we get optimal overlap. If , we can wait for a few steps before estimating the . However, if , we can choose a as a temporary time step and generate a collection of points from using Equation (7), which results in optimal overlap between densities. The local error will still be smaller than the tolerance since . In order to derive the value of , we use the Milstein update scheme and restrict ourselves to the one-dimensional case for simplicity. First, we look at the change in mean value.

Taking the expectation value of both sides, we get:

Here, we use the fact that and . Note that and are independent random variables. For X and Y, the independent random variables are .

In order to achieve optimal overlap (Figure 2 (left)), we need .

Now to derive the effect on change in standard deviation on , a similar calculation for can be performed, which yields:

Now, in order to achieve optimal overlap, we need (Figure 2 (right)). when and when .

Note that we have only considered the first and second moments here. It is potentially possible to improve the accuracy of the estimate by considering higher moments. However, this improvement will be marginal, since overlap between two distributions is most affected by its first and second moments.

After calculating both the time steps and , we take the minimum of the two to perform the update on to get , where . After the estimation, the is discarded and we continue the simulation with . This prevents any significant slowing in the simulation if . Note that calculating and using the entire set of points will be computationally expensive. Only a small subset is used to perform this calculation.

After is estimated, we can calculate the information length by approximating the integral as a Riemann sum.
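The Riemann-sum integration can be sketched as below for non-uniform (adaptive) time points. As a sanity check the sketch uses a synthetic test case, a Gaussian of fixed standard deviation s whose mean decays as x0·exp(−θt), for which Γ = θ·x0·exp(−θt)/s and the Information Length is known in closed form; this test case and all parameter values are assumptions for illustration, not one of the processes studied here.

```python
import numpy as np

def information_length(gamma, t):
    """Cumulative left Riemann sum of gamma over (possibly non-uniform) times t."""
    dt = np.diff(t)
    return np.concatenate([[0.0], np.cumsum(gamma[:-1] * dt)])

# Synthetic check: fixed-width Gaussian with exponentially decaying mean.
x0, theta, s = 3.0, 1.0, 0.5
t = 5.0 * np.linspace(0.0, 1.0, 2001) ** 2     # finer steps early on
gamma = theta * x0 * np.exp(-theta * t) / s    # exact Gamma for this test case
L = information_length(gamma, t)
L_exact = x0 * (1.0 - np.exp(-theta * t)) / s
print(L[-1], L_exact[-1])
```

Concentrating the time points where Γ changes fastest, as the adaptive stepping naturally does, keeps the error of the simple left sum small without requiring a higher-order quadrature.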

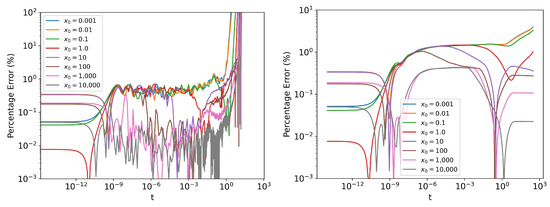

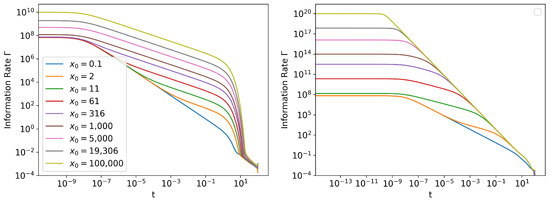

Looking at Figure 3, it can be seen that the percentage error in $\Gamma$ blows up towards the end of the simulation. This is when the system approaches a stationary state and the probability density stops evolving. The exact $\Gamma$ reaches zero, whereas the numerical estimate will have a small nonzero error (Figure 4). Even though the percentage error in $\Gamma$ becomes large, the absolute error remains small (Figure 2 (left)) and will have minimal contribution to the error in the Information Length calculation. However, when the initial distribution is 'closer' to the stationary distribution (small values in Figure 3), the absolute value of the Information Length will be small, and the error in the $\Gamma$ estimate will have a more significant contribution to the Information Length calculation.

Figure 3.

Error in estimated (left) and (right) by simulating parallel instances of the Equation with initial values sampled from the normal distribution and computing the PDFs using histograms with 703 bins. The local error tolerance was . Note that an initial step size was chosen to produce more frequent estimates in the initial part of the simulation. See Appendix D for exact solution.

Figure 4.

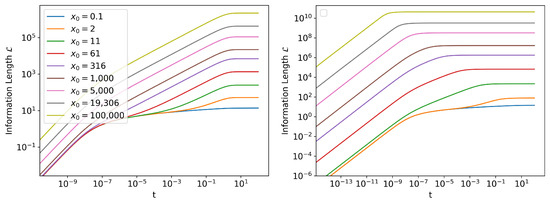

The $\Gamma$ of (left) the linear SDE and (right) the cubic SDE for different initial conditions, as described in Section 3. Note that towards the end of the range of t, the values will be dominated by errors, as shown in Figure 3. Therefore, instead of dropping to zero, they will have a finite nonzero value. For the exact solution of the linear SDE, see Appendix D.

It should be noted that measuring $\Gamma$ for multi-dimensional problems is a significant computational challenge which requires further investigation. MC SDE simulation is better at handling such problems compared with FPE solvers due to its linear scaling with the number of dimensions. However, this comes at the price of accuracy when estimating PDFs of higher dimensional problems. It is still possible to accurately study $\Gamma$ from marginal PDFs of multi-dimensional problems using MC SDE simulation.

A Python implementation of the SDE simulation, along with the $\Gamma$ measurement used in this work, can be found in [42].

3. Linear vs. Cubic Statistics

In this section, we will use the methods developed in the previous section to study the effect of nonlinear damping on the Information Geometry of a stochastic process. We will compare the Ornstein–Uhlenbeck process [44], a prototypical model of a linear driven dissipative process, defined by the linear SDE:

with a cubic SDE defined by the equation:

The cubic damping term has been previously used to model emissions [45], phase transitions [24], and self-organized shear flows [46].

Throughout this section, unless otherwise stated, we restrict ourselves to the values and . The initial distribution is always , and we run the SDE simulation from to . The data in this section were generated using samples, and PDF was approximated by a histogram with 703 bins, which was chosen using an empirical formula . The time steps were adaptively chosen by setting local error tolerance at .

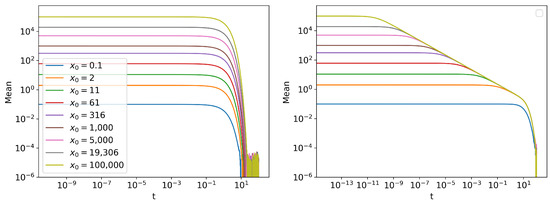

Two of the simplest statistics that can be measured are the mean and the standard deviation of the distribution (Figure 5 and Figure 6). The trends in mean can be readily seen by taking expectation value on both sides of Equations (16) and (17). For the linear SDE, we have , which can be solved to get . For the Cubic SDE, we can follow similar steps:

Figure 5.

The mean of the distribution for different initial conditions of (left) linear SDE and (right) cubic SDE.

Figure 6.

The standard deviation of the distribution for different initial conditions of (left) linear SDE and (right) cubic SDE. Note that for the linear SDE, all the lines overlap.

The approximate solution of the mean value of Cubic SDE is only valid when the standard deviation of the distribution is much smaller than the mean (Appendix C). Note that in Equation (20), when , , making the trajectory independent of . This can be seen in Figure 5 (right), when all the lines merge into one. However, around , the approximation fails, since the value of the standard deviation (Figure 6 (right)) and the mean (Figure 5 (right)) becomes comparable. Therefore, the curves fail to follow the same trajectory for .
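The approximation discussed above can be written out explicitly. The derivation below is a sketch that assumes the cubic SDE has the form $dx = -\theta x^3\,dt + \sigma\,dW$ and that the initial mean is $x_0$; these symbols are assumptions introduced for illustration and may differ from the notation of Equations (17) and (20).

```latex
\begin{aligned}
\frac{d\langle x\rangle}{dt}
  &= -\theta\,\langle x^3\rangle
   = -\theta\left[\langle x\rangle^3
      + 3\,\langle x\rangle\,\mathrm{Var}(x)
      + \big\langle (x-\langle x\rangle)^3 \big\rangle\right] \\
  &\approx -\theta\,\langle x\rangle^3
   \qquad \text{when } \sqrt{\mathrm{Var}(x)} \ll |\langle x\rangle| , \\[4pt]
\frac{d}{dt}\,\langle x\rangle^{-2} &= 2\theta
  \;\;\Longrightarrow\;\;
  \langle x\rangle(t) = \frac{x_0}{\sqrt{1 + 2\theta x_0^2\, t}} , \\[4pt]
\langle x\rangle(t) &\approx \frac{1}{\sqrt{2\theta t}}
   \qquad \text{when } 2\theta x_0^2\, t \gg 1 .
\end{aligned}
```

The last line no longer contains $x_0$, which is consistent with the curves for different initial conditions merging into one, and the neglected variance term explains why the approximation breaks down once the standard deviation becomes comparable to the mean.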

The trends in the standard deviation of the linear SDE are readily explained by Equation (A14), which does not depend on the initial mean position, but only on the initial standard deviation, the drift coefficient, and the diffusion coefficient. At the time of writing, no exact analytic solution is known for the cubic SDE. However, an approximate analytic treatment can be found in [47].

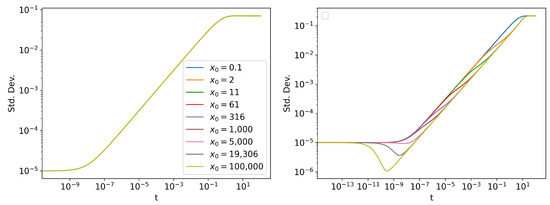

We define the asymptotic Information Length ($\mathcal{L}_\infty$) to be the Information Length accumulated by the time the system reaches the stationary state of its PDF.

Analytically, an SDE reaches its stationary state as $t \to \infty$. However, numerically, we see that the probability density stops evolving after a finite time. We can see this from Figure 7, as $\mathcal{L}$ becomes a constant. $\mathcal{L}$ will still continue to increase slightly due to numerical error, but this contribution will be negligible, as shown in Figure 3. $\mathcal{L}_\infty$ measures how many statistically distinguishable states the system passes through to reach its stationary state. From Figure 6, it is evident that, compared to the linear SDE, the PDF of the cubic SDE undergoes a lot more change before reaching its stationary state for larger values of the initial mean position. For small values of the initial mean position, the trend in standard deviation is similar between linear and cubic SDEs, since the initial evolution of the cubic process is Gaussian. This is confirmed in Figure 7, which shows that for large values of the initial mean position, $\mathcal{L}_\infty$ is significantly larger for the cubic SDE for the same parameter values and, for small values, the values are comparable.

Figure 7.

The Information Length of (left) linear SDE and (right) cubic SDE for different initial conditions . For exact solution of linear SDE, see Appendix D.

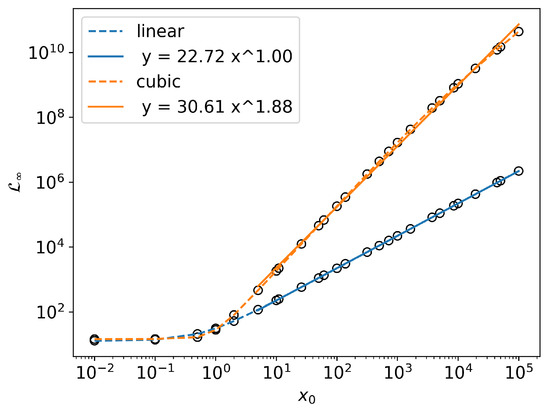

Information Length Scaling

In Figure 7, we have already seen that depends on and has different behavior for linear and cubic SDEs. In [47], by numerically solving the FPE (also analytically for the linear SDE), it was shown that for large values of , shows different scaling behavior for linear and cubic SDEs. For the linear SDE, . For the cubic SDE, , where . We reproduce this result in Figure 8. Note that, since is a dimensionless quantity, it is not possible to derive these values theoretically using dimensional analysis. In the absence of general analytic tools to study this property, numerical methods are indispensable.
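A scaling exponent of this kind is typically extracted with a straight-line fit in log-log space. The sketch below demonstrates the procedure on synthetic data only: the prefactor 2.0, the exponent 1.5, and the noise level are arbitrary illustrative values and are not results of this work.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for (initial mean position, asymptotic Information Length) pairs.
x0 = np.logspace(1, 3, 10)
L_inf = 2.0 * x0**1.5 * np.exp(rng.normal(0.0, 0.02, x0.size))

# A power law y = A * x**m is a straight line in log-log coordinates.
slope, intercept = np.polyfit(np.log(x0), np.log(L_inf), 1)
print(slope, np.exp(intercept))  # estimated exponent m and prefactor A
```

Restricting the fit to the large-value regime matters in practice, since the power law is only expected to hold asymptotically.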

Figure 8.

Scaling behavior of with respect to for linear and cubic SDE with and .

4. Unequal Time Joint PDF: Bimodality for Cubic Force

A clear advantage of MC SDE simulation over solving the FPE is the ability to compute unequal time joint PDFs. Unequal time joint PDFs have previously been used to establish causal relations. In this section, we showcase the ability of MC SDE simulation to estimate these joint PDFs and study their properties.

4.1. Unequal Time PDFs in the Stationary State

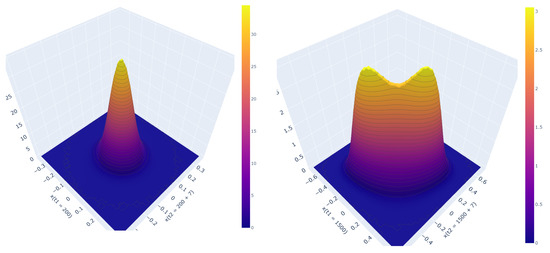

For the linear SDE, the joint PDF is always a Gaussian, but with a covariance matrix that depends on $t_1$ and $t_2$. However, for the cubic SDE, we see the emergence of a bimodal distribution (Figure 9 (right)) depending on the $t_1$ and $t_2$ values. This behavior prevails even after the system has reached its stationary state, but now only depends on the difference $t_2 - t_1$. The bimodality indicates a finite memory induced by the nonlinearity. In order to further study this behavior, we first ensure the system has reached a stationary state by simulating from to with a sample size of for the cubic process. After setting , values are chosen from the data generated by further simulating the system for 300 time units. The PDF was approximated by a histogram with bins. The time steps were adaptively chosen by setting the local error tolerance as .

Figure 9.

for (left) linear SDE with and and (right) cubic SDE with and . For both the SDEs, and . Both SDEs have reached their stationary states.

Note that, because of the symmetry of diffusion term and the drift term, the bimodality of for cubic SDE is always symmetric with respect to the line . Therefore to make the numerical study of the bimodality easier, we restrict our attention to the diagonal of the joint probability density , which is then normalized to integrate to one.
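Because every trajectory is tracked individually, the unequal time joint PDF is simply a two-dimensional histogram of the same samples recorded at two times. The sketch below illustrates this for a cubic process, including the extraction and normalization of the diagonal. The drift and noise coefficients, the fixed time step, the burn-in duration, the time lag, and the bin layout are all assumptions chosen for a quick illustration rather than the settings used in this work.

```python
import numpy as np

rng = np.random.default_rng(0)
theta, noise = 1.0, 1.0          # assumed drift and noise coefficients
dt, n = 0.01, 50_000             # fixed step for brevity (no adaptive control)

def evolve(x, duration):
    """Euler-Maruyama steps (identical to Milstein for constant noise)."""
    for _ in range(int(round(duration / dt))):
        x = x - theta * x**3 * dt + noise * rng.normal(0.0, np.sqrt(dt), x.shape)
    return x

x1 = evolve(rng.normal(0.0, 1.0, n), 5.0)   # burn-in towards the stationary state
x2 = evolve(x1, 0.5)                        # the same trajectories 0.5 time units later

# Joint PDF of (x at the first time, x at the second time) on a common grid.
edges = np.linspace(-3.0, 3.0, 61)
H, _, _ = np.histogram2d(x1, x2, bins=[edges, edges], density=True)
dx = edges[1] - edges[0]

# Diagonal of the joint PDF, renormalized to integrate to one.
diag = np.diag(H).copy()
diag /= diag.sum() * dx
print(np.corrcoef(x1, x2)[0, 1])
```

Pairing `x1[i]` with `x2[i]` is what carries the temporal correlation; a grid-based FPE solver only provides the single-time PDF and therefore has no access to this pairing.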

To quantify the bimodality of , we use the following fitting function to perform a nonlinear fit and estimate the parameters.

To motivate this fitting function, note that for , we get a purely quartic exponential, since it is nothing but the stationary state of the cubic SDE. For , the correlation between points reaches zero, , the product of two independent stationary distributions. The quadratic exponential terms are motivated by Gaussian distribution of the noise, and are found to describe the data accurately. There are two quadratic exponential terms, since the SDE is symmetric about the point and the bimodal peaks occur symmetrically on opposite sides.

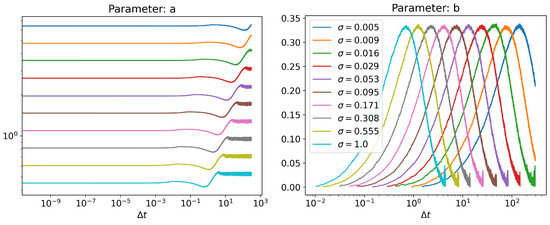

In the fitting function, parameter a is a measure of the overall height of the density curve. Parameter b represents the ratio of the contribution from the quadratic exponential to that from the quartic exponential, and denotes the degree of bimodality. Parameter e is the location of the peaks. Parameter c is a measure of the overall spread of the density function, while parameter d is a measure of the spread of the bimodal peaks. Note that nonzero values for parameters b and e denote bimodality in the distribution.

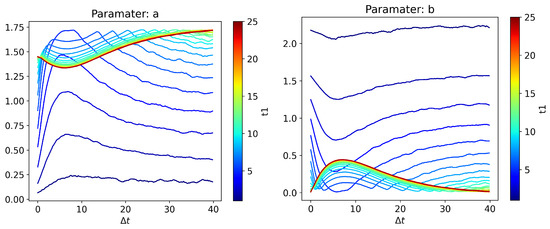

From Figure 10, we can see that the joint PDF becomes bimodal for a range of values, since parameters b and e have nonzero values. The standard deviation of the quadratic term denoted by parameter d is almost a constant for a fixed , whereas the location of the bimodal peak denoted by parameter e changes slightly, but never becomes zero. That means that while transitioning from a bimodal to a unimodal distribution, the bimodal peaks do not continuously merge into one another, but slowly become less prominent and eventually disappear, as inferred from the value of parameter b. The artifacts towards the end of the curves of parameters b and e are due to the fact that it is not possible to consistently fit parameters b and e when there is negligible contribution from the quadratic exponential term as .

Figure 10.

Panels show the values of a, b, c, d and e parameters of Equation (22), obtained through nonlinear function fitting at each time point , expressed as a function of for different noise levels . To ensure the density function for the cubic process reached a stationary state, we chose . Note that for parameters b, d and e, the domain of the plots is restricted to the region where parameter b is nonzero. Noise starts dominating outside this region, since there is negligible contribution from the quadratic terms, and nonlinear fit cannot find a consistent unique value for these parameters.

The values of parameter a and c in Figure 10 can be understood by first considering the and limits. For , we have , where is the stationary state. The diagonal of joint PDF then becomes . Here, after integrating x, we can find, for all values of , which can be numerically verified. When , since there is no correlation, . The normalized diagonal then becomes . The normalization factor can be derived by integrating out x. Therefore, when , we have and , which agrees with numerical results. For intermediate values, there will be correlation, and the behavior cannot be easily explained.

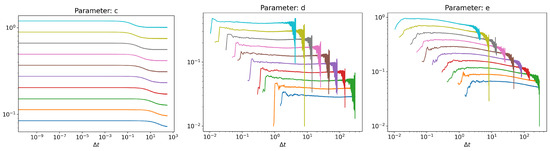

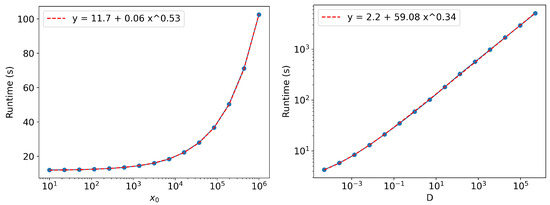

The trends in Figure 10 for different values can be explained by looking at scaling relations. For the cubic equation, we have . Looking at the individual terms, we can infer the dimensions, and . Therefore, we expect the following scaling relations:

These relations are numerically verified in Figure 11 for a fixed value of , for parameters a, c, d, e, and the relationship between noise and , corresponding to peak value of parameter b. Note that in Figure 11, because parameter a is a normalization constant. . The peak value of parameter b seems to be a constant with the value of . It cannot be derived from simple scaling arguments alone; further investigation is required to understand its origin.
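The dimensional argument behind these scaling relations can be written out step by step. The sketch below assumes that the cubic SDE has the form $dx = -\theta x^3\,dt + \sigma\,dW$ with $\sigma$ the noise amplitude, and that in the fitting function c multiplies $x^4$ and d multiplies $(x \mp e)^2$ inside the exponentials; these conventions are assumptions made for illustration, and the exponents would change under a different convention (for example, a noise strength proportional to $\sigma^2$).

```latex
\begin{aligned}
&[dW] = T^{1/2}, \qquad [\sigma] = X\,T^{-1/2}, \qquad [\theta] = X^{-2}\,T^{-1}, \\[4pt]
&x_* = \left(\frac{\sigma^2}{\theta}\right)^{1/4}, \qquad
 t_* = \left(\theta\,\sigma^2\right)^{-1/2}
 \qquad \text{(only length and time scales available)}, \\[4pt]
&\text{at fixed } \theta: \quad
 e \propto x_* \propto \sigma^{1/2}, \quad
 a \propto x_*^{-1} \propto \sigma^{-1/2}, \quad
 d \propto x_*^{-2} \propto \sigma^{-1}, \quad
 c \propto x_*^{-4} \propto \sigma^{-2}, \\[4pt]
&\tau_{\text{peak}} \propto t_* \propto \sigma^{-1}, \qquad
 b \propto \sigma^{0} \quad \text{(dimensionless)} .
\end{aligned}
```

Here parameter a carries the dimension of inverse length because it normalizes a one-dimensional density, and the dimensionless parameter b can only be a pure number under this argument, which is consistent with its peak value being independent of the noise level.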

Figure 11.

(left) Scaling behavior of the peak position of parameter b with respect to noise level and (right) scaling behavior of the parameter values corresponding to the peak position of parameter b.

4.2. Evolution of Bimodality in the Non-Stationary State

In this section, we will look at how the bimodality in the joint PDF evolves qualitatively before has reached a stationary state. Since bimodality occurs only for the cubic process, the following results are for the SDE . To this end, we follow the same prescription from Section 4, but with a slightly modified version of the fitting function (Equation (22)).

This modification is made since for some and values, there is no contribution from the quartic exponential term, unlike in the case of stationary state, where there is always a quartic exponential contribution to the distribution.

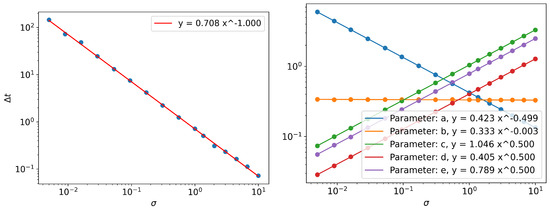

The nonstationary phase exhibits (Figure 12) rich behavior, which asymptotically transitions to the stationary state behavior as becomes large. For small values, Parameter a ≪ Parameter b, since has very little contribution from the quartic exponential term. This is because the initial distribution is a Gaussian distribution, and it is slowly evolving towards the quartic exponential distribution in the stationary state. For larger values, Parameter a starts dominating, since has predominant contribution from quartic exponential term, as expected of the system reaching its quartic exponential stationary state. For some intermediate values, depending on values, we see an interesting behavior where becomes purely a quartic exponential (Parameter b = 0) twice before becoming a mixture of quadratic and quartic exponential terms. Further investigation into this behavior is not undertaken at this time, and only serves to demonstrate the potential capabilities of GPU-accelerated MC SDE simulation for future work.

Figure 12.

Behavior of (left) Parameter a and (right) Parameter b for different and values.

5. Discussion

In this work, we developed a method for fast and accurate study of the Information Geometry of SDEs using Monte Carlo simulation. We identified the computational challenges and overcame them by using GPU computing. Specifically, the major limitation of MC SDE simulation in the estimation of $\Gamma$ was the sub-optimal overlap of PDFs at subsequent time points. We solved this problem by developing an interpolation method to compute PDFs with optimal overlap.

As an application of the new method, we compared the Information Geometry of SDEs with a linear and a cubic damping force. We were able to reproduce the analytic results for the linear SDE and the previous numerical results [34] for cubic SDE obtained using FPE solvers. This was particularly true of large values of , for the linear case and for the cubic case, where when and . We further showcased the advantage of MC SDE simulation over FPE solver by computing the joint PDF . Unlike the linear SDE, the cubic SDE led to an interesting bimodal PDF , which was observed even after reaching a stationary state. After reaching the stationary state only depends on . In the stationary state, we further studied the bimodality by quantifying it and looking at the power-law scaling relations with respect to noise levels and provided theoretical scaling arguments. The maximum value of the ratio of quadratic to quartic contribution (Parameter b) is found to be a constant irrespective of the noise levels, which requires further study. Finally, we qualitatively looked at in the nonstationary state. The MC SDE simulation can be an important tool for further studying this behavior.

It is important to note that the methods that we developed here for one stochastic variable are general, and can be extended for more than one variable, as well as for investigating different metrics or thermodynamic quantities. These will be addressed in future work. Furthermore, it will be of interest to investigate fast implementations of Kernel Density Estimators [48,49] which will improve the accuracy of joint PDF estimates. We note that in numerical experiments, it was seen that compared with histograms, using Kernel Density Estimators to estimate PDFs provided 2–5 times reduction in error with identical PDFs, albeit with a performance trade-off.

Author Contributions

Conceptualization, A.A.T. and E.-j.K.; Formal analysis, A.A.T.; Investigation, A.A.T. and E.-j.K.; Methodology, A.A.T.; Project administration, E.-j.K.; Resources, A.A.T.; Software, A.A.T.; Supervision, E.-j.K.; Validation, A.A.T. and E.-j.K.; Visualization, A.A.T.; Writing—original draft, A.A.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, A.A.T., upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SDE | Stochastic Differential Equation. |
| FPE | Fokker–Planck Equation. |
| PDF | Probability Density Function. |
| MC | Monte Carlo. |
| GPU | Graphics Processing Unit. |

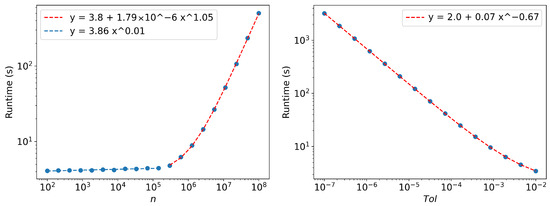

Appendix A. Runtime Scaling

We use the linear O-U process to study the runtime scaling relations of MC SDE simulation. Simulations start with n samples from an initial distribution . The simulations were run for 100 time units on a workstation with an AMD EPYC 7451 24-Core CPU and an Nvidia Titan RTX GPU, using the implementation in [42]. The Milstein scheme was used with adaptive time steps and a local error tolerance . Unless otherwise stated, the parameters have the values: . Note that the reported computation involves only the MC SDE simulation. Calculating the statistics and probability density requires additional computation. This might increase the overall computational time, but the exponent in the scaling relationship will remain the same.

For MC SDE simulation, as seen in Figure A1, initially the runtime weakly depends on n since computations are being done in parallel. But when n is significantly larger than the number of parallel processors, the computations need to be done in batches and the runtime scales linearly.
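The two regimes described above can be illustrated with a toy model. This is only a sketch, not the timing model of the implementation in [42]: the function `batched_runtime`, the number of parallel lanes `n_parallel`, and the cost `time_per_batch` are hypothetical names and values chosen for illustration.

```python
import math


def batched_runtime(n_samples, n_parallel=1000, time_per_batch=1.0):
    """Toy runtime model (an assumption, not a measurement).

    Samples are processed in batches of at most `n_parallel`, and each
    batch is assumed to cost `time_per_batch` regardless of how full it is.
    """
    n_batches = math.ceil(n_samples / n_parallel)
    return n_batches * time_per_batch


# Flat while n fits in one batch, then linear in n for n >> n_parallel.
for n in (10, 1000, 10_000, 100_000):
    print(n, batched_runtime(n))
```

In this toy model the runtime is independent of n up to `n_parallel` and grows tenfold when n grows from 10,000 to 100,000, which mirrors the weak dependence followed by linear scaling described for Figure A1.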

Figure A1.

(left) Scaling of runtime with respect to the number of samples n. (right) Scaling of runtime with respect to local error tolerance .

Without adaptive time stepping, numerical error depends on the choice of step size . The runtime is expected to scale as . But by setting a local error tolerance we see a better scaling as seen in Figure A1.

From Figure A2, the runtime of MC SDE simulation scales as with respect to initial position . This is better compared to fixed grid-size FPE solvers where at least an scaling is expected. We also see better than linear runtime scaling with respect to noise levels. Runtime .

Figure A2.

(left) Scaling of runtime with respect to different initial position . Note that unlike other plots in this section, the y-axis is not in log scale. (right) Scaling of runtime with respect to different noise levels .

Appendix B. Discretization Error

Differentiation can be approximated by a finite difference:

Other methods of numerical differentiation exist, but they are not suitable for the algorithms in this work. The error incurred by using a finite difference to approximate a derivative can be derived from the Taylor expansion.

An integral can be approximated by the trapezoidal rule:

where the interval is divided into N sub-intervals and . If the sub-intervals have equal width, the error in the approximation can be bounded [50].
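As a numerical illustration of the two approximations in this appendix, the sketch below uses a forward difference and the composite trapezoidal rule with equal-width sub-intervals. The choice of a forward (rather than central) difference, the test function sin, and the names `forward_difference` and `trapezoid` are assumptions made for the example. The bounds used are the standard ones: h/2 · max|f''| for the forward difference and (b − a)³/(12N²) · max|f''| for the trapezoidal rule.

```python
import math


def forward_difference(f, x, h):
    """Forward-difference approximation of f'(x) with step h."""
    return (f(x + h) - f(x)) / h


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule on [a, b] with n equal sub-intervals."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return h * total


# Derivative of sin at x = 1; the exact value is cos(1).
h = 1e-3
fd_error = abs(forward_difference(math.sin, 1.0, h) - math.cos(1.0))
fd_bound = 0.5 * h  # h/2 * max|f''|, with max|sin''| = 1

# Integral of sin over [0, pi]; the exact value is 2.
n = 100
trap_error = abs(trapezoid(math.sin, 0.0, math.pi, n) - 2.0)
trap_bound = math.pi**3 / (12 * n**2)  # (b-a)^3 / (12 N^2) * max|f''|

print(fd_error, fd_bound)
print(trap_error, trap_bound)
```

Both measured errors stay below their bounds, and halving h (or doubling n) would be expected to reduce the errors by roughly factors of two and four, respectively.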

Appendix C. Jensen’s Inequality

Jensen’s inequality states that for a random variable X and convex function

Here we will derive the error term for this inequality. We assume that is at least twice differentiable in the domain of interest. Then we can write the Taylor series of around with the remainder term as follows:

Taking expectation on both sides we get:

For the case of we have:

where is the skewness of the distribution and the standard deviation.
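The size of the gap in Jensen's inequality can be checked numerically. The sketch below is an illustration under stated assumptions, not the derivation used in this appendix: it takes the convex function to be exp, draws X from a normal distribution with hypothetical parameters, and compares the sampled gap E[f(X)] − f(E[X]) with the leading Taylor term f''(μ)σ²/2. The helper name `jensen_gap` is introduced only for this example.

```python
import numpy as np


def jensen_gap(f, samples):
    """Sample estimate of E[f(X)] - f(E[X]); non-negative for convex f."""
    return np.mean(f(samples)) - f(np.mean(samples))


rng = np.random.default_rng(0)  # fixed seed so the sketch is reproducible
x = rng.normal(loc=0.5, scale=0.2, size=200_000)  # hypothetical parameters

gap = jensen_gap(np.exp, x)

# Leading-order Taylor estimate of the gap: f''(mu) * sigma^2 / 2,
# where f'' = exp for the assumed test function.
second_order = 0.5 * np.exp(np.mean(x)) * np.var(x)

print(gap, second_order)
```

For a narrow distribution the two numbers agree to within about a percent; the remaining difference comes from the higher-order terms of the expansion.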

Appendix D. OU Process Exact Solution

For the SDE , if the initial distribution of states is given by the following:

Then, at an arbitrary time, the probability density is given by [35]:

We then use Integrate[] in Mathematica to evaluate Equation (4), which upon simplification yields the following closed form expression for :

This expression can be further integrated to get the Information Length.

A detailed analysis of the problem can be found in [34].
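An exact solution of this kind is what an MC SDE simulation can be validated against. The sketch below is a minimal illustration under assumptions that are not taken from this appendix: the O-U process is written as dx = −γx dt + √(2D) dW, all samples start from a single point x0 (rather than from a distribution of finite width), and a fixed time step is used. For this additive-noise SDE the Milstein correction term vanishes, so the Euler–Maruyama update shown here coincides with the Milstein update. The names `simulate_ou` and `ou_exact_moments` are hypothetical.

```python
import numpy as np


def simulate_ou(x0, gamma, D, t_end, dt, n_samples, seed=0):
    """Fixed-step Euler-Maruyama samples of dx = -gamma*x dt + sqrt(2D) dW."""
    rng = np.random.default_rng(seed)
    x = np.full(n_samples, x0, dtype=float)
    n_steps = int(round(t_end / dt))
    for _ in range(n_steps):
        noise = rng.standard_normal(n_samples)
        x += -gamma * x * dt + np.sqrt(2.0 * D * dt) * noise
    return x


def ou_exact_moments(x0, gamma, D, t):
    """Exact mean and variance at time t for a point start at x0."""
    mean = x0 * np.exp(-gamma * t)
    var = (D / gamma) * (1.0 - np.exp(-2.0 * gamma * t))
    return mean, var


# Hypothetical parameter values, chosen only for the illustration.
x0, gamma, D, t_end = 2.0, 1.0, 0.5, 1.0
samples = simulate_ou(x0, gamma, D, t_end, dt=0.01, n_samples=50_000)
exact_mean, exact_var = ou_exact_moments(x0, gamma, D, t_end)

print(samples.mean(), exact_mean)
print(samples.var(), exact_var)
```

The sample mean and variance should match the exact values up to the O(dt) discretization bias and the O(1/√n) sampling error; since the exact PDF is Gaussian in this setting, these two moments determine it completely.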

Appendix E. Γ from KL Divergence

KL divergence between two PDFs and is defined as:

Now consider a time-dependent PDF . The equivalence between KL divergence and can be shown as below.

Note that from Equation (A23) onwards for brevity. To simplify Equation (A24) we use the normalization condition for the PDF , which also leads to the conditions and . Using the same arguments, it can be shown that:
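As a numerical illustration of the relation between the KL divergence and Γ discussed in this appendix, the sketch below checks that 2·KL/dt² between a PDF at times t and t + dt approaches Γ² for small dt. Everything specific here is an assumption for the example: Γ² is taken to be ∫(∂ₜp)²/p dx, the PDF is a Gaussian with hypothetical mean μ(t) and standard deviation σ(t), for which Γ² = (μ̇² + 2σ̇²)/σ², and the closed-form KL divergence between two Gaussians is used.

```python
import math


def gaussian_kl(mu1, s1, mu2, s2):
    """KL divergence KL(N(mu1, s1^2) || N(mu2, s2^2)) in closed form."""
    return math.log(s2 / s1) + (s1**2 + (mu1 - mu2) ** 2) / (2.0 * s2**2) - 0.5


def mu(t):
    """Hypothetical time-dependent mean."""
    return 2.0 * math.exp(-t)


def sigma(t):
    """Hypothetical time-dependent standard deviation."""
    return math.sqrt(0.5 - 0.4 * math.exp(-2.0 * t))


def gamma_squared(t):
    """Gamma^2 = (mu_dot^2 + 2 sigma_dot^2) / sigma^2 for a Gaussian PDF."""
    mu_dot = -2.0 * math.exp(-t)
    sigma_dot = 0.4 * math.exp(-2.0 * t) / sigma(t)
    return (mu_dot**2 + 2.0 * sigma_dot**2) / sigma(t) ** 2


t, dt = 1.0, 1e-3
kl = gaussian_kl(mu(t), sigma(t), mu(t + dt), sigma(t + dt))
gamma_sq_from_kl = 2.0 * kl / dt**2

print(gamma_sq_from_kl, gamma_squared(t))
```

The two values agree up to a relative error of order dt, consistent with the KL divergence being quadratic in dt at leading order.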

References

1. Oksendal, B. Stochastic Differential Equations: An Introduction with Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

2. Sauer, T. Numerical solution of stochastic differential equations in finance. In Handbook of Computational Finance; Springer: Cham, Switzerland, 2012; pp. 529–550.

3. Panik, M.J. Stochastic Differential Equations: An Introduction with Applications in Population Dynamics Modeling; John Wiley & Sons: Hoboken, NJ, USA, 2017.

4. Kareem, A.M.; Al-Azzawi, S.N. A stochastic differential equations model for internal COVID-19 dynamics. In Proceedings of the Journal of Physics: Conference Series, Babylon, Iraq, 5–6 December 2020; IOP Publishing: Bristol, UK, 2021; Volume 1818, p. 012121.

5. Mahrouf, M.; Boukhouima, A.; Zine, H.; Lotfi, E.M.; Torres, D.F.; Yousfi, N. Modeling and forecasting of COVID-19 spreading by delayed stochastic differential equations. Axioms 2021, 10, 18.

6. El Koufi, A.; El Koufi, N. Stochastic differential equation model of COVID-19: Case study of Pakistan. Results Phys. 2022, 34, 105218.

7. Risken, H. Fokker-planck equation. In The Fokker-Planck Equation; Springer: Cham, Switzerland, 1996; pp. 63–95.

8. Guel-Cortez, A.J.; Kim, E.j. Information geometric theory in the prediction of abrupt changes in system dynamics. Entropy 2021, 23, 694.

9. Amari, S.I.; Nagaoka, H. Methods of Information Geometry; American Mathematical Society: Boston, MA, USA, 2000; Volume 191.

10. Gibbs, A.L.; Su, F.E. On choosing and bounding probability metrics. Int. Stat. Rev. 2002, 70, 419–435.

11. Majtey, A.; Lamberti, P.W.; Martin, M.T.; Plastino, A. Wootters’ distance revisited: A new distinguishability criterium. Eur. Phys. J. At. Mol. Opt. Plasma Phys. 2005, 32, 413–419.

12. Diosi, L.; Kulacsy, K.; Lukacs, B.; Racz, A. Thermodynamic length, time, speed, and optimum path to minimize entropy production. J. Chem. Phys. 1996, 105, 11220–11225.

13. Ruppeiner, G. Thermodynamics: A Riemannian geometric model. Phys. Rev. A 1979, 20, 1608.

14. Gangbo, W.; McCann, R.J. The geometry of optimal transportation. Acta Math. 1996, 177, 113–161.

15. Frieden, B.R. Science from Fisher Information; Cambridge University Press: Cambridge, UK, 2004; Volume 974.

16. Facchi, P.; Kulkarni, R.; Man’ko, V.; Marmo, G.; Sudarshan, E.; Ventriglia, F. Classical and quantum Fisher information in the geometrical formulation of quantum mechanics. Phys. Lett. A 2010, 374, 4801–4803.

17. Itoh, M.; Shishido, Y. Fisher information metric and Poisson kernels. Differ. Geom. Appl. 2008, 26, 347–356.

18. Wootters, W.K. Statistical distance and Hilbert space. Phys. Rev. D 1981, 23, 357.

19. Braunstein, S.L.; Caves, C.M. Statistical distance and the geometry of quantum states. Phys. Rev. Lett. 1994, 72, 3439.

20. Cafaro, C.; Alsing, P.M. Information geometry aspects of minimum entropy production paths from quantum mechanical evolutions. Phys. Rev. E 2020, 101, 022110.

21. Hollerbach, R.; Kim, E.j.; Schmitz, L. Time-dependent probability density functions and information diagnostics in forward and backward processes in a stochastic prey–predator model of fusion plasmas. Phys. Plasmas 2020, 27, 102301.

22. Kim, E.J.; Hollerbach, R. Time-dependent probability density functions and information geometry of the low-to-high confinement transition in fusion plasma. Phys. Rev. Res. 2020, 2, 023077.

23. Kim, E.J. Investigating information geometry in classical and quantum systems through information length. Entropy 2018, 20, 574.

24. Kim, E.J.; Hollerbach, R. Geometric structure and information change in phase transitions. Phys. Rev. E 2017, 95, 062107.

25. Heseltine, J.; Kim, E.j. Comparing information metrics for a coupled Ornstein–Uhlenbeck process. Entropy 2019, 21, 775.

26. Kim, E.J.; Heseltine, J.; Liu, H. Information length as a useful index to understand variability in the global circulation. Mathematics 2020, 8, 299.

27. Crooks, G.E. Measuring thermodynamic length. Phys. Rev. Lett. 2007, 99, 100602.

28. Feng, E.H.; Crooks, G.E. Far-from-equilibrium measurements of thermodynamic length. Phys. Rev. E 2009, 79, 012104.

29. Kim, E.J.; Guel-Cortez, A.J. Causal Information Rate. Entropy 2021, 23, 1087.

30. Kim, E.J. Information geometry and non-equilibrium thermodynamic relations in the over-damped stochastic processes. J. Stat. Mech. Theory Exp. 2021, 2021, 093406.

31. Kim, E.J. Information Geometry, Fluctuations, Non-Equilibrium Thermodynamics, and Geodesics in Complex Systems. Entropy 2021, 23, 1393.

32. Brillouin, L. Science and Information Theory; Courier Corporation: Chelmsford, MA, USA, 2013.

33. Kelly, J.L., Jr. A new interpretation of information rate. In The Kelly Capital Growth Investment Criterion: Theory and Practice; World Scientific: Singapore, 2011; pp. 25–34.

34. Kim, E.J.; Hollerbach, R. Signature of nonlinear damping in geometric structure of a nonequilibrium process. Phys. Rev. E 2017, 95, 022137.

35. Kim, E.j.; Lee, U.; Heseltine, J.; Hollerbach, R. Geometric structure and geodesic in a solvable model of nonequilibrium process. Phys. Rev. E 2016, 93, 062127.

36. Scott, D.W.; Sain, S.R. Multidimensional density estimation. Handb. Stat. 2005, 24, 229–261.

37. Durrett, R. Probability: Theory and Examples; Cambridge University Press: Cambridge, UK, 2019; Volume 49.

38. Kloeden, P.E.; Platen, E. Stochastic differential equations. In Numerical Solution of Stochastic Differential Equations; Springer: Cham, Switzerland, 1992; pp. 103–160.

39. Mil’shtejn, G. Approximate integration of stochastic differential equations. Theory Probab. Appl. 1975, 19, 557–562.

40. Ilie, S.; Jackson, K.R.; Enright, W.H. Adaptive time-stepping for the strong numerical solution of stochastic differential equations. Numer. Algorithms 2015, 68, 791–812.

41. Farber, R. CUDA Application Design and Development; Elsevier: Amsterdam, The Netherlands, 2011.

42. Thiruthummal, A.A. CUDA Parallel SDE Simulation. 2022. Available online: https://github.com/keygenx/SDE-Sim (accessed on 6 June 2022).

43. Chen, Y.C. A tutorial on kernel density estimation and recent advances. Biostat. Epidemiol. 2017, 1, 161–187.

44. Uhlenbeck, G.E.; Ornstein, L.S. On the theory of the Brownian motion. Phys. Rev. 1930, 36, 823.

45. Gutiérrez, R.; Gutiérrez-Sánchez, R.; Nafidi, A.; Ramos, E. A diffusion model with cubic drift: Statistical and computational aspects and application to modelling of the global CO2 emission in Spain. Environ. Off. Int. Environ. Soc. 2007, 18, 55–69.

46. Newton, A.P.; Kim, E.J.; Liu, H.L. On the self-organizing process of large scale shear flows. Phys. Plasmas 2013, 20, 092306.

47. Kim, E.J.; Hollerbach, R. Time-dependent probability density function in cubic stochastic processes. Phys. Rev. E 2016, 94, 052118.

48. Heer, J. Fast & accurate gaussian kernel density estimation. In Proceedings of the 2021 IEEE Visualization Conference (VIS) IEEE, New Orleans, LA, USA, 24–29 October 2021; pp. 11–15.

49. Silverman, B.W. Algorithm AS 176: Kernel density estimation using the fast Fourier transform. J. R. Stat. Soc. Ser. (Appl. Stat.) 1982, 31, 93–99.

50. Cruz-Uribe, D.; Neugebauer, C. An elementary proof of error estimates for the trapezoidal rule. Math. Mag. 2003, 76, 303–306.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).