Abstract

Time series analysis comprises a wide repertoire of methods for extracting information from data sets. Despite great advances in time series analysis, identifying and quantifying the strength of nonlinear temporal correlations remain a challenge. We have recently proposed a new method based on training a machine learning algorithm to predict the temporal correlation parameter, , of flicker noise (FN) time series. The algorithm is trained using as input features the probabilities of ordinal patterns computed from FN time series, , generated with different values of . Then, the ordinal probabilities computed from the time series of interest, , are used as input features to the trained algorithm and that returns a value, , that contains meaningful information about the temporal correlations present in . We have also shown that the difference, , of the permutation entropy (PE) of the time series of interest, , and the PE of a FN time series generated with , , allows the identification of the underlying determinism in . Here, we apply our methodology to different datasets and analyze how and correlate with well-known quantifiers of chaos and complexity. We also discuss the limitations for identifying determinism in highly chaotic time series and in periodic time series contaminated by noise. The open source algorithm is available on Github.

1. Introduction

Thanks to huge advances in data science and computing power, a wide repertoire of time series analysis methods [1,2,3,4,5,6,7] is available for the quantitative characterization of time series, and these methods are routinely used in all fields of science and technology, social sciences, economy and finance, etc. Since different methods have different requirements and involve different approximations, no single method can be expected to perform well over all types of data. Therefore, despite huge advances, extracting reliable information from stochastic or high dimensional signals remains a challenge. Any algorithm will return at least a number (i.e., a “feature” that encapsulates some property of the time series). In order to interpret the information in the obtained features and to assess the performance of different algorithms, appropriate surrogates [8] or a “reference model” (where the system that generates the data is known) need to be used. The comparison of the features obtained from the time series of interest with those obtained from surrogate time series or from reference time series allows testing (and even quantifying) some particular property of the time series of interest.

We have recently proposed a new method for estimating the strength of the temporal correlations in a given time series, which uses flicker noise (FN), a fully stochastic process, as the reference model [9]. A FN time series, , is characterized by a power spectrum , with being a parameter that quantifies the temporal correlations present in the signal [10]. The method proposed in [9] combines the use of symbolic ordinal analysis [11,12] and machine learning (ML): We utilize the ordinal probabilities computed from FN time series generated with different values as input features to a ML algorithm. The algorithm is trained to return the value of , which estimates the real value of a FN time series from the probabilities of the ordinal patterns of length D, calculated from the same time series. Then, after the training stage, the ordinal probabilities now computed from a time series of interest, , are provided as input features to the ML algorithm that returns a value, , which encapsulates reliable information about the strength of the temporal correlations present in . By calculating the difference, , between the permutation entropy (PE) of and that of a FN time series generated with , , we were also able to identify determinism in .

Our approach is, thus, based on reducing a large number of features (with D = 6, we have 720 ordinal probabilities) to only two: the value returned by the ML algorithm; and the permutation entropy, , computed with the ordinal probabilities. Dimensionality reduction is a well known technique [13,14,15] that has been used to tackle a variety of problems. With ordinal probabilities, for instance, it is possible to distinguish between noise and chaos by reducing the set of probabilities to only two features (the permutation entropy and the complexity, or the permutation entropy and the Fisher information), as demonstrated in [16,17,18,19]. In our methodology, we not only apply dimensionality reduction but also use a fully stochastic “reference” FN time series: we compare the value of of the time series of interest with that of a FN time series generated with . We have shown that the entropy difference, , may provide good contrast for distinguishing fully stochastic time series from a time series with a degree of determinism [9].

The method we proposed has in fact a large degree of flexibility because, instead of ordinal analysis and the permutation entropy, different symbolization rules [20,21] and different entropies [22,23,24] could be tested. In addition, while we use a simple artificial neural network, other algorithms could be evaluated. Different combinations may provide different results and particular combinations may result in optimized performance for the analysis of particular types of time series.

We have shown that the algorithm returns meaningful information even from time series that are very short: for the synthetic examples considered in [9], we could distinguish whether the dynamics is mainly chaotic or stochastic with only 100 data points. However, an open question is as follows: What are the limitations in terms of the level of chaos, the level of noise and the length of the time series? Here, we address this issue by using as examples the time series generated with the Logistic map, the map and the Schuster map. We also address the following questions: Can we distinguish a highly chaotic time series from a stochastic one? Can we identify a periodic signal hidden by noise? In addition, to gain insight into the information encapsulated by and , we contrast them with well known quantifiers of chaos and complexity: the maximum Lyapunov exponent and the ordinal-based statistical complexity measure [25].

2. Methodology

The methodology proposed in [9] can be described in a few steps:

1. Calculate the ordinal probabilities (OPs) of a large set of FN time series generated with different values of and use them as features to train a ML algorithm to return the (known) value of ;
2. Calculate the OPs of the time series of interest, , and use them as features to the trained ML algorithm, which returns a value (see Section 2.1);
3. Generate a FN time series with and calculate its permutation entropy (PE), (see Section 2.2);
4. Calculate the relative difference, , between the PE of , and :
5. Use the value of to quantify the strength of the temporal correlations in the time series of interest and use the value of to identify underlying determinism: if , is mainly stochastic, otherwise there is some determinism.

In the implementation proposed in [9], the probabilities of the 720 ordinal patterns of length D = 6 (described in Section 2.2) were used as input features to the ML algorithm. Then, these features were reduced to only two: the scalar value returned by the ML algorithm, , which quantifies the temporal correlations present in the time series of interest ; and the permutation entropy, (Equation (4)). Then, the value of was compared with the PE of a FN time series generated with and the relative difference, (Equation (1)), was found to provide contrast for identifying determinism in . The value of can be used to organize a set of time series according to their level of stochasticity (the lower the value of , the larger the stochasticity level) and, by appropriately selecting a threshold value, can be used to classify the time series into two categories: mainly stochastic and mainly deterministic.
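Steps 4 and 5 can be sketched in a few lines. This is a minimal illustration, assuming that the relative difference is normalized by the entropy of the reference FN series; the function names and the `threshold` argument are hypothetical, and the exact definition and threshold value should be taken from [9].

```python
def relative_entropy_difference(s_series: float, s_fn: float) -> float:
    """Relative difference between the PE of a reference FN series (s_fn)
    and the PE of the time series of interest (s_series).

    Assumed form: (s_fn - s_series) / s_fn, so the result is close to zero
    when the series is as disordered as the FN reference and grows when
    the series is more ordered than the reference.
    """
    if s_fn <= 0.0:
        raise ValueError("the reference entropy must be positive")
    return (s_fn - s_series) / s_fn


def is_mainly_stochastic(omega: float, threshold: float) -> bool:
    """Classify a series from its relative entropy difference.

    The threshold is a free parameter of this sketch, not a value
    taken from the paper.
    """
    return omega <= threshold
```

Because the entropy of the reference series depends on its length, `s_fn` should be computed from a FN series with the same number of points as the series of interest.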

2.1. Machine Learning Algorithm

A wide range of ML algorithms are available nowadays. Since we want to regress the information of the features ( probabilities) into one real value, (a classical scalar regression problem), an appropriate simple option is a feed-forward artificial neural network (ANN). Mathematically, the ANN can be described as follows. Considering a set of inputs of features with output , the ANN can be sketched by the following:

where and are matrices of weights, and are bias column vectors, f and correspond to activation functions and the “*” symbol corresponds to a tensor product. In this sense, is the result of the transformation , which can be understood as a new representation of the inputs . The elements of the tensors are the parameters of the ANN, which are calibrated in the training stage. ANNs are well known, and we refer the reader to our previous work [9] for details about the network structure and the training procedure. We remark that our ANN is a fast and automatic tool that performed well in all the cases we tested, with a computational time, on a standard notebook, of a few seconds for the analysis of time series with data points. However, we do not claim that an ANN is an optimal choice, since different ML algorithms may be even more efficient. It is also important to notice that the optimal choice will likely depend on the characteristics of the time series (length, frequency content, level of noise, etc.).
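As a rough illustration of the two-layer structure described above, the forward pass can be written with NumPy. The hidden-layer size, the ReLU and identity activations, and the random, untrained weights are all assumptions made for this sketch; the actual architecture and training procedure are given in [9].

```python
import numpy as np


def ann_forward(x, W1, b1, W2, b2):
    """Forward pass of a feed-forward network with one hidden layer.

    x  : input features (here, the ordinal probabilities)
    W1 : hidden-layer weights, b1 : hidden-layer biases
    W2 : output-layer weights, b2 : output-layer bias
    """
    h = np.maximum(0.0, W1 @ x + b1)  # hidden representation (ReLU assumed)
    y = W2 @ h + b2                   # scalar regression output (identity assumed)
    return float(y[0])


# Untrained example: 720 input features, 64 hidden units (illustrative sizes).
rng = np.random.default_rng(0)
n_features, n_hidden = 720, 64
W1 = rng.normal(scale=0.05, size=(n_hidden, n_features))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.05, size=(1, n_hidden))
b2 = np.zeros(1)

x = np.full(n_features, 1.0 / n_features)  # uniform ordinal probabilities
alpha_e = ann_forward(x, W1, b1, W2, b2)   # meaningless until the net is trained
```

In practice the weights would be fitted on ordinal probabilities of FN series with known correlation parameter, as in step 1 of the methodology.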

2.2. Ordinal Analysis and Permutation Entropy

Ordinal analysis and the permutation entropy were proposed by Bandt and Pompe [11] almost 20 years ago and they are now well-known. For their interdisciplinary applications, we refer the reader to a recent Focus Issue [12].

Here, we compute the ordinal patterns of length D = 6 with the algorithm proposed in [26]. For D = 6, there are D! = 720 possible patterns. The patterns are calculated with an overlap of data points, i.e., for a time series with N data points, ordinal patterns are obtained, which are then used to evaluate the ordinal probabilities . Then, the normalized permutation entropy is calculated as follows.
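The computation of the ordinal probabilities and of the normalized permutation entropy can be sketched as below. This is not the algorithm of [26]: it is a plain `argsort`-based stand-in, and it assumes that consecutive windows are shifted by one data point. The entropy is the Shannon entropy of the ordinal probabilities divided by its maximum value, ln(D!), which is the standard Bandt and Pompe normalization.

```python
import math
from itertools import permutations

import numpy as np


def ordinal_probabilities(x, D=6):
    """Relative frequency of each of the D! ordinal patterns in x."""
    x = np.asarray(x, dtype=float)
    n_windows = len(x) - D + 1  # assumes windows shifted by one point
    if n_windows < 1:
        raise ValueError("time series is shorter than the pattern length")
    # Map every permutation of (0, ..., D-1) to a fixed feature index.
    index = {p: i for i, p in enumerate(permutations(range(D)))}
    counts = np.zeros(math.factorial(D))
    for t in range(n_windows):
        # The pattern is the ordering that sorts the window (ties by position).
        pattern = tuple(np.argsort(x[t:t + D], kind="stable").tolist())
        counts[index[pattern]] += 1
    return counts / n_windows


def normalized_permutation_entropy(p):
    """Shannon entropy of p divided by ln(len(p)), so that 0 <= S <= 1."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]  # terms with zero probability contribute nothing
    return float(-np.sum(nz * np.log(nz)) / np.log(len(p)))


# Example: uniform white noise should give an entropy close to one.
rng = np.random.default_rng(0)
probs = ordinal_probabilities(rng.uniform(size=5000), D=6)
print(normalized_permutation_entropy(probs))
```

The 720 values in `probs` are the features given to the ML algorithm, and the scalar entropy is the second quantity used in the methodology.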

2.3. Quantifiers of Chaos and Complexity

The Lyapunov exponents measure the local divergence of infinitesimally close trajectories, and positive exponents indicate chaos [27]. While there are important challenges when calculating them from high-dimensional and/or noisy data [3,28], in the case of a one-dimensional dynamical system for which its governing equation is known, the Lyapunov exponent, , can be straightforwardly calculated as follows.
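For a one-dimensional map with known derivative, the exponent is usually estimated as the orbit average of the logarithm of the absolute derivative. The sketch below applies this standard estimate to the logistic map in its usual form, x → r x (1 − x); the function name, the iteration counts and the initial condition are illustrative choices, not values from the paper.

```python
import math


def lyapunov_logistic(r, n_iter=100_000, n_transient=1_000, x0=0.4):
    """Estimate the Lyapunov exponent of the logistic map x -> r*x*(1-x).

    The estimate is the average of ln|f'(x_i)| along the orbit,
    with f'(x) = r*(1 - 2*x), after discarding a transient.
    """
    x = x0
    for _ in range(n_transient):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_iter):
        # Small floor avoids log(0) if the orbit lands exactly on x = 0.5.
        total += math.log(max(abs(r * (1.0 - 2.0 * x)), 1e-300))
        x = r * x * (1.0 - x)
    return total / n_iter
```

A negative result indicates a periodic regime (for example, at r = 2.5 the orbit settles on a fixed point and the estimate approaches ln 0.5), while a positive result indicates chaos.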

A popular measure for characterizing complex systems is the statistical complexity measure [25] that takes into account the distance to the most regular state and the most random states of the system. It is defined as follows:

where is the entropy and Q is the distance between the distribution that describes the state of the system, , and the equilibrium distribution, , that maximizes the entropy.

As we use the probabilities of the ordinal patterns [16,29], is the normalized permutation entropy and . The distance between and is calculated with the Jensen–Shannon divergence:

where a normalization factor .
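A compact sketch of the complexity measure follows, assuming the commonly used form in which the disequilibrium is the Jensen–Shannon divergence between the ordinal distribution and the uniform distribution, normalized so that its maximum (reached by a single-pattern distribution) equals one. Function names are illustrative.

```python
import numpy as np


def shannon_entropy(p):
    """Shannon entropy (natural logarithm) of a probability vector."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-np.sum(nz * np.log(nz)))


def disequilibrium(p):
    """Normalized Jensen-Shannon divergence between p and the uniform law."""
    p = np.asarray(p, dtype=float)
    M = len(p)
    pe = np.full(M, 1.0 / M)  # equilibrium (maximum-entropy) distribution
    js = (shannon_entropy(0.5 * (p + pe))
          - 0.5 * shannon_entropy(p)
          - 0.5 * shannon_entropy(pe))
    # Normalization chosen so that a one-pattern distribution gives Q = 1.
    q0 = -2.0 / (((M + 1) / M) * np.log(M + 1) - 2.0 * np.log(2 * M) + np.log(M))
    return q0 * js


def statistical_complexity(p):
    """Product of the normalized entropy and the disequilibrium."""
    p = np.asarray(p, dtype=float)
    h = shannon_entropy(p) / np.log(len(p))
    return h * disequilibrium(p)
```

With this definition the complexity vanishes both for a perfectly regular signal (zero entropy) and for a perfectly random one (zero distance to the uniform distribution).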

3. Datasets

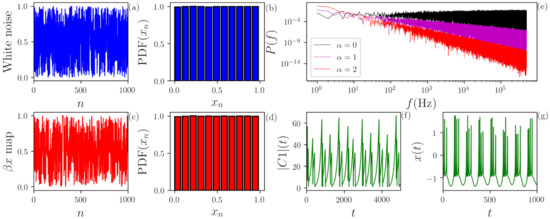

We analyze time series generated by the following stochastic or deterministic dynamical systems. Typical time series examples are presented in Figure 1.

Figure 1.

(a,b) Uniformly distributed white noise and its probability distribution function (PDF). (c,d) Time series generated by iteration of the map with and its PDF. We observe that the deterministic map depicts a very similar PDF of the white noise. (e) Power spectral density (PSD) of flicker noise (FN) (black), (magenta) and (red). By definition, the PSD of the FN decays as . (f,g) Time evolution of two continuous chaotic systems: (f) of the three-waves system and (g) x of the Hindmarsh–Rose model.

3.1. Flicker Noise

Flicker noise (FN), also called colored noise, is used for training the ML algorithm. FN time series are stochastic and characterized by a temporal correlation coefficient . The power spectrum of this signal is given by , and different values result in different “colors”. We used the open Python library colorednoise.py [30,31] to generate FN time series with different values. An example is shown in panel (e) of Figure 1.
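For readers without the library at hand, a power-law noise series can be approximated by spectral synthesis with NumPy alone. The sketch below is a stand-in, not the implementation of [30,31]: it draws random Fourier coefficients, scales their amplitude as a power of the frequency so that the power spectrum decays as a power law with exponent `alpha`, and transforms back to the time domain. The function name and the unit-variance normalization are assumptions of this sketch.

```python
import numpy as np


def flicker_noise(alpha, n, rng=None):
    """Gaussian noise of length n whose power spectrum decays as 1/f**alpha."""
    rng = np.random.default_rng() if rng is None else rng
    freqs = np.fft.rfftfreq(n)
    scale = np.zeros_like(freqs)
    # Amplitude ~ f**(-alpha/2) gives power ~ f**(-alpha); DC term stays zero.
    scale[1:] = freqs[1:] ** (-alpha / 2.0)
    coeffs = rng.normal(size=freqs.size) + 1j * rng.normal(size=freqs.size)
    x = np.fft.irfft(scale * coeffs, n=n)
    return x / np.std(x)  # unit variance (a choice made for this sketch)


# Example: three "colors" of the same length.
rng = np.random.default_rng(1)
white = flicker_noise(0.0, 4096, rng)
pink = flicker_noise(1.0, 4096, rng)
red = flicker_noise(2.0, 4096, rng)
```

Since ordinal patterns depend only on the relative order of the values, the amplitude normalization does not affect the ordinal probabilities.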

3.2. Uniform Noise

Uniform noise is a stochastic process with no memory and uniform distribution in . Panels (a) and (b) of Figure 1 depict an example of a uniformly distributed white noise and its PDF, respectively.

3.3. Random Walk

A one-dimensional random walk time series is defined by , where is a memory-less stochastic Gaussian process with mean 0 and standard deviation 1.
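A random walk of this kind is the cumulative sum of independent Gaussian increments. The short sketch below assumes that the walk starts from its first increment; the function name is illustrative.

```python
import numpy as np


def random_walk(n, rng=None):
    """One-dimensional random walk with standard normal increments."""
    rng = np.random.default_rng() if rng is None else rng
    steps = rng.normal(loc=0.0, scale=1.0, size=n)  # memory-less increments
    return np.cumsum(steps)                         # each point adds one step
```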

3.4. Periodic or Chaotic Signals Contaminated by Noise

In order to generate time series that represent periodic or chaotic signals contaminated by noise, we used the map equation . For a periodic signal, is the sin map, with period ; for a chaotic signal, is the map (see below). In both cases, is normalized , is a uniform white noise and controls the stochastic component of ; for , the signal is fully deterministic (periodic or chaotic depending on the map used), while for the signal is fully stochastic and memory-less.
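One way to build such contaminated signals is a convex combination of the normalized deterministic signal and uniform noise. The sketch below assumes this form, assumes normalization to the unit interval, and assumes that the control parameter runs from 0 (no noise) to 1 (only noise); the function and argument names are hypothetical, and the mixing rule actually used should be checked against the original equation.

```python
import numpy as np


def mix_with_noise(signal, noise_level, rng=None):
    """Blend a deterministic signal with uniform white noise.

    noise_level = 0 returns the normalized signal,
    noise_level = 1 returns pure uniform noise (assumed convention).
    """
    rng = np.random.default_rng() if rng is None else rng
    s = np.asarray(signal, dtype=float)
    s = (s - s.min()) / (s.max() - s.min())       # normalize to [0, 1]
    noise = rng.uniform(0.0, 1.0, size=s.size)    # memory-less component
    return (1.0 - noise_level) * s + noise_level * noise


# Example: a sine wave of period 20 samples with 30% noise.
t = np.arange(2000)
noisy = mix_with_noise(np.sin(2 * np.pi * t / 20), 0.3, np.random.default_rng(0))
```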

3.5. Logistic Map

The Logistic map is a popular nonlinear dynamical system defined by , where r is the control parameter that allows us to obtain periodic or chaotic signals.

3.6. Map

The generalized Bernoulli chaotic map, also known as map, is defined by , where controls the dynamical characteristic of the map. Panel (c) and (d) of Figure 1 depict the evolution of a map with and its PDF, respectively. Here, we observe that it cannot be visually distinguished from uniform noise; however, the level of chaos can be estimated with the Lyapunov exponent, which has an exact solution in this case: [27]. Therefore, the higher the parameter, the more chaotic the signal is.
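A minimal sketch of the map, assuming the common form of the generalized Bernoulli map in which the state is multiplied by the control parameter and reduced modulo one. For this piecewise-linear form the slope is constant, so the Lyapunov exponent is the logarithm of the parameter. The function names and the initial condition are illustrative.

```python
import math


def beta_x_map(beta, n, x0=0.1234):
    """Iterate x -> (beta * x) mod 1 and return the n generated values."""
    x = x0
    series = []
    for _ in range(n):
        x = (beta * x) % 1.0
        series.append(x)
    return series


def beta_x_lyapunov(beta):
    """Lyapunov exponent of the map: ln(beta), positive only for beta > 1."""
    return math.log(beta)
```

Note that for integer parameter values a floating-point orbit can collapse to zero after a few dozen iterations (binary arithmetic makes this certain for a parameter of 2), so numerical experiments with this sketch are safer with non-integer values.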

3.7. Schuster Map

The Schuster map [32] generates intermittent signals with a power spectrum and is defined as , where we used z as a parameter control.

3.8. Lorenz System

The Lorenz system is also a well-known dynamical system [33], defined by three rate equations: , and Here we use typical parameters , and that generate chaotic trajectories.

3.9. Rossler System

The Rossler system [34] is also a well-known dynamical system, defined by the equations , and . Here, we used typical parameters for generating chaotic trajectories: and .

3.10. Three Waves System

This system is composed of three first-order autonomous differential equations written in terms of the complex slowly varying wave amplitude [35] , and , where and we used , and . The chaotic dynamics can be observed at the amplitude variations of , which is depicted in panel (f) of Figure 1.

3.11. Hindmarsh–Rose Model

The Hindmarsh–Rose model [36] is a set of three rate equations that models the neural activity. An example is shown in panel (g) of Figure 1. The equations are , and and the parameters used are , , , and .

4. Results

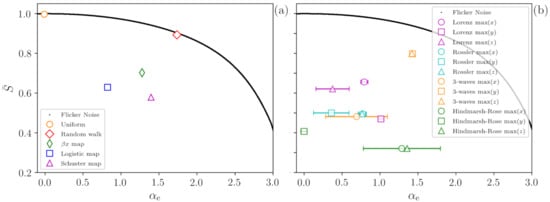

A demonstration of the methodology is presented in Figure 2, which shows, in the plane (, ), the values obtained from time series generated with the dynamical systems described in the previous section. All the time series analyzed possess points and the error bars represent the standard deviation over 1000 time series generated with different initial conditions or noise seeds.

Figure 2.

Characterization of stochastic and chaotic time series in the plane (normalized permutation entropy ; correlation coefficient returned by the ML algorithm). Panel (a) presents results for discrete systems. The black line represents a set of 10,000 FN signals and decreases as the temporal correlation increases. The stochastic cases (uniform noise and random walk) are very close to the FN signals. In contrast, the chaotic signals (, logistic and Schuster map signals) depict a lower compared to the FN signals. Panel (b) presents results for continuous systems. Here, we analyze the sequence of maxima of each variable and, again, we observe that the vertical distance to the FN curve reveals that the time series are not fully stochastic. All time series possess points and the error bars represent the standard deviation over 1000 time series generated with different initial conditions or noise seeds. Small error bars are not shown.

Figure 2a presents results for discrete systems, while Figure 2b, for continuous systems. In both panels the black line represents FN signals generated with different values of , which are perfectly recovered by the ANN (that returns a value equal to ). As expected, for , since the FN signal is white noise [37]. For , some ordinal patterns occur in the time series more frequently than others, and the value of decreases.

In panel (a), the orange circle represents time series of uniform white noise, and the ANN returns the correct value (There is almost no dispersion in the returned value of , therefore, the error bar is not shown). The red diamond represents random walk signals; for them, the ANN returns (Again, there is almost no dispersion in the value of ). We observe that the red diamond is very close to the black line, providing a clear indication of the highly stochastic nature of a time series generated by a one-dimensional random walk.

On the other hand, when we analyze chaotic signals (time series from map with , from logistic map with and from Schuster map with ), we observe that the distance between the symbols and the FN noise curve (black line) allows identifying the signals as not fully stochastic, i.e., the distance to the FN curve uncovers determinism in the signals.

For continuous dynamical systems, the results obtained with ordinal analysis strongly depend on the lag time between the data points, which can be chosen in multiple ways, for instance using the maxima of each variable or the first minimum of the mutual information [38]. While this dependence on the lag allows identifying different time scales in a complex signal [39,40], it makes it difficult to compare different signals. Therefore, for the continuous dynamical systems considered (Lorenz, Rossler, 3-waves and Hindmarsh–Rose), the time series analyzed here are the sequences of maxima of each variable of the system. Because the variables obey different equations and have oscillations with different properties, we can expect to obtain different values of . Despite a large dispersion in the values obtained, it can be observed in Figure 2b that all the systems depict a substantial distance to the FN curve, clearly revealing that they are not fully stochastic. The dispersion in the permutation entropy values is of the order of 1%; therefore, vertical error bars are not shown.
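Extracting the sequence of maxima from a sampled trajectory can be sketched as follows. The peak criterion used here (a sample strictly larger than its left neighbor and at least as large as its right neighbor) is an assumption of this sketch, as is the function name; the paper does not specify how the maxima were detected.

```python
import numpy as np


def local_maxima(x):
    """Return the values of the local maxima of a sampled signal."""
    x = np.asarray(x, dtype=float)
    interior = x[1:-1]
    # A plateau is counted once, at its first sample.
    is_peak = (interior > x[:-2]) & (interior >= x[2:])
    return interior[is_peak]


# Example: the peaks of a slowly modulated oscillation form a new,
# shorter time series that can be passed to the ordinal analysis.
t = np.linspace(0.0, 200.0, 20001)
signal = (1.0 + 0.3 * np.sin(0.1 * t)) * np.sin(t)
peaks = local_maxima(signal)
```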

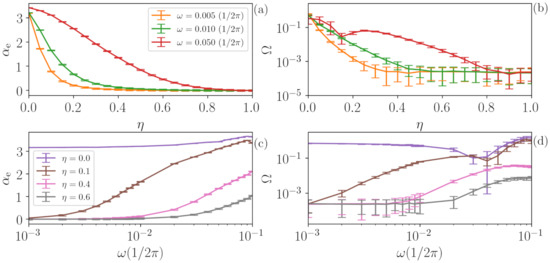

4.1. Comparison with Standard Quantifiers

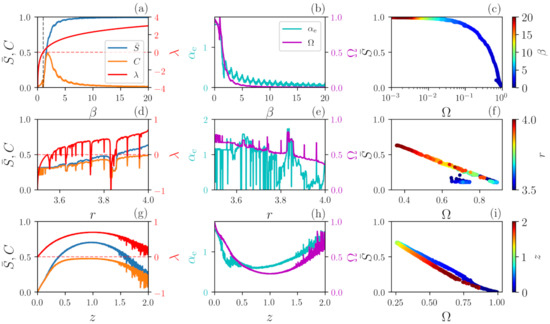

Next, we compare, for the three chaotic maps, the quantifiers obtained with our approach, and , with well-known quantifiers of chaos and complexity including the Lyapunov exponent, and the ordinal statistical complexity, C (described in Section 2.3).

The results are depicted in Figure 3. Panels (a), (b) and (c) show results for the map. For , the map is not chaotic since . In this range, decreases while remains constant. The map is chaotic for , since . At , and vary abruptly and both decrease as increases. There is a negative correlation between and and also between and . If is too large, and determinism can no longer be identified. We note that the small oscillations of capture the changes in dynamics when approaches an integer number since the PDF of is homogeneous for integer but becomes inhomogeneous for non-integer values [41], which is not clearly observed in the other quantifiers.

Figure 3.

Analysis of deterministic signals generated by chaotic maps. First column depicts the normalized permutation entropy , the complexity C (left vertical scale) and the Lyapunov exponent (right scale). Second column depicts the new quantifiers (left scale) and (right scale). The third column presents vs. ; the color codes represent the control parameter of each map. Panels (a–c) illustrate the case of map as a function of ; (d–f) show the Logistic map as a function of r; and (g–i) depict the Schuster map as a function of z.

In panels (d), (e) and (f) for the logistic map, periodic windows embedded in chaotic regions are detected in the Lyapunov exponent, and the quantifiers , C and also show abrupt variations. We note that identifies the deterministic nature of the signals since, for the entire interval of r, remains well above zero and remains well below one.

Similar results are found for the Schuster map, panels (g), (h) and (i): is anti-correlated with and confirms that the signal is always deterministic since relatively large values are observed for the entire interval of z. Even though a signal generated by the Schuster map has the same power spectrum as Flicker noise, its value varies non-monotonically with z [32], contrary to the line that is obtained from FN signals. This is due to the fact that signals generated by the Schuster map and FN signals have different sets of ordinal probabilities.

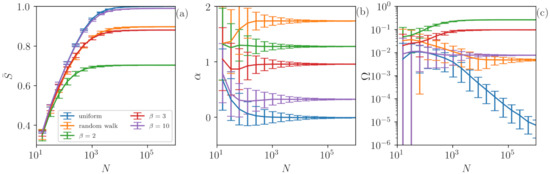

4.2. Influence of the Length of the Time Series

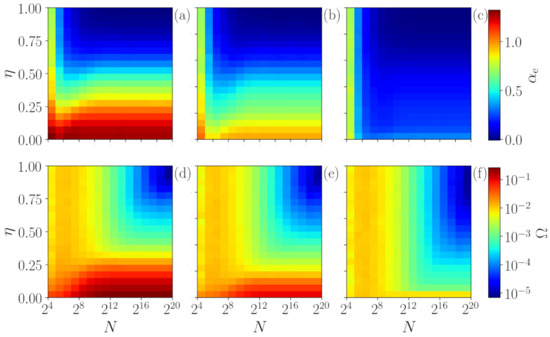

The results so far indicate that our methodology is very precise for characterizing chaotic and stochastic signals when long time series are analyzed (in Figure 2 and Figure 3). However, an important question is the following: What is the role of the time series length in the analysis? In order to address this issue, we investigate both stochastic and chaotic time series with different lengths N. Figure 4 displays the role of time series length N in (a), in the output of the ANN (b) and in (c) for chaotic and for stochastic signals. Here, chaotic time series are represented by signals generated with the map, while stochastic ones are given by uniform white noise and a random walk.

Figure 4.

The role of time series length N is studied using (a), the output of the ANN (b) and (c) for both chaotic and stochastic signals. The results are stable for , indicating the robustness of our methodology. Here, we analyze time series and also uniform noise and random walk signals. Despite having similar values of , our method is able to distinguish between the chaotic and stochastic cases even for (number of ordinal patterns) for and . However, for the chaoticity is too high (), so the local divergence of the trajectories is large. In this case, the deterministic nature cannot be detected since the ordinal probability distribution is as uniform as that of a white noise signal with .

One can observe that, in general, for a small number of data points (), our method is unable to distinguish the signals as all of them are overlapping. Interestingly, for , our method is able to distinguish the chaotic signal generated by the map with (green lines), since depicts values that are higher than those of the other signals. As N is increased, the signal of is also separated and characterized as chaotic. Surprisingly, for (the number of features), we are already able to distinguish the chaotic signals of and from the stochastic ones (uniform and random walk) by using the quantifier . The results improve as the number of points increases and, for , the analysis stabilizes. Here, an important point is that even if the chaotic and stochastic signals have similar permutation entropy values (), is able to capture their difference. On the other hand, if the chaoticity of the signal is too high, as observed for (purple lines), . In this case the ordinal probabilities of the signal are as uniform as those of white noise, which means that we are unable to identify determinism.

Figure 5 shows that the above observations remain robust when noise is added to the chaotic signal. As explained in Section 3.4, we control the amount of noise in the analyzed time series by varying the parameter . If the time series is too long and has too much noise, does not identify determinism (blue region). On the other hand, if the time series is not long, identifies determinism even when there is no determinism for (orange region). Therefore, for a correct interpretation of the information contained in the value of , we need to compare it with the value of obtained from “reference” time series of the same length as the time series of interest, generated by a known stochastic process such as Flicker noise.

Figure 5.

Robustness of the method to the application of additive noise in the map. The upper row (a–c) depicts the values in color-code as a function of the percentage of noise and the series’ size N for , respectively. The lower row (d–f) shows for the same simulations.

4.3. Analysis of Periodic Signals Contaminated by Noise

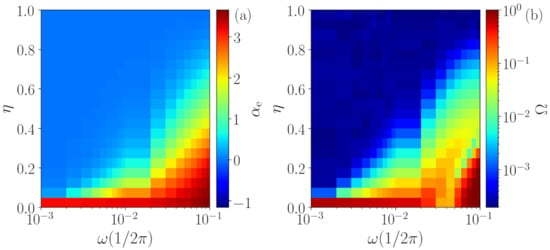

Another challenge to our methodology is the identification of periodic signals with added noise. The results obtained when varying the parameter (see Section 3.4) are depicted in Figure 6, where panel (a) shows the temporal correlation returned by the ANN and panel (b) shows the quantifier , both as a function of . Here, the periodic signals with points and different frequencies are analyzed.

Figure 6.

Analysis of periodic signals contaminated with white noise using the temporal correlation (a) and the quantifier (b). The results show that, when the noise strength, , increases, the deterministic nature of the periodic signal gradually vanishes and, for large enough , only stochastic dynamics is identified. However, the frequency of the signal is important, because low frequency signals are identified as stochastic at lower noise levels. Moreover, even when the signal is characterized as stochastic, a nonzero temporal correlation can be estimated.

First, one can observe that, for , all signals are characterized as deterministic (high value of ) with a high temporal correlation (high value of ), which is expected from a periodic signal. Secondly, as we can also expect for , all signals are identified as stochastic and memory-less (zero temporal correlation), since the added noise is white. However, for intermediate values of , an interesting behavior is observed. The frequency of the signal is indeed important, because periodic signals with low frequencies are characterized as stochastic time series for lower values of (panel (b)). For instance, for , the signal is characterized as noisy even for very small values of , while for signals with , the deterministic nature of the original time series is kept for . As expected, the temporal correlation of the signals (panel (a)) also depends on the frequency . As is increased, the higher the frequency of the signal, the more slowly the temporal correlation decays (i.e., high-frequency signals lose memory more slowly than low-frequency signals). Since the noise being added is white, and thus memory-less, one can expect that and for . However, we see that the temporal correlation can be high even when is very small. Therefore, these signals are first identified as noisy with non-zero time correlation, and, subsequently, they are identified as noisy with no time correlation.

These results and conclusions are robust for different realizations of the noise, as is shown in Figure 7, which depicts curves with fixed values of (upper row) as a function of . Panel (a) shows the temporal correlation coefficient , while panel (b) shows the quantifier . Following the same idea, the lower row shows three examples with fixed values as a function of the periodic signal frequency . In all cases, the error bars represent the dispersion over 1000 different noise realizations, and we can observe that the trends discussed previously persist over different noise realizations.

Figure 7.

The effects of noise on a periodic signal do not depend on the noise’s specific realization. The error bars indicate the dispersion over 1000 analyses with different realization of the added noise. Here, the upper row represents three examples of (a) and (b) as a function of for a fixed value of . The lower row (c,d) shows three examples as a function of for fixed values of following the same representation. The dispersion in all cases is sufficiently low that the trends discussed in Figure 6 remain.

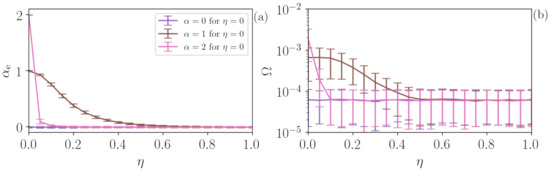

4.4. Analysis of Two Stochastic Processes

In experimental stochastic systems, two (or more) stochastic dynamics are often present. In order to test the performance of the algorithm in this situation, we consider the same approach as in the previous section, , but now is not a periodic signal but a Flicker noise. The results are depicted in Figure 8, where panels (a) and (b) present and , respectively, and are calculated from 1000 FN time series with (purple), (brown) and (pink). For (FN noise) , as increases due to the influence of the uniform white noise, decreases to 0 and (pink line) decays faster due to the slower dynamics, while (purple) does not change since there is no time correlation in both and . is extremely low for all cases () identifying the full stochasticity of the time series.

Figure 8.

Analysis of FN time series contaminated with uniform noise. (a) The addition of uniform noise decreases the predicted by the ML algorithm, leading from the value of the FN time series to the value for uniform noise. The added noise also decreases the value of (b), revealing the increase in the degree of stochasticity of the time series. The effect of adding uniform noise is, thus, similar in FN and in deterministic time series.

5. Discussions and Conclusions

We have analyzed stochastic and deterministic time series using an algorithm [9] that automatically reduces the dimensionality of the feature space from 720 probabilities of ordinal patterns to 2 features: the degree of stochasticity () and the strength of the temporal correlations (). We have analyzed the performance and limitations of the algorithm, presenting applications to different datasets, including highly chaotic and periodic signals with added white noise.

For the analysis of chaotic time series, we have shown that and are able to capture the rich dynamics that chaotic systems can depict, where the transitions between periodic windows and chaos are evident. In general, negative correlation between the Lyapunov exponent and and between the permutation entropy and were found. For highly chaotic signals, when the time evolution of the system is very similar to a stochastic process, and and our methodology characterizes highly chaotic signals as stochastic ones.

In addition, we have studied periodic signals contaminated with noise. In this case, our method captures the transition from deterministic time series to stochastic ones. However, we have shown that the period of the signal is indeed important, with fast signals being identified as deterministic even with large noise. We have found that when the noise contamination increases, periodic signals lose their deterministic feature but preserve a nonzero temporal correlation.

For future work, it will be interesting to analyze whether the performance of our methodology can be improved by using different lengths of the ordinal pattern, D, or different lags between the data points that define the ordinal patterns. It is well known that the ordinal patterns distribution varies with the time scale of the analysis [19] and it will also be interesting, for future work, to address the relevant question of whether our method is able to estimate, from the same time series, different values of by using different lags.

We remark that the automatic and easy-to-use time series analysis tool that we propose here is freely available at [42]. We believe that it will be a valuable contribution to the wide repertoire of time series analysis tools that are available nowadays. Many applications are foreseen. As an example, for ultra-fast optical random number generation [43,44,45], it is crucial to generate optical chaotic signals that are as uncorrelated and as “pseudorandom” as possible, so that their deterministic nature is hidden by noise-like properties. Many different setups have been proposed to generate such broad-band, high-entropy optical signals [46,47,48,49,50]. Our algorithm allows an automatic comparison of the strength of the correlations and the level of randomness of signals generated by different setups. Moreover, the algorithm may be used to identify, in a given experimental setup, the optimal operation conditions and parameters that produce optical signals with the lowest temporal correlations (lowest ) or with the highest level of randomness (lowest ).

Author Contributions

Conceptualization, B.R.R.B., K.L.R., R.C.B. and C.M.; methodology and software, B.R.R.B.; investigation, B.R.R.B.; validation, B.R.R.B., K.L.R. and R.C.B.; discussion, B.R.R.B., K.L.R., R.C.B., T.L.P., S.R.L. and C.M.; visualization, B.R.R.B.; manuscript preparation, B.R.R.B., K.L.R., R.C.B. and C.M. All authors have read and agreed to the published version of the manuscript.

Funding

B.R.R.B., T.L.P. and S.R.L. acknowledge partial support of Conselho Nacional de Desenvolvimento Científico e Tecnológico, CNPq, Brazil, Grant Nos. 302785/2017-5 and 308621/2019-0, and the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior, Brasil (CAPES), Finance Code 001. R.C.B. was supported by BrainsCAN at Western University through the Canada First Research Excellence Fund (CFREF) and by the NSF through a NeuroNex award (#2015276). C.M. was funded by the Spanish Ministerio de Ciencia, Innovación y Universidades (PGC2018-099443-B-I00) and the ICREA ACADEMIA program of Generalitat de Catalunya. K.L.R. was supported by a scholarship from the Deutscher Akademischer Austauschdienst (DAAD).

Data Availability Statement

All code and datasets relevant to our methodology are freely available at [42].

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.L.C.; Shih, H.H.; Zheng, Q.N.; Yen, N.C.; Tung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. A 1998, 454, 903–995.

2. Mallat, S. A Wavelet Tour of Signal Processing; Academic Press: Cambridge, MA, USA, 1998.

3. Kantz, H.; Schreiber, T. Nonlinear Time Series Analysis; Cambridge University Press: Cambridge, UK, 2005.

4. Brunton, S.L.; Kutz, J.N. Data-driven Science and Engineering: Machine Learning, Dynamical Systems, and Control; Cambridge University Press: Cambridge, UK, 2019.

5. Fulcher, B.D.; Jones, N.S. A computational framework for automated time-series phenotyping using massive feature extraction. Cell Syst. 2017, 5, 527–531.

6. Zou, Y.; Donner, R.V.; Marwan, N.; Donges, J.F.; Kurths, J. Complex network approaches to nonlinear time series analysis. Phys. Rep. 2019, 787, 1–97.

7. Carlsson, G. Topological methods for data modelling. Nat. Rev. Phys. 2020, 2, 697–708.

8. Lancaster, G.; Iatsenko, D.; Pidde, A.; Ticcinelli, V.; Stefanovska, A. Surrogate data for hypothesis testing of physical systems. Phys. Rep. 2018, 748, 1–60.

9. Boaretto, B.R.R.; Budzinski, R.C.; Rossi, K.L.; Prado, T.L.; Lopes, S.R.; Masoller, C. Discriminating chaotic and stochastic time series using permutation entropy and artificial neural networks. Sci. Rep. 2021, 11, 15789.

10. Beran, J.; Feng, Y.; Ghosh, S.; Kulik, R. Long-Memory Processes; Springer: Berlin/Heidelberg, Germany, 2016.

11. Bandt, C.; Pompe, B. Permutation entropy: A natural complexity measure for time series. Phys. Rev. Lett. 2002, 88, 174102.

12. Rosso, O.A. Permutation Entropy & Its Interdisciplinary Applications. Available online: https://www.mdpi.com/journal/entropy/special_issues/Permutation_Entropy (accessed on 4 August 2021).

13. Tenenbaum, J.B.; De Silva, V.; Langford, J.C. A global geometric framework for nonlinear dimensionality reduction. Science 2000, 290, 2319–2323.

14. Maaten, L.V.D.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

15. Van Der Maaten, L.; Postma, E.; Van den Herik, J. Dimensionality reduction: A comparative review. J. Mach. Learn. Res. 2009, 10, 66–71.

16. Rosso, O.A.; Larrondo, H.A.; Martin, M.T.; Plastino, A.; Fuentes, M.A. Distinguishing noise from chaos. Phys. Rev. Lett. 2007, 99, 154102.

17. Olivares, F.; Plastino, A.; Rosso, O. Contrasting chaos with noise via local versus global information quantifiers. Phys. Lett. A 2012, 376, 1577–1583.

18. Ravetti, M.G.; Carpi, L.C.; Gonçalves, B.A.; Frery, A.C.; Rosso, O.A. Distinguishing noise from chaos: Objective versus subjective criteria using horizontal visibility graph. PLoS ONE 2014, 9, e108004.

19. Spichak, D.; Kupetsky, A.; Aragoneses, A. Characterizing complexity of non-invertible chaotic maps in the Shannon-Fisher information plane with ordinal patterns. Chaos Solitons Fractals 2021, 142, 110492.

20. Daw, C.S.; Finney, C.E.A.; Tracy, E.R. A review of symbolic analysis of experimental data. Rev. Sci. Inst. 2003, 74, 913–930.

21. Corso, G.; Prado, T.d.L.; Lima, G.Z.S.; Kurths, J.; Lopes, S.R. Quantifying entropy using recurrence matrix microstates. Chaos Interdiscip. Nonlinear Sci. 2018, 28, 083108.

22. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

23. Richman, J.S.; Moorman, J.R. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. 2000, 278, H2039–H2049.

24. Prado, T.L.; Corso, G.; dos Santos Lima, G.Z.; Budzinski, R.C.; Boaretto, B.R.R.; Ferrari, F.A.S.; Macau, E.E.N.; Lopes, S.R. Maximum entropy principle in recurrence plot analysis on stochastic and chaotic systems. Chaos Interdiscip. Nonlinear Sci. 2020, 30, 043123.

25. Martin, M.T.; Plastino, A.; Rosso, O.A. Generalized statistical complexity measures: Geometrical and analytical properties. Physica A 2006, 369, 439–462.

26. Parlitz, U.; Berg, S.; Luther, S.; Schirdewan, A.; Kurths, J.; Wessel, N. Classifying cardiac biosignals using ordinal pattern statistics and symbolic dynamics. Comput. Biol. Med. 2012, 42, 319–327.

27. Ott, E. Chaos in Dynamical Systems; Cambridge University Press: Cambridge, UK, 2002.

28. Mitschke, F.; Damming, M. Chaos vs. noise in experimental data. Int. J. Bifurc. Chaos 1993, 3, 693–702.

29. Rosso, O.A.; Masoller, C. Detecting and quantifying stochastic and coherence resonances via information-theory complexity measurements. Phys. Rev. E 2009, 79, 040106.

30. Library to Generate a Flicker Noise. Available online: https://github.com/felixpatzelt/colorednoise (accessed on 4 August 2021).

31. Timmer, J.; Koenig, M. On generating power law noise. Astron. Astrophys. 1995, 300, 707.

32. Schuster, H.G.; Just, W. Deterministic Chaos: An Introduction; John Wiley & Sons: Hoboken, NJ, USA, 2006.

33. Lorenz, E.N. Deterministic nonperiodic flow. J. Atmos. Sci. 1963, 20, 130–141.

34. Rössler, O.E. An equation for continuous chaos. Phys. Lett. A 1976, 57, 397–398.

35. Lopes, S.; Chian, A.L. Controlling chaos in nonlinear three-wave coupling. Phys. Rev. E 1996, 54, 170.

36. Hindmarsh, J.L.; Rose, R.M. A model of neuronal bursting using three coupled first order differential equations. Proc. R. Soc. Lond. B Biol. Sci. 1984, 221, 87–102.

37. Little, D.J.; Kane, D.M. Permutation entropy of finite-length white-noise time series. Phys. Rev. E 2016, 94, 022118.

38. De Micco, L.; Fernández, J.G.; Larrondo, H.A.; Plastino, A.; Rosso, O.A. Sampling period, statistical complexity, and chaotic attractors. Phys. A Stat. Mech. Its Appl. 2012, 391, 2564–2575.

39. Zunino, L.; Soriano, M.C.; Fischer, I.; Rosso, O.A.; Mirasso, C.R. Permutation-information-theory approach to unveil delay dynamics from time-series analysis. Phys. Rev. E 2010, 82, 046212.

40. Aragoneses, A.; Carpi, L.; Tarasov, N.; Churkin, D.V.; Torrent, M.C.; Masoller, C.; Turitsyn, S.K. Unveiling Temporal Correlations Characteristic of a Phase Transition in the Output Intensity of a Fiber Laser. Phys. Rev. Lett. 2016, 116, 033902.

41. Lopes, S.R.; Prado, T.d.L.; Corso, G.; Lima, G.Z.d.S.; Kurths, J. Parameter-free quantification of stochastic and chaotic signals. Chaos Solitons Fractals 2020, 133, 109616.

42. Repository with the ANN. Available online: https://github.com/brunorrboaretto/chaos_detection_ANN/ (accessed on 4 August 2021).

43. Uchida, A.; Amano, K.; Inoue, M.; Hirano, K.; Naito, S.; Someya, H.; Oowada, I.; Kurashige, T.; Shiki, M.; Yoshimori, S. Fast physical random bit generation with chaotic semiconductor lasers. Nat. Photonics 2008, 2, 728–732.

44. Sakuraba, R.; Iwakawa, K.; Kanno, K.; Uchida, A. Tb/s physical random bit generation with bandwidth-enhanced chaos in three-cascaded semiconductor lasers. Opt. Express 2015, 23, 1470–1490.

45. Zhang, L.; Pan, B.; Chen, G.; Guo, L.; Lu, D.; Zhao, L.; Wang, W. 640-Gbit/s fast physical random number generation using a broadband chaotic semiconductor laser. Sci. Rep. 2017, 7, 45900.

46. Oliver, N.; Soriano, M.C.; Sukow, D.W.; Fischer, I. Dynamics of a semiconductor laser with polarization-rotated feedback and its utilization for random bit generation. Opt. Lett. 2011, 36, 4632–4634.

47. Zhang, J.; Feng, C.; Zhang, M.; Liu, Y.; Zhang, Y. Suppression of Time Delay Signature Based on Brillouin Backscattering of Chaotic Laser. IEEE Photon J. 2017, 9, 1502408.

48. Li, S.S.; Chan, S.C. Chaotic Time-Delay Signature Suppression in a Semiconductor Laser With Frequency-Detuned Grating Feedback. IEEE J. Sel. Top. Quantum Electron. 2015, 21, 541–552.

49. Correa-Mena, A.G.; Lee, M.W.; Zaldívar-Huerta, I.E.; Hong, Y.; Boudrioua, A. Investigation of the Dynamical Behavior of a High-Power Laser Diode Subject to Stimulated Brillouin Scattering Optical Feedback. IEEE J. Quantum Electron. 2020, 56, 1–6.

50. Bouchez, G.; Malica, T.; Wolfersberger, D.; Sciamanna, M. Optimized properties of chaos from a laser diode. Phys. Rev. E 2021, 103, 042207.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).