Abstract

In this paper, we present a novel blind signal detector based on the entropy of the power spectrum subband energy ratio (PSER), whose detection performance is significantly better than that of the classical energy detector. This detector uses the full power spectrum and requires neither the noise variance nor prior information about the signal to be detected. Based on an analysis of the statistical characteristics of the PSER, this paper proposes the concepts of interval probability, interval entropy, sample entropy, joint interval entropy, PSER entropy, and sample entropy variance. Using the multinomial distribution, we derive formulas for the PSER entropy and the variance of the sample entropy in the pure-noise case. Using the mixture multinomial distribution, we derive the corresponding formulas for signals mixed with noise. The PSER entropy detector is then derived under the constant false alarm rate strategy. Experimental results for primary signal detection agree with the theoretical calculations, which confirms the correctness of the detection method.

1. Introduction

With the rapid development of wireless communication, spectrum sensing has been studied extensively and applied successfully in cognitive radio (CR). Common spectrum sensing methods [1,2] include matched filter detection [3], energy-based detection [4], and cyclostationary-based detection [5], among others. Matched filtering is the optimum detection method when the transmitted signal is known [6]. Energy detection is considered optimal when no prior information about the transmitted signal is available. Cyclostationary detection is applicable to signals with cyclostationary features [2]. Energy detection can be classified into two categories: time domain energy detection [4] and frequency domain energy detection [7,8]. The power spectrum subband energy ratio (PSER) detector [9] is a local power spectrum energy detection technique. To extend PSER to full-spectrum detection, this paper proposes a new PSER-based detector whose detection performance is better than that of time domain energy detection.

In information theory, entropy is a measure of the uncertainty associated with a discrete random variable, and differential entropy is a measure of the uncertainty of a continuous random variable. Because noise is more uncertain than a signal, the entropy of noise is generally higher than that of a signal; this is the basis for using entropy to detect signals. Information entropy has been successfully applied to signal detection [10,11,12]. The main entropy detection methods can be classified into two categories: time domain and frequency domain methods.

In the time domain, a signal with a low signal-to-noise ratio (SNR) is buried in the noise, and the estimated entropy is essentially the entropy of the noise; therefore, time domain entropy-based detectors do not perform adequately. Nagaraj [12] introduced a time domain entropy-based matched filter for primary user (PU) detection and presented a likelihood ratio test for detecting a PU signal. Gu et al. [13] presented a cross entropy-based spectrum sensing scheme that uses two time-adjacent data sets of the PU.

In the frequency domain, the spectral bin amplitudes of a signal are clearly higher than those of noise; therefore, frequency domain entropy-based detectors are widely applied. Zhang et al. [10,14] presented a spectrum sensing scheme based on the entropy of the spectrum magnitude, which has been used in many practical applications. For example, Jakub et al. [15] used this scheme to assist blind signal detection, Zhao [16] improved the two-stage entropy detection method based on this scheme, and Waleed et al. [17] applied this scheme to a maritime radar network. So [18] used the conditional entropy of the spectrum magnitude to detect unauthorized user signals in cognitive radio networks. Guillermo et al. [11] proposed an improved entropy estimation method based on the Bartlett periodogram. Ye et al. [19] proposed a method based on exponential entropy. Zhu et al. [20] compared the performance of several entropy detectors in primary user detection. In these papers, the mean of the entropy-based test statistic could be calculated from the differential entropy, but no formula for the variance of the test statistic was given. Because the variance of the test statistic plays a central role in detection theory (for example, in setting a constant false alarm threshold), this is a major drawback of existing entropy-based detection.

None of the entropy-based detectors in the above references makes use of the PSER. The PSER is a common metric representing the proportion of signal energy in a single spectral line. It has been extensively applied in machine design [21], earthquake modeling [22], remote communication [23,24], and geological engineering [25]. The real and imaginary parts of a white noise spectral bin follow Gaussian distributions, and its PSER follows a beta distribution [9]. Compared with the entropy detector based on spectral line amplitude, the PSER entropy detector has distinctive statistical properties.

This paper is organized as follows. In Section 2, the theoretical formulas of the PSER entropy are derived for the pure-noise and noisy-signal cases; the statistical characteristics of the PSER entropy are summarized, and the computational complexity of the main statistics is analyzed. Section 3 describes in detail the derivation of the PSER entropy detector under the constant false alarm strategy. In Section 4, experiments verify the accuracy of the PSER entropy detector, and its detection performance is compared with that of other detection methods. Section 5 discusses additional details of the research process. Conclusions are drawn in Section 6.

2. PSER Entropy

PSER entropy takes the form of a classical Shannon entropy. In theory, the Shannon entropy can be approximated by the differential entropy, and this approach is adopted in existing entropy-based signal detection methods [10]. However, the approximation can differ substantially from the actual value, and it can even be negative (Section 2.3.3). Therefore, based on an analysis of the statistical characteristics of PSER entropy, this paper proposes a new method to calculate PSER entropy without using differential entropy. First, the PSER range [0,1] is divided into several equally spaced intervals. Then, under pure noise, the PSER entropy and its variance are derived using the multinomial distribution. For a signal mixed with noise, they are derived using the mixture multinomial distribution.

2.1. Probability Distribution for PSER

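The signal mixed with additive Gaussian white noise (GWN) can be expressed as

$$x(n)=\begin{cases} w(n), & \mathcal{H}_0\\ s(n)+w(n), & \mathcal{H}_1 \end{cases}\qquad n=0,1,\dots,N-1,$$

where $N$ is the number of sampling points; $x(n)$ is the signal to be detected; $s(n)$ is the signal; $w(n)$ is the GWN with zero mean and variance $\sigma^2$; $\mathcal{H}_0$ represents the hypothesis corresponding to “no signal transmitted”; and $\mathcal{H}_1$ corresponds to “signal transmitted”. The single-sided spectrum of $x(n)$ is

$$\vec{X}(k)=\sum_{n=0}^{N-1}x(n)\,e^{-j2\pi kn/N},$$

where $j$ is the imaginary unit, and the arrow superscript denotes a complex function. The $k$th line in the power spectrum of $x(n)$ can be expressed as

$$P(k)=\left|\vec{X}(k)\right|^2=\left[S_R(k)+W_R(k)\right]^2+\left[S_I(k)+W_I(k)\right]^2,$$

where $S_R(k)$ and $S_I(k)$ represent the real and imaginary parts of the signal spectrum, respectively, and $W_R(k)$ and $W_I(k)$ represent the real and imaginary parts of the noise spectrum, respectively.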

The PSER is defined as the ratio of the energy of the $d$ adjacent bins starting from the $k$th bin of the power spectrum to the energy of the entire spectrum, i.e.,

$$B_d(k)=\frac{E_d(k)}{E}=\frac{\sum_{l=k}^{k+d-1}P(l)}{\sum_{l}P(l)},$$

where $E$ represents the total energy in the power spectrum and $E_d(k)$ represents the total energy of the $d$ adjacent bins. When noise is present in the power spectrum, $B_d(k)$ is clearly a random variable.
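The PSER definition translates directly into code. The sketch below is illustrative only: it assumes the power spectrum is already available as a list of bin powers, the function name `pser` is hypothetical, and subbands that would run past the last bin are simply dropped, which the text does not specify.

```python
import random


def pser(power, d=1):
    """Power spectrum subband energy ratio of every d-bin subband.

    power: list of non-negative bin powers P(0), ..., P(N-1).
    Returns E_d(k) / E for k = 0, ..., N-d, where E_d(k) is the energy of
    bins k..k+d-1 and E is the total energy. Edge subbands that would run
    past the last bin are skipped (an assumption, not from the text).
    """
    total = sum(power)
    if total <= 0:
        raise ValueError("power spectrum must have positive total energy")
    return [sum(power[k:k + d]) / total for k in range(len(power) - d + 1)]


if __name__ == "__main__":
    # Idealized noise-only spectrum: when a bin's real and imaginary parts are
    # i.i.d. zero-mean Gaussian, its power is exponentially distributed.
    rng = random.Random(1)
    spectrum = [rng.expovariate(1.0) for _ in range(8)]
    ratios = pser(spectrum)
    print(len(ratios), round(sum(ratios), 12))
```

For $d = 1$ the ratios always sum to one, which is why the mean PSER of a single bin under pure noise is $1/N$.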

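The probability distribution for $B_d(k)$ is described in detail in [9]. Under $\mathcal{H}_0$, $B_d(k)$ follows a beta distribution with parameters $d$ and $N-d$. Under $\mathcal{H}_1$, $B_d(k)$ follows a doubly non-central beta distribution with parameters $d$ and $N-d$ and non-centrality parameters $\lambda_d(k)$ and $\lambda-\lambda_d(k)$ [9] (p. 7), where $\lambda(k)$ is the SNR of the $k$th spectral bin; $\lambda_d(k)=\sum_{l=k}^{k+d-1}\lambda(l)$ is the SNR of the $d$ spectral lines starting from the $k$th line; and $\lambda-\lambda_d(k)$, with $\lambda=\sum_{l}\lambda(l)$, is the SNR of the spectral lines not contained in the selected subband. The probability density function (PDF) and cumulative distribution function (CDF) of $B_d(k)$ under $\mathcal{H}_1$ are infinite double series given in [9] (p. 7).

The subband used in this paper contains only one spectral bin, i.e., $d=1$. Under $\mathcal{H}_0$, $B_1(k)$ therefore follows the beta distribution with parameters $1$ and $N-1$, whose PDF and CDF are

$$f(x)=(N-1)(1-x)^{N-2},\qquad F(x)=1-(1-x)^{N-1},\qquad 0\le x\le 1,$$

and under $\mathcal{H}_1$ it follows the doubly non-central beta distribution above with $d=1$.

For convenience of description, $B_1(k)$ is replaced by $B(k)$.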

2.2. Basic Definitions

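$B(k)$ lies in the range $[0,1]$. The range is divided into $m$ equal intervals at $x_i=i/m$, i.e., $x_0=0, x_1=1/m, \dots, x_m=1$. The $i$th interval is then $[x_{i-1},x_i)$, where $i=1,2,\dots,m$. The probability that $B(k)$ falls into the $i$th interval [26] is

$$p_i=F(x_i)-F(x_{i-1})=\int_{x_{i-1}}^{x_i}f(x)\,dx,$$

where $F(\cdot)$ and $f(\cdot)$ are the CDF and PDF of $B(k)$. $p_i$ is called the interval probability.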

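The PSER values of all $N$ spectral lines are regarded as a sequence $\{B(k)\}$. Let $n_i$ be the number of values in the sequence that fall into the $i$th interval; $n_i$ is a random variable with $0\le n_i\le N$ and $\sum_{i=1}^{m}n_i=N$. Let $\mathbf{n}=(n_1,n_2,\dots,n_m)$. The frequency with which the data in the sequence fall into the $i$th interval is denoted as $f_i$, i.e., $f_i=n_i/N$, and $\sum_{i=1}^{m}f_i=1$.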

The random variable $h_i=-f_i\log f_i$ (with $0\log 0=0$) is called the sample entropy of PSER in the $i$th interval. The mean of $h_i$ is $H_i=E[h_i]$, which is called the interval entropy. Note that the $n_i$ are not mutually independent, so the $h_i$ are not mutually independent either. Let

$$\hat{H}(m,N)=\sum_{i=1}^{m}h_i=-\sum_{i=1}^{m}f_i\log f_i.$$

$\hat{H}(m,N)$ is called the sample entropy of PSER and is abbreviated as $\hat{H}$ when $m$ and $N$ are clear from context.

By definition, entropy is a mean and therefore has no variance. However, the sample entropy is the entropy of one PSER sequence sample; it is therefore a random variable with a mean and a variance. The mean of the sample entropy is

$$H(m,N)=E\left[\hat{H}(m,N)\right],$$

where $m$ and $N$ are the numbers of intervals and spectral bins, respectively. $H(m,N)$ can be called the total entropy or PSER entropy. The variance of the sample entropy is denoted as $\sigma^2(m,N)=\operatorname{Var}\left[\hat{H}(m,N)\right]$. In signal detection, $H(m,N)$ and $\sigma^2(m,N)$ are very important; their calculation is discussed in the following sections.
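The sample entropy and a Monte Carlo estimate of its mean and variance can be sketched as follows. This assumes an idealized noise-only spectrum in which the bin powers are i.i.d. exponential (the power of a bin whose real and imaginary parts are i.i.d. zero-mean Gaussian), natural logarithms, and hypothetical helper names; it is a simulation aid, not the theoretical calculation developed below.

```python
import math
import random


def sample_entropy(pser_values, m):
    """Sample entropy -sum_i f_i ln f_i of a PSER sequence (nats).

    [0, 1] is split into m equal intervals; f_i = n_i / N is the fraction of
    PSER values in interval i. Empty intervals contribute 0 (0 ln 0 = 0).
    """
    n = len(pser_values)
    counts = [0] * m
    for v in pser_values:
        counts[min(int(v * m), m - 1)] += 1  # a value of exactly 1.0 goes to the last interval
    return -sum((c / n) * math.log(c / n) for c in counts if c > 0)


def noise_pser(n, rng):
    """Idealized H0 PSER sequence: i.i.d. exponential bin powers divided by their sum."""
    power = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(power)
    return [p / total for p in power]


def monte_carlo_entropy(n, m, trials, seed=0):
    """Estimate H(m, N) (mean) and sigma^2(m, N) (unbiased variance) under H0."""
    rng = random.Random(seed)
    values = [sample_entropy(noise_pser(n, rng), m) for _ in range(trials)]
    mean = sum(values) / trials
    var = sum((v - mean) ** 2 for v in values) / (trials - 1)
    return mean, var


if __name__ == "__main__":
    print(monte_carlo_entropy(512, 500, trials=200))
```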

2.3. Calculating PSER Entropy Using the Differential Entropy

2.3.1. The Differential Entropy for $B(k)$

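Under $\mathcal{H}_0$, by the definition of differential entropy, the differential entropy for $B(k)$ is

$$h(B)=-\int_{0}^{1}f(x)\log_a f(x)\,dx,$$

where $a$ is the base of the logarithm. When $a=e$, substituting the Beta$(1,N-1)$ PDF $f(x)=(N-1)(1-x)^{N-2}$ and using $E[\ln(1-B)]=-1/(N-1)$ gives

$$h(B)=1-\frac{1}{N-1}-\ln(N-1).$$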

2.3.2. PSER Entropy Calculated Using Differential Entropy

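Following the calculation of the spectrum magnitude entropy presented by Zhang in [10] and the relation between discrete and differential entropy given in [27] (p. 247), when the interval width $1/m$ is small, the PSER entropy under $\mathcal{H}_0$ is approximately

$$H(m,N)\approx h(B)+\log_a m. \tag{14}$$

For $a=e$, the right-hand side of Equation (14) is negative whenever $m<(N-1)\,e^{-(N-2)/(N-1)}$, i.e., roughly $m<0.37(N-1)$ for large $N$.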

2.3.3. The Defect of the PSER Entropy Calculated Using Differential Entropy

The PSER entropy is the mean of the sample entropy, and the sample entropy is nonnegative; therefore, the PSER entropy is nonnegative too. However, the PSER entropy calculated by Equation (14) is not always nonnegative; in particular, it is negative when $m$ is small relative to $N$. This happens because differential entropy, unlike the entropy of a discrete random variable, can be negative.
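The defect can be reproduced numerically. The sketch below assumes that Equation (14) takes the form $H(m,N)\approx h(B)+\ln m$ with $h(B)=1-1/(N-1)-\ln(N-1)$ for the Beta$(1,N-1)$ PSER under $\mathcal{H}_0$, uses natural logarithms, and compares this with the average sample entropy of idealized noise-only PSER sequences (i.i.d. exponential bin powers); the function names are hypothetical. For $N = 1024$ the approximation turns negative below roughly $m = 377$, whereas the simulated entropy can never be negative.

```python
import math
import random


def differential_entropy_approx(n, m):
    """PSER entropy under H0 approximated via differential entropy (nats).

    h = 1 - 1/(n - 1) - ln(n - 1) is the differential entropy of Beta(1, n - 1);
    adding ln(m) accounts for the interval width 1/m.
    """
    return 1.0 - 1.0 / (n - 1) - math.log(n - 1) + math.log(m)


def simulated_pser_entropy(n, m, trials, seed=0):
    """Average sample entropy over idealized noise-only PSER sequences (nats)."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(trials):
        power = [rng.expovariate(1.0) for _ in range(n)]
        total = sum(power)
        counts = [0] * m
        for p in power:
            counts[min(int(p / total * m), m - 1)] += 1
        acc += -sum(c / n * math.log(c / n) for c in counts if c > 0)
    return acc / trials


for m in (100, 1000, 10000):
    print(m, round(differential_entropy_approx(1024, m), 3),
          round(simulated_pser_entropy(1024, m, trials=10), 3))
```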

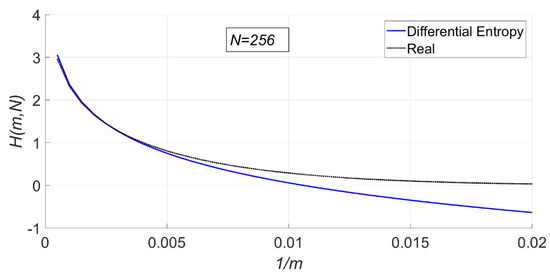

The difference between the real PSER entropy obtained by simulation and that calculated using differential entropy is shown in Figure 1. At least Monte Carlo simulation experiments were carried out under $\mathcal{H}_0$ for different values of $m$.

Figure 1.

Comparison between the power spectrum subband energy ratio (PSER) entropy calculated using differential entropy and the real PSER entropy.

In Figure 1, the solid line is the PSER entropy calculated using the differential entropy, while the dotted line is the real PSER entropy. When $m$ is very large, the two results are close. However, when $m$ is small, the difference between them is large, and the total entropy calculated using the differential entropy can even be negative, which is inconsistent with the actual result. Therefore, this paper proposes a more reasonable method to calculate the PSER entropy.

2.4. PSER Entropy under $\mathcal{H}_0$

2.4.1. Definitions and Lemmas

Under $\mathcal{H}_0$, all $B(k)$ obey the same beta distribution. According to (10), the interval probability in the $i$th interval is

$$p_i=F(x_i)-F(x_{i-1})=(1-x_{i-1})^{N-1}-(1-x_i)^{N-1}.$$

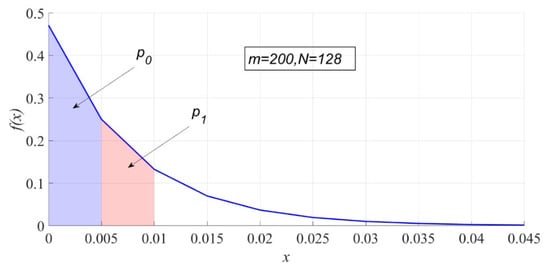

$p_i$ is essentially the area under the probability density curve over the $i$th interval. Figure 2 shows $f(x)$ and $p_i$ when $m$ is 200 and $N$ is 128.

Figure 2.

The interval probability under $\mathcal{H}_0$.
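For $d = 1$ the interval probabilities under $\mathcal{H}_0$ need no numerical integration, because the Beta$(1,N-1)$ CDF is $F(x)=1-(1-x)^{N-1}$. A minimal sketch, assuming equal interval widths $1/m$ (the function name is hypothetical):

```python
def interval_probs_h0(n, m):
    """Interval probabilities p_1..p_m of a Beta(1, n-1) PSER under H0.

    Uses the closed-form CDF F(x) = 1 - (1 - x)**(n - 1), so
    p_i = (1 - x_{i-1})**(n - 1) - (1 - x_i)**(n - 1) with x_i = i / m.
    """
    edges = [i / m for i in range(m + 1)]
    return [(1 - a) ** (n - 1) - (1 - b) ** (n - 1) for a, b in zip(edges, edges[1:])]


probs = interval_probs_h0(128, 200)
print([round(p, 4) for p in probs[:5]], round(sum(probs), 12))
```

Because the Beta$(1,N-1)$ density decreases on $[0,1]$, the $p_i$ decrease with $i$, which is why most of the probability mass sits in the first few intervals.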

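Let $\mathbf{n}=(n_1,n_2,\dots,n_m)$. Distributing $N$ data values into $m$ intervals is a typical multinomial distribution problem. By the probability formula of the multinomial distribution, the probability of $\mathbf{n}$ is

$$P(\mathbf{n})=\binom{N}{n_1,n_2,\dots,n_m}p_1^{n_1}p_2^{n_2}\cdots p_m^{n_m},$$

where

$$\binom{N}{n_1,n_2,\dots,n_m}=\frac{N!}{n_1!\,n_2!\cdots n_m!}$$

is the multinomial coefficient. The following lemmas are used in the subsequent analysis.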

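Lemma 1.

If $k$ is a non-negative integer with $k\le N$, then [28] (p. 183)

$$\sum_{\mathbf{n}:\,n_i=k}P(\mathbf{n})=\binom{N}{k}p_i^{k}(1-p_i)^{N-k}.$$

Lemma 2.

If $k$ is a non-negative integer and $\varphi(\cdot)$ is any function of $k$, then

$$\sum_{\mathbf{n}}P(\mathbf{n})\,\varphi(n_i)=\sum_{k=0}^{N}\binom{N}{k}p_i^{k}(1-p_i)^{N-k}\varphi(k). \tag{18}$$

Proof.

The left side of Equation (18) is $\sum_{k=0}^{N}\varphi(k)\sum_{\mathbf{n}:\,n_i=k}P(\mathbf{n})$. From Lemma 1, this equals the right side of Equation (18).

☐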

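Lemma 3.

If $k$ and $l$ are non-negative integers, $k+l\le N$, and $i\ne j$, then [28] (p. 183)

$$\sum_{\mathbf{n}:\,n_i=k,\,n_j=l}P(\mathbf{n})=\frac{N!}{k!\,l!\,(N-k-l)!}p_i^{k}p_j^{l}(1-p_i-p_j)^{N-k-l}.$$

Lemma 4.

If $k$ and $l$ are non-negative integers, $k+l\le N$, $i\ne j$, and $\varphi(\cdot)$ and $\psi(\cdot)$ are any functions, then

$$\sum_{\mathbf{n}}P(\mathbf{n})\,\varphi(n_i)\,\psi(n_j)=\sum_{k=0}^{N}\sum_{l=0}^{N-k}\frac{N!}{k!\,l!\,(N-k-l)!}p_i^{k}p_j^{l}(1-p_i-p_j)^{N-k-l}\varphi(k)\,\psi(l). \tag{20}$$

Proof.

Grouping the terms on the left side by the values $n_i=k$ and $n_j=l$ and applying Lemma 3 gives the right side of Equation (20).

☐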
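Lemmas 1 and 3 concern the marginal distributions of the multinomial counts: a single count $n_i$ is binomial, and a pair $(n_i,n_j)$ is trinomial. For small $N$ and $m$ this can be checked by brute-force enumeration of every count vector $\mathbf{n}$, as in the sketch below, which uses exact integer multinomial coefficients. All function names are hypothetical, and only the pair of intervals $(1,2)$ is checked for the trinomial case.

```python
import itertools
import math


def compositions(n, m):
    """Yield every count vector (n_1, ..., n_m) of non-negative integers summing to n."""
    for bars in itertools.combinations(range(n + m - 1), m - 1):  # stars and bars
        parts, prev = [], -1
        for b in bars:
            parts.append(b - prev - 1)
            prev = b
        parts.append(n + m - 2 - prev)
        yield tuple(parts)


def multinomial_pmf(counts, probs):
    """P(n) = N! / (n_1! ... n_m!) * p_1^{n_1} ... p_m^{n_m}."""
    coef = math.factorial(sum(counts))
    for c in counts:
        coef //= math.factorial(c)
    return coef * math.prod(p ** c for p, c in zip(probs, counts))


def binomial_pmf(n, p, k):
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)


def trinomial_pmf(n, p, q, k, l):
    coef = math.factorial(n) // (math.factorial(k) * math.factorial(l) * math.factorial(n - k - l))
    return coef * p ** k * q ** l * (1 - p - q) ** (n - k - l)


def check_marginals(n, probs, tol=1e-12):
    """True if enumerated marginals match the binomial and trinomial forms."""
    outcomes = [(c, multinomial_pmf(c, probs)) for c in compositions(n, len(probs))]
    for i, p in enumerate(probs):
        for k in range(n + 1):
            enumerated = sum(w for c, w in outcomes if c[i] == k)
            if abs(enumerated - binomial_pmf(n, p, k)) > tol:
                return False
    for k in range(n + 1):  # pair of intervals (1, 2) only
        for l in range(n - k + 1):
            enumerated = sum(w for c, w in outcomes if c[0] == k and c[1] == l)
            if abs(enumerated - trinomial_pmf(n, probs[0], probs[1], k, l)) > tol:
                return False
    return True


print(check_marginals(6, [0.5, 0.3, 0.15, 0.05]))
```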

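2.4.2. Statistical Characteristics of $n_i$

The mean of $n_i$ [28] (p. 183) is

$$E[n_i]=Np_i.$$

The mean-square value of $n_i$ [28] (p. 183) is

$$E\left[n_i^2\right]=Np_i(1-p_i)+N^2p_i^2.$$

The variance of $n_i$ [28] (p. 183) is

$$\operatorname{Var}[n_i]=Np_i(1-p_i).$$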

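2.4.3. Statistical Characteristics of $h_i$

For convenience of description, let

$$g(k)=-\frac{k}{N}\log\frac{k}{N},\qquad k=0,1,\dots,N,\qquad g(0)=0,$$

so that $h_i=g(n_i)$. The mean of $h_i$ is the mean of all the entropy values of $h_i$ in the $i$th interval; by Lemma 2,

$$H_i=E[h_i]=\sum_{k=0}^{N}\binom{N}{k}p_i^{k}(1-p_i)^{N-k}g(k).$$

$H_i$ is the interval entropy. This paper does not use the following definition of the interval entropy:

$$H_i'=-p_i\log p_i,$$

because this is the entropy of the probability that $B(k)$ falls into the $i$th interval, not the mean of the sample entropy $h_i$.

The mean-square value of $h_i$ is

$$E\left[h_i^2\right]=\sum_{k=0}^{N}\binom{N}{k}p_i^{k}(1-p_i)^{N-k}g^2(k).$$

The variance of $h_i$ is

$$\operatorname{Var}[h_i]=E\left[h_i^2\right]-H_i^2.$$

When $i\ne j$, the joint entropy of two intervals is, by Lemma 4,

$$H_{ij}=E[h_ih_j]=\sum_{k=0}^{N}\sum_{l=0}^{N-k}\frac{N!}{k!\,l!\,(N-k-l)!}p_i^{k}p_j^{l}(1-p_i-p_j)^{N-k-l}g(k)\,g(l).$$

When $i=j$, $H_{ii}=E\left[h_i^2\right]$, i.e., the mean-square value of $h_i$.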
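The interval entropy $H_i$, the mean-square value $H_{ii}$, and the joint entropy $H_{ij}$ under $\mathcal{H}_0$ reduce to binomial and trinomial sums over the counts. The sketch below evaluates those sums directly with natural logarithms; the function names are hypothetical. Because it multiplies exact integer coefficients by floating-point powers, the largest trinomial coefficients no longer fit in a double once $N$ reaches roughly 650, so a log-space evaluation (for example, with `math.lgamma`) would be needed at the larger $N$ values used in Section 4.

```python
import math


def g(k, n):
    """-(k/n) ln(k/n), with the convention 0 ln 0 = 0."""
    if k == 0:
        return 0.0
    f = k / n
    return -f * math.log(f)


def interval_entropy(n, p):
    """H_i = E[-f_i ln f_i] where n_i ~ Binomial(n, p_i)."""
    return sum(math.comb(n, k) * p ** k * (1 - p) ** (n - k) * g(k, n) for k in range(n + 1))


def interval_mean_square(n, p):
    """H_ii = E[(f_i ln f_i)^2], the mean-square value of h_i."""
    return sum(math.comb(n, k) * p ** k * (1 - p) ** (n - k) * g(k, n) ** 2
               for k in range(n + 1))


def joint_entropy(n, p, q):
    """H_ij = E[h_i h_j] for two distinct intervals, via the trinomial marginal."""
    total = 0.0
    for k in range(n + 1):
        for l in range(n - k + 1):
            # comb(n, k) * comb(n - k, l) equals n! / (k! l! (n - k - l)!)
            weight = (math.comb(n, k) * math.comb(n - k, l)
                      * p ** k * q ** l * (1 - p - q) ** (n - k - l))
            total += weight * g(k, n) * g(l, n)
    return total


print(interval_entropy(64, 0.3), interval_mean_square(64, 0.3), joint_entropy(64, 0.3, 0.2))
```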

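2.4.4. Statistical Characteristics of $\hat{H}$

The total entropy with $m$ intervals and $N$ spectral bins is

$$H(m,N)=E\left[\hat{H}(m,N)\right]=E\left[\sum_{i=1}^{m}h_i\right].$$

Theorem 1.

The PSER entropy is equal to the sum of all the interval entropies, i.e.,

$$H(m,N)=\sum_{i=1}^{m}H_i.$$

Proof.

According to Lemma 2,

$$H(m,N)=\sum_{\mathbf{n}}P(\mathbf{n})\sum_{i=1}^{m}h_i=\sum_{i=1}^{m}\sum_{\mathbf{n}}P(\mathbf{n})\,h_i=\sum_{i=1}^{m}E[h_i]=\sum_{i=1}^{m}H_i.$$

☐

Theorem 2.

The mean-square value of the sample entropy is equal to the sum of the joint entropies of all pairs of intervals, i.e.,

$$E\left[\hat{H}^2(m,N)\right]=\sum_{i=1}^{m}\sum_{j=1}^{m}H_{ij}.$$

Proof.

From Lemmas 2 and 4,

$$E\left[\hat{H}^2(m,N)\right]=\sum_{\mathbf{n}}P(\mathbf{n})\sum_{i=1}^{m}\sum_{j=1}^{m}h_ih_j=\sum_{i=1}^{m}\sum_{j=1}^{m}E[h_ih_j]=\sum_{i=1}^{m}\sum_{j=1}^{m}H_{ij}.$$

☐

Theorem 3.

The variance of the sample entropy is

$$\sigma^2(m,N)=\sum_{i=1}^{m}\sum_{j=1}^{m}H_{ij}-\left(\sum_{i=1}^{m}H_i\right)^2.$$

For convenience of description, $H(m,N)$ and $\sigma^2(m,N)$ under $\mathcal{H}_0$ are denoted by $\mu_0$ and $\sigma_0^2$, respectively.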
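Theorems 1 to 3 can be checked for a small configuration by computing the mean and variance of $\hat{H}$ in two ways: by enumerating every multinomial outcome, and by summing interval and joint entropies as the theorems prescribe. The sketch below uses arbitrary illustrative interval probabilities (not the beta model) and hypothetical function names; the two results should agree to rounding error.

```python
import itertools
import math


def g(k, n):
    """-(k/n) ln(k/n) with 0 ln 0 = 0."""
    return 0.0 if k == 0 else -(k / n) * math.log(k / n)


def compositions(n, m):
    """All count vectors (n_1, ..., n_m) with non-negative entries summing to n."""
    for bars in itertools.combinations(range(n + m - 1), m - 1):
        parts, prev = [], -1
        for b in bars:
            parts.append(b - prev - 1)
            prev = b
        parts.append(n + m - 2 - prev)
        yield tuple(parts)


def multinomial_pmf(counts, probs):
    coef = math.factorial(sum(counts))
    for c in counts:
        coef //= math.factorial(c)
    return coef * math.prod(p ** c for p, c in zip(probs, counts))


def moments_by_enumeration(n, probs):
    """E[H_hat] and Var[H_hat] by summing over every multinomial outcome."""
    mean = sq = 0.0
    for counts in compositions(n, len(probs)):
        w = multinomial_pmf(counts, probs)
        h = sum(g(c, n) for c in counts)  # sample entropy of this outcome
        mean += w * h
        sq += w * h * h
    return mean, sq - mean ** 2


def moments_by_theorems(n, probs):
    """Theorems 1-3: E[H_hat] = sum_i H_i, Var = sum_ij H_ij - (sum_i H_i)^2."""
    def binom(p, k):
        return math.comb(n, k) * p ** k * (1 - p) ** (n - k)

    m = len(probs)
    h_i = [sum(binom(p, k) * g(k, n) for k in range(n + 1)) for p in probs]
    second = 0.0
    for i in range(m):
        for j in range(m):
            if i == j:  # H_ii: mean-square value of h_i
                second += sum(binom(probs[i], k) * g(k, n) ** 2 for k in range(n + 1))
            else:       # H_ij: joint entropy of two distinct intervals
                p, q = probs[i], probs[j]
                second += sum(
                    math.comb(n, k) * math.comb(n - k, l) * p ** k * q ** l
                    * (1 - p - q) ** (n - k - l) * g(k, n) * g(l, n)
                    for k in range(n + 1) for l in range(n - k + 1))
    total = sum(h_i)
    return total, second - total ** 2


print(moments_by_enumeration(6, [0.5, 0.3, 0.15, 0.05]))
print(moments_by_theorems(6, [0.5, 0.3, 0.15, 0.05]))
```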

2.4.5. Computational Complexity

The calculation time of each statistic is mainly spent on factorial calculation and on traversing all cases.

Factorial calculation involves two cases: $N!$ and $n_i!$. Both have a time complexity of $O(N)$.

There are two ways to traverse all cases: traversing one selected interval and traversing two selected intervals. The corresponding expressions are $\sum_{n_i=0}^{N}$ and $\sum_{n_i=0}^{N}\sum_{n_j=0}^{N-n_i}$, respectively.

Traversing one selected interval requires $n_i$ to take all values from 0 to $N$. Considering the time spent computing the factorials, its time complexity is $O(N^2)$. Therefore, the time complexity of calculating the interval entropy is $O(N^2)$.

Traversing two selected intervals requires first selecting $n_i$ spectral bins from all $N$ spectral bins and then selecting $n_j$ spectral bins from the remaining $N-n_i$ spectral bins. If $n_i$ and $n_j$ are fixed, the time complexity is $O(N)$. Listing all combinations of $n_i$ and $n_j$ requires

$$\sum_{n_i=0}^{N}\left(N-n_i+1\right)=\frac{(N+1)(N+2)}{2}$$

steps, i.e., $O(N^2)$. The time complexity of calculating the interval joint entropy is therefore $O(N^3)$.

Calculating the total entropy requires computing all $m$ interval entropies, so its time complexity is $O(mN^2)$.

Calculating the variance of the sample entropy $\sigma_0^2$ takes the most time. As all interval joint entropies have to be computed, its time complexity is $O(m^2N^3)$. In the following experiments, to ensure good detection performance, $m$ and $N$ should not be too small (the experiments in Section 4 use $m\ge 200$ and $N\ge 256$); therefore, the calculation time becomes very long.

2.5. PSER Entropy under $\mathcal{H}_1$

2.5.1. Definitions and Lemmas

Under $\mathcal{H}_1$, the $B(k)$ obey the doubly non-central beta distribution with $d=1$, and different $B(k)$ have different non-centrality parameters; therefore, calculating the total entropy and the sample entropy variance under $\mathcal{H}_1$ is much more complicated than under $\mathcal{H}_0$. According to Equation (10), the interval probability of $B(k)$ in the $i$th interval is

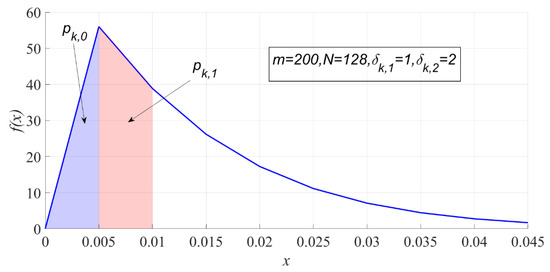

where . The subscript stands for the label of . Figure 3 shows and when , , , and .

Figure 3.

The interval probability of PSER under $\mathcal{H}_1$.

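Under $\mathcal{H}_1$, different $B(k)$ obey different probability distributions; this is therefore a multinomial distribution problem under mixture distributions. Let

$$n_i=\sum_{k}a_{ik},$$

where $a_{ik}$ is the number of times $B(k)$ falls into the $i$th interval, and $n_i$ is the number of times all the $B(k)$ fall into the $i$th interval. In a sample, since $B(k)$ can fall into only one interval, $a_{ik}$ can only be 0 or 1, and $\sum_{i=1}^{m}a_{ik}=1$.

By the probability formula of the multinomial distribution, the probability that $\mathbf{n}=(n_1,n_2,\dots,n_m)$ is

$$P(\mathbf{n})=\sum_{\{a_{ik}\}:\,\sum_{k}a_{ik}=n_i}\ \prod_{k}\prod_{i=1}^{m}p_{i,k}^{a_{ik}},$$

where $p_{i,k}$ is the interval probability of $B(k)$ in the $i$th interval.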

The following lemmas are used in the following analysis.

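Lemma 5.

If $k$ is a non-negative integer with $k\le N$, then

$$P(n_i=k)=\sum_{\mathcal{S}:\,|\mathcal{S}|=k}\ \prod_{r\in\mathcal{S}}p_{i,r}\prod_{r\notin\mathcal{S}}\left(1-p_{i,r}\right),$$

where $\mathcal{S}$ ranges over all subsets of $k$ spectral lines.

Proof.

☐

Lemma 6.

If $k$ is a non-negative integer and $\varphi(\cdot)$ is any function, then

$$\sum_{\mathbf{n}}P(\mathbf{n})\,\varphi(n_i)=\sum_{k=0}^{N}P(n_i=k)\,\varphi(k). \tag{35}$$

Proof.

The left side of Equation (35) is $\sum_{k=0}^{N}\varphi(k)\sum_{\mathbf{n}:\,n_i=k}P(\mathbf{n})$. From Lemma 5, this equals the right side of Equation (35).

☐

Lemma 7.

If $k$ and $l$ are non-negative integers, $k+l\le N$, and $i\ne j$, then

$$P(n_i=k,\,n_j=l)=\sum_{\mathcal{S},\,\mathcal{T}}\ \prod_{r\in\mathcal{S}}p_{i,r}\prod_{r\in\mathcal{T}}p_{j,r}\prod_{r\notin\mathcal{S}\cup\mathcal{T}}\left(1-p_{i,r}-p_{j,r}\right),$$

where $\mathcal{S}$ and $\mathcal{T}$ range over all disjoint subsets of $k$ and $l$ spectral lines, respectively.

Proof.

☐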

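2.5.2. Statistical Characteristics of $n_i$

The mean of $n_i$ is

$$E[n_i]=\sum_{k}p_{i,k}.$$

The mean-square value of $n_i$ is

$$E\left[n_i^2\right]=\sum_{k}p_{i,k}\left(1-p_{i,k}\right)+\left(\sum_{k}p_{i,k}\right)^2.$$

The variance of $n_i$ is

$$\operatorname{Var}[n_i]=\sum_{k}p_{i,k}\left(1-p_{i,k}\right).$$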

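2.5.3. Statistical Characteristics of $h_i$

With $g(k)=-\frac{k}{N}\log\frac{k}{N}$ and $g(0)=0$, as under $\mathcal{H}_0$, the mean of $h_i$ is, by Lemma 6,

$$H_i=E[h_i]=\sum_{k=0}^{N}P(n_i=k)\,g(k).$$

The mean-square value of $h_i$ is

$$E\left[h_i^2\right]=\sum_{k=0}^{N}P(n_i=k)\,g^2(k).$$

The variance of $h_i$ is

$$\operatorname{Var}[h_i]=E\left[h_i^2\right]-H_i^2.$$

When $i\ne j$, the joint entropy of two intervals is

$$H_{ij}=E[h_ih_j]=\sum_{k=0}^{N}\sum_{l=0}^{N-k}P(n_i=k,\,n_j=l)\,g(k)\,g(l).$$

When $i=j$, $H_{ii}=E\left[h_i^2\right]$, i.e., the mean-square value of $h_i$.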
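Section 2.5.5 describes computing $P(n_i=k)$ by choosing $k$ of the $N$ lines, which grows combinatorially. Because the lines are treated as independent in the mixture multinomial model, $n_i$ is a sum of independent 0/1 indicators (a Poisson-binomial count), and its distribution can instead be built with a standard $O(N^2)$ dynamic-programming recursion. The sketch below swaps in that recursion; it is not the computation used in this paper, it assumes the line probabilities $p_{i,k}$ have already been obtained from the doubly non-central beta CDF, and the function names and example probabilities are hypothetical.

```python
import math


def count_pmf(line_probs):
    """P(n_i = r), r = 0..N, when line k falls into interval i with probability line_probs[k].

    Lines are treated as independent, so n_i is a sum of independent Bernoulli
    variables; the pmf is built up one line at a time.
    """
    pmf = [1.0]
    for p in line_probs:
        nxt = [0.0] * (len(pmf) + 1)
        for r, w in enumerate(pmf):
            nxt[r] += w * (1.0 - p)  # this line falls outside interval i
            nxt[r + 1] += w * p      # this line falls inside interval i
        pmf = nxt
    return pmf


def interval_entropy_h1(line_probs):
    """H_i = E[-f_i ln f_i] with f_i = n_i / N under H1 (nats)."""
    n = len(line_probs)
    total = 0.0
    for r, w in enumerate(count_pmf(line_probs)):
        if r > 0:
            total -= w * (r / n) * math.log(r / n)
    return total


# Hypothetical example: four lines, one of which carries signal energy and is
# therefore less likely to fall into the lowest PSER interval.
print(count_pmf([0.9, 0.9, 0.9, 0.2]))
print(interval_entropy_h1([0.9, 0.9, 0.9, 0.2]))
```

Pairwise probabilities $P(n_i=k,\,n_j=l)$ for the joint entropy can be built the same way with a two-dimensional table, at a correspondingly higher cost.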

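2.5.4. Statistical Characteristics of $\hat{H}$

Under $\mathcal{H}_1$, the PSER entropy is

$$H(m,N)=E\left[\hat{H}(m,N)\right].$$

Theorem 4.

Under $\mathcal{H}_1$, the PSER entropy is equal to the sum of all the interval entropies, i.e.,

$$H(m,N)=\sum_{i=1}^{m}H_i.$$

Proof.

By Lemma 6, $H(m,N)=\sum_{i=1}^{m}\sum_{\mathbf{n}}P(\mathbf{n})\,h_i=\sum_{i=1}^{m}E[h_i]=\sum_{i=1}^{m}H_i$.

☐

The mean-square value of $\hat{H}(m,N)$ is

$$E\left[\hat{H}^2(m,N)\right]=\sum_{i=1}^{m}\sum_{j=1}^{m}H_{ij}.$$

The variance of $\hat{H}(m,N)$ is

$$\sigma^2(m,N)=\sum_{i=1}^{m}\sum_{j=1}^{m}H_{ij}-\left(\sum_{i=1}^{m}H_i\right)^2.$$

For convenience of description, $H(m,N)$ and $\sigma^2(m,N)$ under $\mathcal{H}_1$ are denoted by $\mu_1$ and $\sigma_1^2$, respectively.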

2.5.5. Computational Complexity

Under $\mathcal{H}_1$, many statistics take much longer to calculate than under $\mathcal{H}_0$. The calculation time is mainly spent on three tasks: calculating the interval probabilities, calculating factorials, and traversing all cases.

- (1) Calculation of the interval probabilities

As seen from Equation (32), the interval probability is expressed by an infinite double series under $\mathcal{H}_1$, and its value can only be obtained by numerical calculation. Because a large number of terms must be evaluated, this takes a significant amount of calculation time.

- (2) Factorial calculation

The factorial calculation has the same time complexity as under $\mathcal{H}_0$.

- (3) The traversal of all cases

There are two methods to traverse all cases: the traversal of one selected interval and the traversal of two selected intervals. The corresponding expressions for these two methods are and , respectively.

Calculating is a process of choosing of lines, and its time complexity is . Similar to the analysis under , the computational complexity of is . Therefore, the time complexity of calculating the interval entropy is .

The computational complexity of is . Therefore the time complexity of calculating the interval joint entropy is . The time complexity of calculating the PSER entropy is . The time complexity of calculating the variance of the sample entropy is .

3. Signal Detector Based on the PSER Entropy

In this section, a signal detection method based on PSER entropy is derived under the constant false alarm rate (CFAR) strategy, using the PSER entropy and sample entropy variance derived in Section 2. Because it operates on the full power spectrum, this method is called the full power spectrum subband energy ratio entropy detector (FPSED).

3.1. Principle

Signal detection based on PSER entropy uses the sample entropy as the test statistic to decide whether a signal is present anywhere in the spectrum. The sample entropy is

$$\hat{H}(m,N)=-\sum_{i=1}^{m}f_i\log f_i.$$

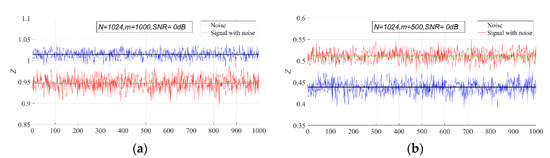

The PSER entropy of GWN clearly differs from that of the mixed signal. Usually, the PSER entropy of the mixed signal is lower than that of GWN, but it can sometimes be higher, as Figure 4 shows.

Figure 4.

Comparison of the PSER entropy between GWN and the mixed signal (N = 1024, signal–noise ratio (SNR) = 0 dB). (a) m = 1000; (b) m = 500. The blue broken line is the sample entropy of GWN, and the red broken line is the sample entropy of the Ricker signal. The black line is the PSER entropy of GWN, and the blue line is the PSER entropy of the Ricker signal.

In Figure 4a, the PSER entropy of GWN is higher than that of the noisy Ricker signal. However, the PSER entropy of GWN is lower than that of the noisy Ricker signal in Figure 4b. Therefore, when setting the detection threshold of the PSER entropy detector, the relationship between the PSER entropy of the signal and that of noise should be considered.

3.1.1. The PSER Entropy of a Signal Less Than That of GWN

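When the PSER entropy of the signal is less than that of GWN, let $\lambda$ be the threshold against which the test statistic is compared. If the test statistic is less than $\lambda$, the signal is deemed present; otherwise, it is deemed absent, i.e.,

$$\hat{H}(m,N)\ \underset{\mathcal{H}_0}{\overset{\mathcal{H}_1}{\lessgtr}}\ \lambda.$$

In this paper, the distribution of $\hat{H}(m,N)$ is regarded as Gaussian, so

$$\hat{H}(m,N)\sim\begin{cases}\mathcal{N}\left(\mu_0,\sigma_0^2\right), & \mathcal{H}_0\\ \mathcal{N}\left(\mu_1,\sigma_1^2\right), & \mathcal{H}_1\end{cases}$$

where $\mu_0$ and $\sigma_0^2$ ($\mu_1$ and $\sigma_1^2$) are the PSER entropy and sample entropy variance under $\mathcal{H}_0$ ($\mathcal{H}_1$). Under the CFAR strategy, the false alarm probability can be expressed as

$$P_f=P\left(\hat{H}<\lambda\mid\mathcal{H}_0\right)=1-Q\left(\frac{\lambda-\mu_0}{\sigma_0}\right), \tag{47}$$

where $Q(x)=\frac{1}{\sqrt{2\pi}}\int_{x}^{\infty}e^{-t^2/2}\,dt$ is the tail probability of the standard Gaussian distribution. The threshold $\lambda$ can be derived from Equation (47):

$$\lambda=\mu_0-\sigma_0Q^{-1}(P_f). \tag{48}$$

The detection probability can be expressed as

$$P_d=P\left(\hat{H}<\lambda\mid\mathcal{H}_1\right)=1-Q\left(\frac{\lambda-\mu_1}{\sigma_1}\right). \tag{49}$$

Substituting Equation (48) into Equation (49), $P_d$ can then be evaluated as

$$P_d=Q\left(\frac{\mu_1-\mu_0+\sigma_0Q^{-1}(P_f)}{\sigma_1}\right). \tag{50}$$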
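Equations (47) to (50) map directly onto the standard normal distribution in Python's `statistics` module, using $\Phi^{-1}(P_f)=-Q^{-1}(P_f)$, where $\Phi$ is the standard Gaussian CDF. A minimal sketch, with hypothetical function names and made-up statistics in the usage line:

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard Gaussian: mean 0, standard deviation 1


def threshold_lower(mu0, sigma0, pf):
    """Threshold for 'decide H1 when H_hat < lam' at false alarm probability pf.

    Pf = Phi((lam - mu0) / sigma0)  =>  lam = mu0 + sigma0 * Phi^{-1}(Pf),
    which equals mu0 - sigma0 * Q^{-1}(Pf).
    """
    return mu0 + sigma0 * STD_NORMAL.inv_cdf(pf)


def pd_lower(mu0, sigma0, mu1, sigma1, pf):
    """Detection probability Pd = Phi((lam - mu1) / sigma1) for the same rule."""
    lam = threshold_lower(mu0, sigma0, pf)
    return STD_NORMAL.cdf((lam - mu1) / sigma1)


# Hypothetical statistics, for illustration only (not values from the experiments).
print(threshold_lower(5.0, 0.02, 0.05), pd_lower(5.0, 0.02, 4.95, 0.03, 0.05))
```

When $\mu_1=\mu_0$ and $\sigma_1=\sigma_0$ the detection probability collapses to $P_f$, which is a quick sanity check on the sign conventions.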

3.1.2. The PSER Entropy of a Signal Larger Than That of GWN

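When the PSER entropy of a signal is larger than that of GWN, let the threshold be $\lambda$. If the test statistic is larger than $\lambda$, the signal is deemed present; otherwise, it is deemed absent, i.e.,

$$\hat{H}(m,N)\ \underset{\mathcal{H}_0}{\overset{\mathcal{H}_1}{\gtrless}}\ \lambda.$$

The false alarm probability is

$$P_f=P\left(\hat{H}>\lambda\mid\mathcal{H}_0\right)=Q\left(\frac{\lambda-\mu_0}{\sigma_0}\right), \tag{52}$$

where $Q(\cdot)$ is the standard Gaussian tail probability, and

$$\lambda=\mu_0+\sigma_0Q^{-1}(P_f). \tag{53}$$

The detection probability is

$$P_d=P\left(\hat{H}>\lambda\mid\mathcal{H}_1\right)=Q\left(\frac{\lambda-\mu_1}{\sigma_1}\right). \tag{54}$$

Substituting Equation (53) into Equation (54), $P_d$ can then be evaluated as

$$P_d=Q\left(\frac{\mu_0-\mu_1+\sigma_0Q^{-1}(P_f)}{\sigma_1}\right). \tag{55}$$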
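The upper-threshold case of Equations (52) to (55) follows the same pattern. This sketch writes $Q^{-1}(p)=\Phi^{-1}(1-p)$ explicitly; the function names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def q_inv(p):
    """Inverse Gaussian tail function Q^{-1}(p) = Phi^{-1}(1 - p)."""
    return STD_NORMAL.inv_cdf(1.0 - p)


def threshold_upper(mu0, sigma0, pf):
    """Threshold for 'decide H1 when H_hat > lam': lam = mu0 + sigma0 * Q^{-1}(Pf)."""
    return mu0 + sigma0 * q_inv(pf)


def pd_upper(mu0, sigma0, mu1, sigma1, pf):
    """Pd = Q((lam - mu1) / sigma1) = 1 - Phi((lam - mu1) / sigma1)."""
    lam = threshold_upper(mu0, sigma0, pf)
    return 1.0 - STD_NORMAL.cdf((lam - mu1) / sigma1)


print(threshold_upper(5.0, 0.02, 0.05), pd_upper(5.0, 0.02, 5.05, 0.03, 0.05))
```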

3.2. Other Detection Methods

In the following experiments, the PSER entropy detector is compared under the same conditions with two commonly used methods: full spectrum energy detection (FSED) [29] and the matched-filtering detector (MFD). This section introduces these two detectors.

3.2.1. Full Spectrum Energy Detection

The performance of FSED is exactly the same as that of classical energy detection (ED). The total spectral energy, i.e., the sum of all spectral lines in the power spectrum, is used as the test statistic:

$$T=\sum_{k}P(k).$$

Let be the SNR, that is, . When the detection length is large enough, obeys a Gaussian distribution:

Let the threshold be . The false alarm probability and detection probability can be expressed as follows:

where .

3.2.2. Matched Filter Detection

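The main advantage of matched filtering is that it needs only a short observation time to achieve a given false alarm probability or detection probability. However, it requires perfect knowledge of the signal. In the time domain, the detection statistic of matched filtering is

$$T=\sum_{n=0}^{N-1}x(n)\,s(n),$$

where $s(n)$ is the transmitted signal and $x(n)$ is the signal to be detected. Let $E=\sum_{n=0}^{N-1}s^2(n)$, i.e., the total energy of the signal, and let $\lambda$ be the threshold. The false alarm probability and detection probability can be expressed as

$$P_f=Q\left(\frac{\lambda}{\sqrt{\sigma^2E}}\right),\qquad P_d=Q\left(\frac{\lambda-E}{\sqrt{\sigma^2E}}\right),$$

and $P_d=Q\left(Q^{-1}(P_f)-\sqrt{E/\sigma^2}\right)$.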
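The matched-filter expressions can be evaluated the same way. This sketch assumes the statistic $T=\sum_n x(n)s(n)$ under the GWN model, so that $T\sim\mathcal{N}(0,\sigma^2E)$ under $\mathcal{H}_0$ and $T\sim\mathcal{N}(E,\sigma^2E)$ under $\mathcal{H}_1$; the function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def q_func(x):
    """Gaussian tail probability Q(x) = 1 - Phi(x)."""
    return 1.0 - STD_NORMAL.cdf(x)


def q_inv(p):
    """Inverse tail function Q^{-1}(p)."""
    return STD_NORMAL.inv_cdf(1.0 - p)


def mf_pd(pf, energy, noise_var):
    """Matched-filter detection probability Pd = Q(Q^{-1}(Pf) - sqrt(E / sigma^2))."""
    return q_func(q_inv(pf) - math.sqrt(energy / noise_var))


def mf_pd_from_threshold(lam, energy, noise_var):
    """Pd = Q((lam - E) / sqrt(sigma^2 E)) for a given threshold lam."""
    return q_func((lam - energy) / math.sqrt(noise_var * energy))


# Detection probability at Pf = 0.05 for a few energy-to-noise ratios E / sigma^2.
for ratio in (1.0, 4.0, 9.0):
    print(ratio, round(mf_pd(0.05, ratio, 1.0), 4))
```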

4. Experiments

In this section, we verify and compare the detection performance of the FPSED, FSED, and MFD methods discussed in Section 3 through Monte Carlo simulations. The primary signal was a binary phase shift keying (BPSK) modulated signal with symbol rate , carrier frequency 1000 Hz, and sampling frequency Hz.

We performed all Monte Carlo simulations for at least independent trials. We set $P_f$ to 0.05. We used the mean-square error (MSE) to measure the deviation between the theoretical values and the actual statistical results. All programs were run in MATLAB on a laptop with a Core i5 CPU and 16 GB RAM.

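Since the PSER entropy $\mu_1$ and the sample entropy variances $\sigma_0^2$ and $\sigma_1^2$ cannot be calculated in practice for the parameters used here, a large amount of simulation data was generated to obtain the estimates $\hat{\mu}_1$, $\hat{\sigma}_0^2$, and $\hat{\sigma}_1^2$, which replace $\mu_1$, $\sigma_0^2$, and $\sigma_1^2$, respectively.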
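The procedure of replacing uncomputable statistics with simulated estimates can be sketched end to end. This is a toy version under stated assumptions: unit-variance white Gaussian noise with a hypothetical sinusoid at one bin center instead of the BPSK signal, a naive DFT, small $N$ and $m$ for speed, all statistics (including $\mu_0$) estimated from simulation, and the Section 3.1.1 rule with the Gaussian threshold of Equation (48). Function names are hypothetical.

```python
import cmath
import math
import random
from statistics import NormalDist, mean, stdev


def power_spectrum(x, n_bins):
    """Single-sided power spectrum |X(k)|^2, k = 0..n_bins-1, via a naive O(N^2) DFT."""
    size = len(x)
    twiddle = [cmath.exp(-2j * math.pi * t / size) for t in range(size)]
    return [abs(sum(x[t] * twiddle[(k * t) % size] for t in range(size))) ** 2
            for k in range(n_bins)]


def sample_entropy(power, m):
    """Sample entropy (nats) of the d = 1 PSER sequence with m equal intervals on [0, 1]."""
    total = sum(power)
    n = len(power)
    counts = [0] * m
    for p in power:
        counts[min(int(p / total * m), m - 1)] += 1
    return -sum(c / n * math.log(c / n) for c in counts if c > 0)


def observe(rng, n_bins, m, amplitude):
    """One test statistic: unit-variance white Gaussian noise plus a hypothetical tone."""
    size = 2 * n_bins        # real samples, so bins 0..n_bins-1 form the single-sided spectrum
    tone_bin = n_bins // 4   # the tone sits exactly on a bin center
    x = [amplitude * math.cos(2 * math.pi * tone_bin * t / size) + rng.gauss(0.0, 1.0)
         for t in range(size)]
    return sample_entropy(power_spectrum(x, n_bins), m)


def run(n_bins=32, m=32, amplitude=1.0, pf=0.05, trials=200, seed=0):
    """Return (threshold, empirical detection rate) for 'decide H1 if H_hat < lam'."""
    rng = random.Random(seed)
    h0 = [observe(rng, n_bins, m, 0.0) for _ in range(trials)]
    mu0_hat, sigma0_hat = mean(h0), stdev(h0)              # simulated estimates of mu0, sigma0
    lam = mu0_hat + sigma0_hat * NormalDist().inv_cdf(pf)  # Equation (48) with estimates
    h1 = [observe(rng, n_bins, m, amplitude) for _ in range(trials)]
    return lam, sum(h < lam for h in h1) / trials
```

For example, `run(amplitude=3.0, trials=100)` should give a detection rate close to 1 for this strong tone, while `run(amplitude=0.0, trials=100)` estimates the realized false alarm rate, which may deviate from $P_f$ because the sample entropy is only approximately Gaussian at such small $N$.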

4.1. Experiments under $\mathcal{H}_0$

This section verifies whether the calculated value of each statistic is correct by comparing the statistical results with the theoretical results. The effects of noise intensity, the number of spectral lines, and the number of intervals on the interval probability, interval entropy, PSER entropy, and variance of the sample entropy are analyzed.

4.1.1. Influence of Noise

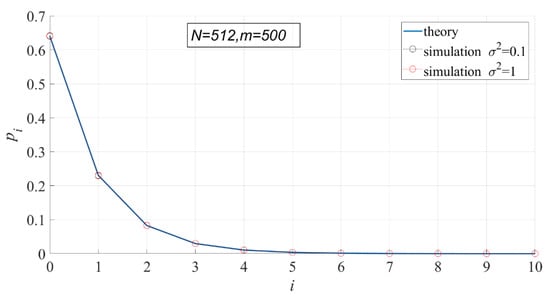

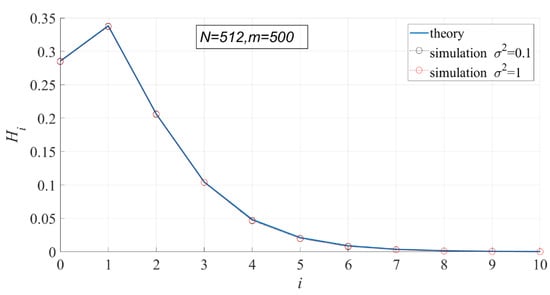

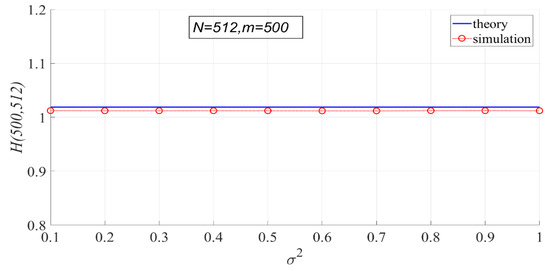

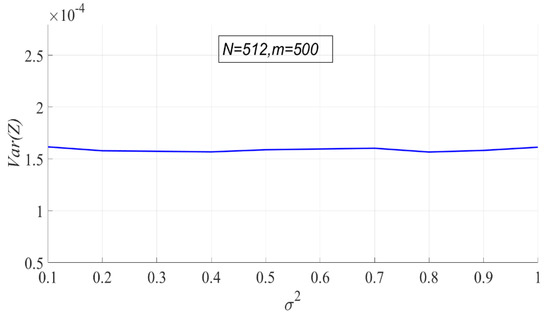

According to the probability density function of PSER, the PSER is independent of the noise intensity. Therefore, noise intensity has no effect on any statistic under $\mathcal{H}_0$. In the following experiment, the noise variance $\sigma^2$ takes 10 values ranging from 0.1 to 1 in steps of 0.1, , and .

In Figure 5 and Figure 6, the theoretical and actual values of the interval probability and interval entropy under different noise intensities are compared. The results show that the theoretical values agree well with the statistical values and that noise intensity has no effect on the interval probability. In the first few intervals ($i<7$), the interval probabilities are large, so the interval entropies are also large and contribute most to the total entropy.

Figure 5.

Comparison of the theoretical interval probability and the actual interval probability.

Figure 6.

Comparison of the theoretical interval entropy and the actual interval entropy.

In Figure 7, the theoretical values of PSER entropies are compared with the actual values. It can be seen that the theoretical values are basically consistent with the actual values, but the actual values are slightly smaller than the theoretical values. The actual values do not change with noise intensity, indicating that noise intensity has no effect on PSER entropy.

Figure 7.

Comparison of theoretical PSER entropy and actual PSER entropy.

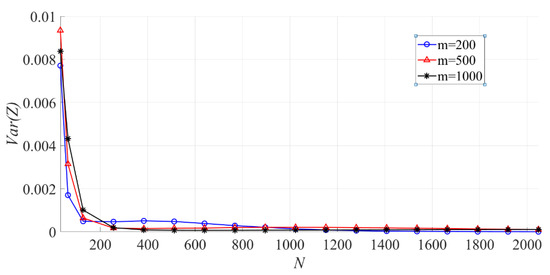

Since the theoretical variance of the sample entropy cannot be calculated for these parameters, only the variation of the actual variance of the sample entropy with noise intensity is shown in Figure 8. The variance of the sample entropy does not change with the noise intensity.

Figure 8.

The actual variance of sample entropy.

The above experiments show that the actual statistical results are consistent with the theoretical calculations, indicating that the methods for calculating the interval probability, interval entropy, and PSER entropy determined in this paper are correct. The sample entropy variance, whose theoretical value could not be computed here, is confirmed to be independent of the noise intensity.

4.1.2. Influence of $N$

In Figure 9, the effect of $N$ on the interval probability is shown. When $m$ is fixed, the larger $N$ is, the larger $p_1$ is and the smaller the interval probabilities of the other intervals. This is because the larger $N$ is, the smaller the energy ratio of each line to the entire power spectrum.

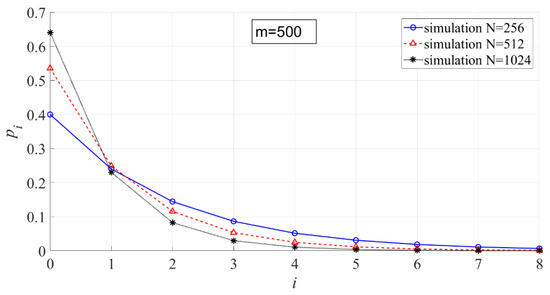

Figure 9.

The effect of $N$ on the interval probability ($m$ = 500, $N$ = 256, 512, 1024).

In Figure 10, the effect of $N$ on the interval entropy is shown. The larger $N$ is, the smaller $H_i$ becomes.

Figure 10.

The effect of $N$ on the interval entropy ($m$ = 500, $N$ = 256, 512, 1024).

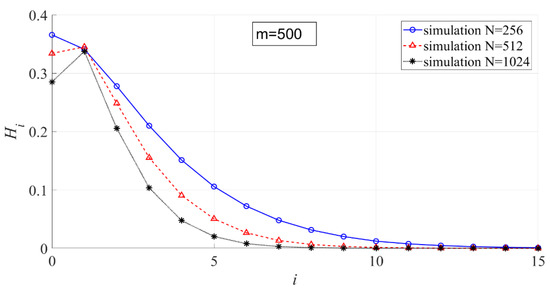

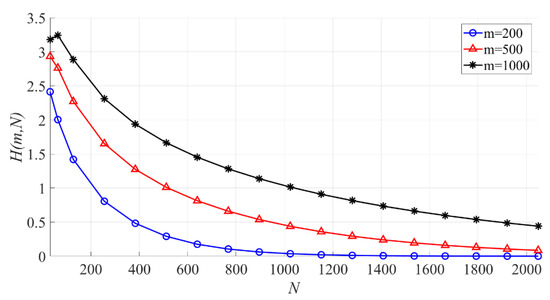

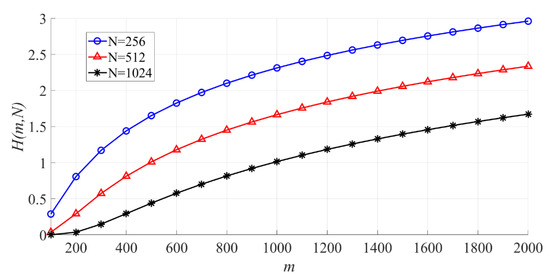

In Figure 11, the effect of $N$ on the PSER entropy is shown. When $m$ is fixed, the larger $N$ is, the smaller $\mu_0$ becomes. For the same $N$, the larger $m$ is, the larger the PSER entropy.

Figure 11.

The effect of $N$ on the PSER entropy ($m$ = 200, 500, 1000).

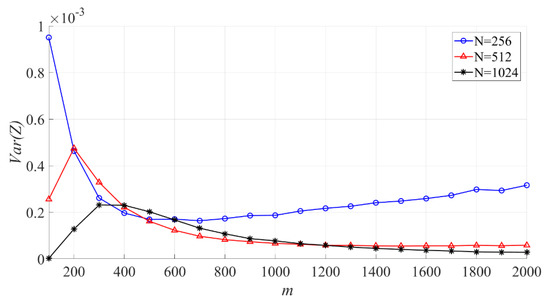

In Figure 12, the effect of $N$ on the variance of the sample entropy is shown. When $m$ is fixed, the larger $N$ is, the smaller $\sigma_0^2$ becomes.

Figure 12.

The effect of $N$ on the variance of the sample entropy ($m$ = 200, 500, 1000).

4.1.3. Influence of $m$

In Figure 13, the effect of $m$ on the PSER entropy is shown. When $N$ is fixed, the larger $m$ is, the larger $\mu_0$ becomes.

Figure 13.

The effect of $m$ on the PSER entropy ($N$ = 256, 512, 1024).

In Figure 14, the effect of $m$ on the variance of the sample entropy is shown. When $N$ is fixed, as $m$ increases, the variance of the sample entropy first increases, then decreases, and then increases slowly.

Figure 14.

The effect of $m$ on the variance of the sample entropy ($N$ = 256, 512, 1024).

4.1.4. The Parameters for the Next Experiment

After theoretical calculations and experimental statistical analysis, the PSER entropy and sample entropy variance used in the following experiments were obtained, as shown in Table 1.

Table 1.

The parameters under $\mathcal{H}_0$.

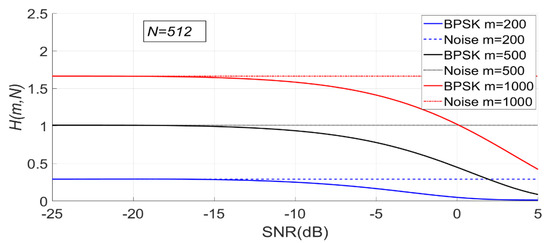

4.2. Experiments under $\mathcal{H}_1$

When $N$ is fixed, the change in the PSER entropy of the noisy BPSK signal with SNR under $\mathcal{H}_1$ is shown in Figure 15. The PSER entropy of the BPSK signal decreases gradually as the SNR increases. When the SNR is less than −15 dB, the PSER entropy of noise and that of the BPSK signal are almost the same; therefore, it is practically impossible to distinguish between noise and the BPSK signal.

Figure 15.

The change of PSER entropy of the binary phase shift keying (BPSK) signal with noise when $N$ is fixed ($m$ = 200, 500, 1000, $N$ = 512).

Since the PSER entropy of the BPSK signal is always less than that of noise, the threshold of Section 3.1.1 (Equation (48)) should be used in BPSK signal detection.

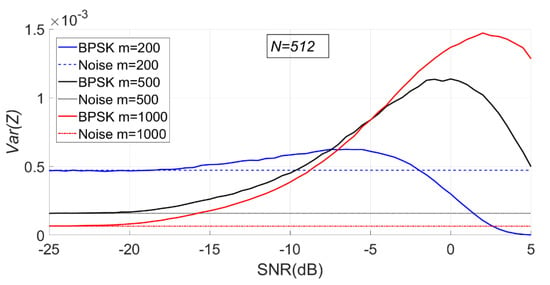

As can be seen from Figure 16, with the increase of SNR, the sample entropy variance of the BPSK signal first increases and then gradually decreases.

Figure 16.

The change of sample entropy variance of the BPSK signal with noise when $N$ is fixed ($m$ = 200, 500, 1000, $N$ = 512).

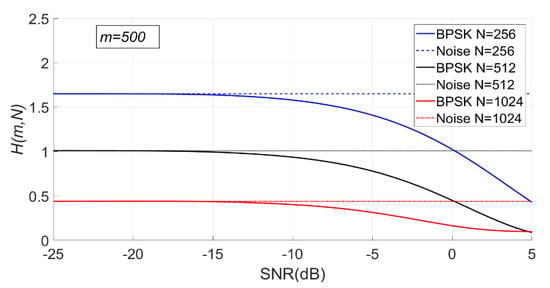

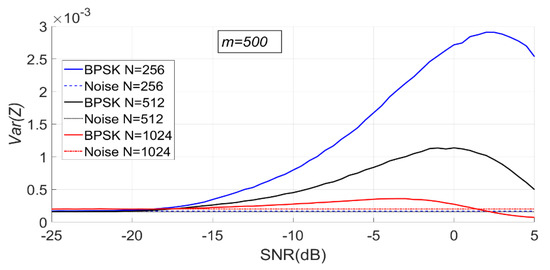

When $m$ is fixed, the changes in the PSER entropy and sample entropy variance of the noisy BPSK signal with SNR under $\mathcal{H}_1$ are shown in Figure 17 and Figure 18. The PSER entropy of the BPSK signal decreases gradually as the SNR increases.

Figure 17.

The change of PSER entropy of the BPSK signal with noise when $m$ is fixed ($m$ = 500, $N$ = 256, 512, 1024).

Figure 18.

The change of sample entropy variance of the BPSK signal with noise when $m$ is fixed ($m$ = 500, $N$ = 256, 512, 1024).

As can be seen from Figure 18, with the increase of SNR, the sample entropy variance of the BPSK signal first increases and then gradually decreases.

4.3. Comparison of Detection Performance

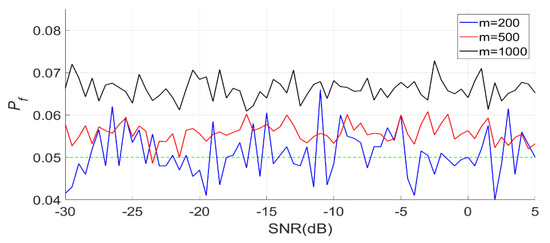

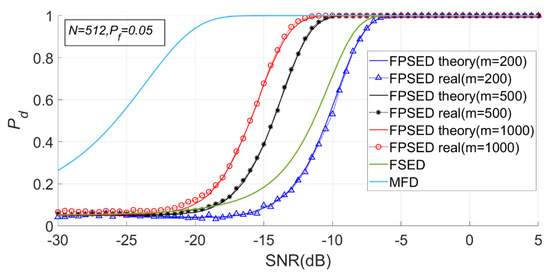

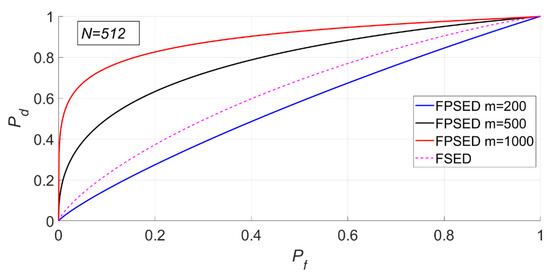

With $N$ = 512 and $m$ = 200, 500, and 1000, the detection results for the BPSK signal are shown in Figure 19, Figure 20, and Figure 21.

Figure 19.

Actual false alarm probabilities of the PSER entropy detector ($m$ = 200, 500, 1000, $N$ = 512).

Figure 20.

Detection probabilities of full spectrum energy detection (FSED), matched-filtering detector (MFD), and PSER entropy detectors ($m$ = 200, 500, 1000, $N$ = 512).

Figure 21.

Receiver operating characteristic (ROC) curves of FSED and PSER entropy detectors ($m$ = 200, 500, 1000, $N$ = 512).

In Figure 19, the actual false alarm probabilities fluctuate slightly but do not change with the SNR, which is consistent with the constant false alarm property.

Figure 20 and Figure 21 show that the detection probability of the PSER entropy detector is clearly higher than that of the FSED method when $m$ is 1000. However, when $m$ is 200, its detection performance is lower than that of FSED. As expected, matched filtering performs best, because it uses perfect knowledge of the signal.

The MSEs of the actual and theoretical probabilities of these experiments are given in Table 2.

Table 2.

Mean-square errors (MSEs) between actual and theoretical probabilities ($N$ = 512).

The deviation between the actual and theoretical detection probabilities is very small, which indicates that the theoretical performance expressions of the PSER entropy detector are accurate.

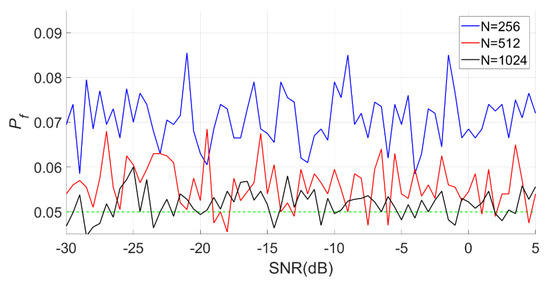

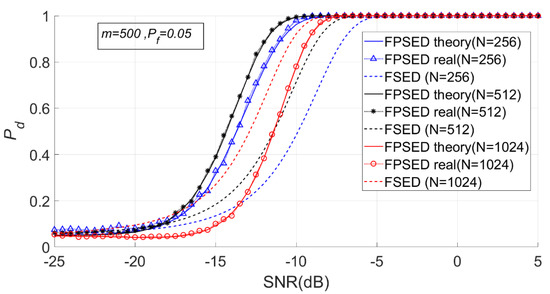

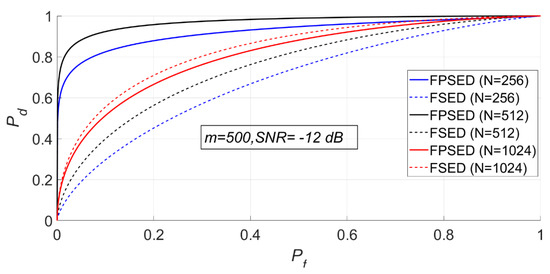

With $m$ = 500 and $N$ = 256, 512, and 1024, the detection results for the BPSK signal are shown in Figure 22, Figure 23, and Figure 24.

Figure 22.

Actual false alarm probabilities of the PSER entropy detectors ($m$ = 500, $N$ = 256, 512, 1024).

Figure 23.

Detection probabilities of FSED and PSER entropy detectors ($m$ = 500, $N$ = 256, 512, 1024).

Figure 24.

ROC of FSED and PSER entropy detectors ($m$ = 500, $N$ = 256, 512, 1024).

Figure 23 and Figure 24 show that, when $m$ is fixed, a larger $N$ does not necessarily imply better detection performance: the detection probability when $N$ is 1024 is lower than when $N$ is 512. In contrast, the detection performance of the full spectrum energy detection method improves as $N$ increases.

The MSEs between the actual and theoretical probabilities of these experiments are given in Table 3. The deviations between the actual and theoretical detection probabilities are very small with 512 or 1024 power spectrum lines, but larger with 256 lines.

Table 3.

MSEs between actual and theoretical probabilities (m = 500).

5. Discussion

5.1. Theoretical Calculation of Statistics

In Section 2, we analyzed the computational time complexity of each statistic. When m and the number of power spectrum lines are large, the theoretical values of some statistics, such as under , , , , and under , cannot be calculated in practice. This limits further analysis of the detection performance of the PSER entropy detector.
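One way to see why exact evaluation breaks down is to count the outcomes a multinomial calculation must sum over. The paper's own formulas are not reproduced here. Purely for illustration, we assume a simpler model: n values fall into m intervals with probabilities p, the interval counts are multinomial, and the "sample entropy" is the entropy of the observed frequencies. Exact moments then require a sum over all C(n + m − 1, m − 1) count vectors. That number is tiny for small cases but astronomically large at sizes like n = 512, m = 500. All function names are hypothetical.

```python
import itertools
import math


def num_outcomes(n_samples, m_intervals):
    """Number of count vectors (k_1, ..., k_m) with k_i >= 0 and sum n_samples."""
    return math.comb(n_samples + m_intervals - 1, m_intervals - 1)


def compositions(n_samples, m_intervals):
    """Yield every count vector of length m_intervals summing to n_samples
    (stars and bars: choose the positions of m_intervals - 1 separators)."""
    slots = n_samples + m_intervals - 1
    for bars in itertools.combinations(range(slots), m_intervals - 1):
        counts, prev = [], -1
        for b in bars:
            counts.append(b - prev - 1)
            prev = b
        counts.append(slots - prev - 1)
        yield counts


def multinomial_pmf(counts, probs):
    """Multinomial probability of `counts`; assumes every probability is > 0."""
    n = sum(counts)
    log_coef = math.lgamma(n + 1) - sum(math.lgamma(k + 1) for k in counts)
    log_prob = sum(k * math.log(p) for k, p in zip(counts, probs))
    return math.exp(log_coef + log_prob)


def plug_in_entropy(counts):
    """Entropy (nats) of the relative frequencies k_i / n."""
    n = sum(counts)
    return -sum((k / n) * math.log(k / n) for k in counts if k > 0)


def exact_entropy_moments(n_samples, probs):
    """Exact mean and variance of the plug-in entropy by full enumeration."""
    mean = second = 0.0
    for counts in compositions(n_samples, len(probs)):
        weight = multinomial_pmf(counts, probs)
        h = plug_in_entropy(counts)
        mean += weight * h
        second += weight * h * h
    return mean, second - mean * mean


print(num_outcomes(10, 4))  # prints 286: cheap to enumerate
print(exact_entropy_moments(10, [0.25] * 4))
print(len(str(num_outcomes(512, 500))), "decimal digits")  # far too many to enumerate
```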

There are two possible ways to address this problem: reducing the computational complexity or finding approximate solutions. Which approach is feasible requires further study.
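As an example of the second route, Monte Carlo sampling approximates the moments of such an entropy without enumerating outcomes. Its cost grows only linearly with the number of trials. This is a generic technique, not the authors' method. It reuses the illustrative multinomial model assumed above: interval counts of n values over m intervals, with the entropy taken from the observed frequencies. The uniform interval probabilities in the usage line are a placeholder. `mc_entropy_moments` is a hypothetical name.

```python
import numpy as np


def mc_entropy_moments(n_samples, probs, num_trials=2000, seed=0):
    """Monte Carlo estimate of the mean and variance of the plug-in entropy
    of multinomial interval counts (an approximation, not an exact formula)."""
    rng = np.random.default_rng(seed)
    counts = rng.multinomial(n_samples, probs, size=num_trials)  # (trials, m)
    freqs = counts / n_samples
    # Empty intervals contribute 0 to the entropy (0 * log 0 := 0).
    with np.errstate(divide="ignore", invalid="ignore"):
        terms = np.where(freqs > 0, freqs * np.log(freqs), 0.0)
    entropy = -terms.sum(axis=1)
    return float(entropy.mean()), float(entropy.var(ddof=1))


# Sizes of the same order as in the experiments (500 intervals, 512 values);
# uniform interval probabilities are a placeholder assumption.
mean, var = mc_entropy_moments(512, np.full(500, 1.0 / 500))
print(f"mean = {mean:.4f}, variance = {var:.2e}")
```

The estimation error of the mean shrinks roughly as one over the square root of `num_trials`. This trades exactness for tractability at large m and n.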

5.2. Experience in Selecting Parameters

The detection probability of PSER entropy detection depends on the number of intervals, the number of power spectrum lines, and the SNR of the spectrum lines. Because the mathematical expressions of many statistics are very complex, the influence of these three factors on the detection probability cannot yet be analyzed accurately. Based on a large number of experiments, we summarize the following empirical recommendations for setting the parameters.

- (1) m cannot be too small. As Figure 20 shows, if m is too small, the detection performance of the PSER entropy detector falls below that of the energy detector. We suggest that .

- (2) The number of power spectrum lines should be close to m. As Figure 23 shows, more power spectrum lines do not necessarily give better detection performance; extensive experiments show that the detection probability is good when the number of power spectrum lines is close to m.

- (3) When is fixed, can be adjusted appropriately through experiments.

5.3. Advantages of the PSER Entropy Detector

PSER entropy detection requires neither an advance estimate of the noise intensity nor prior information about the signal to be detected. Therefore, the PSER entropy detector is a typical blind signal detection method.
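The reason no noise estimate is needed can be seen from the ratio structure. Each power spectrum line is divided by the total power, so multiplying the received samples by any constant leaves every ratio, and hence any entropy computed from them, unchanged. The sketch uses a simplified stand-in: the entropy of the periodogram normalized to sum to one, that is, single-line energy ratios. It is not the authors' interval-based PSER entropy, and `normalized_spectrum_entropy` is a hypothetical name.

```python
import numpy as np


def normalized_spectrum_entropy(x):
    """Shannon entropy (nats) of the periodogram normalized to sum to one.
    A stand-in statistic: each line's share of the total power."""
    power = np.abs(np.fft.rfft(x)) ** 2
    ratio = power / power.sum()
    ratio = ratio[ratio > 0]
    return float(-np.sum(ratio * np.log(ratio)))


rng = np.random.default_rng(0)
noise = rng.standard_normal(1024)
h_unit = normalized_spectrum_entropy(noise)
h_scaled = normalized_spectrum_entropy(25.0 * noise)  # noise variance x 625
n = np.arange(1024)
h_tone = normalized_spectrum_entropy(noise + 5.0 * np.cos(2 * np.pi * 0.1 * n))
print(h_unit, h_scaled, h_tone)
```

The first two values coincide to floating-point precision despite a 625-fold change in noise variance. Adding a strong narrowband component concentrates the power and lowers the entropy. A threshold set under noise alone therefore remains valid whatever the noise level.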

5.4. Further Research

The detection performance of the PSER entropy detector could be further improved by methods that raise the SNR of the signals. In future research, denoising methods can be used to improve the SNR, Welch's method or autoregressive (AR) spectral estimation can be used to improve the quality of power spectrum estimation, and multi-channel cooperative detection can be used to improve detection accuracy.
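Welch's method averages windowed, overlapping periodograms. It trades frequency resolution for a lower-variance power spectrum estimate. The sketch below is a minimal NumPy version under stated assumptions: a Hann window, 50% overlap, and no detrending. `welch_psd` is a hypothetical name. For real use, `scipy.signal.welch` provides a complete implementation.

```python
import numpy as np


def welch_psd(x, segment_len=256, overlap=0.5):
    """Average of Hann-windowed periodograms over overlapping segments.
    Normalized so that unit-variance white noise gives a PSD of about 1 per bin."""
    x = np.asarray(x, dtype=float)
    if len(x) < segment_len:
        raise ValueError("signal shorter than one segment")
    step = int(segment_len * (1.0 - overlap))
    window = np.hanning(segment_len)
    scale = np.sum(window ** 2)
    starts = range(0, len(x) - segment_len + 1, step)
    spectra = [np.abs(np.fft.rfft(window * x[s:s + segment_len])) ** 2 / scale for s in starts]
    return np.mean(spectra, axis=0)


rng = np.random.default_rng(4)
x = rng.standard_normal(16384)
welch = welch_psd(x)
raw = np.abs(np.fft.rfft(x)) ** 2 / len(x)  # single full-length periodogram
print(welch[1:-1].std(), raw[1:-1].std())
```

For white noise both estimates have a mean near 1 per bin. The Welch estimate fluctuates far less from bin to bin, which is the property that would help a detector built on spectral-line ratios.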

6. Conclusions

In this paper, the statistical characteristics of PSER entropy are derived through rigorous mathematical analysis, and theoretical formulas are obtained for the PSER entropy and sample entropy variance of both pure noise and signals mixed with noise. Instead of the classical approach of approximating PSER entropy by differential entropy, the derivation uses interval division and the multinomial distribution to calculate PSER entropy. The results calculated with this method agree with the simulation results, which verifies the method. The method is suitable for both large and small numbers of intervals. A signal detector based on PSER entropy was constructed from these statistical characteristics. With a suitably chosen number of intervals, the PSER entropy detector clearly outperforms the classical energy detector. The method requires neither an estimate of the noise intensity nor any prior information about the signal to be detected, so it is a fully blind signal detector.

The PSER entropy detector can be used not only in spectrum sensing but also in vibration signal detection, seismic monitoring, and pipeline safety monitoring.

Author Contributions

Conceptualization, Y.H. and H.L.; formal analysis, Y.H. and H.L.; investigation, Y.H. and H.L.; methodology, Y.H. and H.L.; validation, Y.H., S.W. and H.L.; writing—original draft, H.L.; writing—review and editing, Y.H. and S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Science and Technology Nova Plan of Beijing City (No. Z201100006820122) and Fundamental Research Funds for the Central Universities (No. 2020RC14).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data is simulated.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gupta, M.S.; Kumar, K. Progression on spectrum sensing for cognitive radio networks: A survey, classification, challenges and future research issues. J. Netw. Comput. Appl. 2019, 143, 47–76.

- Arjoune, Y.; Kaabouch, N. A Comprehensive Survey on Spectrum Sensing in Cognitive Radio Networks: Recent Advances, New Challenges, and Future Research Directions. Sensors 2019, 19, 126.

- Kabeel, A.A.; Hussein, A.H.; Khalaf, A.A.M.; Hamed, H.F.A. A utilization of multiple antenna elements for matched filter based spectrum sensing performance enhancement in cognitive radio system. AEU Int. J. Electron. Commun. 2019, 107, 98–109.

- Chatziantoniou, E.; Allen, B.; Velisavljevic, V.; Karadimas, P.; Coon, J. Energy Detection Based Spectrum Sensing Over Two-Wave with Diffuse Power Fading Channels. IEEE Trans. Veh. Technol. 2017, 66, 868–874.

- Reyes, H.; Subramaniam, S.; Kaabouch, N.; Hu, W.C. A spectrum sensing technique based on autocorrelation and Euclidean distance and its comparison with energy detection for cognitive radio networks. Comput. Electr. Eng. 2016, 52, 319–327.

- Yucek, T.; Arslan, H. A survey of spectrum sensing algorithms for cognitive radio applications. IEEE Commun. Surv. Tutor. 2009, 11, 116–130.

- Gismalla, E.H.; Alsusa, E. Performance Analysis of the Periodogram-Based Energy Detector in Fading Channels. IEEE Trans. Signal Process. 2011, 59, 3712–3721.

- Dikmese, S.; Ilyas, Z.; Sofotasios, P.C.; Renfors, M.; Valkama, M. Sparse Frequency Domain Spectrum Sensing and Sharing Based on Cyclic Prefix Autocorrelation. IEEE J. Sel. Areas Commun. 2017, 35, 159–172.

- Li, H.; Hu, Y.; Wang, S. Signal Detection Based on Power-Spectrum Sub-Band Energy Ratio. Electronics 2021, 10, 64.

- Zhang, Y.L.; Zhang, Q.Y.; Melodia, T. A frequency-domain entropy-based detector for robust spectrum sensing in cognitive radio networks. IEEE Commun. Lett. 2010, 14, 533–535.

- Prieto, G.; Andrade, A.G.; Martinez, D.M.; Galaviz, G. On the Evaluation of an Entropy-Based Spectrum Sensing Strategy Applied to Cognitive Radio Networks. IEEE Access 2018, 6, 64828–64835.

- Nagaraj, S.V. Entropy-based spectrum sensing in cognitive radio. Signal Process. 2009, 89, 174–180.

- Gu, J.; Liu, W.; Jang, S.J.; Kim, J.M. Spectrum Sensing by Exploiting the Similarity of PDFs of Two Time-Adjacent Detected Data Sets with Cross Entropy. IEICE Trans. Commun. 2011, E94B, 3623–3626.

- Zhang, Y.; Zhang, Q.; Wu, S. Entropy-based robust spectrum sensing in cognitive radio. IET Commun. 2010, 4, 428.

- Nikonowicz, J.; Jessa, M. A novel method of blind signal detection using the distribution of the bin values of the power spectrum density and the moving average. Digit. Signal Process. 2017, 66, 18–28.

- Zhao, N. A Novel Two-Stage Entropy-Based Robust Cooperative Spectrum Sensing Scheme with Two-Bit Decision in Cognitive Radio. Wirel. Pers. Commun. 2013, 69, 1551–1565.

- Ejaz, W.; Shah, G.A.; Ul Hasan, N.; Kim, H.S. Optimal Entropy-Based Cooperative Spectrum Sensing for Maritime Cognitive Radio Networks. Entropy 2013, 15, 4993–5011.

- So, J. Entropy-based Spectrum Sensing for Cognitive Radio Networks in the Presence of an Unauthorized Signal. KSII Trans. Internet Inf. Syst. 2015, 9, 20–33.

- Ye, F.; Zhang, X.; Li, Y. Collaborative Spectrum Sensing Algorithm Based on Exponential Entropy in Cognitive Radio Networks. Symmetry 2016, 8, 112.

- Zhu, W.; Ma, J.; Faust, O. A Comparative Study of Different Entropies for Spectrum Sensing Techniques. Wirel. Pers. Commun. 2013, 69, 1719–1733.

- Islam, M.R.; Uddin, J.; Kim, J. Acoustic Emission Sensor Network Based Fault Diagnosis of Induction Motors Using a Gabor Filter and Multiclass Support Vector Machines. Ad Hoc Sens. Wirel. Netw. 2016, 34, 273–287.

- Akram, J.; Eaton, D.W. A review and appraisal of arrival-time picking methods for downhole microseismic data. Geophysics 2016, 81, 71–91.

- Legese Hailemariam, Z.; Lai, Y.C.; Chen, Y.H.; Wu, Y.H.; Chang, A. Social-Aware Peer Discovery for Energy Harvesting-Based Device-to-Device Communications. Sensors 2019, 19, 2304.

- Pei-Han, Q.; Zan, L.; Jiang-Bo, S.; Rui, G. A robust power spectrum split cancellation-based spectrum sensing method for cognitive radio systems. Chin. Phys. B 2014, 23, 537–547.

- Mei, F.; Hu, C.; Li, P.; Zhang, J. Study on main Frequency precursor characteristics of Acoustic Emission from Deep buried Dali Rock explosion. Arab. J. Geosci. 2019, 12, 645.

- Moddemeijer, R. On estimation of entropy and mutual information of continuous distributions. Signal Process. 1989, 16, 233–248.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2006; p. 737.

- Chung, K.L.; Sahlia, F.A. Elementary Probability Theory, with Stochastic Processes and an Introduction to Mathematical Finance; Springer: New York, NY, USA, 2004; p. 402.

- Sarker, M.B.I. Energy Detector Based Spectrum Sensing by Adaptive Threshold for Low SNR in CR Networks; Wireless and Optical Communication Conference: New York, NY, USA, 2015; pp. 118–122.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).