Improving the Retrieval of Arabic Web Search Results Using Enhanced k-Means Clustering Algorithm

Abstract

1. Introduction

- We compile a suitable dataset to test clustering algorithms. Each word in the dataset has multiple meanings; thus, it is hoped that clustering will collate all documents having a word with the same meaning.

- We investigate the enhanced k-means algorithm on Arabic IR, including the impact of the stemming process.

- We compare the performance of the enhanced k-means and the regular k-means algorithms on the dataset, and examine how the stemming process affects their performance measures. The significance of the results was confirmed using a paired t-test.

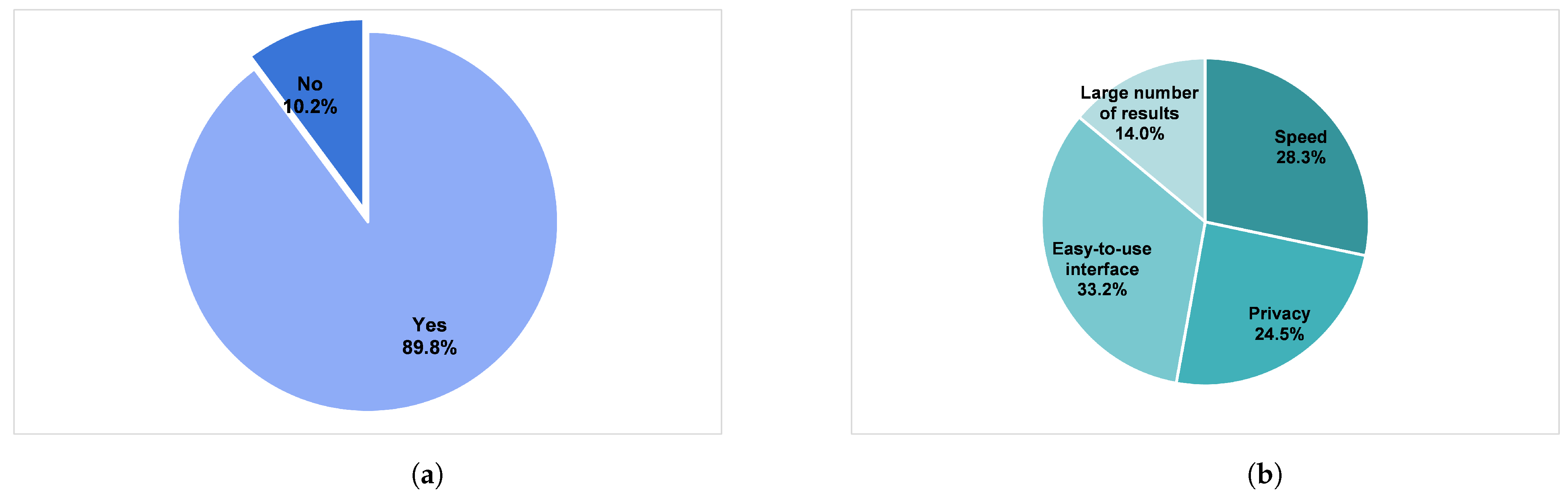

- We survey users on their preferences when performing a web search.

2. Background

2.1. Challenges in Arabic IR

- Ambiguity. In Arabic, words with similar spelling may have different pronunciations and meanings that can only be determined from the context and a proper knowledge of the grammar. When ambiguity persists, it is resolved through the diacritical markings. Unfortunately, in the modern writing system the diacritics are not written, on the erroneous assumption that the reader will disambiguate the meaning. Azmi and Almajed [8] have shown that this is far from the truth and that ambiguity is a serious problem in Modern Standard Arabic (MSA), since finding the proper semantic meaning of a given word is a non-trivial task. To give an idea, a single undiacritized word (عقد: Eqd) could mean any of the following: “necklace”, “knots”, “contract”, “decade”, “pact”, and “complicated”. One study showed that each undiacritized word has, on average, 11.6 different interpretations/meanings [9]. Farghaly and Shaalan [10] reported on a firm that has been working on machine translation for the last 50 years; it saw as many as 19.2 ambiguities per token in MSA, whereas the average for most languages was 2.3.

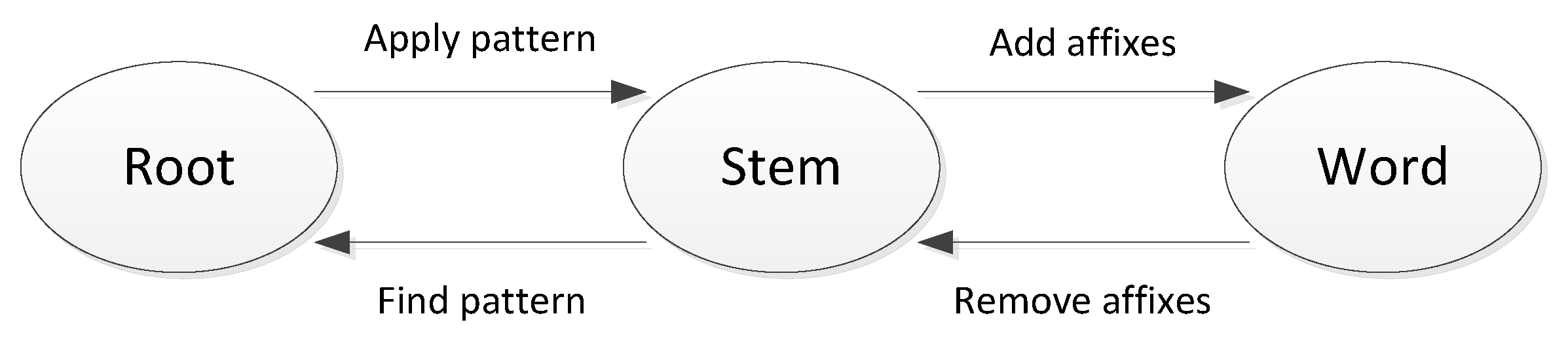

- Arabic morphology, complex yet systematic. Nouns and verbs are derived from roots by applying templates, thereby generating stems. Applying a template often involves introducing infixes, or deleting or replacing letters of the root. Multiple prefixes and/or suffixes may also be joined to a stem to form a word. Prefixes include prepositions, determiners, and coordinating conjunctions, while suffixes include attached pronouns, gender indicators, and number markers [7]. The most common Arabic roots have three consonants (triliteral roots), and the largest have five consonants (quinquiliteral roots). The consonant root can be viewed as a core around which a wide array of potential meanings is clustered, depending on which pattern is keyed into the root [11]. The number of lexical roots in Arabic has been estimated to range between 5000 and 6500 [11]. Figure 1 shows the general word construction system in Arabic. Table 1 provides an example of a complex Arabic word with different affixes, a simple illustration of how morphology may impact retrieval. One of the most common prefixes in Arabic is the definite article (ال: Al) “the”, which is always prefixed to another word and never stands alone. As a result, a large number of documents end up grouped together alphabetically under this prefix in the index file.

- Irregular (or broken) plurals, comparable to leaf → leaves in English. They are more common in Arabic: about 41% of Arabic plurals are broken, and they constitute about 10% of the text in large Arabic corpora [12].

- Out of vocabulary (OOV) words, such as named entities or technical terms. OOV words are a common source of error in any retrieval system. In [13], it was reported that half of the OOV words in Arabic are named entities. One study reported 15 different spellings for Condoleezza (former US Secretary of State), four of which were found on the CNN-Arabic website alone [12].
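
To make the morphology point concrete, the following minimal sketch strips a handful of common affixes from a surface word, so that forms such as (الذهب: Al*hb) and (ذهب: *hb) fall together. The affix lists, the minimum stem length, and the function name `light_stem` are illustrative assumptions; this is not the stemmer evaluated in this work.

```python
# Illustrative light stemmer: removes at most one prefix and one suffix.
# The affix lists below are a small hypothetical sample, not a complete inventory.
PREFIXES = ("وال", "ال", "و")          # "and the", "the", "and" (longest first)
SUFFIXES = ("هم", "ها", "ات", "ون", "ين")  # a few attached pronouns and plural markers
MIN_STEM = 3                            # never shrink a word below three letters


def light_stem(word: str) -> str:
    """Return the word with one leading and one trailing affix removed, if safe."""
    for prefix in PREFIXES:
        if word.startswith(prefix) and len(word) - len(prefix) >= MIN_STEM:
            word = word[len(prefix):]
            break
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM:
            word = word[:-len(suffix)]
            break
    return word


if __name__ == "__main__":
    for w in ("الذهب", "ولد", "وكاتبتهم"):
        print(w, "->", light_stem(w))
```

The length guard matters: in (ولد: wld) the leading letter belongs to the root, and stripping it as a conjunction would destroy the word. A root-based stemmer would go further and undo the template as well, which this sketch does not attempt.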

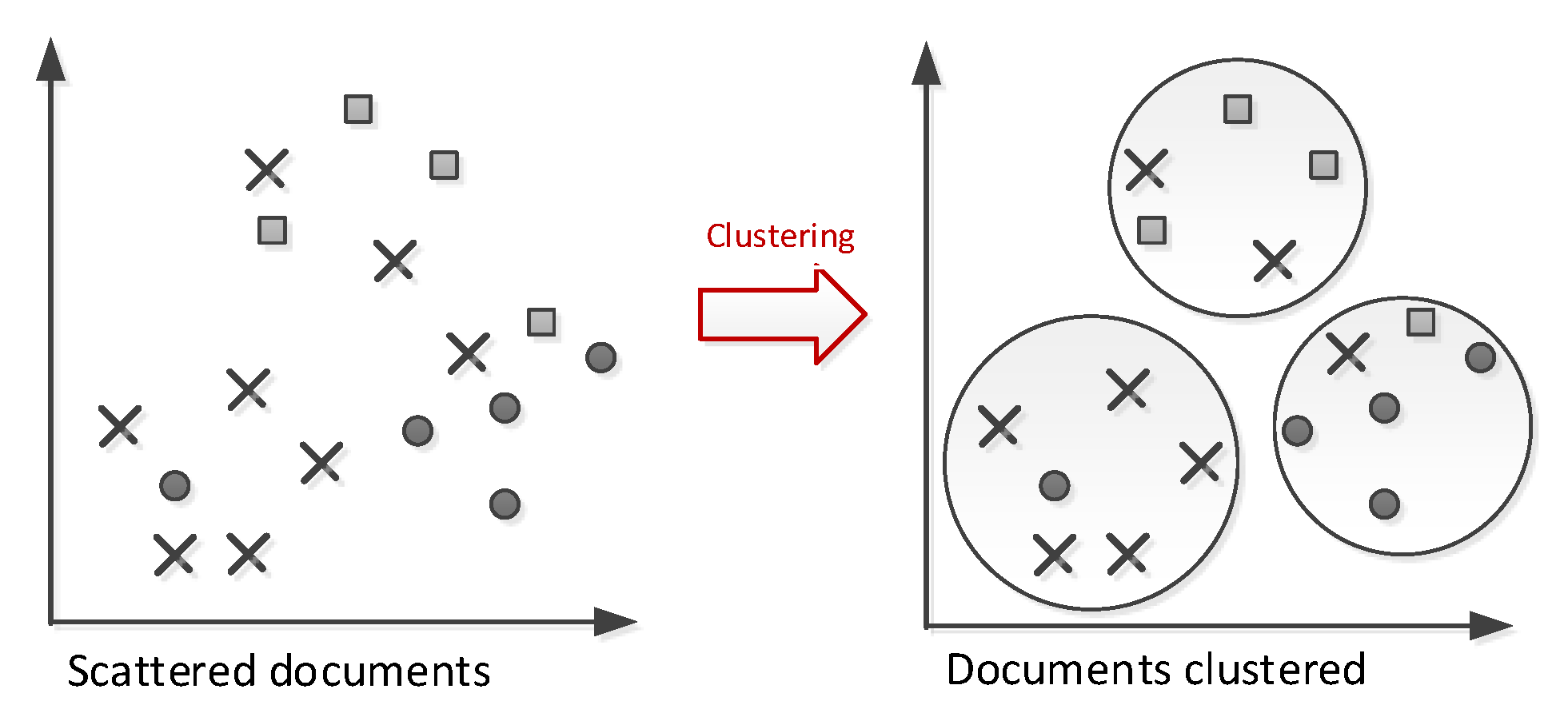

2.2. Clustering

3. Related Work

3.1. Non-Arabic Text Clustering

3.2. Arabic Text Clustering

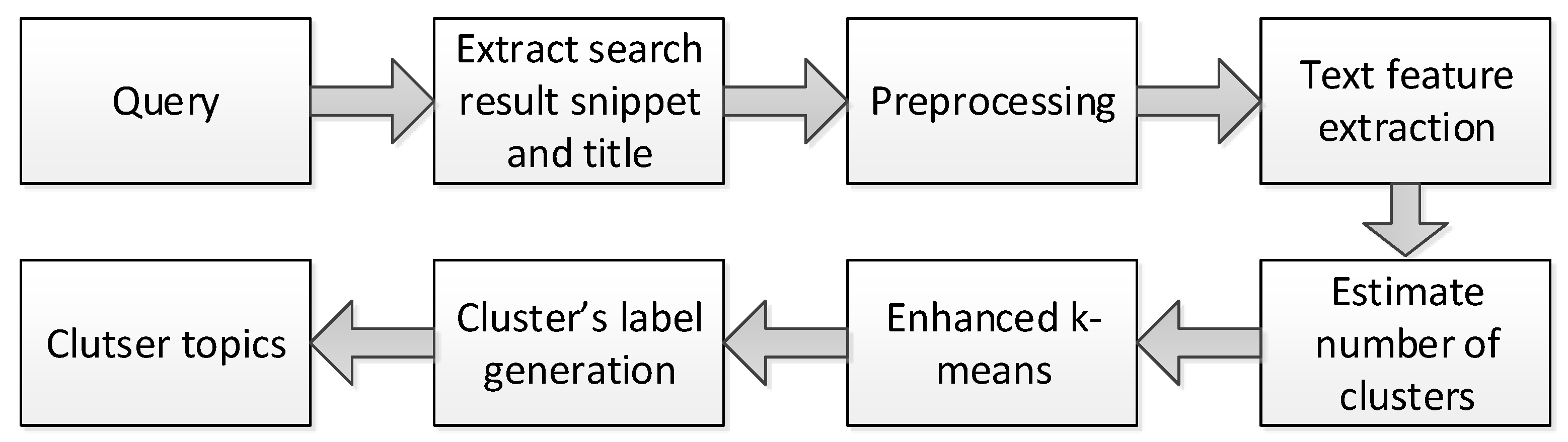

4. Proposed System

Algorithm 1: The enhanced k-means algorithm.
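
The steps of Algorithm 1 are not reproduced in this text. As a rough illustration only, the sketch below follows the speed-up idea published by Fahim et al. (listed in the references): each point remembers the distance to its assigned centroid, and after the centroids move it is compared against all centroids only if it has drifted farther from its own. Whether this matches Algorithm 1 step for step is an assumption, as are the function names and the naive initialization from the first k points.

```python
import math


def dist(a, b):
    """Euclidean distance between two equal-length tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(point, centers):
    """Index of the closest center and the distance to it."""
    d = [dist(point, c) for c in centers]
    j = min(range(len(centers)), key=d.__getitem__)
    return j, d[j]


def enhanced_kmeans(points, k, max_iter=100):
    # Assumption: initialize with the first k points (any seeding could be used).
    centers = [tuple(p) for p in points[:k]]
    labels, kept = [], []
    for p in points:                      # full assignment pass, done once
        j, d = nearest(p, centers)
        labels.append(j)
        kept.append(d)
    for _ in range(max_iter):
        for j in range(k):                # update step: mean of each cluster
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
        changed = False
        for i, p in enumerate(points):
            d_own = dist(p, centers[labels[i]])
            if d_own <= kept[i]:
                kept[i] = d_own           # still at least as close: skip other centers
            else:
                j, d = nearest(p, centers)  # drifted away: full comparison
                changed = changed or j != labels[i]
                labels[i], kept[i] = j, d
        if not changed:
            break
    return labels, centers


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(enhanced_kmeans(pts, 2))
```

The shortcut trades exactness for speed: a point that stays close to its own centroid is never checked against the others, so the result can differ from plain k-means on some inputs.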

5. Results and Discussion

5.1. The Dataset

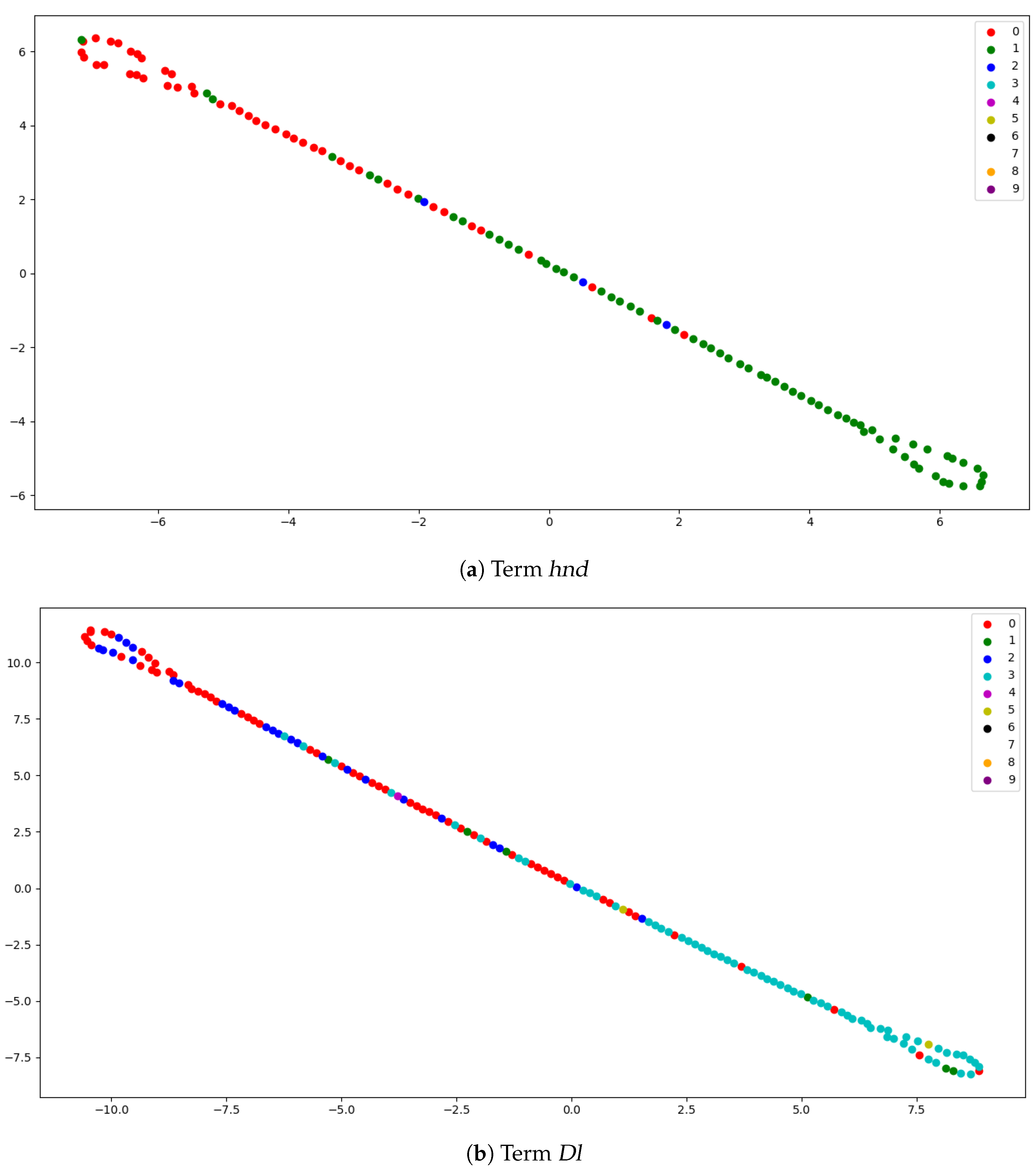

5.2. Estimating the Number of Clusters

5.3. Labeling Clusters

5.4. Performance of Enhanced k-Means

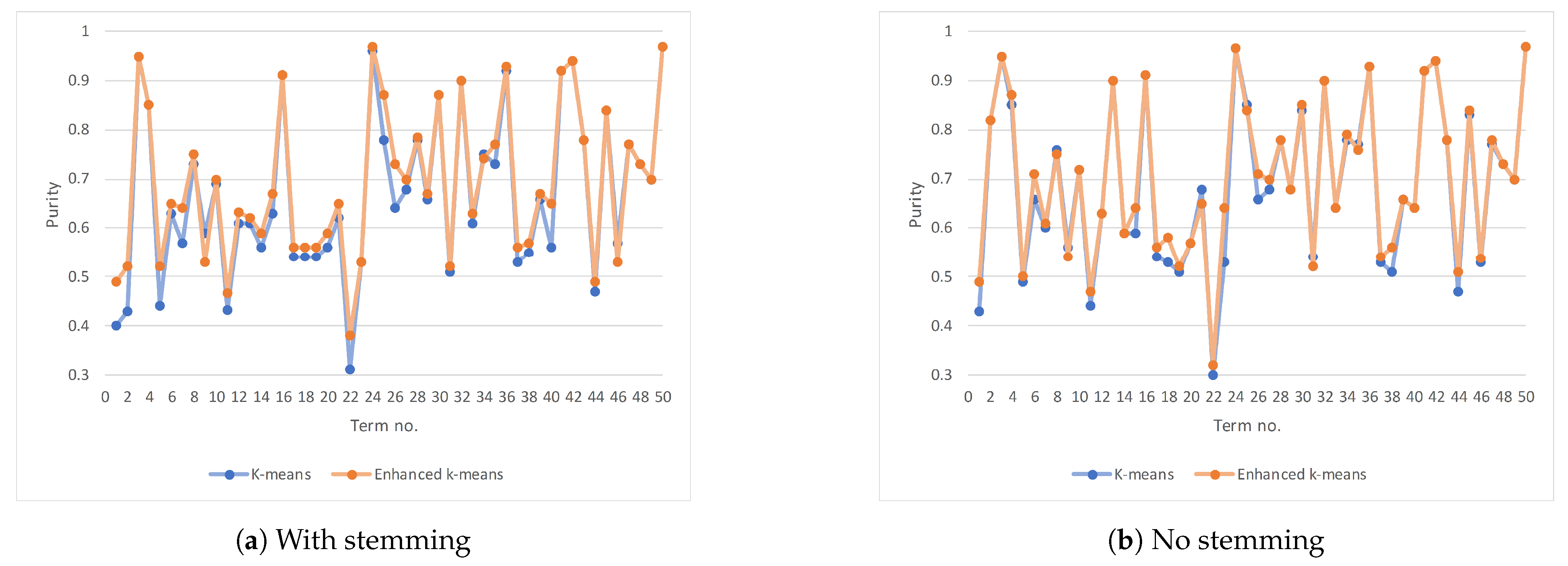

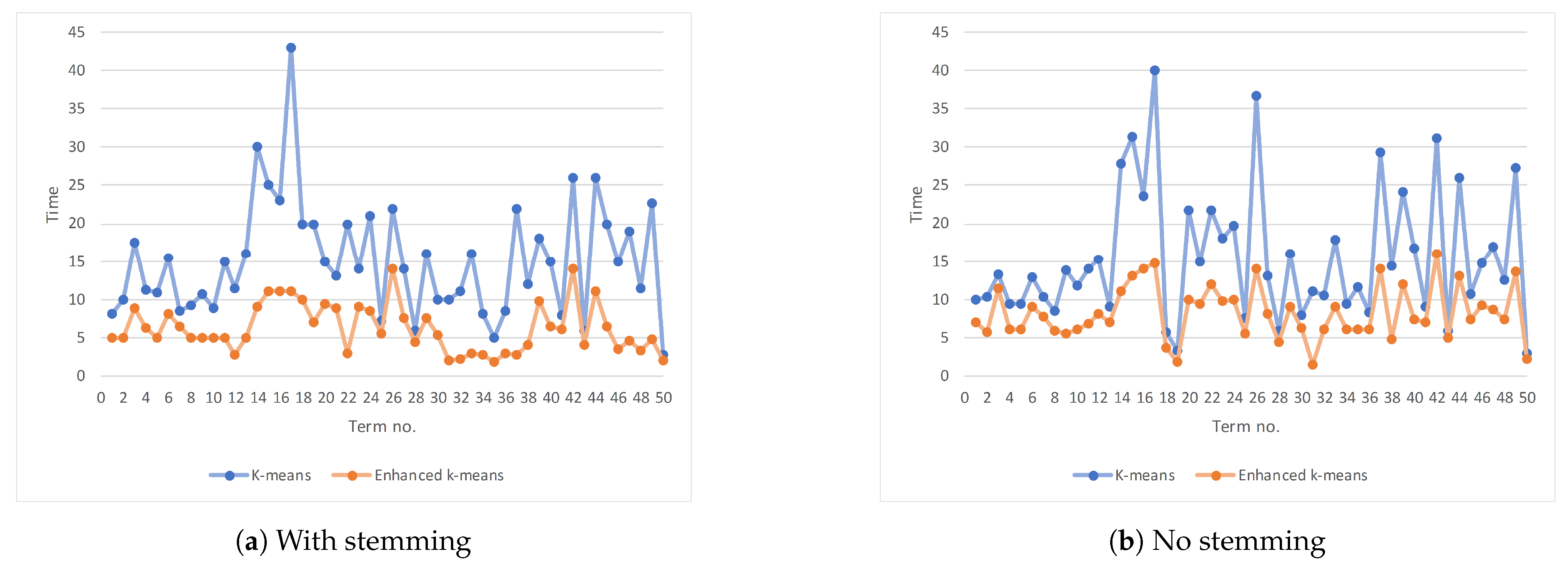

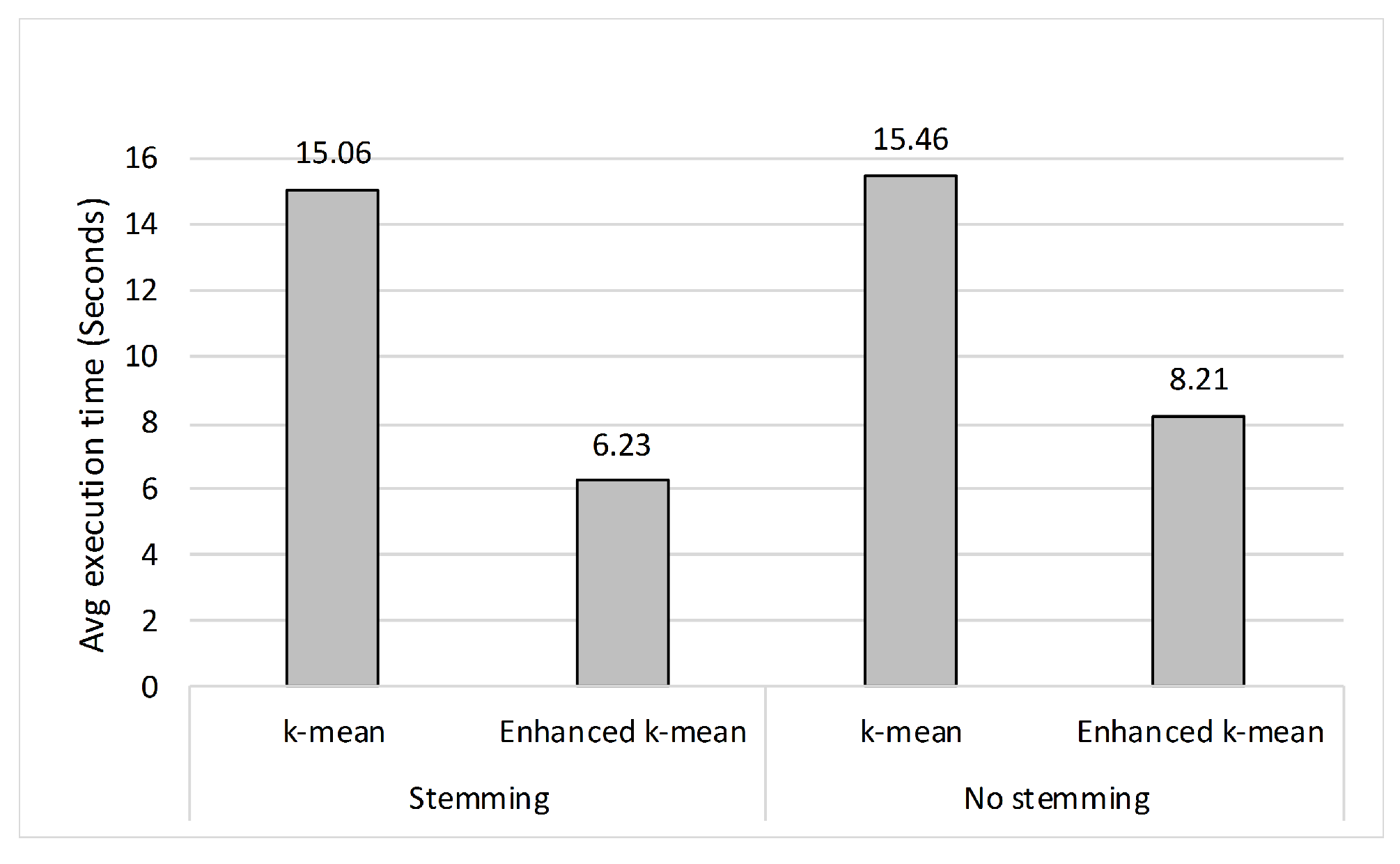

5.5. Comparison between k-Means and the Enhanced k-Means

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wong, J.L. Real World Evidence Collaboration and Convergence for Change: Big Data, Digital and Tech—and Real World Applications and Implications for Industry. Available online: www.emwa.org/media/3036/5c-big-data-digital-biomarkers-and-emerging-data-sources-j-wong.pdf (accessed on 14 August 2020).

- Chui, M.; Manyika, J.; Bughin, J.; Dobbs, R.; Roxburgh, C.; Sarrazin, H.; Sands, G.; Westergren, M. The social economy: Unlocking value and productivity through social technologies. McKinsey Glob. Inst. 2012, 4, 35–58.

- Bernstein, P. SearchYourCloud Survey, It Takes up to 8 Attempts to Find an Accurate Search Result. 2013. Available online: www.techzone360.com/topics/techzone/articles/2013/11/05/359192-searchyourcloud-survey-it-takes-up-8-attempts-find.htm (accessed on 14 August 2020).

- Rohith, G. Introduction to Machine Learning Algorithms. 2018. Available online: https://towardsdatascience.com/support-vector-machine-introduction-to-machine-learning-algorithms-934a444fca47 (accessed on 7 June 2018).

- Manning, C.D.; Raghavan, P.; Schütze, H. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008.

- Hirschberg, J.; Manning, C.D. Advances in natural language processing. Science 2015, 349, 261–266.

- Darwish, K.; Magdy, W. Arabic information retrieval. Found. Trends Inf. Retr. 2014, 7, 239–342.

- Azmi, A.M.; Almajed, R.S. A survey of automatic Arabic diacritization techniques. Nat. Lang. Eng. 2015, 21, 477–495.

- Debili, F.; Achour, H.; Souissi, E. La Langue Arabe et L’ordinateur: De L’étiquetage Grammatical à la Voyellation Automatique. Correspondances No. 71; de l’Institut de Recherche sur le Maghreb Contemporain: Aryanah, Tunisia, 2002; pp. 10–28.

- Farghaly, A.; Shaalan, K. Arabic natural language processing: Challenges and solutions. ACM Trans. Asian Lang. Inf. Process. (TALIP) 2009, 8, 14:1–14:22.

- Ryding, K.C. A Reference Grammar of Modern Standard Arabic; Cambridge University Press: Cambridge, UK, 2005.

- Azmi, A.M.; Aljafari, E.A. Universal web accessibility and the challenge to integrate informal Arabic users: A case study. Univers. Access Inf. Soc. 2018, 17, 131–145.

- Al-Fedaghi, S.; Al-Anzi, F. A new algorithm to generate Arabic root-pattern forms. In Proceedings of the 11th National Computer Conference and Exhibition, Baltimore, MD, USA, 17–20 October 1988; King Fahd University of Petroleum and Minerals: Dhahran, Saudi Arabia, 1989; pp. 391–400.

- Lloyd, S. Least Squares Quantization in PCM; Technical Report RR-5497; Bell Laboratories: Murray Hill, NJ, USA, 1957.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Oakland, CA, USA, 21 June–18 July 1965; Volume 1, pp. 281–297.

- Jin, X.; Han, J. K-Means Clustering. In Encyclopedia of Machine Learning and Data Mining; Sammut, C., Webb, G.I., Eds.; Springer: Boston, MA, USA, 2017; pp. 695–697.

- Tan, P.N.; Steinbach, M.; Kumar, V. Introduction to Data Mining; Pearson Education: London, UK, 2016.

- Kaufman, L.; Rousseeuw, P.J. Finding Groups in Data: An Introduction to Cluster Analysis; Wiley: New York, NY, USA, 1990.

- Tibshirani, R.; Walther, G.; Hastie, T. Estimating the number of clusters in a dataset via the gap statistic. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2001, 63, 411–423.

- Sithara, E.P.; Abdul-Nazeer, K.A. A Hybrid K Harmonic Means with ABC Clustering Algorithm using an Optimal K value for High Performance Clustering. Int. J. Cybern. Inform. 2016, 5, 51–59.

- Zamir, O.; Etzioni, O. Grouper: A dynamic clustering interface to Web search results. Comput. Netw. 1999, 31, 1361–1374.

- Ngo, C.L.; Nguyen, H.S. A tolerance rough set approach to clustering web search results. In Proceedings of the European Conference on Principles of Data Mining and Knowledge Discovery, Pisa, Italy, 20–24 September 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 515–517.

- Subhashini, R.; Kumar, V.J.S. The Anatomy of Web Search Result Clustering and Search Engines. Indian J. Comput. Sci. Eng. 2005, 1, 392–401.

- Fahim, A.; Salem, A.; Torkey, F.; Ramadan, M. An efficient enhanced k-means clustering algorithm. J. Zhejiang Univ. Sci. A 2006, 7, 1626–1633.

- Lee, S.; Lee, W.; Chung, S.; An, D.; Bok, I.; Ryu, H. Selection of Cluster Hierarchy Depth in Hierarchical Clustering Using K-Means Algorithm. In Proceedings of the 2007 IEEE International Symposium on Information Technology Convergence (ISITC 2007), Jeonju, Korea, 23–24 November 2007; pp. 27–31.

- Cheng, L.; Sun, Y.; Wei, J. A Text Clustering Algorithm Combining K-Means and Local Search Mechanism. In Proceedings of the 2009 IEEE International Conference on Research Challenges in Computer Science, Shanghai, China, 28–29 December 2009; pp. 53–56.

- Bide, P.; Shedge, R. Improved Document Clustering using k-means algorithm. In Proceedings of the 2015 IEEE International Conference on Electrical, Computer and Communication Technologies (ICECCT), Coimbatore, India, 5–7 March 2015; pp. 1–5.

- Gupta, S.; Kumar, R.; Lu, K.; Moseley, B.; Vassilvitskii, S. Local search methods for k-means with outliers. Proc. VLDB Endow. 2017, 10, 757–768.

- Chen, J.; Lin, X.; Xuan, Q.; Xiang, Y. FGCH: A fast and grid based clustering algorithm for hybrid data stream. Appl. Intell. 2019, 49, 1228–1244.

- Bhopale, A.P.; Tiwari, A. Swarm optimized cluster based framework for information retrieval. Expert Syst. Appl. 2020, 154, 113441:1–113441:16.

- Froud, H.; Benslimane, R.; Lachkar, A.; Ouatik, S.A. Stemming and similarity measures for Arabic Documents Clustering. In Proceedings of the 2010 5th IEEE International Symposium On I/V Communications and Mobile Network, Rabat, Morocco, 30 September–2 October 2010; pp. 1–4.

- Al-Sulaiti, L.; Atwell, E.S. The design of a corpus of Contemporary Arabic. Int. J. Corpus Linguist. 2006, 11, 135–171.

- Al-Omari, O.M. Evaluating the effect of stemming in clustering of Arabic documents. Acad. Res. Int. 2011, 1, 284–291.

- Ghanem, O.A.; Ashour, W.M. Stemming effectiveness in clustering of Arabic documents. Int. J. Comput. Appl. 2012, 49, 1–6.

- Saad, M.K.; Ashour, W.M. OSAC: Open source Arabic corpora. In Proceedings of the Sixth International Symposium on Electrical and Electronics Engineering and Computer Science (EEECS’10), Lefke, Cyprus, 25–26 November 2010; pp. 118–123.

- Sahmoudi, I.; Lachkar, A. Clustering web search results for effective Arabic language browsing. Int. J. Nat. Lang. Comput. (IJNLC) 2013, 2, 17–31.

- Sahmoudi, I.; Lachkar, A. Interactive system based on web search results clustering for Arabic query reformulation. In Proceedings of the 2014 Third IEEE International Colloquium in Information Science and Technology (CIST), Tetouan, Morocco, 20–22 October 2014; pp. 300–305.

- Sahmoudi, I.; Lachkar, A. Formal concept analysis for Arabic web search results clustering. J. King Saud Univ. Comput. Inf. Sci. 2017, 29, 196–203.

- Alghamdi, H.M.; Selamat, A.; Abdulkarim, N.S. Arabic web pages clustering and annotation using semantic class features. J. King Saud Univ. Comput. Inf. Sci. 2014, 26, 388–397.

- Mousser, J. A Large Coverage Verb Taxonomy for Arabic. In Proceedings of the 7th Conference on International Language Resources and Evaluation (LREC’10), Valletta, Malta, 17–23 May 2010; pp. 2675–2681.

- Fejer, H.N.; Omar, N. Automatic Arabic text summarization using clustering and keyphrase extraction. In Proceedings of the 6th IEEE International Conference on Information Technology and Multimedia, Putrajaya, Malaysia, 18–20 November 2014; pp. 293–298.

- Abuaiadah, D. Using bisect k-means clustering technique in the analysis of Arabic documents. ACM Trans. Asian Low-Resour. Lang. Inf. Process. (TALLIP) 2016, 15, 1–13.

- Abuaiadah, D.; El Sana, J.; Abusalah, W. On the impact of dataset characteristics on Arabic document classification. Int. J. Comput. Appl. 2014, 101, 31–38.

- Alghamdi, H.M.; Selamat, A. Arabic Web page clustering: A review. J. King Saud Univ. Comput. Inf. Sci. 2019, 31, 1–14.

- Sangaiah, A.K.; Fakhry, A.E.; Abdel-Basset, M.; El-henawy, I. Arabic text clustering using improved clustering algorithms with dimensionality reduction. Clust. Comput. 2019, 22, 4535–4549.

- Taghva, K.; Elkhoury, R.; Coombs, J. Arabic stemming without a root dictionary. In Proceedings of the International Conference on Information Technology: Coding and Computing (ITCC’05)-Volume II, Las Vegas, NV, USA, 4–6 April 2005; Volume 1, pp. 152–157.

- Khoja, S.; Garside, R. Stemming Arabic Text; Computing Department, Lancaster University: Lancaster, UK, 1999.

- Harman, D. How effective is suffixing? J. Am. Soc. Inf. Sci. 1991, 42, 7–15.

- Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523.

| ـهم | ت | كاتب | و |
|---|---|---|---|
| hm | t | kAtb | w |
| object pronoun | subject pronoun | verb | conjunction |

| | | | | |
|---|---|---|---|---|
| (ظهر : Zhr) | (عام : EAm) | (فطر : fTr) | (سبح : sbH) | (انف : Anf) |
| (فجر : fjr) | (حمام : HmAm) | (فك : fk) | (شاب : $Ab) | (عد : Ed) |
| (كتب : ktb) | (هند : hnd) | (خال : xAl) | (شب : $b) | (ناس : nAs) |
| (ذهب : *hb) | (امر : Amr) | (كاحل : kAHl) | (شرح : $rH) | (حاجب : HAjb) |
| (جد : jd) | (اذن : A*n) | (لحم : lHm) | (سن : sn) | (قسم : qsm) |
| (نفسي : nfsy) | (بسط : bsT) | (لوح : lwH) | (سر : sr) | (شهد : $hd) |
| (شعر : $Er) | (ضل : Dl) | (نبع : nbE) | (قف : qf) | (تولى : twlY) |
| (شال : $Al) | (عين : Eyn) | (نص : nS) | (تحرير : tHryr) | (مال : mAl) |
| (ماك : mAk) | (فريد : fryd) | (نمر : nmr) | (تمر : tmr) | (طرف : Trf) |
| (شارب : $Arb) | (فصل : fSl) | (قص : qS) | (تسويق : tswyq) | (كره : krh) |

| | Hard Clustering: Gap Statistics | Hard Clustering: Gap + Elbow | Soft Clustering: Gap Statistics | Soft Clustering: Gap + Elbow |
|---|---|---|---|---|
| Number of correct answers | 19 | 30 | 40 | 47 |
| Accuracy | 38% | 60% | 82% | 94% |

| Clus # | Label (Stemmed): Term | Label (Stemmed): Score | Label (Unstemmed): Term | Label (Unstemmed): Score |
|---|---|---|---|---|
| 0 | (ذهب : *hb) | 9.991 | (الذهب : Al*hb) | 10.395 |
| | (اسعار : AsEAr) | 6.080 | (اسعار : >sEAr) | 5.663 |
| | (عيار : EyAr) | 3.664 | (سعر : sEr) | 4.223 |
| | (سعر : sEr) | 3.608 | (عيار : EyAr) | 3.967 |
| | (ثلاثاء : vlAvA’) | 2.961 | (الثلاثاء : AlvlAvA’) | 3.615 |
| 1 | (ذهب : *hb) | 1.650 | (الذهب : Al*hb) | 0.899 |
| | (عمل : Eml) | 1.407 | (ذهبت : *hbt) | 0.746 |
| | (ولد : wld) | 1.179 | (وقت : wqt) | 0.668 |
| | (مشاهير : m$Ahyr) | 1.179 | (مسلسل : mslsl) | 0.650 |
| | (اغنياء : AgnyA’) | 1.121 | (ذهبا : *hbA) | 0.631 |
| 2 | (ذهب : *hb) | 0.739 | (تعرف : tErf) | 0.924 |
| | (حديث : Hdyv) | 0.622 | (بياجيه : byAjyh) | 0.675 |
| | (جرام : jrAm) | 0.613 | (اسود : Aswd) | 0.592 |
| | (شبكة : $bkp) | 0.529 | (ايقاع : AyqAE) | 0.577 |
| | (يبلغ : yblg) | 0.466 | (مي : my) | 0.577 |

| Term | Stemming: Purity | Stemming: Entropy | Stemming: Time | No Stemming: Purity | No Stemming: Entropy | No Stemming: Time |
|---|---|---|---|---|---|---|
| كتب | 0.95 | 0.28 | 8.90 | 0.95 | 0.28 | 11.40 |
| ذهب | 0.85 | 0.47 | 6.20 | 0.87 | 0.38 | 6.00 |
| شعر | 0.64 | 0.68 | 6.40 | 0.61 | 0.78 | 7.80 |
| قف | 0.56 | 0.69 | 2.80 | 0.54 | 0.69 | 14.00 |
| شهد | 0.53 | 0.90 | 3.45 | 0.54 | 0.90 | 9.18 |
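
For readers unfamiliar with the two quality measures in the table above, the sketch below computes them using their common textbook definitions: purity is the fraction of documents that belong to the majority class of their cluster, and entropy is the size-weighted average of the per-cluster class entropy. The log base and any normalization used in this work are not stated here, so the base-2 logarithm and the function name `purity_and_entropy` are assumptions.

```python
import math
from collections import Counter, defaultdict


def purity_and_entropy(cluster_ids, true_labels):
    """Return (purity, entropy) for a clustering against gold class labels."""
    groups = defaultdict(list)
    for cluster, label in zip(cluster_ids, true_labels):
        groups[cluster].append(label)

    n = len(true_labels)
    purity = 0.0
    entropy = 0.0
    for members in groups.values():
        counts = Counter(members)
        size = len(members)
        # Purity: credit each cluster with its majority class.
        purity += max(counts.values()) / n
        # Entropy: class entropy inside the cluster, weighted by cluster size.
        h = -sum((m / size) * math.log2(m / size) for m in counts.values())
        entropy += (size / n) * h
    return purity, entropy


if __name__ == "__main__":
    clusters = [0, 0, 0, 1, 1, 1]
    senses = ["a", "a", "b", "b", "b", "b"]
    print(purity_and_entropy(clusters, senses))
```

Higher purity and lower entropy both indicate that each cluster is dominated by a single word sense.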

| Measure | Mean | SD | SE | 95% C.I. Lower | 95% C.I. Upper | t | df | p (2-tailed) | tcrit | Significant |
|---|---|---|---|---|---|---|---|---|---|---|
| Purity | 0.0118 | 0.0626 | 0.0088 | −0.006 | 0.0296 | 1.3314 | 49 | 0.1892 | 2.0096 | No |
| Entropy | 0.0047 | 0.0671 | 0.0095 | −0.0144 | 0.0238 | 0.4935 | 49 | 0.6238 | 2.0096 | No |
| Time | 1.9798 | 2.9134 | 0.4120 | 1.1518 | 2.8078 | 4.8051 | 49 | 0.000015 | 2.0096 | Yes |
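
The columns of the paired t-test table above are linked by simple arithmetic, which the following sketch reproduces from the summary statistics alone. It assumes n = df + 1 = 50 paired observations and takes the critical value 2.0096 from the table rather than computing it; the function name `paired_t_summary` is illustrative.

```python
import math


def paired_t_summary(mean_diff, sd_diff, n, t_crit):
    """Standard error, t statistic, and confidence interval of a paired difference."""
    se = sd_diff / math.sqrt(n)      # standard error of the mean difference
    t = mean_diff / se               # test statistic with n - 1 degrees of freedom
    half_width = t_crit * se         # half-width of the confidence interval
    return {
        "se": se,
        "t": t,
        "ci": (mean_diff - half_width, mean_diff + half_width),
        "significant": abs(t) > t_crit,
    }


if __name__ == "__main__":
    # Mean and SD of the paired differences, as reported in the table.
    for name, mean_diff, sd_diff in (("Purity", 0.0118, 0.0626),
                                     ("Entropy", 0.0047, 0.0671),
                                     ("Time", 1.9798, 2.9134)):
        print(name, paired_t_summary(mean_diff, sd_diff, n=50, t_crit=2.0096))
```

Small discrepancies in the last digits against the table are expected, because the table's means and standard deviations are themselves rounded.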

| Term | Centroid to Its Datapoints (avg): Stemmed | Centroid to Its Datapoints (avg): Unstemmed | Between the Centroids: Stemmed | Between the Centroids: Unstemmed |
|---|---|---|---|---|
| عام | 71.09 | 78.16 | 117.00 | 153.00 |
| كتب | 60.93 | 66.95 | 80.50 | 80.50 |
| بسط | 126.56 | 127.31 | 211.00 | 321.50 |
| كاحل | 95.29 | 95.31 | 146.00 | 146.00 |
| انف | 42.39 | 61.04 | 100.50 | 116.50 |
| سر | 63.30 | 98.05 | 106.50 | 159.50 |

| Terms | Stemming, k-Means: Purity | Stemming, k-Means: Time | Stemming, Enhanced k-Means: Purity | Stemming, Enhanced k-Means: Time | No Stemming, k-Means: Purity | No Stemming, k-Means: Time | No Stemming, Enhanced k-Means: Purity | No Stemming, Enhanced k-Means: Time |
|---|---|---|---|---|---|---|---|---|
| كتب | 0.95 | 17.46 | 0.95 | 8.90 | 0.95 | 13.30 | 0.95 | 11.40 |
| ذهب | 0.85 | 11.14 | 0.85 | 6.20 | 0.85 | 9.38 | 0.87 | 6.00 |
| شعر | 0.57 | 8.55 | 0.64 | 6.40 | 0.60 | 10.31 | 0.61 | 7.80 |
| قف | 0.53 | 22.00 | 0.56 | 2.80 | 0.53 | 29.24 | 0.54 | 14.00 |
| شهد | 0.57 | 15.00 | 0.53 | 3.45 | 0.53 | 14.74 | 0.54 | 9.18 |

| Meas. | Stem. | Paired Diff. Mean | Paired Diff. SD | t | p (2-tail) | tcrit | Signif. |
|---|---|---|---|---|---|---|---|
| Purity | No | 0.011 | 0.024 | 3.247 | 0.002 | 2.01 | Yes |
| | Yes | 0.023 | 0.033 | 4.673 | 2.35 × 10⁻⁵ | 2.01 | Yes |
| Time | No | −7.256 | 5.675 | −9.042 | 5.11 × 10⁻¹² | 2.01 | Yes |
| | Yes | −8.829 | 5.929 | −10.53 | 3.52 × 10⁻¹⁴ | 2.01 | Yes |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alsuhaim, A.F.; Azmi, A.M.; Hussain, M. Improving the Retrieval of Arabic Web Search Results Using Enhanced k-Means Clustering Algorithm. Entropy 2021, 23, 449. https://doi.org/10.3390/e23040449
