Inferring What to Do (And What Not to)

Abstract

1. Introduction

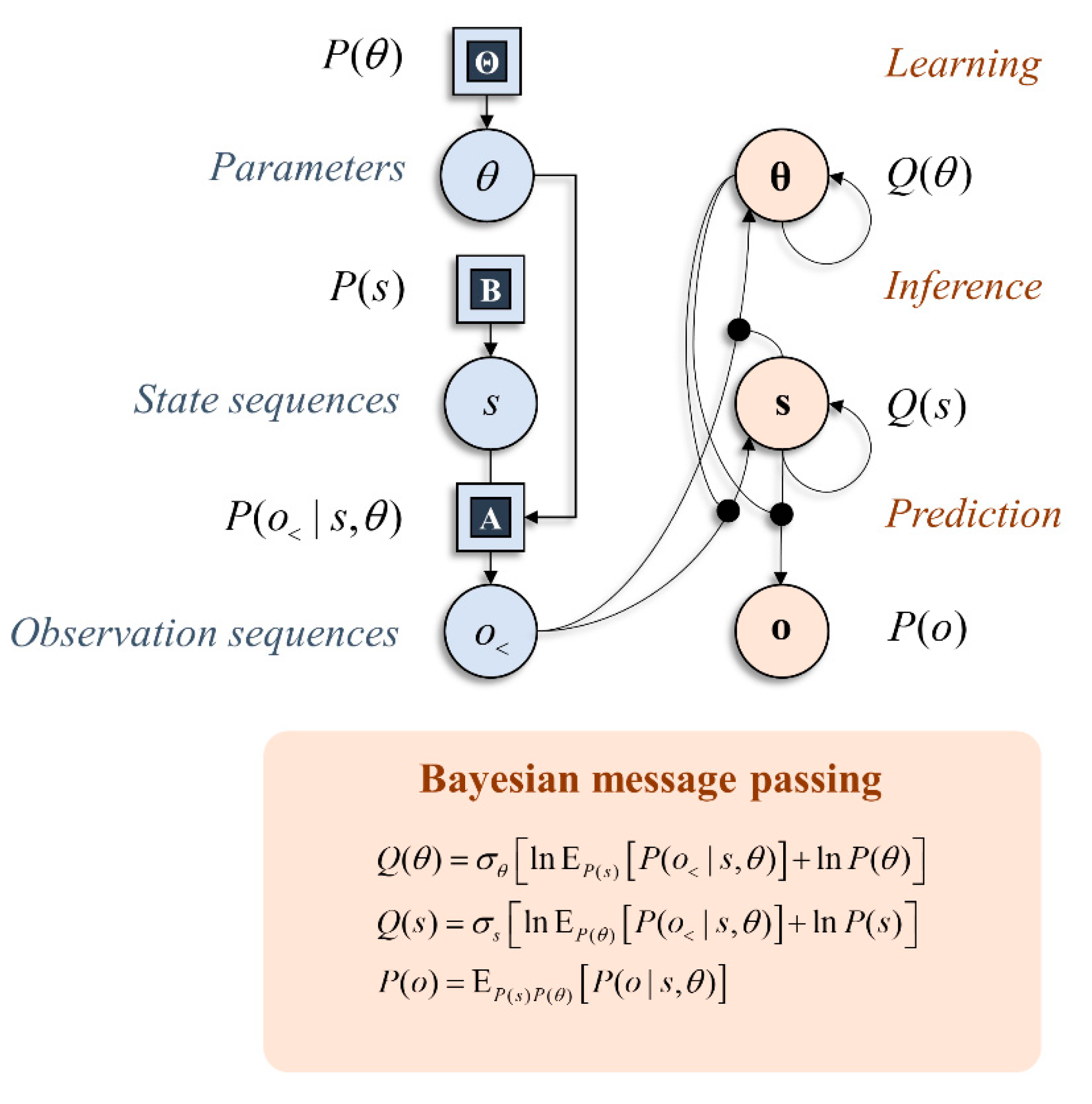

2. Graphical Models and Inference

3. Information and Experimental Design

3.1. Mutual Information

3.2. Attention Networks and Disconnection

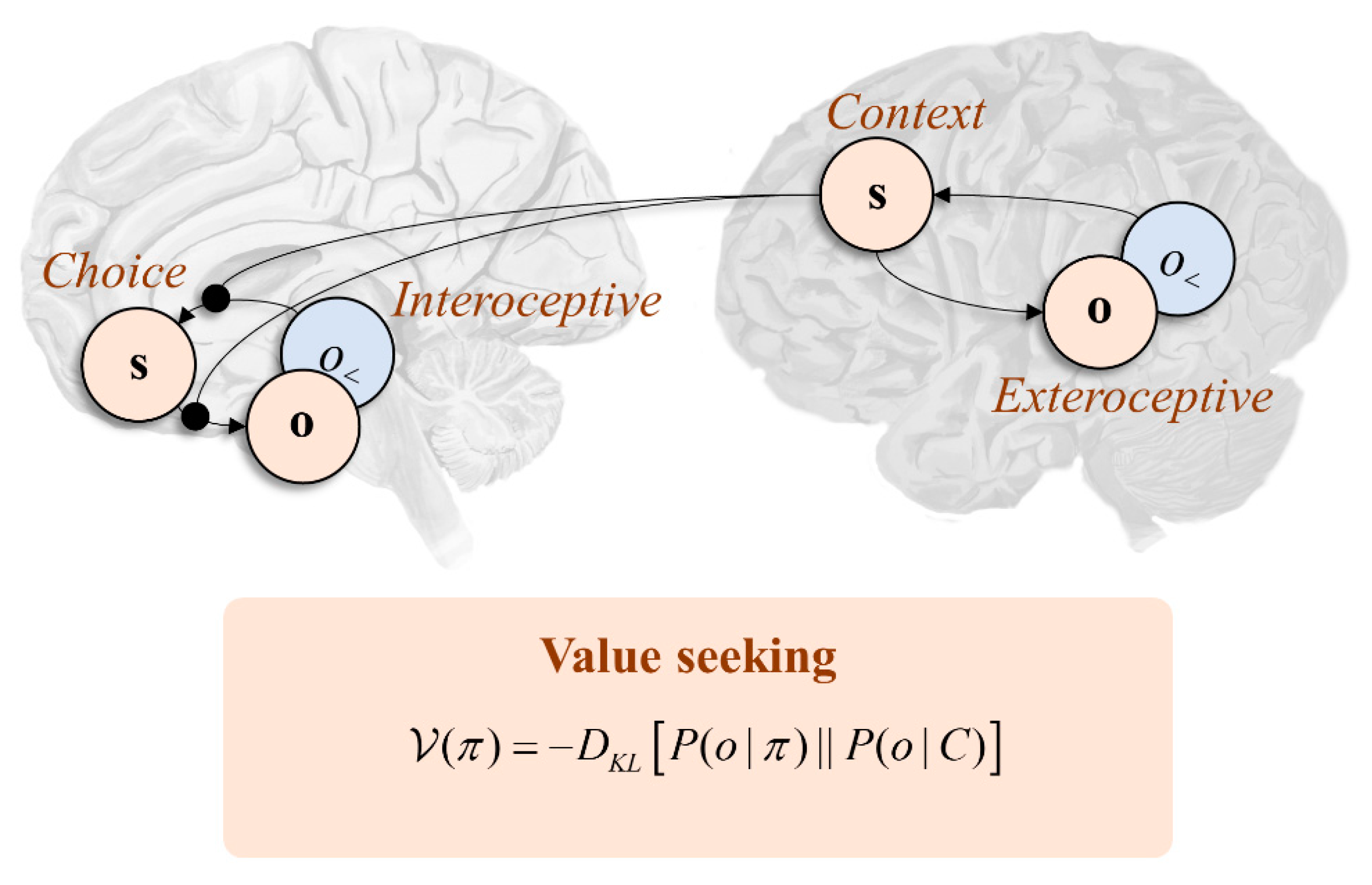

4. Utility and Steady State

4.1. KL-Control

4.2. Prefrontal Cortex

5. Planning as Inference

5.1. Expected Free Energy

5.2. Perseveration

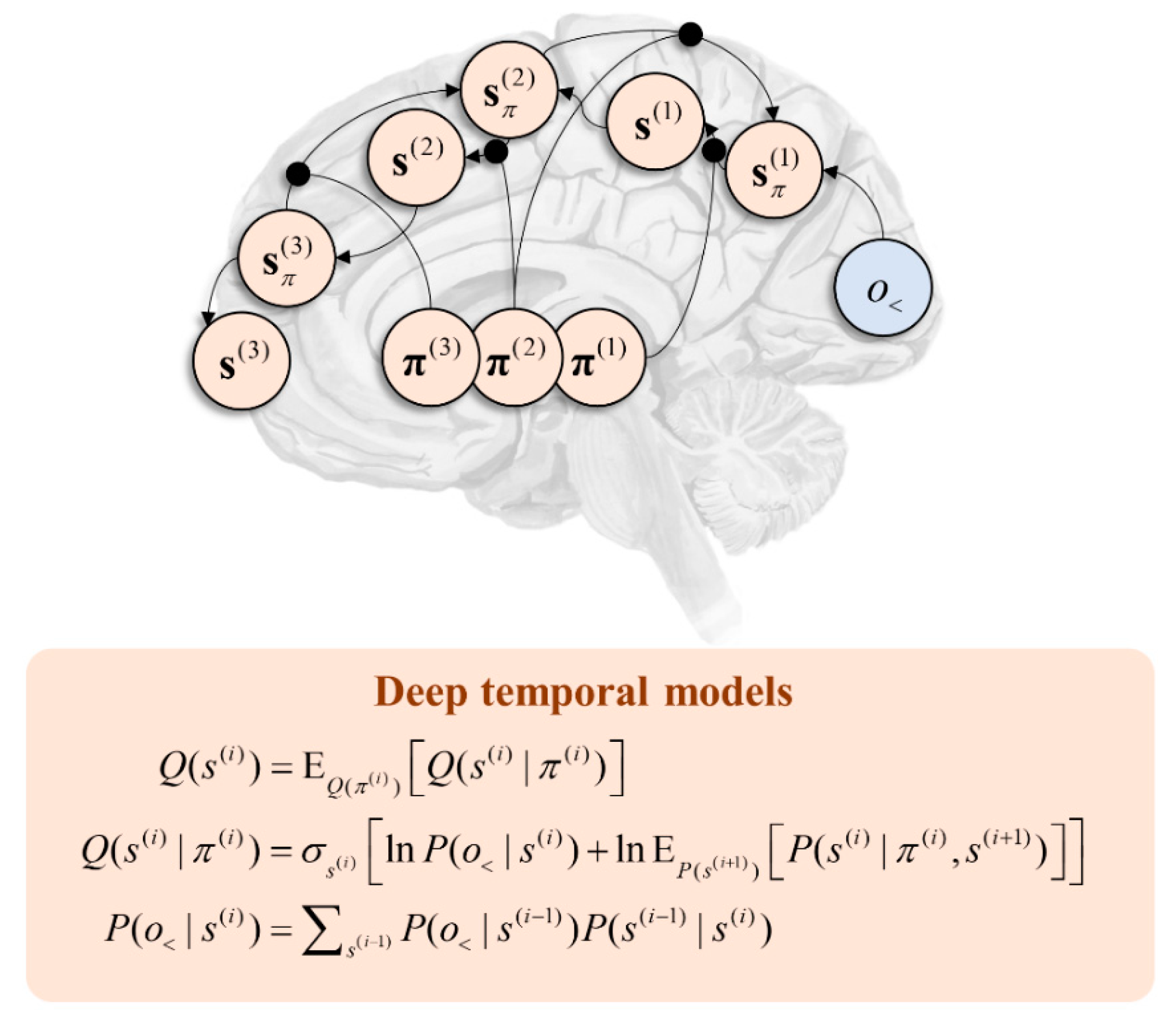

6. Deep Generative Models

6.1. Temporal Hierarchies

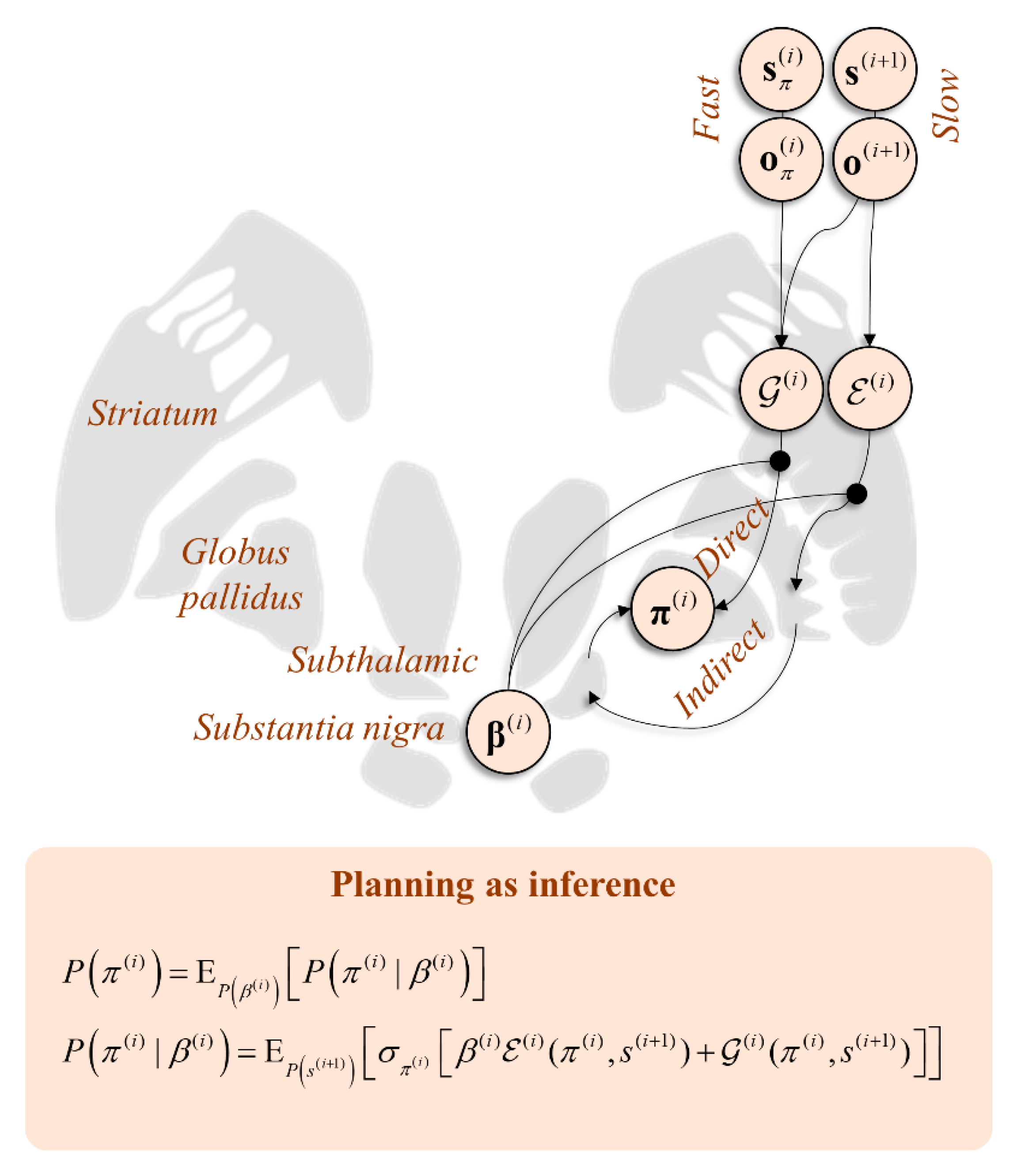

6.2. Direct and Indirect Pathways

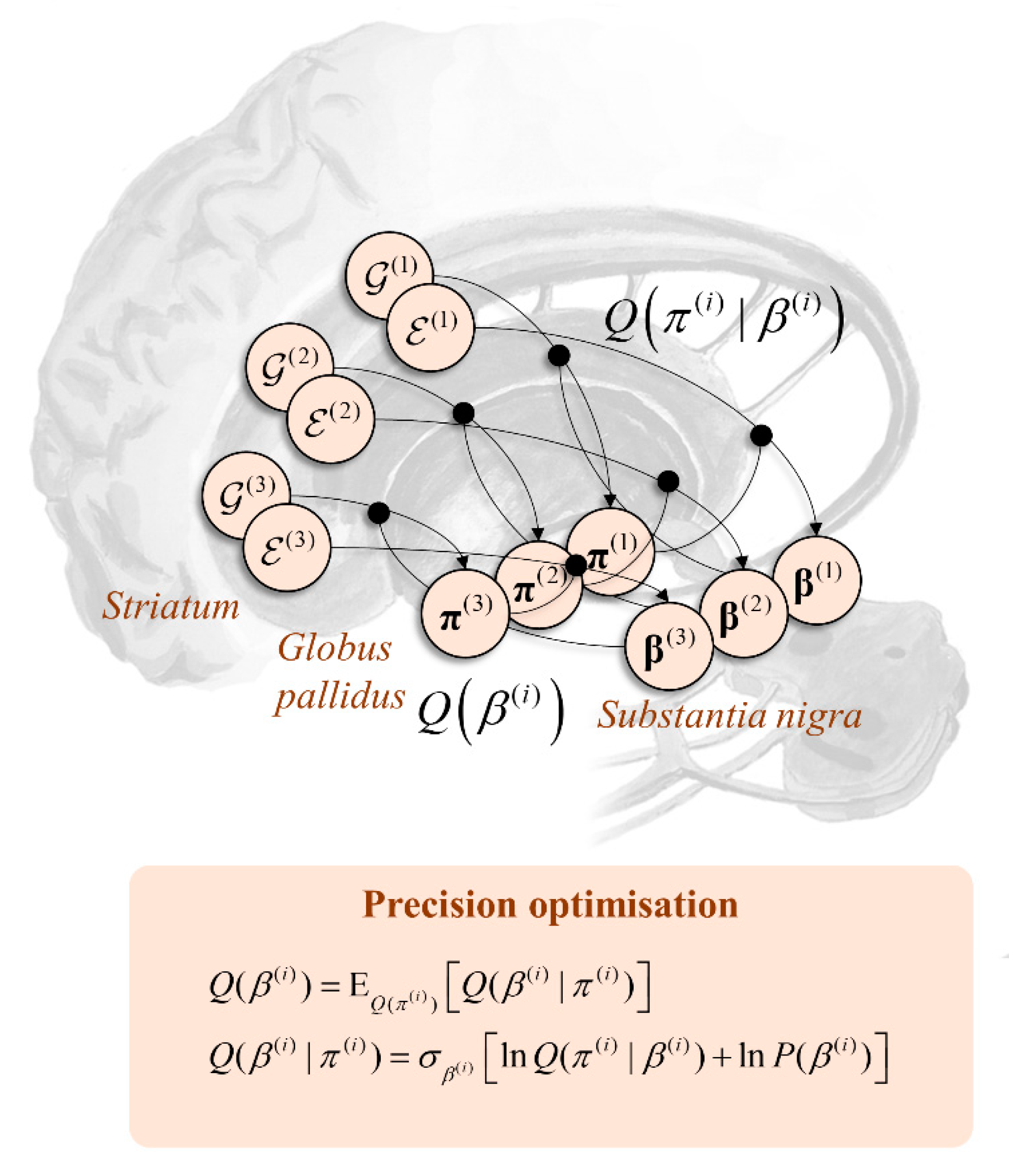

7. Precision Optimisation

7.1. Reciprocal Messages

7.2. Nigrostriatal Loops

8. Discussion

9. Conclusions

Funding

Conflicts of Interest


© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Parr, T. Inferring What to Do (And What Not to). Entropy 2020, 22, 536. https://doi.org/10.3390/e22050536
