1. Introduction

The vanishing probability of winning in a long enough sequence of coin flips features in the opening scene of Tom Stoppard’s play “Rosencrantz and Guildenstern Are Dead”, where the protagonists are betting on coin flips. Rosencrantz, who bets on heads each time, has won 92 flips in a row, leading Guildenstern to suggest that they are within the range of supernatural forces. He was actually right, as the king had already sent for them [1].

Although coin-tossing experiments are ubiquitous in courses on elementary probability theory, and coin tossing is regarded as a prototypical random phenomenon of unpredictable outcome, the exact amounts of predictable and unpredictable information related to flipping a biased coin have not been discussed in the literature. The discussion on whether the outcome of naturally tossed coins is truly random [2], or if it can be manipulated (and therefore predicted) [3,4], has been around perhaps for as long as coins have existed. It is worth mentioning that the tossing of a real coin obeys physical laws and is inherently a deterministic process whose outcome, formally speaking, can be determined if the initial state of the coin is known [5].

All in all, the toss of a coin has been used to determine random outcomes for centuries [4]. Flipping a coin was a ubiquitous way of making decisions under uncertainty, as a chance outcome is often interpreted as the expression of divine will [1]. Individuals who are told by the coin toss to make an important change are reported to be much more likely to make that change, and to be happier six months later, than those who were told by the coin to maintain the status quo in their lives [6].

If the coin is not fair, the outcome of future flipping can be either (i.) anticipated intuitively by observing the whole sequence of sides shown in the past in search of possible patterns and repetitions, or (ii.) guessed instantly from the side that has just shown up. In our brain, the stored routines and patterns making up our experience are managed by the basal ganglia, while the insula, highly sensitive to any change, takes care of our present awareness and might feature the guess on the coin toss outcome [7]. Trusting our gut, we unconsciously look for patterns in sequences of shown sides, a priori perceiving any coin as unfair.

In the present paper, we propose an information-theoretic study of the most general models for “integer” and fractional flipping a biased coin. We show that these stochastic models are singular (along with many other well-known stochastic models), and therefore their parameters, the side repeating probabilities, cannot be inferred from assessing frequencies of shown sides (see Section 2 and Section 4). In Section 3, we demonstrate that some uncertainty about the coin flipping outcome can nevertheless be resolved from the presently shown side and the sequence of sides that occurred in the past, so that the actual level of uncertainty attributed to flipping a biased coin can be lower than assessed by entropy. We suggest that the entropy function can therefore be decomposed into predictable and unpredictable information components (Section 3). Interestingly, the efficacy of the side forecasting strategies (i.) and (ii.) mentioned above can be quantified by distinct information-theoretic quantities: the excess entropy and the conditional mutual information, respectively (Section 3). The decomposition of entropy into the predictable and unpredictable information components is justified rigorously at the end of Section 3.

In Section 4, we introduce a backward-shift Markov chain transition matrix generalizing the standard “integer” coin flipping model to fractional order flipping. Namely, the fractional order Markov chain is defined as a convergent infinite binomial series in the “integer”-order transition matrix that assumes strong coupling between the chain states (coin tossing outcomes) at different times. The fractional backward shift transition operator does not reflect any physical process.

On the one hand, our fractional coin-tossing model is intrinsically similar to the fractional random walks introduced recently in [8,9,10,11,12] in the context of Markovian processes defined on networks. In contrast to the normal random walk, where the walker can reach only immediately connected nodes in one time-step, the fractional random walker governed by a fractional Laplacian operator is allowed to reach any node in one time-step, dynamically introducing a small-world property to the network. On the other hand, our fractional order Markov chain is closely related to the Autoregressive Fractionally Integrated Moving Average (ARFIMA) models [13,14,15], a fractional order signal processing technique generalizing the conventional integer order models: the autoregressive integrated moving average (ARIMA) and autoregressive moving average (ARMA) models [16]. In the context of time series analysis, the proposed fractional coin-flipping model resolves the fractional order time-backward outcomes (i.e., memories [17,18,19,20,21]) as the moving averages over all future states of the chain, which explains the title of our paper. We also show that the side repeating probabilities, considered independent of each other in the standard “integer” coin-tossing model, appear to be entangled with one another as a result of strong coupling between the future states in fractional flipping. Finally, we study the evolution of the predictable and unpredictable information components of entropy in the model of fractional flipping a biased coin (Section 5). We conclude in the last section.

2. The Model of a Biased Coin

A biased coin prefers one side over another. If this preference is stationary, and the coin tosses are independent of each other, we describe coin flipping by a Markov chain defined by the stochastic transition matrix, viz.,

$$T(p,q) = \begin{pmatrix} p & 1-p \\ 1-q & q \end{pmatrix}, \qquad 0 \le p, q \le 1, \tag{1}$$

in which the states, ‘heads’ (“0”) or ‘tails’ (“1”), repeat themselves with the probabilities $p$ and $q$, respectively. The Markov chain Equation (1) generates the stationary sequences of states, viz., $0,0,0,\ldots$ when $p=1$, or $1,1,1,\ldots$ when $q=1$, or $0,1,0,1,\ldots$ when $p=q=0$, but describes flipping a fair coin if $p=q=1/2$.

For a symmetric chain,

, the relative frequencies (or densities) of ‘

head’ and ‘

tail’,

are equal to each other,

and therefore the entropy function, expressing the amount of uncertainty about the coin flip outcome, viz.,

attains the maximum value,

bit, uniformly for all

. On the contrary, flipping the coin when

(or

) generates the stationary sequences of no uncertainty,

(see

Figure 1). In Equation (

3) and throughout the paper, we use the following conventions, justified by a limit argument:

. The information difference between the amounts of uncertainty on a smooth statistical manifold parametrized by the probabilities

p and

q is calculated using the

Fisher information matrix (FIM) [

22,

23,

24], viz.,

However, since

bit, for

, the FIM,

is degenerate (with eigenvalues

,

), and therefore the biased coin model Equation (

1) is singular, along with many other stochastic models, such as Bayesian networks, neural networks, hidden Markov models, stochastic context-free grammars, and Boltzmann machines [

25]. The singular FIM (

4) implies that the parameters of the model,

p and

q, cannot be inferred from assessing relative frequencies of sides in sequences generated by the Markov chain Equation (

1).
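The degeneracy can be illustrated numerically. The following minimal Python sketch assumes that the densities of Equation (2) are the standard stationary probabilities of a two-state chain, (1 − q)/(2 − p − q) for ‘heads’ and (1 − p)/(2 − p − q) for ‘tails’, and that Equation (3) is the Shannon entropy in bits. The function names are illustrative and are not part of the original model description.

```python
import math


def densities(p, q):
    """Stationary densities (heads, tails) of the chain [[p, 1-p], [1-q, q]].

    Assumed form: the standard stationary distribution of a two-state chain.
    """
    total = 2.0 - p - q
    if total == 0.0:
        raise ValueError("p = q = 1: the chain never leaves its initial state")
    return (1.0 - q) / total, (1.0 - p) / total


def entropy_bits(probs):
    """Shannon entropy in bits, using the usual convention 0 * log 0 = 0."""
    return -sum(x * math.log2(x) for x in probs if x > 0.0)


# Two different coins sharing the same ratio (1 - p) / (1 - q) = 1/2.
coin_a = densities(0.9, 0.8)
coin_b = densities(0.5, 0.0)

# Both pairs of densities coincide, hence so do the entropies.
entropy_a = entropy_bits(coin_a)
entropy_b = entropy_bits(coin_b)

# Any symmetric chain (p = q < 1) has equal densities and 1 bit of entropy.
entropy_symmetric = entropy_bits(densities(0.3, 0.3))
```

Under these assumptions both coins show ‘heads’ two thirds of the time, so counting sides cannot tell (p, q) = (0.9, 0.8) from (0.5, 0.0): the relative frequencies fix only the ratio (1 − p)/(1 − q), not the two parameters separately.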

3. Predictable and Unpredictable Information in the Model of Tossing a Biased Coin

Although coin tossing is traditionally regarded as a prototypical random experiment of unpredictable outcome, some amount of uncertainty in the model Equation (

1) can be dispelled before tossing a coin. Namely, we can consider the entropy function Equation (

3) as a sum of the

predictable and

unpredictable information components,

where the predictable part

estimates the amount of

apparent uncertainty about the future flipping outcome that might be resolved from the sequence of sides shown already, and

estimates the amount of

true uncertainty that can be resolved neither from the past nor from the present outcomes. It is reasonable to assume that both functions,

P and

U, in Equation (

6) should have the same form as the entropy function in Equation (

3), viz.,

Furthermore, since the more frequent a side is, the higher the forecast accuracy, we assume that the partition function

featuring the predicting potential in already shown sequences for forecasting the side

k is proportional to the relative frequency of that side,

. Denoting the relevant proportionality coefficient as

in

, we obtain

. Given the already shown sequence of coin sides

, the average amount of uncertainty about the flipping outcome is assessed by the entropy rate [

24] of the Markov chain Equation (

1), viz.,

and therefore, the

excess entropy [

25,

26,

27], quantifying the apparent uncertainty of the flipping outcome that can be resolved by discovering the repetition, rhythm, and patterns over the whole (infinite) sequence of sides shown in the past,

, equals

The excess entropy

attains the maximum value of 1 bit over the stationary sequences but equals zero for

(see

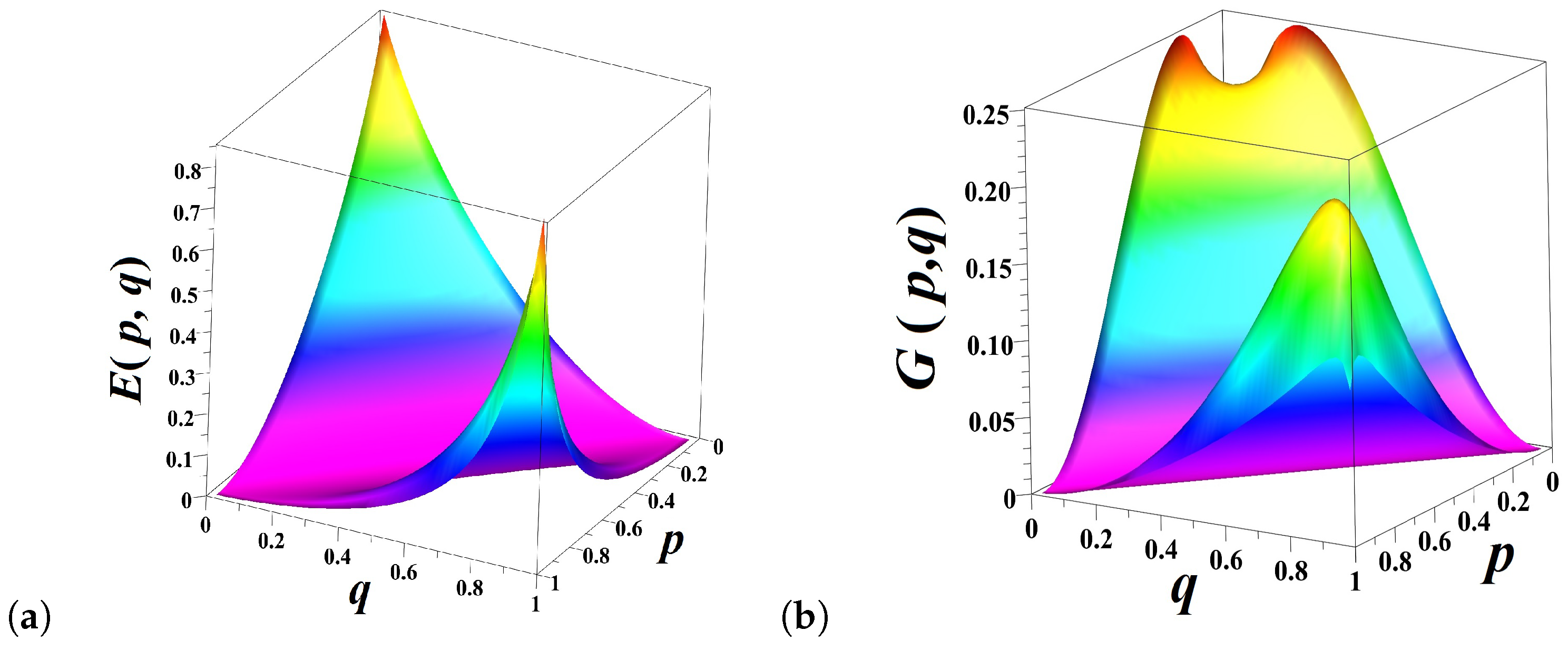

Figure 2a).
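As a hedged numerical sketch of the entropy rate and the excess entropy, Equation (9), the Python code below assumes that the entropy rate is the density-weighted binary entropy of the rows of the transition matrix, and that the excess entropy is the difference between the entropy of the densities and the entropy rate. The helper names are hypothetical.

```python
import math


def binary_entropy(x):
    """Entropy in bits of a two-outcome distribution (x, 1 - x)."""
    if x <= 0.0 or x >= 1.0:
        return 0.0  # convention 0 * log 0 = 0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)


def densities(p, q):
    """Assumed stationary densities (heads, tails); requires p + q < 2."""
    total = 2.0 - p - q
    return (1.0 - q) / total, (1.0 - p) / total


def entropy_rate(p, q):
    """Uncertainty of the next side given the present one, in bits."""
    pi_heads, pi_tails = densities(p, q)
    return pi_heads * binary_entropy(p) + pi_tails * binary_entropy(q)


def excess_entropy(p, q):
    """Entropy of the densities minus the entropy rate, in bits."""
    pi_heads, _ = densities(p, q)
    return binary_entropy(pi_heads) - entropy_rate(p, q)


alternating = excess_entropy(0.0, 0.0)   # strictly alternating sides
independent = excess_entropy(0.3, 0.7)   # q = 1 - p: tosses are independent
```

In this sketch the strictly alternating sequence carries one full bit of excess entropy, while a chain with q = 1 − p, whose next side does not depend on the present one, carries none up to rounding.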

Moreover, the next flipping outcome can be guessed from the present state alone, and the level of accuracy of such a guess can be assessed by the

mutual information between the present state and the future state conditioned on the past state

[

25,

28], viz.,

The mutual information (

10) is a component of the entropy rate (

8) growing as

and

. For

, the rise of

destructive interference between two incompatible hypotheses on

- (i)

alternating the present side at the next tossing (if ), or

- (ii)

repeating the present side at the next tossing (when )

causes the attenuation and cancellation of mutual information (

10) (

Figure 2b).

By summing (9) and (10), we obtain the amount of predictable information; the remainder of the entropy is the unpredictable information:

where

is the entropy of the present state conditional on the future and past states of the chain. The latter conditional entropy is naturally expressed via the entropy of the future state conditional on the present

, the entropy of the present state conditional on the past

, and the entropy of the future state conditional on the past

as follows:

. The accuracy of the obtained information decomposition of entropy,

is demonstrated immediately by the following computation involving the conditional entropies:

The predictable information component

amounts to

over the stationary sequences but disappears for

(

Figure 3a). On the contrary, the share of unpredictable information

attains the maximum value

, for

(

Figure 3b).
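The decomposition can be checked numerically. The Python sketch below is an illustration under stated assumptions, not the authors' code: the excess entropy is taken as the entropy of the densities minus the entropy rate, the conditional mutual information is computed through the Markov property as the two-step conditional entropy minus the entropy rate, and the unpredictable part is the entropy of the present state conditional on the future and past states, written as twice the entropy rate minus the two-step conditional entropy. All names are hypothetical.

```python
import math


def binary_entropy(x):
    """Entropy in bits of a two-outcome distribution (x, 1 - x)."""
    if x <= 0.0 or x >= 1.0:
        return 0.0  # convention 0 * log 0 = 0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)


def decompose(p, q):
    """Return (predictable, unpredictable, entropy) in bits; requires p + q < 2."""
    total = 2.0 - p - q
    pi_heads, pi_tails = (1.0 - q) / total, (1.0 - p) / total
    entropy = binary_entropy(pi_heads)

    # Entropy of the future state conditional on the present state.
    h_one_step = pi_heads * binary_entropy(p) + pi_tails * binary_entropy(q)

    # Two-step repetition probabilities: the diagonal of the squared matrix.
    p_two = p * p + (1.0 - p) * (1.0 - q)
    q_two = q * q + (1.0 - q) * (1.0 - p)
    # Entropy of the future state conditional on the past state.
    h_two_step = pi_heads * binary_entropy(p_two) + pi_tails * binary_entropy(q_two)

    excess = entropy - h_one_step          # resolved from the whole past
    mutual = h_two_step - h_one_step       # resolved from the present state
    predictable = excess + mutual
    unpredictable = 2.0 * h_one_step - h_two_step
    return predictable, unpredictable, entropy


fair = decompose(0.5, 0.5)
alternating = decompose(0.0, 0.0)
biased = decompose(0.9, 0.6)
```

With these definitions the two components add up to the entropy for any admissible p and q, the fair coin is entirely unpredictable, and the strictly alternating sequence is entirely predictable.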

4. The Model of Fractional Flipping a Biased Coin

In our work, we define the model of fractional flipping a biased coin using the fractional differencing of non-integer order [

29,

30] for the discrete time stochastic processes [

31,

32,

33]. The Grünwald-Letnikov fractional difference

of order

with the unit step

and the time lag operator

T is defined [

18,

29,

30,

34,

35,

36] by

where

is fixed

-delay, and

is the binomial coefficient that can be written for integer or non-integer order

using the Gamma function, viz.,

It should be noted that for a Markov chain defined by Equation (

1), the Grünwald-Letnikov fractional difference of a non-integer order

takes the form of the following infinite series of binomial type, viz.,

that converges absolutely, for

. In Equation (

16), we have used a formal structural similarity between the fractional order difference operator and the power series of binomial type in order to introduce a

fractional backward-shift transition operator for any fractional order

as a convergent infinite power series of the transition matrix Equation (

1), viz.,

The backward-shift fractional transition matrix defined by Equation (

17) is a stochastic matrix preserving the structure of the initial Markov chain Equation (

1), for any

. Since the power series of binomial type in Equation (

17) is convergent and summable for any value

, we have also introduced in Equation (

17) the

fractional probabilities, and , as the corresponding elements of the fractional transition matrix. The fractional transition operator Equation (

17) describes fractional flipping a biased coin for

as a

moving average over the probabilities of

all future outcomes of the Markov chain Equation (

1) described by integer powers

,

. The fractional Markov chain Equation (

17) is also similar to the

fractional random walks introduced recently in [

8,

9,

10,

11,

12]. In these research efforts, the fractional Laplace operator describing anomalous transportation in connected networks and the fractional degree of a node are related to integer powers of the network adjacency matrix

for

for which the element

is the total number of all possible trajectories connecting nodes

i and

j by paths of length

m. The fractional characteristics of the graph not only incorporate information related to the number of nearest neighbors of a node, but also include information of all far away neighbors of the node in the network, allowing for long-range transitions between the nodes and featuring anomalous diffusion [

10].

In the proposed fractional Markov chain Equation (

17), the kernel function (which can be called

memory function following [

19,

20,

21,

37]) establishes strong coupling between the outcome of fractional coin flipping for the fractional order parameter

and the probabilities of all future outcomes of the “integer”-order Markov chain Equation (

1). It is worth mentioning that the fractional transition probabilities in Equation (

17) equal those in the “integer”-order flipping model Equation (

1) as

, viz.,

but coincide with the densities Equation (

2) of the ‘

head’ and ‘

tail’ states, as

, viz.,

Thus, the minimal value of the fractional order parameter (

) in the model Equation (

17) may be attributed to the “integer”-order coin flipping when no information about the future flipping outcomes is available, i.e., the very moment when the present side of the coin is revealed. In contrast, the maximum value of the fractional order parameter (

) corresponds to the maximum available information about all future coin-tossing outcomes. Averaging over all future states of the chain as

recovers the density of states Equation (

19) of the Markov chain Equation (

1) precisely as expected.
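A numerical sketch may help to see how such a binomial series behaves. The Python code below assumes an order parameter eps between 0 and 1 and assumes that the fractional operator has the form 1 − (1 − T)^(1 − eps), expanded as a series in integer powers of the transition matrix T of Equation (1). This form is an assumption chosen because it reproduces the two limits described above (the “integer”-order matrix at the minimal order, the densities at the maximal order); it is not copied from Equation (17), and the function names are hypothetical.

```python
def mat_mul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def fractional_transition(p, q, eps, terms=200):
    """Truncated binomial series for the assumed operator 1 - (1 - T)^(1 - eps)."""
    alpha = 1.0 - eps
    T = [[p, 1.0 - p], [1.0 - q, q]]
    power = [[1.0, 0.0], [0.0, 1.0]]       # T^0
    result = [[0.0, 0.0], [0.0, 0.0]]
    coeff = 1.0                             # (-1)^m * binom(alpha, m) at m = 0
    used = 0.0                              # total weight assigned so far
    for m in range(1, terms + 1):
        coeff *= (m - 1 - alpha) / m        # recurrence for the signed coefficient
        weight = -coeff                     # non-negative for 0 <= alpha <= 1
        power = mat_mul(power, T)           # T^m
        for i in range(2):
            for j in range(2):
                result[i][j] += weight * power[i][j]
        used += weight
    # The weights of the full series sum to one; the weight of the truncated
    # tail is assigned to the last computed power, which is accurate whenever
    # the powers of T have already converged (|p + q - 1| < 1).
    for i in range(2):
        for j in range(2):
            result[i][j] += (1.0 - used) * power[i][j]
    return result


T_integer = fractional_transition(0.8, 0.6, 0.0)   # reproduces T itself
T_half = fractional_transition(0.8, 0.6, 0.5)      # intermediate order
T_full = fractional_transition(0.8, 0.6, 1.0)      # rows approach the densities
```

Because every weight is non-negative and the weights sum to one, the result is a moving average of the integer powers of T and therefore remains a stochastic matrix. For this two-state chain the same assumed series sums to the repetition probability 1 − (1 − p)/(2 − p − q)^eps for ‘heads’, which makes the coupling between p and q explicit.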

The transformation Equation (

17) defines the

—flow of fractional probabilities over the fractional order parameter

as shown in

Figure 4a. In fractional flipping,

, the state repetition probabilities

and

get entangled with one another due to the normalization factor

in Equation (

17). For the integer order coin flipping model

, the state repetition probabilities

and

are independent of each other (as shown by flow arrows on the top face of the cube in

Figure 4a) but they are linearly dependent,

, as

(see the bottom face of the cube in

Figure 4a).

The degree of entanglement as a function of the fractional order parameter

can be assessed by the expected divergence between the fractional model probabilities,

and

, in the models Equation (

1) and Equation (

17), viz.,

The integrand in Equation (

20) turns to zero when the probabilities are independent of one another (as

) but equals the doubled

Kullback–Leibler divergence (relative entropy) [

24] between

p and

(

q and

) as

(due to the obvious

symmetry of expressions). The degree of probability entanglement defined by Equation (

20) attains the maximum value at

(

Figure 4b).

Since the vector of ‘

head’ and ‘

tail’ densities Equation (

2) is an eigenvector for all integer powers

, it is also an eigenvector for the fractional transition operator

, for any value of the fractional order parameter

. Therefore, the fractional dynamics of transition probabilities does not change the densities of states in the Markov chain, so that the entropy function Equation (

3) is an invariant of fractional dynamics in the model Equation (

17) (

Figure 4a). The Fisher information matrix Equation (

4) is redefined for the probabilities

, viz.,

which is also degenerate because the symmetry

is preserved in all the expressions for all values

. The nontrivial eigenvalue of the FIM Equation (

21) turns to zero as well, for the stationary sequences with

. Thus, the model of fractional flipping a biased coin is singular, just as the “integer”-order flipping model Equation (

1).

5. Evolution of Predictable and Unpredictable Information Components over the Fractional Order Parameter

The predictable and unpredictable information components defined by Equations (9)–(11) can be calculated for the fractional transition matrix Equation (

17), for any value of the fractional order parameter

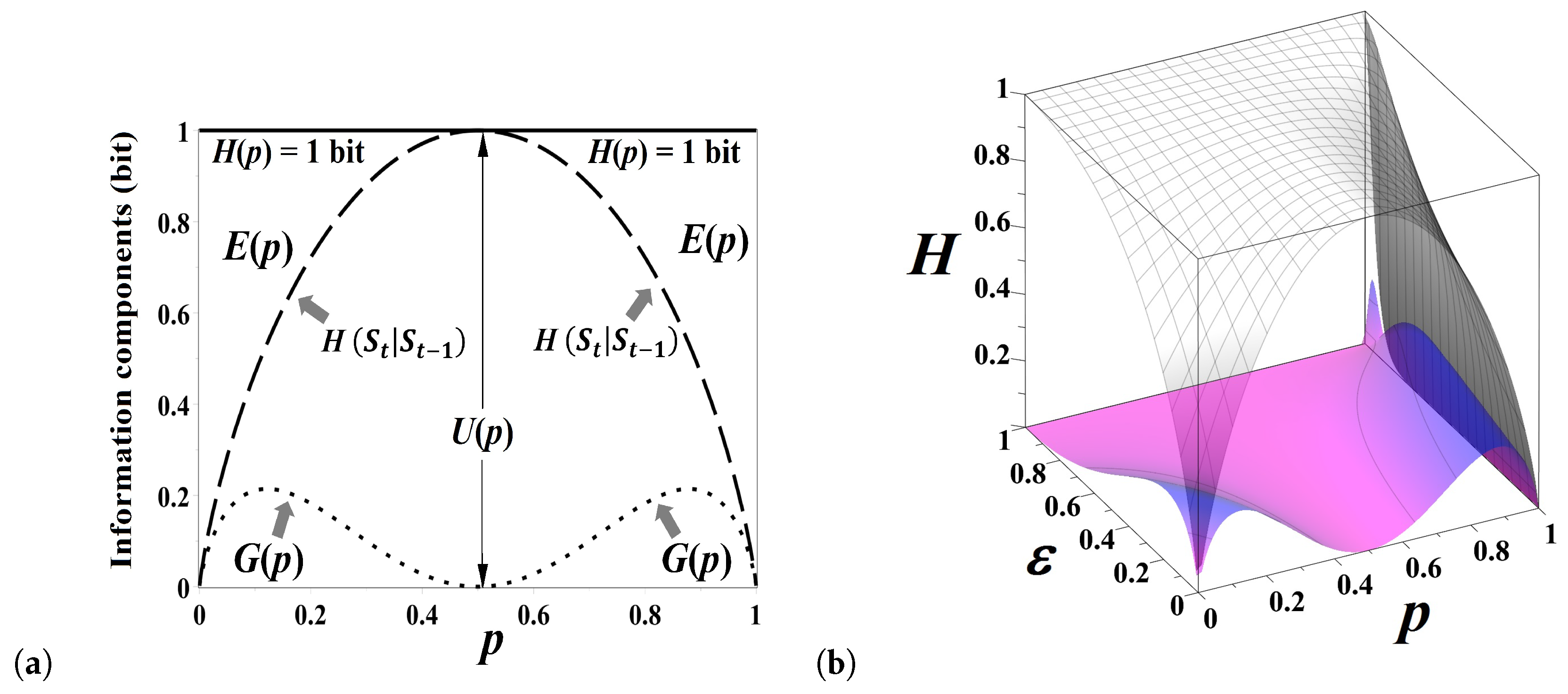

. In the present section, for simplicity, we discuss the case of a symmetric chain,

. For a symmetric chain, the densities of both states are equal,

, so that

bit, uniformly for all

(

Figure 5a). The excess entropy Equation (

9) quantifying predictable information encoded in the historical sequence of shown sides for a symmetric chain reads as follows [

38]:

Forecasting the future state through discovering patterns in sequences of shown sides Equation (

22) loses any predictive power when the coin is fair,

, but

bit when the series is stationary (i.e.,

, or

). The mutual information Equation (

10) measuring the reliability of the guess about the future state provided the present state is known [

38],

increases as

(

) attaining maximum at

(

). The effect of destructive interference between two incompatible hypotheses about alternating the current state (

) and repeating the current state (

) culminates in fading this information component when the coin is fair,

(

Figure 5a). The difference between the entropy rate

and the mutual information

may be viewed as the “

degree of fairness” of the coin that attains maximum (

bit) for the fair coin

(see

Figure 5a).

The entropy decomposition presented in

Figure 5a for “integer”-order flipping (

) evolves over the fractional order parameter,

as shown in

Figure 5b: the decomposition of entropy shown in

Figure 5a corresponds to the outer face of the three-dimensional

Figure 5b. When

, the sequence of coin sides shown in integer flipping is stationary, so that there is no uncertainty about the coin tossing outcome. However, the amount of uncertainty for

grows to 1 bit, for fractional flipping as

. When

, the repetition probability of coin sides equals its relative frequency,

, and therefore the uncertainty about the future state of the chain cannot be reduced at all,

bit. Interestingly, there is some gain of predictable information component

for

as

(see

Figure 5b). The information component

quantifies the quality of a guess of the flipping outcome from the present state of the chain, so that the gain observed in

Figure 5b might be interpreted as the reduction of uncertainty in a stationary sequence due to the choice of the present state, “0” or “1”. Despite the dramatic demise of unpredictable information for fractional flipping as

, the fair coin (

) always stays fair.
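The evolution described in this section can be sketched numerically for a symmetric chain. The Python code below rests on two assumptions that are not taken verbatim from the equations of the paper: the fractional repetition probability is given the closed form 1 − (1 − p)/(2(1 − p))^eps, with eps between 0 and 1 denoting the fractional order parameter, and the three information components are computed as the excess entropy, the conditional mutual information, and the entropy of the present state conditional on the future and past states. The function names are hypothetical.

```python
import math


def binary_entropy(x):
    """Entropy in bits of a two-outcome distribution (x, 1 - x)."""
    if x <= 0.0 or x >= 1.0:
        return 0.0  # convention 0 * log 0 = 0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)


def fractional_repeat_probability(p, eps):
    """Assumed closed form of the fractional repetition probability, p = q < 1."""
    return 1.0 - (1.0 - p) / (2.0 * (1.0 - p)) ** eps


def components(r):
    """(excess entropy, mutual information, unpredictable part) in bits
    for a symmetric chain with repetition probability r (entropy is 1 bit)."""
    h_one_step = binary_entropy(r)
    h_two_step = binary_entropy(r * r + (1.0 - r) ** 2)
    return 1.0 - h_one_step, h_two_step - h_one_step, 2.0 * h_one_step - h_two_step


orders = (0.0, 0.25, 0.5, 0.75, 1.0)

# A strictly alternating coin (p = q = 0) followed over the order parameter.
alternating_path = [components(fractional_repeat_probability(0.0, e)) for e in orders]

# A fair coin (p = q = 1/2) followed over the same orders.
fair_path = [components(fractional_repeat_probability(0.5, e)) for e in orders]
```

Under these assumptions the fair coin keeps its repetition probability of one half at every order, so its single bit of entropy stays entirely unpredictable, whereas the strictly alternating coin moves from fully predictable at the minimal order to fully unpredictable at the maximal order.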