Abstract

The entropy production in stochastic dynamical systems is linked to the structure of their causal representation in terms of Bayesian networks. Such a connection was formalized for bipartite (or multipartite) systems with an integral fluctuation theorem in [Phys. Rev. Lett. 111, 180603 (2013)]. Here we introduce the information thermodynamics for time series, which are non-bipartite in general, and we show that the link between irreversibility and information can only result from an incomplete causal representation. In particular, we consider a backward transfer entropy lower bound to the conditional time series irreversibility that is induced by the absence of feedback in signal-response models. We study such a relation in a linear signal-response model providing analytical solutions, and in a nonlinear biological model of receptor-ligand systems where the time series irreversibility measures the signaling efficiency.

1. Introduction

The irreversibility of a process is the possibility of inferring the existence of an arrow of time by looking at an ensemble of realizations of its dynamics [1,2,3]. This concept appears in nonequilibrium thermodynamics in the quantification of dissipated work or entropy production [4,5,6], and it relates the probability of paths to that of their time-reversal conjugates [7].

Fluctuation theorems have been developed to describe the statistical properties of the entropy production and its relation to information-theoretic quantities in both Hamiltonian and Langevin dynamics [8,9,10]. Particular attention was given to measurement-feedback controlled models [11,12] inspired by Maxwell’s demon [13], a gedanken experiment in which mechanical work is extracted from thermodynamic systems using information. An ongoing experimental effort is devoted to the design and optimization of such information engines [14,15,16,17].

Theoretical studies clarified the role of fluctuations in feedback processes described by bipartite (or multipartite) stochastic dynamics, where fluctuation theorems set lower bounds on the entropy production of subsystems in terms of the Horowitz-Esposito information flow [18,19,20], or in terms of the transfer entropy [21,22] in the interaction between subsystems. Those inequalities form the second law of information thermodynamics [23], whose latest generalization was given in the form of geometrical projections into local reversible dynamics manifolds [24,25].

Time series can be obtained by measuring continuous underlying dynamics at a finite frequency, and this is the case for most real data. A measure of irreversibility for time series was defined in [26] as the Kullback–Leibler divergence [27] between the probability density of a time series realization and that of its time-reversal conjugate. Time series are non-bipartite in general, and this prevents an unambiguous identification and additive separation of the physical entropy production or heat exchanged with thermal baths [28]. As a consequence, the time series irreversibility does not generally converge to the physical bipartite (or multipartite) entropy production in the limit of high sampling frequency, except for special cases such as Langevin systems with constant diffusion coefficients, as we will discuss here.

The time series irreversibility measure defined in [26] depends on the statistical properties of the whole time series for non-Markovian processes. We define a measure of irreversibility that considers only the statistics of single transitions, and we call it mapping irreversibility. It recovers the irreversibility of whole time series only for Markovian systems. We study fluctuations of the mapping irreversibility by introducing its stochastic counterpart. In the bivariate case of two interacting variables x and y, we study the conditional stochastic mapping irreversibility, defined as the difference between that of the joint process and that of a single marginal subsystem [29].

We define signal-response models as continuous-time stationary stochastic processes characterized by the absence of feedback. In the bidimensional case, a signal-response model consists of a fluctuating signal x and a dynamic response y. In a recent work [30] we studied the information processing properties of linear (multidimensional) signal-response models. In that framework we defined a measure of causal influence to quantify how the macroscopic effects of asymmetric interactions are observed over time.

The backward transfer entropy is the standard transfer entropy [27] calculated in the ensemble of time-reversed trajectories. It was already shown to have a role in stochastic thermodynamics, in gambling theory, and in anti-causal linear regression models [21].

We derive an integral fluctuation theorem for time series of signal-response models that involves the backward transfer entropy. From this follows the II Law of Information thermodynamics for signal-response models, i.e., that the backward transfer entropy of the response y on the past of the signal x is a lower bound to the conditional mapping irreversibility. Please note that in general the only lower bound on the conditional entropy production is zero. Our result then shows that in time series it is the asymmetry of the interaction between subsystems x and y, namely the absence of feedback, that links irreversibility with information.

For the basic linear signal-response model (BLRM) discussed in [30], in the limit of small observational time the backward transfer entropy converges to the causal influence. Also, in the BLRM, we find that the causal influence rate converges to the Horowitz-Esposito [18] information flow.

A key quantity here is the observational time, and the detection of the irreversibility of processes from real (finite) time series data is based on a fine-tuning of this parameter. We introduce this discussion with a biological model of receptor-ligand systems, where the entropy production measures the robustness of signaling.

The motivation of the present work is a future application of the stochastic thermodynamics framework to the analysis of time series data in biology and finance.

The paper is structured as follows. In Section 2 we provide a general introduction to stochastic thermodynamics, introducing the irreversible entropy production. In Section 3 we state the setting and formalism we use for the treatment of bivariate time series, we define the stochastic (conditional) mapping irreversibility, we introduce the irreversibility density, and we review the general integral fluctuation theorem [31]. In Section 4 we discuss the integral fluctuation theorem for signal-response models involving the backward transfer entropy, and in Section 5.1 and Section 5.2 we show how it applies to the BLRM and to a biological model of receptor-ligand systems. In the Discussion section we review the results and further motivate our work. We provide an Appendix section where the analytical results for the mapping irreversibility, for the backward transfer entropy, and for the Horowitz-Esposito information flow in the BLRM are discussed.

2. Introduction to Continuous Information Thermodynamics

2.1. Entropy Production in Heat Baths

Let us consider an ensemble of trajectories generated by a Markovian (memoryless) continuous-time stochastic process composed of two interacting variables x and y subject to Brownian noise. The stochastic differential equations (SDEs) describing such processes can be written in the Ito interpretation [32] as:

where and are diffusion coefficients whose dependence takes into account the case of multiplicative noise. Brownian motion is characterized by . The dynamics in (1) is bipartite, which means conditionally independent in updating: .

The bipartite structure of (1) is fundamental in stochastic thermodynamics, because it allows the identification [28] and additive separation [18] of the heat exchanged with thermal baths in separate contact with x and y subsystems, . These are given by the detailed balance relation [5,10]:

where is defined as the event of variable x assuming value at time , and similarly is the event of variable x assuming value at time t. An analogous expression to (2) holds for subsystem y. Time-integrals of the updating probabilities and can be written in terms of the SDE (1) using Onsager-Machlup action functionals [19,33].

Stochastic thermodynamics quantities are defined in single realizations of the probabilistic dynamics, in relation to the ensemble distribution [31,34]. As an example, the stochastic joint entropy is , and its thermal (ensemble) average is the macroscopic entropy . The explicit time dependence of describes the ensemble dynamics that in stationary processes is a relaxation to steady state. A SDE system such as (1) can be transformed into an equivalent partial differential equation in terms of probability currents [35], that is, the Fokker-Planck equation . Probability currents are related to average velocities with .

2.2. Feedbacks and Information

The stochastic entropy of subsystem x unaware of the other subsystem y is , and its time variation is . The apparent entropy production of subsystem x with its heat bath is , and its thermal average can be negative due to the interaction with y, in apparent violation of the thermodynamics II Law. This is the case of Maxwell’s demon strategies [11,12], where information on x gained by the measuring device y is exploited to exert a feedback force and extract work from x, such as in the feedback cooling of a Brownian particle [19,36]. Integral fluctuation theorems [10,23] (IFTs) provide lower bounds on the subsystems’ macroscopic entropy production and extracted work in terms of information-theoretic measures [27]. The stochastic mutual information is defined as , where “” stands for stochastic. Its time derivative can be separated into contributions corresponding to single trajectory movements and ensemble probability currents in the two directions, . The stochastic information flux in the x direction has the form , where . An analogous expression holds for . The thermal average is the Horowitz-Esposito information flow [18,36]. At steady state it takes the form:

A recent formulation [19] upper bounds the average work extracted in feedback systems that in the steady-state bipartite framework is proportional to the x bath entropy change , with the information flow (3) towards the y sensor. Such a result is recovered with a different formulation in terms of transfer entropies, and it is the Ito inequality [10,21,24] that reads:

where forward and backward stochastic transfer entropy [22] are respectively defined as , and .

2.3. Irreversible Entropy Production

The stochastic (total) irreversible entropy production [18,24,36] of the joint system and thermal baths is:

where is the joint system stochastic entropy change. If the ensemble is at steady state . If we further assume that diffusion coefficients in (1) are nonzero constants, and this is the case of Langevin systems [28] where these are proportional to the temperature, then the conditional probability is equivalent to under the time integral sign [10]. More precisely the term almost surely vanishes. Then the irreversible entropy production (5) takes the form:

Equation (6) shows the connection between entropy production and irreversibility of trajectories. The thermal average has the form of a Kullback–Leibler divergence [26,27] and satisfies . Using the Ito inequality [10,21,24] for both and does not lead to a positive lower bound in continuous (bipartite) stationary processes: . Nevertheless, it is clear that the irreversible entropy production is strictly positive when the interaction between subsystems is nonconservative [9]. Our main interest is the stationary dissipation due to asymmetric interactions between subsystems, and how it is manifested in time series.

3. Bivariate Time Series Information Thermodynamics

3.1. Setting and Definition of Causal Representations

Let us assume that we can measure the state of the system at a frequency , thus obtaining time series. The finite observational time makes the updating probability not bipartite: . Therefore, a clear identification of thermodynamic quantities in time series is not possible. Let us take the Markovian SDE system (1) as the underlying process, and let us further assume stationarity. Then the statistical properties of time series obtained from a time discretization can be represented in the form of Bayesian networks, where links correspond to the way in which the joint probability density of states at the two instants t and is factorized. Still, such a factorization can be carried out in multiple ways. We say that a Bayesian network is a causal representation of the dynamics if conditional probabilities are expressed in such a way that variables at time depend on variables at the same time instant or on variables at the previous time instant t (and not vice-versa), and if the dependence structure is chosen so as to minimize the total number of conditions on the probabilities. This corresponds to a minimization of the number of links in the Bayesian network describing the dynamics with observational time . Importantly, the causal representation is a way of factorizing the joint probability , and not a claim of causality between observables.

We define the combination as a pair of successive states of the joint system separated by a time interval , . We use the identity functional for an unambiguous specification of the backward combination . This is defined as the time-reversed conjugate of the combination , meaning the inverted pair of the same two successive states, . We defined backward variables of the type meaning , such correspondences being possible only when states at both times t and are given. The subsystems variables and backward variables are similarly defined as , , , and .

3.2. Definition of Mapping Irreversibility and the Standard Integral Fluctuation Theorem

A measure of coarse-grained entropy production for time series can be defined replacing with the nonzero observational time in the general expression (5) obtaining:

where we assumed stationarity, . By definition converges to the physical entropy production in the limit , and it is a lower bound to it [37]. Importantly, such coarse-grained entropy production cannot have the form of an irreversibility measure such as (6) because . With “irreversibility form” we mean that its thermal average is a Kullback–Leibler divergence measuring the distinguishability between forward and time-reverse paths. Therefore, we decided to keep the widely accepted time series irreversibility definition given in [26], in its form for stationary Markovian systems. Anyway, we are interested in the time-reversal asymmetry of time series from even more general models or data where no identification of thermodynamic quantities is required.

For the study of fluctuations, we define the stochastic mapping irreversibility with observational time of the joint system as:

The thermal average is called mapping irreversibility, , and it describes the statistical properties of a single transition over an interval . However, since the underlying dynamics (1) is Markovian, it also describes the irreversibility of arbitrarily long time series.

Let us note that does not generally converge to the total physical entropy production (5) in the limit because of the non-bipartite structure of for any . It does converge anyway in most physical situations where bipartite underlying dynamics such as (1) has constant and strictly positive diffusion coefficients, and this is the case of Langevin systems. This is because the Brownian increments and are dominating the dynamics for small intervals , then the estimate of (with ) based on is improved with the knowledge of just by a term of order , where we assumed a smooth . Therefore in the limit it is almost surely and , see Appendix D.

The stochastic mapping irreversibility satisfies the standard integral fluctuation theorem [31]:

where , , and is the whole space of the combination . From the convexity of the exponential function it follows that the mapping irreversibility is non-negative. This is the standard thermodynamics II Law inequality for the joint system time series:

Similarly, we define the stochastic mapping irreversibility for the two subsystems as and , these being called the marginals [29]. Their ensemble averages are respectively denoted and , and they also satisfy the standard II Law.
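The definitions above can be checked numerically on a toy example. The following is a minimal sketch, assuming a discrete-state stationary Markov chain as a stand-in for the sampled process (the transition matrices and the function names are illustrative choices, not taken from the paper). It evaluates the mapping irreversibility as the Kullback–Leibler divergence between pairs of successive states and their time-reversed conjugates, and it verifies that the average of the exponential of minus the stochastic mapping irreversibility equals one.

```python
import numpy as np

def stationary_distribution(P):
    """Stationary distribution of a row-stochastic matrix P."""
    evals, evecs = np.linalg.eig(P.T)
    k = np.argmin(np.abs(evals - 1.0))   # eigenvalue closest to 1
    pi = np.real(evecs[:, k])
    return pi / pi.sum()

def mapping_irreversibility(P):
    """Return (Phi, ift) for one transition of a stationary Markov chain.

    Phi is the KL divergence between forward pairs p(a, b) and their
    time-reversed conjugates p(b, a); ift is <exp(-phi)>.
    """
    pi = stationary_distribution(P)
    joint = pi[:, None] * P              # joint[a, b] = p(a at t, b one step later)
    phi = np.log(joint / joint.T)        # stochastic mapping irreversibility
    Phi = np.sum(joint * phi)            # ensemble average
    ift = np.sum(joint * np.exp(-phi))   # integral fluctuation theorem check
    return Phi, ift

# Hypothetical driven 3-state cycle (irreversible).
P_cycle = np.array([[0.1, 0.8, 0.1],
                    [0.1, 0.1, 0.8],
                    [0.8, 0.1, 0.1]])
# Hypothetical symmetric chain (detailed balance holds, hence reversible).
P_rev = np.array([[0.6, 0.2, 0.2],
                  [0.2, 0.6, 0.2],
                  [0.2, 0.2, 0.6]])

Phi_cycle, ift_cycle = mapping_irreversibility(P_cycle)
Phi_rev, ift_rev = mapping_irreversibility(P_rev)
print(Phi_cycle, ift_cycle, Phi_rev, ift_rev)
```

In this sketch the driven cycle gives a strictly positive mapping irreversibility, while the symmetric chain gives zero up to rounding; in both cases the fluctuation theorem average equals one.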

Although bivariate time series derived from the joint process (1) are Markovian, the one-dimensional subsystem time series are generally not. This is because subsystem trajectories are a coarse-grained representation of the full dynamics, and to reproduce the statistical properties of those trajectories a non-Markovian dynamics has to be assumed. Therefore and are generally different from the irreversibility calculated on a whole time series. The mapping irreversibility describes the statistical properties of the whole time series only if it is Markovian. This is surely the case if x is not influenced by y in (1), , and this motivated our study of signal-response models [30].

We define the conditional mapping irreversibility of y given x as the difference between the mapping irreversibility of the joint system and the mapping irreversibility of system x alone:

This can be considered as a time series generalization to the conditional entropy production introduced in [29]. Also, the conditional mapping irreversibility satisfies the standard integral fluctuation theorem and the corresponding II Law-like inequality .

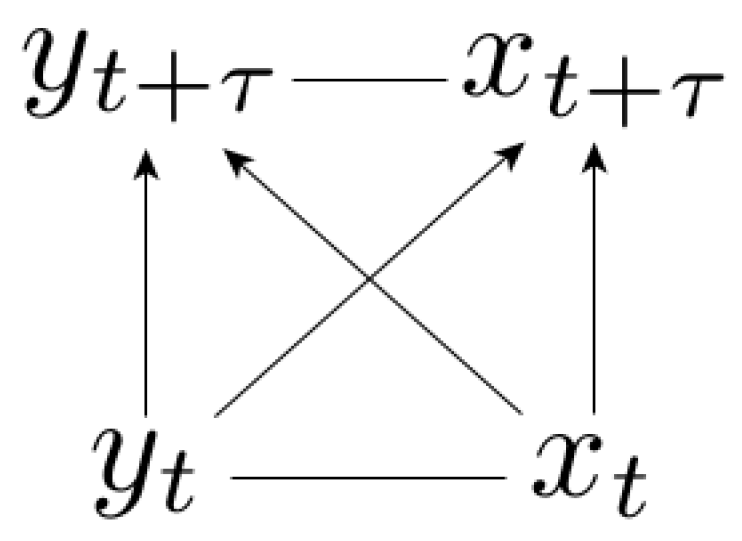

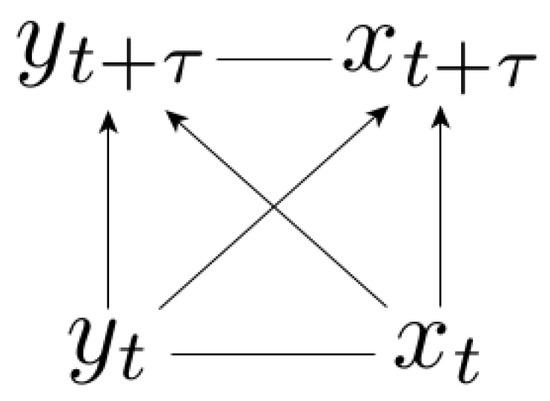

In the general case where the evolution of each variable is influenced by the other variable (Equation (1)), we have a complete causal representation resulting from the dynamics (Figure 1), meaning that all edges are present in the Bayesian network. Please note that the horizontal arrows are non-directed because of the factorization degeneracy, meaning that the causal representation is given by multiple network topologies. In the complete case (Figure 1) we were not able to provide a more accurate characterization of the conditional mapping irreversibility than the one given by the standard fluctuation theorem and the corresponding II Law-like inequality, .

Figure 1. Complete causal representation. The arrows represent the way we factorize the joint probability density. In the complete case the causal representation is fully degenerate: .

Let us recall that the inequalities for continuous bipartite systems [10,18,19,21,24] apply to the apparent entropy production , and do not influence the total irreversible entropy production . Similarly, those results do not influence the mapping irreversibility and the conditional mapping irreversibility , for which in general only the standard zero lower bound can be provided.

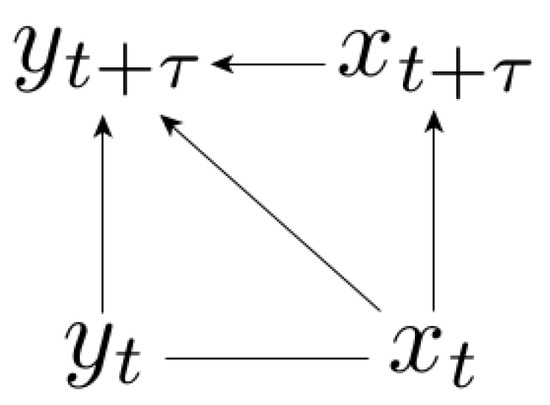

We aim to relate the irreversibility of time series (8) to the (discrete) information flow between subsystems variables over time. We argue that fluctuation theorems linking the irreversibility of time series with information arise because of missing edges in the causal representation of the dynamics in terms of Bayesian networks. In the bivariate case there is only one class of time series generated from continuous underlying dynamics for which integral fluctuation theorems involving information measures can be written, and it corresponds to dynamics without feedback: the signal-response models.

3.3. Ito Inequality for Time Series

The Ito fluctuation theorem [10,21,24] for bipartite non-Markovian dynamics can be extended to Markovian non-bipartite time series if we modify the subsystems apparent entropy production into the explicitly non-bipartite form :

Then the Ito fluctuation theorem for time series is written:

In stationary processes the mutual information is time invariant, , and (13) implies the II Law-like inequality:

Similar to the apparent entropy production for bipartite systems, also is not ensured to be positive if system x acts like a Maxwell’s demon.

The definition (12) does not have a clear physical meaning in time series, apart from its convergence to the apparent entropy production for , again for a continuous underlying dynamics with constant nonzero diffusion coefficients. In addition, does not have the form of a Kullback–Leibler divergence, and is then not considered a measure of irreversibility. Therefore, in the following we will be interested instead in the conditional mapping irreversibility (Equation (11)). Importantly, there is no general connection between and information measures, and such connection will result instead from the topology of the causal representation in signal-response models.

3.4. The Mapping Irreversibility Density

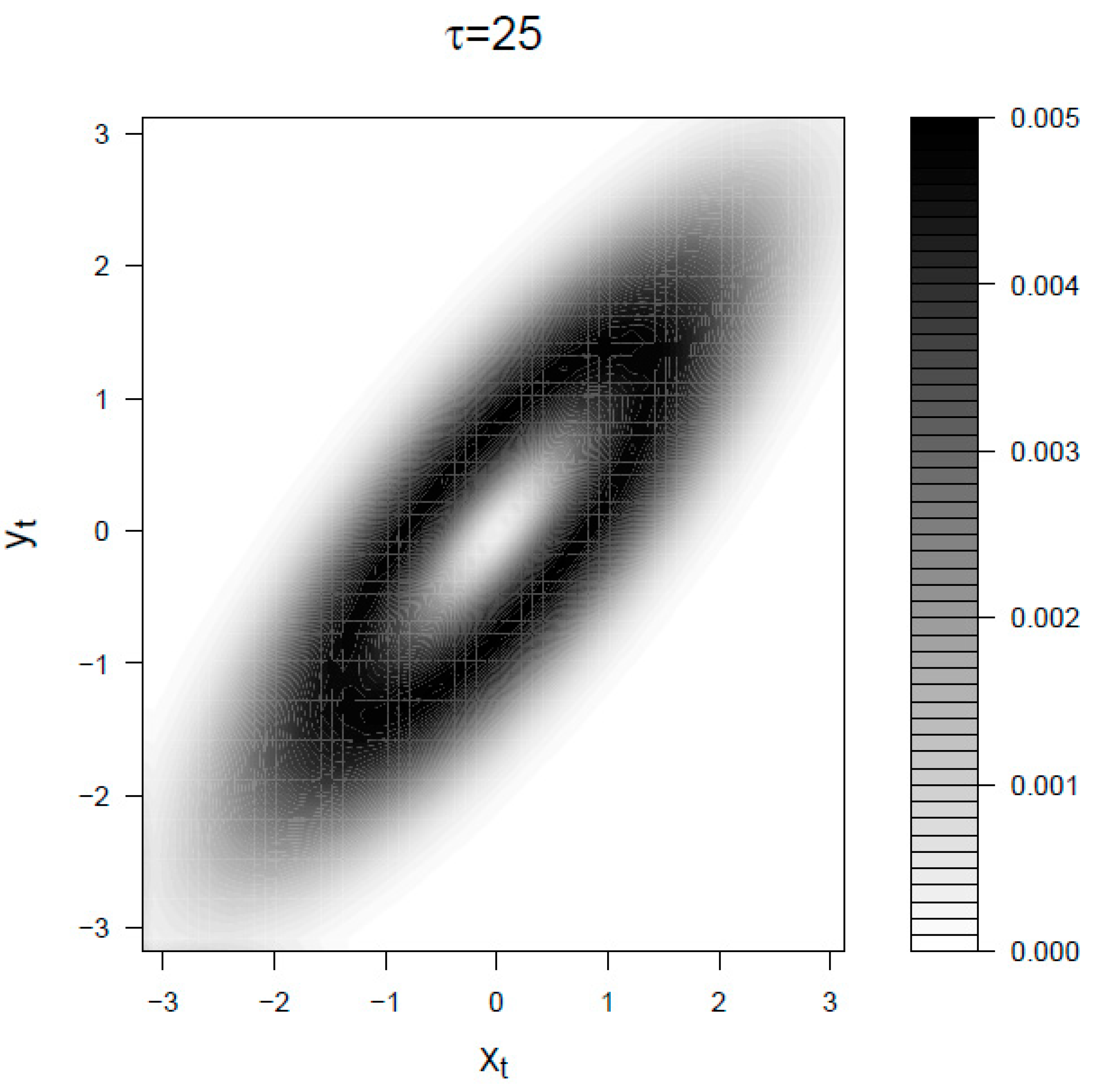

Let us use an equivalent representation of the mapping irreversibility in terms of backward probabilities [38] defined as . For stationary processes it holds and . We introduce here the mapping irreversibility density (with observational time ) for stationary processes as:

The mapping irreversibility density tells us which situations contribute more to the time series irreversibility of the macroscopic process. is proportional to the distance (precisely to the Kullback–Leibler divergence [27]) of the distribution of future states to the distribution of past states of the same condition .
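As an illustration of this decomposition, the sketch below assumes a discrete-state stationary Markov chain (a hypothetical 3-state example, not one of the models in this paper). The density at each state is computed as the stationary probability of that state times the Kullback–Leibler divergence between the distribution of future states and the distribution of past states given that state, and the sum over states is compared with the mapping irreversibility.

```python
import numpy as np

# Hypothetical 3-state transition matrix, P[a, b] = p(b at t + tau | a at t).
P = np.array([[0.1, 0.8, 0.1],
              [0.2, 0.1, 0.7],
              [0.6, 0.3, 0.1]])

# Stationary distribution by power iteration.
pi = np.full(3, 1.0 / 3.0)
for _ in range(10_000):
    pi = pi @ P
pi = pi / pi.sum()

joint = pi[:, None] * P              # joint[a, b] = p(a at t, b at t + tau)
forward = P                          # distribution of future states given a
backward = joint.T / pi[:, None]     # backward[a, b] = p(b at t - tau | a at t)

# Irreversibility density: one non-negative contribution per present state a.
density = pi * np.sum(forward * np.log(forward / backward), axis=1)

# Mapping irreversibility computed directly from forward and reversed pairs.
Phi = np.sum(joint * np.log(joint / joint.T))
print(density, density.sum(), Phi)
```

Each entry of `density` is non-negative, and the entries sum to the mapping irreversibility, which is what makes the density a useful map of where the irreversibility is generated.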

4. The Fluctuation Theorem for Time Series of Signal-Response Models

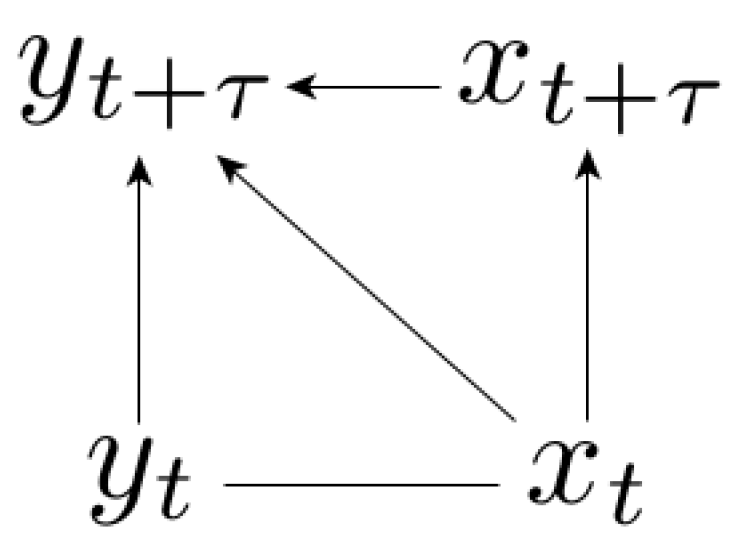

If the system is such that the variable y does not influence the dynamics of the variable x, then we are dealing with signal-response models (Figure 2). The stochastic differential equation for signal-response models is written in the Ito representation [32] as:

The absence of feedback is expressed by . As a consequence, the conditional probability satisfies , and the corresponding causal representation is incomplete (see the Bayesian network in Figure 2). In other words, signal-response models are specified by the property that is conditionally independent of given .

Figure 2. Causal representation of signal-response models. The joint probability density is factorized into .

For signal-response models we can provide a lower bound on the entropy production that is more informative than Equation (10), and that involves the backward transfer entropy . The backward transfer entropy [21] is a measure of discrete information flow towards the past, and is here defined as the standard transfer entropy for the ensemble of time-reversed combinations . The stochastic counterpart as a function of is defined as:

where stands for stochastic.

Then by definition . We keep the same symbol as the standard transfer entropy because in stationary processes the backward transfer entropy is the standard transfer entropy (calculated on forward trajectories) for negative shifts .

The fluctuation theorem for time series of signal-response models is written:

where we used the signal-response property of no feedback , the stationarity property , the correspondence , and the normalization property .

From the convexity of the exponential follows the II Law of Information thermodynamics for time series of signal-response models:

and this is the central relation we wish to study in the Applications section hereafter.
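The fluctuation theorem and the resulting inequality can be checked numerically on a toy example. The following is a minimal sketch under stated assumptions: the continuous process is replaced by a discrete-state stationary Markov chain with randomly drawn transition probabilities, the signal x updates independently of y (no feedback), and the backward transfer entropy is taken to be the conditional mutual information between the past signal state and the future response state given the future signal state. All array names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
nx, ny = 3, 3

# A[x, x2] = p(x2 | x): the signal evolves on its own (no feedback from y).
A = rng.random((nx, nx)) + 0.1
A /= A.sum(axis=1, keepdims=True)
# B[x, y, x2, y2] = p(y2 | x, y, x2): the response is driven by the signal.
B = rng.random((nx, ny, nx, ny)) + 0.1
B /= B.sum(axis=3, keepdims=True)

# Joint transition kernel and its stationary distribution (power iteration).
K = A[:, None, :, None] * B
Kmat = K.reshape(nx * ny, nx * ny)
pi = np.full(nx * ny, 1.0 / (nx * ny))
for _ in range(5000):
    pi = pi @ Kmat
pi = (pi / pi.sum()).reshape(nx, ny)

p4 = pi[:, :, None, None] * K          # p(x, y, x2, y2)
p4_rev = p4.transpose(2, 3, 0, 1)      # time-reversed pair: p(x2, y2, x, y)
p_xx = p4.sum(axis=(1, 3))             # p(x, x2)

# Stochastic conditional mapping irreversibility: joint minus marginal of x.
phi_cond = np.log(p4 / p4_rev) - np.log(p_xx / p_xx.T)[:, None, :, None]

# Stochastic backward transfer entropy, a function of (x, x2, y2).
p_xx2y2 = p4.sum(axis=1)               # p(x, x2, y2)
p_x2 = p_xx.sum(axis=0)                # p(x2)
p_x2y2 = p_xx2y2.sum(axis=0)           # p(x2, y2)
t_st = np.log(p_xx2y2 * p_x2[None, :, None]
              / (p_xx[:, :, None] * p_x2y2[None, :, :]))[:, None, :, :]

Phi_cond = np.sum(p4 * phi_cond)       # conditional mapping irreversibility
T_back = np.sum(p4 * t_st)             # backward transfer entropy
ift = np.sum(p4 * np.exp(-phi_cond + t_st))
print(Phi_cond, T_back, ift)
```

In this sketch `ift` equals one up to rounding and `Phi_cond` is not smaller than `T_back`. Replacing the kernel with one in which the update of x also depends on y generally breaks the equality, which illustrates the role played by the missing feedback edge.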

In the limit of and constant nonzero diffusion coefficients, converges to the heat exchanged with the thermal bath attached to subsystem y, . Therefore, the inequality (19) converges to the Ito inequality (14) in its form for Markovian signal-response bipartite systems [21,24] as expected. Indeed, in signal-response models , , and (14) transforms into (19). Therefore (19) can be regarded as an extension to (non-bipartite) time series of the Ito inequality [10,21,24]. This shows that in time series it is the asymmetry of the interaction between subsystems x and y, namely the absence of feedback, that links irreversibility with information.

Please note that is equivalent to the original time series irreversibility [26] because the x time series is Markovian in the absence of feedback.

In causal representations of correlated stationary processes, the factorization of is unnecessary, and only the structure of the transition probability has to be specified. Please note that the direction of the horizontal - arrow is never specified (see Figure 1 and Figure 2). In the complete (symmetric) case with feedback we also do not specify the direction of the horizontal - arrow because of the full degeneracy (see Figure 1). The importance of the causal representation is seen in signal-response models (Figure 2) because we could have decomposed the transition probability as well into the non-causal decomposition , but this does not lead to the fluctuation theorem (18).

5. Applications

5.1. The Basic Linear Response Model

We study the II Law for signal-response models (Equation (19)) in the BLRM, whose information processing properties are already discussed in [30]. The BLRM is composed of a fluctuating signal x described by the Ornstein-Uhlenbeck process [39,40], and a dynamic linear response y to this signal:

The response y is considered in the limit of weak coupling with the thermal bath , while the signal is attached to the source of noise, .
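To make the model concrete, the sketch below simulates a linear signal-response pair with the Euler-Maruyama scheme and then subsamples it at a finite observational time. It assumes the signal obeys an Ornstein-Uhlenbeck equation of the form dx = -(x / t_rel) dt + sqrt(D) dW and the response obeys the noiseless linear equation dy = (alpha x - beta y) dt. The parameter names `t_rel`, `D`, `alpha`, `beta` and all numerical values are assumptions made for illustration.

```python
import numpy as np

def simulate_blrm(t_rel=1.0, D=2.0, alpha=1.0, beta=1.0,
                  dt=0.01, n_steps=200_000, seed=0):
    """Euler-Maruyama integration of an assumed linear signal-response model."""
    rng = np.random.default_rng(seed)
    dW = rng.normal(0.0, np.sqrt(dt), size=n_steps)   # Brownian increments
    x = np.empty(n_steps + 1)
    y = np.empty(n_steps + 1)
    x[0], y[0] = 0.0, 0.0
    for k in range(n_steps):
        # Signal: Ornstein-Uhlenbeck process, not influenced by y.
        x[k + 1] = x[k] - (x[k] / t_rel) * dt + np.sqrt(D) * dW[k]
        # Response: deterministic linear relaxation driven by the signal.
        y[k + 1] = y[k] + (alpha * x[k] - beta * y[k]) * dt
    return x, y

x, y = simulate_blrm()
burn = 1000        # discard the initial transient
tau_steps = 50     # observational time tau = tau_steps * dt
x_obs, y_obs = x[burn::tau_steps], y[burn::tau_steps]
print(np.var(x[burn:]), len(x_obs))
```

For these assumed equations the stationary variance of the signal is D t_rel / 2, which offers a quick sanity check of the integration step. The subsampled pair (`x_obs`, `y_obs`) plays the role of the bivariate time series with finite observational time.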

This model allows analytical representations for the mapping irreversibility (calculated in Appendix A) and the backward transfer entropy (calculated in Appendix B). We find that once the observational time is specified, and are both functions of just the two parameters and , which describe respectively the time scale of the fluctuations of the signal and the time scale of the response to a deterministic input. In addition, if we choose to rescale the time units by to compare fluctuations of different time scales, we find that the irreversibility measures are functions of just the product , which is then the only free parameter in the model.

Since the signal is a time-symmetric (reversible) process, , the backward transfer entropy is the lower bound on the total entropy production in the BLRM.

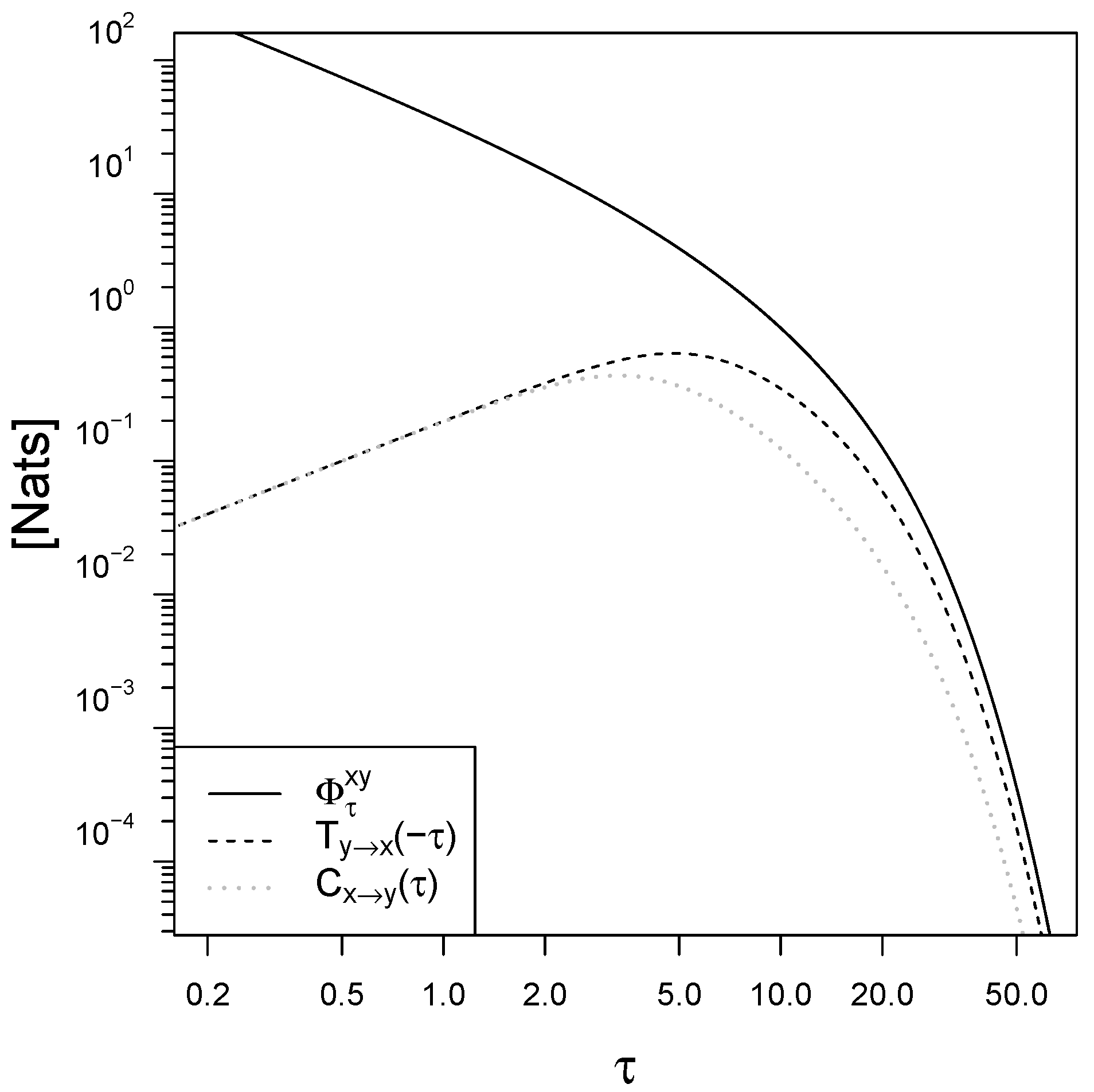

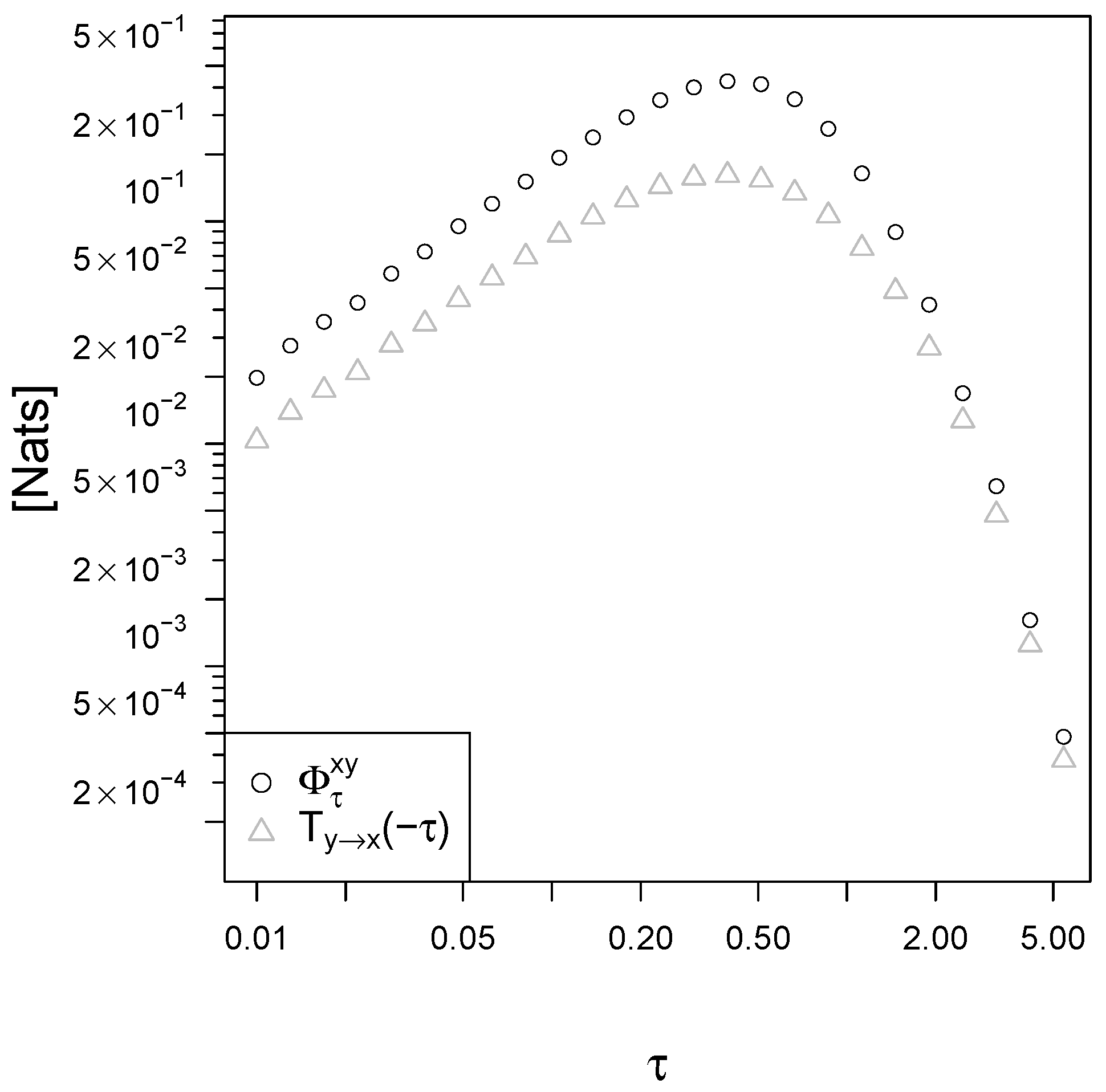

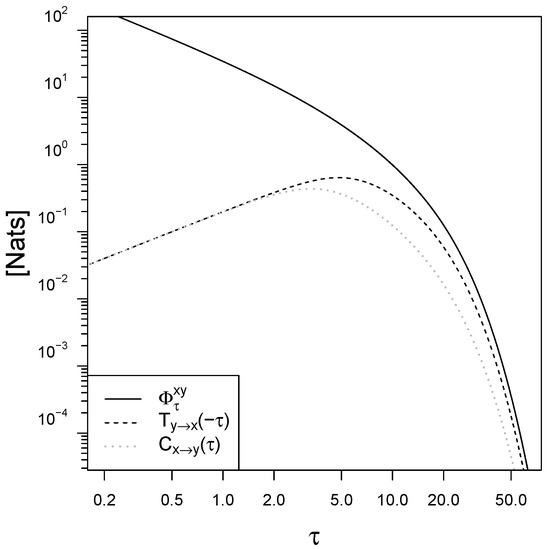

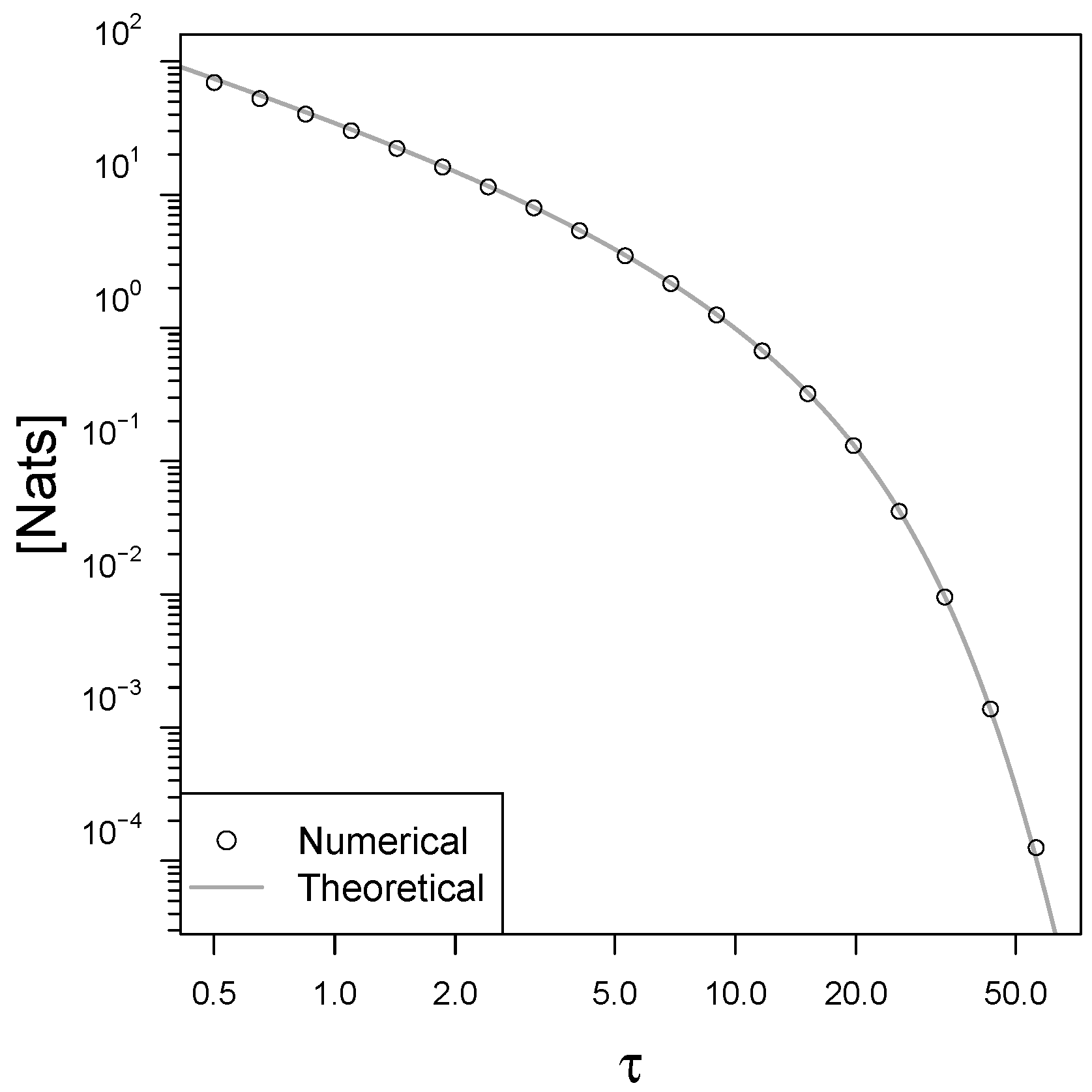

The plot in Figure 3 shows the mapping irreversibility and the backward transfer entropy as functions of the observational time . In the limit of small , the entropy production diverges because of the deterministic nature of the response dynamics (the standard deviation on the determination of the velocity due to instantaneous movements of the signal vanishes as ). The backward transfer entropy instead vanishes for because the Brownian motion has nonzero quadratic variation [32] and is the dominating term in the signal dynamics for small time intervals. In the limit of large observational time intervals the entropy production is asymptotically double the backward transfer entropy, which is its lower bound given by the II Law for signal-response models (Equation (19)), . Interestingly, this limiting ratio of 2 is valid for any choice of the parameters in the BLRM.

Figure 3. Mapping irreversibility , backward transfer entropy and causal influence in the BLRM as a function of the observational time interval . The parameters are and . All graphs are produced using R [41].

Let us recall the definition of causal influence as a measure of information flow for time series [30]:

is the redundancy measure quantifying that fraction of time-lagged mutual information that the signal gives on the evolution of the response that is already contained in the knowledge of the current state of the response [42].

Interestingly, for small observational time , the causal influence of the signal on the evolution of the response converges to the backward transfer entropy of the response on the past of the signal , and they both vanish with . Also, the causal influence rate defined as converges to the Horowitz-Esposito [18] information flow (details in Appendix C).

For large observational time instead the causal influence converges to the standard (forward) transfer entropy . Also, in this limit , the causal influence is an eighth of the entropy production for any choice of the parameters in the BLRM.

In general, the limit corresponds to recording the system state at a rate that is much slower compared to any stationary dynamics, so that only an exponentially small time-delayed information flow is observed. Similarly, time asymmetries in the dynamics become less visible and the irreversibility measures vanish.

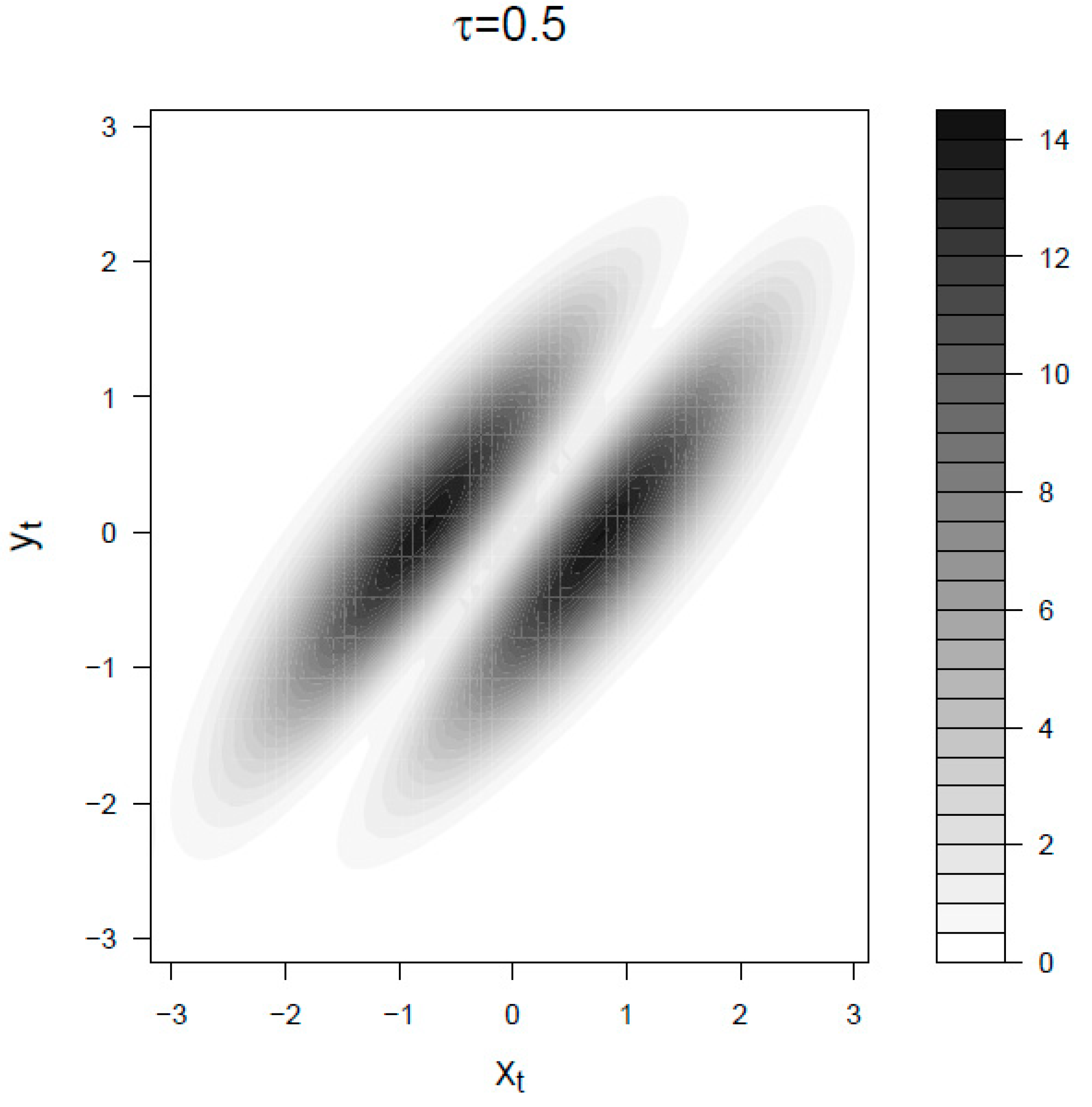

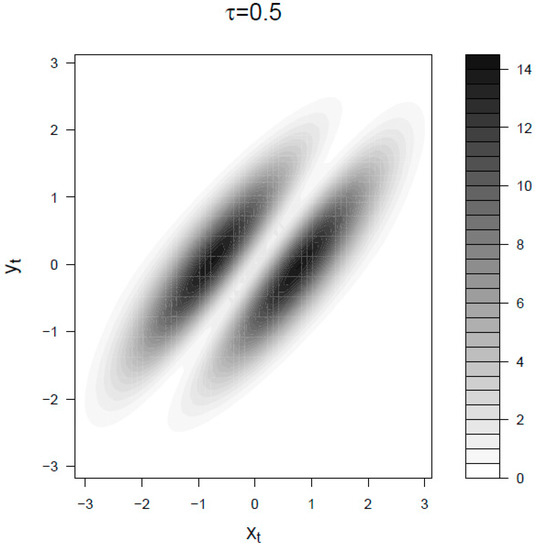

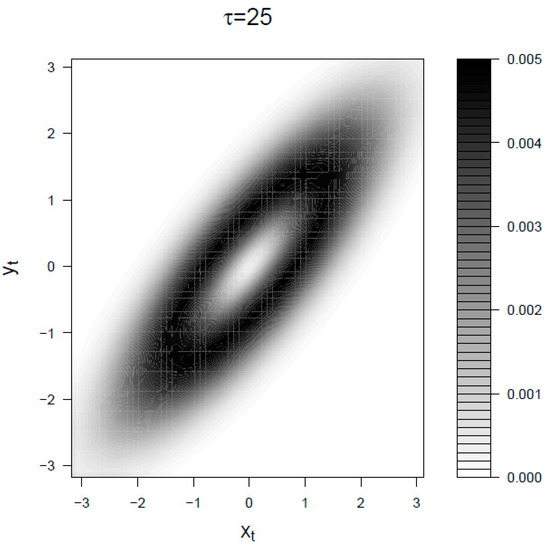

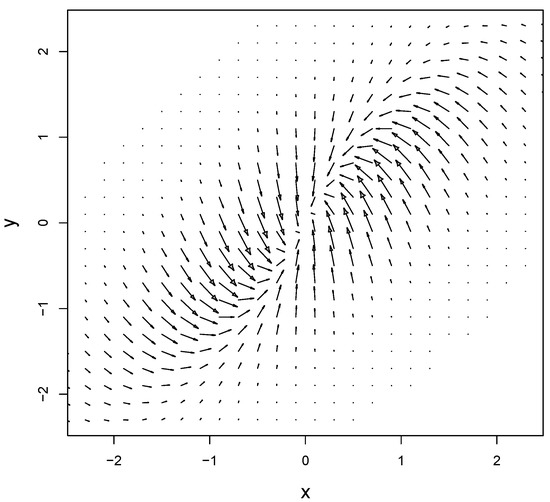

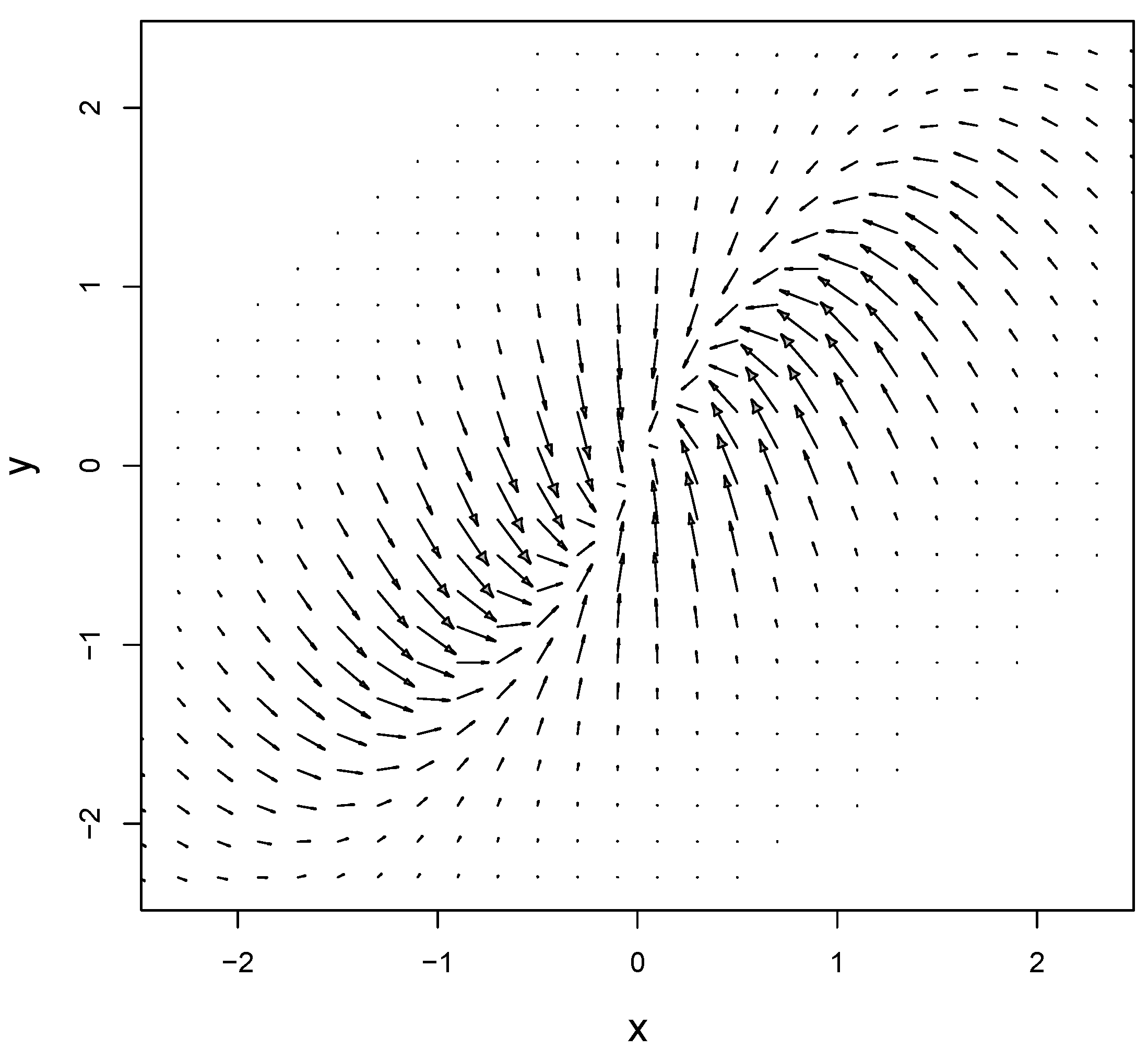

Let us now consider the mapping irreversibility density in the BLRM for small and large observational time . In Figure 4 we choose a smaller than the characteristic response time and smaller than the characteristic time of fluctuations . In the limit the signal dynamics is dominated by noise and the entropy production is mainly given by movements of the response y. The two spots correspond to the points where the product of the density times the absolute value of the instantaneous velocity is largest. For longer intervals (the case of Figure 5) the history of the signal becomes relevant because it determined the present value of the response; therefore the irreversibility density is also distributed on those points of the diagonal (corresponding to roughly ) where the absolute value of the signal is large enough. Consequently, in this regime the backward transfer entropy is a meaningful lower bound to the entropy production (Equation (19)).

Figure 4. Mapping irreversibility density for the BLRM at . The parameters are and . Both and the space are expressed in units of standard deviations.

Figure 5. Mapping irreversibility density for the BLRM at . The parameters are and . Both and the space are expressed in units of standard deviations.

5.2. Receptor-Ligand Systems

The receptor-ligand interaction is the fundamental mechanism of molecular recognition in biology and is a recurring motif in signaling pathways [43,44]. The fraction of activated receptors is part of the cell’s representation of the outside world; it is the cell’s estimate of the concentration of ligands in the environment, upon which it bases its protein expression and response to external stress.

If we could experimentally keep the concentration of ligands fixed we would still get a fluctuating number of activated receptors due to the intrinsic stochasticity of the macroscopic description of chemical reactions. Recent studies allowed a theoretical understanding of the origins of the macroscopic “noise” (i.e., the output variance in the conditional probability distributions), and raised questions about the optimality of the input distributions in terms of information transmission [45,46,47,48].

Here we consider the dynamical aspects of information processing in receptor-ligand systems [49,50], where the response is integrated over time. If the perturbation of the receptor-ligand binding on the concentration of free ligands is negligible, that is in the limit of high ligand concentration, receptor-ligand systems can be modeled as nonlinear signal-response models [51]. We write our model of receptor-ligand systems in the Ito representation [32] as:

The fluctuations of the ligand concentration x are described by a mean-reverting geometric Brownian motion, with an average in arbitrary units. The response, that is the fraction of activated receptors y, is driven by a Hill-type interaction with the signal with cooperativity coefficient h, and chemical bound/unbound rates and . For simplicity, the dynamic range of the response is set to be coincident with the mean value of the ligand concentration, which means choosing a Hill constant . The form of the y noise is set by the biological constraint . For simplicity, we choose a cooperativity coefficient of , which is the smallest order of sigmoidal functions.

The mutual information between the concentration of ligands and the fraction of activated receptors in a cell is a natural choice for quantifying its sensory properties [52]. Here we argue that in the case of signal-response models, the conditional entropy production is the relevant measure, because it quantifies how the dynamics of the signal produces irreversible transitions in the dynamics of the response, which is closely related to the concept of causation. Besides, our measure of causal influence [30] has not yet been generalized to the nonlinear case, while the entropy production has a consistent thermodynamical interpretation [31].

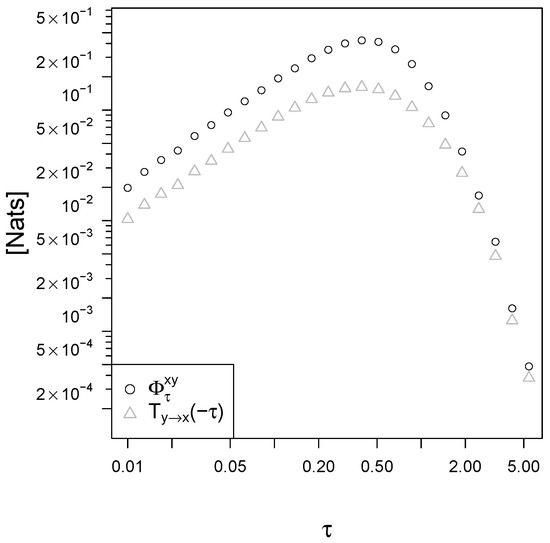

We simulated the receptor-ligand model of Equation (22), and we evaluated numerically the mapping irreversibility and the backward transfer entropy using a multivariate Gaussian approximation for the conditional probabilities (details in Appendix E). The II Law for signal-response models sets and proves to be a useful tool for receptor-ligand systems, as can be seen in Figure 6. Please note that the numerical estimation of the entropy production requires many more samples than that of the backward transfer entropy: depends on (4 dimensions) while depends on (3 dimensions). In a real biological experimental setting, the sampling process is expensive, and the backward transfer entropy is therefore a useful lower bound for the entropy production, and an interesting characterization of the system to be used when the number of samples is not large enough.

Figure 6.

Mapping irreversibility and backward transfer entropy in our model of receptor-ligand systems (Equation (22)). The parameters are , , and .

The intrinsic noise of the response is the dominant term in the response dynamics for small intervals , and is the dominant term for the signal. This makes both and vanish in the limit . In the limit of large observational time , as is also the case for the BLRM and for any stationary process, the entropy production for the corresponding time series and all the information measures vanish, because the memory of the system is damped exponentially over time by the relaxation parameter ( in the BLRM). Therefore, to better detect the irreversibility of a process one must choose an appropriate observational time . In the receptor-ligand model of Equation (22) with parameters , and we see that the optimal observational time is around (see Figure 6). Here by "optimal" we mean the observational time that corresponds to the highest mapping irreversibility . In general, one might be interested in inferring the irreversibility rate (that is in the limit ) by looking at time series data with finite sampling interval . In the receptor-ligand model of Figure 6 the irreversibility rate converges to 2.

6. Discussion

To put our work in perspective, let us recall that the well-established integral fluctuation theorem for stochastic trajectories [34] leads to a total irreversible entropy production with zero lower bound, that is the standard II Law of thermodynamics. Modern inequalities such as the II Law of Information thermodynamics [21,23,24] describe how the information continuously shared between subsystems can lead to an "apparent" negative entropy production in (one of) the subsystems. Nevertheless, these make no difference to the total joint irreversible entropy production, whose lower bound is still zero.

Our aim here was to characterize cases in which more informative lower bounds on the total irreversible entropy production can be provided. Ito-Sagawa [10] already showed that for Bayesian controlled systems (where a parameter can be varied to perform work) a general fluctuation theorem for the subsystems and the relative lower bound on entropy production is linked to the topology of the Bayesian network representation associated with the stochastic dynamics of the system. This connection seems to be even stronger in the case of the total joint (uncontrolled) system that is the object of our study. We show in the bidimensional case of a pair of signal-response variables how a missing arrow in the Bayesian network describing the dynamics leads to a fluctuation theorem.

The detailed fluctuation theorem linking work dissipation and the irreversibility of trajectories in nonequilibrium transformations [5,8] holds in mechanical systems attached to heat reservoirs. We are interested here in the irreversibility of trajectories in more general models, and especially those featuring asymmetric interactions, since that is a widespread feature in models of biological systems or in asset pricing models in quantitative finance. In particular, we do not adopt a Hamiltonian description of work and heat or a microscopic reversibility assumption, and the detailed fluctuation theorem (8) is, properly, not a theorem but itself a definition of irreversibility.

We study time series resulting from a discretization with observational time of continuous stochastic processes. Importantly, the underlying bipartite process appears, at limited time resolution, as a non-bipartite process. As a consequence, there is no general convergence of the time series irreversibility to the physical entropy production except for special cases such as Langevin systems with nonzero constant diffusion coefficients. Our mapping irreversibility (8) is the Markovian approximation of the time series irreversibility definition given in [26]. While it is well defined for any stationary process, it describes the statistical properties of long time series only in the Markovian case.

For general interacting dynamics like (1) we are not able to provide a more significant lower bound to the mapping irreversibility than the standard II Law of thermodynamics (10). A more informative lower bound on the mapping irreversibility is found for signal-response models, which are characterized by the absence of feedback. This sets the backward transfer entropy as a lower bound to the conditional entropy production, and describes the connection between the irreversibility of time series and the discrete information flow towards the past between variables.

Importantly, the relation between irreversibility and information measures is not given in general for time series because the results on continuous bipartite systems do not generalize to the time series irreversibility. It appears exactly because of the absence of feedback, and of the corresponding non-complete causal representation. We restrict ourselves to the bivariate case here, but we conjecture that fluctuation theorems for multidimensional stochastic autonomous dynamics should arise in general as a consequence of missing arrows in the (non-complete, see e.g., Figure 2) causal representation of the dynamics in terms of Bayesian networks.

In our opinion, a general relation connecting the incompleteness of the causal representation of the dynamics and fluctuation theorems is still lacking.

Finally, let us note that exponential averages such as our integral fluctuation theorem (18) are dominated by (exponentially) rare realizations [53], and the corresponding II Law inequalities such as our (19) are often poorly saturated bounds. In the receptor-ligand model discussed in section II.B the backward transfer entropy lower bound is roughly one half of the mapping irreversibility, and this is also the case in the BLRM for large where the ratio converges exactly to . This limitation is quite general, see for example the information thermodynamic bounds on signaling robustness given in [54].

We also introduced a discussion about the observational time in data analysis. In a biological model of receptor-ligand systems we showed that it must be fine-tuned for a robust detection of the irreversibility of the process, which is related to the concept of causation [30] and therefore to the efficiency of biological coupling between signaling and response.

Author Contributions

All authors contributed equally to this work.

Funding

Work at Humboldt-Universität zu Berlin was supported by the DFG (Graduiertenkolleg 1772 for Computational Systems Biology).

Acknowledgments

We thank Wolfgang Giese and Jesper Romers for useful discussions. We thank Marco Scazzocchio for numerical methods and algorithms expertise.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| OU | Ornstein-Uhlenbeck process |
| BLRM | basic linear signal-response model |

Appendix A. Mapping Irreversibility in the BLRM

Let us consider an ensemble of stochastic trajectories generated with the BLRM (Equation (20)). The mapping irreversibility here is the Kullback–Leibler divergence [27] between the probability density of pairs of successive states separated by a time interval of the original (infinitely long and stationary) trajectory and the probability density of the same pairs of successive states of the time-reversed conjugate of the original trajectory (Equation (8)). For the sake of clarity, we use here in this appendix the full formalism rather than the compact one based on the functional form .

The time-reversed density of a particular couple of successive states, and , is equivalent to the original density of the exchanged couple of states, and . Therefore the density is the transpose of the density .
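The transposition property can be illustrated on a discrete-state toy example. The sketch below is written in Python and assumes a stationary Markov chain with a hypothetical transition matrix `P`; it is not the BLRM. It builds the joint distribution of successive states and computes the Kullback–Leibler divergence between that joint and its transpose, which plays the role of the time-reversed density. The function names are illustrative.

```python
import math


def stationary_distribution(P, n_iter=10_000):
    """Stationary distribution of a row-stochastic matrix by power iteration."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(n_iter):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def mapping_irreversibility(P):
    """KL divergence (in nats) between the joint of successive states
    p(i, j) = pi_i * P_ij and its transpose p(j, i)."""
    pi = stationary_distribution(P)
    n = len(P)
    total = 0.0
    for i in range(n):
        for j in range(n):
            forward = pi[i] * P[i][j]
            backward = pi[j] * P[j][i]  # transposed joint = time-reversed pair
            if forward > 0.0:
                if backward == 0.0:
                    return math.inf  # a transition with no reverse counterpart
                total += forward * math.log(forward / backward)
    return total


if __name__ == "__main__":
    # Hypothetical biased three-state ring: clockwise jumps are favoured.
    ring = [[0.2, 0.6, 0.2],
            [0.2, 0.2, 0.6],
            [0.6, 0.2, 0.2]]
    print(mapping_irreversibility(ring))
```

For a symmetric joint distribution (a reversible chain) the divergence is zero, while any net probability current around the ring makes it strictly positive.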

The mapping irreversibility for the BLRM is then written as:

The BLRM is ergodic, therefore the densities and can be empirically sampled looking at a single infinitely long trajectory.

The causal structure of the BLRM (and of any signal-response model, see Figure 2) is such that the evolution of the signal is not influenced by the response, . Then we can write the joint probability densities of couples of successive states over a time interval of the original trajectory as:

We need to evaluate all these probabilities. Since we are dealing with linear models, these are all Gaussian distributed, and we will calculate only the expected value and the variance of the relevant variables involved.

The system is Markovian, with , and the Bayes rule assumes the form . Then we calculate the conditional expected value for the signal given a condition for its past and another condition for its future as:

Now we can calculate the full-conditional expectation of the response:

One can immediately check that the limits for small and large time intervals verify respectively and .

The causal order for the evolution of the signal is such that if . Then we can calculate:

Let us write the full-conditional expectation of the squared response as a function of the expectations we just calculated:

A relevant feature of linear response models is that the conditional variances do not depend on the particular values of the conditioning variables [30]. Here we consider the full-conditional variance , and it will be independent of the conditions , , and . Then the remaining terms in sum up to:

where we used the fact that is symmetric in and . We recall that for functions with the symmetry it holds: .

The limits for small and large time intervals verify respectively and .

The factorization of the probability density into conditional densities (Equation (A2)) leads to a decomposition of the mapping irreversibility. Here we show that in the BLRM all these terms are zero except for the two terms corresponding to the full-conditional density of the evolution of the response in the original trajectory and in the time-reversed conjugate.

For a stationary stochastic process such as the BLRM it holds , then these two terms cancel:

The contribution from the signal in the mapping irreversibility is also zero since the Ornstein-Uhlenbeck process is reversible, :

The mapping irreversibility is therefore:

where in the last passage we exploited the fact that all the probability densities are Gaussian distributed. Solving the integrals, we get the mapping irreversibility for the BLRM as a function of the time interval :

is proportional to , therefore the mapping irreversibility is a function of just the two parameters and (and of the observational time ).

Figure A1.

Numerical verification of the analytical solution for the entropy production with observational time in the BLRM. The parameters are and . The slight down-deviation for small is due to the finite box length in the discretized space, while the up-deviation for is due to the finite number of samples.

Appendix B. Backward Transfer Entropy in the BLRM

In the BLRM, where all densities are Gaussian distributed, the backward transfer entropy is equivalent to the time-reversed Granger causality [55]:

We must calculate the conditional variance . Let us recall the relation for the value of the response as a function of the whole history of the signal trajectory:

Then we write the conditional squared response as:

Since is expected to be independent of and , then the remaining terms after calculation sum up to:

where was already calculated in [30]. Then the backward transfer entropy is:
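As a generic illustration of the Gaussian equivalence used in this appendix (and not a reproduction of the BLRM result), the following Python sketch evaluates a conditional mutual information I(A;B|C) for three jointly Gaussian scalar variables as half the logarithm of a ratio of conditional variances. The function names and the covariance matrix in the example are hypothetical. Which entries of the time series play the roles of A, B and C is left as an input and has to be fixed according to the definition of the backward transfer entropy.

```python
import math


def det(m):
    """Determinant by Laplace expansion; adequate for the small matrices used here."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0.0
    for col in range(n):
        minor = [row[:col] + row[col + 1:] for row in m[1:]]
        total += (-1) ** col * m[0][col] * det(minor)
    return total


def submatrix(cov, idx):
    """Covariance restricted to the variables listed in idx."""
    return [[cov[i][j] for j in idx] for i in idx]


def gaussian_conditional_mi(cov, a, b, c):
    """I(A;B|C) in nats for jointly Gaussian scalars with indices a, b, c in cov.

    Uses var(A|C) = det(cov[A,C]) / det(cov[C]) and
         var(A|B,C) = det(cov[A,B,C]) / det(cov[B,C]).
    """
    var_a_given_c = det(submatrix(cov, [a, c])) / det(submatrix(cov, [c]))
    var_a_given_bc = det(submatrix(cov, [a, b, c])) / det(submatrix(cov, [b, c]))
    return 0.5 * math.log(var_a_given_c / var_a_given_bc)


if __name__ == "__main__":
    # Hypothetical covariance: A and B correlated (0.6), C independent of both.
    cov = [[1.0, 0.6, 0.0],
           [0.6, 1.0, 0.0],
           [0.0, 0.0, 1.0]]
    print(gaussian_conditional_mi(cov, 0, 1, 2))
```

The same ratio of conditional variances is the quantity estimated in a Granger-type regression, which is why only second moments need to be computed in the linear case.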

Appendix C. The Causal Influence Rate Converges to the Horowitz-Esposito Information Flow in the BLRM

We introduced the Horowitz-Esposito information flow [18,36] in Equation (3). In our stationary processes framework, the two components of the information flow are related by , so that the information flow is unidirectional and necessarily asymmetric when present. The y variable in the BLRM is measuring the x variable, therefore the information is flowing in the direction, and we wish to calculate the parameter dependence of the positive :

We see that the information flow is a function of probability currents. We plot the current for the BLRM in Figure A2.

Figure A2.

Probability currents in the BLRM, estimated with . The parameters are and . The space coordinates are in units of the standard deviation.

The probability current in the y direction for the BLRM is given by . Then we calculate:

where in the second passage we used partial integration and is exponentially vanishing for because is a Gaussian, . In the last passage we identified . Since the BLRM is a stationary process the time derivatives of expectations vanish, then , and we find . Then using the BLRM expression [30] for the variance of the response we obtain:

The Horowitz-Esposito information flow in the BLRM is equal to the inverse of the deterministic response time to perturbations . Interestingly, this is independent of the time scale of fluctuations . Let us consider a fixed , then if is small we have very fast fluctuations and the response is not able to follow the signal with accuracy and the mutual information is small. Nevertheless, the information flow does not decrease because the dynamics of the y variable is driven by the x position for every possible situation even if not strongly correlated.

Importantly, in the small observational time limit our definition of causal influence [30] converges in rate to the Horowitz-Esposito information flow:

Appendix D. Numerical Convergence of the Mapping Irreversibility to the Entropy Production in the Feedback Cooling Model

The feedback cooling model [19,36] describes a Brownian particle with velocity x and viscous damping , that is under the feedback control of the measurement device y. The variable y is a low-pass filter of noisy measurements on x. The SDE system describing the process is written:

where is the feedback intensity. The mapping irreversibility (8) converges in the limit of small observational time to the physical entropy production (5) if the conditional probability converges almost surely to the bipartite form . Importantly, the convergence has to be faster than so that in the limit of continuous trajectories we can almost surely neglect the term .

The knowledge of acts only on the estimate of (with ) because the diffusion coefficients are constant. Since the system (A21) is linear, the Kullback–Leibler divergence can be expressed in terms of conditional expectations:

The conditional variance is of order and differs from only with a term of order so that for .

While the conditional variances are constant, the conditional expectation depends linearly on (and on , ), therefore it is sufficient to look at the conditional correlation , given that . By numerical simulation we checked that in the limit for the feedback cooling model (A21). Importantly, we checked that with there is no convergence, as is also the case for the BLRM.

For the case of nonconstant diffusion coefficients (multiplicative noise) the argument on the conditional variances does not hold, and we are not sure of the convergence. The idea is that there should be an intermediate case between vanishing with constant and diverging with , and this could be the case of multiplicative noise. Such characterization is beyond the scope of this paper.

Appendix E. Numerical Estimation of the Entropy Production in the Bivariate Gaussian Approximation

We calculate numerically the mapping irreversibility as an average of the spatial density of irreversibility , . In the computer algorithm the space is discretized in boxes , and for each box we estimate the conditional correlation of future values , the conditional correlation of past values , the expected values for both variables in future (, ) and past states (, ), and the standard deviations on those , , , . The spatial density evaluated in the box is then calculated as the bidimensional Kullback–Leibler divergence in the Gaussian approximation [56]:

The effect of the finite width of the discretization is attenuated by estimating all the quantities taking into account the starting point within the box , subtracting the difference to the mean values for each box. For example, when we sample for the estimate of the conditional average we would collect samples .
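The per-box quantity can be sketched with the standard closed form of the Kullback–Leibler divergence between two bivariate Gaussians, each specified by its means, standard deviations and correlation, as estimated above. The Python code below is a minimal illustration; the function name and argument layout are assumptions, and so is the convention of passing the density of future values as the first distribution and that of past values as the second.

```python
import math


def bivariate_gaussian_kl(mu0, sd0, rho0, mu1, sd1, rho1):
    """KL(N0 || N1) in nats for two bivariate Gaussians.

    mu*, sd* are (x, y) pairs of means and standard deviations,
    rho* is the correlation coefficient of each distribution.
    """
    # Covariance entries: [[a, b], [b, d]] for each distribution.
    a0, d0, b0 = sd0[0] ** 2, sd0[1] ** 2, rho0 * sd0[0] * sd0[1]
    a1, d1, b1 = sd1[0] ** 2, sd1[1] ** 2, rho1 * sd1[0] * sd1[1]
    det0 = a0 * d0 - b0 * b0
    det1 = a1 * d1 - b1 * b1
    # trace(Sigma1^{-1} Sigma0), with Sigma1^{-1} = [[d1, -b1], [-b1, a1]] / det1
    trace_term = (d1 * a0 - 2.0 * b1 * b0 + a1 * d0) / det1
    # Mahalanobis distance between the means, measured with Sigma1
    dx, dy = mu1[0] - mu0[0], mu1[1] - mu0[1]
    mean_term = (d1 * dx * dx - 2.0 * b1 * dx * dy + a1 * dy * dy) / det1
    return 0.5 * (trace_term + mean_term - 2.0 + math.log(det1 / det0))


if __name__ == "__main__":
    # Hypothetical box estimates: same spreads, shifted means, opposite correlations.
    print(bivariate_gaussian_kl((0.1, 0.0), (1.0, 1.0), 0.3,
                                (-0.1, 0.0), (1.0, 1.0), -0.3))
```

Averaging this value over the boxes, each weighted by its occupation probability, gives the estimate of the mapping irreversibility in the Gaussian approximation.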

References

1. Jarzynski, C. Equalities and inequalities: Irreversibility and the second law of thermodynamics at the nanoscale. Annu. Rev. Condens. Matter Phys. 2011, 2, 329–351.
2. Parrondo, J.M.; van den Broeck, C.; Kawai, R. Entropy production and the arrow of time. New J. Phys. 2009, 11, 073008.
3. Feng, E.H.; Crooks, G.E. Length of time’s arrow. Phys. Rev. Lett. 2008, 101, 090602.
4. Jarzynski, C. Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 1997, 78, 2690.
5. Crooks, G.E. Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E 1999, 60, 2721.
6. Evans, D.J.; Searles, D.J. The fluctuation theorem. Adv. Phys. 2002, 51, 1529–1585.
7. Kawai, R.; Parrondo, J.; van den Broeck, C. Dissipation: The phase-space perspective. Phys. Rev. Lett. 2007, 98, 080602.
8. Jarzynski, C. Hamiltonian derivation of a detailed fluctuation theorem. J. Stat. Phys. 2000, 98, 77–102.
9. Chernyak, V.Y.; Chertkov, M.; Jarzynski, C. Path-integral analysis of fluctuation theorems for general Langevin processes. J. Stat. Mech. Theory Exp. 2006, 2006, P08001.
10. Ito, S.; Sagawa, T. Information thermodynamics on causal networks. Phys. Rev. Lett. 2013, 111, 180603.
11. Sagawa, T.; Ueda, M. Nonequilibrium thermodynamics of feedback control. Phys. Rev. E 2012, 85, 021104.
12. Sagawa, T.; Ueda, M. Generalized Jarzynski equality under nonequilibrium feedback control. Phys. Rev. Lett. 2010, 104, 090602.
13. Szilard, L. On the decrease of entropy in a thermodynamic system by the intervention of intelligent beings. Syst. Res. Behav. Sci. 1964, 9, 301–310.
14. Martínez, I.A.; Roldán, É.; Dinis, L.; Petrov, D.; Parrondo, J.M.; Rica, R.A. Brownian Carnot engine. Nat. Phys. 2016, 12, 67–70.
15. Ciliberto, S. Experiments in stochastic thermodynamics: Short history and perspectives. Phys. Rev. X 2017, 7, 021051.
16. Toyabe, S.; Sagawa, T.; Ueda, M.; Muneyuki, E.; Sano, M. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Nat. Phys. 2010, 6, 988–992.
17. Koski, J.V.; Maisi, V.F.; Pekola, J.P.; Averin, D.V. Experimental realization of a Szilard engine with a single electron. Proc. Natl. Acad. Sci. USA 2014, 111, 13786–13789.
18. Horowitz, J.M.; Esposito, M. Thermodynamics with continuous information flow. Phys. Rev. X 2014, 4, 031015.
19. Rosinberg, M.L.; Horowitz, J.M. Continuous information flow fluctuations. EPL (Europhysics Letters) 2016, 116, 10007.
20. Horowitz, J.M. Multipartite information flow for multiple Maxwell demons. J. Stat. Mech. Theory Exp. 2015, 2015, P03006.
21. Ito, S. Backward transfer entropy: Informational measure for detecting hidden Markov models and its interpretations in thermodynamics, gambling and causality. Sci. Rep. 2016, 6.
22. Spinney, R.E.; Lizier, J.T.; Prokopenko, M. Transfer entropy in physical systems and the arrow of time. Phys. Rev. E 2016, 94, 022135.
23. Parrondo, J.M.; Horowitz, J.M.; Sagawa, T. Thermodynamics of information. Nat. Phys. 2015, 11, 131–139.
24. Ito, S. Unified framework for the second law of thermodynamics and information thermodynamics based on information geometry. arXiv, 2018; arXiv:1810.09545.
25. Ito, S. Stochastic thermodynamic interpretation of information geometry. Phys. Rev. Lett. 2018, 121, 030605.
26. Roldán, É.; Parrondo, J.M. Entropy production and Kullback-Leibler divergence between stationary trajectories of discrete systems. Phys. Rev. E 2012, 85, 031129.
27. Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2012.
28. Sekimoto, K. Langevin equation and thermodynamics. Prog. Theor. Phys. Supp. 1998, 130, 17–27.
29. Crooks, G.E.; Still, S.E. Marginal and conditional second laws of thermodynamics. arXiv, 2016; arXiv:1611.04628.
30. Auconi, A.; Giansanti, A.; Klipp, E. Causal influence in linear Langevin networks without feedback. Phys. Rev. E 2017, 95, 042315.
31. Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 2012, 75, 126001.
32. Shreve, S.E. Stochastic Calculus for Finance II: Continuous-Time Models; Springer Science & Business Media: New York, NY, USA, 2004; Volume 11.
33. Taniguchi, T.; Cohen, E. Onsager-Machlup theory for nonequilibrium steady states and fluctuation theorems. J. Stat. Phys. 2007, 126, 1–41.
34. Seifert, U. Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 2005, 95, 040602.
35. Risken, H. Fokker-Planck equation. In The Fokker-Planck Equation; Springer: Berlin, Germany, 1996; pp. 63–95.
36. Horowitz, J.M.; Sandberg, H. Second-law-like inequalities with information and their interpretations. New J. Phys. 2014, 16, 125007.
37. Gomez-Marin, A.; Parrondo, J.M.; van den Broeck, C. Lower bounds on dissipation upon coarse graining. Phys. Rev. E 2008, 78, 011107.
38. Ito, S.; Sagawa, T. Information flow and entropy production on Bayesian networks. In Mathematical Foundations and Applications of Graph Entropy; Wiley-VCH Verlag GmbH & Co. KGaA: Weinheim, Germany, 2016; pp. 63–100.
39. Uhlenbeck, G.E.; Ornstein, L.S. On the theory of the Brownian motion. Phys. Rev. 1930, 36, 823.
40. Gillespie, D.T. Exact numerical simulation of the Ornstein-Uhlenbeck process and its integral. Phys. Rev. E 1996, 54, 2084.
41. R Core Team. R: A Language and Environment for Statistical Computing; Foundation for Statistical Computing: Vienna, Austria, 2014; Available online: www.r-project.org (accessed on 14 February 2019).
42. Barrett, A.B. Exploration of synergistic and redundant information sharing in static and dynamical Gaussian systems. Phys. Rev. E 2015, 91, 052802.
43. Klipp, E.; Liebermeister, W.; Wierling, C.; Kowald, A.; Herwig, R. Systems Biology: A Textbook; John Wiley & Sons; Wiley-VCH Verlag GmbH & Co. KGaA: Weinheim, Germany, 2016.
44. Kholodenko, B.N. Cell-signalling dynamics in time and space. Nat. Rev. Mol. Cell Biol. 2006, 7, 165.
45. Bialek, W.; Setayeshgar, S. Physical limits to biochemical signaling. Proc. Natl. Acad. Sci. USA 2005, 102, 10040–10045.
46. Tkačik, G.; Callan, C.G.; Bialek, W. Information flow and optimization in transcriptional regulation. Proc. Natl. Acad. Sci. USA 2008, 105, 12265–12270.
47. Crisanti, A.; de Martino, A.; Fiorentino, J. Statistics of optimal information flow in ensembles of regulatory motifs. Phys. Rev. E 2018, 97, 022407.
48. Waltermann, C.; Klipp, E. Information theory based approaches to cellular signaling. Biochimica et Biophysica Acta (BBA)-General Subjects 2011, 1810, 924–932.
49. Di Talia, S.; Wieschaus, E.F. Short-term integration of Cdc25 dynamics controls mitotic entry during Drosophila gastrulation. Dev. Cell 2012, 22, 763–774.
50. Nemenman, I. Gain control in molecular information processing: Lessons from neuroscience. Phys. Biol. 2012, 9, 026003.
51. Di Talia, S.; Wieschaus, E.F. Simple biochemical pathways far from steady state can provide switchlike and integrated responses. Biophys. J. 2014, 107, L1–L4.
52. Tkačik, G.; Walczak, A.M.; Bialek, W. Optimizing information flow in small genetic networks. Phys. Rev. E 2009, 80, 031920.
53. Jarzynski, C. Rare events and the convergence of exponentially averaged work values. Phys. Rev. E 2006, 73, 046105.
54. Ito, S.; Sagawa, T. Maxwell’s demon in biochemical signal transduction with feedback loop. Nat. Commun. 2015, 6, 7498.
55. Barnett, L.; Barrett, A.B.; Seth, A.K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 2009, 103, 238701.
56. Duchi, J. Derivations for Linear Algebra and Optimization. Available online: https://docplayer.net/30887339-Derivations-for-linear-algebra-and-optimization.html (accessed on 11 February 2019).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).