A Novel Infrared and Visible Image Information Fusion Method Based on Phase Congruency and Image Entropy

Abstract

1. Introduction

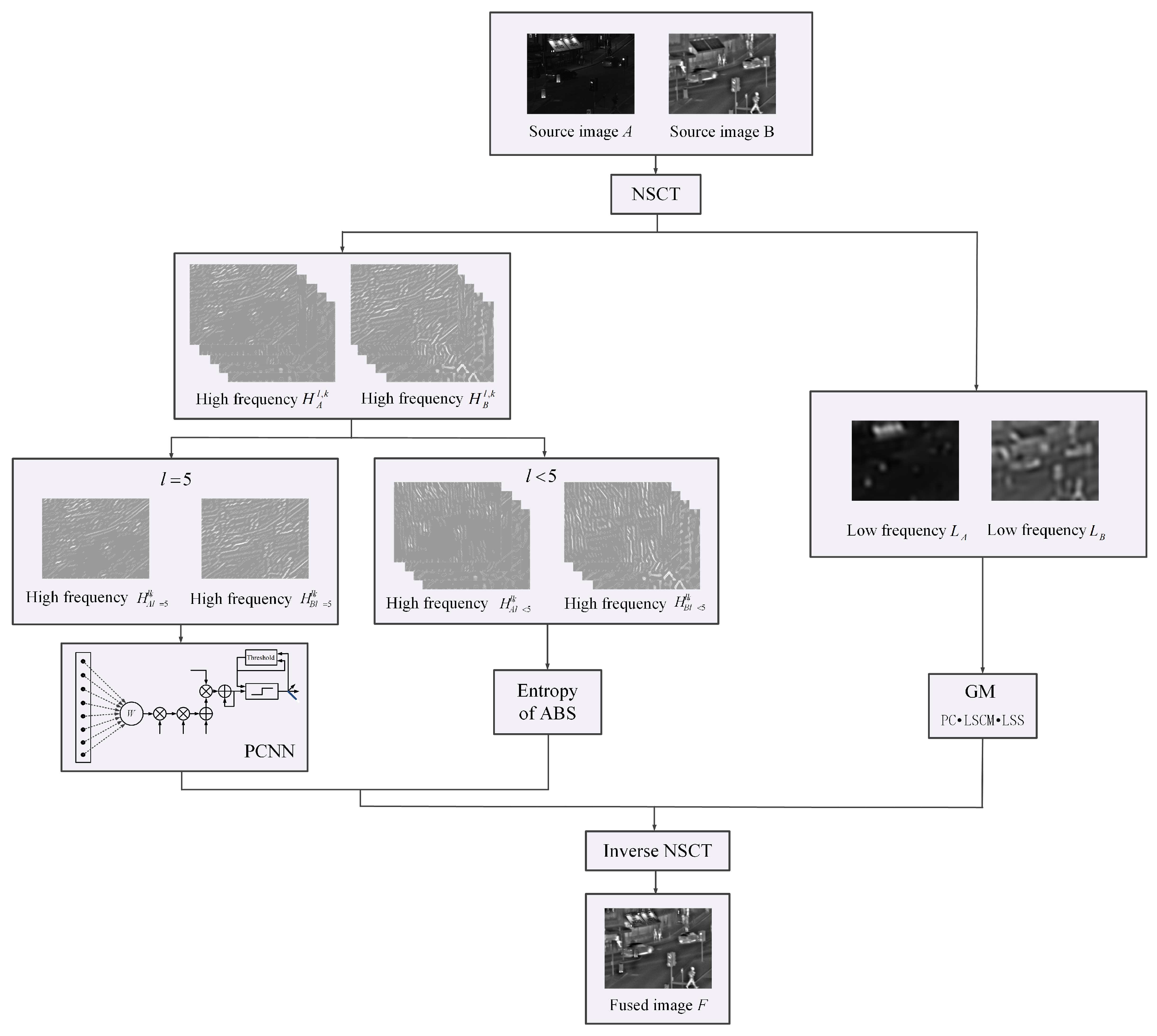

- The high- and low-frequency components of source images are processed separately based on their own features.

- The method applies PCNN and ABS to the high-frequency sub-bands of different layers, achieving a more precise decomposition of the high-frequency components.

- The proposed image fusion algorithm captures the details of the source images by combining the advantages of NSCT, PCNN, and PC.
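The idea in the first contribution, fusing low- and high-frequency components with separate rules, can be illustrated with a minimal sketch. Everything in it is a stand-in chosen for brevity and is not the paper's method: a box blur replaces NSCT as the decomposition, a plain average replaces the low-frequency rule, and an absolute-maximum selection replaces the high-frequency rule (reading "ABS" as absolute-maximum selection is an assumption). The function names `box_blur`, `split_bands`, and `fuse` are hypothetical.

```python
import numpy as np


def box_blur(img: np.ndarray, radius: int = 2) -> np.ndarray:
    """Mean filter over a (2*radius+1)^2 window, using edge padding."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=np.float64)
    # Accumulate every shifted copy of the image covered by the window.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def split_bands(img: np.ndarray, radius: int = 2):
    """Stand-in decomposition (NOT NSCT): low = blurred image, high = residual."""
    img = img.astype(np.float64)
    low = box_blur(img, radius)
    return low, img - low


def fuse(ir: np.ndarray, vis: np.ndarray, radius: int = 2) -> np.ndarray:
    """Fuse two registered grayscale images with separate per-band rules."""
    low_ir, high_ir = split_bands(ir, radius)
    low_vis, high_vis = split_bands(vis, radius)
    # Placeholder low-frequency rule: plain average of the two base layers.
    low_fused = 0.5 * (low_ir + low_vis)
    # Placeholder high-frequency rule: keep the coefficient of larger magnitude.
    high_fused = np.where(np.abs(high_ir) >= np.abs(high_vis), high_ir, high_vis)
    # Reconstruction is the sum of the fused bands.
    return low_fused + high_fused


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ir = rng.random((32, 32))
    vis = rng.random((32, 32))
    print(fuse(ir, vis).shape)
```

Because the two bands sum back to the input, fusing an image with itself returns that image unchanged, which is a quick sanity check for any replacement decomposition or fusion rule plugged into this skeleton.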

2. The Proposed Algorithm

2.1. NSCT

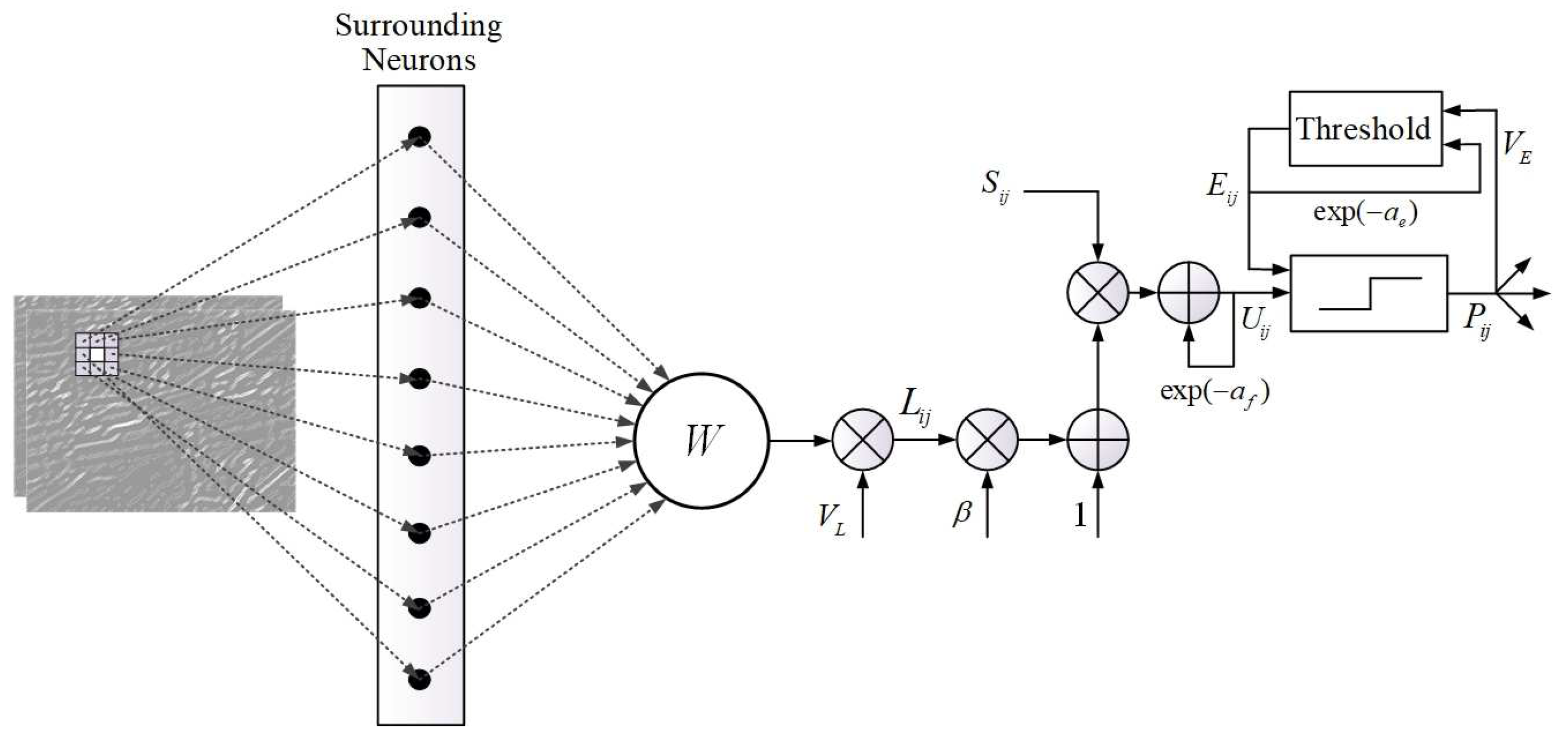

2.2. Fusion of High-Frequency Sub-Bands

2.3. Fusion Rule of Low-Frequency Sub-Bands

Algorithm 1. The proposed infrared-visible image fusion algorithm

Input:

3. Comparative Experiments

3.1. Experiment Preparation

3.2. Objective Evaluation Metrics

3.3. Experiment Results of Infrared-Visible Image Fusion

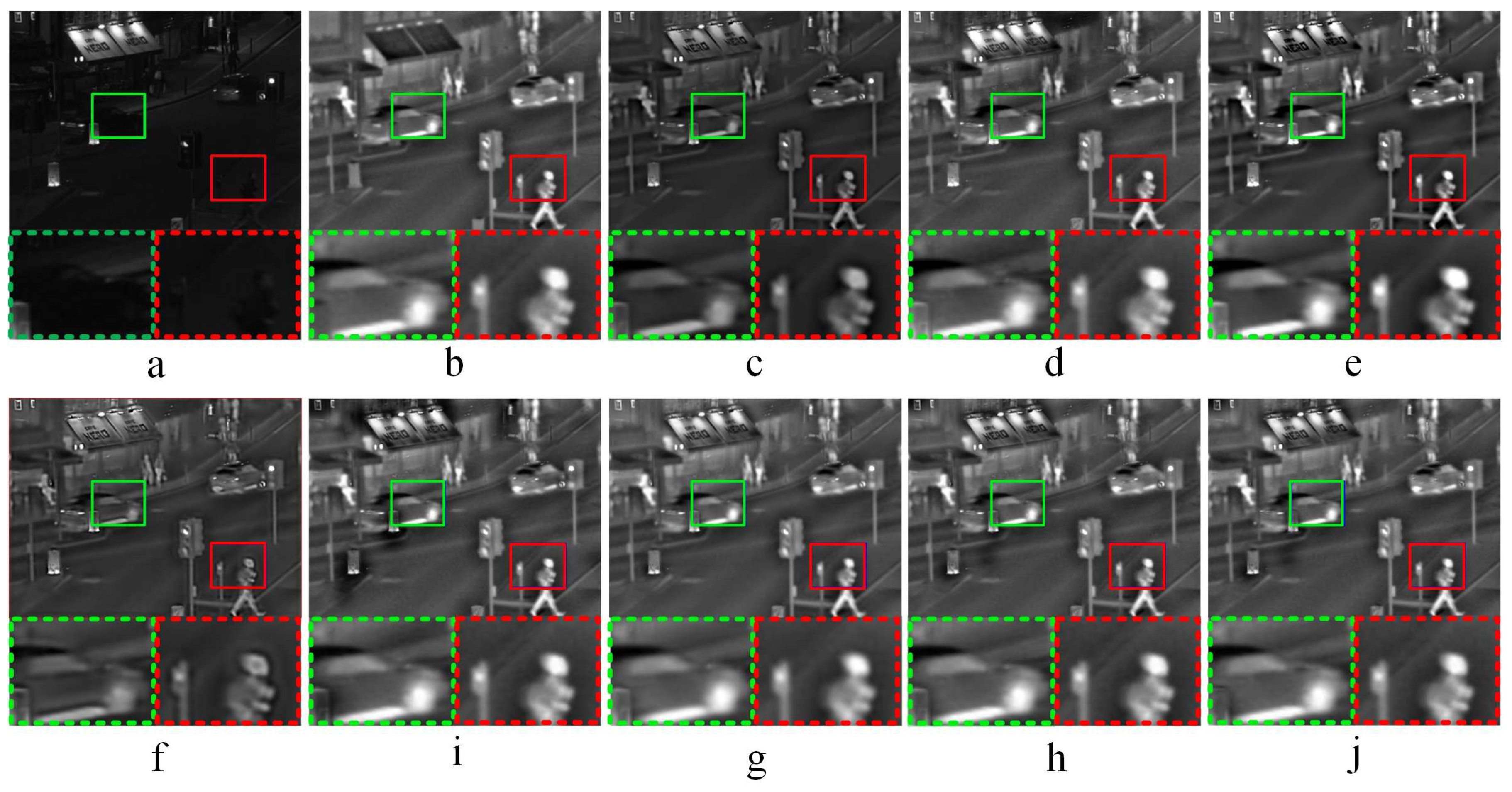

3.3.1. Comparative Experiments—1

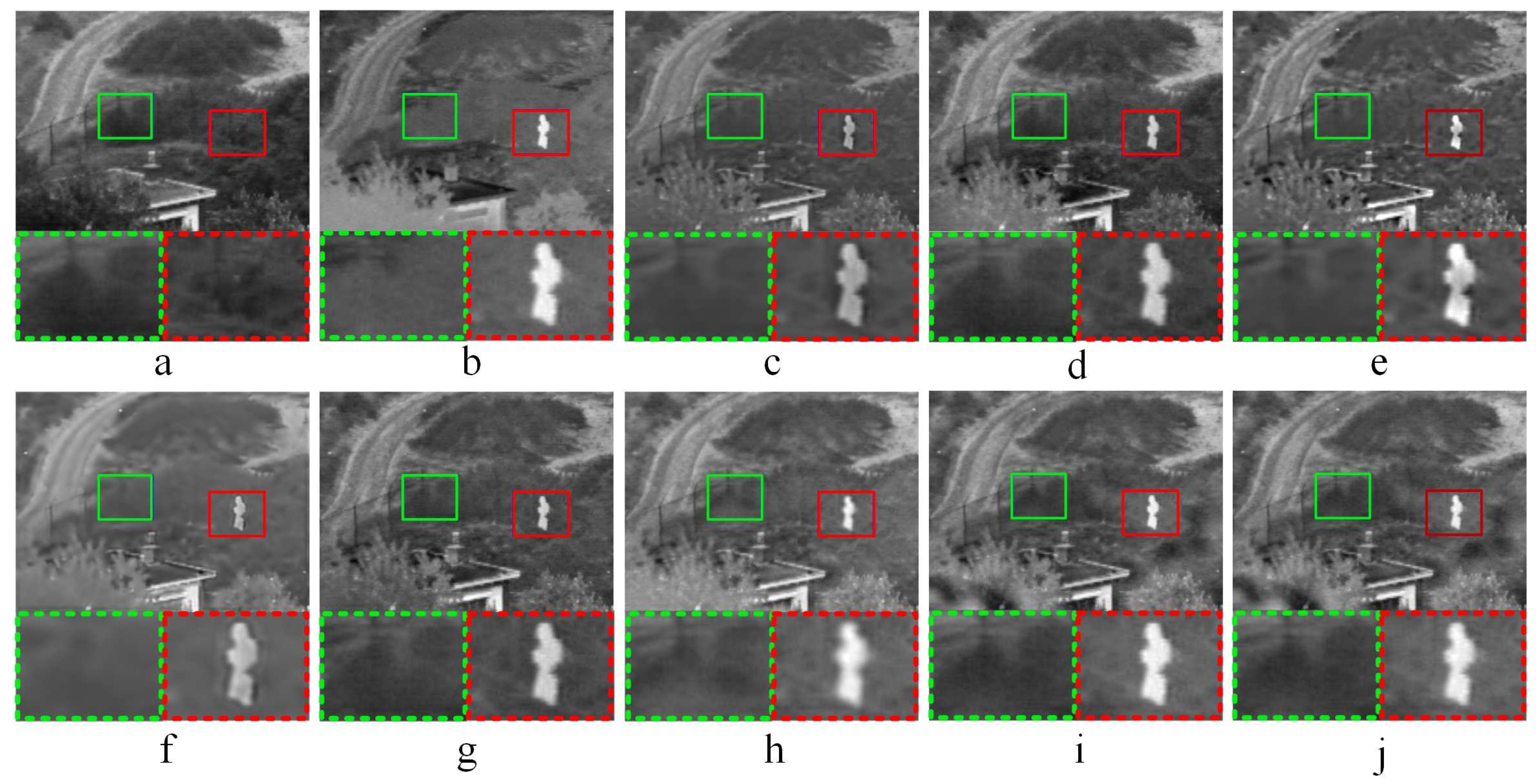

3.3.2. Comparative Experiments—2

3.3.3. Comparative Experiments—3

3.3.4. Comparative Experiments—4

3.3.5. Analysis of Comparative Experiment Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | | | | | |
|---|---|---|---|---|---|
| ASR | 0.4123 | 0.6470 | 1.9354 | 0.5334 | 0.4250 |
| CNN | 0.4402 | 0.4528 | 2.0952 | 0.5288 | 0.4582 |
| CT | 0.3931 | 0.5639 | 1.8511 | 0.4965 | 0.3848 |
| KIM | 0.3896 | 0.6011 | 1.8408 | 0.4966 | 0.4062 |
| MSR-SR | 0.4195 | 0.6888 | 2.0132 | 0.5524 | 0.4563 |
| NSST-PCNN | 0.4697 | 0.6082 | 2.1546 | 0.5308 | 0.4299 |
| NSCT-PC | 0.4262 | 0.6639 | 2.0337 | 0.5608 | 0.4352 |
| Proposed | 0.4541 | 0.7122 | 2.1813 | 0.5622 | 0.4811 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Huang, X.; Qi, G.; Wei, H.; Chai, Y.; Sim, J. A Novel Infrared and Visible Image Information Fusion Method Based on Phase Congruency and Image Entropy. Entropy 2019, 21, 1135. https://doi.org/10.3390/e21121135