Abstract

In recent years, advances in Artificial Intelligence (AI) have come to play an important role in human well-being, in particular by enabling novel forms of human-computer interaction for people with disabilities. In this paper, we propose an sEMG-controlled 3D game that leverages a deep learning-based architecture for real-time gesture recognition. The 3D game developed in this study focuses on rehabilitation exercises and allows individuals with certain disabilities to control it with low-cost sEMG sensors. For this purpose, we acquired a novel dataset of seven gestures using the Myo armband device and used it to train the proposed deep learning model. The captured signals were used as input to a Conv-GRU architecture that classifies the gestures. Further, we ran a live system with the participation of different individuals and analyzed the neural network’s classification of hand gestures. Finally, we evaluated our system by testing it for 20 rounds with new participants and analyzed the results in a user study.

1. Introduction

According to the World Health Organization (WHO) (https://www.who.int/disabilities/world_report/2011/report/en/), about 15% of the world’s population lives with some form of disability, and many of these people suffer from musculoskeletal or nervous system impairments. Rehabilitation processes may enable patients to restore their functional health. Rehabilitation refers to a set of interventions, typically involving repeated exercises, that aim to strengthen the damaged body part. A rehabilitation process can be exhausting, painful and difficult, and the exercises must be performed in a specific way to maximize the patient’s chances of recovery and of regaining self-sufficiency [1,2].

In recent years, there has been increasing interest in rehabilitation methods that use technological advances to enhance patient comfort. Several points must be considered for rehabilitation exercises to be more effective. The process should be user friendly and should motivate patients to engage in the exercises and enjoy the experience [3,4]. Adding competitive stimuli and scoring mechanisms to the exercises (based on correct movements) can help meet specific objectives during rehabilitation. Moreover, the exercises must be customizable according to the therapist’s indications, and the therapist should be able to make any suitable adjustments in line with the patient’s condition [5,6].

Interactive systems that support interaction between humans and machines, such as Augmented Reality (AR) and Virtual Reality (VR), have expanded the possibilities for supporting rehabilitation. Because progress in rehabilitation takes time, much effort has focused on using these interactive systems to reduce the need for regular attendance at rehabilitation clinics and to allow patients to perform physical exercises at home [7,8,9].

However, a significant drawback of these applications is that they increase the likelihood of exercises being performed casually and incorrectly. If the therapist relies only on the final results of each session and does not supervise the complete process, the exercises may be ineffective [1].

Virtual Reality (VR) is part of the most recent generation of computer gaming. This technology allows users to experience action games from a first-person perspective in a simulated world. The newest rehabilitation methods combine Machine Learning techniques with VR systems: classifiers receive labeled data from sensors and/or cameras for each movement performed by healthy subjects and learn its high-level features [10,11,12,13].

Since electromyography (EMG) sensors record the electrical activity produced by muscles, many studies have examined their effectiveness in the rehabilitation process [14,15,16,17]. Surface EMG (sEMG) sensors are non-intrusive and allow muscle activity to be measured from the skin. In this work, we evaluate sEMG data for gesture recognition during the rehabilitation process by means of Deep Learning systems.

This paper presents a dataset of 7 static hand gestures acquired via sEMG from 15 individuals, which we use to evaluate a proposed neural network architecture. In addition, this paper introduces a rehabilitation game controlled by hand gestures from this set, intended for patients who need a recovery process because they experience limitations in everyday functioning caused by aging or a health condition. The main contributions of this paper are:

- A novel dataset acquired from 15 subjects with 7 dissimilar hand gestures.

- A deep learning-based method for hand gesture recognition via sEMG signals.

- A live 3D game for rehabilitation that leverages AI (hand gesture recognition) to create a compelling experience for the user (rich visual stimuli).

The rest of the paper is organized as follows: Section 2 reviews the state of the art on hand gesture datasets recorded with EMG and sEMG sensors, as well as existing learning-based approaches and their use in rehabilitation. Section 3 describes the 3D game and the capture device used to build the dataset, together with the architecture of the neural network trained on the hand gesture dataset and the EMG-based rehabilitation system. Section 4 describes the experimental process and presents the results. Section 5 describes the users’ experience and opinions. Section 6 presents the main conclusions of our work and suggests future research lines.

2. Related Work

Every year, some 800,000 people in the United States suffer a stroke; approximately two-thirds of them survive and require rehabilitation. The goal of rehabilitation is to relearn skills suddenly lost after a stroke, improving the patient’s level of independence and helping them return to their former social activities. In the literature, Post-Stroke Depression (PSD) is reported as the most common neuropsychiatric consequence of stroke [18] and should be considered during rehabilitation. PSD is correlated with failure to return to previous social activities and can negatively affect the rehabilitation process [19]. Consequently, a growing number of studies suggest that rehabilitation exercises should use a reward system to motivate patients to engage more fully with the process and to help prevent depression [20].

The rapid development of artificial intelligence has opened new areas of research in rehabilitation methods. One of the most promising is VR, which can provide visual stimuli that help improve progress in locomotor rehabilitation. Its effectiveness has been studied in patients with different conditions [21,22,23].

The efficiency and effectiveness of wearable sensors have been studied in various rehabilitation contexts [24,25,26,27]. Electromyography sensors are among the most widely used wearable sensors in rehabilitation; they enable the professional in charge to study and analyze the patient’s muscular activity during the process [17,28,29,30].

Electromyography signals also play a considerable role in clinical applications, human-computer interaction, muscle-computer interfaces and interactive computer gaming [31,32].

Muscular activity signals captured by EMG sensors have played a notable role in hand gesture recognition [33,34,35]. Several public datasets exist that were acquired with high-density surface electromyography (HD-sEMG) or regular sEMG electrodes, such as CapgMyo [36], csl-hdemg [37] and NinaPro (Non Invasive Adaptive Hand Prosthetics) [38,39]. These datasets were recorded with high-frequency electrodes and, in the associated studies, cover a wide variety of dynamic hand gestures. CapgMyo (8 hand gestures) and csl-hdemg (27 finger gestures) were recorded with a large number of HD-sEMG electrodes (128 and 192, respectively) at a high sampling rate, using dense arrays of individual electrodes to obtain information from the muscles [36,37]. Datasets acquired with HD-sEMG are not suitable for the framework of this research; of the datasets above, only the NinaPro databases were recorded with regular sEMG electrodes. The NinaPro database was recorded from 78 participants (in different phases) performing 52 different gestures. In that project, 10–12 electrodes (Otto Bock 13-E200 (Ontario, Canada), Delsys Trigno (Natick, MA, USA)) were placed on the forearm, together with a CyberGlove II with 22 sensors to capture 3D hand poses [38]. Subsequently, the fifth NinaPro database was recorded from 10 subjects performing 52 dynamic hand movements, using 38 sensors (two Myo armbands and a CyberGlove II), and it also includes three-axis accelerometer data. All movements in this dataset are dynamic; it contains no static gestures [39]. Because of the multiplicity of costly sensors and the dynamic hand movements in these datasets, we decided to work with our previous dataset and extend it with one more static gesture.

In the present work, we therefore used a sub-dataset of the dataset acquired in our previous work, which was recorded using low-cost sensors. Our dataset consists of static hand gestures, and we decided to study the behavior of the system with a smaller training group than in previous works [40,41].

Debate continues about the best techniques for extracting features from EMG signals [42], although deep learning techniques have attracted great interest as novel feature extraction methods for gesture classification [43,44,45]. Several studies have applied neural network models to EMG signals captured by the Myo armband at its low sampling rate (200 Hz) for dynamic gesture recognition [39,40,43]. In recent years, a number of studies have also examined the capability of this user-friendly armband in rehabilitation [46,47,48].

The original (proprietary) software of the Myo armband recognizes only five hand gestures, and its default system has a considerable error rate when predicting hand gestures in real time. Hence, in the present work, we decided to expand the number of gestures using deep learning techniques. In our previous work [40], we examined the efficiency of the gated recurrent unit (GRU) architecture [49] on raw sEMG signals for six gestures and created an application for controlling domestic robots to help disabled people [41].

Previous results show that recurrent neural networks (RNNs) are a suitable choice for sequential problems [50,51], and that GRUs in particular perform well on raw speech signals [52].
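For readers unfamiliar with the gating mechanism, the following NumPy sketch runs one GRU layer step by step using the standard GRU equations [49]. It is illustrative only: the random weights, the helper names `init_params` and `gru_step`, and the toy input are assumptions, and frameworks differ in small details (for example, where the reset gate is applied and which branch the update gate weights). The sizes (150 units, 8 input channels, 188 time steps) mirror values used later in this paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(input_dim, units, seed=0):
    """Small random weights for the update (z), reset (r) and candidate (h) paths."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("z", "r", "h"):
        params[f"W_{gate}"] = rng.normal(0.0, 0.1, (units, input_dim))
        params[f"U_{gate}"] = rng.normal(0.0, 0.1, (units, units))
        params[f"b_{gate}"] = np.zeros(units)
    return params

def gru_step(x_t, h_prev, p):
    """One GRU time step for input x_t (input_dim,) and state h_prev (units,)."""
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])  # update gate
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])  # reset gate
    # Candidate state; the reset gate scales how much of the past is used.
    h_cand = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r * h_prev) + p["b_h"])
    # New state: per-unit interpolation between old state and candidate.
    return (1.0 - z) * h_prev + z * h_cand

# Run a 150-unit GRU over a toy 8-channel sequence of 188 time steps.
params = init_params(input_dim=8, units=150)
h = np.zeros(150)
sequence = np.random.default_rng(1).normal(size=(188, 8))
for x_t in sequence:
    h = gru_step(x_t, h, params)
print(h.shape)  # (150,)
```

Because each new state is a per-unit convex combination of the previous state and a `tanh` candidate, the hidden state stays bounded in (-1, 1) however long the sequence is.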

Convolutional GRU (ConvGRU) architectures are widely used to learn spatio-temporal features from videos [53,54,55]. We therefore decided to implement a ConvGRU-based neural network architecture, which takes the raw sEMG signal as input and estimates the user’s hand gesture as output.

3. Sensor-Based Rehabilitation System

The rehabilitation system is described in four parts: Database and Recording Hardware, Neural Network Architecture, 3D Game Experience and System Description.

3.1. Database and Recording Hardware

In our previous work, we created a dataset of six dissimilar static hand gestures acquired from 35 healthy subjects. Each gesture was recorded for 10 s while the subject maintained the hand gesture and moved the arm in various directions. The collected dataset, which contains approximately 41,000 samples, was used to train a neural network with three GRU layers. Raw EMG signals were used as training input, and the system achieved an accuracy of 77.85% in a test with new participants [40].

The goal of this work was to add more gestures and a new neural network architecture that minimizes prediction time and maximizes accuracy. Our previous study [40] found that the Myo armband was unable to distinguish more than 6–7 gestures accurately. Hence, in this work we randomly chose 15 subjects from the previous dataset and acquired one additional gesture (Neutral) from each of them to study the results with a new architecture. The additional gesture was recorded under the same conditions as the previous gestures. The armband was placed at the height of the radiohumeral joint, and participants were asked to maintain the requested hand gesture, move their arms in various directions and avoid fully bending the forearm at the elbow joint. The raw signals recorded by the EMG sensors were transmitted via Bluetooth at 200 Hz, with eight channels per sample.

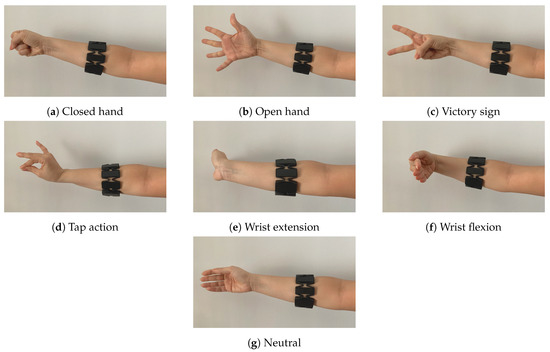

The new sub-dataset contains 18,500 samples from 15 healthy subjects for 7 gestures (open hand, closed hand, victory sign, tap, wrist flexion, wrist extension and neutral) (see Figure 1). The dataset is publicly available on our website (http://www.rovit.ua.es/dataset/emgs/).

Figure 1.

Hand gestures.

3.2. Neural Network Architecture

A variety of methods are used to recognize gestures, each with particular advantages and drawbacks [56,57,58,59]. The most popular neural network for sEMG-based gesture recognition is the convolutional neural network (CNN), although recurrent neural networks (RNNs) have recently attracted increasing attention for the same task, and results in the literature show that both architectures are effective for sEMG-based gesture recognition. CNN-LSTM models have also been proposed for gesture recognition, extracting features from RGB videos [60]. In this work, we propose a combination of CNN and GRU models applied to sEMG signals.

Since the dataset consists of signals, we chose one-dimensional convolutional blocks for the proposed neural network. No filtering or other pre-processing was applied to the network input.

The Myo signals are zero-centered by default, so no normalization was necessary. For supervised learning, we implemented two methods for labeling the outputs. First, we used a one-hot encoded vector, in which the correct gesture takes the value 1 at its index and all incorrect gestures take the value 0. Second, we used soft label assignment (label smoothing), giving a small probability (0.01) to each incorrect gesture and a high probability to the correct one.
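The two labeling schemes can be sketched as follows. The function names are hypothetical, and the correct-gesture value in `soft_label` is an assumption: the text only states that incorrect gestures receive 0.01, so here the correct gesture receives the remaining probability mass (1 - 6 x 0.01 = 0.94) so that each target still sums to 1.

```python
import numpy as np

NUM_GESTURES = 7

def one_hot(label, num_classes=NUM_GESTURES):
    """Hard target: 1 at the correct gesture's index, 0 elsewhere."""
    target = np.zeros(num_classes)
    target[label] = 1.0
    return target

def soft_label(label, num_classes=NUM_GESTURES, off_value=0.01):
    """Soft target: off_value for every incorrect gesture.

    Assumption: the correct gesture gets the remaining mass so that the
    target sums to 1 (0.94 for seven gestures).
    """
    target = np.full(num_classes, off_value)
    target[label] = 1.0 - off_value * (num_classes - 1)
    return target

print(one_hot(2))     # hard target for the third gesture
print(soft_label(2))  # smoothed target for the same gesture
```

With seven classes, the same soft target can also be produced by Keras’ built-in smoothing, `tf.keras.losses.CategoricalCrossentropy(label_smoothing=0.07)`, which replaces a one-hot target y with y(1 - 0.07) + 0.07/7, giving 0.01 for incorrect gestures and 0.94 for the correct one.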

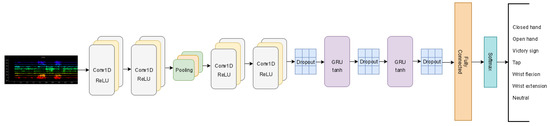

To improve the system’s accuracy, a sliding window method was used for data augmentation. Following the results of previous experiments, we used a sliding window with a look-back of 188 time steps per sample and an offset of 20 between consecutive windows. In the proposed Conv-GRU architecture, we implemented two one-dimensional convolutional layers with 64 filters and a kernel size of 3. The next layer was a max pooling layer with a window of 3, followed by two one-dimensional convolutional layers with 128 filters and a kernel size of 3. To reduce the risk of over-fitting, a dropout layer with a rate of 0.5 was added. To extract temporal features, two GRU layers were then added. Each GRU layer was composed of 150 units, and to further reduce the likelihood of over-fitting and enhance the model’s capabilities, a dropout layer with a rate of 0.5 was applied after each GRU layer.
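The sliding-window augmentation can be sketched as below. At the Myo’s 200 Hz sampling rate, a look-back of 188 readings covers 0.94 s, and an offset of 20 readings advances each window by 0.1 s. The helper name `sliding_windows` and the 10 s, 8-channel toy recording (modeled on the recording protocol in Section 3.1) are assumptions; how the tail of each recording was handled is not stated, so incomplete windows are simply dropped here.

```python
import numpy as np

def sliding_windows(signal, look_back=188, offset=20):
    """Cut a (time, channels) sEMG recording into overlapping windows.

    Returns an array of shape (num_windows, look_back, channels).
    Windows that would run past the end of the recording are dropped.
    """
    num_steps, channels = signal.shape
    if num_steps < look_back:
        return np.empty((0, look_back, channels))
    starts = range(0, num_steps - look_back + 1, offset)
    return np.stack([signal[s:s + look_back] for s in starts])

# A toy 10 s recording at 200 Hz with the Myo's eight channels.
recording = np.random.default_rng(0).normal(size=(2000, 8))
windows = sliding_windows(recording)
print(windows.shape)  # (91, 188, 8)
```

For a 2000-step recording this yields 91 overlapping windows, each of which would carry the gesture label of the recording it came from.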

For the final part of the proposed network, we used a fully connected (FC) layer with seven neurons, matching the number of gestures. It uses the softmax activation function to map the raw outputs, including extreme values, to a probability distribution over the seven classes. The architecture is shown in Figure 2.

Figure 2.

Proposed neural network architecture for hand gesture recognition.

All the parameters, such as number of layers, number of filters per layer, activation functions and dropout rates, were empirically chosen. In our experiments, we used an Adam optimizer with a constant learning rate of 0.0001 and a batch size of 500 for 300 epochs. For the loss function, the categorical-crossentropy function was chosen.
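The architecture and training settings described above can be approximated in Keras as follows. This is a sketch under assumptions, not the authors’ code: the text does not state the convolutional activations (ReLU is assumed), the padding (Keras’ default `"valid"` is assumed) or the pooling stride (Keras’ default, equal to the pool size, is assumed), and `build_conv_gru` is a hypothetical name. Keras’ `Dropout(rate)` drops units with probability `rate`, so 0.5 matches the dropout described above.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

def build_conv_gru(look_back=188, channels=8, num_classes=7):
    """Conv1D feature extractor, two GRU layers and a softmax head."""
    model = keras.Sequential([
        keras.Input(shape=(look_back, channels)),
        # Two 1D convolutions with 64 filters, kernel size 3 (ReLU assumed).
        layers.Conv1D(64, 3, activation="relu"),
        layers.Conv1D(64, 3, activation="relu"),
        # Max pooling over windows of 3 time steps (stride defaults to 3).
        layers.MaxPooling1D(pool_size=3),
        # Two 1D convolutions with 128 filters, kernel size 3.
        layers.Conv1D(128, 3, activation="relu"),
        layers.Conv1D(128, 3, activation="relu"),
        layers.Dropout(0.5),
        # Temporal features: the first GRU passes its full sequence on.
        layers.GRU(150, return_sequences=True),
        layers.Dropout(0.5),
        layers.GRU(150),
        layers.Dropout(0.5),
        # One probability per gesture.
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-4),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model

model = build_conv_gru()
# Sanity check on a dummy batch of two windows.
probs = model.predict(np.zeros((2, 188, 8)), verbose=0)
print(probs.shape)  # (2, 7)
# Training as described in the text (x_train: windows, y_train: targets):
# model.fit(x_train, y_train, validation_data=(x_val, y_val),
#           batch_size=500, epochs=300)
```

With `"valid"` padding, the 188-step window shrinks to 186 and 184 after the first two convolutions, to 61 after pooling and to 57 before the GRU layers.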

3.3. 3D Game Experience

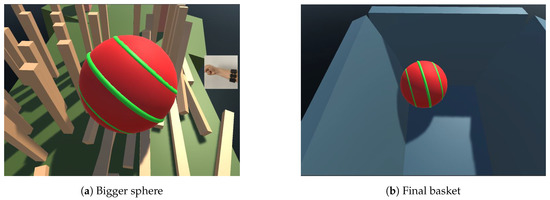

In the present work, we used the Unity engine to create a three-dimensional game based on hand gestures. The game includes a reward system to elicit positively valenced emotions and so facilitate the rehabilitation process. In the proposed game, 5 hand gestures control a sphere that must overcome different obstacles (by increasing speed, going backwards, jumping, or becoming bigger or smaller) and then fall into a basket (see Figure 3). The final goal of the game is to overcome all the obstacles and drop the sphere into the basket, which is designed to give patients the reward of successfully completing the task.

Figure 3.

Three-dimensional game.

3.4. System Description

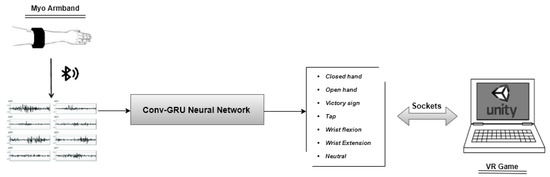

To send information from the neural network to the 3D game, we used socket connections. The sEMG data were transmitted via Bluetooth to the Conv-GRU neural network, which analyzed the signals and classified the gesture. The predicted gesture was then sent to the game through the socket connection, and Unity received the gesture class and ran the function associated with it. The system is shown in Figure 4.
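On the Python side, forwarding a prediction to the game can be as simple as the following sketch. The message format (the class index as a newline-terminated ASCII string), the function name `send_gesture`, and the address and port in the usage comment are assumptions; the text only states that the link is a socket identified by an IP address and port, and the Unity script must parse whichever format is chosen.

```python
import socket

NUM_GESTURES = 7  # classes produced by the network's softmax layer

def send_gesture(sock, class_index):
    """Send one predicted gesture class to the game as a text line."""
    if not 0 <= class_index < NUM_GESTURES:
        raise ValueError(f"unknown gesture index {class_index}")
    # sendall keeps sending until every byte has been handed to the OS.
    sock.sendall(f"{class_index}\n".encode("ascii"))

# Hypothetical usage against a game listening on localhost:5005:
# with socket.create_connection(("127.0.0.1", 5005)) as game:
#     send_gesture(game, predicted_class)
```

A newline delimiter lets the receiver split the TCP byte stream into messages, since TCP does not preserve message boundaries.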

Figure 4.

Proposed System.

4. Experiments and Results

To obtain realistic results, we divided the 15 subjects into five groups. To control for bias, every group was used once as a test set. We implemented leave-one-group-out cross-validation, and training was conducted on a GeForce GTX 1080 Ti GPU. In each training run, four groups were used to train the system, and their samples were split into 75% training data and 25% validation data. The validation data contained information from the same subjects as the training data, but recorded at different moments, and the neural network did not see them during training.
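The evaluation protocol can be sketched in plain Python as below. The random assignment of subjects to groups, the helper names, and the chronological 75/25 split (earlier windows for training, later ones for validation, one reading of "at different moments") are assumptions. scikit-learn users could obtain the same outer loop with `sklearn.model_selection.LeaveOneGroupOut`.

```python
import random

def make_groups(subject_ids, num_groups=5, seed=0):
    """Randomly partition subjects into equally sized groups."""
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)
    return [ids[i::num_groups] for i in range(num_groups)]

def leave_one_group_out(groups):
    """Yield (train_subjects, test_subjects); each group is held out once."""
    for k, test_group in enumerate(groups):
        train = [s for j, g in enumerate(groups) if j != k for s in g]
        yield train, list(test_group)

def split_train_val(windows, train_fraction=0.75):
    """Split time-ordered windows: earlier 75% train, later 25% validate."""
    cut = int(len(windows) * train_fraction)
    return windows[:cut], windows[cut:]

groups = make_groups(range(1, 16))
for train_subjects, test_subjects in leave_one_group_out(groups):
    print(len(train_subjects), len(test_subjects))  # 12 3 for every fold
```

Holding out whole subjects, rather than random windows, is what makes the test accuracy reflect performance on people the network has never seen.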

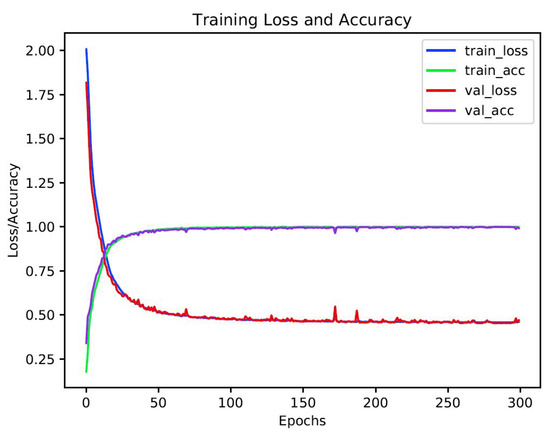

After 300 epochs, the training results were good enough to proceed to testing. With both labeling methods (one-hot vector and label smoothing), training accuracy was around 99.4–99.8%, and validation accuracy differed only marginally (see Figure 5).

Figure 5.

Loss and accuracy graph.

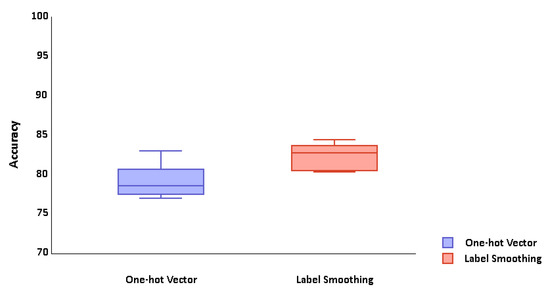

The training process was repeated five times for each method; each time, one group was left out for testing and the other four groups were used to train the system. This process took 11 h. The average cross-validation results differed considerably between the two methods: the average test accuracy was 79.07% for one-hot labeling and 82.15% for label smoothing (see Figure 6). Since label smoothing achieved about 3 percentage points higher test accuracy, we chose this method to train the neural network used in the rehabilitation system.

Figure 6.

Cross validation graph.

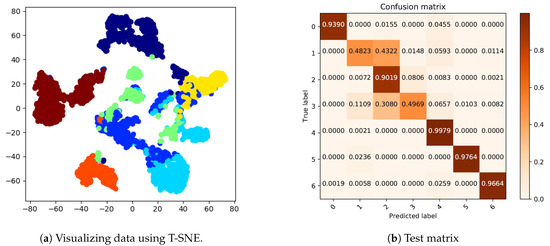

To visualize the network output, we applied t-distributed Stochastic Neighbor Embedding (t-SNE) [61], which reduces high-dimensional data to 2D while keeping similar samples close together. This nonlinear dimensionality reduction allowed us to see how well the proposed neural network extracted the characteristics of each hand gesture. Each cluster represents a hand gesture, and the clusters show that the network separated the sEMG signals of the different gestures (see Figure 7a).

Figure 7.

(a) t-SNE visualization; (b) confusion matrix.

In addition, to gather more details about the errors in hand gesture prediction, a confusion matrix was created (See Figure 7b).

As the confusion matrix shows, the system classified the closed hand, victory sign, wrist flexion, wrist extension and neutral gestures reasonably well (because of rounding, values in each row or column may deviate by up to ±0.05). However, the system had difficulty distinguishing the open hand and tap gestures from the others, and both were frequently confused with the victory sign gesture.
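A row-normalized confusion matrix of this kind can be computed as follows, assuming rows are true gestures, columns are predicted gestures and entries are rounded to one decimal place. Rounding an entry to one decimal changes it by at most 0.05, which is why a printed row need not sum exactly to 1. The function name and the toy labels are hypothetical.

```python
import numpy as np

def normalized_confusion(y_true, y_pred, num_classes=7, decimals=1):
    """Row-normalized confusion matrix (rows: true class, columns: predicted)."""
    counts = np.zeros((num_classes, num_classes))
    for t, p in zip(y_true, y_pred):
        counts[t, p] += 1
    row_totals = counts.sum(axis=1, keepdims=True)
    # Divide each row by its number of true samples; empty rows stay at 0.
    rates = np.divide(counts, row_totals, out=np.zeros_like(counts),
                      where=row_totals > 0)
    return np.round(rates, decimals)

# Toy example with three classes.
cm = normalized_confusion([0, 0, 0, 1, 1, 2], [0, 0, 2, 1, 2, 2], num_classes=3)
print(cm)
```

In the toy example the first row is 2/3 and 1/3 before rounding, which prints as 0.7 and 0.3.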

We then used five gestures (closed hand, tap, wrist flexion, wrist extension and neutral) to control the sphere in the game, and left the other two gestures unused so that future users can assign their own actions to them. The sphere passes obstacles by changing its size (becoming bigger or smaller), jumping, accelerating and going backwards.

Each gesture was predicted in 39 ms and sent to Unity through the socket. The socket connection was a two-way communication link between Python and Unity, identified by an IP address and a port number. However, 39 ms was not enough time for Unity to finish a movement before receiving the next command. To prevent commands from overlapping, a minimum delay of 1 s was applied between consecutive messages from the neural network to the game.
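One way to enforce the minimum delay is a small throttle that forwards a prediction only if at least 1 s has passed since the last one was sent, and drops the rest. The class name and the drop-rather-than-queue policy are assumptions (the text does not say whether intermediate predictions were discarded or the sender simply paused, for example with `time.sleep(1.0)` after each send); the clock is injectable so the behavior can be tested without waiting.

```python
import time

class CommandThrottle:
    """Allow a send only if min_interval seconds have passed since the last one."""

    def __init__(self, min_interval=1.0, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock
        self._last_sent = None

    def should_send(self):
        now = self.clock()
        if self._last_sent is None or now - self._last_sent >= self.min_interval:
            self._last_sent = now
            return True
        # Too soon after the previous command: drop this prediction.
        return False

# Hypothetical usage in the prediction loop (send_to_game is illustrative):
# throttle = CommandThrottle(min_interval=1.0)
# for predicted_class in predictions:  # one roughly every 39 ms
#     if throttle.should_send():
#         send_to_game(predicted_class)
```

With predictions arriving every 39 ms, this forwards roughly one command per second and lets the game finish each movement before the next one arrives.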

5. User Study

To obtain more realistic results, the system was tested live with 4 participants. All subjects were new, and their data had not been seen by the system during training. Participants were given a spoken explanation of the game and of the available gestures. Each subject was allowed two rounds to become familiar with the route and obstacles. A round takes approximately one minute to complete, and each participant played five rounds, with a two-minute rest between rounds (see Table 1).

Table 1.

Results for new subjects and their opinion.

Regarding the results of the live system and the users’ opinions, in the last round the participants found it difficult to perform the wrist flexion and wrist extension gestures properly because their hands were tired. However, the system still recognized the gestures correctly, and the main reason for lost rounds was poor timing of the gestures (each time the sphere hit the ground, the game was restarted). Regardless of the results, competitiveness and a focus on outcomes led the players to request more rounds.

6. Conclusions

This work proposed a new Conv-GRU architecture for gesture recognition and a 3D game for use in rehabilitation processes. The new approach was trained and tested on seven static hand gestures. During training, the system achieved 99.8% training accuracy and 99.48% validation accuracy, and when tested with new subjects it obtained 82.15% gesture recognition accuracy. An EMG-based system was designed to study its effectiveness in supporting rehabilitation, and four new subjects took part in a live test, playing a game in which they controlled a sphere with hand gestures. Our experimental results indicate that the Conv-GRU network is sufficiently accurate to be used in gesture recognition systems and that the proposed sensor-based 3D game can be extended for use in rehabilitation. A video demonstrating our results is available on YouTube (https://www.youtube.com/watch?v=ATNbnDGpMCk&feature=youtu.be).

In future work, we plan to add further hand gestures and harder levels to the rehabilitation 3D game. Additionally, it is important to find ways to increase the accuracy of the learning-based system and to extend it so that customized hand gestures can be added for each patient when needed.

Author Contributions

Authors contributed as follows: methodology, N.N.; software, N.N.; data acquisition, N.N.; validation, S.O.-E. and M.C.; investigation, N.N.; resources, N.N.; data curation, N.N.; writing—original draft preparation, N.N.; writing—review and editing, S.O.-E. and M.C.; visualization, N.N.; supervision, S.O.-E. and M.C.; project administration, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Spanish Government PID2019-104818RB-I00 grant, supported with Feder funds.

Acknowledgments

The authors would like to thank all the participants for taking part in the data collection experiments. We would also like to thank NVIDIA for the generous donation of a Titan Xp and a Quadro P6000.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Merians, A.S.; Poizner, H.; Boian, R.F.; Burdea, G.C.; Adamovich, S.V. Sensorimotor Training in a Virtual Reality Environment: Does It Improve Functional Recovery Poststroke? Neurorehabilit. Neural Repair 2006, 20, 252–267. [Google Scholar] [CrossRef]

- Ustinova, K.I.; Perkins, J.; Leonard, W.A.; Ingersoll, C.D.; Hausebeck, C. Virtual reality game-based therapy for persons with TBI: A pilot study. In Proceedings of the 2013 International Conference on Virtual Rehabilitation (ICVR), Philadelphia, PA, USA, 26–29 August 2013; pp. 87–93. [Google Scholar]

- Dukes, P.S.; Hayes, A.; Hodges, L.F.; Woodbury, M. Punching ducks for post-stroke neurorehabilitation: System design and initial exploratory feasibility study. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; pp. 47–54. [Google Scholar]

- Barresi, G.; Mazzanti, D.; Caldwell, D.; Brogni, A. Distractive User Interface for Repetitive Motor Tasks: A Pilot Study. In Proceedings of the 2013 Seventh International Conference on Complex, Intelligent, and Software Intensive Systems, Taichung, Taiwan, 3–5 July 2013; pp. 588–593. [Google Scholar]

- Placidi, G.; Avola, D.; Iacoviello, D.; Cinque, L. Overall design and implementation of the virtual glove. Comput. Biol. Med. 2013, 43, 1927–1940. [Google Scholar] [CrossRef] [PubMed]

- Gama, A.D.; Chaves, T.M.; Figueiredo, L.S.; Baltar, A.; Ma, M.; Navab, N.; Teichrieb, V.; Fallavollita, P. MirrARbilitation: A clinically-related gesture recognition interactive tool for an AR rehabilitation system. Comput. Methods Programs Biomed. 2016, 135, 105–114. [Google Scholar] [CrossRef] [PubMed]

- Brokaw, E.B.; Lum, P.S.; Cooper, R.A.; Brewer, B.R. Using the kinect to limit abnormal kinematics and compensation strategies during therapy with end effector robots. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–6. [Google Scholar]

- Rado, D.; Sankaran, A.; Plasek, J.M.; Nuckley, D.J.; Keefe, D.F. Poster: A Real-Time Physical Therapy Visualization Strategy to Improve Unsupervised Patient Rehabilitation. In Proceedings of the IEEE Visualization, Atlantic City, NJ, USA, 11–16 October 2009. [Google Scholar]

- Khademi, M.; Hondori, H.M.; Dodakian, L.; Cramer, S.; Lopes, C.V. Comparing “pick and place” task in spatial Augmented Reality versus non-immersive Virtual Reality for rehabilitation setting. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 4613–4616. [Google Scholar]

- Liao, Y.; Vakanski, A.; Xian, M. A deep learning framework for assessment of quality of rehabilitation exercises. arXiv 2019, arXiv:1901.10435. [Google Scholar]

- Liao, Y.; Vakanski, A.; Xian, M. A Deep Learning Framework for Assessing Physical Rehabilitation Exercises. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 468–477. [Google Scholar] [CrossRef]

- Cano Porras, D.; Siemonsma, P.; Inzelberg, R.; Zeilig, G.; Plotnik, M. Advantages of virtual reality in the rehabilitation of balance and gait. Neurology 2018, 90, 1017–1025. [Google Scholar] [CrossRef]

- Feng, H.; Li, C.; Liu, J.; Wang, L.; Ma, J.; Li, G.; Gan, L.; Shang, X.; Wu, Z. Virtual Reality Rehabilitation Versus Conventional Physical Therapy for Improving Balance and Gait in Parkinson’s Disease Patients: A Randomized Controlled Trial. Med. Sci. Monit. Int. Med. J. Exp. Clin. Res. 2019, 25, 4186–4192. [Google Scholar] [CrossRef]

- John, J.; Wild, J.; Franklin, T.D.; Woods, G.W. Patellar pain and quadriceps rehabilitation: An EMG study. Am. J. Sport. Med. 1982, 10, 12–15. [Google Scholar] [CrossRef]

- Mulas, M.; Folgheraiter, M.; Gini, G. An EMG-controlled exoskeleton for hand rehabilitation. In Proceedings of the 9th International Conference on Rehabilitation Robotics, Chicago, IL, USA, 28 June–1 July 2005; pp. 371–374. [Google Scholar]

- Sarasola-Sanz, A.; Irastorza-Landa, N.; López-Larraz, E.; Bibián, C.; Helmhold, F.; Broetz, D.; Birbaumer, N.; Ramos-Murguialday, A. A hybrid brain-machine interface based on EEG and EMG activity for the motor rehabilitation of stroke patients. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; pp. 895–900. [Google Scholar]

- Liu, L.; Chen, X.; Lu, Z.; Cao, S.; Wu, D.; Zhang, X. Development of an EMG-ACC-Based Upper Limb Rehabilitation Training System. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 244–253. [Google Scholar] [CrossRef]

- Berg, A.; Palomäki, H.; Lehtihalmes, M.; Lönnqvist, J.; Kaste, M. Poststroke depression: An 18-month follow-up. Stroke 2003, 34, 138–143. [Google Scholar] [CrossRef]

- Paolucci, S.; Antonucci, G.A.; Grasso, M.G.; Morelli, D.; Troisi, E.M.; Coiro, P.; Angelis, D.D.; Rizzi, F.; Bragoni, M. Post-stroke depression, antidepressant treatment and rehabilitation results. A case-control study. Cerebrovasc. Dis. 2001, 12, 264–271. [Google Scholar] [CrossRef] [PubMed]

- Rincon, A.L.; Yamasaki, H.; Shimoda, S. Design of a video game for rehabilitation using motion capture, EMG analysis and virtual reality. In Proceedings of the 2016 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 24–26 February 2016; pp. 198–204. [Google Scholar]

- Vourvopoulos, A.; Badia, S.B. Motor priming in virtual reality can augment motor-imagery training efficacy in restorative brain-computer interaction: A within-subject analysis. J. Neuroeng. Rehabil. 2016, 13, 1–14. [Google Scholar] [CrossRef] [PubMed]

- Perez-Marcos, D.; Chevalley, O.H.; Schmidlin, T.W.; Garipelli, G.; Serino, A.; Vuadens, P.; Tadi, T.; Blanke, O.; Millán, J.D.R. Increasing upper limb training intensity in chronic stroke using embodied virtual reality: A pilot study. J. Neuroeng. Rehabil. 2017, 14, 119. [Google Scholar] [CrossRef] [PubMed]

- Adamovich, S.V.; Fluet, G.G.; Tunik, E.; Merians, A.S. Sensorimotor training in virtual reality: A review. NeuroRehabilitation 2009, 25, 29–44. [Google Scholar] [CrossRef] [PubMed]

- Chen, K.H.; Chen, P.C.; Liu, K.C.; Chan, C.T. Wearable Sensor-Based Rehabilitation Exercise Assessment for Knee Osteoarthritis. Sensors 2015, 15, 4193–4211. [Google Scholar] [CrossRef] [PubMed]

- Saito, H.; Watanabe, T.; Arifin, A. Ankle and Knee Joint Angle Measurements during Gait with Wearable Sensor System for Rehabilitation. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering, Munich, Germany, 7–12 September 2009. [Google Scholar]

- Bonato, P. Advances in wearable technology and applications in physical medicine and rehabilitation. J. Neuroeng. Rehabil. 2005, 2, 2. [Google Scholar] [CrossRef] [PubMed]

- Patel, S.; Park, H.J.; Bonato, P.; Chan, L.E.; Rodgers, M.M. A review of wearable sensors and systems with application in rehabilitation. J. Neuroeng. Rehabil. 2011, 9, 21. [Google Scholar] [CrossRef]

- Leonardis, D.D.; Barsotti, M.; Loconsole, C.; Solazzi, M.; Troncossi, M.; Mazzotti, C.; Parenti-Castelli, V.; Procopio, C.; Lamola, G.; Chisari, C.; et al. An EMG-Controlled Robotic Hand Exoskeleton for Bilateral Rehabilitation. IEEE Trans. Haptics 2015, 8, 140–151. [Google Scholar] [CrossRef]

- Ganesan, Y.; Gobee, S.; Durairajah, V. Development of an Upper Limb Exoskeleton for Rehabilitation with Feedback from EMG and IMU Sensor. Procedia Comput. Sci. 2015, 76, 53–59.

- Abdallah, I.B.; Bouteraa, Y.; Rekik, C. Design and Development of 3D Printed Myoelectric Robotic Exoskeleton for Hand Rehabilitation. Int. J. Smart Sens. Intell. Syst. 2017, 10, 341–366.

- Kim, J.; Bee, N.; Wagner, J.; André, E. Emote to Win: Affective Interactions with a Computer Game Agent; Gesellschaft für Informatik e.V.: Bonn, Germany, 2004; pp. 159–164.

- Kim, J.; Mastnik, S.; André, E. EMG-based hand gesture recognition for realtime biosignal interfacing. In Proceedings of the 13th International Conference on Intelligent User Interfaces, Gran Canaria, Spain, 13–16 January 2008; pp. 30–39.

- Qi, J.; Jiang, G.; Li, G.; Sun, Y.; Tao, B. Surface EMG hand gesture recognition system based on PCA and GRNN. Neural Comput. Appl. 2019, 32, 6343–6351.

- Shi, W.T.; Lyu, Z.J.; Tang, S.T.; Chia, T.L.; Yang, C.Y. A bionic hand controlled by hand gesture recognition based on surface EMG signals: A preliminary study. Biocybern. Biomed. Eng. 2018, 38, 126–135.

- Su, H.; Ovur, S.E.; Zhou, X.; Qi, W.; Ferrigno, G.; Momi, E. Depth vision guided hand gesture recognition using electromyographic signals. Adv. Robot. 2020, 34, 985–997.

- Geng, W.; Du, Y.M.; Jin, W.; Wei, W.; Hu, Y.H.; Li, J. Gesture recognition by instantaneous surface EMG images. Sci. Rep. 2016, 6, 36571.

- Amma, C.; Krings, T.; Böer, J.; Schultz, T. Advancing Muscle-Computer Interfaces with High-Density Electromyography. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015.

- Atzori, M.; Gijsberts, A.; Heynen, S.; Hager, A.G.M.; Deriaz, O.; van der Smagt, P.; Castellini, C.; Caputo, B.; Müller, H. Building the Ninapro database: A resource for the biorobotics community. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 1258–1265.

- Pizzolato, S.; Tagliapietra, L.; Cognolato, M.; Reggiani, M.; Müller, H.; Atzori, M. Comparison of six electromyography acquisition setups on hand movement classification tasks. PLoS ONE 2017, 12, e0186132.

- Nasri, N.; Orts, S.; Gomez-Donoso, F.; Cazorla, M. Inferring Static Hand Poses from a Low-Cost Non-Intrusive sEMG Sensor. Sensors 2019, 19, 371.

- Nasri, N.; Gomez-Donoso, F.; Orts, S.; Cazorla, M. Using Inferred Gestures from sEMG Signal to Teleoperate a Domestic Robot for the Disabled. In Proceedings of the IWANN, Gran Canaria, Spain, 12–14 June 2019.

- Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control 2007, 2, 275–294.

- Allard, U.C.; Nougarou, F.; Fall, C.L.; Giguère, P.; Gosselin, C.; Laviolette, F.; Gosselin, B. A convolutional neural network for robotic arm guidance using sEMG based frequency-features. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2464–2470.

- Du, Y.; Wong, Y.; Jin, W.; Wei, W.; Hu, Y.; Kankanhalli, M.S.; Geng, W. Semi-Supervised Learning for Surface EMG-based Gesture Recognition. In Proceedings of the IJCAI, Melbourne, Australia, 19–25 August 2017.

- Liu, G.; Zhang, L.; Han, B.; Zhang, T.; Wang, Z.; Wei, P. sEMG-Based Continuous Estimation of Knee Joint Angle Using Deep Learning with Convolutional Neural Network. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22–26 August 2019; pp. 140–145.

- Zhang, J.; Dai, J.; Chen, S.; Xu, G.; Gao, X. Design of Finger Exoskeleton Rehabilitation Robot Using the Flexible Joint and the MYO Armband. In Proceedings of the ICIRA, Shenyang, China, 8–11 August 2019.

- Longo, B.; Sime, M.M.; Bastos-Filho, T. Serious Game Based on Myo Armband for Upper-Limb Rehabilitation Exercises. In Proceedings of the XXVI Brazilian Congress on Biomedical Engineering, Armacao de Buzios, Brazil, 21–25 October 2019.

- Widodo, M.S.; Zikky, M.; Nurindiyani, A.K. Guide Gesture Application of Hand Exercises for Post-Stroke Rehabilitation Using Myo Armband. In Proceedings of the 2018 International Electronics Symposium on Knowledge Creation and Intelligent Computing (IES-KCIC), Bali, Indonesia, 29–30 October 2018; pp. 120–124.

- Cho, K.; van Merrienboer, B.; Gülçehre, Ç.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the EMNLP, Doha, Qatar, 25–29 October 2014.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2015, arXiv:1409.0473.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. arXiv 2014, arXiv:1409.3215.

- Chung, J.; Gülçehre, Ç.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Ballas, N.; Yao, L.; Pal, C.J.; Courville, A.C. Delving Deeper into Convolutional Networks for Learning Video Representations. arXiv 2016, arXiv:1511.06432.

- Jiang, R.; Zhao, L.; Wang, T.; Wang, J.; Zhang, X. Video Deblurring via Temporally and Spatially Variant Recurrent Neural Network. IEEE Access 2020, 8, 7587–7597.

- Tian, L.; Li, X.; Ye, Y.; Xie, P.; Li, Y. A Generative Adversarial Gated Recurrent Unit Model for Precipitation Nowcasting. IEEE Geosci. Remote Sens. Lett. 2020, 17, 601–605.

- Asadi-Aghbolaghi, M.; Clapes, A.; Bellantonio, M.; Escalante, H.J.; Ponce-López, V.; Baró, X.; Guyon, I.; Kasaei, S.; Escalera, S. A survey on deep learning based approaches for action and gesture recognition in image sequences. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 476–483.

- Devineau, G.; Moutarde, F.; Xi, W.; Yang, J. Deep learning for hand gesture recognition on skeletal data. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 106–113.

- Wei, W.; Dai, Q.; Wong, Y.; Hu, Y.; Kankanhalli, M.; Geng, W. Surface-Electromyography-Based Gesture Recognition by Multi-View Deep Learning. IEEE Trans. Biomed. Eng. 2019, 66, 2964–2973.

- Atzori, M.; Cognolato, M.; Müller, H. Deep learning with convolutional neural networks applied to electromyography data: A resource for the classification of movements for prosthetic hands. Front. Neurorobotics 2016, 10, 9.

- Wang, P.; Song, Q.; Han, H.; Cheng, J. Sequentially supervised long short-term memory for gesture recognition. Cogn. Comput. 2016, 8, 982–991.

- Maaten, L.v.d.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).