Dental Caries Diagnosis and Detection Using Neural Networks: A Systematic Review

Abstract

1. Introduction

“Dental caries is a biofilm-mediated, diet-modulated, multifactorial, non-communicable, dynamic disease resulting in net mineral loss of dental hard tissues. It is determined by biological, behavioral, psychosocial, and environmental factors. As a consequence of this process, a caries lesion develops”.[10]

2. Materials and Methods

2.1. Review Questions

- (1) What are the neural networks used to detect and diagnose dental caries?

- (2) How is the database used in the construction of these networks?

- (3) How are caries lesions defined, and in which teeth are they detected?

- (4) What are the outcome metrics, and what values do these neural networks obtain?
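
Question (4) concerns outcome metrics. Most of those reported by the included studies (accuracy, sensitivity, specificity, PPV, NPV, precision, false positive rate, F1) derive from the same 2 × 2 table of model decisions against the reference standard, while AUC summarizes the ROC curve over all thresholds. The Python sketch below is a hedged illustration of the textbook definitions, not code from any included study; the `ConfusionMatrix` class, the `diagnostic_metrics` and `roc_auc` helpers, and all counts and scores are hypothetical. AUC is computed with the rank (Mann–Whitney) formulation, which equals the area under the empirical ROC curve.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts for a binary caries / no-caries decision (hypothetical example)."""
    tp: int  # carious surfaces flagged as carious
    fp: int  # sound surfaces flagged as carious
    tn: int  # sound surfaces flagged as sound
    fn: int  # carious surfaces missed by the model


def diagnostic_metrics(cm: ConfusionMatrix) -> dict:
    """Threshold-dependent metrics computed from one confusion matrix."""
    total = cm.tp + cm.fp + cm.tn + cm.fn
    sensitivity = cm.tp / (cm.tp + cm.fn)  # recall, true positive rate
    specificity = cm.tn / (cm.tn + cm.fp)  # true negative rate
    ppv = cm.tp / (cm.tp + cm.fp)          # positive predictive value = precision
    npv = cm.tn / (cm.tn + cm.fn)          # negative predictive value
    return {
        "accuracy": (cm.tp + cm.tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "false_positive_rate": 1 - specificity,
        "f1": 2 * ppv * sensitivity / (ppv + sensitivity),
    }


def roc_auc(scores_positive, scores_negative) -> float:
    """AUC as the probability that a random carious case scores higher than a
    random sound case (Mann-Whitney formulation; ties count as one half).
    Both lists must be non-empty."""
    wins = 0.0
    for p in scores_positive:
        for n in scores_negative:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))


if __name__ == "__main__":
    cm = ConfusionMatrix(tp=40, fp=10, tn=45, fn=5)
    for name, value in diagnostic_metrics(cm).items():
        print(f"{name}: {value:.3f}")
    print("AUC:", roc_auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2]))
```

With these hypothetical counts, accuracy is 0.85, PPV 0.80, NPV 0.90, and F1 = 2TP/(2TP + FP + FN) = 80/95 ≈ 0.842; the AUC example ranks 8 of 9 positive-negative pairs correctly, giving 8/9.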

2.2. Search Strategy

2.3. Study Selection and Items Collected

2.4. Inclusion and Exclusion Criteria

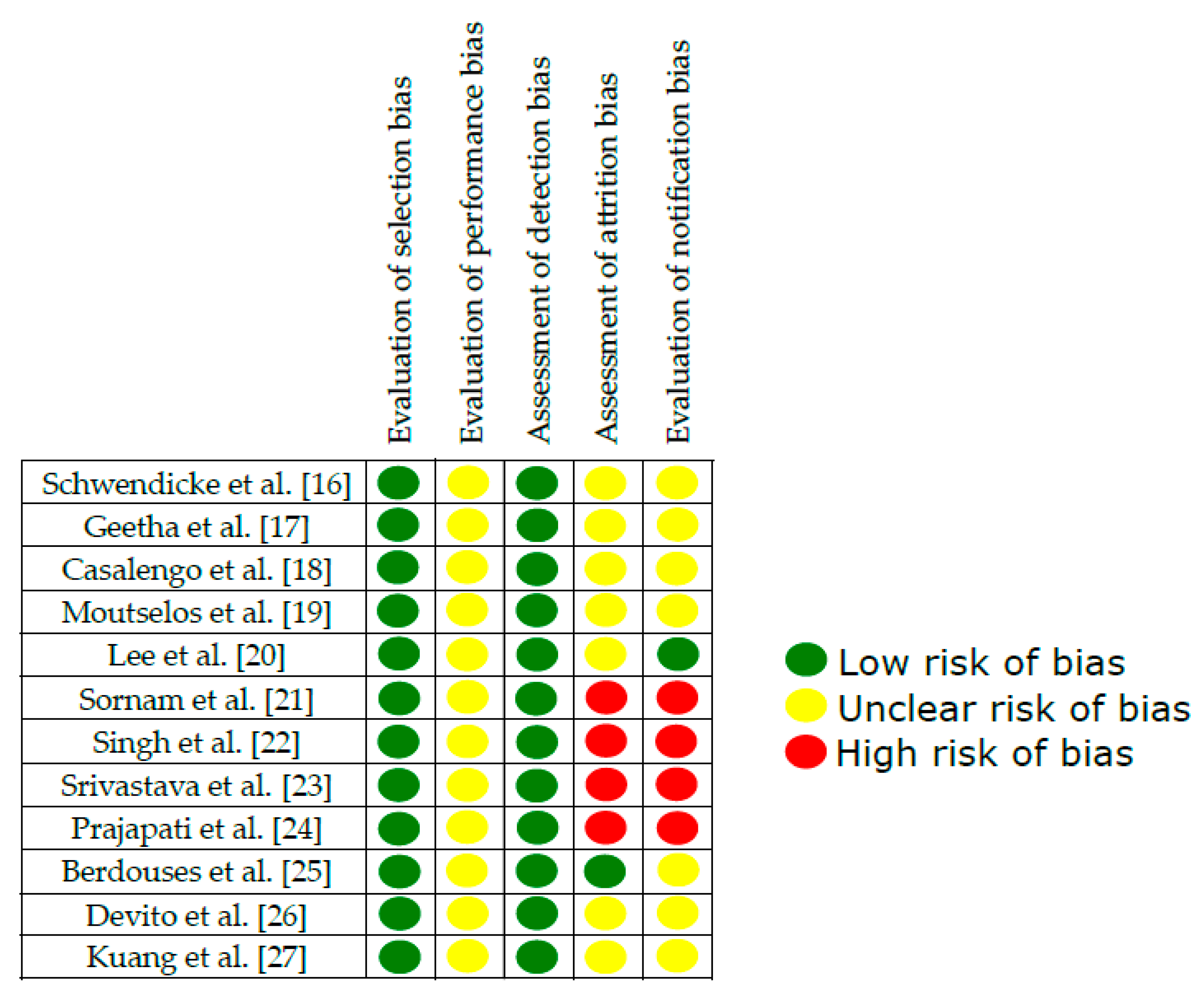

2.5. Study Quality Assessment

2.6. Statistical Analysis

3. Results

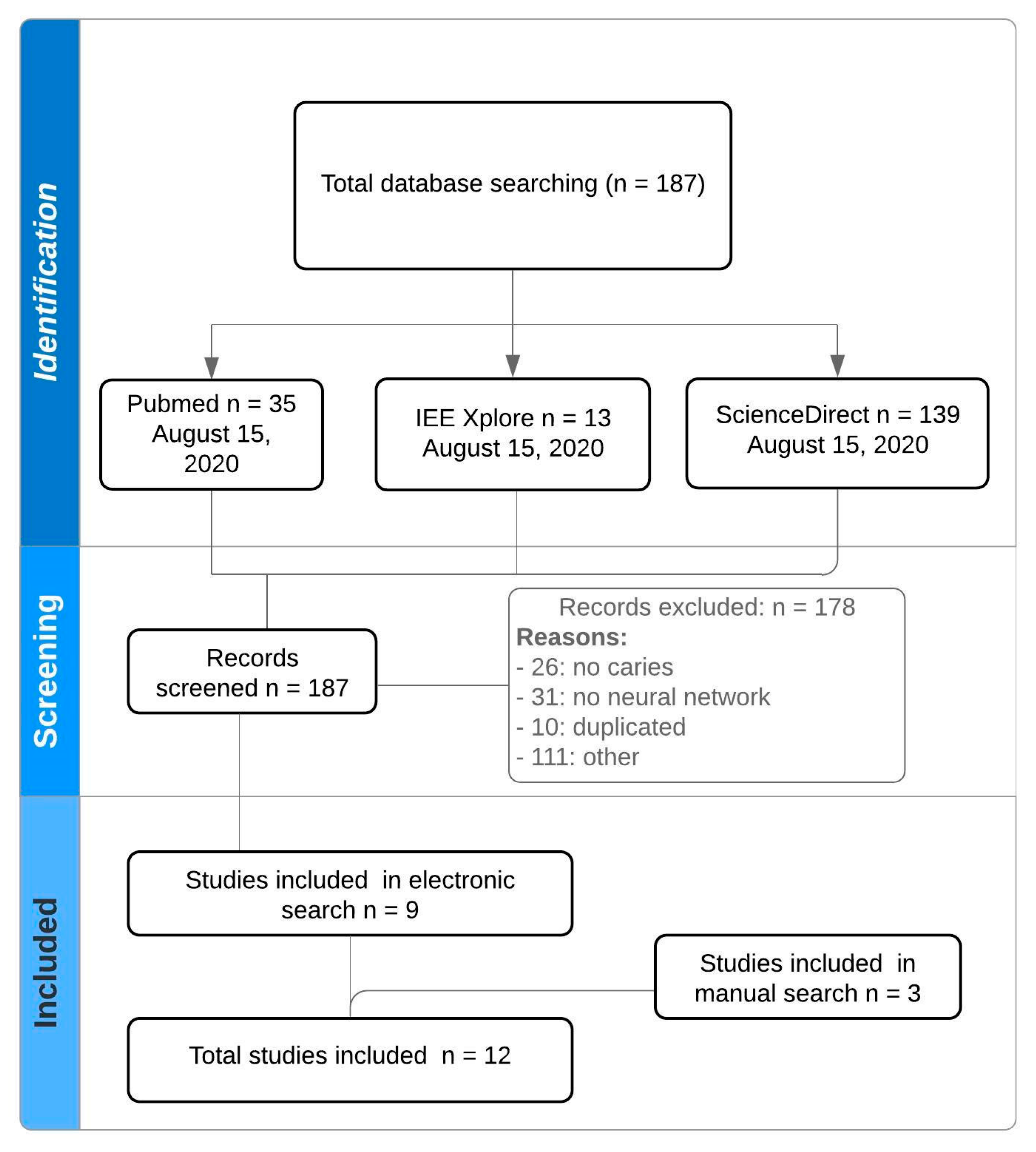

3.1. Study Selection

3.2. Relevant Data about the Image Database and Neural Network of the Included Studies

3.3. Relevant Data about Caries of the Included Studies

3.4. Study Quality Assessment

4. Discussion

Author Contributions

Funding

Conflicts of Interest

References

1. Pauwels, R. A brief introduction to concepts and applications of artificial intelligence in dental imaging. Oral Radiol. 2020.

2. Chen, Y.W.; Stanley, K.; Att, W. Artificial intelligence in dentistry: Current applications and future perspectives. Quintessence Int. 2020, 51, 248–257.

3. Kohli, M.; Prevedello, L.M.; Filice, R.W.; Geis, J.R. Implementing Machine Learning in Radiology Practice and Research. Am. J. Roentgenol. 2017, 208, 754–760.

4. Clarke, A.M.; Friedrich, J.; Tartaglia, E.M.; Marchesotti, S.; Senn, W.; Herzog, M.H. Human and Machine Learning in Non-Markovian Decision Making. PLoS ONE 2015, 10, e0123105.

5. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

6. Schwendicke, F.; Golla, T.; Dreher, M.; Krois, J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 2019, 91, 103226.

7. Mazurowski, M.A.; Buda, M.; Saha, A.; Bashir, M.R. Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. J. Magn. Reson. Imaging 2019, 49, 939–954.

8. Schwendicke, F.; Tzschoppe, M.; Paris, S. Radiographic caries detection: A systematic review and meta-analysis. J. Dent. 2015, 43, 924–933.

9. Gupta, M.; Srivastava, N.; Sharma, M.; Gugnani, N.; Pandit, I. International Caries Detection and Assessment System (ICDAS): A New Concept. Int. J. Clin. Pediatr. Dent. 2011, 4, 93–100.

10. Machiulskiene, V.; Campus, G.; Carvalho, J.C.; Dige, I.; Ekstrand, K.R.; Jablonski-Momeni, A.; Maltz, M.; Manton, D.J.; Martignon, S.; Martinez-Mier, E.A.; et al. Terminology of Dental Caries and Dental Caries Management: Consensus Report of a Workshop Organized by ORCA and Cariology Research Group of IADR. Caries Res. 2020, 54, 7–14.

11. Dikmen, B. Icdas II Criteria (International Caries Detection and Assessment System). J. Istanb. Univ. Fac. Dent. 2015, 49, 63.

12. Valizadeh, S.; Tavakkoli, M.A.; Vasigh, H.K.; Azizi, Z.; Zarrabian, T. Evaluation of Cone Beam Computed Tomography (CBCT) System: Comparison with Intraoral Periapical Radiography in Proximal Caries Detection. J. Dent. Res. Dent. Clin. Dent. Prospect. 2012, 6, 1–5.

13. Abogazalah, N.; Ando, M. Alternative methods to visual and radiographic examinations for approximal caries detection. J. Oral Sci. 2017, 59, 315–322.

14. Zhang, Z.; Qu, X.; Li, G.; Zhang, Z.; Ma, X. The detection accuracies for proximal caries by cone-beam computerized tomography, film, and phosphor plates. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2011, 111, 103–108.

15. Centre for Reviews and Dissemination. Systematic Reviews: CRD Guidance for Undertaking Reviews in Health Care; University of York: York, UK, 2009; ISBN 978-1-900640-47-3.

16. Schwendicke, F.; Elhennawy, K.; Paris, S.; Friebertshäuser, P.; Krois, J. Deep Learning for Caries Lesion Detection in Near-Infrared Light Transillumination Images: A Pilot Study. J. Dent. 2019, 103260.

17. Geetha, V.; Aprameya, K.S.; Hinduja, D.M. Dental caries diagnosis in digital radiographs using back-propagation neural network. Health Inf. Sci. Syst. 2020, 8, 8–14.

18. Casalegno, F.; Newton, T.; Daher, R.; Abdelaziz, M.; Lodi-Rizzini, A.; Schürmann, F.; Krejci, I.; Markram, H. Caries Detection with Near-Infrared Transillumination Using Deep Learning. J. Dent. Res. 2019, 98, 1227–1233.

19. Moutselos, K.; Berdouses, E.; Oulis, C.; Maglogiannis, I. Recognizing Occlusal Caries in Dental Intraoral Images Using Deep Learning. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 1617–1620.

20. Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.

21. Sornam, M.; Prabhakaran, M. A new linear adaptive swarm intelligence approach using back propagation neural network for dental caries classification. In Proceedings of the IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), Chennai, India, 21–22 September 2017; pp. 2698–2703.

22. Singh, P.; Sehgal, P. Automated caries detection based on Radon transformation and DCT. In Proceedings of the 8th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Delhi, India, 3–5 July 2017; pp. 1–6.

23. Srivastava, M.M.; Kumar, P.; Pradhan, L.; Varadarajan, S. Detection of Tooth caries in Bitewing Radiographs using Deep Learning. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; p. 4.

24. Prajapati, S.A.; Nagaraj, R.; Mitra, S. Classification of dental diseases using CNN and transfer learning. In Proceedings of the 5th International Symposium on Computational and Business Intelligence (ISCBI), Dubai, UAE, 11–14 August 2017; pp. 70–74.

25. Berdouses, E.D.; Koutsouri, G.D.; Tripoliti, E.E.; Matsopoulos, G.K.; Oulis, C.J.; Fotiadis, D.I. A computer-aided automated methodology for the detection and classification of occlusal caries from photographic color images. Comput. Biol. Med. 2015, 62, 119–135.

26. Devito, K.L.; de Souza Barbosa, F.; Filho, W.N.F. An artificial multilayer perceptron neural network for diagnosis of proximal dental caries. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2008, 106, 879–884.

27. Kuang, W.; Ye, W. A Kernel-Modified SVM Based Computer-Aided Diagnosis System in Initial Caries. In Proceedings of the Second International Symposium on Intelligent Information Technology Application, Shanghai, China, 20–22 December 2008; pp. 207–211.

28. Bussaneli, D.G.; Boldieri, T.; Diniz, M.B.; Lima Rivera, L.M.; Santos-Pinto, L.; Cordeiro, R.D.C.L. Influence of professional experience on detection and treatment decision of occlusal caries lesions in primary teeth. Int. J. Paediatr. Dent. 2015, 25, 418–427.

29. Burnham, K.P.; Anderson, D.R. Model Selection and Multimodel Inference; Burnham, K.P., Anderson, D.R., Eds.; Springer: New York, NY, USA, 2004; ISBN 978-0-387-95364-9.

30. Mutasa, S.; Sun, S.; Ha, R. Understanding artificial intelligence based radiology studies: What is overfitting? Clin. Imaging 2020, 65, 96–99.

31. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

32. Shokri, A.; Kasraei, S.; Lari, S.; Mahmoodzadeh, M.; Khaleghi, A.; Musavi, S.; Akheshteh, V. Efficacy of denoising and enhancement filters for detection of approximal and occlusal caries on digital intraoral radiographs. J. Conserv. Dent. 2018, 21, 162.

33. Belém, M.D.F.; Ambrosano, G.M.B.; Tabchoury, C.P.M.; Ferreira-Santos, R.I.; Haiter-Neto, F. Performance of digital radiography with enhancement filters for the diagnosis of proximal caries. Braz. Oral Res. 2013, 27, 245–251.

34. Kositbowornchai, S.; Basiw, M.; Promwang, Y.; Moragorn, H.; Sooksuntisakoonchai, N. Accuracy of diagnosing occlusal caries using enhanced digital images. Dentomaxillofac. Radiol. 2004, 33, 236–240.

35. Bulman, J.S.; Osborn, J.F. Measuring diagnostic consistency. Br. Dent. J. 1989, 166, 377–381.

| Database | Search Strategy | Search Date |
|---|---|---|
| MEDLINE/PubMed | (deep learning OR artificial intelligence OR neural network*) AND caries NOT review | 15 August 2020 |
| IEEE Xplore | (deep learning OR artificial intelligence OR neural network) AND caries AND (detect OR detection OR diagnosis) | 15 August 2020 |
| ScienceDirect | (deep learning OR artificial intelligence OR neural network) AND caries AND (detect OR detection OR diagnosis) | 15 August 2020 |

| Authors | Neural Network Task | Image Type | Total Images | Database Characteristics (Pixels and Examiners) | Neural Network | Image Exclusion Criterion | Database Modification (Resizing and Other) | Journal | Year |
|---|---|---|---|---|---|---|---|---|---|
| Schwendicke et al. [16] | Classification | Near-infrared light transillumination | 226 | Pixels: 435 × 407 × 3. Examiners: two (clinical experience, 8–11 years) | ResNet18, ResNeXt50 | - | Resized to 224 × 224 pixels | Journal of Dentistry | 2020 |
| Geetha et al. [17] | Classification | Intraoral digital radiography | 105 | Pixels: -. Examiners: a dentist | ANN with 10-fold cross-validation | - | Resized to 256 × 256 pixels | Health Information Science and Systems | 2020 |
| Casalegno et al. [18] | Segmentation | Near-infrared transillumination | 217 | Pixels: -. Examiners: experts | CNN trained on a semantic segmentation task | - | Resized to 256 × 320 pixels | Journal of Dental Research | 2019 |
| Moutselos et al. [19] | Segmentation and classification | In vivo, intraoral camera | 87 | - | Mask R-CNN, which extends Faster R-CNN by adding an FCN for predicting object masks | | - | Conf Proc IEEE Eng Med Biol Soc | 2019 |
| Lee et al. [20] | Classification | Periapical | 3000 | Pixels: -. Examiners: four calibrated board-certified dentists | CNN | | Resized to 299 × 299 pixels. Other: standardized contrast between gray/white matter and lesions | Journal of Dentistry | 2018 |
| Sornam et al. [21] | Classification | Periapical | 120 | - | Feedforward neural network | - | - | IEEE International Conference on Power, Control, Signals, and Instrumentation Engineering (ICPCSI-2017) | 2017 |
| Singh et al. [22] | Detection | Panoramic radiographs | 93 | - | Radon transformation (RT) and discrete cosine transformation (DCT) | - | Resized to 500 × 500 pixels | 2017 8th International Conference on Computing, Communication and Networking Technologies (ICCCNT) | 2017 |
| Srivastava et al. [23] | Segmentation | Bitewing | 3000 | Pixels: -. Examiners: certified dentists | FCNN (deep fully convolutional neural network) | - | - | NIPS 2017 Workshop on Machine Learning for Health (ML4H) | 2017 |
| Prajapati et al. [24] | Classification | Radiovisiography | 251 | - | CNN | - | Resized to 500 × 748 pixels | 5th International Symposium on Computational and Business Intelligence | 2017 |
| Berdouses et al. [25] | Detection and classification | - | 103 | Pixels: -. Examiners: two | - | - | - | Computers in Biology and Medicine | 2015 |
| Devito et al. [26] | Detection | Bitewing | 160 | Pixels: -. Examiners: 25 | Multilayer perceptron neural network | - | - | Oral Surg Oral Med Oral Pathol Oral Radiol Endod | 2008 |
| Kuang et al. [27] | Segmentation | X-ray images | - | Pixels: 1000 × 800. Examiners: - | Back-propagation neural network | - | - | Second International Symposium on Intelligent Information Technology Application | 2008 |
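
Geetha et al. [17] report an ANN evaluated with 10-fold cross-validation on 105 radiographs. As a minimal sketch of what that resampling scheme does, assuming a simple shuffled split (the study's exact fold assignment is not described here), the hypothetical helper `k_fold_indices` below places every image in exactly one test fold and trains on the remaining images, so each image is scored once by a model that never saw it during training.

```python
import random


def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Hypothetical helper: each sample index appears in exactly one test fold,
    and fold sizes differ by at most one.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # the first `extra` folds get one more sample
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


if __name__ == "__main__":
    # 105 images as in the Geetha et al. [17] row; the split itself is illustrative.
    for fold, (train, test) in enumerate(k_fold_indices(105, k=10)):
        print(fold, len(train), len(test))
```

For 105 images and k = 10, five folds hold 11 test images and five hold 10. One design caveat: when several images come from the same patient, splitting by image can put views of one patient in both training and test folds and inflate the estimate; assigning folds by patient avoids this.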

| Authors | Type of Study | Caries Definition | Caries Type Detected | Teeth | Outcome Metrics | Outcome Metric Values |
|---|---|---|---|---|---|---|
| Schwendicke et al. [16] | in vitro | - | Occlusal and/or proximal caries | Premolars and molars | AUC, sensitivity, specificity, and positive/negative predictive values | 0.74, 0.59, 0.76, 0.63, and 0.73 |
| Geetha et al. [17] | in vitro | Loss of mineralization of tooth structures (radiolucent) | - | - | Accuracy, false positive rate, ROC, and precision | 0.971, 0.028, 0.987 |
| Casalegno et al. [18] | clinical | - | - | Upper and lower molars and premolars | IoU/AUC | 72.7/83.6 and 85.6% |
| Moutselos et al. [19] | | Classified from 1 to 6 using the ICDAS II classification system | Caries on occlusal surfaces | - | Accuracy | 0.889 |
| Lee et al. [20] | in vitro | - | Dental caries, including enamel and dentinal carious lesions | Premolar, molar, and both premolar and molar | Accuracy, sensitivity, specificity, PPV, NPV, ROC curve, and AUC | 82, 81, 83, 82.7, 81.4 |
| Sornam et al. [21] | in vitro | - | - | - | Accuracy | 99% |
| Singh et al. [22] | in vitro | - | - | - | Accuracy | 86% |
| Srivastava et al. [23] | in vitro | - | - | - | Recall/Precision/F1-score | 0.805/0.615/0.7 |
| Prajapati et al. [24] | in vitro | - | - | - | Accuracy | 0.875 |
| Berdouses et al. [25] | in vitro | ICDAS II | Precavitated and cavitated occlusal lesions | Posterior extracted human teeth | Accuracy | 80% |
| Devito et al. [26] | in vitro | - | Sound, enamel caries, enamel-dentine junction caries, and dentinal caries | Premolars and molars | ROC | 0.717 |
| Kuang et al. [27] | in vitro | - | Initial caries | - | Accuracy | 68.57% |
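
Two of the reported metrics can be checked or interpreted directly. F1 is the harmonic mean of precision and recall, so for Srivastava et al. [23] 2(0.615)(0.805)/(0.615 + 0.805) ≈ 0.697, consistent with the reported 0.7. IoU, reported by Casalegno et al. [18] for segmentation, is the number of pixels labelled carious in both the predicted and the reference mask divided by the number labelled carious in either. The sketch below assumes binary masks stored as nested lists of 0/1; `f1_score` and `iou` are hypothetical helper names, and scoring two empty masks as IoU = 1 is a convention chosen here.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def iou(mask_a, mask_b) -> float:
    """Intersection over union of two equal-sized binary masks (nested lists of 0/1)."""
    if len(mask_a) != len(mask_b) or any(len(a) != len(b) for a, b in zip(mask_a, mask_b)):
        raise ValueError("masks must have the same shape")
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += int(bool(a) and bool(b))  # carious in both masks
            union += int(bool(a) or bool(b))          # carious in either mask
    # Convention (assumption): two empty masks agree perfectly.
    return intersection / union if union else 1.0


if __name__ == "__main__":
    # Precision and recall reported by Srivastava et al. [23].
    print(round(f1_score(0.615, 0.805), 3))
    predicted = [[1, 1, 0], [0, 1, 0]]  # hypothetical model mask
    reference = [[1, 0, 0], [0, 1, 1]]  # hypothetical expert mask
    print(iou(predicted, reference))
```

In the toy masks, two pixels are carious in both and four in either, so IoU is 2/4 = 0.5.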


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Prados-Privado, M.; García Villalón, J.; Martínez-Martínez, C.H.; Ivorra, C.; Prados-Frutos, J.C. Dental Caries Diagnosis and Detection Using Neural Networks: A Systematic Review. J. Clin. Med. 2020, 9, 3579. https://doi.org/10.3390/jcm9113579
