A Novel Deep Learning Model for Sea State Classification Using Visual-Range Sea Images

Abstract

1. Introduction

1.1. Related Work

1.1.1. Sea State Datasets

1.1.2. Sea State Classification Using Deep Learning Models

1.2. Problem Statement

| Item | Description |
|---|---|
| Research Question 1 | How can a large-scale visual-range sea state image dataset be designed, built, and tested for deep learning-based image classification models? |
| Research Objective 1 | To design and develop a novel large-scale visual-range sea state image dataset suitable for deep learning-based image classification models. |
| Research Question 2 | How can sea states be optimally classified from visual-range sea surface images using a deep learning model? |
| Research Objective 2 | To develop and validate a novel deep learning-based sea state classification model that optimally classifies sea states from visual-range sea images. |

- (a) Formulation of comprehensive guidelines for developing a novel visual-range sea state image dataset.

- (b) Development of a novel visual-range sea state image dataset for training and testing deep learning-based sea state image classification models.

- (c) Comprehensive benchmarking of state-of-the-art deep learning classification models on the developed sea state image dataset.

- (d) Development of a novel deep learning-based sea state image classification model for sea state monitoring at coastal and offshore locations.

2. Materials and Methods

2.1. Novel Visual-Range Sea State Image Dataset Design and Development

2.1.1. Ground Truth Reference for the Identification of Sea States in an Image

2.1.2. Sensor Selection, Setup, and Calibration

- (i) The dates and internal clocks of the weather station and the video camera are synchronized.

- (ii) The weather station is set up by entering the required parameters, such as the unit of measure, the longitude and latitude of the field observation site, the estimated height of the weather station above sea level, and the wireless data transmission parameters.

- (iii) The weather station is carefully mounted at a location where the wind flow is not obstructed by any surrounding object.

- (iv) The weather station is made operational before video recording starts, and data transmission between the weather station and the data logger is verified.

- (v) The video camera is either mounted on a tripod or handheld.

- (vi) The camera's field of view is set to either the sea surface alone or the sea surface plus the sky.

- (vii) Audio recording is disabled, and videos are recorded in auto mode at a maximum resolution of 1920 × 1080 pixels.

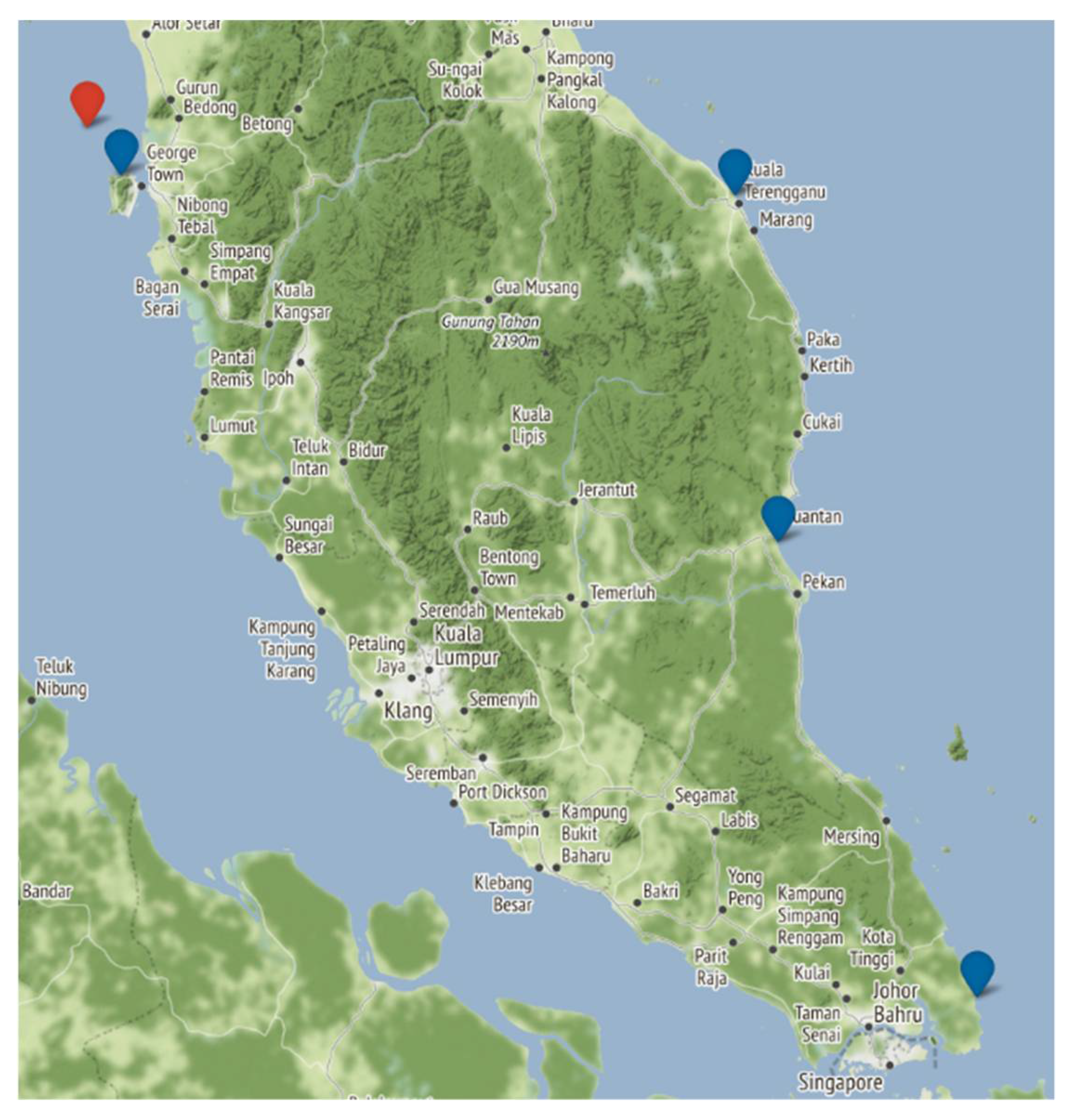

2.1.3. Selecting a Field Observation Site

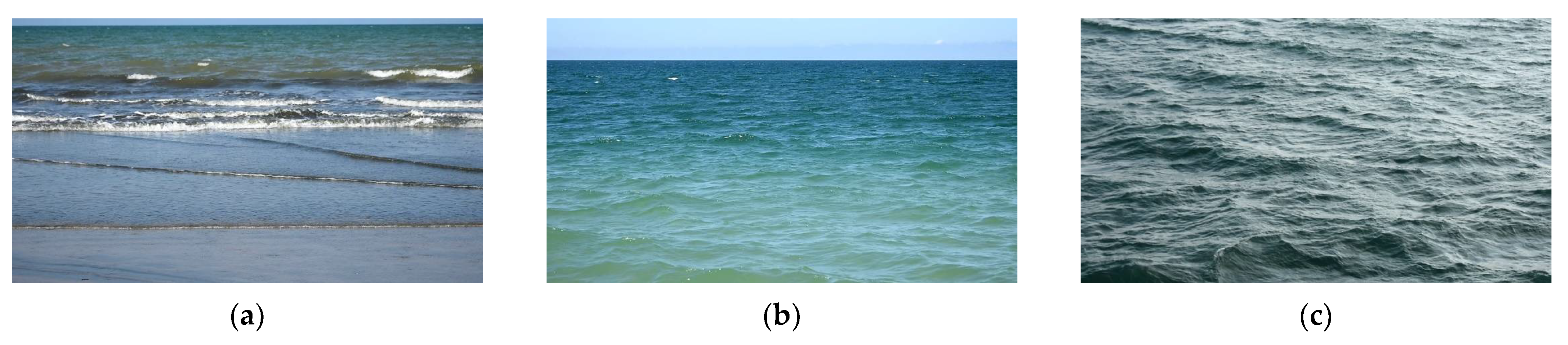

2.1.4. Achieving Illumination and Weather Feature Diversity

2.1.5. Defining Optimal Range of Image Instances per Class for the Dataset

2.2. Data Collection and Preprocessing

2.2.1. Wind and Video Data Collection and Preprocessing

2.2.2. Sea State Estimation in Video

2.2.3. Image Extraction from Video Source

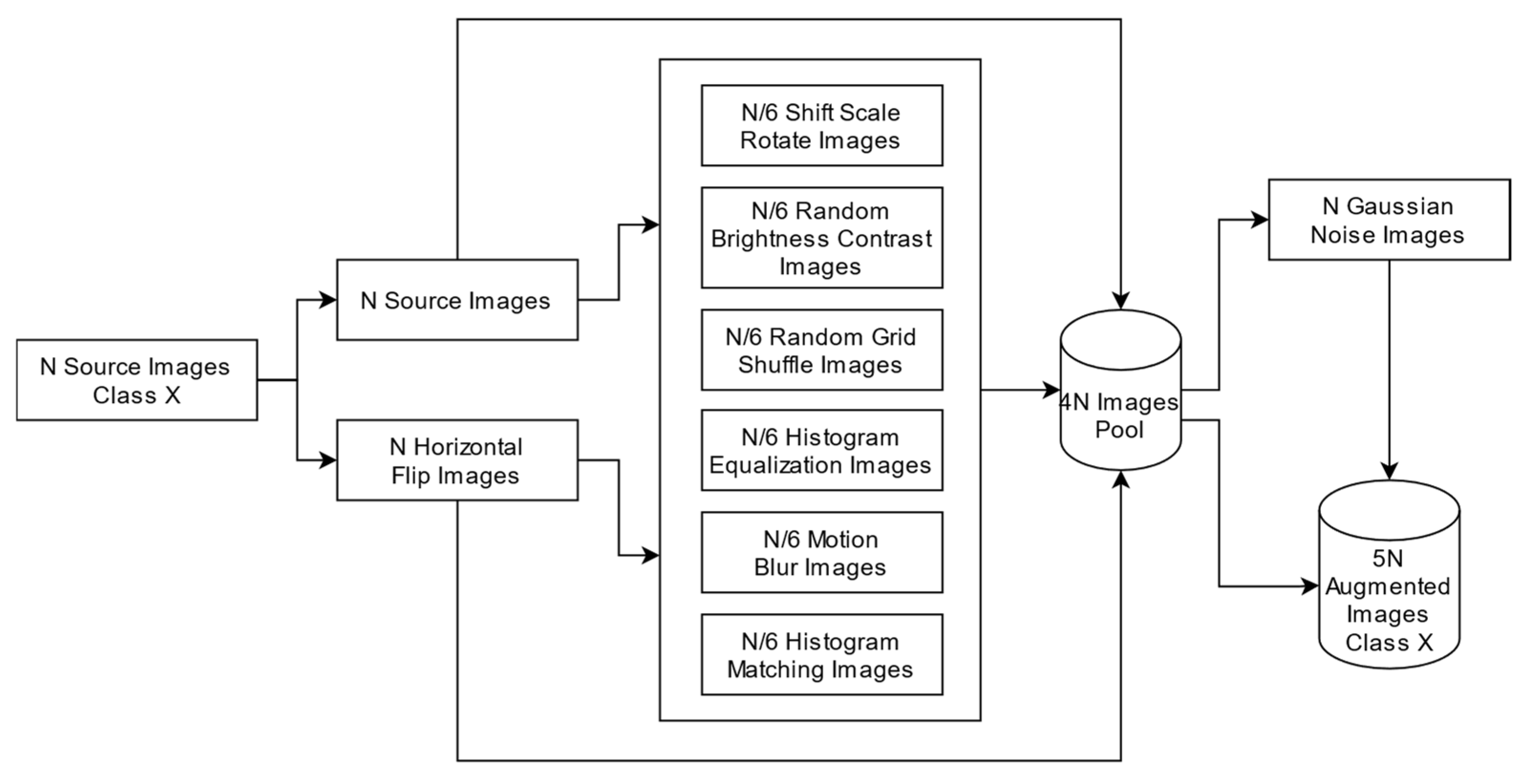

2.2.4. Handling Class Imbalance and Defining Dataset Splits

- The fixed maximum number of image instances per class is denoted N.

- The videos in each class are manually split into disjoint training, validation, and testing sets.

- From each video in a set, a set-specific fixed number of instances is randomly selected to populate the raw training, validation, and testing image pools.

- An exception is made when a video has fewer instances than the set-specific fixed number; in that case, all instances from that video are selected.

- From each class's training, validation, and testing pools, image instances are randomly selected so that their total equals N and follows a 60:20:20 ratio.
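The selection steps above can be sketched in Python for a single class. This is a minimal illustration under stated assumptions, not the authors' code: the video-level split is taken as given, and the function name `build_class_split`, the per-video quotas, and the toy data are hypothetical. Under the 60:20:20 ratio, the 3600/1200/1200 selected images per class in the dataset split table correspond to N = 6000.

```python
import random


def build_class_split(videos_by_set, per_video_quota, n_max, seed=0):
    """Select image instances for ONE sea state class (hypothetical helper).

    videos_by_set: {"train" | "val" | "test": {video_id: [image_id, ...]}};
        the videos are assumed to be already split into disjoint sets.
    per_video_quota: {"train" | "val" | "test": images drawn per video}.
    n_max: fixed maximum number of instances per class (N).
    """
    rng = random.Random(seed)
    n_train = round(n_max * 0.6)
    n_val = round(n_max * 0.2)
    targets = {"train": n_train, "val": n_val, "test": n_max - n_train - n_val}

    selected = {}
    for name, target in targets.items():
        # Step 1: build the raw pool with a fixed number of images per video.
        pool = []
        quota = per_video_quota[name]
        for images in videos_by_set[name].values():
            # Exception: a video with fewer images than the quota contributes all of them.
            pool.extend(images if len(images) <= quota else rng.sample(images, quota))
        # Step 2: draw exactly `target` images from the pool.
        if len(pool) < target:
            raise ValueError(f"{name} pool has {len(pool)} images, needs {target}")
        selected[name] = rng.sample(pool, target)
    return selected


# Toy example: 50 frames per video and N = 100.
videos = {
    "train": {f"tr{v}": [f"tr{v}_{i}" for i in range(50)] for v in range(3)},
    "val": {f"va{v}": [f"va{v}_{i}" for i in range(50)] for v in range(2)},
    "test": {f"te{v}": [f"te{v}_{i}" for i in range(50)] for v in range(2)},
}
split = build_class_split(videos, {"train": 40, "val": 30, "test": 30}, n_max=100)
print({k: len(v) for k, v in split.items()})  # {'train': 60, 'val': 20, 'test': 20}
```

Drawing per video first and per pool second keeps any single long video from dominating a set, which is the point of the set-specific quota.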

2.2.5. Data Augmentation

2.2.6. Image Naming Conventions

2.3. Hardware and Software Environments

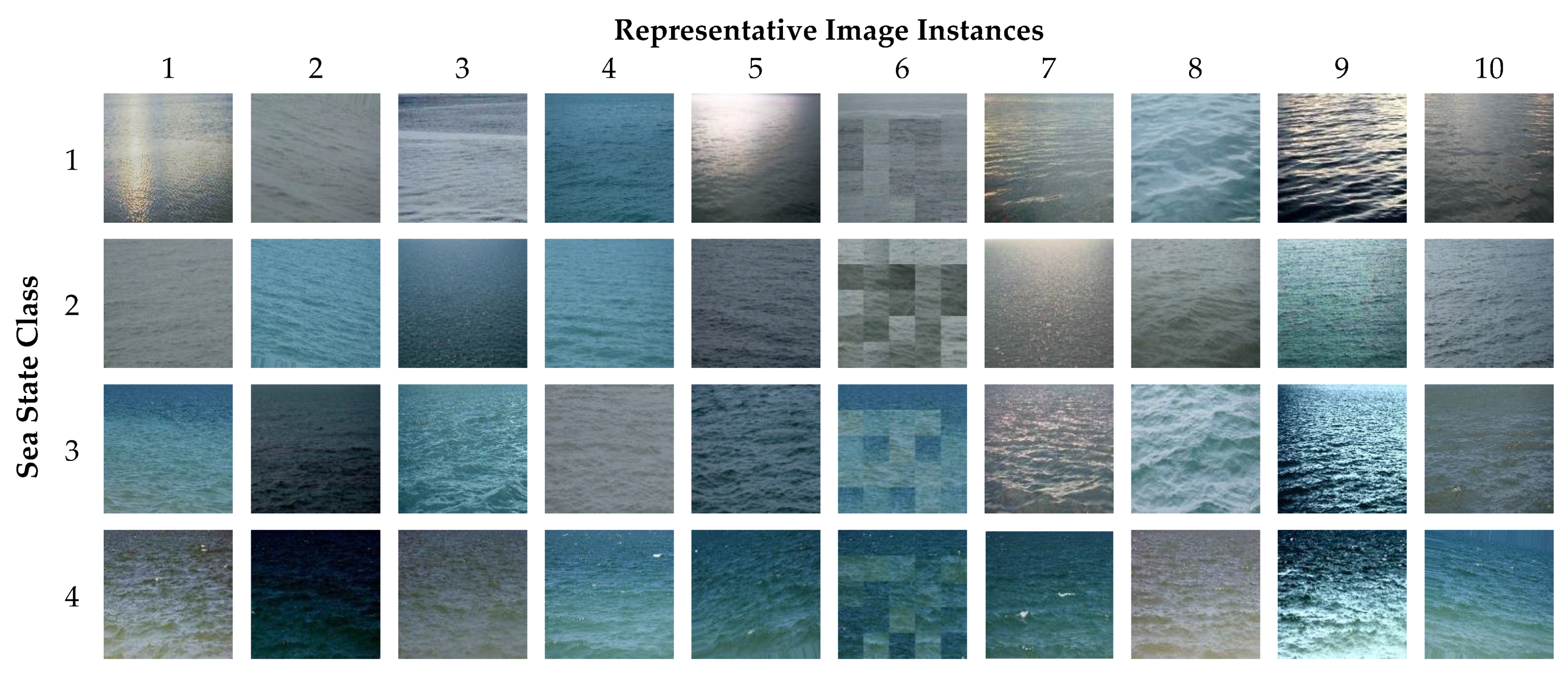

3. Visual-Range Sea State Image Dataset

3.1. Dataset Development

3.1.1. Narrowing of Sea State Classes

3.1.2. The Final Dataset

3.2. MU-SSiD Classification Accuracy Benchmark Experiments

3.2.1. AlexNet

3.2.2. VGG Family (VGG-16, VGG-19)

3.2.3. Inception Family (GoogLeNet, Inception-v3, Inception-ResNet-V2)

3.2.4. ResNet Family (ResNet-18, ResNet-50, ResNet-101)

3.2.5. SqueezeNet

3.2.6. MobileNet-v2

3.2.7. Xception

3.2.8. DarkNet Family (DarkNet-19, DarkNet-53)

3.2.9. DenseNet-201

3.2.10. NASNet Family (NASNet-Mobile, NASNet-Large)

3.2.11. ShuffleNet

3.2.12. EfficientNet-b0

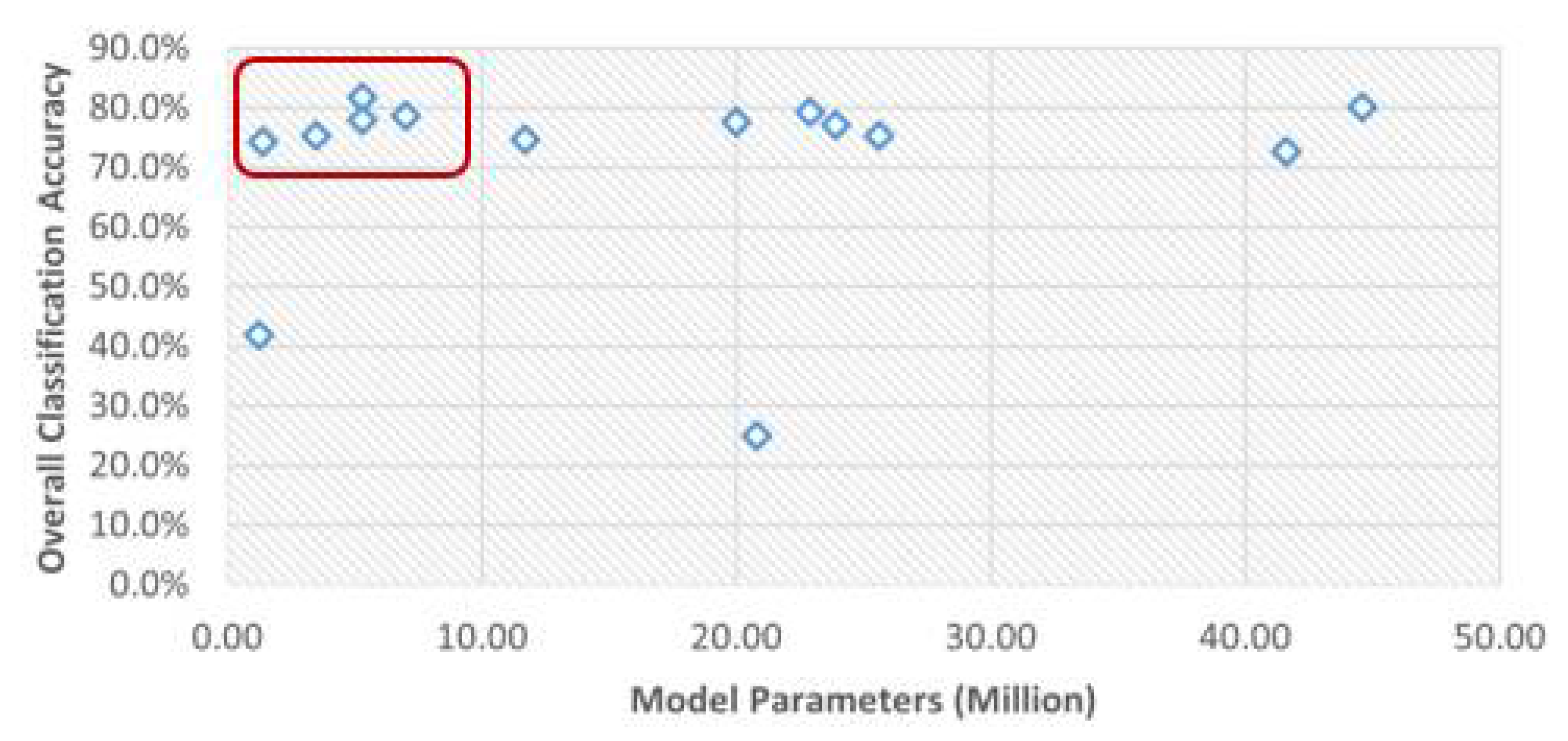

3.3. Benchmark Classification Accuracy Experiment Results and Discussion

4. Proposed Sea State Classification Model

4.1. Optimal Classification Model Identification

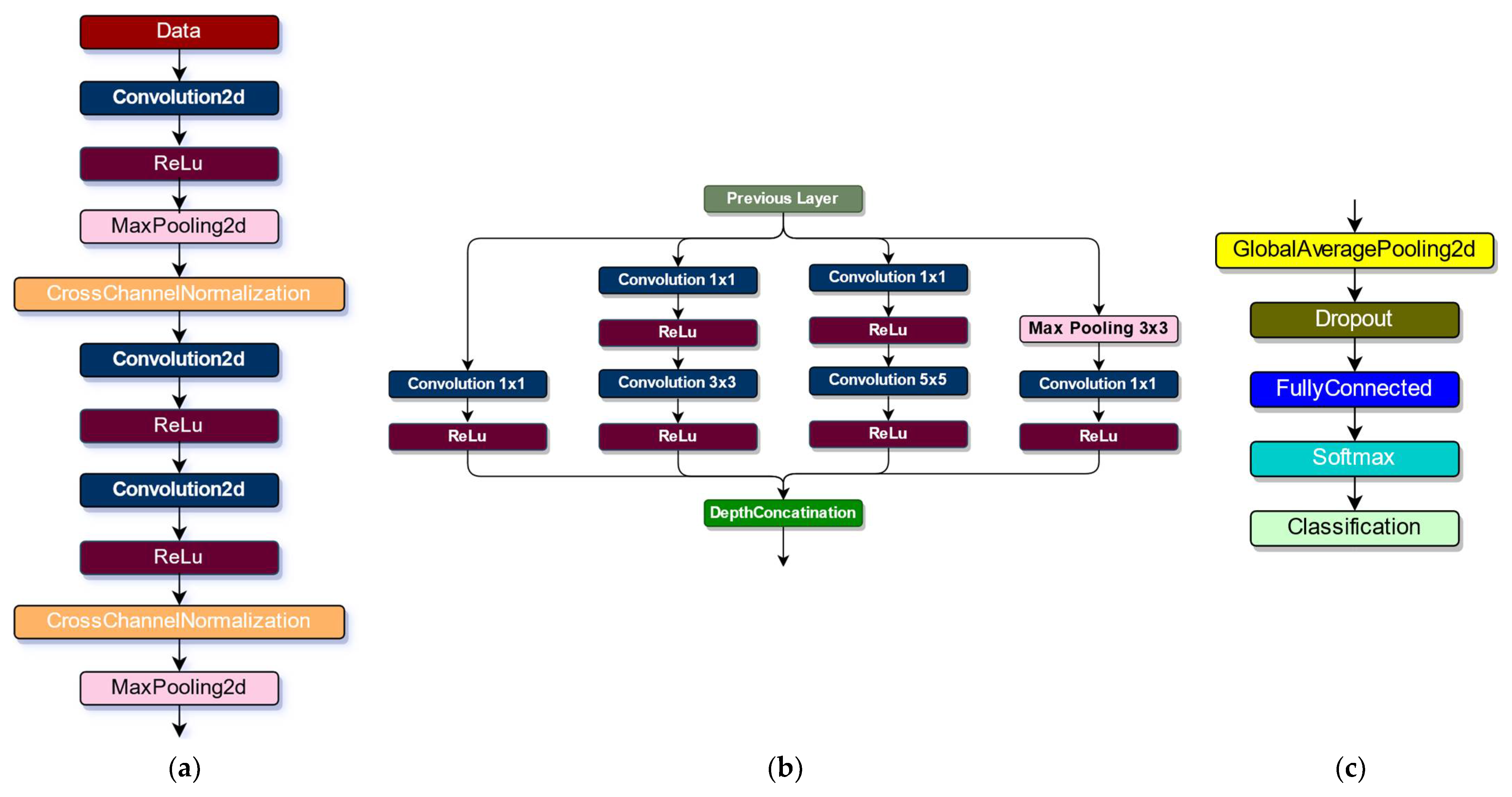

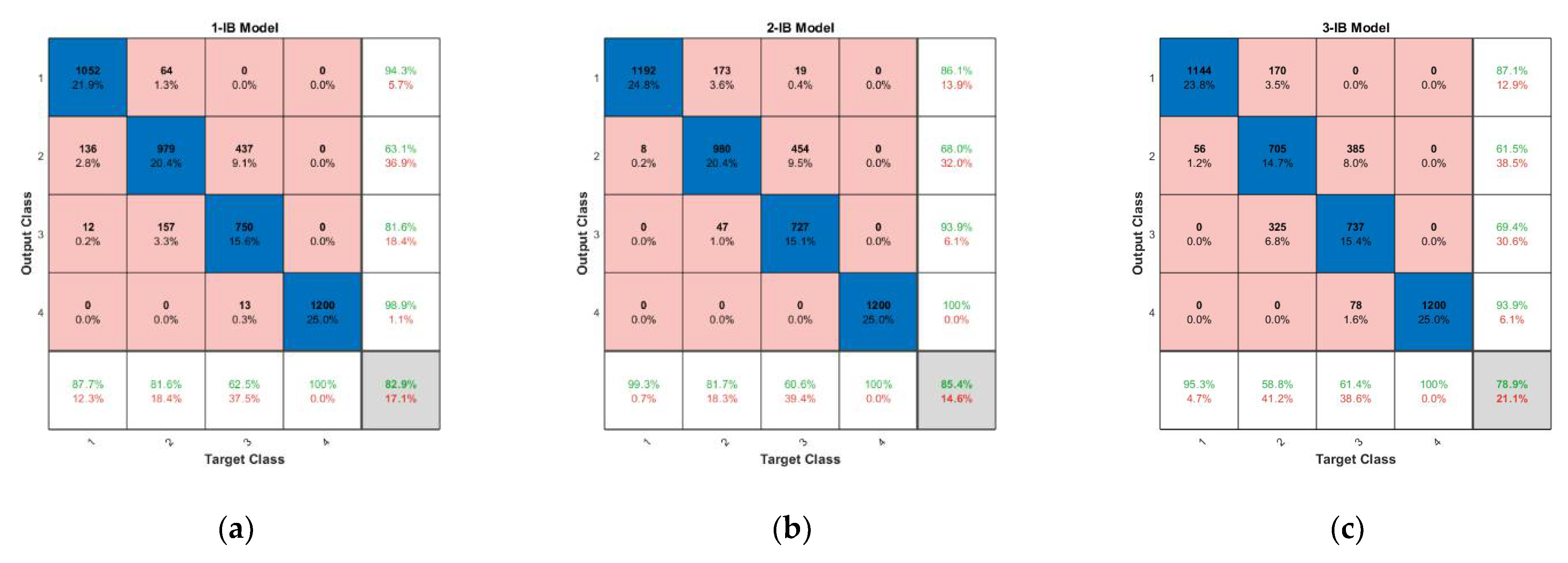

4.2. Climbing the Pinnacle of Classification Accuracy for GoogLeNet

4.3. Proposed Model Development

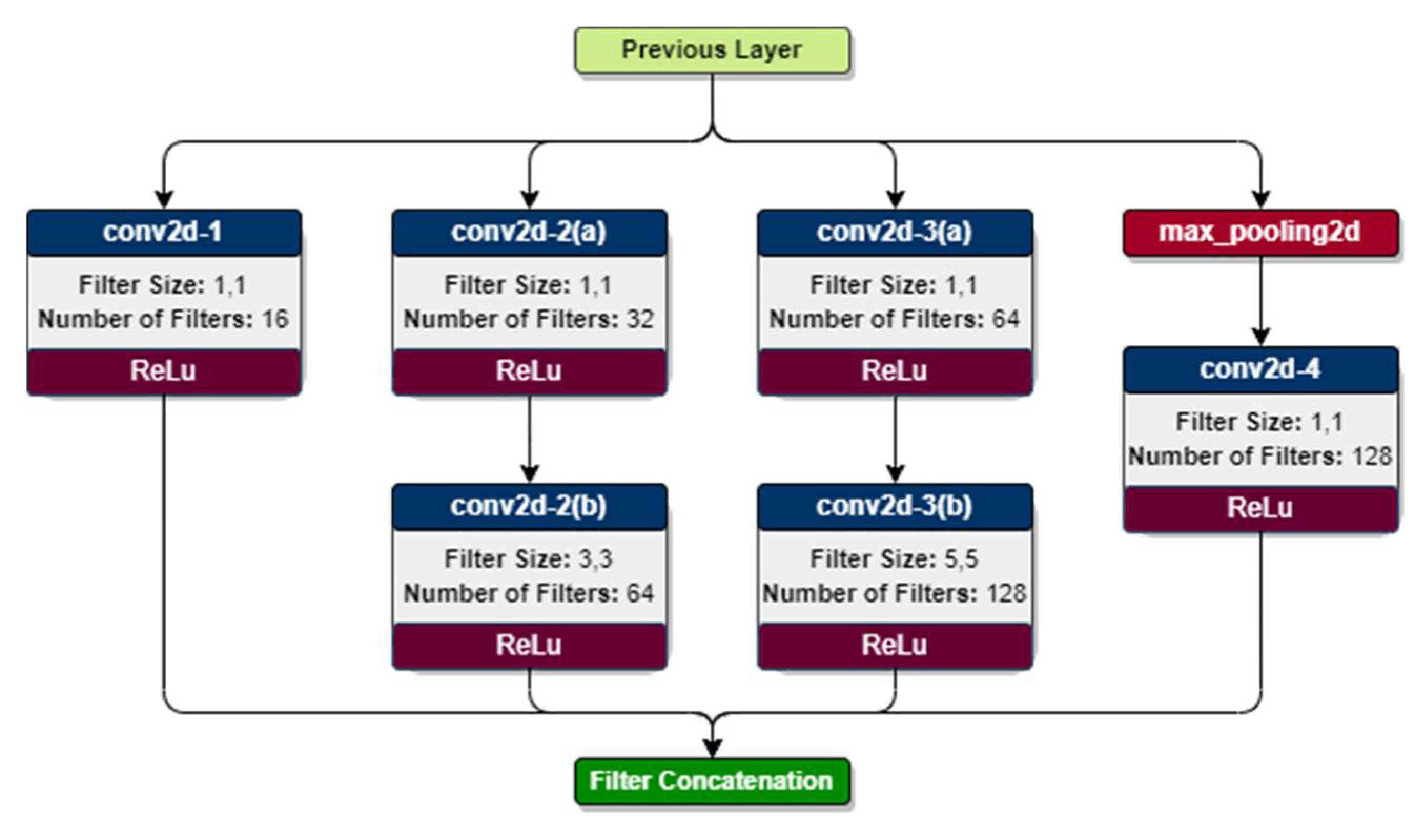

4.3.1. Design Improvement 1—Filter Parameters

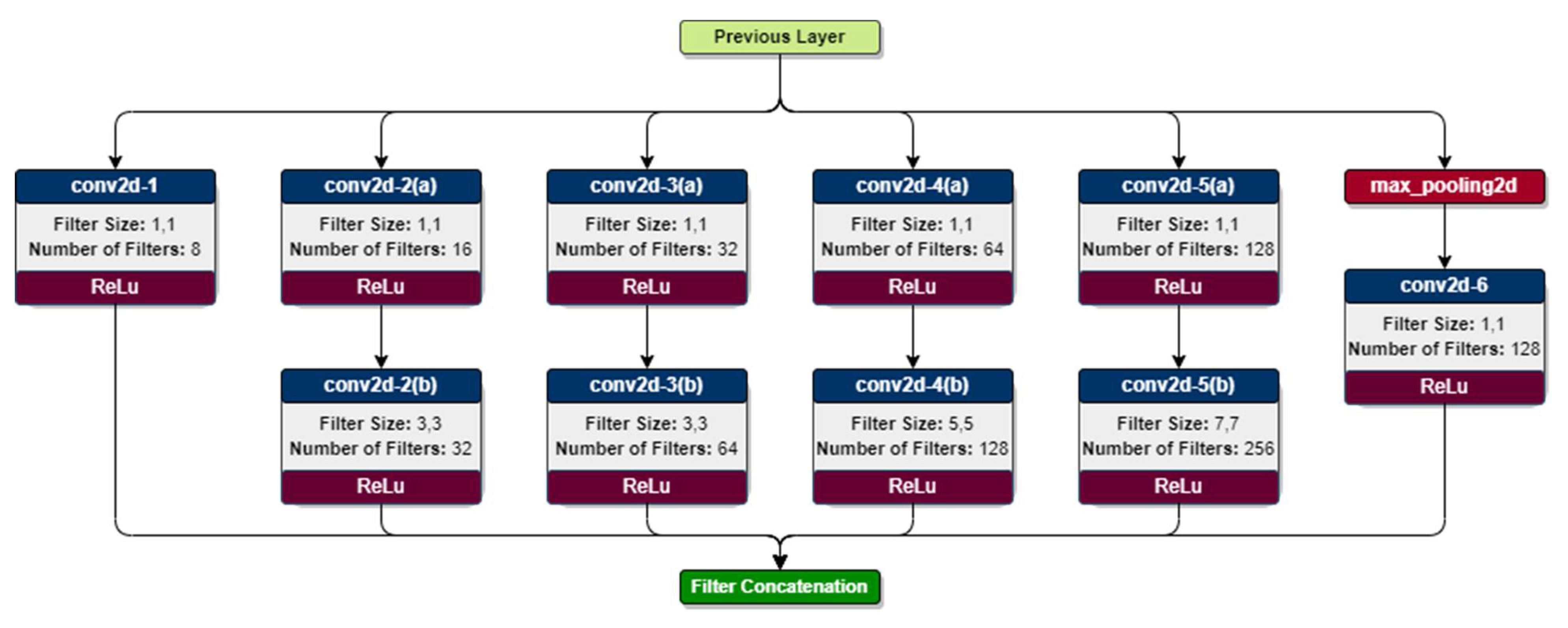

4.3.2. Design Improvement 2—Increasing Width of Inception Block

4.3.3. Design Improvement 3—Retrieving Contextual Information

4.3.4. Design Improvement 4—Minimizing Overfitting

5. Proposed Models Evaluation and Discussion

6. Conclusions and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Literature Search Method

- Domain-specific keywords are chosen, and six search queries are generated from their combinations.

- The queries are executed on three search engines: (i) Web of Science, (ii) Scopus, and (iii) Google Scholar.

- For each query, the results are filtered to studies published in English from 2018 to 2022.

- The titles of the first 25 studies (sorted by relevance) are reviewed, and relevant studies are shortlisted.

- The abstracts of the shortlisted studies whose full manuscripts are available are reviewed, and the most relevant studies are selected for the literature review.

| Domain | Keywords | Queries | Search Engines |
|---|---|---|---|
| Dataset | Sea state, Image, Dataset | Sea state, Sea state image, Sea state dataset, Sea state image dataset | Web of Science, Scopus, Google Scholar |
| Classification | Sea state, Image, Classification | Sea state classification, Sea state image classification | Web of Science, Scopus, Google Scholar |

Appendix B. Literature Search Results

| SN | Research Domain | Study Title | Relevance | Document Available | Final Status |
|---|---|---|---|---|---|
| S1 | Dataset | Observing sea states | No | - | Excluded |
| S2 | Dataset | A global sea state dataset from spaceborne synthetic aperture radar wave mode data | Direct | Yes | Included |
| S3 | Dataset | A large-scale under-sea dataset for marine observation | No | - | Excluded |
| S4 | Dataset | Deep-sea visual dataset of the South China sea | No | - | Excluded |
| S5 | Dataset | East China Sea coastline dataset (1990–2015) | No | - | Excluded |
| S6 | Dataset | Estimating pixel to metre scale and sea state from remote observations of the ocean surface | Indirect | Yes | Included |
| S7 | Dataset | Heterogeneous integrated dataset for maritime intelligence, surveillance, and reconnaissance | No | - | Excluded |
| S8 | Dataset | MOBDrone: A drone video dataset for man overboard rescue | No | - | Excluded |
| S9 | Dataset | Modeling and analysis of motion data from dynamically positioned vessels for sea state estimation | Indirect | Yes | Included |
| S10 | Dataset | North SEAL: a new dataset of sea level changes in the North Sea from satellite altimetry | No | - | Excluded |
| S11 | Dataset | Ocean wave height inversion under low sea state from horizontal polarized X-band nautical radar images | Indirect | Yes | Included |
| S12 | Dataset | On the application of multifractal methods for the analysis of sea surface images related to sea state determination | Indirect | Yes | Included |
| S13 | Dataset | Sea state events in the marginal ice zone with TerraSAR-X satellite images | No | - | Excluded |
| S14 | Dataset | Sea state from single optical images: A methodology to derive wind-generated ocean waves from cameras, drones and satellites | Indirect | Yes | Included |
| S15 | Dataset | Sea state parameters in highly variable environment of Baltic sea from satellite radar images | Indirect | Yes | Included |
| S16 | Dataset | Ship detection based on M2Det for SAR images under heavy sea state | No | - | Excluded |
| S17 | Dataset | Study on sea clutter suppression methods based on a realistic radar dataset | No | - | Excluded |
| S18 | Dataset | The sea state CCI dataset v1: towards a sea state climate data record based on satellite observations | Direct | Yes | Included |
| S19 | Dataset | Towards development of visual-range sea state image dataset for deep learning models | Indirect | Yes | Included |
| S20 | Dataset | Uncertainty in temperature and sea level datasets for the Pleistocene glacial cycles: Implications for thermal state of the subsea sediments | No | - | Excluded |
| S21 | Classification | Application of deep learning in sea states images classification | Direct | Yes | Included |
| S22 | Classification | Deep learning for wave height classification in satellite images for offshore wind access | Indirect | No | Excluded |
| S23 | Classification | Estimation of sea state from Sentinel-1 Synthetic aperture radar imagery for maritime situation awareness | No | - | Excluded |
| S24 | Classification | Sea state identification based on vessel motion response learning via multi-layer classifiers | Indirect | Yes | Included |
| S25 | Classification | SpectralSeaNet: Spectrogram and convolutional network-based sea state estimation | Indirect | Yes | Included |
| S26 | Classification | Wave height inversion and sea state classification based on deep learning of radar sea clutter data | Indirect | Yes | Included |

References

1. Asariotis, R.; Assaf, M.; Benamara, H.; Hoffmann, J.; Premti, A.; Rodríguez, L.; Weller, M.; Youssef, F. Review of Maritime Transport 2018; United Nations Publications: New York, NY, USA, 2018.

2. Pradeepkiran, J.A. Aquaculture Role in Global Food Security with Nutritional Value: A Review. Transl. Anim. Sci. 2019, 3, 903–910.

3. Legorburu, I.; Johnson, K.; Kerr, S. Offshore Oil and Gas, 1st ed.; Rivers Publishers: Gistrup, Denmark, 2018; ISBN 9788793609266.

4. Leposa, N. Problematic Blue Growth: A Thematic Synthesis of Social Sustainability Problems Related to Growth in the Marine and Coastal Tourism. Sustain. Sci. 2020, 15, 1233–1244.

5. Laing, A.K.; Gemmill, W.; Magnusson, A.K.; Burroughs, L.; Reistad, M.; Khandekar, M.; Holthuijsen, L.; Ewing, J.A.; Carter, D.J.T. Guide to Wave Analysis and Forecasting, 2nd ed.; World Meteorological Organization: Geneva, Switzerland, 1998; ISBN 9263127026.

6. Leykin, I.A.; Donelan, M.A.; Mellen, R.H.; McLaughlin, D.J. Asymmetry of Wind Waves Studied in a Laboratory Tank. Nonlinear Process. Geophys. 1995, 2, 280–289.

7. Dosaev, A.; Troitskaya, Y.I.; Shrira, V.I. On the Physical Mechanism of Front–Back Asymmetry of Non-Breaking Gravity–Capillary Waves. J. Fluid Mech. 2021, 906.

8. White, L.H.; Hanson, J.L. Automatic Sea State Calculator. Ocean. Conf. Rec. 2000, 3, 1727–1734.

9. Dodet, G.; Piolle, J.F.; Quilfen, Y.; Abdalla, S.; Accensi, M.; Ardhuin, F.; Ash, E.; Bidlot, J.R.; Gommenginger, C.; Marechal, G.; et al. The Sea State CCI Dataset v1: Towards a Sea State Climate Data Record Based on Satellite Observations. Earth Syst. Sci. Data 2020, 12, 1929–1951.

10. Vannak, D.; Liew, M.S.; Yew, G.Z. Time Domain and Frequency Domain Analyses of Measured Metocean Data for Malaysian Waters. Int. J. Geol. Environ. Eng. 2013, 7, 549–554.

11. Umair, M.; Hashmani, M.A.; Hasan, M.H.B. Survey of Sea Wave Parameters Classification and Prediction Using Machine Learning Models. In Proceedings of the 2019 1st International Conference on Artificial Intelligence and Data Sciences (AiDAS), Ipoh, Malaysia, 19 September 2019; pp. 1–6.

12. Thomas, T.J.; Dwarakish, G.S. Numerical Wave Modelling – A Review. Aquat. Procedia 2015, 4, 443–448.

13. Zhang, Y.; Tian, Z.; Lei, Y.; Wang, T.; Patel, P.; Jani, A.B.; Curran, W.J.; Liu, T.; Yang, X. Automatic Multi-Needle Localization in Ultrasound Images Using Large Margin Mask RCNN for Ultrasound-Guided Prostate Brachytherapy. Phys. Med. Biol. 2020, 65, 205003.

14. González, R.E.; Muñoz, R.P.; Hernández, C.A. Galaxy Detection and Identification Using Deep Learning and Data Augmentation. Astron. Comput. 2018, 25, 103–109.

15. Zhang, Y.; An, R.; Liu, S.; Cui, J.; Shang, X. Predicting and Understanding Student Learning Performance Using Multi-Source Sparse Attention Convolutional Neural Networks. IEEE Trans. Big Data 2021, 1–14.

16. Pan, M.; Liu, Y.; Cao, J.; Li, Y.; Li, C.; Chen, C.H. Visual Recognition Based on Deep Learning for Navigation Mark Classification. IEEE Access 2020, 8, 32767–32775.

17. Escorcia-Gutierrez, J.; Gamarra, M.; Beleño, K.; Soto, C.; Mansour, R.F. Intelligent Deep Learning-Enabled Autonomous Small Ship Detection and Classification Model. Comput. Electr. Eng. 2022, 100, 107871.

18. Singleton, F. The Beaufort Scale of Winds – Its Relevance, and Its Use by Sailors. Weather 2008, 63, 37–41.

19. Li, X.M.; Huang, B.Q. A Global Sea State Dataset from Spaceborne Synthetic Aperture Radar Wave Mode Data. Sci. Data 2020, 7, 261.

20. Loizou, A.; Christmas, J. Estimating Pixel to Metre Scale and Sea State from Remote Observations of the Ocean Surface. Int. Geosci. Remote Sens. Symp. 2018, 2018, 3513–3516.

21. Cheng, X.; Li, G.; Skulstad, R.; Chen, S.; Hildre, H.P.; Zhang, H. Modeling and Analysis of Motion Data from Dynamically Positioned Vessels for Sea State Estimation. In Proceedings of the IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; Volume 2019, pp. 6644–6650.

22. Wang, L.; Hong, L. Ocean Wave Height Inversion under Low Sea State from Horizontal Polarized X-Band Nautical Radar Images. J. Spat. Sci. 2021, 66, 75–87.

23. Ampilova, N.; Soloviev, I.; Kotopoulis, A.; Pouraimis, G.; Frangos, P. On the Application of Multifractal Methods for the Analysis of Sea Surface Images Related to Sea State Determination. In Proceedings of the 14th International Conference on Communications, Electromagnetics and Medical Applications, Florence, Italy, 2–3 October 2019; pp. 32–35.

24. Almar, R.; Bergsma, E.W.J.; Catalan, P.A.; Cienfuegos, R.; Suarez, L.; Lucero, F.; Lerma, A.N.; Desmazes, F.; Perugini, E.; Palmsten, M.L.; et al. Sea State from Single Optical Images: A Methodology to Derive Wind-Generated Ocean Waves from Cameras, Drones and Satellites. Remote Sens. 2021, 13, 679.

25. Rikka, S.; Pleskachevsky, A.; Uiboupin, R.; Jacobsen, S. Sea State Parameters in Highly Variable Environment of Baltic Sea from Satellite Radar Images. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2965–2968.

26. Umair, M.; Hashmani, M.A. Towards Development of Visual-Range Sea State Image Dataset for Deep Learning Models. In Proceedings of the 2021 International Conference on Intelligent Cybernetics Technology & Applications (ICICyTA), Bandung, Indonesia, 1–2 December 2021; pp. 23–27.

27. Tu, F.; Ge, S.S.; Choo, Y.S.; Hang, C.C. Sea State Identification Based on Vessel Motion Response Learning via Multi-Layer Classifiers. Ocean Eng. 2018, 147, 318–332.

28. Cheng, X.; Li, G.; Skulstad, R.; Zhang, H.; Chen, S. SpectralSeaNet: Spectrogram and Convolutional Network-Based Sea State Estimation. In Proceedings of the IECON 2020 the 46th Annual Conference of the IEEE Industrial Electronics Society, Singapore, 18–21 October 2020; pp. 5069–5074.

29. Liu, N.; Jiang, X.; Ding, H.; Xu, Y.; Guan, J. Wave Height Inversion and Sea State Classification Based on Deep Learning of Radar Sea Clutter Data. In Proceedings of the 10th International Conference on Control, Automation and Information Sciences, ICCAIS 2021, Xi’an, China, 14–17 October 2021; pp. 34–39.

30. Johnson, J.M.; Khoshgoftaar, T.M. Survey on Deep Learning with Class Imbalance. J. Big Data 2019, 6.

31. Zhang, K.; Yu, Z.; Qu, L. Application of Deep Learning in Sea States Images Classification. In Proceedings of the 2021 7th Annual International Conference on Network and Information Systems for Computers, Guiyang, China, 23–25 July 2021; pp. 976–979.

32. Owens, E.H. Douglas Scale; Peterson Scale; Sea State; Sea Conditions. In Beaches and Coastal Geology; Schwartz, M., Ed.; Springer: New York, NY, USA, 1984; p. 722. ISBN 978-0-387-30843-2.

33. Patino, L.; Cane, T.; Vallee, A.; Ferryman, J. PETS 2016: Dataset and Challenge. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1240–1247.

34. Hashmani, M.A.; Umair, M. A Novel Visual-Range Sea Image Dataset for Sea Horizon Line Detection in Changing Maritime Scenes. J. Mar. Sci. Eng. 2022, 10, 193.

35. Suresh, R.R.V.; Annapurnaiah, K.; Reddy, K.G.; Lakshmi, T.N.; Balakrishnan Nair, T.M. Wind Sea and Swell Characteristics off East Coast of India during Southwest Monsoon. Int. J. Ocean. Oceanogr. 2010, 4, 35–44.

36. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25.

37. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

38. Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019, 9, 2058.

39. Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

40. Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and Flexible Image Augmentations. Information 2020, 11, 125.

41. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

42. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

43. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

44. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

45. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

46. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

47. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

48. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

49. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

50. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 10691–10700.

51. Park, S.; Kwak, N. Analysis on the Dropout Effect in Convolutional Neural Networks. In Lecture Notes in Computer Science (LNCS); Springer: Cham, Switzerland, 2017; Volume 10112, pp. 189–204.

| SN | Study’s Primary Research Domain | Data Source | Data Format |
|---|---|---|---|
| S2 [19] | Sea state dataset development | Spaceborne advanced synthetic aperture radar | NetCDF |
| S18 [9] | Sea state dataset development | Spaceborne altimeter radar | Numeric |
| S6 [20] | Wind speed, period, wavelength, and highest value of significant wave height estimation | X-band radar | Image |
| S9 [21] | Sea state estimation | Simulated ship motion sensors | Numeric |
| S11 [22] | Significant wave height estimation | X-band radar | Image |
| S12 [23] | Sea state determination | Optical sensor | Image |
| S14 [24] | Significant wave height estimation | Aerial and spaceborne optical sensor | Image |
| S15 [25] | Significant wave height and wind speed estimation | Synthetic aperture radar | Image |
| S19 [26] | Sea state dataset development | Optical sensor | Image |

| SN | Model | Dataset Source | Classified Sea States | Reported Classification Accuracy |
|---|---|---|---|---|
| S24 [27] | ANFIS, RF, and PSO | Ship motion sensors | Eight | 74.4–96.5% |
| S25 [28] | 2D CNN | Simulated ship movement sensors | Five | 92.3–94.6% |
| S26 [29] | CNN | X-band radar | Three | 93.9–95.7% |
| S21 [31] | ResNet-152 | Optical sensor | N/A | N/A |

| Sea State | Wind Speed (Knots) | Sea Surface Visual Characteristics |
|---|---|---|
| 0 | <1 | Sea surface like a mirror. |
| 1 | 1–3 | Ripples with the appearance of scales are formed, but without foam crests. |
| 2 | 4–6 | Small wavelets, still short, but more pronounced. Crests have a glassy appearance and do not break. |
| 3 | 7–10 | Large wavelets. Crests begin to break. Foam of glassy appearance. Perhaps scattered white horses. |
| 4 | 11–16 | Small waves, becoming longer; fairly frequent white horses. |

| Date | Time | Video | Prevailing Wind Speed (knots) | 30-min Average Wind Speed (knots) | Wind Speed Based Sea State | Sea State 0: Sea Like a Mirror | Sea State 1: Ripples with Appearance of Scales, No Foam Crests | Sea State 2: Small Wavelets, Crests of Glassy Appearance, Not Breaking | Sea State 3: Large Wavelets, Crests Begin to Break, Scattered Whitecaps | Sea State 4: Small Waves Becoming Longer, Numerous Whitecaps | Sea Surface Features Based Sea State |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 14/07/22 | 17:06:02 | DSC_1844 | 3.5 | 5.0 | 2 | No | No | Yes | No | No | 2 |

| Wind Speed Range (Knots) | Sea State |
|---|---|
| 0–0.9 | 0 |
| 1–3.9 | 1 |
| 4–6.9 | 2 |
| 7–10.9 | 3 |
| 11–16.9 | 4 |
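The two wind-based steps, averaging the weather station readings and mapping the average to a sea state with the ranges above, can be sketched as follows. This is a hypothetical sketch, not the authors' procedure: it assumes the ranges are half-open (so 6.95 knots maps to sea state 2), that the 30-minute window ends at the video's start time, and that the log is a list of (timestamp, knots) pairs; the source does not specify these details. The readings in the example are invented.

```python
from datetime import datetime, timedelta

# (exclusive upper bound in knots, sea state), following the wind speed range table.
SEA_STATE_BOUNDS = [(1.0, 0), (4.0, 1), (7.0, 2), (11.0, 3), (17.0, 4)]


def sea_state_from_wind(speed_knots):
    """Map a wind speed in knots to sea states 0-4; None above 16.9 knots."""
    for upper, state in SEA_STATE_BOUNDS:
        if speed_knots < upper:
            return state
    return None


def average_wind(readings, video_start, window=timedelta(minutes=30)):
    """Mean of the (timestamp, knots) readings in the window ending at video_start."""
    speeds = [s for t, s in readings if video_start - window <= t <= video_start]
    if not speeds:
        raise ValueError("no weather station readings in the averaging window")
    return sum(speeds) / len(speeds)


# Invented readings (minutes before the video start, knots).
start = datetime(2022, 1, 1, 12, 0, 0)
log = [(start - timedelta(minutes=m), v)
       for m, v in [(40, 9.0), (25, 6.0), (15, 5.5), (5, 4.0), (0, 4.5)]]
avg = average_wind(log, start)  # the 40-minute-old reading falls outside the window
print(avg, sea_state_from_wind(avg))  # 5.0 2
```

Averaging over a window rather than using the instantaneous (prevailing) reading smooths out gusts; in the example row of the sea state estimation table, a prevailing 3.5 knots would fall in sea state 1, while the 5.0-knot average gives sea state 2.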

| Augmentation Type | Post Augmentation Observation | Label Preservation | Selection Status |
|---|---|---|---|
| Vertical flip | Sea state’s visual attributes (e.g., shape, crests, white caps) do not remain intact. | No | Rejected |
| Horizontal flip | Sea state’s visual attributes (e.g., shape, crests, white caps) remain intact. | Yes | Selected |
| Channel isolation | The natural colors of the image and the white caps do not remain intact. | No | Rejected |
| Brightness and contrast | Introduces a wide range of illuminations. | Yes | Selected |
| Cropping | May result in the loss of distinguishable sea state features such as white caps. | No | Rejected |
| Rotation | Imitates the optical sensor’s roll effect. | Yes | Selected |
| Image shift | Imitates the optical sensor’s pitch and yaw effects. | Yes | Selected |
| Noise injection | Improves the model’s generalization ability. | Yes | Selected |
| Motion blur | Imitates the optical sensor’s movement. | Yes | Selected |
| Sharpening | More appropriate for object detection. | Yes | Rejected |
| Random grid shuffle | Shuffles patches within the image while keeping the sea state’s visual attributes (e.g., shape, crests, white caps) intact. | Yes | Selected |
| Histogram matching | Imitates different sea surface colors in the target image. | Yes | Selected |
| Histogram equalization | Improves the image’s contrast level. | Yes | Selected |
| Random cropping and patching | Sea state’s visual attributes (e.g., shape, crests, white caps) do not remain intact. | No | Rejected |
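Several of the selected, label-preserving augmentations in the table are simple pixel operations. The sketch below implements four of them (horizontal flip, brightness and contrast, noise injection, random grid shuffle) on a grayscale image stored as a list of rows, using only the standard library; it illustrates the operations and is not the authors' pipeline. The suffixes in the naming table (e.g., RBC, RGS, SSR) resemble transform names in the Albumentations library [40], but the available text does not confirm the exact transforms, so `sigma`, `alpha`, `beta`, and the 2 × 2 grid are assumed values.

```python
import random


def clip(value):
    """Round and clip a pixel value to the 8-bit range [0, 255]."""
    return min(255, max(0, round(value)))


def horizontal_flip(img):
    """Mirror each row; wave shapes, crests, and whitecaps stay intact."""
    return [row[::-1] for row in img]


def brightness_contrast(img, alpha=1.0, beta=0.0):
    """Linear adjustment p -> alpha * p + beta (alpha: contrast, beta: brightness)."""
    return [[clip(alpha * p + beta) for p in row] for row in img]


def gaussian_noise(img, sigma=10.0, seed=None):
    """Add zero-mean Gaussian noise with standard deviation sigma to every pixel."""
    rng = random.Random(seed)
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def random_grid_shuffle(img, grid=(2, 2), seed=None):
    """Cut the image into equal grid cells and reassemble them in random order."""
    rows, cols = grid
    h, w = len(img), len(img[0])
    if h % rows or w % cols:
        raise ValueError("image size must be divisible by the grid")
    ch, cw = h // rows, w // cols
    cells = [[row[c * cw:(c + 1) * cw] for row in img[r * ch:(r + 1) * ch]]
             for r in range(rows) for c in range(cols)]
    random.Random(seed).shuffle(cells)
    return [[p for c in range(cols) for p in cells[r * cols + c][y]]
            for r in range(rows) for y in range(ch)]


img = [[10, 20, 30, 40],
       [50, 60, 70, 80]]
print(horizontal_flip(img))                         # [[40, 30, 20, 10], [80, 70, 60, 50]]
print(brightness_contrast(img, alpha=1.2, beta=5))  # [[17, 29, 41, 53], [65, 77, 89, 101]]
```

A vertical flip would simply be `img[::-1]`; the table rejects it because it moves the sky below the sea and inverts how crests and whitecaps appear.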

| SN | Detail | Suffix |
|---|---|---|
| 1 | First overlapping image from a frame | FA |
| 2 | Second overlapping image from a frame | FB |
| 3 | Gaussian noise | GN |
| 4 | Horizontal flip | HF |
| 5 | Histogram matching | HM |
| 6 | Histogram equalization | HE |
| 7 | Motion blur | MB |
| 8 | Random brightness and contrast | RBC |
| 9 | Random grid shuffle | RGS |
| 10 | Shift scale rotate | SSR |
| 11 | Image size 224 × 224 pixels | 224 × 224 |
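The FA and FB suffixes indicate that two overlapping images are taken from each video frame, and the size suffix indicates 224 × 224 pixel images. One plausible reading, assumed here rather than stated in the source, is that each 1920 × 1080 frame yields two full-height square crops (one flush left, one flush right) that are then resized to 224 × 224. The separator, suffix order, zero-padded frame-index field, and the helper names below are all hypothetical.

```python
# Suffixes from the naming table; the dictionary keys are hypothetical labels.
SUFFIXES = {
    "gaussian_noise": "GN", "horizontal_flip": "HF", "histogram_matching": "HM",
    "histogram_equalization": "HE", "motion_blur": "MB", "brightness_contrast": "RBC",
    "grid_shuffle": "RGS", "shift_scale_rotate": "SSR",
}


def overlapping_square_crops(width=1920, height=1080):
    """Return {suffix: (left, top, right, bottom)} for two full-height square crops."""
    if not (height < width < 2 * height):
        raise ValueError("two overlapping square crops need height < width < 2 * height")
    side = height
    return {"FA": (0, 0, side, side), "FB": (width - side, 0, width, side)}


def image_name(video_id, frame_index, crop, augmentation=None, size=224, ext="jpg"):
    """Build a file name such as DSC_1844_00120_FA_HF_224x224.jpg (hypothetical format)."""
    parts = [video_id, f"{frame_index:05d}", crop]
    if augmentation is not None:
        parts.append(SUFFIXES[augmentation])
    parts.append(f"{size}x{size}")
    return "_".join(parts) + "." + ext


boxes = overlapping_square_crops()
print(boxes)                            # {'FA': (0, 0, 1080, 1080), 'FB': (840, 0, 1920, 1080)}
print(boxes["FA"][2] - boxes["FB"][0])  # 240 pixels of horizontal overlap
print(image_name("DSC_1844", 120, "FA", "horizontal_flip"))  # DSC_1844_00120_FA_HF_224x224.jpg
```

Encoding the source video, crop, and augmentation in the file name lets a split be audited afterwards, for example to confirm that no source video contributes images to more than one set.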

| Hardware Environment | Software Environment |
|---|---|

| Sea State | Number of Source Videos | Extracted Images from Source Videos | Overall Percent Share |
|---|---|---|---|
| 0 | 2 | 8730 | 10.2% |
| 1 | 31 | 32,854 | 38.5% |
| 2 | 27 | 22,472 | 26.3% |
| 3 | 18 | 12,223 | 14.3% |
| 4 | 5 | 8951 | 10.5% |
| Total | 83 | 85,230 | 100% |

| Sea State | Training Source Videos | Training Total Images | Training Selected Images | Validation Source Videos | Validation Total Images | Validation Selected Images | Testing Source Videos | Testing Total Images | Testing Selected Images |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 16 | 17,548 | 3600 | 8 | 10,223 | 1200 | 7 | 5083 | 1200 |
| 2 | 12 | 11,385 | 3600 | 8 | 6060 | 1200 | 7 | 5027 | 1200 |
| 3 | 7 | 5448 | 3600 | 6 | 3744 | 1200 | 5 | 3031 | 1200 |
| 4 | 2 | 5712 | 3600 | 1 | 1866 | 1200 | 2 | 1373 | 1200 |
| Total | 37 | 40,093 | 14,400 | 23 | 21,893 | 4800 | 21 | 14,514 | 4800 |

| Sea State | Training Set (Image Instances after Augmentation) | Validation Set (Image Instances after Augmentation) | Testing Set (Image Instances) | Total |
|---|---|---|---|---|
| 1 | 18,000 | 6000 | 1200 | 25,200 |
| 2 | 18,000 | 6000 | 1200 | 25,200 |
| 3 | 18,000 | 6000 | 1200 | 25,200 |
| 4 | 18,000 | 6000 | 1200 | 25,200 |
| Total | 72,000 | 24,000 | 4800 | 100,800 |

| SN | Model | Depth | Parameters (Million) |
|---|---|---|---|
| 1 | SqueezeNet | 18 | 1.24 |
| 2 | ShuffleNet | 50 | 1.4 |
| 3 | MobileNet-v2 | 53 | 3.5 |
| 4 | NASNet-Mobile | - | 5.3 |
| 5 | EfficientNet-b0 | 82 | 5.3 |
| 6 | GoogLeNet | 22 | 7.0 |
| 7 | ResNet-18 | 18 | 11.7 |
| 8 | DenseNet-201 | 201 | 20.0 |
| 9 | DarkNet-19 | 19 | 20.8 |
| 10 | Xception | 71 | 22.9 |
| 11 | Inception-v3 | 48 | 23.9 |
| 12 | ResNet-50 | 50 | 25.6 |
| 13 | DarkNet-53 | 53 | 41.6 |
| 14 | ResNet-101 | 101 | 44.6 |
| 15 | Inception-ResNet-V2 | 164 | 55.9 |
| 16 | AlexNet | 8 | 61.0 |
| 17 | NASNet-Large | - | 88.9 |
| 18 | VGG-16 | 16 | 138.0 |
| 19 | VGG-19 | 19 | 144.0 |

| SN | Parameter | Value |
|---|---|---|
| 1 | Solver | SGDM |
| 2 | Initial learning rate | 0.01 |
| 3 | Validation frequency | 50 |
| 4 | Max epochs | 10 |
| 5 | Mini batch size | 32 |
| 6 | Execution environment | GPU |
| 7 | L2 regularization | 0.0001 |
| 8 | Validation patience | 5 |
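The solver settings in the table can be illustrated with a framework-free sketch of one SGDM update with L2 regularization, plus a patience-based early-stopping check. The parameter names resemble those of MATLAB's `trainingOptions`, but this Python version is only an illustration under assumptions: the momentum of 0.9 is not listed in the table, and the stopping rule (stop after `patience` consecutive validation checks without a new minimum loss) is a common formulation, not a confirmed description of the authors' toolchain. With 72,000 augmented training images and mini-batches of 32, one epoch is 2250 iterations, so validating every 50 iterations gives 45 checks per epoch.

```python
def sgdm_step(weights, grads, velocity, lr=0.01, momentum=0.9, l2=1e-4):
    """One SGD-with-momentum step; L2 regularization adds l2 * w to each gradient.

    momentum=0.9 is an assumed value (not given in the table).
    """
    velocity = [momentum * v - lr * (g + l2 * w) for w, g, v in zip(weights, grads, velocity)]
    weights = [w + v for w, v in zip(weights, velocity)]
    return weights, velocity


class EarlyStopping:
    """Signal a stop after `patience` consecutive validation checks without improvement."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("inf")
        self.bad_checks = 0

    def should_stop(self, val_loss):
        if val_loss < self.best:
            self.best, self.bad_checks = val_loss, 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


# Toy problem: minimize f(w) = w^2 (gradient 2w) with the table's learning rate and L2 factor.
w, v = [1.0], [0.0]
for _ in range(300):
    w, v = sgdm_step(w, [2 * w[0]], v)
print(abs(w[0]) < 1e-3)  # True

iterations_per_epoch = 72_000 // 32            # 2250
checks_per_epoch = iterations_per_epoch // 50  # 45
```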

| SN | Pretrained Model | Sea State 1 Accuracy | Sea State 2 Accuracy | Sea State 3 Accuracy | Sea State 4 Accuracy | Overall Classification Accuracy | Per-Image Classification Time (s) |
|---|---|---|---|---|---|---|---|
| 1 | NASNet-Mobile | 90.2% | 76.2% | 60.6% | 100.0% | 81.8% | 0.127 |
| 2 | ResNet-101 | 97.0% | 58.5% | 65.5% | 100.0% | 80.2% | 0.034 |
| 3 | Xception | 91.4% | 74.5% | 51.2% | 100.0% | 79.3% | 0.025 |
| 4 | GoogLeNet | 81.7% | 70.2% | 62.9% | 100.0% | 78.7% | 0.019 |
| 5 | EfficientNet-b0 | 72.6% | 78.7% | 60.3% | 100.0% | 77.9% | 0.080 |
| 6 | DenseNet-201 | 68.8% | 81.8% | 60.2% | 100.0% | 77.7% | 0.115 |
| 7 | Inception-v3 | 85.1% | 64.9% | 58.3% | 100.0% | 77.1% | 0.029 |
| 8 | MobileNet-v2 | 91.8% | 67.1% | 42.8% | 100.0% | 75.4% | 0.020 |
| 9 | ResNet-50 | 78.6% | 81.2% | 41.5% | 100.0% | 75.3% | 0.020 |
| 10 | ResNet-18 | 82.8% | 71.9% | 43.6% | 100.0% | 74.6% | 0.013 |
| 11 | ShuffleNet | 90.6% | 61.6% | 45.4% | 100.0% | 74.4% | 0.026 |
| 12 | DarkNet-53 | 90.4% | 67.2% | 33.2% | 100.0% | 72.7% | 0.027 |
| 13 | SqueezeNet | 35.6% | 85.0% | 37.2% | 9.6% | 41.9% | 0.012 |
| 14 | DarkNet-19 | 100.0% | 0.0% | 0.0% | 0.0% | 25.0% | 0.016 |
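Because the testing set is balanced (1200 images per sea state), the overall accuracy in the table equals the mean of the four sea-state-wise accuracies, up to rounding; for NASNet-Mobile, (90.2 + 76.2 + 60.6 + 100.0) / 4 = 81.75 ≈ 81.8%. The sketch below computes both quantities from a confusion matrix; the 4 × 4 matrix in the example is invented for illustration.

```python
def per_class_accuracy(confusion):
    """confusion[i][j]: number of test images of true class i predicted as class j."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


def overall_accuracy(confusion):
    """Fraction of all test images on the diagonal (correctly classified)."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / sum(sum(row) for row in confusion)


# Invented balanced example: 10 test images per class for sea states 1-4.
confusion = [
    [9, 1, 0, 0],
    [2, 8, 0, 0],
    [0, 3, 7, 0],
    [0, 0, 0, 10],
]
per_class = per_class_accuracy(confusion)     # [0.9, 0.8, 0.7, 1.0]
overall = overall_accuracy(confusion)         # 0.85
macro_mean = sum(per_class) / len(per_class)  # equals overall when classes are balanced
print(per_class, round(overall, 4), round(macro_mean, 4))
```

On an imbalanced testing set the two numbers would diverge, with the overall accuracy weighted toward the larger classes; the balanced design makes the table's overall column a fair summary across sea states.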

| SN | Model | Model Depth | Number of Layers | Number of Connections | Approximate Model Training Time (min) | Overall Classification Accuracy | Per-Image Classification Time (s) |
|---|---|---|---|---|---|---|---|
| 1 | NASNet-Mobile | N/A | 913 | 1072 | 58 | 81.8% | 0.127 |
| 2 | ResNet-101 | 101 | 347 | 379 | 37 | 80.2% | 0.034 |
| 3 | Xception | 71 | 170 | 181 | 79 | 79.3% | 0.025 |
| 4 | GoogLeNet | 22 | 144 | 170 | 14 | 78.7% | 0.019 |
| 5 | EfficientNet-b0 | 82 | 290 | 363 | 34 | 77.9% | 0.080 |

| SN | GoogLeNet Variation | Sea State 1 Accuracy | Sea State 2 Accuracy | Sea State 3 Accuracy | Sea State 4 Accuracy | Overall Classification Accuracy | Per-Image Classification Time (s) |
|---|---|---|---|---|---|---|---|
| 1 | 1-IB | 87.7% | 81.6% | 62.5% | 100.0% | 82.9% | 0.008 |
| 2 | 2-IB | 99.3% | 81.7% | 60.6% | 100.0% | 85.4% | 0.009 |
| 3 | 3-IB | 95.3% | 58.8% | 61.4% | 100.0% | 78.9% | 0.014 |

| SN | Proposed Model | Sea State Wise Classification Accuracy (%) | Overall Classification Accuracy (%) | Per-image Classification Time (s) | |||

|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | ||||

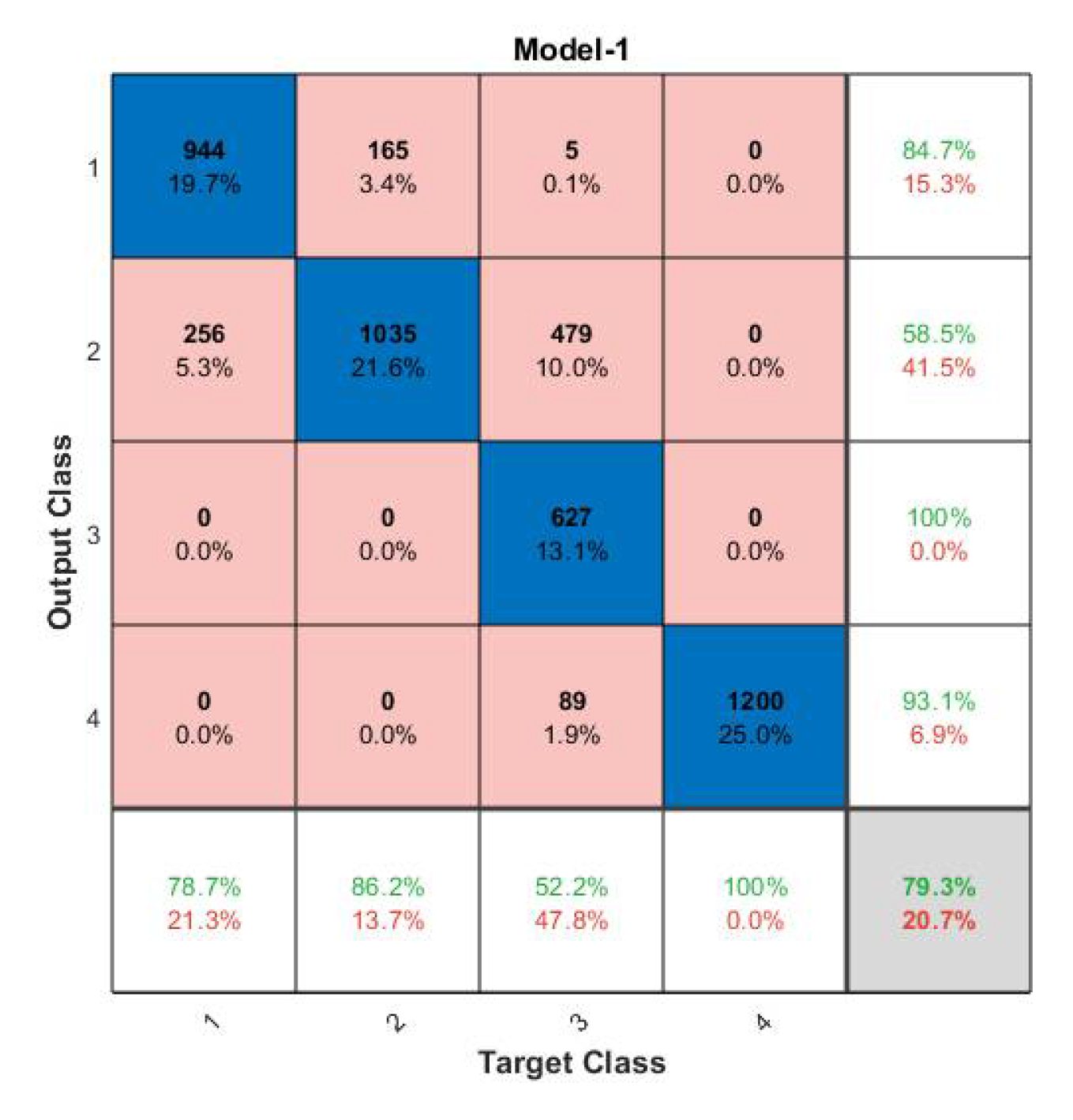

| 1 | Model 1 | 78.7% | 86.2% | 52.2% | 100.0% | 79.3% | 0.008 |

| 2 | Model 2 | 86.6% | 87.7% | 45.9% | 100.0% | 80.0% | 0.009 |

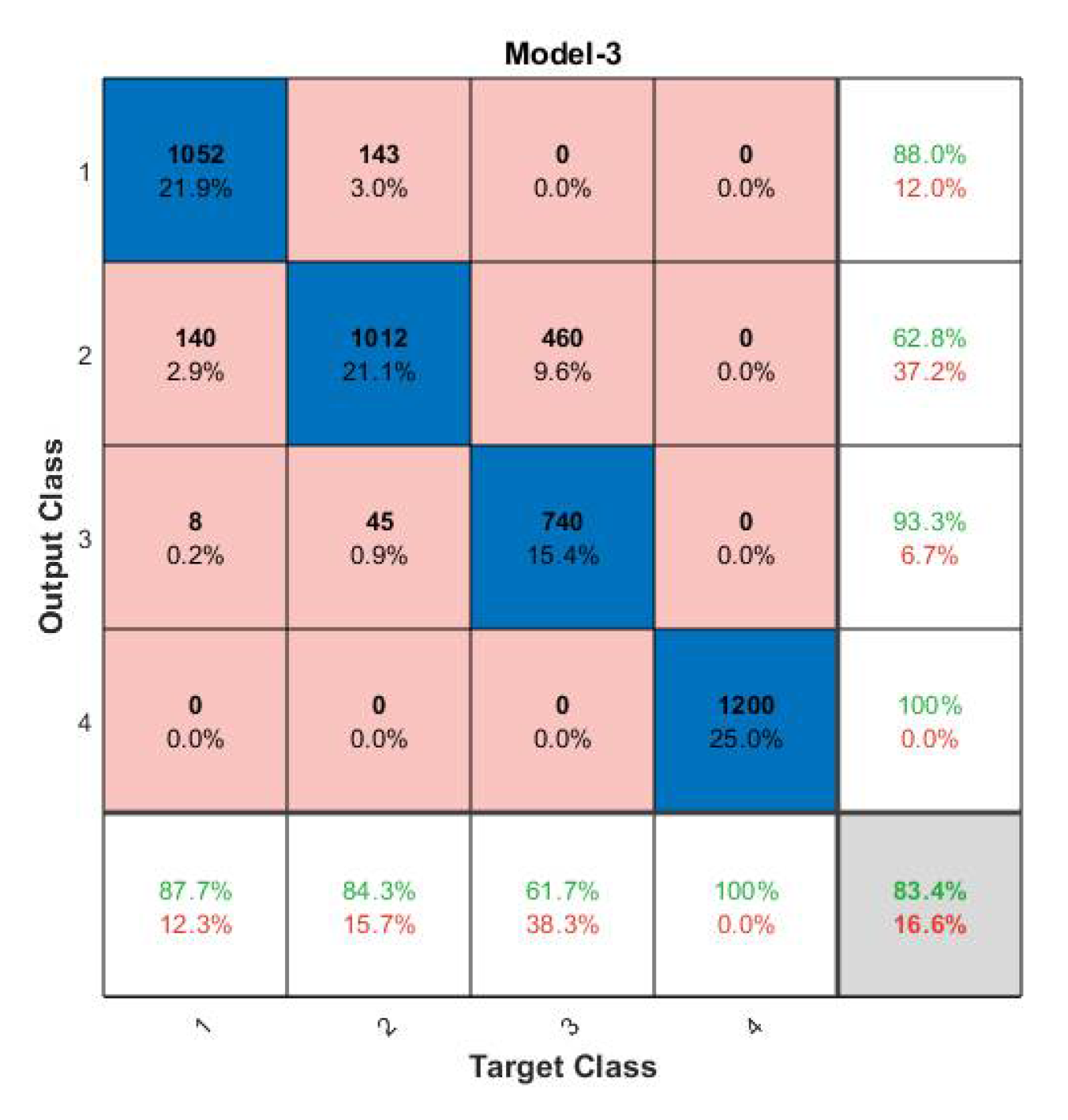

| 3 | Model 3 | 87.7% | 84.3% | 61.7% | 100.0% | 83.4% | 0.011 |

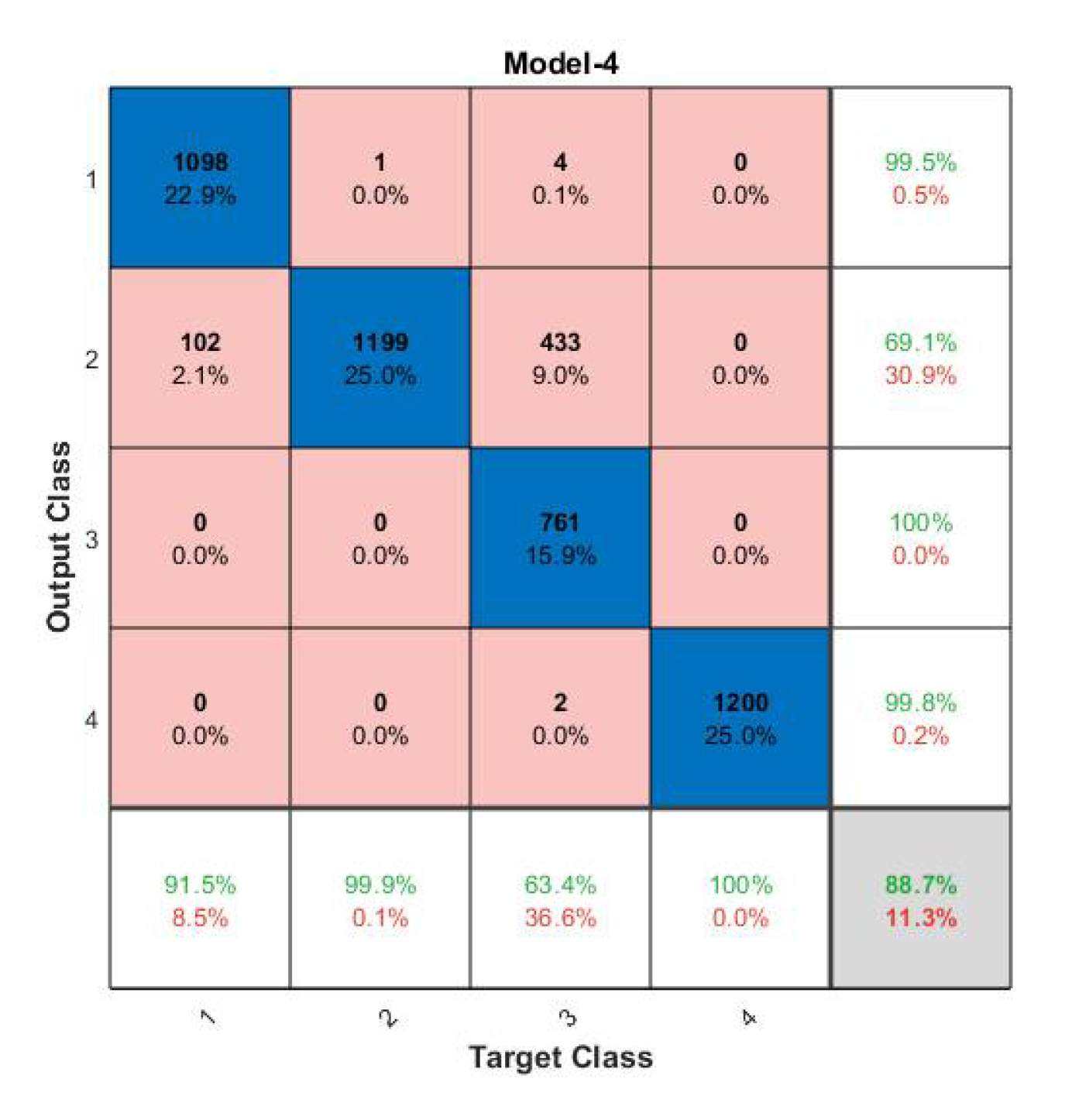

| 4 | Model 4 | 91.5% | 99.9% | 63.4% | 100.0% | 88.7% | 0.011 |

| SN | Group | Model | Overall Classification Accuracy | 95% CI Lower Bound | 95% CI Upper Bound | Model Training Time (s) | Per-Image Classification Time (s) |
|---|---|---|---|---|---|---|---|
| 1 | State-of-the-art models | NASNet-Mobile | 81.8% | 80.6% | 82.8% | 3513 | 0.127 |
| 2 | State-of-the-art models | ResNet-101 | 80.2% | 79.1% | 81.3% | 2249 | 0.034 |
| 3 | State-of-the-art models | Xception | 79.3% | 78.1% | 80.4% | 4749 | 0.025 |
| 4 | State-of-the-art models | GoogLeNet | 78.7% | 77.5% | 79.8% | 892 | 0.019 |
| 5 | State-of-the-art models | EfficientNet-b0 | 77.9% | 76.7% | 79.0% | 2090 | 0.080 |
| 6 | State-of-the-art models | DenseNet-201 | 77.7% | 76.5% | 78.8% | 12,945 | 0.115 |
| 7 | State-of-the-art models | Inception-v3 | 77.1% | 75.8% | 78.2% | 2656 | 0.029 |
| 8 | State-of-the-art models | MobileNet-v2 | 75.4% | 74.2% | 76.6% | 1106 | 0.020 |
| 9 | State-of-the-art models | ResNet-50 | 75.3% | 74.0% | 76.5% | 1964 | 0.020 |
| 10 | State-of-the-art models | ResNet-18 | 74.6% | 73.3% | 75.7% | 822 | 0.013 |
| 11 | State-of-the-art models | ShuffleNet | 74.4% | 73.1% | 75.6% | 1371 | 0.026 |
| 12 | State-of-the-art models | DarkNet-53 | 72.7% | 71.4% | 73.9% | 9510 | 0.027 |
| 13 | State-of-the-art models | SqueezeNet | 41.9% | 40.4% | 43.2% | 659 | 0.012 |
| 14 | State-of-the-art models | DarkNet-19 | 25.0% | 23.7% | 26.2% | 2824 | 0.016 |
| 15 | GoogLeNet variations | 1-IB | 82.9% | 81.8% | 84.0% | 707 | 0.008 |
| 16 | GoogLeNet variations | 2-IB | 85.4% | 84.3% | 86.3% | 515 | 0.009 |
| 17 | GoogLeNet variations | 3-IB | 78.9% | 77.7% | 80.0% | 621 | 0.014 |
| 18 | Proposed models | Model 1 | 79.3% | 78.1% | 80.4% | 1166 | 0.008 |
| 19 | Proposed models | Model 2 | 80.0% | 78.9% | 81.1% | 429 | 0.009 |
| 20 | Proposed models | Model 3 | 83.4% | 82.3% | 84.4% | 650 | 0.011 |
| 21 | Proposed models | Model 4 (MUSeNet) | 88.7% | 87.7% | 89.5% | 521 | 0.011 |
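The article does not state how the 95% confidence intervals were computed. One standard choice for a classification accuracy measured on n test images is the Wilson score interval, sketched below with n = 4800 (the size of the testing set). Because the method is assumed, its bounds need not match the table exactly; for Model 4 (88.7%) it gives roughly 87.8% to 89.6%, against the reported 87.7% to 89.5%.

```python
import math


def wilson_interval(accuracy, n, z=1.96):
    """Wilson score interval for a proportion (here, accuracy on n test images)."""
    denom = 1 + z * z / n
    center = (accuracy + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(accuracy * (1 - accuracy) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width


for name, acc in [("NASNet-Mobile", 0.818), ("2-IB", 0.854), ("Model 4 (MUSeNet)", 0.887)]:
    lo, hi = wilson_interval(acc, n=4800)
    print(f"{name}: {100 * lo:.1f}% to {100 * hi:.1f}%")
```

With 4800 test images, each interval is only about ±0.9 to ±1.1 percentage points wide, so the roughly 7-point gap between MUSeNet and the best pretrained model is far larger than the sampling uncertainty of either estimate.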

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Umair, M.; Hashmani, M.A.; Hussain Rizvi, S.S.; Taib, H.; Abdullah, M.N.; Memon, M.M. A Novel Deep Learning Model for Sea State Classification Using Visual-Range Sea Images. Symmetry 2022, 14, 1487. https://doi.org/10.3390/sym14071487
