Calibration of High-Impact Short-Range Quantitative Precipitation Forecast through Frequency-Matching Techniques

Abstract

1. Introduction

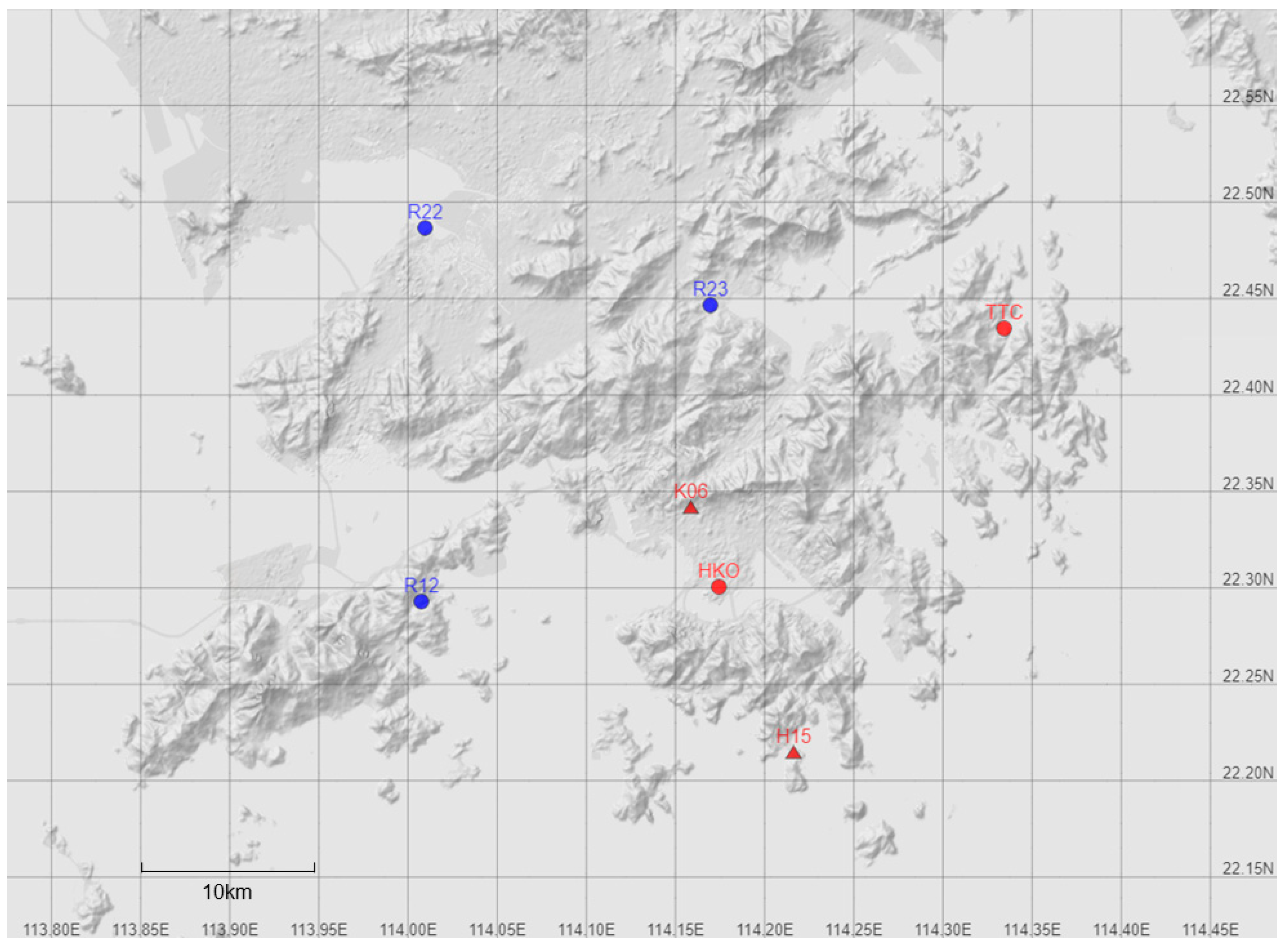

2. Data and Methodology

2.1. The f-t Conversion

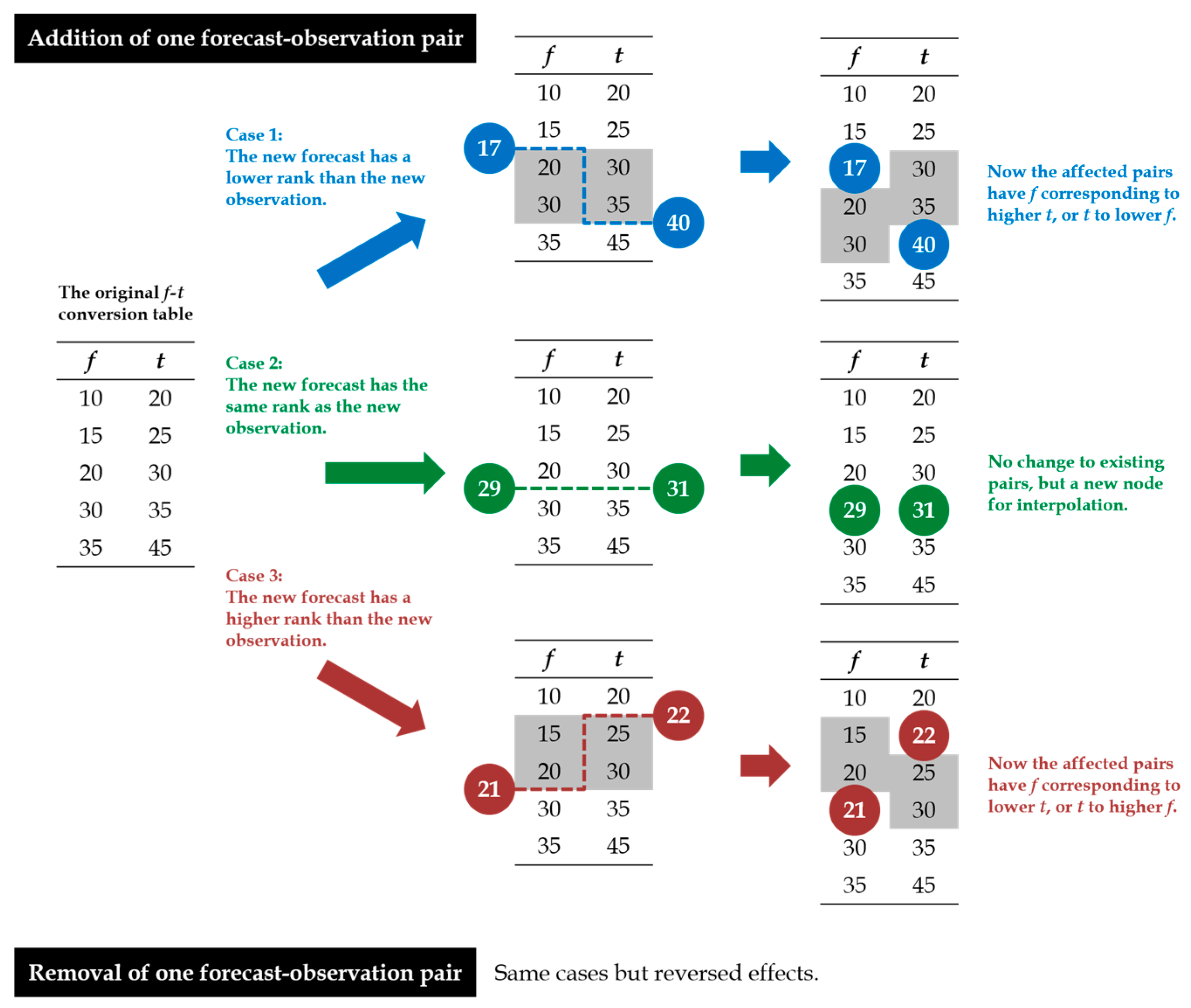

2.2. The Adaptive Table Method

2.3. The Sliding Window Method

2.4. Verification Metrics

3. Results and Discussion

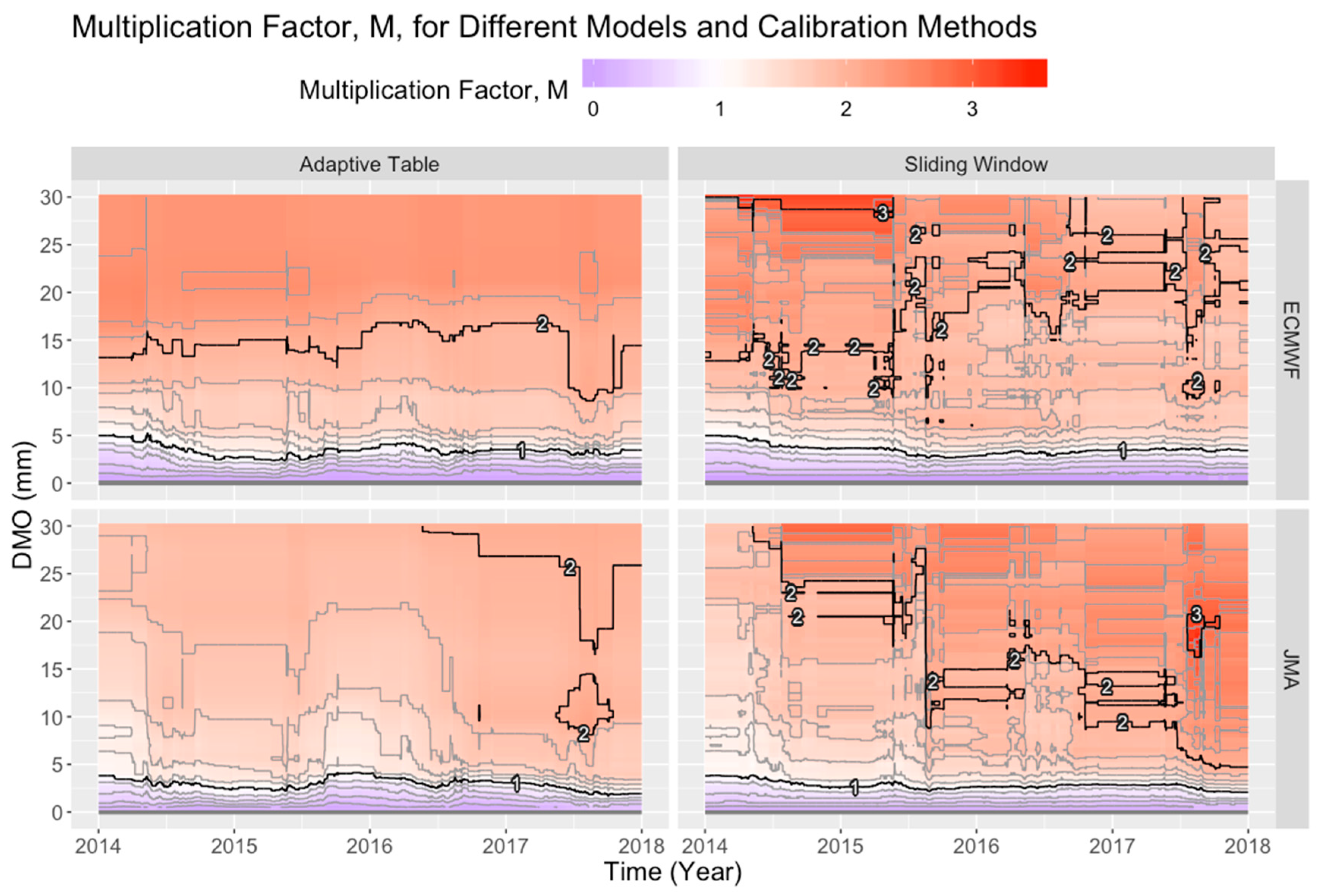

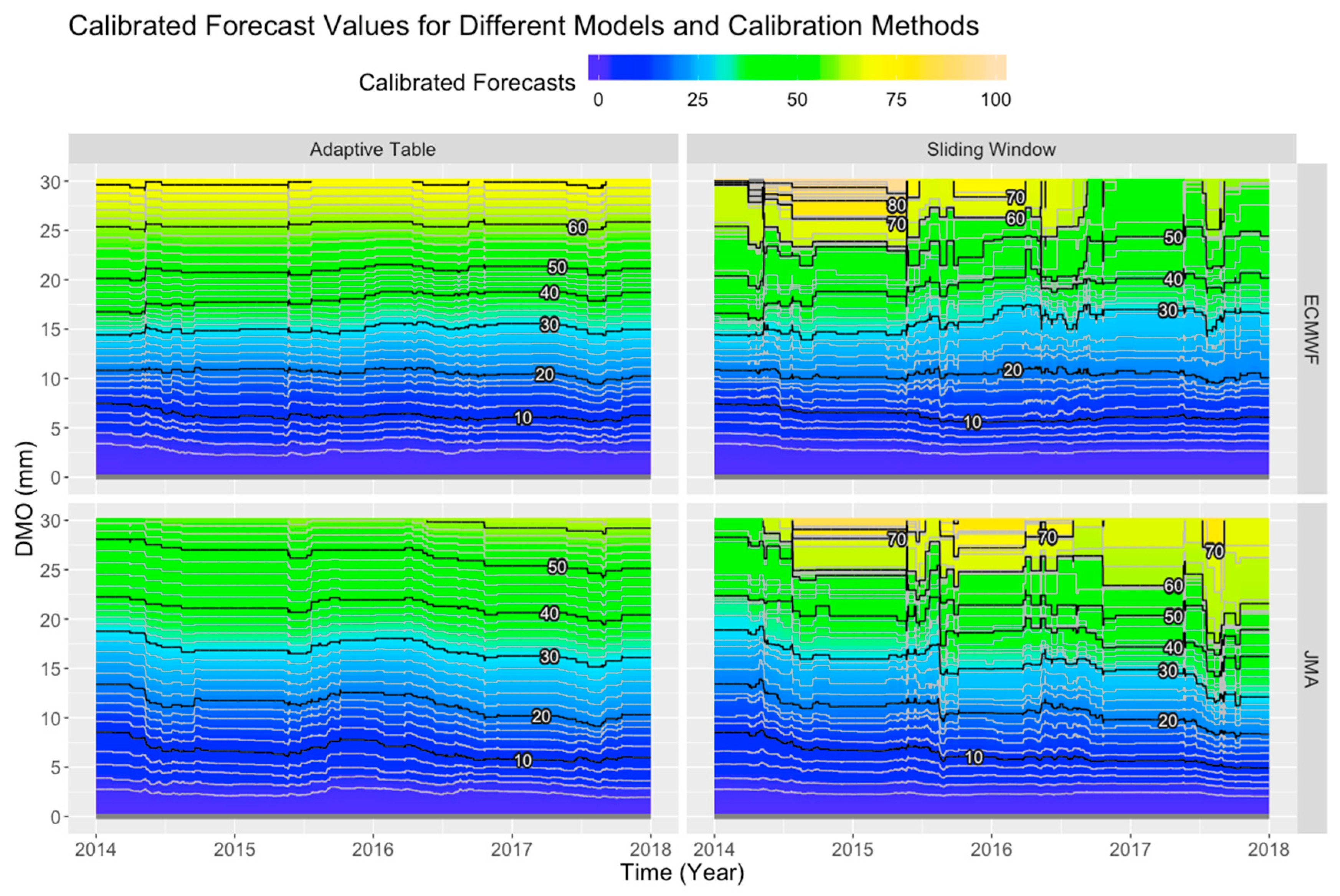

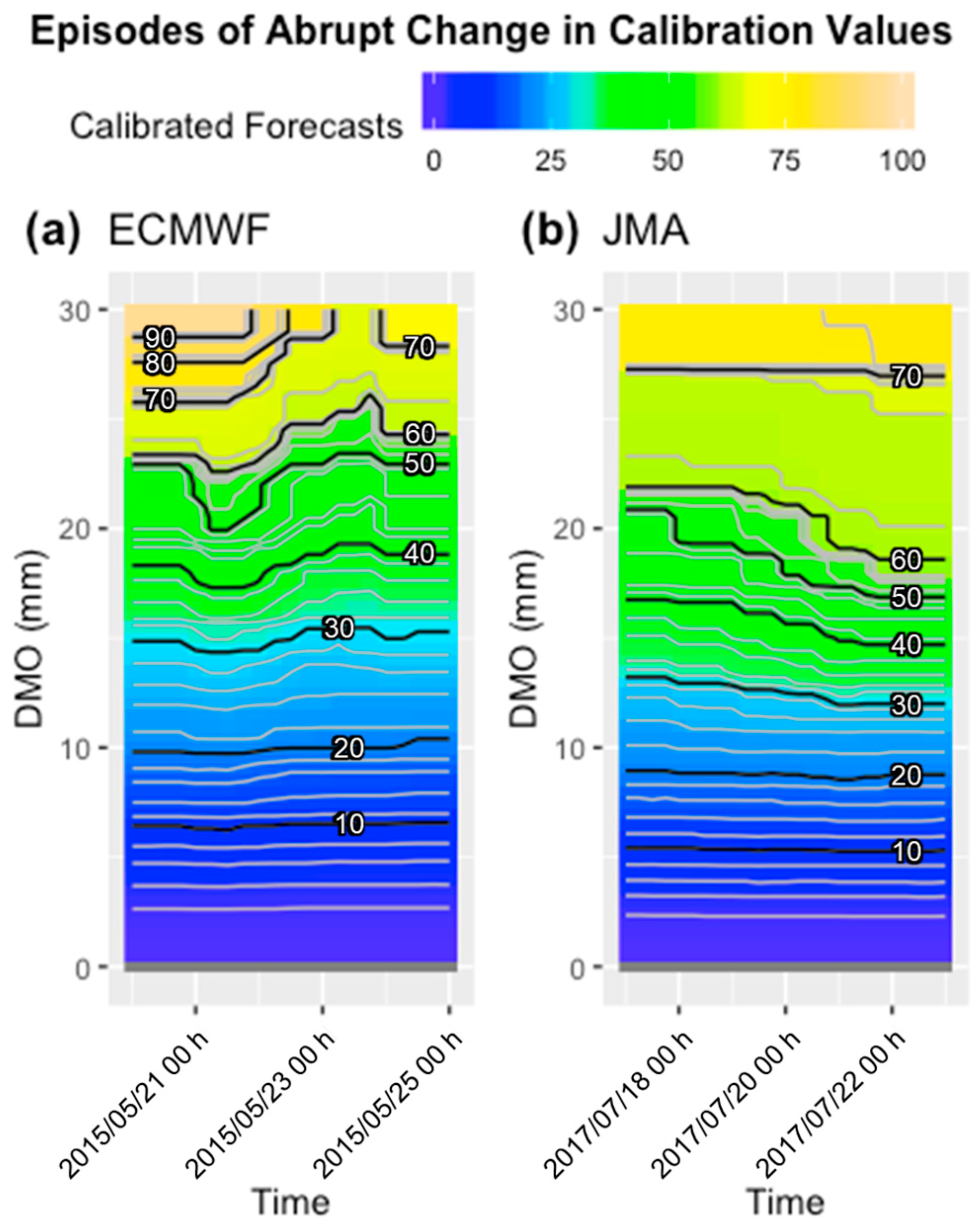

3.1. The Stability of the Calibration Methods

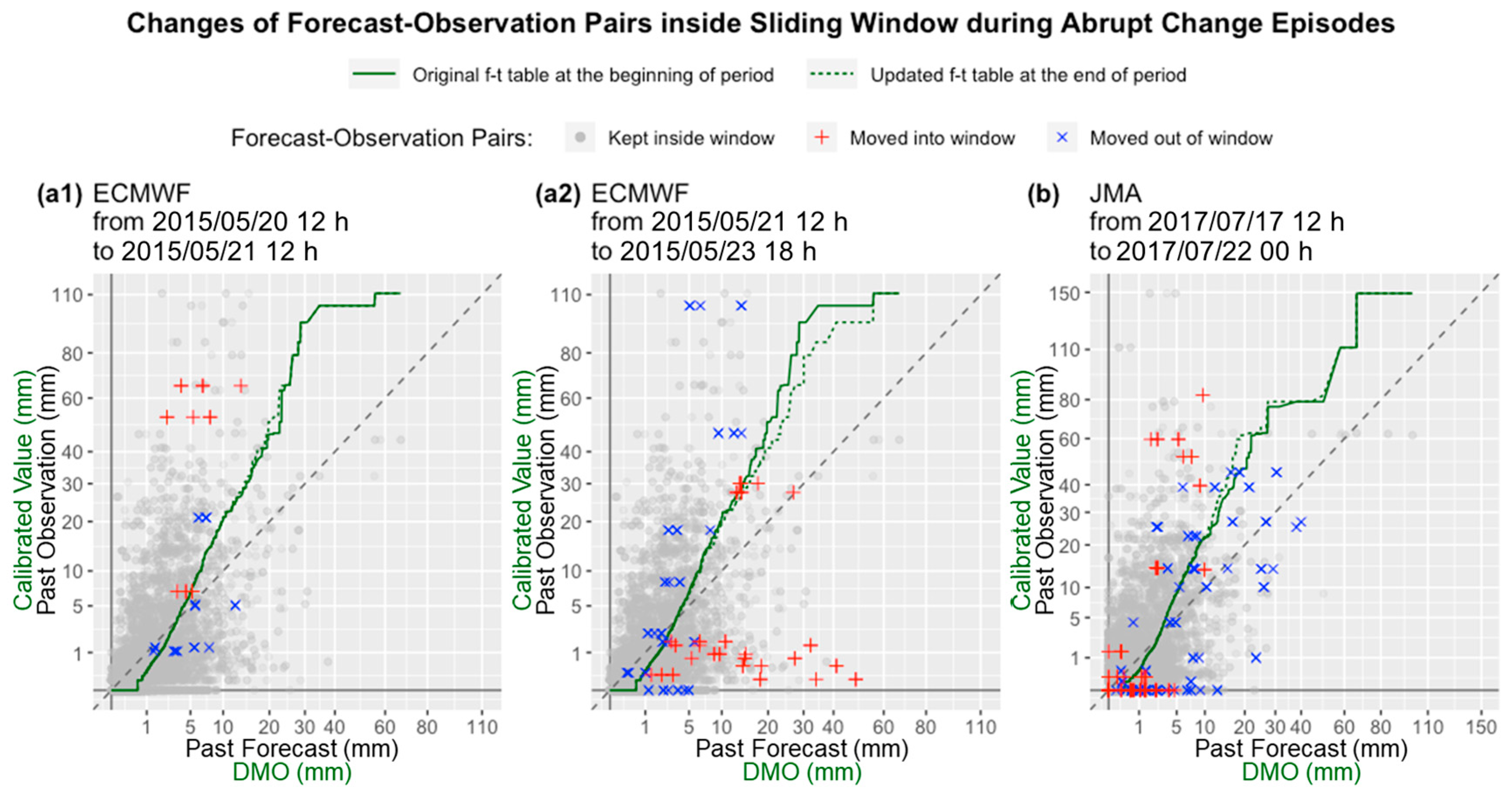

3.2. The Behavior of Updates in the Sliding Window Method

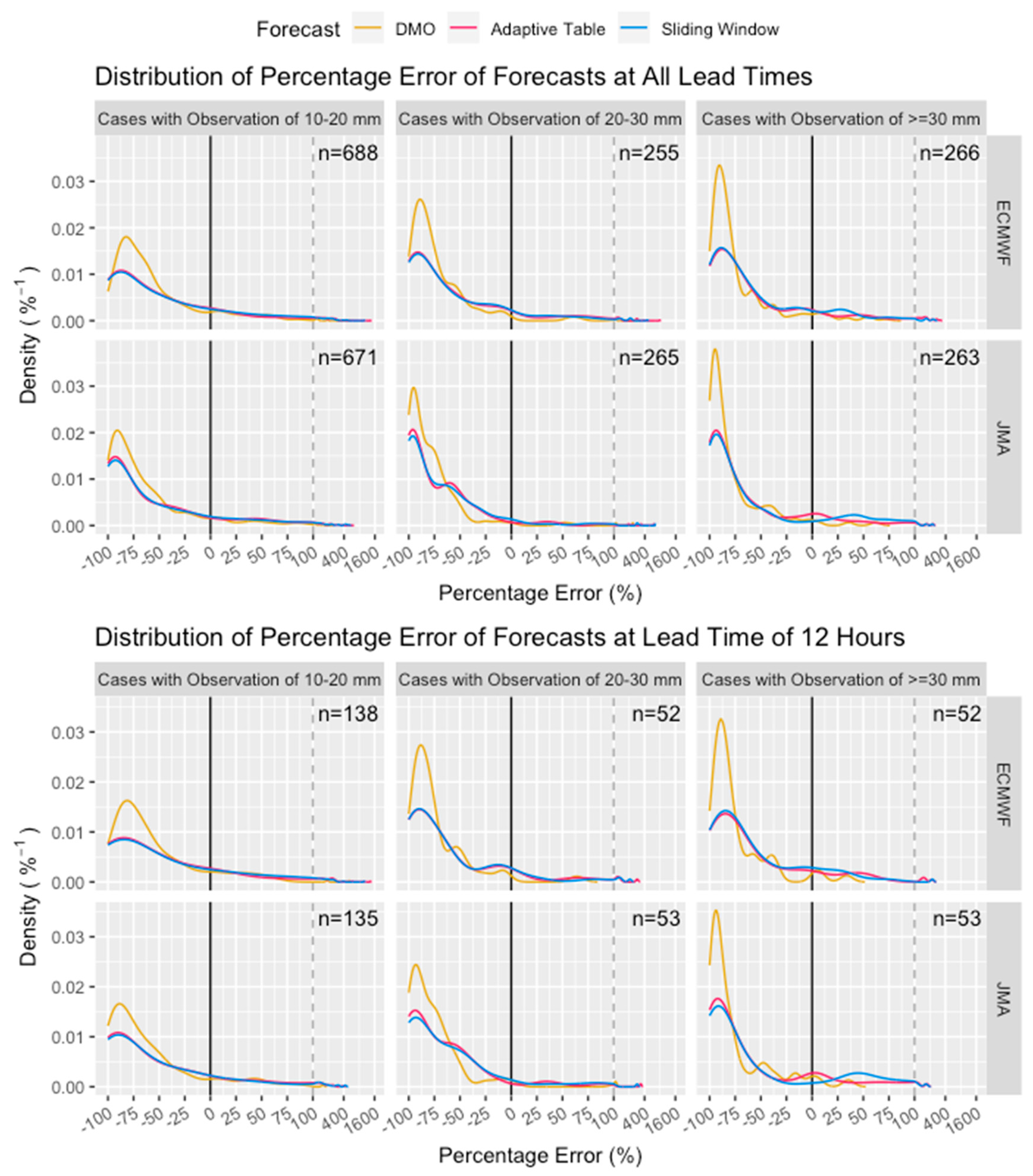

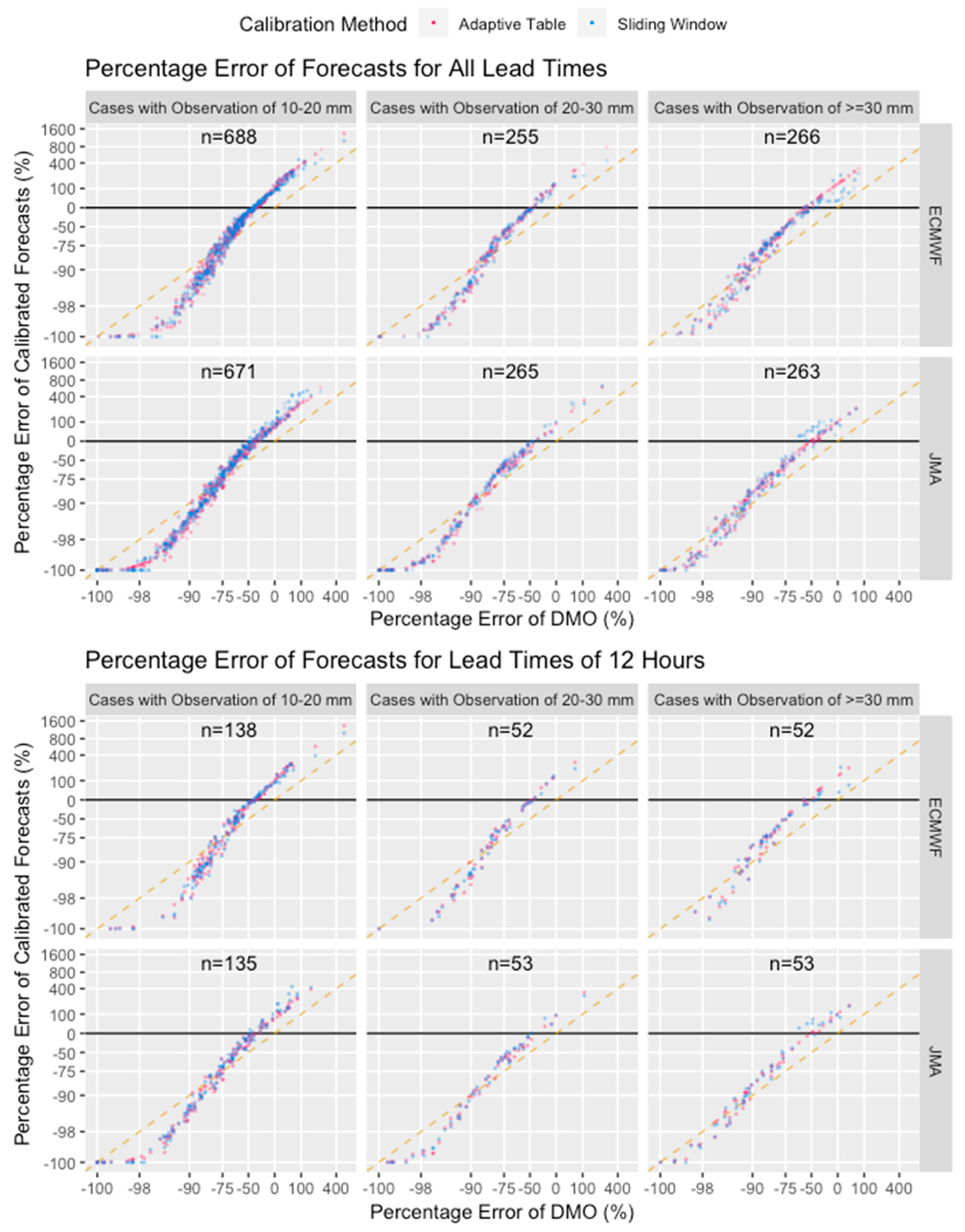

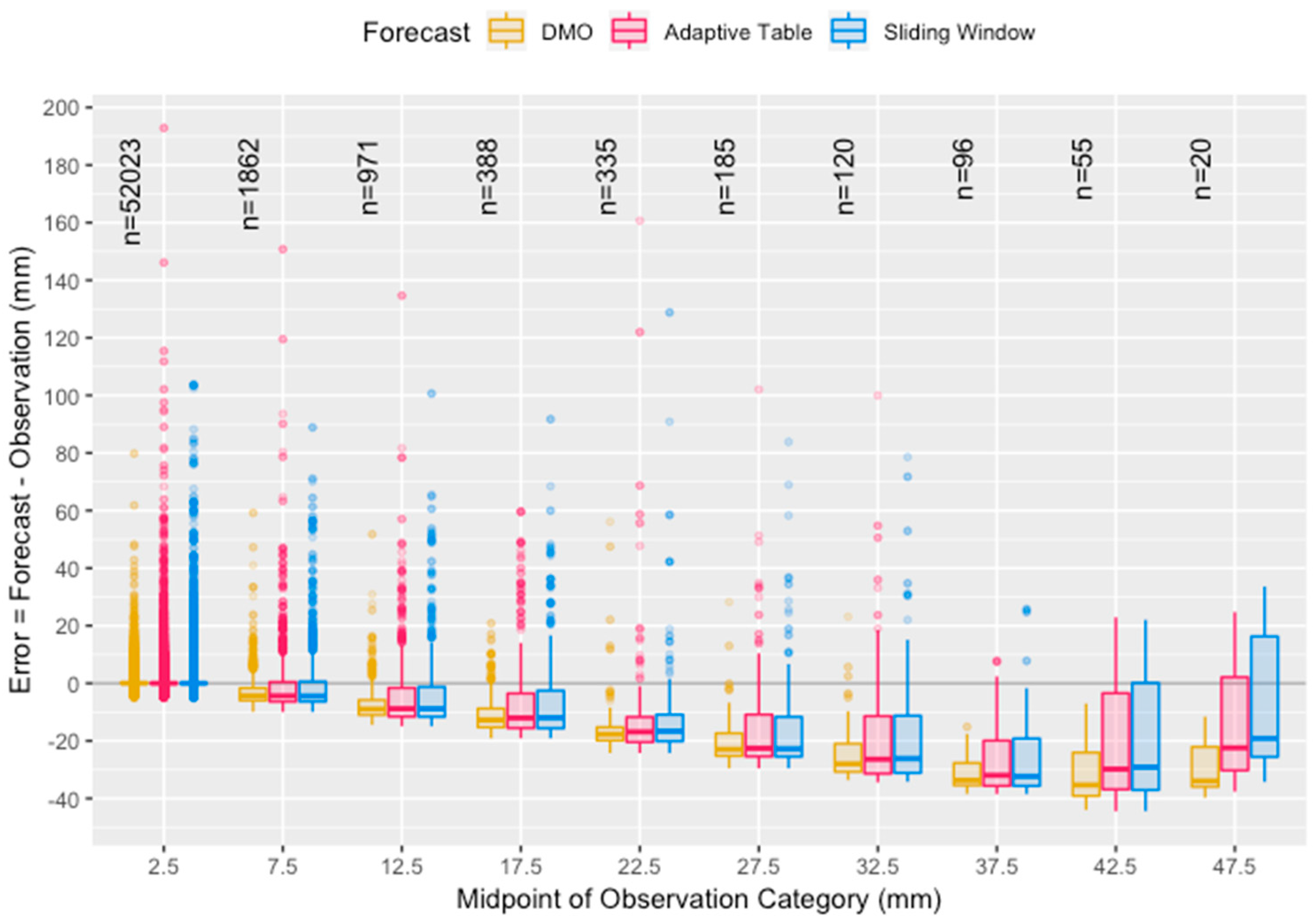

3.3. Performance of the Calibration Methods in Terms of Percentage Errors

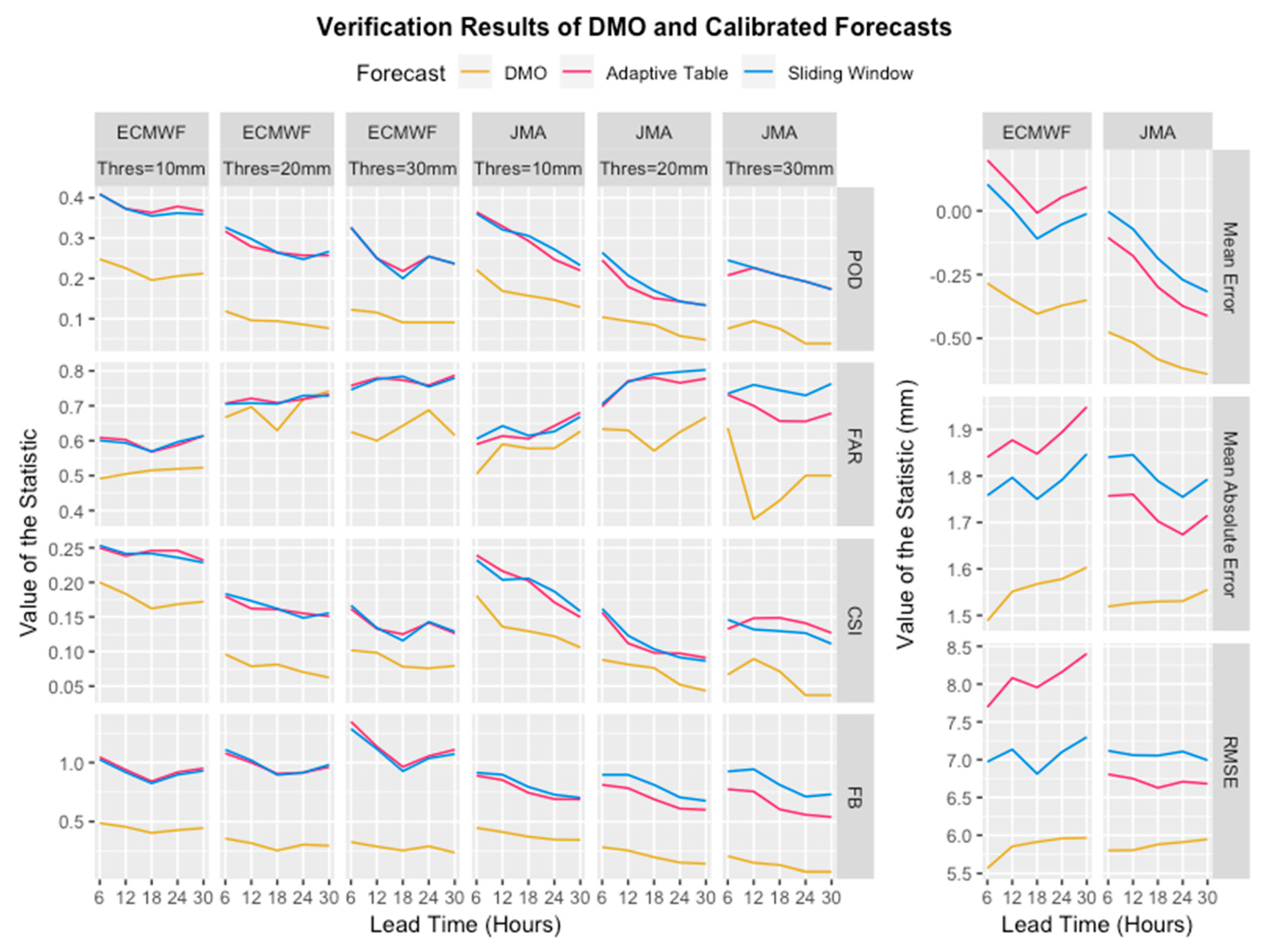

3.4. Performance of the Calibration Methods in Terms of Verification Metrics

4. Case Studies of Actual Rainfall Events

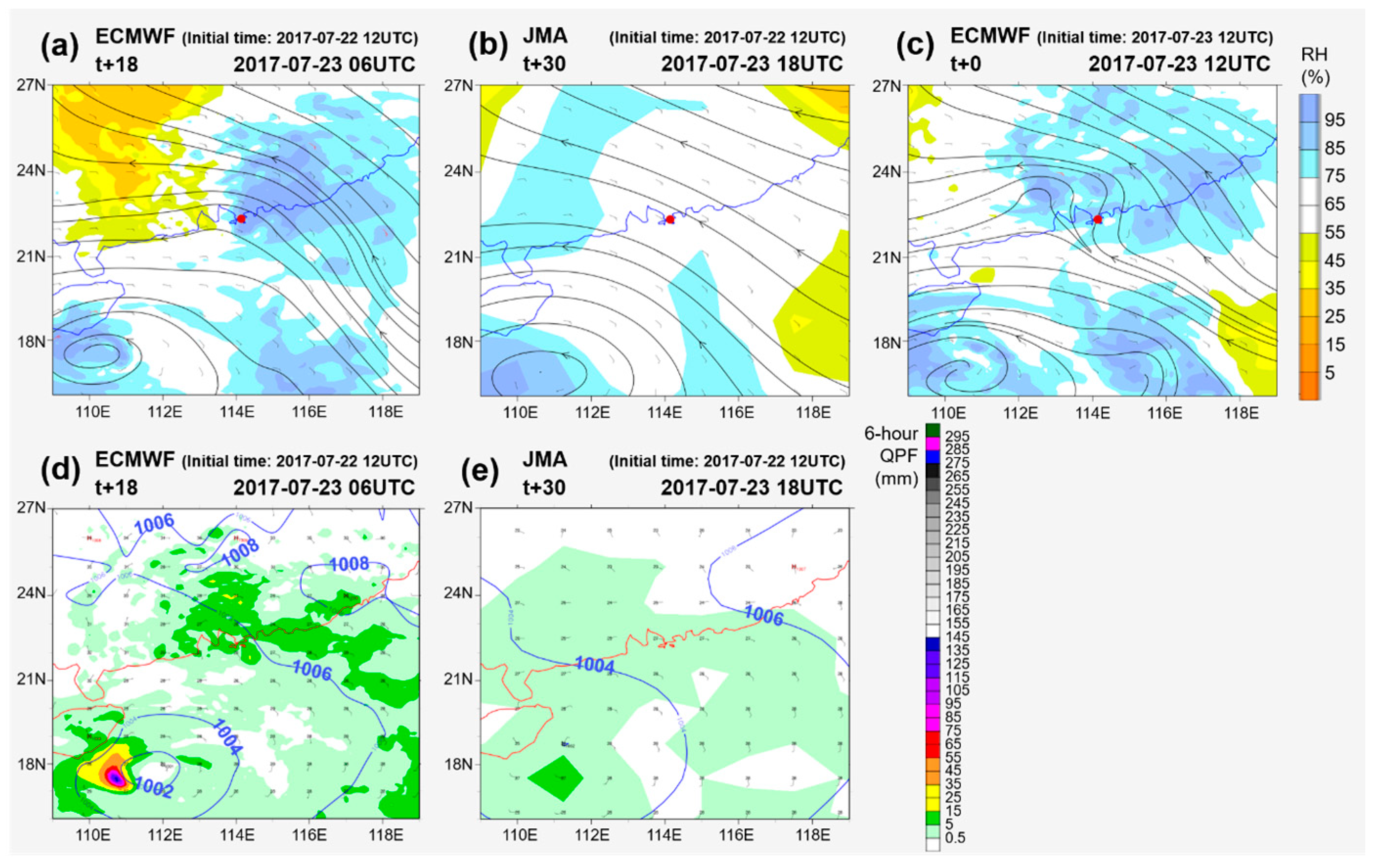

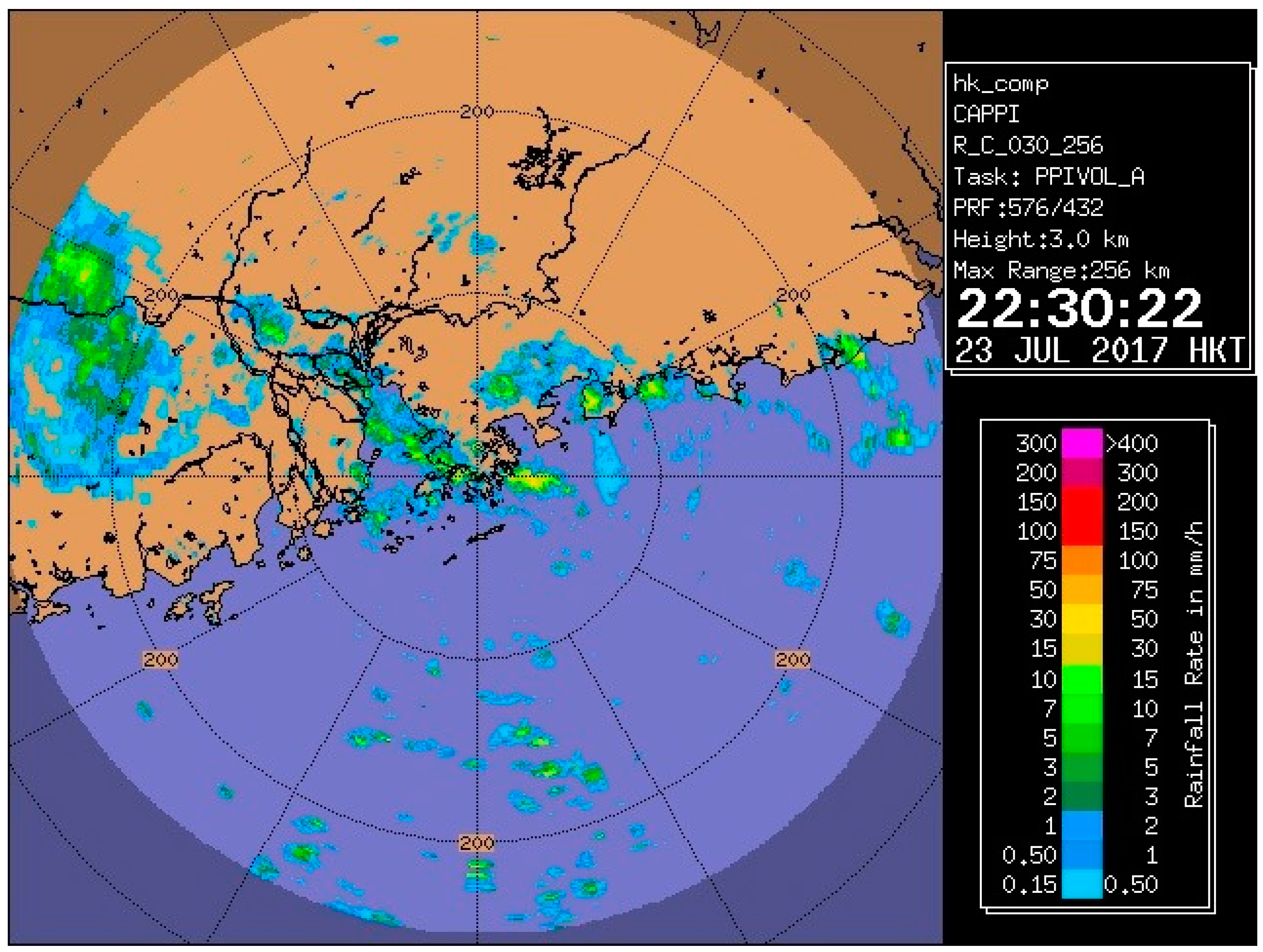

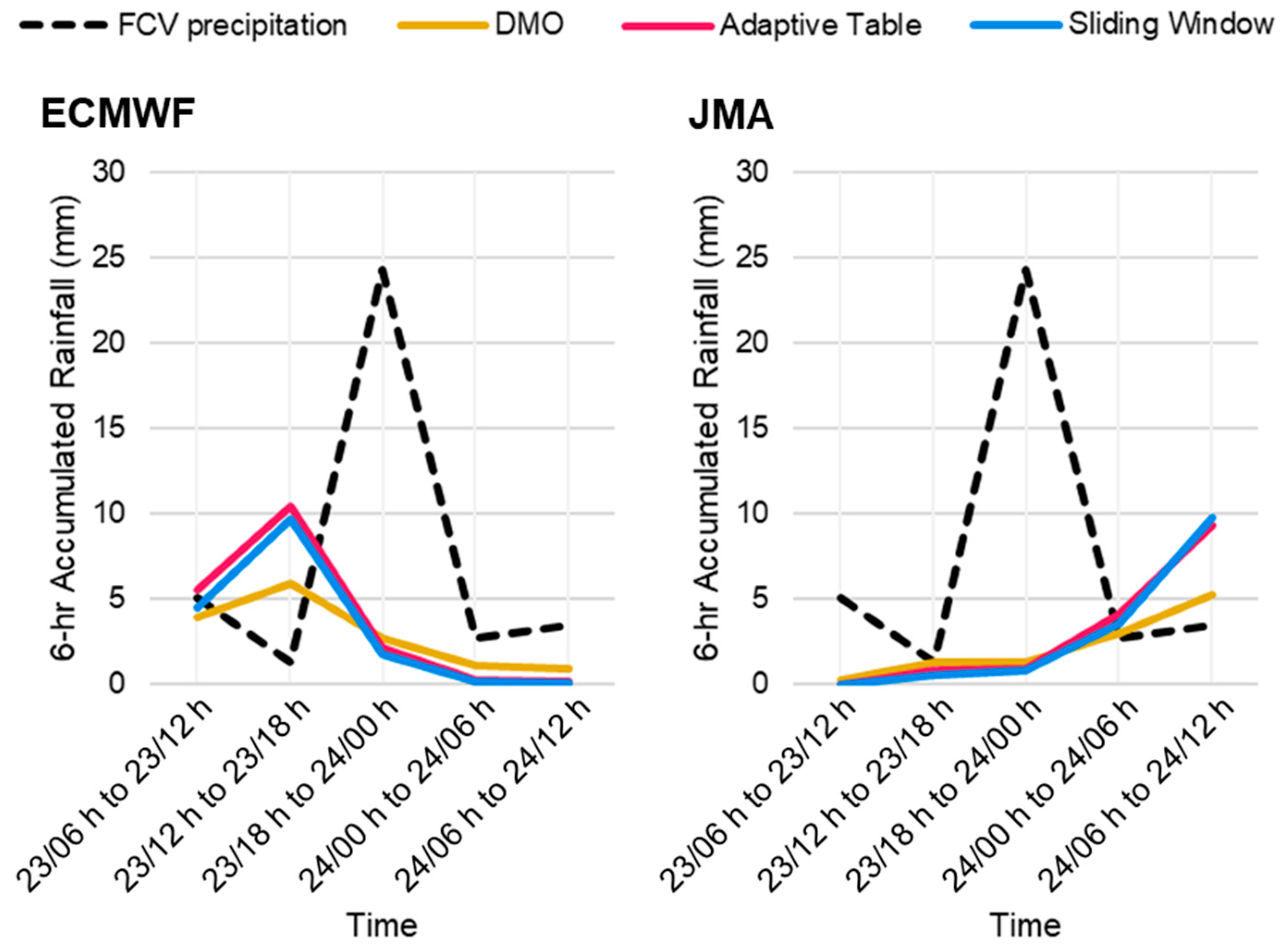

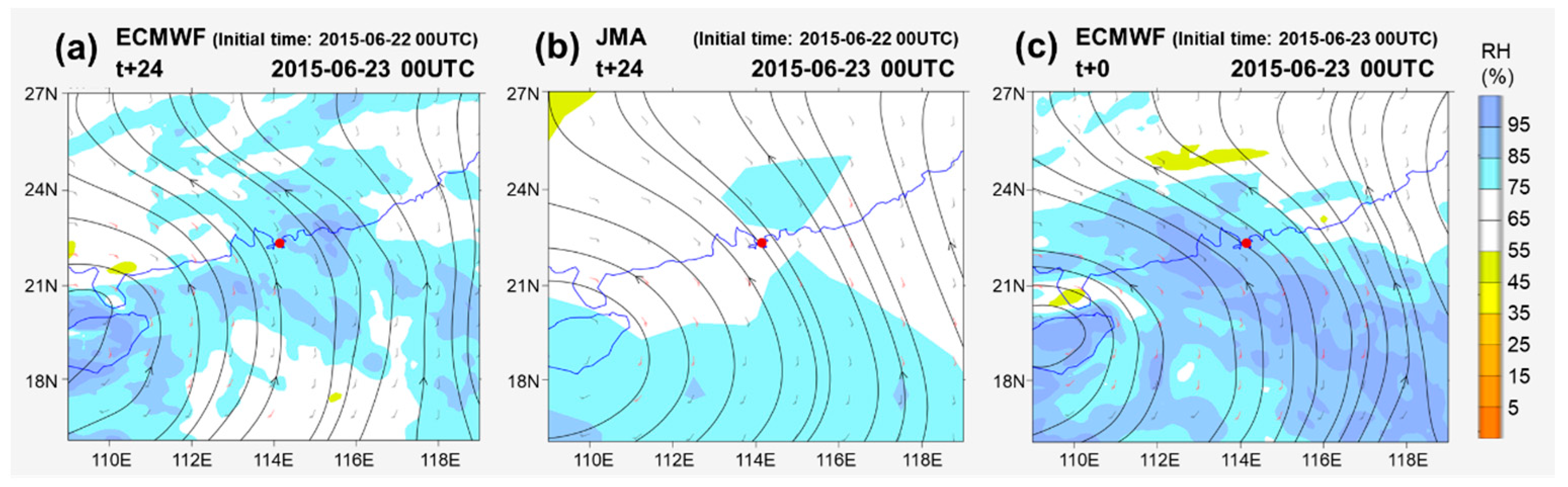

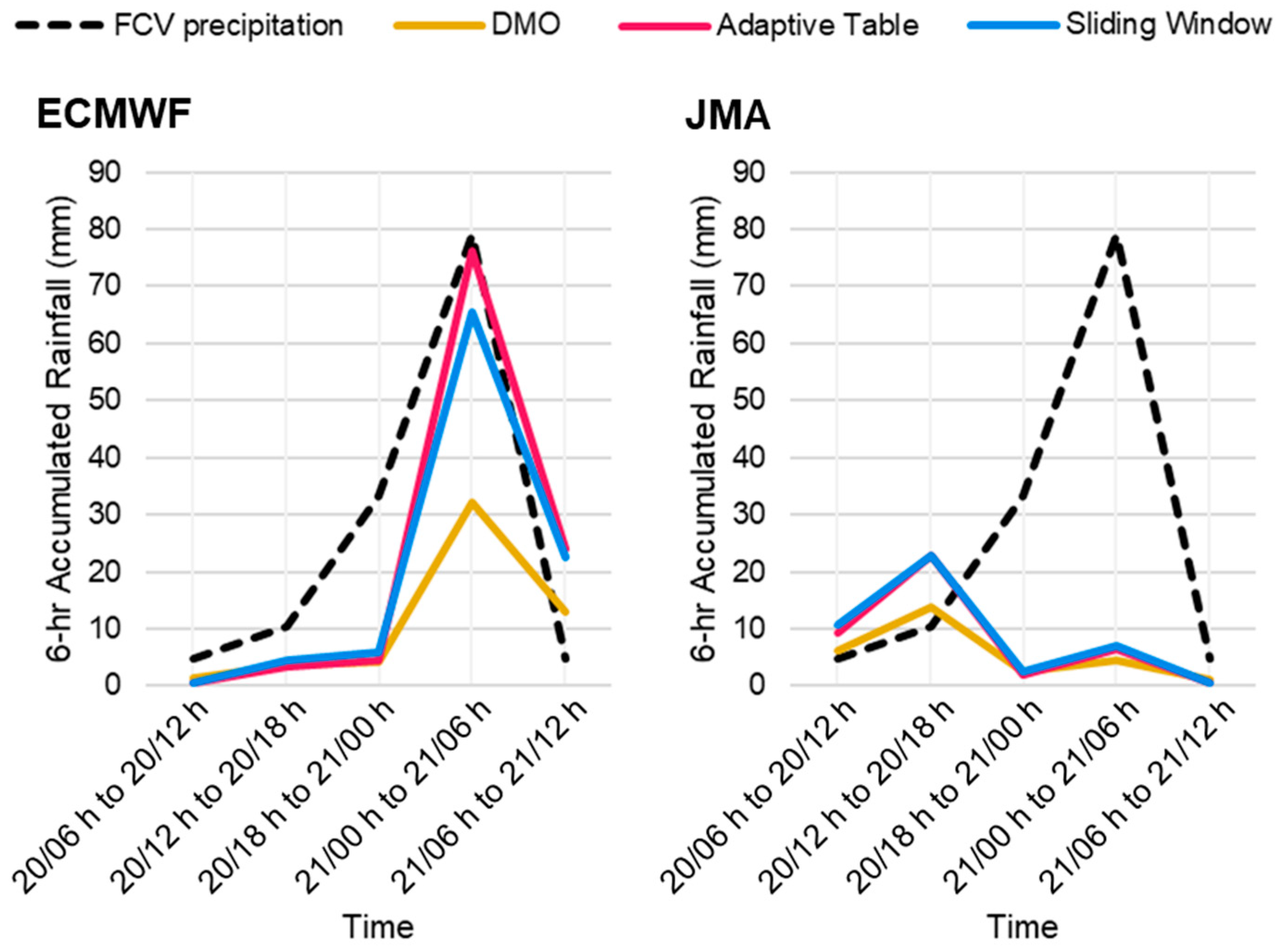

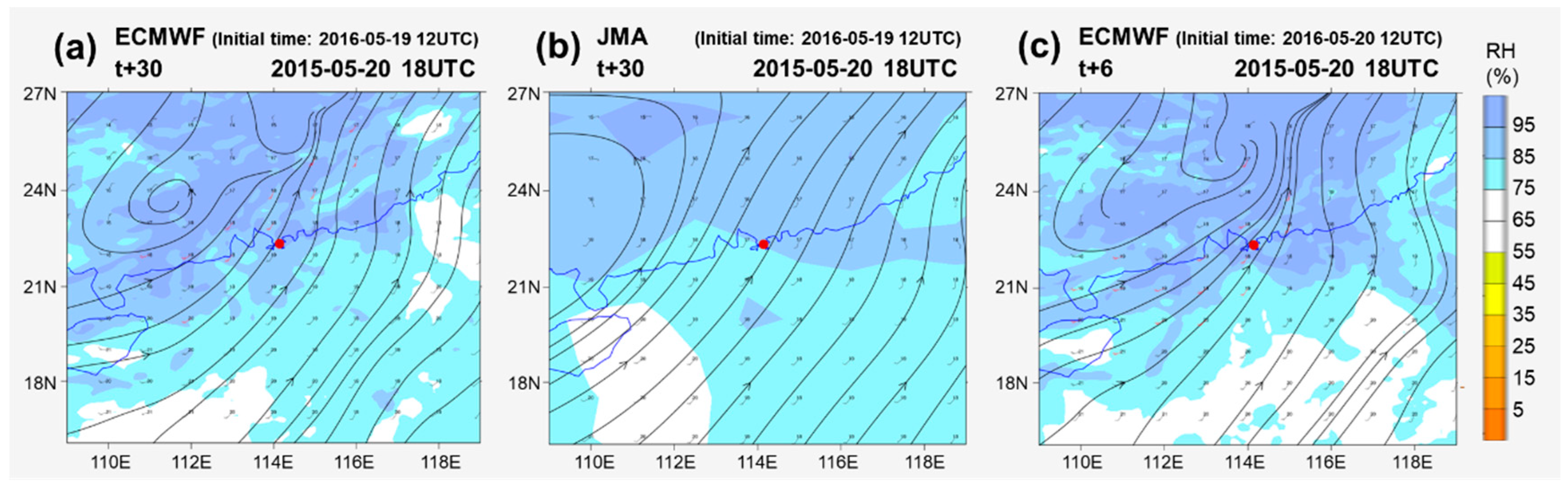

4.1. Failure due to Limitation of Global NWP Models

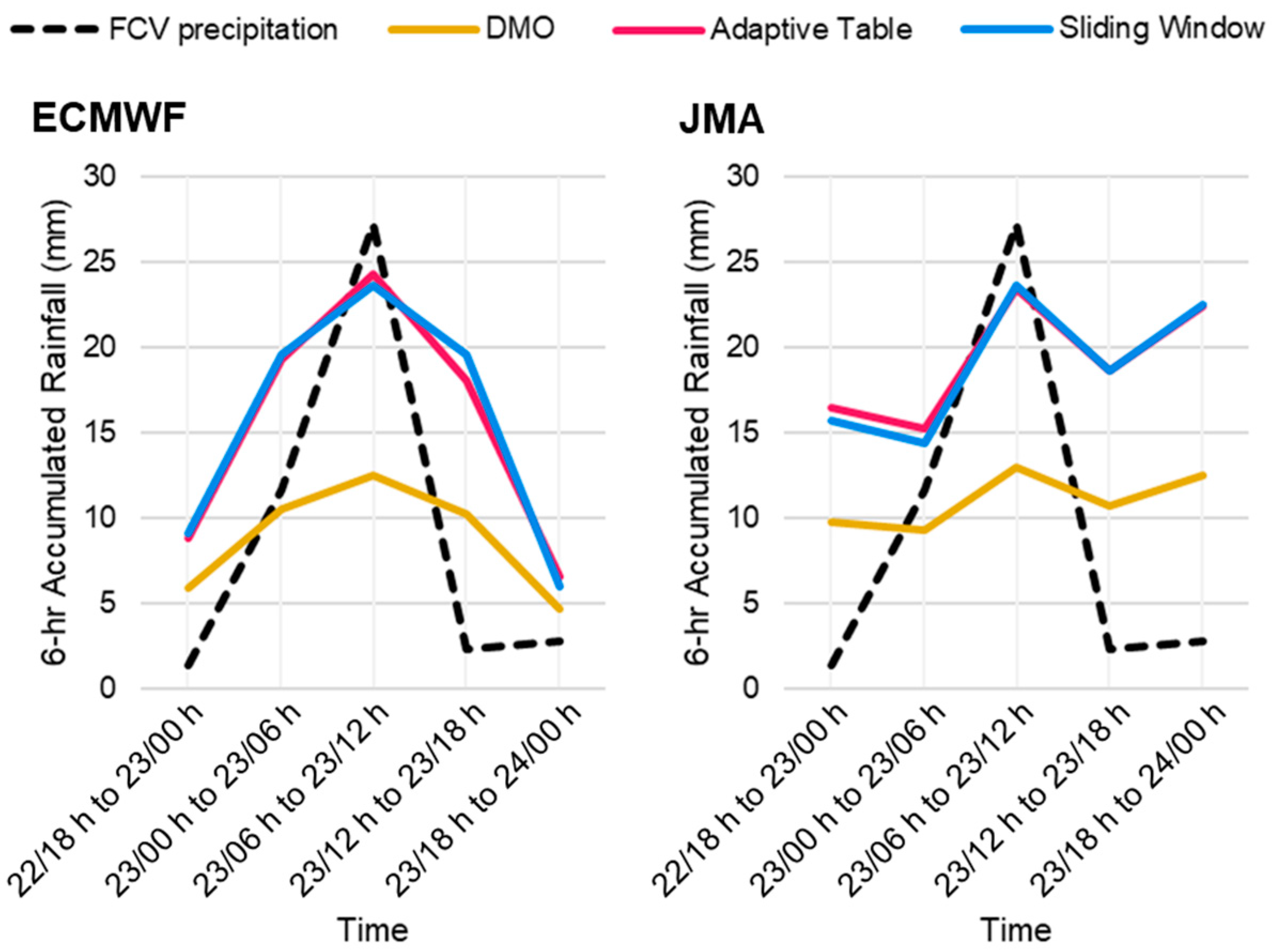

4.2. A Successful Example

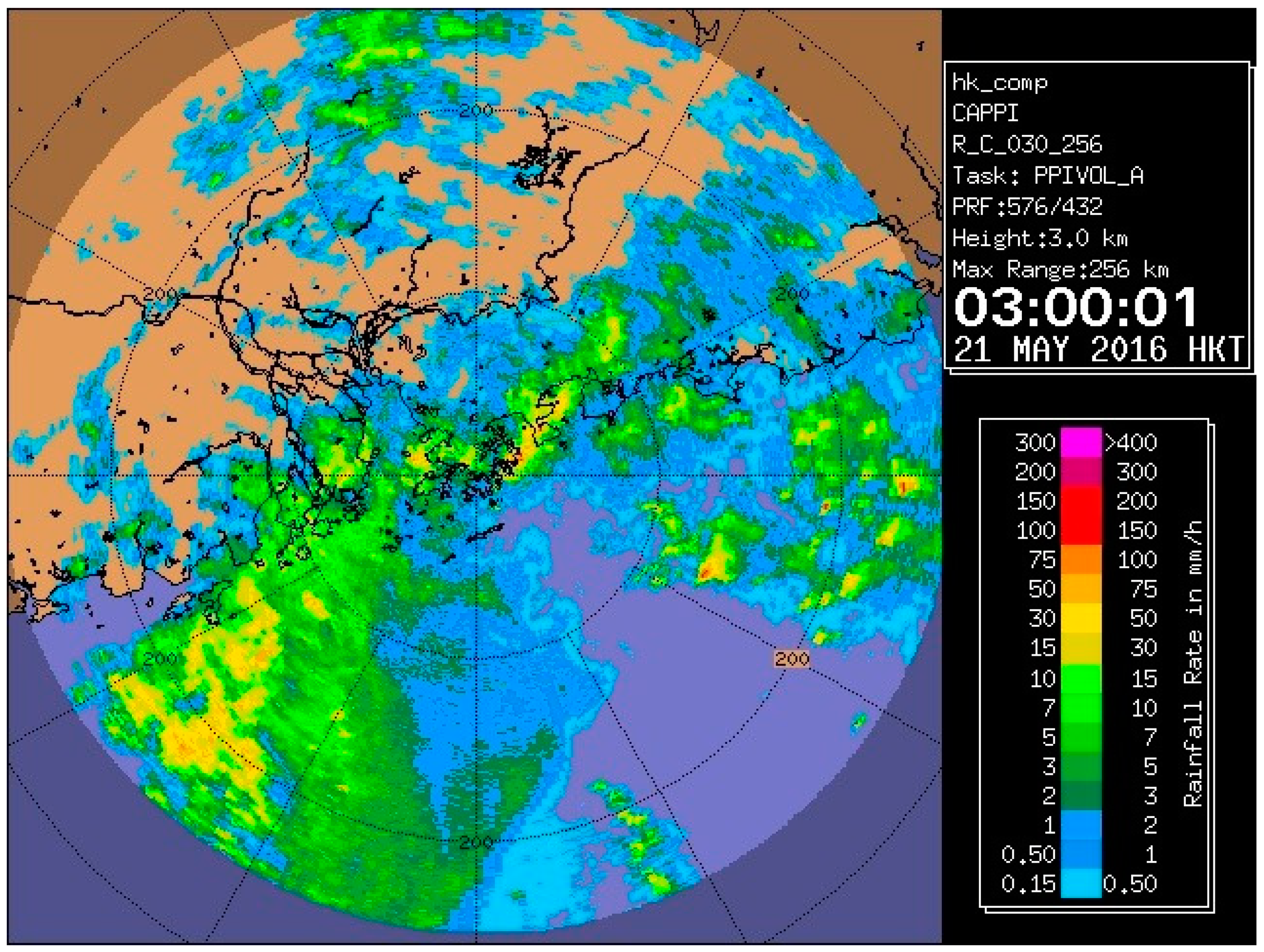

4.3. Diverged Performance with a Trough in Springtime

5. Conclusions and Further Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, P.W.; Lai, E.S.T. Short-range quantitative precipitation forecasting in Hong Kong. J. Hydrol. 2004, 288, 189–209.

- Climate of Hong Kong. Available online: https://www.weather.gov.hk/en/cis/climahk.htm (accessed on 6 February 2021).

- Climate Change in Hong Kong. Available online: https://www.hko.gov.hk/en/climate_change/obs_hk_rainfall.htm (accessed on 6 February 2021).

- Sun, Y.; Stein, M.L. A stochastic space-time model for intermittent precipitation occurrences. Ann. Appl. Stat. 2015, 9, 2110–2132.

- Rezacova, D.; Zacharov, P.; Sokol, Z. Uncertainty in the area-related QPF for heavy convective precipitation. Atmos. Res. 2009, 93, 238–246.

- Damrath, U.; Doms, G.; Fruhwald, D.; Heise, E.; Richter, B.; Steppeler, J. Operational quantitative precipitation forecasting at the German Weather Service. J. Hydrol. 2000, 239, 260–285.

- Li, P.W.; Lai, E.S.T. Applications of radar-based nowcasting techniques for mesoscale weather forecasting in Hong Kong. Meteor. Appl. 2004, 11, 253–264.

- Woo, W.C.; Wong, W.K. Operational application of optical flow techniques to radar-based rainfall nowcasting. Atmosphere 2017, 8, 48.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the 29th Conference on Neural Information Processing Systems (NIPS 2015), Montreal, QC, Canada, 10 December 2015; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; Available online: https://arxiv.org/abs/1506.04214 (accessed on 11 February 2021).

- Shi, X.; Gao, Z.; Lausen, L.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Deep Learning for Precipitation Nowcasting: A Benchmark and a New Model. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 5 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.

- Nguyen, M.; Nguyen, P.; Vo, T.; Hoang, L. Deep Neural Networks with Residual Connections for Precipitation Forecasting. In Proceedings of the CIKM '17: 2017 ACM International Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; Association for Computing Machinery: New York, NY, USA, 2017.

- Wu, K.; Shen, Y.; Wang, S. 3D Convolutional Neural Network for Regional Precipitation Nowcasting. J. Image Signal Process. 2018, 7, 200–212.

- Li, P.W.; Wong, W.K.; Lai, E.S.T. RAPIDS—A New Rainstorm Nowcasting System in Hong Kong. In Proceedings of the WMO/WWRP International Symposium on Nowcasting and Very-short-range Forecasting, Toulouse, France, 5–9 September 2005; The Hong Kong Observatory: Hong Kong, China, 2005.

- Wong, W.K.; Chan, P.W.; Ng, C.K. Aviation Applications of a New Generation of Mesoscale Numerical Weather Prediction System of the Hong Kong Observatory. In Proceedings of the 24th Conference on Weather and Forecasting/20th Conference on Numerical Weather Prediction, American Meteorological Society, Seattle, WA, USA, 24–27 January 2011; The Hong Kong Observatory: Hong Kong, China, 2011.

- Yessad, K.; Wedi, N.P. The Hydrostatic and Nonhydrostatic Global Model IFS/ARPEGE: Deep-Layer Model Formulation and Testing. In ECMWF Technical Memorandum; European Centre for Medium-Range Weather Forecasts (ECMWF): Reading, UK, 2011.

- Brown, J.D.; Seo, D.; Du, J. Verification of Precipitation Forecasts from NCEP’s Short-Range Ensemble Forecast (SREF) System with Reference to Ensemble Streamflow Prediction Using Lumped Hydrologic Models. J. Hydrometeor. 2012, 13, 808–836.

- Manzato, A.; Pucillo, A.; Cicogna, A. Improving ECMWF-based 6-hours maximum rain using instability indices and neural networks. Atmos. Res. 2019, 217, 184–197.

- Dong, Q. Calibration and Quantitative Forecast of Extreme Daily Precipitation Using the Extreme Forecast Index (EFI). J. Geosci. Environ. Prot. 2018, 6, 143–164.

- Raftery, A.E.; Gneiting, T.; Balabdaoui, F.; Polakowski, M. Using Bayesian Model Averaging to Calibrate Forecast Ensembles. Mon. Wea. Rev. 2005, 133, 1155–1174.

- Hamill, T.M.; Whitaker, J.S.; Wei, X. Ensemble Reforecasting: Improving Medium-Range Forecast Skill Using Retrospective Forecasts. Mon. Wea. Rev. 2004, 132, 1434–1447.

- Messner, J.W.; Mayr, G.J.; Wilks, D.S.; Zeileis, A. Extending Extended Logistic Regression: Extended versus Separate versus Ordered versus Censored. Mon. Wea. Rev. 2014, 142, 3003–3014.

- Voisin, N.; Schaake, J.C.; Lettenmaier, D.P. Calibration and downscaling methods for quantitative ensemble precipitation forecasts. Wea. Forecast. 2010, 25, 1603–1627.

- Zhu, Y.; Luo, Y. Precipitation Calibration Based on the Frequency-Matching Method. Wea. Forecast. 2015, 30, 1109–1124.

- Scheuerer, M.; Hamill, T.M. Statistical Postprocessing of Ensemble Precipitation Forecasts by Fitting Censored, Shifted Gamma Distributions. Mon. Wea. Rev. 2015, 143, 4578–4596.

- Wilk, M.B.; Gnanadesikan, R. Probability plotting methods for the analysis of data. Biometrika 1968, 55, 1–17.

- Japan Meteorological Agency. Chapter 4: Application Products of NWP. In Outline of the Operational Numerical Weather Prediction at the Japan Meteorological Agency; Japan Meteorological Agency: Tokyo, Japan, 2013.

- Li, K.K.; Chan, K.L.; Woo, W.C.; Cheng, T.L. Improving Performances of Short-Range Quantitative Precipitation Forecast through Calibration of Numerical Prediction Models. In Proceedings of the 28th Guangdong-Hong Kong-Macao Seminar on Meteorological Science and Technology, Hong Kong, China, 13–15 January 2014; The Hong Kong Observatory: Hong Kong, China, 2014.

- Donaldson, R.J.; Dyer, R.M.; Kraus, M.J. An Objective Evaluator of Techniques for Predicting Severe Weather Events, Preprints. In Proceedings of the Ninth Conference on Severe Local Storms, Norman, OK, USA, 21–23 October 1975; American Meteorological Society: Boston, MA, USA, 1975; pp. 321–326.

- Wilks, D.S. Statistical Methods in the Atmospheric Sciences, 4th ed.; Elsevier: Cambridge, MA, USA, 2019; pp. 369–483.

| f: sorted past forecasts, DMO (mm) | t: sorted past observations, calibrated value (mm) |
|---|---|
| 0.0 | 0.0 |
| 0.8 | 0.1 |
| 1.0 | 0.2 |
| 1.2 | 0.3 |
| 1.3 | 0.4 |
| 1.5 | 0.5 |
| 2.3 | 1.0 |
| 3.0 | 1.5 |
| 3.4 | 2.0 |
| 3.8 | 2.5 |
| 4.0 | 3.0 |
| 4.2 | 3.5 |
| 4.5 | 4.0 |
| 4.7 | 4.5 |
| 5.0 | 5.0 |
| 5.6 | 6.0 |
| 6.0 | 7.0 |
| 6.6 | 8.0 |
| 6.9 | 9.0 |
| 7.5 | 10.0 |
| 9.4 | 15.0 |
| 10.8 | 20.0 |
| 14.4 | 30.0 |
| 16.7 | 40.0 |
| 20.1 | 50.0 |
| 25.4 | 60.0 |
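The f-t table pairs sorted past forecasts f with sorted past observations t, so calibrating a new DMO value amounts to locating it in the f column and reading off the matching t. The Python sketch below illustrates this under stated assumptions: the hypothetical `build_ft_table` simply pairs two equal-length past samples by rank (a generic frequency-matching step), and the hypothetical `calibrate` interpolates linearly between table rows and clamps values outside the table range. Neither the interpolation nor the clamping is confirmed by the recovered text.

```python
from bisect import bisect_right

# f-t pairs transcribed from the table above (mm).
F_MM = [0.0, 0.8, 1.0, 1.2, 1.3, 1.5, 2.3, 3.0, 3.4, 3.8, 4.0, 4.2, 4.5,
        4.7, 5.0, 5.6, 6.0, 6.6, 6.9, 7.5, 9.4, 10.8, 14.4, 16.7, 20.1, 25.4]
T_MM = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0,
        4.5, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 15.0, 20.0, 30.0, 40.0, 50.0, 60.0]


def build_ft_table(past_forecasts, past_observations):
    """Hypothetical helper: pair sorted past forecasts with sorted past
    observations by rank (generic frequency matching). Assumes both
    samples have the same length; not necessarily the paper's procedure."""
    if len(past_forecasts) != len(past_observations):
        raise ValueError("samples must have equal length")
    return sorted(past_forecasts), sorted(past_observations)


def calibrate(dmo, f=F_MM, t=T_MM):
    """Hypothetical helper: map a DMO rainfall value (mm) to a calibrated
    value (mm). Assumes f is strictly increasing; linear interpolation
    between rows and clamping at both ends are illustrative assumptions."""
    if dmo <= f[0]:
        return t[0]
    if dmo >= f[-1]:
        return t[-1]
    i = bisect_right(f, dmo)  # now f[i - 1] <= dmo < f[i]
    w = (dmo - f[i - 1]) / (f[i] - f[i - 1])
    return t[i - 1] + w * (t[i] - t[i - 1])
```

Under these assumptions, a DMO of 1.0 mm maps to 0.2 mm and 10.8 mm maps to 20.0 mm, read straight from the table, while 12.6 mm lies halfway between the 10.8 and 14.4 mm rows and maps to about 25 mm. The table thus shrinks light DMO amounts and amplifies heavy ones.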

| | Event Observed: Yes | Event Observed: No |
|---|---|---|
| Event Forecast: Yes | a | b |
| Event Forecast: No | c | d |
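The 2 × 2 contingency table counts hits (a), false alarms (b), misses (c) and correct negatives (d) for a yes/no event, for instance rainfall exceeding a chosen threshold. The sketch below computes standard categorical scores from these counts using the usual textbook definitions (as in Wilks); which of these scores the paper actually reports is not recoverable from this text, and the class name `ContingencyTable` and the example counts are hypothetical.

```python
import math
from dataclasses import dataclass


def _ratio(num, den):
    """Return num / den, or NaN when the denominator is zero."""
    return num / den if den else math.nan


@dataclass(frozen=True)
class ContingencyTable:
    a: int  # hits: event forecast and observed
    b: int  # false alarms: event forecast but not observed
    c: int  # misses: event observed but not forecast
    d: int  # correct negatives: event neither forecast nor observed

    @property
    def n(self):
        return self.a + self.b + self.c + self.d

    def pod(self):
        """Probability of detection: a / (a + c)."""
        return _ratio(self.a, self.a + self.c)

    def far(self):
        """False alarm ratio: b / (a + b)."""
        return _ratio(self.b, self.a + self.b)

    def csi(self):
        """Critical success index (threat score): a / (a + b + c)."""
        return _ratio(self.a, self.a + self.b + self.c)

    def frequency_bias(self):
        """Forecast-to-observed event frequency: (a + b) / (a + c)."""
        return _ratio(self.a + self.b, self.a + self.c)

    def ets(self):
        """Equitable threat score, discounting hits expected by chance."""
        a_random = _ratio((self.a + self.b) * (self.a + self.c), self.n)
        return _ratio(self.a - a_random, self.a + self.b + self.c - a_random)
```

For hypothetical counts a = 20, b = 10, c = 5, d = 65, this gives POD = 0.8, FAR ≈ 0.33, CSI ≈ 0.57, frequency bias = 1.2 and ETS ≈ 0.45. Returning NaN for empty denominators avoids division errors when an event never occurs in a sample.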

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chong, M.-L.; Wong, Y.-C.; Woo, W.-C.; Tai, A.P.K.; Wong, W.-K. Calibration of High-Impact Short-Range Quantitative Precipitation Forecast through Frequency-Matching Techniques. Atmosphere 2021, 12, 247. https://doi.org/10.3390/atmos12020247
