Clothing-Agnostic Pre-Inpainting Virtual Try-On

Abstract

1. Introduction

2. Related Work

2.1. Evaluation of Generative Models

2.2. Virtual Try-On Models

3. Proposed Method

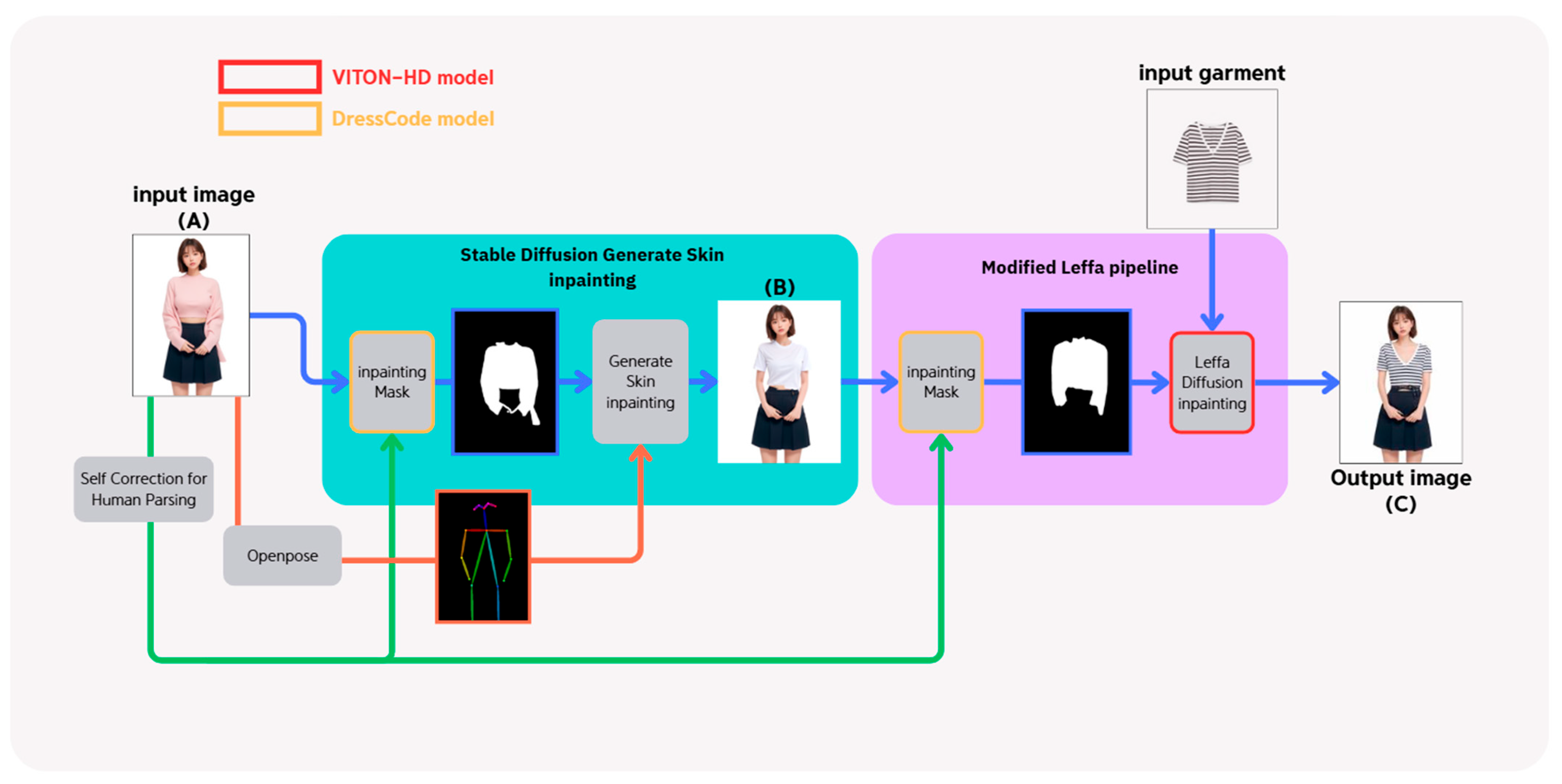

3.1. System Flow: Concept of CaP-VTON

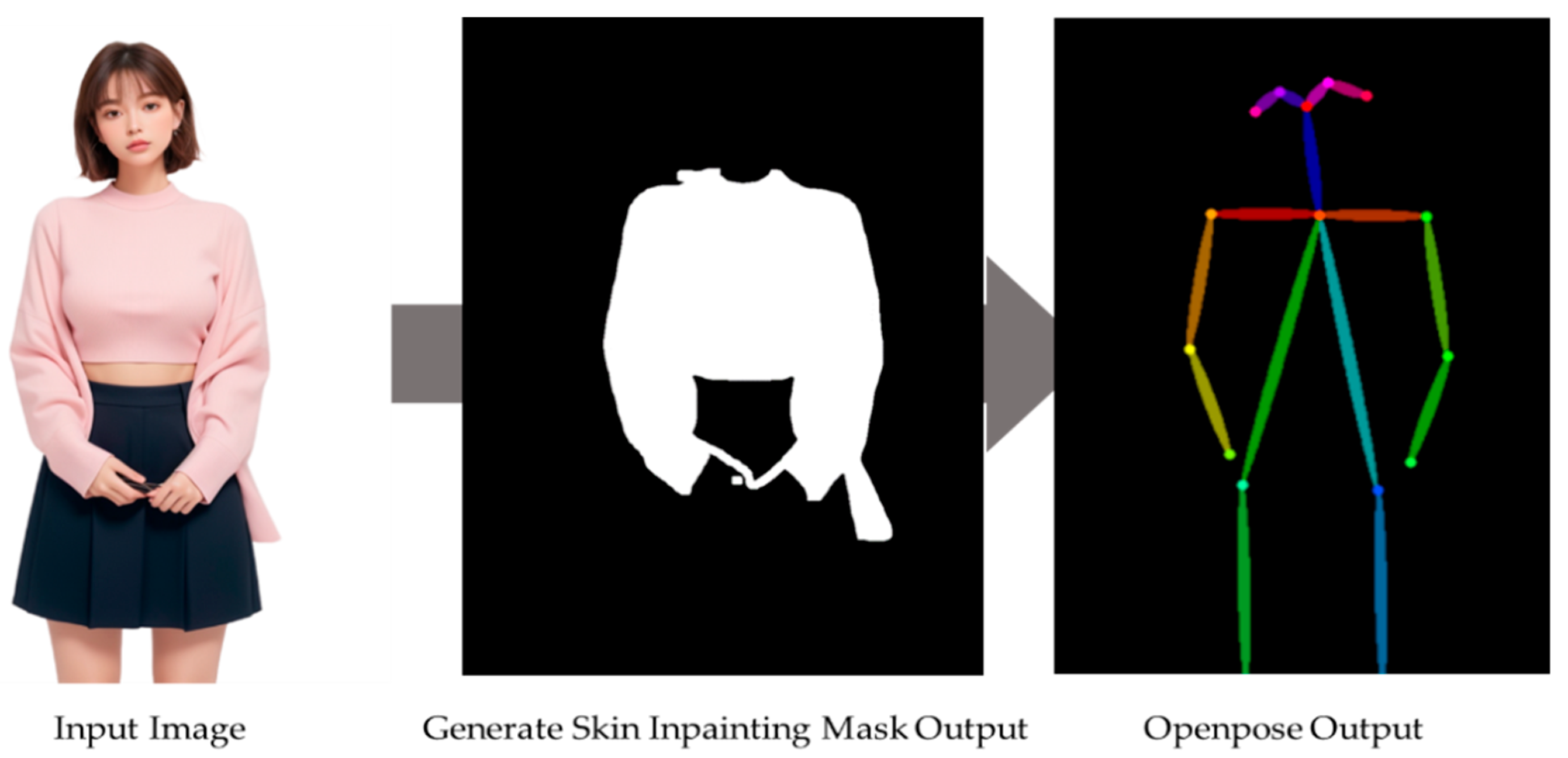

3.2. Stable Diffusion Generate Skin Inpainting: Removing the Existing Clothing Silhouette

3.2.1. Generating Input Information to Build the Synthetic Model

3.2.2. majicMIX Realistic Model-Based High-Quality Skin Synthesis

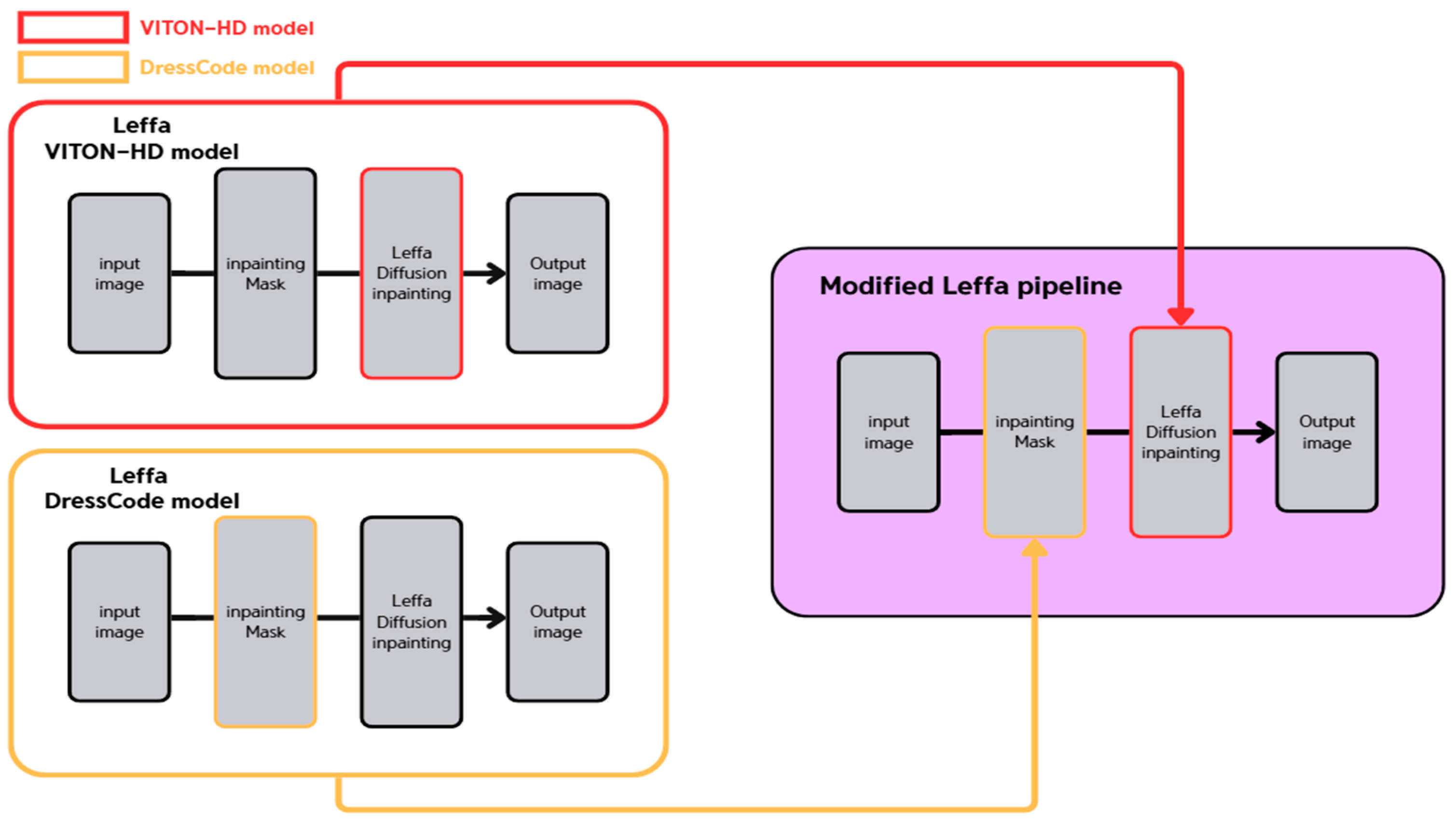

3.3. Modified Leffa Pipeline: Multi-Category Support Based on DressCode

4. Experimental Results

4.1. Experimental Environment

4.2. Results

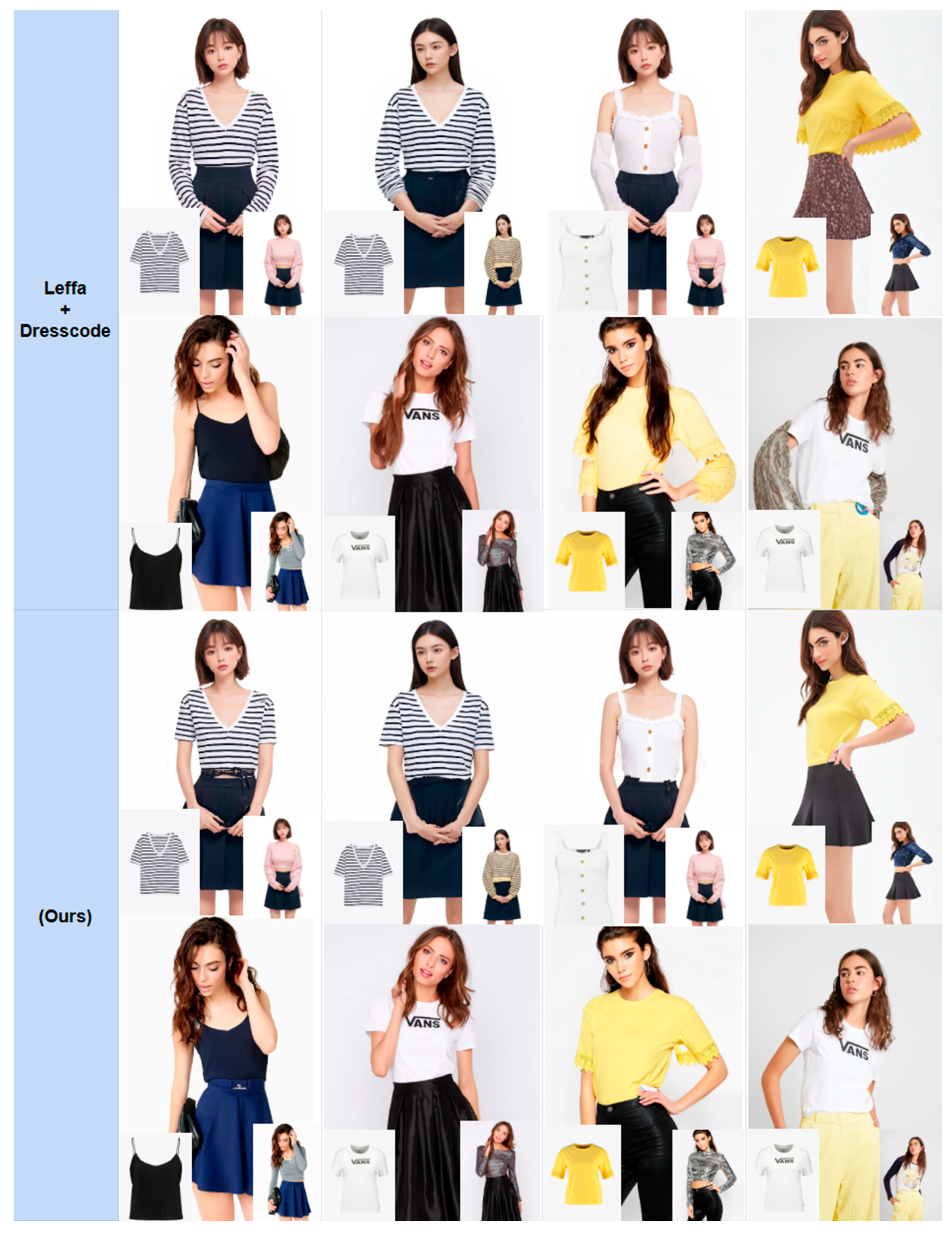

4.2.1. Visual Comparison of the Synthesis Results

4.2.2. Quantitative Comparison of Image Synthesis Quality

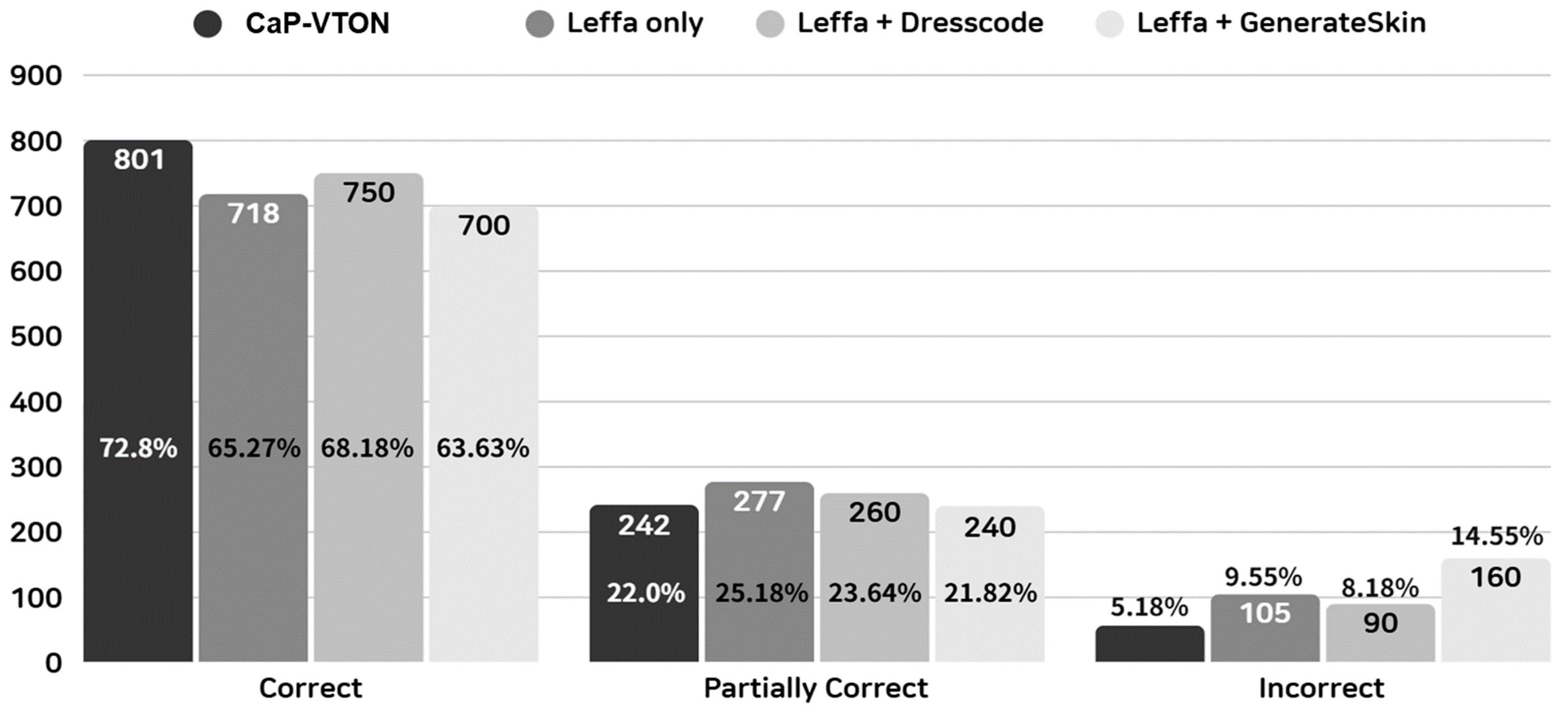

4.2.3. Ablation Study

4.2.4. Evaluation Based on Short-Sleeve Synthesis Probability

5. Discussion

5.1. Improvements to the Entire Pipeline

5.2. Support for Replacement Across Different Clothing Types

5.3. Clothing-Agnostic Properties

5.4. Limitations of FID and Its Interpretation in Virtual Try-On

5.5. Limitations of Our Model

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhou, Z.; Liu, S.; Han, X.; Liu, H.; Ng, K.W.; Xie, T.; Cong, Y.; Li, H.; Xu, M.; Pérez-Rúa, J.M.; et al. Learning Flow Fields in Attention for Controllable Person Image Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 10–17 June 2025; pp. 2491–2501.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 6626–6637.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

- Wang, B.; Zheng, H.; Liang, X.; Chen, Y.; Lin, L. Toward Characteristic-Preserving Image-Based Virtual Try-On Network (CP-VTON). In Computer Vision – ECCV 2018. Part XIII; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 607–623.

- Choi, S.; Park, S.; Lee, M.; Choo, J. VITON-HD: High-Resolution Virtual Try-On via Misalignment-Aware Normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14131–14140.

- Yang, H.; Min, S.; Chen, X.; Wang, Y.; Lin, L. Towards Photo-Realistic Virtual Try-On by Adaptively Generating-Preserving Image Content (ACGPN). In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11854–11863.

- Lee, J.; Kim, S.; Kim, D.; Sohn, K. HR-VITON: High-Resolution Virtual Try-On via Joint Layout and Texture Learning. In Computer Vision – ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 280–296.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

- Kim, J.; Gu, G.; Park, M.; Park, S.; Choo, J. StableVITON: Learning Semantic Correspondence with Latent Diffusion Model for Virtual Try-On. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 8176–8185.

- Choi, Y.; Kwak, S.; Lee, K.; Choi, H.; Shin, J. IDM-VTON: Improving Diffusion Models for Authentic Virtual Try-On in the Wild. arXiv 2024, arXiv:2403.05139.

- Morelli, D.; Baldrati, A.; Cartella, G.; Cornia, M.; Bertini, M.; Cucchiara, R. LaDI-VTON: Latent Diffusion Textual-Inversion Enhanced Virtual Try-On. In Proceedings of the ACM International Conference on Multimedia (ACM MM), Ottawa, ON, Canada, 27 October–3 November 2023.

- Xu, Y.; Gu, T.; Chen, W.; Chen, C. OOTDiffusion: Outfitting Fusion Based Latent Diffusion for Controllable Virtual Try-On. arXiv 2024, arXiv:2403.01779.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems (NeurIPS); Curran Associates, Inc.: Red Hook, NY, USA, 2017.

- Chong, Z.; Dong, X.; Li, H.; Zhang, S.; Zhang, W.; Zhang, X.; Zhao, H.; Jiang, D.; Liang, X. CatVTON: Concatenation Is All You Need for Virtual Try-On with Diffusion Models. In Proceedings of the International Conference on Learning Representations (ICLR), Singapore, 24–28 April 2025.

- Zhu, L.; Yang, D.; Zhu, T.; Reda, F.; Chan, W.; Saharia, C.; Norouzi, M.; Kemelmacher-Shlizerman, I. TryOnDiffusion: A Tale of Two UNets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 4606–4615. Available online: https://arxiv.org/abs/2306.08276 (accessed on 10 July 2025).

- Zhang, K.; Sun, M.; Sun, J.; Zhao, B.; Zhang, K.; Sun, Z.; Tan, T. HumanDiffusion: A Coarse-To-Fine Alignment Diffusion Framework for Controllable Text-Driven Person Image Generation. 2023. Available online: https://arxiv.org/abs/2211.06235 (accessed on 10 July 2025).

- Li, P.; Song, G.; Zhang, Y.; Tong, Z.; Wei, X.; Liu, Y.; Yang, X. Self-Correction for Human Parsing. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4213–4225.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Zhang, L.; Agrawala, M. Adding Conditional Control to Text-to-Image Diffusion Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3813–3824.

- Merjic. “majicMIX Realistic v7: Stable Diffusion Checkpoint Merge”. HuggingFace, 2023. Available online: https://huggingface.co/imagepipeline/MajicMIX-realistic (accessed on 25 November 2025).

| Model | Paired FID ↓ | Paired SSIM ↑ | Paired LPIPS ↓ | Unpaired FID ↓ |
|---|---|---|---|---|
| VITON-HD | 4.54 | 0.899 | 0.048 | 8.52 |
| DressCode | 5.05 | 0.949 | 0.021 | 10.73 |
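FID, as defined by Heusel et al., embeds real and generated images with an Inception-v3 network, fits a Gaussian to each set of embeddings, and reports the Fréchet distance between the two Gaussians: FID = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). The following Python sketch implements only that final distance step with NumPy and SciPy, assuming the embeddings have already been extracted into `(num_images, feature_dim)` arrays. The names `frechet_distance` and `fid_from_features` are hypothetical helpers, not the CaP-VTON evaluation code or the API of any FID package. Because absolute FID depends on the feature extractor, image resizing, and sample count, the sketch is not expected to reproduce the values in these tables.

```python
import numpy as np
from scipy import linalg


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    sigma1, sigma2 = np.atleast_2d(sigma1), np.atleast_2d(sigma2)
    diff = mu1 - mu2
    # Principal matrix square root of Sigma1 @ Sigma2; round-off can leave a
    # negligible imaginary part, which is discarded here.
    covmean = linalg.sqrtm(sigma1 @ sigma2)
    if np.iscomplexobj(covmean):
        covmean = covmean.real
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * np.trace(covmean))


def fid_from_features(feats_real, feats_gen):
    """FID from two (num_images, feature_dim) arrays of precomputed embeddings."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    # rowvar=False: each row is one image, each column one feature dimension
    sigma_r = np.cov(feats_real, rowvar=False)
    sigma_g = np.cov(feats_gen, rowvar=False)
    return frechet_distance(mu_r, sigma_r, mu_g, sigma_g)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(500, 8))  # toy stand-in for Inception features
    print(fid_from_features(real, real))        # identical sets: approximately 0
    print(fid_from_features(real, real + 1.0))  # mean shifted by 1 in 8 dims: approximately 8
```

In the toy run, shifting every feature by 1.0 leaves the covariances unchanged, so the score reduces to the mean term ‖μ_r − μ_g‖² = 8.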

| Model | Paired FID ↓ | Paired SSIM ↑ | Paired LPIPS ↓ | Unpaired FID ↓ |
|---|---|---|---|---|
| CatVTON | 5.42 | 0.870 | 0.057 | 9.02 |
| IDM-VTON | 5.76 | 0.850 | 0.063 | 9.84 |
| OOTDiffusion | 9.30 | 0.819 | 0.088 | 12.41 |
| Leffa | 4.54 | 0.899 | 0.048 | 8.52 |
| CaP-VTON (Ours) | 6.55 | 0.897 | 0.062 | 11.28 |
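SSIM and LPIPS appear only in the paired columns because they compare each output against a ground-truth photo, which exists only when the target garment is the one the person is already wearing. SSIM (Wang et al.) compares local luminance, contrast, and structure; at each position SSIM = ((2μ_xμ_y + C1)(2σ_xy + C2)) / ((μ_x² + μ_y² + C1)(σ_x² + σ_y² + C2)), with C1 = (0.01·L)² and C2 = (0.03·L)² for dynamic range L, and the image score is the mean of this map. The Python sketch below assumes 2-D grayscale images and uses a Gaussian weighting window (σ = 1.5, truncated to 11 × 11, as in Wang et al.) via `scipy.ndimage.gaussian_filter`. The function name `ssim` is illustrative; border handling and colour treatment differ between libraries, so this shows how the metric works rather than the evaluation code behind the reported values. LPIPS needs a pretrained network and is not sketched.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def ssim(x, y, data_range=255.0, sigma=1.5, k1=0.01, k2=0.03):
    """Mean SSIM between two same-shaped 2-D grayscale images."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape or x.ndim != 2:
        raise ValueError("expected two 2-D images of identical shape")
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    # truncate=3.5 with sigma=1.5 yields an 11 x 11 Gaussian window
    def blur(img):
        return gaussian_filter(img, sigma=sigma, truncate=3.5)

    mu_x, mu_y = blur(x), blur(y)
    # Gaussian-weighted local variances and covariance
    var_x = blur(x * x) - mu_x ** 2
    var_y = blur(y * y) - mu_y ** 2
    cov_xy = blur(x * y) - mu_x * mu_y

    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
    noisy = img + rng.normal(0.0, 5.0, size=img.shape)
    print(ssim(img, img))    # identical images: 1.0
    print(ssim(img, noisy))  # mild noise: slightly below 1
```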

| Model | Paired FID ↓ | Paired SSIM ↑ | Paired LPIPS ↓ | Unpaired FID ↓ |
|---|---|---|---|---|
| Leffa only | 4.54 | 0.899 | 0.048 | 8.52 |
| Leffa-DressCode | 5.05 | 0.949 | 0.021 | 10.73 |
| Leffa-Generate Skin | 8.77 | 0.7284 | 0.089 | 17.14 |
| CaP-VTON (Ours) | 6.55 | 0.8966 | 0.0617 | 11.28 |
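The ablation rows can be read per metric once each metric's direction is fixed (FID and LPIPS: lower is better; SSIM: higher is better). The short Python sketch below transcribes the four rows of the ablation table as printed and computes the best variant per column and the percentage change of one variant relative to another; `ABLATION`, `best_per_metric`, and `relative_change` are illustrative names only. With these values, CaP-VTON improves on Leffa-Generate Skin in all four columns (for example, paired FID 8.77 → 6.55, about 25% lower), while Leffa only (both FID columns) and Leffa-DressCode (SSIM and LPIPS) hold the best values on these metrics.

```python
# Values transcribed from the ablation table:
# (paired FID, paired SSIM, paired LPIPS, unpaired FID)
ABLATION = {
    "Leffa only": (4.54, 0.899, 0.048, 8.52),
    "Leffa-DressCode": (5.05, 0.949, 0.021, 10.73),
    "Leffa-Generate Skin": (8.77, 0.7284, 0.089, 17.14),
    "CaP-VTON (Ours)": (6.55, 0.8966, 0.0617, 11.28),
}
METRICS = ("Paired FID", "Paired SSIM", "Paired LPIPS", "Unpaired FID")
LOWER_IS_BETTER = (True, False, True, True)


def best_per_metric():
    """Name of the best variant for each metric, respecting its direction."""
    best = {}
    for i, (name, lower) in enumerate(zip(METRICS, LOWER_IS_BETTER)):
        pick = min if lower else max
        best[name] = pick(ABLATION, key=lambda variant: ABLATION[variant][i])
    return best


def relative_change(variant, baseline):
    """Percent change of each metric of `variant` w.r.t. `baseline`,
    paired with whether that change is an improvement."""
    out = {}
    for name, v, b, lower in zip(METRICS, ABLATION[variant], ABLATION[baseline], LOWER_IS_BETTER):
        pct = 100.0 * (v - b) / b
        improved = v < b if lower else v > b
        out[name] = (pct, improved)
    return out


if __name__ == "__main__":
    print(best_per_metric())
    for metric, (pct, improved) in relative_change("CaP-VTON (Ours)", "Leffa-Generate Skin").items():
        print(f"{metric}: {pct:+.1f}% ({'better' if improved else 'worse'})")
```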

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, S.; Lee, H.J.; Lee, J.; Lee, T. Clothing-Agnostic Pre-Inpainting Virtual Try-On. Electronics 2025, 14, 4710. https://doi.org/10.3390/electronics14234710