1. Introduction

Urban environments are dynamic systems that undergo continuous structural transformations due to construction, demolition, and vertical development. Monitoring these changes is essential for effective urban planning, infrastructure management, environmental assessment, and disaster response. Remote sensing techniques have long been used for urban change detection, providing an efficient means to analyze spatial and temporal variations over large areas. With the growing availability of high-resolution satellite and aerial imagery, detecting subtle urban transformations has become increasingly feasible [1].

Traditional two-dimensional (2D) change detection methods, such as image differencing, principal component analysis (PCA), and change vector analysis (CVA), primarily rely on spectral information. While these approaches can identify surface-level changes, they often fail to capture vertical modifications—such as building height variations—that are common in dense urban environments. The absence of elevation information can lead to misclassifications, especially in areas where spectral similarity between different structures is high [2].

To overcome these limitations, three-dimensional (3D) change detection methods have gained attention by incorporating Digital Surface Models (DSMs) alongside optical imagery [3]. DSMs provide crucial elevation information that reflects structural characteristics of the built environment, enabling a more complete understanding of urban dynamics. However, traditional pixel-based or rule-based 3D approaches often struggle with complex spatial patterns and noise in high-resolution data.

Recent advances in deep learning, particularly Convolutional Neural Networks (CNNs), have demonstrated remarkable potential for automatic feature extraction and pattern recognition in remote sensing [4]. Encoder–decoder architectures such as UNet and its variants have shown strong performance in semantic segmentation tasks, enabling models to learn both local and contextual information. Building on this progress, hybrid architectures that combine robust encoders like ResNet with advanced decoders such as UNet++ [5] have been introduced to enhance feature propagation and capture fine structural details.

In this study, we propose a deep learning-based framework for 3D urban change detection that jointly utilizes multi-temporal optical imagery and DSM data. The approach is implemented in two stages: binary change detection to distinguish change from no-change areas for comparison with classical methods, and multi-class detection to classify specific change types, including new construction, complete destruction, building height increase, and height decrease. By integrating spectral and elevation information within a unified CNN framework, this study aims to achieve more accurate and detailed detection of urban structural changes.

2. Methods

2.1. Dataset Description

The dataset used in this study is the 3DCD dataset [6], which contains paired Digital Surface Model (DSM) and RGB imagery acquired at two different time points: 2010 and 2017. It consists of 423 image patches, each with a spatial resolution of 0.5 m and a size of 400 × 400 pixels. To ensure compatibility with the deep learning framework, all patches were resampled to 512 × 512 pixels. Data augmentation techniques, including rotation, flipping, and Gaussian blur, were applied to increase data diversity and reduce overfitting.
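The resampling and augmentation steps can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the paper does not state the resampling kernel, so nearest-neighbour interpolation is used here (which is also what a label map requires), and the blur probability and sigma range are hypothetical. Rotations and flips are applied identically to the inputs and the labels, while the blur touches only the inputs.

```python
import numpy as np


def resize_nearest(arr: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resampling of an (H, W) or (C, H, W) array."""
    h, w = arr.shape[-2:]
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    return arr[..., rows[:, None], cols[None, :]]


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of every channel of a (C, H, W) array."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    _, h, w = img.shape
    padded = np.pad(img.astype(float), ((0, 0), (r, r), (r, r)), mode="reflect")
    # Horizontal pass: weighted sum of column-shifted copies -> (C, H + 2r, W)
    horiz = sum(k[i] * padded[:, :, i:i + w] for i in range(len(k)))
    # Vertical pass: weighted sum of row-shifted copies -> (C, H, W)
    return sum(k[i] * horiz[:, i:i + h, :] for i in range(len(k)))


def augment(x: np.ndarray, y: np.ndarray, rng: np.random.Generator):
    """Random rotation/flip applied jointly to inputs x (C, H, W) and labels y (H, W).
    Gaussian blur is applied to the inputs only, never to the labels."""
    k = int(rng.integers(4))                  # rotate by 0, 90, 180 or 270 degrees
    x = np.rot90(x, k, axes=(1, 2))
    y = np.rot90(y, k, axes=(0, 1))
    if rng.random() < 0.5:                    # horizontal flip
        x, y = x[:, :, ::-1], y[:, ::-1]
    if rng.random() < 0.5:                    # hypothetical blur probability and sigma range
        x = gaussian_blur(x, sigma=rng.uniform(0.5, 1.5))
    return np.ascontiguousarray(x), np.ascontiguousarray(y)
```

For the RGB and DSM bands, bilinear or cubic resampling would usually be preferred in practice, keeping nearest-neighbour only for the label maps.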

At the first stage of processing, the ground truth data were converted into a binary change map that distinguishes change from no-change areas, focusing specifically on building-related changes such as new constructions, demolitions, and height alterations. The inputs to the proposed method are therefore the orthophotos and DSMs of both dates.
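Assuming the ground-truth raster encodes no-change as 0 (which holds both for a class-coded label map and for an elevation-difference map), the conversion to a binary change map reduces to a test on the absolute label value. The `tol` parameter is a hypothetical addition for suppressing small elevation differences; with its default of 0, every non-zero label counts as change.

```python
import numpy as np


def to_binary_change_map(gt: np.ndarray, no_change_value: float = 0, tol: float = 0.0) -> np.ndarray:
    """Return 1 where the label differs from the no-change value by more than tol, else 0.

    Assumption: no-change is encoded as `no_change_value` (0 by default).
    Works for integer class codes and for continuous elevation differences.
    """
    return (np.abs(gt.astype(float) - no_change_value) > tol).astype(np.uint8)
```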

2.2. Proposed Method

The proposed deep learning framework combines a ResNet34 [7] encoder with a UNet++ [8] decoder to capture both low-level and high-level spatial features. The encoder extracts hierarchical feature representations from the input data, while the decoder reconstructs detailed spatial structures, ensuring precise delineation of change boundaries. The network input consists of stacked bands from both time points, including RGB and DSM layers.
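A minimal sketch of how the two dates might be stacked into a single 8-channel input (three RGB bands plus one DSM band per date). The channel order and the scaling are assumptions, since the paper states only that RGB and DSM layers from both dates are stacked. The DSMs are normalised with statistics shared across both dates so that the height difference, the signal that matters for vertical change, is not removed by per-date normalisation.

```python
import numpy as np


def stack_inputs(rgb_t1, dsm_t1, rgb_t2, dsm_t2) -> np.ndarray:
    """Build an (8, H, W) network input from two dates of RGB (3, H, W) and DSM (H, W).

    Assumptions (not stated in the paper): 8-bit RGB, channel order
    [RGB_2010, DSM_2010, RGB_2017, DSM_2017], and z-score scaling of the DSMs.
    """
    rgb1 = rgb_t1.astype(np.float32) / 255.0
    rgb2 = rgb_t2.astype(np.float32) / 255.0
    dsm = np.stack([dsm_t1, dsm_t2]).astype(np.float32)
    # One mean/std shared by both dates, so a height change between 2010 and
    # 2017 survives normalisation instead of being scaled away per date.
    dsm = (dsm - dsm.mean()) / (dsm.std() + 1e-6)
    return np.concatenate([rgb1, dsm[:1], rgb2, dsm[1:]], axis=0)
```

An array of this shape could then be passed to a ResNet34–UNet++ network; for example, the `segmentation_models_pytorch` library provides `UnetPlusPlus(encoder_name="resnet34", in_channels=8, classes=1)`. The paper does not state which implementation was used.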

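Training was conducted using the Adam optimizer for efficient parameter updates and faster convergence. The Dice loss function was employed to handle class imbalance and to directly optimize segmentation overlap [9]. Dice loss is defined as:

$$\mathcal{L}_{\mathrm{Dice}} = 1 - \frac{2\sum_{i=1}^{N} p_i g_i}{\sum_{i=1}^{N} p_i + \sum_{i=1}^{N} g_i}$$

where $N$ is the number of pixels, $p_i \in [0, 1]$ is the predicted change probability of pixel $i$, and $g_i \in \{0, 1\}$ is its ground-truth label.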
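The soft Dice loss can be written in a few lines of NumPy. This sketch implements the standard soft Dice loss for a single change class and, for the multi-class stage, averages it over one-hot encoded classes; the smoothing constant `eps` and the per-class averaging are assumptions, since the paper describes neither.

```python
import numpy as np


def dice_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """Soft Dice loss, 1 - 2*sum(p*g) / (sum(p) + sum(g)).

    pred: predicted change probabilities in [0, 1]; target: binary ground truth.
    eps is an assumed smoothing term that keeps the ratio defined (and the
    loss at 0) on patches where both prediction and ground truth are empty.
    """
    p = pred.astype(float).ravel()
    g = target.astype(float).ravel()
    intersection = np.sum(p * g)
    return float(1.0 - (2.0 * intersection + eps) / (p.sum() + g.sum() + eps))


def multiclass_dice_loss(probs: np.ndarray, labels: np.ndarray, eps: float = 1e-6) -> float:
    """Mean per-class Dice loss; probs: (K, H, W) softmax output, labels: (H, W) class ids."""
    num_classes = probs.shape[0]
    onehot = np.eye(num_classes)[labels].transpose(2, 0, 1)   # (K, H, W)
    return float(np.mean([dice_loss(probs[c], onehot[c], eps) for c in range(num_classes)]))
```

During training, the same expression would be written with tensor operations (e.g. in PyTorch) so that it is differentiable with respect to the network outputs.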

All change detection methods initially produce a binary map. This map is subsequently refined into a multi-class change map by applying the PCA–KMeans procedure to both the DSM and RGB data.
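The refinement of the binary map into change types could look like the following sketch, assuming the per-pixel features are the RGB and DSM differences between the two dates, standardised before PCA. The feature choice, the number of components, the number of clusters (`k=4`, one per change type) and the simple single-start Lloyd's K-Means loop are all assumptions; the paper names only PCA–KMeans applied to DSM and RGB data.

```python
import numpy as np


def pca_project(X: np.ndarray, n_components: int) -> np.ndarray:
    """Project rows of X onto their first principal components (via SVD)."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:n_components].T


def kmeans(X: np.ndarray, k: int, n_iter: int = 100, seed: int = 0):
    """Plain Lloyd's K-Means with random initial centres (single start)."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        # Keep the old centre if a cluster ends up empty
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


def binary_to_multiclass(change_mask, rgb_t1, rgb_t2, dsm_t1, dsm_t2, k=4, n_components=3):
    """Split changed pixels into k clusters; 0 stays no-change, clusters become 1..k.
    Requires at least k changed pixels."""
    mask = change_mask.astype(bool)
    diff = np.concatenate([rgb_t2.astype(float) - rgb_t1.astype(float),
                           (dsm_t2.astype(float) - dsm_t1.astype(float))[None]], axis=0)  # (4, H, W)
    X = diff[:, mask].T                                   # (n_changed, 4) feature vectors
    X = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-6)     # standardise each feature
    Z = pca_project(X, n_components)
    labels, _ = kmeans(Z, k)
    out = np.zeros(mask.shape, dtype=np.int64)
    out[mask] = labels + 1
    return out
```

The resulting cluster ids are unordered: mapping them to new construction, destruction, height increase, or height decrease would still require inspecting, for example, the sign and size of the mean DSM difference in each cluster. A library implementation such as scikit-learn's `KMeans` with several initialisations would be more robust than this single-start loop.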

2.3. Comparative Methods

To evaluate the performance of the proposed approach, it was compared with three classical change detection methods:

- Change Vector Analysis (CVA), which measures spectral differences between the two dates.
- PCA–KMeans, which applies Principal Component Analysis (PCA) to the orthophotos to reduce data dimensionality and then uses K-Means clustering to classify the result into change and no-change.
- Random Forest, a machine learning classifier that uses an ensemble of decision trees to identify changed and unchanged pixels.
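Of the three baselines, CVA is the simplest to illustrate. The sketch below computes the magnitude of the per-pixel spectral change vector over the image bands and separates change from no-change with Otsu's threshold. The thresholding rule is an assumption, since the paper does not say how its CVA map was binarised.

```python
import numpy as np


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Otsu's threshold: the histogram split that maximises between-class variance."""
    hist, edges = np.histogram(values.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                    # pixel count in bins 0..i
    w1 = w0[-1] - w0                        # pixel count in bins i+1..end
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return float(edges[np.argmax(between) + 1])   # upper edge of the best split bin


def cva(img_t1: np.ndarray, img_t2: np.ndarray):
    """Change Vector Analysis on (C, H, W) images: magnitude of the per-pixel
    spectral difference vector, binarised with Otsu's threshold (assumed rule)."""
    diff = img_t2.astype(float) - img_t1.astype(float)
    magnitude = np.sqrt((diff ** 2).sum(axis=0))
    change_map = (magnitude >= otsu_threshold(magnitude)).astype(np.uint8)
    return change_map, magnitude
```

Because the magnitude responds to any spectral difference, shadows, illumination, or vegetation changes raise it just as building changes do, which is consistent with the false detections reported for CVA in the results.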

2.4. Evaluation Metrics

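Model performance was assessed using overall accuracy, recall, and F1-score. Accuracy is the proportion of correctly classified pixels, recall is the proportion of true change pixels that were detected, and the F1-score, the harmonic mean of precision and recall, balances missed detections against false alarms.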
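The three metrics follow from the pixel-level confusion counts. The sketch below covers the binary case only (how the multi-class scores were averaged is not stated in the paper) and also shows why accuracy alone can be misleading on change maps, where unchanged pixels usually dominate.

```python
import numpy as np


def binary_metrics(pred: np.ndarray, target: np.ndarray) -> dict:
    """Pixel-level accuracy, precision, recall and F1 for a binary change map (1 = change)."""
    p = pred.astype(bool).ravel()
    g = target.astype(bool).ravel()
    tp = int(np.sum(p & g))      # change correctly detected
    tn = int(np.sum(~p & ~g))    # no-change correctly rejected
    fp = int(np.sum(p & ~g))     # false alarm
    fn = int(np.sum(~p & g))     # missed change
    accuracy = (tp + tn) / p.size
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For instance, predicting "no change" everywhere on a patch in which 5% of the pixels changed yields an accuracy of 0.95 but a recall of 0, which is why recall and F1-score are reported alongside accuracy.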

3. Results and Discussion

The proposed framework was evaluated in two stages: binary change detection for comparison with classical methods, and multi-class change detection for detailed structural interpretation. In the first stage, the performance of the proposed ResNet34–UNet++ model was compared against three traditional methods: Change Vector Analysis (CVA), PCA–KMeans clustering, and the Random Forest classifier. The quantitative results of the binary change detection experiment are presented in Table 1, which reports the overall accuracy, recall, and F1-score of each method.

The results indicate that the proposed deep learning model substantially outperformed all traditional approaches across all evaluation metrics. The most notable difference appears in recall: the proposed method achieved 0.9829, meaning that nearly all change areas were detected and very few were missed. In contrast, traditional approaches such as CVA and PCA–KMeans showed significantly lower recall, indicating that many true change areas went undetected. This weakness is particularly evident in complex urban environments, where spectral variations alone are insufficient to capture structural transformations.

While PCA–KMeans exhibited moderate recall, its low accuracy indicates that it often misclassified areas unrelated to meaningful structural changes—such as seasonal variations in vegetation or shadows—as change. Similarly, CVA was sensitive to spectral differences but failed to distinguish between real building-related changes and irrelevant variations, producing a high number of false detections in non-urban regions. The Random Forest method achieved relatively high accuracy (0.9125), suggesting that it correctly avoided labeling many unchanged areas. However, its low F1-score and recall reveal that it struggled to delineate the full spatial extent of actual changes, especially when building alterations occurred gradually or partially within a patch.

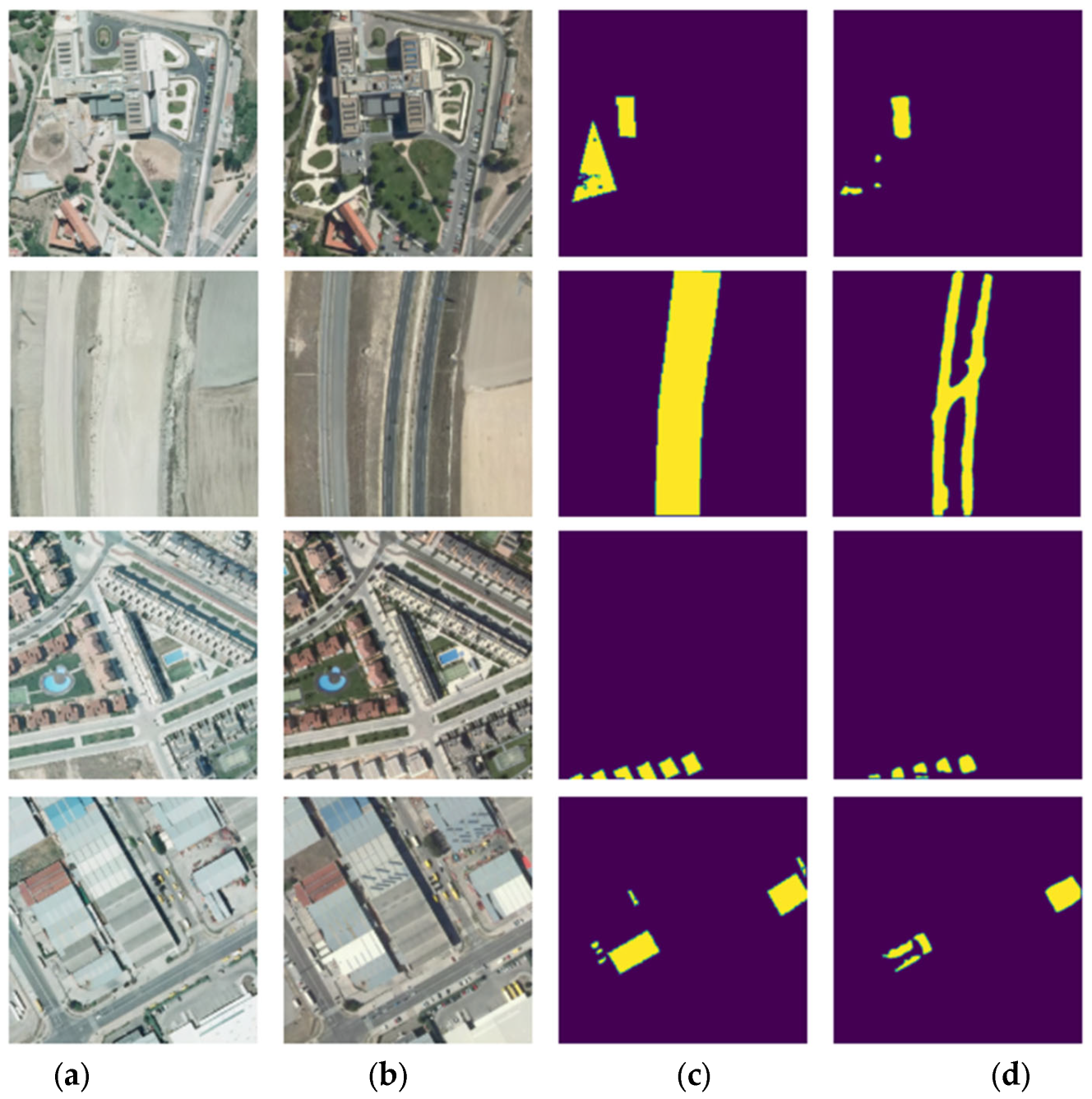

In contrast, the proposed ResNet34–UNet++ framework achieved both high recall and high accuracy, demonstrating its ability to capture nearly all true change regions while maintaining strong discrimination against irrelevant areas. The integration of DSM data provided valuable elevation information, allowing the model to distinguish genuine building-related changes from spectral noise or vegetation dynamics. Moreover, visual inspection of the change maps (Figure 1) confirms that the proposed model produced sharp and well-defined object boundaries, accurately outlining the contours of new constructions, demolished structures, and modified rooftops. The network effectively preserved spatial detail and minimized noise, resulting in coherent and realistic change patterns.

Following the binary classification stage, the model was retrained for multi-class change detection, enabling the identification of specific structural change types, including new construction, complete destruction, height increase, and height decrease. The quantitative performance of the multi-class model is summarized in Table 2 and Table 3.

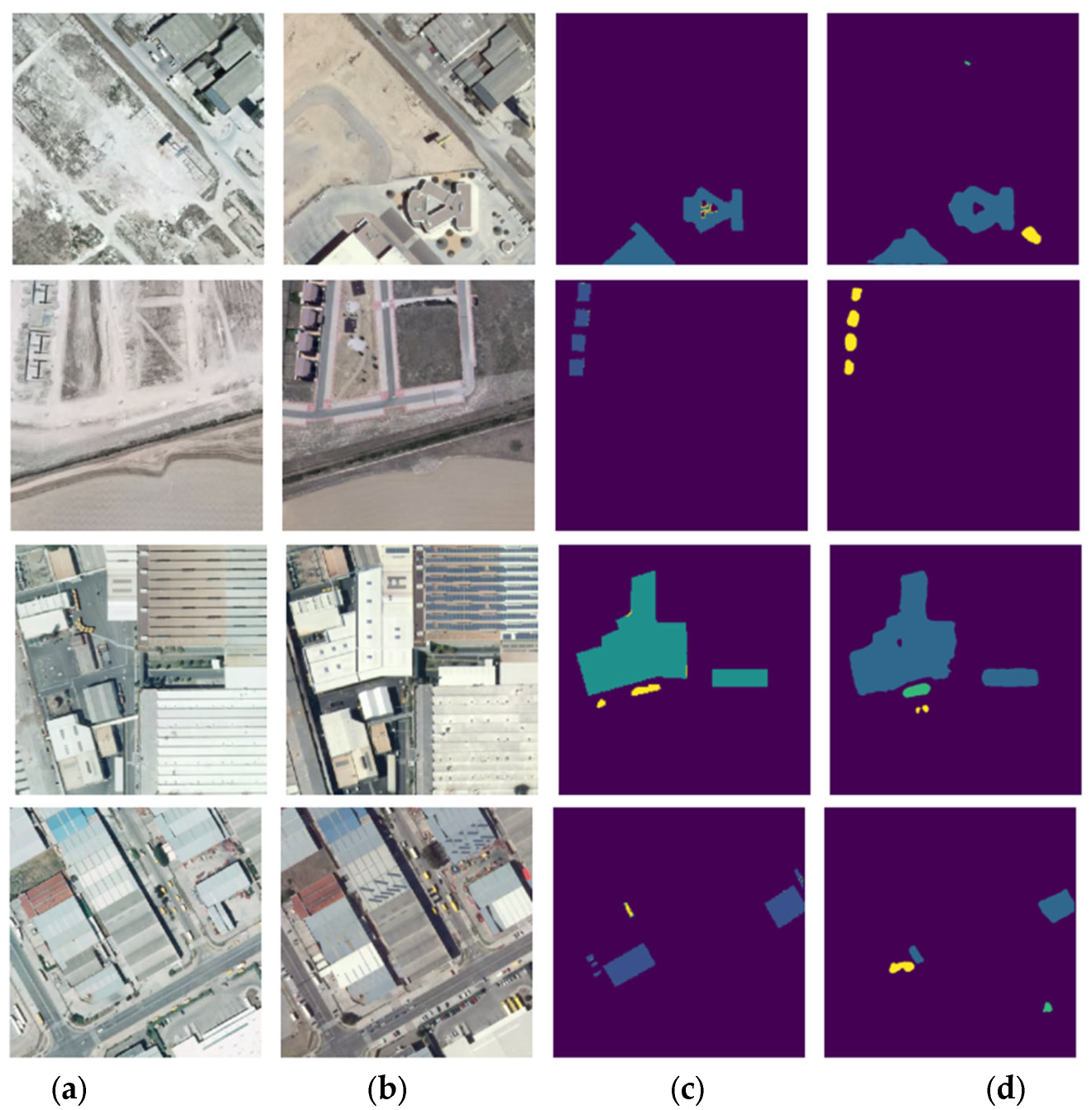

The multi-class results further confirm the robustness of the proposed model. The nearly identical values for accuracy, recall, and F1-score indicate a consistent and balanced performance across all change categories. Visual inspection of the predicted maps (Figure 2) demonstrates that the model successfully differentiates between various change types and preserves the geometric integrity of urban features.

The use of DSM information proved particularly beneficial in identifying vertical changes such as building height increases and decreases, which are often overlooked in 2D-based approaches.

Overall, both quantitative and qualitative analyses confirm that the integration of spectral and elevation data through the ResNet34–UNet++ architecture provides a reliable and precise framework for 3D urban change detection. The proposed method demonstrates superior performance in identifying complex structural changes, accurately delineating object boundaries, and reducing false alarms in non-relevant regions, making it a promising approach for operational urban monitoring and planning applications.

4. Conclusions

The analysis revealed a clear pattern of urban transformation between 2010 and 2017, primarily driven by new building constructions and vertical expansions in developed areas. Most detected changes were concentrated in regions that experienced rapid urban growth, showing the increasing density and modernization of built-up zones. In contrast, limited destruction or reduction in height was observed, indicating a general trend of expansion rather than replacement.

The results also showed that changes were well localized, aligning closely with actual urban structures, while non-urban regions, such as vegetation and open land, remained largely unchanged. This highlights the reliability of the detected patterns in representing meaningful structural modifications. The boundaries of changed buildings were accurately delineated, revealing not only the presence but also the spatial extent of transformations across the city.

Overall, the findings emphasize the dynamic nature of urban development within the study period. The extracted change patterns provide valuable insights into how construction activities have reshaped the urban landscape over time, offering a robust foundation for future urban planning, infrastructure management, and environmental impact assessment.

Author Contributions

Conceptualization, A.E. and M.H.; methodology, A.E.; software, A.E.; validation, M.H. and A.E.; data curation, A.E.; writing—original draft preparation, A.E.; writing—review and editing, A.E. and M.H.; visualization, A.E.; supervision, M.H.; project administration, M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| DSM | Digital Surface Model |
| PCA | Principal Component Analysis |
| CVA | Change Vector Analysis |
| CNN | Convolutional Neural Network |

References

1. Tian, J.; Cui, S.; Reinartz, P. Building change detection based on satellite stereo imagery and digital surface models. IEEE Trans. Geosci. Remote Sens. 2013, 52, 406–417.

2. Kılıç, D.K.; Nielsen, P. Comparative analyses of unsupervised PCA K-means change detection algorithm from the viewpoint of follow-up plan. Sensors 2022, 22, 9172.

3. Gstaiger, V.; Tian, J.; Kiefl, R.; Kurz, F. 2D vs. 3D change detection using aerial imagery to support crisis management of large-scale events. Remote Sens. 2018, 10, 2054.

4. Meshkini, K.; Bovolo, F.; Bruzzone, L. A 3D CNN approach for change detection in HR satellite image time series based on a pretrained 2D CNN. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 143–150.

5. Brahim, E.; Amri, E.; Barhoumi, W.; Bouzidi, S. Fusion of UNet and ResNet decisions for change detection using low and high spectral resolution images. Signal Image Video Process. 2024, 18, 695–702.

6. Coletta, V.; Marsocci, V.; Ravanelli, R. 3DCD: A new dataset for 2D and 3D change detection using deep learning techniques. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 1349–1354.

7. Gao, M.; Qi, D.; Mu, H.; Chen, J. A transfer residual neural network based on ResNet-34 for detection of wood knot defects. Forests 2021, 12, 212.

8. Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Granada, Spain, 20 September 2018; Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2018.

9. Wang, P.; Chung, A.C. Focal dice loss and image dilation for brain tumor segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Granada, Spain, 20 September 2018; Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2018.

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |