1. Introduction

In recent years, intelligent systems capable of understanding and generating human-like content have taken center stage, driving a wave of transformation across different fields. These advanced models are not only revolutionizing how we interact with computers but also redefining creativity, problem-solving, and automation. From generating text, images, and videos to engaging in natural conversations, translating languages, and even debugging complex code, their capabilities mark a significant shift in the digital landscape. As these systems continue to evolve, they are unlocking groundbreaking possibilities, reshaping industries, and pushing the boundaries of what machines can achieve.

According to [1], the most widely used large language models (LLMs) today include ChatGPT, Gemini, Claude, Mistral, and Llama, with ChatGPT remaining the most dominant overall. However, ref. [2] found that students in the programming field also frequently use tools like Chegg and Bing Chat (now known as Microsoft Copilot). These two platforms, while not consistently ranked among the top general-purpose LLMs, are often favored for their academic focus and support in educational contexts.

LLMs have significantly simplified various aspects of modern life, including reducing complexity in the field of programming [3]. For instance, a study by [4] found that LLMs have encouraged some students to become active questioners who frequently initiate interactions with the tool and seek information to deepen their understanding. In contrast, other students preferred a more passive approach, engaging minimally and choosing instead to observe and process information; these students are referred to as silent listeners. While the study provides valuable insight into how students interact with AI agents, it does not evaluate the effectiveness of these interactions on learning outcomes.

For many IT students, AI tools serve as active tutors, offering instant explanations of programming concepts and code snippets. This immediate feedback loop enables students to debug errors much faster, understand complicated syntax, and complete tasks efficiently. LLMs also provide support to students who may struggle with language barriers or lack access to an actual tutor [5]. This convenience may come at a cost, however: students may start to rely too heavily on the tool, using it to complete tasks without fully engaging in the learning process [6,7,8].

Such over-reliance can hinder the development of core problem-solving skills, such as independently debugging logic errors and comprehending algorithm design [6].

The accessibility of LLMs has contributed to a rise in academic dishonesty, as students are increasingly using AI-generated content to complete assignments and exams, often presenting it as their own work [9]. However, ref. [10] found that integrating LLM tools into students’ collaborative programming improved their computational thinking and reduced cognitive load. In contrast, ref. [11] observed that over-reliance on LLM tools for tasks requiring independent cognitive effort has significantly impacted academic performance, resulting in only surface-level understanding for some students.

The growing dependence on LLMs among IT students is influenced by several factors. One major contributor is the increasing complexity of programming tasks: as assignments become more intricate, students often struggle to develop solutions independently. In such cases, LLM tools become an appealing alternative, offering quick code generation, debugging support, and step-by-step assistance, which helps manage these challenges efficiently [12]. Additionally, ref. [13] emphasizes that many students face conceptual gaps in their understanding of programming. To bridge these gaps, they often turn to LLMs for explanations and ready-made solutions. While these tools can support learning, excessive reliance may lead to superficial understanding, as students might use AI-generated code without fully understanding the underlying logic or principles they are expected to master. It is thus crucial for students to understand how best to use LLM tools. A key aspect of using them effectively is prompting: good prompting techniques often produce the desired output.

Prompting first emerged in natural language processing (NLP) tasks in 2018 with a single dataset and was further refined in 2021 with multiple datasets. By 2022, text-to-code and code-to-code techniques were introduced and have continued to evolve. The text-to-code approach involves describing programming problems in natural language, while the code-to-code strategy uses partial code snippets or hints to help ChatGPT generate complete solutions.
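The distinction between the two approaches can be illustrated with a minimal sketch. The function names, the prompt wording, and the example problem below are hypothetical and chosen only for illustration; they are not taken from any of the cited studies.

```python
def text_to_code_prompt(problem: str, language: str = "Python") -> str:
    """Text-to-code: the problem is described in natural language only."""
    return f"Write a {language} function that solves the following problem:\n{problem}"


def code_to_code_prompt(partial_code: str, hint: str = "") -> str:
    """Code-to-code: a partial snippet (plus an optional hint) is supplied for completion."""
    prompt = "Complete the following code so that it runs correctly:\n" + partial_code
    if hint:
        prompt += f"\nHint: {hint}"
    return prompt


# Hypothetical example problem, expressed in both styles.
t2c = text_to_code_prompt("Return the largest value in a list of integers.")
c2c = code_to_code_prompt("def largest(values):\n    # TODO", hint="avoid built-in max()")

print(t2c)
print(c2c)
```

In the first case the model receives no code at all, whereas in the second the student's partial attempt constrains the shape of the solution.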

Students’ prompting strategies play a crucial role in how they use LLMs for programming tasks. Well-structured prompts lead to more accurate responses, making learning and solving coding problems easier for students. As LLMs become more common in education, understanding how to prompt them is essential for maximizing their benefits. There has been extensive research examining how students formulate prompts when interacting with large language models, aiming to identify strategies that optimise response quality and support deeper learning.

The work reported in [14] presented a qualitative analysis of students’ prompts, identifying three distinct prompting strategies. Their research also uncovered additional prompting techniques that students use when interacting with ChatGPT. One such strategy is Single Copy & Paste Prompting, where a student copies a question from the problem statement and pastes it directly into the ChatGPT prompt field without modification. The student then adopts the response generated by ChatGPT and submits it to the Moodle quiz without any further revisions. Another strategy is Single Reformulated Prompting, in which a student rephrases the question either partially or entirely in their own words before prompting ChatGPT. The student then uses the AI-generated response and submits it to the Moodle quiz without making any changes. Similarly, Multiple-Question Prompting occurs when a student asks ChatGPT multiple questions before selecting and using the response provided by the model.

While existing studies have begun to explore how students interact with LLMs during programming tasks and have categorized various prompting strategies, such as copy-paste, reformulation, and iterative prompting, there remains a limited understanding of how these strategies influence learning and skill development. Much of the current literature is descriptive, focusing on how students use these tools, rather than evaluative, assessing whether different prompting behaviors result in meaningful improvements in programming comprehension, problem-solving ability, or academic performance. Consequently, there is a need for deeper, outcome-focused research that examines whether and how prompting strategies used by IT students with LLM tools directly impact their learning. This study, therefore, seeks to investigate the prompting techniques IT students employ when using LLMs and to evaluate the possible impact on their learning.

3. Results

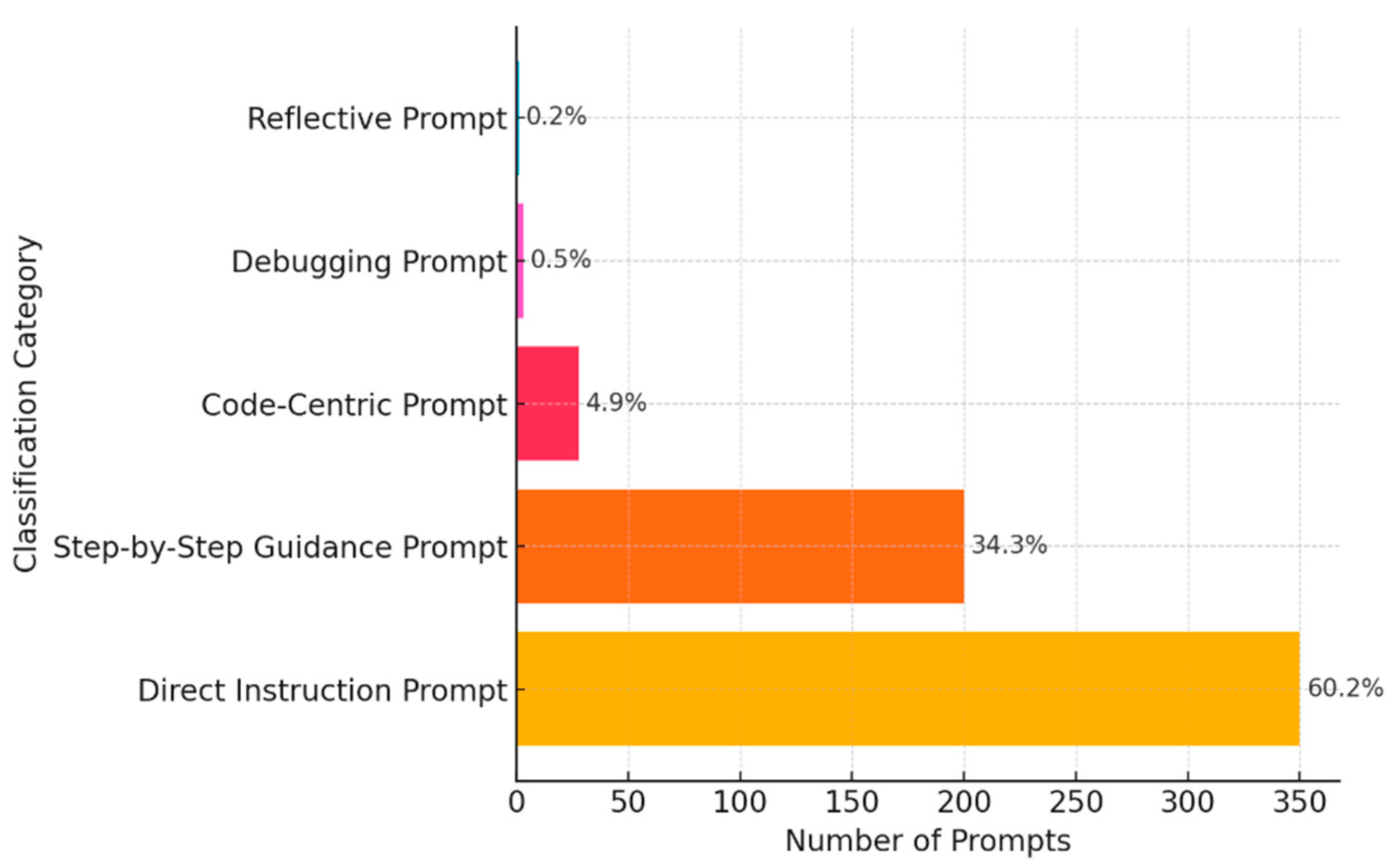

This study investigated the prompting strategies used by Information Technology students when working with LLMs to complete programming-related tasks. Students were asked to complete tasks across core programming modules as part of their coursework. While the task content varied, the prompts provided valuable insights into how students engaged with the AI tool. From this, we identified five dominant prompting strategies that influenced student learning, as shown in Figure 1.

3.1. Direct Instruction Prompts

This was the most frequently used strategy, accounting for approximately 60.2% of all student prompts. Many students used prompts that asked LLM tools to define concepts or explain programming ideas. For instance, one student asked, “What is supervised learning and how does it work?”, while another typed, “Explain the difference between classification and regression in machine learning”. This kind of prompting is similar to what students might do when reading a textbook or asking a direct question in class.

Across the dataset, similar patterns appeared in prompts related to databases and programming tools. Examples included, “What is a relational database?” or “How do I connect to SQL in Visual Studio?” These types of prompts suggest that students were primarily aiming to understand key terms or foundational ideas before attempting the actual task. This strategy proved very popular with students. Most responses from the LLM were simple and easy to follow, which helped students get started. However, in many cases, students did not ask follow-up questions or attempt to apply what they had learned unless the task explicitly required it. This limited their opportunity to explore the topic more deeply.

Therefore, while this type of prompt helped students obtain quick and relevant information, relying on it alone may not support deep or independent learning—especially when students do not push themselves to explore further. From a Bloom’s Taxonomy perspective, these prompts reflect lower-order thinking skills such as “remembering” and “understanding”. There was limited evidence of constructivist learning, as students relied on the tool for direct answers rather than building knowledge through active exploration. Similarly, there were few signs of self-directed learning, since students seldom followed up or reflected beyond the initial response.

3.2. Step-by-Step Guidance Prompts

This strategy made up 34.3% of the student prompts and reflected a more structured and deliberate approach in which some students broke their task into clear steps. One student, for example, first asked what supervised learning is, then asked which algorithms are beginner-friendly, and finally requested a simple implementation in Python. A similar pattern appeared in prompts such as, “How do I connect to a database in C#?”, followed by “How do I insert data using a button click?”, and later, “How do I display the inserted data in a DataGridView?” These prompts showed that the student was progressing from understanding the tools, to implementing functionality, and then to verifying output, all in a logical order.

The use of step-by-step guidance prompts indicates that students were not merely seeking final answers but were instead using ChatGPT to support a structured learning process. By breaking down complex programming problems into smaller, manageable steps—such as starting with a conceptual query (“What is supervised learning?”) and progressing toward practical implementation (“Show me a minimal Python example”)—students were able to guide the LLM interaction in a way that mirrored instructional scaffolding. This sequencing allowed them to build understanding incrementally, reducing cognitive overload and making it easier to process and apply new information. The prompt structure also appeared to encourage deeper engagement, as students refined their understanding by comparing outputs, testing solutions, and adjusting their queries accordingly. In this way, the LLM functioned as a responsive learning tool, offering just-in-time feedback that supported students in moving from basic comprehension to application and analysis. While this approach aligns well with self-regulated learning and constructivist principles, it also highlights a potential risk: students may become overly reliant on predefined prompt structures, which could hinder their ability to tackle more complex, less familiar problems independently. For educators, this underscores the importance of teaching students not only how to use AI tools effectively but also how to critically evaluate and adapt their own learning strategies over time.
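A step-by-step sequence of this kind can be sketched as an ordered conversation in which each new prompt is sent together with the earlier exchange. The sketch below is illustrative only: the wording of the second prompt, the placeholder replies, and the `build_conversation` helper are assumptions, and the role/content message layout is simply a common way of representing chat turns rather than the format used in the study.

```python
# Three-step sequence modelled on the prompts quoted above.
# The second prompt's wording is a hypothetical paraphrase.
steps = [
    "What is supervised learning?",
    "Which supervised learning algorithms are beginner-friendly?",
    "Show me a minimal Python example",
]


def build_conversation(prompts, replies):
    """Interleave each prompt with the reply it received, preserving order."""
    messages = []
    for i, prompt in enumerate(prompts):
        messages.append({"role": "user", "content": prompt})
        if i < len(replies):
            messages.append({"role": "assistant", "content": replies[i]})
    return messages


# Placeholder replies stand in for the model's actual answers.
replies = ["<reply to step 1>", "<reply to step 2>"]
conversation = build_conversation(steps, replies)

for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

Because the final request arrives with the two earlier exchanges as context, the implementation the student asks for can build on the concepts already established.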

3.3. Code-Centric Prompts

This prompt style accounted for 4.9% of the data and was characterized by a strong focus on code generation. Some students jumped straight into implementation. Prompts included “Generate a classification model in Python using scikit-learn” and “Build a supervised learning model with Python code.” Others submitted prompts like “Give me the full code to connect C# to SQL and add data” and “Build a CRUD app in Windows Forms.” These students typically pasted the code into their development environment without much explanation or reflection.

The frequent use of direct code-generation prompts—such as “Write a Python function for…” or “Generate code to solve…”—suggests that some students were primarily focused on completing tasks rather than understanding the underlying principles. This pattern, especially common among novice programmers, points to a surface approach to learning, where the primary goal is task performance rather than conceptual mastery. Within the framework of Bloom’s Taxonomy, this strategy remains largely at the “remembering” and “applying” levels, with limited evidence of progression to “analyzing” or “evaluating”, which require deeper engagement with logic, structure, and reasoning. Students often accepted and submitted the first code output generated, rarely modifying or testing alternative solutions—an indication of minimal reflection or critical assessment. From a constructivist perspective, this approach underutilizes the learner’s active role in meaning-making and problem-solving. Constructivist learning emphasizes the construction of knowledge through experience, interaction, and reflection; however, these direct prompts suggest a transactional model of learning, where the LLM is treated as a source of ready-made solutions rather than a tool for exploration or conceptual growth. While these prompts can be helpful in time-constrained scenarios, they risk undermining the development of higher-order thinking skills and independent problem-solving capabilities. Thus, educators should encourage students to move beyond passive code consumption and engage in iterative processes—debugging, evaluating alternatives, and justifying choices—to support deeper learning and knowledge transfer.

3.4. Reflective Prompts

Only 0.2% of prompts were reflective, showing curiosity and a desire to understand reasoning or compare alternatives. Some students submitted prompts like, “Why is supervised learning better than unsupervised in some cases?” and “What are the pros and cons of using decision trees?” Others asked, “What are the different ways to connect a database in C#?” or “Which database type is best for a small inventory system?”. The use of troubleshooting prompts—such as requests for help with specific error messages or unexpected output—demonstrates a more reflective and diagnostic engagement with the LLM. These prompts typically occurred after students had already attempted a solution, indicating a shift from code generation to problem analysis. Within Bloom’s Taxonomy, this behaviour reflects movement into the “analyzing” and “evaluating” stages, where learners are required to identify the causes of problems, interpret system feedback, and make informed decisions about revisions. Unlike direct code-generation prompts, which often bypass critical reasoning, troubleshooting prompts necessitate a deeper cognitive investment. From a constructivist learning perspective, this interaction embodies the principle that knowledge is actively constructed through experience, reflection, and iterative problem-solving. When students used ChatGPT to examine errors and explore alternative explanations, they engaged in an authentic learning process that mirrors expert behaviour.

However, it is noteworthy—and concerning—that only a small percentage of prompts fell into this category. Given the nature of software development as a discipline grounded in debugging, problem decomposition, and iterative refinement, this limited use of troubleshooting strategies suggests a gap in how students are approaching computational thinking. The low frequency of these higher-order prompts raises pedagogical questions about students’ readiness to engage with the core practices of the discipline. Furthermore, the effectiveness of such prompts was highly dependent on how specifically and contextually they were framed. Vague queries often returned unhelpful responses, whereas well-structured prompts—accompanied by relevant code snippets or error logs—enabled more meaningful feedback. This indicates that while LLMs can support higher-order thinking, their value is maximized when students approach them as tools for hypothesis testing and refinement rather than as simple troubleshooting shortcuts. Encouraging learners to articulate their debugging rationale not only enhances metacognitive awareness but also promotes the development of transferable skills essential for independent programming beyond AI-assisted contexts.

3.5. Debugging Prompts

Debugging accounted for 0.5% of the prompts, where students used the LLM to identify and resolve errors in their code. Examples included prompts such as, “Why am I getting a value error in this Python model?” and “How do I fix this scikit-learn import error?” Others asked, “Why won’t my SQL query insert data?” or “How do I fix a null exception in my C# form?” This strategy reflected active problem-solving and trial-and-error learning. While it was not always clear whether students fully understood the fixes, the prompts showed persistence and a willingness to engage with the debugging process.

Prompts that asked for explanations—such as “Explain what this code does” or “Why is this algorithm better?”—reflected a more conceptual engagement with the LLM and demonstrated an intent to understand rather than simply produce or fix code. These prompts align with the “understanding”, “analyzing”, and in some cases, “evaluating” levels of Bloom’s Taxonomy, as they require the learner to interpret, compare, and make judgments about code logic and design choices. From a constructivist learning perspective, such prompts indicate a shift from procedural knowledge to conceptual understanding. When students actively sought to clarify how or why a certain approach worked, they were engaging in reflective learning—constructing meaning through dialogue and integrating new information with prior knowledge. These interactions are pedagogically valuable, as they promote not only comprehension but also critical thinking and abstraction, which are essential for long-term skill development in software engineering.

However, similar to troubleshooting prompts, explanation-seeking prompts were relatively rare. This limited use is particularly concerning in the context of software development, a discipline that depends heavily on understanding how and why code behaves as it does. The low frequency of these conceptual prompts may signal a tendency among students to prioritise task completion over deep learning—potentially reinforced by the immediate utility and responsiveness of LLMs. Furthermore, the quality of these explanatory prompts varied significantly. Students who provided context—such as including code snippets or posing comparisons between algorithms—received richer, more accurate explanations. In contrast, vague or overly general questions yielded less informative responses. This suggests that while LLMs have the capacity to foster conceptual engagement, their effectiveness is mediated by the student’s ability to formulate thoughtful, specific queries. Supporting students in asking better questions—ones that elicit deeper understanding—should therefore be a key instructional goal. Doing so not only enhances metacognitive awareness but also cultivates the analytical mindset required for professional software development.

4. Discussion

The present study set out to determine whether students’ interactions with an LLM demonstrably supported learning in an undergraduate software-development context. The prompt log tells a consistent story: most exchanges sat at Bloom’s “remembering” and “applying” levels, with students requesting ready-made code or syntax explanations and seldom progressing to the “analyzing”, “evaluating”, or “creating” stages that typify expert programming practice [20]. Fewer than 12% of all prompts fell into the higher-order categories of troubleshooting or reflective explanation, mirroring patterns of surface engagement reported in similar AI-assisted coding studies [21]. In constructivist terms, learners treated the model as an answer dispenser rather than a partner in knowledge construction, thereby bypassing the productive struggle that underpins conceptual change [22,23].
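For reference, the category shares reported in Sections 3.1 to 3.5 can be combined with simple arithmetic. The sketch below assumes that the Reflective and Debugging categories are the ones treated as higher-order here; that mapping is an interpretation and is not stated explicitly in the text.

```python
# Share of all prompts (percent) as reported in Sections 3.1 to 3.5.
shares = {
    "Direct Instruction": 60.2,
    "Step-by-Step Guidance": 34.3,
    "Code-Centric": 4.9,
    "Reflective": 0.2,
    "Debugging": 0.5,
}

# Assumption: these two categories correspond to the higher-order prompts.
higher_order_categories = ("Reflective", "Debugging")

total = round(sum(shares.values()), 1)
higher_order = round(sum(shares[name] for name in higher_order_categories), 1)

print(f"Total of reported shares: {total}%")   # 100.1%
print(f"Higher-order share: {higher_order}%")  # 0.7%
```

Under that assumption the combined higher-order share is well inside the bound quoted above. The total slightly exceeds 100%, presumably because the individual shares are rounded.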

This limited cognitive reach is unsurprising when one considers the design of the prompts themselves. Straightforward requests such as “Write a Python function that sorts a list” require little cognitive elaboration; the LLM supplies a working snippet, and the task appears complete. Cognitive-load theory predicts that such minimal-guidance tasks reduce germane load (learning-relevant effort) while preserving extraneous load, because students do not need to organise or integrate new information [24,25]. The result, as our qualitative inspection confirmed, is code that “works” but a mental model that remains opaque. Without deliberate opportunities to interrogate, debug, or adapt that output, students accumulate what [26] call surface knowledge: rapidly gained, rapidly lost.

From a constructivist perspective, the scarcity of explanation-seeking and troubleshooting prompts is particularly troubling. Knowledge in software engineering is built through cycles of hypothesis, test, and revision [27]. When only a small minority of learners ask “Why is this algorithm preferable?” or “Explain the cause of this stack trace”, opportunities to connect new code to prior mental schemata are forfeited. Consequently, the interaction pattern we observed cannot be said to exhibit learning in the strong sense of conceptual growth or transferable problem-solving skill [28]. We therefore make the following two recommendations to lecturers:

4.1. Re-Design Assessment Questions

Instructors can push students beyond procedural recall by structuring tasks that require higher-order engagement. Questions that ask students to critique alternative implementations, interpret error logs, or refactor inefficient code oblige them to operate at Bloom’s “analyzing” and “evaluating” levels. Empirical work in computer-science education shows that when prompts embed such metacognitive triggers, learners spend more time reasoning and produce higher-quality solutions [29]. Rubrics should therefore allocate explicit marks for explanation, justification, and iteration, not merely for a runnable script.

4.2. Teach Prompt Engineering as a Literacy

Prompting is itself a learnable skill [30]. Short workshops can demonstrate how to (a) provide sufficient context, (b) decompose complex problems, and (c) request stepwise reasoning or code annotations. When students use targeted prompts (e.g., “Given this traceback, describe two possible causes and suggest fixes with time-complexity estimates”), the LLM supplies feedback that naturally scaffolds inquiry, aligning with the fading-scaffold model of cognitive apprenticeship [31]. Early evidence indicates that explicit instruction in prompt design increases the proportion of explanation-seeking interactions by up to 30% and correlates with gains on post-test debugging tasks [32].
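The three elements listed above, (a) context, (b) decomposition, and (c) a request for stepwise reasoning, can be combined in a simple prompt template. The following is a minimal sketch of how a workshop exercise might present this; the `build_structured_prompt` helper, its wording, and the traceback text are hypothetical placeholders rather than material from the study.

```python
def build_structured_prompt(context: str, subtasks: list, ask_reasoning: bool = True) -> str:
    """Assemble a prompt from (a) context, (b) numbered sub-tasks, (c) a reasoning request."""
    parts = ["Context:", context.strip(), "", "Please address each part in order:"]
    parts += [f"{i}. {task}" for i, task in enumerate(subtasks, start=1)]
    if ask_reasoning:
        parts += ["", "Explain your reasoning step by step and annotate any code you suggest."]
    return "\n".join(parts)


# Hypothetical placeholder: a student would paste the real traceback from their own run.
traceback_text = (
    "Traceback (most recent call last):\n"
    '  File "model.py", line 12, in <module>\n'
    "    model.fit(X, y)\n"
    "ValueError: <message copied from the failed run>"
)

prompt = build_structured_prompt(
    context=traceback_text,
    subtasks=[
        "Describe two possible causes of this error.",
        "Suggest a fix for each cause.",
        "Give a time-complexity estimate for each fix.",
    ],
)
print(prompt)
```

Keeping the sub-tasks as an explicit numbered list makes the decomposition visible to the student as well as to the model, which is the habit such workshops aim to build.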

Together, these interventions reposition the LLM from an automated code generator to a catalytic tutor—one that supports learners in constructing, evaluating, and extending their own understanding. Future research should test the long-term impact of prompt-literacy curricula and higher-order assessment designs on students’ ability to transfer debugging and design skills to unfamiliar, LLM-free environments.

5. Conclusions

By exploring how IT students prompted LLMs during programming tasks, this study uncovered five core strategies that shaped their learning experiences. These ranged from seeking simple explanations to building complex solutions through structured and iterative dialogue. Each approach offered insight into how students used AI tools to support or sometimes short-circuit their understanding.

The analysis showed that while all five strategies were used by students, they varied in depth and impact. Direct Instruction helped with quick concept recall but rarely went beyond surface-level understanding. Step-by-Step Guidance encouraged deeper thinking and gradual skill-building. Code-Centric prompts offered speed but carried the risk of passive learning. Debugging Prompts highlighted problem-solving and resilience, while Reflective Prompts reflected critical thinking and evaluation.

What becomes clear is that prompting is not just a technical skill; it is a learning habit. Students who approach LLM tools with purpose, reflection, and a desire to understand are likely to get more out of the experience. These insights suggest that teaching students how to prompt more effectively, through guided practice, modeling, or classroom discussions, could strengthen their learning outcomes. In short, the way students engage with AI matters. By fostering better prompting habits, educators can help students become more independent, reflective, and capable learners in an AI-augmented educational environment.