Deep Learning for Soybean Monitoring and Management

Abstract

1. Introduction

2. Definitions and Acronyms

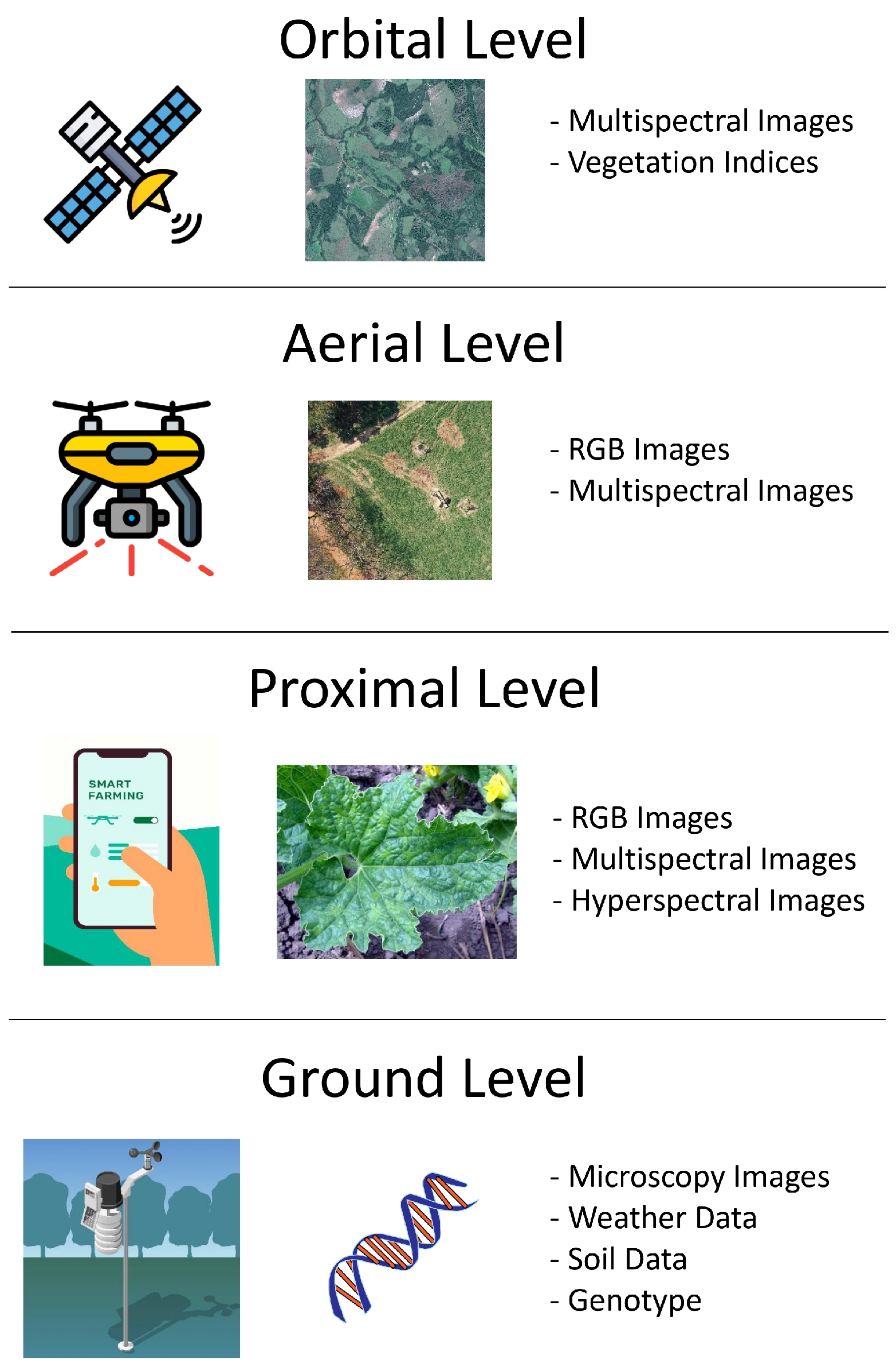

3. Literature Review

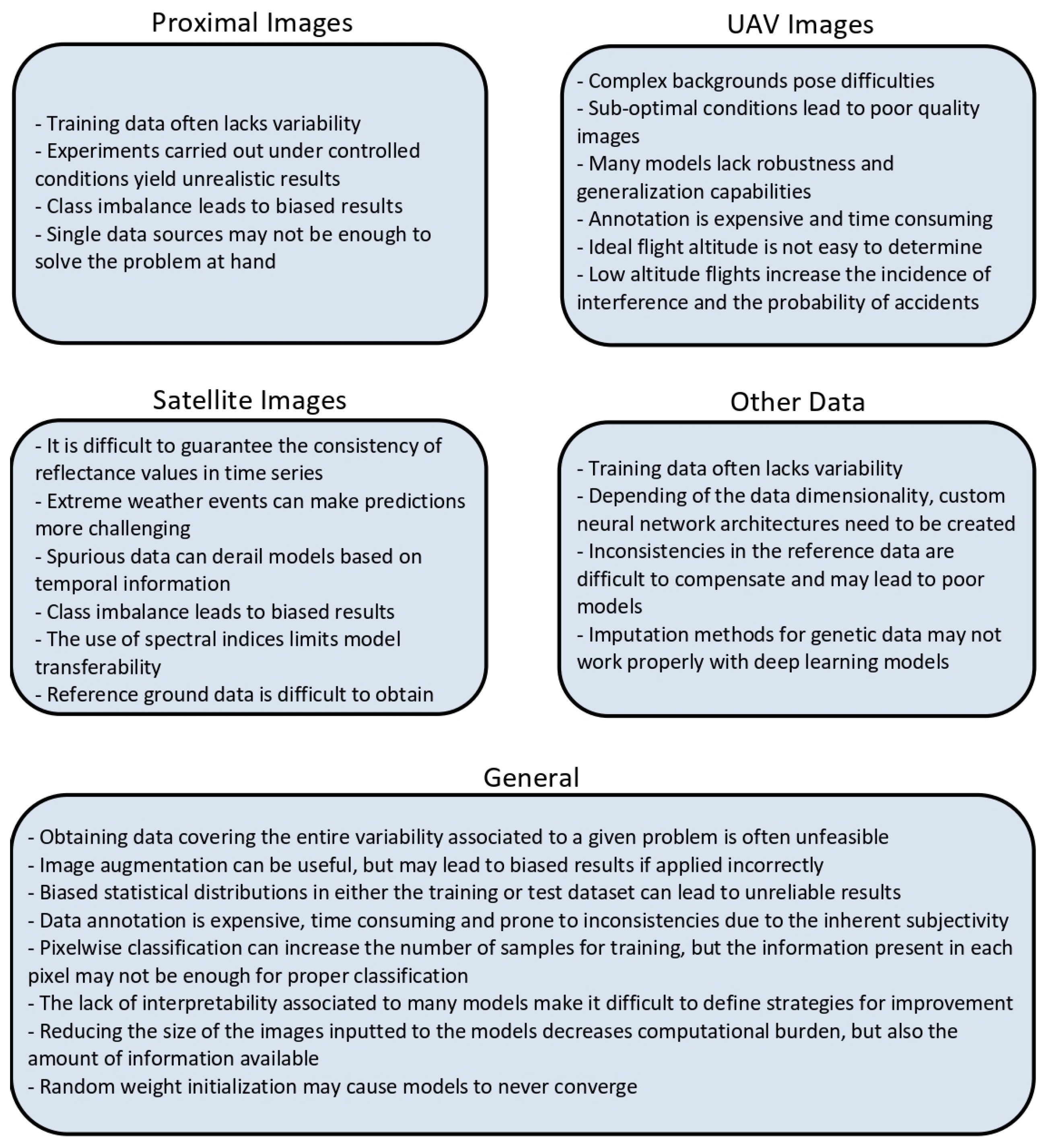

3.1. Proximal Images as Main Input Data

3.2. UAV Images as Main Input Data

3.3. Satellite Images as Main Input Data

3.4. Other Types of Data as Main Input for the Models

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bevers, N.; Sikora, E.J.; Hardy, N.B. Soybean disease identification using original field images and transfer learning with convolutional neural networks. Comput. Electron. Agric. 2022, 203, 107449.

2. Savary, S.; Willocquet, L.; Pethybridge, S.; Esker, P.; McRoberts, N.; Nelson, A. The global burden of pathogens and pests on major food crops. Nat. Ecol. Evol. 2019, 3, 430–439.

3. Gui, J.; Xu, H.; Fei, J. Non-Destructive Detection of Soybean Pest Based on Hyperspectral Image and Attention-ResNet Meta-Learning Model. Sensors 2023, 23, 678.

4. He, L.; Jin, N.; Yu, Q. Impacts of climate change and crop management practices on soybean phenology changes in China. Sci. Total Environ. 2020, 707, 135638.

5. Barbedo, J.G.A. Impact of dataset size and variety on the effectiveness of deep learning and transfer learning for plant disease classification. Comput. Electron. Agric. 2018, 153, 46–53.

6. Jha, K.; Doshi, A.; Patel, P.; Shah, M. A comprehensive review on automation in agriculture using artificial intelligence. Artif. Intell. Agric. 2019, 2, 1–12.

7. Bock, C.H.; Pethybridge, S.J.; Barbedo, J.G.A.; Esker, P.D.; Mahlein, A.K.; Ponte, E.M.D. A phytopathometry glossary for the twenty-first century: Towards consistency and precision in intra- and inter-disciplinary dialogues. Trop. Plant Pathol. 2022, 47, 14–24.

8. Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning—Method overview and review of use for fruit detection and yield estimation. Comput. Electron. Agric. 2019, 162, 219–234.

9. Li, D.; Li, C.; Yao, Y.; Li, M.; Liu, L. Modern imaging techniques in plant nutrition analysis: A review. Comput. Electron. Agric. 2020, 174, 105459.

10. Castro, W.; Marcato Junior, J.; Polidoro, C.; Osco, L.P.; Gonçalves, W.; Rodrigues, L.; Santos, M.; Jank, L.; Barrios, S.; Valle, C.; et al. Deep Learning Applied to Phenotyping of Biomass in Forages with UAV-Based RGB Imagery. Sensors 2020, 20, 4802.

11. Flores, P.; Zhang, Z.; Igathinathane, C.; Jithin, M.; Naik, D.; Stenger, J.; Ransom, J.; Kiran, R. Distinguishing seedling volunteer corn from soybean through greenhouse color, color-infrared, and fused images using machine and deep learning. Ind. Crops Prod. 2021, 161, 113223.

12. Uzal, L.; Grinblat, G.; Namías, R.; Larese, M.; Bianchi, J.; Morandi, E.; Granitto, P. Seed-per-pod estimation for plant breeding using deep learning. Comput. Electron. Agric. 2018, 150, 196–204.

13. Ahmad, A.; Saraswat, D.; Aggarwal, V.; Etienne, A.; Hancock, B. Performance of deep learning models for classifying and detecting common weeds in corn and soybean production systems. Comput. Electron. Agric. 2021, 184, 106081.

14. Barbedo, J.G.A. Deep learning applied to plant pathology: The problem of data representativeness. Trop. Plant Pathol. 2022, 47, 85–94.

15. dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Unsupervised deep learning and semi-automatic data labeling in weed discrimination. Comput. Electron. Agric. 2019, 165, 104963.

16. Meir, Y.; Barbedo, J.G.A.; Keren, O.; Godoy, C.V.; Amedi, N.; Shalom, Y.; Geva, A.B. Using Brainwave Patterns Recorded from Plant Pathology Experts to Increase the Reliability of AI-Based Plant Disease Recognition System. Sensors 2023, 23, 4272.

17. Sugiyama, M.; Nakajima, S.; Kashima, H.; Buenau, P.; Kawanabe, M. Direct Importance Estimation with Model Selection and Its Application to Covariate Shift Adaptation. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; Platt, J., Koller, D., Singer, Y., Roweis, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2007; Volume 20.

18. Shook, J.; Gangopadhyay, T.; Wu, L.; Ganapathysubramanian, B.; Sarkar, S.; Singh, A.K. Crop yield prediction integrating genotype and weather variables using deep learning. PLoS ONE 2021, 16, e0252402.

19. da Silva, L.A.; Bressan, P.O.; Gonçalves, D.N.; Freitas, D.M.; Machado, B.B.; Gonçalves, W.N. Estimating soybean leaf defoliation using convolutional neural networks and synthetic images. Comput. Electron. Agric. 2019, 156, 360–368.

20. Xu, J.; Yang, J.; Xiong, X.; Li, H.; Huang, J.; Ting, K.; Ying, Y.; Lin, T. Towards interpreting multi-temporal deep learning models in crop mapping. Remote Sens. Environ. 2021, 264, 112599.

21. Barbedo, J.G.A. A Review on the Use of Computer Vision and Artificial Intelligence for Fish Recognition, Monitoring, and Management. Fishes 2022, 7, 335.

22. Barnhart, I.H.; Lancaster, S.; Goodin, D.; Spotanski, J.; Dille, J.A. Use of open-source object detection algorithms to detect Palmer amaranth (Amaranthus palmeri) in soybean. Weed Sci. 2022, 70, 648–662.

23. Chandel, N.S.; Chakraborty, S.K.; Rajwade, Y.A.; Dubey, K.; Tiwari, M.K.; Jat, D. Identifying crop water stress using deep learning models. Neural Comput. Appl. 2021, 33, 5353–5367.

24. Falk, K.G.; Jubery, T.Z.; Mirnezami, S.V.; Parmley, K.A.; Sarkar, S.; Singh, A.; Ganapathysubramanian, B.; Singh, A.K. Computer vision and machine learning enabled soybean root phenotyping pipeline. Plant Methods 2020, 16, 5.

25. Gonçalves, J.P.; Pinto, F.A.; Queiroz, D.M.; Villar, F.M.; Barbedo, J.G.; Ponte, E.M.D. Deep learning architectures for semantic segmentation and automatic estimation of severity of foliar symptoms caused by diseases or pests. Biosyst. Eng. 2021, 210, 129–142.

26. Guo, Y.; Gao, Z.; Zhang, Z.; Li, Y.; Hu, Z.; Xin, D.; Chen, Q.; Zhu, R. Automatic and Accurate Acquisition of Stem-Related Phenotypes of Mature Soybean Based on Deep Learning and Directed Search Algorithms. Front. Plant Sci. 2022, 13, 906751.

27. He, H.; Ma, X.; Guan, H.; Wang, F.; Shen, P. Recognition of soybean pods and yield prediction based on improved deep learning model. Front. Plant Sci. 2023, 13, 1096619.

28. Karlekar, A.; Seal, A. SoyNet: Soybean leaf diseases classification. Comput. Electron. Agric. 2020, 172, 105342.

29. Li, Y.; Jia, J.; Zhang, L.; Khattak, A.M.; Sun, S.; Gao, W.; Wang, M. Soybean Seed Counting Based on Pod Image Using Two-Column Convolution Neural Network. IEEE Access 2019, 7, 64177–64185.

30. de Castro Pereira, R.; Hirose, E.; Ferreira de Carvalho, O.L.; da Costa, R.M.; Borges, D.L. Detection and classification of whiteflies and development stages on soybean leaves images using an improved deep learning strategy. Comput. Electron. Agric. 2022, 199, 107132.

31. Rairdin, A.; Fotouhi, F.; Zhang, J.; Mueller, D.S.; Ganapathysubramanian, B.; Singh, A.K.; Dutta, S.; Sarkar, S.; Singh, A. Deep learning-based phenotyping for genome wide association studies of sudden death syndrome in soybean. Front. Plant Sci. 2022, 13, 966244.

32. Sabóia, H.d.S.; Mion, R.L.; Silveira, A.d.O.; Mamiya, A.A. Real-time selective spraying for viola rope control in soybean and cotton crops using deep learning. Eng. Agríc. 2022, 42, e20210163.

33. Srilakshmi, A.; Geetha, K. A novel framework for soybean leaves disease detection using DIM-U-net and LSTM. Multimed. Tools Appl. 2023, 82, 28323–28343.

34. Tang, J.; Wang, D.; Zhang, Z.; He, L.; Xin, J.; Xu, Y. Weed identification based on K-means feature learning combined with convolutional neural network. Comput. Electron. Agric. 2017, 135, 63–70.

35. Tetila, E.C.; Machado, B.B.; Astolfi, G.; de Souza Belete, N.A.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836.

36. Wang, B.; Li, H.; You, J.; Chen, X.; Yuan, X.; Feng, X. Fusing deep learning features of triplet leaf image patterns to boost soybean cultivar identification. Comput. Electron. Agric. 2022, 197, 106914.

37. Wu, Q.; Zhang, K.; Meng, J. Identification of Soybean Leaf Diseases via Deep Learning. J. Inst. Eng. (India) Ser. A 2019, 100, 659–666.

38. Xiang, S.; Wang, S.; Xu, M.; Wang, W.; Liu, W. YOLO POD: A fast and accurate multi-task model for dense Soybean Pod counting. Plant Methods 2023, 19, 8.

39. Yang, S.; Zheng, L.; He, P.; Wu, T.; Sun, S.; Wang, M. High-throughput soybean seeds phenotyping with convolutional neural networks and transfer learning. Plant Methods 2021, 17, 50.

40. Yoosefzadeh-Najafabadi, M.; Tulpan, D.; Eskandari, M. Using Hybrid Artificial Intelligence and Evolutionary Optimization Algorithms for Estimating Soybean Yield and Fresh Biomass Using Hyperspectral Vegetation Indices. Remote Sens. 2021, 13, 2555.

41. Yu, X.; Chen, C.; Gong, Q.; Lu, L. Application of Data Enhancement Method Based on Generative Adversarial Networks for Soybean Leaf Disease Identification. Am. J. Biochem. Biotechnol. 2022, 18, 417–427.

42. Zhang, K.; Wu, Q.; Chen, Y. Detecting soybean leaf disease from synthetic image using multi-feature fusion faster R-CNN. Comput. Electron. Agric. 2021, 183, 106064.

43. Zhang, X.; Cui, J.; Liu, H.; Han, Y.; Ai, H.; Dong, C.; Zhang, J.; Chu, Y. Weed Identification in Soybean Seedling Stage Based on Optimized Faster R-CNN Algorithm. Agriculture 2023, 13, 175.

44. Zhang, C.; Lu, X.; Ma, H.; Hu, Y.; Zhang, S.; Ning, X.; Hu, J.; Jiao, J. High-Throughput Classification and Counting of Vegetable Soybean Pods Based on Deep Learning. Agronomy 2023, 13, 1154.

45. Zhao, G.; Quan, L.; Li, H.; Feng, H.; Li, S.; Zhang, S.; Liu, R. Real-time recognition system of soybean seed full-surface defects based on deep learning. Comput. Electron. Agric. 2021, 187, 106230.

46. Zhao, J.; Kaga, A.; Yamada, T.; Komatsu, K.; Hirata, K.; Kikuchi, A.; Hirafuji, M.; Ninomiya, S.; Guo, W. Improved Field-Based Soybean Seed Counting and Localization with Feature Level Considered. Plant Phenomics 2023, 5, 0026.

47. Zhu, S.; Zhou, L.; Zhang, C.; Bao, Y.; Wu, B.; Chu, H.; Yu, Y.; He, Y.; Feng, L. Identification of Soybean Varieties Using Hyperspectral Imaging Coupled with Convolutional Neural Network. Sensors 2019, 19, 4065.

48. Zhu, S.; Zhang, J.; Chao, M.; Xu, X.; Song, P.; Zhang, J.; Huang, Z. A Rapid and Highly Efficient Method for the Identification of Soybean Seed Varieties: Hyperspectral Images Combined with Transfer Learning. Molecules 2020, 25, 152.

49. Barbedo, J.G.A. Data Fusion in Agriculture: Resolving Ambiguities and Closing Data Gaps. Sensors 2022, 22, 2285.

50. Sun, J.; Di, L.; Sun, Z.; Shen, Y.; Lai, Z. County-Level Soybean Yield Prediction Using Deep CNN-LSTM Model. Sensors 2019, 19, 4363.

51. Babu, V.; Ram, N. Deep residual CNN with contrast limited adaptive histogram equalization for weed detection in soybean crops. Trait. Signal 2020, 39, 717–722.

52. dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Weed detection in soybean crops using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324.

53. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

54. Menezes, G.K.; Astolfi, G.; Martins, J.A.C.; Tetila, E.C.; da Silva Oliveira Junior, A.; Gonçalves, D.N.; Marcato Junior, J.; Silva, J.A.; Li, J.; Gonçalves, W.N.; et al. Pseudo-label semi-supervised learning for soybean monitoring. Smart Agric. Technol. 2023, 4, 100216.

55. Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308.

56. Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.; Luck, J.D.; Shi, Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136.

57. Teodoro, P.E.; Teodoro, L.P.R.; Baio, F.H.R.; da Silva Junior, C.A.; dos Santos, R.G.; Ramos, A.P.M.; Pinheiro, M.M.F.; Osco, L.P.; Gonçalves, W.N.; Carneiro, A.M.; et al. Predicting Days to Maturity, Plant Height, and Grain Yield in Soybean: A Machine and Deep Learning Approach Using Multispectral Data. Remote Sens. 2021, 13, 4632.

58. Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Da Silva Oliveira, A.; Alvarez, M.; Amorim, W.P.; De Souza Belete, N.A.; Da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2020, 17, 903–907.

59. Tian, F.; Vieira, C.C.; Zhou, J.; Zhou, J.; Chen, P. Estimation of Off-Target Dicamba Damage on Soybean Using UAV Imagery and Deep Learning. Sensors 2023, 23, 3241.

60. Trevisan, R.; Pérez, O.; Schmitz, N.; Diers, B.; Martin, N. High-Throughput Phenotyping of Soybean Maturity Using Time Series UAV Imagery and Convolutional Neural Networks. Remote Sens. 2020, 12, 3617.

61. Yang, Q.; She, B.; Huang, L.; Yang, Y.; Zhang, G.; Zhang, M.; Hong, Q.; Zhang, D. Extraction of soybean planting area based on feature fusion technology of multi-source low altitude unmanned aerial vehicle images. Ecol. Inform. 2022, 70, 101715.

62. Zhang, Z.; Khanal, S.; Raudenbush, A.; Tilmon, K.; Stewart, C. Assessing the efficacy of machine learning techniques to characterize soybean defoliation from unmanned aerial vehicles. Comput. Electron. Agric. 2022, 193, 106682.

63. Zhang, S.; Feng, H.; Han, S.; Shi, Z.; Xu, H.; Liu, Y.; Feng, H.; Zhou, C.; Yue, J. Monitoring of Soybean Maturity Using UAV Remote Sensing and Deep Learning. Agriculture 2023, 13, 110.

64. Zhou, J.; Zhou, J.; Ye, H.; Ali, M.L.; Chen, P.; Nguyen, H.T. Yield estimation of soybean breeding lines under drought stress using unmanned aerial vehicle-based imagery and convolutional neural network. Biosyst. Eng. 2021, 204, 90–103.

65. Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204.

66. Sagan, V.; Maimaitijiang, M.; Bhadra, S.; Maimaitiyiming, M.; Brown, D.R.; Sidike, P.; Fritschi, F.B. Field-scale crop yield prediction using multi-temporal WorldView-3 and PlanetScope satellite data and deep learning. ISPRS J. Photogramm. Remote Sens. 2021, 174, 265–281.

67. Bi, L.; Hu, G.; Raza, M.M.; Kandel, Y.; Leandro, L.; Mueller, D. A Gated Recurrent Units (GRU)-Based Model for Early Detection of Soybean Sudden Death Syndrome through Time-Series Satellite Imagery. Remote Sens. 2020, 12, 3621.

68. Khaki, S.; Pham, H.; Wang, L. Simultaneous corn and soybean yield prediction from remote sensing data using deep transfer learning. Sci. Rep. 2021, 11, 11132.

69. Li, Y.; Zeng, H.; Zhang, M.; Wu, B.; Zhao, Y.; Yao, X.; Cheng, T.; Qin, X.; Wu, F. A county-level soybean yield prediction framework coupled with XGBoost and multidimensional feature engineering. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103269.

70. Schwalbert, R.A.; Amado, T.; Corassa, G.; Pott, L.P.; Prasad, P.; Ciampitti, I.A. Satellite-based soybean yield forecast: Integrating machine learning and weather data for improving crop yield prediction in southern Brazil. Agric. For. Meteorol. 2020, 284, 107886.

71. Xu, J.; Zhu, Y.; Zhong, R.; Lin, Z.; Xu, J.; Jiang, H.; Huang, J.; Li, H.; Lin, T. DeepCropMapping: A multi-temporal deep learning approach with improved spatial generalizability for dynamic corn and soybean mapping. Remote Sens. Environ. 2020, 247, 111946.

72. Zhang, S.; Ban, X.; Xiao, T.; Huang, L.; Zhao, J.; Huang, W.; Liang, D. Identification of Soybean Planting Areas Combining Fused Gaofen-1 Image Data and U-Net Model. Agronomy 2023, 13, 863.

73. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

74. Akintayo, A.; Tylka, G.L.; Singh, A.K.; Ganapathysubramanian, B.; Singh, A.; Sarkar, S. A deep learning framework to discern and count microscopic nematode eggs. Sci. Rep. 2018, 8, 9145.

75. Khaki, S.; Wang, L.; Archontoulis, S.V. A CNN-RNN Framework for Crop Yield Prediction. Front. Plant Sci. 2020, 10, 1750.

76. Liu, Y.; Wang, D.; He, F.; Wang, J.; Joshi, T.; Xu, D. Phenotype Prediction and Genome-Wide Association Study Using Deep Convolutional Neural Network of Soybean. Front. Genet. 2019, 10, 1091.

77. Tuia, D.; Persello, C.; Bruzzone, L. Domain Adaptation for the Classification of Remote Sensing Data: An Overview of Recent Advances. IEEE Geosci. Remote Sens. Mag. 2016, 4, 41–57.

78. Barbedo, J.G.A. Plant disease identification from individual lesions and spots using deep learning. Biosyst. Eng. 2019, 180, 96–107.

79. Stokel-Walker, C.; Noorden, R.V. The promise and peril of generative AI. Nature 2023, 614, 214–216.

Acronyms used in this article.

| Acronym | Meaning |
|---|---|
| AI | Artificial Intelligence |
| AtLSTM | Attention-based Long Short-Term Memory |
| CAE | Convolutional Autoencoder |
| CIR | Color Infrared |
| CNN | Convolutional Neural Network |
| CSAE | Convolutional Selective Autoencoder |
| DCNN | Deep Convolutional Neural Network |
| DIM-U-Net | Dense Inception Module-based U-Net |
| DRCNN | Deep Residual Convolutional Neural Network |
| DRNN | Deep Recurrent Neural Network |
| FCDNN | Fully Connected Deep Neural Network |
| FPN | Feature Pyramid Network |
| GAN | Generative Adversarial Network |
| HS | Hyperspectral |
| IoU | Intersection over Union |
| JULE | Joint Unsupervised Learning |
| LSTM | Long Short-Term Memory |
| MS | Multispectral |
| R-CNN | Region-based Convolutional Neural Network |
| RGB | Red, Green, Blue |
| RMSE | Root Mean Squared Error |
| SNP | Single Nucleotide Polymorphism |
| SR-AE | Sparse Regularized Autoencoder |
| SSD | Single Shot Detector |
| UAV | Unmanned Aerial Vehicle |
| VGG | Visual Geometry Group |
| VI | Vegetation Index |
| YOLO | You Only Look Once |
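IoU, listed among the acronyms above, measures how well a predicted region overlaps an annotated one, and it is the usual criterion for deciding whether a detection produced by models such as Faster R-CNN, SSD or YOLO counts as correct. The minimal Python sketch below computes IoU for axis-aligned bounding boxes. The `(x_min, y_min, x_max, y_max)` box layout, the helper names and the 0.5 matching threshold are common conventions assumed here for illustration, not settings taken from the cited studies.

```python
from typing import Tuple

# A box is (x_min, y_min, x_max, y_max) in pixel coordinates (assumed convention).
Box = Tuple[float, float, float, float]


def box_area(box: Box) -> float:
    """Area of an axis-aligned box; inverted (empty) boxes get zero area."""
    x_min, y_min, x_max, y_max = box
    return max(0.0, x_max - x_min) * max(0.0, y_max - y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes, in [0, 1]."""
    # Overlap rectangle; box_area() returns 0 when the boxes do not overlap.
    overlap = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    inter = box_area(overlap)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Treat a detection as correct when its IoU with the annotation reaches the threshold."""
    return iou(pred, truth) >= threshold


if __name__ == "__main__":
    annotated = (10, 10, 50, 50)   # hypothetical ground-truth weed box
    detected = (20, 20, 60, 60)    # hypothetical detector output
    print(round(iou(annotated, detected), 3))  # prints 0.391
    print(is_match(detected, annotated))       # prints False
```

The same ratio applies to segmentation masks, with pixel counts in place of box areas.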

Studies using proximal images as main input data (Section 3.1).

| Ref. | Context | Image Type | Main Techniques | Accuracy |
|---|---|---|---|---|
| [13] | Weed detection | RGB | VGG16, ResNet50, InceptionV3 | 0.99 |
| [22] | Weed detection | RGB | Faster R-CNN, SSD, YOLO v5 | 0.72 |
| [1] | Disease recognition | RGB | DenseNet201 | 0.97 |
| [23] | Water stress detection | RGB | AlexNet, GoogLeNet, Inception V3 | 0.93 |
| [24] | Root phenotyping | RGB | CAE | 0.66–0.99 |
| [15] | Weed detection | RGB | JULE, DeepCluster | 0.97 |
| [11] | Volunteer corn detection | RGB, CIR | GoogLeNet | 0.99 |
| [25] | Disease severity | RGB | FPN, U-Net, DeepLabv3+ | 0.95–0.98 |
| [3] | Pest detection | HS | Attention-ResNet | 0.95 |
| [26] | Stem phenotyping | RGB | YOLO X | 0.94 |
| [27] | Pod detection, yield prediction | RGB | YOLO v5 | 0.94 |
| [28] | Disease recognition | RGB | DCNN | 0.98 |
| [29] | Seed counting | RGB | Two-column CNN | 0.82–0.94 |
| [30] | Pest detection | RGB | Modified YOLO v4 | 0.87 |
| [31] | Disease severity | RGB | RetinaNet | 0.64–0.65 |
| [32] | Weed detection | RGB | Faster R-CNN, YOLO v3 | 0.89–0.98 |
| [19] | Defoliation estimation | RGB, synthetic | AlexNet, VGGNet, ResNet | 0.98 |
| [33] | Disease recognition | RGB | DIM-U-Net, SR-AE, LSTM | 0.99 |
| [34] | Weed detection | RGB | DCNN | 0.93 |
| [35] | Pest detection | RGB | Several CNNs | 0.94 |
| [12] | Seed-per-pod estimation | RGB | DCNN | 0.86 |
| [36] | Cultivar identification | RGB | ResNet-50, DenseNet-121, DenseNet | 0.84 |
| [37] | Disease recognition | RGB | AlexNet, GoogLeNet, ResNet-50 | 0.94 |
| [38] | Pod counting | RGB | YOLO POD | 0.97 |
| [39] | Seed phenotyping | RGB, synthetic | Mask R-CNN | 0.84–0.90 |
| [40] | Yield prediction, biomass | HS | DCNN | 0.76–0.91 |
| [41] | Disease recognition | RGB | GAN | 0.96 |
| [42] | Disease recognition | RGB | Faster R-CNN | 0.83 |
| [43] | Weed detection | RGB | Faster R-CNN | 0.99 |
| [44] | Pod counting | RGB | R-CNN, YOLO v3, YOLO v4, YOLO X | 0.90–0.98 |
| [45] | Seed defect recognition | RGB | MobileNet V2 | 0.98 |
| [46] | Seed counting | RGB | P2PNet-Soy | 0.87 |
| [47] | Cultivar identification | HS | DCNN | 0.90 |
| [48] | Cultivar identification | HS | Several CNNs | 0.90–0.97 |
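Reference [25] estimates disease severity through semantic segmentation (FPN, U-Net, DeepLabv3+). Severity is commonly expressed as the percentage of leaf area showing symptoms, so once a network has labelled every pixel, the estimate reduces to a pixel ratio. The sketch below assumes a hypothetical label convention (0 background, 1 healthy leaf, 2 lesion) and uses plain Python lists; it illustrates the ratio only and does not reproduce the pipeline of [25].

```python
from typing import List, Sequence

# Hypothetical per-pixel label convention for a segmentation output.
BACKGROUND, HEALTHY_LEAF, LESION = 0, 1, 2


def severity_percent(mask: Sequence[Sequence[int]]) -> float:
    """Percentage of leaf pixels (healthy + lesion) labelled as lesion."""
    leaf_pixels = 0
    lesion_pixels = 0
    for row in mask:
        for label in row:
            if label in (HEALTHY_LEAF, LESION):
                leaf_pixels += 1
            if label == LESION:
                lesion_pixels += 1
    if leaf_pixels == 0:
        raise ValueError("mask contains no leaf pixels")
    return 100.0 * lesion_pixels / leaf_pixels


if __name__ == "__main__":
    # Tiny hypothetical 4x4 mask: 9 leaf pixels, 3 of them symptomatic.
    mask: List[List[int]] = [
        [0, 1, 1, 1],
        [0, 1, 2, 2],
        [0, 1, 1, 2],
        [0, 0, 0, 0],
    ]
    print(round(severity_percent(mask), 1))  # prints 33.3
```

Background pixels are excluded from the denominator, so the estimate does not depend on how much of the image the leaf occupies.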

Studies using UAV images as main input data (Section 3.2).

| Ref. | Context | Image Type | Main Techniques | Accuracy |
|---|---|---|---|---|
| [51] | Weed detection | RGB | DRCNN | 0.97 |
| [22] | Weed detection | RGB | Faster R-CNN, SSD, YOLO v5 | 0.72 |
| [52] | Weed detection | RGB | DCNN | 0.98 |
| [15] | Weed detection | RGB | JULE, DeepCluster | 0.97 |
| [53] | Yield prediction | RGB, MS, Thermal | DCNN | 0.72 |
| [54] | Weed detection | RGB | VGG19 | 0.70 |
| [55] | Weed detection | RGB | MobileNetV2, ResNet50, CNN | 0.98 |
| [56] | Weed detection | RGB | Faster R-CNN | 0.67–0.85 |
| [57] | Yield prediction, plant height | MS, VI | Fully Connected CNN | 0.42–0.77 |
| [35] | Pest detection | RGB | Several CNNs | 0.94 |
| [58] | Disease recognition | RGB | Several CNNs | 0.99 |
| [59] | Herbicide damage | RGB | DenseNet121 | 0.82 |
| [60] | Maturity estimation | RGB | DCNN | 3.00 |
| [61] | Crop mapping | RGB, MS, VI | U-Net | 0.94 |
| [62] | Defoliation estimation | RGB | DefoNet CNN | 0.91 |
| [63] | Maturity estimation | RGB | DS-SoybeanNet CNN | 0.86–0.99 |
| [64] | Yield estimation | RGB, MS | DCNN | 0.78 |
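Several UAV studies above address regression rather than classification (yield prediction, plant height, maturity estimation). For such tasks the RMSE, listed among the acronyms, is a common error measure, and the coefficient of determination (R²) is another frequently reported one. The sketch below computes both; the sample yields are hypothetical, and which metric a given table entry reports has to be checked in the cited study.

```python
import math
from typing import Sequence


def _check(observed: Sequence[float], predicted: Sequence[float]) -> None:
    """Reject empty or mismatched inputs."""
    if len(observed) != len(predicted) or len(observed) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error, in the units of the target (e.g., t/ha)."""
    _check(observed, predicted)
    sq_err = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(sq_err / len(observed))


def r_squared(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    _check(observed, predicted)
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical plot yields (t/ha), not data from any cited study.
    observed = [3.0, 3.5, 4.0, 4.5]
    predicted = [3.2, 3.4, 4.1, 4.3]
    print(round(rmse(observed, predicted), 3))       # prints 0.158
    print(round(r_squared(observed, predicted), 3))  # prints 0.92
```

Unlike R², which is unitless and at most 1, the RMSE carries the units of the predicted variable, so its values are not confined to the 0 to 1 range.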

Studies using satellite images as main input data (Section 3.3).

| Ref. | Context | Image Type | Main Techniques | Accuracy |
|---|---|---|---|---|
| [67] | Disease detection | MS (VI) | FCDNN | 0.76–0.86 |
| [68] | Yield prediction | MS | DCNN (YieldNet) | 0.80–0.88 |
| [69] | Yield prediction | MS (VI), weather | DCNN | 0.76 |
| [66] | Yield prediction | MS | 2D and 3D ResNet-18 | 0.86–0.87 |
| [70] | Yield prediction | MS (VI), weather | LSTM | 0.32–0.68 |
| [50] | Yield prediction | MS (VI), weather | Deep CNN-LSTM | 0.74 |
| [71] | Crop mapping | MS | LSTM | 0.82–0.86 |
| [20] | Crop mapping | MS | AtLSTM | 0.98 |
| [72] | Crop mapping | MS | U-Net | 0.81–0.92 |
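Many of the satellite studies above feed models with vegetation indices (VI) derived from multispectral (MS) bands ([67], [69], [70], [50]), and several treat the imagery as multi-temporal sequences for LSTM-based networks ([70], [71], [20], [50]). The sketch below uses NDVI, one widely used VI, as an example and turns per-date band values into a field-level NDVI series of the kind such models consume. The choice of NDVI, the dictionary layout keyed by ISO date strings and the function names are assumptions for illustration; the indices actually used by each study are not specified here.

```python
from statistics import mean
from typing import Dict, List, Sequence


def ndvi(nir: Sequence[float], red: Sequence[float]) -> List[float]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); 0.0 where both bands are zero."""
    if len(nir) != len(red):
        raise ValueError("band arrays must have the same length")
    return [(n - r) / (n + r) if (n + r) != 0 else 0.0 for n, r in zip(nir, red)]


def field_ndvi_series(acquisitions: Dict[str, Dict[str, Sequence[float]]]) -> List[float]:
    """Mean field NDVI per acquisition, in chronological order.

    `acquisitions` maps an ISO date string (hypothetical layout) to the
    reflectance values of the field's pixels in the "nir" and "red" bands.
    ISO dates sort chronologically as plain strings.
    """
    return [
        mean(ndvi(bands["nir"], bands["red"]))
        for _, bands in sorted(acquisitions.items())
    ]


if __name__ == "__main__":
    # Hypothetical reflectances for a two-pixel field on two dates.
    acquisitions = {
        "2023-01-20": {"nir": [0.45, 0.50], "red": [0.05, 0.05]},
        "2022-12-10": {"nir": [0.30, 0.30], "red": [0.10, 0.10]},
    }
    series = field_ndvi_series(acquisitions)
    print([round(v, 2) for v in series])  # prints [0.5, 0.81]
```

A per-date sequence like this, optionally extended with weather variables, is the kind of input a recurrent model such as an LSTM processes step by step.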

Studies using other types of data as main input (Section 3.4).

| Ref. | Context | Data Type | Main Techniques | Accuracy |
|---|---|---|---|---|
| [74] | Nematode counting | Microscopy | CSAE | 0.94–0.95 |
| [75] | Yield prediction | Soil, weather, yield, management | DCNN, DRNN | 0.86–0.88 |
| [69] | Yield prediction | MS, weather | DCNN | 0.76 |
| [76] | Phenotype prediction | SNPs | Dual stream CNN | 0.40–0.67 |
| [18] | Yield prediction | Genotype, weather | LSTM | 0.73–0.79 |
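Reference [76] predicts phenotypes from SNP markers with a dual-stream CNN. Whatever the architecture, genotype calls must first be converted into numeric arrays. One common scheme, though not the only one, codes each SNP additively (0 homozygous reference, 1 heterozygous, 2 homozygous alternative) and then one-hot encodes it. The sketch below assumes that coding plus a hypothetical `-1` marker for missing calls; it is not presented as the encoding used in [76], which the table does not describe.

```python
from typing import List, Sequence

# Assumed additive coding: 0 = homozygous reference, 1 = heterozygous,
# 2 = homozygous alternative; -1 marks a missing call (hypothetical convention).
N_STATES = 3
MISSING = -1


def one_hot_genotypes(calls: Sequence[int]) -> List[List[int]]:
    """Encode one individual's SNP calls as a (num_snps x 3) one-hot matrix.

    Missing calls become all-zero rows, so they contribute no signal.
    """
    encoded = []
    for call in calls:
        row = [0] * N_STATES
        if call in (0, 1, 2):
            row[call] = 1
        elif call != MISSING:
            raise ValueError(f"unexpected genotype code: {call}")
        encoded.append(row)
    return encoded


if __name__ == "__main__":
    # Hypothetical calls for four SNPs of one soybean line.
    for row in one_hot_genotypes([0, 2, -1, 1]):
        print(row)
```

Stacking these matrices for all lines gives a three-channel, sequence-like input along the SNP axis, which a one-dimensional convolution can scan much as it would a signal.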

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Barbedo, J.G.A. Deep Learning for Soybean Monitoring and Management. Seeds 2023, 2, 340-356. https://doi.org/10.3390/seeds2030026