Neuromorphic Photonic On-Chip Computing

Abstract

1. Introduction

1.1. Neuromorphic Electronic Computing: On-Chip AI

1.2. Photonics: A Bright Solution Looking for a Dark Problem!

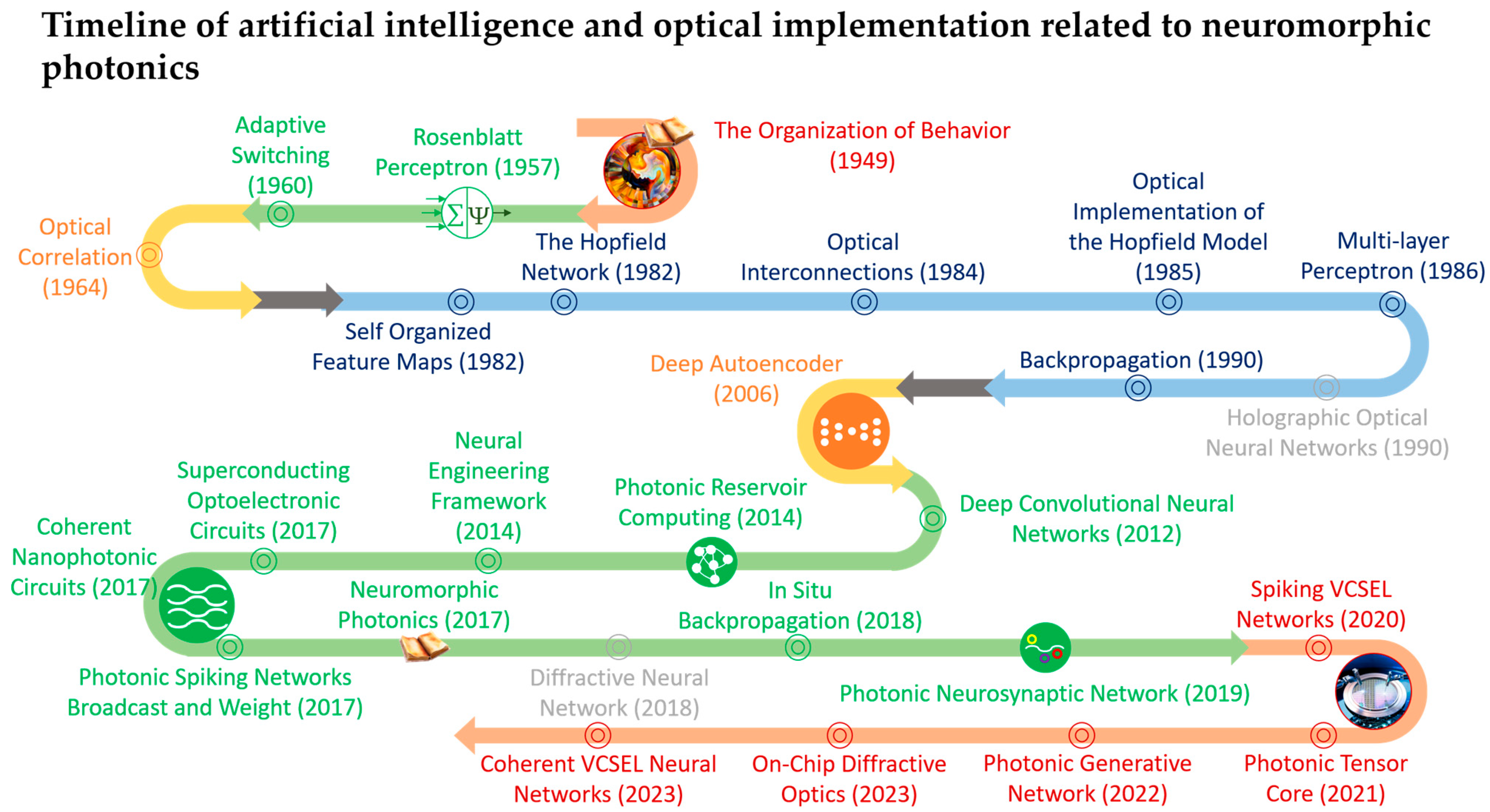

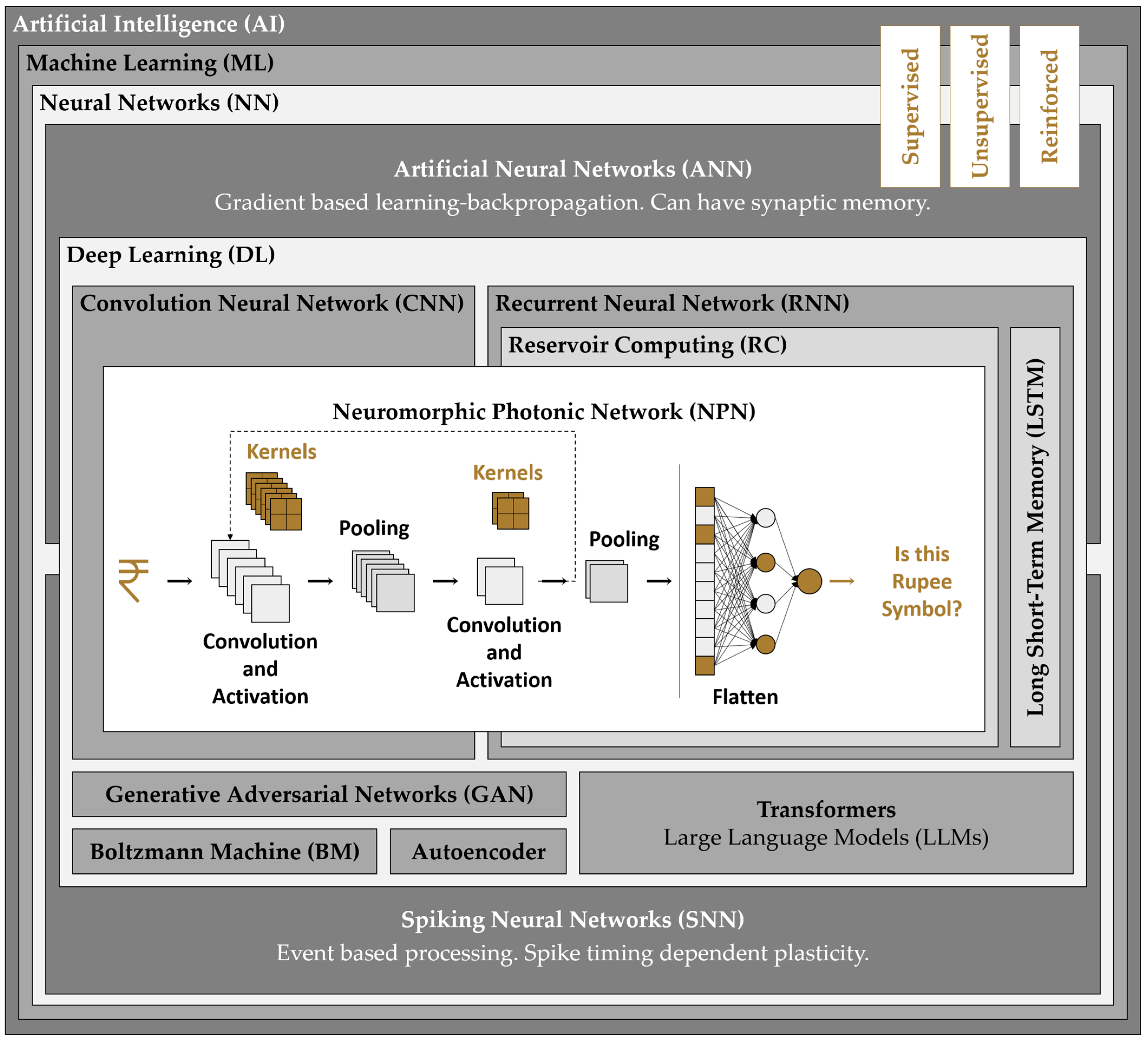

1.3. Neuromorphic Photonics in the Context of the AI Technology Revolution

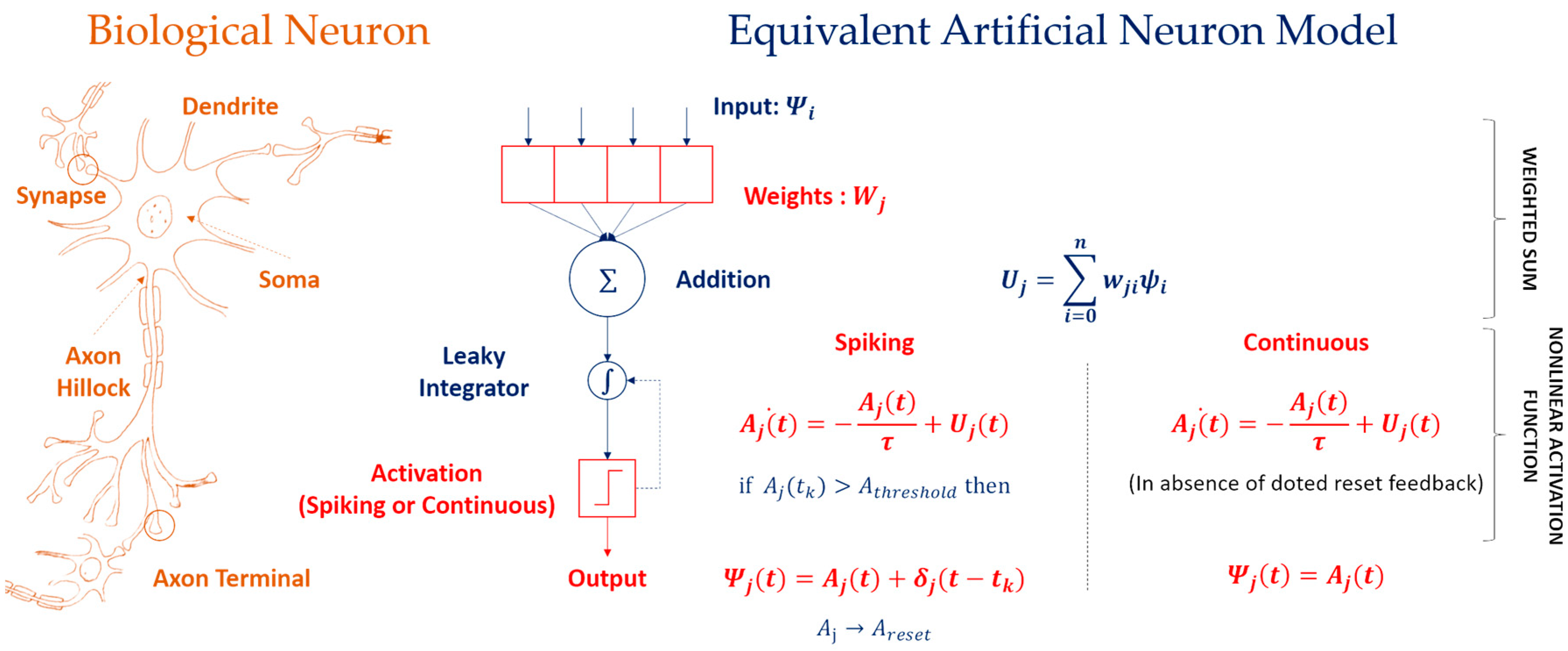

2. Neuromorphic Photonic Processing Node

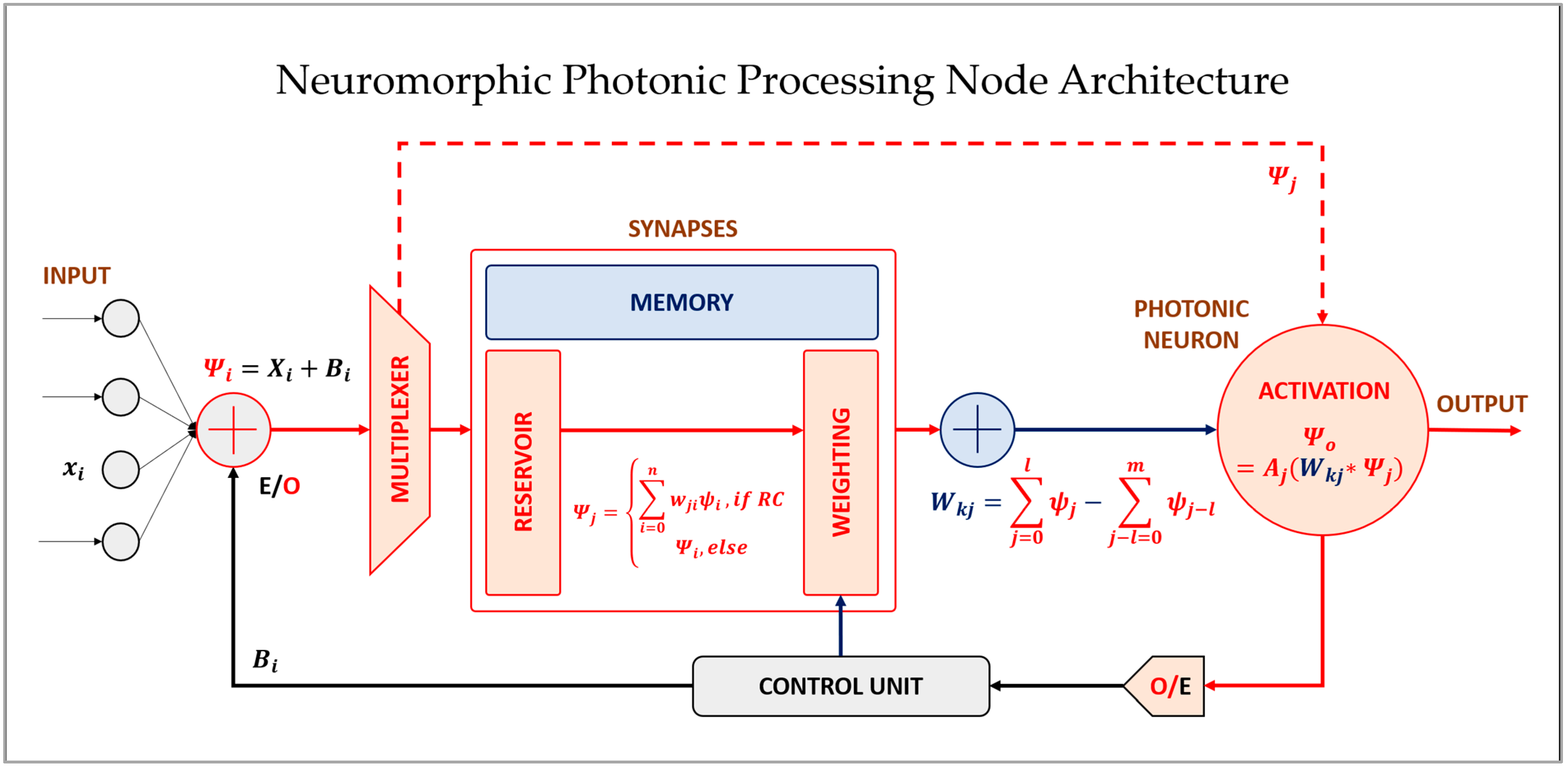

2.1. Neuromorphic Photonic Processing-Node Architecture

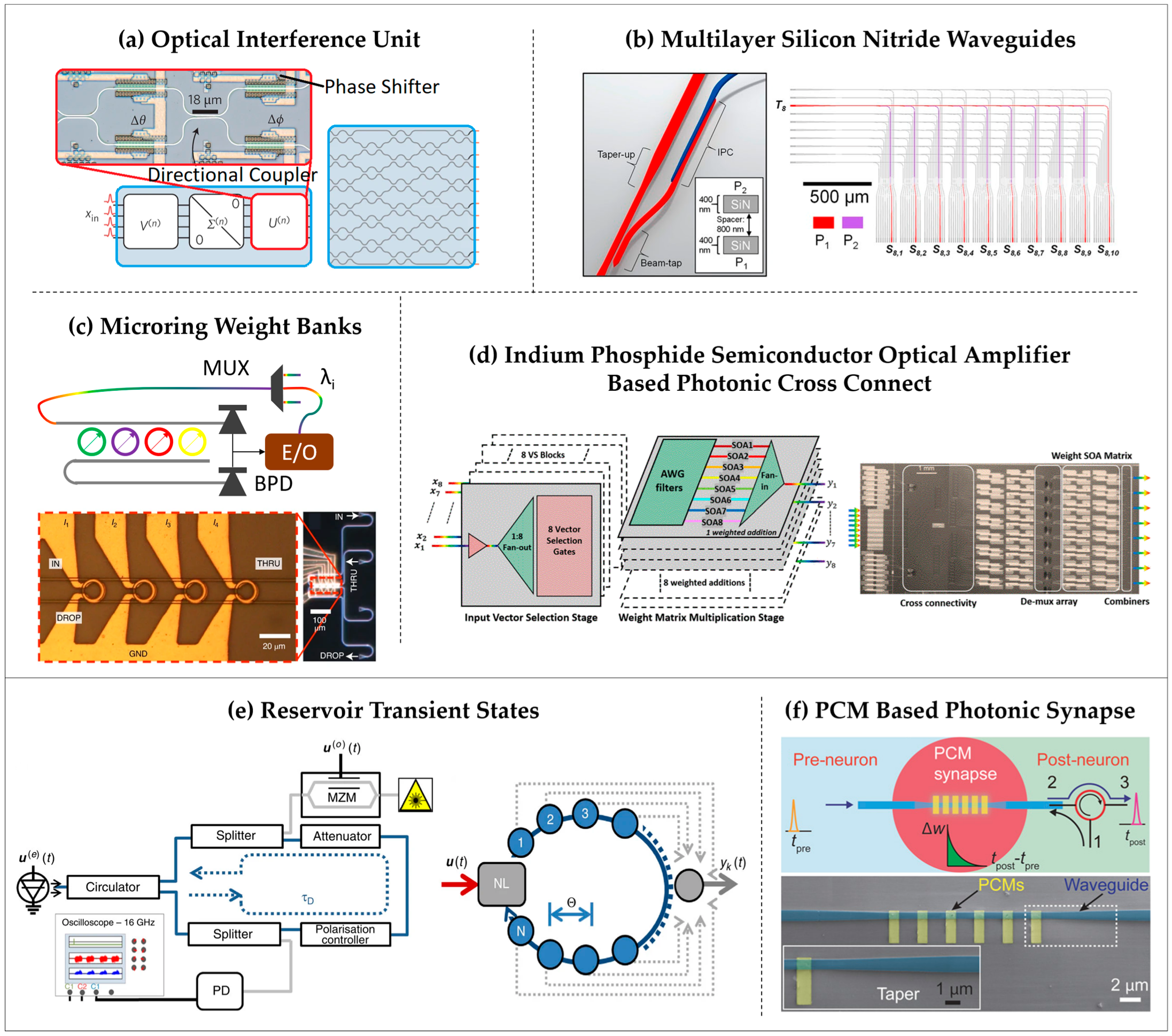

2.2. Weights (Synapses): Linear Operation

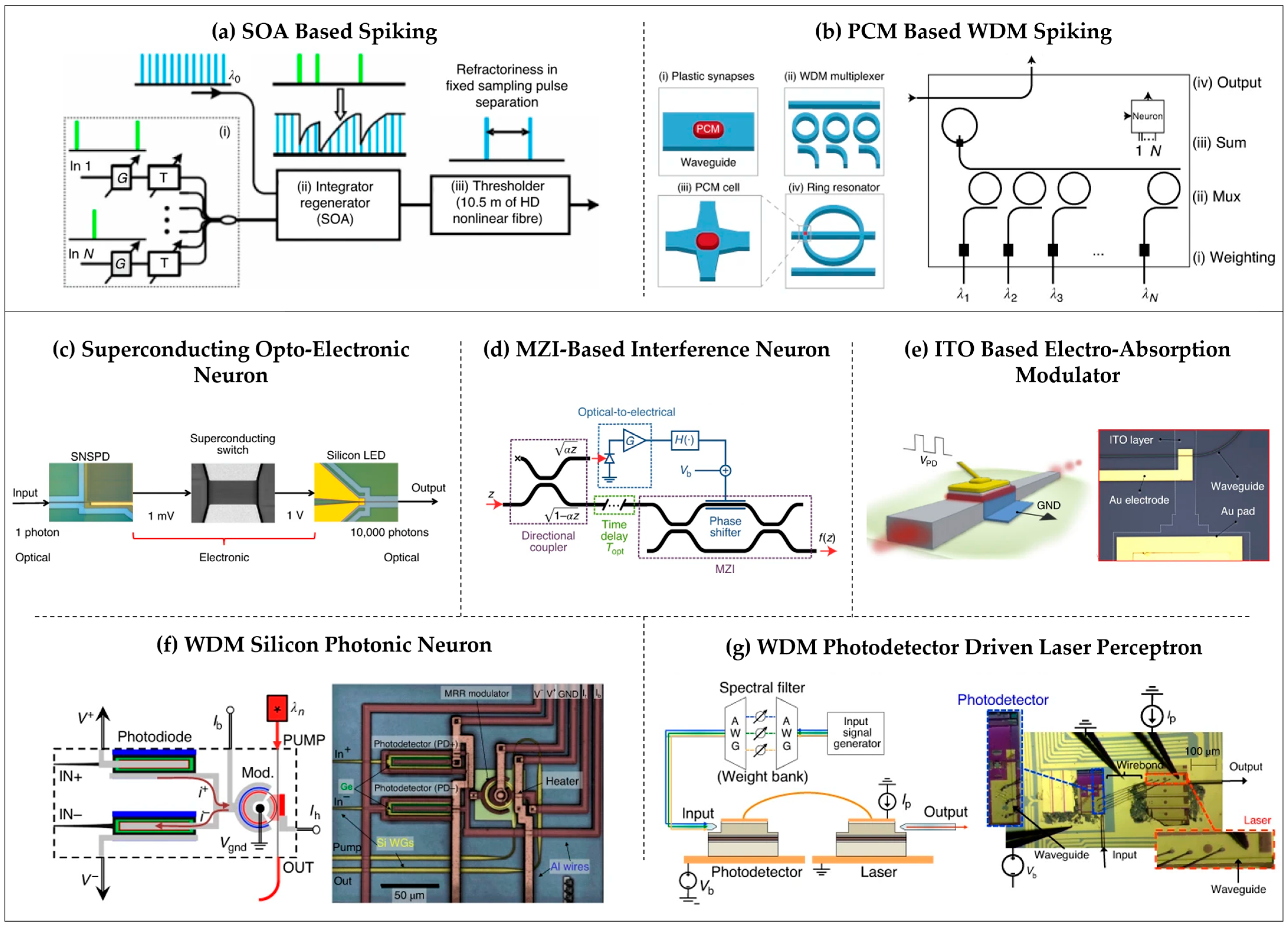

2.3. Photonic Neuron: Nonlinear Activation

3. Neuromorphic Photonic Networks

3.1. Neuromorphic Photonic Network: A Combined Structure

- Instead of using conventional convolutional layers, the ReConN employs NPPNs to perform convolutional operations directly on the input photonic signals, allowing the NPPNs to extract spatial features from the input data and apply nonlinear activation functions in the same step.

- NPPNs operating in RC mode, followed by unit weighting within the synapse for pooling operations (Figure 1), eliminate the need for separate types of layers. The NPPNs dynamically aggregate information across the spatial dimensions of the input data, facilitating downsampling and feature selection while preserving the advantages of photonic processing.

- A defining feature of the ReConN is a recurrent feedback loop enabled by the inherent memory (synaptic or delay-based) of the NPPNs. The output of the post-NPPNs is fed back into the pre-NPPNs of the network, allowing representations to be refined iteratively over multiple time steps and temporal dependencies in the input data to be captured.

- After the recurrent processing stage, the output is flattened for further processing (e.g., classification). This final layer uses standard classification techniques to map the learned features to specific output classes, enabling the network to make predictions from the input data.
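The four stages listed above can be illustrated with a toy forward pass. This is a minimal, idealised Python sketch under stated assumptions, not the authors' implementation or a device model: it assumes 1-D input signals, models each NPPN as a synaptic weighted sum followed by `math.tanh` (a placeholder for the photonic neuron's nonlinear transfer function), implements pooling as an NPPN whose synaptic weights are all 1, feeds the pooled (post-NPPN) output back to the convolutional (pre-NPPN) stage through a hypothetical feedback `gain`, and finishes with a linear readout whose weights are purely illustrative. All function names and parameters here are hypothetical.

```python
import math


def nppn(inputs, weights, bias=0.0):
    # Idealised NPPN: synaptic weighted sum (linear) + nonlinear activation.
    # math.tanh is an assumed placeholder for the photonic neuron's response.
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return math.tanh(total)


def photonic_conv(signal, kernel, feedback=None, gain=0.5):
    # Convolution and activation in one step: one NPPN per sliding window.
    # `feedback` is the recurrent drive from the post-NPPNs (None at t = 0).
    k = len(kernel)
    out = []
    for i in range(len(signal) - k + 1):
        drive = gain * feedback[i] if feedback is not None else 0.0
        out.append(nppn(signal[i:i + k], kernel, bias=drive))
    return out


def unit_weight_pool(features, size):
    # Pooling as an NPPN whose synaptic weights are all 1 (non-overlapping windows).
    return [nppn(features[i:i + size], [1.0] * size)
            for i in range(0, len(features) - size + 1, size)]


def upsample(pooled, size, length):
    # Repeat each pooled value `size` times, then zero-pad/truncate to `length`
    # so it can be fed back element-wise to the pre-NPPNs.
    repeated = [v for v in pooled for _ in range(size)]
    return (repeated + [0.0] * length)[:length]


def reconn_forward(frames, kernel, pool_size, readout, gain=0.5):
    # frames: one equal-length 1-D input signal per time step.
    feedback = None
    pooled = []
    for signal in frames:
        conv = photonic_conv(signal, kernel, feedback, gain)  # pre-NPPNs
        pooled = unit_weight_pool(conv, pool_size)             # post-NPPNs
        feedback = upsample(pooled, pool_size, len(conv))      # recurrent loop
    # Flatten (trivial for 1-D features) and apply a linear readout per class.
    features = list(pooled)
    scores = [sum(w * v for w, v in zip(row, features)) for row in readout]
    return scores.index(max(scores)), scores


# Toy usage with illustrative, untrained parameters.
frames = [[0.0, 1.0, 0.5, 0.0, 1.0, 0.2, 0.0]] * 3
kernel = [0.5, -0.25, 0.5]
readout = [[1.0, -1.0], [-1.0, 1.0]]  # hypothetical weights for 2 classes
label, scores = reconn_forward(frames, kernel, pool_size=2, readout=readout)
print(label, scores)
```

In this sketch the feedback enters each pre-NPPN as a bias, so the result at each time step depends on earlier frames; the explicit `feedback` variable simply stands in for the synaptic or delay-based memory that the NPPNs would provide in hardware.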

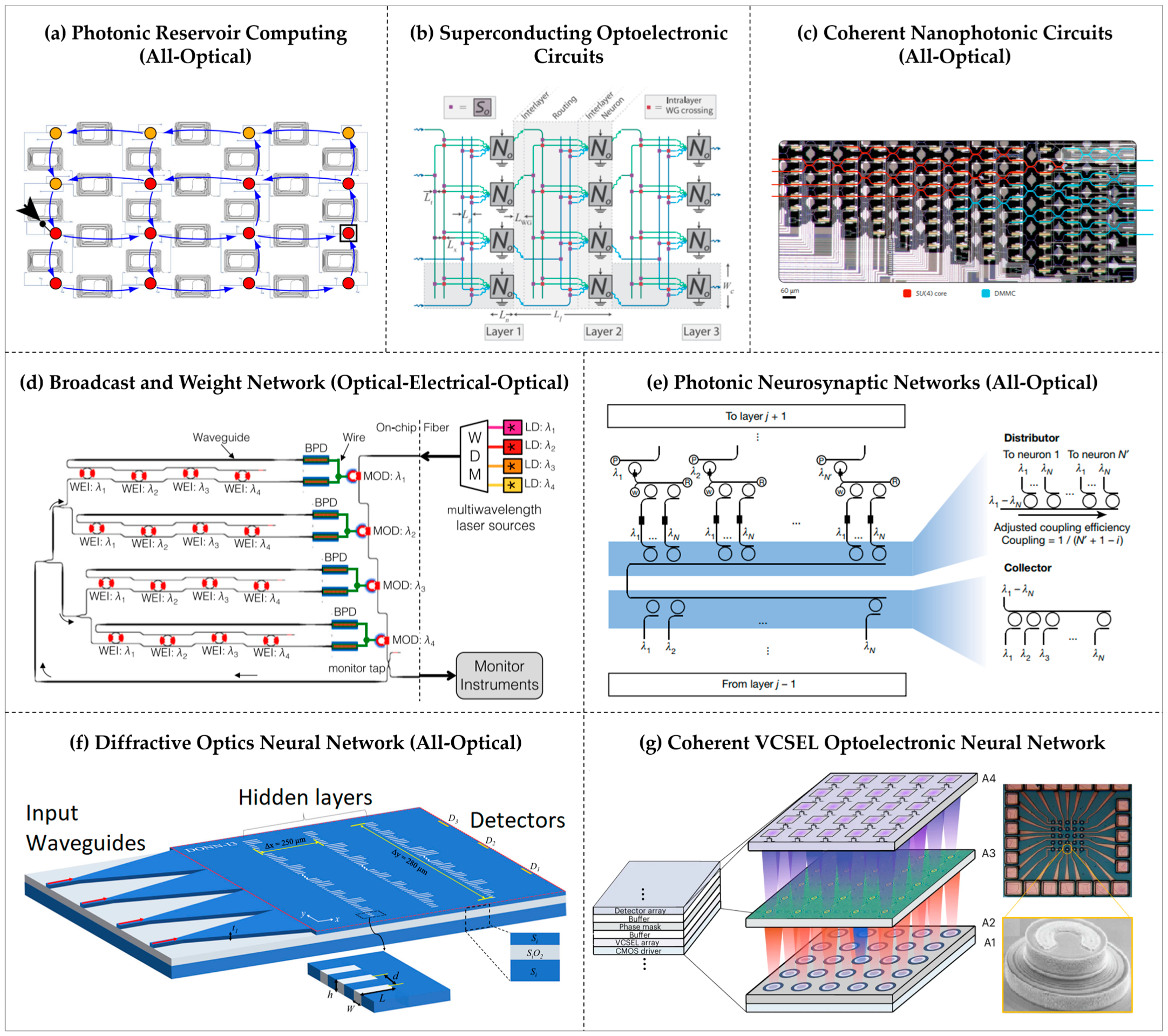

3.2. Neuromorphic Photonic Approaches

3.3. Algorithms and Methods for Training Neuromorphic Photonic Networks

4. Discussion

4.1. Exploring the Current State of the Art: Challenges and Solutions

4.1.1. Synergistic Co-Integration of Photonics with Electronics

4.1.2. On-Chip Light Sources on Semiconductor Platforms

4.2. Advancements and Future Directions in Scientific Inquiry

4.2.1. Fabrication Challenges

4.2.2. Integration of Photonic Components

4.2.3. Synaptic Memory

4.3. Envisioning the Future of Neuromorphic Photonics: A Visionary Perspective

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Batra, G.; Jacobson, Z.; Madhav, S.; Queirolo, A.; Santhanam, N. Artificial-Intelligence Hardware: New Opportunities for Semiconductor Companies; McKinsey&Company: New York, NY, USA, 2019. [Google Scholar]

- Rosenblatt, F. The Perceptron, a Perceiving and Recognizing Automaton Project Para; Cornell Aeronautical Laboratory: Buffalo, NY, USA, 1957. [Google Scholar]

- Nahmias, M.A.; de Lima, T.F.; Tait, A.N.; Peng, H.-T.; Shastri, B.J.; Prucnal, P.R. Photonic Multiply-Accumulate Operations for Neural Networks. IEEE J. Sel. Top. Quantum Electron. 2020, 26, 1–18. [Google Scholar] [CrossRef]

- Wetzstein, G.; Ozcan, A.; Gigan, S.; Fan, S.; Englund, D.; Soljačić, M.; Denz, C.; Miller, D.A.B.; Psaltis, D. Inference in Artificial Intelligence with Deep Optics and Photonics. Nature 2020, 588, 39–47. [Google Scholar] [CrossRef]

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning Representations by Back-Propagating Errors. Nature 1986, 323, 533–536. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn Syst. 2022, 33, 6999–7019. [Google Scholar] [CrossRef]

- Choquette, J. NVIDIA Hopper H100 GPU: Scaling Performance. IEEE Micro 2023, 43, 9–17. [Google Scholar] [CrossRef]

- Yonghui, W.; Mike, S.; Zhifeng, C.; Quoc, V.L.; Mohammad, N.; Wolfgang, M. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. arXiv 2016, arXiv:1609.08144. [Google Scholar] [CrossRef]

- Huang, C.; Sorger, V.J.; Miscuglio, M.; Al-Qadasi, M.; Mukherjee, A.; Lampe, L.; Nichols, M.; Tait, A.N.; Ferreira de Lima, T.; Marquez, B.A.; et al. Prospects and Applications of Photonic Neural Networks. Adv. Phys. X 2022, 7, 1981155. [Google Scholar] [CrossRef]

- Pei, J.; Deng, L.; Song, S.; Zhao, M.; Zhang, Y.; Wu, S.; Wang, G.; Zou, Z.; Wu, Z.; He, W.; et al. Towards Artificial General Intelligence with Hybrid Tianjic Chip Architecture. Nature 2019, 572, 106–111. [Google Scholar] [CrossRef]

- Tesla Dojo Technology. A Guide to Tesla’s Configurable Floating Point Formats & Arithmetic. Available online: https://digitalassets.tesla.com/tesla-contents/image/upload/tesla-dojo-technology.pdf (accessed on 1 March 2024).

- LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten Digit Recognition with a Back-Propagation Network. Adv. Neural Inf. Process. Syst. 1989, 2, 396–404. [Google Scholar]

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507. [Google Scholar] [CrossRef]

- Capper, D.; Jones, D.T.W.; Sill, M.; Hovestadt, V.; Schrimpf, D.; Sturm, D.; Koelsche, C.; Sahm, F.; Chavez, L.; Reuss, D.E.; et al. DNA Methylation-Based Classification of Central Nervous System Tumours. Nature 2018, 555, 469–474. [Google Scholar] [CrossRef]

- Stewart, T.C.; Eliasmith, C. Large-Scale Synthesis of Functional Spiking Neural Circuits. Proc. IEEE 2014, 102, 881–898. [Google Scholar] [CrossRef]

- Hodgkin, A.L.; Huxley, A.F. A Quantitative Description of Membrane Current and Its Application to Conduction and Excitation in Nerve. J. Physiol. 1952, 117, 500–544. [Google Scholar] [CrossRef] [PubMed]

- Nahmias, M.A.; Shastri, B.J.; Tait, A.N.; Prucnal, P.R. A Leaky Integrate-and-Fire Laser Neuron for Ultrafast Cognitive Computing. IEEE J. Sel. Top. Quantum Electron. 2013, 19, 1–12. [Google Scholar] [CrossRef]

- Izhikevich, E.M. Simple Model of Spiking Neurons. IEEE Trans. Neural. Netw. 2003, 14, 1569–1572. [Google Scholar] [CrossRef]

- Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A Million Spiking-Neuron Integrated Circuit with a Scalable Communication Network and Interface. Science 2014, 345, 668–673. [Google Scholar] [CrossRef]

- Tait, A.N.; Ferreira De Lima, T.; Nahmias, M.A.; Miller, H.B.; Peng, H.T.; Shastri, B.J.; Prucnal, P.R. Silicon Photonic Modulator Neuron. Phys. Rev. Appl. 2019, 11. [Google Scholar] [CrossRef]

- Feldmann, J.; Youngblood, N.; Wright, C.D.; Bhaskaran, H.; Pernice, W.H.P. All-Optical Spiking Neurosynaptic Networks with Self-Learning Capabilities. Nature 2019, 569, 208–214. [Google Scholar] [CrossRef]

- Williamson, I.A.D.; Hughes, T.W.; Minkov, M.; Bartlett, B.; Pai, S.; Fan, S. Reprogrammable Electro-Optic Nonlinear Activation Functions for Optical Neural Networks. IEEE J. Sel. Top. Quantum Electron. 2020, 26, 1–12. [Google Scholar] [CrossRef]

- Buckley, S.; Chiles, J.; McCaughan, A.N.; Moody, G.; Silverman, K.L.; Stevens, M.J.; Mirin, R.P.; Nam, S.W.; Shainline, J.M. All-Silicon Light-Emitting Diodes Waveguide-Integrated with Superconducting Single-Photon Detectors. Appl. Phys. Lett. 2017, 111. [Google Scholar] [CrossRef]

- Amin, R.; George, J.K.; Sun, S.; Ferreira de Lima, T.; Tait, A.N.; Khurgin, J.B.; Miscuglio, M.; Shastri, B.J.; Prucnal, P.R.; El-Ghazawi, T.; et al. ITO-Based Electro-Absorption Modulator for Photonic Neural Activation Function. APL Mater. 2019, 7. [Google Scholar] [CrossRef]

- Nahmias, M.A.; Tait, A.N.; Tolias, L.; Chang, M.P.; Ferreira de Lima, T.; Shastri, B.J.; Prucnal, P.R. An Integrated Analog O/E/O Link for Multi-Channel Laser Neurons. Appl. Phys. Lett. 2016, 108. [Google Scholar] [CrossRef]

- McCaughan, A.N.; Verma, V.B.; Buckley, S.M.; Allmaras, J.P.; Kozorezov, A.G.; Tait, A.N.; Nam, S.W.; Shainline, J.M. A Superconducting Thermal Switch with Ultrahigh Impedance for Interfacing Superconductors to Semiconductors. Nat. Electron. 2019, 2, 451–456. [Google Scholar] [CrossRef]

- Rosenbluth, D.; Kravtsov, K.; Fok, M.P.; Prucnal, P.R. A High Performance Pulse Processing Device. Opt. Express 2009, 17, 22767. [Google Scholar] [CrossRef]

- Romeira, B.; Javaloyes, J.; Ironside, C.N.; Figueiredo, J.M.L.; Balle, S.; Piro, O. Excitability and Optical Pulse Generation in Semiconductor Lasers Driven by Resonant Tunneling Diode Photo-Detectors. Opt. Express 2013, 21, 20931. [Google Scholar] [CrossRef]

- Coomans, W.; Gelens, L.; Beri, S.; Danckaert, J.; Van der Sande, G. Solitary and Coupled Semiconductor Ring Lasers as Optical Spiking Neurons. Phys. Rev. E 2011, 84, 036209. [Google Scholar] [CrossRef]

- Brunstein, M.; Yacomotti, A.M.; Sagnes, I.; Raineri, F.; Bigot, L.; Levenson, A. Excitability and Self-Pulsing in a Photonic Crystal Nanocavity. Phys. Rev. A 2012, 85, 031803. [Google Scholar] [CrossRef]

- Gupta, S.; Gahlot, S.; Roy, S. Design of Optoelectronic Computing Circuits with VCSEL-SA Based Neuromorphic Photonic Spiking. Optik 2021, 243, 167344. [Google Scholar] [CrossRef]

- Shastri, B.J.; Tait, A.N.; Ferreira de Lima, T.; Pernice, W.H.P.; Bhaskaran, H.; Wright, C.D.; Prucnal, P.R. Photonics for Artificial Intelligence and Neuromorphic Computing. Nat. Photonics 2021, 15, 102–114. [Google Scholar] [CrossRef]

- Shekhar, S.; Bogaerts, W.; Chrostowski, L.; Bowers, J.E.; Hochberg, M.; Soref, R.; Shastri, B.J. Roadmapping the next Generation of Silicon Photonics. Nat. Commun. 2024, 15, 751. [Google Scholar] [CrossRef] [PubMed]

- Berggren, K.; Xia, Q.; Likharev, K.K.; Strukov, D.B.; Jiang, H.; Mikolajick, T.; Querlioz, D.; Salinga, M.; Erickson, J.R.; Pi, S.; et al. Roadmap on Emerging Hardware and Technology for Machine Learning. Nanotechnology 2021, 32, 012002. [Google Scholar] [CrossRef]

- Furber, S.B.; Galluppi, F.; Temple, S.; Plana, L.A. The SpiNNaker Project. Proc. IEEE 2014, 102, 652–665. [Google Scholar] [CrossRef]

- Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99. [Google Scholar] [CrossRef]

- Benjamin, B.V.; Gao, P.; McQuinn, E.; Choudhary, S.; Chandrasekaran, A.R.; Bussat, J.-M.; Alvarez-Icaza, R.; Arthur, J.V.; Merolla, P.A.; Boahen, K. Neurogrid: A Mixed-Analog-Digital Multichip System for Large-Scale Neural Simulations. Proc. IEEE 2014, 102, 699–716. [Google Scholar] [CrossRef]

- Goodman, J.W. Fan-in and Fan-out with Optical Interconnections. Opt. Acta Int. J. Opt. 1985, 32, 1489–1496. [Google Scholar] [CrossRef]

- Hebb, D.O. The Organization of Behavior: A Neuropsychological Theory; Psychology Press: London, UK, 2005. [Google Scholar]

- Widrow, B.; Hoff, M.E. Others Adaptive Switching Circuits. In Proceedings of the IRE WESCON Convention Record; Institute of Radio Engineers: New York, NY, USA, 1960; Volume 4, pp. 96–104. [Google Scholar]

- Kohonen, T. Self-Organized Formation of Topologically Correct Feature Maps. Biol. Cybern. 1982, 43, 59–69. [Google Scholar] [CrossRef]

- Lugt, A.V. Signal Detection by Complex Spatial Filtering. IEEE Trans. Inf. Theory 1964, 10, 139–145. [Google Scholar] [CrossRef]

- Hopfield, J.J. Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558. [Google Scholar] [CrossRef]

- Farhat, N.H.; Psaltis, D.; Prata, A.; Paek, E. Optical Implementation of the Hopfield Model. Appl. Opt. 1985, 24, 1469. [Google Scholar] [CrossRef] [PubMed]

- Goodman, J.W.; Leonberger, F.J.; Kung, S.-Y.; Athale, R.A. Optical Interconnections for VLSI Systems. Proc. IEEE 1984, 72, 850–866. [Google Scholar] [CrossRef]

- Psaltis, D.; Brady, D.; Gu, X.-G.; Lin, S. Holography in Artificial Neural Networks. Nature 1990, 343, 325–330. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. Adv. Neural. Inf. Process Syst. 2012, 25, 84–90. [Google Scholar] [CrossRef]

- Vandoorne, K.; Mechet, P.; Van Vaerenbergh, T.; Fiers, M.; Morthier, G.; Verstraeten, D.; Schrauwen, B.; Dambre, J.; Bienstman, P. Experimental Demonstration of Reservoir Computing on a Silicon Photonics Chip. Nat. Commun. 2014, 5, 4541. [Google Scholar] [CrossRef] [PubMed]

- Shainline, J.M.; Buckley, S.M.; Mirin, R.P.; Nam, S.W. Superconducting Optoelectronic Circuits for Neuromorphic Computing. Phys. Rev. Appl. 2017, 7, 034013. [Google Scholar] [CrossRef]

- Shen, Y.; Harris, N.C.; Skirlo, S.; Prabhu, M.; Baehr-Jones, T.; Hochberg, M.; Sun, X.; Zhao, S.; Larochelle, H.; Englund, D.; et al. Deep Learning with Coherent Nanophotonic Circuits. Nat. Photonics 2017, 11, 441–446. [Google Scholar] [CrossRef]

- Tait, A.N.; De Lima, T.F.; Zhou, E.; Wu, A.X.; Nahmias, M.A.; Shastri, B.J.; Prucnal, P.R. Neuromorphic Photonic Networks Using Silicon Photonic Weight Banks. Sci. Rep. 2017, 7, 7430. [Google Scholar] [CrossRef]

- Nahmias, M.A.; Shastri, B.J.; Tait, A.N.; Ferreira de Lima, T.; Prucnal, P.R. Neuromorphic Photonics. Opt. Photonics News. 2018, 29, 34. [Google Scholar] [CrossRef]

- Lin, X.; Rivenson, Y.; Yardimci, N.T.; Veli, M.; Luo, Y.; Jarrahi, M.; Ozcan, A. All-Optical Machine Learning Using Diffractive Deep Neural Networks. Science 2018, 361, 1004–1008. [Google Scholar] [CrossRef]

- Feldmann, J.; Youngblood, N.; Karpov, M.; Gehring, H.; Li, X.; Stappers, M.; Le Gallo, M.; Fu, X.; Lukashchuk, A.; Raja, A.S.; et al. Parallel Convolutional Processing Using an Integrated Photonic Tensor Core. Nature 2021, 589, 52–58. [Google Scholar] [CrossRef]

- Wu, C.; Yang, X.; Yu, H.; Peng, R.; Takeuchi, I.; Chen, Y.; Li, M. Harnessing Optoelectronic Noises in a Photonic Generative Network. Sci. Adv. 2022, 8, abm2956. [Google Scholar] [CrossRef] [PubMed]

- Fu, T.; Zang, Y.; Huang, Y.; Du, Z.; Huang, H.; Hu, C.; Chen, M.; Yang, S.; Chen, H. Photonic Machine Learning with On-Chip Diffractive Optics. Nat. Commun. 2023, 14, 70. [Google Scholar] [CrossRef]

- Hughes, T.W.; Minkov, M.; Shi, Y.; Fan, S. Training of Photonic Neural Networks through in Situ Backpropagation and Gradient Measurement. Optica 2018, 5, 864. [Google Scholar] [CrossRef]

- Robertson, J.; Hejda, M.; Bueno, J.; Hurtado, A. Ultrafast Optical Integration and Pattern Classification for Neuromorphic Photonics Based on Spiking VCSEL Neurons. Sci. Rep. 2020, 10, 6098. [Google Scholar] [CrossRef]

- Chen, Z.; Sludds, A.; Davis, R.; Christen, I.; Bernstein, L.; Ateshian, L.; Heuser, T.; Heermeier, N.; Lott, J.A.; Reitzenstein, S.; et al. Deep Learning with Coherent VCSEL Neural Networks. Nat. Photonics 2023, 17, 723–730. [Google Scholar] [CrossRef]

- Sawada, J.; Akopyan, F.; Cassidy, A.S.; Taba, B.; Debole, M.V.; Datta, P.; Alvarez-Icaza, R.; Amir, A.; Arthur, J.V.; Andreopoulos, A.; et al. TrueNorth Ecosystem for Brain-Inspired Computing: Scalable Systems, Software, and Applications. In Proceedings of the SC16: International Conference for High Performance Computing, Networking, Storage and Analysis, Salt Lake City, UT, USA, 13–18 November 2016; pp. 130–141. [Google Scholar]

- Siegel, J. With a Systems Approach to Chips, Microsoft Aims to Tailor Everything ‘from Silicon to Service’ to Meet AI Demand. Microsoft News, 15 November 2023. Available online: https://news.microsoft.com/source/features/ai/in-house-chips-silicon-to-service-to-meet-ai-demand/ (accessed on 1 June 2025).

- Miller, D.A.B. Rationale and Challenges for Optical Interconnects to Electronic Chips. Proc. IEEE 2000, 88, 728–749. [Google Scholar] [CrossRef]

- Nozaki, K.; Matsuo, S.; Fujii, T.; Takeda, K.; Shinya, A.; Kuramochi, E.; Notomi, M. Femtofarad Optoelectronic Integration Demonstrating Energy-Saving Signal Conversion and Nonlinear Functions. Nat. Photonics 2019, 13, 454–459. [Google Scholar] [CrossRef]

- Han, J.; Jentzen, A.; Weinan, E. Solving High-Dimensional Partial Differential Equations Using Deep Learning. Proc. Natl. Acad. Sci. USA 2018, 115, 8505–8510. [Google Scholar] [CrossRef]

- Tait, A.N.; Ma, P.Y.; Ferreira de Lima, T.; Blow, E.C.; Chang, M.P.; Nahmias, M.A.; Shastri, B.J.; Prucnal, P.R. Demonstration of Multivariate Photonics: Blind Dimensionality Reduction with Integrated Photonics. J. Light. Technol. 2019, 37, 5996–6006. [Google Scholar] [CrossRef]

- Markram, H. The Human Brain Project. Sci. Am. 2012, 306, 50–55. [Google Scholar] [CrossRef]

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2023. [Google Scholar] [CrossRef]

- Goodman, J.W.; Dias, A.R.; Woody, L.M. Fully Parallel, High-Speed Incoherent Optical Method for Performing Discrete Fourier Transforms. Opt. Lett. 1978, 2, 1. [Google Scholar] [CrossRef]

- Keyes, R.W. Optical Logic-in the Light of Computer Technology. Opt. Acta: Int. J. Opt. 1985, 32, 525–535. [Google Scholar] [CrossRef]

- Patel, D.; Ghosh, S.; Chagnon, M.; Samani, A.; Veerasubramanian, V.; Osman, M.; Plant, D.V. Design, Analysis, and Transmission System Performance of a 41 GHz Silicon Photonic Modulator. Opt. Express 2015, 23, 14263. [Google Scholar] [CrossRef]

- Argyris, A. Photonic Neuromorphic Technologies in Optical Communications. Nanophotonics 2022, 11, 897–916. [Google Scholar] [CrossRef] [PubMed]

- Zhou, H.; Dong, J.; Cheng, J.; Dong, W.; Huang, C.; Shen, Y.; Zhang, Q.; Gu, M.; Qian, C.; Chen, H.; et al. Photonic Matrix Multiplication Lights up Photonic Accelerator and Beyond. Light Sci. Appl. 2022, 11, 30. [Google Scholar] [CrossRef] [PubMed]

- Carolan, J.; Harrold, C.; Sparrow, C.; Martín-López, E.; Russell, N.J.; Silverstone, J.W.; Shadbolt, P.J.; Matsuda, N.; Oguma, M.; Itoh, M.; et al. Universal Linear Optics. Science 2015, 349, 711–716. [Google Scholar] [CrossRef]

- Moridsadat, M.; Tamura, M.; Chrostowski, L.; Shekhar, S.; Shastri, B.J. Design Methodology for Silicon Organic Hybrid Modulators: From Physics to System-Level Modeling. arXiv 2024. [Google Scholar] [CrossRef]

- Gaeta, A.L.; Lipson, M.; Kippenberg, T.J. Photonic-Chip-Based Frequency Combs. Nat. Photonics 2019, 13, 158–169. [Google Scholar] [CrossRef]

- Xavier, J.; Probst, J.; Becker, C. Deterministic Composite Nanophotonic Lattices in Large Area for Broadband Applications. Sci. Rep. 2016, 6, 38744. [Google Scholar] [CrossRef]

- Xavier, J.; Probst, J.; Back, F.; Wyss, P.; Eisenhauer, D.; Löchel, B.; Rudigier-Voigt, E.; Becker, C. Quasicrystalline-Structured Light Harvesting Nanophotonic Silicon Films on Nanoimprinted Glass for Ultra-Thin Photovoltaics. Opt. Mater. Express 2014, 4, 2290. [Google Scholar] [CrossRef]

- Stojanović, V.; Ram, R.J.; Popović, M.; Lin, S.; Moazeni, S.; Wade, M.; Sun, C.; Alloatti, L.; Atabaki, A.; Pavanello, F.; et al. Monolithic Silicon-Photonic Platforms in State-of-the-Art CMOS SOI Processes [Invited]. Opt. Express 2018, 26, 13106. [Google Scholar] [CrossRef]

- Sun, C.; Wade, M.T.; Lee, Y.; Orcutt, J.S.; Alloatti, L.; Georgas, M.S.; Waterman, A.S.; Shainline, J.M.; Avizienis, R.R.; Lin, S.; et al. Single-Chip Microprocessor That Communicates Directly Using Light. Nature 2015, 528, 534–538. [Google Scholar] [CrossRef] [PubMed]

- Bogaerts, W.; Chrostowski, L. Silicon Photonics Circuit Design: Methods, Tools and Challenges. Laser Photon. Rev. 2018, 12, 201700237. [Google Scholar] [CrossRef]

- Tait, A.N.; De Lima, T.F.; Nahmias, M.A.; Shastri, B.J.; Prucnal, P.R. Continuous Calibration of Microring Weights for Analog Optical Networks. IEEE Photonics Technol. Lett. 2016, 28, 887–890. [Google Scholar] [CrossRef]

- Ríos, C.; Youngblood, N.; Cheng, Z.; Le Gallo, M.; Pernice, W.H.P.; Wright, C.D.; Sebastian, A.; Bhaskaran, H. In-Memory Computing on a Photonic Platform. Sci. Adv. 2019, 5, aau5759. [Google Scholar] [CrossRef]

- Rios, C.; Stegmaier, M.; Hosseini, P.; Wang, D.; Scherer, T.; Wright, C.D.; Bhaskaran, H.; Pernice, W.H.P. Integrated All-Photonic Non-Volatile Multi-Level Memory. Nat. Photonics 2015, 9, 725–732. [Google Scholar] [CrossRef]

- Cheng, Z.; Ríos, C.; Pernice, W.H.P.; Wright, C.D.; Bhaskaran, H. On-Chip Photonic Synapse. Sci. Adv. 2017, 3, 1700160. [Google Scholar] [CrossRef]

- Seoane, J.J.; Parra, J.; Navarro-Arenas, J.; Recaman, M.; Schouteden, K.; Locquet, J.P.; Sanchis, P. Ultra-High Endurance Silicon Photonic Memory Using Vanadium Dioxide. NPJ Nanophotonics 2024, 1, 37. [Google Scholar] [CrossRef]

- Pintus, P.; Dumont, M.; Shah, V.; Murai, T.; Shoji, Y.; Huang, D.; Moody, G.; Bowers, J.E.; Youngblood, N. Integrated Non-Reciprocal Magneto-Optics with Ultra-High Endurance for Photonic in-Memory Computing. Nat. Photonics 2024, 19, 54–62. [Google Scholar] [CrossRef]

- Betker, J.; Goh, G.; Jing, L.; Brooks, T.; Wang, J.; Li, L.; Ouyang, L.; Zhuang, J.; Lee, J.; Guo, Y.; et al. Improving Image Generation with Better Captions. Available online: https://cdn.openai.com/papers/dall-e-3.pdf (accessed on 25 February 2024).

- Xu, B.; Huang, Y.; Fang, Y.; Wang, Z.; Yu, S.; Xu, R. Recent Progress of Neuromorphic Computing Based on Silicon Photonics: Electronic–Photonic Co-Design, Device, and Architecture. Photonics 2022, 9, 698. [Google Scholar] [CrossRef]

- Bai, Y.; Xu, X.; Tan, M.; Sun, Y.; Li, Y.; Wu, J.; Morandotti, R.; Mitchell, A.; Xu, K.; Moss, D.J. Photonic Multiplexing Techniques for Neuromorphic Computing. Nanophotonics 2023, 12, 795–817. [Google Scholar] [CrossRef]

- Marković, D.; Mizrahi, A.; Querlioz, D.; Grollier, J. Physics for Neuromorphic Computing. Nat. Rev. Phys. 2020, 2, 499–510. [Google Scholar] [CrossRef]

- Jaeger, H.; Haas, H. Harnessing Nonlinearity: Predicting Chaotic Systems and Saving Energy in Wireless Communication. Science 2004, 304, 78–80. [Google Scholar] [CrossRef] [PubMed]

- Maass, W. Networks of Spiking Neurons: The Third Generation of Neural Network Models. Neural Netw. 1997, 10, 1659–1671. [Google Scholar] [CrossRef]

- Song, S.; Miller, K.D.; Abbott, L.F. Competitive Hebbian Learning through Spike-Timing-Dependent Synaptic Plasticity. Nat. Neurosci 2000, 3, 919–926. [Google Scholar] [CrossRef]

- Reck, M.; Zeilinger, A.; Bernstein, H.J.; Bertani, P. Experimental Realization of Any Discrete Unitary Operator. Phys. Rev. Lett. 1994, 73, 58–61. [Google Scholar] [CrossRef]

- Chiles, J.; Buckley, S.M.; Nam, S.W.; Mirin, R.P.; Shainline, J.M. Design, Fabrication, and Metrology of 10 × 100 Multi-Planar Integrated Photonic Routing Manifolds for Neural Networks. APL Photonics 2018, 3, 5039641. [Google Scholar] [CrossRef]

- Jayatilleka, H.; Murray, K.; Guillén-Torres, M.Á.; Caverley, M.; Hu, R.; Jaeger, N.A.F.; Chrostowski, L.; Shekhar, S. Wavelength Tuning and Stabilization of Microring-Based Filters Using Silicon in-Resonator Photoconductive Heaters. Opt. Express 2015, 23, 25084. [Google Scholar] [CrossRef]

- Tait, A.N.; Jayatilleka, H.; De Lima, T.F.; Ma, P.Y.; Nahmias, M.A.; Shastri, B.J.; Shekhar, S.; Chrostowski, L.; Prucnal, P.R. Feedback Control for Microring Weight Banks. Opt. Express 2018, 26, 26422. [Google Scholar] [CrossRef]

- Bogaerts, W.; De Heyn, P.; Van Vaerenbergh, T.; De Vos, K.; Kumar Selvaraja, S.; Claes, T.; Dumon, P.; Bienstman, P.; Van Thourhout, D.; Baets, R. Silicon Microring Resonators. Laser Photon. Rev. 2012, 6, 47–73. [Google Scholar] [CrossRef]

- Tait, A.N.; Wu, A.X.; De Lima, T.F.; Zhou, E.; Shastri, B.J.; Nahmias, M.A.; Prucnal, P.R. Microring Weight Banks. IEEE J. Sel. Top. Quantum Electron. 2016, 22, 312–325. [Google Scholar] [CrossRef]

- Shi, B.; Calabretta, N.; Stabile, R. Deep Neural Network Through an InP SOA-Based Photonic Integrated Cross-Connect. IEEE J. Sel. Top. Quantum Electron. 2020, 26, 1–11. [Google Scholar] [CrossRef]

- Brunner, D.; Soriano, M.C.; Mirasso, C.R.; Fischer, I. Parallel Photonic Information Processing at Gigabyte per Second Data Rates Using Transient States. Nat. Commun. 2013, 4, 2368. [Google Scholar] [CrossRef]

- Komljenovic, T.; Davenport, M.; Hulme, J.; Liu, A.Y.; Santis, C.T.; Spott, A.; Srinivasan, S.; Stanton, E.J.; Zhang, C.; Bowers, J.E. Heterogeneous Silicon Photonic Integrated Circuits. J. Light. Technol. 2016, 34, 20–35. [Google Scholar] [CrossRef]

- Wang, Y.; Lv, Z.; Chen, J.; Wang, Z.; Zhou, Y.; Zhou, L.; Chen, X.; Han, S. Photonic Synapses Based on Inorganic Perovskite Quantum Dots for Neuromorphic Computing. Adv. Mater. 2018, 30, 201802883. [Google Scholar] [CrossRef]

- Sorianello, V.; Midrio, M.; Contestabile, G.; Asselberghs, I.; Van Campenhout, J.; Huyghebaert, C.; Goykhman, I.; Ott, A.K.; Ferrari, A.C.; Romagnoli, M. Graphene–Silicon Phase Modulators with Gigahertz Bandwidth. Nat. Photonics 2018, 12, 40–44. [Google Scholar] [CrossRef]

- He, M.; Xu, M.; Ren, Y.; Jian, J.; Ruan, Z.; Xu, Y.; Gao, S.; Sun, S.; Wen, X.; Zhou, L.; et al. High-Performance Hybrid Silicon and Lithium Niobate Mach–Zehnder Modulators for 100 Gbit S−1 and beyond. Nat. Photonics 2019, 13, 359–364. [Google Scholar] [CrossRef]

- Harris, N.C.; Ma, Y.; Mower, J.; Baehr-Jones, T.; Englund, D.; Hochberg, M.; Galland, C. Efficient, Compact and Low Loss Thermo-Optic Phase Shifter in Silicon. Opt. Express 2014, 22, 10487. [Google Scholar] [CrossRef]

- Hill, M.T.; Frietman, E.E.E.; de Waardt, H.; Khoe, G.-d.; Dorren, H.J.S. All Fiber-Optic Neural Network Using Coupled SOA Based Ring Lasers. IEEE Trans Neural. Netw. 2002, 13, 1504–1513. [Google Scholar] [CrossRef]

- Selmi, F.; Braive, R.; Beaudoin, G.; Sagnes, I.; Kuszelewicz, R.; Barbay, S. Relative Refractory Period in an Excitable Semiconductor Laser. Phys. Rev. Lett. 2014, 112, 183902. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.; Ren, G.; Feleppa, T.; Liu, X.; Boes, A.; Mitchell, A.; Lowery, A.J. Self-Calibrating Programmable Photonic Integrated Circuits. Nat. Photonics 2022, 16, 595–602. [Google Scholar] [CrossRef]

- Hamerly, R.; Bandyopadhyay, S.; Englund, D. Asymptotically Fault-Tolerant Programmable Photonics. Nat. Commun 2022, 13, 6831. [Google Scholar] [CrossRef]

- Beri, S.; Mashall, L.; Gelens, L.; Van der Sande, G.; Mezosi, G.; Sorel, M.; Danckaert, J.; Verschaffelt, G. Excitability in Optical Systems Close to -Symmetry. Phys. Lett. A 2010, 374, 739–743. [Google Scholar] [CrossRef]

- Gelens, L.; Mashal, L.; Beri, S.; Coomans, W.; Van der Sande, G.; Danckaert, J.; Verschaffelt, G. Excitability in Semiconductor Microring Lasers: Experimental and Theoretical Pulse Characterization. Phys. Rev. A 2010, 82, 063841. [Google Scholar] [CrossRef]

- Peng, H.T.; Nahmias, M.A.; De Lima, T.F.; Tait, A.N.; Shastri, B.J.; Prucnal, P.R. Neuromorphic Photonic Integrated Circuits. IEEE J. Sel. Top. Quantum Electron. 2018, 24, 2840448. [Google Scholar] [CrossRef]

- Wang, Y.E.; Wei, G.Y.; Brooks, D. Benchmarking TPU, GPU, and CPU Platforms for Deep Learning. arXiv 2019. [Google Scholar] [CrossRef]

- Davies, M. Benchmarks for Progress in Neuromorphic Computing. Nat. Mach. Intell. 2019, 1, 386–388. [Google Scholar] [CrossRef]

- Xiang, S.; Han, Y.; Song, Z.; Guo, X.; Zhang, Y.; Ren, Z.; Wang, S.; Ma, Y.; Zou, W.; Ma, B.; et al. A Review: Photonics Devices, Architectures, and Algorithms for Optical Neural Computing. J. Semicond. 2021, 42, 023105. [Google Scholar] [CrossRef]

- Dong, B.; Aggarwal, S.; Zhou, W.; Ali, U.E.; Farmakidis, N.; Lee, J.S.; He, Y.; Li, X.; Kwong, D.-L.; Wright, C.D.; et al. Higher-Dimensional Processing Using a Photonic Tensor Core with Continuous-Time Data. Nat. Photonics 2023, 17, 1080–1088. [Google Scholar] [CrossRef]

- Bueno, J.; Maktoobi, S.; Froehly, L.; Fischer, I.; Jacquot, M.; Larger, L.; Brunner, D. Reinforcement Learning in a Large-Scale Photonic Recurrent Neural Network. Optica 2018, 5, 756. [Google Scholar] [CrossRef]

- Freiberger, M.; Katumba, A.; Bienstman, P.; Dambre, J. Training Passive Photonic Reservoirs with Integrated Optical Readout. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 1943–1953. [Google Scholar] [CrossRef] [PubMed]

- Boshgazi, S.; Jabbari, A.; Mehrany, K.; Memarian, M. Virtual Reservoir Computer Using an Optical Resonator. Opt. Mater. Express 2022, 12, 1140. [Google Scholar] [CrossRef]

- Duport, F.; Schneider, B.; Smerieri, A.; Haelterman, M.; Massar, S. All-Optical Reservoir Computing. Opt. Express 2012, 20, 22783. [Google Scholar] [CrossRef]

- Shainline, J.M.; Buckley, S.M.; McCaughan, A.N.; Chiles, J.T.; Jafari Salim, A.; Castellanos-Beltran, M.; Donnelly, C.A.; Schneider, M.L.; Mirin, R.P.; Nam, S.W. Superconducting Optoelectronic Loop Neurons. J. Appl. Phys. 2019, 126, 5096403. [Google Scholar] [CrossRef]

- Xu, S.; Wang, J.; Wang, R.; Chen, J.; Zou, W. High-Accuracy Optical Convolution Unit Architecture for Convolutional Neural Networks by Cascaded Acousto-Optical Modulator Arrays. Opt. Express 2019, 27, 19778. [Google Scholar] [CrossRef]

- Zhang, H.; Gu, M.; Jiang, X.D.; Thompson, J.; Cai, H.; Paesani, S.; Santagati, R.; Laing, A.; Zhang, Y.; Yung, M.H.; et al. An Optical Neural Chip for Implementing Complex-Valued Neural Network. Nat. Commun. 2021, 12, 457. [Google Scholar] [CrossRef]

- Zhu, H.H.; Zou, J.; Zhang, H.; Shi, Y.Z.; Luo, S.B.; Wang, N.; Cai, H.; Wan, L.X.; Wang, B.; Jiang, X.D.; et al. Space-Efficient Optical Computing with an Integrated Chip Diffractive Neural Network. Nat. Commun. 2022, 13, 1044. [Google Scholar] [CrossRef]

- Roques-Carmes, C.; Shen, Y.; Zanoci, C.; Prabhu, M.; Atieh, F.; Jing, L.; Dubček, T.; Mao, C.; Johnson, M.R.; Čeperić, V.; et al. Heuristic Recurrent Algorithms for Photonic Ising Machines. Nat. Commun. 2020, 11, 249. [Google Scholar] [CrossRef]

- Xu, S.; Wang, J.; Yi, S.; Zou, W. High-Order Tensor Flow Processing Using Integrated Photonic Circuits. Nat. Commun. 2022, 13, 7970. [Google Scholar] [CrossRef]

- Xu, X.; Tan, M.; Corcoran, B.; Wu, J.; Boes, A.; Nguyen, T.G.; Chu, S.T.; Little, B.E.; Hicks, D.G.; Morandotti, R.; et al. 11 TOPS Photonic Convolutional Accelerator for Optical Neural Networks. Nature 2021, 589, 44–51. [Google Scholar] [CrossRef] [PubMed]

- Wu, C.; Yu, H.; Lee, S.; Peng, R.; Takeuchi, I.; Li, M. Programmable Phase-Change Metasurfaces on Waveguides for Multimode Photonic Convolutional Neural Network. Nat. Commun. 2021, 12, 96. [Google Scholar] [CrossRef]

- Robertson, J.; Kirkland, P.; Alanis, J.A.; Hejda, M.; Bueno, J.; Di Caterina, G.; Hurtado, A. Ultrafast Neuromorphic Photonic Image Processing with a VCSEL Neuron. Sci. Rep. 2022, 12, 4874. [Google Scholar] [CrossRef]

- Zhang, T.; Wang, J.; Dan, Y.; Lanqiu, Y.; Dai, J.; Han, X.; Sun, X.; Xu, K. Efficient Training and Design of Photonic Neural Network through Neuroevolution. Opt. Express 2019, 27, 37150. [Google Scholar] [CrossRef]

- Antonik, P.; Marsal, N.; Brunner, D.; Rontani, D. Bayesian Optimisation of Large-Scale Photonic Reservoir Computers. Cogn. Comput. 2023, 15, 1452–1460. [Google Scholar] [CrossRef]

- Scellier, B.; Bengio, Y. Equilibrium Propagation: Bridging the Gap between Energy-Based Models and Backpropagation. Front Comput. Neurosci. 2017, 11, 00024. [Google Scholar] [CrossRef]

- Xu, T.; Zhang, W.; Zhang, J.; Luo, Z.; Xiao, Q.; Wang, B.; Luo, M.; Xu, X.; Shastri, B.J.; Prucnal, P.R.; et al. Control-Free and Efficient Silicon Photonic Neural Networks via Hardware-Aware Training and Pruning. arXiv 2024, arXiv:10.48550/arXiv.2401.08180. [Google Scholar]

- Zhao, X.; Lv, H.; Chen, C.; Tang, S.; Liu, X.; Qi, Q. On-Chip Reconfigurable Optical Neural Networks. Preprints 2021, 1–21.

- Sebastian, A.; Le Gallo, M.; Burr, G.W.; Kim, S.; Bright Sky, M.; Eleftheriou, E. Tutorial: Brain-Inspired Computing Using Phase-Change Memory Devices. J. Appl. Phys. 2018, 124, 5042413.

- Sun, J.; Kumar, R.; Sakib, M.; Driscoll, J.B.; Jayatilleka, H.; Rong, H. A 128 Gb/s PAM4 Silicon Microring Modulator with Integrated Thermo-Optic Resonance Tuning. J. Light. Technol. 2019, 37, 110–115.

- Zhou, Z.; Yin, B.; Michel, J. On-Chip Light Sources for Silicon Photonics. Light Sci. Appl. 2015, 4, e358.

- Prorok, S.; Petrov, A.Y.; Eich, M.; Luo, J.; Jen, A.K.-Y. Trimming of High-Q-Factor Silicon Ring Resonators by Electron Beam Bleaching. Opt. Lett. 2012, 37, 3114.

- Xu, R.; Taheriniya, S.; Varri, A.; Ulanov, M.; Konyshev, I.; Krämer, L.; McRae, L.; Ebert, F.L.; Bankwitz, J.R.; Ma, X.; et al. Mode Conversion Trimming in Asymmetric Directional Couplers Enabled by Silicon Ion Implantation. Nano Lett. 2024, 24, 10813–10819.

- Schrauwen, J.; Van Thourhout, D.; Baets, R. Trimming of Silicon Ring Resonator by Electron Beam Induced Compaction and Strain. Opt. Express 2008, 16, 3738.

- Pérez, D.; Gasulla, I.; Das Mahapatra, P.; Capmany, J. Principles, Fundamentals, and Applications of Programmable Integrated Photonics. Adv. Opt. Photonics 2020, 12, 709.

- Varri, A.; Taheriniya, S.; Brückerhoff-Plückelmann, F.; Bente, I.; Farmakidis, N.; Bernhardt, D.; Rösner, H.; Kruth, M.; Nadzeyka, A.; Richter, T.; et al. Scalable Non-Volatile Tuning of Photonic Computational Memories by Automated Silicon Ion Implantation. Adv. Mater. 2024, 36, 202310596.

- Li, R.; Gong, Y.; Huang, H.; Zhou, Y.; Mao, S.; Wei, Z.; Zhang, Z. Photonics for Neuromorphic Computing: Fundamentals, Devices, and Opportunities. Adv. Mater. 2024, 37, 2312825.

- Chen, S.; Li, W.; Wu, J.; Jiang, Q.; Tang, M.; Shutts, S.; Elliott, S.N.; Sobiesierski, A.; Seeds, A.J.; Ross, I.; et al. Electrically Pumped Continuous-Wave III–V Quantum Dot Lasers on Silicon. Nat. Photonics 2016, 10, 307–311.

- Wang, C.; Zhang, M.; Chen, X.; Bertrand, M.; Shams-Ansari, A.; Chandrasekhar, S.; Winzer, P.; Lončar, M. Integrated Lithium Niobate Electro-Optic Modulators Operating at CMOS-Compatible Voltages. Nature 2018, 562, 101–104.

- Zhang, Y.; Guo, X.; Ji, X.; Shen, J.; He, A.; Su, Y. What Can Be Integrated on the Silicon Photonics Platform and How? APL Photonics 2024, 9, 0220463.

- Patel, D.; Samani, A.; Veerasubramanian, V.; Ghosh, S.; Plant, D.V. Silicon Photonic Segmented Modulator-Based Electro-Optic DAC for 100 Gb/s PAM-4 Generation. IEEE Photonics Technol. Lett. 2015, 27, 2433–2436.

- Lam, S.; Khaled, A.; Bilodeau, S.; Marquez, B.A.; Prucnal, P.R.; Chrostowski, L.; Shastri, B.J.; Shekhar, S. Dynamic Electro-Optic Analog Memory for Neuromorphic Photonic Computing. arXiv 2024.

| NPN Type [Ref.] | Synapse | Synaptic Memory | Photonic Neuron | Physics | Topology | Remarks |
|---|---|---|---|---|---|---|
| RC [49] | Reservoir nodes with multiple feedback loops | 280 ps interconnection delay | Intrinsic nonlinearity of the photodetector | Superposition principle | Reservoir | No power consumption in the reservoir and high bit-rate scalability (>100 Gbit/s). Cannot be generalized to complex computing applications. |
| SOC [50] | Interplanar or lateral waveguide coupler with electromechanically tunable coupling | MEMS capacitor | Nanowires, arranged in parallel or in series as a detector, that switch from the superconducting to the normal-metal phase when photon absorption drives them above threshold | Superconductivity and MEMS capacitance | ANN and SNN | Highly scalable, zero static power dissipation, extraordinary device efficiencies. Requires cryogenic temperatures (2 K); bandwidth limited to 1 GHz. |
| CNC [51] | OIU of beamsplitters and phase shifters for unitary transformations, plus attenuators for the diagonal matrix | NA | Saturable-absorber nonlinearity implemented as a mathematical function | TO effect | Two-layer DNN | Can implement any arbitrary ANN; may allow online training. Bulky and requires high driving voltage. |
| B&W [52] | Reconfigurable TO-MRR filters | NA | Mach–Zehnder modulator | TO effect | CTRNN | Capable of implementing generalized reconfigurable RNNs. Bandwidth limited to 1 GHz. |
| MN [22] | Optical waveguides with PCM on top that controls the propagating optical mode | GST phase | Optical ReLU designed via an MRR with PCM on top | WDM and PCM dynamics | ANN | No waveguide crossings, no accumulation of errors or signal contamination. GST endurance of ~10⁴ switching cycles; sub-nanosecond operation speed. |
| DO [57] | Pretrained phase values on distinct hidden layers via SSSD | NA | Diffractive unit composed of three identical SSSDs | Huygens–Fresnel principle and TO effect | Three-layer DNN | Scalable, simple structural design, and all-optical passive operation. Requires external algorithmic compensation. |
| VNN [60] | Homodyne detection with phase encoding | NA | Homodyne VCSEL nonlinearity | Lasing principle | Three-layer ANN | Scalable; can be integrated into 2D/3D arrays with inline nonlinearity. Limited by phase stability and integration scale. |
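The CNC entry builds its synaptic weights from beamsplitters and phase shifters, i.e., tunable Mach–Zehnder interferometers (MZIs). As a minimal sketch, assuming ideal lossless 50:50 couplers and a phase-shifter convention chosen purely for illustration (not taken from ref. [51]), the Python below shows that a single MZI is a 2×2 unitary whose power split is set by its internal phase θ: with light injected into port 0, the bar-port power is sin²(θ/2) and the cross-port power is cos²(θ/2). The helper names (`coupler`, `phase`, `mzi`, `is_unitary`) are hypothetical.

```python
import cmath
import math
from typing import List

# Hypothetical sketch: an ideal, lossless MZI made of two 50:50 couplers, an
# internal phase shifter (theta) and an external phase shifter (phi).
# The conventions below are assumptions for illustration only.

Matrix = List[List[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def dagger(a: Matrix) -> Matrix:
    """Conjugate transpose of a 2x2 matrix."""
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]


def coupler() -> Matrix:
    """Ideal 50:50 directional coupler (beamsplitter)."""
    s = 1 / math.sqrt(2)
    return [[s + 0j, 1j * s], [1j * s, s + 0j]]


def phase(angle: float) -> Matrix:
    """Phase shifter acting on the upper arm only."""
    return [[cmath.exp(1j * angle), 0j], [0j, 1 + 0j]]


def mzi(theta: float, phi: float = 0.0) -> Matrix:
    """Transfer matrix B * P(theta) * B * P(phi) (external phase applied first)."""
    return matmul(coupler(), matmul(phase(theta), matmul(coupler(), phase(phi))))


def is_unitary(m: Matrix, tol: float = 1e-12) -> bool:
    """Check m * m^dagger == identity within a tolerance (i.e., lossless)."""
    prod = matmul(m, dagger(m))
    return all(abs(prod[i][j] - (1 if i == j else 0)) < tol for i in range(2) for j in range(2))


if __name__ == "__main__":
    for theta in (0.0, math.pi / 2, math.pi):
        m = mzi(theta)
        # Light enters port 0, so output powers are |m[0][0]|^2 and |m[1][0]|^2.
        bar, cross = abs(m[0][0]) ** 2, abs(m[1][0]) ** 2
        print(f"theta={theta:.3f}  bar={bar:.3f}  cross={cross:.3f}  unitary={is_unitary(m)}")
```

Running the script shows full cross-port transmission at θ = 0, an even split at θ = π/2, and full bar-port transmission at θ = π, with the matrix remaining unitary throughout. In the scheme the CNC row describes, meshes of such 2×2 blocks realize the unitary factors of a weight matrix, while attenuators supply the diagonal factor.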

| NPN Type | Device Basic Unit [Ref.] | Topology | Training | Data (Train:Test, %) | Application | Remark or Accuracy, % Exp. (Sim.) | NBUs/mm² | Operational Power (pJ/FLOP) | Throughput (TOPS) |
|---|---|---|---|---|---|---|---|---|---|
| RC | Spiral nodes [49] | Reservoir | Fivefold cross-validation, ridge regression, and winner-takes-all approach | 10,000 bits for the Boolean task and 5-bit headers | Arbitrary Boolean logic and 5-bit header recognition | >99 (-) | 62,500 | 0 | 0.4 |
| SOC | SNSPD [50] | ANN and SNN | Backpropagation and STDP | - | - | Designed for scalability | 7 to 4000 | 0.00014 | 19.6 |
| CNC | Tunable MZI [51] | Two-layer DNN | SGD | 360 data points (50:50) | Vowel recognition | 76.7 (91.7) | <10 | 0.076 | 6.4 |
| B&W | TO-MRR [52] | CTRNN | Bifurcation analysis | 500 data points from 0.05 to 0.85 | Lorenz attractor | B&W is isomorphic to CTRNN | 1600 | 288 | 1.2 |
| MN | X-PCM [55] | CNN | Backpropagation | MNIST handwritten digits | Digit recognition | 95.3 (96.1) | <5 | 0.0059 | 28.8 |
| DO | SWU [57] | Three-layer DNN | Pretrained backpropagation (adaptive moment estimation) | Iris (80:20) and MNIST handwritten digits (85:15) | Classification | 90 (90) and 86 (96.3) | 2000 | 0.00001 | 13,800 |
| VNN | VCSEL arrays [60] | Optical NN | Backpropagation | MNIST handwritten digits | Image classification | 93.1 (95.1) | 6 | 0.007 | 50 |

For more comprehensive information, readers may also refer to other reported works [56,58,59,110,111,118,127,128,129,130,131].
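The last two comparison columns can be combined with simple arithmetic because their prefixes cancel: an energy of E pJ per operation at a throughput of R TOPS corresponds to a power of P = (E × 10⁻¹² J) × (R × 10¹² s⁻¹) = E·R W, and the energy efficiency in TOPS/W is 1/E. The Python sketch below applies this to the table values. It assumes that one FLOP and one operation are interchangeable and takes the reported figures at face value; the derived powers and efficiencies are illustrative only and are not values reported in the cited works. The function names are hypothetical.

```python
# Illustrative arithmetic on the comparison columns of the table above.
# Assumption: 1 FLOP == 1 operation, so pJ/FLOP and TOPS combine directly.

# (energy per operation in pJ, throughput in TOPS), copied from the table
TABLE = {
    "RC": (0.0, 0.4),
    "SOC": (0.00014, 19.6),
    "CNC": (0.076, 6.4),
    "B&W": (288.0, 1.2),
    "MN": (0.0059, 28.8),
    "DO": (0.00001, 13_800.0),
    "VNN": (0.007, 50.0),
}


def implied_power_watts(pj_per_op: float, tops: float) -> float:
    """P = (E * 1e-12 J) * (R * 1e12 op/s); the prefixes cancel, leaving E * R watts."""
    return pj_per_op * tops


def tops_per_watt(pj_per_op: float) -> float:
    """1 pJ/op corresponds to 1 TOPS/W; a reported 0 pJ/op is treated as unbounded."""
    return float("inf") if pj_per_op == 0 else 1.0 / pj_per_op


if __name__ == "__main__":
    # Rank platforms from most to least energy-efficient (face-value numbers).
    ranked = sorted(TABLE.items(), key=lambda kv: -tops_per_watt(kv[1][0]))
    for name, (energy, tops) in ranked:
        eff = tops_per_watt(energy)
        power = implied_power_watts(energy, tops)
        print(f"{name:4s} {eff:>12.1f} TOPS/W  {power:>10.4f} W")
```

Taken at face value, the CNC row implies about 0.49 W (0.076 pJ × 6.4 TOPS) and roughly 13 TOPS/W, while the DO row's 0.00001 pJ/FLOP corresponds to 10⁵ TOPS/W. The passive RC reservoir reports 0 pJ/FLOP, so its efficiency is shown as unbounded; such a comparison ignores laser, detection, and control overheads that the table does not list.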

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gupta, S.; Xavier, J. Neuromorphic Photonic On-Chip Computing. Chips 2025, 4, 34. https://doi.org/10.3390/chips4030034