Predicting Maturity of Coconut Fruit from Acoustic Signal with Applications of Deep Learning

Abstract

1. Introduction

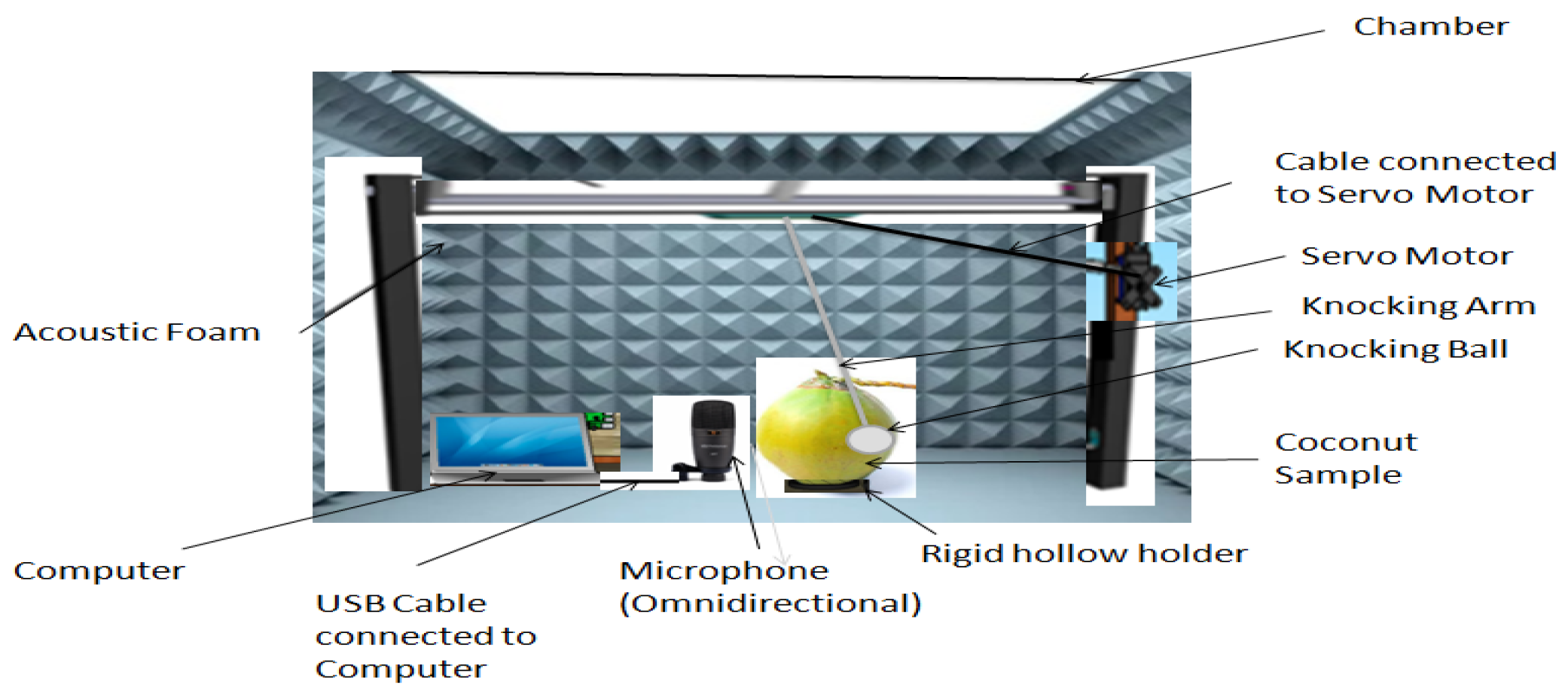

2. Materials and Methods

2.1. Data

2.2. Proposed Scheme

2.2.1. Wavelet Scattering Transform (WST)

2.2.2. Customized CNN

2.2.3. Pretrained CNNs

- ResNet50: ResNet50 is a convolutional neural network (CNN) built on the deep residual learning framework, which makes it possible to train very deep networks with hundreds of layers [14]. This architecture introduced the Residual Network (ResNet) idea to address the problems of vanishing and exploding gradients. ResNet50 has 50 weighted layers (49 convolutional layers and 1 fully connected layer), together with 1 max pooling layer and 1 global average pooling layer, and its convolutional body is divided into 4 stages, each of which contains a set of residual blocks. The residual blocks preserve information from earlier layers, which enables the network to learn better representations of the input data. The main feature of ResNet is the skip connection, which propagates information across layers and thereby makes it possible to build deeper networks.

- InceptionV3: InceptionV3 [15] is a CNN built around the “inception module”, which applies 1 × 1, 3 × 3, and 5 × 5 convolutions in parallel and concatenates their outputs. The InceptionV3 model is 42 layers deep and consists of multiple convolutional and pooling layers followed by fully connected layers. One of its key features is that it scales to large datasets and handles input images of varying resolutions and sizes. Its design reduces the number of parameters, which speeds up training. InceptionV3 is the third version of the Inception architecture, whose first version is known as GoogLeNet. The main advantages of InceptionV3 are factorization into smaller convolutions, efficient grid size reduction, and the use of auxiliary classifiers to tackle the vanishing gradient problem during the training of a very deep network [16].

- MobileNetV2: MobileNetV2 [17] is a 53-layer convolutional neural network that uses depthwise separable convolutions to reduce model size and complexity. In this design, computationally expensive standard convolutional layers are replaced by depthwise separable convolutions, each consisting of a 3 × 3 depthwise convolutional layer followed by a 1 × 1 (pointwise) convolutional layer. MobileNetV2 extends the MobileNetV1 network by adding inverted residuals and linear bottleneck layers [18]. Both the ReLU6 activation function and a linear activation function are used in MobileNetV2 [19]: the linear activation is applied at the low-dimensional output of each inverted residual block, which reduces the information loss that a nonlinearity would cause there. Moreover, in contrast to ResNet, MobileNetV2 uses shortcut connections only when the stride is 1 [20].
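For the ResNet50 item above, the skip connection can be illustrated with a minimal sketch. This is not the ResNet50 implementation used in this work: dense matrices stand in for the convolutions of a bottleneck block, batch normalization is omitted, and all sizes (`d`, `hidden`) and weights are hypothetical. It shows that when the residual branch contributes nothing, the block simply passes its input through, which is why stacking such blocks does not destroy earlier information.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Simplified residual block: y = ReLU(F(x) + x), with F(x) = W2 @ ReLU(W1 @ x).

    Dense matrices are a stand-in for the convolutions of a real ResNet50
    bottleneck block; batch normalization is omitted for brevity.
    """
    residual = w2 @ relu(w1 @ x)  # learned residual mapping F(x)
    return relu(residual + x)     # identity skip connection adds x back


rng = np.random.default_rng(0)
d, hidden = 8, 4                     # hypothetical feature and bottleneck sizes
x = np.abs(rng.standard_normal(d))   # non-negative, like the output of a previous ReLU

# If the residual branch learns nothing (all-zero weights), the block is an identity map.
y_identity = residual_block(x, np.zeros((hidden, d)), np.zeros((d, hidden)))

# With small random weights, the block perturbs x instead of replacing it.
w1 = 0.1 * rng.standard_normal((hidden, d))
w2 = 0.1 * rng.standard_normal((d, hidden))
y = residual_block(x, w1, w2)

print(np.allclose(y_identity, x))  # True
```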
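One of the InceptionV3 advantages listed above, factorization into smaller convolutions, can be checked with simple weight counting. The sketch below assumes equal input and output channel counts (the value of `c` is hypothetical) and ignores biases; it reproduces the two factorizations described in the InceptionV3 paper [15]: a 5 × 5 convolution replaced by two stacked 3 × 3 convolutions, and a 3 × 3 convolution replaced by a 1 × 3 followed by a 3 × 1 convolution.

```python
def conv_weights(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Number of weights in a kh x kw convolution (bias ignored)."""
    return kh * kw * c_in * c_out


c = 64  # hypothetical channel count, same for input and output

# (a) 5 x 5 conv -> two stacked 3 x 3 convs (same 5 x 5 receptive field)
five_by_five = conv_weights(5, 5, c, c)
two_three_by_three = 2 * conv_weights(3, 3, c, c)

# (b) 3 x 3 conv -> 1 x 3 conv followed by 3 x 1 conv (asymmetric factorization)
three_by_three = conv_weights(3, 3, c, c)
asymmetric = conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c)

print(f"5x5 -> 2x(3x3): {1 - two_three_by_three / five_by_five:.0%} fewer weights")  # 28%
print(f"3x3 -> 1x3 + 3x1: {1 - asymmetric / three_by_three:.0%} fewer weights")      # 33%
```

The savings are independent of `c`, since every count scales with `c * c`.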
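The saving from the depthwise separable convolution described for MobileNetV2 above can be made concrete by counting weights. The layer sizes below are hypothetical and biases are ignored; the sketch also leaves out the expansion layer and shortcut that MobileNetV2 adds around this operation in its inverted residual block. For a k × k kernel, the ratio of separable to standard weights is 1/c_out + 1/k², so a 3 × 3 layer needs roughly 8 to 9 times fewer weights when c_out is large.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv (one filter per input channel)
    followed by a 1 x 1 pointwise conv that mixes channels (bias ignored)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


k, c_in, c_out = 3, 64, 128  # hypothetical layer sizes
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(sep / std, 3))  # 73728 8768 0.119
```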

3. Results and Discussion

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Caladcada, J.A.; Cabahug, S.; Catamcoa, M.R.; Villaceran, P.E.; Cosgafa, L.; Cabizares, K.N.; Hermosilla, M.; Piedad, E.J. Determining Philippine coconut maturity level using machine learning algorithms based on acoustic signal. Comput. Electron. Agric. 2020, 172, 105327.

- Subramanian, P.; Sankar, T.S. Coconut Maturity Recognition Using Convolutional Neural Network. In Computer Vision and Machine Learning in Agriculture; Uddin, M.S., Bansal, J.C., Eds.; Algorithms for Intelligent Systems; Springer: Berlin/Heidelberg, Germany, 2022; Volume 2.

- El-Shafeiy, E.; Abohany, A.A.; Elmessery, W.M.; El-Mageed, A.A.A. Estimation of coconut maturity based on fuzzy neural network and sperm whale optimization. Neural Comput. Appl. 2023, 35, 19541–19564.

- Selshia, A. Coconut Palm Disease And Coconut Maturity Prediction Using Image Processing And Deep Learning. Int. J. Creat. Res. Thoughts (IJCRT) 2023, 11, 260–265.

- Parvathi, S.; Selvi, S.T. Detection of maturity stages of coconuts in complex background using Faster R-CNN model. Biosyst. Eng. 2021, 202, 119–132.

- Fadchar, N.A.; Cruz, J.C.D. A Non-Destructive Approach of Young Coconut Maturity Detection using Acoustic Vibration and Neural Network. In Proceedings of the 16th IEEE International Colloquium on Signal Processing & Its Applications (CSPA), Langkawi, Malaysia, 28–29 February 2020.

- Fadchar, N.A.; Cruz, J.C.D. Design and Development of a Neural Network-Based Coconut Maturity Detector Using Sound Signatures. In Proceedings of the 7th IEEE International Conference on Industrial Engineering and Applications (ICIEA), Bangkok, Thailand, 16–21 April 2020.

- Javel, I.M.; Bandala, A.A.; Salvador, R.C., Jr.; Bedruz, R.A.R.; Dadios, E.P.; Vicerra, R.R.P. Coconut Fruit Maturity Classification Using Fuzzy Logic. In Proceedings of the 10th IEEE International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, 29 November–2 December 2018.

- Varur, S.; Mainale, S.; Korishetty, S.; Shanbhag, A.; Kulkarni, U.; Meena, S.M. Classification of Maturity Stages of Coconuts using Deep Learning on Embedded Platforms. In Proceedings of the 3rd IEEE International Conference on Smart Data Intelligence (ICSMDI), Trichy, India, 30–31 March 2023.

- Caladcada, J.A.; Piedad, E.J. Acoustic dataset of coconut (Cocos nucifera) based on tapping system. Data Brief 2023, 47, 1–7.

- Parmar, S.; Paunwala, C. A novel and efficient Wavelet Scattering Transform approach for primitive-stage dyslexia-detection using electroencephalogram signals. Healthc. Anal. 2023, 3, 100194.

- Tanveer, M.H.; Zhu, H.; Ahmed, W.; Thomas, A.; Imran, B.M.; Salman, M. Mel-Spectrogram and Deep CNN Based Representation Learning from Bio-Sonar Implementation on UAVs. In Proceedings of the IEEE International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 8–10 January 2021.

- Sattar, F. A New Acoustical Autonomous Method for Identifying Endangered Whale Calls: A Case Study of Blue Whale and Fin Whale. Sensors 2023, 23, 3048.

- Wisdom, M.L. 2023. Available online: https://wisdomml.in/understanding-resnet-50-in-depth-architecture-skip-connections-and-advantages-over-other-networks/ (accessed on 15 August 2023).

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Srinivas, K.; Gagana Sri, R.; Pravallika, K.; Nishitha, K.; Polamuri, S.R. COVID-19 prediction based on hybrid Inception V3 with VGG16 using chest X-ray images. Multimed. Tools Appl. 2023, 1–18.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Yong, L.; Ma, L.; Sun, D.; Du, L. Application of MobileNetV2 to waste classification. PLoS ONE 2023, 18, e0282336.

- Liu, X.; Wu, Z.Z.; Wu, Z.J.; Zou, L.; Xu, L.X.; Wang, X.F. Lightweight Neural Network Based Garbage Image Classification Using a Deep Mutual Learning. In Proceedings of the International Symposium on Parallel Architectures, Algorithms and Programming, Shenzhen, China, 28–30 December 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 212–223.

| Types of Coconuts | Number of Samples |
|---|---|
| Premature coconut | 8 |
| Mature coconut | 36 |
| Overmature coconut | 78 |
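The class counts in the table above are strongly imbalanced: 78 of the 122 samples are overmature. As a reference point for reading accuracy and F1 score together, the sketch below computes what a trivial classifier that always predicts the majority class would score on data with these proportions. It assumes that the reported F1 scores are macro-averaged over the three classes and that the evaluation folds follow the overall class distribution; neither assumption is stated in this section, and the function name `majority_baseline` is hypothetical.

```python
# Class counts taken from the dataset table above.
counts = {"premature": 8, "mature": 36, "overmature": 78}


def majority_baseline(counts: dict[str, int]) -> tuple[float, float]:
    """Accuracy and macro-averaged F1 of a classifier that always predicts
    the most frequent class, evaluated on data with the given class counts."""
    total = sum(counts.values())
    majority = max(counts, key=counts.get)
    accuracy = counts[majority] / total
    f1_scores = []
    for label, n in counts.items():
        if label == majority:
            precision = n / total  # every sample is predicted as this class
            recall = 1.0           # all true samples of this class are caught
            f1_scores.append(2 * precision * recall / (precision + recall))
        else:
            f1_scores.append(0.0)  # class is never predicted, so F1 is taken as 0
    return accuracy, sum(f1_scores) / len(f1_scores)


acc, macro_f1 = majority_baseline(counts)
print(f"accuracy = {acc:.2%}, macro F1 = {macro_f1:.2f}")  # accuracy = 63.93%, macro F1 = 0.26
```

Because such a baseline reaches about 64% accuracy while its macro F1 stays near 0.26, macro F1 is the more informative of the two metrics for this dataset.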

| Method | Accuracy (%) (Mean ± Std) | F1 Score (Mean ± Std) |
|---|---|---|
| WST + Customized CNN | 61.30 ± 2.48 | 0.29 ± 0.03 |
| WST + Pretrained CNN (ResNet50) | 84.25 ± 8.59 | 0.74 ± 0.19 |
| WST + Pretrained CNN (InceptionV3) | 77.32 ± 10.28 | 0.48 ± 0.12 |
| WST + Pretrained CNN (MobileNetV2) | 73.12 ± 6.30 | 0.53 ± 0.10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sattar, F. Predicting Maturity of Coconut Fruit from Acoustic Signal with Applications of Deep Learning. Biol. Life Sci. Forum 2024, 30, 16. https://doi.org/10.3390/IOCAG2023-16880
