A Two-Stage Numerical Algorithm for the Simultaneous Extraction of All Zeros of Meromorphic Functions

Abstract

1. Introduction

- (i) We use a new, effective empirical method for locating the poles of f solely by tracking its phase;
- (ii) Depending on the method chosen for the second stage, our algorithm may require no computation of derivatives at all;
- (iii) We compute all zeros of f simultaneously and with high accuracy.

2. Description of the Algorithm

2.1. The General Algorithm

- Stage 1. Take a rectangle containing the domain and evaluate f at each node of a mesh of points. Identify the nodes that satisfy the threshold condition with a preselected real number K, and cover the rectangle with squares (circles) of side (radius) r. The value of r is preselected depending on f: the closer the poles of f lie to each other, or to its zeros, the smaller r should be. To sift out false poles, we track the change of the phase arg f along the boundaries of these squares (circles); note that arg f decreases around a pole. If more than one pole, or a pole together with zeros, is detected in some of the squares, we choose a smaller r and track the change of arg f on the new squares (circles). This procedure is repeated until all poles of f are isolated. Then, removing from the rectangle the domains that contain the poles of f, we obtain a domain D in which f is analytic. Finally, setting Γ to be the closure of D and applying Theorem 2, we obtain the coefficients of the corresponding polynomial P.

- Stage 2. Choose an initial vector and a simultaneous method, and apply the method from this initial vector to compute all zeros of P.
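The phase tracking in Stage 1 and the final step of extracting P can be illustrated with a short NumPy sketch, in which np.unwrap(np.angle(...)) plays the role of MATLAB's unwrap(angle(f)). It rests on assumptions not taken from the paper: the contours are circles sampled at equally spaced points, the function names are hypothetical, and, because Theorem 2 is not reproduced here, poly_from_contour substitutes the classical Delves–Lyness power sums [18] combined with Newton's identities. Unlike the derivative-free setting mentioned in the introduction, this substitute needs f' (passed as df) and assumes f is analytic inside the circle.

```python
import numpy as np


def phase_change_count(f, center, radius, m=2048):
    """Return Z - P (zeros minus poles of f, with multiplicity) inside the circle
    |z - center| = radius, read off from the net change of the unwrapped phase of f."""
    theta = np.linspace(0.0, 2.0 * np.pi, m + 1)   # closed loop: last node repeats the first
    z = center + radius * np.exp(1j * theta)
    phase = np.unwrap(np.angle(f(z)))                # continuous branch of arg f along the circle
    return int(round((phase[-1] - phase[0]) / (2.0 * np.pi)))


def poly_from_contour(f, df, center, radius, m=512):
    """Monic coefficients [1, c_1, ..., c_n] of the polynomial whose roots are the zeros of an
    analytic f inside the circle (Delves-Lyness power sums, assumed stand-in for Theorem 2)."""
    theta = 2.0 * np.pi * np.arange(m) / m
    z = center + radius * np.exp(1j * theta)
    # Trapezoidal rule: s_k = (1/2πi)∮ z^k f'/f dz ≈ mean(z^k * f'(z)/f(z) * (z - center))
    g = df(z) / f(z) * (z - center)
    n = int(round(np.mean(g).real))                  # s_0 = number of zeros inside
    s = [np.mean(z**k * g) for k in range(n + 1)]
    c = [1.0 + 0.0j]
    for k in range(1, n + 1):
        # Newton's identity: k*c_k = -(s_k + c_1 s_{k-1} + ... + c_{k-1} s_1)
        c.append(-(s[k] + sum(c[i] * s[k - i] for i in range(1, k))) / k)
    return np.array(c)
```

For example, phase_change_count(lambda z: 1/(z - 0.5), 0, 1) returns -1: arg f decreases by 2π around the enclosed pole. A zero and a pole inside the same circle cancel in Z − P, which is one reason to refine r as described in Stage 1.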

2.2. Our Implementation

- Stage 1. In our implementation, we take the rectangle to be a square of side R, meshed by a grid of points, and cover it with circles of radius r, whose value differs from example to example. After identifying the nodes that satisfy the threshold condition with K, we apply Cauchy’s argument principle on each of the circles, tracking the continuity of arg f with MATLAB’s unwrap(angle(f)) in order to detect the number of poles inside it. In this way we extract the domain D in which f is analytic. Then, computing the integrals in Theorem 2 by vectorized adaptive quadrature [25], we obtain the coefficients of the polynomial P.

- Stage 2. Using the second coefficient and the degree n of the polynomial P, we generate Aberth’s initial approximation (see [26]). Then, to avoid any use of derivatives, we apply the family of cubically convergent simultaneous methods constructed and studied in [27,28], whose iteration function is expressed through the Weierstrass correction W defined above.
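A compact sketch of Stage 2 follows. It assumes the form of Aberth's starting points commonly quoted from [26], centred at −a_1/(n a_0) with angles θ_ν = (π/n)(2ν − 3/2), and leaves the radius r as a free parameter. Since the iteration function of the family from [27,28] is not reproduced here, the sketch swaps in the classical method usually attributed to Börsch-Supan [16], which is likewise derivative-free, cubically convergent and built from the Weierstrass correction W; it is not the authors' family. All function names are hypothetical, and coefficients are ordered from the leading one, as in np.polyval.

```python
import numpy as np


def aberth_initial(coeffs, r):
    """Aberth-type starting vector (assumed form): n points on the circle of radius r
    centred at the centroid -a_1/(n*a_0) of the zeros, at angles (pi/n)(2*nu - 3/2)."""
    a = np.asarray(coeffs, dtype=complex)            # a[0] is the leading coefficient
    n = len(a) - 1
    nu = np.arange(1, n + 1)
    theta = np.pi / n * (2 * nu - 1.5)
    return -a[1] / (n * a[0]) + r * np.exp(1j * theta)


def weierstrass_correction(coeffs, x):
    """W_i(x) = P(x_i) / (a_0 * prod_{j != i} (x_i - x_j))."""
    a = np.asarray(coeffs, dtype=complex)
    diff = x[:, None] - x[None, :]
    np.fill_diagonal(diff, 1.0)                      # drop the j == i factor from the product
    return np.polyval(a, x) / (a[0] * np.prod(diff, axis=1))


def borsch_supan(coeffs, x0, tol=1e-12, max_iter=100):
    """Derivative-free Börsch-Supan iteration (stand-in for the family of [27,28]):
    x_i <- x_i - W_i / (1 + sum_{j != i} W_j / (x_i - x_j)); returns (x, iterations)."""
    x = np.array(x0, dtype=complex)
    for k in range(1, max_iter + 1):
        w = weierstrass_correction(coeffs, x)
        diff = x[:, None] - x[None, :]
        np.fill_diagonal(diff, 1.0)                  # avoid division by zero on the diagonal
        ratio = w[None, :] / diff                    # ratio[i, j] = W_j / (x_i - x_j)
        np.fill_diagonal(ratio, 0.0)                 # then exclude the j == i terms
        x = x - w / (1.0 + ratio.sum(axis=1))
        if np.max(np.abs(w)) < tol:
            break
    return x, k
```

For example, with coeffs = [1, -6, 11, -6] (zeros 1, 2, 3) and r = 2, borsch_supan(coeffs, aberth_initial(coeffs, 2.0)) returns approximations of 1, 2 and 3.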

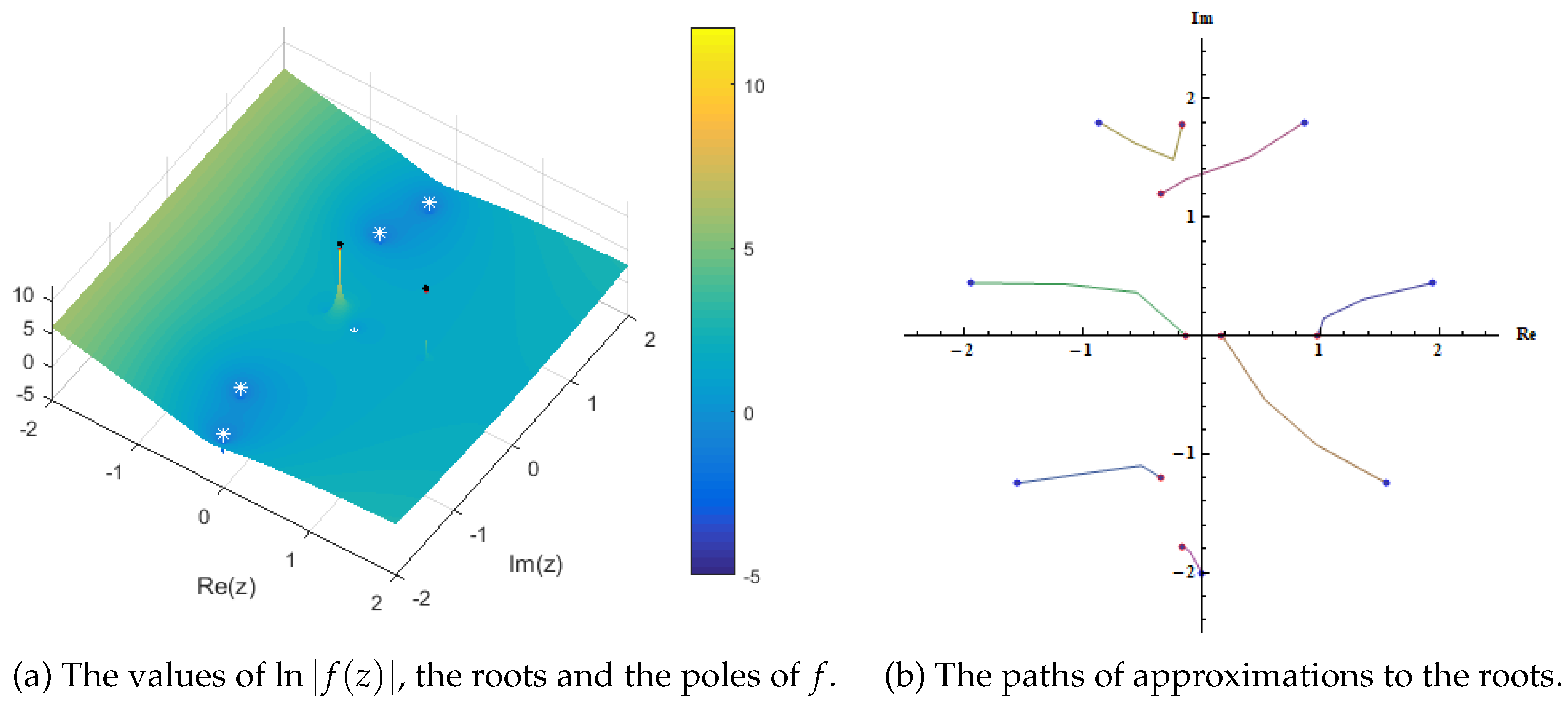

3. Numerical Examples

- A posteriori error estimate. Let P be a complex polynomial of degree n and let (x^{(k)}) be a sequence of vectors in ℂ^n with pairwise distinct coordinates. Then, for every , there is a root vector of P such that , where the number τ and the function are defined by

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Halley, E. A new, exact, and easy method of finding the roots of any equations generally, and that without any previous reduction. Philos. Trans. R. Soc. 1694, 18, 136–148. (In Latin)

- Chebyshev, P. Complete Works of P.L. Chebyshev; USSR Academy of Sciences: Moscow, Russia, 1973; pp. 7–25. Available online: http://books.e-heritage.ru/book/10079542 (accessed on 1 January 2020). (In Russian)

- Proinov, P.D. General local convergence theory for a class of iterative processes and its applications to Newton’s method. J. Complexity 2009, 25, 38–62.

- Ivanov, S.I. A general approach to the study of the convergence of Picard iteration with an application to Halley’s method for multiple zeros of analytic functions. J. Math. Anal. Appl. 2022, 513, 126238.

- Kostadinova, S.G.; Ivanov, S.I. Chebyshev’s Method for Multiple Zeros of Analytic Functions: Convergence, Dynamics and Real-World Applications. Mathematics 2024, 12, 3043.

- Pan, V.Y. Solving a polynomial equation: Some history and recent progress. SIAM Rev. 1997, 39, 187–220.

- Petković, M. Point Estimation of Root Finding Methods; Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 2008; Volume 1933.

- McNamee, J.M.; Pan, V. Numerical Methods for Roots of Polynomials. Part II; Studies in Computational Mathematics; Elsevier: Amsterdam, The Netherlands, 2013; Volume 16.

- Proinov, P.D.; Ivanov, S.I. On the convergence of Halley’s method for multiple polynomial zeros. Mediterr. J. Math. 2015, 12, 555–572.

- Proinov, P.D.; Ivanov, S.I. On the convergence of Halley’s method for simultaneous computation of polynomial zeros. J. Numer. Math. 2015, 23, 379–394.

- Ivanov, S.I. On the convergence of Chebyshev’s method for multiple polynomial zeros. Results Math. 2016, 69, 93–103.

- Ivanov, S.I. Unified Convergence Analysis of Chebyshev-Halley Methods for Multiple Polynomial Zeros. Mathematics 2022, 10, 135.

- Weierstrass, K. Neuer Beweis des Satzes, dass jede ganze rationale Function einer Veränderlichen dargestellt werden kann als ein Product aus linearen Functionen derselben Veränderlichen. Sitzungsber. Königl. Preuss. Akad. Wiss. Berlin 1891, II, 1085–1101. Available online: https://biodiversitylibrary.org/page/29263197 (accessed on 1 January 2020).

- Dochev, K.; Byrnev, P. Certain modifications of Newton’s method for the approximate solution of algebraic equations. USSR Comput. Math. Math. Phys. 1964, 4, 174–182.

- Ehrlich, L. A modified Newton method for polynomials. Commun. ACM 1967, 10, 107–108.

- Börsch-Supan, W. Residuenabschätzung für Polynom-Nullstellen mittels Lagrange Interpolation. Numer. Math. 1970, 14, 287–296.

- Lehmer, D.H. The Graeffe process as applied to power series. MTOAC 1945, 1, 377–383.

- Delves, L.M.; Lyness, J.N. A Numerical Method for Locating the Zeros of an Analytic Function. Math. Comput. 1967, 21, 543–560.

- Davies, B. Locating the zeros of an analytic function. J. Comput. Phys. 1986, 66, 36–49.

- Tovmasyan, N.; Kosheleva, T. On a certain method for finding zeros of analytic functions and its application to solving boundary value problems. Sib. Math. J. 1995, 36, 988–998.

- Giri, D.D.V. Complex Variable Theorems for Finding Zeroes and Poles of Transcendental Functions. J. Phys. Conf. Ser. 2019, 1334, 012005.

- Chen, H. On locating the zeros and poles of a meromorphic function. J. Comput. Appl. Math. 2022, 402, 113796.

- Dziedziewicz, S.; Warecka, M.; Lech, R.; Kowalczyk, P. Self-adaptive mesh generator for global complex roots and poles finding algorithm. IEEE Trans. Microw. Theory Tech. 2023, 71, 2854–2863.

- Frantsuzov, V.A.; Artemyev, A.V. A global argument-based algorithm for finding complex zeros and poles to investigate plasma kinetic instabilities. J. Comput. Appl. Math. 2025, 456, 116217.

- Shampine, L. Vectorized adaptive quadrature in MATLAB. J. Comput. Appl. Math. 2008, 211, 131–140.

- Aberth, O. Iteration Methods for Finding all Zeros of a Polynomial Simultaneously. Math. Comp. 1973, 27, 339–344.

- Ivanov, S.I. A unified semilocal convergence analysis of a family of iterative algorithms for computing all zeros of a polynomial simultaneously. Numer. Algorithms 2017, 75, 1193–1204.

- Pavkov, T.M.; Kabadzhov, V.G.; Ivanov, I.K.; Ivanov, S.I. Local convergence analysis of a one parameter family of simultaneous methods with applications to real-world problems. Algorithms 2023, 16, 103.

- Proinov, P.D.; Ivanov, S.I. A new family of Sakurai-Torii-Sugiura type iterative methods with high order of convergence. J. Comput. Appl. Math. 2024, 436, 115428.

- Kravanja, P.; Van Barel, M.; Haegemans, A. On computing zeros and poles of meromorphic functions. In Computational Methods and Function Theory; World Scientific Publishing Co. Pte Ltd.: Singapore, 1999; Volume 1997, pp. 359–369.

- Gritton, K.S.; Seader, J.; Lin, W.J. Global homotopy continuation procedures for seeking all roots of a nonlinear equation. Comput. Chem. Eng. 2001, 25, 1003–1019.

| Function | Method | Initial Guess | Root | k |
|---|---|---|---|---|
| | Newton | | | 14 |
| | Halley | | | 6 |
| | Chebyshev | | The method diverges | |
| | Newton | | | 5 |
| | Halley | | | 4 |
| | Chebyshev | | | 4 |
| | Newton | | The method diverges | |
| | Halley | | | 3 |
| | Chebyshev | | The method diverges | |

| Polynomial | k |
|---|---|
| | 6 |
| | 10 |
| | 4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ivanov, I.K.; Ivanov, S.I. A Two-Stage Numerical Algorithm for the Simultaneous Extraction of All Zeros of Meromorphic Functions. AppliedMath 2025, 5, 138. https://doi.org/10.3390/appliedmath5040138
