1. Introduction

With advancements in technology, capabilities in data storage, transfer rates, and computational power are rapidly increasing, leading to the generation of massive datasets. This creates a growing need for new techniques for the real-time control of complex systems. The modeling and control of such systems are central to various applications in physical, biological, and engineering domains. These include epidemic control, internet traffic regulation, energy infrastructure optimization, and more.

The analysis and control of complex systems require the development of innovative quantitative methods and data-driven techniques. One such approach is dynamic mode decomposition (DMD) [1], which has become a prominent tool for identifying spatiotemporal coherent structures from high-dimensional data. DMD provides a numerical approximation to Koopman spectral analysis and can therefore be applied to nonlinear dynamical systems [2,3]. Over the past decade, the DMD method has gained popularity across a wide range of applications, including video processing [4], epidemiology [5], neuroscience [6], financial trading [7,8,9], robotics [10], cavity flows [11,12], and various jet flows [2,13]. For an overview of the DMD literature, we refer the reader to [14,15,16,17].

In recent years, a variety of advanced control strategies and mathematical models have been proposed to address complex systems in different fields. For instance, Liang et al. [18] explored decentralized control for networked systems under asymmetric information conditions, while Fahim et al. [19] developed a mathematical framework for liquidity risk contagion in banking systems using optimal control approaches. Shahgholian and Fathollahi [20] focused on improving load frequency control in multi-resource energy systems, particularly through superconducting magnetic energy storage, and Ndeke et al. [21] provided a detailed methodology for modeling voltage source converters in electrical circuits.

In practical settings, the modeling of high-dimensional, complex dynamical systems often requires accounting for external inputs or control actions. The standard DMD framework does not include control effects and may therefore fail to accurately predict system behavior under actuation. To address this, a modification known as dynamic mode decomposition with control (DMDc) was proposed in [22]. DMDc incorporates both state measurements and external inputs to capture the underlying system dynamics. In this work, we present an alternative formulation for implementing the DMDc method. Before introducing the new approach, we will briefly review the standard DMD and DMDc methodologies.

1.1. Dynamic Mode Decomposition (DMD Method)

For completeness, we begin with a brief overview of the standard DMD framework, following the formulation in [14]. Let us consider a sequential set of snapshot vectors:

$$\mathcal{D} = \{x_0, x_1, \ldots, x_m\}, \tag{1}$$

where each snapshot $x_k \in \mathbb{C}^n$. These data vectors may originate from simulations, experiments, or real-world measurements collected at discrete times $t_k$, assumed to be uniformly spaced by a time interval $\Delta t$, over the interval $[t_0, t_m]$.

The DMD method begins by arranging the data into two large matrices:

$$X = [x_0, x_1, \ldots, x_{m-1}], \qquad Y = [x_1, x_2, \ldots, x_m]. \tag{2}$$

The central assumption of the method is that a linear operator $A$ exists such that we have the following:

$$x_{k+1} = A x_k, \tag{3}$$

which, in matrix form, leads to:

$$Y = A X. \tag{4}$$

Performing a spectral decomposition of $A$ yields the so-called dynamic mode decomposition of the dataset $\mathcal{D}$. The eigenvectors and eigenvalues of $A$ are approximated and referred to as the DMD modes and DMD eigenvalues, respectively.

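To compute the best-fit approximation of $A$, we multiply both sides of Equation (4) on the right by the Moore–Penrose pseudoinverse $X^{\dagger}$:

$$A = Y X^{\dagger}. \tag{5}$$

A practical approach for computing this approximation uses the singular value decomposition (SVD) of $X$:

$$X = U \Sigma V^{*},$$

where $U \in \mathbb{C}^{n \times n}$ and $V \in \mathbb{C}^{m \times m}$ are unitary matrices, and $\Sigma \in \mathbb{C}^{n \times m}$ is a diagonal matrix of singular values. The symbol $*$ denotes the complex conjugate transpose.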

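In high-dimensional settings where the state dimension $n$ is large, direct analysis of $A$ becomes computationally expensive. DMD addresses this by projecting $A$ onto a lower-dimensional subspace using a reduced SVD. This is motivated by the often low-dimensional nature of the essential system dynamics.

Let the reduced (or truncated) SVD of $X$ be as follows:

$$X \approx U_r \Sigma_r V_r^{*},$$

where $r$ is the truncation rank, $U_r \in \mathbb{C}^{n \times r}$, $\Sigma_r \in \mathbb{C}^{r \times r}$, and $V_r \in \mathbb{C}^{m \times r}$. Substituting into Equation (5), we obtain a reduced-order representation of $A$, namely $A \approx Y V_r \Sigma_r^{-1} U_r^{*}$. By taking the basis transformation $x_k = U_r \tilde{x}_k$, the reduced-order model can be derived:

$$\tilde{x}_{k+1} = U_r^{*} A U_r \tilde{x}_k = \tilde{A} \tilde{x}_k,$$

which yields the following:

$$\tilde{A} = U_r^{*} Y V_r \Sigma_r^{-1}, \qquad \tilde{X} = U_r^{*} X,$$

where $\tilde{A}$ and $\tilde{X}$ are the reduced operator and reduced snapshot matrix, respectively.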

By performing eigendecomposition on $\tilde{A}$:

$$\tilde{A} W = W \Lambda,$$

we obtain the eigenvalues (the diagonal entries of $\Lambda$) and eigenvectors $W$ of the reduced system. Notably, the eigenvalues of $\tilde{A}$ are also eigenvalues of the original matrix $A$.

To compute the DMD modes of the full system, we lift the reduced eigenvectors back into the original space. Two formulations are commonly used:

The exact DMD modes, introduced by Tu et al. [14]:

$$\Phi = Y V_r \Sigma_r^{-1} W. \tag{10}$$

The projected DMD modes, as originally proposed in [1]:

$$\Phi = U_r W. \tag{11}$$

The modes from Equation (10) are exact eigenvectors of $A$, while those from Equation (11) represent their projection onto the POD subspace.
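The DMD steps reviewed in this subsection can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions rather than a reference implementation: the helper name `dmd`, the toy matrix `A_true`, and the initial condition are introduced here for demonstration only, and the formulas are the standard ones, namely the reduced operator $U_r^{*} Y V_r \Sigma_r^{-1}$, the exact modes $Y V_r \Sigma_r^{-1} W$, and the projected modes $U_r W$.

```python
import numpy as np

def dmd(X, Y, r):
    """Rank-r DMD of a snapshot pair (X, Y); hypothetical helper for illustration."""
    # Reduced SVD of X, keeping the r leading singular triplets.
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U_r, s_r, V_r = U[:, :r], s[:r], Vh[:r, :].conj().T
    # Y V_r Sigma_r^{-1}: dividing by s_r scales column j by 1/sigma_j.
    YVS = Y @ V_r / s_r
    A_tilde = U_r.conj().T @ YVS          # reduced operator (r x r)
    eigvals, W = np.linalg.eig(A_tilde)   # DMD eigenvalues, reduced eigenvectors
    Phi_exact = YVS @ W                   # exact DMD modes
    Phi_proj = U_r @ W                    # projected DMD modes
    return eigvals, Phi_exact, Phi_proj

# Toy data (assumed values): snapshots of x_{k+1} = A_true x_k.
A_true = np.array([[0.9, 0.2], [0.0, 0.5]])
snapshots = [np.array([1.0, 1.0])]
for _ in range(5):
    snapshots.append(A_true @ snapshots[-1])
D = np.column_stack(snapshots)            # columns x_0, ..., x_5
X, Y = D[:, :-1], D[:, 1:]

eigvals, Phi_exact, Phi_proj = dmd(X, Y, r=2)
print(np.sort(eigvals.real))              # DMD eigenvalues of the toy system
```

Because the toy data are generated by an exactly linear map and `X` has full row rank, the computed eigenvalues should match those of `A_true` up to rounding; with noisy or truncated data, only an approximation can be expected.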

1.2. Dynamic Mode Decomposition with Control (DMDc)

Dynamic mode decomposition with control (DMDc) is an extension of the classical DMD method, developed to analyze multidimensional systems that are influenced by external inputs or disturbances [22]. Unlike standard DMD, which considers only the autonomous system dynamics, DMDc incorporates time-dependent control inputs, enabling it to distinguish between internal system behavior and input-driven effects.

To account for the control influence, a new data matrix, analogous to the snapshot matrices $X$ and $Y$ from (2), is introduced:

$$\Gamma = [u_0, u_1, \ldots, u_{m-1}], \tag{12}$$

where each $u_k \in \mathbb{C}^q$, and $q$ is the number of input variables.

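The DMDc model assumes a linear relationship between consecutive system states and the inputs:

$$x_{k+1} = A x_k + B u_k, \tag{13}$$

where $A \in \mathbb{C}^{n \times n}$ is the system (DMD) operator, and $B \in \mathbb{C}^{n \times q}$ is the input matrix. Using snapshot matrices, this model can be written as follows:

$$Y = A X + B \Gamma. \tag{14}$$

Assuming $A$ and $B$ are unknown, we rewrite Equation (14) in block matrix form:

$$Y = G \Omega, \tag{15}$$

where

$$G = [A \;\; B], \qquad \Omega = \begin{bmatrix} X \\ \Gamma \end{bmatrix}. \tag{16}$$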

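The DMDc procedure seeks the best-fit approximation of $G$ that satisfies the relation $Y \approx G \Omega$. To achieve this, we apply a truncated singular value decomposition (SVD) to the matrix $\Omega$:

$$\Omega \approx \tilde{U} \tilde{\Sigma} \tilde{V}^{*}, \tag{17}$$

where $\tilde{U} \in \mathbb{C}^{(n+q) \times p}$, $\tilde{\Sigma} \in \mathbb{C}^{p \times p}$, and $\tilde{V} \in \mathbb{C}^{m \times p}$.

Using this decomposition, the matrix $G$ is approximated by the following:

$$G \approx Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}^{*}.$$

We partition $\tilde{U}^{*}$ as follows:

$$\tilde{U}^{*} = [\tilde{U}_1^{*} \;\; \tilde{U}_2^{*}],$$

with $\tilde{U}_1 \in \mathbb{C}^{n \times p}$ and $\tilde{U}_2 \in \mathbb{C}^{q \times p}$. The matrices $A$ and $B$ are then approximated as follows:

$$A \approx \bar{A} = Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_1^{*}, \qquad B \approx \bar{B} = Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_2^{*}. \tag{19}$$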

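To obtain a reduced-order representation of the dynamics, we project the system onto a lower-dimensional subspace. Unlike standard DMD, where the projection is based on $X$, here, we use the SVD of $Y$ to define the transformation:

$$Y \approx \hat{U} \hat{\Sigma} \hat{V}^{*},$$

where $\hat{U} \in \mathbb{C}^{n \times r}$, $\hat{\Sigma} \in \mathbb{C}^{r \times r}$, and $\hat{V} \in \mathbb{C}^{m \times r}$. Typically, the reduced dimension, $r$, is smaller than $p$ from the decomposition of $\Omega$.

Applying this projection yields reduced-order operators:

$$\tilde{A} = \hat{U}^{*} \bar{A} \hat{U} = \hat{U}^{*} Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_1^{*} \hat{U}, \qquad \tilde{B} = \hat{U}^{*} \bar{B} = \hat{U}^{*} Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_2^{*}, \tag{21}$$

with $\tilde{A} \in \mathbb{C}^{r \times r}$ and $\tilde{B} \in \mathbb{C}^{r \times q}$. Alternatively, one may choose to project using the SVD of $X$, but this leads to a different reduced basis.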

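The DMD modes are derived from the eigendecomposition of $\tilde{A}$:

$$\tilde{A} W = W \Lambda, \tag{22}$$

where the eigenvalues in $\Lambda$ describe the system’s temporal behavior, and the DMDc modes are obtained via the following:

$$\Phi = Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_1^{*} \hat{U} W. \tag{23}$$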

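The following algorithm (Algorithm 1) summarizes the steps of implementing the DMDc scheme:

| Algorithm 1 Standard DMDc procedure |
|---|
| 1. Collect data and construct the matrices $X$, $Y$, and $\Gamma$ as in (2) and (12). Define the combined matrix $\Omega$ as in (16). |
| 2. Perform reduced SVD of $\Omega$: $\Omega \approx \tilde{U} \tilde{\Sigma} \tilde{V}^{*}$, truncated to rank $p$. |
| 3. Perform reduced SVD of $Y$: $Y \approx \hat{U} \hat{\Sigma} \hat{V}^{*}$, truncated to rank $r$. |
| 4. Compute the reduced-order operators: $\tilde{A} = \hat{U}^{*} Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_1^{*} \hat{U}$, $\tilde{B} = \hat{U}^{*} Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_2^{*}$. |
| 5. Compute the spectral decomposition of $\tilde{A}$: $\tilde{A} W = W \Lambda$. |
| 6. Calculate the DMDc modes: $\Phi = Y \tilde{V} \tilde{\Sigma}^{-1} \tilde{U}_1^{*} \hat{U} W$. |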
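The standard DMDc procedure summarized above can be sketched in NumPy as follows. This is an illustrative sketch under assumptions, not the authors' code: the function name `dmdc`, the toy matrices `A_true` and `B_true`, the initial state, and the random input signal are all hypothetical choices, and the formulas follow the widely used DMDc formulation with two truncated SVDs (of the stacked matrix of states and inputs, and of $Y$).

```python
import numpy as np

def dmdc(X, Y, Gamma, p, r):
    """Standard DMDc with truncation ranks p (stacked data) and r (matrix Y)."""
    n = X.shape[0]
    Omega = np.vstack([X, Gamma])                     # stacked states and inputs
    U, s, Vh = np.linalg.svd(Omega, full_matrices=False)
    U_t, s_t, V_t = U[:, :p], s[:p], Vh[:p, :].conj().T
    U1, U2 = U_t[:n, :], U_t[n:, :]                   # state and input row blocks
    U_hat = np.linalg.svd(Y, full_matrices=False)[0][:, :r]
    YVS = Y @ V_t / s_t                               # Y V Sigma^{-1} (column scaling)
    A_tilde = U_hat.conj().T @ YVS @ U1.conj().T @ U_hat
    B_tilde = U_hat.conj().T @ YVS @ U2.conj().T
    eigvals, W = np.linalg.eig(A_tilde)
    Phi = YVS @ U1.conj().T @ U_hat @ W               # DMDc modes
    return eigvals, Phi, A_tilde, B_tilde

# Toy system (assumed values) driven by a random input signal.
rng = np.random.default_rng(0)
A_true = np.array([[1.5, 0.0], [0.0, 0.1]])
B_true = np.array([[1.0], [0.0]])
m = 6
Gamma = rng.standard_normal((1, m))
states = np.zeros((2, m + 1))
states[:, 0] = [4.0, 7.0]
for k in range(m):
    states[:, k + 1] = A_true @ states[:, k] + B_true @ Gamma[:, k]
X, Y = states[:, :-1], states[:, 1:]

eigvals, Phi, A_tilde, B_tilde = dmdc(X, Y, Gamma, p=3, r=2)
print(np.sort(eigvals.real))
```

A random input is used here so that the rows of the stacked matrix are linearly independent; with a pure state-feedback input the rows become dependent and $A$ and $B$ are no longer uniquely identifiable from the data.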

2. Alternative DMDc Algorithm

To obtain a low-rank approximation of the operator $A$ from Equation (13), the standard DMDc algorithm exploits the low-dimensional structure of the data. In this section, we propose an alternative formulation of the DMDc method, as also presented in [23].

Let the data matrices $X$, $Y$, and $\Gamma$ be defined as in Equations (2) and (12). Define the block matrix $\Omega$ as in (16). We aim to compute the best-fit linear operator $G$ from Equation (16) using the Moore–Penrose pseudoinverse:

$$G = Y \Omega^{\dagger}, \tag{24}$$

where $\Omega^{\dagger}$ denotes the pseudoinverse of $\Omega$. If the rows of $\Omega$ are linearly independent, $\Omega^{\dagger}$ can be computed via SVD or as $\Omega^{*} (\Omega \Omega^{*})^{-1}$.
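As a quick numerical check of the pseudoinverse step, the sketch below compares the SVD-based pseudoinverse with the closed form $\Omega^{*}(\Omega\Omega^{*})^{-1}$, which is the standard expression when the rows of $\Omega$ are linearly independent, and then forms the best-fit operator $G = Y\Omega^{\dagger}$. The sizes and the random matrices (`A_true`, `B_true`, `X`, `Gamma`) are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n, q, m = 3, 2, 10                       # assumed toy sizes: states, inputs, snapshots
X = rng.standard_normal((n, m))
Gamma = rng.standard_normal((q, m))
A_true = 0.5 * rng.standard_normal((n, n))
B_true = rng.standard_normal((n, q))
Y = A_true @ X + B_true @ Gamma          # data consistent with Y = A X + B Gamma

Omega = np.vstack([X, Gamma])            # (n + q) x m, rows independent here
pinv_svd = np.linalg.pinv(Omega)         # SVD-based pseudoinverse
pinv_rows = Omega.conj().T @ np.linalg.inv(Omega @ Omega.conj().T)

G = Y @ pinv_svd                         # best-fit [A  B]
A_est, B_est = G[:, :n], G[:, n:]
print(np.allclose(pinv_svd, pinv_rows), np.allclose(A_est, A_true))
```

In practice the SVD-based route is usually preferable, since explicitly inverting $\Omega\Omega^{*}$ squares the condition number of the problem.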

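We now express $\Omega^{\dagger}$ in block form to separate the contributions of the state and control inputs:

$$\Omega^{\dagger} = [\Omega_1 \;\; \Omega_2], \tag{25}$$

where $\Omega_1 \in \mathbb{C}^{m \times n}$ corresponds to the state snapshot matrix $X$, and $\Omega_2 \in \mathbb{C}^{m \times q}$ corresponds to the control input matrix $\Gamma$. This decomposition allows us to isolate the influence of $X$ and $\Gamma$ on $Y$.

Substituting into (24), the operators $A$ and $B$ are approximated as follows:

$$A \approx \hat{A} = Y \Omega_1, \qquad B \approx \hat{B} = Y \Omega_2, \tag{26}$$

where $\hat{A}$ and $\hat{B}$ are the least-squares estimates of the system and input matrices, obtained from projecting $Y$ onto the subspaces of $X$ and $\Gamma$.

If a reduced SVD of $\Omega$ as in (17) is used, then the estimates $\hat{A}$ and $\hat{B}$ coincide with the matrices $\bar{A}$ and $\bar{B}$ defined in (19).

We now perform a reduced-order SVD on $\Omega_1$ (instead of $Y$) to obtain a compact representation of the system dynamics:

$$\Omega_1 \approx U \Sigma V^{*}, \tag{27}$$

where $U \in \mathbb{C}^{m \times r}$ contains the leading left singular vectors, $\Sigma \in \mathbb{C}^{r \times r}$ is a diagonal matrix with the top $r$ singular values, and $V \in \mathbb{C}^{n \times r}$ contains the right singular vectors. The truncation parameter $r$ defines the rank of the reduced subspace.

Using this decomposition, we define reduced-order approximations of $A$ and $B$ via projection onto the subspace defined by the decomposition (27):

$$\tilde{A} = \Sigma V^{*} Y U, \qquad \tilde{B} = \Sigma V^{*} Y \Omega_2, \tag{28}$$

where $\tilde{A} \in \mathbb{C}^{r \times r}$ and $\tilde{B} \in \mathbb{C}^{r \times q}$ are reduced approximations of the system and input matrices. Note that this reduced-order formulation differs from the one in (21) obtained via the standard DMDc method.

We compute the eigenstructure of $\tilde{A}$:

$$\tilde{A} W = W \Lambda, \tag{29}$$

where $W$ contains the eigenvectors of $\tilde{A}$ and $\Lambda$ is a diagonal matrix of its eigenvalues.

To reconstruct the DMD modes in the full state space, we apply the following transformation:

$$\Phi = Y U W, \tag{30}$$

where the columns of $\Phi$ are the DMD modes of the approximated operator $A$.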

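We summarize the procedure in Algorithm 2:

| Algorithm 2 Alternative DMDc Algorithm |
|---|
| 1. Collect data and construct the matrices $X$, $Y$, and $\Gamma$ as in (2) and (12). Define $\Omega$ as in (16). |
| 2. Compute the pseudoinverse $\Omega^{\dagger}$ and express it in block form: $\Omega^{\dagger} = [\Omega_1 \;\; \Omega_2]$. |
| 3. Perform a truncated SVD of $\Omega_1$: $\Omega_1 \approx U \Sigma V^{*}$, with truncation rank $r$. |
| 4. Compute the reduced-order operators: $\tilde{A} = \Sigma V^{*} Y U$, $\tilde{B} = \Sigma V^{*} Y \Omega_2$. |
| 5. Compute the eigendecomposition of $\tilde{A}$: $\tilde{A} W = W \Lambda$. |
| 6. Reconstruct the DMD modes: $\Phi = Y U W$. |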
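The alternative procedure can be sketched in NumPy along the same lines. The displayed formulas of this section did not survive in the text, so the specific forms used below are assumptions: the pseudoinverse is split column-wise into a state block `Omega1` and an input block `Omega2`, the reduced operators are taken as $\Sigma V^{*} Y U$ and $\Sigma V^{*} Y \Omega_2$, and the modes as $Y U W$, where $U \Sigma V^{*}$ is the truncated SVD of the state block. This reading is consistent with the eigenpair argument of Theorem 1, but the function name `alt_dmdc` and the toy data are hypothetical.

```python
import numpy as np

def alt_dmdc(X, Y, Gamma, r):
    """Alternative DMDc sketch: a single SVD of the state block of pinv(Omega)."""
    n = X.shape[0]
    Omega = np.vstack([X, Gamma])
    Omega_pinv = np.linalg.pinv(Omega)                # m x (n + q)
    Omega1, Omega2 = Omega_pinv[:, :n], Omega_pinv[:, n:]
    U, s, Vh = np.linalg.svd(Omega1, full_matrices=False)
    U_r = U[:, :r]                                    # m x r
    SVh = s[:r, None] * Vh[:r, :]                     # Sigma V^*, r x n
    A_tilde = SVh @ Y @ U_r                           # assumed reduced operator
    B_tilde = SVh @ Y @ Omega2                        # assumed reduced input matrix
    eigvals, W = np.linalg.eig(A_tilde)
    Phi = Y @ U_r @ W                                 # assumed mode reconstruction
    return eigvals, Phi, A_tilde, B_tilde

# Toy system (assumed values) driven by a random input signal.
rng = np.random.default_rng(0)
A_true = np.array([[1.5, 0.0], [0.0, 0.1]])
B_true = np.array([[1.0], [0.0]])
m = 6
Gamma = rng.standard_normal((1, m))
states = np.zeros((2, m + 1))
states[:, 0] = [4.0, 7.0]
for k in range(m):
    states[:, k + 1] = A_true @ states[:, k] + B_true @ Gamma[:, k]
X, Y = states[:, :-1], states[:, 1:]

eigvals, Phi, A_tilde, B_tilde = alt_dmdc(X, Y, Gamma, r=2)
print(np.sort(eigvals.real))
```

Under these assumptions the reduced operator is a product of four factors, which matches the operation count quoted in the complexity discussion, and no SVD of $Y$ is needed.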

In what follows, we will demonstrate that Algorithm 2 identifies all nonzero eigenvalues of the operator A. We begin by proving the following theorem.

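Theorem 1. Let the matrix $\tilde{A}$, defined in (28), have an eigenpair $(\lambda, w)$, where $\lambda \neq 0$. Then the pair $(\lambda, \varphi)$ is an eigenpair of $A$, where we have the following:

$$\varphi = Y U w. \tag{31}$$

Proof. We aim to show that if $\tilde{A} w = \lambda w$, then $\varphi$, defined via (31), satisfies $A \varphi = \lambda \varphi$, i.e., it is an eigenvector of $A$ with eigenvalue $\lambda$.

Recall from (26) that we approximate $A \approx Y \Omega_1$. Also, from the reduced SVD in (27), we have $\Omega_1 \approx U \Sigma V^{*}$. Combining these, we write the action of $A$ on $\varphi$ as follows:

$$A \varphi = Y U \Sigma V^{*} \varphi.$$

Then, substituting the definition of $\varphi$ from (31):

$$A \varphi = Y U \Sigma V^{*} Y U w.$$

We now observe that this expression is equivalent to applying the matrix $\tilde{A} = \Sigma V^{*} Y U$, as defined in (28). Hence, we have the following:

$$A \varphi = Y U \tilde{A} w.$$

Since $(\lambda, w)$ is an eigenpair of $\tilde{A}$, we have the following:

$$A \varphi = Y U (\lambda w) = \lambda\, Y U w = \lambda \varphi.$$

Thus, $\varphi$ satisfies the eigenvalue equation for $A$.

It remains to verify that $\varphi \neq 0$. Suppose $\varphi = 0$, i.e.,

$$Y U w = 0.$$

Then, multiplying both sides by $\Sigma V^{*}$, we obtain the following:

$$\Sigma V^{*} Y U w = \tilde{A} w = 0,$$

which implies $\lambda w = 0$, contradicting the assumption $\lambda \neq 0$. Hence, $\varphi \neq 0$, and we conclude that $(\lambda, \varphi)$ is indeed an eigenpair of $A$. The theorem is proved. □

We will now show that Algorithm 2 produces all non-zero eigenvalues of $A$. Let us assume that $A \varphi = \lambda \varphi$, for $\varphi \neq 0$, and $\lambda \neq 0$. Hence

$$\tilde{A} (\Sigma V^{*} \varphi) = \Sigma V^{*} Y U \Sigma V^{*} \varphi = \Sigma V^{*} A \varphi = \lambda\, \Sigma V^{*} \varphi.$$

Therefore, $w = \Sigma V^{*} \varphi \neq 0$, because if $\Sigma V^{*} \varphi = 0$, then $A \varphi = Y U \Sigma V^{*} \varphi = 0$, and $\lambda = 0$. Hence, $w$ is an eigenvector of $\tilde{A}$ with the corresponding eigenvalue $\lambda$, and it is discovered by Algorithm 2.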

The alternative DMDc algorithm constructs a low-rank approximation of the system operator $A$ by projecting the data onto a reduced subspace derived from the singular value decomposition (SVD) of the input data matrix. This approach yields a reduced-order operator that captures the dominant system dynamics. The corresponding eigenvectors are then lifted back to the original state space via the transformation $\Phi = Y U W$, recovering the DMD modes.

However, due to the nature of this projection, only the nonzero eigenvalues of $A$ are preserved: the transformation maps any eigenvector associated with a zero eigenvalue to the zero vector, so such modes are effectively discarded. While this filtering enhances numerical robustness by eliminating uninformative or noise-dominated directions, it also means that the algorithm does not recover DMD modes corresponding to structurally zero dynamics. In some control or system identification tasks, these missing modes may still carry important information.
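The behavior described above can be checked numerically on a small system whose operator has one zero and one nonzero eigenvalue. The sketch below is an illustration under assumptions: the toy matrices, the random input, and the specific forms of the reduced operator ($\Sigma V^{*} Y U$) and of the lifting ($Y U W$), with $U \Sigma V^{*}$ the SVD of the state block of the pseudoinverse, are one reading consistent with Theorem 1 rather than formulas confirmed by the text.

```python
import numpy as np

rng = np.random.default_rng(2)
A_true = np.array([[0.5, 0.3], [0.0, 0.0]])   # eigenvalues 0.5 and 0 (assumed toy values)
B_true = np.array([[1.0], [1.0]])
m = 8
Gamma = rng.standard_normal((1, m))
states = np.zeros((2, m + 1))
states[:, 0] = [1.0, 2.0]
for k in range(m):
    states[:, k + 1] = A_true @ states[:, k] + B_true @ Gamma[:, k]
X, Y = states[:, :-1], states[:, 1:]

# State block of the pseudoinverse of the stacked data matrix.
Omega1 = np.linalg.pinv(np.vstack([X, Gamma]))[:, :2]
U, s, Vh = np.linalg.svd(Omega1, full_matrices=False)
A_tilde = (s[:, None] * Vh) @ Y @ U           # assumed reduced operator Sigma V^* Y U
eigvals, W = np.linalg.eig(A_tilde)
Phi = Y @ U @ W                               # assumed lifting to the state space

order = np.argsort(np.abs(eigvals))           # smallest magnitude first
lam_zero, lam_nonzero = eigvals[order]
phi_zero, phi_nonzero = Phi[:, order[0]], Phi[:, order[1]]

print("norm of lifted zero-eigenvalue mode:", np.linalg.norm(phi_zero))
print("eigenpair residual for nonzero mode:",
      np.linalg.norm(A_true @ phi_nonzero - lam_nonzero * phi_nonzero))
```

In this setting the lifted vector for the zero eigenvalue should vanish up to rounding, while the nonzero eigenvalue yields a genuine eigenvector of the toy operator.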

Zero eigenvalues typically correspond to stationary modes or equilibrium components that do not evolve over time. Although they reflect invariant properties of the system, such modes are often disregarded in dynamic analyses like DMD, where the focus lies on capturing temporal evolution and transient behavior. Nevertheless, in scenarios where equilibrium behavior is of interest, such as in stability analysis or controller design, these zero modes may be of practical significance.

Computational Complexity

The overall computational structure of Algorithm 2 is comparable to that of the classical DMDc (Algorithm 1), with similar effort required for Steps 1–3 and Step 5. However, the key differences arise in Steps 4 and 6, where Algorithm 2 offers a more streamlined approach. These differences are summarized in Table 1, which compares the reduced operators and the expressions used for computing the DMD modes in both methods.

From a computational standpoint, Algorithm 1 requires a greater number of matrix multiplications and intermediate storage. Specifically, it involves at least six intermediate matrices and five matrix multiplications to compute the reduced operator $\tilde{A}$. In contrast, Algorithm 2 uses only four intermediate matrices and requires three multiplications due to its more direct formulation. The computation of the DMD modes is also more straightforward in Algorithm 2.

This reduction in computational complexity makes Algorithm 2 particularly suitable for high-dimensional systems and for use in online or real-time applications, where efficiency and scalability are critical. Moreover, the simplified structure facilitates easier implementation and adaptation to large-scale, data-driven control problems.

3. Numerical Illustrations

In this section, we present a series of numerical experiments to demonstrate the performance of the proposed algorithm. Dynamic mode decomposition with control (DMDc) is a generalization of the standard DMD framework that captures the underlying dynamics of a system through measurements of both the state and external inputs.

The new formulation (Algorithm 2), introduced in Section 2, offers significant computational advantages over the classical DMDc algorithm (Algorithm 1). In all examples below, we compare the performance and outputs of both methods.

Example 1. Unstable linear system with inputs

Algorithm 2 is first applied to a simple two-dimensional unstable linear system with input-driven stabilization. This canonical setup has been considered in several prior works; see [15,22,24]. The system is defined as follows:

For

or

, the system is unstable. We set the parameters to:

,

,

, and apply a proportional controller

. This stabilizes the system by moving the unstable eigenvalue inside the unit circle. The fixed point of the system is at the origin

, illustrated in

Figure 1.

We perform ten iterations with the initial condition

, and construct the following data matrices from the first five snapshots:

Both algorithms produce identical DMD modes:

with corresponding DMD eigenvalues

and

.

Example 2. Nonlinear system with inputs.

Next, we consider a nonlinear dynamical system with external input:

where

,

, and

, following examples in [14,24,25,26].

To enable a Koopman-based analysis, we augment the state with a nonlinear observable:

The system is as follows:

We collect measurements of the observables over 10 iterations, starting from the initial condition

. The input

u consists of random signals sampled from a uniform distribution. The following matrices are constructed from the first five time snapshots:

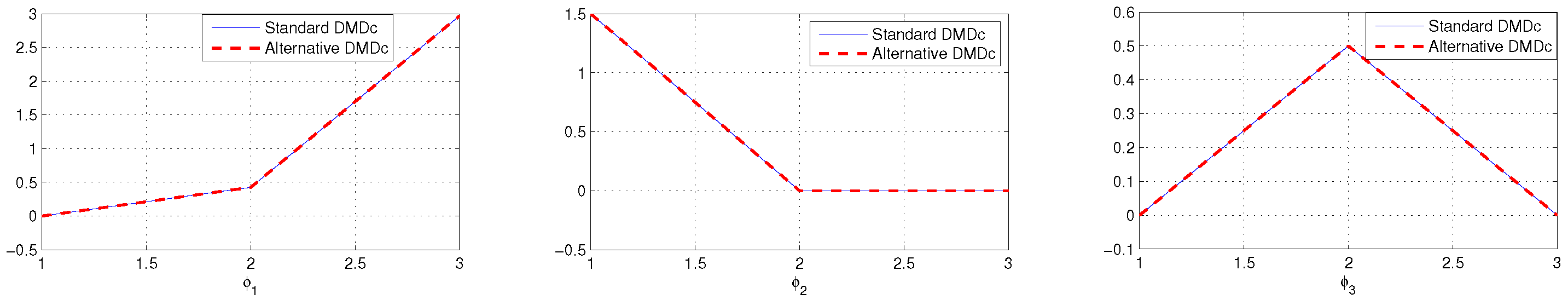

Both algorithms yield the same DMD modes (see Figure 2) corresponding to the eigenvalues

,

, and

.

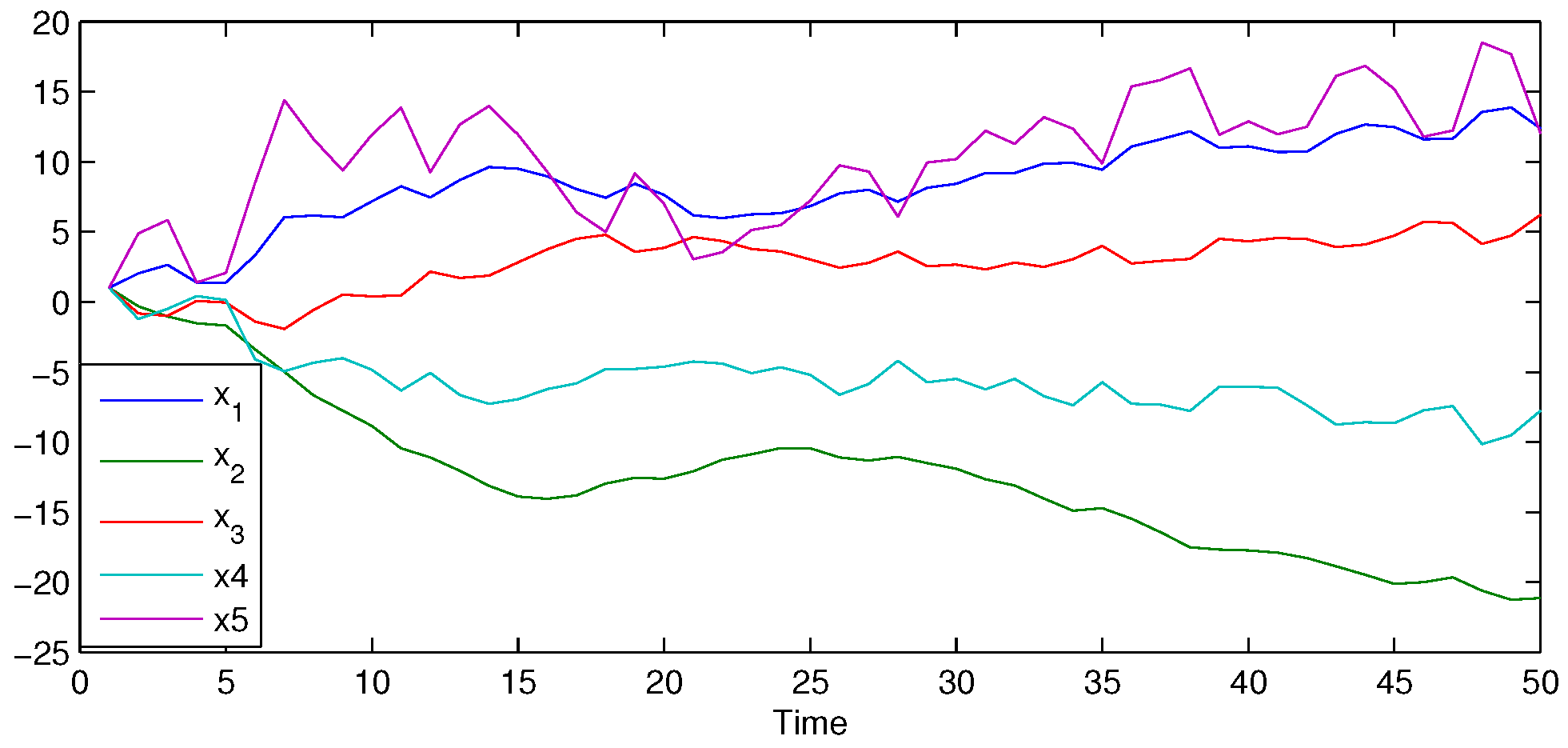

Example 3. Large-scale stable linear systems.

Finally, we examine a large-scale linear system where the number of measurements significantly exceeds the dimensionality of the underlying dynamics.

The system exhibits a low-dimensional attractor. We construct a discrete-time linear state-space model using MATLAB’s drss function. The configuration includes:

State dimension: 5

Input dimension: 2

Output dimension: 50

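This yields matrices $A \in \mathbb{R}^{5 \times 5}$, $B \in \mathbb{R}^{5 \times 2}$, $C \in \mathbb{R}^{50 \times 5}$, and $D \in \mathbb{R}^{50 \times 2}$. The input sequence is generated via MATLAB’s randn function. The initial state is set as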

. Using these, we compute the data matrices

X and

Y, whose dynamics are shown in

Figure 3.

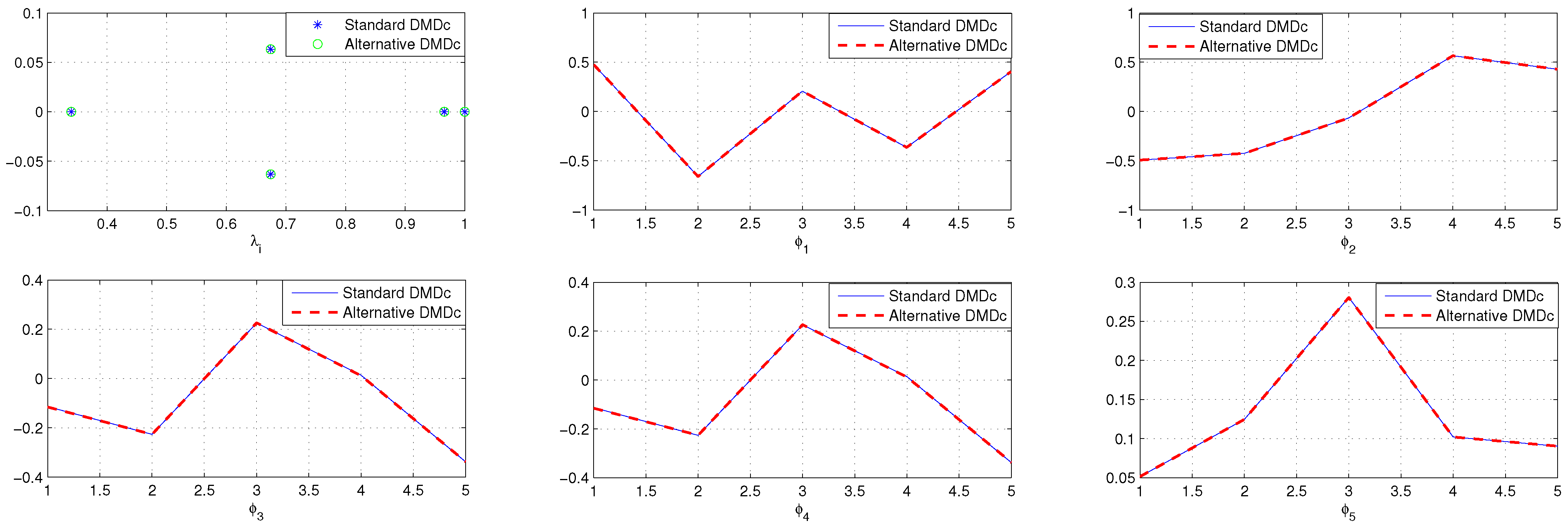

The DMD modes and eigenvalues computed using both algorithms are displayed in Figure 4.

The results show complete agreement between the classical and alternative algorithms in both computed eigenvalues and DMD modes across all examples.

4. Conclusions

In this paper, we proposed an alternative formulation of the dynamic mode decomposition with control (DMDc), based on a structured low-rank approximation of the data matrices. We introduced and analyzed a new algorithm (Algorithm 2), which is shown to be more computationally efficient in terms of resource usage compared to the standard approach. Unlike traditional DMDc, this method constructs a reduced-order model using the singular value decomposition (SVD) of a block matrix. This allows for the direct computation of the reduced dynamics and control matrix without requiring a full SVD of the output matrix $Y$. Theoretical analysis has shown that all nonzero eigenvalues of the original system matrix $A$ are captured by the eigenvalues of $\tilde{A}$, and a precise transformation allows recovery of the corresponding DMD modes.

The proposed algorithm simplifies the computational pathway by decoupling the effects of state and control inputs during model identification. This decoupling eliminates the need for certain matrix inversions and complex operator approximations inherent in traditional DMDc methods. As a result, it reduces memory usage and minimizes matrix multiplications. These features make the method particularly advantageous for large-scale systems, streaming data environments, and real-time control applications, where both computational speed and resource efficiency are critical. A comparison of matrix operations shows that Algorithm 2 requires storing fewer matrices and performing fewer multiplications than the standard approach, all while maintaining accuracy. Numerical examples have confirmed that the proposed method yields results identical to those obtained from the standard DMDc algorithm. Overall, the proposed method provides a practical, computationally efficient, and theoretically sound alternative to standard DMDc. Its modular structure and minimal resource footprint make it a promising tool for data-driven modeling and control in high-dimensional or rapidly changing environments.

However, despite its computational advantages, the proposed approach has certain limitations. One important feature of the formulation is that modes associated with zero eigenvalues are naturally excluded through the projection mechanism, which can enhance numerical robustness by focusing the model on the most dynamically significant behaviors. While this exclusion is often beneficial, it may be problematic in systems where zero dynamics have physical significance or are essential for stability analysis. In such cases, the method might overlook important behaviors. Additionally, the algorithm assumes that the data matrices are sufficiently rank-deficient and well-conditioned, which may not be the case in the presence of high noise levels or missing data.

Future work could focus on addressing these limitations by integrating regularization techniques, robust estimation strategies, and methods for explicitly capturing zero dynamics when necessary. Furthermore, extending the algorithm to work within adaptive or streaming frameworks for online learning and control would be a valuable direction, particularly for real-time applications in large-scale systems or robotics.